#Web API Security

Text

https://nyuway.com/why-ptaas-is-a-game-changer-for-your-cybersecurity/

#Dynamic Application Security Testing (DAST)#Web Application DAST#API Security DAST#Penetration Testing as a Service (PTaaS)#Vulnerability Assessment and Penetration Testing (VA PT)

0 notes

Text

TCoE Framework: Best Practices for Standardized Testing Processes

A Testing Center of Excellence (TCoE) framework focuses on unifying and optimizing testing processes across an organization. By adopting standardized practices, businesses can improve efficiency, consistency, and quality while reducing costs and redundancies.

Define Clear Objectives and Metrics

Set measurable goals for the TCoE, such as improved defect detection rates or reduced test cycle times. Establish key performance indicators (KPIs) to monitor progress and ensure alignment with business objectives.

Adopt a Robust Testing Framework

Use modular and reusable components to create a testing framework that supports both manual and automated testing. Incorporate practices like data-driven and behavior-driven testing to ensure flexibility and scalability.

Leverage the Right Tools and Technologies

Standardize tools for test automation, performance testing, and test management across teams. Integrate AI-driven tools to enhance predictive analytics and reduce test maintenance.

Focus on Skill Development

Provide continuous training to ensure teams stay updated with the latest testing methodologies and technologies. Encourage certifications and cross-functional learning.

Promote Collaboration and Knowledge Sharing

Foster collaboration between development, QA, and operations teams. Establish a knowledge repository for sharing test scripts, results, and best practices.

By implementing these best practices, organizations can build a high-performing TCoE framework that ensures seamless, standardized, and efficient testing processes.

#web automation testing#ui automation testing#web ui testing#ui testing in software testing#automated website testing#web ui automation#web automation tool#web automation software#automated web ui testing#web api test tool#web app testing#web app automation#web app performance testing#security testing for web application

0 notes

Text

Web Application Penetration Testing

Blacklock offers web application penetration testing to help businesses ensure the security of their applications. This service includes identifying and exploiting vulnerabilities in web applications, APIs, and mobile applications. Our API penetration testing helps to improve application security by simulating real-world attacks and identifying weaknesses before they can be exploited by malicious actors. Contact Blacklock Security to enhance your web application security and keep it working smoothly.

0 notes

Text

Implementing Throttling and Rate Limiting in Django REST Framework

Introduction: In today’s API-driven world, ensuring that your API is secure and efficient is crucial. One way to achieve this is by implementing throttling and rate limiting in your Django REST Framework (DRF) applications. Throttling controls the rate at which clients can make requests to your API, helping to prevent abuse and ensuring that your server resources are not overwhelmed by excessive…
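To make the idea of throttling concrete, here is a minimal sliding-window sketch in plain Python. It is not DRF's own code (DRF ships its own throttle classes, configured through settings); the class name `SlidingWindowThrottle` and its parameters are hypothetical and only illustrate the general technique of counting recent requests per client.

```python
import time
from collections import defaultdict, deque


class SlidingWindowThrottle:
    """Allow at most `limit` requests per `window` seconds for each client."""

    def __init__(self, limit, window, clock=time.monotonic):
        self.limit = limit
        self.window = window
        self.clock = clock  # injectable so the behaviour can be tested
        self.history = defaultdict(deque)  # client id -> request timestamps

    def allow(self, client_id):
        now = self.clock()
        stamps = self.history[client_id]
        # Forget requests that have fallen out of the window.
        while stamps and stamps[0] <= now - self.window:
            stamps.popleft()
        if len(stamps) >= self.limit:
            return False  # over the limit: an API would typically answer 429
        stamps.append(now)
        return True
```

A view would call `allow()` with something that identifies the client (an IP address or user ID, for instance) and reject the request when it returns `False`.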

0 notes

Text

Securing Your Digital Identity: Get Your Google API and OAuth Credentials Now

Today, you can get a Google API key and OAuth client credentials with a few clicks in the Google Developer Console. Before you do, it helps to know what API keys and client credentials are. In this blog, we discuss both and when to use each. Looking for step-by-step instructions to get an API key and OAuth credentials? Then keep reading…

API keys and OAuth are two different types of authentication handled by Cloud Endpoints.

These two differ most in the following ways:

The application or website performing the API call is identified by the API key.

An app or website’s user, or the person using it, is identified by an authentication token.

API keys provide project authorization

To decide which scheme is most appropriate, it’s important to understand what API keys and authentication can provide.

API keys provide

Project identification — Identify the application or the project that’s making a call to this API

Project authorization — Check whether the calling application has been granted access to call the API and has enabled the API in their project

API keys aren’t as secure as authentication tokens, but they identify the application or project that’s calling an API. They are generated on the project making the call, and you can restrict their use to an environment such as an IP address range, or an Android or iOS app.

By identifying the calling project, you can use API keys to associate usage information with that project. API keys allow the Extensible Service Proxy (ESP) to reject calls from projects that haven’t been granted access or haven’t enabled the API.

By contrast, authentication schemes typically serve two purposes:

Verify the identity of the calling user securely using user authentication.

Check the user's authorization to see if they have the right to submit this request.

Authentication schemes provide a secure way of identifying the calling user.

Endpoints also checks the authentication token to verify that it has permission to call an API.

The API server decides whether to authorize a request based on that authentication.

An API key identifies the calling project, but not the calling user.

For example, if you have created an application that calls an API, an API key can identify that application.
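The difference between the two schemes also shows up in how a request is built. The sketch below assumes a hypothetical endpoint at `api.example.com`, an API key sent as a `key` query parameter, and a user token sent as a bearer token in the `Authorization` header; these are common conventions, so check the target API's documentation for the exact names it expects. Nothing is sent over the network here.

```python
from urllib.parse import urlencode
from urllib.request import Request

BASE_URL = "https://api.example.com/v1/items"  # hypothetical endpoint


def request_with_api_key(api_key):
    """Identify the calling project: the key travels as a query parameter."""
    return Request(f"{BASE_URL}?{urlencode({'key': api_key})}")


def request_with_user_token(access_token):
    """Identify the calling user: the token travels in the Authorization header."""
    return Request(BASE_URL, headers={"Authorization": f"Bearer {access_token}"})
```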

Protection of API keys

In general, API keys are not considered secure because they are typically accessible to clients, which makes it easy for someone to steal one. A stolen key has no expiration date, so it can be used indefinitely unless the project owner revokes or regenerates it. The restrictions you can place on an API key mitigate this, but there are better methods for authorization.
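One common way to reduce the exposure described above is to keep the key out of source code and out of logs. The following is a minimal sketch; the environment variable name `MY_SERVICE_API_KEY` and both helper functions are hypothetical.

```python
import os


def load_api_key(env_var="MY_SERVICE_API_KEY"):
    """Read the key from the environment; fail loudly if it is missing."""
    key = os.environ.get(env_var)
    if not key:
        raise RuntimeError(f"Set the {env_var} environment variable first")
    return key


def mask(key, visible=4):
    """Return a log-safe version of the key showing only its last characters."""
    return "*" * max(len(key) - visible, 0) + key[-visible:]
```

This does not make a key safe once it is shipped to a client, so it complements the key restrictions mentioned above and does not replace them.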

API Keys: When to Use?

An API may require API keys for part or all of its methods.

This makes sense to do if:

You want to block traffic from anonymous sources.

You want API keys to identify an application's traffic for the API producer, in case the application developer needs to work with the API producer to troubleshoot a problem or demonstrate their application's usage.

You wish to limit the number of API calls that are made.

You want to analyze API traffic to find usage trends. You can view application usage under APIs & services.

You want to use the API key to filter logs.
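Several of the uses listed above (limiting calls, spotting usage trends, filtering logs) come down to grouping traffic by API key. Here is a small sketch of that idea, assuming log records are simple `(api_key, path)` tuples; the record shape and function names are hypothetical.

```python
from collections import Counter


def calls_per_key(log_records):
    """Count requests per API key from (api_key, path) log records."""
    return Counter(api_key for api_key, _path in log_records)


def keys_over_quota(log_records, quota):
    """Return the keys that made more than `quota` calls, sorted for stable output."""
    counts = calls_per_key(log_records)
    return sorted(key for key, n in counts.items() if n > quota)
```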

API keys: When not to use?

Individual user identification – API keys are used to identify projects, not people

Secure authorization

Identifying the creators of a project

Step-by-step instructions on how to get Google API and OAuth credentials using the Google developer console.

Step 1

Open the Google Developer Console.

Step 2

Select your project or create a new project by clicking on the New project button

Step 3

Provide your project name, organization, and location, and click on create.

And that’s it. You have created a new project.

Step 4

In the left sidebar, go to Enabled APIs & services and click Credentials.

Step 5

Click Create Credentials.

To get your API key, click API key. Your project's API key is generated instantly.

To get your OAuth credentials

Select OAuth client ID from the Create Credentials drop-down menu.

Step 6

Here you need to create an application. A client ID is used to identify a single app to Google’s OAuth servers. If your app runs on multiple platforms, each will need its own client ID.

Step 7

Select the appropriate application type from the drop-down

The name of the client will be auto-generated. It is only used to identify the client in the console and is not shown to end users.

Step 8

Enter your URL for the Authorized JavaScript origins by clicking on Add URL

Provide your Authorized redirect URLs

Finally click on Create

Step 9

You will get an OAuth Client Id and Client Secret instantly.
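As a sketch of what the client ID is used for next, the function below builds the URL that sends a user to Google's OAuth 2.0 consent screen in the authorization code flow. The client ID, redirect URI, and scopes passed in are placeholders, and the helper name is hypothetical; the redirect URI must match one registered in Step 8. The client secret is not part of this URL, since it is only needed later when the returned code is exchanged for tokens.

```python
import secrets
from urllib.parse import urlencode

# Google's OAuth 2.0 authorization endpoint.
AUTH_ENDPOINT = "https://accounts.google.com/o/oauth2/v2/auth"


def build_authorization_url(client_id, redirect_uri, scopes, state=None):
    """Return (url, state); keep `state` to verify the redirect that comes back."""
    state = state or secrets.token_urlsafe(16)  # random value against CSRF
    params = {
        "client_id": client_id,
        "redirect_uri": redirect_uri,
        "response_type": "code",  # authorization code flow
        "scope": " ".join(scopes),
        "state": state,
    }
    return f"{AUTH_ENDPOINT}?{urlencode(params)}", state
```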

Epilogue

Getting Google API and OAuth credentials is an important step in developing applications that interact with Google services. It allows developers to access data from Google APIs and services in a secure and reliable way. With the correct setup, developers can create powerful applications that can be used by millions of users. In summary, getting Google API and OAuth credentials is essential for any developer wishing to build web applications that interact with Google services.

#google drive#google cloud#google#blog post#Google api#oauth#oauth tutorial#oauthsecurity#google security#web developers#software development#developers

0 notes

Text

Technology plays a vital role in everyone’s daily lives, from the simplest applications to the most creative inventions. Every web application has been built by web developers. Today, many students and young people want to become web developers to improve their career prospects.

#Web Development#Remote Work in Web Development#Web Development Certifications#Interview Tips for Web Developers#Salary Expectations in Web Development#Job Satisfaction in Web Development#Future of Web Development#Content Management Systems (CMS)#Website Optimization#Cross-browser Compatibility#Mobile Development#Web Security#APIs (Application Programming Interfaces)

0 notes

Text

"Bots on the internet are nothing new, but a sea change has occurred over the past year. For the past 25 years, anyone running a web server knew that the bulk of traffic was one sort of bot or another. There was googlebot, which was quite polite, and everyone learned to feed it - otherwise no one would ever find the delicious treats we were trying to give away. There were lots of search engine crawlers working to develop this or that service. You'd get 'script kiddies' trying thousands of prepackaged exploits. A server secured and patched by a reasonably competent technologist would have no difficulty ignoring these.

"...The surge of AI bots has hit Open Access sites particularly hard, as their mission conflicts with the need to block bots. Consider that Internet Archive can no longer save snapshots of one of the best open-access publishers, MIT Press, because of Cloudflare blocking. Who knows how many books will be lost this way? Or consider that the bots took down OAPEN, the world's most important repository of Scholarly OA books, for a day or two. That's 34,000 books that AI 'checked out' for two days. Or recent outages at Project Gutenberg, which serves 2 million dynamic pages and a half million downloads per day. That's hundreds of thousands of downloads blocked! The link checker at doab-check.ebookfoundation.org (a project I worked on for OAPEN) is now showing 1,534 books that are unreachable due to 'too many requests.' That's 1,534 books that AI has stolen from us! And it's getting worse.

"...The thing that gets me REALLY mad is how unnecessary this carnage is. Project Gutenberg makes all its content available with one click on a file in its feeds directory. OAPEN makes all its books available via an API. There's no need to make a million requests to get this stuff!! Who (or what) is programming these idiot scraping bots? Have they never heard of a sitemap??? Are they summer interns using ChatGPT to write all their code? Who gave them infinite memory, CPUs and bandwidth to run these monstrosities? (Don't answer.)

"We are headed for a world in which all good information is locked up behind secure registration barriers and paywalls, and it won't be to make money, it will be for survival. Captchas will only be solvable by advanced AIs and only the wealthy will be able to use internet libraries."

#ugh#AI#generative AI#literally a plagiarism machine#and before you're like “oH bUt Ai Is DoInG sO mUcH gOoD...” that's machine learning AI doing stuff like finding cancer#generative AI is just stealing and then selling plagiarism#open access#OA#MIT Press#OAPEN#Project Gutenberg#various AI enthusiasts just wrecking the damn internet by Ctrl+Cing all over the damn place and not actually reading a damn thing

42 notes

Text

I miss being able to just use an API with `curl`.

Remember that? Remember how nice that was?

You just typed/pasted the URL, typed/piped any other content, and then it just prompted you to type your password. Done. That's it.

Now you need to log in with a browser, find some obscure settings page with API keys and generate a key. Paternalism demands that since some people insecurely store their password for automatic reuse, no one can ever API with a password.

Fine-grained permissions for the key? Hope you got it right the first time. You don't mind having a blocking decision point sprung on you, do ya? Of course not, you're a champ. Here's some docs to comb through.

That is, if the service actually offers API keys. If it requires OAuth, then haha, did you really think you can just make a key and use it? you fool, you unwashed barbarian simpleton.

No, first you'll need to file this form to register an App, and that will give you two keys, okay, and then you're going to take those keys, and - no, stop, stop trying to use the keys, imbecile - now you're going to write a tiny little program, nothing much, just spin up a web server and open a browser and make several API calls to handle the OAuth flow.

Okay, got all that? Excellent, now just run that program with the two keys you have, switch back to the browser, approve the authorization, and now you have two more keys, ain't that just great? You can tell it's more secure because the number of keys and manual steps is bigger.

And now, finally, you can use all four keys to make that API call you wanted. For now. That second pair of keys might expire later.

20 notes

Text

10 web application firewall benefits to keep top of mind - CyberTalk

New Post has been published on https://thedigitalinsider.com/10-web-application-firewall-benefits-to-keep-top-of-mind-cybertalk/

EXECUTIVE SUMMARY:

These days, web-based applications handle everything from customer data to financial transactions. As a result, for cyber criminals, they represent attractive targets.

This is where Web Application Firewalls (or WAFs) come into play. A WAF functions as a private security guard for a web-based application or site; always on-guard, in search of suspicious activity, and capable of blocking potential attacks. But the scope of WAF protection tends to span beyond what most leaders are aware of.

In this article, discover 10 benefits of WAFs that cyber security decision-makers should keep top-of-mind, so as to align WAF functions with the overarching cyber security strategy.

1. Protection against OWASP Top 10 threats. A WAF can stop application layer attacks, including the OWASP Top 10 (with minimal tuning and no false positives). WAFs continuously update rule sets to align with the latest OWASP guidelines, reducing the probability of successful attacks.

2. API protection. WAFs offer specialized protection against API-specific threats, ensuring the integrity of data exchanges. WAFs can block threats like parameter tampering and can find abnormal behavioral patterns that could be indicative of API abuse.

Advanced WAFs can understand and validate complex API calls, ensuring that only legitimate requests are processed. They can also enforce rate limiting and access controls specific to different API endpoints.

3. Bot & DDoS protection. WAFs can distinguish between malicious and legitimate bot traffic, preventing DDoS threats, credential stuffing, content scraping and more. This area of WAF capability is taking on increasing importance, as bots are blazing across the web like never before, negatively impacting the bottom line and customer experiences.

4. Real-time intelligence. Modern WAFs leverage machine learning to analyze traffic patterns and to provide up-to-the-minute protection against emerging threats, enabling businesses to mitigate malicious instances before exploitation-at-scale can occur.

5. Compliance adherence. WAFs enable organizations to meet regulatory requirements, as they implement much-needed security controls and can provide detailed audit logs.

The granular logging and reporting capabilities available via WAF allow organizations to demonstrate due diligence in protecting sensitive data.

Many WAFs come with pre-configured rule sets designed to address specific compliance requirements, rendering it easier to maintain a compliant posture as regulations continue to evolve.

6. Reduced burden on development teams. Stopping attacks at the application layer enables development and IT teams to focus on core functionality, rather than on constantly patching security issues.

This “shift-left” approach to security can significantly accelerate development cycles and improve overall application quality. Additionally, the insights offered by WAFs can help developers understand common attack patterns, informing better security practices as everyone moves forward.

7. Customizable rule sets. Advanced WAFs offer the flexibility to create and fine-tune rules that are specific to an organization’s needs. This customization allows for the adaptation to unique application architecture and traffic patterns, minimizing false positives, while maintaining robust protection.

Organizations can create rules to address specific threats to their business, such as protecting against business logic attacks unique to their application.

And the ability to gradually implement and test new rules in monitoring mode before enforcing them ensures that security measures will not inadvertently disrupt legitimate business operations.
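The monitoring-versus-enforcing distinction can be illustrated with a toy rule evaluator. This is a deliberately simplified sketch, not how any particular WAF product works: the `Rule` class, its `enforce` flag, and the regular-expression matching are all assumptions made for illustration.

```python
import re
from dataclasses import dataclass


@dataclass
class Rule:
    name: str
    pattern: str           # regular expression matched against the request line
    enforce: bool = False  # False = monitoring mode: report the match, never block


def evaluate(rules, request_line):
    """Return (blocked, matched_rule_names) for one request."""
    matched = [r for r in rules if re.search(r.pattern, request_line, re.IGNORECASE)]
    blocked = any(r.enforce for r in matched)  # only enforced rules can block
    return blocked, [r.name for r in matched]
```

A new rule would start with `enforce=False`, so its matches can be reviewed for false positives before it is switched to blocking.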

8. Performance optimization. Many WAFs include content delivery network (CDN) capabilities, improving application performance and UX while maintaining security.

Caching content and distributing it globally can significantly reduce latency and improve load times for users worldwide. This dual functionality of security and performance optimization offers a compelling value proposition. Organizations can upgrade both their security posture and user satisfaction via a single cyber security solution.

9. Operational insights. WAFs present actionable operational insights pertaining to traffic patterns, attack trends and application behavior. These insights can drive continuous security posture improvement, inform risk assessments and help cyber security staff better allocate security resources.

10. Cloud-native security. As organizations migrate to the cloud, WAFs intended for cloud environments ensure consistent protection across both hybrid and multi-cloud infrastructure. Cloud-native WAFs can scale automatically with applications, offering uncompromising protection amidst traffic spikes or rapid cloud expansions.

Cloud-native WAFs also offer centralized management. This simplifies administration and ensures consistent policy enforcement. By virtue of the features available, these WAFs can provide enhanced protection against evolving threats.

Further thoughts

WAFs afford organizations comprehensive protection. When viewed not only as a security solution, but also as a business enablement tool, it becomes clear that WAFs are an integral component of an advanced cyber security strategy.

#10 web application firewall benefits to keep top of mind#Administration#amp#analyses#API#application layer#applications#approach#architecture#Article#Articles#audit#Behavior#bot#bot traffic#bots#Business#cdn#Cloud#cloud infrastructure#Cloud Security#cloud services#Cloud-Native#compliance#comprehensive#content#continuous#credential#credential stuffing#customer data

0 notes

Text

𝗪𝗲𝗲𝗸𝗹𝘆 𝗠𝗮𝗹𝘄𝗮𝗿𝗲 & 𝗧𝗵𝗿𝗲𝗮𝘁𝘀 𝗥𝗼𝘂𝗻𝗱𝘂𝗽 | 𝟭𝟬 𝗙𝗲𝗯 - 𝟭𝟲 𝗙𝗲𝗯 𝟮𝟬𝟮𝟱

1️⃣ 𝗙𝗜𝗡𝗔𝗟𝗗𝗥𝗔𝗙𝗧 𝗠𝗮𝗹𝘄𝗮𝗿𝗲 𝗘𝘅𝗽𝗹𝗼𝗶𝘁𝘀 𝗠𝗶𝗰𝗿𝗼𝘀𝗼𝗳𝘁 𝗚𝗿𝗮𝗽𝗵 𝗔𝗣𝗜 FINALDRAFT is targeting Windows and Linux systems, leveraging Microsoft Graph API for espionage. Source: https://www.elastic.co/security-labs/fragile-web-ref7707

2️⃣ 𝗦𝗸𝘆 𝗘𝗖𝗖 𝗗𝗶𝘀𝘁𝗿𝗶𝗯𝘂𝘁𝗼𝗿𝘀 𝗔𝗿𝗿𝗲𝘀𝘁𝗲𝗱 𝗶𝗻 𝗦𝗽𝗮𝗶𝗻 𝗮𝗻𝗱 𝗧𝗵𝗲 𝗡𝗲𝘁𝗵𝗲𝗿𝗹𝗮𝗻𝗱𝘀 Four distributors of the criminal-encrypted service Sky ECC were arrested in Spain and the Netherlands. Source: https://www.bleepingcomputer.com/news/legal/sky-ecc-encrypted-service-distributors-arrested-in-spain-netherlands/

3️⃣ 𝗔𝘀𝘁𝗮𝗿𝗼𝘁𝗵: 𝗡𝗲𝘄 𝟮𝗙𝗔 𝗣𝗵𝗶𝘀𝗵𝗶𝗻𝗴 𝗞𝗶𝘁 𝗧𝗮𝗿𝗴𝗲𝘁𝘀 𝗠𝗮𝗷𝗼𝗿 𝗘𝗺𝗮𝗶𝗹 𝗣𝗿𝗼𝘃𝗶𝗱𝗲𝗿𝘀 The Astaroth phishing kit is used to bypass 2FA and steal credentials from Gmail, Yahoo, AOL, O365, and third-party logins. Source: https://slashnext.com/blog/astaroth-a-new-2fa-phishing-kit-targeting-gmail-yahoo-aol-o365-and-3rd-party-logins/

4️⃣ 𝗥𝗮𝗻𝘀𝗼𝗺𝗛𝘂𝗯 𝗕𝗲𝗰𝗼𝗺𝗲𝘀 𝟮𝟬𝟮𝟰’𝘀 𝗧𝗼𝗽 𝗥𝗮𝗻𝘀𝗼𝗺𝘄𝗮𝗿𝗲 𝗚𝗿𝗼𝘂𝗽 RansomHub overtook competitors in 2024, hitting over 600 organisations worldwide. Source: https://www.group-ib.com/blog/ransomhub-never-sleeps-episode-1/

5️⃣ 𝗕𝗮𝗱𝗣𝗶𝗹𝗼𝘁 𝗖𝗮𝗺𝗽𝗮𝗶𝗴𝗻: 𝗦𝗲𝗮𝘀𝗵𝗲𝗹𝗹 𝗕𝗹𝗶𝘇𝘇𝗮𝗿𝗱 𝗧𝗮𝗿𝗴𝗲𝘁𝘀 𝗚𝗹𝗼𝗯𝗮𝗹 𝗡𝗲𝘁𝘄𝗼𝗿𝗸𝘀 The Seashell Blizzard subgroup runs a multiyear global operation for continuous access and data theft. Source: https://www.microsoft.com/en-us/security/blog/2025/02/12/the-badpilot-campaign-seashell-blizzard-subgroup-conducts-multiyear-global-access-operation/

Additional Cybersecurity News:

🟢 𝗔𝗽𝗽𝗹𝗲 𝗙𝗶𝘅𝗲𝘀 𝗔𝗰𝘁𝗶𝘃𝗲𝗹𝘆 𝗘𝘅𝗽𝗹𝗼𝗶𝘁𝗲𝗱 𝗭𝗲𝗿𝗼-𝗗𝗮𝘆 Apple patches a critical zero-day vulnerability affecting iOS devices. Source: https://www.techspot.com/news/106731-apple-fixes-another-actively-exploited-zero-day-vulnerability.html

🟠 𝗝𝗮𝗽𝗮𝗻 𝗜𝗻𝘁𝗿𝗼𝗱𝘂𝗰𝗲𝘀 "𝗔𝗰𝘁𝗶𝘃𝗲 𝗖𝘆𝗯𝗲𝗿 𝗗𝗲𝗳𝗲𝗻𝗰𝗲" 𝗕𝗶𝗹𝗹 Japan is moving towards offensive cybersecurity tactics with a new legislative push. Source: https://www.darkreading.com/cybersecurity-operations/japan-offense-new-cyber-defense-bill

🔴 𝗖𝗿𝗶𝘁𝗶𝗰𝗮𝗹 𝗡𝗩𝗜𝗗𝗜𝗔 𝗔𝗜 𝗩𝘂𝗹𝗻𝗲𝗿𝗮𝗯𝗶𝗹𝗶𝘁𝘆 𝗗𝗶𝘀𝗰𝗼𝘃𝗲𝗿𝗲𝗱 A severe flaw in NVIDIA AI software has been discovered, enabling container escapes. Source: https://www.wiz.io/blog/nvidia-ai-vulnerability-deep-dive-cve-2024-0132

6 notes

Text

Boost Your Website Performance with URL Monitor: The Ultimate Solution for Seamless Web Management

In today's highly competitive digital landscape, maintaining a robust online presence is crucial. Whether you're a small business owner or a seasoned marketer, optimizing your website's performance can be the difference between success and stagnation.

Enter URL Monitor, an all-encompassing platform designed to revolutionize how you manage and optimize your website. By offering advanced monitoring and analytics, URL Monitor ensures that your web pages are indexed efficiently, allowing you to focus on scaling your brand with confidence.

Why Website Performance Optimization Matters

Website performance is the backbone of digital success. A well-optimized site not only enhances user experience but also improves search engine rankings, leading to increased visibility and traffic. URL Monitor empowers you to stay ahead of the curve by providing comprehensive insights into domain health and URL metrics. This tool is invaluable for anyone serious about elevating their online strategy.

Enhancing User Experience and SEO

A fast, responsive website keeps visitors engaged and satisfied. URL Monitor tracks domain-level performance, ensuring your site runs smoothly and efficiently. With the use of the Web Search Indexing API, URL Monitor facilitates faster and more effective page crawling, optimizing search visibility. This means your website can achieve higher rankings on search engines like Google and Bing, driving more organic traffic to your business.

Comprehensive Monitoring with URL Monitor

One of the standout features of URL Monitor is its ability to provide exhaustive monitoring of your website's health. Through automatic indexing updates and daily analytics tracking, this platform ensures you have real-time insights into your web traffic and performance.

Advanced URL Metrics

Understanding URL metrics is essential for identifying areas of improvement on your site. URL Monitor offers detailed tracking of these metrics, allowing you to make informed decisions that enhance your website's functionality and user engagement. By having a clear picture of how your URLs are performing, you can take proactive steps to optimize them for better results.

Daily Analytics Tracking

URL Monitor's daily analytics tracking feature provides you with consistent updates on your URL indexing status and search analytics data. This continuous flow of information allows you to respond quickly to changes, ensuring your website remains at the top of its game. With this data, you can refine your strategies and maximize your site's potential.

Secure and User-Friendly Interface

In addition to its powerful monitoring capabilities, URL Monitor is also designed with user-friendliness in mind. The platform offers a seamless experience, allowing you to navigate effortlessly through its features without needing extensive technical knowledge.

Data Security and Privacy

URL Monitor prioritizes data security, offering read-only access to your Google Search Console data. This ensures that your information is protected and private, with no risk of sharing sensitive data. You can trust that your website's performance metrics are secure and reliable.

Flexible Subscription Model for Ease of Use

URL Monitor understands the importance of flexibility, which is why it offers a subscription model that caters to your needs. With monthly billing and no long-term commitments, you have complete control over your subscription. This flexibility allows you to focus on growing your business without the burden of unnecessary constraints.

Empowering Business Growth

By providing a user-friendly interface and secure data handling, URL Monitor allows you to concentrate on what truly matters—scaling your brand. The platform's robust analytics and real-time insights enable you to make data-driven decisions that drive performance and growth.

Conclusion: Elevate Your Website's Potential with URL Monitor

In conclusion, URL Monitor is the ultimate solution for anyone seeking hassle-free website management and performance optimization. Its comprehensive monitoring, automatic indexing updates, and secure analytics make it an invaluable tool for improving search visibility and driving business growth.

Don't leave your website's success to chance. Discover the power of URL Monitor and take control of your online presence today. For more information, visit URL Monitor and explore how this innovative platform can transform your digital strategy. Unlock the full potential of your website and focus on what truly matters—scaling your brand to new heights.

3 notes

Text

ok since i've been sharing some piracy stuff i'll talk a bit about how my personal music streaming server is set up. the basic idea is: i either buy my music on bandcamp or download it on soulseek. all of my music is stored on an external hard drive connected to a donated laptop that's next to my house's internet router. this laptop is always on, and runs software that lets me access and stream any song in my collection to my phone or to other computers. here's the detailed setup:

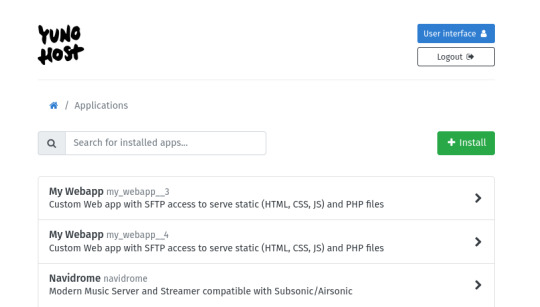

my home server is an old thinkpad laptop with a broken keyboard that was donated to me by a friend. it runs yunohost, a linux distribution that makes it simpler to reuse old computers as servers in this way: it gives you a nice control panel to install and manage all kinds of apps you might want to run on your home server, + it handles the security part by having a user login page & helping you install an https certificate with letsencrypt.

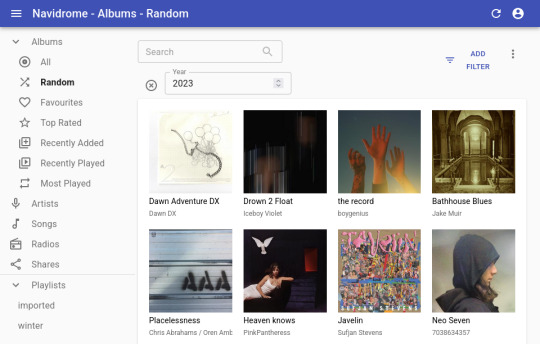

***

to stream my music collection, i use navidrome. this software is available to install from the yunohost control panel, so it's straightforward to install. what it does is take a folder with all your music and let you browse and stream it, either via its web interface or through a bunch of apps for android, ios, etc. it uses the subsonic protocol, so any app that says it works with subsonic should work with navidrome too.
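for the curious, here's a rough sketch of what a subsonic client does when it talks to the server: it sends the username plus a token made from md5(password + random salt), so the password itself isn't in the url. the server address, username and client name below are placeholders, and the helper function is made up for illustration; the parameter names (u, t, s, v, c, f) come from the subsonic api.

```python
import hashlib
import secrets
from urllib.parse import urlencode


def subsonic_url(server, username, password, endpoint="ping.view",
                 client="my-script", salt=None):
    """Build an authenticated Subsonic API URL without sending the password."""
    salt = salt or secrets.token_hex(8)  # fresh random salt per request
    token = hashlib.md5((password + salt).encode("utf-8")).hexdigest()
    params = {
        "u": username,
        "t": token,     # md5(password + salt)
        "s": salt,
        "v": "1.16.1",  # protocol version the client speaks
        "c": client,    # name of the calling app
        "f": "json",    # response format
    }
    return f"{server.rstrip('/')}/rest/{endpoint}?{urlencode(params)}"
```

opening the resulting url against your own server should return a small status response if the login is right.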

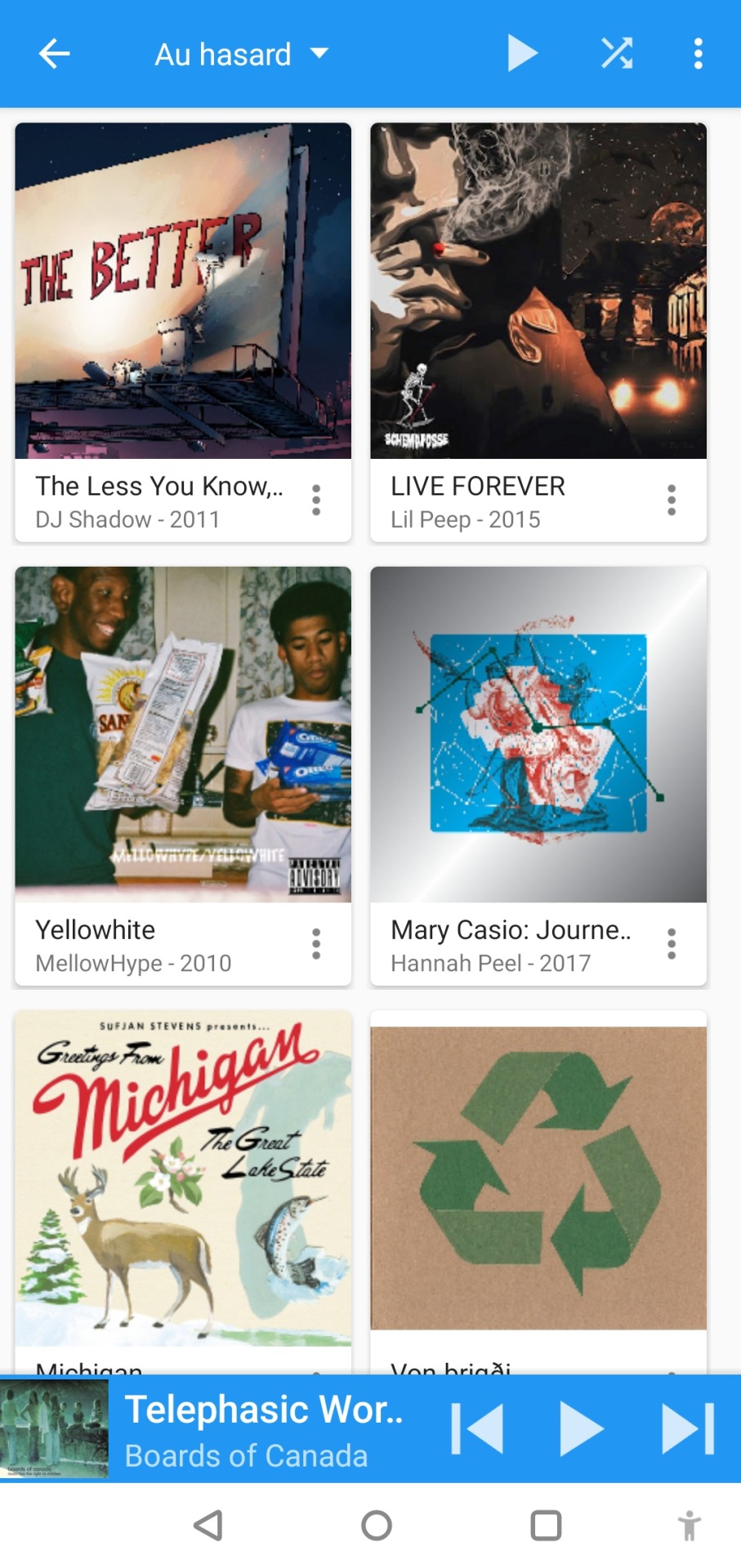

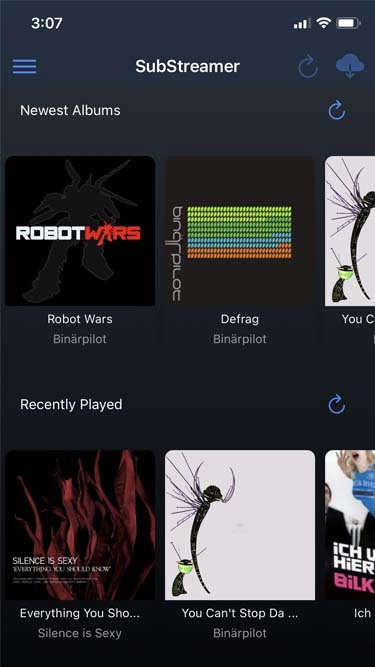

***

to listen to my music on my phone, i use DSub. It's an app that connects to any server that follows the subsonic API, including navidrome. you just have to give it the address of your home server, and your username and password, and it fetches your music and allows you to stream it. as mentioned previously, there's a bunch of alternative apps for android, ios, etc. so go take a look and make your pick. i've personally also used and enjoyed substreamer in the past. here are screenshots of both:

***

to listen to my music on my computer, i use tauon music box. i was a big fan of clementine music player years ago, but it got abandoned, and the replacement (strawberry music player) looks super dated now. tauon is very new to me, so i'm still figuring it out, but it connects to subsonic servers and it looks pretty so it's fitting the bill for me.

***

to download new music onto my server, i use slskd which is a soulseek client made to run on a web server. soulseek is a peer-to-peer software that's found a niche with music lovers, so for anything you'd want to listen to there's a good chance that someone on soulseek has the file and will share it with you. the official soulseek client is available from the website, but i'm using a different software that can run on my server and that i can access anywhere via a webpage, slskd. this way, anytime i want to add music to my collection, i can just go to my server's slskd page, download the files, and they directly go into the folder that's served by navidrome.

slskd does not have a yunohost package, so the trick to make it work on the server is to use yunohost's reverse proxy app, and point it to the http port of slskd 127.0.0.1:5030, with the path /slskd and with forced user authentication. then, run slskd on your server with the flags --url-base slskd, --no-auth (it breaks otherwise, so it's best to just use yunohost's user auth on the reverse proxy) and --no-https (which has no downsides since the https is given by the reverse proxy anyway).

***

to keep my music collection organized, i use beets. this is a command line software that checks that all of the tags on your music are correct and puts the file in the correct folder (e.g. artist/album/01 trackname.mp3). it's a pretty complex program with a ton of features and settings, i like it to make sure i don't have two copies of the same album in different folders, and to automatically download the album art and the lyrics to most tracks, etc. i'm currently re-working my config file for beets, but i'd be happy to share if someone is interested.

that's my little system :) i hope it gives the inspiration to someone to ditch spotify for the new year and start having a personal mp3 collection of their own.

34 notes

Text

Discover the future of cryptocurrency trading with Cupo.ai, an advanced platform designed to maximize your profits while keeping you in full control of your funds. Leveraging cutting-edge algorithms and artificial intelligence, Cupo.ai analyzes market trends to execute trades that enhance your earnings and minimize risks.

Why Choose Cupo.ai?

Your Funds, Your Control: With Cupo.ai, your assets remain securely in your exchange accounts. We never touch your money, ensuring complete control and peace of mind.

Seamless Web Application: Access the full Cupo ecosystem without any installation. Initiate auto trading, monitor balances, review profitability, and access transaction history—all in one user-friendly platform.

Reliability and Security: Our spot trading algorithm buys and holds cryptocurrency in your exchange wallet until the market price increases, selling it to generate profit. We prioritize data security, ensuring your information is protected.

Autonomous Trading: The adaptive algorithm automatically signals when to buy and sell cryptocurrencies based on market movements, directly managing assets in your exchange account.

Getting Started is Easy:

1. Select a crypto exchange from the available options.

2. Input the API keys generated from your account on the chosen exchange.

3. Add up to four currency pairs of your choice.

4. Press “Start auto-trading” and let Cupo.ai handle the rest.

Join a Thriving Community:

With over 2,000 customers worldwide and more than $8 million in daily transactions, Cupo.ai is trusted by a global community of investors. Our integration with leading crypto exchanges like Binance, Coinbase, and Bitfinex ensures a seamless trading experience.

Flexible Subscription Plans:

Choose a plan that suits your needs and enjoy all the benefits of Cupo.ai:

180 Days: Access to all main platform functions for 180 days.

365 Days: Access to all platform functions for 365 days plus 90 additional days as a gift.

1095 Days: Access to all platform functions for 730 days plus one more year for free.

Experience the future of cryptocurrency trading with Cupo.ai—where innovation meets security, and your financial goals are within reach.

6 notes

Text

Django REST Framework: Authentication and Permissions

Secure Your Django API: Authentication and Permissions with DRF

Introduction

Django REST Framework (DRF) is a powerful toolkit for building Web APIs in Django. One of its key features is the ability to handle authentication and permissions, ensuring that your API endpoints are secure and accessible only to authorized users. This article will guide you through setting up authentication and permissions in DRF, including examples and…
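The post is cut off before its own examples, so the following is only a minimal sketch of what such a setup could look like, not the author's code. It assumes a hypothetical project whose settings.py enables DRF's token authentication and requires a logged-in user by default.

```python
# settings.py (fragment) for a hypothetical project; not taken from the post

INSTALLED_APPS = [
    # ... Django's own apps and your project's apps go here ...
    "rest_framework",
    "rest_framework.authtoken",  # provides the token table used by TokenAuthentication
]

REST_FRAMEWORK = {
    # how DRF works out who is making the request
    "DEFAULT_AUTHENTICATION_CLASSES": [
        "rest_framework.authentication.TokenAuthentication",
    ],
    # what an identified (or anonymous) user is allowed to do by default
    "DEFAULT_PERMISSION_CLASSES": [
        "rest_framework.permissions.IsAuthenticated",
    ],
}
```

These are project-wide defaults; an individual view can still override them through its `authentication_classes` and `permission_classes` attributes.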

#custom permissions#Django API security#Django JWT integration#Django REST Framework#DRF authentication#Python web development#token-based authentication

0 notes

Text

Crafting Web Applications for Businesses That Are Responsive, Secure, and Scalable

Hello, Readers!

I’m Nehal Patil, a passionate freelance web developer dedicated to building powerful web applications that solve real-world problems. With a strong command over Spring Boot, React.js, Bootstrap, and MySQL, I specialize in crafting web apps that are not only responsive but also secure, scalable, and production-ready.

Why I Started Freelancing

After gaining experience in full-stack development and completing several personal and academic projects, I realized that I enjoy building things that people actually use. Freelancing allows me to work closely with clients, understand their unique challenges, and deliver custom web solutions that drive impact.

What I Do

I build full-fledged web applications from the ground up. Whether it's a startup MVP, a business dashboard, or an e-commerce platform, I ensure every project meets the following standards:

Responsive: Works seamlessly on mobile, tablet, and desktop.

Secure: Built with best practices to prevent common vulnerabilities.

Scalable: Designed to handle growth—be it users, data, or features.

Maintainable: Clean, modular code that’s easy to understand and extend.

My Tech Stack

I work with a powerful tech stack that ensures modern performance and flexibility:

Frontend: React.js + Bootstrap for sleek, dynamic, and responsive UI

Backend: Spring Boot for robust, production-level REST APIs

Database: MySQL for reliable and structured data management

Bonus: Integration, deployment support, and future-proof architecture

What’s Next?

This blog marks the start of my journey to share insights, tutorials, and case studies from my freelance experiences. Whether you're a business owner looking for a web solution or a fellow developer curious about my workflow—I invite you to follow along!

If you're looking for a developer who can turn your idea into a scalable, secure, and responsive web app, feel free to connect with me.

Thanks for reading, and stay tuned!

2 notes
