#Web Scraping Services


Text

Abode Enterprise

Abode Enterprise is a reliable provider of data solutions and business services, with over 15 years of experience, serving clients in the USA, UK, and Australia. We offer a variety of services, including data collection, web scraping, data processing, mining, and management. We also provide data enrichment, annotation, business process automation, and eCommerce product catalog management. Additionally, we specialize in image editing and real estate photo editing services.

With more than 15 years of experience, our goal is to help businesses grow and become more efficient through customized solutions. At Abode Enterprise, we focus on quality and innovation, helping organizations make the most of their data and improve their operations. Whether you need useful data insights, smoother business processes, or better visuals, we’re here to deliver great results.

#Data Collection Services#Web Scraping Services#Data Processing Service#Data Mining Services#Data Management Services#Data Enrichment Services#Business Process Automation Services#Data Annotation Services#Real Estate Photo Editing Services#eCommerce Product Catalog Management Services#Image Editing service

1 note


Text

Best Tools & Techniques for Data Extraction from Multiple Sources

Data extraction is common and growing rapidly across the business landscape. As technology advances, it is vital to keep tools and techniques up to date to get the best results from extracted data. Read on for details about tools and techniques for data extraction services.

#data extraction services#data scraping services#data extraction company#data digitization#web data extraction services#data extraction services india#data extraction companies#outsource data extraction#outsource data extraction services

3 notes


Text

Market Research with Web Data Solutions – Dignexus

6 notes


Text

Lensnure Solutions is a passionate web scraping and data extraction company that makes every possible effort to add value for its customers and to make the process easy and quick. The company has been acknowledged as a prime web crawler for its quality services in top industries such as Travel, eCommerce, Real Estate, Finance, Business, Social Media, and many more.

We wish to deliver the best to our customers, as that is our priority. We are always ready to take on challenges and grab the right opportunity.

3 notes


Text

Lensnure Solutions provides top-notch food delivery and restaurant data scraping services so you can benefit from food data extracted from restaurant listings and food delivery platforms such as Zomato, Uber Eats, Deliveroo, Postmates, Swiggy, delivery.com, Grubhub, Seamless, DoorDash, and many more. We help you extract large amounts of valuable food data from your target websites using our cutting-edge data scraping techniques.

Our food delivery data scraping services deliver real-time, dynamic data including menu items, restaurant names, pricing, delivery times, contact information, discounts, offers, and locations in the file formats you need, such as CSV, JSON, and XLSX.

Read More: Food Delivery Data Scraping

#data extraction#lensnure solutions#web scraping#web scraping services#food data scraping#food delivery data scraping#extract food ordering data#Extract Restaurant Listings Data

2 notes


Text

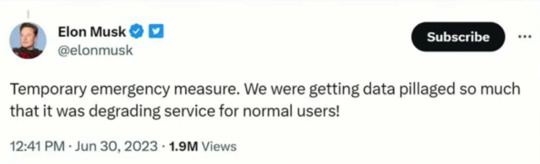

Fascinated that the owners of social media sites see API usage and web scraping as "data pillaging" -- immoral theft! Stealing! and yet, if you or I say that we should be paid for the content we create on social media the idea is laughed out of the room.

Social media is worthless without people and all the things we create, do, and say.

It's so valuable that these boys are trying to lock it in a vault.

#socail media#data mining#web scraping#twitter#reddit#you are the product#free service#free as in privacy invasion#pay me for that banger tweet you wretched nerd

8 notes


Text

How to Extract Amazon Product Prices Data with Python 3

Web scraping automates the collection of data from websites. In this blog, we will create an Amazon product data scraper that collects product prices and details. We will build this simple web extractor using SelectorLib and Python and run it in the console.
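As a rough illustration of the parsing step only, here is a minimal sketch that pulls a product name and price out of an HTML fragment. It swaps in Python's standard-library html.parser rather than SelectorLib so it runs without extra installs. The sample markup, the `productTitle` id, and the `price` class are assumptions made up for this example; real Amazon pages are structured differently and change often.

```python
from html.parser import HTMLParser

# Hypothetical product-page fragment (an assumption for illustration only).
SAMPLE_HTML = """
<div id="product">
  <span id="productTitle"> Example Wireless Mouse </span>
  <span class="price">$24.99</span>
</div>
"""


class ProductParser(HTMLParser):
    """Collects the text of elements whose id or class marks a wanted field."""

    def __init__(self):
        super().__init__()
        self.fields = {}
        self._current = None  # name of the field currently being captured

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if attrs.get("id") == "productTitle":
            self._current = "name"
        elif attrs.get("class") == "price":
            self._current = "price"

    def handle_data(self, data):
        # Store non-empty text that appears inside a wanted element.
        if self._current and data.strip():
            self.fields[self._current] = data.strip()

    def handle_endtag(self, tag):
        self._current = None


def parse_product(html):
    parser = ProductParser()
    parser.feed(html)
    parser.close()
    fields = parser.fields
    # Convert a string such as "$24.99" into a float.
    if "price" in fields:
        fields["price"] = float(fields["price"].lstrip("$").replace(",", ""))
    return fields


product = parse_product(SAMPLE_HTML)
print(product)
```

In a real scraper the HTML would come from an HTTP response, and the field selectors would live in a separate configuration file so they can be updated when the page layout changes.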

#webscraping#data extraction#web scraping api#Amazon Data Scraping#Amazon Product Pricing#ecommerce data scraping#Data EXtraction Services

3 notes


Text

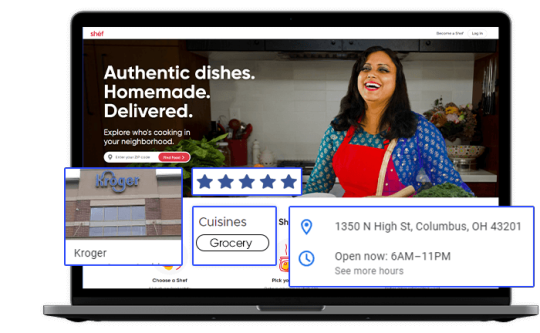

Kroger Grocery Data Scraping | Kroger Grocery Data Extraction

Shopping for Kroger groceries online has become very common these days. At Foodspark, we scrape Kroger grocery app data with our Kroger grocery data scraping API and convert the data into appropriate informational patterns and statistics.

#food data scraping services#restaurantdataextraction#restaurant data scraping#web scraping services#grocerydatascraping#zomato api#fooddatascrapingservices#Scrape Kroger Grocery Data#Kroger Grocery Websites Apps#Kroger Grocery#Kroger Grocery data scraping company#Kroger Grocery Data#Extract Kroger Grocery Menu Data#Kroger grocery order data scraping services#Kroger Grocery Data Platforms#Kroger Grocery Apps#Mobile App Extraction of Kroger Grocery Delivery Platforms#Kroger Grocery delivery#Kroger grocery data delivery

2 notes


Text

HMS2, or Heavy Melting Scrap 2, is a steel scrap classification used in the steel industry. It represents a category of steel scrap that is not as heavy or bulky as HMS-1 but is still suitable for recycling into new steel products. Here are some key characteristics of HMS-2 steel and its marketing scope in the European market:

Characteristics of HMS-2 steel:

Composition: HMS-2 includes various types of ferrous scrap, such as smaller pieces of structural steel, lighter materials, and miscellaneous steel parts. It may contain a mix of steel types and alloys.

Size and shape: HMS-2 can come in various shapes and sizes, including sheets, plates, tubes, and smaller structural components. It is typically less massive and dense than HMS-1.

Contaminants: Although HMS-2 is generally cleaner than lower-grade scrap types, it may contain a slightly higher percentage of contaminants than HMS-1. These contaminants can include coatings, paints, rust, and other non-metallic substances.

Marketing scope of HMS-2 steel in the European market

Demand: The European steel scrap market, including HMS-2, is driven by the region’s robust steel industry, construction projects, infrastructure development, and manufacturing activities. Demand for steel scrap is influenced by the raw material needs of these sectors. Europe places great emphasis on recycling and sustainability. The price of HMS-2 steel in the European market is influenced by supply and demand dynamics as well as global steel prices. European steel producers typically have specific quality standards for scrap materials, including HMS-2.

EDELSTAHL VIRAT IBERICA is an emerging importer, exporter, and buyer of #HMS1, #HMS2, ferrous scrap, electronic motor scrap, and shredded scrap in #Portugal…

Learn more: https://moldsteel.eu/recycling-products/

WhatsApp chat: +351-920016150 Email: [email protected]

#europe#porto#portugal#din2738#edelstashlviratibrica#oportunidades#viratsteels#empresas#b2b#agricultura#hms1#HMS2#web scraping services#recycling#mining#hss steel#turkiye

3 notes


Text

Business Processing Services Company

Fusion Digitech is a leading business processing services company in the USA, offering comprehensive solutions tailored to streamline operations and boost efficiency. With a strong commitment to quality, innovation, and client satisfaction, we specialize in data entry, document management, digital transformation, and customer support services. Our team of skilled professionals leverages the latest technology to deliver cost-effective and scalable solutions that help businesses optimize resources and focus on core objectives. Whether you’re a startup or an enterprise, Fusion Digitech is your trusted partner in driving productivity and growth through reliable business processing services. Experience excellence with Fusion Digitech.

0 notes

Text

Unlock the Power of Data with Uniquesdata's Data Scraping Services!

In today’s data-driven world, timely and accurate information is key to gaining a competitive edge. 🌐

At Uniquesdata, our Data Scraping Services provide businesses with structured, real-time data extracted from various online sources. Whether you're looking to enhance your e-commerce insights, analyze competitors, or improve decision-making processes, we've got you covered!

💼 With expertise across industries such as e-commerce, finance, real estate, and more, our tailored solutions make data accessible and actionable.

📈 Let’s connect and explore how our data scraping services can drive value for your business.

#web scraping services#data scraping services#database scraping service#web scraping company#data scraping service#data scraping company#data scraping companies#web scraping companies#web scraping services usa#web data scraping services#web scraping services india

3 notes


Text

The Role of Custom Web Data Extraction: Enhancing Business Intelligence and Competitive Advantage

Your off-the-shelf scraping tool worked perfectly last month. Then your target website updated their layout. Everything broke.

Your data pipeline stopped. Your competitive intelligence disappeared. Your team scrambled to fix scripts that couldn’t handle the new structure.

This scenario repeats across thousands of businesses using off-the-shelf extraction tools. Here’s the problem: 89% of leaders recognize web data’s importance. But standardized solutions fail when websites fight back with anti-bot defenses, dynamic content, or simple redesigns.

Custom extraction solves these problems. AI-powered systems see websites like humans do. They adapt automatically when things change.

This article reveals how custom web data extraction delivers reliable intelligence where off-the-shelf tools fail. You’ll discover why tailored solutions outperform one-size-fits-all approaches. You’ll also get to see a detailed industry-specific guide showing how business leaders solve their most complex data challenges.

Beyond Basic Scraping: What Makes Custom Web Data Extraction Different

Basic tools rely on rigid scripts. They expect websites to stay frozen in time. That’s simply not how the modern web works.

Today’s websites use sophisticated blocking techniques:

Rotating CAPTCHA challenges.

Browser fingerprinting.

IP rate limiting.

Complex JavaScript frameworks that render content client-side.

Custom solutions overcome these barriers. They use advanced capabilities you won’t find in basic tools.

Here’s what sets custom web data extraction apart:

Tailored architecture designed for your specific needs and target sources.

AI-powered browsers that render pages exactly as humans see them.

Intelligent IP rotation through thousands of addresses to avoid detection.

Automatic adaptation when target websites change their structure.

Enterprise-grade scale monitoring millions of pages across thousands of sources.

Basic tools might handle dozens of sites with hundreds of results. But they require constant babysitting from your team. Every website redesign breaks your scripts. Every new blocking technique stops your data flow.

On the other hand, enterprise-grade custom solutions monitor thousands of sources simultaneously. Their scrapers extract millions of data points with pinpoint accuracy and adapt automatically when sites change structure.

But here’s what really matters: intelligent data processing.

Raw scraped data is messy and inconsistent. Tailored solutions transform this chaos into structured intelligence by:

Cleaning and standardizing information automatically.

Matching products across different retailers despite varying naming conventions.

Identifying and flagging anomalies that could indicate data quality issues.

Structuring unstructured data into analysis-ready formats.
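To make the cleaning and product-matching steps above concrete, here is a minimal sketch using only Python's standard library. It is not how any particular provider does it: the normalization rules, the `match_products` helper, the 0.8 threshold, and the product names are all assumptions chosen for illustration. Production systems typically add identifiers such as GTINs or learned matching models.

```python
import re
from difflib import SequenceMatcher


def normalize(name):
    """Lowercase, drop punctuation, and sort tokens so word order is ignored."""
    tokens = re.findall(r"[a-z0-9]+", name.lower())
    return " ".join(sorted(tokens))


def similarity(a, b):
    """Similarity in [0, 1] between two product names after normalization."""
    return SequenceMatcher(None, normalize(a), normalize(b)).ratio()


def match_products(ours, theirs, threshold=0.8):
    """Map each of our names to the closest competitor name, or None."""
    matches = {}
    for name in ours:
        best = max(theirs, key=lambda other: similarity(name, other))
        matches[name] = best if similarity(name, best) >= threshold else None
    return matches


# Hypothetical catalog entries from two retailers.
our_catalog = ["Acme Wireless Mouse M100 (Black)", "Zeta Blender"]
competitor_catalog = [
    "ACME M100 Wireless Mouse - Black",
    "Acme Wired Keyboard K200",
]

matches = match_products(our_catalog, competitor_catalog)
print(matches)
```

Names that fall below the threshold map to None, which is one simple way to flag records for manual review instead of forcing a bad match.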

Industry research reveals that the technical barriers are real. 82% of organizations need help overcoming data collection challenges:

55% face IP blocking.

52% struggle with CAPTCHAs.

56% deal with dynamic content that traditional tools can’t handle.

This is why sophisticated businesses partner with experienced providers like Forage AI. We’ve perfected these capabilities over decades of experience and provide you with enterprise-grade capabilities without the headaches of maintaining complex infrastructure.

Now that you understand what makes custom extraction powerful, let’s see how this capability transforms the core business functions that drive competitive advantage.

Transforming Business Intelligence Across Key Functions

Custom web data extraction doesn’t just collect information. It revolutionizes how organizations understand their markets, customers, and competitive landscape.

Here’s how it transforms three critical business intelligence areas:

Real-Time Competitive Analysis

Forget checking competitor websites once a week. Custom extraction provides continuous competitive surveillance. It captures changes the moment they happen.

Your system monitors:

Pricing changes and product launches across competitor portfolios.

Executive appointments and organizational restructuring at target companies.

Regulatory filings and compliance updates from government sources.

Market expansion and strategic partnerships across your industry.

The competitive advantage:

Shift from reactive to proactive strategic positioning.

Respond within hours instead of days when competitors make a move.

Anticipate market shifts before other players spot them.

Position strategically based on live competitive intelligence.

Customer Intelligence & Market Insights

Understanding your customers means looking beyond your own data. You need to see how they behave across the entire market.

Custom extraction aggregates customer sentiment, preferences, and feedback from every relevant touchpoint online.

Comprehensive customer intelligence includes:

Review patterns across all major platforms to identify valued features.

Social media conversations to spot emerging trends before mainstream awareness.

Forum discussions to understand unmet needs representing new opportunities.

Purchase behavior signals across competitor platforms and review sites.

Strategic insights you gain:

Why customers choose competitors over you.

What actually drives their purchase decisions.

How their preferences evolve over time.

Which features and benefits resonate most strongly with your target market.

Operational Intelligence

Smart organizations use web data to optimize operations beyond marketing and sales. Custom extraction provides the external intelligence that makes internal operations more efficient and strategic.

Supply chain optimization through:

Supplier monitoring of websites, industry news, and regulatory announcements.

Commodity price tracking and shipping delay alerts.

Geopolitical event monitoring that could affect procurement strategies.

Risk management enhancement via:

Early warning signals from news sources and regulatory sites.

Compliance issue identification before they impact operations.

Reputation threat monitoring across digital channels.

Strategic planning support including:

Competitor expansion intelligence and market opportunity identification.

Industry trend analysis that shapes future strategy.

Market condition assessment for long-term decision-making.

This operational intelligence enables informed strategic planning. You gain comprehensive context for critical business decisions.

With these transformed business functions providing superior market intelligence, you’re positioned to create sustainable competitive advantages. But how exactly does this intelligence translate into lasting business benefits? Let’s examine the specific advantages that compound over time.

Creating Sustainable Competitive Advantages

The real power of custom web data extraction isn’t just better information. It’s the systematic advantages that compound over time. Your organization becomes increasingly difficult for competitors to match.

Speed and Agility

Research shows that 73% of organizations achieve quicker decision-making through systematic web data collection. But speed isn’t just about faster decisions. It’s about being first to market opportunities.

Immediate competitive benefits:

Capitalize on competitor pricing errors immediately rather than discovering them days later.

Adjust strategy while competitors are still gathering information.

Position yourself for new opportunities while others are still analyzing.

Compounding speed advantages:

Each quick response strengthens your market position. Customers associate your brand with market leadership. New opportunities become easier to capture.

Consider dynamic pricing strategies. They adjust in real-time based on competitor actions, inventory levels, and demand signals. Organizations using this approach report revenue increases of 5-25% compared to static pricing models.
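A dynamic pricing rule can be as simple as the sketch below, which reacts to competitor prices only and ignores the inventory and demand signals mentioned above. The `reprice` function, the 10% minimum margin, and the one-cent undercut are assumptions for illustration, not a recommended strategy.

```python
def reprice(cost, competitor_prices, min_margin=0.10, undercut=0.01, list_price=None):
    """Undercut the cheapest competitor without dropping below a margin floor.

    cost: our unit cost
    competitor_prices: prices scraped from competitor listings
    min_margin: minimum markup over cost that we will accept
    undercut: amount to go below the cheapest competitor
    list_price: optional ceiling for the price
    """
    floor = round(cost * (1 + min_margin), 2)
    if not competitor_prices:
        # Nothing to react to: fall back to the list price, or the floor.
        return list_price if list_price is not None else floor
    price = min(competitor_prices) - undercut
    if list_price is not None:
        price = min(price, list_price)
    # The floor wins over every other rule, so we never sell below margin.
    price = max(price, floor)
    return round(price, 2)


print(reprice(10.0, [14.99, 13.49]))  # undercuts the cheapest competitor
print(reprice(10.0, [10.50]))         # competitor is below our floor
```

Applying the floor last is a deliberate choice: a scraping error that reports an absurdly low competitor price cannot push the result below cost plus margin.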

Complete Market Coverage

While competitors rely on off-the-shelf tools that have limited coverage, custom extraction provides 360-degree market visibility. Industry research indicates that 98% of organizations need more data of at least one type. Tailored solutions eliminate this limitation entirely.

Your monitoring advantage includes:

Direct competitors and adjacent markets that could affect your business.

Pricing, inventory, promotions plus customer sentiment and regulatory changes.

Primary markets plus possibilities of international expansion.

Current conditions and emerging trends before they become obvious.

The scale difference is striking. Simple extraction tools can only handle dozens of products from a few sites before breaking down. Custom extraction monitors thousands of sources continuously with high accuracy. This creates market intelligence that’s simply impossible with off-the-shelf solutions.

Predictive Analytics Capability

With comprehensive, real-time data flowing systematically, you can build predictive capabilities. You anticipate market changes rather than just responding to them.

This is where Forage AI’s expertise becomes critical. We process data from 500M+ websites with AI-powered techniques, transforming raw information into strategic insights. 53% of organizations use public web data specifically to build the AI models that power these predictive insights.

Predictive intelligence detects:

Customer churn signals weeks before accounts show obvious warning signs.

Supply chain disruptions preventing inventory shortages before they impact operations.

Fraud detection patterns identifying suspicious activities before financial losses occur.

Lead scoring optimization predicting which prospects convert before competitors spot them.

The combination of speed, coverage, and prediction creates competitive advantages that are difficult for rivals to replicate. They’d need to invest in similar systematic data capabilities to match your market intelligence. By that time, you’ve gained additional advantages through earlier implementation.

These competitive benefits become even more powerful when applied to specific industry challenges. Let’s take a look at how different sectors leverage these capabilities for measurable ROI.

Industry-Specific Applications That Drive ROI

Different industries face unique competitive challenges. Custom web data extraction solves these in specific, measurable ways.

E-commerce & Retail

Retail operates in the most price-transparent market in history. 75% of retail organizations collect market data systematically while 51% use it specifically for brand health monitoring across multiple channels.

But here’s what sets custom extraction apart from basic extraction tools:

Visual Intelligence Engines: Extract and analyze product images across 1000+ competitor sites to identify color trends, style patterns, and merchandising strategies. Spot emerging visual trends 48 hours before they go mainstream by handling JavaScript-heavy product galleries that load dynamically as users scroll – something basic tools simply can’t manage.

Review Feature Mining: Go beyond sentiment scores. Extract unstructured review data to identify specific product features customers mention that aren’t in your specs. When customers repeatedly request “pockets” in competitor dress reviews, you’ll know before your next design cycle.

Micro-Influencer Discovery: Scrape social media platforms to find micro-influencers already organically mentioning your product category. Identify authentic voices with engaged audiences before they’re on anyone’s radar.

Stock Pattern Prediction: Monitor availability patterns across competitor sites to predict stockouts 7-10 days in advance. This isn’t just checking “in stock” labels – it’s analyzing restocking frequencies, quantity limits, and shipping delays.

Financial Services

Financial institutions face unique challenges around risk assessment, regulatory compliance, and market intelligence.

Custom extraction delivers capabilities impossible with standard tools:

Alternative Data Signals: Extract job postings, online company reviews, and web traffic patterns to assess company health 90 days before earnings reports. When a tech company suddenly posts 50 new sales positions while their engineering hiring freezes, you’ll spot the pivot early.

Multi-Language Regulatory Intelligence: Monitor 200+ regulatory websites across dozens of languages simultaneously for policy changes. Detect subtle shifts in compliance requirements weeks before official translations appear. This requires sophisticated language processing beyond basic translation.

ESG Risk Detection: Scrape news sites, NGO reports, and social media for real-time Environmental, Social, and Governance risk indicators. Identify supply chain controversies or environmental violations before they impact investment portfolios.

High-Frequency Data Extraction: Handle encrypted financial documents and real-time feeds from trading platforms. Process complex data structures that update milliseconds apart while maintaining accuracy.

Healthcare

Healthcare organizations need extraction capabilities that handle complex medical data and compliance requirements:

Clinical Trial Competition Intelligence: Extract real-time patient enrollment numbers and protocol changes from ClinicalTrials.gov and competitor sites. Know when rivals struggle with recruitment or modify trial endpoints. This means parsing complex medical documents and research papers.

Physician Opinion Tracking: Monitor medical forums and conference abstracts for emerging treatment preferences. Detect when specialists start discussing off-label uses or combination therapies 6 months before publication.

Drug Shortage Prediction: Combine Food and Drug Administration databases with pharmacy inventory signals to predict shortages 2-3 weeks early. Extract data from multiple formats while handling medical terminology variations.

Patient Journey Mapping: Analyze anonymized patient experiences from health forums to understand real treatment pathways. Navigate HIPAA-compliant extraction while capturing meaningful insights.

Manufacturing

Manufacturing requires extraction solutions that handle technical complexity across global supply chains:

Component Crisis Detection: Monitor 500+ distributor websites globally for lead time changes on critical components. Detect when a key supplier extends delivery from 8 to 12 weeks before it impacts your production line.

Patent Innovation Tracking: Extract and analyze competitor patent filings to identify technology directions 18 months before product launches. Parse technical specifications and CAD file references to understand true innovation patterns.

Quality Signal Detection: Mine consumer forums and review sites for early product defect patterns. Identify quality issues weeks before they escalate to recalls. This requires understanding technical language across multiple industries.

Sustainability Compliance Monitoring: Extract supplier ESG certifications, audit results, and environmental data from diverse sources. Track your entire supply chain’s compliance status in real-time across different reporting standards.
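The component lead-time monitoring described above comes down to comparing successive snapshots of scraped data. The sketch below assumes each snapshot has already been reduced to a dictionary of part number to lead time in weeks. The `lead_time_alerts` function, the part numbers, and the two-week threshold are hypothetical.

```python
def lead_time_alerts(previous, current, min_increase_weeks=2):
    """Compare two {part_number: lead_time_weeks} snapshots.

    Returns (part, old_weeks, new_weeks) tuples for every part whose
    lead time grew by at least min_increase_weeks.
    """
    alerts = []
    for part, new_weeks in sorted(current.items()):
        old_weeks = previous.get(part)
        # Parts without history are skipped: there is nothing to compare.
        if old_weeks is not None and new_weeks - old_weeks >= min_increase_weeks:
            alerts.append((part, old_weeks, new_weeks))
    return alerts


# Hypothetical snapshots from two consecutive scraping runs.
last_week = {"CAP-100": 8, "RES-220": 4}
this_week = {"CAP-100": 12, "RES-220": 4, "IC-555": 6}

alerts = lead_time_alerts(last_week, this_week)
print(alerts)
```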

The Bottom Line: Measurable Impact Across Your Business

When you add it all up, custom web data extraction delivers three types of measurable value:

Immediate efficiency gains through automated intelligence gathering, reducing data processing time by 30-40% while improving decision speed and accuracy.

Revenue acceleration via dynamic pricing optimization (5-25% increases), market timing advantages, and strategic positioning based on comprehensive market understanding.

Risk reduction through early warning systems that spot threats before they impact operations, enabling proactive responses rather than costly reactive measures.

Organizations implementing these capabilities systematically are 57% more likely to expect significant revenue growth. The compound effect means early adopters gain advantages that become increasingly difficult for competitors to match.

These industry applications prove a key point. Sophisticated web data extraction isn’t just a technical capability. It’s a strategic business tool that drives measurable edge across diverse sectors and use cases.

Conclusion: Custom Data Extraction as Competitive Necessity

The evidence is clear. Organizations that systematically leverage web data consistently outperform those relying on manual methods or standard extraction techniques.

89% of business leaders recognize data’s importance. But only those implementing custom extraction solutions capture its full competitive potential.

This isn’t about having better tools. It’s about fundamentally transforming how you understand and respond to market dynamics. Custom web data extraction provides the systematic intelligence foundation that modern competitive strategy requires.

The question isn’t whether to invest in these capabilities. It’s how quickly you can implement them before competitors gain similar advantages.

Ready to stop guessing and start knowing? Contact Forage AI to discover how custom web data extraction can transform your competitive positioning and business intelligence capabilities.

0 notes

Text

The pharmaceutical industry is a highly competitive and dynamic sector where accurate and real-time data is essential. Monitoring drug prices and tracking pharmaceutical market trends enable businesses to make informed decisions, optimize pricing strategies, and remain competitive. Actowiz Solutions specializes in web scraping services that help pharmaceutical companies, healthcare providers, and regulatory bodies collect and analyze critical market data.

#Monitoring drug prices and tracking#web scraping and data extraction services#Scrapes drug pricing data#pharma web scraping

0 notes

Text

Unlocking the Web: How to Use an AI Agent for Web Scraping Effectively

In this age of big data, information has become the most powerful asset. However, accessing and organizing this data, particularly from the web, is no easy feat. This is where AI agents step in. By automating the extraction of valuable data from web pages, AI agents are changing the way businesses, developers, researchers, and marketers operate.

In this blog, we’ll explore how you can use an AI agent for web scraping, what benefits it brings, the technologies behind it, and how you can build or invest in the best AI agent for web scraping for your unique needs. We’ll also look at how Custom AI Agent Development is reshaping how companies access data at scale.

What is Web Scraping?

Web scraping is a method of extracting data from websites. It is used for a range of purposes, including price monitoring, lead generation, market research, sentiment analysis, and academic research. In the past, web scraping was performed with scripts in languages such as Python (with libraries like BeautifulSoup or Selenium); however, these scripts require constant maintenance and are often limited in scale and adaptability.

What is an AI Agent?

An AI agent is an intelligent software system capable of making decisions and executing tasks on your behalf. In web scraping, AI agents use machine learning, NLP (Natural Language Processing), and automation to navigate websites intelligently, extract structured data, and adjust to changes in website layouts and algorithms.

Unlike crawlers or basic bots, an AI agent doesn’t simply scrape blindly; it understands the context of its actions, adapts its behavior, and improves over time.

Why Use an AI Agent for Web Scraping?

1. Adaptability

Websites change regularly. Traditional scrapers break when the structure changes. AI agents use pattern recognition and contextual awareness to adjust as they go.

2. Scalability

AI agents can manage hundreds or even thousands of pages simultaneously, thanks to automated decision-making and cloud-based deployment.

3. Data Accuracy

AI improves the accuracy of scraped data by filtering noise, recognizing human language, and validating the results.

4. Reduced Maintenance

Because AI agents can learn and adapt, they reduce the need for continuous manual updates to scraping scripts.

Best AI Agent for Web Scraping: What to Look For

If you’re searching for the best AI agent for web scraping, here are the most important aspects to look for:

NLP Capabilities: To read and interpret unstructured text.

Visual Recognition: To interpret web page layouts and dynamic content.

Automation Tools: To simulate user interactions (clicks, scrolls, etc.).

Scheduling and Monitoring: Built-in tools that manage and automate scraping processes.

API Integration: To send scraped data directly to your database or application.

Error Handling and Retries: Intelligent fallback mechanisms that help recover from broken sessions or denied access.
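The error handling and retries item in the list above can be sketched as a retry loop with exponential backoff. This is a minimal illustration: `fetch_with_retries` and `flaky_fetch` are hypothetical names, the fetch function is passed in so the example runs without network access, and real code would catch narrower exception types than `Exception`.

```python
import time


def fetch_with_retries(fetch, url, max_attempts=4, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying on failure with exponential backoff.

    Waits base_delay, then 2x, then 4x, and so on between attempts.
    The sleep function is injectable so the logic can be tested quickly.
    """
    last_error = None
    for attempt in range(max_attempts):
        try:
            return fetch(url)
        except Exception as error:  # narrow this in real code
            last_error = error
            if attempt < max_attempts - 1:
                sleep(base_delay * 2 ** attempt)
    raise RuntimeError(f"giving up on {url} after {max_attempts} attempts") from last_error


# Demo with a stand-in fetcher that fails twice and then succeeds.
calls = {"count": 0}
delays = []


def flaky_fetch(url):
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return "ok"


result = fetch_with_retries(flaky_fetch, "https://example.com", sleep=delays.append)
print(result, delays)
```

Growing the delay between attempts gives a rate-limited or briefly unavailable site time to recover instead of hammering it with immediate retries.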

Custom AI Agent Development: Tailored to Your Needs

Though off-the-shelf AI agents can meet basic needs, Custom AI Agent Development is vital for businesses that require:

Custom-designed logic or workflows for data collection

Compliance with specific data policies or legal requirements

Integration with dashboards or internal tools

Competitive advantage via more efficient data gathering

At Xcelore, we specialize in AI Agent Development tailored for web scraping. Whether you’re monitoring market trends, aggregating news, or extracting leads, we build solutions that scale with your business needs.

How to Build Your Own AI Agent for Web Scraping

If you’re tech-savvy and want to build your own AI agent, here’s a basic outline of the process:

Step 1: Define Your Objective

Know exactly what information you need and from which sites. This is the basis for your design and toolset.

Step 2: Select Your Tools

Popular frameworks and tools include:

Python with libraries such as Scrapy, BeautifulSoup, and Selenium

Playwright or Puppeteer for browser automation

OpenAI and HuggingFace APIs for NLP and decision-making

Cloud platforms such as AWS, Azure, or Google Cloud for scaling capacity

Step 3: Train Your Agent

Provide your agent with examples of structured versus unstructured information. Machine learning can help it identify patterns and extract pertinent information.

Step 4: Deploy and Monitor

Run your AI agent on a set schedule. Use alerting, logging, and dashboards to track the agent’s performance and verify the accuracy of the data.
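One simple way to monitor a run is to count how many scraped records are complete and log a warning when the share drops. The sketch below assumes records are plain dictionaries; the summarize_run name, the logger name, and the 0.9 threshold are illustrative assumptions, not a recommended standard.

```python
import logging

logger = logging.getLogger("scraper.monitor")  # hypothetical logger name


def summarize_run(records, required_fields, min_valid_ratio=0.9):
    """Return run statistics and log a warning when too many records are incomplete."""
    total = len(records)
    # A record is valid when every required field is present and non-empty.
    valid = sum(
        1 for r in records
        if all(r.get(f) not in (None, "") for f in required_fields)
    )
    ratio = valid / total if total else 0.0
    healthy = total > 0 and ratio >= min_valid_ratio
    if healthy:
        logger.info("run ok: %d/%d records valid", valid, total)
    else:
        logger.warning("run degraded: %d/%d records valid", valid, total)
    return {"total": total, "valid": valid, "valid_ratio": ratio, "healthy": healthy}
```

The returned dictionary can feed a dashboard or alerting rule, while the log lines give a quick history of each scheduled run.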

Step 5: Optimize and Iterate

Your AI agent should evolve. Use feedback loops and periodic model retraining to improve its reliability and accuracy over time.

Compliance and Ethics

Web scraping raises ethical and legal issues. Make sure that your AI agent:

Respects robots.txt rules

Avoids scraping copyrighted or personal content

Complies with international and local regulations on data privacy
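The robots.txt check in particular is easy to automate. Here is a minimal sketch using Python's standard urllib.robotparser module; the build_robots_checker helper, the sample rules, and the example-bot user agent are all made up for illustration, and a real agent would load the rules from the target site.

```python
from urllib.robotparser import RobotFileParser


def build_robots_checker(robots_txt, user_agent):
    """Return a function that reports whether `user_agent` may fetch a URL."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())

    def allowed(url):
        return parser.can_fetch(user_agent, url)

    return allowed


# Made-up rules for illustration only.
SAMPLE_ROBOTS = """\
User-agent: *
Disallow: /private/
"""

allowed = build_robots_checker(SAMPLE_ROBOTS, "example-bot")
```

Calling allowed(url) before every request lets the agent skip disallowed paths. Passing robots.txt is only one part of compliance and does not address copyright or privacy rules.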

At Xcelore, we integrate compliance into every AI Agent Development project we manage.

Real-World Use Cases

E-commerce: Tracking prices across competitors’ websites

Finance: Collecting stock news and financial statements

Recruitment: Extracting job postings and resumes

Travel: Monitoring hotel and flight prices

Academic Research: Collecting data at scale for analysis

In each of these situations, an intelligent and robust AI agent can turn hours of manual data collection into a more efficient and scalable process.

Why Choose Xcelore for AI Agent Development?

At Xcelore, we bring together deep expertise in automation, data science, and software engineering to deliver powerful, scalable AI Agent Development Services. Whether you need a quick deployment or a fully custom AI agent development project tailored to your business goals, we’ve got you covered.

We can help:

Find scraping opportunities and devise strategies

Create and design AI agents that adapt to your demands

Maintain compliance and ensure data integrity

Transform unstructured web data into valuable insights

Final Thoughts

Using an AI agent for web scraping is no longer just a technical option; it is now a strategic advantage. From better insights to more efficient automation, the benefits are substantial. Whether you’re looking to build your own AI agent or invest in the best AI agent for web scraping, the key is a well-planned strategy and skilled execution.

Are you ready to unlock the internet by leveraging intelligent automation?

Contact Xcelore today to get started with your custom AI agent development journey.

#ai agent development services#AI Agent Development#AI agent for web scraping#build your own AI agent

Link

Are you fed up with the manual grind of data collection for your marketing projects? Welcome to a new era of efficiency with Outscraper! This game-changing tool makes scraping Google search results simpler than ever before. In our latest blog post, we explore how Outscraper can revolutionize the way you gather data. With its easy-to-use interface, you don’t need to be a tech wizard to navigate it. The real-time SERP data and cloud scraping features ensure you can extract crucial information while protecting your IP address. What's even better? Outscraper is currently offering a lifetime deal for just $119, significantly slashed from the original price of $1,499. This makes it the perfect investment for marketers and business owners eager to enhance their strategies. Don't miss out on this fantastic opportunity to streamline your data-gathering process! Click the link to read the full review and discover how Outscraper can take your marketing game to the next level. #Outscraper #DataCollection #MarketingTools #GoogleSearch #LifetimeDeal #DigitalMarketing #DataScraping #TechReview #SEO #MarketingStrategies

#GoogleSearchResultsScraper#lifetimedeal#dataextractiontool#keywordsearches#SERPdata#AppSumo#webscrapingservice#cloudscraping#Outscraper#datastructuring#web scraping service

Text

Tapping into Fresh Insights: Kroger Grocery Data Scraping

In today's data-driven world, the retail grocery industry is no exception when it comes to leveraging data for strategic decision-making. Kroger, one of the largest supermarket chains in the United States, offers a wealth of valuable data related to grocery products, pricing, customer preferences, and more. Extracting and harnessing this data through Kroger grocery data scraping can provide businesses and individuals with a competitive edge and valuable insights. This article explores the significance of grocery data extraction from Kroger, its benefits, and the methodologies involved.

The Power of Kroger Grocery Data

Kroger's extensive presence in the grocery market, both online and in physical stores, positions it as a significant source of data in the industry. This data is invaluable for a variety of stakeholders:

Kroger: The company can gain insights into customer buying patterns, product popularity, inventory management, and pricing strategies. This information empowers Kroger to optimize its product offerings and enhance the shopping experience.

Grocery Brands: Food manufacturers and brands can use Kroger's data to track product performance, assess market trends, and make informed decisions about product development and marketing strategies.

Consumers: Shoppers can benefit from Kroger's data by accessing information on product availability, pricing, and customer reviews, aiding in making informed purchasing decisions.

Benefits of Grocery Data Extraction from Kroger

Market Understanding: Extracted grocery data provides a deep understanding of the grocery retail market. Businesses can identify trends, competition, and areas for growth or diversification.

Product Optimization: Kroger and other retailers can optimize their product offerings by analyzing customer preferences, demand patterns, and pricing strategies. This data helps enhance inventory management and product selection.

Pricing Strategies: Monitoring pricing data from Kroger allows businesses to adjust their pricing strategies in response to market dynamics and competitor moves.

Inventory Management: Kroger grocery data extraction aids in managing inventory effectively, reducing waste, and improving supply chain operations.

Methodologies for Grocery Data Extraction from Kroger

To extract grocery data from Kroger, individuals and businesses can follow these methodologies:

Authorization: Ensure compliance with Kroger's terms of service and legal regulations. Authorization may be required for data extraction activities, and respecting privacy and copyright laws is essential.

Data Sources: Identify the specific data sources you wish to extract. Kroger's data encompasses product listings, pricing, customer reviews, and more.

Web Scraping Tools: Utilize web scraping tools, libraries, or custom scripts to extract data from Kroger's website. Common tools include Python libraries like BeautifulSoup and Scrapy.

Data Cleansing: Cleanse and structure the scraped data to make it usable for analysis. This may involve removing HTML tags, formatting data, and handling missing or inconsistent information.

Data Storage: Determine where and how to store the scraped data. Options include databases, spreadsheets, or cloud-based storage.

Data Analysis: Leverage data analysis tools and techniques to derive actionable insights from the scraped data. Visualization tools can help present findings effectively.

Ethical and Legal Compliance: Scrutinize ethical and legal considerations, including data privacy and copyright. Engage in responsible data extraction that aligns with ethical standards and regulations.

Scraping Frequency: Exercise caution regarding the frequency of scraping activities to prevent overloading Kroger's servers or causing disruptions.
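The data cleansing and data storage steps above can be sketched as follows. This is a minimal illustration that assumes scraped rows arrive as dictionaries with name and price strings. The clean_record and store_records helpers, the products table, and the sample rows are all invented for the example and do not reflect Kroger's actual data or page structure.

```python
import re
import sqlite3


def clean_record(raw):
    """Normalize one scraped product row; return None if it is unusable."""
    name = re.sub(r"<[^>]+>", "", raw.get("name") or "")  # drop leftover HTML tags
    name = " ".join(name.split())                          # collapse whitespace
    # Pull the first number out of the price text, ignoring thousands separators.
    match = re.search(r"\d+(?:\.\d+)?", (raw.get("price") or "").replace(",", ""))
    if not name or match is None:
        return None
    return {"name": name, "price": float(match.group())}


def store_records(conn, records):
    """Create the table if needed and insert the cleaned rows."""
    conn.execute("CREATE TABLE IF NOT EXISTS products (name TEXT, price REAL)")
    conn.executemany(
        "INSERT INTO products (name, price) VALUES (:name, :price)", records
    )
    conn.commit()


# Invented sample rows for illustration only.
raw_rows = [
    {"name": "<b>Whole  Milk</b> 1 gal", "price": "$3.49"},
    {"name": "Eggs, dozen", "price": "N/A"},
]
cleaned = [r for r in map(clean_record, raw_rows) if r is not None]
conn = sqlite3.connect(":memory:")  # in-memory database; use a file path to persist
store_records(conn, cleaned)
```

Rows with a missing or unparseable price are dropped here for simplicity. Depending on the analysis, you may prefer to keep them and flag the gap instead.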

Conclusion

Kroger grocery data scraping opens the door to fresh insights for businesses, brands, and consumers in the grocery retail industry. By harnessing Kroger's data, retailers can optimize their product offerings and pricing strategies, while consumers can make more informed shopping decisions. However, it is crucial to prioritize ethical and legal considerations, including compliance with Kroger's terms of service and data privacy regulations. In the dynamic landscape of grocery retail, data is the key to unlocking opportunities and staying competitive. Grocery data extraction from Kroger promises to deliver fresh perspectives and strategic advantages in this ever-evolving industry.

#grocerydatascraping#restaurant data scraping#food data scraping services#food data scraping#fooddatascrapingservices#zomato api#web scraping services#grocerydatascrapingapi#restaurantdataextraction
