#Web scraping Email addresses



Google Search Results Data Scraping


Harness the Power of Information with Google Search Results Data Scraping Services by DataScrapingServices.com. In the digital age, information is king. For businesses, researchers, and marketing professionals, the ability to access and analyze data from Google search results can be a game-changer. However, manually sifting through search results to gather relevant data is not only time-consuming but also inefficient. DataScrapingServices.com offers cutting-edge Google Search Results Data Scraping services, enabling you to efficiently extract valuable information and transform it into actionable insights.

The vast amount of information available through Google search results can provide invaluable insights into market trends, competitor activities, customer behavior, and more. Whether you need data for SEO analysis, market research, or competitive intelligence, DataScrapingServices.com offers comprehensive data scraping services tailored to meet your specific needs. Our advanced scraping technology ensures you get accurate and up-to-date data, helping you stay ahead in your industry.

List of Data Fields

Our Google Search Results Data Scraping services can extract a wide range of data fields, ensuring you have all the information you need:

- Business Name: The name of the business or entity featured in the search result.

- URL: The web address of the search result.

- Website: The primary website of the business or entity.

- Phone Number: Contact phone number of the business.

- Email Address: Contact email address of the business.

- Physical Address: The street address, city, state, and ZIP code of the business.

- Business Hours: The operating hours of the business.

- Ratings and Reviews: Customer ratings and reviews for the business.

- Google Maps Link: Link to the business’s location on Google Maps.

- Social Media Profiles: LinkedIn, Twitter, Facebook

These data fields provide a comprehensive overview of the information available from Google search results, enabling businesses to gain valuable insights and make informed decisions.

Benefits of Google Search Results Data Scraping

1. Enhanced SEO Strategy

Understanding how your website ranks for specific keywords and phrases is crucial for effective SEO. Our data scraping services provide detailed insights into your current rankings, allowing you to identify opportunities for optimization and stay ahead of your competitors.

2. Competitive Analysis

Track your competitors’ online presence and strategies by analyzing their rankings, backlinks, and domain authority. This information helps you understand their strengths and weaknesses, enabling you to adjust your strategies accordingly.

3. Market Research

Access to comprehensive search result data allows you to identify trends, preferences, and behavior patterns in your target market. This information is invaluable for product development, marketing campaigns, and business strategy planning.

4. Content Development

By analyzing top-performing content in search results, you can gain insights into what types of content resonate with your audience. This helps you create more effective and engaging content that drives traffic and conversions.

5. Efficiency and Accuracy

Our automated scraping services ensure you get accurate and up-to-date data quickly, saving you time and resources.

Best Google Data Scraping Services

Scraping Google Business Reviews

Extract Restaurant Data From Google Maps

Google My Business Data Scraping

Google Shopping Products Scraping

Google News Extraction Services

Scrape Data From Google Maps

Google News Headline Extraction

Google Maps Data Scraping Services

Google Map Businesses Data Scraping

Google Business Reviews Extraction

Best Google Search Results Data Scraping Services in USA

Dallas, Portland, Los Angeles, Virginia Beach, Fort Worth, Wichita, Nashville, Long Beach, Raleigh, Boston, Austin, San Antonio, Philadelphia, Indianapolis, Orlando, San Diego, Houston, Jacksonville, New Orleans, Columbus, Kansas City, Sacramento, San Francisco, Omaha, Honolulu, Washington, Colorado Springs, Chicago, Arlington, Denver, El Paso, Miami, Louisville, Albuquerque, Tulsa, Bakersfield, Milwaukee, Memphis, Oklahoma City, Atlanta, Seattle, Las Vegas, San Jose, Tucson and New York.

Conclusion

In today’s data-driven world, having access to detailed and accurate information from Google search results can give your business a significant edge. DataScrapingServices.com offers professional Google Search Results Data Scraping services designed to meet your unique needs. Whether you’re looking to enhance your SEO strategy, conduct market research, or gain competitive intelligence, our services provide the comprehensive data you need to succeed. Contact us at [email protected] today to learn how our data scraping solutions can transform your business strategy and drive growth.

Website: Datascrapingservices.com

Email: [email protected]


0 notes


Social Media and Privacy Concerns!!! What You Need to Know???

In a world that is becoming more digital by the day, social media has become part of our day-to-day lives. From sharing personal updates to networking with professionals, social media sites like Facebook, Instagram, and Twitter have changed the way we communicate. However, concerns over privacy have also grown, with users wondering what happens to their personal information. If you use social media often, it is important to be aware of these privacy risks. In this article, we will outline the main issues and the steps you need to take to protect your online data privacy. (Related: Top 10 Pros and Cons of Social media)

1. How Social Media Platforms Scrape Your Data

The majority of social media platforms collect plenty of user information, including your:

✅ Name, email address, and phone number

✅ Location and web browsing history

✅ Interests derived from your likes, comments, and search history

Although this enhances the user experience as well as advertising, it raises serious privacy issues. (Read more about social media pros and cons here)

2. Risks of Oversharing Personal Information

Many users unknowingly expose themselves to security risks by sharing too much personal information. Posting details of your daily routine, location, or personal life can lead to:

⚠️ Identity theft

⚠️ Stalking and harassment

⚠️ Cyber fraud

This is why you need to adjust your privacy settings and be careful about what you post on the internet. (Read this article to understand how social media affects users.)

3. The Role of Third-Party Apps in Data Breaches

Did you register for a site with Google or Facebook? Handy, maybe, but in doing so, you're granting apps access to your data, normally more than is necessary. Some high-profile privacy scandals, Cambridge Analytica being one example, have shown how social media information can be exploited for political and advertising purposes. To minimize the danger:

👍 Regularly check app permissions

👍 Don't sign up for accounts you don't need

👍 Use strong passwords and two-factor authentication

To get an in-depth overview of social media's impact on security, read this detailed guide.

4. How Social Media Algorithms Follow You

You may not realize this, but social media algorithms are tracking you everywhere. From the posts you like to the amount of time you spend watching a video, sites monitor it all through AI-driven algorithms that learn from behavior and build personalized feeds. Though this can drive user engagement, it also:

⚠️ Forms filter bubbles that limit different perspectives

⚠️ Increases data exposure in case of hacks

⚠️ Raises ethical concerns around online surveillance

Understanding the advantages and disadvantages of social media will help you make an informed decision. (Find out more about it here)

5. Maintaining Your Privacy: Real-Life Tips

To protect your personal data on social media:

✅ Update privacy settings to limit sharing of data

✅ Be cautious when accepting friend requests from unknown people

✅ Think before you post: anything shared online can be seen by others

✅ Use encrypted messaging apps for sensitive conversations

These small habits can go a long way in protecting your online existence. (For more detailed information, read this article)

Final Thoughts

Social media is a powerful tool that connects people, companies, and communities. There are privacy concerns, though, and you need to be smart about how your data is used. Being careful about what you share, adjusting privacy settings, and using security best practices can enable you to enjoy the benefits of social media while staying safe online. Interested in learning more about how social media influences us? Check out our detailed article on the advantages and disadvantages of social media and the measures to take to stay safe on social media.

#social media#online privacy#privacymatters#data privacy#digital privacy#hacking#identity theft#data breach#socialmediaprosandcons#social media safety#cyber security#social security

2 notes



Every Black Friday I sit down and unsubscribe from every emailing list I somehow got added to over the past year and have some fucking peace and quiet in my inbox for a bit before it somehow starts all over again when the evil monsters on the web scrape my email address from my cold unwilling fingers yet again.

2 notes



I blocked this guy for spreading misinformation, but I want to address the points they made so people don't buy into this shit.

1. "Have you really never heard of a denoiser?" Glaze and Nightshade cannot be defeated by denoisers. Please see the paper I link in #2. This point is amazingly easy to debunk, and I'm not sure why people are still championing it. Both programs work by changing what the software "sees." Denoising can blur these artifacts, but it does not fundamentally get rid of them.

2. "White knighting for amoral techbro apps." This was a very early techbro attack on Glaze to try to convince people it was another way to steal data. As I said in an earlier post, it does use a dataset to enable it to add artifacts to your work. It is essentially using AI against itself, and it is effective. There's a whole peer-reviewed paper on how it works. I've posted it before, but if you missed it, you can read it here: https://arxiv.org/abs/2310.13828 (and unlike Generative AI apps, this paper explains exactly how the technology works.)

3. "Wasting resources." The point is to make the machines unusable, which ultimately will reduce the stress on our infrastructure. If the datasets no longer work, the use of them decreases. Unfortunately, the inability of people to adequately protect their work has led to massive electricity-wasting farms for generative AI, just like what happened with NFTs and cryptocurrency. If enough people inject unusable data into the systems, the systems themselves become unusable, and the use decreases.

4. "My artistic vanity." I'm not a good artist. But my artwork HAS been scraped and used. I don't know why I have to keep saying this to make my anger and pain valid, but a few months back, all my artwork was revenge-scraped and stuffed into Midjourney. The person who did it also stripped my name from it, so I am not even able to HOPE to have it removed. I have nothing left to lose. I want to make those motherfuckers pay.

5. "The google thing only defeats weak watermarks." This is true. But a "strong" watermark must be completely different on each work you post, and also must cover most of the work. This is easily researched. I don't know about you, but I don't have time to make a new watermark on each piece I post, and I also don't want it to cover most of the artwork. Just so you know, the watermark detector works by looking for the same pattern on multiple works by the same person. If you use the same watermark on each piece, it doesn't matter how strong you think it is. It's removable. If you have the time to do it, then yes, this is effective. But it needs to be complex and different on each piece.

Next, I've seen a couple posts going around today stating that you can't even have an account on Glaze because they're closed.

They're not. But to prevent techbros from making accounts, you have to message the team so they can make sure you aren't using AI in your work. The instructions are here: https://glaze.cs.uchicago.edu/webinvite.html

Nightshade is not available on the web yet, but Glaze is. Nightshade will be soon, and they are planning a combo web app that will both Glaze and Nightshade your work.

In the meantime, if you want someone to Nightshade your work for you, please let me know. I have offered this before, and I will offer it again. Email me at [email protected] with your artwork, and let me know you'd like me to Nightshade it for you. There will always be some artifacts, but I will work with you until you are happy with the result.

Lastly, I know my messages are working because I keep getting people spreading misinformation that these things don't work. Please know that I have done the research, I do have a personal stake in this (because hundreds of my pieces are part of Midjourney now) and I am only posting this because I truly believe this is the way to fight back against plagiarism machines.

I don't know why people are so angry when I post about them. I know people don't all have access, which is why I'm offering my resources to help. I know this is a new technology, which is why I read through the boring scientific paper myself so I can validate the claims.

This is the last post I'll make on the matter. If you want to ask questions, fine, but I don't really have the mental capacity to argue with everyone anymore, and I'm not going to.

8 notes



How to Track Restaurant Promotions on Instacart and Postmates Using Web Scraping

Introduction

With the rapid growth of food delivery services, companies such as Instacart and Postmates constantly run promotions for their partner restaurants to entice customers. Such promotions can range from discounts and free delivery to combo deals and limited-time offers. For restaurants and food businesses, tracking these promotions provides a competitive edge: they can adjust their pricing strategies, identify trends, and stay ahead of their competitors.

One of the most effective ways to track promotions is web scraping, an automated way of extracting relevant data from the internet. This article examines how to track restaurant promotions on Instacart and Postmates using web scraping techniques, tools, and best practices.

Why Track Restaurant Promotions?

1. Competitor Research

Identify the promotional strategies of competitors in the market.

Compare discount rates across restaurants.

Develop competitive pricing strategies.

2. Consumer Behavior Insights

Understand which kinds of promotions are most popular with customers.

Identify patterns in the days, times, or seasons when discounts apply.

Optimize marketing campaigns based on popular promotions.

3. Revenue and Profit Maximization

Determine the optimal timing for restaurant promotions.

Analyze competitors' discounts and adjust your own to reduce costs.

Maximize the return on investment (ROI) of promotional campaigns.

Web Scraping Techniques for Tracking Promotions

Key Data Fields to Extract

To effectively monitor promotions, businesses should extract the following data:

Restaurant Name – Identify which restaurants are offering promotions.

Promotion Type – Discounts, BOGO (Buy One Get One), free delivery, etc.

Discount Percentage – Measure how much customers save.

Promo Start & End Date – Track duration and frequency of offers.

Menu Items Included – Understand which food items are being promoted.

Delivery Charges – Compare free vs. paid delivery promotions.

Methods of Extracting Promotional Data

1. Web Scraping with Python

Using Python-based libraries such as BeautifulSoup, Scrapy, and Selenium, businesses can extract structured data from Instacart and Postmates.
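As a rough illustration of the extraction step, the sketch below pulls a restaurant name and offer out of a small HTML snippet. It is only a sketch under stated assumptions: the markup, the `promo`, `restaurant`, and `offer` class names, and the `Promotion` record are all hypothetical, since the real pages use different and frequently changing structures. It also uses Python's built-in `html.parser` instead of BeautifulSoup, Scrapy, or Selenium so that it runs without extra dependencies.

```python
from dataclasses import dataclass
from html.parser import HTMLParser

# Hypothetical markup for illustration only; real delivery-platform pages
# are structured differently and change often.
SAMPLE_HTML = """
<div class="promo"><span class="restaurant">Taco Place</span><span class="offer">20% off</span></div>
<div class="promo"><span class="restaurant">Pizza Spot</span><span class="offer">Free delivery</span></div>
"""


@dataclass
class Promotion:
    restaurant: str
    offer: str


class PromoParser(HTMLParser):
    """Collects one Promotion per <div> that contains both expected spans."""

    FIELDS = ("restaurant", "offer")

    def __init__(self):
        super().__init__()
        self.promotions = []
        self._field = None   # span class currently being read, if any
        self._current = {}   # fields gathered for the promo in progress

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in self.FIELDS:
            self._field = css_class

    def handle_data(self, data):
        if self._field:
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag == "span":
            self._field = None
        elif tag == "div":
            if all(name in self._current for name in self.FIELDS):
                self.promotions.append(Promotion(**self._current))
            self._current = {}


def parse_promotions(html_text):
    parser = PromoParser()
    parser.feed(html_text)
    return parser.promotions


if __name__ == "__main__":
    for promo in parse_promotions(SAMPLE_HTML):
        print(promo)
```

In practice the HTML would come from a fetched page rather than a constant, and a dedicated parsing library is usually more robust against messy markup.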

2. API-Based Data Extraction

Some platforms provide official APIs that allow restaurants to retrieve promotional data. If available, APIs can be an efficient and legal way to access data without scraping.

3. Cloud-Based Web Scraping Tools

Services like CrawlXpert, ParseHub, and Octoparse offer automated scraping solutions, making data extraction easier without coding.

Overcoming Anti-Scraping Measures

1. Avoiding IP Blocks

Use proxy rotation to distribute requests across multiple IP addresses.

Implement randomized request intervals to mimic human behavior.

2. Bypassing CAPTCHA Challenges

Use headless browsers like Puppeteer or Playwright.

Leverage CAPTCHA-solving services like 2Captcha.

3. Handling Dynamic Content

Use Selenium or Puppeteer to interact with JavaScript-rendered content.

Scrape API responses directly when possible.

Analyzing and Utilizing Promotion Data

1. Promotional Dashboard Development

Create a real-time dashboard to track ongoing promotions.

Use data visualization tools like Power BI or Tableau to monitor trends.

2. Predictive Analysis for Promotions

Use historical data to forecast future discounts.

Identify peak discount periods and seasonal promotions.

3. Custom Alerts for Promotions

Set up automated email or SMS alerts when competitors launch new promotions.

Implement AI-based recommendations to adjust restaurant pricing.

Ethical and Legal Considerations

Comply with robots.txt guidelines when scraping data.

Avoid excessive server requests to prevent website disruptions.

Ensure extracted data is used for legitimate business insights only.
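The robots.txt check can be automated with Python's standard `urllib.robotparser` module. The following is a minimal sketch: the robots.txt content, the `example.com` URLs, and the `promo-tracker-bot` user agent are made up for illustration, and a real scraper would load the site's actual file (for example with `set_url()` and `read()`) instead of parsing a string.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /account/
Crawl-delay: 5
"""

USER_AGENT = "promo-tracker-bot"  # hypothetical bot name


def build_parser(robots_txt):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def allowed(parser, url, user_agent=USER_AGENT):
    """Return True if the rules permit this user agent to fetch the URL."""
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    rules = build_parser(ROBOTS_TXT)
    print(allowed(rules, "https://example.com/store/promos"))
    print(allowed(rules, "https://example.com/account/settings"))
    # Seconds to wait between requests, if the site declares a crawl delay.
    print(rules.crawl_delay(USER_AGENT))
```

Honoring the declared crawl delay between requests also helps with the second point above, avoiding excessive load on the server.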

Conclusion

Web scraping makes it possible to track restaurant promotions on Instacart and Postmates so that businesses can optimize their pricing strategies, maximize profits, and stay ahead of the competition. With the help of automation, proxies, headless browsing, and AI analytics, businesses can efficiently keep track of and respond to the latest promotional trends.

CrawlXpert is a strong provider of automated web scraping services that help restaurants follow promotions and analyze competitors' strategies.

0 notes


Web scraping and digital marketing are becoming more closely entwined at the moment, with more professionals harnessing tools to gather the data they need to optimize their efforts. Here is a look at why this state of affairs has come about and how web scraping can be achieved effectively and ethically.

The Basics

The intention of a typical web scraping session is to harvest information from other sites through the use of APIs that are widely available today. You can conduct web scraping with Python and a few other programming languages, so it is a somewhat technical process on the surface. However, there are software solutions available which aim to automate and streamline this in order to encompass the needs of less tech-savvy users. Through the use of public APIs, it is perfectly legitimate and above-board to scrape sites and services in order to extract the juicy data that you crave.

The Benefits

From a marketing perspective, data is a hugely significant asset that can be used to shape campaigns, consider SEO options for client sites, assess target audiences to uncover the best strategies for engaging them and so much more. While you could find and extract data manually, this is an incredibly time-consuming process as well as being tedious for the person tasked with it. Conversely, with the assistance of web scraping solutions, valuable and, most importantly, actionable information can be uncovered and parsed as needed in a fraction of the time.

The Uses

To appreciate why web scraping has risen to prominence in a digital marketing context, it is worth looking at how it can be used by marketers to reach their goals. Say, for example, you need to find out more about the prospective users of a given product or service, and all you have is a large list of their email addresses provided as part of your mailing list. This is a good starting point, but addresses alone are not going to give you any real clue of what factors define each individual.

Web scraping through APIs will allow you to build a far better picture of these users based on the rest of their publicly available online presence. This will allow you to then leverage this data to create bespoke marketing messaging that is tailored to users and treats them uniquely, rather than as a homogeneous group. The same tactics can be applied to a range of other circumstances, such as monitoring prices on a competing e-commerce site, generating leads to win over new customers and much more besides.

The Challenges

Collating data from third-party sites automatically is not always straightforward, in part because many sites seek to prevent automated systems from doing this. There are also ethical issues to consider, and it is generally better to only use information that is public to fuel your marketing efforts, or else customers and clients could feel like they are being stalked. Even with all this in mind, there are ample opportunities to make effective use of web scraping for digital marketing in a way that will benefit both marketers and clients alike.

0 notes


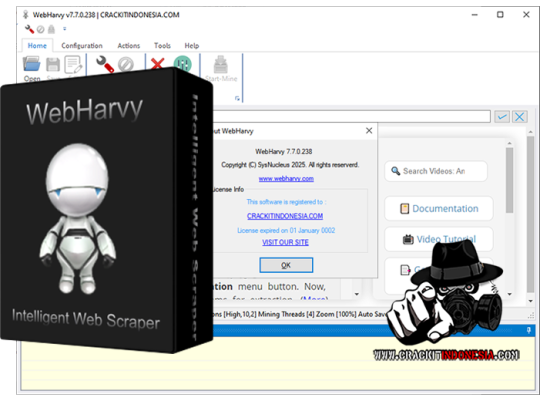

Intuitive Powerful Visual Web Scraper - WebHarvy can automatically scrape Text, Images, URLs & Emails from websites, and save the scraped content in various formats. WebHarvy Web Scraper can be used to scrape data from www.yellowpages.com. Data fields such as name, address, phone number, website URL etc can be selected for extraction by just clicking on them!

- Point and Click Interface: WebHarvy is a visual web scraper. There is absolutely no need to write any scripts or code to scrape data. You will be using WebHarvy's in-built browser to navigate web pages. You can select the data to be scraped with mouse clicks. It is that easy!

- Automatic Pattern Detection: WebHarvy automatically identifies patterns of data occurring in web pages. So if you need to scrape a list of items (name, address, email, price etc) from a web page, you need not do any additional configuration. If data repeats, WebHarvy will scrape it automatically.

- Save to File or Database: You can save the data extracted from web pages in a variety of formats. The current version of WebHarvy Web Scraper allows you to export the scraped data as an XML, CSV, JSON or TSV file. You can also export the scraped data to an SQL database.

- Scrape from Multiple Pages: Often web pages display data such as product listings in multiple pages. WebHarvy can automatically crawl and extract data from multiple pages. Just point out the 'link to the next page' and WebHarvy Web Scraper will automatically scrape data from all pages.

- Keyword based Scraping: Keyword based scraping allows you to capture data from search results pages for a list of input keywords. The configuration which you create will be automatically repeated for all given input keywords while mining data. Any number of input keywords can be specified.

- Proxy Servers: To scrape anonymously and to prevent the web scraping software from being blocked by web servers, you have the option to access target websites via proxy servers. Either a single proxy server address or a list of proxy server addresses may be used.

- Category Scraping: WebHarvy Web Scraper allows you to scrape data from a list of links which leads to similar pages within a website. This allows you to scrape categories or subsections within websites using a single configuration.

- Regular Expressions: WebHarvy allows you to apply Regular Expressions (RegEx) on Text or HTML source of web pages and scrape the matching portion. This powerful technique offers you more flexibility while scraping data.

- Technical Support: Once you purchase WebHarvy Web Scraper you will receive free updates and free support from us for a period of 1 year from the date of purchase. Bug fixes are free for lifetime.

WebHarvy 7.7.0238 Released on May 19, 2025

- Updated Browser: WebHarvy’s internal browser has been upgraded to the latest available version of Chromium. This improves website compatibility and enhances the ability to bypass anti-scraping measures such as CAPTCHAs and Cloudflare protection.

- Improved ‘Follow this link’ functionality: Previously, the ‘Follow this link’ option could be disabled during configuration, requiring manual steps like capturing HTML, capturing more content, and applying a regular expression to enable it. This process is now handled automatically behind the scenes, making configuration much simpler for most websites.

- Solved Excel File Export Issues: We have resolved issues where exporting scraped data to an Excel file could result in a corrupted output on certain system environments.

- Fixed Issue related to changing pagination type while editing configuration: Previously, when selecting a different pagination method during configuration, both the old and new methods could get saved together in some cases. This issue has now been fixed.

- General Security Updates: All internal libraries have been updated to their latest versions to ensure improved security and stability.

Sales Page: https://www.webharvy.com/

0 notes


Securing API Integrations: Best Practices for Contact Form Data Transmission

In today’s digital ecosystem, contact forms are more than just entry points for customer inquiries—they’re gateways to critical data that drive business operations. Whether the information is funneled into a CRM, ticketing system, or email marketing platform, the underlying mechanism that powers this is API integration. But with increasing cyber threats and stricter data regulations, securing the transmission of contact form data through APIs has never been more important.

This blog delves into the best practices for ensuring your contact form data is transmitted securely via API integrations, covering encryption, authentication, compliance, and more.

Why Securing Contact Form Data Matters

Contact forms often collect personally identifiable information (PII), such as:

Names

Email addresses

Phone numbers

Company names

Inquiry messages

Unsecured transmission of this data can lead to breaches, phishing attacks, or unauthorized access—exposing businesses to legal consequences and reputational damage. Secure API integration mitigates these risks and ensures data integrity, privacy, and compliance with laws like GDPR, HIPAA, and CCPA.

Common Threats to API-Based Data Transmission

Before diving into the best practices, it's important to understand the threats you’re defending against:

Man-in-the-middle (MITM) attacks – where data is intercepted between the contact form and the API.

Unauthorized access – due to poor authentication or exposed API keys.

Injection attacks – through unsanitized input, enabling attackers to manipulate API calls.

Data leakage – from insecure storage or logging mechanisms.

Replay attacks – where a malicious user resends legitimate API requests to gain unauthorized access or cause disruptions.

Best Practices for Securing API Integrations with Contact Forms

1. Use HTTPS with SSL/TLS Encryption

The first and most critical step is to ensure all communication between the form, your server, and any third-party APIs happens over HTTPS. HTTPS encrypts the data in transit, making it nearly impossible for attackers to intercept and read sensitive information.

Pro tip: Use strong TLS configurations and ensure your SSL certificate is up to date.

2. Implement Strong Authentication and Authorization

APIs must be protected by robust authentication mechanisms. Relying solely on static API keys can be risky if they’re not stored securely or become exposed.

Best practices include:

OAuth 2.0 – A widely accepted protocol for secure delegated access.

JWT (JSON Web Tokens) – Allows secure transmission of claims between parties.

IP whitelisting – Restrict API access to known IP addresses.

Role-based access control (RBAC) – Ensure only authorized applications and users have access.

3. Validate and Sanitize Input Data

Never trust data coming from a user-facing contact form. Always validate and sanitize inputs before processing or transmitting them to an API to prevent injection attacks.

Validation examples:

Use regex patterns to validate email formats.

Limit message length to prevent oversized payloads and resource exhaustion.

Sanitize inputs by removing or escaping special characters.
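The three checks above might look roughly like this in Python. This is a simplified sketch, not a complete validator: the field names, the 2,000-character limit, and the deliberately loose email pattern are assumptions chosen for illustration, and the right escaping always depends on where the data ends up (HTML, SQL, a third-party API).

```python
import html
import re

# Loose email shape check (something@something.tld); an assumption for
# illustration, not a full RFC 5322 validator.
EMAIL_RE = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")
MAX_MESSAGE_LENGTH = 2000  # assumed limit; tune to your form


def validate_submission(name, email, message):
    """Return a list of validation errors (empty when the input is acceptable)."""
    errors = []
    if not name.strip():
        errors.append("name is required")
    if not EMAIL_RE.fullmatch(email.strip()):
        errors.append("email is not valid")
    if len(message) > MAX_MESSAGE_LENGTH:
        errors.append("message is too long")
    return errors


def sanitize(text):
    """Drop control characters, trim whitespace, and escape HTML special characters."""
    cleaned = "".join(ch for ch in text if ch.isprintable() or ch in "\n\t")
    return html.escape(cleaned.strip())


if __name__ == "__main__":
    print(validate_submission("Ann", "ann@example.com", "Hello"))
    print(sanitize("<script>alert(1)</script>"))
```

Validation rejects bad input outright, while sanitization makes accepted input safe to display; both should run on the server, since client-side checks can be bypassed.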

4. Rate Limiting and Throttling

Implement API rate limiting to protect your integration from abuse. Bots or attackers may try to flood your endpoints with traffic, potentially leading to denial-of-service (DoS) attacks or data scraping.

Suggested limits:

Max 60 requests per minute per IP

Max 1000 requests per day per user

Return the HTTP status code 429 Too Many Requests, ideally with a Retry-After header, to inform clients of limits.
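A per-IP limit such as 60 requests per minute can be sketched with a sliding-window counter. The class below is an in-memory illustration only: the `SlidingWindowLimiter` name and its parameters are hypothetical, and a production deployment would more likely rely on the API gateway, the WAF, or a shared store so that limits hold across multiple server processes.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per client within the last window_seconds."""

    def __init__(self, max_requests=60, window_seconds=60.0, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock                 # injectable so tests can control time
        self._hits = defaultdict(deque)    # client id -> timestamps of accepted requests

    def allow(self, client_ip):
        now = self.clock()
        hits = self._hits[client_ip]
        # Discard timestamps that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False  # caller should respond with 429 Too Many Requests
        hits.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(max_requests=3, window_seconds=60)
    print([limiter.allow("203.0.113.7") for _ in range(4)])
```

When `allow()` returns `False`, the handler would reject the submission before any call to the downstream API is made.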

5. Encrypt Data at Rest and in Transit

While HTTPS encrypts data in transit, you should also encrypt sensitive data at rest if you're storing it temporarily before pushing it to an API.

Encryption standards to consider:

AES-256 for data at rest

TLS 1.2 or higher for data in transit

Never store unencrypted form submissions on disk, in logs, or database tables.

6. Use Web Application Firewalls (WAFs)

A WAF helps filter out malicious requests to your contact form and API endpoints. It can block suspicious IPs, detect bots, and protect against common OWASP Top 10 threats.

Features to look for:

SQL injection protection

Cross-site scripting (XSS) filters

Geo-blocking for unknown regions

Cloudflare, AWS WAF, and Sucuri are popular options.

7. Secure API Keys and Secrets

API credentials should never be hardcoded into frontend code or exposed in browser-accessible scripts. Instead:

Store secrets in server-side environment variables.

Use secret management tools like HashiCorp Vault or AWS Secrets Manager.

Rotate keys periodically and revoke unused credentials.
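As a rough sketch of the first point, server-side code can read a credential from the environment and refuse to start without it. The variable name `CRM_API_KEY` and both helper functions are assumptions made up for this example.

```python
import os


def load_api_key(name: str = "CRM_API_KEY") -> str:
    """Read a secret from the environment; fail loudly if it is missing."""
    value = os.environ.get(name)
    if not value:
        raise RuntimeError(f"missing required environment variable: {name}")
    return value


def mask(secret: str, visible: int = 4) -> str:
    """Show only the last few characters, for safe display in diagnostics."""
    if len(secret) <= visible:
        return "*" * len(secret)
    return "*" * (len(secret) - visible) + secret[-visible:]
```

Failing at startup is usually preferable to discovering a missing key on the first form submission, and masking helps if the key ever needs to appear in a diagnostic message.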

8. Enable Logging and Monitoring

Real-time monitoring can alert you to anomalies like spikes in traffic or repeated failed API calls, indicating an attack or integration issue.

What to log:

Timestamped request metadata

Status codes and error responses

Authentication failures

Ensure logs themselves do not store sensitive information, and secure them with proper access control.
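One way to keep sensitive values out of logs is to redact known fields before the entry is written. The sketch below assumes a flat payload and a hypothetical set of field names; nested payloads would need a recursive version.

```python
import json
from datetime import datetime, timezone

# Hypothetical field names; adjust to match your form.
SENSITIVE_FIELDS = {"email", "phone", "message", "api_key"}


def redact(payload: dict) -> dict:
    """Replace sensitive values so they never reach the log file."""
    return {
        key: "[REDACTED]" if key in SENSITIVE_FIELDS else value
        for key, value in payload.items()
    }


def build_log_entry(path: str, status: int, payload: dict) -> str:
    """Build a timestamped JSON log line with request metadata only."""
    return json.dumps({
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "path": path,
        "status": status,
        "payload": redact(payload),
    })
```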

9. Implement CSRF and CAPTCHA Protection

To prevent bots and malicious actors from abusing your contact form, use:

CSRF tokens to verify that a submission originated from your site.

CAPTCHAs or reCAPTCHA to block automated submissions.

This reduces the risk of your API being bombarded with spam or test payloads.
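A CSRF token can be derived from the user's session with an HMAC, so the server can verify it without storing anything extra. This is a simplified sketch: most web frameworks ship their own CSRF protection, which is generally the better choice, and the names `SERVER_SECRET`, `issue_csrf_token`, and `verify_csrf_token` are assumptions for illustration.

```python
import hashlib
import hmac
import secrets

# Generated here for the example; in practice, load a stable secret
# from server-side configuration so tokens survive restarts.
SERVER_SECRET = secrets.token_bytes(32)


def issue_csrf_token(session_id: str) -> str:
    """Derive a token bound to the session; embed it in a hidden form field."""
    return hmac.new(SERVER_SECRET, session_id.encode(), hashlib.sha256).hexdigest()


def verify_csrf_token(session_id: str, token: str) -> bool:
    """Check the submitted token using a constant-time comparison."""
    expected = issue_csrf_token(session_id)
    return hmac.compare_digest(expected.encode(), token.encode())
```

A submission whose token does not verify for the current session should be rejected before any API call is made.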

10. Follow the Principle of Least Privilege

Only allow the contact form integration to access what it absolutely needs. If the form only needs to create a lead in a CRM, don’t give it permission to delete or modify records.

This principle minimizes potential damage if credentials are compromised.

11. Secure Third-Party Services

If your contact form integrates with external APIs (e.g., HubSpot, Mailchimp, Salesforce), ensure these services:

Use HTTPS exclusively

Provide granular API scopes

Offer audit trails

Comply with regulations and audit standards such as GDPR and SOC 2

Always vet vendors for their security posture before integration.

12. Test for Vulnerabilities Regularly

Conduct regular penetration testing and vulnerability scans to uncover weak spots in your form and API integration. Include both automated tools and manual ethical hacking.

Tools to try:

OWASP ZAP

Burp Suite

Postman Security Testing

Fix any discovered vulnerabilities immediately and document the fixes.

13. Comply with Data Protection Regulations

Different regions have specific laws about how user data should be collected, stored, and transmitted. For example:

GDPR (EU): Requires a lawful basis for processing, such as explicit user consent, and secure handling of PII.

CCPA (California): Grants users the right to know and delete their data.

HIPAA (US): Regulates health-related data.

Make sure your API-based contact form integration complies with relevant laws, especially if collecting data from users in regulated industries.

14. Include a Clear Privacy Policy

Your contact form should link to a privacy policy explaining:

What data is collected

How it will be used

Where it will be transmitted

How long it will be stored

This not only ensures compliance but also builds trust with users.

15. Fail Gracefully and Securely

Even with all protections, failures can occur. Ensure your contact form and API integration handle errors securely:

Don’t expose stack traces or internal server information in error responses.

Avoid leaking API credentials in logs or responses.

Inform the user of a failure without revealing system details.

Use secure error handling to maintain a good user experience while protecting your backend.
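The error-handling points above might look like this in practice: details are logged on the server under a random reference ID, and the user sees only a generic message. The function name `safe_submit`, the response shape, and the choice of status 502 for an upstream failure are assumptions for this sketch.

```python
import logging
import uuid

logger = logging.getLogger("contact_form")


def safe_submit(send, payload: dict):
    """Call send(payload); on failure, return a generic error response."""
    try:
        send(payload)
    except Exception:
        error_id = uuid.uuid4().hex
        # The stack trace stays in server-side logs, keyed by error_id.
        logger.exception("contact form submission failed (error_id=%s)", error_id)
        return 502, {
            "ok": False,
            "error": "We could not send your message. Please try again later.",
            "error_id": error_id,
        }
    return 200, {"ok": True}
```

The `error_id` gives support staff a way to find the matching log entry without exposing anything about the backend to the user.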

Conclusion

Securing API integrations for contact form data transmission is not optional—it’s a necessity in a world where data breaches are commonplace and user privacy is paramount. From implementing HTTPS and strong authentication to input validation and compliance, each layer of protection contributes to a robust, secure system.

Organizations that proactively secure their contact form integrations not only protect themselves from cyber threats but also enhance their credibility and user trust. By adopting these best practices, you're not just guarding data—you're safeguarding your brand.

Text

Easy Data delivers customized web scraping and analytics-ready datasets for agencies, brands, and market researchers in Southeast Asia.

Website: https://easydata.io.vn

Email: [email protected]

Phone: +84 352 860 385

Address: Ho Chi Minh City, Vietnam

Text

Scrape Lawyers Data from State Bar Directories

Scraping lawyers' data from State Bar Directories can be a valuable technique for law firm marketing. By extracting information such as contact details, practice areas, and geographical locations of lawyers, law firms can gain a competitive edge in their marketing efforts. This article will guide you through the process of effectively scraping lawyers' data from State Bar Directories and explain how it can benefit your law firm marketing strategy.

Scraping is the automated extraction of data from websites using web scraping tools or custom scripts. State Bar Directories are comprehensive databases containing information about licensed lawyers in a particular state. They typically include details such as the lawyer's name, contact information, law school attended, and areas of practice. When we at DataScrapingServices.com scrape this data, law firms can build their own database of potential clients and tailor their marketing campaigns accordingly.

List Of Data Fields

When scraping lawyers' data from State Bar Directories, it is important to identify the specific data fields that are relevant to your law firm marketing goals. Some common data fields to consider include:

- Lawyer's Name: This helps in personalizing your marketing communications.

- Contact Information: Phone numbers and email addresses allow you to reach out to potential clients.

- Areas Of Practice: Knowing the areas of law in which a lawyer specializes helps you target specific audiences.

- Geographical Location: This information is crucial for localized marketing campaigns.

By compiling these data fields, you can create a comprehensive and targeted list of lawyers to engage with for your marketing efforts.

Benefits of Scraping Lawyers' Data from State Bar Directories

Scraping lawyers' data from State Bar Directories offers several benefits for law firm marketing:

- Accurate And Up-To-Date Information: State Bar Directories are maintained by legal authorities, ensuring the data is reliable and current.

- Targeted Marketing Campaigns: By filtering lawyers based on practice areas and geographical locations, you can tailor your marketing messages to specific audiences.

- Competitive Advantage: Accessing lawyers' data gives you insights into your competitors' marketing strategies, allowing you to stay ahead in the market.

- Cost-Effective Lead Generation: By directly contacting lawyers who match your target criteria, you can save time and resources compared to traditional lead generation methods.

Overall, scraping lawyers' data from State Bar Directories empowers law firms to make informed marketing decisions and connect with potential clients more effectively.

Best Lawyers Data Scraping Services

Superlawyers.com Data Scraping

Personal Injury Lawyer Email List

Justia.com Lawyers Data Scraping

Avvo.com Lawyers Data Scraping

Verified US Attorneys Data from Lawyers.com

Extracting Data from Barlist Website

Attorney Email Database Scraping

Australia Lawyers Data Scraping

Bar Association Directory Scraping

Best State Bar Directory Lawyers Data Scraping Services in the USA:

New Orleans, Philadelphia, San Jose, Jacksonville, Arlington, Dallas, Fort Worth, Wichita, Boston, Sacramento, El Paso, Columbus, Houston, San Francisco, Raleigh, Memphis, Austin, San Antonio, Milwaukee, Bakersfield, Miami, Louisville, Albuquerque, Atlanta, Denver, San Diego, Oklahoma City, Seattle, Orlando, Colorado Springs, Chicago, Nashville, Virginia Beach, Omaha, Long Beach, Portland, Kansas City, Los Angeles, Washington, Las Vegas, Indianapolis, Tulsa, Honolulu, Tucson and New York.

Conclusion

Scraping lawyers' data from State Bar Directories is a powerful tool for law firm marketing. By leveraging this technique, law firms can access valuable information about lawyers in their target market and customize their marketing campaigns accordingly. Whether it's personalizing communication, targeting specific practice areas, or reaching out to lawyers in specific geographical locations, scraping lawyers' data can significantly enhance your law firm's marketing strategy. Embrace this technology-driven approach to gain a competitive edge and connect with potential clients more efficiently.

Website: Datascrapingservices.com

Email: [email protected]

#scrapelawyersdatafromstatebardirectories #statebardirectorydatascraping #lawyersdatascraping #lawyersemaillist #lawyersmailinglistscraping #webscrapingservices #datascrapingservices #datamining #dataanalytics #webscrapingexpert #webcrawler #webscraper #webscraping #datascraping #dataentry #emaillistscraping #emaildatabase

Text

Why Businesses Need Reliable Web Scraping Tools for Lead Generation.

The Importance of Data Extraction in Business Growth

Efficient data scraping tools are essential for companies looking to expand their customer base and enhance their marketing efforts. Web scraping enables businesses to extract valuable information from various online sources, such as search engine results, company websites, and online directories. This data fuels lead generation, helping organizations find potential clients and gain a competitive edge.

Not all web scraping tools provide the accuracy and efficiency required for high-quality data collection. Choosing the right solution ensures businesses receive up-to-date contact details, minimizing errors and wasted efforts. One notable option is Autoscrape, a widely used scraper tool that simplifies data mining for businesses across multiple industries.

Why Choose Autoscrape for Web Scraping?

Autoscrape is a powerful data mining tool that allows businesses to extract emails, phone numbers, addresses, and company details from various online sources. With its automation capabilities and easy-to-use interface, it streamlines lead generation and helps businesses efficiently gather industry-specific data.

The platform supports SERP scraping, enabling users to collect information from search engines like Google, Yahoo, and Bing. This feature is particularly useful for businesses seeking company emails, websites, and phone numbers. Additionally, Google Maps scraping functionality helps businesses extract local business addresses, making it easier to target prospects by geographic location.

How Autoscrape Compares to Other Web Scraping Tools

Many web scraping tools claim to offer extensive data extraction capabilities, but Autoscrape stands out due to its robust features:

Comprehensive Data Extraction: Unlike many free web scrapers, Autoscrape delivers structured and accurate data from a variety of online sources, ensuring businesses obtain quality information.

Automated Lead Generation: Businesses can set up automated scraping processes to collect leads without manual input, saving time and effort.

Integration with External Tools: Autoscrape provides seamless integration with CRM platforms, marketing software, and analytics tools via API and webhooks, simplifying data transfer.

Customizable Lead Lists: Businesses receive sales lead lists tailored to their industry, each containing 1,000 targeted entries. This feature covers sectors like agriculture, construction, food, technology, and tourism.

User-Friendly Data Export: Extracted data is available in CSV format, allowing easy sorting and filtering by industry, location, or contact type.
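As a small illustration of working with such an export, the sketch below filters a CSV of leads by industry and sorts the matches by location using Python's standard library. The column names `company`, `industry`, and `location` are hypothetical; the actual layout of an Autoscrape export is not described here and may differ.

```python
import csv
import io


def filter_leads(csv_text: str, industry: str) -> list:
    """Return rows for one industry, sorted by location.

    Assumes the export has 'industry' and 'location' columns.
    """
    rows = csv.DictReader(io.StringIO(csv_text))
    matches = [
        row for row in rows
        if row["industry"].strip().lower() == industry.lower()
    ]
    return sorted(matches, key=lambda row: row["location"])
```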

Who Can Benefit from Autoscrape?

Various industries rely on web scraping tools for data mining and lead generation services. Autoscrape caters to businesses needing precise, real-time data for marketing campaigns, sales prospecting, and market analysis. Companies in the following sectors find Autoscrape particularly beneficial:

Marketing Agencies: Extract and organize business contacts for targeted advertising campaigns.

Real Estate Firms: Collect property listings, real estate agencies, and investor contact details.

E-commerce Businesses: Identify potential suppliers, manufacturers, and distributors.

Recruitment Agencies: Gather data on potential job candidates and hiring companies.

Financial Services: Analyze market trends, competitors, and investment opportunities.

How Autoscrape Supports Business Expansion

Businesses that rely on lead generation services need accurate, structured, and up-to-date data to make informed decisions. Autoscrape enhances business operations by:

Improving Customer Outreach: With access to verified emails, phone numbers, and business addresses, companies can streamline their cold outreach strategies.

Enhancing Market Research: Collecting relevant data from SERPs, online directories, and Google Maps helps businesses understand market trends and competitors.

Increasing Efficiency: Automating data scraping processes reduces manual work and ensures consistent data collection without errors.

Optimizing Sales Funnel: By integrating scraped data with CRM systems, businesses can manage and nurture leads more effectively.

Testing Autoscrape: Free Trial and Accessibility

For businesses unsure about committing to a web scraper tool, Autoscrape offers a free account that provides up to 100 scrape results. This allows users to evaluate the platform's capabilities before making a purchase decision.

Whether a business requires SERP scraping, Google Maps data extraction, or automated lead generation, Autoscrape delivers a reliable and efficient solution that meets the needs of various industries. Choosing the right data scraping tool is crucial for businesses aiming to scale operations and enhance their customer acquisition strategies.

Investing in a well-designed web scraping solution like Autoscrape ensures businesses can extract valuable information quickly and accurately, leading to more effective marketing and sales efforts.

Text

Yuh as one of those human reviewers (not for the docs writer LLM but for Google search quality, bias, and text summaries more generally), it's a terrible terrible privacy mess to base LLMs off of data which is not published on the web. Yes there are issues with web scraping to train bots as far as intellectual property, but that info is all public in one way or another. I can scrape the New York Times for restaurant reviews and ask an LLM to create a review for an imaginary Thai restaurant, but those reviews were at least meant for public viewing in the first place. It wouldn't be the end of the world if the synthetic review copied something verbatim like "chicken enlivened by lemongrass and ginger".

Because LLMs are being trained on all the data of all the users, there's no guarantee that whatever goes into the "black box" will not come out to another user given the right prompting. It's just a statistical process of generating the most likely string of associated words, connections between which are reweighted based on reviewer and user feedback. If in the training data a string of connected words is presented, like "come to the baby shower at 6pm for Mary Poppins at 123 Blueberry Lane, Smallville, USA, 90210", that exact address could at some point be regurgitated in whole to another user, whether the prompting was intentional or not.

The LLM doesn't "know" what data is sensitive. The LLM does not "protect" data from one user from being used by another. The LLM doesn't have the contextual awareness to know that some kinds of information could present more risk for harm, or that some words represent more identifiable data than others.

All of the data is being amalgamated into the LLM likely with only some very broad tools for grooming the data set, like perhaps removing the corpus of one user or removing input with a certain percentage of non-English characters, say, and likely things like street addresses, phone numbers, names, and emails which can be easily removed are already being redacted from the data sets. But if it's put into words, it's extremely likely to be picked up indiscriminately as part of the training set.

The Google text products for search I've worked on can be very literal to the training data, usually repeating sentences wholesale when making summaries. An email LLM could be giving you whole sentences that had been written by a person, or whole phrases, but still be "ai generated"- it just happens that the most likely order for those words is exactly as a human or humans had written before. Obviously that makes sense because people say the same things all the time and the LLMs are probability machines. But because the training sets of data are so massive, it's not being searched every time to see if the text is a verbatim match to something the LLM had been trained on, or running a sniff check for whether that information is specific to an individual person. This "quoting" is more likely for prompts where there are fewer data points that the LLM is trained on, so compared to say, "write an email asking to reschedule the meeting to 2pm" which has 20 million examples, if I prompted "write an origin story for my DND character, a kind halfling bard named Kiara who travels in a mercenary band. Include how she discovered a love of music and how she joined the mercenaries" or "generate a table of semiconductor contractors for XYZ corp, include turnaround times for prototypes, include batch yield, include Unit cost" , we're a lot more likely to see people's (unpublished and private!) trade secrets being quoted. The corporations are going to have a fit, especially since they've been sold the Google Office suite for years.

At best, the data sets are being massaged by engineers using some complex filters to remove some information, and the bots are being put through sampling to see how often they return results which are directly quoted from text, and the reviewers are giving low ratings to responses which seem to quote very specific info out of nowhere. But if the bot changes just one word, or a few, while still rephrasing the information, it's impossible to check whether that information has a match in the training data without human review, and there's no guarantee another bot making the comparison like a plagiarism checker would catch it. Once the data is in the set, there are no guarantees.

The only way Google gets around these likelihoods of copyright infringement or privacy law is by having you the user waive your rights and agree as part of the terms of service not to include "sensitive" info, so if you're somehow hurt by a leak of your info or creative ideas, it's because you used the service wrong. Might not stand up in court, but still be advised not to agree to this stuff. It's highly irresponsible to use LLMs which are being trained on unpublished user data and I'm sure that companies are going to throw a fit and demand to opt out of being scraped for data at scale for their whole Google suite.

🚨⚠️ATTENTION FELLOW WRITERS⚠️🚨

If you use Google Docs for your writing, I highly encourage you to download your work, delete it from Google Docs, and transfer it to a different program/site, unless you want AI to start leeching off your hard work!!!

I personally have switched to Libre Office, but there are many different options. I recommend checking out r/degoogle for options.

Please reblog to spread the word!!

Text

Top Contact Discovery Tools for B2B Lead Generation

A contact discovery tool is a software solution designed to help businesses find and verify contact information for key decision-makers at companies. These tools are especially useful for B2B (business-to-business) marketing and sales teams looking to generate leads and build relationships with prospects. With a contact discovery tool, you can uncover email addresses, phone numbers, job titles, and social media profiles of individuals within specific companies or industries. This streamlines the process of lead generation and outreach, making it easier to connect with potential clients and partners.

How Does a Contact Discovery Tool Work?

Contact discovery tools typically work by utilizing vast databases, web scraping, public records, and social media profiles to gather contact information. These tools often offer search filters that allow users to narrow down results based on specific criteria such as:

Industry or company size

Location

Job title or department (e.g., marketing, sales, HR)

Social media profiles (e.g., LinkedIn)

Keywords or company names

Once the tool finds a match, it provides the contact’s name, email, phone number, and often additional details like the company’s website, social media handles, and business news. Many tools also offer email verification to ensure the contact information is valid and up-to-date.

Top Contact Discovery Tools for B2B Businesses

Here are some of the leading contact discovery tools that businesses can use to gather accurate contact information and enhance lead generation efforts:

1. Hunter.io

Hunter.io is one of the most popular contact discovery tools for finding and verifying email addresses. With its easy-to-use interface, businesses can search for emails based on domain names, company names, or specific keywords. The tool also offers an email verification feature to ensure that the email addresses are valid and deliverable.

Key Features:

Find and verify email addresses based on domains

Advanced search filters to find contacts within companies

Email address verification

Browser extension for quick access

2. Clearbit

Clearbit provides a suite of tools that help businesses find detailed contact information for leads, including email addresses, job titles, phone numbers, and company data. Clearbit’s data enrichment tool is particularly useful for enhancing existing leads with more detailed information. It's a great option for B2B companies that want to focus on targeting decision-makers and other key individuals.

Key Features:

Real-time data enrichment

Company and individual contact discovery

Integration with CRMs and marketing automation tools

API for seamless integration into existing systems

3. ZoomInfo

ZoomInfo offers one of the most extensive databases of business contacts. It enables businesses to discover contact details for decision-makers, including phone numbers, emails, job titles, and social media accounts. ZoomInfo uses AI to help you identify the most promising leads based on various signals, such as job changes or specific business interests.

Key Features:

Access to a large database of business contacts

Detailed insights into company profiles

Lead scoring and segmentation based on intent data

CRM and marketing automation tool integrations

4. Apollo.io

Apollo.io is a contact discovery and lead generation tool that helps B2B companies find, engage, and manage contacts more efficiently. It provides an extensive database of over 200 million contacts and allows businesses to search based on various filters such as job title, company size, and industry. Apollo also offers email sequences and analytics for outreach campaigns.

Key Features:

Access to millions of B2B contacts

Advanced search filters and segmentation

Email sequencing and outreach automation

CRM integration and analytics

5. Lusha

Lusha is a popular tool for discovering business contact information and improving lead generation efforts. It offers a browser extension that helps users access contact details directly from LinkedIn profiles. Lusha also provides email addresses, phone numbers, and social media profiles to facilitate outreach.

Key Features:

Real-time contact discovery from social media profiles (e.g., LinkedIn)

Access to phone numbers, emails, and social media accounts

Integration with CRM systems like Salesforce

Browser extension for quick contact discovery

6. Skrapp

Skrapp is an email-finding tool that helps businesses discover contact information for leads and prospects. With Skrapp, users can find email addresses based on domain names, company names, or social profiles like LinkedIn. It also includes an email verification tool to ensure the quality of the leads you gather.

Key Features:

Find email addresses based on domain or social profile

Bulk email search for large-scale lead generation

Email verification for accuracy

Integration with LinkedIn for easy lead discovery

7. Snov.io

Snov.io is a versatile contact discovery and email outreach tool that helps businesses find emails, verify them, and automate email campaigns. With its vast database, businesses can find leads across industries, use filters to refine searches, and export contact lists to CSV files or integrate with CRMs.

Key Features:

Find and verify email addresses

Email drip campaigns and automation

Lead segmentation and CRM integrations

Search for contacts by company domain or industry

8. Prospect.io

Prospect.io is an all-in-one sales automation tool that includes a contact discovery feature. With Prospect.io, users can search for leads, capture contact details, and integrate those leads into an automated outreach workflow. The tool provides email and phone number discovery, email verification, and detailed company profiles.

Key Features:

Contact discovery (email and phone number)

Email verification

Automated outreach and email sequences

CRM integration

9. FindThatLead

FindThatLead is another tool designed to help B2B businesses discover contact information for potential leads. It offers a simple platform that lets users search for leads based on domain names, industries, or LinkedIn profiles. With FindThatLead, users can also verify emails and export contact lists for outreach.

Key Features:

Email and phone number discovery

LinkedIn integration for easy contact finding

Bulk email search

Email verification

10. UpLead

UpLead is a contact discovery tool that allows users to search for verified business contacts based on company data, job titles, and industries. The platform focuses on data quality, offering a high level of accuracy for contacts and companies. UpLead also features an email verification system to ensure that your outreach efforts aren’t wasted on incorrect contact details.

Key Features:

Search for verified business contacts

Access to company information and decision-makers

Real-time email verification

Bulk contact export to CSV files

Conclusion

Contact discovery tools are an essential part of the B2B sales and marketing toolkit. They help businesses quickly identify and connect with key decision-makers, improving lead generation efforts and increasing sales opportunities. Whether you're looking for contact information for email outreach or to better understand potential leads, the tools listed above offer powerful solutions. Selecting the right contact discovery tool depends on your specific business needs, such as database size, integration capabilities, and pricing. With the right tool, you can unlock valuable leads and accelerate your sales process.

Text

Data Scraper

JOB OVERVIEW Job Summary: We are seeking a detail-oriented and tech-savvy Data Scraper to collect phone numbers and email addresses from various online sources. The ideal candidate will have a strong background in web scraping, data mini… Apply Now

Text

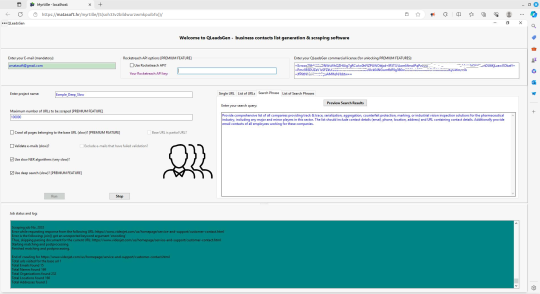

QLeadsGen is a cutting-edge software designed to streamline your B2B marketing and sales efforts through advanced artificial intelligence and natural language processing. It efficiently gathers crucial business data from the web, including:

- Email addresses

- Phone numbers

- Company URLs

- Contact names

- Organization details

- Locations and addresses

Here are some of its standout features:

- AI-driven search engine for pinpoint company targeting.

- Advanced Named Entity Recognition for precise contact extraction.

- Integration with RocketReach API for access to high-quality employee data.

- Intelligent email matching to consolidate contact information.

- A free version available to explore core functionalities.

With QLeadsGen, you can:

- Target leads in specific industries or create highly-focused contact lists.

- Provide search criteria or a list of websites for AI to scrape data.

Whether you're looking to enhance your lead generation process or to expand your sales outreach, QLeadsGen uses AI to make the process seamless. Try the free version to witness the impact of AI-powered contact scraping on your business.

#LeadGeneration #AIMarketing #B2BLeads #ContactScraping #SalesIntelligence #DigitalMarketing #EmailMarketing #WebScraping #DataMining #Leads #BusinessLeads

Learn more: https://matasoft.hr/QTrendControl/index.php/qleadsgen-business-contacts-web-scraping-software
