#aggregate function in mysql

Mastering Aggregate Functions in SQL: A Comprehensive Guide

Introduction to SQL: Structured Query Language (SQL) is the standard language for managing and querying data in relational databases. Among its capabilities is a set of aggregate functions, which compute a single summary value (a count, total, average, minimum, or maximum) from a set of rows, either across a whole table or per group when combined with GROUP BY.

Learn how to use SQL aggregate functions like SUM, AVG, COUNT, MIN, and MAX to analyze data efficiently. This comprehensive guide covers syntax, examples, and best practices to help you master SQL queries for data analysis.
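A minimal sketch of the five aggregate functions named above. It uses Python's built-in `sqlite3` module with an in-memory database so it runs without a MySQL server; the `orders` table and its values are hypothetical sample data, and the same SELECT should work unchanged in MySQL or PostgreSQL. It also shows a detail worth knowing: aggregates skip NULLs, except `COUNT(*)`, which counts every row.

```python
import sqlite3

def summarize_orders():
    """Run COUNT, SUM, AVG, MIN and MAX over a small hypothetical orders table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")
    conn.executemany(
        "INSERT INTO orders (customer, amount) VALUES (?, ?)",
        [("alice", 20.0), ("bob", 35.0), ("alice", 15.0), ("carol", 50.0),
         ("dave", None)],  # None is stored as NULL
    )
    # Without GROUP BY, the aggregates collapse all rows into one summary row.
    # COUNT(*) counts every row; COUNT(amount), SUM, AVG, MIN and MAX ignore NULLs.
    row = conn.execute(
        "SELECT COUNT(*), COUNT(amount), SUM(amount), AVG(amount), "
        "MIN(amount), MAX(amount) FROM orders"
    ).fetchone()
    conn.close()
    return row

print(summarize_orders())  # (5, 4, 120.0, 30.0, 15.0, 50.0)
```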

#aggregate functions#sql aggregate functions#aggregate functions in sql#aggregate functions in dbms#aggregate functions in sql server#aggregate functions in oracle#aggregate function in mysql#window function in sql#aggregate functions sql#best sql aggregate functions#aggregate functions and grouping#aggregate functions dbms#aggregate functions mysql#aggregate function#sql window functions#aggregate function tutorial#postgresql aggregate functions tutorial.

25 Udemy Paid Courses for Free with Certification (Only for Limited Time)

2023 Complete SQL Bootcamp from Zero to Hero in SQL

Become an expert in SQL by learning through concepts & hands-on coding :)

What you'll learn

Use SQL to query a database

Be comfortable putting SQL on your resume

Replicate real-world situations and query reports

Use SQL to perform data analysis

Learn to perform GROUP BY statements

Model real-world data and generate reports using SQL

Learn Oracle SQL Step by Step with Professionally Designed Content!

Solve SQL-Related Problems by Yourself by Creating Analytical Solutions!

Write, Read and Analyze Any SQL Queries Easily and Learn How to Play with Data!

Become a Job-Ready SQL Developer by Learning All the Skills You Will Need!

Write complex SQL statements to query the database and gain critical insight into data

Transition from the Very Basics to a Point Where You Can Effortlessly Work with Large SQL Queries

Learn Advanced Querying Techniques

Understand the difference between INNER JOIN, LEFT/RIGHT OUTER JOIN, and FULL OUTER JOIN

Complete SQL statements that use aggregate functions

Using joins, return columns from multiple tables in the same query

Enroll Now 👇👇👇👇👇👇👇 https://www.book-somahar.com/2023/10/25-udemy-paid-courses-for-free-with.html

Python Programming Complete Beginners Course Bootcamp 2023

2023 Complete Python Bootcamp || Python Beginners to advanced || Python Master Class || Mega Course

What you'll learn

Basics of Python programming

Control structures, containers, functions & modules

Object-oriented programming (OOP) in Python

How Python is used in the space sciences

Working with lists in Python

Working with strings in Python

Application of Python in Mars rovers sent by NASA

Learn PHP and MySQL for Web Application and Web Development

Unlock the Power of PHP and MySQL: Level Up Your Web Development Skills Today

What you'll learn

Use of PHP Functions

Use of PHP Variables

Use of MySQL

Use of Databases

T-Shirt Design for Beginner to Advanced with Adobe Photoshop

Unleash Your Creativity: Master T-Shirt Design from Beginner to Advanced with Adobe Photoshop

What you'll learn

Functions of Adobe Photoshop

Tools of Adobe Photoshop

T-Shirt Design Fundamentals

T-Shirt Design Projects

Complete Data Science BootCamp

Learn about Data Science, Machine Learning and Deep Learning and build 5 different projects.

What you'll learn

Learn about libraries like Pandas and NumPy, which are heavily used in data science.

Build impactful visualizations and charts using Matplotlib and Seaborn.

Learn about the machine learning lifecycle and different ML algorithms and their implementation in scikit-learn (sklearn).

Learn about deep learning and neural networks with TensorFlow and Keras.

Build 5 complete projects based on the concepts covered in the course.

Essentials User Experience Design Adobe XD UI UX Design

Learn UI Design, User Interface, User Experience design, UX design & Web Design

What you'll learn

How to become a UX designer

Become a UI designer

Full website design

All the techniques used by UX professionals

Build a Custom E-Commerce Site in React + JavaScript Basics

Build a Fully Customized E-Commerce Site with Product Categories, Shopping Cart, and Checkout Page in React.

What you'll learn

Introduction to the Document Object Model (DOM)

The Foundations of JavaScript

JavaScript Arithmetic Operations

Working with Arrays, Functions, and Loops in JavaScript

JavaScript Variables, Events, and Objects

JavaScript Hands-On - Build a Photo Gallery and Background Color Changer

Foundations of React

How to Scaffold an Existing React Project

Introduction to JSON Server

Styling an E-Commerce Store in React and Building out the Shop Categories

Introduction to Fetch API and React Router

The concept of "Context" in React

Building a Search Feature in React

Validating Forms in React

Complete Bootstrap & React Bootcamp with Hands-On Projects

Learn to Build Responsive, Interactive Web Apps using Bootstrap and React.

What you'll learn

Learn the Bootstrap Grid System

Learn to work with Bootstrap Three-Column Layouts

Learn to Build Bootstrap Navigation Components

Learn to Style Images using Bootstrap

Build Advanced, Responsive Menus using Bootstrap

Build Stunning Layouts using Bootstrap Themes

Learn the Foundations of React

Work with JSX and Functional Components in React

Build a Calculator in React

Learn the React State Hook

Debug React Projects

Learn to Style React Components

Build a Single and Multi-Player Connect-4 Clone with AI

Learn React Lifecycle Events

Learn React Conditional Rendering

Build a Fully Custom E-Commerce Site in React

Learn the Foundations of JSON Server

Work with React Router

Build an Amazon Affiliate E-Commerce Store from Scratch

Earn Passive Income by Building an Amazon Affiliate E-Commerce Store using WordPress, WooCommerce, WooZone, & Elementor

What you'll learn

Registering a Domain Name & Setting up Hosting

Installing WordPress CMS on Your Hosting Account

Navigating the WordPress Interface

The Advantages of WordPress

Securing a WordPress Installation with an SSL Certificate

Installing Custom Themes for WordPress

Installing WooCommerce, Elementor, & WooZone Plugins

Creating an Amazon Affiliate Account

Importing Products from Amazon to an E-Commerce Store using the WooZone Plugin

Building a Customized Shop with Menus, Headers, Branding, & Sidebars

Building WordPress Pages, such as Blogs, About Pages, and Contact Us Forms

Customizing Product Pages on a WordPress-Powered E-Commerce Site

Generating Traffic and Sales for Your Newly Published Amazon Affiliate Store

The Complete Beginner Course to Optimizing ChatGPT for Work

Learn how to make the most of ChatGPT's capabilities in efficiently aiding you with your tasks.

What you'll learn

Learn how to harness ChatGPT's functionalities to efficiently assist you in various tasks, maximizing productivity and effectiveness.

Delve into the captivating fusion of product development and SEO, discovering effective strategies to identify challenges, create innovative tools, and expertly

Understand how ChatGPT is a technological leap, akin to the impact of iconic tools like Photoshop and Excel, and how it can revolutionize work methodologies thr

Showcase your learning by creating a transformative project, optimizing your approach to work by identifying tasks that can be streamlined with artificial intel

AWS, JavaScript, React | Deploy Web Apps on the Cloud

Cloud Computing | Linux Foundations | LAMP Stack | DBMS | Apache | NGINX | AWS IAM | Amazon EC2 | JavaScript | React

What you'll learn

Foundations of Cloud Computing on AWS and Linode

Cloud Computing Service Models (IaaS, PaaS, SaaS)

Deploying and Configuring a Virtual Instance on Linode and AWS

Secure Remote Administration for Virtual Instances using SSH

Working with SSH Key Pair Authentication

The Foundations of Linux (Maintenance, Directory Commands, User Accounts, Filesystem)

The Foundations of Web Servers (NGINX vs Apache)

Foundations of Databases (SQL vs NoSQL), Database Transaction Standards (ACID vs CAP)

Key Terminology for Full Stack Development and Cloud Administration

Installing and Configuring LAMP Stack on Ubuntu (Linux, Apache, MariaDB, PHP)

Server Security Foundations (Network vs Hosted Firewalls)

Horizontal and Vertical Scaling of a Virtual Instance on Linode using NodeBalancers

Creating Manual and Automated Server Images and Backups on Linode

Understanding the Cloud Computing Phenomenon as Applicable to AWS

The Characteristics of Cloud Computing as Applicable to AWS

Cloud Deployment Models (Private, Community, Hybrid, VPC)

Foundations of AWS (Registration, Global vs Regional Services, Billing Alerts, MFA)

AWS Identity and Access Management (Mechanics, Users, Groups, Policies, Roles)

Amazon Elastic Compute Cloud (EC2) (AMIs, EC2 Users, Deployment, Elastic IP, Security Groups, Remote Admin)

Foundations of the Document Object Model (DOM)

Manipulating the DOM

Foundations of JavaScript Coding (Variables, Objects, Functions, Loops, Arrays, Events)

Foundations of ReactJS (Code Pen, JSX, Components, Props, Events, State Hook, Debugging)

Intermediate React (Passing Props, Destructuring, Styling, Key Property, AI, Conditional Rendering, Deployment)

Building a Fully Customized E-Commerce Site in React

Intermediate React Concepts (JSON Server, Fetch API, React Router, Styled Components, Refactoring, useContext Hook, useReducer, Form Validation)

Run Multiple Sites on a Cloud Server: AWS & Digital Ocean

Server Deployment | Apache Configuration | MySQL | PHP | Virtual Hosts | NS Records | DNS | AWS Foundations | EC2

What you'll learn

A solid understanding of the fundamentals of remote server deployment and configuration, including network configuration and security.

The ability to install and configure the LAMP stack, including the Apache web server, MySQL database server, and PHP scripting language.

Expertise in hosting multiple domains on one virtual server, including setting up virtual hosts and managing domain names.

Proficiency in virtual host file configuration, including creating and configuring virtual host files and understanding various directives and parameters.

Mastery of DNS zone file configuration, including creating and managing DNS zone files and understanding various record types and their uses.

A thorough understanding of AWS foundations, including the AWS global infrastructure, key AWS services, and features.

A deep understanding of Amazon Elastic Compute Cloud (EC2) foundations, including creating and managing instances, configuring security groups, and networking.

The ability to troubleshoot common issues related to remote server deployment, LAMP stack installation and configuration, virtual host file configuration, and D

An understanding of best practices for remote server deployment and configuration, including security considerations and optimization for performance.

Practical experience in working with remote servers and cloud-based solutions through hands-on labs and exercises.

The ability to apply the knowledge gained from the course to real-world scenarios and challenges faced in the field of web hosting and cloud computing.

A competitive edge in the job market, with the ability to pursue career opportunities in web hosting and cloud computing.

Cloud-Powered Web App Development with AWS and PHP

AWS Foundations | IAM | Amazon EC2 | Load Balancing | Auto-Scaling Groups | Route 53 | PHP | MySQL | App Deployment

What you'll learn

Understanding of cloud computing and Amazon Web Services (AWS)

Proficiency in creating and configuring AWS accounts and environments

Knowledge of AWS pricing and billing models

Mastery of Identity and Access Management (IAM) policies and permissions

Ability to launch and configure Elastic Compute Cloud (EC2) instances

Familiarity with security groups, key pairs, and Elastic IP addresses

Competency in using AWS storage services, such as Elastic Block Store (EBS) and Simple Storage Service (S3)

Expertise in creating and using Elastic Load Balancers (ELB) and Auto Scaling Groups (ASG) for load balancing and scaling web applications

Knowledge of DNS management using Route 53

Proficiency in PHP programming language fundamentals

Ability to interact with databases using PHP and execute SQL queries

Understanding of PHP security best practices, including SQL injection prevention and user authentication

Ability to design and implement a database schema for a web application

Mastery of PHP scripting to interact with a database and implement user authentication using sessions and cookies

Competency in creating a simple blog interface using HTML and CSS and protecting the blog content using PHP authentication.

Students will gain practical experience in creating and deploying a member-only blog with user authentication using PHP and MySQL on AWS.

CSS, Bootstrap, JavaScript And PHP Stack Complete Course

CSS, Bootstrap And JavaScript And PHP Complete Frontend and Backend Course

What you'll learn

Introduction to Frontend and Backend Technologies

Introduction to CSS, Bootstrap and JavaScript Concepts, and the PHP Programming Language

Practically Getting Started with CSS Styles, CSS 2D Transforms, CSS 3D Transforms

Bootstrap Crash Course with Bootstrap Concepts

Bootstrap Grid System, Forms, Badges and Alerts

Getting Started with JavaScript Variables, Values and Data Types, Operators and Operands

Write JavaScript scripts and gain knowledge of general JavaScript programming concepts

PHP Section: Introduction to PHP, Various Operator Types, PHP Arrays, PHP Conditional Statements

Getting Started with PHP Function Statements and PHP Decision Making

PHP 7 Concepts: PHP CSPRNG and PHP Scalar Type Declarations

Learn HTML - For Beginners

Learn how to create web pages using HTML

What you'll learn

How to Code in HTML

Structure of an HTML Page

Text Formatting in HTML

Embedding Videos

Creating Links

Anchor Tags

Tables & Nested Tables

Building Forms

Embedding Iframes

Inserting Images

Learn Bootstrap - For Beginners

Learn to create mobile-responsive web pages using Bootstrap

What you'll learn

Bootstrap Page Structure

Bootstrap Grid System

Bootstrap Layouts

Bootstrap Typography

Styling Images

Bootstrap Tables, Buttons, Badges, & Progress Bars

Bootstrap Pagination

Bootstrap Panels

Bootstrap Menus & Navigation Bars

Bootstrap Carousel & Modals

Bootstrap Scrollspy

Bootstrap Themes

JavaScript, Bootstrap, & PHP - Certification for Beginners

A Comprehensive Guide for Beginners interested in learning JavaScript, Bootstrap, & PHP

What you'll learn

Master Client-Side and Server-Side Interactivity using JavaScript, Bootstrap, & PHP

Learn to create mobile-responsive webpages using Bootstrap

Learn to create client-side and server-side validated input forms

Learn to interact with a MySQL database using PHP

Linode: Build and Deploy Responsive Websites on the Cloud

Cloud Computing | IaaS | Linux Foundations | Apache + DBMS | LAMP Stack | Server Security | Backups | HTML | CSS

What you'll learn

Understand the fundamental concepts and benefits of Cloud Computing and its service models.

Learn how to create, configure, and manage virtual servers in the cloud using Linode.

Understand the basic concepts of the Linux operating system, including file system structure, command-line interface, and basic Linux commands.

Learn how to manage users and permissions, configure network settings, and use package managers in Linux.

Learn about the basic concepts of web servers, including Apache and Nginx, and databases such as MySQL and MariaDB.

Learn how to install and configure web servers and databases on Linux servers.

Learn how to install and configure the LAMP stack to set up a web server and database for hosting dynamic websites and web applications.

Understand server security concepts such as firewalls, access control, and SSL certificates.

Learn how to secure servers using firewalls, manage user access, and configure SSL certificates for secure communication.

Learn how to scale servers to handle increasing traffic and load.

Learn about load balancing, clustering, and auto-scaling techniques.

Learn how to create and manage server images.

Understand the basic structure and syntax of HTML, including tags, attributes, and elements.

Understand how to apply CSS styles to HTML elements, create layouts, and use CSS frameworks.

PHP & MySQL - Certification Course for Beginners

Learn to Build Database Driven Web Applications using PHP & MySQL

What you'll learn

PHP Variables, Syntax, Variable Scope, Keywords

Echo vs. Print and Data Output

PHP Strings, Constants, Operators

PHP Conditional Statements

PHP Elseif and Switch Statements

PHP Loops - While, For

PHP Functions

PHP Arrays, Multidimensional Arrays, Sorting Arrays

Working with Forms - Post vs. Get

PHP Server Side - Form Validation

Creating MySQL Databases

Database Administration with phpMyAdmin

Administering Database Users and Defining User Roles

SQL Statements - Select, Where, And, Or, Insert, Get Last ID

MySQL Prepared Statements and Multiple Record Insertion

PHP Isset

MySQL - Updating Records

Linode: Deploy Scalable React Web Apps on the Cloud

Cloud Computing | IaaS | Server Configuration | Linux Foundations | Database Servers | LAMP Stack | Server Security

What you'll learn

Introduction to Cloud Computing

Cloud Computing Service Models (IaaS, PaaS, SaaS)

Cloud Server Deployment and Configuration (TFA, SSH)

Linux Foundations (File System, Commands, User Accounts)

Web Server Foundations (NGINX vs Apache, SQL vs NoSQL, Key Terms)

LAMP Stack Installation and Configuration (Linux, Apache, MariaDB, PHP)

Server Security (Software & Hardware Firewall Configuration)

Server Scaling (Vertical vs Horizontal Scaling, IP Swaps, Load Balancers)

React Foundations (Setup)

Building a Calculator in React (Code Pen, JSX, Components, Props, Events, State Hook)

Building a Connect-4 Clone in React (Passing Arguments, Styling, Callbacks, Key Property)

Building an E-Commerce Site in React (JSON Server, Fetch API, Refactoring)

Internet and Web Development Fundamentals

Learn how the Internet Works and Setup a Testing & Production Web Server

What you'll learn

How the Internet Works

Internet Protocols (HTTP, HTTPS, SMTP)

The Web Development Process

Planning a Web Application

Types of Web Hosting (Shared, Dedicated, VPS, Cloud)

Domain Name Registration and Administration

Nameserver Configuration

Deploying a Testing Server using WAMP & MAMP

Deploying a Production Server on Linode, Digital Ocean, or AWS

Executing Server Commands through a Command Console

Server Configuration on Ubuntu

Remote Desktop Connection and VNC

SSH Server Authentication

FTP Client Installation

FTP Uploading

Linode: Web Server and Database Foundations

Cloud Computing | Instance Deployment and Config | Apache | NGINX | Database Management Systems (DBMS)

What you'll learn

Introduction to Cloud Computing (Cloud Service Models)

Navigating the Linode Cloud Interface

Remote Administration using PuTTY, Terminal, SSH

Foundations of Web Servers (Apache vs. NGINX)

SQL vs NoSQL Databases

Database Transaction Standards (ACID vs. CAP Theorem)

Key Terms relevant to Cloud Computing, Web Servers, and Database Systems

Java Training Complete Course 2022

Learn Java Programming language with Java Complete Training Course 2022 for Beginners

What you'll learn

You will learn how to write a complete Java program that takes user input, processes it, and outputs the results

You will learn object-oriented programming (OOP) concepts in Java

You will learn Java concepts such as console output, Java variables and data types, Java operators, and more

You will be able to use Java for Selenium in testing and development

Learn To Create AI Assistant (JARVIS) With Python

How To Create AI Assistant (JARVIS) With Python Like the One from Marvel's Iron Man Movie

What you'll learn

How to create a personalized artificial intelligence assistant

How to create JARVIS AI

How to create an AI assistant

Keyword Research, Free Backlinks, Improve SEO -Long Tail Pro

LongTailPro is the keyword research service we at Coursenvy use for ALL our clients! In this course, find SEO keywords,

What you'll learn

Learn everything Long Tail Pro has to offer from A to Z!

Optimize keywords in your page/post titles, meta descriptions, social media bios, article content, and more!

Create content that caters to the NEW search engine algorithms and find endless keywords to rank for in ALL the search engines!

Learn how to use ALL of the top-rated keyword research software online!

Master analyzing your COMPETITORS' keywords!

Get high-quality backlinks that will ACTUALLY help your page rank!

#udemy#free course#paid course for free#design#development#ux ui#xd#figma#web development#python#javascript#php#java#cloud

Unlock Your Data Superpower: 2025 Complete SQL Bootcamp from Zero to Hero in SQL

Think about the last time you interacted with an app. Whether you were booking a ride, scrolling through your favorite playlist, or even checking your emails, data was working hard in the background to deliver that experience. But have you ever wondered how all that data is stored, accessed, or queried?

The answer is SQL.

Whether you’re dreaming of becoming a data analyst, stepping into backend development, or simply want to get smarter about tech, learning SQL is one of the most high-leverage skills you can build in 2025. And there’s one course that stands out from the rest: the 2025 Complete SQL Bootcamp from Zero to Hero in SQL.

Let’s break it down and see why this bootcamp might be the missing puzzle piece in your career upgrade.

Why SQL Is Still King in 2025

Let’s get something straight — SQL isn’t just alive; it’s thriving.

While new languages and tools emerge every year, SQL has remained the gold standard for working with relational databases. Whether you're pulling reports, managing large sets of information, or automating workflows, SQL is the tool professionals reach for.

Here’s why SQL remains timeless:

Universality: SQL is used everywhere – from tech giants to startups to public agencies.

Ease of Use: Simple, readable syntax that’s beginner-friendly.

Career Value: Mastering SQL opens doors to roles in data science, business intelligence, software engineering, and more.

Foundation for Advanced Tech: SQL is the common language of database systems and data platforms such as PostgreSQL, MySQL, Snowflake, and BigQuery, so learning it is often the first step toward working with them and with AI data pipelines.

Who This Course is For

The beauty of the 2025 Complete SQL Bootcamp from Zero to Hero in SQL is that it speaks to everyone — whether you’re starting from scratch or looking to refresh your knowledge.

Beginners: You’ve never touched code before? No problem.

Career Changers: Want to move into a data-related job? This course is built for transition.

Students & Graduates: Want to make your resume stand out? SQL makes that happen.

Professionals: Already in tech or business? This course helps you communicate better with developers and analysts.

Entrepreneurs: Understand your data, make smarter decisions, and stop depending on someone else for basic reporting.

What You’ll Learn — A Breakdown

So what exactly will you walk away with?

This bootcamp isn’t just about watching videos and collecting certificates. It’s about building real skills. The course is structured in a way that teaches through doing. Each module includes hands-on exercises, projects, and challenges that will reinforce your learning step-by-step.

✅ SQL Basics

Understanding what SQL is and how databases work

SELECT, FROM, WHERE – the foundational commands

Filtering, sorting, and limiting data sets
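To make the basics concrete, here is a small sketch of SELECT, FROM, WHERE, ORDER BY, and LIMIT working together. It assumes Python's built-in `sqlite3` module rather than the PostgreSQL setup the course uses, and the `products` table and its prices are hypothetical. LIMIT is accepted by SQLite, MySQL, and PostgreSQL; SQL Server uses TOP and the SQL standard uses FETCH FIRST instead.

```python
import sqlite3

def top_expensive_products(conn, min_price, limit):
    """Return (name, price) rows priced at or above min_price, most expensive first."""
    # WHERE filters rows, ORDER BY sorts them, LIMIT caps how many come back.
    # The ? placeholders are bound by sqlite3, never spliced in with string formatting.
    return conn.execute(
        "SELECT name, price FROM products WHERE price >= ? ORDER BY price DESC LIMIT ?",
        (min_price, limit),
    ).fetchall()

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (name TEXT, price REAL)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?)",
    [("pen", 1.5), ("notebook", 4.0), ("backpack", 35.0), ("lamp", 22.0)],
)
print(top_expensive_products(conn, 4.0, 2))  # [('backpack', 35.0), ('lamp', 22.0)]
```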

✅ Intermediate Concepts

Aggregate functions (COUNT, SUM, AVG)

GROUP BY and HAVING

JOINs – Inner, Left, Right, and Full Outer Joins
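The intermediate topics combine naturally in one query. The sketch below is only an illustration, again using Python's `sqlite3` with hypothetical `customers` and `orders` tables: a LEFT JOIN attaches orders to customers, GROUP BY produces one row per customer with COUNT and SUM, and HAVING filters those groups after aggregation, which a WHERE clause cannot do.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'alice'), (2, 'bob'), (3, 'carol');
    INSERT INTO orders (customer_id, amount) VALUES
        (1, 20.0), (1, 15.0), (2, 35.0), (1, 10.0), (2, 5.0);
    """
)

def customer_totals(conn, min_orders):
    """Per-customer order count and total, keeping customers with >= min_orders orders."""
    # LEFT JOIN keeps customers with no orders (carol); COUNT(o.id) is 0 for them,
    # and COALESCE turns their NULL SUM into 0.
    # GROUP BY makes one row per customer; HAVING filters the aggregated groups.
    return conn.execute(
        """
        SELECT c.name, COUNT(o.id) AS n_orders, COALESCE(SUM(o.amount), 0) AS total
        FROM customers AS c
        LEFT JOIN orders AS o ON o.customer_id = c.id
        GROUP BY c.id, c.name
        HAVING COUNT(o.id) >= ?
        ORDER BY total DESC
        """,
        (min_orders,),
    ).fetchall()

print(customer_totals(conn, 2))  # [('alice', 3, 45.0), ('bob', 2, 40.0)]
```

Swapping LEFT JOIN for an INNER JOIN would drop customers without orders entirely, which is the practical difference between the join types listed above.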

✅ Real-World Scenarios

Writing complex queries on real databases

Troubleshooting and debugging code

Using SQL with Python and other tools
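As a rough sketch of using SQL from Python, the hypothetical helper `run_query` below runs a parameterized query through the standard `sqlite3` module, returns rows as dictionaries keyed by column name (taken from `cursor.description`), and wraps database errors with the failing SQL so that debugging a typo is easier. The `sales` table is made-up sample data; with PostgreSQL or MySQL you would use a driver for that database instead, and its placeholder style may differ.

```python
import sqlite3

def run_query(conn, sql, params=()):
    """Run a parameterized query and return a list of dicts keyed by column name."""
    try:
        cur = conn.execute(sql, params)
    except sqlite3.OperationalError as exc:
        # Typical causes: a misspelled table or column name, or a syntax error.
        raise ValueError(f"query failed: {exc}; SQL was: {sql!r}") from exc
    columns = [desc[0] for desc in cur.description]  # column names from the result
    return [dict(zip(columns, row)) for row in cur.fetchall()]

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (region TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("north", 100.0), ("south", 80.0), ("north", 50.0)],
)
print(run_query(conn, "SELECT region, SUM(amount) AS total FROM sales "
                      "GROUP BY region ORDER BY region"))
# [{'region': 'north', 'total': 150.0}, {'region': 'south', 'total': 80.0}]
```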

✅ Advanced Topics

Subqueries and Nested Queries

Window Functions

Indexing and Query Optimization

Data normalization and design
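A compact sketch of three of the advanced topics, under the same assumptions as a teaching example: Python's `sqlite3` with a hypothetical `employees` table. It shows a subquery in a WHERE clause, a window function (RANK, which needs SQLite 3.25+ or MySQL 8.0+), and an index checked with SQLite's EXPLAIN QUERY PLAN; PostgreSQL uses plain EXPLAIN, and the exact plan text differs between SQLite versions.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE employees (name TEXT, dept TEXT, salary INTEGER)")
conn.executemany(
    "INSERT INTO employees VALUES (?, ?, ?)",
    [("ana", "eng", 120), ("ben", "eng", 100), ("cy", "ops", 70),
     ("dee", "ops", 90), ("eli", "eng", 95)],
)

# Subquery: employees paid more than the company-wide average (95.0 here).
above_avg = conn.execute(
    "SELECT name FROM employees "
    "WHERE salary > (SELECT AVG(salary) FROM employees) ORDER BY name"
).fetchall()

# Window function: rank salaries within each department. Unlike GROUP BY,
# every employee row is kept and the rank is added as an extra column.
ranked = conn.execute(
    "SELECT name, dept, RANK() OVER (PARTITION BY dept ORDER BY salary DESC) AS dept_rank "
    "FROM employees ORDER BY dept, dept_rank"
).fetchall()

# Index: lets the engine look rows up by dept instead of scanning the whole table.
conn.execute("CREATE INDEX idx_employees_dept ON employees (dept)")
plan = conn.execute(
    "EXPLAIN QUERY PLAN SELECT name FROM employees WHERE dept = ?", ("ops",)
).fetchall()

print(above_avg)  # [('ana',), ('ben',)]
print(ranked)
print([row[-1] for row in plan])  # plan detail text; wording varies by version
```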

✅ Project-Based Learning

You’ll complete real-world projects to build your confidence and portfolio. These projects include data exploration, reporting dashboards, and building query logic for business problems.

Why This Bootcamp Beats Other SQL Courses

There are hundreds of SQL courses out there. So why should you invest your time in this one?

Here’s what makes it different:

1. Structured, But Flexible

The course is laid out with a clear path from beginner to advanced, but you can jump around at your own pace. No pressure, no overwhelm.

2. Instructor Support

You won’t be left on your own. The instructor is responsive, professional, and invested in your success. Ask questions and get answers when you’re stuck.

3. Lifetime Access & Updates

Once you enroll, you get lifetime access. You can revisit lessons, rework exercises, and even review updates added to the course in the future.

4. High-Quality Content

Clean audio, sharp visuals, clear explanations. No fluff, just quality education that respects your time.

5. Massive Community

You’re not learning alone. Join thousands of others learning alongside you. Share insights, get help, and stay motivated.

Career Benefits of Learning SQL in 2025

So what’s in it for you, really? What can mastering SQL lead to?

🚀 New Job Opportunities

Roles like:

Data Analyst

Business Intelligence Developer

Backend Developer

Database Administrator

Financial Analyst

SQL is often listed as a must-have skill for these positions.

💼 Promotion or Career Growth

If you're already working in tech, marketing, or business, adding SQL to your toolbox makes you more efficient and valuable to your team.

💰 Higher Salary

SQL skills can boost your earning potential. SQL-certified professionals and analysts often earn more due to their ability to make data-driven decisions.

🧠 Improved Problem-Solving

SQL teaches logic. Writing queries is like solving puzzles — you learn to ask better questions and think more analytically.

How Long Will It Take?

This depends on your pace. On average:

1 hour a day: You’ll finish in 4–5 weeks

Weekend warrior: You’ll complete it in 6–8 weeks

Fast-tracker: It’s possible to complete the entire bootcamp in 2–3 weeks if you're dedicated

Consistency is key. The course is designed to move with you, whether you're learning after work or in between gigs.

Tools & Platforms You’ll Use

You don’t need a fancy setup. Just a laptop and an internet connection.

This course teaches:

PostgreSQL (free and industry-standard)

pgAdmin for managing databases

Standard SQL syntax you can apply to MySQL, SQLite, and more (with minor dialect differences)

Everything is covered step-by-step, even installation, so you won’t feel lost.

Testimonials That Speak Volumes

Still on the fence? Here’s what some students say:

“I went from zero to writing complex SQL queries in less than a month. Got a job offer in data analysis after finishing this course!” — Sarah T., Career Switcher

“The instructor is clear and engaging. Loved the real-world examples. Worth every second.” — Amir D., Marketing Manager

“This bootcamp helped me build a data portfolio I could actually show in interviews.” — Jason L., Graduate

Final Thoughts: Invest in a Skill That Pays for Itself

In 2025, data is everywhere. But the real power lies in knowing how to use it. SQL gives you that power. It’s not just a coding language—it’s a career accelerator, a problem-solving toolkit, and a stepping stone to countless opportunities.

Whether you're eyeing a promotion, planning a career switch, or just want to build something meaningful with data, the 2025 Complete SQL Bootcamp from Zero to Hero in SQL is your gateway.

Don’t wait around for opportunity to knock—build the skills that open doors.

Karenderia Multiple Restaurant System Nulled Script 1.1.8

Karenderia Multiple Restaurant System Nulled Script – Ultimate Solution for Online Food Ordering If you're looking to launch a professional, multi-restaurant food ordering platform without spending a fortune, the Karenderia Multiple Restaurant System Nulled Script is your perfect starting point. This powerful and feature-rich PHP-based script is designed for entrepreneurs and developers who want to build scalable food delivery systems like UberEats, DoorDash, or Grubhub—with full control and customization options at your fingertips. What is Karenderia Multiple Restaurant System Nulled Script? The Karenderia Multiple Restaurant System Nulled Script (KMRS) is a comprehensive food ordering and delivery platform created using PHP and MySQL. With its intuitive admin panel, responsive design, and robust set of features, it enables users to manage multiple restaurants, accept orders, and oversee every aspect of their online food business. This nulled version provides you with all premium functionalities unlocked—for free. Technical Specifications Script Type: PHP Web Application Database: MySQL Responsive Layout: Fully Mobile-Friendly Admin Panel: Advanced Admin Dashboard with Full Control Third-Party Integration: Supports SMS Gateways, Payment APIs, Google Maps Multilingual Support: Yes License: Nulled – All Premium Features Available Top Features and Benefits Multi-Restaurant Capability: Manage unlimited restaurants from a single dashboard. Real-Time Order Management: Receive, accept, or reject orders in real-time with notification support. Customer and Merchant Panels: Separate and secure login areas for both customers and restaurant owners. Flexible Payment Methods: Includes PayPal, Stripe, cash on delivery, and more. Google Maps Integration: Seamless delivery area mapping and customer location tracking. Promo & Coupons: Easily create marketing campaigns using discounts and promo codes. Advanced Reporting: Track sales, performance, and analytics with detailed reports. 
Why Choose Karenderia Multiple Restaurant System Nulled Script? By choosing the Karenderia Multiple Restaurant System Nulled Script, you gain access to a premium solution without the premium price tag. It's ideal for startups and developers looking to test, deploy, or scale their food ordering businesses fast and affordably. Plus, it allows you to keep 100% of the profit—no monthly subscription fees or hidden costs. Use Cases Food Delivery Startups: Create your own localized delivery service in days, not months. Cloud Kitchen Aggregators: Manage various cloud kitchen brands under a single platform. Restaurant Franchises: Oversee multiple branches with centralized control and performance tracking. How to Install and Use Download the Karenderia Multiple Restaurant System Nulled Script from our secure server. Upload the files to your web hosting environment using FTP or cPanel. Create a MySQL database and import the provided SQL file. Configure the database and site settings in the config files. Access the admin panel, add restaurants, and start accepting orders! Frequently Asked Questions (FAQs) Is the nulled script safe to use? Yes, our version of the Karenderia Multiple Restaurant System Nulled Script is carefully checked and tested to ensure it's free of malware or backdoors. However, always scan with your own antivirus software for added safety. Can I customize the look and feel of the platform? Absolutely! The script is fully open-source, allowing developers to make UI and backend modifications to match your brand and business requirements. Is this suitable for non-technical users? While some basic setup knowledge is required, we provide step-by-step instructions to help even beginners launch the platform with minimal effort. Does it support mobile apps? Yes, Karenderia comes with optional mobile app support (not included in this download), making it even easier for customers to order via Android and iOS. Can I use this script on multiple domains?

Since this is the nulled version, there are no restrictions—you can use it on as many domains as you wish. Get More Nulled Tools Looking to further enhance your WordPress site? Check out our flatsome NULLED theme—one of the best responsive WooCommerce themes available for free on our platform. Want to boost your SEO performance? Download All in One SEO Pack Pro and watch your website traffic grow! Don’t miss the opportunity to launch your food delivery business with the Karenderia Multiple Restaurant System . Download it now and experience the power of premium features—without the premium price!


Text to SQL LLM: What is Text to SQL And Text to SQL Methods


How text-to-SQL approaches help AI write good SQL

SQL is vital for organisations that need quick, accurate, data-driven insights for decision-making. Google uses Gemini to generate SQL from natural language (text-to-SQL). This feature lets non-technical users access data directly and boosts developer and analyst productivity.

What is text-to-SQL?

Text-to-SQL is a capability that lets systems generate SQL queries from plain language. Its main purpose is to remove the need to write SQL by hand, so that data can be accessed in everyday language. This maximises developer and analyst efficiency while letting non-technical people interact with data directly.

Underlying technology

Recent text-to-SQL improvements have relied on robust large language models (LLMs) such as Gemini for reasoning and information synthesis. The Gemini family of models produces high-quality SQL and code for text-to-SQL solutions. Depending on the need, different model versions or custom fine-tuning may be used to ensure good SQL generation, especially for particular dialects.

Google Cloud availability

The following Google Cloud products currently support text-to-SQL:

BigQuery Studio: available through the Data Canvas SQL node, the SQL Editor, and SQL Generation.

Cloud SQL Studio: “Help me code” for Postgres, MySQL, and SQL Server.

AlloyDB Studio and Cloud Spanner Studio: “Help me code” tools.

AlloyDB AI: a public-preview feature that lets users query the database in natural language.

Vertex AI: direct access to the Gemini models that power these product features.

Text-to-SQL Challenges

The most advanced LLMs, such as Gemini 2.5, can reason about complex natural-language questions and convert them into functional SQL, including joins, filters, and aggregations. Even so, text-to-SQL still struggles with real-world databases and user queries, and handling these critical problems requires additional methods. The challenges include:

Providing Business-Specific Context

Like human analysts, LLMs need a lot of context, or experience, to write appropriate SQL. That context may be implicit or explicit. It includes business-case implications, semantic meaning, schema information, relevant columns, and data samples. Fine-tuning a specialist model for every database schema and every change to it is rarely scalable or cost-effective. Training data also rarely captures semantics and business knowledge, which are often poorly documented. Without context, for example, an LLM cannot know that a particular cat_id value in a table denotes shoes.

User Intent Recognition

Natural language is less precise than SQL. When a question is vague, an LLM may still produce an answer, which can lead to hallucinations, whereas a human analyst would ask clarifying questions. For example, “What are the best-selling shoes?” could mean the most popular shoes by quantity or by revenue, and it is unclear how many results are wanted. Non-technical users need accurate, correct answers, whereas technical users may benefit from an acceptable, nearly perfect query. The system should guide the user, explain its decisions, and ask clarifying questions.

LLM Generation Limits

General-purpose LLMs are good at writing and summarising, but they may struggle to follow instructions precisely, especially for obscure SQL features. SQL accuracy requires careful attention to specifications, which can be difficult. The many differences between SQL dialects are also hard to manage. For example, MySQL uses MONTH(timestamp_column), while BigQuery SQL uses EXTRACT(MONTH FROM timestamp_column).
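The Python sketch below illustrates the dialect problem in its simplest form: it looks up the month-extraction expression for a target dialect. Only the two SQL forms come from the text. The `MONTH_TEMPLATES` table and the `month_expr` helper are hypothetical, and a real generator would need far broader dialect coverage than this two-entry table.

```python
# Hypothetical per-dialect templates for "month of a timestamp column".
# Only the MySQL and BigQuery forms come from the text; the table is illustrative.
MONTH_TEMPLATES = {
    "mysql": "MONTH({col})",
    "bigquery": "EXTRACT(MONTH FROM {col})",
}


def month_expr(dialect: str, column: str) -> str:
    """Return the month-extraction SQL expression for the given dialect."""
    try:
        template = MONTH_TEMPLATES[dialect.lower()]
    except KeyError:
        raise ValueError(f"unsupported dialect: {dialect}") from None
    return template.format(col=column)


print(month_expr("mysql", "timestamp_column"))     # MONTH(timestamp_column)
print(month_expr("BigQuery", "timestamp_column"))  # EXTRACT(MONTH FROM timestamp_column)
```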

Techniques for Overcoming Text-to-SQL Challenges

Google Cloud is constantly upgrading its text-to-SQL agents with various methods to improve quality and address the concerns identified above. These methods include:

Contextual learning and intelligent retrieval: Provide the model with data, business concepts, and schema. Relevant datasets, tables, and columns are indexed and then retrieved using vector search for semantic matching. Next, user-provided schema annotations, SQL examples, business-rule implementations, and query samples are loaded. All of this is delivered to the model in the prompt, taking advantage of Gemini's long context windows.
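Below is a toy Python sketch of the retrieval step. It makes three assumptions:

- The table descriptions (`TABLE_DOCS`) are hypothetical.
- Bag-of-words cosine similarity is a crude stand-in for the vector-embedding search described above.
- The best-matching schema notes are simply prepended to the question to form the prompt.

```python
import math
import re
from collections import Counter

# Hypothetical schema annotations; a real system would embed these with a model
# and match them using vector search rather than word counts.
TABLE_DOCS = {
    "orders": "orders placed by customers with order date, quantity and revenue",
    "products": "product catalog; cat_id 7 means shoes, cat_id 3 means shirts",
    "employees": "staff records with salary and department",
}


def _vector(text: str) -> Counter:
    """Bag-of-words term counts (a crude stand-in for an embedding)."""
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve_tables(question: str, k: int = 2) -> list:
    """Return the k table names whose descriptions best match the question."""
    q = _vector(question)
    ranked = sorted(TABLE_DOCS, key=lambda t: _cosine(q, _vector(TABLE_DOCS[t])), reverse=True)
    return ranked[:k]


def build_prompt(question: str, k: int = 2) -> str:
    """Assemble the retrieved schema context and the question into one prompt."""
    context = "\n".join(f"-- {t}: {TABLE_DOCS[t]}" for t in retrieve_tables(question, k))
    return f"{context}\nQuestion: {question}\nSQL:"


print(retrieve_tables("What are the best-selling shoes by revenue?"))  # ['orders', 'products']
```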

Disambiguation using LLMs: The system asks the user clarifying questions to determine intent. This usually involves planning LLM calls that check whether a question can be answered with the available information. If it cannot, the calls generate follow-up questions to clarify the user's purpose.

SQL-aware foundation models: Powerful LLMs such as the Gemini family are used with targeted fine-tuning to ensure high-quality, dialect-specific SQL generation.

Validation and re-prompting: LLM generation is non-deterministic. Non-AI methods such as query parsing or dry runs of the generated SQL give an early signal of whether something crucial was missed. Models can usually correct their mistakes when given examples and guidance, so this feedback is sent back to the model for another attempt.
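The validation-and-retry loop can be sketched in Python with the standard-library `sqlite3` module. SQLite's `EXPLAIN` serves as the dry run: it compiles the statement against the schema without executing it, so unknown tables or columns surface as errors. The generator below is a hypothetical stand-in for an LLM call. Other engines, such as BigQuery, have their own dry-run mechanisms.

```python
import sqlite3
from typing import Callable, Optional


def dry_run(conn: sqlite3.Connection, sql: str) -> Optional[str]:
    """Compile the query without executing it; return the error text, or None if valid."""
    try:
        # EXPLAIN makes SQLite prepare (parse, resolve names, plan) the statement
        # without running it, so syntax errors and unknown columns surface here.
        conn.execute("EXPLAIN " + sql)
        return None
    except sqlite3.Error as exc:
        return str(exc)


def generate_with_feedback(
    conn: sqlite3.Connection,
    generate: Callable[[str, Optional[str]], str],
    question: str,
    max_attempts: int = 3,
) -> str:
    """Ask a (hypothetical) generator for SQL, feeding dry-run errors back until it compiles."""
    feedback = None
    for _ in range(max_attempts):
        sql = generate(question, feedback)
        feedback = dry_run(conn, sql)
        if feedback is None:
            return sql
    raise RuntimeError(f"no valid SQL after {max_attempts} attempts: {feedback}")


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, revenue REAL)")


def fake_generate(question: str, feedback: Optional[str]) -> str:
    # Hypothetical stand-in for an LLM: the first draft misspells a column,
    # and the retry (which receives the error text) fixes it.
    if feedback is None:
        return "SELECT SUM(revenu) FROM orders"
    return "SELECT SUM(revenue) FROM orders"


print(generate_with_feedback(conn, fake_generate, "What is total revenue?"))
```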

Self-consistency: This reduces dependence on any single generation round and boosts reliability. Several queries are generated for the same question, using different models or approaches, and the best one is chosen. Agreement among multiple models increases accuracy.
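One simple way to realise self-consistency is to execute every candidate query and keep one whose result the most candidates agree on. The Python sketch below makes three assumptions:

- The candidates have already been generated; here they are hard-coded.
- An in-memory SQLite database stands in for the target engine.
- A candidate that fails to run gets no vote.

```python
import sqlite3
from collections import Counter


def pick_by_consensus(conn: sqlite3.Connection, candidates: list) -> str:
    """Execute every candidate SQL string and return one whose result most candidates share."""
    results = {}
    for sql in candidates:
        try:
            rows = conn.execute(sql).fetchall()
        except sqlite3.Error:
            continue  # a query that fails to run gets no vote
        # Sort rows (by repr, which also works for mixed types) so row order does not matter.
        results[sql] = tuple(sorted(rows, key=repr))
    if not results:
        raise ValueError("no candidate query executed successfully")
    winning_result, _ = Counter(results.values()).most_common(1)[0]
    # Return the first candidate, in input order, that produced the winning result.
    return next(sql for sql, res in results.items() if res == winning_result)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (product TEXT, qty INTEGER)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", [("boot", 5), ("sandal", 2), ("boot", 1)])

candidates = [
    "SELECT product, SUM(qty) FROM sales GROUP BY product",
    "SELECT s.product, SUM(s.qty) FROM sales AS s GROUP BY s.product",
    "SELECT product, COUNT(*) FROM sales GROUP BY product",  # counts orders, not units
    "SELECT product, SUM(quantity) FROM sales GROUP BY product",  # unknown column: fails
]
# Two of the three runnable queries agree, so the first candidate is printed.
print(pick_by_consensus(conn, candidates))
```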

Semantic layer: Connects users' everyday language to complex data structures.

Query history and usage pattern analysis: Helps reveal user intent.

Entity resolution: Helps determine user intent.

Model fine-tuning: Sometimes used to ensure models generate adequate SQL for specific dialects.

Evaluation and Measurement

Improving AI-driven capabilities requires robust evaluation. Academic benchmarks such as BIRD-bench are useful, but they may not adequately reflect real workloads and organisations. Google Cloud has therefore developed synthetic benchmarks that cover a variety of SQL engines, products, and dialects. They also cover engine-specific features such as DDL, DML, administrative tasks, and sophisticated queries and schemas. Evaluation combines offline and user metrics with automated and human methods, including LLM-as-a-judge, to understand performance on ambiguous tasks cost-effectively. Continuous evaluation lets teams quickly test new models, prompting tactics, and other improvements.
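Execution accuracy checks whether a generated query returns the same rows as a reference query, and it is a widely used offline metric in text-to-SQL benchmarks. Below is a minimal Python sketch, assuming SQLite and hand-written (predicted, gold) pairs. It is only an illustration of the metric and does not describe Google's internal evaluation.

```python
import sqlite3
from collections import Counter


def execution_match(conn: sqlite3.Connection, predicted_sql: str, gold_sql: str) -> bool:
    """True if both queries return the same rows, ignoring row order."""
    try:
        predicted = conn.execute(predicted_sql).fetchall()
    except sqlite3.Error:
        return False  # a query that cannot run counts as wrong
    gold = conn.execute(gold_sql).fetchall()
    return Counter(predicted) == Counter(gold)  # multiset comparison keeps duplicates


def execution_accuracy(conn: sqlite3.Connection, pairs) -> float:
    """Fraction of (predicted_sql, gold_sql) pairs whose results match."""
    pairs = list(pairs)
    if not pairs:
        return 0.0
    return sum(execution_match(conn, p, g) for p, g in pairs) / len(pairs)


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sales (product TEXT, qty INTEGER)")
conn.executemany("INSERT INTO sales VALUES (?, ?)", [("boot", 5), ("sandal", 2), ("boot", 1)])

pairs = [
    ("SELECT COUNT(product) FROM sales", "SELECT COUNT(*) FROM sales"),  # same answer: 3
    ("SELECT MIN(qty) FROM sales", "SELECT MAX(qty) FROM sales"),        # 1 vs 5: mismatch
]
print(execution_accuracy(conn, pairs))  # 0.5
```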

#SQLLLM#TexttoSQLLLM#TexttoSQL#VertexAI#TexttoSQLMethods#ChallengesofTexttoSQL#technology#technews#news#technologynews#technologytrends#govindhtech


SQL Online Course with Certificate | Learn SQL Anytime

Master SQL from the comfort of your home with our comprehensive SQL online course with certificate. Perfect for beginners and professionals alike, this course is designed to equip you with the skills needed to work confidently with databases and data queries. Through step-by-step video tutorials, interactive assignments, and real-world projects, you’ll learn how to retrieve, manipulate, and analyze data using SQL across platforms like MySQL, PostgreSQL, and Microsoft SQL Server. The curriculum covers core concepts including SELECT statements, joins, subqueries, aggregations, and database functions—making it ideal for aspiring data analysts, developers, and business intelligence professionals. Upon completion, you’ll receive an industry-recognized certificate that showcases your expertise and can be added to your resume or LinkedIn profile. With lifetime access, self-paced modules, and expert support, this online SQL course is your gateway to mastering one of the most in-demand data skills today. Enroll now and become SQL-certified on your schedule.


Best Data Analysis Courses Online [2025] | Learn, Practice & Get Placement

In an era where data is considered more valuable than oil, data analytics should not be treated as a hobby or niche skill; it is a requirement for many careers. Fresh graduates, working professionals looking to upskill, and career changers can all benefit from a comprehensive Master's in Data Analytics. It provides thorough training in tools such as Python, SQL, and Excel, giving them greater visibility when applying in the competitive job market of 2025.

What is a Master’s in Data Analytics?

A Master's in Data Analytics is comprehensive training designed for career advancement, with the goal of building expertise in:

· Data wrangling and cleaning

· Database querying and reporting

· Data visualization and storytelling

· Predictive analytics and basic machine learning

What Will You Learn? (Tools & Topics Breakdown)

1. Python for Data Analysis

· Learn how to automate data collection, clean and preprocess datasets, and run basic statistical models.

· Use libraries like Pandas, NumPy, Matplotlib, and Seaborn.

· Build scripts to analyze large volumes of structured and unstructured data.

2. SQL for Data Querying

· Master Structured Query Language (SQL) to access, manipulate, and retrieve data from relational databases.

· Work with real-world databases like MySQL or PostgreSQL.

· Learn advanced concepts like joins, window functions, subqueries, and data aggregation.

3. Advanced Excel for Data Crunching

· Learn pivot tables, dashboards, VLOOKUP, INDEX-MATCH, macros, conditional formatting, and data validation.

· Create visually appealing, dynamic dashboards for quick insights.

· Use Excel as a lightweight BI tool.

4. Power BI or Tableau for Data Visualization

· Convert raw numbers into powerful visual insights using Power BI or Tableau.

· Build interactive dashboards, KPIs, and geographical charts.

· Use DAX and calculated fields to enhance your reports.

5. Capstone Projects & Real-World Case Studies

· Work on industry-focused projects: Sales forecasting, Customer segmentation, Financial analysis, etc.

· Build your portfolio with 3-5 fully documented projects.

6. Soft Skills + Career Readiness

Resume assistance and LinkedIn profile enhancement.

Mock interviews organized by domain experts.

Soft skills training in data storytelling and client presentations.

A certification that counts toward your resume.

100% Placement Support: What Does That Mean?

Most premium online programs today come with dedicated placement support. This includes:

Resume Review & LinkedIn Optimization

Mock Interviews & Feedback

Job Referrals & Placement Drives

Career Counseling

Top Companies Hiring Data Analysts in 2025

These companies are always on the lookout for data-savvy professionals:

· Google

· Amazon

· Flipkart

· Deloitte

· EY

· Infosys

· Accenture

· Razorpay

· Swiggy

· HDFC, ICICI, and other financial institutions, plus many more companies you can target

Why Choose Our Program in 2025?

Here's what sets our Master's in Data Analytics course apart:

Mentors with 8-15 years of industry experience

Project-based curriculum with real datasets

Certifications aligned with industry roles

Dedicated placement support until you're hired

Access from anywhere: flexible for working professionals

Live doubt-solving, peer networking & community support

#Data Analytics Jobs#Data Analysis Courses Online#digital marketing#Jobs In delhi#salary of data analyst


Aggregate Functions

Imagine you’re a detective, and you have a big box of clues (that’s your MySQL database!). Sometimes, you don’t need to look at every single clue in detail. Instead, you want to get a quick summary, like “How many clues do I have in total?” or “What’s the most important clue?”. That’s where aggregate functions come in! They help us summarize information from our database in a snap. Here are the…
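The post is cut off here, so the Python example below is a minimal sketch of the idea. It uses the standard-library `sqlite3` module in place of MySQL, and the `clues` table is hypothetical. COUNT, SUM, AVG, MIN, and MAX each collapse many rows into one value, and GROUP BY produces one summary row per group.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
# Hypothetical "box of clues": each clue has a location and an importance score.
conn.execute("CREATE TABLE clues (id INTEGER PRIMARY KEY, location TEXT, importance INTEGER)")
conn.executemany(
    "INSERT INTO clues (location, importance) VALUES (?, ?)",
    [("library", 3), ("garden", 9), ("library", 5), ("kitchen", 1)],
)

# Each aggregate summarises all rows into a single value.
total, score_sum, avg_score, lowest, highest = conn.execute(
    "SELECT COUNT(*), SUM(importance), AVG(importance), MIN(importance), MAX(importance) "
    "FROM clues"
).fetchone()
print(total, score_sum, avg_score, lowest, highest)  # 4 18 4.5 1 9

# With GROUP BY, the aggregate is computed once per group instead.
per_location = conn.execute(
    "SELECT location, COUNT(*) FROM clues GROUP BY location ORDER BY location"
).fetchall()
print(per_location)  # [('garden', 1), ('kitchen', 1), ('library', 2)]
```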


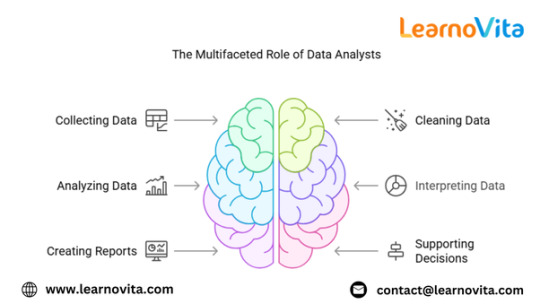

The Essential Tools Every Data Analyst Must Know

The role of a data analyst requires a strong command of various tools and technologies to efficiently collect, clean, analyze, and visualize data. These tools help transform raw data into actionable insights that drive business decisions. Whether you’re just starting your journey as a data analyst or looking to refine your skills, understanding the essential tools will give you a competitive edge in the field, and the best Data Analytics Online Training can help you build that understanding.

SQL – The Backbone of Data Analysis

Structured Query Language (SQL) is one of the most fundamental tools for data analysts. It allows professionals to interact with databases, extract relevant data, and manipulate large datasets efficiently. Since most organizations store their data in relational databases like MySQL, PostgreSQL, and Microsoft SQL Server, proficiency in SQL is a must. Analysts use SQL to filter, aggregate, and join datasets, making it easier to conduct in-depth analysis.
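The sketch below filters, joins, and aggregates in a single statement. It uses Python's built-in `sqlite3` module as a stand-in for MySQL or PostgreSQL, and the `customers` and `orders` tables and their data are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL, status TEXT);
    INSERT INTO customers VALUES (1, 'North'), (2, 'South'), (3, 'North');
    INSERT INTO orders (customer_id, amount, status) VALUES
        (1, 120.0, 'paid'), (1, 80.0, 'refunded'), (2, 50.0, 'paid'),
        (3, 200.0, 'paid'), (2, 30.0, 'paid');
""")

# WHERE filters rows, JOIN brings in each order's region, COUNT/SUM with
# GROUP BY aggregate per region, and HAVING filters the aggregated groups.
rows = conn.execute("""
    SELECT c.region, COUNT(*) AS paid_orders, SUM(o.amount) AS revenue
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    WHERE o.status = 'paid'
    GROUP BY c.region
    HAVING SUM(o.amount) > 100
    ORDER BY revenue DESC
""").fetchall()
print(rows)  # [('North', 2, 320.0)]
```

The South region is dropped by HAVING: its paid orders total only 80.0.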

Excel – The Classic Data Analysis Tool

Microsoft Excel remains a powerful tool for data analysis, despite the rise of more advanced technologies. With its built-in formulas, pivot tables, and data visualization features, Excel is widely used for quick data manipulation and reporting. Analysts often use Excel for smaller datasets and preliminary data exploration before moving to more complex tools. If you want to learn more about Data Analytics, consider enrolling in one of the Best Online Training & Placement programs. They often offer certifications, mentorship, and job placement opportunities to support your learning journey.

Python and R – The Power of Programming

Python and R are two of the most commonly used programming languages in data analytics. Python, with libraries like Pandas, NumPy, and Matplotlib, is excellent for data manipulation, statistical analysis, and visualization. R is preferred for statistical computing and machine learning tasks, offering packages like ggplot2 and dplyr for data visualization and transformation. Learning either of these languages can significantly enhance an analyst’s ability to work with large datasets and perform advanced analytics.

Tableau and Power BI – Turning Data into Visual Insights

Data visualization is a critical part of analytics, and tools like Tableau and Power BI help analysts create interactive dashboards and reports. Tableau is known for its ease of use and drag-and-drop functionality, while Power BI integrates seamlessly with Microsoft products and allows for automated reporting. These tools enable business leaders to understand trends and patterns through visually appealing charts and graphs.

Google Analytics – Essential for Web Data Analysis

For analysts working in digital marketing and e-commerce, Google Analytics is a crucial tool. It helps track website traffic, user behavior, and conversion rates. Analysts use it to optimize marketing campaigns, measure website performance, and make data-driven decisions to improve user experience.

BigQuery and Hadoop – Handling Big Data

With the increasing volume of data, analysts need tools that can process large datasets efficiently. Google BigQuery and Apache Hadoop are popular choices for handling big data. These tools allow analysts to perform large-scale data analysis and run queries on massive datasets without compromising speed or performance.

Jupyter Notebooks – The Data Analyst’s Playground

Jupyter Notebooks provide an interactive environment for coding, data exploration, and visualization. Data analysts use them to write and execute Python or R scripts, document their findings, and present results in a structured manner. They are widely used in data science and analytics projects because of their flexibility and ease of use.

Conclusion

Mastering the essential tools of data analytics is key to becoming a successful data analyst. SQL, Excel, Python, Tableau, and other tools play a vital role in every stage of data analysis, from extraction to visualization. As businesses continue to rely on data for decision-making, proficiency in these tools will open doors to exciting career opportunities in the field of analytics.


Course Code: CSE 370

Course Name: Database Systems

Semester: Summer 24

Lab 02: SQL Subqueries & Aggregate Functions

Activity List

● All commands are shown in the red boxes.

● In the green box, write the appropriate query/answer.

● All new queries should be typed in the command window after mysql>

● Start by connecting to the server using: mysql -u root -p [password: <just press enter>]

● For more MySQL queries, go to…
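For readers without the lab handout, the sketch below shows two common subquery patterns that wrap an aggregate: a plain scalar subquery and a correlated one. It uses Python's `sqlite3` instead of the MySQL client, and the `employees` table is hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE employees (name TEXT, dept TEXT, salary INTEGER);
    INSERT INTO employees VALUES
        ('Ana', 'CSE', 900), ('Bo', 'CSE', 700), ('Cy', 'EEE', 500), ('Di', 'EEE', 400);
""")

# Scalar subquery: the inner AVG does not depend on the outer row,
# so it yields one value (625.0) that every row is compared with.
above_avg = conn.execute("""
    SELECT name FROM employees
    WHERE salary > (SELECT AVG(salary) FROM employees)
    ORDER BY name
""").fetchall()
print(above_avg)  # [('Ana',), ('Bo',)]

# Correlated subquery: the inner AVG refers to e.dept, so it is
# evaluated per outer row, giving each department's own average.
top_in_dept = conn.execute("""
    SELECT e.name, e.dept FROM employees AS e
    WHERE e.salary > (SELECT AVG(salary) FROM employees WHERE dept = e.dept)
    ORDER BY e.dept
""").fetchall()
print(top_in_dept)  # [('Ana', 'CSE'), ('Cy', 'EEE')]
```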


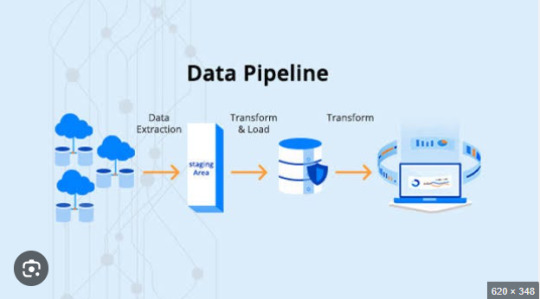

ETL Pipeline Performance Tuning: How to Reduce Processing Time

In today’s data-driven world, businesses rely heavily on ETL pipelines to extract, transform, and load large volumes of data efficiently. However, slow ETL processes can lead to delays in reporting, bottlenecks in data analytics, and increased infrastructure costs. Optimizing ETL pipeline performance is crucial for ensuring smooth data workflows, reducing processing time, and improving scalability.

In this article, we’ll explore various ETL pipeline performance tuning techniques to help you enhance speed, efficiency, and reliability in data processing.

1. Optimize Data Extraction

The extraction phase is the first step of the ETL pipeline and involves retrieving data from various sources. Inefficient data extraction can slow down the entire process. Here’s how to optimize it:

a) Extract Only Required Data

Instead of pulling all records, use incremental extraction to fetch only new or modified data.

Implement change data capture (CDC) to track and extract only updated records.
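A common lightweight form of incremental extraction uses a timestamp watermark. This is a simpler technique than log-based CDC, which reads the database's change log instead. The Python sketch below assumes a hypothetical `source_orders` table in SQLite with an ISO-8601 `updated_at` column; values in that format sort correctly as plain strings.

```python
import sqlite3


def extract_incremental(conn: sqlite3.Connection, last_watermark: str):
    """Fetch only rows changed since the last run, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, amount, updated_at FROM source_orders "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # The newest updated_at seen becomes the starting point for the next run.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE source_orders (id INTEGER, amount REAL, updated_at TEXT)")
conn.executemany(
    "INSERT INTO source_orders VALUES (?, ?, ?)",
    [(1, 10.0, "2025-01-01T09:00"), (2, 20.0, "2025-01-02T09:00"), (3, 30.0, "2025-01-03T09:00")],
)

batch, mark = extract_incremental(conn, "2025-01-01T12:00")
print(len(batch), mark)  # 2 2025-01-03T09:00
batch, mark = extract_incremental(conn, mark)
print(len(batch), mark)  # 0 2025-01-03T09:00
```

A strict `>` comparison can miss rows that share the watermark's timestamp but commit later. Log-based CDC avoids that gap.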

b) Use Efficient Querying Techniques

Optimize SQL queries with proper indexing, partitioning, and WHERE clauses to fetch data faster.

Avoid SELECT * statements; instead, select only required columns.

c) Parallel Data Extraction

If dealing with large datasets, extract data in parallel using multi-threading or distributed processing techniques.

2. Improve Data Transformation Efficiency

The transformation phase is often the most resource-intensive step in an ETL pipeline. Optimizing transformations can significantly reduce processing time.

a) Push Transformations to the Source Database

Offload heavy transformations (aggregations, joins, filtering) to the source database instead of handling them in the ETL process.

Use database-native stored procedures to improve execution speed.

b) Optimize Joins and Aggregations

Make JOIN operations cheaper with proper indexing, and reduce their number through denormalization.

Use hash joins instead of nested-loop joins for large datasets.

Apply window functions for aggregations instead of multiple group-by queries.
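The Python sketch below shows what replacing a GROUP BY-and-join with a window function looks like. It computes each order's customer total both ways in SQLite and checks that the results agree. The `orders` table is hypothetical, and window functions require SQLite 3.25 or later.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL);
    INSERT INTO orders (customer, amount) VALUES
        ('acme', 100), ('acme', 300), ('globex', 50);
""")

# Window version: one query keeps every order row and attaches its customer's total.
rows = conn.execute("""
    SELECT id, customer, amount,
           SUM(amount) OVER (PARTITION BY customer) AS customer_total
    FROM orders
    ORDER BY id
""").fetchall()
print(rows)  # [(1, 'acme', 100.0, 400.0), (2, 'acme', 300.0, 400.0), (3, 'globex', 50.0, 50.0)]

# Equivalent GROUP BY version: aggregate separately, then join back to the detail rows.
joined = conn.execute("""
    SELECT o.id, o.customer, o.amount, t.total
    FROM orders AS o
    JOIN (SELECT customer, SUM(amount) AS total FROM orders GROUP BY customer) AS t
      ON t.customer = o.customer
    ORDER BY o.id
""").fetchall()
assert joined == rows  # both approaches produce the same result
```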

c) Implement Data Partitioning

Partition data horizontally (sharding) to distribute processing load.

Use bucketing and clustering in data warehouses like BigQuery or Snowflake for optimized query performance.

d) Use In-Memory Processing

Utilize in-memory computation engines like Apache Spark instead of disk-based processing to boost transformation speed.

3. Enhance Data Loading Speed

The loading phase in an ETL pipeline can become a bottleneck if not managed efficiently. Here’s how to optimize it:

a) Bulk Loading Instead of Row-by-Row Inserts

Use batch inserts to load data in chunks rather than inserting records individually.

Commands such as COPY in Redshift or LOAD DATA INFILE in MySQL improve bulk-loading efficiency.
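The same idea can be sketched at the application level with Python's `sqlite3` module. Rows are inserted with `executemany` in chunks, with one transaction per chunk, rather than committed one by one. The `target` table and the batch size are illustrative assumptions. Native bulk loaders such as COPY or LOAD DATA INFILE are typically faster still.

```python
import sqlite3

INSERT_SQL = "INSERT INTO target (id, value) VALUES (?, ?)"


def load_in_batches(conn: sqlite3.Connection, rows, batch_size: int = 1000) -> int:
    """Insert rows chunk by chunk, committing once per chunk; return the row count."""
    loaded = 0
    batch = []
    for row in rows:
        batch.append(row)
        if len(batch) == batch_size:
            with conn:  # one transaction for the whole chunk (rolled back on error)
                conn.executemany(INSERT_SQL, batch)
            loaded += len(batch)
            batch = []
    if batch:  # flush the final, partially filled chunk
        with conn:
            conn.executemany(INSERT_SQL, batch)
        loaded += len(batch)
    return loaded


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE target (id INTEGER, value TEXT)")
n = load_in_batches(conn, ((i, f"row-{i}") for i in range(2500)), batch_size=1000)
print(n, conn.execute("SELECT COUNT(*) FROM target").fetchone()[0])  # 2500 2500
```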

b) Disable Indexes and Constraints During Load

Temporarily disable foreign keys and indexes before loading large datasets, then re-enable them afterward.

This prevents unnecessary index updates for each insert, reducing load time.

c) Use Parallel Data Loading

Distribute data loading across multiple threads or nodes to reduce execution time.

Use distributed processing frameworks like Hadoop, Spark, or Google BigQuery for massive datasets.

4. Optimize ETL Pipeline Infrastructure

Hardware and infrastructure play a crucial role in ETL pipeline performance. Consider these optimizations:

a) Choose the Right ETL Tool & Framework

Tools like Apache NiFi, Airflow, Talend, and AWS Glue offer different performance capabilities. Select the one that fits your use case.

Use cloud-native ETL solutions (e.g., Snowflake, AWS Glue, Google Dataflow) for auto-scaling and cost optimization.

b) Leverage Distributed Computing

Use distributed processing engines like Apache Spark instead of single-node ETL tools.

Implement horizontal scaling to distribute workloads efficiently.

c) Optimize Storage & Network Performance

Store intermediate results in columnar formats (e.g., Parquet, ORC) instead of row-based formats (CSV, JSON) for better read performance.

Use compression techniques to reduce storage size and improve I/O speed.

Optimize network latency by placing ETL jobs closer to data sources.

5. Implement ETL Monitoring & Performance Tracking

Continuous monitoring helps identify performance issues before they impact business operations. Here’s how:

a) Use ETL Performance Monitoring Tools

Use logging and alerting tools like Prometheus, Grafana, or AWS CloudWatch to monitor ETL jobs.

Set up real-time dashboards to track pipeline execution times and failures.

b) Profile and Optimize Slow Queries

Use EXPLAIN (EXPLAIN PLAN in Oracle) to analyze query execution plans.

Identify and remove slow queries, redundant processing, and unnecessary transformations.
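SQLite's counterpart is EXPLAIN QUERY PLAN. The Python sketch below uses a hypothetical `orders` table and prints the plan for a filter query before and after adding an index. The exact plan wording varies between SQLite versions, so the comments show typical output only.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
QUERY = "SELECT amount FROM orders WHERE customer_id = ?"


def plan(sql: str) -> str:
    """Return SQLite's plan description; the detail text is the last column of each row."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, (42,)).fetchall()
    return " | ".join(str(row[-1]) for row in rows)


before = plan(QUERY)
print(before)  # typically "SCAN orders": a full table scan
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(QUERY)
print(after)   # typically "SEARCH orders USING INDEX idx_orders_customer (customer_id=?)"
```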

c) Implement Retry & Error Handling Mechanisms

Use checkpointing to resume ETL jobs from failure points instead of restarting them.

Implement automatic retries for temporary failures like network issues.
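Automatic retries are often implemented with exponential backoff. The Python sketch below works as follows:

- It retries only on exception types treated as transient. This choice is an assumption you would tune per pipeline.
- It doubles the wait after each failure.
- It re-raises once the attempts run out.

`flaky_extract` is a hypothetical step used for demonstration.

```python
import time


def run_with_retries(task, max_attempts=4, base_delay=1.0,
                     retry_on=(ConnectionError, TimeoutError)):
    """Call task(); on a transient error, wait base_delay * 2**attempt and try again."""
    for attempt in range(max_attempts):
        try:
            return task()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            time.sleep(base_delay * 2 ** attempt)  # 1x, 2x, 4x, ... the base delay


calls = {"n": 0}


def flaky_extract():
    """Hypothetical extraction step that hits two network errors, then succeeds."""
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("network blip")
    return "ok"


print(run_with_retries(flaky_extract, base_delay=0.01))  # ok
print(calls["n"])  # 3
```

Errors outside `retry_on`, such as a `ValueError` from bad data, propagate immediately because retrying would not fix them.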

Conclusion

Improving ETL pipeline performance requires optimizing data extraction, transformation, and loading processes, along with choosing the right tools and infrastructure. By implementing best practices such as parallel processing, in-memory computing, bulk loading, and query optimization, businesses can significantly reduce ETL processing time and improve data pipeline efficiency.

If you’re dealing with slow ETL jobs, start by identifying bottlenecks, optimizing SQL queries, and leveraging distributed computing frameworks to handle large-scale data processing effectively. By continuously monitoring and fine-tuning your ETL workflows, you ensure faster, more reliable, and scalable data processing—empowering your business with real-time insights and decision-making capabilities.


5 Powerful Programming Tools Every Data Scientist Needs

Data science is the field that involves statistics, mathematics, programming, and domain knowledge to extract meaningful insights from data. The explosion of big data and artificial intelligence has led to the use of specialized programming tools by data scientists to process, analyze, and visualize complex datasets efficiently.

Choosing the right tools is very important for anyone who wants to build a career in data science. There are many programming languages and frameworks, but some tools have gained popularity because of their robustness, ease of use, and powerful capabilities.

This article explores the top 5 programming tools in data science that every aspiring and professional data scientist should know.

Top 5 Programming Tools in Data Science

1. Python

Python is arguably the leading language for data science, thanks to its versatility, simplicity, and extensive libraries. It is used across data science tasks, including data cleaning, statistics, machine learning, and even deep learning.

Key Python Features for Data Science:

Packages & Frameworks: Pandas, NumPy, Matplotlib, Scikit-learn, TensorFlow, PyTorch

Easy to Learn: The syntax is simple and readable

High Scalability: Well suited both to ad hoc data analysis and to enterprise business applications

Community Support: One of the largest developer communities, contributing to continuous improvement

Python's versatility makes it the go-to language for professionals who want to excel at data science and AI.

2. R

R is another powerful programming language designed specifically for statistical computing and data visualization. It is extremely popular among statisticians and researchers in academia and industry.

Key Features of R for Data Science:

Statistical Computing: Inbuilt functions for complex statistical analysis

Data Visualization: Libraries like ggplot2 and Shiny for interactive visualizations

Comprehensive Packages: CRAN repository hosts thousands of data science packages

Machine Learning Integration: Supports algorithms for predictive modeling and data mining

R is a great option for data scientists who specialize in statistical analysis and data visualization.

3. SQL (Structured Query Language)

SQL is important for data scientists to query, manipulate, and manage structured data efficiently. Because relational databases hold huge amounts of data, SQL is an essential skill in data science.

Important Features of SQL for Data Science

Data Extraction: Retrieve and filter large datasets efficiently

Data Manipulation: Aggregate, join, and transform datasets for analysis

Database Management: Supports relational database management systems (RDBMS) such as MySQL, PostgreSQL, and Microsoft SQL Server

Integration with Other Tools: Works seamlessly with Python, R, and BI tools

SQL is indispensable for data professionals who handle structured data stored in relational databases.

4. Apache Spark

Apache Spark is one of the most widely used open-source frameworks for large-scale data processing, analytics, and machine learning. It is built to process data volumes that are too large for single-machine tools.

Core Features of Apache Spark for Data Science:

Data Processing: Handles large datasets at high speed

In-Memory Computation: Better performance than disk-based systems

MLlib: A built-in machine learning library for scalable models

Compatibility with Other Tools: Supports Python (PySpark), R (SparkR), and SQL

Apache Spark is best suited for data scientists working on big data and real-time analytics projects.

5. Tableau

Tableau is one of the most powerful data visualization tools used in data science. Users can develop interactive and informative dashboards without needing extensive knowledge of coding.

Main Features of Tableau for Data Science:

Drag-and-Drop Interface: Suitable for non-programmers

Advanced Visualizations: Can represent complex graphs, heatmaps, and geospatial data

Data Source Integration: Connects to databases, cloud storage, and APIs

Real-Time Analytics: Dynamic reporting enables fast decision-making

Tableau is a very popular business intelligence and data storytelling tool that makes data-driven decision-making accessible to non-technical stakeholders.

Data Science and Programming Tools in India

India has emerged as a major data science and AI hub, with many businesses, start-ups, and government organizations making significant investments in AI-driven solutions. The growing demand for data scientists has boosted adoption of programming tools such as Python, R, SQL, and Apache Spark.

Government and Industry Initiatives Driving Data Science Adoption in India

National AI Strategy: NITI Aayog's vision for AI-driven economic transformation.

Digital India Initiative: Promotes data-driven governance and the integration of AI into public services.

AI Adoption in Enterprises: Large enterprises such as TCS, Infosys, and Reliance have been adopting AI for business optimisation.

Emerging Startups in AI & Analytics: Many Indian startups are building AI-driven products with leading data science tools.

Challenges to Data Science Growth in India

Despite rapid advancements, data science growth in India still faces several challenges:

Skill Gaps: Demand for skilled professionals outstrips supply.

Data Privacy Issues: Recent emphasis has been on data protection laws such as the Data Protection Bill.

Infrastructure Constraints: High-end computational resources are not accessible to all companies.

To bridge this skill gap, many online and offline programs assist students and professionals in learning data science from scratch through comprehensive training in programming tools, AI, and machine learning.

Kolkata Becoming the Next Data Science Hub

Kolkata is fast emerging as an important center for data science education and research, thanks to its strong academic tradition and growing IT sector. As AI adoption increases across sectors, businesses and institutions in Kolkata are concentrating on building essential data science skills among professionals.

Academic Institutions and AI Education

Multiple institutions and private learning centers offer dedicated AI courses in Kolkata that cover must-have programming skills such as Python, R, SQL, and Spark. These courses provide hands-on training in data analytics, machine learning, and AI.

Industries Using Data Science in Kolkata

Banking & Finance: AI-based risk analysis and fraud detection systems

Healthcare: Data-driven predictive analytics for optimising patient care

E-Commerce & Retail: Personalized recommendations and customer behavior analysis

EdTech: AI-based adaptive learning environments for students

Future Prospects of Data Science in Kolkata

Kolkata is likely to play a vital role in India's data-driven economy as more businesses and educational institutions invest in AI and data science. The city is currently focusing strategically on technology education and AI research to drive future innovation in AI and data analytics.

Conclusion

Over the years, with the discovery of data science, such programming tools like Python and R, SQL, Apache Spark, and Tableau have become indispensable in the world of professionals. They help in analyzing data, building AI models, and creating impactful visualizations.

Driven by government initiatives and enterprise investment, India has adopted data science and AI rapidly, creating high demand for skilled professionals. For beginners, many educational programs now offer hands-on experience with the most popular tools.

Kolkata is now emerging as a hub for AI education and innovation, which will provide world-class learning opportunities to aspiring data scientists. Mastery of these programming tools will help professionals stay ahead in the ever-evolving data science landscape.


Master SQL in 2025: The Only Bootcamp You’ll Ever Need

When it comes to data, one thing is clear—SQL is still king. From business intelligence to data analysis, web development to mobile apps, Structured Query Language (SQL) is everywhere. It’s the language behind the databases that run apps, websites, and software platforms across the world.

If you’re looking to gain practical skills and build a future-proof career in data, there’s one course that stands above the rest: the 2025 Complete SQL Bootcamp from Zero to Hero in SQL.

Let’s dive into what makes this bootcamp a must for learners at every level.

Why SQL Still Matters in 2025

In an era filled with cutting-edge tools and no-code platforms, SQL remains an essential skill for:

Data Analysts

Backend Developers

Business Intelligence Specialists

Data Scientists

Digital Marketers

Product Managers

Software Engineers

Why? Because SQL is the universal language for interacting with relational databases. Whether you're working with MySQL, PostgreSQL, SQLite, or Microsoft SQL Server, learning SQL opens the door to querying, analyzing, and interpreting data that powers decision-making.

And let’s not forget: SQL remains a sought-after skill on the job market today.

Who Is This Bootcamp For?

Whether you’re a complete beginner or someone looking to polish your skills, the 2025 Complete SQL Bootcamp from Zero to Hero in SQL is structured to take you through a progressive learning journey. You’ll go from knowing nothing about databases to confidently querying real-world datasets.

This course is perfect for:

✅ Beginners with no prior programming experience

✅ Students preparing for tech interviews

✅ Professionals shifting to data roles

✅ Freelancers and entrepreneurs

✅ Anyone who wants to work with data more effectively

What You’ll Learn: A Roadmap to SQL Mastery

Let’s take a look at some of the key skills and topics covered in this course:

🔹 SQL Fundamentals

What SQL is and why it matters

Understanding databases and tables

Creating and managing database structures

Writing basic SELECT statements

🔹 Filtering & Sorting Data

Using WHERE clauses

Logical operators (AND, OR, NOT)

ORDER BY and LIMIT for controlling output
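To make the filtering and sorting topics concrete, here is a minimal sketch that uses Python's built-in sqlite3 module, so it runs without a database server. The products table and its rows are made up for illustration. LIMIT works in SQLite, MySQL, and PostgreSQL. SQL Server uses TOP instead.

```python
import sqlite3

# In-memory database with a small, made-up products table (illustrative data only).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (name TEXT, category TEXT, price REAL, in_stock INTEGER)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?, ?, ?)",
    [
        ("Keyboard", "Accessories", 49.0, 1),
        ("Monitor", "Displays", 199.0, 1),
        ("Mouse", "Accessories", 19.0, 0),
        ("Webcam", "Accessories", 89.0, 1),
        ("Projector", "Displays", 499.0, 0),
    ],
)

# WHERE combines OR, AND and NOT to filter rows,
# ORDER BY sorts the survivors, and LIMIT keeps only the first two.
rows = conn.execute(
    """
    SELECT name, price
    FROM products
    WHERE (category = 'Accessories' OR price > 150)
      AND NOT (in_stock = 0)
    ORDER BY price DESC
    LIMIT 2
    """
).fetchall()
print(rows)  # [('Monitor', 199.0), ('Webcam', 89.0)]
```

The parentheses around the OR condition matter. Without them, AND binds more tightly than OR, and the filter means something different.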

🔹 Aggregation and Grouping

COUNT, SUM, AVG, MIN, MAX

GROUP BY and HAVING

Combining aggregate functions with filters
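Here is a small sketch of aggregation and grouping, again using Python's sqlite3 and an invented orders table. It highlights the key difference between WHERE and HAVING. WHERE filters rows before grouping. HAVING filters the groups after the aggregates have been computed.

```python
import sqlite3

# Hypothetical orders table: one row per order.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (customer TEXT, amount REAL)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?)",
    [("alice", 120.0), ("alice", 80.0), ("bob", 15.0), ("bob", 25.0), ("carol", 300.0)],
)

rows = conn.execute(
    """
    SELECT customer,
           COUNT(*)    AS n_orders,
           SUM(amount) AS total,
           AVG(amount) AS avg_amount,
           MIN(amount) AS smallest,
           MAX(amount) AS largest
    FROM orders
    WHERE amount >= 20          -- row filter, applied before grouping
    GROUP BY customer
    HAVING SUM(amount) > 100    -- group filter, applied after aggregation
    ORDER BY total DESC
    """
).fetchall()
for row in rows:
    print(row)
# ('carol', 1, 300.0, 300.0, 300.0, 300.0)
# ('alice', 2, 200.0, 100.0, 80.0, 120.0)
```

Bob drops out at both stages. WHERE removes his 15.0 order, and his remaining 25.0 total then fails the HAVING test.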

🔹 Advanced SQL Techniques

JOINS: INNER, LEFT, RIGHT, FULL

Subqueries and nested SELECTs

Set operations (UNION, INTERSECT)

CASE expressions and conditional logic
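Here is a compact sketch of joins, a nested subquery, a set operation, and a CASE expression, using sqlite3 and two hypothetical tables. It sticks to INNER and LEFT joins because SQLite only gained RIGHT and FULL OUTER JOIN in version 3.39.0, and MySQL does not support FULL OUTER JOIN at all.

```python
import sqlite3

# Two hypothetical tables: customers and their orders.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
    INSERT INTO customers VALUES (1, 'alice'), (2, 'bob'), (3, 'carol');
    INSERT INTO orders VALUES (10, 1, 120.0), (11, 1, 80.0), (12, 2, 15.0);
    """
)

# INNER JOIN: only customers with at least one matching order.
inner = conn.execute(
    """
    SELECT DISTINCT c.name
    FROM customers c
    JOIN orders o ON o.customer_id = c.id
    ORDER BY c.name
    """
).fetchall()

# LEFT JOIN keeps every customer; CASE turns each total into a label.
segments = conn.execute(
    """
    SELECT c.name,
           COALESCE(SUM(o.amount), 0) AS total,
           CASE
               WHEN SUM(o.amount) IS NULL THEN 'no orders'
               WHEN SUM(o.amount) >= 100 THEN 'high'
               ELSE 'low'
           END AS segment
    FROM customers c
    LEFT JOIN orders o ON o.customer_id = c.id
    GROUP BY c.id, c.name
    ORDER BY c.name
    """
).fetchall()

# Nested subqueries: customers with any order above the overall average amount.
above_avg = conn.execute(
    """
    SELECT name FROM customers
    WHERE id IN (
        SELECT customer_id FROM orders
        WHERE amount > (SELECT AVG(amount) FROM orders)
    )
    """
).fetchall()

# INTERSECT: customer ids that placed both a large (> 100) and a small (< 100) order.
both = conn.execute(
    """
    SELECT customer_id FROM orders WHERE amount > 100
    INTERSECT
    SELECT customer_id FROM orders WHERE amount < 100
    """
).fetchall()

print(inner)      # [('alice',), ('bob',)]
print(segments)   # [('alice', 200.0, 'high'), ('bob', 15.0, 'low'), ('carol', 0, 'no orders')]
print(above_avg)  # [('alice',)]
print(both)       # [(1,)]
```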

🔹 Data Cleaning and Manipulation

UPDATE, DELETE, and INSERT statements

Handling NULL values

Using built-in functions for data formatting
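The data-manipulation and NULL-handling topics can be sketched as follows, using sqlite3 and a hypothetical contacts table. Note the two NULL checks at the end. A comparison with = NULL never matches, so IS NULL is the correct test.

```python
import sqlite3

# Hypothetical contacts table with messy input data.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE contacts (id INTEGER PRIMARY KEY, name TEXT, email TEXT, city TEXT)")

# INSERT: Python's None is stored as SQL NULL.
conn.executemany(
    "INSERT INTO contacts (name, email, city) VALUES (?, ?, ?)",
    [
        ("  Alice ", "ALICE@EXAMPLE.COM", "Kolkata"),
        ("Bob", None, None),
        ("test user", "test@example.com", "Chennai"),
    ],
)

# UPDATE with built-in functions: trim stray spaces, lowercase e-mail addresses.
conn.execute("UPDATE contacts SET name = TRIM(name), email = LOWER(email)")

# Fill missing cities; NULL must be tested with IS NULL.
conn.execute("UPDATE contacts SET city = 'Unknown' WHERE city IS NULL")

# DELETE: remove placeholder rows.
conn.execute("DELETE FROM contacts WHERE name = 'test user'")

# COALESCE substitutes a display value for NULL without changing the stored data.
rows = conn.execute(
    "SELECT name, COALESCE(email, 'n/a'), city FROM contacts ORDER BY id"
).fetchall()

# "= NULL" evaluates to NULL (not true) for every row, so it matches nothing.
null_eq = conn.execute("SELECT COUNT(*) FROM contacts WHERE email = NULL").fetchone()[0]
null_is = conn.execute("SELECT COUNT(*) FROM contacts WHERE email IS NULL").fetchone()[0]

print(rows)              # [('Alice', 'alice@example.com', 'Kolkata'), ('Bob', 'n/a', 'Unknown')]
print(null_eq, null_is)  # 0 1
```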

🔹 Real-World Projects

Practical datasets to work on

Simulated business cases

Query optimization techniques
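Query optimization is a broad topic, but a common first step is to add an index and check the query plan. The sketch below runs SQLite's EXPLAIN QUERY PLAN against a synthetic table. PostgreSQL and MySQL use EXPLAIN with different output formats, and the exact plan text can also vary between SQLite versions.

```python
import sqlite3

# Synthetic orders table: 10,000 rows spread over 1,000 customer ids.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
conn.executemany(
    "INSERT INTO orders (customer_id, amount) VALUES (?, ?)",
    [(i % 1000, float(i)) for i in range(10_000)],
)

QUERY = "SELECT * FROM orders WHERE customer_id = ?"

def plan(sql, params=()):
    """Return the 'detail' column of each EXPLAIN QUERY PLAN row."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql, params)]

before = plan(QUERY, (42,))  # full table scan, e.g. 'SCAN orders'
conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
after = plan(QUERY, (42,))   # index lookup via idx_orders_customer

print(before)
print(after)
```

An index speeds up lookups on customer_id at the cost of extra storage and slower writes. It is worth adding only for columns that queries filter or join on frequently.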

Hands-On Learning With Real Impact

Many online courses deliver knowledge. Few deliver results.

The 2025 Complete SQL Bootcamp from Zero to Hero in SQL does both. The course is filled with hands-on exercises, quizzes, and real-world projects so you actually apply what you learn. You’ll use modern tools like PostgreSQL and pgAdmin to get your hands dirty with real data.

Why This Course Stands Out

There’s no shortage of SQL tutorials out there. But this bootcamp stands out for a few big reasons:

✅ Beginner-Friendly Structure

No coding experience? No problem. The course takes a gentle approach to build your confidence with simple, clear instructions.

✅ Practice-Driven Learning

Learning by doing is at the heart of this course. You’ll write real queries, not just watch someone else do it.

✅ Lifetime Access

Revisit modules anytime you want. Perfect for refreshing your memory before an interview or brushing up on a specific concept.

✅ Constant Updates

SQL evolves. This bootcamp evolves with it—keeping you in sync with current industry standards in 2025.

✅ Community and Support

You won’t be learning alone. With a thriving student community and Q&A forums, support is just a click away.

Career Opportunities After Learning SQL

Mastering SQL can open the door to a wide range of job opportunities. Here are just a few roles you’ll be prepared for:

Data Analyst: Analyze business data and generate insights

Database Administrator: Manage and optimize data infrastructure

Business Intelligence Developer: Build dashboards and reports

Full Stack Developer: Integrate SQL with web and app projects

Digital Marketer: Track user behavior and campaign performance

In fact, many roles at companies like Amazon, Google, Netflix, and Facebook list SQL proficiency as a requirement.

And yes—freelancers and solopreneurs can use SQL to analyze marketing campaigns, customer feedback, sales funnels, and more.

Real Testimonials From Learners

Here’s what past students are saying about this bootcamp:

⭐⭐⭐⭐⭐ “I had no experience with SQL before taking this course. Now I’m using it daily at my new job as a data analyst. Worth every minute!” – Sarah L.

⭐⭐⭐⭐⭐ “This course is structured so well. It’s fun, clear, and packed with challenges. I even built my own analytics dashboard!” – Jason D.

⭐⭐⭐⭐⭐ “The best SQL course I’ve found on the internet—and I’ve tried a few. I was up and running with real queries in just a few hours.” – Meera P.

How to Get Started

You don’t need to enroll in a university or pay thousands for a bootcamp. You can get started today with the 2025 Complete SQL Bootcamp from Zero to Hero in SQL and build real skills that make you employable.

Just grab a laptop, follow the course roadmap, and dive into your first database. No fluff. Just real, useful skills.

Tips to Succeed in the SQL Bootcamp

Want to get the most out of your SQL journey? Keep these pro tips in mind:

Practice regularly: SQL is a muscle—use it or lose it.

Do the projects: Apply what you learn to real datasets.

Take notes: Summarize concepts in your own words.

Explore further: Browse open datasets on Kaggle or GitHub.

Ask questions: Engage in course forums or communities for deeper understanding.

Your Future in Data Starts Now

SQL is more than just a skill. It’s a career-launching power tool. With this knowledge, you can transition into tech, level up in your current role, or even start your freelance data business.

And it all begins with one powerful course: 👉 2025 Complete SQL Bootcamp from Zero to Hero in SQL

So, what are you waiting for?

Open the door to endless opportunities and unlock the world of data.

0 notes

Text

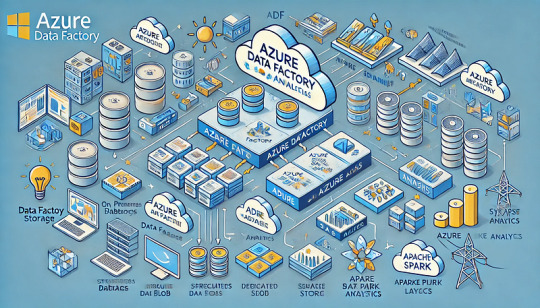

Explore how ADF integrates with Azure Synapse for big data processing.

How Azure Data Factory (ADF) Integrates with Azure Synapse for Big Data Processing

Azure Data Factory (ADF) and Azure Synapse Analytics form a powerful combination for handling big data workloads in the cloud.

ADF enables data ingestion, transformation, and orchestration, while Azure Synapse provides high-performance analytics and data warehousing. Their integration supports massive-scale data processing, making them ideal for big data applications like ETL pipelines, machine learning, and real-time analytics.

Key Aspects of ADF and Azure Synapse Integration for Big Data Processing

1. Data Ingestion at Scale

ADF acts as the ingestion layer, allowing seamless data movement into Azure Synapse from multiple structured and unstructured sources, including:

Cloud Storage: Azure Blob Storage, Amazon S3, Google Cloud Storage

On-Premises Databases: SQL Server, Oracle, MySQL, PostgreSQL

Streaming Data Sources: Azure Event Hubs, IoT Hub, Kafka

SaaS Applications: Salesforce, SAP, Google Analytics

🚀 ADF’s parallel processing capabilities and built-in connectors make ingestion highly scalable and efficient.

2. Transforming Big Data with ETL/ELT

ADF enables large-scale transformations using two primary approaches:

ETL (Extract, Transform, Load): Data is transformed in ADF’s Mapping Data Flows before loading into Synapse.

ELT (Extract, Load, Transform): Raw data is loaded into Synapse, where transformation occurs using SQL scripts or Apache Spark pools within Synapse.

🔹 Use Case: Cleaning and aggregating billions of rows from multiple sources before running machine learning models.
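To illustrate the ETL/ELT distinction, here is a minimal Python sketch in which an in-memory SQLite database stands in for the warehouse. It does not use ADF's Mapping Data Flows or any Synapse API, and the raw records and table names are hypothetical. The ETL path cleans rows in pipeline code before loading them. The ELT path loads the raw text first and cleans it with SQL inside the database.

```python
import sqlite3

# Hypothetical raw extract: inconsistent city names and one unparseable amount.
RAW = [
    ("2025-01-01", "kolkata", "120.50"),
    ("2025-01-01", "Kolkata ", "79.50"),
    ("2025-01-02", "chennai", "not-a-number"),
    ("2025-01-02", "Chennai", "300"),
]

conn = sqlite3.connect(":memory:")  # stand-in for the warehouse

# ETL: transform in pipeline code, then load only the cleaned rows.
def clean(rows):
    for day, city, amount in rows:
        try:
            yield day, city.strip().title(), float(amount)
        except ValueError:
            continue  # skip rows whose amount is not a number

conn.execute("CREATE TABLE sales_etl (day TEXT, city TEXT, amount REAL)")
conn.executemany("INSERT INTO sales_etl VALUES (?, ?, ?)", clean(RAW))

# ELT: load the raw text unchanged, then transform with SQL inside the warehouse.
conn.execute("CREATE TABLE sales_raw (day TEXT, city TEXT, amount TEXT)")
conn.executemany("INSERT INTO sales_raw VALUES (?, ?, ?)", RAW)
conn.execute(
    """
    CREATE TABLE sales_elt AS
    SELECT day,
           UPPER(SUBSTR(TRIM(city), 1, 1)) || LOWER(SUBSTR(TRIM(city), 2)) AS city,
           CAST(amount AS REAL) AS amount
    FROM sales_raw
    WHERE amount GLOB '[0-9]*'  -- crude numeric check, enough for this sample
    """
)

# Both paths should yield the same aggregated result.
SUMMARY = "SELECT city, SUM(amount) FROM {table} GROUP BY city ORDER BY city"
etl = conn.execute(SUMMARY.format(table="sales_etl")).fetchall()
elt = conn.execute(SUMMARY.format(table="sales_elt")).fetchall()
print(etl)  # [('Chennai', 300.0), ('Kolkata', 200.0)]
print(elt)  # [('Chennai', 300.0), ('Kolkata', 200.0)]
```

In ELT the raw table remains available, so you can rerun or change a transformation without extracting the data again. The trade-off is that the transformation work runs on warehouse compute.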

3. Scalable Data Processing with Azure Synapse

Azure Synapse provides powerful data processing features:

Dedicated SQL Pools: Optimized for high-performance queries on structured big data.

Serverless SQL Pools: Enables ad-hoc queries without provisioning resources.

Apache Spark Pools: Runs distributed big data workloads using Spark.

💡 ADF pipelines can orchestrate Spark-based processing in Synapse for large-scale transformations.

4. Automating and Orchestrating Data Pipelines

ADF provides pipeline orchestration for complex workflows by:

Automating data movement between storage and Synapse.

Scheduling incremental or full data loads for efficiency.

Integrating with Azure Functions, Databricks, and Logic Apps for extended capabilities.

⚙️ Example: ADF can trigger data processing in Synapse when new files arrive in Azure Data Lake.

5. Real-Time Big Data Processing

ADF enables near real-time processing by:

Capturing streaming data from sources like IoT devices and event hubs.

Running incremental loads to process only new data.

Using Change Data Capture (CDC) to track updates in large datasets.

📊 Use Case: Ingesting IoT sensor data into Synapse for real-time analytics dashboards.
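Incremental loading is often implemented with a high-water mark. You store the latest change timestamp already copied, and each run fetches only rows changed after it. The sketch below shows this generic pattern, with two in-memory SQLite databases standing in for the source and the target. It is an illustration under those assumptions, not ADF's own CDC feature or API, and the readings table is hypothetical.

```python
import sqlite3

# Two in-memory databases standing in for the operational source and the warehouse target.
source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")

SCHEMA = "CREATE TABLE readings (id INTEGER PRIMARY KEY, sensor TEXT, value REAL, updated_at TEXT)"
source.execute(SCHEMA)
target.execute(SCHEMA)
target.execute("CREATE TABLE watermark (last_loaded TEXT)")
target.execute("INSERT INTO watermark VALUES ('1970-01-01T00:00:00')")

def incremental_load():
    """Copy rows changed since the stored watermark, then advance it. Returns rows copied."""
    (last,) = target.execute("SELECT last_loaded FROM watermark").fetchone()
    # ISO-8601 timestamps compare correctly as plain text.
    changed = source.execute(
        "SELECT id, sensor, value, updated_at FROM readings "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last,),
    ).fetchall()
    # Upsert by primary key so an updated reading overwrites its older copy.
    target.executemany("INSERT OR REPLACE INTO readings VALUES (?, ?, ?, ?)", changed)
    if changed:
        target.execute("UPDATE watermark SET last_loaded = ?", (changed[-1][3],))
    target.commit()
    return len(changed)

# Initial data, then a first load.
source.executemany(
    "INSERT INTO readings VALUES (?, ?, ?, ?)",
    [(1, "s1", 20.5, "2025-01-01T10:00:00"), (2, "s2", 21.0, "2025-01-01T10:05:00")],
)
first = incremental_load()

# One reading changes and one new reading arrives; only these two are copied.
source.execute("UPDATE readings SET value = 22.0, updated_at = '2025-01-01T11:00:00' WHERE id = 1")
source.execute("INSERT INTO readings VALUES (3, 's3', 19.5, '2025-01-01T11:02:00')")
second = incremental_load()
third = incremental_load()  # nothing new since the last run

print(first, second, third)  # 2 2 0
print(target.execute("SELECT id, value FROM readings ORDER BY id").fetchall())
# [(1, 22.0), (2, 21.0), (3, 19.5)]
```

A simple > comparison has two blind spots. It can miss rows that share the watermark timestamp but commit later, and it cannot see deletes. Log-based CDC avoids both by reading the database's change log instead.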

6. Security & Compliance in Big Data Pipelines

Data Encryption: Protects data at rest and in transit.

Private Link & VNet Integration: Restricts data movement to private networks.

Role-Based Access Control (RBAC): Manages permissions for users and applications.

🔐 Example: ADF can use managed identity to securely connect to Synapse without storing credentials.

Conclusion

The integration of Azure Data Factory with Azure Synapse Analytics provides a scalable, secure, and automated approach to big data processing.

By leveraging ADF for data ingestion and orchestration and Synapse for high-performance analytics, businesses can unlock real-time insights, streamline ETL workflows, and handle massive data volumes with ease.

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/


Master SQL and Advanced Excel | Complete Data Skills Course Online

Supercharge your data skills with our all-in-one Master SQL and Advanced Excel course — the perfect combination for anyone looking to excel in data analysis, reporting, and business intelligence. Whether you're a student, working professional, or aspiring data analyst, this course equips you with two of the most in-demand tools used in today’s data-driven world.

Start by mastering SQL: you'll learn how to query databases, manipulate data, write complex joins, use aggregate functions, and work with real-world datasets using MySQL, PostgreSQL, or SQL Server. Then dive deep into Advanced Excel, covering pivot tables, VLOOKUP, INDEX-MATCH, Power Query, data visualization, dashboards, and automation using macros.


Python Full Stack Development Course AI + IoT Integrated | TechEntry

Join TechEntry's No.1 Python Full Stack Developer Course in 2025. Learn Full Stack Development with Python and become the best Full Stack Python Developer. Master Python, AI, IoT, and build advanced applications.

Why Settle for Just Full Stack Development? Become an AI Full Stack Engineer!

Transform your development expertise with our AI-focused Full Stack Python course, where you'll master the integration of advanced machine learning algorithms with Python’s robust web frameworks to build intelligent, scalable applications from frontend to backend.

Kickstart Your Development Journey!

Frontend Development

React: Build Dynamic, Modern Web Experiences:

What is Web?

Markup with HTML & JSX

Flexbox, Grid & Responsiveness

Bootstrap Layouts & Components

Frontend UI Framework

Core JavaScript & Object Orientation

Async JS: promises, async/await