#article rewriter github

Link

#.NET#.NETCore#ASP.NET#ASP.NETCore#Microsoft#OpenSource#PowerShell#PowerShellCore#TypeScript#VisualStudioCode

2 notes

·

View notes

Text

Free Software: New Version/Rewrite of MPDQ, the autoqueuer for the Music Player Daemon

I have a giant music library, but I also have a horrible tendency to keep listening to the same thing over and over (tell me you're neurodivergent without telling me you're neurodivergent).

However, I love eclectic soundtracks that don’t limit themselves to a single "sound" or "genre". They might lean towards one genre, but you’ll suddenly get a song or two that is completely different in the mix.

I'd written a program -- mpdq -- originally way back in 2017, and then rehashed it in 2020. I've been pretty happy with it. Mostly.

Now, nearly seven years (the hell?!) after it was first released, I've done a massive rewrite to solve some of the remaining problems with the last version, simplify a lot of things, and fix some stuff.

This release contains breaking changes. Most notably, rather than running in the background, it's currently written to only be run as a timed process. I currently have it set to a two minute interval cronjob with a queue length of 6 songs.

- Rewrote and simplified a lot of the workings (and removed some troubling sub-sub-sub-shells).

- Now written to run on a timer like a cronjob. (Pausing works the same, though.)

- Massively simplified the genre selection into a "gating" mechanism.

- Made the album and artist frequency times actually work, and using the same log

- Configurable way to skip artist and album frequency for specified genres

- Because of the way it handles genres (just numerically, and then as an exact string), multiple-genre tags are treated as discrete entries, e.g. "Concert;Rock" is completely separate from either "Concert" or "Rock".

Installation and configuration are a bit simpler too, and if MPD throws an error or restarts, it won't completely tank mpdq anymore.

Check it out:

Program webpage: https://uriel1998.github.io/mpdq/

GitHub: https://github.com/uriel1998/mpdq

GitLab: https://gitlab.com/uriel1998/mpdq

My repository: https://git.faithcollapsing.com/ssaus/mpdq/

Featured Image by Tibor Janosi Mozes from Pixabay

Read the full article

0 notes

Text

How I Learn React.js in just 30 Days

https://techcurve.co/digital-services/web-design-and-development.htmlhttps://www.techcurveittraining.com/

Learn React.JS framework with Tech Curve It Training Program:

React (also known as React.js or React.JS) is a free and open-source front-end JavaScript library for building user interfaces or UI components. It is maintained by Facebook and a community of individual developers and companies. React can be used as a base in the development of single-page or mobile applications. However, React is only concerned with state management and rendering that state to the DOM, so creating React applications usually requires the use of additional libraries for routing, as well as certain client-side functionality.

How I Learn React.JS: Tech Curve Expert Provides A Complete Guide For Beginners.

Every front-end developer and web developer knows how frustrating and painful it is to write the same code at multiple places. If they need to add a button on multiple pages they are forced to do a lot of code. Developers using other frameworks face the challenges to rework most codes even when crafting components that change frequently. Developers wanted a framework or library which allowed them to break down complex components and reuse the codes to complete their projects faster. Here React comes in and solves this problem.

React is the most popular javascript library for building user interfaces. It is fast, flexible and it also has a strong community sitting online to help you every time. The coolest thing about React is it’s based on components, you break down your complex code into individual pieces i.e components and that helps developers in organizing their code in a better way. A lot of companies are moving to React and that’s the reason most of the beginners and experienced developers are also expanding their knowledge learning this library.

Learning this library is a daunting task. I watch a lot of tutorials and you try to get the best material to learn this library but it can become overwhelming. I don’t know the right path or step-by-step process to learn it. But Tech Curve IT Training program provided the proper roadmap for me and other beginners to understand the React.js language

The Prerequisites That I Have Learned In The Starting Process Of Tech Curve IT Training Program.

Basic knowledge of HTML, CSS, and JavaScript.

Basic understanding of ES6 features. Learned some of the ES6 features.

The features at least beginners to learned:-

1. Let

2. Const

3. Arrow functions

4. Imports and Exports

5. Classes

Basic understanding of how to use npm.

Simple todo-app

Simple calculator app

Build a shopping cart

Display GitHub’s user stats using GitHub API

After some time, I have started Building my own React

I have just started learning React.JS, it is essential to understand the logic behind it. The experts always say that: “You have to learn by doing it yourself.” This is why I started rewriting React from scratch and it is a good idea. If you agree with me, then this article is for you! This way, I learn how to properly use each data structure and how to choose between them.

Why React?

Now, the main question that arises in front of us is why one should use React. There are so many open-source platforms for making front-end web application development easier, like Angular. Let us take a quick look at the benefits of React over other competitive technologies or frameworks. With the front-end world-changing on a daily basis, it’s hard to devote time to learning a new framework – especially when that framework could ultimately become a dead end. So, if you're looking for the next best thing but you're feeling a little bit lost in the framework jungle, I suggest checking out React.

Summary

In this article, I have shared my learning experience about React.js. Tech Curve IT Training helps me a lot in learning React.js over other JavaScript libraries. I have also learned how to create a simple React.js app.

Learn React

Get started learning React.js free here: https://www.techcurveittraining.com/

Find more information relating to React.js Development

https://techcurve.co/digital-services/web-design-and-development.html

7 notes

·

View notes

Text

About DataFan

Hi everyone! My name’s Lockea and you’ve found my blog. Congrats! I’m a data scientist and acafan (academic fan, a member of the fandom who also studies fandom). My main acafan project right now is comprehensive data analytical analysis of what people read and write on AO3.

So what is DataFan? DataFan is a project where I answer questions about fanfiction using data analytic techniques. I answer everything from the easy (want to know what story rating gets the most hits?) to the not so easy (Is there a relationship between the average length of a chapter and how many comments the chapter gets?). If you’ve got a question about AO3, I’ll do my best to answer it.

What about Fandom Stats? Fandom Stats is a great project and I looked at their source code when I was first starting out with DataFan (both projects are written in the same programming language!) but Fandom Stats can only answer simple questions about fandom and can’t perform any analytical analysis. This isn’t a bash on Fandom Stats! It’s a great tool but DataFan was written with the goal of eventually performing complex artificial intelligence and predictive analytics on fandom trends.

Can I ask DataFan a question? Yes! Absolutely! Simply send me an ask or submit a post for questions that exceed ask lengths.

Is DataFan Open Source? Yep! It’s written in python using all open source tools. Send me an ask or DM and I’ll send you a link to the github repo.

Can I use DataFan for myself? You can use the code I use to pull the data from AO3 for your own use. You can use the Jupyter Notebooks written for DataFan analysis to construct your own queries. You CANNOT use my research directly without citations. If you want to use my research, please contact me so we can get the right citations and licenses figured out (don’t worry, it doesn’t cost money). All that said, unless you’re planning to study or practice data science yourself, you’re better off just asking me a question and I’ll do the querying.

Wait, can you clarify that? If you ask DataFan a question, then I perform the analysis and write up a short article discussing the results of the analysis. This resulting article and data can be cited. For example, if you’re writing a paper for school on fandom and want to know something DataFan can answer.

The only time you may want to perform your own analysis and NOT ask me a question is if you are studying data science or practice data science yourself. In that case, you may use the AO3 scraper and write your own queries. Please do not directly copy and paste any work I’ve done (including copying my Jupyter Notebooks) and call it your own, however, as that’s just plain not cool. The exception is if you wish to rewrite a Notebook as a learning experience.

(Basically, don’t be a dick and give credit where credit is due)

What else does DataFan do? Currently, I have a panel at various conventions called “How to Write the Perfect Fanfiction” which is half about my 20+ years experience as a fanfiction writer and half data evidence backed silliness about fandom using DataFan’s backend. Want me to come to a convention near you? Let me know! I’m based out of CA but I travel all over the USA. I’m also in the process of writing several peer reviewed articles on data science and fandom studies.

48 notes

·

View notes

Text

How I rewrote Nexus Tools with Dart

Last month, I updated a project of mine called Nexus Tools, which is an installer for Google's Android SDK Platform Tools. It's one of my most popular software projects, with around 1.1-1.3k users per month, and version 5.0 is a complete rewrite. The switch seemed to go fine (no bug reports yet!), so I wanted to write a blog post about the development process, in the hopes that it might help others experimenting with bash scripts or Dart programming.

The old bash script

Before v5.0, Nexus Tools was written as a bash script, which is a series of commands that runs in Bash Shell (or a Bash-compatible environment). I only supported Mac and Linux at first, but over the years I also added compatibility for Chrome OS, Bash for Windows 10, and Macs with Apple Silicon chips. The main process is the same across all platforms: Nexus Tools creates a folder in the home directory, downloads and unzips the SDK Platform Tools package from Google's server, and adds it to the system path. Nothing too complicated.

However, Nexus Tools still broke in some manner almost every time I updated it. Bash scripts are difficult to adequately test, because they are interpreted at runtime by the Bash shell, instead of being compiled as machine code. There are different versions of Bash being used today, and some versions don't support all scripting features. This is especially an issue on macOS, which still ships with Bash v3.2 from 2007, because newer versions use the GPLv3 license that Apple doesn't want to deal with. Apple switched the default shell to Zsh on newer macOS versions, but Zsh scripts are pretty different than Bash scripts.

Bash scripts also can't do much on their own — they call the applications present on the computer. Most Linux and macOS systems have the same set of basic tools installed that Nexus Tools requires (mainly curl and unzip), but verifying that each utility I wanted to use worked similarly on each supported platform was an added layer of complexity that I didn't enjoy dealing with.

In short, bash scripts are great for scripting your own PC or environments similar to each other, but less so for multiple operating systems and versions of Bash shell.

Choosing Dart

I decided to try rewriting Nexus Tools as a command-line Dart application. Dart is a programming language created by Google, originally intended for use in web applications, but more recently has become part of the larger Flutter framework for creating web/mobile/desktop apps. However, you can also create command-line applications and scripts in Dart, which can be compiled for use on Mac, Linux, and Windows.

There are many other ways of creating command-line compiled applications that are cross-platform, but Dart's JS-like syntax is easy for me to deal with, so I went with it.

The rewriting process

The bash script version of Nexus Tools was around 250 lines of code, and even with my limited Dart experience, it only took around 8-10 hours spread across multiple days to get a functionally-identical version working in Dart. Not too bad!

Just like the bash version, the Dart version created a folder in the home directory, downloaded the tools and unzipped them, and then added the directory to the system's path. The download is handled by Dart's own http library, and then unzipped with the archive library. One of my goals here was to avoid calling external tools wherever possible, and that was (mostly) achieved. The only times Nexus Tools calls system commands is for file operations and for installing ADB drivers on Windows — more on that later.

I still had to write a few functions for functionality that Dart and its main libraries don't seem to provide, like one for adding a directory to the system path and another for determining the CPU architecture. I was a bit surprised by that last one — the 'io' library has an easy way to check the host operating system, but not the CPU?

My main concern with switching to a compiled application was security on macOS. Apple requires all applications, even ones distributed outside the App Store, to be notarized with an Apple-issued developer ID or an error message will appear. However, the Nexus Tools executable created with dart compile doesn't seem to have any issues with this. Maybe Apple doesn't enforce signing with command-line applications?

Adding Windows support

Dart supports Windows, so switching to Dart allowed me to add Windows support without much extra work. The process for installing the Android SDK Tools on Windows involves most of the same steps as on Mac/Linux, but calls to the system required different commands. For example, adding Nexus Tools to the system path on Windows just requires calling the "setx" command on Windows, but on macOS and Linux I have to add a line to a text file.

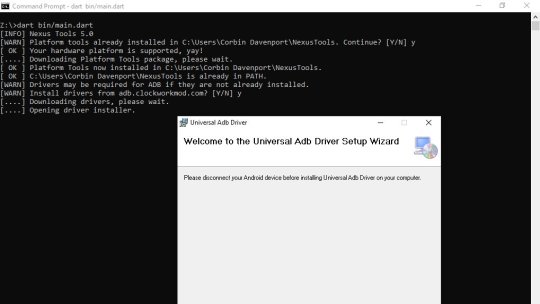

The tricky part with using the Android Platform Tools applications on Windows is usually drivers, so I wanted to integrate the step of optionally installing drivers when Nexus Tools is running on Windows. Thankfully, Koushik Dutta created a Universal ADB Drivers installer a while back that solves this problem, so Nexus Tools just downloads that and runs it.

Creating the wrapper script

The main unique feature about Nexus Tools is that it runs without actually downloading the script to your computer — you just paste in a terminal command, which grabs the bash script from GitHub and runs it in the Bash Shell.

bash <(curl -s https://raw.githubusercontent.com/corbindavenport/nexus-tools/master/install.sh)

I wanted to retain this functionality for two reasons. First, it's convenient. Second, many articles and tutorials written over the years that mention Nexus Tools just include the installation command without any links to the project.

I reduced the bash script code to the bare minimum required to download the Nexus Tools executable and run it, and you can see it here. The neat part is that it uses GitHub's permalinks for a project's downloads (e.g. project/releases/latest/download/file.zip), so the script always grabs the latest available version from the releases page — I don't have to update the script at all when I publish a new version, I just have to make sure the downloads have the correct file name.

I also created a similar wrapper script for Windows, which runs when you paste the below command into PowerShell (or the fancy new Windows Terminal).

iex ((New-Object System.Net.WebClient).DownloadString('https://raw.githubusercontent.com/corbindavenport/nexus-tools/master/install.ps1'))

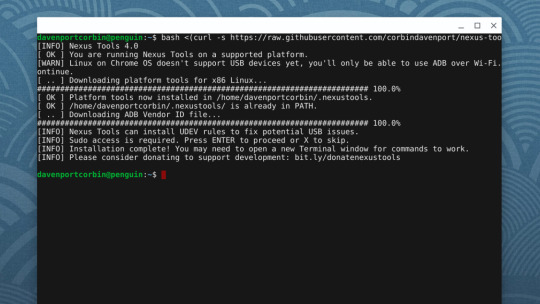

I'm pretty happy that running Nexus Tools on Windows is just as quick and easy as on Mac and Linux. Here's what it looks like on Linux:

And here's what it looks like on Windows 10:

Pretty neat!

Conclusion

I definitely could have continued to maintain Nexus Tools as a bash script, given enough testing and debugging with every release. The transition was mostly for my own personal reasons rather than strictly technological reasons — I was really sick of bash scripting. And in the end, this is my software project, so I'm gonna do what I want!

I think the switch has been a success, though. It runs exactly as well as the previous bash version (you can't even tell a difference on the surface), and I've been able to add Windows support with minimal additional work. I haven't received a single bug report, and the average number of people using Nexus Tools every day has remained at the same level of 20-50 people.

The one downside is that Nexus Tools doesn't run natively on Apple Silicon Macs, because I don't have an ARM Mac to compile it on (and Dart's compiler doesn't support cross-compiling), but it works fine in Apple's Rosetta compatibility layer.

0 notes

Text

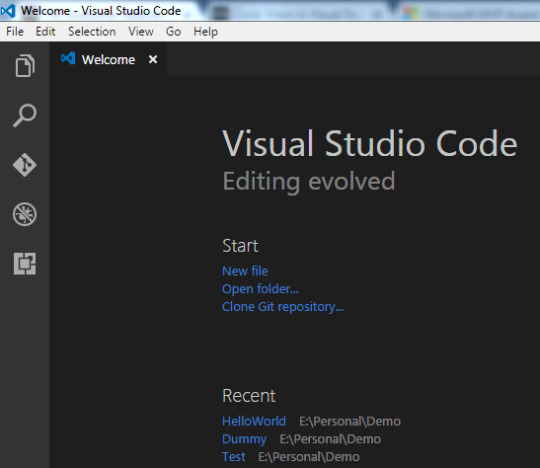

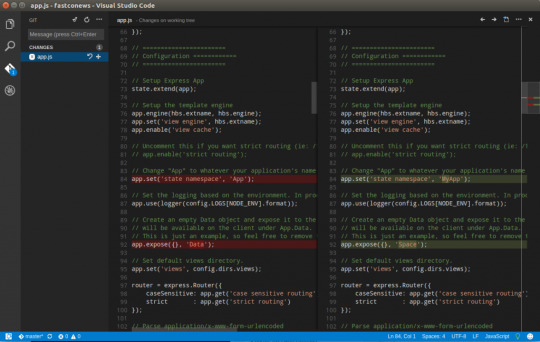

Git Visual Studio Code

GitDuck is a video chat tool built for developers that has direct integration to the IDE so you can talk, share your code in real-time and easily collaborate with your team. It enables remote developers to work as if they were side by side. With GitDuck you can code with people using different IDEs, like VSCode or WebStorm, IntelliJ or PyCharm.

Visual Studio Connect To Git

Git For Visual Studio Code

Chapters ▾

Visual Studio Code has git support built in. You will need to have git version 2.0.0 (or newer) installed. The main features are: See the diff of the file you are editing in the gutter.

The easiest way to connect to your GitHub repositories in Visual Studio. Download GitHub Extension for Visual Studio anyway. Download Download GitHub Extension for Visual Studio. By downloading the extension you agree to the End-User License Agreement. Requires Windows and Visual Studio.

1. Getting Started

1.1 About Version Control

1.2 A Short History of Git

1.3 What is Git?

1.4 The Command Line

1.5 Installing Git

1.6 First-Time Git Setup

1.7 Getting Help

1.8 Summary

2. Git Basics

2.1 Getting a Git Repository

2.2 Recording Changes to the Repository

2.3 Viewing the Commit History

2.4 Undoing Things

2.5 Working with Remotes

2.6 Tagging

2.7 Git Aliases

2.8 Summary

3. Git Branching

3.1 Branches in a Nutshell

3.2 Basic Branching and Merging

3.3 Branch Management

3.4 Branching Workflows

3.5 Remote Branches

3.6 Rebasing

3.7 Summary

4. Git on the Server

4.1 The Protocols

4.2 Getting Git on a Server

4.3 Generating Your SSH Public Key

4.4 Setting Up the Server

4.5 Git Daemon

4.6 Smart HTTP

4.7 GitWeb

4.8 GitLab

4.9 Third Party Hosted Options

4.10 Summary

5. Distributed Git

5.1 Distributed Workflows

5.2 Contributing to a Project

5.3 Maintaining a Project

5.4 Summary

6. GitHub

6.1 Account Setup and Configuration

6.2 Contributing to a Project

6.3 Maintaining a Project

6.4 Managing an organization

6.5 Scripting GitHub

6.6 Summary

7. Git Tools

7.1 Revision Selection

7.2 Interactive Staging

7.3 Stashing and Cleaning

7.4 Signing Your Work

7.5 Searching

7.6 Rewriting History

7.7 Reset Demystified

7.8 Advanced Merging

7.9 Rerere

7.10 Debugging with Git

7.11 Submodules

7.12 Bundling

7.13 Replace

7.14 Credential Storage

7.15 Summary

8. Customizing Git

8.1 Git Configuration

8.2 Git Attributes

8.3 Git Hooks

8.4 An Example Git-Enforced Policy

8.5 Summary

9. Git and Other Systems

9.1 Git as a Client

9.2 Migrating to Git

9.3 Summary

10. Git Internals

10.1 Plumbing and Porcelain

10.2 Git Objects

10.3 Git References

10.4 Packfiles

10.5 The Refspec

10.6 Transfer Protocols

10.7 Maintenance and Data Recovery

10.8 Environment Variables

10.9 Summary

A1. Appendix A: Git in Other Environments

A1.1 Graphical Interfaces

A1.2 Git in Visual Studio

A1.3 Git in Visual Studio Code

A1.4 Git in IntelliJ / PyCharm / WebStorm / PhpStorm / RubyMine

A1.5 Git in Sublime Text

A1.6 Git in Bash

A1.7 Git in Zsh

A1.8 Git in PowerShell

A1.9 Summary

A2. Appendix B: Embedding Git in your Applications

A2.1 Command-line Git

A2.2 Libgit2

A2.3 JGit

A2.4 go-git

A2.5 Dulwich

A3. Appendix C: Git Commands

A3.1 Setup and Config

A3.2 Getting and Creating Projects

A3.3 Basic Snapshotting

A3.4 Branching and Merging

A3.5 Sharing and Updating Projects

A3.6 Inspection and Comparison

A3.7 Debugging

A3.8 Patching

A3.9 Email

A3.10 External Systems

A3.11 Administration

A3.12 Plumbing Commands

2nd Edition

Git in Visual Studio Code

Visual Studio Code has git support built in.You will need to have git version 2.0.0 (or newer) installed.

See the diff of the file you are editing in the gutter.

The Git Status Bar (lower left) shows the current branch, dirty indicators, incoming and outgoing commits.

You can do the most common git operations from within the editor:

Initialize a repository.

Clone a repository.

Create branches and tags.

Stage and commit changes.

Push/pull/sync with a remote branch.

Resolve merge conflicts.

View diffs.

With an extension, you can also handle GitHub Pull Requests:https://marketplace.visualstudio.com/items?itemName=GitHub.vscode-pull-request-github.

The official documentation can be found here: https://code.visualstudio.com/Docs/editor/versioncontrol.

Those new to Visual Studio (VS) Code might just see a code editor. The true power of VS Code lies in it’s extensions, integrated terminal and other features. In this hands-on tutorial, you’re going to learn how to use Visual Studio Code by working with a Git repo.

Related:What You Need to Know about Visual Studio Code: A Tutorial

Using built-in VS Code tooling and a few extensions, you’re going to write code and commit that code to source control using a single interface.

This blog post is a snippet of a chapter from the eBook From Admin to DevOps: The BS Way to DevOps in Azure. If you like this chapter and want to learn about doing the DevOps in Azure, check it out!

Tutorial Overview

In this tutorial, you’re going to learn how to use various VS Code features on Windows by building a project using Visual Studio Code and Git. You’ve been tasked with figuring out how to build an Azure VM with Terraform as a small proof of concept (POC) project. You have VS Code and have heard of its capability as a full IDE and want to put it to the test.

You’re going to:

Create a VS Code workspace to share with your team

Install the Terraform extension

Modify the Terraform configuration file to fit your naming convention and Azure subscription

Create a snippet for a common task you’ve found yourself typing over and over

Commit the Terraform configuration file to a Git repo

This tutorial will not be meant to show how to use Terraform to deploy Azure VMs. We already have an article on Terraform and Azure VMs for that. This tutorial will focus on learning Visual Studio Code.

Does this sound like an interesting project? If so, read on to get started!

Prerequisites

To follow along with this Visual Studio Code Git tutorial, please be sure you have the following:

VS Code – All examples will be using VS Code 1.44 although earlier versions will likely work as well.

Terraform – All examples will be using Terraform for Windows v0.12.24.

Git for Windows installed – All examples will be using v2.26. If you’d like VS Code to be Git’s default editor, be sure to select it upon installation.

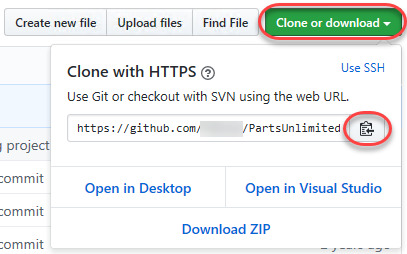

Clone the Git Repo

Since this tutorial is going to be focusing on working with code in a GitHub repo, your first task is cloning that GitHub repo to your local computer.

For this project, you’ll be working from a GitHub repo called VSCodeDemo. Since VS Code has native Git integration, you can clone a Git repo with no additional configuration. To do so:

Open the command palette with Ctrl-Shift-P, type git where you will notice various options, as shown below.

2. Choose Git: Clone which VS Code will then prompt you for the repo’s URL. Here, provide the URL https://github.com/NoBSDevOps/VSCodeDemo.gitand hit Enter.

3. Choose a folder to place the cloned project files. This project will place the repo folder in the root of C:. Once you select the repository location, VS Code will invoke git.exe in the background and clone the repo to your computer.

4. When it’s finished, VS Code will prompt if you would like to open the cloned repository immediately as shown below, click Open to do so.

You now have an open folder in VS Code for the Git repo. You now need to “save” this open folder and all settings you’ll be performing in a workspace.

Creating a Workspace

Now that you have a folder opened containing a Git repo, save a workspace by going up to the File menu and clicking on Save Workspace As….

Save the workspace as project in the project folder. VS Code will then create a file called project.code-workspace in the Git repo folder. This workspace now knows what folder you had opened. Now when the workspace is opened in the future, it will automatically open the C:VSCodeDemo folder.

Now, instead of a folder name, you will see the name of the workspace.

Setting up Extensions

Extensions are one of the most useful features of VS Code. Extensions allows you to bolt on functionality to help you manage many different projects. In this tutorial, you’re going to be working with Terraform.

Open up one of the Terraform configuration files in the workspace along the left sidebar. Notice how an editor tab opens up and shows the text but that’s about it. There’s no usual syntax highlighting or any other features. VS Code thinks this is a plain-text file and displays it accordingly. Let’s remedy that.

For VS Code to “understand” a Terraform configuration file, you need an extension. Extensions are a huge part of VS Code that opens up a world of new functionality. In this case, you need the Terraform extension to assist in building Terraform configuration files and deploying infrastructure with Terraform.

To install the Terraform extension, click on the extensions button on the Activity Bar and search for terraform. You’ll see multiple extensions show up but for this project, click on Install for the top result created by Mikael Olenfalk. VS Code will then install the extension.

Once installed, navigate back to the workspace and click on one of the TF files in the workspace. You’ll immediately see one of the most obvious differences when using an extension, syntax coloring.

Now you can see in the following screenshot that VS Code “knows” what a comment is (by making it green), what a string is (by making it red) and so on. It’s now much easier to read a Terraform configuration file.

There’s a lot more functionality included with Mikael’s Terrafom extension. Be sure to investigate all of the potential benefits you can get from this extension if using Terraform.

Code Editing

Chances are when you find a script or configuration file on the Internet, it’s not going to be exactly how you need it. You’re going to need to modify it in some way.

In this tutorial’s example, you’d like to change the main block label in the infrastructure-before.tf. Terraform configuration file to perhaps project. To do that, you’ll need to find and replace some text. In VS Code, there are multiple ways to do that.

One of the most common ways to find a string and replace it with another is the good ol’ find and replace functionality.

Hit Ctrl-F and you’ll see a dialog similar to the following screenshot. Here you can type in the string you’d like to find and if you click on the down arrow, it will expand and provide a spot to input a string to replace it with. In the screenshot below, you can see options like Aa and Ab| for case-sensitive searching and also regular expressions.

You can also perform a “find and replace” using Ctrl-D. Simply select the text you’d like to find and begin hitting Ctrl-D. You’ll find that VS Code will begin to highlight each instance of that string with a blinking cursor.

When you’ve selected all items, start typing and VS Code changes all instances at once just as if you had selected each one individually.

Saving Time with Snippets

Let’s say you’re really getting into Terraform and Azure and are tired of typing out the Terraform configuration file block to create a new Azure resource group in the following code snippet.

To save time creating these blocks, create a VS Code snippet.

Related:VS Code Snippets: Speed Up Coding with Shortcuts

To create a VS Code snippet:

Copy the azurerm_resource_group block from the Infrastructure-before.tf Terraform configuration file.

2. Open the command palette with Ctrl-Shift-P.

3. Type “snippets” to filter the list of options.

4. Select Preferences: Configure User Snippets. This brings up a list of all the snippet files typically separated by language.

5. Type “terraform” to filter by the Terraform snippets.

6. Select terraform (Terraform) to open the Terraform snippets file (terraform.json).

Visual Studio Connect To Git

With the Terraform snippets file open, remove all of the comments and copy/paste the following JSON element inside.

Note the use of t and the backslashes. You can’t directly place tab characters inside of a snippet. To represent a tab character, you must use t. Also, you must escape characters like double quotes, dollar signs, curly braces, and backslashes with a backslash.

8. Save the terraform.json file.

Git For Visual Studio Code

9. Go back to the Terraform configuration file and type “rg”. Notice now you see an option to expand a snippet.

10. Select the rg snippet as shown above. Notice that it now expands to the snippet you just created with three items highlighted.

VS Code highlighted each of the words to act as placeholders due to the variables defined in the terraform.json snippets file (${1:block label}).

At this point, you can hit Tab and simply type in the values you need without worrying about how to create the block itself.

For a full breakdown on snippet syntax, be sure to check out the Snippets in Visual Studio Code documentation.

Commit Code to Git

At this point, you’ve cloned a public GitHub repo that contains a couple of Terraform configuration files. You’ve edited some files and now you’re ready to get those changes back up to the GitHub repo.

To get changes back up to the GitHub repo, you must first use Visual Studio Code and Git to commit changes to your local cloned Git repo. When you cloned the GitHub repo earlier, you downloaded not only the configuration files but also a Git repo.

If you’ve been following along, you should now have the cloned Git repo open with a couple of pending changes, two to be exact. How do you know that? By noticing the number in the Activity Bar, as shown below.

When you have a Git repo opened in Visual Studio Code, you’ll get a glimpse on the number of files that you can stage and commit into a local Git repo in the Activity Bar.

Click on the Source Control item on the left and you’ll see two items; the infrastructure-before.tf Terraform configuration file and the workspace you saved earlier (project.code-workspace). The configuration file will have a red M to the right indicating it’s been modified. The workspace file will have a green U to the right of it because it’s untracked meaning it’s currently not under source control.

To ensure both of these files get back to the GitHub repo, first create a helpful commit message indicating why you’re committing these files. The message can be any descriptive summary. Once you’ve written a commit message, stage the changes. Staging changes in Visual Studio Code in Git adds the file contents to the staging area preparing for a commit to the repo.

While on the Source Control pane, click on the + icon beside each file to stage them as shown below.

Once staged, click on the check mark to commit all of the staged changed, as shown below.

You will probably receive an error message indicating you need to configure a user.name and user.email in Git.

No problem. You simply need to provide Git the information it needs. To do that, go into your VS Code integrated terminal and run the following two commands changing my email address and name for yours.

Now try to commit the files. You should now see that the files commit to the repo.

You can stage all changed files without manually clicking on the + beside each file by committing them all at once. VS Code will automatically stage all of the files for you.

If you were working on a team with a shared repo, the next step would be to push these changes back to the GitHub repo or opening a pull request.

Conclusion

VS Code is a feature-rich IDE. It can not only help you write and understand code better, it can also build and make changes to infrastructure, invoke utilities and more. VS Code provides you one place to manage all of your development efforts.

Although this tutorial only covered a portion of what VS Code can do, this IDE is capable of so much more. If you’d like to learn about what VS Code can do, check out What You Need to Know about Visual Studio Code: A Tutorial.

0 notes

Text

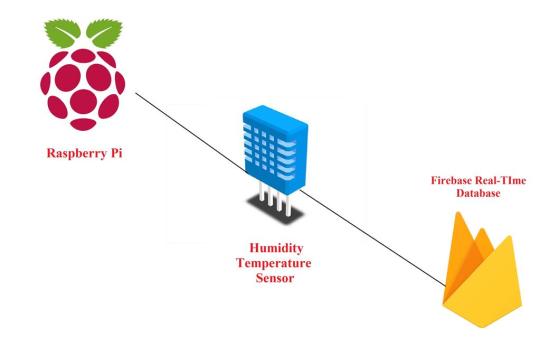

Working with Pi - 2

Connecting to Firebase

This week I spent time figuring out how to capture images using the Pi cam and sending data to Firebase.

Pi cam

There are four parts to it.

Capturing a picture: ✔️

This is the article where I started but it work, based on Alex’s suggestion. My issue was working with installing Imagemagick. May be it’s lack of experience, but based on Google search, I couldn’t find a simple workaround to use it. So I broke down the steps and started doing each step individually. Pi’s timelapse documentation came in handy.

Setting up a Cron job: ✔️

Tom Igoe’s page was super helpful.

Saving them in a folder:

I think it’s about definig the path. But I couldn’t figure out a way to do the Cron job by storing the images in a folder. So my Home quickly got crowded with images. All of which I had to manually delete.

Sending it to Firebase:

Read below.

Useful references:

This has good documentation on how to take photos: https://picamera.readthedocs.io/en/release-1.13/recipes1.html#capturing-to-a-network-stream

Sending data to Firebase

Before using Firebase, I was inclined to use MongoDB. Based on research, it turned out to be a slightly harder to send images. It’s easier to send text files. So I switched to Firebase.

One of the challenges working with Pi and hardware in general is that there are different ways to do the same thing. So a big chunk of my time was consumed in reading and looking for good documentation with clear explanation.

⇩ This one didn’t work:

Setting up Firebase: This was the easy part. I followed the steps from this article and created a project.

Since I didn’t know how to send images to Firebase, I tried to work with a sensor. I picked the DHT sensor. Little did I know how fragile and finicky it is.

I started with this documentation. As I went about debugging the Python script, I went into a rabbit hole. The problem: I used the Thorny Python IDE to run the script. It turns that the environment is in 2 but my script was in 3.

Issues: I noticed that the console was throwing each line as an error. So I started debugging line by line. It started with missing libraries. A lot which I installed but the part that eventually made me start from scratch was the missing Adafruit DHT library. Akash (Software background) did a quick check and told me that it was deprecated. So I asked a classmate, Nick Boss, who knew CS to help me find a workaround. Initially we tried to rewrite the code but it was still throwing errors. It came down to GPIO pin library. I am still not sure what the exact problem was. Nick used iPython. I don’t think I understood it but after adding another GB of library and no clear solution, I realised that it’s best to pause.

⇩ This is in progress:

I found a much cleaner set of instructions in this Github repo.

So far, I finished all the steps and currently figuring out how to add the Pyrebase wrapper for Firebase.

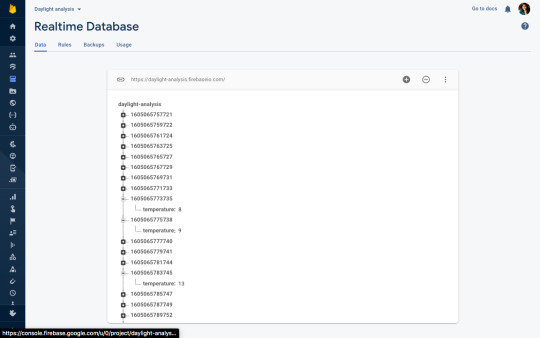

⇩ This worked. Yay!

Eric offered to help me yesterday connecting Firebase with Node. I don’t know Js plus I haven’t much enough documentation with it so I let me give this a shot.

His first question was how are we connecting the DHT to the Pi. So we searched for node Serial port and found the Serial Port link.

Next, we had to find a way to read the GPIO pins. I couldn’t comprehend the need but nevertheless. We searched for “gpio reading node js” and then found the w3 school documentation on OnOff module for NPM. We used the blinking LED for code reference.

Following up, we searched for the OnOff NPM package.

Next, we had to figure out how to read OnOff dht value. The article here was useful. Alongside, we referred to Tom’s code on GPIO pins here.

The code finally worked and we started to get reading. Or so we thought. The reading was in binary. So we spent some more time to figure it out. We tried to refer to this article but it didn’t finally work. After a while we realized that the sensor isn’t working. Given that it has no role in my project, I instead thought of sending some random data or message to Firebase.

Two things were helpful. Setting the interval as described here and getting a timestamp in Js.

The image above is the screenshot of the same.

0 notes

Text

Should software companies invest in rewriting C code in Rust?

T he C programming language was designed by by Dennis Ritchie close to 50 years ago. It is a general-purpose procedural programming language. Throughout the years, C has dominated the market for a few fundamental reasons. It is the easiest language to port to any new hardware architecture or operating system platform. While any language that compiles to native machine language can be used to write the base of an operating system, C remained the choice due to its portability. C also produces very fast code that runs directly on computer processors. However, with its versatility came some short comings.

C allows a wide range of software bugs and these software bugs are often the root cause for security issues. Some common sources of bugs in C code are "buffer overflows", "off-by-one errors", and "out of bounds reads". This is in addition to dangling pointers and memory leaks. C and C++ software are especially vulnerable as they have no language specific mechanisms for bounds checking or any of the sort. While C++ has smart pointers, nothing forces their use although C++ is an attractive choice due to being able to consume C libraries. All these issues are been the cause of many security exploits. C, inherently, is not memory safe.

So, what is the alternative if we are writing system software where bytecode and languages running in a virtual machine, such as java and C#, are not an option?

Ten years ago, Mozilla, the authors of the popular Firefox web browser, which was written in C++ with a graphics interface in XUL, an extensible xml syntax language for user interfaces, started a new project called Rust. Rust is a new programming language that promises to overcome the deficiencies in C. This makes it an attractive option to replace C. Rust moves a lot of the runtime issues in C to compile time issues. For instance, if your program or library is going to read memory outside an array index, the application will not run and if the index number is read at runtime, the application will stop running and you will not get wrong results. This prevents security bugs caused by reading incorrect or random data from memory. Such bugs have often caused headaches for Windows users and people utilizing server software written in C due to the excessive security implications. In addition to that, Rust has a strict ownership system that makes software very robust. This ownership system avoids memory leaks and pointers to random data. This saves time for developers and allows them to concentrate on algorithm writing instead of fighting with the programming language.

This raises a question. Should companies invest in porting their C code to Rust? I would say yes and for the following reasons.

The rust standard language and standard libraries are constantly under development to add convenience methods and language features. Basically, Rust is designed with the developer in mind.

Thanks to its memory safety strength, Rust saves money and developer resources that would otherwise be wasted on hunting difficult to find security bugs.

Rust has built-in unit testing features. Every piece of software in development should have two kinds of tests. The first type is unit tests which test individual libraries and pieces of code by isolating them and making sure they produce their intended results. The second type is integration tests. Integration tests make sure the entire software system continues to function as intended after changes to the code in any part of the software are committed. There are many 3rd party libraries for testing C and C++ software. Every software company adopts a different testing system. This is not required under Rust since it has built in unit testing and integration testing features. As we mentioned in the previous point, Rust is designed with the developer in mind.

Another reason why it makes sense for companies to invest in porting their C code to Rust is interoperability. Rust has tools to provide C API headers. In other words, you can write safe code in Rust and allow C and C++ applications to use it. The opposite is also true. Rust has community provided crates that bind to many popular existing C libraries.

Microsoft is also adopting Rust, albeit gradually. About 70% of the security bugs in the Windows platform are memory safety bugs. It is important to mention that the Microsoft’s platform of software including Microsoft Windows and Office make heavy use of C and C++. This makes Microsoft the owners of the world’s largest C and C++ codebases. However, Microsoft is now working on rust bindings for windows runtime libraries and is hosting them on Github. This gives Rust credibility and encourages other companies to follow through as well.

Linus Torvalds, the man behind the Linux kernel development, is often regarded as one of the most experienced and knowledgeable C developers. Currently, the Linux kernel is mostly C and Assembly code. It is developed by companies such as Intel, Microsoft, Google, and many individual developers. Torvalds has often rejected the use of C++ in the Kernel. Nevertheless, he has shown no objection towards including rust code in the Linux kernel.

If you are writing new system software, I highly recommend Rust. The community is unique and helpful. My experience also shows that its adoption and gradually porting C code to Rust yields not only cost savings but provides highly efficient software.

I hope you have enjoyed my article. Please visit my website if you need a true Murex expert that can help you out. I am an experienced IT consultant and I often have articles and tutorials that can be beneficial to my readers.

0 notes

Link

In 2020, we are blessed with a number of frameworks and libraries to help us with web development. But there wasn't always so much variety. Back in 2005, a new scripting language called Mocha was created by a guy named Brendan Eich. Months after being renamed to LiveScript, the name was changed again to JavaScript. Since then, JavaScript has come a long way.

In 2010, we saw the introduction of Backbone and Angular as the first JavaScript frameworks and, by 2016, 92 per cent of all websites used JavaScript. In this article, we are going to have a look at three of the main JavaScript frameworks (Angular, React and Vue) and their status heading into the next decade.

For some brilliant resources, check out our list of top web design tools, and this list of excellent user testing software, too.

01. Angular

AngularJS was released in 2010 but by 2016 it was completely rewritten and released as Angular 2. Angular is a full- blown web framework developed by Google, which is used by Wix, Upwork, The Guardian, HBO and more.

Pros:

Exceptional support for TypeScript

MVVM enables developers to separate work on the same app section using the same set of data

Excellent documentation

Cons:

Has a bit of a learning curve

Migrating from an old version can be difficult.

Updates are introduced quite regularly meaning developers need to adapt to them

What's next?

In Angular 9, Ivy is the default compiler. It's been put in place to solve a lot of the issues around performance and file size. It should make applications smaller, faster and simpler.

When you compare previous versions of Angular to React and Vue, the

final bundle sizes were a lot a bigger when using Angular. Ivy also makes Progressive Hydration possible, which is something the Angular team showed off at I/O 2019. Progressive Hydration uses Ivy to load progressively on the server and the client. For example, once a user begins to interact with a page, components' code along with any runtime is fetched piece by piece.

Ivy seems like the big focus going forward for Angular and the hope is to make it available for all apps. There will be an opt-out option in version 9, all the way through to Angular 10.

02. React

React was initially released in 2013 by Facebook and is used for building interactive web interfaces. It is used by Netflix, Dropbox, PayPal and Uber to name a few.

Pros:

React uses the virtual DOM, which has a positive impact on performance

JSX is easy to write

Updates don't compromise stability

Cons:

One of the main setbacks is needing third-party libraries to create more complex apps

Developers are left in the dark on the best way to develop

What's next?

At React Conf 2019, the React team touched on a number of things they have been working on. The first is Selective Hydration, which is where React will pause whatever it's working on in order to prioritise the components that the user is interacting with. As the user goes to interact with a particular section, that area will be hydrated. The team has also been working on Suspense, which is React's system for orchestrating the loading of code, data and images. This enables components to wait for something before they render.

Both Selective Hydration and Suspense are made possible by Concurrent Mode, which enables apps to be more responsive by giving React the ability to enter large blocks of lower priority work in order to focus on something that's a higher priority, like responding to user input. The team also mentioned accessibility as another area they have been looking at, by focusing on two particular topics – managing focus and input interfaces.

03. Vue

Vue was developed in 2014 by Evan You, an ex-Google employee. It is used by Xiaomi, Alibaba and GitLab. Vue managed to gain popularity and support from developers in a short space of time and without the backing of a major brand.

Pros:

Very light in size

Beginner friendly – easy to learn

Great community

Cons:

Not backed by a huge company, like React with Facebook and Angular with Google

No real structure

What's next?

Vue has set itself the target of being faster, smaller, more maintainable and making it easier for developers to target native. The next release (3.0) is due in Q1 2020, which includes a virtual DOM rewrite for better performance along with improved TypeScript Support. There is also the addition of the Composition API, which provides developers with a new way to create components and organise them by feature instead of operation.

Those developing Vue have also been busy working on Suspense, which suspends your component rendering and renders a fallback component until a condition is met.

One of the great things with Vue's updates is they sustain backward compatibility. They don't want you to break your old Vue projects. We saw this in the migration from 1.0 to 2.0 where 90 per cent of the API was the same.

How does the syntax of frameworks compare?

All three frameworks have undergone changes since their releases but one thing that's critical to understand is the syntax and how it differs. Let's have a look at how the syntax compares when it comes to simple event binding:

Vue: The v-on directive is used to attach event listeners that invoke methods on Vue instances. Directives are prefixed with v- in order to indicate that they are special attributes provided by Vue and apply special reactive behaviour to the rendered DOM. Event handlers can be provided either inline or as the name of the method.

React: React puts mark up and logic in JS and JSX, a syntax extension to JavaScript. With JSX, the function is passed as the event handler. Handling events with React elements is very similar to handling events on DOM elements. But there are some syntactic differences; for instance, React events are named using camelCase rather than lowercase.

Angular: Event binding syntax consists of a target event name within parentheses on the left of an equal sign and a quoted template statement on the right. Alternatively, you can use the on- prefix, known as the canonical form.

Popularity and market

Let's begin by looking at an overall picture of the three frameworks in regards to the rest of the web by examining stats from W3Techs. Angular is currently used by 0.4 per cent of all websites, with a JavaScript library market share of 0.5 per cent. React is used by 0.3 per cent of all websites and a 0.4 per cent JavaScript library market share and Vue has 0.3 per cent for both. This seems quite even and you would expect to see the numbers rise.

Google trends: Over the past 12 months, React is the most popular in search terms, closely followed by Angular. Vue.js is quite a way behind; however, one thing to remember is that Vue is still young compared to the other two.

Job searches: At the time of writing, React and Angular are quite closely matched in terms of job listings on Indeed with Vue a long way behind. On LinkedIn, however, there seems to be more demand for Vue developers.

Stack Overflow: If you look at the Stack Overflow Developer Survey results for 2019, React and Vue.js are both the most loved and wanted web frameworks. Angular sits down in ninth position for most loved but third most wanted.

GitHub: Vue has the most number of stars with 153k but it has the least number of contributors (283). React on the other hand has 140k stars and 1,341 contributors. Angular only has 59.6k stars but has the highest number of contributors out of the three with 1,579.

NPM Trends: The image above shows stats for the past 12 months, where you can see React has a higher number of downloads per month compared to Angular and Vue.

Mobile app development

One main focus for the big three is mobile deployment. React has React Native, which has become a popular choice for building iOS and Android apps not just for React users but also for the wider app development community. Angular developers can use NativeScript for native apps or Ionic for hybrid mobile apps, whereas Vue developers have a choice of NativeScript or Vue Native. Because of the popularity of mobile applications, this remains a key area of investment.

Other frameworks to look out for in 2020

If you want to try something new in 2020, check out these JavaScript frameworks.

Ember: An open-source framework for building web applications that works based on the MVVM pattern. It is used by several big companies like Microsoft, Netflix and LinkedIn.

Meteor: A full-stack JavaScript platform for developing modern web and mobile applications. It's easy to learn and has a very supportive community.

Conclusion

All three frameworks are continually improving, which is an encouraging sign. Everyone has their own perspective and preferred solution about which one they should use but it really comes down to the size of the project and which makes you feel more comfortable.

The most important aspect is the continued support of their communities, so if you are planning to start a new project and have never used any of the three before, then I believe you are in safe hands with all of them. If you haven't had a chance to learn any of the three frameworks yet, then I suggest making it your New Year's resolution to start learning. The future will revolve around these three.

0 notes

Link

Which is the best framework – Angular or React?

To be honest, every framework has its pros and cons. Each of the frameworks has a lot to offer and therefore choosing the right framework for your business among the best two is difficult.

This article aims to educate its readers, whether a newcomer or a freelancer or an enterprise-grade architect, of how both the frameworks excel in their environments catering to the requirements matching them.

This blog is all about an in-depth comparison between Angular and React in a structured manner and how you can focus on the most suitable framework by customizing them as per your requirements.

Let us brush up the basics before diving deep into the topic.

How to start?

Before jumping on to any framework, you need to ask yourself the following questions:

How mature is the framework?

What are the features that make it best suitable for my project?

What architecture does it employ?

What ecosystem the framework has surrounded itself with?

How to test and update the app?

Who performs better?

Which is best suited for Mobile App Development?

When can React and Angular be used?

These set of questions will guide to start the assessment of any tool you are looking to opt for.

How mature is the framework?

To check the maturity of the framework, you need to do research right from their launch to the current state.

Let us walk through their maturity levels.

React

React is:

A declarative, efficient, and flexible JavaScript library created by Facebook.

A User Interface (UI) library

A tool used for building UI components

Facebook is responsible for developing and maintaining React. React has been involved in Facebook’s own products like Instagram and Whatsapp. So you can say, React has been around 6 years now entering to a mature state. It has been named as one of the most popular projects on GitHub. It has been leading with approximately 119,000 stars to its credit at the time of writing.

Angular

Though Angular isn’t old as React, it is in the house for 3 years now ruling the hearts of the developers. Maintained by Google, Angular has been able to find its place in more than 600 applications in Google such as Google Analytics, Google cloud platform, Firebase Console, and many more.

Popularity

As per Google trends, React is a winner in the search domain as it is leading. But people are more interested in Angular due to multiple options for ready-to-go solutions.

What are the features that make it best suitable for my project?

React

1. Component-Based

The application is divided into small modules known as components to create a view.

Passing of rich data through your app and keeping the state out of the DOM is easy as templates aren’t used for writing component logic. The visuals and interactions in the applications are defined by the components in React.

2. Declarative

React holds a record in creating interactive and dynamic user interfaces for web and mobile applications.

During any change in data, React has the capacity to update and render just the right components. All you have to do is for every state in your application, create simple views.

If you wish to have a code that is more readable and easier to debug, declarative views are the best to opt for.

3. JSX

A close resemblance with HTML, JSX is a markup syntax that is a combination of Javascript + XML.

Writing React components with JSX is easier. JSX makes easier to write the code by adding HTML to React and it converts HTML tags to react elements. JSX has always been one of the best ReactJS features and thus Web developers will always go for this easy way out.

4. One-way Data Binding

The organization of React apps is in a series of nested components. With the functional nature of the components, the arguments are the source through which the components receive information. They further pass the information through their return values. This process is known as a one-way data flow. Further, the data is passed from components to their children.

For the state of your app, the parent component will have a container. The snapshot of the state is passed to the child component by the parent component. This the parent component does via the read-only props. Further, the child component communicates with the parent for updating the state through callbacks bounded by button or form in the child component.

5. Virtual DOM

Virtual DOM is a virtual copy of the original DOM object. React provides this facility for every DOM object as manipulation in the virtual DOM is quicker than the original DOM as it is a one-way data binding.

React updates only those objects in the real DOM which have changed in the Virtual DOM. This improves the performance of the application more as compared to it would have while manipulating the real DOM directly. Thus this reason is behind React being considered as a high-performance JavaScript library.

To put it simply, the React makes sure that the DOM matches the state as per your requirement you need the UI to be in. The developer doesn’t need to know the attribute manipulation, event handling or the manual DOM updates happen behind the scenes. This is a priced benefit of being a React developer.

6. Event handling

React creates its event system which is compatible with the W3C object model.

Event handling with React elements is quite similar to event handling on DOM elements. The differences between the two are:

camelCase is used to write React events.

Curly braces are used to write React event handlers.

One of the good practices is using the event handler as a method in the component class.

The SyntheticEvent object wraps the event object inside the React event handlers. Performance drastically improves as the objects received at an event handler are reused for other events. Asynchronous access to object properties is not possible as the event’s properties are changed due to reuse.

Instances of synthetic events wrap the browsers’ native event. With a cross-browser interface to a native event, relax about any incompatibility with event names and fields.

To reduce memory overhead, event delegation in addition to the pool of event objects is used to implement React event.

7. React Native

A custom renderer for React, React Native makes use of native components instead of web components as building blocks.

With the basic concepts of React, like JSX, components, state, and props you can head towards React Native. You need to know about the Native components to React Native. Other than transforming React code to work on iOS and Android, React Native provides access to the features these platforms offer.

Angular

With the release of Angular 8, multiple improvements and features are introduced. Whether you are any angular development company or angular developer or anyone who is looking for an upgrade, Angular is making projects faster and smaller with every improvement thus driving the developer and user experience to the next level of perfection.

1. Differential Loading

In spite of angular being a complete framework with its own package for a network request, form validation, and many more, Angular has one disadvantage-the app/bundle size.

The challenge that we were facing with Angular 7 was the large bundle size due to the conversion of modern JS/TS code in JS. After the conversion, the final application bundle is created for all the browsers (new and old) concerning the app performance on all the browsers.

This challenge of large bundle size was overcome in Angular 8 by reducing the bundle size by the concept of differential loading.

When we build apps with ng build, two separate bundles are created for the production dedicated to the older and newer browsers respectively. The correct bundle gets loaded automatically by the browser, thus improving the performance for Angular 8 by loading less code by the newer browsers.

2. Ivy Renderer

According to a source, 63% of all US traffic comes from smartphones and tablets. It is further forecasted that the number will increase to 80% by the end of this year.

One of the biggest challenges for a front end developer is increasing the loading speed of the website. Unfortunately, mobile devices always stay behind in this race either due to slow or bad internet connectivity thus making it more challenging for the developers.

But we never run out of solutions. We can use CDN, PWA, and others for loading the application faster. But if you want to have some out of the box solution, then reducing the bundle size is the ultimate solution and thus, IVY comes into the picture.

IVY is meant to build a next-generation rendering pipeline for Angular 8.0

Ivy is an angular renderer that uses incremental DOM. Ivy modifies the working of the framework without any changes to the Angular applications. On completion of IVY, the angular applications become small, simple, and faster. IVY consists of two main concepts:

Tree shakable:To focus only on the code in use, the unused code is removed. This results in faster runtime and smaller bundles.

Local:For a faster compilation, the changing components are recompiled.

The benefits of Ivy are:

Bundles are smaller

Templates are debuggable

Tests are faster

Builds are faster

Lots of bugs fixed

It rewrites the Angular compiler and runtime code to reach:

Better compatibility with tree-shaking

Improved build times

Improvised build sizes

Loaded with features like lazy loading of components rather than modules.

3. Web Workers

With the newest release of Angular v8, web workers can now be easily integrated with Angular. Though you might be aware of web workers, let us have a small brush up. As defined by Sitepoint,

“Web workers is an asynchronous system, or protocol, for web pages to execute tasks in the background, independently from the main thread and website UI. It is an isolated environment that is insulated from the window object, the document object, direct internet access and is best suited for long-running or demanding computational tasks.”

Have you built an application that includes a lot of calculations on UI? Are you experiencing the UI to be slow?

Having heavy calculations, data table manipulations, and other complex computations results in a laggy UI. JavaScript running on the main thread is not the only thing. Other things like calculations also run on it thus resulting in a bad user experience. Thus, web workers come into the picture to resolve this issue.

Therefore you can say if your application is unresponsive while processing data, web workers are helpful.

Due to JavaScript being single-threaded, there is a possibility of asynchronous data calls to take place. Facilitating to run the CPU intensive computations in the background thread, Web workers are used. This is achieved by freeing the main thread and updating the user interface.

Put simply, web workers are useful if heavy computations are offloaded to another thread.

4. Lazy Loading

Lazy loading helps in bringing down the size of large files. The required files are lazily loaded.

Previously in the older versions of Angular, @loadChildren property was used by the route configuration. This property accepts a string. If any typo occurred or any module name has been recorded wrong, Angular doesn’t consider this as wrong. It accepts the value that was there until we try building it.

To overcome this, dynamic imports in router configuration is added in Angular 8 thus enabling the usage of import statement for lazy loading the module. Thus, errors will be easily recognized and we can put a stop on waiting till the build time to recognize the errors in the code.

5. Bazel Support

Now increase the possibilities to build your CLI application with Bazel. The Angular framework is built with Bazel. Since it is expected to be included in version 9, it is available as opt-in in Angular 8.

The main advantages of Bazel are:

Using the same tool in building backends and frontends.

The build time is faster

Incremental build for the modified part.

Ejection of hidden Bazel files that are hidden by default.

Cache on the build farm

Dynamic imports for the lazy loaded modules

6. CLI Improvements

With continuous improvement in the Angular CLI, the ng build, ng test and ng run has accorded themselves by 3rd-party libraries and tools. For example, with the help of a deploy command, the new capabilities are already in use by AngularFire.

Angular CLI is equipped with commands such as ng new, serve, test, build and add for quicker development experience.

With the new ng deploy in the house, developers can deploy their final app to the cloud. Just a few clicks from their command-line interface and the work is done.

One important thing to remember, add a builder as it accords your project’s deployment capability to a specific hosting provider. But, this has to be done before using the command.

With Angular 8, it has added new features to ngUpgrade. This new feature will make the life of developers easier for upgrading their Angular.js apps to Angular 8.

7. TypeScript 3.4

The most important part is TypeScript 3.4 as it is required to run your Angular 8 project. A new flag is introduced in TypeScript 3.4 known as –incremental.

From the last compilation, the TypeScript is asked to save the information of the project graph by the incremental. Every time –incremental invokes TypeScript, it will utilize the information for detecting the cheapest way of type-checking and emit changes to your project.

To know more about Angular 8 and its dominance, you can also read the blog on “Top Reasons to Choose Angular 8 for Your Next Web Development Project.”

What architecture does it employ?

Angular and React both have a component-based architecture. Component-based architecture means they consist of cohesive, reusable, and modular components. The major difference is during the technical stack. React uses JavaScript whereas Angular uses TypeScript for compact and error-free development.

Angular, a robust front-end development framework has the power to structure your application. It frees you from the worry of routing libraries during coding of the application.

The advanced functionalities provided by Angular are as follows:

Availability of templates for creating UI views with powerful syntax.

To add components and testing, command-line tools are provided.

Completing intelligent code with IDEs.

For faster and better scenario tests, Protractor is used.

For complex choreographies and animation timelines, intuitive APIs are used.

The infrastructure consists of ARIA enabled components.

Injected Dependency

XSS Protection

The crucial point between Angular and React for their architectural differences takes an important turn with the increasing popularity of React for building web applications. Some of the key functionalities of React to highlight are:

Syntax extension to JavaScript, JSX.

The React elements can be created easily.

To update and match React elements, React DOM.

For splitting UI into independent, reusable pieces, React accords Component API

XSS protection

What ecosystem the framework has surrounded itself with?

There are multiple tools created around the open-source frameworks. These tools are friendly and sometimes help more than the framework itself. Let us get into the details of the most popular tools and libraries that are associated with both frameworks.

Angular

1. Angular CLI

A CLI tool helps in bootstrapping the project without configuring the build yourself. This popular trend with modern frameworks allows in generating and running a project with a couple of commands. The responsible scripts for application development, starting a development server, and running tests are all hidden in node_modules. This can be used for generating new code during the development process and during the dependency installation.

Managing dependencies in your project is easy with Angular now. A dependency can be installed when ng add is used. This dependency will automatically get configured for usage. For instance, if you run ng add@angular/material, Angular Material gets downloaded by Angular CLI from the npm registry. Angular CLI runs its install script and enables the use of Angular Material by automatic configuring the application using Angular Schematics. Libraries are allowed by the Schematics, a workflow tool to make changes to your codebase. In other words, there is a provision for resolving backward incompatibility issues by library authors while installing a new version.

2. Ionic Framework

If you are interested in hybrid mobile applications, then Ionic is a popular framework. There is a Cordova container nicely integrated with Angular and has a material component library. This helps in easy setup and also for mobile application development. Ionic is a good choice if you prefer a hybrid app over the native one.

3. Angular Material

Angular has come up with a Material component library having great options for ready-made components.

4. Angular Universal

Bundling different tools are all about Angular Universal. This helps in server-side rendering for Angular applications. With the integration of Angular Universal with Angular CLI, it supports a number of Node.js frameworks, like express and hapi, also with .NET core.

5. Augury

A browser extension for Chrome and Firefox, Augury helps in debugging the running Angular applications in development mode. Augury can be used to monitor the change detection, the component tree, and helps in optimizing performance issues.

React

1. Create React App

If you are looking for a quick set up of new projects, this CLI utility called Create React App is all you need. Just like Angular CLI, it permits us to generate a new project, run the app during the ongoing development process or creating a production bundle.

Jest is used for unit testing for React apps. It also supports application profiling by using environment variables. Other features are proxies for the backend for local development, Flow and TypeScript, Sass, PostCSS, and many more features.

2. React Native

Developed by Facebook, React Native is a platform used for native mobile app development using React. React Native is capable of building applications with a truly native UI, unlike Ionic that specializes in hybrid applications.

React Native has made provisions for standard React components bounding to their native counterparts. Another advantage of using React Native is it allows you to create components of your own and bind them to native code written in Objective-C, Java or Swift.

3. Material UI

React has a Material Design Component library. As compared to Angular, this library with React is more mature and houses a wider range of components.

4. Next.js

React Applications on the server-side is done by Next.js framework. This option is flexible enough for application rendering partially or completely on the server, revert the result to the client, and continue in the browser. Next.js tries to simplify the complex task of creating universal applications with a minimal amount of new primitives and requirements for the structure of your project.

5. Gatsby

A static website generator, Gatsby uses React.js allowing us to use GraphQL to query the data for your websites. These websites may be defined in markdown, YAML, JSON, external API’s as well as popular content management systems.

6. React 360

Want to create virtual reality applications for the browsers? This library called React 360 will do the needful. React 360 has a provision for a declarative React API built on top of the WebGL and WebVR browser APIs. This makes it easier for creating 360 VR experiences.

React Developer Tools

For debugging React applications, React Dev Tools are a browser extension that allows traversing the React component tree and observe their props and state.

How to test and update the app?

Tools like Jasmine and Protractor can be used for Angular IO testing and debugging for a complete project. React lags here as a different set of tools are required for performing different sets of testing. For instance, Jest for JavaScript code testing, Enzyme for component testing, etc. This results in an increased effort in the testing process.

Ease of Update

With an improved CLI equipped with commands like ng_update, upgrading any app to higher versions of Angular has become easy. Thus, Angular app development has become painless with the automated updating process for most of the cases.

React has provisions for seamless transitions between the two versions. But updating and migrating third-party components is possible only with the use of external libraries. The developers always have to keep a check always whether the third-party libraries used are compatible with the latest versions of the JavaScript framework or not. This increases the job of the developers.

Who performs better?

React has one-way data-binding whereas Angular has two-way data binding. With one-way data-binding, changes are rendered in the UI element once the model state is updated. However, there is no change in the model state when a change in the UI element is made unlike in Angular. If the UI element in Angular changes, the change is rendered in the model state and vice versa. Though Angular is easier to grasp, React has proven a better data overview with an increase in the project size. This also provides an easy debugging process with React.

Any application has multiple states. The complexity increases with repeated data morphing. Once the data changes, the components of the UI are also modified. This makes the data to stay updated always. This state is handled by Redux in React whereas, Angular doesn’t accord any use of Redux to handle the state. But if the application is large enough, there are chances that Redux has to be involved.

Since the commonly used methodology of working with React components is passing the data from parent component to its child component, Flux, a pattern for managing the flow of data through a React application, assigns this model as a default one for data handling.

In the flux methodology, the distinct roles for dealing with the data are:

Stores

Dispatcher

Views

The main idea behind this is:

The stores are updated through triggering actions. The dispatcher is called by actions through which the stores subscribe for the modification of their own data. Once the dispatch is triggered, and the store is updated, a change event will be emitted. This change is rerendered accordingly by the Views.

Flux is basically used for controlling and managing the application state. In Angular, two-way data binding triggers updates in a flow. If any action is wrongly implemented as no code is created in complete accuracy, would result in multiple errors. Further, it may be hard to debug.

In a nutshell we can say,

React performs well because:

Component Reuse

Virtual DOM

Community Support

Building dynamic web applications is easier.

Support of handy tool

Rich JavaScript library

SEO friendly

Easy to test

Angular performs well because:

Higher quality code due to component-based architecture.

Higher scalability and cleaner code due to use of TypeScript.

Asynchronous programming is efficiently handled due to RxJS.

Mobile-first approach.

Higher performance due to hierarchical dependency injection.

Optimizing the bundle size with differential load.

Seamless update with Angular CLI

Powerful ecosystem