#computer vision data annotation

Text

A Journey into the World of Computer Vision in Artificial Intelligence

Training data for computer vision helps machine learning and deep learning models interpret images by dividing visuals into smaller sections that can be tagged. Using these tags, the model performs convolutions (a mathematical operation that combines two functions to produce a third) and uses the results to make predictions about the scene it is observing.
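To make the convolution step concrete, here is a minimal NumPy sketch. It illustrates the idea and is not any particular library's implementation. It slides a small kernel over a grayscale image with no padding and a stride of 1. As in most deep learning frameworks, the kernel is not flipped, so strictly speaking this is a cross-correlation. The image and the edge-detecting kernel are made-up examples.

```python
import numpy as np

def conv2d_valid(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Slide `kernel` over `image` with no padding and stride 1.

    Like most deep learning libraries, this does not flip the kernel,
    so strictly speaking it computes a cross-correlation.
    """
    ih, iw = image.shape
    kh, kw = kernel.shape
    out = np.zeros((ih - kh + 1, iw - kw + 1), dtype=float)
    for r in range(out.shape[0]):
        for c in range(out.shape[1]):
            # Weighted sum of the image patch currently under the kernel
            out[r, c] = np.sum(image[r:r + kh, c:c + kw] * kernel)
    return out

# Toy 4x4 grayscale image: dark left half, bright right half
image = np.array([[0, 0, 9, 9]] * 4, dtype=float)
# Hypothetical vertical-edge kernel (responds to left-to-right brightness jumps)
kernel = np.array([[-1, 1], [-1, 1]], dtype=float)
print(conv2d_valid(image, kernel))  # every row is [0, 18, 0]
```

The output is large only where brightness changes from left to right, which is the kind of low-level feature the early layers of a convolutional network learn to detect.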

#computer vision services & solutions#computer vision training data#computer vision data annotation#training data for computer vision


Text

ADVANTAGES OF DATA ANNOTATION

Data annotation is essential for training AI models effectively. Precise labeling ensures accurate predictions, while scalability handles large datasets efficiently. Contextual understanding enhances model comprehension, and adaptability caters to diverse needs. Quality assurance processes maintain data integrity, while collaboration fosters synergy among annotators, driving innovation in AI technologies.

#Data Annotation Company#Data Labeling Company#Computer Vision Companies in India#Data Labeling Companies in India#Image Annotation Services#Data labeling & annotation services#AI Data Solutions#Lidar Annotation


Link

Status update by Maruful95



Text

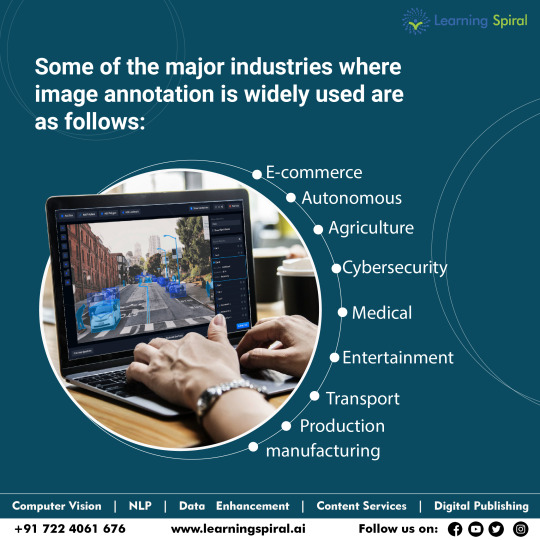

At Learning Spiral, get the best image annotation services for a variety of sectors. By using these image annotation services, businesses can save time and money, improve the accuracy of their machine-learning models, and scale their operations as needed.

For more details visit: https://learningspiral.ai/

#Data Annotation Company#Data Labeling Company#Computer Vision Companies in India#Data Labeling Companies in India#Image Annotation Services#Data labeling & annotation services#AI Data Solutions#Lidar Annotation#annotation projects#Data annotation projects


Text

Visit: https://learningspiral.ai/

#lidar annotation#computer vision company#data annotation company#data labeling company#data annotation and labeling services#data annotation companies in india


Text

4 Ways to Deliver Cleaner Colonoscopy Datasets

Colonoscopies are exams that can help healthcare providers spot potentially life-threatening issues within the intestines. These exams have been around for decades and are largely effective. But because results depend on the person performing the exam, outcomes still vary from patient to patient.

Heterogeneous performance during endoscopy can lead to differing opinions and inaccurate diagnoses. Fortunately, things are changing, and there are many ways to deliver cleaner colonoscopy datasets.

Computer Vision

Computer vision is a form of AI that's dramatically changing healthcare. Instead of relying solely on a healthcare provider's knowledge and keen eye, operators can use this technology to help spot issues. Much as a clinician learns what to look for, machines can "view" colonoscopy imaging and learn to identify the artifacts that matter.

AI models for gastroenterology computer vision have the potential to substantially improve diagnostic accuracy, helping providers make better decisions for their patients.

AI-Assisted Annotation

Of course, computer vision needs detailed training before operators can deploy it. That's where annotation comes in.

Accurate annotation provides the context for the images computer vision sees. Before deployment, a computer vision model needs to learn from thousands of annotated images or video frames. In general, the more high-quality labeled examples it learns from, the better it performs.

Traditionally, manual annotation was the only way to get clean colonoscopy datasets. AI-assisted annotation changes that. High-quality training platforms can label colonoscopy videos of any length, annotating content in many ways. Because AI handles much of the repetitive labeling, operators can spend less time on menial tasks and more time on other ways to improve training efficiency.

Infinite Ontologies

Another way to improve AI models for gastroenterology computer vision is to build a detailed labeling ontology that covers as many relevant conditions as possible. Colon issues are complex, and there are many potential issues to look for during endoscopy. Training computer vision to spot only polyps can result in missed problems.

Multiple classifications and sub-classifications can help operators build a highly accurate gastroenterology model capable of identifying several issues.
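One way to represent classifications and sub-classifications is as a tree. The sketch below is a hypothetical Python example: the `LabelClass` type is invented for illustration, the class names are placeholders rather than a clinical taxonomy, and real annotation platforms define ontologies in their own formats.

```python
from dataclasses import dataclass, field

@dataclass
class LabelClass:
    """One node in a labeling ontology; children are sub-classifications."""
    name: str
    children: list["LabelClass"] = field(default_factory=list)

def flatten(node: LabelClass, prefix: str = "") -> list[str]:
    """Return every class path under `node`, e.g. 'polyp/adenomatous'."""
    path = f"{prefix}/{node.name}" if prefix else node.name
    paths = [path]
    for child in node.children:
        paths.extend(flatten(child, path))
    return paths

# Hypothetical ontology for illustration only, not a clinical taxonomy
ontology = [
    LabelClass("polyp", [LabelClass("adenomatous"), LabelClass("hyperplastic")]),
    LabelClass("inflammation"),
    LabelClass("diverticulum"),
]
all_classes = [p for root in ontology for p in flatten(root)]
print(all_classes)
# ['polyp', 'polyp/adenomatous', 'polyp/hyperplastic', 'inflammation', 'diverticulum']
```

Keeping parent and child classes as explicit paths lets a model be trained either on the coarse classes alone or on the finer sub-classifications.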

AI and Human Review

Finally, it's important to review and correct datasets. Accuracy is paramount, as a computer vision model is only as accurate as its training data.

AI can help improve accuracy by spotting areas of interest and tagging artifacts. Operators can then go in to review datasets, make necessary corrections and feed machine learning models highly accurate datasets for faster deployment.
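A common way to combine AI and human review is to let a model pre-label data and send only its less confident predictions to people. The sketch below assumes each pre-label carries a confidence score between 0 and 1. The `PreLabel` type and the 0.9 threshold are hypothetical choices for illustration.

```python
from dataclasses import dataclass

@dataclass
class PreLabel:
    image_id: str
    label: str
    confidence: float  # model score in [0, 1]

def route_for_review(prelabels, threshold=0.9):
    """Split AI pre-labels into auto-accepted and human-review queues.

    The 0.9 threshold is an illustrative assumption; in practice it would be
    tuned, and auto-accepted labels should still be spot-checked.
    """
    accepted = [p for p in prelabels if p.confidence >= threshold]
    needs_review = [p for p in prelabels if p.confidence < threshold]
    return accepted, needs_review

prelabels = [
    PreLabel("frame_001", "polyp", 0.97),
    PreLabel("frame_002", "polyp", 0.62),
    PreLabel("frame_003", "inflammation", 0.91),
]
accepted, needs_review = route_for_review(prelabels)
print([p.image_id for p in needs_review])  # ['frame_002']
```

Lowering the threshold sends more items to reviewers and raises cost; raising it lets more unchecked model output into the dataset.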


#dataops software#ai annotation tools for teams#computer vision models for radiology annotation#ai models for gastroenterology computer vision#ai data platform for ultrasound computer vision#histology ai model development#surgical ai training models


Photo

Read the article: https://www.datalabeler.com/benefits-of-using-artificial-intelligence-and-machine-learning/


Link

It is well known that data science teams dedicate significant time and resources to developing and managing training data for AI and machine learning models, and the principal requirement is high-quality computer vision datasets. Common problems stem from poor in-house tooling, labeling rework, difficulty locating data, and difficulty collaborating and iterating on data across distributed teams.

#Annotation Strategies for Computer Vision Training Data#data verification company#cogitotech usa#cogitotech#USA#business model#business metadata capturing#Business#moderation system#AI technology#machine learning



Text

2023.08.31

i have no idea what i'm doing!

learning computer vision concepts on your own is overwhelming, and it's even more overwhelming to figure out how to apply those concepts to train a model and prepare your own data from scratch.

context: the public university i go to expects the students to self-study topics like AI, machine learning, and data science, without the professors teaching anything TT

i am losing my mind

based on what i've watched on youtube and understood from articles i've read, i think i have to do the following:

data collection (in my case, images)

data annotation (to label the features)

image augmentation (to increase the diversity of my dataset)

image manipulation (to normalize the images in my dataset)

split the training, validation, and test sets

choose a model for object detection (YOLOv4?)

training the model using my custom dataset

evaluate the trained model's performance
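For the normalization step in the list above, one common approach is to scale pixels to [0, 1] and then standardize each color channel using statistics computed on the training set only. The NumPy sketch below assumes the images are already resized to the same shape and stored as 8-bit RGB arrays shaped (N, H, W, C). The random array is stand-in data, not a real dataset.

```python
import numpy as np

def channel_stats(train_images: np.ndarray):
    """Per-channel mean and std over a batch shaped (N, H, W, C), after scaling to [0, 1]."""
    x = train_images.astype(np.float64) / 255.0
    return x.mean(axis=(0, 1, 2)), x.std(axis=(0, 1, 2))

def normalize(images: np.ndarray, mean: np.ndarray, std: np.ndarray) -> np.ndarray:
    """Scale uint8 pixels to [0, 1], then standardize each channel."""
    x = images.astype(np.float64) / 255.0
    return (x - mean) / (std + 1e-8)  # small epsilon avoids division by zero

rng = np.random.default_rng(0)
train = rng.integers(0, 256, size=(8, 32, 32, 3), dtype=np.uint8)  # stand-in data
mean, std = channel_stats(train)
train_norm = normalize(train, mean, std)
print(train_norm.mean(axis=(0, 1, 2)), train_norm.std(axis=(0, 1, 2)))
```

The same `mean` and `std` from the training set would then be reused for the validation and test images, so no information from those sets leaks into preprocessing.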

so far, i've collected enough images to start annotation. i might use labelbox for that. i'm still not sure if i'm doing things right 🥹

if anyone has any tips for me or if you can suggest references (textbooks or articles) that i can use, that would be very helpful!


Text

What is a Data pipeline for Machine Learning?

As machine learning technologies continue to advance, the need for high-quality data has become increasingly important. Data is the lifeblood of computer vision applications, as it provides the foundation for machine learning algorithms to learn and recognize patterns within images or video. Without high-quality data, computer vision models will not be able to effectively identify objects, recognize faces, or accurately track movements.

Machine learning algorithms require large amounts of data to learn and identify patterns, and this is especially true for computer vision, which deals with visual data. By providing annotated data that identifies objects within images and provides context around them, machine learning algorithms can more accurately detect and identify similar objects within new images.

Moreover, data is also essential in validating computer vision models. Once a model has been trained, it is important to test its accuracy and performance on new data. This requires additional labeled data to evaluate the model's performance. Without this validation data, it is impossible to accurately determine the effectiveness of the model.

Data requirements at multiple ML stages

Data is required at various stages in the development of computer vision systems.

Here are some key stages where data is required:

Training: In the training phase, a large amount of labeled data is required to teach the machine learning algorithm to recognize patterns and make accurate predictions. The labeled data is used to train the algorithm to identify objects, faces, gestures, and other features in images or videos.

Validation: Once the algorithm has been trained, it is essential to validate its performance on a separate set of labeled data. This helps to ensure that the algorithm has learned the appropriate features and can generalize well to new data.

Testing: Testing is typically done on real-world data to assess the performance of the model in the field. This helps to identify any limitations or areas for improvement in the model and the data it was trained on.

Re-training: After testing, the model may need to be re-trained with additional data or re-labeled data to address any issues or limitations discovered in the testing phase.

In addition to these key stages, data is also required for ongoing model maintenance and improvement. As new data becomes available, it can be used to refine and improve the performance of the model over time.

Types of Data used in ML model preparation

The team has to work on various types of data at each stage of model development.

Streaming, structured, and unstructured data are all important when creating computer vision models, as each can provide valuable insights and information for training the model.

Streaming data refers to data that is captured in real time or near real time from a single source. This can include data from sensors, cameras, or other monitoring devices that capture information about a particular environment or process.

Structured data, on the other hand, refers to data that is organized in a specific format, such as a database or spreadsheet. This type of data can be easier to work with and analyze, as it is already formatted in a way that can be easily understood by the computer.

Unstructured data includes any type of data that is not organized in a specific way, such as text, images, or video. This type of data can be more difficult to work with, but it can also provide valuable insights that may not be captured by structured data alone.

When creating a computer vision model, it is important to consider all three types of data in order to get a complete picture of the environment or process being analyzed. This can involve using a combination of sensors and cameras to capture streaming data, organizing structured data in a database or spreadsheet, and using machine learning algorithms to analyze and make sense of unstructured data such as images or text. By leveraging all three types of data, it is possible to create a more robust and accurate computer vision model.

Data Pipeline for machine learning

The data pipeline for machine learning involves a series of steps, starting from collecting raw data to deploying the final model. Each step is critical in ensuring the model is trained on high-quality data and performs well on new inputs in the real world.

Below is the description of the steps involved in a typical data pipeline for machine learning and computer vision:

Data Collection: The first step is to collect raw data in the form of images or videos. This can be done through various sources such as publicly available datasets, web scraping, or data acquisition from hardware devices.

Data Cleaning: The collected data often contains noise, missing values, or inconsistencies that can negatively affect the performance of the model. Hence, data cleaning is performed to remove any such issues and ensure the data is ready for annotation.
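As one example of data cleaning, exact duplicate images can be found by hashing file contents. This sketch uses only the Python standard library. It catches byte-for-byte copies only, so resized or re-encoded near-duplicates would need a different technique, such as perceptual hashing. The file names and contents are placeholders.

```python
import hashlib
import tempfile
from pathlib import Path

def find_exact_duplicates(paths):
    """Return (duplicate, original) pairs for files with identical bytes (same SHA-256)."""
    seen = {}
    duplicates = []
    for path in paths:
        digest = hashlib.sha256(Path(path).read_bytes()).hexdigest()
        if digest in seen:
            duplicates.append((path, seen[digest]))
        else:
            seen[digest] = path
    return duplicates

# Placeholder files standing in for collected images
with tempfile.TemporaryDirectory() as d:
    a = Path(d, "a.jpg"); a.write_bytes(b"\xff\xd8fake-image-1")
    b = Path(d, "b.jpg"); b.write_bytes(b"\xff\xd8fake-image-1")  # exact copy of a.jpg
    c = Path(d, "c.jpg"); c.write_bytes(b"\xff\xd8fake-image-2")
    dups = find_exact_duplicates([a, b, c])
    print([(p.name, q.name) for p, q in dups])  # [('b.jpg', 'a.jpg')]
```

Removing duplicates before annotation avoids paying to label the same image twice and reduces the chance that one copy lands in training and another in testing.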

Data Annotation: In this step, experts annotate the images with labels to make it easier for the model to learn from the data. Data annotation can be in the form of bounding boxes, polygons, or pixel-level segmentation masks.
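Bounding-box annotations are stored in different conventions depending on the export format. As a sketch, the function below converts a box from the COCO convention (`[x_min, y_min, width, height]` in pixels) to the YOLO convention (box center, width, and height, each normalized by the image size). The example numbers are made up.

```python
def coco_to_yolo(bbox, img_w, img_h):
    """Convert a COCO box [x_min, y_min, width, height] in pixels to
    YOLO's normalized [x_center, y_center, width, height]."""
    x, y, w, h = bbox
    return [(x + w / 2) / img_w, (y + h / 2) / img_h, w / img_w, h / img_h]

# A 200x100 box whose top-left corner is at (100, 50) in a 400x200 image
print(coco_to_yolo([100, 50, 200, 100], img_w=400, img_h=200))  # [0.5, 0.5, 0.5, 0.5]
```

Normalized coordinates stay valid if the image is later resized, which is one reason some training tools prefer them.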

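Data Augmentation: To increase the diversity of the data and reduce overfitting, data augmentation techniques are applied to the annotated data. These techniques include random cropping, flipping, rotation, and color jittering. Augmentation is usually applied to the training set only, after the data is split, so that altered copies of the same image do not end up in both the training and test sets.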
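Geometric augmentations have to transform the labels along with the pixels. This NumPy sketch shows a horizontal flip for an image whose boxes are stored as `[x_min, y_min, x_max, y_max]` in pixel coordinates (an assumed format). Each box is mirrored about the image's vertical center line.

```python
import numpy as np

def hflip_with_boxes(image, boxes):
    """Flip an (H, W, C) image left-to-right and mirror its boxes to match.

    Boxes are [x_min, y_min, x_max, y_max] in pixels (an assumed format).
    """
    w = image.shape[1]
    flipped = image[:, ::-1]
    # After mirroring, the old right edge becomes the new left edge
    new_boxes = [[w - x_max, y_min, w - x_min, y_max]
                 for x_min, y_min, x_max, y_max in boxes]
    return flipped, new_boxes

image = np.zeros((100, 200, 3), dtype=np.uint8)
flipped, boxes = hflip_with_boxes(image, [[10, 20, 60, 80]])
print(boxes)  # [[140, 20, 190, 80]]
```

Forgetting to update the boxes is a common source of silently wrong labels, because the flipped image still looks perfectly valid.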

Data Splitting: The annotated data is split into training, validation, and testing sets. The training set is used to train the model, the validation set is used to tune the hyperparameters and prevent overfitting, and the testing set is used to evaluate the final performance of the model.
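A reproducible split can be as simple as shuffling once with a fixed seed and slicing. The 70/15/15 ratios, the seed, and the file names below are illustrative assumptions. If several images come from the same video or the same subject, splitting by that group instead of by image helps keep near-identical frames from appearing in both the training and test sets.

```python
import random

def split_dataset(items, train=0.7, val=0.15, seed=42):
    """Shuffle once with a fixed seed, then cut into train/val/test lists."""
    items = list(items)
    random.Random(seed).shuffle(items)
    n_train = round(len(items) * train)
    n_val = round(len(items) * val)
    return (items[:n_train],
            items[n_train:n_train + n_val],
            items[n_train + n_val:])  # whatever remains becomes the test set

train_set, val_set, test_set = split_dataset([f"img_{i:03d}.jpg" for i in range(100)])
print(len(train_set), len(val_set), len(test_set))  # 70 15 15
```

Fixing the seed means the same images always land in the same split, so results from different training runs remain comparable.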

Model Training: The next step is to train the computer vision model using the annotated and augmented data. This involves selecting an appropriate architecture, loss function, and optimization algorithm, and tuning the hyperparameters to achieve the best performance.

Model Evaluation: Once the model is trained, it is evaluated on the testing set to measure its performance. Metrics such as accuracy, precision, recall, and F1 score are computed to assess the model's performance; for object detection, mean average precision (mAP) is also common.
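For object detection, a predicted box is usually counted as a true positive when its intersection over union (IoU) with a ground-truth box exceeds a chosen threshold (0.5 is a common choice). The sketch below shows IoU and the precision, recall, and F1 formulas. The counts are hypothetical, and a full detection evaluation would also match predictions to ground truth and average over classes.

```python
def iou(a, b):
    """Intersection over union of two [x_min, y_min, x_max, y_max] boxes."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def precision_recall_f1(tp, fp, fn):
    """Standard metrics from true-positive, false-positive and false-negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)  # harmonic mean
    return precision, recall, f1

print(iou([0, 0, 2, 2], [1, 1, 3, 3]))        # overlap area 1, union area 7
print(precision_recall_f1(tp=3, fp=1, fn=3))  # precision 0.75, recall 0.5
```

Precision answers "how many detections were right", recall answers "how many objects were found", and F1 balances the two in a single number.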

Model Deployment: The final step is to deploy the model in the production environment, where it can be used to solve real-world computer vision problems. This involves integrating the model into the target system and ensuring it can handle new inputs and operate in real time.

TagX Data as a Service

Data as a service (DaaS) refers to the provision of data by a company to other companies. TagX provides DaaS to AI companies by collecting, preparing, and annotating data that can be used to train and test AI models.

Here’s a more detailed explanation of how TagX provides DaaS to AI companies:

Data Collection: TagX collects a wide range of data from various sources such as public data sets, proprietary data, and third-party providers. This data includes image, video, text, and audio data that can be used to train AI models for various use cases.

Data Preparation: Once the data is collected, TagX prepares the data for use in AI models by cleaning, normalizing, and formatting the data. This ensures that the data is in a format that can be easily used by AI models.

Data Annotation: TagX uses a team of annotators to label and tag the data, identifying specific attributes and features that will be used by the AI models. This includes image annotation, video annotation, text annotation, and audio annotation. This step is crucial for the training of AI models, as the models learn from the labeled data.

Data Governance: TagX ensures that the data is properly managed and governed, including data privacy and security. We follow data governance best practices and regulations to ensure that the data provided is trustworthy and compliant with regulations.

Data Monitoring: TagX continuously monitors the data and updates it as needed to ensure that it is relevant and up-to-date. This helps to ensure that the AI models trained using our data are accurate and reliable.

By providing data as a service, TagX makes it easy for AI companies to access high-quality, relevant data that can be used to train and test AI models. This helps AI companies to improve the speed, quality, and reliability of their models, and reduce the time and cost of developing AI systems. Additionally, by providing data that is properly annotated and managed, the AI models developed can be exp


Text

Hello there,

I'm Md. Maruful Islam, a skilled trainer of data annotators from Bangladesh. I am honoured to currently work at Acme AI, the pioneer in the data annotation sector. Throughout my career, I've become proficient with many annotation tools, including SuperAnnotate, Supervise.ly, Kili, CVAT, Tasuki, FastLabel, and others.

I have consistently produced high-quality annotations, which has earned me credibility as a well-respected professional in the industry. My GDPR, ISO 27001, and ISO 9001 certifications provide even more assurance that data security and privacy laws are followed.

I genuinely hope you will consider my application. I'd like to learn more about this project as a data annotator so that I may make recommendations based on what I know.

I'll do the following to make things easier for you:

Services:

Object Detection (Bounding Boxes)

Object Recognition with Polygons

Keypoints

Image Classification

Semantic and Instance Segmentation (Masks, Polygons)

Tools I Use:

SuperAnnotate

Labelbox

Roboflow

CVAT

Supervisely

Kili Platform

V7

Data Types:

Image

Text

Videos

Output Formats:

CSV

COCO

YOLO

JSON

XML

SEGMENTATION MASK

PASCAL VOC

VGGFACE2

#ai data annotator jobs#data annotation#image annotation services#machine learning#ai data annotator#ai image#artificial intelligence#annotation#computer vision#video annotation


Text

Learning Spiral is a leading provider of data annotation services in India. The company offers a wide range of data labeling services in different sectors, including automobile, healthcare, education, cybersecurity, e-commerce, etc.

For more details visit: https://learningspiral.ai/

#Data Labeling Company#Computer Vision Companies in India#Data Labeling Companies in India#Image Annotation Services#Data labeling & annotation services#AI Data Solutions#Lidar Annotation#annotation projects#Data annotation projects


Text

AI vs. Machine Learning vs. Deep Learning: Differentiating Their Roles

In today's technology-driven world, terms like AI, machine learning, and deep learning are often used interchangeably, leading to confusion. While they are interconnected, they exhibit distinct differences. In this article, we will explore the concepts of AI, machine learning, and deep learning, highlighting their unique characteristics and how they contribute to the evolving field of technology.

Artificial Intelligence (AI):

Artificial Intelligence, or AI, refers to the creation of computer systems capable of performing tasks that traditionally require human intelligence. These tasks include problem-solving, decision-making, language understanding, and image recognition, among others. The main goal of AI is to create intelligent machines capable of mimicking human behavior and cognitive abilities.

Machine Learning (ML):

Machine Learning is a subset of artificial intelligence that uses algorithms and statistical models to enable computers to learn from data and make predictions or decisions without being explicitly programmed for each task. It is the practice of giving computers the ability to learn and improve from experience. ML algorithms can process vast amounts of data to identify patterns, make predictions, and take actions based on the insights gained.

Deep Learning (DL):

Deep Learning is a specialized subset of machine learning that is inspired by the structure and function of the human brain. It involves training artificial neural networks with multiple layers of interconnected nodes, or neurons, to learn hierarchical representations of data. Deep learning models excel at processing unstructured data, such as images, videos, and natural language, enabling them to achieve remarkable accuracy in tasks like image classification, speech recognition, and language translation.

Key Differences

Approach:

AI is a broader concept that encompasses various approaches, including machine learning and deep learning. Machine learning, on the other hand, focuses on algorithms that learn from data to make predictions. Deep learning takes machine learning a step further by using multi-layer neural networks, loosely inspired by the brain, that achieve advanced pattern recognition and data representation capabilities.

Data Requirements:

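Supervised machine learning algorithms, including supervised deep learning models, require labeled or annotated data to train on. This data serves as input, and the algorithms learn from it to make predictions. The difference lies in how the input is prepared: traditional machine learning usually works on features that people extract from the data beforehand, whereas deep learning models can learn directly from raw, unstructured data such as pixels or text. This ability to process unstructured data gives deep learning models an edge in various complex tasks, although they typically need more labeled examples to reach that performance.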

Model Complexity:

Deep learning models are typically more intricate than traditional machine learning models. Traditional machine learning algorithms depend on manually engineered features to extract pertinent information from data, whereas deep learning models automatically learn representations of data through multiple layers of abstraction, reducing the need for manual feature engineering. This makes deep learning models more scalable and adaptable to different problem domains.

Performance and Accuracy:

Deep learning models have demonstrated exceptional performance in tasks such as image and speech recognition, natural language processing, and computer vision. They have achieved state-of-the-art accuracy on many benchmarks, in some cases matching or surpassing human performance. Machine learning models, while less complex, still deliver impressive results in many applications but may require more effort in feature engineering and tuning to achieve optimal performance.

Hardware Requirements:

Deep learning models typically require powerful hardware resources, such as graphics processing units (GPUs) or tensor processing units (TPUs), to handle the vast amount of computations involved in training and inference. Machine learning models, depending on their complexity, can be trained and deployed on standard hardware configurations.

Application Domains:

AI, machine learning, and deep learning are applied across diverse fields. AI is widely used in industries like healthcare, finance, gaming, and autonomous vehicles. Machine learning is extensively employed in recommendation systems, fraud detection, customer segmentation, and predictive analytics. Deep learning has excelled in computer vision, speech recognition, natural language processing, and autonomous systems.

AI, machine learning, and deep learning are interconnected but distinct concepts. Artificial intelligence represents the broader goal of creating intelligent machines, while machine learning and deep learning are subsets that contribute to achieving that goal. Machine learning focuses on algorithms and statistical models, whereas deep learning uses multi-layer neural networks loosely inspired by the human brain. Both machine learning and deep learning have made significant advancements and find applications in various domains. Understanding these differences helps us appreciate the incredible potential and impact of these technologies in shaping the future.

Related videos (YouTube): Data Science & AI; Artificial Intelligence Career


Text

The Advantages of AI Development in Data Analysis

Artificial intelligence is no longer a subject of science fiction. It's already revolutionizing industries far and wide. Every year, AI improves and paves the way for more innovation.

While most people think of what AI can do for healthcare, robotics and consumer technology, new developments are also changing the face of data analysis. Here's how.

Streamlined Data Preparation

We live in the age of big data, and organizations of all sizes have a repository of information they need to process. In the past, the only way to make use of any of that collected data was to prepare it manually. Data scientists had to generate reports and handle exploration on their own.

Fortunately, that's not the case now. AI can produce models and help visualize key information in only a few clicks. Companies investing in machine learning can also benefit from continued AI development. For example, AI labeling for computer vision significantly reduces the amount of manual work required to train models.

Better Data Accuracy

Another common problem in data analysis is potential inaccuracies. Inaccurate data can lead to false interpretations and countless flaws. It defeats the entire purpose of having the data.

Errors are more common than most realize. Humans aren't perfect, so issues are bound to happen.

AI can greatly reduce the number of flaws in massive data sets. The technology can learn common human-caused errors and detect them when they occur, flagging or correcting many deficiencies automatically so you can be more confident that you're working with correct data.

Less Human Intervention

The biggest advantage of AI in data analysis is the reduced reliance on manual work. Not only is manual work expensive, but it's also prone to errors. AI removes much of the manual effort from the equation.

AI labeling for computer vision only needs manual intervention when clarifying errors, reducing the resources invested in getting systems deployed. AI can also surface insights from enormous data sets in minutes. Instead of manually sifting through numerical data, you can rely on technology to get the information you want accurately and efficiently.

Continued developments can help AI learn data nuances, allowing it to spot patterns, alert you to anomalies and more.


#clinical data analysis tools#annotation quality control#aerial imaging#computer vision models#synthetic aperture radar image segmentation using ai#data annotation tools#ai labeling for computer vision


Text

Best approaches for data quality control in AI training

The phrase “garbage in, garbage out” has never been more true than when it comes to artificial intelligence (AI)-based systems. Although the methods and tools for creating AI-based systems have become more accessible, the accuracy of AI predictions still depends heavily on high-quality training data. You cannot advance your AI development strategy without data quality management.

In AI, data quality can take many different forms. The quality of the source data comes first. For autonomous vehicles, the source data may be pictures and sensor data; for other applications, it might be text from support tickets or information from more intricate business correspondence.

Unstructured data must be annotated for machine learning algorithms to create the models that drive AI systems, regardless of where it originates. As a result, the effectiveness of your AI systems as a whole depends greatly on the quality of annotation.

Establishing minimum requirements for data annotation quality control

An efficient annotation procedure is the key to better model output and to catching issues early in the model development pipeline.

The best annotation results come from having precise rules in place. Without clear rules of engagement, annotators cannot apply their techniques consistently.

Additionally, it’s crucial to remember that there are two levels of annotated data quality:

The instance level: Each training example for a model has the appropriate annotations. To do this, it is necessary to have a thorough understanding of the annotation criteria, data quality metrics, and data quality tests to guarantee accurate labelling.

The dataset level: Here, it’s important to make sure the dataset is unbiased. Bias can easily creep in, for instance, if the majority of the road and vehicle photos in a collection were shot during the day and very few at night. In this situation, the model won’t be able to learn to accurately recognise objects in photographs captured in low light.
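A simple dataset-level check is to count how often each value of a metadata field appears and flag under-represented values. The sketch below assumes each image has a metadata dictionary with a `time_of_day` field and uses an arbitrary 20% threshold; both the field name and the threshold are hypothetical.

```python
from collections import Counter

def imbalance_report(metadata, key, min_share=0.2):
    """Return each value of `key` whose share of the dataset is below `min_share`."""
    counts = Counter(item[key] for item in metadata)
    total = sum(counts.values())
    return {value: n / total for value, n in counts.items() if n / total < min_share}

# Hypothetical metadata: 90 daytime and 10 nighttime road images
metadata = [{"time_of_day": "day"}] * 90 + [{"time_of_day": "night"}] * 10
print(imbalance_report(metadata, "time_of_day"))  # {'night': 0.1}
```

A flagged value does not fix itself; it tells the team where to collect or annotate more data before training.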

Creating a data annotation quality assurance approach that is effective

Choosing the appropriate quality metrics is the first step in assuring data quality in annotation, because it makes it possible to quantify the quality of a dataset. For instance, while developing a natural language processing (NLP) model for a voice assistant, you will need to determine the appropriate syntax for utterances in several languages.

Once the metrics have been defined, use a standard set of examples to create tests that measure them. The team that annotated the dataset should design the test. This makes it easier for the team to agree on a set of rules and provides impartial indicators of how well annotators are performing.

Human annotators may disagree on how to properly annotate a piece of media. One annotator might choose to mark a pedestrian who is only partially visible in a crosswalk image as a pedestrian, whereas another might not. Use a small calibration set to clarify rules and expectations, including how to handle edge cases and subjective annotations.

Even with specific instructions, annotators will occasionally disagree. Decide in advance how you will resolve those situations, for example through consensus or a measure of inter-annotator agreement. To keep your annotation efficient, it helps to discuss data collection procedures, annotation needs, edge cases, and quality measures upfront.
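One widely used measure of inter-annotator agreement for two annotators is Cohen's kappa, which discounts the agreement the pair would reach by chance. The standard-library sketch below is for illustration; scikit-learn's `sklearn.metrics.cohen_kappa_score` is a ready-made alternative. The labels are hypothetical.

```python
from collections import Counter

def cohens_kappa(labels_a, labels_b):
    """Cohen's kappa: agreement between two annotators, corrected for chance."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two equally long, non-empty label lists")
    n = len(labels_a)
    observed = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    freq_a, freq_b = Counter(labels_a), Counter(labels_b)
    # Probability that both annotators pick the same class by chance
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a.keys() | freq_b.keys()) / (n * n)
    if expected == 1:
        return 1.0  # both annotators used a single, identical label throughout
    return (observed - expected) / (1 - expected)

# Hypothetical labels for four crosswalk images
annotator_1 = ["pedestrian", "pedestrian", "none", "none"]
annotator_2 = ["pedestrian", "none", "none", "none"]
print(cohens_kappa(annotator_1, annotator_2))  # 0.5
```

Here the annotators agree on 75% of images, but half of that agreement is expected by chance, so kappa is 0.5. Low kappa on a calibration set is a signal that the guidelines need clarifying.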

Also keep in mind that annotators get tired, and your quality-control approach must account for this to maintain data quality. To detect common fatigue-related issues, such as incorrect boundaries or color associations, missing annotations, unassigned attributes, and mislabeled objects, consider periodically injecting ground-truth data into your dataset.
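The ground-truth injection idea can be sketched as follows: mix a few items with known answers (often called gold items) into each annotator's queue, then score the annotator on those items only. The function names, the 5% rate, and the gold labels are hypothetical choices for illustration.

```python
import random

def inject_gold(task_ids, gold_answers, rate=0.05, seed=0):
    """Mix known-answer (gold) items into an annotator's queue at roughly `rate`."""
    rng = random.Random(seed)
    n_gold = min(len(gold_answers), max(1, round(len(task_ids) * rate)))
    queue = list(task_ids) + rng.sample(sorted(gold_answers), n_gold)
    rng.shuffle(queue)  # annotators should not be able to tell gold items apart
    return queue

def gold_accuracy(submitted, gold_answers):
    """Share of gold items in `submitted` (item id -> label) that match the known answer."""
    scored = [item for item in submitted if item in gold_answers]
    if not scored:
        return None
    return sum(submitted[item] == gold_answers[item] for item in scored) / len(scored)

gold = {"g1": "pedestrian", "g2": "car", "g3": "cyclist"}  # hypothetical gold set
queue = inject_gold([f"t{i}" for i in range(40)], gold)
# Suppose that, late in a shift, an annotator returns these labels:
submitted = {"t0": "car", "g1": "pedestrian", "g2": "truck"}
print(len(queue), gold_accuracy(submitted, gold))  # 42 0.5
```

Tracking gold accuracy over the course of a shift can reveal fatigue: a score that drops in later hours suggests scheduling breaks or re-reviewing that period's work.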

The fact that AI is used in a variety of fields is another crucial factor. To successfully annotate data from specialist fields like health and finance, annotators may need to have some level of subject knowledge. For such projects, you might need to think about creating specialised training programmes.

Setting up standardised procedures for quality control

Processes for ensuring data quality ought to be standardised, flexible, and scalable. Manually examining every parameter of every annotation in a dataset is impractical, especially when there are millions of them. Making a statistically significant random sample that accurately represents the dataset is important for this reason.
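The sample size for such an audit can be estimated with Cochran's formula plus a finite population correction, assuming simple random sampling and treating "is this annotation correct?" as a yes/no proportion. The defaults below (95% confidence, so z = 1.96; a ±5% margin of error; and the worst-case proportion p = 0.5) are illustrative assumptions, not fixed rules.

```python
import math
import random

def sample_size(population, z=1.96, margin=0.05, p=0.5):
    """Cochran's sample size for a proportion, with finite population correction."""
    n0 = z ** 2 * p * (1 - p) / margin ** 2  # sample size for an infinite population
    return math.ceil(n0 / (1 + (n0 - 1) / population))

annotation_ids = range(1_000_000)  # stand-in for real annotation IDs
n = sample_size(len(annotation_ids))
audit = random.Random(7).sample(annotation_ids, n)  # annotations to review by hand
print(n)  # 385
```

Because of the finite population correction, the required sample barely grows once the dataset is large: a million annotations need about 385 reviews under these assumptions, while ten thousand need about 370.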

Choose the metrics you’ll use to gauge data quality. In classification tasks, accuracy, precision, recall, and the F1 score (the harmonic mean of precision and recall) are commonly used.

Another crucial component of standardised quality control procedures is the feedback mechanism that helps annotators fix their mistakes. In general, you should use programmatic checks to find faults and notify annotators. For instance, the dimensions of common objects may be capped for a given dataset, and any annotation that exceeds the predetermined limits can be automatically blocked until the problem is fixed.
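A minimal sketch of such an automated check, assuming boxes are stored as `[x_min, y_min, x_max, y_max]` in pixels. The size limits (`max_frac`, `min_px`) are hypothetical values that a real project would set per dataset and per class. An annotation tool could refuse to submit any box for which the function returns a non-empty list, and show the returned messages to the annotator as feedback.

```python
def validate_box(box, img_w, img_h, max_frac=0.5, min_px=2):
    """Return a list of problems with an [x_min, y_min, x_max, y_max] box (empty if OK)."""
    x0, y0, x1, y1 = box
    problems = []
    if not (0 <= x0 < x1 <= img_w and 0 <= y0 < y1 <= img_h):
        problems.append("box outside image or has non-positive size")
        return problems
    w, h = x1 - x0, y1 - y0
    if w < min_px or h < min_px:
        problems.append("box smaller than minimum size")
    if w * h > max_frac * img_w * img_h:
        problems.append("box larger than allowed share of image")
    return problems

print(validate_box([10, 10, 50, 40], 640, 480))  # []
print(validate_box([0, 0, 600, 470], 640, 480))  # ['box larger than allowed share of image']
```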

Effective quality-control tools are a requirement for speedy inspections and corrections. In a computer vision dataset, each annotation on an image can be visually examined by several assessors with the aid of quality-control tools such as comments, instance-marking tools, and freehand drawing. During review, these error-identification approaches help evaluators find inaccurate annotations.

Analyze annotator performance using a data-driven methodology. Metrics such as average creation and editing time, project progress, jobs completed, person-hours spent on various scenarios, the number of labels per day, and delivery ETAs are all helpful for managing the data quality of annotations.

Summary of data quality management

A study by VentureBeat found that just 13% of machine learning models are actually used in practice. Because quality assurance is a crucial component of developing AI systems, poor data quality can undermine a project that might otherwise have succeeded.

Make sure you start thinking about data quality control right away. By developing a successful quality assurance procedure and putting it into practice, you position your team for success. You’ll have a stronger foundation for continually improving, innovating, and establishing best practices that guarantee the highest-quality annotation outputs for all the annotation types and use cases you might need in the future. In the long run, this investment will pay off.

About Data Labeler

Data Labeler aims to provide a pivotal service that will allow companies to focus on their core business by delivering datasets that would let them power their algorithms.

Contact us for high-quality labeled datasets for AI applications [email protected]
