#computing history

Explore tagged Tumblr posts

Text

You might have heard of 32-bit and 64-bit applications before, and if you work with older software, maybe 16-bit and even 8-bit computers. But what came before 8-bit? Was it preceded by 4-bit computing? Were there 2-bit computers? 1-bit? Half-bit?

Well, outside that one AVGN meme, half-bit isn't really a thing, but the answer is a bit weirder in other ways! The most prominent CPU designs today come from Intel and AMD, and Intel did produce 4-bit, 8-bit, 16-bit, 32-bit and 64-bit microprocessors (although 4-bit computers weren't really a thing). But what came before 4-bit microprocessors?

Mainframes and minicomputers did. These were large computers intended for organizations rather than personal use. Before microprocessors, they were built from discrete transistors and later integrated circuits (or, in the early days, even vacuum tubes), and their CPUs took up far more space.

And what bit length did these older computers have?

A large variety of bit lengths.

There were 16-bit, 32-bit and 64-bit mainframes/minicomputers, but you also had 36-bit computers (PDP-10), 12-bit (PDP-8), 18-bit (PDP-7), 24-bit (ICT 1900), 48-bit (Burroughs) and 60-bit (CDC 6000) computers among others. There were also computers that didn't use binary encoding to store numbers, such as decimal computers or the very rare ternary computers (Setun).
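Setun's ternary design is easier to picture with an example. Setun used balanced ternary, where each digit (a "trit") is -1, 0 or +1 rather than 0, 1 or 2. Below is a minimal sketch that converts integers to and from that notation; the function names and the `-`/`0`/`+` symbols are illustrative choices, not anything taken from Setun itself.

```python
# Minimal sketch of balanced ternary, the number system Setun used.
# Trits are written as '-', '0', '+' for -1, 0, +1 (an illustrative convention).

def to_balanced_ternary(n: int) -> str:
    """Return n as a string of balanced-ternary trits, most significant first."""
    if n == 0:
        return "0"
    trits = []
    while n != 0:
        r = n % 3  # Python's % gives 0, 1 or 2, even when n is negative
        if r == 0:
            trits.append("0")
        elif r == 1:
            trits.append("+")
        else:
            # A remainder of 2 is written as the trit -1, carrying 1 into the next place
            trits.append("-")
            r = -1
        n = (n - r) // 3  # n - r is always divisible by 3 here
    return "".join(reversed(trits))


def from_balanced_ternary(trits: str) -> int:
    """Convert a string of '-', '0', '+' trits back to an integer."""
    value = 0
    for t in trits:
        value = value * 3 + {"-": -1, "0": 0, "+": 1}[t]
    return value


for k in (5, -5, 8):
    print(k, to_balanced_ternary(k))  # 5 +--, then -5 -++, then 8 +0-
```

Negating a number just swaps every `+` and `-` (5 is `+--`, -5 is `-++`), so balanced ternary needs no separate sign digit at all.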

And you didn't always evolve by extending the bit length. You could upgrade from an 18-bit computer to a more powerful 16-bit one, which is what the developers of early UNIX did when they switched from the PDP-7 to the PDP-11, or move from 36-bit to 32-bit, which happened when IBM phased out the IBM 7090 in favor of the System/360, and when DEC phased out the PDP-10 in favor of the VAX.

154 notes


Text

Sam Siam: FurryMuck Land (Zine, 1993)

A zine about a text-based furry MMO that continues to this day. You can read it here.

#internet archive#zine#zines#furry#furries#anthro#furry fandom#furry fandom history#furry history#muck#mu*#text games#text based game#internet history#old internet#computing history#computer history#1993#1990s#90s

457 notes


Text

The first place that “do not fold, spindle or mutilate” was taken off the punch card and unpacked in all its metaphorical glory was the student protests at the University of California-Berkeley in the mid-1960s, what became known as the “Free Speech Movement.” The University of California administration used punch cards for class registration. Berkeley protestors used punch cards as a metaphor, both as a symbol of the “system” — first the registration system and then bureaucratic systems more generally — and as a symbol of alienation.

[…]

Because the punch card symbolically represented the power of the university, it made a suitable point of attack. Some students used the punch cards in subversive ways. An underground newspaper reported:

Some ingenious people (where did they get this arcane knowledge? Isn’t this part of the Mysteries belonging to Administration?) got hold of a number of blank IBM cards, and gimmicked the card-puncher till it spoke no mechanical language, but with its little slots wrote on the cards simple letters: “FSM”, “STRIKE” and so on. A symbol, maybe: the rebels are better at making the machine talk sense than its owners. (“Letter from Berkeley” 12; Draper 113)

Students wore these punch cards like name tags. They were thought sufficiently important symbols of the Free Speech Movement that they were used as illustrations on the album cover of the record that the Movement issued.

Another form of technological subversion was for students to punch their own cards, and slip them in along with the official ones:

Some joker among the campus eggheads fed a string of obscenities into one of Cal’s biggest and best computers — with the result that the lists of new students in various classes just can NOT be read in mixed company. (Berlandt, “IBM Enrolls” 1)

These pranks were the subversion of the technician. The students were indicating their ability to control the machines, and thus, symbolically, the machinery of the university. But it also indicates, like the students’ and administrations’ shared use of the machine metaphor, something of the degree of convergence of student and administration beliefs and methods. This sort of metaphorical technical subversion rarely rises above the level of prank.

Perhaps more radical, or at least with less confused symbolism, were students who destroyed punch cards in symbolic protest: the punch cards that the university used for class registration stood for all that was wrong with the university, and by extension, America. Students at Berkeley and other University of California branches burned their registration punch cards in anti-University protests just as they burned draft cards in anti-Vietnam protests.

—Steven Lubar, “Do Not Fold, Spindle or Mutilate”: A Cultural History of the Punch Card

29 notes


Text

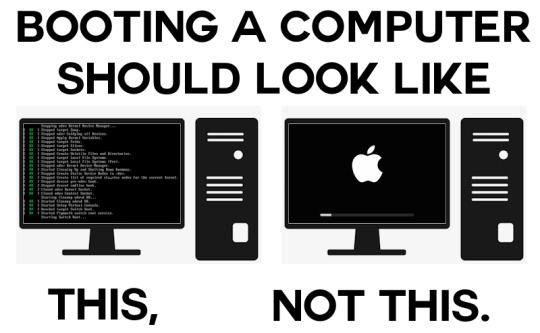

What makes a good boot sequence?

A while ago, I had my first truly viral post on Mastodon. It was this:

You might've seen it. It got almost four hundred boosts and reached beyond Mastodon to Reddit and even 4chan. I even saw an edit with a spinning frog on the left screen. I knew the post would go down well with tech.lgbt, but I never expected it to blow up the way it did.

In this picture, I tried my best to express succinctly what I miss about BIOS motherboards in the age of UEFI. I think looking at a logo and a spinner or loading bar is boring compared to watching a stream of status messages scroll up the screen as hardware is activated, services are started and tasks are run. It takes the soul out of a computer when it hides its computeriness.

I think a lot of people misunderstood my post as expressing a practical preference rather than an aesthetic one, and there were at least a few people who thought it was a Linux fanboy post, which it certainly is not. So here's the long version of a meme I made lol.

Stages

I remember using two family desktop computers before moving over to a family laptop. One ran Windows XP and the other ran Windows 7. Both were from the BIOS era, which meant that when booting, they displayed some status information in white on black with a blinking cursor before loading the operating system. I spent longer in this liminal space on the XP machine because it dual-booted: I needed to select Windows XP from a list of Linux distros when booting it.

I've always liked this. Even as a very little kid I had some sense that what I was seeing was a look back into the history of computing. It felt like a look "behind the scenes" of the main GUI-based operating system into something more primitive. This made computers even more interesting than they already were, to me.

Sequences

The way old computers booted was appealing to my love of all kinds of fixed, repeating sequences. I never skip the intros to TV shows and I get annoyed when my local cinema forgets to show the BBFC ratings card immediately before the film, even though doing so is totally pointless and it's kinda strange that they do that in the first place. Can you tell I'm autistic?

Booting the Windows 7 computer involved this sequence of distinct stages: BIOS white text -> Windows 7 logo with "Starting Windows" below it in the wrong aspect ratio -> switch to the correct resolution with a loading spinner on screen -> login screen.

Skipping any of them would feel wrong to me, because it means missing a step in one of those fixed sequences I love so much. To my brain, every computer that doesn't start with BIOS diagnostic messages is sadly missing that step, and it feels off.

Low-level magic

I am extremely curious about how things work and always have been, so little reminders that a computer has all sorts of complex inner workings and background processes going on are very interesting to me. That's why I prefer boot sequences that expose the low-level magic and build up to the GUI. Starting in the GUI immediately presents it as fundamental, as if it weren't just a pile of abstractions stacked on top of one another. It feels deceptive.

There may actually be some educational and practical value in computers booting in verbose mode by default. Kids using computers for the first time get to see that there's a lot more to their computer than the parts they interact with (sparking curiosity!), and if a boot fails, technicians are better able to diagnose the problem over a phone call with a non-technical person.

Absolute boot sequence perfection

There's still one last thing missing from my family computer's boot sequence, and that's a brief flicker of garbage on screen as VRAM is cleared out. Can't have everything I guess. Slo-mo example from The 8-Bit Guy here:

171 notes


Text

IBM System/360 mainframe assembly line in 1965

80 notes


Text

It was all downhill after 2018.

31 notes


Text

73 notes


Text

"Before it did anything else, the American computer was tasked with finding more efficient ways to kill people."

I'm sure we've nothing to worry about with artificial intelligence...

3 notes


Text

Unlike 99% of human languages, computer languages are designed. Many of them never catch on for real applications. So what makes a computer language successful? Here's one case study...

#software development#software engineering#golang#coding#artificial language#concurrency#google#programming languages#compiler#computing history#mascot#gophers

5 notes


Text

Back in an era when computers were the size of a room and only government agencies and large companies could afford one, IBM was king of the mainframes. But it had a lineup of several incompatible computers, some intended for scientific use (the IBM 7090/7094) and others for commercial use (the IBM 7080 and IBM 7010). IBM wanted a single unified architecture so that software could be exchanged between machines and customers could upgrade from cheaper, lower-powered machines to more powerful ones.

What came out of it was the IBM System/360 line of mainframes (the name refers to the 360 degrees that make up a full circle). It became the dominant mainframe line for decades to come, was cloned by competitors, and its descendants are still being produced to this day.

The IBM System/360 had many features that have since become foundational to modern computing.

An entirely binary number system. While some earlier computers (such as the IBM 7090) used binary, others operated exclusively in decimal, encoded as binary-coded decimal (BCD) with 4 bits for each digit (such as the IBM 7080 and IBM 7010). Others went a step further and could only store the decimal digits 0 to 9 (like the IBM 7070).
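To make the difference concrete, here is a minimal sketch of packing a decimal number as BCD, 4 bits per digit, next to its pure binary value. It shows only the generic BCD idea; the function names are hypothetical, and it does not model the exact character formats the IBM 7080 or 7010 actually used.

```python
def to_bcd(n: int) -> int:
    """Pack a non-negative integer as BCD: each decimal digit gets its own 4-bit nibble."""
    if n < 0:
        raise ValueError("this sketch only handles non-negative numbers")
    packed, shift = 0, 0
    while True:
        n, digit = divmod(n, 10)
        packed |= digit << shift  # place this digit in the next nibble up
        shift += 4
        if n == 0:
            return packed


def from_bcd(packed: int) -> int:
    """Unpack a BCD value, rejecting nibbles 10-15, which BCD never uses."""
    value, place = 0, 1
    while packed:
        digit = packed & 0xF
        if digit > 9:
            raise ValueError("invalid BCD nibble")
        value += digit * place
        place *= 10
        packed >>= 4
    return value


year = 1965
print(hex(to_bcd(year)))  # 0x1965: the hex digits read like the decimal digits
print(hex(year))          # 0x7ad: the same number in pure binary
```

The trade-off is density: 16 bits hold 0 to 9999 in BCD but 0 to 65535 in binary, because six of the sixteen possible nibble values go unused.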

To store textual information, each character was stored in 8 bits, establishing the dominance of the 8-bit byte. Previous systems typically used 6 bits per character and usually supported only a single case of letters. The IBM 7070 didn't provide access to individual bits, and stored each character as 2 decimal digits. The System/360 was also one of the first machines to support the then-new ASCII standard, although it notably provided much better support for IBM's proprietary EBCDIC encodings, which came to dominate mainframe computing.
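Python happens to ship a codec for one EBCDIC code page, `cp037` (US/Canada), so the difference between the two 8-bit encodings is easy to see. Code page 037 is just one of several EBCDIC variants, picked here for convenience; the last line also shows why 6 bits was so cramped for text.

```python
text = "IBM"

# The same three characters in ASCII and in EBCDIC (code page 037)
print(text.encode("ascii").hex(" "))  # 49 42 4d
print(text.encode("cp037").hex(" "))  # c9 c2 d4

# EBCDIC letters are not contiguous: 'I' and 'J' are adjacent letters but not adjacent codes
print("IJ".encode("cp037").hex(" "))  # c9 d1

# A 6-bit code has 64 values, but upper case, lower case and digits alone need 62
print(2 ** 6, 26 + 26 + 10)  # 64 62
```

With only two codes left over for the space and all punctuation, it is no surprise that 6-bit character sets usually dropped lower case.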

Even though it was a 32-bit system, memory was byte-addressed. Previous systems either accessed memory one word at a time (36 bits per word for the IBM 7090, 10 digits plus a sign for the IBM 7070), or had variable-length words that they accessed through their last digits (the IBM 7080 and IBM 7010). The IBM System/360, however, accessed a 32-bit word as 4 bytes, addressed by its lowest byte address.
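A minimal sketch of what byte addressing means for a 32-bit word, using a `bytearray` as a stand-in for memory. It assumes big-endian byte order (the most significant byte at the lowest address), which is what the System/360 used; the helper names `store_word` and `load_word` are hypothetical.

```python
def store_word(memory: bytearray, address: int, value: int) -> None:
    """Store a 32-bit word in the 4 bytes starting at `address`, most significant byte first."""
    memory[address:address + 4] = value.to_bytes(4, "big")


def load_word(memory: bytearray, address: int) -> int:
    """Load the 32-bit word whose lowest byte address is `address`."""
    return int.from_bytes(memory[address:address + 4], "big")


memory = bytearray(16)  # 16 bytes of pretend main storage
store_word(memory, 8, 0x12345678)

print(hex(load_word(memory, 8)))       # 0x12345678: the word is named by address 8
print([hex(b) for b in memory[8:12]])  # ['0x12', '0x34', '0x56', '0x78']
print(hex(memory[9]))                  # 0x34: each byte is individually addressable too
```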

Two's complement arithmetic. Previous machines (even the binary IBM 7090) encoded numbers as sign/magnitude pairs, so for example -3 would be encoded identically to 3 except for the sign bit. Two's complement encoding, now the standard in modern computers, makes signed arithmetic much easier to handle by storing -3 in an n-bit word as 2^n - 3 (for a 32-bit word, 2^32 - 3).
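A minimal sketch comparing the two encodings of -3 in a 32-bit word, and showing the practical payoff: with two's complement, plain unsigned addition already gives the right answer for signed numbers, while sign/magnitude needs extra logic. The 32-bit width matches the System/360 word; the function names are hypothetical.

```python
BITS = 32
MOD = 1 << BITS  # 2**32


def twos_complement(n: int) -> int:
    """Encode n in a 32-bit word; a negative n becomes 2**32 - |n|."""
    return n % MOD


def sign_magnitude(n: int) -> int:
    """Encode n as a sign bit (the top bit) plus the magnitude in the remaining 31 bits."""
    if n < 0:
        return (1 << (BITS - 1)) | -n
    return n


print(hex(twos_complement(-3)))  # 0xfffffffd
print(hex(sign_magnitude(-3)))   # 0x80000003

# Plain unsigned addition, wrapping at 32 bits, as a simple adder circuit would do it
print(hex((twos_complement(3) + twos_complement(-3)) % MOD))  # 0x0: correct
print(hex((sign_magnitude(3) + sign_magnitude(-3)) % MOD))    # 0x80000006: reads as -6, wrong
```

Sign/magnitude also has two zeros (0 and 0x80000000, a "negative zero"), which two's complement avoids.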

69 notes


Text

Fifty-Five Hollywood Online Interactive Kits (Windows 3.1, Hollywood Online, 1994/1995/1996)

You can play them in your browser here.

Edit: my mistake, they're for Windows 3.1, emulated in DOSBox.

#internet archive#windows 3.1#old software#computer history#computing history#movie#movies#movie history#film history#press kit#multimedia#1994#1995#1996#1990s#90s

181 notes


Note

Related to the previous ask: I agree that few retro technologies would strictly improve life if they came back, but are there any technologies you would've liked to see develop in a different direction (e.g. touchscreens never replacing buttons on phones)?

I don't know if it's a wish, exactly, but I've often wondered what computers would have been like if we had not all but abandoned research and development of analog computers.

My job today is working on software for designing computer chips, and although today's chips are unbelievably more complex than the ones I designed myself 30 years ago, the basic technology has not really changed: digital logic, which works by quantizing electrical voltages into two distinct states that we interpret as 1 and 0.

This approach goes back to the earliest days of computer design, but it was not the only approach. Some analog computers were built, usually for modeling physics-based math like ballistic trajectories.

It never caught on, because it has a number of shortcomings, such as error accumulation, and we also lack fundamentals like analog programming languages.

But there are interesting possibilities for a modern analog computer, if those issues can be addressed. In programs that deal with uncertainty, such as probability computations, the whole system would be capable of producing a range of answers at every level, not just binary responses.
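The error-accumulation problem is easy to see in a toy simulation. The sketch below rests on made-up assumptions (each stage adds a little random noise, and the two logic levels are 0.0 and 1.0 with a threshold at 0.5) and passes values through a chain of stages. The "analog" chain lets errors pile up, while the "digital" chain snaps each value back to the nearest clean level after every stage. It illustrates the general idea of signal regeneration, not how any real analog computer worked.

```python
import random


def run_chain(values, stages, noise, quantize):
    """Pass each value through `stages` noisy stages, optionally re-quantizing after each one."""
    out = []
    for v in values:
        for _ in range(stages):
            v += noise()  # every stage adds a small error
            if quantize:
                v = 1.0 if v >= 0.5 else 0.0  # snap back to one of two clean levels
        out.append(v)
    return out


rng = random.Random(42)  # fixed seed so runs are repeatable


def noise():
    return rng.uniform(-0.1, 0.1)  # hypothetical per-stage error, well inside the 0.5 margin


signal = [1.0, 0.0, 1.0, 1.0, 0.0]
analog = run_chain(signal, stages=20, noise=noise, quantize=False)
digital = run_chain(signal, stages=20, noise=noise, quantize=True)

print([round(v, 3) for v in analog])  # drifted away from 0.0 and 1.0
print(digital)                        # [1.0, 0.0, 1.0, 1.0, 0.0]
```

The price of that robustness is exactly what the answer above points at: by snapping to two levels after every stage, the digital chain throws away every in-between value.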

31 notes


Text


#konrad zuse#computers#computing history#Z1 computer#z3 Computer#german computer science#cybernetics#cybernetic#Youtube

3 notes


Text

Wrong: Ada Lovelace invented computer science and immediately tried to use it to cheat at gambling because she was Lord Byron's daughter.

Right: Ada Lovelace invented computer science and immediately tried to use it to cheat at gambling because that was the closest you could get in 1850 to being a Super Mario 64 speedrunner.

#history#computers#computer science#ada lovelace#memes#gaming#video games#super mario 64#speedrunning

65K notes


Text

I was a Java noob

At one time, every user was a newbie. Facebook recently reminded me of something I wrote 11 years ago today, when I was learning Java:

I'm still trying to wrap my mind around the notion that Java (apparently) implements write-once semantics for references and primitive types but lacks read-only semantics for methods or objects. I'm also wishing Java collections provided a copy constructor for iterators. Despite these flaws, its advantages over C++ are stark.

Looking back, here are my reactions:

In Java, the natural way to implement read-only semantics for methods and objects is to define a read-only interface. This is what the JOML library does, for instance. For each mutable class (Matrix4f, Quaternionf, Vector3d) it defines an interface (Matrix4fc, Quaternionfc, Vector3dc) with read-only semantics. I wish I'd known that trick in 2012.

Some of Java's advantages are historical. Gosling in 1995 foresaw the importance of threads and URLs, so he built them into the language. Stroustrup in 1982 did not foresee their importance. (Believe it or not, URLs weren't standardized until 1994!)

Some of Java's advantages are because it didn't try as hard to maintain compatibility with C. For instance, Stroustrup was doubtless aware of the portability headaches in C, such as "int" having different limits on different architectures, but it seemed more important to be able to re-use existing C code in C++ than to fix fundamental flaws in the language.

Not sure why I wanted a copy constructor for iterators. Nowadays I rarely use iterators directly, thanks to the "enhanced for loop" added in Java SE 5. That feature was 8 years old when I learned Java. Not sure why I wasn't using it.

I love Java even more than I did in 2012. Nowadays I dread working in C/C++.

#programming languages#java#computing history#c++ language#c++#facebook#software development#newbie#coding

4 notes
