#copy module in Ansible


Ansible Copy: Automated file copy module


There is no doubt that Ansible is an excellent tool in the home lab, production, and any other environment you want to automate. It provides configuration management capabilities, and the learning curve isn’t too steep. There is a module, in particular, we want to look at, the Ansible copy module, and see how we can use it to copy files between a local machine and a remote server. Table of…
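As a minimal sketch of the kind of task this describes (the host group `webservers` and every path below are hypothetical, not taken from the original post), the first task pushes a local file to the remote hosts with explicit ownership and permissions, and the second uses the related fetch module to pull a file back to the control node:

```yaml
---
- name: Copy files between the control node and remote hosts
  hosts: webservers              # hypothetical inventory group
  become: true
  tasks:
    - name: Copy a local config file to the remote host
      ansible.builtin.copy:
        src: files/app.conf      # hypothetical path, relative to the playbook
        dest: /etc/app.conf      # hypothetical destination
        owner: root
        group: root
        mode: "0644"
        backup: true             # keep a timestamped copy of any file that gets replaced

    - name: Fetch a log file from the remote host to the control node
      ansible.builtin.fetch:
        src: /var/log/messages
        dest: fetched/           # stored as fetched/<hostname>/var/log/messages
```

The copy module always works from the control node (or from the remote host itself with `remote_src: true`) to the remote host, while fetch works in the opposite direction.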


Migrating Virtual Machines to Red Hat OpenShift Virtualization with Ansible Automation Platform

As organizations accelerate their cloud-native journey, traditional virtualization platforms are increasingly being reevaluated in favor of more agile and integrated solutions. Red Hat OpenShift Virtualization offers a unique advantage: the ability to manage both containerized workloads and virtual machines (VMs) on a single, unified platform. When combined with the Ansible Automation Platform, this migration becomes not just feasible—but efficient, repeatable, and scalable.

In this blog, we’ll explore how to simplify and streamline the process of migrating existing virtual machines to OpenShift Virtualization using automation through Ansible.

Why Migrate to OpenShift Virtualization?

Red Hat OpenShift Virtualization extends Kubernetes to run VMs alongside containers, allowing teams to:

Reduce infrastructure complexity

Centralize workload management

Modernize legacy apps without rewriting code

Streamline DevOps across VM and container environments

By enabling VMs to live inside Kubernetes-native environments, you gain powerful benefits such as integrated CI/CD pipelines, unified observability, GitOps, and more.

The Migration Challenge

Migrating VMs from platforms like VMware vSphere or Red Hat Virtualization (RHV) into OpenShift isn’t just a “lift and shift.” You need to:

Map VM configurations to KubeVirt-compatible specs

Convert and move disk images

Preserve networking and storage mappings

Maintain workload uptime and minimize disruption

Manual migrations can be error-prone and time-consuming—especially at scale.

Enter Ansible Automation Platform

Ansible simplifies complex IT tasks through agentless automation, and its ecosystem of certified collections supports a wide range of infrastructure—from VMware and RHV to OpenShift.

Using Ansible Automation Platform, you can:

✅ Automate inventory collection from legacy VM platforms

✅ Pre-validate target OpenShift clusters

✅ Convert and copy VM disk images

✅ Create KubeVirt VM definitions dynamically

✅ Schedule and execute cutovers at scale

High-Level Workflow

Here’s what a typical Ansible-driven VM migration to OpenShift looks like:

Discovery Phase

Use Ansible collections (e.g., community.vmware, ovirt.ovirt) to gather VM details

Build an inventory of VMs to migrate

Preparation Phase

Prepare OpenShift Virtualization environment

Verify necessary storage and network configurations

Upload VM images to appropriate PVCs using virtctl or automated pipelines

Migration Phase

Generate KubeVirt-compatible VM manifests

Create VMs in OpenShift using k8s Ansible modules

Validate boot sequences and networking

Post-Migration

Test workloads

Update monitoring/backup policies

Decommission legacy VM infrastructure (if applicable)
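The migration phase above can be sketched roughly as follows. This is an illustration under stated assumptions, not the playbook used in the post: the VM name, namespace, PVC name, and sizing are hypothetical, and it assumes the kubernetes.core collection, the Python kubernetes client, and a valid kubeconfig are available on the control node.

```yaml
---
- name: Create a migrated VM in OpenShift Virtualization
  hosts: localhost
  connection: local
  gather_facts: false
  vars:
    vm_name: legacy-app-01            # hypothetical VM name
    vm_namespace: migrated-vms        # hypothetical namespace
    vm_disk_pvc: legacy-app-01-disk   # hypothetical PVC that already holds the imported disk
  tasks:
    - name: Create the KubeVirt VirtualMachine object
      kubernetes.core.k8s:
        state: present
        definition:
          apiVersion: kubevirt.io/v1
          kind: VirtualMachine
          metadata:
            name: "{{ vm_name }}"
            namespace: "{{ vm_namespace }}"
          spec:
            runStrategy: Always       # start the VM and keep it running
            template:
              spec:
                domain:
                  cpu:
                    cores: 2
                  resources:
                    requests:
                      memory: 4Gi
                  devices:
                    disks:
                      - name: rootdisk
                        disk:
                          bus: virtio   # assumes the guest has virtio drivers
                    interfaces:
                      - name: default
                        masquerade: {}
                networks:
                  - name: default
                    pod: {}
                volumes:
                  - name: rootdisk
                    persistentVolumeClaim:
                      claimName: "{{ vm_disk_pvc }}"
```

In a real migration the values under `vars` would come from the inventory built in the discovery phase, so the same task can be looped over every VM.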

Tools & Collections Involved

Here are some key Ansible resources that make the migration seamless:

Red Hat Ansible Certified Collections:

kubernetes.core – for interacting with OpenShift APIs

community.vmware – for interacting with vSphere

ovirt.ovirt – for RHV environments

Custom Roles/Playbooks – for automating:

Disk image conversions (qemu-img)

PVC provisioning

VM template creation
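For the disk image conversion item, a custom task might look something like the sketch below. The file paths are hypothetical, and it assumes qemu-img is installed on the host doing the conversion.

```yaml
---
- name: Convert an exported VMware disk for KubeVirt
  hosts: localhost
  connection: local
  gather_facts: false
  vars:
    source_disk: /var/tmp/export/legacy-app-01.vmdk    # hypothetical path
    target_disk: /var/tmp/export/legacy-app-01.qcow2   # hypothetical path
  tasks:
    - name: Convert the VMDK image to qcow2
      ansible.builtin.command:
        argv:
          - qemu-img
          - convert
          - "-f"
          - vmdk
          - "-O"
          - qcow2
          - "{{ source_disk }}"
          - "{{ target_disk }}"
        creates: "{{ target_disk }}"   # skip the conversion when the target already exists
```

The `creates` argument is what makes the task safe to re-run: once the converted file exists, Ansible reports the task as unchanged instead of converting again.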

Real-World Use Case

One of our enterprise customers needed to migrate over 100 virtual machines from VMware to OpenShift Virtualization. With Ansible Automation Platform, we:

Automated 90% of the migration process

Reduced downtime windows to under 5 minutes per VM

Built a reusable framework for future workloads

This enabled them to consolidate management under OpenShift, improve agility, and accelerate modernization without rewriting legacy apps.

Final Thoughts

Migrating VMs to OpenShift Virtualization doesn’t have to be painful. With the Ansible Automation Platform, you can build a robust, repeatable migration framework that reduces risk, minimizes downtime, and prepares your infrastructure for a hybrid future.

At HawkStack Technologies, we specialize in designing and implementing Red Hat-based automation and virtualization solutions. If you’re looking to modernize your VM estate, talk to us—we’ll help you build an automated, enterprise-grade migration path.

🔧 Ready to start your migration journey?

Contact us today for a personalized consultation or a proof-of-concept demo using Ansible + OpenShift Virtualization. Visit www.hawkstack.com.

Master Ansible: Automation & DevOps with Real Projects

1. Introduction

Ansible is a powerful open-source tool used for IT automation, configuration management, and application deployment. In the realm of DevOps, automation is crucial for streamlining operations, reducing errors, and speeding up processes. This article delves into the world of Ansible, exploring its capabilities and demonstrating how it can transform your DevOps practices through real-world projects.

2. Getting Started with Ansible

Ansible Installation: To get started with Ansible, you first need to install it on a control node. The control node runs on Linux, macOS, and other UNIX-like systems; Windows is supported as a managed target rather than as a control node. Installation is straightforward, typically involving a simple command like pip install ansible for Python environments. Once installed, you can verify the installation with ansible --version.

Basic Commands and Concepts: Ansible uses simple, human-readable YAML files for automation, making it accessible even to those new to coding. The primary components include inventory files, playbooks, modules, and plugins. An inventory file lists all the hosts you want to manage, while playbooks define the tasks to execute on those hosts.

3. Core Components of Ansible

Inventory Files: Inventory files are a cornerstone of Ansible’s architecture. They define the hosts and groups of hosts on which Ansible commands, modules, and playbooks operate. These files can be static or dynamic, allowing for flexible management of environments.

Playbooks: Playbooks are YAML files that contain a series of tasks to be executed on managed nodes. They are the heart of Ansible’s configuration management, enabling users to describe the desired state of their systems.

Modules and Plugins: Modules are reusable, standalone scripts that perform specific tasks such as installing packages or managing services. Plugins extend Ansible’s functionality, providing additional capabilities like logging, caching, and connection management.

4. Ansible Configuration Management

Managing Files and Directories: Ansible makes it easy to manage files and directories across multiple systems. You can use the copy module to transfer files, the template module to manage configuration files, and the file module to manage permissions and ownership.
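A short sketch of the three modules working together might look like this. All paths and file names are hypothetical, and the template file is assumed to exist next to the playbook.

```yaml
---
- name: Manage files and directories
  hosts: all
  become: true
  tasks:
    - name: Ensure the application directory exists with the right ownership
      ansible.builtin.file:
        path: /opt/myapp                 # hypothetical path
        state: directory
        owner: root
        group: root
        mode: "0755"

    - name: Render a configuration file from a Jinja2 template
      ansible.builtin.template:
        src: templates/myapp.conf.j2     # hypothetical template
        dest: /opt/myapp/myapp.conf
        mode: "0640"

    - name: Copy a static file as-is
      ansible.builtin.copy:
        src: files/motd                  # hypothetical file
        dest: /etc/motd
        mode: "0644"
```

The directory task comes first so that the template task has somewhere to write; Ansible runs tasks in the order they are listed.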

Automating User Management: User management is a common task in system administration. With Ansible, you can automate the creation, deletion, and modification of user accounts and groups, ensuring consistent user management across your infrastructure.
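As an illustration, a play along these lines would create one account and remove another; the user and group names are made up for the example.

```yaml
---
- name: Manage users and groups
  hosts: all
  become: true
  tasks:
    - name: Ensure the deploy group exists
      ansible.builtin.group:
        name: deploy             # hypothetical group
        state: present

    - name: Ensure the deploy user exists
      ansible.builtin.user:
        name: deploy             # hypothetical user
        group: deploy
        shell: /bin/bash
        create_home: true
        state: present

    - name: Remove a departed user's account and home directory
      ansible.builtin.user:
        name: olduser            # hypothetical user
        state: absent
        remove: true
```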

5. Ansible for Application Deployment

Deploying Web Applications: Ansible excels at deploying web applications. You can automate the deployment of entire web stacks, including web servers, application servers, and databases. Playbooks can handle everything from installing necessary packages to configuring services and deploying code.

Managing Dependencies: Managing dependencies is crucial for successful application deployment. Ansible can automate the installation of dependencies, ensuring that all required packages and libraries are available on the target systems.

6. Network Automation with Ansible

Configuring Network Devices: Ansible’s network automation capabilities allow you to configure routers, switches, firewalls, and other network devices. Using modules designed for network management, you can automate tasks like interface configuration, VLAN management, and firmware updates.

Automating Network Security: Security is a top priority in network management. Ansible can automate the configuration of security policies, firewalls, and intrusion detection systems, helping to protect your network from threats.

7. Ansible Roles and Galaxy

Creating and Using Roles: Roles are a powerful way to organize and reuse Ansible code. By structuring your playbooks into roles, you can simplify your automation tasks and make your code more modular and maintainable.

Sharing Roles with Ansible Galaxy: Ansible Galaxy is a community hub for sharing Ansible roles. It allows you to find and reuse roles created by others, accelerating your automation projects and promoting best practices.

8. Advanced Ansible Techniques

Ansible Vault for Secrets: Ansible Vault is a feature that allows you to securely store and manage sensitive data, such as passwords and API keys. By encrypting this information, Ansible Vault helps protect your sensitive data from unauthorized access.

Using Conditionals and Loops: Conditionals and loops in Ansible playbooks enable more dynamic and flexible automation. You can use conditionals to execute tasks based on certain conditions and loops to perform repetitive tasks efficiently.
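A small sketch of both features, with an arbitrary package list chosen only for illustration: `loop` repeats a task once per item, and `when` runs a task only if its expression is true for the current host.

```yaml
---
- name: Conditionals and loops
  hosts: all
  become: true
  tasks:
    - name: Install a list of packages, one per loop iteration
      ansible.builtin.package:
        name: "{{ item }}"
        state: present
      loop:
        - git
        - curl
        - rsync

    - name: Install Apache only on Red Hat family hosts
      ansible.builtin.package:
        name: httpd
        state: present
      when: ansible_facts['os_family'] == "RedHat"
```

The `when` expression relies on gathered facts, so fact gathering (the default) must stay enabled for this play.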

9. Real-World Ansible Projects

Automating CI/CD Pipelines: Continuous Integration and Continuous Deployment (CI/CD) are key components of modern DevOps practices. Ansible can automate the entire CI/CD pipeline, from code integration and testing to deployment and monitoring, ensuring fast and reliable software delivery.

Infrastructure as Code with Ansible: Infrastructure as Code (IaC) is a methodology for managing and provisioning computing infrastructure through machine-readable scripts. Ansible supports IaC by enabling the automation of infrastructure setup, configuration, and management.

10. Integrating Ansible with Other Tools

Ansible and Jenkins: Jenkins is a popular open-source automation server used for building, testing, and deploying software. Ansible can be integrated with Jenkins to automate post-build deployment tasks, making it a powerful addition to the CI/CD workflow.

Ansible and Kubernetes: Kubernetes is a container orchestration platform that automates the deployment, scaling, and management of containerized applications. Ansible can be used to manage Kubernetes clusters, automate application deployment, and handle configuration management.

11. Troubleshooting Ansible

Common Errors and Solutions: Even with its simplicity, Ansible can encounter errors during playbook execution. Common issues include syntax errors in YAML files, missing modules, and incorrect inventory configurations. Knowing how to troubleshoot these errors is essential for smooth automation.

Debugging Playbooks: Ansible provides several debugging tools and strategies, such as the -v flag for verbose output and the debug module for printing variables and task outputs. These tools help identify and resolve issues in your playbooks.
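A minimal sketch of the debug module in use; the `uptime` command and the variable name `uptime_result` are arbitrary choices for the example.

```yaml
---
- name: Debugging example
  hosts: all
  gather_facts: false
  tasks:
    - name: Capture the output of a command
      ansible.builtin.command: uptime
      register: uptime_result
      changed_when: false          # a read-only command never changes the host

    - name: Print part of the registered variable
      ansible.builtin.debug:
        var: uptime_result.stdout

    - name: Print a message only when run with -vv or higher
      ansible.builtin.debug:
        msg: "Return code was {{ uptime_result.rc }}"
        verbosity: 2
```

Running the playbook with `-vv` shows the last message; at the default verbosity that task is skipped.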

12. Security and Compliance with Ansible

Automating Security Patches: Keeping systems up to date with the latest security patches is crucial for maintaining security. Ansible can automate the patch management process, ensuring that all systems are consistently updated and secure.
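One possible shape for such a patching play is sketched below. It assumes RHEL-family hosts that use dnf; other distributions would need their own package module.

```yaml
---
- name: Apply security updates
  hosts: all
  become: true
  serial: 2                      # patch two hosts at a time to limit the blast radius
  tasks:
    - name: Install only updates that are marked as security-related
      ansible.builtin.dnf:
        name: "*"
        state: latest
        security: true
```

The `serial` value is an arbitrary example; it controls how many hosts are patched in each batch.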

Compliance Checks: Compliance with industry standards and regulations is a vital aspect of IT management. Ansible can automate compliance checks, providing reports and remediations to ensure your systems meet required standards.

13. Ansible Best Practices

Writing Readable Playbooks: Readable playbooks are easier to maintain and troubleshoot. Using descriptive names for tasks, organizing your playbooks into roles, and including comments can make your Ansible code more understandable and maintainable.

Version Control and Collaboration: Version control systems like Git are essential for managing changes to your Ansible codebase. They facilitate collaboration among team members, allow for version tracking, and help avoid conflicts.

14. Future of Ansible in DevOps

Emerging Trends: As DevOps practices evolve, Ansible continues to adapt and grow. Emerging trends include increased focus on security automation, integration with AI and machine learning for smarter automation, and expanded support for hybrid and multi-cloud environments.

Automation and Scripting in Enterprise Linux: Ansible, Bash, and Python

Automation and scripting are crucial in managing enterprise Linux environments efficiently. They help in streamlining administrative tasks, reducing errors, and saving time. In this post, we will explore three powerful tools for automation and scripting in enterprise Linux: Ansible, Bash, and Python.

1. Ansible: Simplifying Configuration Management

Overview: Ansible is an open-source automation tool used for configuration management, application deployment, and task automation. It uses a simple, human-readable language called YAML for its playbooks, making it easy to write and understand.

Key Features:

Agentless: No need to install any software on target machines.

Idempotent: Ensures that operations are repeatable and produce the same result every time.

Extensible: Supports a wide range of modules for different tasks.

Example Use Case: Deploying a Web Server

    ---
    - name: Install and configure Apache web server
      hosts: webservers
      become: yes
      tasks:
        - name: Install Apache
          yum:
            name: httpd
            state: present

        - name: Start and enable Apache service
          service:
            name: httpd
            state: started
            enabled: yes

        - name: Deploy index.html
          copy:
            src: /path/to/local/index.html
            dest: /var/www/html/index.html

Benefits:

Easy to set up and use.

Scales efficiently across multiple systems.

Reduces the complexity of managing large infrastructures.

2. Bash: The Power of Shell Scripting

Overview: Bash is the default shell in many Linux distributions and is widely used for scripting and automation tasks. Bash scripts can automate routine tasks, perform system monitoring, and manage system configurations.

Key Features:

Ubiquitous: Available on virtually all Linux systems.

Flexible: Can combine various command-line utilities.

Interactive: Useful for both command-line operations and scripting.

Example Use Case: Automated Backup Script

    #!/bin/bash

    BACKUP_SRC="/home/user/data"
    BACKUP_DEST="/backup"
    TIMESTAMP=$(date +%Y%m%d%H%M%S)
    BACKUP_NAME="backup_$TIMESTAMP.tar.gz"

    # Create a compressed backup; quoting keeps paths with spaces intact
    if tar -czf "$BACKUP_DEST/$BACKUP_NAME" "$BACKUP_SRC"; then
        # Print the result
        echo "Backup successful: $BACKUP_NAME"
    else
        echo "Backup failed"
    fi

Benefits:

Directly interacts with the system and its utilities.

Excellent for quick and simple tasks.

Easily integrates with cron jobs for scheduled tasks.

3. Python: Advanced Scripting and Automation

Overview: Python is a powerful, high-level programming language known for its readability and versatility. It is extensively used in system administration, automation, web development, and data analysis.

Key Features:

Extensive Libraries: Rich ecosystem of modules and packages.

Readability: Clean and easy-to-understand syntax.

Cross-Platform: Works on various operating systems.

Example Use Case: Monitoring Disk Usage

    import shutil

    def check_disk_usage(disk):
        # shutil.disk_usage returns total, used, and free space in bytes
        total, used, free = shutil.disk_usage(disk)
        print(f"Disk usage on {disk}:")
        print(f" Total: {total // (2**30)} GB")
        print(f" Used: {used // (2**30)} GB")
        print(f" Free: {free // (2**30)} GB")
        # Warn when less than 20% of the disk is free
        if free / total < 0.2:
            print("Warning: Less than 20% free space remaining!")
        else:
            print("Sufficient free space available.")

    check_disk_usage("/")

Benefits:

Great for complex automation tasks and scripts.

Strong support for integrating with various APIs and web services.

Ideal for data manipulation and processing tasks.

Conclusion

Each of these tools—Ansible, Bash, and Python—offers unique strengths and is suited to different types of tasks in an enterprise Linux environment. Ansible excels in configuration management and large-scale automation, Bash is perfect for quick and simple scripting tasks, and Python shines in complex automation, data processing, and integration tasks.

By leveraging these tools, organizations can achieve greater efficiency, consistency, and reliability in their IT operations. Whether you are deploying applications, managing configurations, or automating routine tasks, mastering these tools will significantly enhance your capabilities as a Linux system administrator.

For more details click www.qcsdclabs.com


How to Install and Test Ansible on Linux CentOS

Introduction

Ansible is open source automation software written in Python. It runs on UNIX-like systems and can provision and configure both UNIX-like and Windows systems. Unlike many other automation tools, Ansible does not require an agent on the target system. It uses an SSH connection and the target's Python interpreter to perform the given tasks. Ansible can be installed on a cloud server to manage other cloud servers from a central location, or on a personal system to manage cloud or on-premises systems.

Prerequisites

Server with at least 1GB RAM and CentOS 7 installed.

You must be logged in via SSH as sudo or root user. This tutorial assumes that you are logged in as a sudo user.

Step 1: Update the System

Update the system with the latest packages and security patches using this command.

sudo yum -y update

Step 2: Install EPEL Repository

EPEL, or Extra Packages for Enterprise Linux, is a free, community-based repository that provides many extra open source software packages that are not available in the default YUM repositories.

We need to install the EPEL repository because the version of Ansible available in the default YUM repository is very old.

sudo yum -y install epel-release

Update the repository cache by running the command.

sudo yum -y update

Step 3: Install Ansible

Run the following command to install the latest version of Ansible.

sudo yum -y install ansible

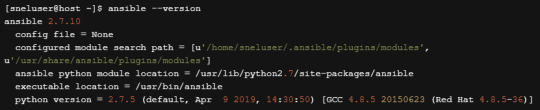

You can check if Ansible is installed successfully by finding its version.

ansible --version

You should see a similar output.

Ansible is now installed on your server.

Step 4: Testing Ansible (Optional)

Now that we have Ansible installed, let’s play around to see some basic uses of this software. This step is optional.

Suppose we have three different servers that we wish to manage using Ansible. In this example, I have created another three CentOS 7 cloud servers with username root and password authentication. The IP addresses assigned to my cloud servers are:

192.168.0.101

192.168.0.102

192.168.0.103

You can test with fewer servers.

Step 4.1 Generate SSH Key Pair

Although Ansible can connect to remote hosts using a password, it is recommended to set up key-based authentication for easy and secure logins.

Generate an SSH key pair on your system by running the command.

ssh-keygen

You will be prompted to provide a name and a passphrase for the key pair. Accept the default name and an empty passphrase by pressing the Enter key a few times. You should see the following output.

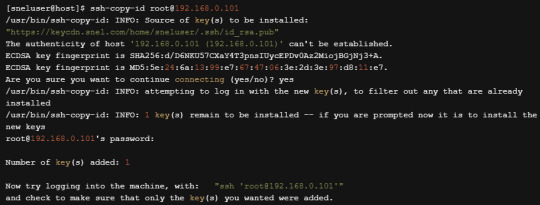

Step 4.2 Copy Public Key into Target Server

Now that our key pair is ready, we need to copy the public key into our target systems. Run the following command to copy the public key into the first server.

ssh-copy-id root@192.168.0.101

Type yes when prompted to trust the target host’s fingerprint. Enter the password of the root account when prompted. The output will be similar to what is shown below.

You can now try to log in to the target system over SSH.

It should log you in without asking for a password. Repeat step 4.2 for the remaining two hosts.

Step 4.3 Configure Ansible Hosts

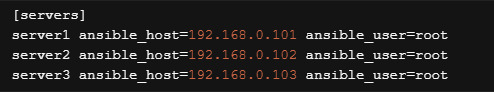

By default, Ansible reads the hosts file from /etc/ansible/hosts. Open the hosts file in an editor.

sudo vi /etc/ansible/hosts

Replace the existing content with the following lines. Make sure to substitute your actual IP addresses and username.

Save the file and exit from the editor.

Step 4.4 Connect using Ansible

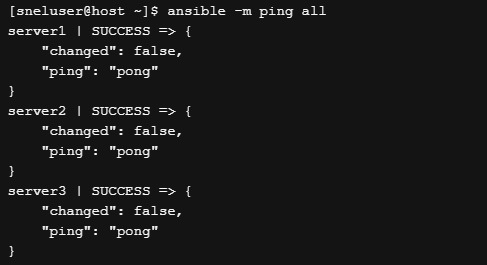

We have done the minimal configuration required to connect to the remote machines using Ansible. Run the following command to ping the hosts using Ansible’s ping module.

ansible -m ping all

If your server can successfully connect to the remote hosts, you should see the following output.

You can also run a shell command on all the servers in parallel using Ansible’s shell module.

ansible -m shell -a 'yum -y update' all

You can also run your command on a single host if you wish.

ansible -m shell -a 'yum -y update' server1
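Once the ad-hoc commands work, the same steps can be captured in a playbook so they are repeatable. The following is a sketch rather than part of the original tutorial: the file name `update.yaml` is hypothetical, and it uses the yum module instead of the shell module so that Ansible can report whether anything actually changed.

```yaml
---
- name: Check connectivity and update packages
  hosts: all
  tasks:
    - name: Verify that Ansible can reach the host
      ping:

    - name: Update all packages to the latest version
      yum:
        name: "*"
        state: latest
```

Saved as `update.yaml`, it would be run with `ansible-playbook update.yaml`, or limited to one host with `ansible-playbook update.yaml --limit server1`.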

Conclusion

In this detailed tutorial, we learned how to install Ansible on CentOS 7. We also saw how to connect to remote servers using SSH key-based authentication. We ran some simple Ansible commands to connect to our servers. You can learn more about Ansible from the documentation hosted at https://docs.ansible.com/

Managing VMware vSphere Virtual Machines with Ansible

I was tasked with the extraordinarily daunting task of provisioning a test environment on vSphere. I knew that the install was going to fail on me multiple times and I was in dire need of a few things:

Start over from inception - basically a blank sheet of paper

Create checkpoints and be able to revert to those checkpoints fairly easily

Do a ton of customization in the guest OS

The Anti-Pattern

I’ve been enslaved with vSphere in previous jobs. It’s a handy platform for various things. I was probably the first customer to run ESX on NetApp NFS fifteen years ago. I can vividly remember that already back then I was incredibly tired of “right clicking” in vCenter and I wrote extensive automation with the Perl bindings and SDKs that were available at the time. I get a rash if I have to do something manually in vCenter and I see it as nothing but an API endpoint. Manual work in vCenter is the worst TOIL and the anti-pattern of modern infrastructure management.

Hello Ansible

I manage my own environment, which is KVM based, entirely with Ansible. Sure, it’s statically assigned virtual machines but surprisingly, it works just great as I’m just deploying clusters where HA is dealt with elsewhere. When this project that I’m working on came up, I frantically started to map out everything I needed to do in the Ansible docs. Not too surprisingly, Ansible makes everything a breeze. You’ll find the VMware vSphere integration in the “Cloud Modules” section.

Inception

I needed to start with something. That includes some right-clicking in vCenter. I uploaded this vmdk file into one of the datastores and manually configured a Virtual Machine template with the uploaded vmdk file. This I could bear with, as I only had to do it once. Surprisingly, I could not find a CentOS 7 OVA/OVF file that I could deploy from (CentOS was a requirement for the project; I’m an Ubuntu-first type of guy and they have plenty of images readily available).

Once you have that Virtual Machine template baked, step away from vCenter, logout, close tab. Don’t look back (remember the name of the template!)

I’ve stashed the directory tree on GitHub. The Ansible pattern I prefer is that you use an ansible.cfg local to what you’re doing, playbooks to carry out your tasks and apply roles as necessary. I’m not going through the minutiae of getting Ansible installed and all that jazz. The VMware modules have numerous Python dependencies and they will tell you what is missing; simply pip install <whatever is complaining> to get rolling.

Going forward, let's assume:

    git clone https://github.com/NimbleStorage/automation-examples
    cd automation-examples/cloud/vmware-vcenter

There are some variables that need to be customized and tailored to any specific environment. The file that needs editing is host_vars/localhost, which needs to be copied from host_vars/localhost-dist. Mine looks similar to this:

    ---
    vcenter_hostname: 192.168.1.1
    vcenter_username: [email protected]
    vcenter_password: "HPE Cyber Security Will See This"
    vcenter_datastore: MY-DSX
    vcenter_folder: /
    vcenter_template: CentOS7
    vcenter_datacenter: MY-DC
    vcenter_resource_pool: MY-RP

    # Misc config
    machine_group: machines
    machine_initial_user: root
    machine_initial_password: osboxes.org

    # Machine config
    machine_memory_mb: 2048
    machine_num_cpus: 2
    machine_num_cpu_cores_per_socket: 1
    machine_networks:
      - name: VM Network
      - name: Island Network
    machine_disks:
      - size_gb: 500
        type: thinProvisioned
        datastore: "{{ vcenter_datastore }}"

I also have a fairly basic inventory that I’m working with (in hosts):

    [machines]
    tme-foo-m1
    tme-foo-m2
    tme-foo-m3
    tme-foo-m4
    tme-foo-m5
    tme-foo-m6

Tailor your config and let’s move on.

Note: The network I’m sitting on is providing DHCP services with permanent leases and automatic DNS registration, so I don’t have to deal with IP addressing. If static IP addressing is required, feel free to modify to your liking but I wouldn’t even know where to begin as the vmdk image I’m using as a starter is non-customizable.

Deploy Virtual Machines

First things first, provision the virtual machines. I intentionally didn’t want to screw around with VM snapshots to clone from. Full copies of each VM are made. I’m running this on the most efficient VMware storage array in the biz so I don’t really have to care that much about space.

Let’s deploy!

    $ ansible-playbook deploy.yaml

    PLAY [localhost] ****************************************************

    TASK [Gathering Facts] *********************************************************************
    Monday 04 November 2019 04:12:51 +0000 (0:00:00.096) 0:00:00.096 *******
    ok: [localhost]

    TASK [deploy : Create a virtual machine from a template] **************************************************************
    Monday 04 November 2019 04:12:52 +0000 (0:00:00.916) 0:00:01.012 *******
    changed: [localhost -> localhost] => (item=tme-foo-m1)
    changed: [localhost -> localhost] => (item=tme-foo-m2)
    changed: [localhost -> localhost] => (item=tme-foo-m3)
    changed: [localhost -> localhost] => (item=tme-foo-m4)
    changed: [localhost -> localhost] => (item=tme-foo-m5)
    changed: [localhost -> localhost] => (item=tme-foo-m6)

    PLAY RECAP **********************************************************
    localhost : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

    Monday 04 November 2019 04:31:37 +0000 (0:18:45.897) 0:18:46.910 *******
    =====================================================================
    deploy : Create a virtual machine from a template ---------- 1125.90s
    Gathering Facts ----------------------------------------------- 0.92s
    Playbook run took 0 days, 0 hours, 18 minutes, 46 seconds
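The deploy role itself lives in the repository and is not reproduced in this post. As a rough sketch of what a task like "Create a virtual machine from a template" could look like, assuming the community.vmware collection is installed and reusing the variable names from host_vars/localhost above (`validate_certs: false` is an assumption for a lab vCenter with a self-signed certificate):

```yaml
---
- name: Deploy virtual machines from a template
  hosts: localhost
  gather_facts: false
  tasks:
    - name: Create a virtual machine from a template
      community.vmware.vmware_guest:
        hostname: "{{ vcenter_hostname }}"
        username: "{{ vcenter_username }}"
        password: "{{ vcenter_password }}"
        validate_certs: false          # assumption: self-signed lab certificate
        datacenter: "{{ vcenter_datacenter }}"
        folder: "{{ vcenter_folder }}"
        resource_pool: "{{ vcenter_resource_pool }}"
        template: "{{ vcenter_template }}"
        name: "{{ item }}"
        state: poweredon
        hardware:
          memory_mb: "{{ machine_memory_mb }}"
          num_cpus: "{{ machine_num_cpus }}"
          num_cpu_cores_per_socket: "{{ machine_num_cpu_cores_per_socket }}"
        networks: "{{ machine_networks }}"
        disk: "{{ machine_disks }}"
        wait_for_ip_address: true
      loop: "{{ groups[machine_group] }}"   # one clone per host in the inventory group
      delegate_to: localhost
```

The loop over `groups[machine_group]` is what produces one `item=` line per machine in output like the above. The actual role in the repository may be structured differently.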

In this phase we have machines deployed. They’re not very useful yet as I want to add my current SSH key from the machine I’m managing the environment from. Copy roles/prepare/files/authorized_keys-dist to roles/prepare/files/authorized_keys:

cp roles/prepare/files/authorized_keys-dist roles/prepare/files/authorized_keys

Add your public key to roles/prepare/files/authorized_keys. Also configure machine_user to match the username you’re managing your machines from.

Now, let’s prep the machines:

    $ ansible-playbook prepare.yaml

    PLAY [localhost] ****************************************************

    TASK [Gathering Facts] *********************************************************************
    Monday 04 November 2019 04:50:36 +0000 (0:00:00.102) 0:00:00.102 *******
    ok: [localhost]

    TASK [prepare : Gather info about VM] *********************************************************************
    Monday 04 November 2019 04:50:37 +0000 (0:00:00.889) 0:00:00.991 *******
    ok: [localhost -> localhost] => (item=tme-foo-m1)
    ok: [localhost -> localhost] => (item=tme-foo-m2)
    ok: [localhost -> localhost] => (item=tme-foo-m3)
    ok: [localhost -> localhost] => (item=tme-foo-m4)
    ok: [localhost -> localhost] => (item=tme-foo-m5)
    ok: [localhost -> localhost] => (item=tme-foo-m6)

    TASK [prepare : Register IP in inventory] *********************************************************************
    Monday 04 November 2019 04:50:41 +0000 (0:00:04.191) 0:00:05.183 *******
    <very large blurb redacted>

    TASK [prepare : Test VM] *********************************************************************
    Monday 04 November 2019 04:50:41 +0000 (0:00:00.157) 0:00:05.341 *******
    ok: [localhost -> None] => (item=tme-foo-m1)
    ok: [localhost -> None] => (item=tme-foo-m2)
    ok: [localhost -> None] => (item=tme-foo-m3)
    ok: [localhost -> None] => (item=tme-foo-m4)
    ok: [localhost -> None] => (item=tme-foo-m5)
    ok: [localhost -> None] => (item=tme-foo-m6)

    TASK [prepare : Create ansible user] *********************************************************************
    Monday 04 November 2019 04:50:46 +0000 (0:00:04.572) 0:00:09.914 *******
    changed: [localhost -> None] => (item=tme-foo-m1)
    changed: [localhost -> None] => (item=tme-foo-m2)
    changed: [localhost -> None] => (item=tme-foo-m3)
    changed: [localhost -> None] => (item=tme-foo-m4)
    changed: [localhost -> None] => (item=tme-foo-m5)
    changed: [localhost -> None] => (item=tme-foo-m6)

    TASK [prepare : Upload new sudoers] *********************************************************************
    Monday 04 November 2019 04:50:49 +0000 (0:00:03.283) 0:00:13.198 *******
    changed: [localhost -> None] => (item=tme-foo-m1)
    changed: [localhost -> None] => (item=tme-foo-m2)
    changed: [localhost -> None] => (item=tme-foo-m3)
    changed: [localhost -> None] => (item=tme-foo-m4)
    changed: [localhost -> None] => (item=tme-foo-m5)
    changed: [localhost -> None] => (item=tme-foo-m6)

    TASK [prepare : Upload authorized_keys] *********************************************************************
    Monday 04 November 2019 04:50:53 +0000 (0:00:04.124) 0:00:17.323 *******
    changed: [localhost -> None] => (item=tme-foo-m1)
    changed: [localhost -> None] => (item=tme-foo-m2)
    changed: [localhost -> None] => (item=tme-foo-m3)
    changed: [localhost -> None] => (item=tme-foo-m4)
    changed: [localhost -> None] => (item=tme-foo-m5)
    changed: [localhost -> None] => (item=tme-foo-m6)

    PLAY RECAP **********************************************************
    localhost : ok=9 changed=6 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

    Monday 04 November 2019 04:51:01 +0000 (0:00:01.980) 0:00:24.903 *******
    =====================================================================
    prepare : Test VM --------------------------------------------- 4.57s
    prepare : Gather info about VM -------------------------------- 4.19s
    prepare : Upload new sudoers ---------------------------------- 4.12s
    prepare : Upload authorized_keys ------------------------------ 3.59s
    prepare : Create ansible user --------------------------------- 3.28s
    Gathering Facts ----------------------------------------------- 0.89s
    prepare : Register IP in inventory ---------------------------- 0.16s
    Playbook run took 0 days, 0 hours, 0 minutes, 20 seconds

At this stage, things should be in a pristine state. Let’s move on.

Managing Virtual Machines

The bleak inventory file we created should now be usable. Let’s ping our machine farm:

    $ ansible -m ping machines
    tme-foo-m5 | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": false,
        "ping": "pong"
    }
    tme-foo-m4 | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": false,
        "ping": "pong"
    }
    tme-foo-m3 | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": false,
        "ping": "pong"
    }
    tme-foo-m2 | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": false,
        "ping": "pong"
    }
    tme-foo-m1 | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": false,
        "ping": "pong"
    }
    tme-foo-m6 | SUCCESS => {
        "ansible_facts": {
            "discovered_interpreter_python": "/usr/bin/python"
        },
        "changed": false,
        "ping": "pong"
    }

As a good Linux citizen you always want to update to all the latest packages. I provided a crude package_update.yaml file for your convenience. It will also reboot the VMs once completed.
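For readers without the repository at hand, an update-and-reboot playbook along these lines could look roughly like the sketch below. This is not the author's actual package_update.yaml; it assumes the `machines` inventory group used in the ping example, a remote user with sudo rights, and a recent Ansible release that accepts fully qualified module names.

```yaml
---
# Hypothetical sketch of an update-and-reboot playbook (not the original file).
- name: Update all packages and reboot
  hosts: machines          # inventory group from the ping example above
  become: true             # assumes the remote user may use sudo
  tasks:
    - name: Upgrade every installed package
      ansible.builtin.package:
        name: "*"
        state: latest

    - name: Reboot and wait for the host to come back
      ansible.builtin.reboot:
```

It would be run the same way as the other playbooks in this post, for example with `ansible-playbook package_update.yaml`.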

Important: The default password for the root user is still what that template shipped with. If you intend to use this for anything but a sandbox exercise, consider changing that root password.

Snapshot and Restore Virtual Machines

Now to the fun part. I’ve redacted a lot of the content I created for this project for many reasons, but it involved making customizations and installing proprietary software. In the various stages I wanted to create snapshots as some of these steps were not only lengthy, they were one-way streets. Creating a snapshot of the environment was indeed very handy.

To create a VM snapshot for the machines group:

    $ ansible-playbook snapshot.yaml -e snapshot=goldenboy

    PLAY [localhost] ****************************************************

    TASK [Gathering Facts] *********************************************************************
    Monday 04 November 2019 05:09:25 +0000 (0:00:00.096) 0:00:00.096 *******
    ok: [localhost]

    TASK [snapshot : Create a VM snapshot] *********************************************************************
    Monday 04 November 2019 05:09:27 +0000 (0:00:01.893) 0:00:01.989 *******
    changed: [localhost -> localhost] => (item=tme-foo-m1)
    changed: [localhost -> localhost] => (item=tme-foo-m2)
    changed: [localhost -> localhost] => (item=tme-foo-m3)
    changed: [localhost -> localhost] => (item=tme-foo-m4)
    changed: [localhost -> localhost] => (item=tme-foo-m5)
    changed: [localhost -> localhost] => (item=tme-foo-m6)

    PLAY RECAP **********************************************************
    localhost : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

    Monday 04 November 2019 05:09:35 +0000 (0:00:08.452) 0:00:10.442 *******
    =====================================================================
    snapshot : Create a VM snapshot ------------------------------- 8.45s
    Gathering Facts ----------------------------------------------- 1.89s
    Playbook run took 0 days, 0 hours, 0 minutes, 10 seconds

It’s now possible to trash the VM. If you ever want to go back:

    $ ansible-playbook restore.yaml -e snapshot=goldenboy

    PLAY [localhost] ****************************************************

    TASK [Gathering Facts] *********************************************************************
    Monday 04 November 2019 05:11:38 +0000 (0:00:00.104) 0:00:00.104 *******
    ok: [localhost]

    TASK [restore : Revert a VM to a snapshot] *********************************************************************
    Monday 04 November 2019 05:11:38 +0000 (0:00:00.860) 0:00:00.964 *******
    changed: [localhost -> localhost] => (item=tme-foo-m1)
    changed: [localhost -> localhost] => (item=tme-foo-m2)
    changed: [localhost -> localhost] => (item=tme-foo-m3)
    changed: [localhost -> localhost] => (item=tme-foo-m4)
    changed: [localhost -> localhost] => (item=tme-foo-m5)
    changed: [localhost -> localhost] => (item=tme-foo-m6)

    TASK [restore : Power On VM] *********************************************************************
    Monday 04 November 2019 05:11:47 +0000 (0:00:08.466) 0:00:09.431 *******
    changed: [localhost -> localhost] => (item=tme-foo-m1)
    changed: [localhost -> localhost] => (item=tme-foo-m2)
    changed: [localhost -> localhost] => (item=tme-foo-m3)
    changed: [localhost -> localhost] => (item=tme-foo-m4)
    changed: [localhost -> localhost] => (item=tme-foo-m5)
    changed: [localhost -> localhost] => (item=tme-foo-m6)

    PLAY RECAP **********************************************************
    localhost : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

    Monday 04 November 2019 05:12:02 +0000 (0:00:15.232) 0:00:24.663 *******
    =====================================================================
    restore : Power On VM ---------------------------------------- 15.23s
    restore : Revert a VM to a snapshot --------------------------- 8.47s
    Gathering Facts ----------------------------------------------- 0.86s
    Playbook run took 0 days, 0 hours, 0 minutes, 24 seconds

Destroy Virtual Machines

I like things neat and tidy. This is how you would clean up after yourself:

    $ ansible-playbook destroy.yaml

    PLAY [localhost] ****************************************************

    TASK [Gathering Facts] *********************************************************************
    Monday 04 November 2019 05:13:12 +0000 (0:00:00.099) 0:00:00.099 *******
    ok: [localhost]

    TASK [destroy : Destroy a virtual machine] *********************************************************************
    Monday 04 November 2019 05:13:13 +0000 (0:00:00.870) 0:00:00.969 *******
    changed: [localhost -> localhost] => (item=tme-foo-m1)
    changed: [localhost -> localhost] => (item=tme-foo-m2)
    changed: [localhost -> localhost] => (item=tme-foo-m3)
    changed: [localhost -> localhost] => (item=tme-foo-m4)
    changed: [localhost -> localhost] => (item=tme-foo-m5)
    changed: [localhost -> localhost] => (item=tme-foo-m6)

    PLAY RECAP **********************************************************
    localhost : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

    Monday 04 November 2019 05:13:37 +0000 (0:00:24.141) 0:00:25.111 *******
    =====================================================================
    destroy : Destroy a virtual machine -------------------------- 24.14s
    Gathering Facts ----------------------------------------------- 0.87s
    Playbook run took 0 days, 0 hours, 0 minutes, 25 seconds

Summary

I probably dissed VMware more than necessary in this post. It’s a great infrastructure platform that is being deployed by 99% of the IT shops out there (don’t quote me on that). I hope you enjoyed this tutorial on how to make vSphere useful with Ansible.

Trivia: This tutorial was brought to you by one of the first few HPE Nimble Storage dHCI systems ever brought up!


Text

In this guide, we will install Semaphore Ansible Web UI on CentOS 7|CentOS 8. Semaphore is an open source web-based solution that makes Ansible easy to use for IT teams of all kinds. It gives you a Web interface from where you can launch and manage Ansible Tasks.

Install Semaphore Ansible Web UI on CentOS 7|CentOS 8

Semaphore depends on the following tools:

MySQL >= 5.6.4/MariaDB >= 5.3

ansible

git >= 2.x

We will start the installation by ensuring these dependencies are installed on your CentOS 7|CentOS 8 server. So follow steps in the next sections to ensure all is set. Before any installation we recommend you perform an update on the OS layer:

    sudo yum -y update

A reboot is also essential once the upgrade is made:

    sudo reboot -f

Step 1: Install MariaDB Database Server

We have a comprehensive guide on installation of MariaDB on CentOS 7|CentOS 8. Run the commands below to install the latest stable release of MariaDB database server.

    curl -LsS -O https://downloads.mariadb.com/MariaDB/mariadb_repo_setup
    sudo bash mariadb_repo_setup
    sudo yum install MariaDB-server MariaDB-client MariaDB-backup

Start and enable mariadb database service:

    sudo systemctl enable --now mariadb

Secure database server after installation:

    $ sudo mariadb-secure-installation
    Switch to unix_socket authentication [Y/n] n
    Change the root password? [Y/n] y
    Remove anonymous users? [Y/n] y
    Disallow root login remotely? [Y/n] y
    Remove test database and access to it? [Y/n] y
    Reload privilege tables now? [Y/n] y

Step 2: Install git 2.x on CentOS 7|CentOS 8

Install git 2.x on your CentOS 7 server using our guide below.

Install latest version of Git ( Git 2.x ) on CentOS 7

Confirm git version.

    $ git --version
    git version 2.34.1

Step 3: Install Ansible on CentOS 7|CentOS 8

Install Ansible on your CentOS 7 server.

    sudo yum -y install epel-release
    sudo yum -y install ansible

Test if ansible command is available.

    $ ansible --version
    ansible 2.9.27
      config file = /etc/ansible/ansible.cfg
      configured module search path = [u'/root/.ansible/plugins/modules', u'/usr/share/ansible/plugins/modules']
      ansible python module location = /usr/lib/python2.7/site-packages/ansible
      executable location = /usr/bin/ansible
      python version = 2.7.5 (default, Nov 16 2020, 22:23:17) [GCC 4.8.5 20150623 (Red Hat 4.8.5-44)]

Step 4: Download Semaphore

Visit the Semaphore Releases page and copy the download link for your OS. Note the braces in ${VER}: without them the shell would look for a variable named VER_linux_amd64.

    sudo yum -y install wget curl
    VER=$(curl -s https://api.github.com/repos/ansible-semaphore/semaphore/releases/latest|grep tag_name | cut -d '"' -f 4|sed 's/v//g')
    wget https://github.com/ansible-semaphore/semaphore/releases/download/v$VER/semaphore_${VER}_linux_amd64.rpm

Install Semaphore package:

    $ sudo rpm -Uvh semaphore_${VER}_linux_amd64.rpm
    Preparing… ################################# [100%]
    Updating / installing…
    1:semaphore-0:2.8.53-1 ################################# [100%]

Check if you have semaphore binary in your $PATH.

    $ which semaphore
    /usr/bin/semaphore

    $ semaphore version
    v2.8.53

Usage help document:

    $ semaphore --help
    Ansible Semaphore is a beautiful web UI for Ansible.
    Source code is available at https://github.com/ansible-semaphore/semaphore.
    Complete documentation is available at https://ansible-semaphore.com.

    Usage:
      semaphore [flags]
      semaphore [command]

    Available Commands:
      completion  generate the autocompletion script for the specified shell
      help        Help about any command
      migrate     Execute migrations
      server      Run in server mode
      setup       Perform interactive setup
      upgrade     Upgrade to latest stable version
      user        Manage users
      version     Print the version of Semaphore

    Flags:
          --config string   Configuration file path
      -h, --help            help for semaphore

    Use "semaphore [command] --help" for more information about a command.

Step 5: Setup Semaphore

Run the following command to start Semaphore setup in your system.

    $ sudo semaphore setup
    Hello! You will now be guided through a setup to:
    1. Set up configuration for a MySQL/MariaDB database
    2. Set up a path for your playbooks (auto-created)
    3. Run database Migrations
    4. Set up initial semaphore user & password

    What database to use:
    1 - MySQL
    2 - BoltDB
    3 - PostgreSQL
    (default 1): 1
    DB Hostname (default 127.0.0.1:3306):
    DB User (default root): root
    DB Password:
    DB Name (default semaphore): semaphore
    Playbook path (default /tmp/semaphore): /opt/semaphore
    Web root URL (optional, example http://localhost:8010/): http://localhost:8010/
    Enable email alerts (y/n, default n): n
    Enable telegram alerts (y/n, default n): n
    Enable LDAP authentication (y/n, default n): n
    Confirm these values are correct to initiate setup.
    Is this correct? (yes/no): yes
    Config output directory (default /root):
    WARN[0037] An input error occured:unexpected newline
    Running: mkdir -p /root..
    Configuration written to /root/config.json..
    Pinging db..
    Running DB Migrations..
    Checking DB migrations
    Creating migrations table
    ......
    Migrations Finished
    Set username
    Username: admin
    Email: [email protected]
    WARN[0268] sql: no rows in result set level=Warn
    Your name: Admin User
    Password: StrongUserPassword
    You are all setup Admin User!
    Re-launch this program pointing to the configuration file
    ./semaphore -config /root/config.json
    To run as daemon:
    nohup ./semaphore -config /root/config.json &
    You can login with [email protected] or computingpost.

You can set other configuration values on the file /root/config.json.

Step 6: Configure systemd unit for Semaphore

Let’s now configure Semaphore Ansible UI to be managed by systemd. Create systemd service unit file.

    sudo vi /etc/systemd/system/semaphore.service

Then add:

    [Unit]
    Description=Semaphore Ansible UI
    Documentation=https://github.com/ansible-semaphore/semaphore
    Wants=network-online.target
    After=network-online.target

    [Service]
    Type=simple
    ExecReload=/bin/kill -HUP $MAINPID
    ExecStart=/usr/bin/semaphore server --config /etc/semaphore/config.json
    SyslogIdentifier=semaphore
    Restart=always

    [Install]
    WantedBy=multi-user.target

Create Semaphore configurations directory:

    sudo mkdir /etc/semaphore

Link your configuration file into the created directory:

    sudo ln -s /root/config.json /etc/semaphore/config.json

Stop running instances of Semaphore.

    sudo pkill semaphore

Confirm:

    ps aux | grep semaphore

Reload systemd and start semaphore service.

    sudo systemctl daemon-reload
    sudo systemctl restart semaphore

Check status to see if running:

    $ systemctl status semaphore
    ● semaphore.service - Semaphore Ansible UI
       Loaded: loaded (/etc/systemd/system/semaphore.service; disabled; vendor preset: disabled)
       Active: active (running) since Tue 2022-04-19 13:29:42 UTC; 3s ago
         Docs: https://github.com/ansible-semaphore/semaphore
     Main PID: 8636 (semaphore)
       CGroup: /system.slice/semaphore.service
               └─8636 /usr/bin/semaphore server --config /etc/semaphore/config.json

    Apr 19 13:29:42 centos.example.com systemd[1]: Started Semaphore Ansible UI.
    Apr 19 13:29:42 centos.example.com semaphore[8636]: MySQL [email protected]:3306 semaphore
    Apr 19 13:29:42 centos.example.com semaphore[8636]: Tmp Path (projects home) /tmp/semaphore
    Apr 19 13:29:42 centos.example.com semaphore[8636]: Semaphore v2.8.53
    Apr 19 13:29:42 centos.example.com semaphore[8636]: Interface
    Apr 19 13:29:42 centos.example.com semaphore[8636]: Port :3000
    Apr 19 13:29:42 centos.example.com semaphore[8636]: Server is running

Set Service to start at boot.

    $ sudo systemctl enable semaphore
    Created symlink /etc/systemd/system/multi-user.target.wants/semaphore.service → /etc/systemd/system/semaphore.service.

Port 3000 should now be open:

    $ sudo ss -tunelp | grep 3000
    tcp LISTEN 0 128 [::]:3000 [::]:* users:(("semaphore",pid=8636,fd=8)) ino:36321 sk:ffff8ae3b4e59080 v6only:0

Step 7: Setup Nginx Proxy (Optional)

To be able to access Semaphore Web interface with a domain name, use the guide below to setup.

Configure Nginx Proxy for Semaphore Ansible Web UI

Step 8: Access Semaphore Web interface

On your web browser, open semaphore Server IP on port 3000 or server name. Use the username/email created earlier during installation to Sign in. Web console for semaphore should be shown after authentication. You’re ready to manage your servers with Ansible and powerful Web UI. The initial steps required are:

Add SSH keys / API keys used by Ansible – Under Key Store > create key

Create Inventory file with servers to manage – Under Inventory > create inventory

Create users and add to Team(s)

Create Environments

Add Playbook repositories

Create Task Templates and execute

Also check a detailed guide on semaphore Web UI. For Ubuntu / Debian installation, check: Setup Semaphore Ansible Web UI on Ubuntu / Debian


Text

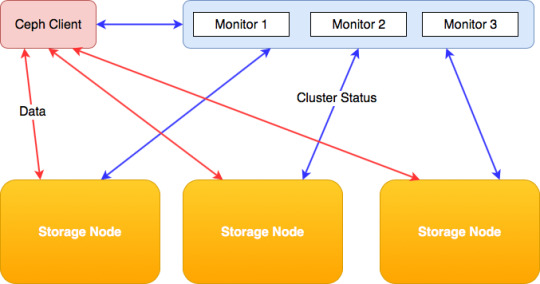

Ceph Client

ceph.client.admin.keyring

ceph.bootstrap-mgr.keyring

ceph.bootstrap-osd.keyring

ceph.bootstrap-mds.keyring

ceph.bootstrap-rgw.keyring

ceph.bootstrap-rbd.keyring

Use ceph-deploy to copy the configuration file and admin key to your admin node and your Ceph Nodes so that you can use the ceph CLI without having to specify the monitor address.

Generate a minimal ceph.conf file, make a local copy, and transfer it to the client:

    juju ssh ceph-mon/0 sudo ceph config generate-minimal-conf | tee ceph.conf
    juju scp ceph.conf ceph-client/0:

Connect to the client:

    juju ssh ceph-client/0

On the client host, install the required software, put the ceph.conf file in place, and set up the correct.

1.10 Installing a Ceph Client. To install a Ceph Client: Perform the following steps on the system that will act as a Ceph Client: If SELinux is enabled, disable it and then reboot the system. Stop and disable the firewall service. For Oracle Linux 6 or Oracle Linux 7 (where iptables is used instead of firewalld ), enter: For Oracle Linux 7, enter.

Ceph kernel client (kernel modules). Contribute to ceph/ceph-client development by creating an account on GitHub. Get rid of the releases annotation by breaking it up into two functions: prepcap which is done under the spinlock and sendcap that is done outside it.

Ceph Client List

Ceph Client Log

Ceph Client Windows

A python client for ceph-rest-api After learning there was an API for Ceph, it was clear to me that I was going to write a client to wrap around it and use it for various purposes. January 1, 2014.

Ceph is a massively scalable, open source, distributed storage system.

These links provide details on how to use Ceph with OpenStack:

Ceph - The De Facto Storage Backend for OpenStack (Hong Kong Summit talk)

Note

Configuring Ceph storage servers is outside the scope of this documentation.

Authentication

We recommend the cephx authentication method in the Ceph config reference. OpenStack-Ansible enables cephx by default for the Ceph client. You can choose to override this setting by using the cephx Ansible variable:
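As a minimal sketch, assuming the override is placed in an OpenStack-Ansible user variable file (the exact file is an assumption here), turning cephx off would be a single boolean:

```yaml
# Assumed location: an OpenStack-Ansible user variable file.
# cephx is enabled by default; false disables it for the Ceph client.
cephx: false
```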

Deploy Ceph on a trusted network if disabling cephx.

Configuration file overrides

OpenStack-Ansible provides the ceph_conf_file variable. This allows you to specify configuration file options to override the default Ceph configuration:
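A sketch of such an override, assuming the variable takes the literal contents of ceph.conf as a multi-line string. The fsid and monitor addresses below are placeholders, not values from any real cluster:

```yaml
# Placeholder values throughout; substitute those of your own cluster.
ceph_conf_file: |
  [global]
  fsid = 00000000-0000-0000-0000-000000000000
  mon_host = 192.0.2.11,192.0.2.12,192.0.2.13
  auth_cluster_required = cephx
  auth_service_required = cephx
  auth_client_required = cephx
```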

The use of the ceph_conf_file variable is optional. By default, OpenStack-Ansible obtains a copy of ceph.conf from one of your Ceph monitors. This transfer of ceph.conf requires the OpenStack-Ansible deployment host public key to be deployed to all of the Ceph monitors. More details are available here: Deploying SSH Keys.

The following minimal example configuration sets nova and glance to use ceph pools: ephemeral-vms and images respectively. The example uses cephx authentication, and requires existing glance and cinder accounts for images and ephemeral-vms pools.

For a complete example of how to provide the necessary configuration for a Ceph backend without SSH access to the Ceph monitors, please see the Ceph keyring from file example.

Extra client configuration files

Deployers can specify extra Ceph configuration files to support multiple Ceph cluster backends via the ceph_extra_confs variable.
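A rough sketch of what such a list might look like, assuming each entry names a source file on the deployment host (`src`) and a destination path on the target (`dest`). The key names and paths are assumptions and should be checked against the ceph_client role defaults:

```yaml
# Hypothetical file names; src is read from the deployment host,
# dest is where the file is written on the target.
ceph_extra_confs:
  - src: "/opt/cluster-1.conf"
    dest: "/etc/ceph/cluster-1.conf"
  - src: "/opt/cluster-2.conf"
    dest: "/etc/ceph/cluster-2.conf"
```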


These config file sources must be present on the deployment host.


Alternatively, deployers can specify more options in ceph_extra_confs to deploy keyrings, ceph.conf files, and configure libvirt secrets.

The primary aim of this feature is to deploy multiple ceph clusters as cinder backends and enable nova/libvirt to mount block volumes from those backends. These settings do not override the normal deployment of ceph client and associated setup tasks.

Deploying multiple ceph clusters as cinder backends requires the following adjustments to each backend in cinder_backends.

The dictionary keys rbd_ceph_conf, rbd_user, and rbd_secret_uuid must be unique for each ceph cluster to be used as a cinder_backend.

Monitors

The Ceph Monitor maintains a master copy of the cluster map. OpenStack-Ansible provides the ceph_mons variable and expects a list of IP addresses for the Ceph Monitor servers in the deployment:
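For illustration, such a list could look like the following. The addresses are placeholders from a documentation range, not real monitors:

```yaml
# Placeholder monitor addresses; replace with your own Ceph Monitor IPs.
ceph_mons:
  - 192.0.2.11
  - 192.0.2.12
  - 192.0.2.13
```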

Configure os_gnocchi with ceph_client


If the os_gnocchi role is going to utilize the ceph_client role, the following configurations need to be added to the user variable file:


Text

Introduction to Ansible and Its Importance in DevOps

Automation has become the backbone of modern IT operations, and tools like Ansible are at the forefront of this revolution. But what exactly is Ansible, and why should you consider using it in your DevOps practices?

What is Ansible?

Ansible is an open-source automation tool that simplifies the process of configuring and managing computers. It allows users to automate repetitive tasks, deploy applications, and manage complex IT environments without the need for complex scripts or programming. With Ansible, tasks that would normally take hours or even days can be completed in minutes, all while maintaining a high level of consistency and reliability.

Why Use Ansible in DevOps?

In the world of DevOps, speed, efficiency, and consistency are key. Ansible meets these needs by offering a simple, agentless architecture that can automate the deployment and management of applications across multiple servers.

Simplifying Automation with Ansible

Ansible makes automation easy with its simple, human-readable language, YAML (a recursive acronym for "YAML Ain't Markup Language"). This means you don't need to be a coding expert to write Ansible playbooks. The tool also eliminates the need for agents on remote systems, reducing overhead and making it easier to manage large environments.

Enhancing Efficiency and Consistency

By automating repetitive tasks, Ansible helps teams save time and reduce the risk of human error. With Ansible, you can ensure that your systems are configured exactly as you want them, every time. This consistency is crucial in maintaining reliable and secure IT operations.

Getting Started with Ansible

Ready to dive in? Here’s how you can get started with Ansible.

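Installing Ansible

Before you can start using Ansible, you need to install it. The process is straightforward, but there are a few requirements and dependencies you'll need to meet.

Requirements and Dependencies

Ansible requires Python on the control node. The minimum version depends on the Ansible release: older releases accepted Python 3.5, while current ones need a considerably newer interpreter, so check the release notes for the version you install. You’ll also need a Unix-like system (Linux, macOS, or Windows with WSL) to install it.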

Step-by-Step Installation Guide

Install Python: Most systems come with Python pre-installed. You can check by running python --version or python3 --version.

Install Ansible: Once Python is installed, you can use pip to install Ansible. Run the command pip install ansible to get started.

Verify Installation: To verify that Ansible is installed correctly, run ansible --version.

Ansible Architecture Overview

Understanding Ansible’s architecture is key to mastering its use.

Core Components: Inventory, Modules, and Playbooks

Inventory: This is a list of hosts (computers) that Ansible manages.

Modules: These are the units of code Ansible uses to perform tasks.

Playbooks: These are files that define the tasks Ansible will execute on your hosts.

Ansible Configuration File and Its Significance

The Ansible configuration file (ansible.cfg) is crucial as it allows you to define settings and behaviors for Ansible, such as default module paths, remote user information, and more.

Core Concepts in Ansible

Ansible’s power lies in its simplicity and flexibility. Here are some core concepts to understand:

Understanding Playbooks and Their Role

Playbooks are the heart of Ansible. They define the tasks you want to perform on your managed hosts.

Structure of a Playbook

A playbook is written in YAML format and typically consists of one or more "plays." Each play defines a set of tasks executed on a specified group of hosts.
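To make that structure concrete, here is a minimal sketch of a playbook with two plays. The group names `webservers` and `dbservers` are assumptions; they would have to exist in your own inventory.

```yaml
---
# Play 1: runs its tasks, in order, on every host in "webservers".
- name: Check the web tier
  hosts: webservers
  gather_facts: false
  tasks:
    - name: Confirm Ansible can reach each host
      ansible.builtin.ping:

# Play 2: a separate play aimed at a different (assumed) group.
- name: Check the database tier
  hosts: dbservers
  gather_facts: false
  tasks:
    - name: Report how long each host has been up
      ansible.builtin.command: uptime
      changed_when: false   # a read-only command never changes the host
```

Each top-level list item is one play, and `tasks` inside it is the ordered list of module calls for that play's hosts.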

Modules and How They Work

Modules are the building blocks of Ansible. They are used to perform actions on your managed hosts.

Commonly Used Ansible Modules

Some commonly used modules include:

apt/yum: For package management

service: For managing services

copy/template: For managing files

Creating Custom Modules

If Ansible doesn’t have a module that meets your needs, you can create your own. Custom modules can be written in any language that can print its result as JSON to standard output, which makes them highly flexible.

Advanced Ansible Techniques

As you become more familiar with Ansible, you’ll want to explore more advanced techniques.

Using Roles for Better Playbook Organization

Roles are a way to organize playbooks and manage complex configurations more easily.

Best Practices for Role Management

Keep your roles simple and focused. Each role should perform one specific function. This makes it easier to maintain and reuse roles across different projects.

Ansible Galaxy: The Hub of Community Content

Ansible Galaxy is a community hub where you can find roles created by other Ansible users.

Finding and Using Roles from Ansible Galaxy

To use a role from Ansible Galaxy, you can simply run ansible-galaxy install <role_name>. This will download the role to your system, and you can use it in your playbooks just like any other role.
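As a sketch of how this fits together, roles can also be listed in a requirements file and installed in one go with `ansible-galaxy install -r requirements.yml`. The role `geerlingguy.nginx` is used here purely as an illustrative choice of a published community role:

```yaml
---
# requirements.yml: roles to fetch from Ansible Galaxy.
roles:
  - name: geerlingguy.nginx
```

Once installed, the role is referenced from a play by name. The `webservers` group is again an assumed inventory group:

```yaml
---
- name: Apply a Galaxy role to the web tier
  hosts: webservers
  become: true
  roles:
    - geerlingguy.nginx
```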

Contributing to Ansible Galaxy

If you create a role that you think others might find useful, you can share it on Ansible Galaxy. This is a great way to contribute to the community and get feedback on your work.

Ansible in Real-World Projects

Now that you understand the basics, let's look at how you can use Ansible in real-world projects.

Automating Server Provisioning

Ansible is perfect for automating server provisioning. You can create playbooks to set up servers with all the necessary software and configurations in minutes.

Setting Up a Web Server with Ansible

To set up a web server, you can write a playbook that installs the web server software, configures it, and starts the service. This entire process can be automated with Ansible, saving you time and reducing the risk of errors.
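The three steps (install, configure, start) might be sketched as follows. This assumes nginx as the web server, a `webservers` inventory group, and a Red Hat style document root; on Debian-based systems the path would differ.

```yaml
---
- name: Set up a web server
  hosts: webservers          # assumed inventory group
  become: true
  tasks:
    - name: Install nginx
      ansible.builtin.package:
        name: nginx
        state: present

    - name: Deploy a placeholder index page
      ansible.builtin.copy:
        content: "Managed by Ansible\n"
        dest: /usr/share/nginx/html/index.html   # assumed document root
        mode: "0644"

    - name: Start nginx and enable it at boot
      ansible.builtin.service:
        name: nginx
        state: started
        enabled: true
```

Because every task describes a desired state, running the playbook a second time should report no changes.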

Automating Database Deployment

Just like with web servers, you can use Ansible to automate the deployment of databases. This includes installing the database software, creating databases, and configuring access permissions.

Continuous Integration and Deployment (CI/CD) with Ansible

Ansible can also be used in CI/CD pipelines to automate the deployment of applications.

Integrating Ansible with Jenkins

By integrating Ansible with Jenkins, you can automate the deployment process whenever there’s a change in your codebase. This ensures that your applications are always up-to-date and running smoothly.

Deploying Applications with Zero Downtime

Ansible can help you achieve zero-downtime deployments by automating the process of updating your servers without taking them offline. This is crucial for maintaining service availability and minimizing disruptions.
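One common way to approach this is a rolling update, where hosts are updated in small batches so that the rest keep serving traffic. The sketch below assumes a hypothetical package and service both named `myapp`, listening on port 8080, running behind a load balancer that stops routing to a host while its service is down.

```yaml
---
- name: Rolling update of the application tier
  hosts: webservers          # assumed inventory group
  become: true
  serial: 1                  # update one host at a time
  max_fail_percentage: 0     # stop the rollout as soon as any host fails
  tasks:
    - name: Upgrade the application package
      ansible.builtin.package:
        name: myapp          # hypothetical package name
        state: latest

    - name: Restart the application service
      ansible.builtin.service:
        name: myapp          # hypothetical service name
        state: restarted

    - name: Wait until the application port answers again
      ansible.builtin.wait_for:
        port: 8080           # assumed application port
        timeout: 60
```

Raising `serial` trades rollout speed against how much capacity is out of service at any moment.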

Troubleshooting and Debugging in Ansible

Even with automation, things can go wrong. Here’s how to troubleshoot and debug Ansible.

Common Errors and How to Fix Them

Some common errors in Ansible include syntax errors in playbooks, missing or incorrect modules, and connectivity issues with managed hosts. Ansible provides clear error messages to help you identify and fix these issues.

Debugging Tips and Tools

Ansible provides several tools for debugging, such as the --check option to simulate a playbook run, the -v option for verbose output, and the debug module for printing variable values during execution.
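A small sketch of the debug module in use, assuming nothing beyond a reachable set of hosts. The variable name `kernel_release` is made up for the example.

```yaml
---
- name: Inspect values during a run
  hosts: all
  tasks:
    - name: Capture the kernel release
      ansible.builtin.command: uname -r
      register: kernel_release   # illustrative variable name
      changed_when: false

    - name: Show the captured value
      ansible.builtin.debug:
        var: kernel_release.stdout

    - name: Show a gathered fact, only when run with -v or higher
      ansible.builtin.debug:
        msg: "Distribution: {{ ansible_facts['distribution'] }}"
        verbosity: 1
```

Running the playbook with `-v` reveals the last message, and adding `--check` previews a run without applying changes.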

Best Practices for Using Ansible in Production

When using Ansible in production, it's important to follow best practices to ensure security, performance, and reliability.

Security Considerations

Always use secure methods for storing sensitive information, such as Ansible Vault or environment variables. Avoid hardcoding passwords or other sensitive data in your playbooks.
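As a sketch of the Vault approach, a secret can live in a separate file encrypted with `ansible-vault create secrets.yml` and be loaded at run time. The file name, the variable `vault_db_password`, the `dbservers` group, and the destination path are all assumptions for illustration.

```yaml
---
- name: Use a secret without hardcoding it
  hosts: dbservers            # assumed inventory group
  become: true
  vars_files:
    - secrets.yml             # vault-encrypted; defines vault_db_password
  tasks:
    - name: Write the application credentials file
      ansible.builtin.copy:
        content: "DB_PASSWORD={{ vault_db_password }}\n"
        dest: /etc/myapp/db.env   # hypothetical path; directory must exist
        mode: "0600"
      no_log: true            # keep the secret out of task output and logs
```

The playbook would then be run with `--ask-vault-pass` so that Ansible can decrypt the file.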

Performance Optimization Techniques

To optimize performance, use strategies like parallelism to execute tasks on multiple hosts simultaneously (the forks setting controls how many hosts are handled at once). Also, minimize the number of tasks in each playbook to reduce execution time.

Conclusion

Ansible is a powerful tool for automating tasks and managing IT environments. Mastering Ansible can streamline your DevOps processes, improve efficiency, and ensure consistency across your infrastructure. Whether you’re just getting started or looking to deepen your knowledge, Ansible offers endless opportunities for growth and innovation.


Text

Ansible: Unleash the power of control over SSH

Heard about Ansible but not sure what exactly it is? Don’t worry! You will know about it in the next few minutes…

Ansible is an open-source DevOps tool for configuration management, application deployment, provisioning, and similar tasks.

The following topics about Ansible will be covered in this blog:

Need for Ansible

Advantages of Ansible

Architecture of Ansible

Working of Ansible

Need for Ansible:

Before getting to know Ansible, it helps to understand the problems administrators faced before it existed.

In the early days of networked computing, deploying and managing servers efficiently was a challenge. System administrators handled servers by hand: they installed software, changed configurations, and administered services on each server individually.

As data centers grew and hosted applications became more complex, administrators found that manual system management could not scale as fast as the applications it supported. Because development teams released software frequently, IT teams spent more and more time configuring systems, which slowed the developers down. That is where server provisioning and configuration management tools started to emerge.

Administering a server fleet means constantly applying updates, pushing changes, and copying files to the machines. These tasks make things complicated and time-consuming. The solution to this problem is Ansible.

Advantages of Ansible:

· Agentless: You don’t need to install agent software or open additional firewall ports on the client systems, and you don’t need to set up a separate management infrastructure.

· Easy to set up and upgrade: You do not need special coding skills to use Ansible’s playbooks. Upgrading is easy as well, because there are no agents to upgrade on the clients: you simply upgrade Ansible on your control system.

· Powerful: Ansible allows you to model even highly complex IT workflows.

· Flexible: You can orchestrate the entire application environment without worrying about where it is deployed. Ansible has few dependencies, which makes it stable and quick to roll out.

Now let us look at the architecture and working of Ansible.

Architecture of Ansible:

Public/Private Cloud:

This is the Linux server. It also acts as the repository for all IT installations and configurations.

Host:

Hosts are the machines that the Ansible server connects to over SSH, using SSH keys, in order to push playbooks to them.

Ansible automation engine:

This engine allows users to directly run a playbook that gets deployed on the hosts. The Ansible automation engine has multiple components: host inventory, modules, playbooks, and plugins.

i. Host Inventory: The host inventory enlists the IP addresses of all the hosts.

ii. Modules: Ansible is a package of hundreds of inbuilt modules and modules are the pieces of code that get executed when you are running a playbook. A playbook consists of plays, a play consists of different task and task includes modules.

While running a playbook, the modules get executed on your hosts and the modules contain action in them. The action takes place in host machines when you run a playbook. Here you can customize the modules even. All you have to do is to write a few lines of coding and make it a module so that you can run it at any time you want.

iii. Playbooks: In Ansible, Playbooks actually define your workflow because the task gets executed in the same order that you have written them. For example, if you are writing first to install a package and then start, it’ll follow the same. Playbooks use the YAML code. YAML coding is a very simple data serialization language; which is pretty much like English.

iv. Plugins: Plugins extend Ansible’s core behaviour. Unlike modules, they run on the main control machine rather than on the nodes. Callback plugins, for example, are used for logging. Cache plugins keep a cache of facts to avoid costly fact-gathering operations. Ansible also has action plugins, which act as front ends to modules: they execute tasks on the control machine before the modules themselves are called.

v. Connection Plugin: Sometimes you can use a connection plugin other than SSH to connect to host machines. For example, Ansible provides a Docker container connection plugin, so you can connect to Docker containers and start configuring them directly.
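To make the idea of a custom module more concrete, here is a minimal sketch in Python of the kind of logic a module typically wraps. It is an illustration only: the function name `ensure_line` and the file name are hypothetical, and the sketch deliberately does not use Ansible’s own helper library. What it does mirror is the usual contract: check the current state, change it only if needed, and report a result that says whether anything changed.

```python
import json
import os
import tempfile


def ensure_line(path, line):
    """Make sure `line` is present in the file at `path`.

    Returns a result dictionary with a `changed` flag, which is True
    only when the file actually had to be modified.
    """
    content = ""
    if os.path.exists(path):
        with open(path, encoding="utf-8") as handle:
            content = handle.read()

    if line in content.splitlines():
        # The desired state already holds, so nothing is written.
        return {"changed": False, "msg": "line already present"}

    with open(path, "a", encoding="utf-8") as handle:
        # Avoid gluing the new line onto an unterminated last line.
        if content and not content.endswith("\n"):
            handle.write("\n")
        handle.write(line + "\n")
    return {"changed": True, "msg": "line added"}


# Hypothetical usage: run the same "task" twice against a scratch file.
target = os.path.join(tempfile.mkdtemp(), "demo.conf")
print(json.dumps(ensure_line(target, "max_connections=100")))
print(json.dumps(ensure_line(target, "max_connections=100")))
```

Running the same call twice reports a change the first time and no change the second time, which is the behaviour that makes a playbook safe to re-run.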

Working of Ansible:

Ansible works by first connecting to your nodes and pushing out small programs, called Ansible modules, to them. Ansible executes these modules over SSH by default and removes them when finished.

The entire execution of the playbook is controlled by the Ansible management node, also called the control node. This is the node from which you run the installation, and the inventory file provides the list of hosts on which the modules need to run. The management node makes the SSH connection, executes the modules on the host machines, and installs the product. The modules are removed once they have finished running. And that’s how Ansible works.
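The flow described above can be sketched as a small simulation. This is not how Ansible is implemented; it is a simplified Python model under stated assumptions. The host names, the two stand-in "modules" (`install_package`, `start_service`) and the function `run_play` are all hypothetical, and no SSH connection is made. The sketch shows two things from the text: the inventory supplies the hosts, and tasks run in the order they were written. It also mirrors Ansible’s default behaviour of finishing a task on every host before starting the next task.

```python
from typing import Callable, Dict, List, Tuple

# In this sketch a "module" is just a function taking a host and arguments.
Module = Callable[[str, Dict[str, str]], Dict[str, object]]


def install_package(host: str, args: Dict[str, str]) -> Dict[str, object]:
    """Stand-in for a package module; only reports what it would do."""
    return {"changed": True, "msg": f"installed {args['name']} on {host}"}


def start_service(host: str, args: Dict[str, str]) -> Dict[str, object]:
    """Stand-in for a service module; only reports what it would do."""
    return {"changed": True, "msg": f"started {args['name']} on {host}"}


def run_play(inventory: List[str],
             tasks: List[Tuple[Module, Dict[str, str]]]) -> List[str]:
    """Run every task, in written order, against every host in the inventory."""
    log: List[str] = []
    for module, args in tasks:      # tasks keep the order of the playbook
        for host in inventory:      # each task visits all hosts first
            result = module(host, args)
            log.append(str(result["msg"]))
    return log


# Hypothetical inventory and play: install first, then start.
hosts = ["web1", "web2"]
play = [(install_package, {"name": "nginx"}),
        (start_service, {"name": "nginx"})]
for line in run_play(hosts, play):
    print(line)
```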

Use of Ansible:

· Orchestration:

Ansible provides orchestration for aligning business requests with the data, applications, and infrastructure. Policies and service levels are defined through automated workflows. The result is an application-aligned infrastructure that can be scaled up or down based on the needs of each application.

· Configuration Management:

Configuration management establishes and maintains consistent product performance by recording and updating detailed information that describes an enterprise’s hardware and software.

· Application Deployment:

After defining and managing the application with Ansible, teams can effectively manage the entire life cycle of the application.

· Security and Compliance:

When you define your security policy in Ansible, scanning and remediation of the site-wide security policy can be integrated into other automated processes. Security then becomes integral to everything that is deployed.

Conclusion:

Congratulations! You have now learned about Ansible. Ansible is a game-changer for managing infrastructure. Now it’s time to implement it in your project.

To learn more about Ansible, increase your earnings, and become more valuable to your company, visit our website and check out the course today.


300+ TOP CHEF Interview Questions and Answers

CHEF Interview Questions for freshers and experienced :-

1. What is Chef?
Chef is a well-structured, powerful management tool that is used to turn infrastructure into code. With Chef, you can easily develop and use scripts to automate IT processes.

2. What are the primary components of Chef?
The architecture of Chef can be broken down into the following components:
· Chef node
· Chef server
· Chef workstation

3. What is the Chef node?
The Chef node is a host that is managed through the chef-client and is responsible for sharing data across the network.

4. What is the Chef server?
The Chef server is the center of the Chef tool. It is responsible for storing the necessary data and configuring nodes.

5. Define the Chef workstation.
You can consider the Chef workstation as the host on which data and cookbooks are modified.

6. Describe the Chef resource and its functions.
The Chef resource is a crucial aspect of the infrastructure and is used to install and run a service. It can be used to:
· Describe a configured item
· Choose resources such as services, packages, and templates
· List the properties of the resources
· Group the resources into recipes

7. What is the importance of Chef nodes?
DevOps professionals often consider Chef nodes a virtual constituent that is an integral part of the infrastructure. Chef nodes help to execute any resource.

8. What is a recipe in Chef?
A recipe in Chef is often described as a group of resources. The recipe contains all the information required to configure an aspect of the system.

9. Write down the functions of the recipe.
A recipe is used to:
· Install and manage software components
· Deploy applications in Chef
· Execute other recipes in the system
· Manage system files

10. Describe the difference between a recipe and a cookbook in the Chef workstation.
When developers group resources together, they get a recipe, which is useful when configuring and implementing policies. When they group recipes together, they get a cookbook. The concept is similar to a food cookbook and its recipes.

11. Define the step-by-step process to update the Chef cookbook.
There are several ways to apply an updated cookbook:
· Step 1: Run knife ssh from the workstation
· Step 2: Alternatively, SSH directly into the server and run chef-client there
· Step 3: You can also run chef-client as a daemon, or service, so that it checks in regularly

12. What will happen if the action is not defined in a Chef resource?
If the developer did not define a particular action for the Chef resource, Chef automatically chooses the default action for that resource.

13. Describe the run-list in Chef.
The run-list is an ordered list of roles and recipes that defines which recipes need to run and in which order. A run-list is significant when you are dealing with multiple cookbooks in Chef.

14. What are the benefits of using a run-list?
There are several benefits of using a run-list:
· It ensures recipes run in the same order as specified
· It specifies the node on which the recipes will run
· It is uploaded from the workstation to the Chef server

15. What do you need to perform a bootstrap in Chef?
To bootstrap a node in Chef, you need:
· The public IP address or hostname of the node
· The account ID and password used to log in to the node
· Alternatively, key-based authentication instead of the ID and password

16. Do you know what DK is in Chef?
As a beginner, you must know that DK is the development kit, installed on the workstation, that is used to interact with the system. The tools pre-installed in the DK make this interaction much easier and more effective.

17. What is the Chef repository and why do we use it?
As a developer, you can consider the Chef repository a collection of cookbooks, data bags, environments, roles, and more. You can also sync the Chef repository with a version control system to keep track of changes.

18. Showcase your knowledge about Test Kitchen in Chef.
Test Kitchen is the tool used to try out cookbooks on servers. It also helps in creating various virtual machines locally or in the cloud.

19. What are the primary advantages of Test Kitchen?
The key advantages of using Test Kitchen are:
· It allows you to use various virtualization providers that create virtual machines
· It speeds up the overall development cycle
· It helps you to run cookbooks on servers

20. Have you learned about the signature header? If yes, explain it.
The signature header is crucial for validating the interaction between the Chef server and a node.

21. What is the role of SSL in Chef?
With a pile of servers and Chef clients, it can be challenging to confirm that the right data has been accessed. Therefore, it is crucial to establish an SSL connection to make sure you are accessing the right data in Chef.

22. Describe the starter kit in Chef.
The primary reason to use the starter kit is to obtain the configuration files for the Chef tool. It provides clear information for the configuration process.

23. What is ChefDK?
ChefDK is the software development kit that is used to develop and test cookbooks.

24. Does Chef use Ruby?
Yes. The chef-client is written in Ruby, and cookbooks are written in a Ruby-based domain-specific language.

25. What is the difference between Chef and Ansible?
Although both Ansible and Chef are popular configuration management tools, they are more different than similar. Each has its own strengths, structure, benefits, and drawbacks. Configuration files are known as cookbooks in Chef and as playbooks in Ansible. Chef operates on a client-server model, while Ansible is agentless.

26. What is the source of truth? Define Chef’s source of truth.
A source of truth is the practice of structuring information and data so that every piece of data is edited in a single place. What actually differentiates Chef from Ansible is how it handles the source of truth. Unlike Ansible, Chef uses the Chef server as the source of truth, so the process includes deploying updated cookbooks to one or two servers.

27. What is an orchestration tool? Is Chef an orchestration tool?
An orchestration tool is often defined as a way to represent complex systems and models in the easiest manner. So, no, Chef is not an orchestration tool.

28. Describe one main difference between Chef and Puppet.
The key difference between Chef and Puppet is that Puppet is a set of tools, while Chef is a Ruby DSL together with a set of configured tools.

29. Define the chef-repo.
The chef-repo is a directory on your Chef workstation that is used to store:
· Data bags
· Environments
· Roles

30. Define the role of the Chef validator.
The Chef validator is used to ensure that every request made from the client to the Chef server is authentic.

31. What do you understand by the knife ssl check command in Chef?
knife ssl check is the subcommand that is used to validate the state of the SSL certificates and to quickly troubleshoot related issues.

32. What are data bags in Chef?
Data bags are variables that store JSON data and are easily accessible through the Chef server.

33. Where do you store the cookbooks in Chef?
The cookbooks are stored in the bookshelf directory of Chef, which can be modified as per your needs.

34. What do attributes represent in Chef?
Attributes in Chef are used first and foremost to represent information about nodes.

35. What do you understand by “Chef template”?
Chef templates are embedded Ruby templates that are used to create static files. They can contain Ruby statements and expressions.

36. What Happens During The Bootstrap Process?
During the bootstrap process, the node downloads and installs chef-client, registers itself with the Chef server, and does an initial check-in. During this check-in, the node applies any cookbooks that are part of its run-list.

37. How Do You Apply An Updated Cookbook To Your Node?
We mentioned two ways:
· Run knife ssh from your workstation.
· SSH directly into your server and run chef-client.
You can also run chef-client as a daemon, or service, to check in with the Chef server at a regular interval, say every 15 or 30 minutes.
As an exercise, update your Apache cookbook to display your node’s host name, platform, total installed memory, and number of CPUs in addition to its FQDN on the home page. Update index.html.erb so that the page shows “hello from” followed by the FQDN, and then the host name, platform, RAM, and CPUs. Then upload your cookbook and run it on your node.

38. What Would You Set Your Cookbook’s Version To Once It’s Ready To Use In Production?
According to Semantic Versioning, you should set your cookbook’s version number to 1.0.0 at the point it’s ready to use in production.

39. Create A Second Node And Apply The Awesome Customers Cookbook To It. How Long Does It Take?
You already accomplished the majority of the tasks that you need. You wrote the awesome customers cookbook, uploaded it and its dependent cookbooks to the Chef server, applied the awesome customers cookbook to your node, and verified that everything’s working. All you need to do now is:
· Bring up a second Red Hat Enterprise Linux or CentOS node.
· Copy your secret key file to your second node.
· Bootstrap your node the same way as before. Because you include the awesome customers cookbook in your run-list, your node will apply that cookbook during the bootstrap process.
The result is a second node that’s configured identically to the first one. The process should take far less time because you already did most of the work. Now when you fix an issue or add a new feature, you’ll be able to deploy and verify your update much more quickly!

40. What’s The Value Of Local Development Using Test Kitchen?
Local development with Test Kitchen:
· Enables you to use a variety of virtualization providers that create virtual machine or container instances locally on your workstation or in the cloud.
· Enables you to run your cookbooks on servers that resemble those that you use in production.
· Speeds up the development cycle by automatically provisioning and tearing down temporary instances, resolving cookbook dependencies, and applying your cookbooks to your instances.

41. Which Of The Following Lets You Verify That Your Node Has Successfully Bootstrapped?
· The Chef management console
· knife node list
· knife node show
You can use all three of these methods.

42. What Is The Command You Use To Upload A Cookbook To The Chef Server?
knife cookbook upload.
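The run-list from questions 13 and 14 can be sketched as a small ordering exercise. The following Python is a simplified model, not Chef’s own code: the function `expand_run_list`, the role name `web`, and the recipe names are hypothetical. It assumes the usual `recipe[name]` and `role[name]` item syntax, expands roles in place, and keeps only the first occurrence of a recipe so that nothing runs twice. Roles that reference each other in a loop are not handled here.

```python
import re
from typing import Dict, List

# Matches run-list items such as "recipe[apache]" or "role[web]".
ITEM = re.compile(r"^(recipe|role)\[(.+)\]$")


def expand_run_list(run_list: List[str],
                    roles: Dict[str, List[str]]) -> List[str]:
    """Flatten a run-list into an ordered list of recipe names."""
    ordered: List[str] = []

    def visit(items: List[str]) -> None:
        for item in items:
            match = ITEM.match(item)
            if match is None:
                raise ValueError(f"not a run-list item: {item!r}")
            kind, name = match.groups()
            if kind == "role":
                # A role contributes its own run-list at this position.
                visit(roles[name])
            elif name not in ordered:
                # Keep only the first occurrence of each recipe.
                ordered.append(name)

    visit(run_list)
    return ordered


# Hypothetical role and run-list.
roles = {"web": ["recipe[apache]", "recipe[customers]"]}
print(expand_run_list(["recipe[base]", "role[web]", "recipe[apache]"], roles))
```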


Best Devops training institute in noida
