#data enginner


5 Tips for Aspiring and Junior Data Engineers

Data engineering is a multidisciplinary field that requires a combination of technical and business skills. When starting a career in data engineering, it can be difficult to know what it takes to succeed. Some people believe that it is important to learn specific technologies, such as Big Data frameworks, while others believe that a high level of software engineering expertise is essential. Still others believe that it is important to focus on the business side of things.

The truth is that all of these skills are important for data engineers. They need to be able to understand and implement complex technical solutions, but they also need to be able to understand the business needs of their clients and how to use data to solve those problems.

In this article, we will provide you with five essential tips to help you succeed as an aspiring or junior data engineer. Whether you’re just starting or already on this exciting career path, these tips will guide you toward excellence in data engineering.

1 Build a Strong Foundation in Data Fundamentals

One of the most critical aspects of becoming a proficient data engineer is establishing a solid foundation in data fundamentals. This includes understanding databases, data modeling, data warehousing, and data processing concepts. Many junior data engineers make the mistake of rushing into complex technologies without mastering these fundamental principles, which can lead to challenges down the road.

Start by learning about relational databases and SQL. Understand how data is structured and organized. Explore different data warehousing solutions and data storage technologies. A strong grasp of these fundamentals will serve as the bedrock of your data engineering career.
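To make these basics concrete, here is a minimal sketch using Python's built-in `sqlite3` module. The `customers` and `orders` tables and their columns are hypothetical examples; the point is the shape of a relational schema (primary and foreign keys) and a join with aggregation, which carries over to other SQL databases.

```python
import sqlite3

# In-memory database: nothing is written to disk.
conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE customers (
        customer_id INTEGER PRIMARY KEY,
        name        TEXT NOT NULL
    );
    CREATE TABLE orders (
        order_id    INTEGER PRIMARY KEY,
        customer_id INTEGER NOT NULL REFERENCES customers(customer_id),
        amount      REAL NOT NULL
    );
    """
)
conn.executemany("INSERT INTO customers VALUES (?, ?)", [(1, "Ada"), (2, "Grace")])
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(10, 1, 25.0), (11, 1, 15.0), (12, 2, 40.0)],
)

# Join the two tables and aggregate order totals per customer.
totals = conn.execute(
    """
    SELECT c.name, SUM(o.amount) AS total
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.customer_id
    GROUP BY c.name
    ORDER BY c.name
    """
).fetchall()
print(totals)  # [('Ada', 40.0), ('Grace', 40.0)]
```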

2 Master Data Integration and ETL

Efficient data integration and ETL (Extract, Transform, Load) processes are at the heart of data engineering. As a data engineer, you will often be responsible for extracting data from various sources, transforming it into a usable format, and loading it into a data warehouse or data lake. Failing to master ETL processes can lead to inefficiencies and errors in your data pipelines.

Dive into ETL tools and frameworks like Apache NiFi, Talend, or Apache Beam. Learn how to design robust data pipelines that can handle large volumes of data efficiently. Practice transforming and cleaning data to ensure its quality and reliability.
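The extract-transform-load flow itself can be sketched without any of the tools named above. This minimal Python example assumes a small CSV export as the source (the field names are hypothetical), cleans it, and loads it into SQLite as a stand-in for a warehouse. Real pipelines built with NiFi, Talend, or Beam add scheduling, scaling, and error handling on top of the same three steps.

```python
import csv
import io
import sqlite3

# Hypothetical raw export: inconsistent casing, stray whitespace, a bad row.
RAW_CSV = """id,email,signup_date
1, Alice@Example.com ,2023-01-05
2,bob@example.com,2023-02-11
3,,2023-03-01
"""

def extract(text):
    """Extract: parse CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: trim and lowercase emails, drop rows without an email."""
    cleaned = []
    for row in rows:
        email = row["email"].strip().lower()
        if not email:
            continue  # a simple quality rule: email is required
        cleaned.append((int(row["id"]), email, row["signup_date"].strip()))
    return cleaned

def load(rows, conn):
    """Load: write the cleaned rows into a target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users (id INTEGER PRIMARY KEY, email TEXT, signup_date TEXT)"
    )
    conn.executemany("INSERT INTO users VALUES (?, ?, ?)", rows)
    conn.commit()

conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT * FROM users ORDER BY id").fetchall())
```

Keeping each step a separate function makes it easy to test the transform logic on its own, which is where most data-quality bugs tend to live.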

3 Learn Programming and Scripting

Programming and scripting are essential skills for data engineers. Many data engineering tasks require automation and custom code to handle complex data transformations and integration tasks. While you don’t need to be a software developer, having a strong command of programming languages like Python or Scala is highly beneficial.

Take the time to learn a programming language that aligns with your organization’s tech stack. Practice writing scripts to automate repetitive tasks, and explore libraries and frameworks that are commonly used in data engineering, such as Apache Spark for big data processing.
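As an example of a repetitive task worth scripting, this sketch merges every CSV file in a folder into one output file and records which file each row came from. The folder layout, the `source_file` column, and the assumption that all inputs share the same header row are illustrative choices, not requirements of any tool.

```python
import csv
from pathlib import Path

def merge_csv_folder(folder, output):
    """Concatenate all *.csv files in `folder` into `output`.

    Assumes every input file has the same header row. Adds a
    `source_file` column so each row can be traced back to its file.
    Returns the number of data rows written.
    """
    folder, output = Path(folder), Path(output)
    # Collect inputs before opening the output, and skip the output itself.
    inputs = sorted(p for p in folder.glob("*.csv") if p.resolve() != output.resolve())
    written = 0
    with output.open("w", newline="") as out:
        writer = None
        for path in inputs:
            with path.open(newline="") as f:
                reader = csv.DictReader(f)
                if writer is None:
                    writer = csv.DictWriter(out, fieldnames=[*reader.fieldnames, "source_file"])
                    writer.writeheader()
                for row in reader:
                    row["source_file"] = path.name
                    writer.writerow(row)
                    written += 1
    return written
```

Called as, say, `merge_csv_folder("exports", "exports/merged.csv")` (placeholder paths), it turns a manual copy-and-paste job into a repeatable one-liner.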

4 Learn Distributed Computing and Big Data Technologies

The data landscape is continually evolving, with organizations handling increasingly large and complex datasets. To stay competitive as a data engineer, you should familiarize yourself with distributed computing and big data technologies. Ignoring these advancements can limit your career growth.

Study distributed computing concepts and technologies like Hadoop and Spark. Explore cloud-based data solutions such as Amazon Web Services (AWS) and Azure, which offer scalable infrastructure for data processing. Understanding these tools will make you more versatile as a data engineer.
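The core idea that Hadoop MapReduce popularized, and that Spark generalizes, is to split data into partitions, process each partition independently, then group and combine the intermediate results. The sketch below simulates those phases for a word count in plain, single-process Python; it is a teaching model only. A real cluster would run the map tasks in parallel on different machines and shuffle the intermediate pairs over the network.

```python
from collections import defaultdict

def map_phase(partition):
    """Map: emit a (word, 1) pair for every word in one partition."""
    return [(word.lower(), 1) for line in partition for word in line.split()]

def shuffle_phase(mapped_outputs):
    """Shuffle: group the emitted values by key across all partitions."""
    groups = defaultdict(list)
    for pairs in mapped_outputs:
        for key, value in pairs:
            groups[key].append(value)
    return groups

def reduce_phase(groups):
    """Reduce: combine the values for each key, here by summing the counts."""
    return {key: sum(values) for key, values in groups.items()}

# Two partitions, as if the input had been split across two worker nodes.
partitions = [
    ["big data is big", "data engineering"],
    ["spark and hadoop process big data"],
]
counts = reduce_phase(shuffle_phase(map_phase(p) for p in partitions))
print(counts["big"], counts["data"])  # 3 3
```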

5 Cultivate Soft Skills and Collaboration

In addition to technical expertise, soft skills and collaboration are vital for success in data engineering. You’ll often work in multidisciplinary teams, collaborating with data scientists, analysts, and business stakeholders. Effective communication, problem-solving, and teamwork are essential for translating technical solutions into actionable insights.

Practice communication and collaboration by working on cross-functional projects. Attend team meetings, ask questions, and actively participate in discussions. Developing strong soft skills will make you a valuable asset to your organization.

Bonus Tip: Enroll in Datavalley’s Data Engineering Course

If you’re serious about pursuing a career in data engineering or want to enhance your skills as a junior data engineer, consider enrolling in Datavalley’s Data Engineering Course. This comprehensive program is designed to provide you with the knowledge and practical experience needed to excel in the field of data engineering. With experienced instructors, hands-on projects, and a supportive learning community, Datavalley’s course is an excellent way to fast-track your career in data engineering.

Course format:

Subject: Data Engineering
Classes: 200 hours of live classes
Lectures: 199 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 70% scholarship on all our courses
Interactive activities: Labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

For more details on the Big Data Engineer Masters Program, visit Datavalley’s official website.

Why choose Datavalley’s Data Engineering Course?

Datavalley offers a beginner-friendly Data Engineering course with a comprehensive curriculum for all levels.

Here are some reasons to consider our course:

Comprehensive Curriculum: Our course teaches you all the essential topics and tools for data engineering. The topics include big data foundations, Python, data processing, AWS, Snowflake advanced data engineering, data lakes, and DevOps.

Hands-on Experience: We believe in experiential learning, which means you will learn by doing. You will work on hands-on exercises and projects to apply what you have learned.

Project-Ready, Not Just Job-Ready: Upon completion of our program, you will be equipped to begin working right away and carry out projects with self-assurance.

Flexibility: Self-paced learning suits both full-time students and working professionals because it lets them study at their own pace and convenience.

Cutting-Edge Curriculum: Our curriculum is regularly updated to reflect the latest trends and technologies in data engineering.

Career Support: We offer career guidance and support, including job placement assistance, to help you launch your data engineering career.

On-call Project Assistance After Landing Your Dream Job: Our experts can help you with your projects for 3 months. You’ll succeed in your new role and tackle challenges with confidence.

#datavalley#dataexperts#data engineering#data analytics#dataexcellence#data science#data analytics course#data science course#business intelligence#power bi#data engineering roles#data enginner#online data engineering course#datavisualization#data visualization#dataanalytics


Essential Python Tools Every Developer Needs

Today, we'll look at some of the most popular Python tools developers, coders & data scientists use worldwide. If you know how to use these Python tools correctly, they can be useful for various tasks.

Pandas: Data analysis
SciPy: Algorithms to use with NumPy
PyMySQL: MySQL connector
HDFS: C/C++ wrapper for the Hadoop connector
Airflow: Data engineering tool
PyBrain: Algorithms for ML
Matplotlib: Data visualization tool
IPython: Powerful shell
Redis: Redis access libraries
Dask: Data engineering tool
Jupyter: Research collaboration tool
SQLAlchemy: Python SQL toolkit
Seaborn: Data visualization tool
Elasticsearch: Data search engine
NumPy: Multidimensional arrays
PyMongo: MongoDB driver
Bokeh: Data visualization tool
Luigi: Data engineering tool
Pattern: Natural language processing
Keras: High-level neural network API
SymPy: Symbolic math

Visit our website for more information: https://www.marsdevs.com/python


Learn Machine Learning With Data Science | Certification Course

Boost your resume and career opportunities by joining our online certification program in Machine Learning and Data Science, guided by expert trainers. Come and learn together with us. Visit us: https://learnandbuild.in/course/ml-with-data-science

#machine learning#data science#app development#software enginner#data analytics#learn and build#technology#tech updates#tech company


LPU launches B.Tech Programs in Data Science & BlockChain in collaboration with the Industry

Lovely Professional University (LPU) is known for its unique approach to education, with its emphasis on hands-on learning and industry immersion. In keeping with this belief, the university has introduced three ground-breaking engineering programs. The highlight of these programs is that they have been developed by industry experts and will be taught in collaboration with them. The programs are in newly emerging areas: Data Science & Data Engineering, BlockChain, and Full Stack Development.

Read more at the link below:

https://happenings.lpu.in/lpu-launches-b-tech-programs-in-data-science-blockchain/

The relevance of these programs can be understood from the fact that as per LinkedIn’s 2020 Emerging Jobs Report, BlockChain specialist is the number 1 emerging job in India, while Data Science and Full Stack Developers are ranked 3rd and 4th at the global level.

Admission Process

Admission to LPU’s B.Tech (Hons.) programs is quite competitive. Given the restrictions on movement, and to keep candidates safe, LPU offers the option of taking the Online Remotely Proctored LPUNEST exam from home. Online registration for LPUNEST has already started, and students can earn scholarships on the basis of their LPUNEST results. The exam will be conducted online, and students can choose their exam date and time through the online slot booking mechanism. Since class 12 results have not been announced yet, students can still obtain scholarships at LPU on the basis of their class 10 marks.


#28 Practical Python Inheritance | Python Tutorial for Beginners Tek Sol...


Word find tag (wood/s, dark, ground, tree/s)

The lovely @thegreatobsesso tagged me to search my WIP for wood(s), dark, ground and tree(s). Thanks, friend!

These are from Bridge From Ashes and I realised after I chose them that they're all pretty effective introductions to some of the women in the book. Notes in italics!

WOOD

She follows my eye to the glass case. “Do you like those knives?” I’m not sure how to answer that. “I have ones nearly the same. I’ve never seen any so similar.” “They’re very unusual. I’ve only ever seen sets like that come from one source.” She gives me a sad, knowing look and directs us to an orange velvet couch with years of scratches and scores in the varnish of its rolled wooden arms.

📝 Indira Enginn, underworld crime granny, dishes out turmeric lattes and hard truths wrapped in love, provides you with new clothes to replace the ones you got covered in blood when you killed that guy.

DARK

She slides down from the stool and I catch her arm before she walks away. “What’s up?” she says. “Nothing. Just take it easy, OK?” “For you, lovely?” She smiles. “Anything.” And I think that’s what I needed to hear. All the darkness, all the shit, all the fucked up mess no-one can fix, inside my head and outside of it, but she looks happy. Even though she isn’t, not really. And maybe so do I, even though I’m not either.

📝 Freyja El Khatib, sweetness and light with a crunchy knife-throwing centre, make a mess of her bar and you won't walk out of there in one piece, will match you drink for drink and break your hands if you piss her off.

GROUND

She taps a finger on the back of my hand where the line from the graft has long since vanished. “And you would’ve left him dead in a pool of blood on the floor in temp holding if I hadn’t stopped you, which would’ve been a whole mess of paperwork that we’d both have had to deal with. There’s no moral high ground here.”

📝 Agent Zola Kartheiser, forced into Authority service after conviction for illicit data trade, impossible to say no to, it's not technically murder if someone else does it for you.

TREE

“I got tenth, twenty-second, and seventy-third floor.” She pulls out another pack. “And these. Premium rooftops. These hit hard. Real hard. Wild abandon right at the start, a blast of freedom, then brutal panic just before the final rush. I was going to take out the last-minute regret, but I thought better of it. It’s part of what makes these so hot. I wouldn’t recommend them to most people, but I know you can handle them and I reckon they'd be right up your street. That what you’re after?”

📝 Viande McCavitt, aggressively talented engineer of mind-altering frequencies and prosthetic body parts, thinks you're a very interesting machine, would kill you for getting in her way...haha only joking (but not really)

Tagging @felixwriting, @forthesanityofsome, @juls-writes and @kaiusvnoir. If you'd like to do it, the words to search your WIP(s) for are smoke, cloud, mist, and steam 💜

#my writing#project frequency#bridge from ashes#writeblr tags#word find tag#writers on tumblr#writeblr community#am writing#original fiction#tumblr writers#writeblr#writing community#original writers


Lando works his butt off, and his knowledge is surprising. Even Ted has said how Lando can look at data and then get the needed results from it... Lando can explain parts of the car and what they do. I remember in 2021 he watched race highlights on Twitch and gave good explanations of situations etc., even about other drivers and what had happened. He doesn't go off vibes.

Lando gets to know his team: he brought everyone at the MTC expensive water bottles, takes them out, and helps take his car apart. He is still in contact with his ex head engineer Marc; Marc even did Quadrant's Pitwall this year.

Lando will do lots of McLaren sponsorship events across different time zones during a non-race week just before his home race, a.k.a. his busiest race of the year, whilst his teammate has a week off.

He's apparently one of the drivers who spends the longest signing autographs at races.

our boy is great isn't he? 🥹 🫶


Reverse engineering the unreleased GameBoy Printer COLOR

For a long time, when I looked at the graphics for the 64DD title Mario Artist Paint Studio, the successor to Mario Paint, I found a bunch of graphics and text that seemed to be for printing images. I was not entirely sure how that worked, or how to even access it. I didn’t know enough reverse engineering “techniques” to even bother while I was translating all the Mario Artist titles.

Fast forward to this month: with new tools that I wrote, I started to think again about this feature, because it seemed like fully fledged, working overlay code. So I went on and tried to find the other overlays, such as the Game Boy Camera menu, to see how the game loaded and accessed an overlay.

After finding the LBAs for those overlays, I tried to backtrack the loading process until I found what seemed to be a set of functions to load, init and access the overlay. Bingo! One of them accesses the unused printer overlay. So all I needed was to force a way to access it, such as repointing a button’s purpose.

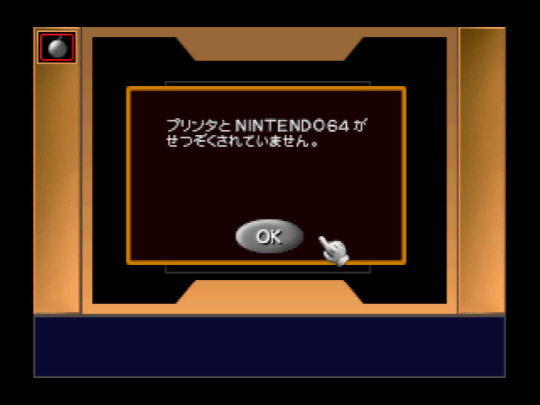

Okay, so I’m greeted with an error saying that the Printer is not connected. Now it was time to find out what it actually does to detect the printer. Turns out it expects the Transfer Pak, as there are errors involving the “64GB Cable” (the Japanese name)? With JoyBus commands that I’ve never seen?

I eventually responded with what it wanted using a custom script, and then I saw commands that are pretty much the same as the ones used for the GameBoy Printer.

Thank you Shonumi for this great article about the technical aspects of the GameBoy Printer, otherwise I would never have known: https://shonumi.github.io/articles/art2.html

So, okay, we need to “emulate” an unreleased Transfer Pak Link cable feature, alongside some response from the printer.

For people who want some technical information, here’s how the Transfer Pak Link Cable feature works: it uses JoyBus command 0x14 to write data (0x23 bytes to send, 0x01 byte to receive) and 0x13 to read data (0x03 bytes to send, 0x21 bytes to receive). (Oddly close to the commands used for the GameCube to access the GBA...)

Those commands always use 0x20 bytes of data and 1 byte for some kind of CRC, which, sorry, I haven’t taken the time to figure out; my script just fetches the CRC output.
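Putting those sizes together, the framing can be sketched in Python as below. Only the command bytes, the send/receive lengths, and the 0x20-byte data block come from this post; treating the two remaining request bytes as a 16-bit big-endian address is an assumption borrowed from the layout of the standard Controller Pak read/write commands. The CRC byte is passed through rather than computed, just as the script described above only fetches it.

```python
import struct

CMD_LINK_READ = 0x13   # sends 0x03 bytes, receives 0x21 (33) bytes
CMD_LINK_WRITE = 0x14  # sends 0x23 (35) bytes, receives 0x01 byte
BLOCK_SIZE = 0x20      # 32 data bytes per transfer

def build_write(address, data):
    """0x14 request: command byte + 16-bit address (assumed) + 32 data bytes = 35 bytes."""
    if len(data) != BLOCK_SIZE:
        raise ValueError("write payload must be exactly 32 bytes")
    return struct.pack(">BH", CMD_LINK_WRITE, address) + bytes(data)

def build_read(address):
    """0x13 request: command byte + 16-bit address (assumed) = 3 bytes."""
    return struct.pack(">BH", CMD_LINK_READ, address)

def parse_read_response(response):
    """Split a 33-byte 0x13 response into 32 data bytes and the trailing CRC byte."""
    if len(response) != BLOCK_SIZE + 1:
        raise ValueError("read response must be exactly 33 bytes")
    return bytes(response[:BLOCK_SIZE]), response[BLOCK_SIZE]
```

For example, `build_write(0x0000, bytes(32))` yields a 35-byte (0x23) request; the address value there is arbitrary.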

I plan to write more about the Transfer Pak communication aspects later, as I want to focus on the GameBoy Printer Color.

Eventually, if you “emulate” the Transfer Pak link cable, it sends GameBoy Printer commands and then receives the so-called “keepalive” byte, usually 0x81. And sure enough, I get a Pocket Printer screen.

You get a screen like this, where you can print vertically, horizontally, or in vertical strips, at a bigger resolution. You can zoom into the picture a bit by hovering the pointer over it. You can set the paper color to get a preview of the output and set the brightness and contrast, not unlike the GameBoy Camera, and then there’s a button to print. It is slow, but I found one undocumented command for the GameBoy Printer: command 0x08. It seems that when there’s an error, this command is sent and may interrupt what the GameBoy Printer is doing? Either way, I’m sure Shonumi would be interested in verifying this command.

However, this article is about the GameBoy Printer COLOR. Turns out the game expects more than one keepalive byte: it expects 0x81... but it also expects 0x82. What happens if we use that one?
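For readers unfamiliar with the protocol in Shonumi's article, here is a rough Python sketch of one printer packet and an emulated reply. The packet layout (magic bytes 0x88 0x33, command, compression flag, 16-bit little-endian data length, data, 16-bit little-endian checksum summed from the command byte through the last data byte, then two bytes during which the printer answers with a keepalive byte and a status byte) is the one documented for the original GameBoy Printer; assuming the Color printer frames packets the same way is a guess. The only Color-specific detail taken from this post is the 0x82 keepalive.

```python
import struct

MAGIC = b"\x88\x33"
KEEPALIVE_NORMAL = 0x81  # original GameBoy Printer
KEEPALIVE_COLOR = 0x82   # makes Paint Studio report a Pocket Printer Color

def build_packet(command, data=b"", compressed=False):
    """Build a printer packet as the host (the game) would send it."""
    body = struct.pack("<BBH", command, int(compressed), len(data)) + data
    checksum = sum(body) & 0xFFFF
    # The two trailing 0x00 bytes are clocked out while the printer replies.
    return MAGIC + body + struct.pack("<H", checksum) + b"\x00\x00"

def printer_reply(packet, color=False, status=0x00):
    """Return the two bytes an emulated printer sends back for `packet`.

    A real printer reports a bad checksum through its status byte;
    raising an exception here just keeps the sketch short.
    """
    if packet[:2] != MAGIC:
        raise ValueError("missing 0x88 0x33 magic")
    (length,) = struct.unpack_from("<H", packet, 4)
    body = packet[2:6 + length]
    (checksum,) = struct.unpack_from("<H", packet, 6 + length)
    if sum(body) & 0xFFFF != checksum:
        raise ValueError("checksum mismatch")
    keepalive = KEEPALIVE_COLOR if color else KEEPALIVE_NORMAL
    return bytes([keepalive, status])
```

With `color=True`, the same status (0x0F) packet is answered with 0x82 instead of 0x81, which is the switch that exposes the Color menu.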

Pocket Printer Color detected, with a full color picture at the center, and more settings. This time there are 5 print settings: slow horizontal/vertical, fast horizontal/vertical (difference explained later), and vertical strips. We are also allowed to change the brightness, contrast, and hue. And of course, the print button.

This is pretty huge, because not only do we get to emulate unreleased Transfer Pak Link Cable support, but also an unreleased, heck, even unannounced GameBoy Printer Color, and Paint Studio provides a fully functional menu for it.

So here are the commands for that hardware:

0x01 - Init
0x02 - Print Settings (used before copying)
0x04 - Copy Pixel Line
0x06 - Print? (used after copying)
0x08 - Stop?
0x0F - Nop (Status)

It is pretty similar to the original Printer, but some things are performed differently. First, it uses command 0x02, which now takes 5 bytes whose meaning I’m not sure of (magic bytes, after all), except that it may set the horizontal resolution, which is either 320 (slow print) or 160 (fast print).

Then it uses command 0x04, which copies a full pixel line in either resolution as a linear RGBA5551 line, just like the N64 format, which means the printer supports a pretty good range of colors.
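Five bits per channel works out to 2^15 = 32,768 colors, plus a 1-bit alpha flag. The Python sketch below unpacks pixels into 8-bit channels; it assumes the N64 texture layout (red in the top five bits, alpha in the lowest bit) and big-endian byte order for each 16-bit pixel, matching the N64. Neither has been verified against the actual printer data.

```python
def _expand5(c):
    """Scale a 5-bit channel (0..31) to 8 bits (0..255) by bit replication."""
    return (c << 3) | (c >> 2)

def rgba5551_to_rgba(pixel):
    """Unpack a 16-bit RGBA5551 word laid out as RRRRRGGGGGBBBBBA."""
    r5 = (pixel >> 11) & 0x1F
    g5 = (pixel >> 6) & 0x1F
    b5 = (pixel >> 1) & 0x1F
    a1 = pixel & 0x1
    return _expand5(r5), _expand5(g5), _expand5(b5), a1

def decode_line(line_bytes):
    """Decode one copied pixel line, assuming big-endian 16-bit pixels."""
    if len(line_bytes) % 2:
        raise ValueError("a line must contain whole 16-bit pixels")
    return [
        rgba5551_to_rgba(int.from_bytes(line_bytes[i:i + 2], "big"))
        for i in range(0, len(line_bytes), 2)
    ]
```

At 2 bytes per pixel, a 320-pixel slow-print line is 640 bytes and a 160-pixel fast-print line is 320 bytes.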

After copying all the lines, command 0x06 is used... twice. I’m not sure what it does, but the menu asks if you want to print the image again, and if I say yes, it only sends that command again. That means the printer has a buffer large enough to hold a linear 320x240 RGBA5551 image, and that this command is related to printing the image.
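As a rough check of how big that buffer must be, assuming 2 bytes per RGBA5551 pixel and no compression:

```latex
320 \times 240 \ \text{pixels} \times 2 \ \text{bytes/pixel} = 153\,600 \ \text{bytes} = 150 \ \text{KiB}
```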

As far as I know, the printer status byte definition is very similar to, if not the same as, the regular printer’s.

I have made a script for Project64 that “emulates” the necessary stuff so you can mess around with this feature: https://github.com/LuigiBlood/EmuScripts/blob/master/N64/Project64/DMPJ_PocketPrinter.js

You can select the Printer Type between Normal and Color, and you have to click the Save & Load button to access this feature. Disable the script to recover the use of that button. There’s also a bit of documentation inside.

It also creates a RAW file output of what’s copied, so you can take a look at the image with a proper viewer.
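If you don't have a viewer that understands RGBA5551, a few lines of Python can turn the dump into a PPM file, which most image viewers open. This sketch assumes the RAW file is simply the copied lines concatenated, with each pixel a big-endian RGBA5551 word and a known width (320 or 160); the actual layout written by the Project64 script may differ, so check the documentation inside the script first. The alpha bit is ignored, and the file names in the usage note are placeholders.

```python
from pathlib import Path

def raw_rgba5551_to_ppm(raw_path, ppm_path, width=320):
    """Convert a headerless big-endian RGBA5551 dump into a binary PPM (P6).

    Returns (width, height). The height is derived from the file size,
    e.g. a 320x240 dump is 320 * 240 * 2 = 153,600 bytes.
    """
    data = Path(raw_path).read_bytes()
    row_bytes = width * 2
    if len(data) % row_bytes:
        raise ValueError("file size is not a whole number of pixel lines")
    height = len(data) // row_bytes
    rgb = bytearray()
    for i in range(0, len(data), 2):
        pixel = int.from_bytes(data[i:i + 2], "big")
        for shift in (11, 6, 1):  # red, green, blue fields; alpha bit dropped
            c = (pixel >> shift) & 0x1F
            rgb.append((c << 3) | (c >> 2))  # scale 5 bits to 8 bits
    header = f"P6\n{width} {height}\n255\n".encode("ascii")
    Path(ppm_path).write_bytes(header + bytes(rgb))
    return width, height
```

Usage would look like `raw_rgba5551_to_ppm("printer_output.raw", "printer_output.ppm")`, passing `width=160` for a fast print.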

This is kind of an experimental blog post, as I’m not very good at writing this stuff, but I hope this gives a good view of the GameBoy Printer Color.

#N64#64DD#Mario Artist#Paint Studio#Game Boy#Printer#Color#GB#GBC#reverse engineering#emulation#Nintendo#Nintendo 64


The Role of a Senior Data Engineer: Key Responsibilities and Skills

Data Engineers play a crucial role in today's data-driven world. They collect, process, and store data, enabling data scientists and analysts to utilize it effectively. As the data landscape evolves, the demand for Senior Data Engineers continues to rise. In this article, we will explore the evolving role of a Senior Data Engineer, the essential skills required to excel in it, and how a course at Datavalley can help you acquire and hone these skills.

The Evolving Role of a Data Engineer

Senior data engineers are accountable for building and maintaining data collection systems, pipelines, and management tools. They oversee the work of junior data engineers as well as the architectures themselves. While this role has always been essential, it has evolved significantly in recent years due to the proliferation of big data and cloud technologies. Here are some key aspects of a Senior Data Engineer's responsibilities:

1. Data Ingestion:

One of the primary tasks of a Data Engineer is to design systems that can efficiently ingest data from various sources, such as databases, APIs, and streaming platforms. This requires expertise in tools like Apache Kafka, Apache NiFi, or cloud-based solutions like AWS Kinesis.
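Whatever the transport, a recurring ingestion pattern is incremental loading: remember a high-water mark, such as the latest `updated_at` value already ingested, and fetch only newer records on each run. The sketch below shows the pattern in plain Python, with an in-memory list standing in for the source and a dict standing in for the checkpoint store; both, and the field names, are hypothetical.

```python
from datetime import datetime

# Hypothetical source records, e.g. rows returned by an API or a database query.
SOURCE = [
    {"id": 1, "updated_at": "2024-01-01T10:00:00"},
    {"id": 2, "updated_at": "2024-01-01T11:30:00"},
    {"id": 3, "updated_at": "2024-01-02T09:15:00"},
]

def _ts(record):
    """Parse a record's ISO-8601 timestamp."""
    return datetime.fromisoformat(record["updated_at"])

def ingest_incrementally(records, checkpoint):
    """Return records newer than the stored watermark and advance the watermark.

    `checkpoint` is a plain dict standing in for durable state
    (a file, a database table, or a consumer offset store).
    """
    watermark = checkpoint.get("watermark", datetime.min)
    batch = sorted((r for r in records if _ts(r) > watermark), key=_ts)
    if batch:
        checkpoint["watermark"] = _ts(batch[-1])
    return batch
```

The strict `>` comparison means a late record carrying exactly the watermark timestamp would be skipped; production pipelines often add a tie-breaker such as the primary key, or re-read a small overlap window and deduplicate.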

2. Data Modeling and Transformation:

Data engineers play a critical role in creating data models that make data easier to access and understand for its consumers, from analysts and data scientists to non-technical stakeholders.

Data Engineers need to create and manage data pipelines that transform raw data into a format suitable for analysis. Proficiency in SQL is crucial for data modeling, and ETL (Extract, Transform, Load) tools like Apache Spark or Talend are commonly used for data transformation.

3. Collaborative Engagement

Daily meetings are a fundamental aspect of a data engineer's routine. These meetings often include the daily scrum, where team members discuss recent achievements, ongoing tasks, and potential obstacles.

Additionally, data engineers actively participate in cross-functional meetings, collaborating with data scientists, data analysts, product managers, and application developers.

4. Data Storage and Data Query Optimization:

Selecting the right data storage solution is critical for performance and scalability. Data Engineers should be well-versed in traditional databases (e.g., PostgreSQL, MySQL) as well as NoSQL databases (e.g., MongoDB, Cassandra) and cloud-based storage options (e.g., Amazon S3, Azure Data Lake Storage).

As data volumes continue to grow, data engineers are tasked with optimizing data queries to ensure efficient data retrieval. This optimization process may involve the creation of indexes, data restructuring, or data aggregation to facilitate quicker queries.
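In SQLite, for example, you can see the effect of an index with `EXPLAIN QUERY PLAN`. This sketch uses a hypothetical `events` table. The exact wording of the plan output varies between SQLite versions, so it only checks whether the plan mentions the index rather than matching a specific string.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, user_id INTEGER, kind TEXT)")
conn.executemany(
    "INSERT INTO events (user_id, kind) VALUES (?, ?)",
    [(i % 1000, "click" if i % 3 else "view") for i in range(10_000)],
)

QUERY = "SELECT COUNT(*) FROM events WHERE user_id = ?"

def plan(sql, params=()):
    """Return the detail column of SQLite's query plan as one string."""
    rows = conn.execute("EXPLAIN QUERY PLAN " + sql, params).fetchall()
    return " | ".join(row[-1] for row in rows)

before = plan(QUERY, (42,))  # typically a full scan of the events table
conn.execute("CREATE INDEX idx_events_user ON events (user_id)")
after = plan(QUERY, (42,))   # should now search via idx_events_user

print("before:", before)
print("after: ", after)
```

The same habit applies to any database: read the query plan before and after a change, rather than guessing whether an index is used.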

5. Data Quality and Governance:

Ensuring data accuracy and compliance with regulations is vital. Data Engineers must implement data quality checks, data lineage tracking, and access controls to maintain data integrity.
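A minimal sketch of rule-based quality checks, assuming records arrive as Python dicts. The three rules (required fields, unique IDs, value ranges) and the field names are common illustrative examples, not a complete framework; dedicated validation tools go much further.

```python
from collections import Counter

def check_quality(rows, required=("id", "email"), amount_range=(0, 10_000)):
    """Run simple data quality rules and return a list of human-readable issues."""
    issues = []
    # Completeness: required fields must be present and non-empty.
    for i, row in enumerate(rows):
        for field in required:
            if row.get(field) in (None, ""):
                issues.append(f"row {i}: missing {field}")
    # Uniqueness: primary keys must not repeat.
    counts = Counter(row.get("id") for row in rows)
    for key, n in counts.items():
        if key is not None and n > 1:
            issues.append(f"id {key}: appears {n} times")
    # Validity: numeric values must fall inside the expected range.
    low, high = amount_range
    for i, row in enumerate(rows):
        amount = row.get("amount")
        if amount is not None and not low <= amount <= high:
            issues.append(f"row {i}: amount {amount} out of range")
    return issues
```

In a pipeline, a non-empty result would typically fail the run or quarantine the batch before it reaches downstream consumers.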

6. Cloud Computing:

The cloud has revolutionized data engineering. Familiarity with cloud platforms like AWS, Azure, or Google Cloud is essential, as many organizations are migrating their data infrastructure to the cloud.

7. DevOps and Automation:

Data Engineers often collaborate closely with DevOps teams to automate deployment, scaling, and monitoring of data pipelines. Proficiency in tools like Docker and Kubernetes is increasingly valuable.

Data engineers are instrumental in managing the data infrastructure that underpins data processing and storage. This aspect of their role often encompasses a combination of development and operational tasks, particularly in larger teams.

8. Facilitating Data Flow

In essence, data engineers serve as intermediaries between data sources, storage systems, and end-users. Their role is to ensure the smooth flow of data within an organization, making certain that data is readily available and accessible to those who require it.

By bridging the gap between technology and business, data engineers empower analysts and data scientists to focus on deriving insights rather than grappling with data retrieval and manipulation.

9. Data Security:

Protecting sensitive data is a top priority. Knowledge of encryption techniques, IAM (Identity and Access Management), and security best practices is crucial.
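One concrete technique in this area is pseudonymizing identifiers before they leave a secure zone. The sketch below uses a keyed hash (HMAC-SHA-256 from Python's standard library), so the same email always maps to the same token without the email itself being stored. Note that this is hashing, not encryption: the original value cannot be recovered. The hard-coded key is a placeholder; in practice it would come from a secrets manager.

```python
import hashlib
import hmac

# Placeholder only: load the real key from a secrets manager, never from source code.
SECRET_KEY = b"replace-with-a-managed-secret"

def pseudonymize(value, key=SECRET_KEY):
    """Return a stable, non-reversible token for a sensitive value."""
    normalized = value.strip().lower().encode("utf-8")
    return hmac.new(key, normalized, hashlib.sha256).hexdigest()

token_a = pseudonymize("Alice@Example.com")
token_b = pseudonymize(" alice@example.com ")
print(token_a == token_b)  # True: normalization maps both spellings to one token
```

The secret key matters: a plain, unkeyed SHA-256 of an email can be reversed by hashing a list of candidate emails, whereas an HMAC token cannot be reproduced without the key.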

Essential Skills for a Senior Data Engineer

Now that we've outlined the evolving role of a Senior Data Engineer, let's delve into the essential skills needed to excel in this field.

1. Strong Programming Skills:

A Senior Data Engineer should be proficient in at least one programming language commonly used in data engineering, such as Python, Java, or Scala. These languages are essential for building data pipelines and working with big data frameworks.

2. Proficiency in SQL:

SQL is the backbone of data manipulation and querying. A Senior Data Engineer should have a deep understanding of SQL to design efficient database schemas and perform complex data transformations.

3. Data Modeling:

Creating effective data models is crucial for organizing data and ensuring its accessibility and usability. Skills in data modeling techniques like entity-relationship diagrams and star schemas are invaluable. Advanced data engineering certifications for Snowflake’s cloud-based platform can also be beneficial.
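A star schema keeps measurable events in a central fact table and descriptive attributes in surrounding dimension tables. Here is a minimal SQLite sketch with one fact table and two dimensions; the retail-style table and column names are hypothetical.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    -- Dimension tables: descriptive attributes, one row per entity.
    CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, name TEXT, category TEXT);
    CREATE TABLE dim_date (date_key INTEGER PRIMARY KEY, full_date TEXT, month TEXT);

    -- Fact table: one row per sale, with foreign keys into each dimension.
    CREATE TABLE fact_sales (
        product_key INTEGER REFERENCES dim_product(product_key),
        date_key    INTEGER REFERENCES dim_date(date_key),
        quantity    INTEGER,
        revenue     REAL
    );

    INSERT INTO dim_product VALUES (1, 'Laptop', 'Electronics'), (2, 'Desk', 'Furniture');
    INSERT INTO dim_date VALUES (20240105, '2024-01-05', '2024-01'), (20240210, '2024-02-10', '2024-02');
    INSERT INTO fact_sales VALUES (1, 20240105, 2, 2400.0), (2, 20240105, 1, 300.0), (1, 20240210, 1, 1200.0);
    """
)

# A typical analytical query: join the fact table to its dimensions and aggregate.
report = conn.execute(
    """
    SELECT d.month, p.category, SUM(f.revenue) AS revenue
    FROM fact_sales AS f
    JOIN dim_product AS p ON p.product_key = f.product_key
    JOIN dim_date AS d ON d.date_key = f.date_key
    GROUP BY d.month, p.category
    ORDER BY d.month, p.category
    """
).fetchall()
print(report)
```

Because every analytical query follows the same fact-to-dimension join pattern, star schemas tend to be easy for analysts to query and for warehouses to optimize.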

4. Big Data Technologies:

Familiarity with big data technologies such as Apache Hadoop, Spark, and Flink is essential. These tools are used for processing and analyzing massive datasets efficiently.

5. Cloud Platforms:

As mentioned earlier, cloud platforms like AWS, Azure Cloud Services, and Google Cloud Platform are increasingly central to data engineering. Knowledge of these platforms, including services like AWS Lambda and Azure Data Factory, is a must.

6. ETL Tools:

Data Engineers need expertise in ETL tools like Apache NiFi, Talend, or cloud-based alternatives to streamline data integration and transformation processes.

7. Database Management:

A deep understanding of both SQL and NoSQL databases is essential. Data Engineers should be able to design, optimize, and manage databases effectively.

8. Data Pipeline Orchestration:

Tools like Apache Airflow or cloud-native solutions like AWS Step Functions are essential for orchestrating complex data pipelines.
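At its core, an orchestrator runs tasks in an order that respects their dependencies. The sketch below uses Python's standard `graphlib.TopologicalSorter` (Python 3.9+) to compute a valid run order and to show which tasks could run in parallel at each step. The task names are hypothetical, and real orchestrators such as Airflow add scheduling, retries, and monitoring on top.

```python
from graphlib import TopologicalSorter

# Each task maps to the set of tasks it depends on (hypothetical pipeline).
PIPELINE = {
    "extract_orders": set(),
    "extract_customers": set(),
    "clean_orders": {"extract_orders"},
    "build_sales_mart": {"clean_orders", "extract_customers"},
    "refresh_dashboard": {"build_sales_mart"},
}

def run_in_waves(graph):
    """Group tasks into waves: every task in a wave has all dependencies done."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # these could run in parallel
        waves.append(ready)
        sorter.done(*ready)  # pretend each task succeeded
    return waves

for i, wave in enumerate(run_in_waves(PIPELINE), start=1):
    print(i, wave)
```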

9. Version Control:

Proficiency with version control systems like Git is essential for collaboration and code management in data engineering projects.

10. Interpersonal Skills:

Communication, problem-solving, and teamwork are equally important for a Senior Data Engineer. They often collaborate with data scientists, analysts, and business stakeholders, so effective communication is vital.

Why Datavalley for Data Engineer?

To acquire and master these essential skills for a Senior Data Engineer, consider enrolling in a course at Datavalley. Datavalley offers a comprehensive Data Engineer program designed to provide hands-on experience and in-depth knowledge in all the areas mentioned above. Here's why Datavalley is the right choice:

Comprehensive Curriculum: Our courses cover Python, SQL fundamentals, Snowflake advanced data engineering, cloud computing, Azure cloud services, ETL, Big Data foundations, DevOps, Data lake, AWS data analytics.

1. Expert Instructors: Datavalley's courses are led by experienced data engineers who have worked in the industry for years. Experts teach the modules to broaden your understanding and provide industry insights.

2. Hands-on Projects: The best way to learn is by doing. Datavalley's curriculum includes hands-on projects that allow you to apply your skills in real-world scenarios.

3. Cutting-edge Tools and Technologies: Datavalley stays up-to-date with the latest tools and technologies used in the field of data engineering. You'll learn the most relevant and in-demand skills.

4. Industry Networking: We provide opportunities to connect with industry professionals, helping you build a network that can be invaluable in your career.

5. Career Support: Datavalley goes beyond teaching technical skills. It offers career support services, including resume reviews and interview coaching, to help you land your dream job.

6. Project-Ready, Not Just Job-Ready: Our program prepares you to start working and carry out projects with confidence.

7. On-call Project Assistance After Landing Your Dream Job: Our experts will help you excel in your new role with 3 months of on-call project assistance.

Conclusion

Becoming a Senior Data Engineer requires a diverse set of technical skills and a deep understanding of data infrastructure. As data continues to grow in importance, so does the demand for skilled data engineers.

Datavalley's Big Data Engineer Masters Program can provide you with the knowledge and practical experience you need to excel in this role. Whether you're looking to advance your career or enter the field of data engineering, Datavalley is the right choice to help you achieve your goals. Invest in your future as a Senior Data Engineer and unlock the potential of data on your journey.

#datavalley#dataexperts#data engineering#data enginner#data visualizations#data engineering training#data engineering course


Soft Skills For Engineering Students To Land a Better Job - Arya College

The top engineering colleges in Jaipur offer many courses, such as Mechanical, Production, Electronics, Design, Software, and Civil Engineering, and in all of them communication is important.

Lalani Jones, Engineering Vice President at Tachyon Inc. (San Diego), says: “We look for people who can lead a team of four to six people, motivated, and a person who can quickly learn which people are best at doing what.”

Whether it’s a start-up or a big organization, it’s important to use these skills very effectively and give solutions accordingly; engineers who do this are called Global Engineers.

Many employers choose to hire skills rather than people.

The Global Engineers must keep track of what is happening in the global market to identify gaps in their knowledge, skills, and attitude, so they know where to focus. They must be proactive about career development so they can strategically plan their employment and learning activities, and they must learn how to learn so they can fill those gaps.

An Engineering Graduate Today:-

Private engineering colleges in Jaipur teach several important skills and attributes that many firms look for, in two broad areas:-

Defining Skills:- Technical skills: a sound knowledge of the engineering fundamentals within their discipline, built on a solid base of mathematical know-how.

Enabling Skills:- Soft skills.

Some Of The Soft Skills Are:-

Written or Verbal Skills:- Communication means presenting information clearly so that it is better understood, both in spoken form, such as presentations and training sessions, and in written form, such as emails, memos, strategy documentation, etc.

Stress Control Skills:- To reduce stress in the workplace you need this skill; with it you can identify the trigger areas and eliminate them.

Public Dealing Skills:- A good public dealer negotiates to get the best outcome and value for all parties. They are the people who can capture the attention of an audience and persuade it in a certain direction.

Creative and strategic planner:- These skills are best for business strategies and implement them. Engineers should have this soft skill to plan their projects, make efficient use of resources, and achieve the best results. creativity is a Important skill for engineers. It gives the ability to think outside the box.

Research, ethics, and Commitment with time:- Research is a most important skill of an Engineer without this they can’t process and develop a new idea. Making a decision that affects human beings and must be safe to use, sustainable, and beneficial to society, in the long run, Ethics, Dedication, and commitment are meant toward any organization for completing a task within the time frame.

Adaptability:- Engineers need to adopt new technology and skills that change day by day and also run any large-scale project effectively and with efficiency.

BTech Collage of Enginnering in Rajasthan have many goof teachers for soft skill for Engineers

Future Engineers

Information Sources:- Can find information about anything quickly and knows how to act on and use that information.

Can Do Anything:- Understands the engineering basics to the degree that he or she can quickly assess what needs to be done, can acquire the tools needed, and can use these tools proficiently.

Works With Anyone, Anywhere:- Has the communication skills, team skills, and understanding of global and current issues necessary to work effectively with other people.

Makes Imagination a Reality:- Has the entrepreneurial spirit, the imagination, and the managerial skills to identify needs, come up with new solutions, and see them through.

Engineers Must Have These Skills Too:-

An ability to learn new skills and apply knowledge of mathematics and science.

An ability to analyze data and design new ideas.

An ability to understand requirements and design a process to meet desired needs.

An ability to function on multidisciplinary teams.

An ability to identify, formulate, and solve problems.

An understanding of professional and ethical responsibility.

An ability to communicate effectively.

An ability to keep learning new things constantly.

A knowledge of contemporary issues.

Conclusion

The best engineering colleges in Rajasthan say that the rapid changes the world is currently going through, coupled with the changes in engineering education that began in the nineties, are likely to result in extensive reform of engineering education. The new structure will continue to be based on solid preparation in mathematics and science. A good engineer must be able to do more than just apply technical skills. He or she will be responsible for creating new ideas and solutions and seeing them through. Not only must the engineer innovate, but he or she must also be able to help make the innovation a reality through communication skills.


Text

Evidence Based Web #1

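I’m just really, really interested in this: the evidence-based web.

Finding myself with a strong interest in the evidence-based web, I’ve started surveying the current situation around it, covering both the progress of web documents and the methodologies behind evidence-based information.

The keywords would be things like: data-driven business, A/B tests, AI, machine learning, binary machine learning, labeling, causation modeling, general AI (GAI), next-generation web crawlers, web metadata, academic science, statistics, and the universal graph.

The keywords are broad. They cover not only universities and research institutions that create ideal environments in fields such as psychology, medicine, health, productivity, economics, romance, sex, social stress, and decision-making, and apply narrowly defined scientific methods, statistics, and analysis techniques to them. They also include viewing the startup economy broadly as a kind of evidence-based empirical activity: pre-seed as the hypothesis, seed as the minimal validation of that hypothesis, and, once the hypothesis is validated, PDCA cycles that verify and compare the causal graph edge by edge, with each edge (causal relationship) isolated (comparing integrated ROI and testing the likelihood of each edge, i.e. each causation), moving on to the early stage and beyond; the social dynamics by which society continuously backs all this; and what I’d call the real definition of creative capital. It’s also the same reason companies and all sorts of other actors are heading toward data, causation, and likelihood. For us AI researchers and developers, there is a scenario in which the universal graph ultimately becomes a causal graph, built by applying machine learning to binaries derived from human-discovered labels at an appropriate granularity, with a language-label graph built on top of it, all integrated into something like the core algorithm of Google Search that grasps the value of information. Once a thinking system is added to that (we’ve already reached the point where a thinking system like the integrated-ROI judgment I built can do it), a thinking god becomes possible. In that scenario, “pulling out causal relationships one by one at every layer, attaching solid evidence to each, releasing them onto the web, and having everyone verify, replicate, and send them back” was a necessary prerequisite, and the fact that it is now starting to be met feels almost exactly like my own “Homo Deus is just around the corner” scenario. Of course, it will probably come about through a more complex mix of factors, but the evidence-based web appears as the opening skirmish.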

Ah, let me put it into English... OK, so: we’ve seen huge, amazing growth in scientific documents and exchanges, with many, many academic fields publishing their evidence-based papers, which follow rigid verification processes for causation. Our impression of what is happening with evidence-based scientific papers is that the speed keeps increasing, the granularity keeps getting finer, and the span between one paper being announced and published and other research groups reacting to it keeps getting shorter. It reminds me of the birth of the blogosphere and SNS, so it’s like the Web. So I say something is coming: the evidence-based web. And it’s not only academia; all of us, companies (startups or big corporations), individuals, small groups and large ones, are becoming data-driven, generating “causation evidence”, and publishing it on the web.

You may have heard about this: a general AI, which can live forever, unlike us who die so fast, will hold inside it the whole universal causation graph, at every granularity, as detailed and as huge as possible, a graph of causal relationships that is fully computed for all the states and generations of future moments. It calculates the best thing it should do, makes decisions based on that, and acts on them. It’s the algorithm of God, a.k.a. Homo Deus.

On top of this, how did the startup class come into being? Richard Florida described the situation and the rapid birth of the creative class, the people who keep doing creative work. I want to add something to his great finding: the fast growth in the scale of the connected economy, and the technology innovation cycle in which the outlet (output) of some nodes (information nodes, or companies and projects) is connected to the inlet of other projects and companies, which have now invented fixed, reusable components called stock operations. It’s not only serial entrepreneurs who take the recursive change-agent role; developers (who create finer-grained GitHub code that others fork and refine, while still other engineers use it to build something else and, as a side effect, create innovation through services and businesses) and all kinds of people around them take it too. These quick recursive cycles kicked off around 2000, and their speed is now obviously outpacing the old society. Yay? So the definition of a startup now is an entity that does business-based causation verification. That’s the key difference between what the “creative class” has been doing and what the startup class is doing. I wonder why society invented this startup class, drawing a lot of venture capital out of old capital assets to keep running these trials: look at the world around you, find its problems, see the causations around them, look into the deeper parts of people’s minds, find the causations, doubt them, doubt them again, build a set of assumptions, then start the startup, hire developers and artists, ship it, and watch the results through visible variables (KPIs) that follow the causal outcomes. In a word, they are verifying it.

I’m tired. Specifics from the next post on.


Photo

https://ecudepot.com/product/mb-48v-test-platform/ Mercedes-Benz W205, W213, W222, and W167 chassis mild-hybrid cars with a 48-volt lithium battery commonly report fault codes such as B183349, B183371, B183384, and B183319. The repair method is no longer a secret: this test bench lets repair engineers check the status of the battery pouch and the DC-DC voltage inverter, run diagnostics, read the data stream, and simulate a car start so that the battery outputs 48 volts. #MercedesBenzHybrid #MildHybrid #48VlithiumBattery #MHEV #MercedesMHEV #Mercedes48V #MercedesBatteryPouch #MercedesDCDC #ActuatorBlocked #b183349 #b183371 #B183384 #B183319 #DCDCconverter #VoltageInverter #PowerConverter


Text

URGENT: Dutch multinational has more than 15 onshore and offshore job openings in RJ, MG, and PR

The global company Fugro, a specialist in geographic data, is recruiting professionals from Minas Gerais, Paraná, and Rio de Janeiro to apply for its open positions. There are many opportunities, and one of them could be yours! To apply, just sign up for one of the selection processes and wait to be called. However, only apply after checking the requirements of the position you want.

Multinational Fugro opens more than 15 job openings for professionals in Belo Horizonte, Pinhais, and Rio das Ostras

Before you learn how to apply, please note that the PetroSolGas website has no connection with these selection processes. We only publicize them so that the job openings reach as many unemployed professionals as possible.

So, if you are interested in any of the positions listed, check whether you meet the requirements set by Fugro and apply as we explain below.

The job openings offered by Fugro are:

Airborne Sensor Operator — Marine, Rio das Ostras, Rio de Janeiro;

Commercial Analyst \ Analista Comercial, Belo Horizonte, Minas Gerais;

Commercial Assistant \ Assistente Comercial, Pinhais, Paraná;

C# Developer \ Desenvolvedor C#, Rio das Ostras, Rio de Janeiro;

Data Processor \ Analista Proc. De Dados Pl, Rio das Ostras, Rio de Janeiro;

Civil Engineering Intern \ Estagiário Engenharia Civil, Pinhais, Paraná;

Internship \ Estágio, Rio das Ostras, Rio de Janeiro;

Maritime QHSSE Coordinator \ Coord. Marit. QSMS, Rio das Ostras, Rio de Janeiro;

Offshore Data Processor \ Analista Proc. de Dados Offshore Pl, Rio das Ostras, Rio de Janeiro;

Pl Surveyor \ Ope Survey Pl, Rio das Ostras, Rio de Janeiro;

Proposal Analyst \ Analista de Propostas, Rio das Ostras, Rio de Janeiro;

Senior Innovation Engineer \ Engenheiro de Inovação Sênior, Rio das Ostras, Rio de Janeiro;

Software Engineer Team Lead — Land, Rio das Ostras, Rio de Janeiro;

Systems Support Engineer — Marine, Rio das Ostras, Rio de Janeiro;

Field Technician \ Técnico de Campo, Belo Horizonte, Minas Gerais;

Occupational Safety Technician \ Técnico em Segurança do Trabalho, Belo Horizonte, Minas Gerais.

Interested in one of these opportunities? Find out how to apply to the company’s selection processes

If any of these job openings interests you, don’t waste time! Apply soon, before another professional is called. After all, the company has not set a closing date for any of the openings, so they could close at any time.

As mentioned earlier, the company is offering positions in the states of Minas Gerais, Paraná, and Rio de Janeiro; more precisely, in the municipalities of Belo Horizonte, Pinhais, and Rio das Ostras.

So, if you don’t live in any of these cities, your chances of getting a position will be reduced, but not eliminated!

Fugro is always offering new opportunities; maybe next time one of them will be yours!

Now, if you live in one of these locations, just go to this link here and you will land on the application page. Then choose the position you want, review the requirements, and check whether they match your professional résumé.

If so, the long-awaited moment to apply has arrived. Fill in everything requested and wait for Fugro to contact you or respond about the selection process. We wish everyone the best of luck!

The post URGENTE: Multinacional holandesa está com mais de 15 vagas de emprego On e Offshore para o RJ, MG e PR appeared first on Petrosolgas.


Video

youtube

#26 Practical Python FUNCTIONS | Python Tutorial for Beginners Tek Solut...


Text

KNN Pros And Cons

Dataspoof.info

Welcome to dataspoof.info

Artificial Intelligence Community

About Us

Build Your Skills With Flexible Online Courses

Hi everybody, welcome to DataSpoof!

I’m Abhishek Singh, an AI engineer. I started DataSpoof as a passion, and it now reaches more than 40,000 readers worldwide by helping them learn data science and machine learning.

Here at DataSpoof, I cover all aspects of artificial intelligence. You will find free content on data science, machine learning, deep learning, computer vision, and big data.

Real-time notifications for COVID-19 cases using Python

In this tutorial, you will learn how to get real-time notifications for COVID-19 cases using Python. Like most people on Earth right now, I’m really worried about COVID-19. I find myself constantly checking my own health and wondering whether and when I will contract it. The current situation is very serious for all of us. We should stand together and fight this coronavirus pandemic; that is the best way to overcome it. Rather than sitting around and letting it hold me down, I decided to do my best and keep myself updated on COVID-19 cases. So I decided to build a real-time notification on my system that helps me keep track of these cases. In today’s tutorial, you will learn how to

K-Nearest Neighbors from scratch in Python

K-Nearest Neighbors is a simple algorithm that we can implement without any trouble. It is used for both classification and regression problems. The KNN algorithm is used in a variety of domains, such as medicine, banking, agriculture, and genomics. One cool application is customer churn prediction, where we predict whether a customer will cancel our subscription plan or stick with it. In the medical domain, we can use KNN to classify patients as healthy or diabetic.
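The idea can be made concrete with a minimal from-scratch sketch in Python. It assumes Euclidean distance, a brute-force search over every training point, and simple majority voting; the function names `euclidean` and `knn_predict` and the toy data are hypothetical illustrations, not DataSpoof’s own code, and the “healthy”/“diabetic” points are invented numbers, not real patient data.

```python
import math
from collections import Counter

def euclidean(a, b):
    """Straight-line distance between two equal-length feature vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def knn_predict(train_X, train_y, query, k=3):
    """Classify `query` by majority vote among its k nearest training points.

    Hypothetical helper for illustration: brute-force search over every
    training point, Euclidean distance, no feature scaling.
    """
    if not 1 <= k <= len(train_X):
        raise ValueError("k must be between 1 and the number of training points")
    # Pair each training point's distance to the query with its label,
    # then sort by distance only (labels are never compared).
    neighbours = sorted(
        ((euclidean(x, query), label) for x, label in zip(train_X, train_y)),
        key=lambda pair: pair[0],
    )
    # Keep the labels of the k closest points, nearest first.
    nearest_labels = [label for _, label in neighbours[:k]]
    # most_common lists equal counts in first-seen order, so a tied vote
    # goes to the label of the closer neighbour.
    return Counter(nearest_labels).most_common(1)[0][0]

# Invented toy data: two features per point, two well-separated groups.
TRAIN_X = [(1.0, 1.0), (1.5, 2.0), (2.0, 1.5), (6.0, 6.0), (6.5, 7.0), (7.0, 6.5)]
TRAIN_Y = ["healthy", "healthy", "healthy", "diabetic", "diabetic", "diabetic"]

if __name__ == "__main__":
    print(knn_predict(TRAIN_X, TRAIN_Y, (1.2, 1.4), k=3))  # healthy
    print(knn_predict(TRAIN_X, TRAIN_Y, (6.2, 6.6), k=3))  # diabetic
```

For regression, the same neighbour search would apply, but one would average the k neighbours’ numeric targets instead of voting. On real data, features are usually scaled first, since Euclidean distance is dominated by whichever feature has the largest range.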

https://www.dataspoof.info/
