#databricks testing

Text

How Databricks Unity Catalog and Datagaps Automate Governance and Validation

Data quality is the backbone of accurate analytics, regulatory compliance, and efficient business operations. As organizations scale their data ecosystems, maintaining high data integrity becomes more challenging.

The seamless integration between Databricks Unity Catalog and Datagaps DataOps Suite provides a powerful framework for automated governance and validation, ensuring that data remains accurate, complete, and compliant at all times.

In our previous discussion, we highlighted how Datagaps enhances metadata management, lineage tracking, and automation within Unity Catalog. This article takes the next step by diving into data quality assurance – a crucial component of enterprise-wide data governance.

By leveraging Datagaps Data Quality Monitor, organizations can implement automated validation strategies, reduce manual effort, and integrate real-time data quality scores into Unity Catalog for proactive governance. Let’s explore how these technologies work together to ensure high-quality, reliable data that drives better decision-making and compliance.

The Growing Need for Automated Data Quality Assurance

Modern enterprises manage vast amounts of structured and unstructured data across multiple platforms. Ensuring data accuracy, completeness, and consistency is no longer just a best practice – it’s a necessity for regulatory compliance and business intelligence.

Databricks Unity Catalog provides a centralized governance framework for managing metadata, access controls, and data lineage across an organization. By integrating with Datagaps Data Quality Monitor, enterprises can automate data validation, reduce errors, and gain deeper insights into data health and integrity.

6 Key Data Quality Dimensions

Effective data quality management revolves around six fundamental dimensions:

Accuracy – Ensuring data reflects real-world values without discrepancies.

Completeness – Verifying that all required fields and records are present.

Consistency – Maintaining uniformity across multiple data sources and systems.

Timeliness – Ensuring data is up-to-date and available when needed.

Uniqueness – Eliminating duplicate records and redundant data entries.

Validity – Enforcing compliance with defined formats, business rules, and constraints.

By addressing these dimensions, organizations can improve the trustworthiness of their data assets, enhance AI/ML outcomes, and comply with industry regulations.
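Three of these dimensions (completeness, uniqueness, validity) can be measured with very little code. The following is a minimal sketch in plain Python, not the Datagaps implementation; the sample records, field names, and email pattern are all hypothetical.

```python
import re
from collections import Counter

# Hypothetical sample records; field names are illustrative only.
records = [
    {"id": 1, "email": "a@example.com", "country": "US"},
    {"id": 2, "email": None, "country": "US"},
    {"id": 2, "email": "b@example", "country": "DE"},
]

# Assumed format rule: something@something.something
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")

def completeness(rows, field):
    """Share of rows where the field is present and not None."""
    filled = sum(1 for r in rows if r.get(field) is not None)
    return filled / len(rows)

def uniqueness(rows, field):
    """Share of rows whose field value occurs exactly once."""
    counts = Counter(r[field] for r in rows)
    return sum(1 for r in rows if counts[r[field]] == 1) / len(rows)

def validity(rows, field, pattern):
    """Share of non-null values that match the expected format."""
    values = [r[field] for r in rows if r.get(field) is not None]
    return sum(1 for v in values if pattern.match(v)) / len(values)

report = {
    "completeness(email)": completeness(records, "email"),
    "uniqueness(id)": uniqueness(records, "id"),
    "validity(email)": validity(records, "email", EMAIL_RE),
}
```

Each function returns a ratio between 0 and 1, so the results can be compared against a threshold or tracked over time.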

Automating Data Quality Validation with White-Box and Black-Box Testing

Ensuring data integrity at scale requires a systematic approach to validation. Two widely used methodologies are:

1. White-Box Testing

Examines internal data transformations, lineage, and business rules.

Ensures that every step in the ETL (Extract, Transform, Load) process adheres to defined standards.

Provides deeper insights into data processing logic to catch issues at the source.

2. Black-Box Testing

Focuses on output validation by comparing actual results against expected benchmarks.

Useful for detecting anomalies, missing records, and schema mismatches.

Works well for regulatory compliance and end-to-end data pipeline testing.

A hybrid approach combining both techniques ensures robust validation and proactive anomaly detection.
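To make the difference concrete, here is a small sketch in plain Python. The pipeline step, the column names, and the expected benchmark values are hypothetical; the point is only that the white-box check re-applies the documented rule row by row, while the black-box check looks at the output alone.

```python
# Hypothetical pipeline step: convert cents to dollars and drop negative amounts.
def transform(rows):
    return [
        {"order_id": r["order_id"], "amount_usd": r["amount_cents"] / 100}
        for r in rows
        if r["amount_cents"] >= 0
    ]

source = [
    {"order_id": 1, "amount_cents": 1250},
    {"order_id": 2, "amount_cents": -300},
    {"order_id": 3, "amount_cents": 999},
]
target = transform(source)

def white_box_check(src, tgt):
    """Re-apply the documented business rule to each source row and compare."""
    expected = {
        r["order_id"]: r["amount_cents"] / 100
        for r in src
        if r["amount_cents"] >= 0
    }
    actual = {r["order_id"]: r["amount_usd"] for r in tgt}
    return expected == actual

def black_box_check(tgt, expected_rows, expected_total):
    """Compare only the output against known benchmarks."""
    total = round(sum(r["amount_usd"] for r in tgt), 2)
    return len(tgt) == expected_rows and total == expected_total

white_ok = white_box_check(source, target)
black_ok = black_box_check(target, expected_rows=2, expected_total=22.49)
```

The white-box check needs knowledge of the transformation logic; the black-box check needs only trusted expected values, which is why the two complement each other.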

How Unity Catalog and Datagaps Data Quality Monitor Work Together

1. Unified Governance and Automated Validation

Databricks Unity Catalog centralizes metadata management, access control, and lineage tracking.

Datagaps Data Quality Monitor extends these capabilities with automated quality checks, reducing manual efforts.

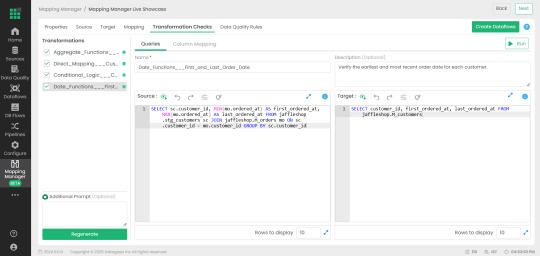

2. Mapping Manager Utility: Simplifying Test Case Automation

One of the standout features of Datagaps Data Quality Monitor is the Mapping Manager Utility, which:

Extracts mapping configurations from Databricks Unity Catalog.

Automatically generates white-box and black-box test cases.

Reduces the need for manual intervention, increasing efficiency and scalability.
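The post does not show how the Mapping Manager Utility represents mappings or test cases, so the following is only an illustrative sketch of the general idea: derive test cases mechanically from source-to-target mappings. The mapping format, table names, and check descriptions are all hypothetical.

```python
# Hypothetical mapping entries; a real export from a catalog will look different.
mappings = [
    {"source": "raw.orders.amount_cents",
     "target": "curated.orders.amount_usd",
     "rule": "amount_cents / 100"},
    {"source": "raw.orders.order_id",
     "target": "curated.orders.order_id",
     "rule": None},  # straight copy, no transformation
]

def generate_test_cases(entries):
    """Derive one black-box case per mapping and a white-box case per rule."""
    cases = []
    for m in entries:
        src_table, src_col = m["source"].rsplit(".", 1)
        tgt_table, tgt_col = m["target"].rsplit(".", 1)
        # Black-box: compare outputs without looking at the transformation.
        cases.append({
            "type": "black-box",
            "check": f"non-null count of {tgt_table}.{tgt_col} "
                     f"equals that of {src_table}.{src_col}",
        })
        # White-box: only possible when the transformation rule is documented.
        if m["rule"]:
            cases.append({
                "type": "white-box",
                "check": f"{tgt_table}.{tgt_col} equals {m['rule']} for every row",
            })
    return cases

test_cases = generate_test_cases(mappings)
```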

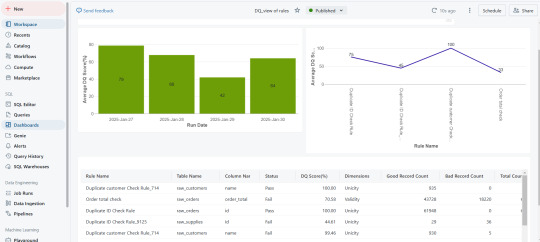

3. Real-Time Data Quality Scores for Proactive Governance

After test execution, a data quality score is generated.

These scores are seamlessly integrated into Databricks Unity Catalog, allowing real-time monitoring.

Organizations can visualize data quality insights through dashboards and take corrective actions before issues impact business operations.
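The post does not say how the score is calculated. One plausible approach, sketched here under that assumption, is a weighted share of passed tests; the test names, weights, and alert threshold below are hypothetical.

```python
# Hypothetical test results: (test name, passed?, weight).
results = [
    ("row_count_match", True, 3),
    ("no_null_customer_id", True, 2),
    ("email_format_valid", False, 1),
    ("no_duplicate_orders", True, 2),
]

def quality_score(test_results):
    """Weighted share of passed tests, as a percentage from 0 to 100."""
    total = sum(weight for _, _, weight in test_results)
    passed = sum(weight for _, ok, weight in test_results if ok)
    return round(100 * passed / total, 1)

score = quality_score(results)
needs_attention = score < 90  # hypothetical alert threshold
```

Weighting lets critical checks, such as row-count reconciliation, count for more than cosmetic ones.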

Key Use Cases

ETL and Data Pipeline Validation – Ensuring data transformations adhere to defined business rules.

Regulatory Compliance and Audit Readiness – Mitigating risks associated with inaccurate reporting.

Enterprise Data Lakehouse Governance – Enhancing consistency across distributed datasets.

AI/ML Data Preprocessing – Ensuring clean, high-quality data for better model performance.

Automated Data Quality Checks – Reducing manual data validation efforts for faster, more reliable insights.

Scalability for Large Datasets – Efficiently managing high-volume, high-velocity enterprise data.

Faster QA Cycles – Automating test case execution for rapid turnaround.

Lower Operational Resources – Reducing human intervention, saving time and resources.

The Business Impact: Why This Integration Matters

Enhanced Automation – Replaces most manual quality checks with automated ones and increases efficiency.

Real-Time Monitoring – Provides instant visibility into data quality metrics.

Stronger Compliance – Supports audits against industry standards and regulations with documented, repeatable checks.

Scalability – Designed for large-scale, complex data ecosystems.

Cost Efficiency – Reduces operational overhead and improves ROI on data management initiatives.

Ensuring data quality at scale requires a combination of automated governance, real-time monitoring, and seamless integration. The connection between Databricks Unity Catalog and Datagaps Data Quality Monitor provides a comprehensive solution to achieve this goal.

With automated test case generation, continuous data validation, and integrated governance, organizations can ensure their data is always accurate, complete, and compliant—laying the foundation for data-driven decision-making and regulatory confidence.

Text

If one more person at work asks me to do something today, I will cry.

I’ll do it.

But I will cry.

#I’m in the middle of 2 POCs#2 RFPs and a workshop#I have 2 custom and 4 standard demos to give over the next 3 days#I have a cert test on Thursday#and I’m prepping for the databricks summit next week#I’m actually looking forward to getting on a plane because it will be 3 hours where no one can ask me to do anything#mylife#tech world things#to be clear I still love my job#this week is just a lot#the tiniest of violins

Text

Maximizing Collaboration and Productivity: Azure DevOps and Databricks Pipelines

Data is the backbone of modern businesses, and processing it efficiently is critical for success. However, as data projects grow in complexity, managing code changes and deployments becomes increasingly difficult. That’s where Continuous Integration and Continuous Delivery (CI/CD) come in. By automating the code deployment process, you can streamline your data pipelines, reduce errors, and…

#Azure#Azure DevOps#Build Pipeline#CD/CD#Databricks#GitHub Repo#microsoft azure#Python#Python Testing#Release Pipeline

Text

Understanding DP-900: Microsoft Azure Data Fundamentals

The DP-900, or Microsoft Azure Data Fundamentals, is an entry-level certification designed for individuals looking to build foundational knowledge of core data concepts and Microsoft Azure data services. This certification validates a candidate’s understanding of relational and non-relational data, data workloads, and the basics of data processing in the cloud. It serves as a stepping stone for those pursuing more advanced Azure data certifications, such as the DP-203 (Azure Data Engineer Associate) or the DP-300 (Azure Database Administrator Associate).

What Is DP-900?

The DP-900 exam, officially titled "Microsoft Azure Data Fundamentals," tests candidates on fundamental data concepts and how they are implemented using Microsoft Azure services. It sits at the fundamentals level of Microsoft’s certification program and targets beginners who want to explore data-related roles in the cloud. The exam does not require prior experience with Azure, making it accessible to students, career changers, and IT professionals new to cloud computing.

Exam Objectives and Key Topics

The DP-900 exam covers four primary domains:

1. Core Data Concepts (20-25%)

- Understanding relational and non-relational data.
- Differentiating between transactional and analytical workloads.
- Exploring data processing options (batch vs. real-time).

2. Working with Relational Data on Azure (25-30%)

- Overview of Azure SQL Database, Azure Database for PostgreSQL, and Azure Database for MySQL.
- Basic provisioning and deployment of relational databases.
- Querying data using SQL.

3. Working with Non-Relational Data on Azure (25-30%)

- Introduction to Azure Cosmos DB and Azure Blob Storage.
- Understanding NoSQL databases and their use cases.
- Exploring file, table, and graph-based data storage.

4. Data Analytics Workloads on Azure (20-25%)

- Basics of Azure Synapse Analytics and Azure Databricks.
- Introduction to data visualization with Power BI.
- Understanding data ingestion and processing pipelines.

Who Should Take the DP-900 Exam?

The DP-900 certification is ideal for:

- Beginners with no prior Azure experience who want to start a career in cloud data services.
- IT Professionals looking to validate their foundational knowledge of Azure data solutions.
- Students and Career Changers exploring opportunities in data engineering, database administration, or analytics.
- Business Stakeholders who need a high-level understanding of Azure data services to make informed decisions.

Preparation Tips for the DP-900 Exam

1. Leverage Microsoft’s Free Learning Resources

Microsoft offers free online training modules through Microsoft Learn, covering all exam objectives. These modules include hands-on labs and interactive exercises.

2. Practice with Hands-on Labs

Azure provides a free tier with limited services, allowing candidates to experiment with databases, storage, and analytics tools. Practical experience reinforces theoretical knowledge.

3. Take Practice Tests

Practice exams help identify weak areas and familiarize candidates with the question format. Websites like MeasureUp and Whizlabs offer DP-900 practice tests.

4. Join Study Groups and Forums

Online communities, such as Reddit’s r/AzureCertification or Microsoft’s Tech Community, provide valuable insights and study tips from past exam takers.

5. Review Official Documentation

Microsoft’s documentation on Azure data services is comprehensive and frequently updated. Reading through key concepts ensures a deeper understanding.

Benefits of Earning the DP-900 Certification

1. Career Advancement

The certification demonstrates foundational knowledge of Azure data services, making candidates more attractive to employers.

2. Pathway to Advanced Certifications

DP-900 is not a formal prerequisite for higher-level Azure data certifications, but it builds the foundation for them and helps professionals move on to specialize in data engineering or database administration.

3. Industry Recognition

Microsoft certifications are globally recognized, adding credibility to a resume and increasing job prospects.

4. Skill Validation

Passing the exam confirms a solid grasp of cloud data concepts, which is valuable in roles involving data storage, processing, or analytics.

Exam Logistics

- Exam Format: Multiple-choice questions (single and multiple responses).
- Duration: 60 minutes.
- Passing Score: 700 out of 1000.
- Languages Available: English, Japanese, Korean, Simplified Chinese, and more.
- Cost: $99 USD (prices may vary by region).

Conclusion

The DP-900 Microsoft Azure Data Fundamentals certification is an excellent starting point for anyone interested in cloud-based data solutions. By covering core data concepts, relational and non-relational databases, and analytics workloads, it provides a well-rounded introduction to Azure’s data ecosystem. With proper preparation, candidates can pass the exam and use it as a foundation for more advanced certifications. Whether you’re a student, IT professional, or business stakeholder, earning the DP-900 certification can open doors to new career opportunities in the growing field of cloud data management.

Text

How iceDQ Ensures Reliable Data Migration to Databricks

iceDQ helped a pharmaceutical company automate the entire migration testing process from Azure Synapse to Databricks. By validating schema, row counts, and data consistency, they reduced manual work and improved accuracy. The integration with CI/CD pipelines made delivery faster and audit-ready. Avoid migration headaches—discover iceDQ’s Databricks migration testing.
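The three checks named above (schema, row counts, data consistency) can be illustrated with a small sketch. This is not iceDQ's implementation: it uses two in-memory SQLite tables as stand-ins for the source and target systems, and the table and column names are hypothetical.

```python
import sqlite3

# Two in-memory tables stand in for the source and the migrated target.
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE source_orders (id INTEGER, customer TEXT, amount REAL);
    CREATE TABLE target_orders (id INTEGER, customer TEXT, amount REAL);
    INSERT INTO source_orders VALUES (1, 'A', 5.0), (2, 'B', 7.5);
    INSERT INTO target_orders VALUES (1, 'A', 5.0), (2, 'B', 7.5);
""")

def schema(table):
    # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk).
    return [(row[1], row[2]) for row in conn.execute(f"PRAGMA table_info({table})")]

def row_count(table):
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]

def rows_only_in(left, right):
    # Rows present in `left` that are missing or different in `right`.
    return conn.execute(f"SELECT * FROM {left} EXCEPT SELECT * FROM {right}").fetchall()

checks = {
    "schema": schema("source_orders") == schema("target_orders"),
    "row_count": row_count("source_orders") == row_count("target_orders"),
    "consistency": not rows_only_in("source_orders", "target_orders")
                   and not rows_only_in("target_orders", "source_orders"),
}
```

Running the set difference in both directions catches rows that were dropped during migration as well as rows that were added or altered.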

#data migration testing#data warehouse testing#data migration testing tools#data reliability engineering#production data monitoring#icedq#etl testing

Text

Llama 4: Smarter, Faster, More Efficient Than Ever

The first Llama 4 models, available today in Azure AI Foundry and Azure Databricks, enable personalised multimodal experiences. Meta designed these models to merge text and vision tokens into a single model backbone. This approach lets developers use Llama 4 models in applications that work with large amounts of unlabelled text, image, and video data.

Superb intelligence, unmatched speed and efficiency

The most accessible and scalable Llama generation is here: step changes in performance and efficiency, extended context windows, mixture-of-experts models, and native multimodality, all in easy-to-use sizes suited to your needs.

Latest models

The models are geared towards easy deployment, cost-effectiveness, and performance that scales to billions of users.

Llama 4 Scout

Meta claims that Llama 4 Scout, which fits on a single H100 GPU, is more powerful than the Llama 3 models and among the best multimodal models. The supported context length increases from 128K tokens in Llama 3 to an industry-leading 10 million tokens. This opens up new possibilities such as multi-document summarisation, parsing extensive user activity for personalised tasks, and reasoning over vast codebases.

Scout targets reasoning, personalisation, and summarisation. Its compact size and long context make it well suited to condensing or analysing very large inputs. It can summarise long inputs, tailor replies using user-specific data (without losing earlier information), and reason across large knowledge stores.

Llama 4 Maverick

An industry-leading, natively multimodal model for image and text understanding, offering low cost, quick responses, and strong intelligence. Llama 4 Maverick is a general-purpose LLM with 17 billion active parameters, 128 experts, and 400 billion total parameters, and it is cheaper than Llama 3.3 70B. It supports image and text understanding in 12 languages, enabling the construction of multilingual AI systems. It also excels at visual comprehension and creative writing, which makes it well suited to general assistant and chat applications. Developers get fast, cutting-edge intelligence tuned for response quality and tone.
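The parameter figures above imply that only a small share of Maverick's weights is used for any one token, which is the usual reason a mixture-of-experts model can be cheaper to run than a smaller dense model. A quick calculation using only the numbers quoted in the text:

```python
total_params_b = 400   # total parameters, in billions (from the text)
active_params_b = 17   # active parameters, in billions (from the text)
dense_70b = 70         # Llama 3.3 70B, a dense model, for comparison

# Share of the model's weights that take part in processing one token.
active_share = active_params_b / total_params_b

# Ratio of active weights compared with the dense 70B model.
relative_to_dense = active_params_b / dense_70b

print(f"{active_share:.2%} of weights active per token")
print(f"{relative_to_dense:.0%} of the active weights of a dense 70B model")
```

This is only arithmetic on the published sizes; actual serving cost also depends on memory footprint, hardware, and batching, none of which the text covers.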

Maverick targets optimised conversations that require high-quality responses. Meta optimised Llama 4 Maverick for chat. Think of it as the core conversational model of Llama 4: a multilingual, multimodal, ChatGPT-like assistant.

Interactive applications benefit from it:

Customer service bots that must understand uploaded images.

AI content creation tools that work in several languages.

Enterprise assistants for employees that handle rich media input and answer questions.

Maverick can help companies build AI assistants that interact naturally and politely with a global user base and use visual context when needed.

Llama 4 Behemoth Preview

A preview of the Llama 4 teacher model used to distil Scout and Maverick.

Features

Scout, Maverick, and Llama 4 Behemoth have class-leading characteristics.

Natively multimodal: All Llama 4 models use early fusion to pre-train the model on large amounts of unlabelled text and vision tokens, a step change in intelligence compared with separate, frozen multimodal weights.

Longest context length in the industry: Llama 4 Scout supports up to 10M tokens, expanding memory, personalisation, and multimodal applications.

Best-in-class image grounding: Llama 4 can align user prompts with relevant visual concepts and anchor model responses to regions in the image.

Multilingual writing: Llama 4 was pre-trained and fine-tuned for unmatched text understanding across 12 languages to support global development and deployment.

Benchmark

Meta tested model performance on standard benchmarks across several languages for coding, reasoning, knowledge, vision understanding, multilinguality, and extended context.

#technology#technews#govindhtech#news#technologynews#Llama 4#AI development#AI#artificial intelligence#Llama 4 Scout#Llama 4 maverick

Text

Insight VC explains the biggest mistake founders make when raising a large round

The conventional view today is that VCs do not write big checks unless the company is in AI. But that is not exactly what is happening. “It is broader,” he said. Insight Partners, with $90 billion under management, invests at all stages. It is also known for writing large checks. For example, Insight co-led Databricks’ $10 billion deal…

Text

#dataquality#Databricks#cloud data testing#DataOps#Datagaps#Catalog#Unity Catalog#Datagaps BI Validator

Text

Accelerating Digital Transformation with Acuvate’s MVP Solutions

A Minimum Viable Product (MVP) is a basic version of a product designed to test its concept with early adopters, gather feedback, and validate market demand before full-scale development. Implementing an MVP is vital for startups, as statistics indicate that 90% of startups fail because they do not understand how to use an MVP. An MVP helps mitigate risks, achieve a faster time to market, and save costs by focusing on essential features and testing the product idea before fully committing to its development.

Verifying Product Concepts: Validates product ideas and confirms market demand before full development.

Gathering User Feedback: Collects insights from real users to improve future iterations.

Establishing Product-Market Fit: Determines if the product resonates with the target market.

Faster Time-to-Market: Enables quicker product launch with fewer features.

Risk Mitigation: Limits risk by testing the product with real users before large investments.

Prioritizing Features: Provides insights that help prioritize valuable features for future development.

Here are Acuvate’s tailored MVP models for diverse business needs

Data HealthCheck MVP (Minimum Viable Product)

Many organizations face challenges with fragmented data, outdated governance, and inefficient pipelines, leading to delays and missed opportunities. Acuvate’s expert assessment offers:

Detailed analysis of your current data architecture and interfaces.

A clear, actionable roadmap for a future-state ecosystem.

A comprehensive end-to-end data strategy for collection, manipulation, storage, and visualization.

Advanced data governance with contextualized insights.

Identification of AI/ML/MV/Gen-AI integration opportunities and cloud cost optimization.

Tailored MVP proposals for immediate impact.

Quick wins and a solid foundation for long-term success with Acuvate’s Data HealthCheck.

Microsoft Fabric Deployment MVP

Is your organization facing challenges with data silos and slow decision-making? Don’t let outdated infrastructure hinder your digital progress.

Acuvate’s Microsoft Fabric Deployment MVP offers rapid transformation with:

Expert implementation of Microsoft Fabric Data and AI Platform, tailored to your scale and security needs using our AcuWeave data migration tool.

Full Microsoft Fabric setup, including Azure sizing, datacenter configuration, and security.

Smooth data migration from existing databases (MS Synapse, SQL Server, Oracle) to Fabric OneLake via AcuWeave.

Strong data governance (based on MS PurView) with role-based access and robust security.

Two custom Power BI dashboards to turn your data into actionable insights.

Tableau to Power BI Migration MVP

Are rising Tableau costs and limited integration holding back your business intelligence? Don’t let legacy tools limit your data potential.

With Acuvate’s Tableau to Power BI Migration MVP, you’ll get:

Smooth migration of up to three Tableau dashboards to Power BI, preserving key business insights using our AcuWeave tool.

Full Microsoft Fabric setup with optimized Azure configuration and datacenter placement for maximum performance.

Optional data migration to Fabric OneLake for seamless, unified data management.

Digital Twin Implementation MVP

Acuvate’s Digital Twin service, integrating AcuPrism and KDI Kognitwin, creates a unified, real-time digital representation of your facility for smarter decisions and operational excellence. Here’s what we offer:

Implement KDI Kognitwin SaaS Integrated Digital Twin MVP.

Overcome disconnected systems, outdated workflows, and siloed data with tailored integration.

Set up AcuPrism (Databricks or MS Fabric) in your preferred cloud environment.

Seamlessly integrate SAP ERP and Aveva PI data sources.

Establish strong data governance frameworks.

Incorporate 3D laser-scanned models of your facility into KDI Kognitwin (assuming you provide the scan).

Enable real-time data exchange and visibility by linking AcuPrism and KDI Kognitwin.

Visualize SAP ERP and Aveva PI data in an interactive digital twin environment.

MVP for Oil & Gas Production Optimization

Acuvate’s MVP offering integrates AcuPrism and AI-driven dashboards to optimize production in the Oil & Gas industry by improving visibility and streamlining operations. Key features include:

Deploy AcuPrism Enterprise Data Platform on Databricks or MS Fabric in your preferred cloud (Azure, AWS, GCP).

Integrate two key data sources for real-time or preloaded insights.

Apply Acuvate’s proven data governance framework.

Create two AI-powered MS Power BI dashboards focused on production optimization.

Manufacturing OEE Optimization MVP

Acuvate’s OEE Optimization MVP leverages AcuPrism and AI-powered dashboards to boost manufacturing efficiency, reduce downtime, and optimize asset performance. Key features include:

Deploy AcuPrism on Databricks or MS Fabric in your chosen cloud (Azure, AWS, GCP).

Integrate and analyze two key data sources (real-time or preloaded).

Implement data governance to ensure accuracy.

Gain actionable insights through two AI-driven MS Power BI dashboards for OEE monitoring.

Achieve Transformative Results with Acuvate’s MVP Solutions for Business Optimization

Acuvate’s MVP solutions provide businesses with rapid, scalable prototypes that test key concepts, reduce risks, and deliver quick results. By leveraging AI, data governance, and cloud platforms, we help optimize operations and streamline digital transformation. Our approach ensures you gain valuable insights and set the foundation for long-term success.

Conclusion

Scaling your MVP into a fully deployed solution is easy with Acuvate’s expertise and customer-focused approach. We help you optimize data governance, integrate AI, and enhance operational efficiencies, turning your digital transformation vision into reality.

Accelerate Growth with Acuvate’s Ready-to-Deploy MVPs

Get in Touch with Acuvate Today!

Are you ready to transform your MVP into a powerful, scalable solution? Contact Acuvate to discover how we can support your journey from MVP to full-scale implementation. Let’s work together to drive innovation, optimize performance, and accelerate your success.

#MVP#MinimumViableProduct#BusinessOptimization#DigitalTransformation#AI#CloudSolutions#DataGovernance#MicrosoftFabric#DataStrategy#PowerBI#DigitalTwin#AIIntegration#DataMigration#StartupGrowth#TechSolutions#ManufacturingOptimization#OilAndGasTech#BusinessIntelligence#AgileDevelopment#TechInnovation

Text

#Visualpath offers the Best Online DBT Courses, designed to help you excel in data transformation and analytics. Our expert-led #DBT Online Training covers tools like Matillion, Snowflake, ETL, Informatica, Data Warehousing, SQL, Talend, Power BI, Cloudera, Databricks, Oracle, SAP, and Amazon Redshift. With flexible schedules, recorded sessions, and hands-on projects, we provide a seamless learning experience for global learners. Master advanced data engineering skills, prepare for DBT certification, and elevate your career. Call +91-9989971070 for a free demo and enroll today!

WhatsApp: https://www.whatsapp.com/catalog/919989971070/

Visit Blog: https://databuildtool1.blogspot.com/

Visit: https://www.visualpath.in/online-data-build-tool-training.html

#visualpathedu #testing #automation #selenium #git #github #JavaScript #Azure #CICD #AzureDevOps #playwright #handonlearning #education #SoftwareDevelopment #onlinelearning #newtechnology #software #education #ITskills #training #trendingcourses #careers #students #typescript

#DBT Training#DBT Online Training#DBT Classes Online#DBT Training Courses#Best Online DBT Courses#DBT Certification Training Online#Data Build Tool Training in Hyderabad#Best DBT Course in Hyderabad#Data Build Tool Training in Ameerpet

Text

Data Analyst - Python, Spark, Databricks

technologies such as Scala and Pyspark (Python) Systems analysis – Design, Coding, Unit Testing and other SDLC activities… Required Qualifications: Undergraduate degree or equivalent experience 3+ years on working experience in Python, Pyspark, Scala 3+ years… Apply Now

Text

Azure Databricks: Unleashing the Power of Big Data and AI

Introduction to Azure Databricks

In a world where data is considered the new oil, managing and analyzing vast amounts of information is critical. Enter Azure Databricks, a unified analytics platform designed to simplify big data and artificial intelligence (AI) workflows. Developed in partnership between Microsoft and Databricks, this tool is transforming how businesses leverage data to make smarter decisions.

Azure Databricks combines the power of Apache Spark with Azure’s robust ecosystem, making it an essential resource for businesses aiming to harness the potential of data and AI.

Core Features of Azure Databricks

Unified Analytics Platform

Azure Databricks brings together data engineering, data science, and business analytics in one environment. It supports end-to-end workflows, from data ingestion to model deployment.

Support for Multiple Languages

Whether you’re proficient in Python, SQL, Scala, R, or Java, Azure Databricks has you covered. Its flexibility makes it a preferred choice for diverse teams.

Seamless Integration with Azure Services

Azure Databricks integrates effortlessly with Azure’s suite of services, including Azure Data Lake, Azure Synapse Analytics, and Power BI, streamlining data pipelines and analysis.

How Azure Databricks Works

Architecture Overview

At its core, Azure Databricks leverages Apache Spark’s distributed computing capabilities. This ensures high-speed data processing and scalability.

Collaboration in a Shared Workspace

Teams can collaborate in real-time using shared notebooks, fostering a culture of innovation and efficiency.

Automated Cluster Management

Azure Databricks simplifies cluster creation and management, allowing users to focus on analytics rather than infrastructure.

Advantages of Using Azure Databricks

Scalability and Flexibility

Azure Databricks automatically scales resources based on workload requirements, ensuring optimal performance.
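The scaling policy itself is internal to the platform, but the basic idea of sizing a cluster within configured bounds can be sketched as follows. This is an illustration only, not the Databricks algorithm; the function and its parameters are hypothetical.

```python
import math

def target_workers(pending_tasks, tasks_per_worker, min_workers, max_workers):
    """Pick a worker count for the current load, clamped to the allowed range."""
    needed = math.ceil(pending_tasks / tasks_per_worker)
    return max(min_workers, min(max_workers, needed))

# An idle cluster stays at the minimum, a heavy one is capped at the maximum.
idle = target_workers(0, tasks_per_worker=4, min_workers=2, max_workers=8)
busy = target_workers(20, tasks_per_worker=4, min_workers=2, max_workers=8)
overloaded = target_workers(100, tasks_per_worker=4, min_workers=2, max_workers=8)
```

The minimum and maximum bounds are what keep autoscaling predictable: the lower bound protects responsiveness and the upper bound caps cost.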

Cost Efficiency

Pay-as-you-go pricing and resource optimization help businesses save on operational costs.

Enterprise-Grade Security

With features like role-based access control (RBAC) and integration with Azure Active Directory (now Microsoft Entra ID), Azure Databricks helps organizations meet data security and compliance requirements.
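In role-based access control, permissions are attached to roles and users acquire them only through role membership. A minimal sketch of that model in plain Python follows; the roles, users, and actions are hypothetical and do not reflect how Azure Databricks stores its permissions.

```python
# Hypothetical roles and the actions each one grants.
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "engineer": {"read", "write"},
    "admin": {"read", "write", "manage"},
}

# Hypothetical users and their role memberships.
USER_ROLES = {
    "dana": {"analyst"},
    "lee": {"engineer", "analyst"},
}

def is_allowed(user, action):
    """A user may perform an action if any of their roles grants it."""
    roles = USER_ROLES.get(user, set())
    return any(action in ROLE_PERMISSIONS.get(role, set()) for role in roles)
```

Unknown users have no roles and are therefore denied by default, which is the safer failure mode for an access check.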

Comparing Azure Databricks with Other Platforms

Azure Databricks vs. Apache Spark

While Apache Spark is the foundation, Azure Databricks enhances it with a user-friendly interface, better integration, and managed services.

Azure Databricks vs. AWS Glue

Azure Databricks is a general-purpose analytics and machine learning platform, whereas AWS Glue is primarily an ETL service, so Azure Databricks is usually the better fit for machine learning workloads.

Key Use Cases for Azure Databricks

Data Engineering and ETL Processes

Azure Databricks simplifies Extract, Transform, Load (ETL) processes, enabling businesses to cleanse and prepare data efficiently.
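The three ETL stages can be shown end to end in a few lines. This sketch uses only the Python standard library, with an inline CSV string as the source and an in-memory SQLite table as the destination; on Databricks the same stages would typically be written against Spark DataFrames. The data and table name are hypothetical.

```python
import csv
import io
import sqlite3

# Hypothetical raw input: padded values and one row with a missing amount.
raw_csv = "id,amount\n1, 10.5 \n2,\n3,4\n"

def extract(text):
    """Read the raw CSV into a list of dicts."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Trim whitespace, drop rows without an amount, and cast types."""
    cleaned = []
    for r in rows:
        amount = r["amount"].strip()
        if not amount:
            continue
        cleaned.append((int(r["id"]), float(amount)))
    return cleaned

def load(rows, conn):
    """Write the cleaned rows to the destination table."""
    conn.execute("CREATE TABLE sales (id INTEGER, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)

conn = sqlite3.connect(":memory:")
load(transform(extract(raw_csv)), conn)
loaded = conn.execute("SELECT id, amount FROM sales ORDER BY id").fetchall()
```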

Machine Learning Model Development

Data scientists can use Azure Databricks to train, test, and deploy machine learning models with ease.

Real-Time Analytics

From monitoring social media trends to analyzing IoT data, Azure Databricks supports real-time analytics for actionable insights.

Industries Benefiting from Azure Databricks

Healthcare

By enabling predictive analytics, Azure Databricks helps healthcare providers improve patient outcomes and optimize operations.

Retail and E-Commerce

Retailers leverage Azure Databricks for demand forecasting, customer segmentation, and personalized marketing.

Financial Services

Banks and financial institutions use Azure Databricks for fraud detection, risk assessment, and portfolio optimization.

Getting Started with Azure Databricks

Setting Up an Azure Databricks Workspace

Begin by creating an Azure Databricks workspace through the Azure portal. This serves as the foundation for your analytics projects.

Creating Clusters

Clusters are the computational backbone. Azure Databricks makes it easy to create and configure clusters tailored to your workload.

Writing and Executing Notebooks

Use notebooks to write, debug, and execute your code. Azure Databricks’ notebook interface is intuitive and collaborative.

Best Practices for Using Azure Databricks

Optimizing Cluster Performance

Select the appropriate cluster size and configurations to balance cost and performance.

Managing Data Storage Effectively

Integrate with Azure Data Lake for efficient and scalable data storage solutions.

Ensuring Data Security and Compliance

Implement RBAC, encrypt data at rest, and adhere to industry-specific compliance standards.

Challenges and Solutions in Using Azure Databricks

Managing Costs

Monitor resource usage and terminate idle clusters to avoid unnecessary expenses.
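As a minimal sketch of the idle-cluster advice above, the snippet below builds a cluster definition that autoscales and shuts itself down after a period of inactivity. The field names follow the Databricks Clusters API; the helper name build_cluster_spec, the cluster name, the runtime version, and the VM size are placeholders chosen for illustration, so substitute values that exist in your own workspace.

```python
import json


def build_cluster_spec(name, min_workers=1, max_workers=4, idle_minutes=30):
    """Return a cluster definition that scales with load and stops when idle."""
    if min_workers > max_workers:
        raise ValueError("min_workers must not exceed max_workers")
    return {
        "cluster_name": name,
        # Placeholder runtime and VM size (assumptions, not recommendations).
        "spark_version": "13.3.x-scala2.12",
        "node_type_id": "Standard_DS3_v2",
        # Let the cluster grow and shrink between these worker counts.
        "autoscale": {"min_workers": min_workers, "max_workers": max_workers},
        # Terminate the cluster after this many minutes without activity.
        "autotermination_minutes": idle_minutes,
    }


spec = build_cluster_spec("etl-nightly")
print(json.dumps(spec, indent=2))
```

The resulting JSON could be submitted through the Databricks CLI or REST API, or the same settings can be entered in the cluster creation form.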

Handling Large Datasets Efficiently

Leverage partitioning and caching to process large datasets effectively.
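To make the idea concrete, here is a toy, in-memory illustration in plain Python rather than Spark code. It assumes hypothetical sample records keyed by date: grouping by date stands in for partitioning, so a query touches only the matching group, and functools.lru_cache stands in for caching, so a repeated query is not recomputed.

```python
from collections import defaultdict
from functools import lru_cache

# Hypothetical sample records: (event_date, amount).
RECORDS = [
    ("2024-01-01", 10.0),
    ("2024-01-01", 5.0),
    ("2024-01-02", 7.5),
    ("2024-01-03", 2.5),
]


def partition_by_date(records):
    """Group records by date so a query can skip unrelated partitions."""
    partitions = defaultdict(list)
    for event_date, amount in records:
        partitions[event_date].append(amount)
    return dict(partitions)


PARTITIONS = partition_by_date(RECORDS)


@lru_cache(maxsize=None)
def total_for_date(event_date):
    """Sum a single partition; the result is cached for repeated lookups."""
    return sum(PARTITIONS.get(event_date, []))


print(total_for_date("2024-01-01"))  # reads only the 2024-01-01 partition
print(total_for_date("2024-01-01"))  # served from the cache
```

In Spark the same two ideas correspond to writing data partitioned by a column that queries filter on, and caching a DataFrame that is reused several times.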

Debugging and Error Resolution

Azure Databricks provides detailed logs and error reports, simplifying the debugging process.

Future Trends in Azure Databricks

Enhanced AI Capabilities

Expect more advanced AI tools and features to be integrated, empowering businesses to solve complex problems.

Increased Automation

Automation will play a bigger role in streamlining workflows, from data ingestion to model deployment.

Real-Life Success Stories

Case Study: How a Retail Giant Scaled with Azure Databricks

A leading retailer improved inventory management and personalized customer experiences by utilizing Azure Databricks for real-time analytics.

Case Study: Healthcare Advancements with Predictive Analytics

A healthcare provider reduced readmission rates and enhanced patient care through predictive modeling in Azure Databricks.

Learning Resources and Support

Official Microsoft Documentation

Access in-depth guides and tutorials in Microsoft's official Azure Databricks documentation.

Online Courses and Certifications

Platforms like Coursera, Udemy, and LinkedIn Learning offer courses to enhance your skills.

Community Forums and Events

Join the Databricks and Azure communities to share knowledge and learn from experts.

Conclusion

Azure Databricks is revolutionizing the way organizations handle big data and AI. Its robust features, seamless integrations, and cost efficiency make it a top choice for businesses of all sizes. Whether you’re looking to improve decision-making, streamline processes, or innovate with AI, Azure Databricks has the tools to help you succeed.

FAQs

1. What is the difference between Azure Databricks and Azure Synapse Analytics?

Azure Databricks focuses on big data analytics and AI, while Azure Synapse Analytics is geared toward data warehousing and business intelligence.

2. Can Azure Databricks handle real-time data processing?

Yes, Azure Databricks supports real-time data processing through its integration with streaming tools like Azure Event Hubs.

3. What skills are needed to work with Azure Databricks?

Knowledge of data engineering, programming languages like Python or Scala, and familiarity with Azure services is beneficial.

4. How secure is Azure Databricks for sensitive data?

Azure Databricks offers enterprise-grade security, including encryption, RBAC, and compliance with standards like GDPR and HIPAA.

5. What is the pricing model for Azure Databricks?

Azure Databricks uses a pay-as-you-go model. Compute is billed per Databricks Unit (DBU) consumed, on top of the cost of the underlying Azure virtual machines and storage.


Text

Databricks Certified Data Engineer Professional Practice Exam For Best Preparation

Are you aspiring to become a certified data engineer with Databricks? Passing the Databricks Certified Data Engineer Professional exam is a significant step in proving your advanced data engineering skills. To simplify your preparation, the latest Databricks Certified Data Engineer Professional Practice Exam from Cert007 is an invaluable resource. Designed to mimic the real exam, it provides comprehensive practice questions that will help you master the topics and build confidence. With Cert007’s reliable preparation material, you can approach the exam with ease and increase your chances of success.

Overview of the Databricks Certified Data Engineer Professional Exam

The Databricks Certified Data Engineer Professional exam evaluates your ability to leverage the Databricks platform for advanced data engineering tasks. You will be tested on a range of skills, including:

Utilizing Apache Spark, Delta Lake, and MLflow to manage and process large datasets.

Building and optimizing ETL pipelines.

Applying data modeling principles to structure data in a Lakehouse architecture.

Using developer tools such as the Databricks CLI and REST API.

Ensuring data pipeline security, reliability, and performance through monitoring, testing, and governance.

Successful candidates will demonstrate a solid understanding of Databricks tools and the capability to design secure, efficient, and robust pipelines for data engineering.

Exam Details

Number of Questions: 60 multiple-choice questions

Duration: 120 minutes

Cost: $200 per attempt

Primary Coding Language: Python (Delta Lake functionality references are in SQL)

Certification Validity: 2 years from the date of passing

Exam Objectives and Weightage

The exam content is divided into six key objectives:

Databricks Tooling (20%): Proficiency in Databricks developer tools, including the CLI, REST API, and notebooks.

Data Processing (30%): Deep understanding of data transformation, optimization, and real-time streaming tasks using Databricks.

Data Modeling (20%): Knowledge of structuring data effectively for analysis and reporting in a Lakehouse architecture.

Security and Governance (10%): Implementation of secure practices for managing data access, encryption, and auditing.

Monitoring and Logging (10%): Ability to use tools and techniques to monitor pipeline performance and troubleshoot issues.

Testing and Deployment (10%): Knowledge of building, testing, and deploying reliable data engineering solutions.
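To turn the weightage above into a rough study plan, the short sketch below converts each percentage into an approximate number of questions out of 60. This is only arithmetic on the published weights; the actual number of questions per objective on a given exam may differ.

```python
TOTAL_QUESTIONS = 60

# Objective weights in percent, as listed above.
WEIGHTS_PERCENT = {
    "Databricks Tooling": 20,
    "Data Processing": 30,
    "Data Modeling": 20,
    "Security and Governance": 10,
    "Monitoring and Logging": 10,
    "Testing and Deployment": 10,
}


def questions_per_objective(total, weights):
    """Convert percentage weights into approximate question counts."""
    return {name: total * pct // 100 for name, pct in weights.items()}


expected = questions_per_objective(TOTAL_QUESTIONS, WEIGHTS_PERCENT)
for name, count in expected.items():
    print(f"{name}: about {count} questions")
```

Data Processing alone accounts for roughly 18 of the 60 questions, so it deserves the largest share of study time.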

Preparation Tips for Databricks Certified Data Engineer Professional Exam

1. Leverage Cert007 Practice Exams

The Databricks Certified Data Engineer Professional Practice Exam by Cert007 is tailored to provide a hands-on simulation of the real exam. Practicing with these questions will sharpen your understanding of the key concepts and help you identify areas where additional study is needed.

2. Understand the Databricks Ecosystem

Develop a strong understanding of the core components of the Databricks platform, including Apache Spark, Delta Lake, and MLflow. Focus on how these tools integrate to create seamless data engineering workflows.

3. Study the Official Databricks Learning Pathway

Follow the official Data Engineer learning pathway provided by Databricks. This pathway offers structured courses and materials designed to prepare candidates for the certification exam.

4. Hands-On Practice

Set up your own Databricks environment and practice creating ETL pipelines, managing data in Delta Lake, and deploying models with MLflow. This hands-on experience will enhance your skills and reinforce theoretical knowledge.

5. Review Security and Governance Best Practices

Pay attention to secure data practices, including access control, encryption, and compliance requirements. Understanding governance within the Databricks platform is essential for this exam.

6. Time Management for the Exam

With 120 minutes for 60 questions, you have an average of exactly 2 minutes per question. Practice pacing yourself so that you average slightly less than that, which leaves time at the end to review your answers.
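The pacing arithmetic can be checked with a few lines of Python. The 15-minute review reserve used here is an assumption for illustration, not an official recommendation; adjust it to suit your own test-taking style.

```python
def minutes_per_question(total_minutes, questions, review_minutes):
    """Return the per-question time budget after reserving review time."""
    if questions <= 0:
        raise ValueError("questions must be positive")
    if not 0 <= review_minutes < total_minutes:
        raise ValueError("review_minutes must be between 0 and total_minutes")
    return (total_minutes - review_minutes) / questions


# 120 minutes, 60 questions, and an assumed 15 minutes held back for review.
budget = minutes_per_question(120, 60, 15)
print(f"{budget:.2f} minutes per question")
```

With no review reserve the budget is the full 2 minutes per question, which is why any time set aside for review has to come out of the per-question average.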

Conclusion

Becoming a Databricks Certified Data Engineer Professional validates your expertise in advanced data engineering using the Databricks platform. By leveraging high-quality resources like the Cert007 practice exams and committing to hands-on practice, you can confidently approach the exam and achieve certification. Remember to stay consistent with your preparation and focus on mastering the six key objectives to ensure your success.

Good luck on your journey to becoming a certified data engineering professional!
