#dbcc examples

Explore tagged Tumblr posts

Text

Keeping Your Database on Point with DBCC CHECKDB

Let’s explore how to wield the power of T-SQL with DBCC CHECKDB in SQL Server 2022, diving into the nitty-gritty of maintaining your database’s integrity with style. Picture this: your database, the backbone of your digital operations, humming along smoothly, all thanks to the vigilance in maintaining its health. SQL Server 2022 brings with it an enhanced version of a tool that’s nothing short of…

View On WordPress

#database integrity check#preventive database maintenance#SQL Server 2022 DBCC CHECKDB#T-SQL examples

0 notes

Text

Data Recovery : Page level restore in SQL Server

Data Recovery : Page level restore in SQL Server

In this article, we shall discuss the importance of understanding the internals of the page restoration for a database administrator. Most of the time, performing a page-level restore suffices for database availability. A good backup strategy is a key to recovery or restoration, and SQL Server provides us with an option to fix database pages at a granular level. Performing a page level restore in…

View On WordPress

#dbcc checkdb#dbcc examples#dbcc ind#dbcc page#page level restore in sql server#restore page using SSMS#restore page using T-SQL

0 notes

Text

Sql Server Error - a Quick Overview

Why Almost Everything You've Learned About Sql Server Error Is Wrong

In case the query touches less data it'll be less inclined to encounter the issue. Problem is going to be resolved after following the above mentioned measures. The issue needs to be resolved. The issue with the error log file is that there's so much data collected it is occasionally tough to figure out where the real errors lie.

Everything beyond is thought of as an error To have the ability to use an apostrophe in a string, it must be escaped, so that it's not regarded as a string delimiter. Occasionally you will notice an error stating that the database can't be recovered and it's marked suspect. For example, the next error points out that we would have to restore our database or endeavor to rebuild the log. Now it doesn't even wish to me register and throwing the exact same 18452 error. In the event the above mentioned manual solutions to repair the error do not do the job, then it may be that the database may have corrupt. If you come across a severity 22 error, you'll need to run DBCC CHECKDB to figure out the magnitude of the damage.

Now you're seeing errors on-screen, but in a manufacturing environment, you don't acquire such flexibility. The error can be encountered at the right time of upgrading SQL server to some other version. Accordingly, so as to receive your critical data back, you will need to understand how to fix SQL server error 823. The error that's captured in the SQL Server error log can be a result of different factors. The error 823 can cause severe data loss circumstances. SetWindowText returns success and there's no runtime error, but the text doesn't appear. All the data are kept in a database in the shape of MDF and NDF files. In addition, it is going to continue to keep the data safe means with no loss. The real data is stored in the remaining part of the pages.

The Chronicles of Sql Server Error

You should inspect the error log daily to make sure that all prospective issues are investigated as speedily as possible. Also by default, the latest error log is named ERRORLOG and it does not have any extension. The very first step to receive it resolve is to look at the SQL Server error log.

The Argument About Sql Server Error

Make it part of your routine database maintenance. SQL Server service was paused. It is trying to intialize SSL support but it cannot find the requisite information.

Sql Server Error - What Is It?

Your default database may be missing. When a query is run, SQL attempts to allocate the optimum quantity of memory in order for it to run efficiently. Thus, for optimum compatibility, it's suggested that you use anytime you run queries returning more than 1 result even if the specific driver you're using allows you to do otherwise. System databases are made automatically if we install MS SQL Server. When seeking to log in, the database might not be prepared to accept the connection. The entire database will be impacted.

The server wasn't found or wasn't accessible. It enables easy storage and retrieval of data from application running on the same computer or other computers residing in the same network. SQLEXPRESS'' folders, you have to install SQL Server. SQL Server deliberately hides the character of the authentication error and provides State 1. It cannot roll committed transactions forward because it has no room on the disk. Begin your SQL Server instance and you may then connect to SQL Server utilizing the SA login.

In MS SQL Server, two forms of databases are readily available. Testdb database is going to be created as shown in the subsequent snapshot. Therefore, backing up database regularly is a great practice that aids in restoring data is true of disaster. The AdventureWorks 2014 sample database is utilized via the report.

New Questions About Sql Server Error

If you are working to connect using Windows Authentication, verify that you're properly logged into the proper domain. Your login may not be authorized to connect. The login was created. It failed for user is one of the most common error which everyone might have seen at least once. As a consequence the user doesn't have permission to use SQL database. Therefore, users are continuously querying about the reason for the same i.e. exact cause of the SQL Server operating system error 3. So, they will understand the causes and prevent Server from the future disaster.

You're unlikely ever to should alter the processor affinity defaults if you don't encounter performance issues but it's still worthwhile understanding what they are and the way in which they work. A Unix socket file is used if you don't specify a host name or whenever you Sql Server Error specify the particular host name localhost. Since you can see above that LOG folder consists of many files. The absolute most current error log file is known as ERRORLOG.

youtube

1 note

·

View note

Text

System Center Configuration Manager current branch 1810 KB4486457 available

System Center Configuration Manager current branch 1810 KB4486457 available.

Issues that are fixed

First wave issues Synchronization of Office 365 updates may fail after you update to Configuration Manager current branch, version 1810. Errors messages that resemble one of the following are recorded in the WSyncMgr.log file: ProcessFileManifest() failed to process O365 file manifest. Caught exception: System.Net.WebException: An exception occurred during a WebClient request. ProcessFileManifest() failed to process O365 file manifest. Caught exception: System.UriFormatException: Invalid URI: The URI scheme is not valid. The distribution point upgrade process may fail. This causes a block of additional content distribution to that server. Errors messages that resemble the following are recorded in the distmgr.log file: Failed to copy D:\SRVAPPS\Microsoft Configuration Manager\bin\x64\ccmperf.dll to \\{server}\SMS_DP$\sms\bin\ccmperf.dll. GLE = 32 All superseded updates are removed and no are longer applicable on a client, even before expiration. This issue occurs even if the Do not expire a superseded software update until the software update is superseded for 3 months option is enabled. Performance improvements have been made to the Data Replication Service for device discovery data. The second and successive phases of a deployment start automatically after the success of the first phase, regardless of start conditions. Phased deployment deadline behavior settings are inconsistent between the Create Phased Deployment Wizard and the Phase Settings properties. When you run a Servicing Plan after you select a Product Category, the filter is not added correctly. The Cloud Management Gateway (CMG) content service is not created correctly when the CMG role is added after you update to Configuration Manager current branch, version 1810. The No deployment package option is selected after you change the properties of an Automatic Deployment Rule (ADR). After this update rollup is applied, affected ADRs can be re-created and their properties changes without any further issue. The Configuration Manager Message Processing Engine (MPE) may not always process Active Directory discovery data when optional attributes are added. Errors that resemble the following are recorded in the SMS_Message_Processing_Engine.log: ERROR: Got SQL exception when handle discovery message. Exception: System.Data.SqlClient.SqlException (0x80131904): String or binary data would be truncated.~~ The Service Connection Tool (serviceconnection.exe) fails and you receive the following error message when you use the -connect parameter: ERROR: System.IO.Exception : The directory is not empty. A user without Full Administrator rights may be unable to create or edit Windows Defender ATP Policies, even when you add them to the Endpoint Protection Manager security role. The Prerequisite Installation Checker incorrectly gives the option to retry a site installation again. If a second retry is tried, the administrator must run the Configuration Manager Update Reset Tool (CMUpdateReset.exe) to resolve the issue. Processing of .bld files by the SMS_Notification_Manager component takes longer than expected. This leads to delays in processing data and a backlog of files in the \inboxes\bgb.box folder. After you update to Configuration Manager current branch, version 1810, remote SQL providers who use Microsoft SQL Server 2014 or an earlier version may not always query the database. Errors that resemble the following are recorded in the smsprov.log: *** User $' does not have permission to run DBCC TRACEON. The Software Updates Patch Downloader component retries updates, up to three times. These retries fail and return error code 404. Windows Server 2016 updates are displayed incorrectly as available when you schedule updates to a Windows Server 2019 operating system image. Searching for a user’s first or last name, or full name, returns no results from the Overview section of the Assets and Compliance node of the Configuration Manager console. This issue occurs even when full discovery data is available. Globally available release issues After you enable support for express installation files, content may not always download from Windows Server Update Services (WSUS) servers in the following scenarios: Configuration Manager client installation through Software Update Point Installing updates directly from WSUS Windows Feature on Demand (FOD) or Language Pack (LP) acquisition After you update to Configuration Manager current branch, version 1810, device enrollment can overwrite Windows telemetry collection values that were previously set by Group Policy. This issue can cause value toggling between full and basic, for example, when Group Policy is applied. Hardware inventory is updated to include information about add-ins for Office365 and standalone Office products. Desktop Analytics deployment plans show a larger device count in the Configuration Manager console than in the Desktop Analytics Portal. Configuration Manager client setup may fail over a metered (for example, cellular) network connection. This may occur even if client policy settings allow for those connections. An error message that resembles the following is recorded in the Ccmsetup.log file on the client: Client deployment cannot be fulfilled because use of metered network is not allowed. Client setup may fail because of SQL Server CE schema changes. Errors that resemble the following are recorded in the Ccmsetup-client.log on the client: MSI: Setup was unable to compile Sql CE script file %windir%\CCM\DDMCache.sqlce. The error code is 80040E14. If an application is in a partly compliant state, and the client sees that a dependency is installed but the main application is not and requires re-enforcement, available deployment causes the following issues: The application is displayed as required or past due even though the deployment is available and there is no supersedence relation. Clicking Install has no effect. Sign in to Azure services fails when you use the Create Workflow in the Azure Services Wizard, even when correct credentials are used. Configuration Manager setup may fail the prerequisite check during installation or an update of a site server. This issue occurs if the environment uses SQL Always On. The “Firewall exception for SQL Server” rule shows a status of failed, and errors messages that resemble the following are recorded, even if the correct firewall exceptions are configured: ERROR: Failed to access Firewall Policy Profile. ERROR: Failed to connect to WMI namespace on Firewall exception for SQL Server; Error; The Windows Firewall is enabled and does not have exceptions configured for SQL Server or the TCP ports that are required for intersite data replication. The alternative download server that is listed in the "Specify intranet Microsoft update service location" window is not propagated to the Group Policy settings on the client. The download of Office 365 updates, such as “Semi-annual Channel Version 1808 for x86 Build 10730.20264” or “Monthly Channel Version 1812 for x64 Build 11126.20196” may fail. No errors are logged in the Patchdownloader.log file. However, entries that resemble the following are logged in the AdminUI.log log: (SMS_PackageToContent.ContentID={content_ID},PackageID='{package_ID}') does not exist or its IsContentValid returns false. We will (re)download this content. Read the full article

1 note

·

View note

Text

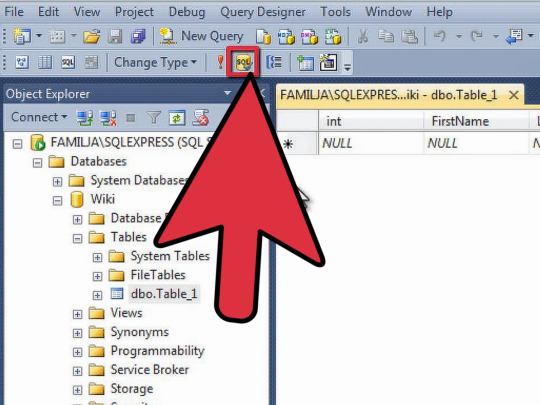

SQL Server - Reset the numeric counter (seed) of an Identity column

If you came across this article, you probably need to reset the numeric value (seed counter) of an identity column used by a table in your SQL Server database. The method described here works with all versions of SQL Server : SQL Server 2002, SQL Server 2005, SQL Server 2008, SQL Server 2008R2, SQL Server 2012, SQL Server 2014, SQL Server 2016, up to the most recent SQL Server 2017.

Arguments

table_name: Is the name of the table for which to check the current identity value. The table specified must contain an identity column. Table names must follow the rules for identifiers. Two or three part names must be delimited, such as 'Person.AddressType' or . NORESEED: Specifies that the current identity value shouldn't be changed. RESEED: Specifies that the current identity value should be changed. new_reseed_value: Is the new value to use as the current value of the identity column. WITH NO_INFOMSGS: Suppresses all informational messages.

Example

For example, the following command: Will reset to 1 the identity column value of Table1. Once executed, the command will output the following confirmation message: Identity information control: current Identity value '1', current column value '1. DBCC execution completed. If DBCC error messages were displayed, contact your system administrator.

References

If you need additional info, you can also take a look to the dedicated DBCC CHECKIDENT page available on the official SQL Server 2017 documentation. Read the full article

0 notes

Text

Defrag Those Indexes - Maintenance

This article was written back before I was looking into Sql Server 2005. The underlying idea is the same, in order to keep your database running healthy you will need to maintain and administer the underlying architecture. In this short but subtle refresh I've separated the 2000 concepts and implementations from those used today in Sql Server 2005. If you have comments or corrections do feel free to email me or leave a comment. SQL Server 2000 ===================================================== It is imperative you maintenance your Database. One way to check up on the indexes per table is to run the DBCC SHOWCONTIG command as DBCC SHOWCONTIG ('tbl_YourTableName') with fast,ALL_INDEXES You'll end up with a very similar display like the following... DBCC SHOWCONTIG scanning 'tbl_YourTableName' table... Table: 'tbl_YourTableName' (1113627606); index ID: 1, database ID: 8 TABLE level scan performed. - Pages Scanned................................: 1680 - Extent Switches..............................: 217 - Scan Density .......: 96.33% - Logical Scan Fragmentation ..................: 0.18% DBCC SHOWCONTIG scanning 'tbl_YourTableName' table... Table: 'tbl_YourTableName' (1113627606); index ID: 2, database ID: 8 LEAF level scan performed. - Pages Scanned................................: 480 - Extent Switches..............................: 64 - Scan Density .......: 92.31% - Logical Scan Fragmentation ..................: 0.83% DBCC SHOWCONTIG scanning 'tbl_YourTableName' table... Table: 'tbl_YourTableName' (1113627606); index ID: 5, database ID: 8 LEAF level scan performed. - Pages Scanned................................: 696 - Extent Switches..............................: 95 - Scan Density .......: 90.63% - Logical Scan Fragmentation ..................: 0.72% DBCC execution completed. If DBCC printed error messages, contact your system administrator. What you're really looking for is the Scan fragmentation to be as low as possible. I've also read on other sites that you want the Scan Desity to be as close to each other as possible like for example 87:96 is fairly close and gives you a density over 90%. From that you can easily run a defrag on each index as follows DBCC INDEXDEFRAG (8, 1113627606, 5) DBCC INDEXDEFRAG (8, 1113627606, 2) DBCC INDEXDEFRAG (8, 1113627606, 1) More Information can be obtained via: Sql Server Performancedbcc commands And sql-server-performance.com/rebuilding_indexes and here, SQL Server Index Fragmentation and Its Resolution If you just wish to defrangment the entire database this little script will put you on your way: (from the link above sql server performance site) SET DatabaseName --Enter the name of the database you want to reindex DECLARE @TableName varchar(255) DECLARE TableCursor CURSOR FOR SELECT table_name FROM information_schema.tables WHERE table_type = 'base table' OPEN TableCursor FETCH NEXT FROM TableCursor INTO @TableName WHILE @@FETCH_STATUS = 0 BEGIN DBCC DBREINDEX(@TableName,' ',90) FETCH NEXT FROM TableCursor INTO @TableName END CLOSE TableCursor DEALLOCATE TableCursor ------END SCRIPT SQL Server 2005 =================================================== Fastforward to today, IndexDefrag still works in 2005, but if you read around the 'net you'll find many more references to choose to rebuild your indexes via the ALTER INDEX command, which is new for SQL Server 2005. Before you go out and begin de-fragmenting tables like crazy, it's best to have a plan, finding out how much fragmentation is in your table in 2005 is easier too with the newer function that provides this information. below I've expanded on what you can find around the internet, the below script will allow you to identify rows over 100k and with more than 10% fragmentation, I also handled the commonly seen error for databases that are of the standard dictionary order (80), and the common "Error near '(' ". /script/ SET NOCOUNT ON DECLARE @db_id SMALLINT SET @db_id = DB_ID() SELECT OBJECT_NAME(i.object_id) AS TableName, i.name AS TableIndexName, phystat.avg_fragmentation_in_percent, rows FROM sys.dm_db_index_physical_stats(@db_id, NULL, NULL, NULL, 'DETAILED') phystat inner JOIN sys.indexes i WITH(NOLOCK) ON i.object_id = phystat.object_id AND i.index_id = phystat.index_id INNER JOIN sys.partitions p WITH(NOLOCK) ON p.OBJECT_ID = i.object_id WHERE phystat.avg_fragmentation_in_percent > 10 AND ROWS > 100000 /end script/ More information on this system function is availble here: http://technet.microsoft.com/en-us/library/ms188917.aspx This link will even give you a cool little script you can use to find out what tables in your database are fragmented over 40%, which is very useful if you are planning a weekend of maintenance. http://www.sql-server-performance.com/articles/per/detect_fragmentation_sql2000_sql2005_p1.aspx Now that you have the needed information on how fragmented the indexes are you can begin defragmenting using the Alter Index command ALTER INDEX ALL ON TableName REBUILD What is especially cool about 2005 is that you can choose to throttle the amount of CPUs you use for the defragging process, with the MAXDOP option note, with the following command you can restrict the number of processors down to 2 CPUs: ALTER INDEX ALL ON TableName REBUILD WITH(MAXDOP=2) You can also choose to rebuild your INDEX and continue to make it available for your users as it defrags, I suspect this has performance implications but none the less the command would be: ALTER INDEX ALL ON TableName REBUILD WITH(MAXDOP=2, ONLINE=ON) More information on the ALTER INDEX command is available on the MSDN here: http://msdn2.microsoft.com/en-us/library/ms188388.aspx Happy Defragging!

0 notes

Text

Boosting Performance

Fixing smallish databases which are less than 1-2 gb may be just annoying when you are dealing with multiple indexes, but try managing some of those larger ERP databases with literally thousands of tables! Talk about a database from hell, having to sift through 10 of thousands of indexes can be a real chore if you're searching for performance bottle necks. There are some great solutions out there that all cost money per instance or per site license and can get quite pricey. But just about all of those products are charging you for something you can do on your own. The article below is an extension of my previous blog on maintaining those indexes It's the script that has evolved from some very basic loops and DMV ( dm_db_index_physical_stats ). In my script (follow the link below) You'll find that I chose to stick to a SAMPLED stats, which essentially looks at the number of compressed pages. If you're talking millions of row of data and a very short maintenance window, you're going to want to stick to SAMPLED, otherwise, you'll opt for the full DETAILED search if A) your maintenance window is greater than the amount of time it takes for a DETAIL report to be returned B) your database is small enough One of the problems that I've ran into with my production databases that use a FULL recovery model is the maintaining the size of the transaction logs. The problem is that I COULD switch them over to be SIMPLE recovery models, and then the transaction log would not grow out of control during the rebuild / reorganization (Defrag) of the indexes, but I've found this not to be an ideal situation, since in my organization even though I have a maintenance window on the weekends, the business comes first, so if they require access to their data, I must make it available. Having good backups that allow the company to return up to the minute before failure using the FULL recovery model is important to us. So in the script (see link below) Keep Your SQL Server Indexes Fragmentation Free My DevX article was all about Sql Server 2005 and Sql Server 2008, but what about Sql Server 2000? surely you don't want to be stuck just running a performance monitor on your all your 2000 servers right? Here is the updated 2000 script to find all fragmented tables in 2000 along with the DBCC DBREINDEX wich will rebuild them for you. Catch-22: if the table has less than 1000 rows, a rebuild will not change anything and you may be left with having to drop and recreate the index manually anyways. The Code: ...I created a temp table manually to capture the results of SHOWCONTIG which I pipe to a grid result using the WITH TABLERESULTS hint, next this is joined against the information schema tables table. In the Where clause I specified that we are interested in 'Base Table's only so that we don't get a lot of system table specific stuff. I also added the cirteria that we want to see only fragmented tables of 5% and that have over 1000 rows. In my case my legacy databases are all well under 50gb and thus this query makes sense. This example use a cursor, as it's an old script, but I'll post up a cursor-less version as I prefer cursor-less solutions. As always watch those log files when you are defragging indexes! USE YourDatabaseName--Enter the name of the database you want to reindex CREATE TABLE #db_defrag ( ObjectName VARCHAR(255), ObjectID INT, IndexName VARCHAR(255), IndexID INT, INT, Pages INT, BIGINT, MinRecordSize INT, MaximumRecordSize INT, AverageRecordSize INT, ForwardedRecords INT, Extents INT, ExtentSwitches INT, AverageFreeBytes DECIMAL(20,2), AveragePageDensity DECIMAL(20,2), ScanDensity DECIMAL(20,2), BestCount INT, ActualCount INT, LogicalFragmentation DECIMAL(20,2), ExtentFragmentation DECIMAL(20,2)) DECLARE @sql AS VARCHAR(100) SET @sql ='DBCC SHOWCONTIG WITH TABLERESULTS' INSERT INTO #db_defrag EXEC (@sql) DECLARE @TableName varchar(255) DECLARE TableCursor CURSOR FOR SELECT table_name FROM information_schema.tables tbl INNER JOIN #db_defrag dfrg ON tbl.table_name = dfrg.ObjectName WHERE table_type = 'base table' AND dfrg.LogicalFragmentation >= 5 AND > 1000 ORDER BY LogicalFragmentation DESC OPEN TableCursor FETCH NEXT FROM TableCursor INTO @TableName WHILE @@FETCH_STATUS = 0 BEGIN DBCC DBREINDEX(@TableName,' ',90) SET @sql = 'UPDATE STATISTICS ' + @TableName EXEC (@Sql) FETCH NEXT FROM TableCursor INTO @TableName END CLOSE TableCursor DEALLOCATE TableCursor DROP TABLE #db_defrag

0 notes

Link

The purpose behind creating SQL indexes on database tables is to enhance the performance of the queries that read data from the base table by speeding up the data retrieval processes. But these transactional tables are not static and changing very frequently over time. These changes that are performed on the database table include adding new records, modifying or deleting the existing records. As these changes should be replicated to the related table indexes, the table index will become fragmented over time.

SQL Server Index Fragmentation Overview

There are two main types of SQL index fragmentation; Internal and External Fragmentation. The Internal Fragmentation is caused by the inserting a new record or updating an existing record with values that do not fit in the current free space in the data page and result with splitting the page into two pages to fit the new value. In this case, the SQL Server Engine will move about 50% of the data from the current page to the new data page that is generated from the split operation, in order to keep the SQL index tree balanced. Internal fragmentation can be caused also by the random delete operations that result in empty space on the data pages. This type of operations leaves the data pages unfilled and results with larger SQL Server index size and slower performance due to the need to read more data pages to retrieve the requested data.

The External Fragmentation is caused when the data pages of the SQL Server index are scattered on the database physical file, due to having the logical order of the SQL Server index does not match the physical order on the underlying disk drive. External fragmentation can be caused by random delete operations that leave a number of the extent pages empty while having the extent itself reserved. This type of fragmentations results in performance degradation, as it will take more time and consume more resources in jumping between the scattered data pages in order to retrieve the requested data from the underlying disk drive.

The reason why we care much about the SQL index fragmentation is that the index that you have created to enhance the system performance, may degrade the performance of your queries after being highly fragmented. Imagine difference in time and number of disk reads between reading a specific number of rows from a healthy SQL index in which the data is stored in contiguous data pages within the database file, and reading the same number of rows from a highly fragmented SQL Server index, in which the data is scattered in noncontiguous data pages within the database file.

If it is found that the table SQL index is fragmented, you need to fix the fragmentation issue by performing SQL Server Index Reorganization or Index Rebuild, based on the SQL index fragmentation percentage. For example, a SQL Server index with fragmentation percentage of less than 30% can be reorganized, where the SQL index with a fragmentation level higher than 30% should be rebuilt.

Note: Check Maintaining SQL Server Indexes for more information.

You can proactively overcome SQL Server index fragmentation and pages splitting issues by designing the index properly and setting the Fill Factor and pad_index index creation options with the propervalues.

Note: Check SQL Server Index Operations for more information.

SQL Server Index Fragmentation Report

It is not considered a best practice just to create a SQL index in your database table to take advantages from the data retrieval operation enhancement and leave that SQL Server index forever without continuously monitoring its fragmentation and maintain it.

SQL Server provides us with several methods that can be used to check the SQL index fragmentation percentage.

SQL Server Index Properties

The first method is the Fragmentation page of the SQL Server Index Properties window. Right-click on the index that you need to check and choose the Properties option. From the SQL Server Index Properties page, browse the Fragmentation page, and you will see the index fragmentation percentage and how much the SQL Server index pages are full under the Fragmentation section, as shown below:

In a few steps, you can easily check the SQL Server index fragmentation percentage of the selected index from the index properties window. But what if you need to have an overview of the fragmentation percentage for all table’s indexes or all database tables indexes? In this case, you need to go and check it one by one. As a result, a single work week will not be enough for one database report!

sys.dm_db_index_physical_stats

Another method that can be used to check the fragmentation percentage for the database indexes is querying the sys.dm_db_index_physical_stats dynamic management function, that was introduced the first time in SQL Server 2005, as a replacement for the deprecated DBCC SHOWCONTIG command. It provides information about the size and fragmentation percentage for the database indexes.

In order to get meaningful information about the fragmentation percentage of all indexes under the specified database from the sys.dm_db_index_physical_stats DMF, you need to join it with the sys.indexes DMV, as in the T-SQL script below:

SELECT OBJECT_NAME(Index_Info.OBJECT_ID) AS TableName ,Index_Info.name AS IndexName ,Index_Stat.index_type_desc AS IndexType ,Index_Stat.avg_fragmentation_in_percent IndexFragmPercent FROM sys.dm_db_index_physical_stats(DB_ID(), NULL, NULL, NULL, NULL) Index_Stat INNER JOIN sys.indexes Index_Info ON Index_Info.object_id = Index_Stat.object_id AND Index_Info.index_id = Index_Stat.index_id ORDER BY IndexFragmPercent DESC

Based on your SQL development skills, you can perform the filtration that you need to retrieve the SQL index fragmentation on the required scope, with no option to get a summary for the overall instance databases, taking into consideration that the shown data is since the last SQL Server service restart. The result in our case will be like:

SQL Server Index Physical Statistics Standard Report

SQL Server provides us with several standard reports that contain useful statistical information about the selected database. The Index Physical Statistics report, is one of the SQL Server standard reports that returns statistical information about the SQL Server index partitions, fragmentation percentage and the number of pages on each SQL index partition at the database level, since the last SQL Server service restart, with recommendation how to fix the index fragmentation issue, if exists, based on the fragmentation percentage of the SQL Server index.

To view the SQL Server Index Physical Statistics report, right-click on your database, choose Reports -> Standard Reports then select the Index Physical Statistics report, as shown below:

And the generated database level report, that cannot be filtered and needs expanding each table indexes result manually, will be as shown below:

ApexSQL Defrag

ApexSQL Defrag tool is a 3rd party SQL index defragmentation tool that can be easily used to check SQL Server index fragmentation and usage information and perform or schedule the suitable fix for the index fragmentation issue.

From one central location, you can analyze and review the fragmentation and usage information at different levels, including the SQL index fragmentation and usage information at the SQL Server index level, the table level, the database level and finally at the overall SQL Server instance level. It provides us with variant types of filters that can be used to display and maintain only your target indexes.

ApexSQL Defrag allows us also to configure the Fill Factor setting for the table indexes, create alerts for all defragmentation jobs and policies and send Email notification for successful or failed jobs.

The displayed information will provide a good view about the SQL index size and the fragmentation percentage, that will not be affected by any SQL Server service restart or server reboot. This is because this information will be stored in a central repository database for the ApexSQL Defrag tool.

Installing ApexSQL Defrag

ApexSQL Defrag can be easily downloaded from ApexSQL Download Center and installed to your server by following the straight-forward installation wizard, as shown below:

First, you will be asked to specify the service account that will be used as an execution context for the ApexSQL Defrag agent and conveys commands to the SQL Server instance, as follows:

After specifying the ApexSQL Defrag service account, you will be asked to specify where to install the ApexSQL Defrag tool and whether to create a shortcut icon for the tool in the desktop, as shown below:

Checking that the provided path contains minimum 127MB free space, ApexSQL Defrag will be installed successfully to your server. And the following notification will be displayed, providing you with the ability to start ApexSQL Defrag directly:

If you run ApexSQL Defrag for the first time, you will be asked to confirm the creation of the central repository database, where these data historical and configuration data will be stored, as follows:

Add New Server

After creating the repository database, ApexSQL Defrag will be started with no SQL instance. To check the SQL index fragmentation percentage on a specific SQL Server instance, you need to add that instance to that tool. To achieve that, click on the Add button, under the Home tab, as shown below:

In the Connect to SQL Server window, you will be asked to provide the name of the SQL Server instance that will be used and the credentials that are required to connect to that server, as follows:

SQL Server Index Analysis

When the server is added, you can perform a new check for all SQL index fragmentation information from Fragmentation tab below:

ApexSQL Defrag provides you with the ability to analyze all indexes on that instance based on three modes:

DETAILED: in which all data and SQL index pages will be read during the scan process

SAMPLED: in which only 1% of the pages will be read, taking into consideration that the SQL Server index contains more than 10,000 pages

LIMITED: in which only the pages located at the parent level of b-tree will be read

To analyze SQL Server index information from the connected SQL Server instance, click on the Analyze button, under the Fragmentation tab, and choose to perform a Fast or Deep analyzing. We will perform Deep analyzing to get full information, as shown below:

Keep monitoring the progress bar at the bottom side of the tool to check the analyze progress, as follows:

And a comprehensive report, that contains full information about all SQL indexes created under each database of this SQL Server instance, will be displayed as shown below:

With the ability to dive between the different levels; SQL Server instance, database, table or index, with single click under the same central window, and filter the retrieved result based on a specific fragmentation level or threshold, as shown below:

In addition, you can drag any column from the report columns to group the overall report based on that column values, such as the example below, in which we grouped the report data based on the Table name:

ApexSQL Defrag Reports

ApexSQL Defrag provides us with a number of reports that shows statistical information about the database indexes. These reports can be configured and checked under the Reports tab below:

The first report is the Total report, that shows a graphical representation for SQL index fragmentation and disk space usage at the selected level. For example, if you click at the instance name, the report will draw the fragmentation percentage graph and the space usage graph at the SQL Server instance level, as below:

If you click on any database from the Server Explorer window, the report will automatically change the view to show the graph at the database level, as shown below:

The second report, that can be also viewed at different levels, is the Statistics report. This report shows statistical information about the total indexes size and fragmentation and the distribution of the index types at the selected level, as shown below:

The third report is the Top 10 report, that views the top 10 clustered and non-clustered indexes with the largest size and highest SQL index fragmentation percentage, at the specified level. For example, the following report shows the top 10 expensive indexes, based on the size and fragmentation, at the SQL server instance level:

Moving down to a specific database, the report will automatically show the top10 expensive indexes, based on the size and fragmentation, at the selected database level, as shown below:

Diving deeply to a specific table under that database, the report will show the top 10 expensive indexes, based on the size and fragmentation, as follows:

ApexSQL Defrag Report Export

ApexSQL Defrag allows us to export the fragmentation reports to different files formats, includes PDF, IMG, HTML, CS, and XML, with the ability to customize the report setting before it being generated. Under the Reports tab, click on the Options button:

From the Edit Export Options window, you can configure the different options, starting from the naming convention of the generated file, the path where the file will be saved, the page orientation and finally the content of each report, as shown below:

For example, to export the generated Total report at the specified level, click on the Export button, under the Reports tab, and choose the type of file, to which this Total report will be exported. In a few seconds, the report will be generated, asking you whether you want to open the generated report. Click Yes to open that report directly, as shown below:

In addition, you can export the Top 10 report at the specified level, by clicking on the Export button, under the Reports tab, and specify the export file type, and the report will be generated quickly, asking you if you want to view the generated report directly. Click Yes to open the generated, as shown below:

It is clear from the previous examples, that we can easily use ApexSQL Defrag tool to review and analyze the SQL index fragmentation percentage information and dive at the different level starting from the SQL Server instance level until the SQL Server index level and display it in different exportable formats, without having the displayed information affected by any server or service restart, as they will be stored in a repository database.

0 notes

Text

SQL Server Compact Toolbox 3.7–Visual Guide of new features

After more than 235.000 downloads, version 3.7 of my SQL Server Compact Toolbox extension for Visual Studio 2013, 2012 and 2010 is now available for download (and available via Tools/Extensions in Visual Studio). This blog post is a visual guide to the new features included in this release, many suggested by users of the tool via the CodePlex issue tracker. A major “theme” for this release has been to improve the experience for Visual Studio 2013 users, which has no Server Explorer/DDEX support for SQL Server Compact at all. Add Column, Index and Foreign Key to table As Server Explorer support is completely missing in VS 2013, and only available for 4.0 in VS 2012, I have added dialogs for building script to add columns, indexes and foreign keys to a table. Right click any table, and select the desired dialog: Note that currently the Index and Foreign Key dialogs only support a single column, I am aware of this, so please vote here and/or here. Generate Entity Framework 6 Entity Data Model with VS 2012 and SQL Compact 3.5 The code generation menu items have now been moved to a sub-menu: In addition, as the Entity Framework Tools are now available for Visual Studio 2012 as well as Visual Studio 2013, it is now possible to generate a “database first” Entity Framework model (EDMX) based on a SQL Server Compact database in VS 2012. And I have just contributed an Entity Framework SQL Server Compact 3.5 provider (available as prerelease on NuGet), that allows you to use SQL Server Compact 3.5 with Entity Framework 6. Copy database file It is now possible to copy a database file, and then for example paste it into your project, should you so desire, simply by pressing Ctrl+C on the selected database, or selecting the context menu item: Data only export to SQL Server The current data export feature has been enhanced to support SQL Server and IDENTITY columns by using DBCC CHECKINDENT instead of the unsupported ALTER TABLE statements currently used. New "ALTER column" context menu item To help you modify columns, a new context menu to script ALTER COLUMN statements has been added: “Database designer” I am now hosting a web based database designer based on http://code.google.com/p/wwwsqldesigner/ (which implements only the SQL Server Compact column data types). Current state is “alpha” (and there are known issues with IE 11)! Server DGML now has table selection When generating a database diagram for a SQL Server database, you can now select which tables to include in the graph, which can be helpful when diagramming databases with many tables. I have already blogged about this earlier. Other improvements and bug fixes Missing database files indicated in tree view No longer depends on the SQL Server Compact 3.5 runtime, and no longer depends on DbProviderFactory, simply requires either 3.5 or 4.0 in GAC Each Query opens in new window BUG: "Upgrade to 4.0" was blocked in Visual Studio 2013 BUG: Not ideal support for Dark Theme in VS 2012/2013 (improved slightly) BUG: EF6 EDMX code generation with VB was broken

0 notes

Link

Data Definition Language queries don’t go through the optimizer, right? While normally, my short answer to this question in the past would have been, yes. However, with testing comes knowledge and I want to be sure about the statement.

I’m working with a team of people to completely rewrite the SQL Server Execution Plans book. We’ll probably be published in April or May. It’s going to be amazing. The structure will be completely different and the book will represent five years of additional knowledge in how execution plans work and how to read and interpret them since the last book was written. However, enough on that. Let’s answer the question about Data Definition Language.

First of all, we need to quickly define our terms. Data Definition Language (DDL) represents the syntax for queries that build your databases and the data structures within them. As a result, if you read the documentation at MSDN, you’ll see that this includes CREATE, ALTER, DROP, ENABLE/DISABLE TRIGGER, TRUNCATE TABLE and UPDATE STATISTICS. Consequently, these command are separated from the Data Manipulation Language (DML) queries that are used to SELECT, UPDATE, DELETE and INSERT your data (amongst other things).

Testing the Data Definition Language Query

Here is an example of one of the simplest possible DDL queries:

CREATE TABLE dbo.MyNewTable ( MyNewTableID INT PRIMARY KEY IDENTITY(1, 1), MyNewValue NVARCHAR(50) ); GO

We can check whether or not you’re going to get an execution plan for this in two easy ways. You can capture the estimated plan, or run the query and capture the actual plan. Since most of the time, it’s preferable to work with the added runtime information that an actual plan provides, let’s start there. However, if you execute the query above and try to capture an actual plan, you will not get one. So, let’s capture the estimated plan instead. Here’s what we see:

Not much of a plan really. The devil is in the details though, so let’s open the Properties page (always use the Properties page, the ToolTip is just too unreliable in the amount of information displayed):

That is ALL the properties that are available. The implication is simple, SQL Server doesn’t create execution plans for DDL statements.

Further, the lack of a plan suggests that these queries must not go through the query optimization process. We can check this. There is a Dynamic Management View (DMV), sys.dm_exec_query_optimizer_info, that shows aggregate values for the work done by the optimizer. It’s a little tricky to capture the metrics of a single query, but it is possible (NOTE: don’t run this on production, please):

DBCC FREEPROCCACHE(); GO SELECT * INTO OpInfoAfter FROM sys.dm_exec_query_optimizer_info AS deqoi; GO DROP TABLE OpInfoAfter; GO --gather the existing optimizer information SELECT * INTO OpInfoBefore FROM sys.dm_exec_query_optimizer_info AS deqoi; GO --run a query CREATE TABLE dbo.MyNewTable ( MyNewTableID INT PRIMARY KEY IDENTITY(1, 1), MyNewValue NVARCHAR(50) ); GO SELECT * INTO OpInfoAfter FROM sys.dm_exec_query_optimizer_info AS deqoi; GO --display the data that has changed SELECT oia.counter, (oia.occurrence - oib.occurrence) AS ActualOccurence, (oia.occurrence * oia.value - oib.occurrence * oib.value) AS ActualValue FROM OpInfoBefore AS oib JOIN OpInfoAfter AS oia ON oib.counter = oia.counter WHERE oia.occurrence <> oib.occurrence; GO DROP TABLE OpInfoBefore; DROP TABLE OpInfoAfter; GO

If we run this, we won’t see any values. This is because this CREATE TABLE statement doesn’t go through the optimizer. There’s no optimization possible, so you won’t see an execution plan of any kind.

Does this mean that my first answer is correct then? Does the optimizer skip making execution plans for DDL statements?

Changing the Data Definition Language Query

Let’s modify the query so that we’re doing an ALTER table instead of a CREATE:

ALTER TABLE Sales.Customer WITH CHECK ADD CONSTRAINT SomeTest FOREIGN KEY(CustomerID) REFERENCES [dbo].[Agent] ([AgentId]);

If we check the Estimated and Actual plans using the same methods above, we don’t get an execution plan (well, the estimated plan is the same T-SQL place holder that we saw). What happens if we see what the optimizer did? This time, we get results:

counter ActualOccurence ActualValue elapsed time 2 0 final cost 2 0.139429282 insert stmt 1 1 maximum DOP 2 0 optimizations 2 2 search 1 2 2 search 1 tasks 2 117 search 1 time 2 0 tables 2 2 tasks 2 117 view reference 1 1

I’ll tell you right now, I don’t know what this represents. I suspect I’d need to run the debugger to see it. Maybe it’s a plan for the CHECK process of the check constraint. The optimizer is involved in this process, twice. Two different statements were optimized. One involved inserting data and one involved referencing a view. However, at the end of the process, we still don’t see an execution plan displayed.

How About Extended Events

Can we capture the events to see what’s happening? We can try. I’ll spin up a Session and capture the following events:

query_post_execution_showplan sql_statement_completed sp_statement_completed

In addition, I’ll enable event correlation so that we can see the order in which events occurred. Now, I’ll try running the ALTER TABLE command to see what we can find out:

name statement attach_activity_id.guid attach_activity_id.seq query_post_execution_showplan NULL 65A0A74B-E5D5-460C-846D-87808C334283 1 sp_statement_completed SELECT * FROM [Sales].[Customer] 65A0A74B-E5D5-460C-846D-87808C334283 2 sql_statement_completed ALTER TABLE Sales.Customer WITH CHECK ADD CONSTRAINT SomeTest FOREIGN KEY(CustomerID) REFERENCES [dbo].[Agent] ([AgentId]) 65A0A74B-E5D5-460C-846D-87808C334283 3

And there we have it. We can see that there was a plan compiled, first, then a procedure call was made with a single statement: SELECT * FROM Sales.Customer. Finally our SQL Batch statement that ran the ALTER TABLE was created. Everything is grouped by the activity GUID and we have them in the sequence determined by attach_activity_id.seq. Here’s the execution plan:

In short, there was a plan generated here, but it is, as I guessed at, the plan for the check constraint process. There is no plan for the Data Definition Language statement.

Conclusion

Just saying something doesn’t make it true. You have to be able to prove it. From every evidence that Microsoft supplies us, the DDL statements do not generate an execution plan or go through the query optimization process. Because we tested this theory by attempting to create a plan, by observation of the optimizer through the sys.dm_exec_query_optimizer_info DMV, and through Extended Events, we can therefore safely say that none of the statements tested created any kind of plan for the DDL statement.

Have I tested every variation on every possible DDL statement? No. Is it possible that other statements could have execution plans associated with them? I won’t say that’s impossible, because nothing is impossible. Therefore, I’ll simply say, that’s unlikely.

The post Query Optimizer and Data Definition Language Queries appeared first on Home Of The Scary DBA.

0 notes

Text

Navigating Through the Storm: Repairing SQL Server 2022 Database Corruption

Below is a structured approach to tackling database corruption in SQL Server 2022, featuring practical T-SQL code examples. Introduction Database corruption is a daunting challenge for any database administrator (DBA). In SQL Server 2022, Microsoft has continued to enhance its robust set of tools to detect, diagnose, and repair corrupt databases. This article provides a hands-on guide for…

View On WordPress

#database integrity solutions#DBCC CHECKDB examples#SQL Server 2022 corruption repair#SQL Server corruption prevention#T-SQL repair scripts

0 notes

Text

Ensuring Database Integrity with DBCC CHECKDB in SQL Server

Introduction Maintaining the integrity of your SQL Server databases is crucial for ensuring data reliability and preventing corruption. One of the most powerful tools available for this purpose is DBCC CHECKDB. In this article, we’ll explore what DBCC CHECKDB does, how to use it effectively, and provide practical T-SQL code examples to help you incorporate it into your database maintenance…

View On WordPress

0 notes

Text

DBCC DROPCLEANBUFFERS vs DBCC FREEPROCCACHE

In the vast and intricate world of SQL Server, two commands that often spark interest among database professionals are DBCC DROPCLEANBUFFERS and DBCC FREEPROCCACHE. These commands play a pivotal role in the performance tuning and testing of SQL Server environments. By delving into practical T-SQL code examples, we can uncover the applications and implications of these commands, thereby enhancing…

View On WordPress

#DBCC DROPCLEANBUFFERS usage#DBCC FREEPROCCACHE command#query optimization techniques#SQL cache management#SQL Server performance

0 notes

Link

When it comes to SQL Server performance, you might not think of it as a big deal because of today’s modern hardware but every once in a while, your office phone will start ringing off the hook, your email inbox will start blowing up… SQL Server has been brought to its knees. What happened?

That’s what we are going to look at in this article. The main goal is to identify what some of the common problems are and take a close look at the SSMS Performance Dashboard tool. This tool is available out of the box for DBAs allowing to diagnose and resolve their SQL Server performance issues quickly and easily for all supported versions of SQL Server from SQL Server 2008 to SQL Server 2017.

SSMS Performance Dashboard used to be a separate download that required a schema to be deployed but with the release of SSMS 17.2, Microsoft released the Performance Dashboard as a part of Standard Reports into SSMS eliminating the previous installation process.

So, let’s select a server in SSMS from Object Explorer, and from the right-click context menu choose Reports > Standard Reports and open the Performance Dashboard:

Next, we will see the data populated in the report:

This report is designed to help a DBA easily single out if there is any present performance killers on the targeted SQL Server. From the dashboard itself, we can further click on the hyperlinks to navigate to additional report for further drill through. For example, click the IO Statistics under the Historical Information to show the number of IOs performed for each database since it was brought online:

Each hyperlink will generate a report that puts the targeted task into a specific category, so it’s easier to read the generated data and what makes this a great SQL Server monitoring solution.

The most common performance issues which the Performance Dashboard can pick up and help you resolve it cover cases like:

CPU bottlenecks (shows queries with the most CPU utilization)

I/O bottlenecks (shows queries with the most disk activity)

Index recommendations (missing indexes)

Blocking

Resource contention (latch contention)

If a CPU holds back the rest of the system, SQL Server Performance Dashboard Report will capture additional diagnostic data that may be necessary to resolve the problem and point out the presence of the issue:

All this information can be used in a global set of SQL Server tools that make an ecosystem. For instance, after reading the reports, we can fire up the Activity Monitor from the same right-click context menu:

This monitor provides an overview of the active expensive queries among other useful information about SQL Server processes and how these processes affect the current instance of SQL Server:

Microsoft indicates that this monitoring has lightweight profiling infrastructure which basically means the service can run all the time and it only takes 1.5 – 2% of resource consumption in terms of CPU. This doesn’t hit overall performance of most SQL Servers and in return brings a lot to the table.

How, what we can do from here is right-click the actively expensive query and choose Show Execution Plan:

Why? Because recent CPU rate used by this query (see “sys.dm_exec_query_stats.total_worker_time“) is huge, and also the elapsed time of all completed execution of this query (see “sys.dm_exec_query_stats.total_elapsed_time“) is huge which means that this query could be running for hours.

This will open the Execution Plan for this query which we can examine further and look for bottlenecks. In this case, if we pause the mouse over the “Merge Join” operator which is the obvious performance killer with 93% of the total cost relative to the batch, we can view the description and properties of the operator in the displayed tooltip. As shown below, the Estimated number of Rows is extremely high (1,994,870,000) and that’s the cause of the long running query:

This is a very artificial example, but the point is that we found the smoking gun here. Let’s also take a look at a more real-world example and see what we can do when we come upon a slow/long running query.

Now, what I like to do before analyzing queries is to create a clear system state which basically means to remove as much as possible from the SQL Server cache. This is easily done by executing the query from below:

CHECKPOINT; GO DBCC DROPCLEANBUFFERS; DBCC FREESYSTEMCACHE('ALL'); GO

After this step, here’s another query captured as an expensive active query. When executed in SSMS, the status bar indicates that it took 3 seconds to complete:

This is all good, but when it comes to performance you can also consider another free tool like ApexSQL Plan that helps me analyze actual/estimated execution plans, and optimize SQL queries better than SSMS’s native execution plans.

Next, always remember to clear the cache and then execute the query if you want to capture more reliable results. Let’s run the same query in ApexSQL Plan and see the result. Create a new session, paste the code in the editor, along with the “clear cache” part in front, and from the Management tab hit the Actual button. If previously a connection was not established, set the targeted Server, Authentication method, Database and hit the OK to get the actual execution plan:

What we get inside the ApexSQL Plan is operator level performance information of an execution plan. Understanding all of the factors that are needed to ensure that a query is executed as quickly as possible is more of an advanced topic. I do want to highlight the fact that getting in, analyzing and truly optimizing queries does require a fair amount of experience with SQL Server. Also worth mentioning, is that some of the topics fall under the domain of your database developer or administrator. Now, with this little disclaimer being tossed out there it’s okay if you don’t understand the execution plan. But, on the other side, all we need to know right now is under the Select statement under the Duration column:

Note that the execution time is in milliseconds and that it’s 4,177 or 4 seconds. If you remember the result from the previous run in SSMS, it was 3 seconds. The reason why it took more for SQL Server to fetch the same data is that this time, the cache was cleared. Next, I’d like to mention another neat optimization tool part of the global ecosystem called Database Engine Tuning Advisor. Go back to SSMS, right-click anywhere inside the query editor and from the context menu choose Analyze Query in Database Engine Tuning Advisor command:

This tool examines how queries are processed in the databases you specify. All we need to do from here, is just to hit the Start analysis button at the top right:

Once the analysis is finished, under the Recommendations tab, you should see suggestions on how you can improve query processing performance by modifying database structures such as indexes, indexed views, and partitioning. In this case, there is only one index recommendation that should improve execution of this query at estimated 34%:

Let’s finish the optimization by going to Action > Apply Recommendations:

Hit the OK to confirm:

Once done, click Close to finish up the process:

Go back to ApexSQL Plan and execute the same query one more time. This time, the execution took 3,853 milliseconds:

This might not sound like a substantial improvement (4,177 to 3,853) but even a slight 8% improvement like this can go a long way if you think that this query could be coming from an application and the count could go as high as 1000 per hour. And remember, this 8% improvement is done with a single index creation.

I hope this article has been informative for you and I thank you for reading.

References: New in SSMS: Performance Dashboard built-in SQL Server Performance Dashboard Reports unleashed for Enterprise Monitoring !!! Start and Use the Database Engine Tuning Advisor

0 notes

Link

Indexes are paramount to achieving good database and application performance. Poorly designed indexes and a lack of the same are primary sources of poor SQL Server performance. This article describes an approach for SQL server index optimization to increase query performance.

An index is a copy of information from a table that speeds up retrieval of rows from the table or view. Two main characteristics of an index are:

Smaller than a table – this allows SQL Server to search the index more quickly, so when a query hits a particular column in our table and if that column has an index on it, the SQL Server can choose to search the index rather than searching the entire table because the index is much smaller and therefor it can be scanned faster

Presorted – this also means that search can be performed faster because everything is already presorted, for example, if we’re searching for some string that starts with the letter “Z” the SQL Server is smart enough to start the search from the bottom of an index because it knows where the search criteria is going to be

Available index types

In general, SQL Server supports many types of indexes but in this article, we assume the reader has a general understanding of the index types available in SQL Server and will only list the most used ones that have the greatest impact on SQL Server index optimization. For a general description of all index types, please see Index Types.

Clustered – determines how the data is written to the disk e.g. if a table doesn’t have a clustered index, the data can be written in any order making it hard for SQL Server to access it, however, when present, a clustered index sorts and stores the data rows of the table or view usually in alphabetical on numerical order. So, if we have an identity field with a clustered index on it then the data will be written on the disk based on the number of that identity. There can only be one way in which SQL Server can order the data physically on the disk and therefore we’re only allowed one clustered index per table. One important thing to know is that a clustered index is automatically created with a primary key

Nonclustered – this is the most common type in SQL Server and typically more than one on a single table. The maximum number of nonclustered indexes vary depending on the SQL Server version but the number goes e.g. in SQL Server 2016 as high as 999 per table. Unlike clustered indexes, which actually organize data, the nonclustered index is a bit different. The best analogy would be to think of it as a book. If we go to the very end of a book, usually there is an indexing part that basically has a huge list of topics and it points on which page they’re on. A typical scenario is the reader finds a topic/term and it points e.g. to a chapter on page 256. If the reader goes to that page, the searched information is right there. The point is to find it very fast without the need to go through the entire book searching for the content and this is basically what nonclustered index does

Columnstore – converts data that is normally stored in columns and converts it into rows and in doing so it allows the database engine to search more quickly. They are the standard for storing and querying large data warehousing fact tables

Spatial – this type of index provides the ability to perform certain operations more efficiently on spatial objects like geometry and geography. They are somewhat uncommon outside of specialized GIS systems

XML – as the name applies, this index type is associated with the XML data type and they convert XML data into a tabular format and again this allows them to be searched more quickly. There’re two types: primary and secondary. A primary index is a requirement in order to create secondary

Full-text – provides efficient support for sophisticated word searches and English language queries in character string data. This is the only type of index that allows us to run a different type of query and to find words that sound like each other or different forms of a word, so for example if a singular word search is performed, the index would also return a plural of the specified word

Creating an index

So, now that we gained some basic knowledge and a general idea of what indexes do let’s see a few SQL Server index optimization real-world examples using SQL Server Management Studio (SSMS) to create an index and most importantly take a closer look at some performance benefits of indexes.

First things first, we need to create a testing table and insert some data into it. The following example uses the AdventureWorks2014 database but you’re welcome to use any database also because we’re creating a new table that can be deleted afterward. Execute the code from below to create a brand new copy of the existing Person.Address table:

SELECT * INTO Person.AddressIndexTest FROM Person.Address a;

We just created a new table called Person.AddressIndexTest and by executing the query from above we’ve copied 19614 records into it but unlike the source table the newly creaded one has absolutely no indexes on it:

Because this table has no indexes on it, if we open up ApexSQL Plan and look at the execution plan, it’s going to be a full table scan (scan all rows from the table), row-by-row bringing back the result that is specified in the Select statement:

Now, let’s query the new data in SSMS and see how we can analyze information returned in the results grid. Before analyzing queries and performance testing it’s recommended to use the below code:

CHECKPOINT; GO DBCC DROPCLEANBUFFERS; DBCC FREESYSTEMCACHE('ALL'); GO

The CHECKPOINT and DBCC DROPCLEANBUFFERS are just creating a clean system state. It’s going to remove as much as possible from the cache because when doing performance testing it’s highly advisable to start with a clean state of memory each time. This way we know that any changes in performance weren’t caused by some data being cached that hasn’t been cached on previous runs.

The other part of the same query will be the Select statement in which we’re returning one column from the table we just created. But wrapped around the Select statement, let’s add the SET STATISTICS IO to display information regarding the amount of disk activity generated by T-SQL statements itself. So, when we execute the statement, it will of course return some data but the interesting part is when we switch over to the Messages tab where we can see how the table was queried under the hood:

As it can be seen, there’re 339 logical reads and the same amount of read-ahead reads. What it’s important here is to know what those two represents:

read-ahead reads – is when the data is read from the disk and then copied into memory (cache) AKA disk activity

logical reads – is when the data is read from the cache AKA memory activity

An easier way to get the same information is to get the actual execution plan of just the Select statement in ApexSQL Plan, and then switch to I/O reads tab. Remember to always clear the cache or you might see zero read-ahead reads. Why? Because we already executed the Select statement once in SSMS and after that SQL Server accesses data from the cache:

Now, let’s create an index on the table and then run the same query again and there should be a lower number of reads. The easiest way to create an index is to go to Object Explorer, locate the table, right-click on it, go to the New index, and then click the Non-Clustered index command:

This will open the New index window in which we can click the Add button on the lower right to add a column to this index:

For this example, we only need one column to be added to the index and that’s the one from the Select statement. So, select the AddressID table column and hit the OK button to proceed:

Back to the new index table, Under the Index key columns tab, there should be the column we just added (if it’s not, switch the page and it will appear). SQL Server has automatically chosen a name for the index and if needed this can be changed but why not go with the defaults. To complete the index creation just hit the OK button one more time:

Once this is done, let’s run the exact same query and look at the I/O reads again:

This time, we got 1 physical, 13 logical, and 11 read-ahead reads which is significantly less than before. Notice that we got the exact same result back with much less disk activity and access to the cache within the addition of a single index and SQL Server fulfilled the request of this query but did significantly less work. Why is that? Well, we can look at a few things to explain this. First, let’s go to Object Explorer, right-click our table and choose Properties:

This will open the table properties window and if we switch over to Storage page, there’s a Data space item which represents the size of the data in the table in megabytes (in this case 2.68 MB) and the first time when we ran the query without the index, SQL Server had to read every row in the table but the index is only 0.367 MB. So, after we created the index, SQL Server could execute the same query by only reading the index because in this case the index is 7.3x smaller than the actual table:

We can also look at the statistics. Statistics play a significant role in the database performance world. Once the index is created, SQL Server automatically creates statistics. Back to Object Explorer, expand the table, expend the Statistics folder and right-click the statistic with the exact same name as previously created index and choose Properties:

Again, this will open the statistics properties window in which we can switch to Details page under which we can see that the index is broken into different ranges:

You’ll see hundreds of these ranges in statistics on tables and because of them, SQL Server knows if values searched for are at the very beginning, middle, or end of an index and therefore it doesn’t need to read the entire index. Usually, it will start at some percentage thru and just read to the end of it.

So, these two factors: an index is usually smaller than a table and the fact that statistics are maintained on an index allows the SQL Server to find the particular data we’re looking for by using fewer resources and more quickly. Bear in mind that the indexes provide for a performance increase when the data is being read from a database but they can also lead to a performance decrease when the data is being written. Why? Simply because when inserting data into a table, SQL Server would have to update both the table and the index values increasing the writing resources. The general rule of thumb is to be aware of how often a table is being read vs how often is written to. Tables that are primarily read-only can have many indexes and tables that are written to often should have fewer indexes.

Columnstore index

A columnstore index is a technology for storing, retrieving and managing data by using a columnar data format, called a columnstore. Creating a columnstore index is similar to creating a regular index. Expand a table in Object Explorer, right-click on the Index folder and there will be two items: Clustered and Non-Clustered Columnstore Index:

Both commands open up the same new index dialog. So, rather than showing the same process of creating another type of indexes let’s look at an existing Columnstore indexes in our sample database. Now, what I like to do is to run a query from below against a targeted database and it will return a list of all indexes for all user tables from that database:

USE AdventureWorks2014 GO SELECT DB_NAME() AS Database_Name, sc.name AS Schema_Name, o.name AS Table_Name, i.name AS Index_Name, i.type_desc AS Index_Type FROM sys.indexes i INNER JOIN sys.objects o ON i.object_id = o.object_id INNER JOIN sys.schemas sc ON o.schema_id = sc.schema_id WHERE i.name IS NOT NULL AND o.type = 'U' ORDER BY o.name, i.type;

The results grid shows the database name, schema name, table name, index name, and most importantly index type. Scrolling down thru the list of indexes find one that says nonclustered columnstore and, as we can see from below example, one is attached to the SalesOrderDetail table:

Next, let’s run a Select query in ApexSQL Plan and again remember to add a checkpoint to clean the buffers:

CHECKPOINT; GO DBCC DROPCLEANBUFFERS; DBCC FREESYSTEMCACHE('ALL'); GO SET STATISTICS IO ON; SELECT sod.SalesOrderID, sod.CarrierTrackingNumber FROM Sales.SalesOrderDetail sod OPTION(IGNORE_NONCLUSTERED_COLUMNSTORE_INDEX); SET STATISTICS IO OFF;

The first time we’ll run it with the option to ignore the columnstore index. The idea behind this is to see what kind of performance we get when we ignore the index and as shown below we got: scan count of 1, 1246 logical reads, 3 physical reads, and 1242 read-ahead reads:

We already know what those I/O reads represent but let’s just comment out the option to ignore the columnstore index and see what happens:

So, this time the index will be used and instead of disk and memory activity we got previously, this time we got 218 lob logical reads, 3 lob physical reads, and 422 lob read-ahead reads:

So, we went down from over 1,200 read-ahead reads to about 400 which means SQL Server is doing 66% less work.

LOB reads – you’ll see those when reading different data types

How does this work? A columnstore index takes data that is typically stored in columns and instead stores it in rows. In this case, we were looking for information stored in two columns: SalesOrderID and CarrierTrackingNumber but the table SalesOrderDetail has 9 additional columns. Without the columnstore index, SQL Server has to read all the details of every row in order to find the two that we specified. But with the columnstore index that data is stored in rows rather than columns and therefore the SQL Server only has to read the appropriate rows and furthermore can ignore a large percentage of the rows which ultimately give us a significant SQL Server index optimization increase by simply causing the SQL Server to do less work.

Explore execution plans

Numbers can be confusing even when we know what they represent. ApexSQL Plan does have a compare option which allows comparing data side-by-side but what I like to do is to explore the execution plans and look at a few things that break things down making it easy to understand what is good and what is bad even for a first-time user.

So, what I’d normally do is edit the Query text and execute two Select statements in one query from which one has the option to exclude the index, and the other one is what SQL Server would normally choose to fulfill the request. This way, if we hover the mouse over first Select statement a tooltip will appear showing T-SQL code. As shown below, this one forces the execution plan to ignore the columnstore index. Furthermore, it shows the total query cost relative to the batch which is 86.4% in this case. Down below we can also see that it’s scanning a clustered index, entirely or only a range:

If we move on to a regular Select statement, notice that query cost is only 13.6% which is a decrease of 84%. Looking at the execution plan, we can also verify that the columnstore index was used this time. Furthermore, if we just look at the bars representing the visual indicator of the total cost, CPU cost, and I/O cost we can see the performance boost on this query within the presence of a single index:

Columnstore indexes were introduced in SQL Server 2014 with a major limitation when adding a columnstore index on a table, in that the table would become read-only. Luckily, Microsoft has removed this limitation in SQL Server 2016 and starting from this version and newer we have the read-write functionality.

I hope this article has been informative for you and I thank you for reading.

For more information about on SQL Server index tuning, please see articles on how to optimize SQL Server query performance – part 1 and part 2

Useful links

Statistics

Indexes

SQL Server Index Design Guide

The post How to create and optimize SQL Server indexes for better performance appeared first on Solution center.

0 notes

Link

Have you ever gotten a new computer, hooked it up and said: “this computer is blazing fast, I love it”? I have. A year from then, I was like “this computer is so slow, I need a new one”.

Performance is a big deal and this was the opening line in an article that was written on How to optimize SQL Server query performance. The initial article shows not only how to design queries with the performance in mind, but also shows how to find slow performance queries and how to fix the bottlenecks of those queries. I’d highly recommend reading the above article first because this one would give a lot more meaning but also because it’s an appendix to this topic.

Before we continue further, here’s a quick recap of the covered subjects and their main goals:

Query optimization overview – In this part, we got familiar with what query plans are all about. This part explained in detail how query plans help SQL Server in finding the best possible and efficient path to the data and what happens when a query is submitted to SQL Server including steps that it goes through to find those routes. Furthermore, we also covered statistics and how they help the Query Optimizer to build the most efficient plan so that SQL Server can find the best way to fetch data. We also mentioned a few tips and tricks (guidelines) on how to be proactive in designing queries with the performance in mind to ensure that our queries are going to perform well right out the gate.

Working with query plans – Here we jumped over to a free tool called ApexSQL Plan to get familiar with query plans and understand how to “decipher” them which will ultimately help us find the bottlenecks in slow performance queries. We mentioned statistics again, but this time we’ll also show where they’re stored in SQL Server and how to view them. Furthermore, these statistics can be maintained and kept up-to-date to ensure that the Query Optimizer will create best guesses when fetching data. We also covered examples of different use case scenarios on how SQL Server is accessing data using full table scan vs full index scan vs index seek, etc.