#page level restore in sql server

Explore tagged Tumblr posts

Text

Reliable Website Maintenance Services In India | NRS Infoways

In today’s hyper‑connected marketplace, a website is far more than a digital brochure—it is the beating heart of your brand experience, your lead‑generation engine, and your most valuable sales asset. Yet many businesses still treat their sites as “launch‑and‑forget” projects, only paying attention when something breaks. At NRS Infoways, we understand that real online success demands continuous care, proactive monitoring, and seamless enhancements. That’s why we’ve built our Reliable Website Maintenance Services In India to deliver round‑the‑clock peace of mind, bulletproof performance, and measurable ROI for forward‑thinking companies like yours.

Why Website Maintenance Matters—And Why “Reliable” Makes All the Difference

Search engines reward fast, secure, and regularly updated sites with higher rankings; customers reward them with trust and loyalty. Conversely, a sluggish, outdated, or vulnerable site can cost you traffic, conversions, and brand reputation—sometimes overnight. Our Reliable Website Maintenance Services In India go beyond the basic “fix‑it‑when‑it‑breaks” model. We combine proactive health checks, performance tuning, security hardening, and content optimization into a single, cohesive program that keeps your digital storefront open, polished, and ready for growth.

What Sets NRS Infoways Apart?

1. Proactive Performance Monitoring

We leverage enterprise‑grade monitoring tools that continuously scan load times, server resources, and user journeys. By identifying bottlenecks before they escalate, we ensure smoother experiences and higher conversion rates—24/7.

2. Robust Security & Compliance

From real‑time threat detection to regular firewall updates and SSL renewals, your site stays impervious to malware, SQL injections, and DDoS attacks. We align with global standards such as GDPR and PCI‑DSS, keeping you compliant and trustworthy.

3. Seamless Content & Feature Updates

Launching a new product line? Running a seasonal promotion? Our dedicated team updates layouts, landing pages, and plugins—often within hours—to keep your messaging sharp and relevant without disrupting uptime.

4. Data‑Driven Optimization

Monthly analytics reviews highlight user behavior, bounce rates, and conversion funnels. We translate insights into actionable tasks—A/B testing CTAs, compressing heavy images, or refining navigation—all folded into our maintenance retainer.

5. Transparent Reporting & SLAs

Every client receives detailed monthly reports covering task logs, incident resolutions, and performance metrics. Our Service Level Agreements guarantee response times as low as 30 minutes for critical issues, underscoring the “Reliable” in our Reliable Website Maintenance Services In India.

Real‑World Impact: A Success Snapshot

A Delhi‑based B2B SaaS provider reached out to NRS Infoways after repeated downtime eroded user trust and slashed demo bookings by 18 %. Within the first month of onboarding, we:

Migrated their site to a high‑availability cloud cluster

Deployed a Web Application Firewall (WAF) to fend off bot attacks

Compressed multimedia assets, cutting average load time from 4.2 s to 1.3 s

Implemented weekly backup protocols with versioned restores

Result? Organic traffic climbed 27 %, demo sign‑ups rebounded 31 %, and support tickets fell by half—proving that consistent, expert care translates directly into revenue.

Flexible Plans That Scale With You

Whether you manage a lean startup site or a sprawling enterprise portal, we offer tiered packages—Basic, Professional, and Enterprise—each customizable with à‑la‑carte add‑ons like e‑commerce catalog updates, multi‑language support, or advanced SEO audits. As your business evolves, our services scale seamlessly, ensuring you never pay for overhead you don’t need or sacrifice features you do.

Partner With NRS Infoways Today

Your website is too important to leave to chance. Join the growing roster of Indian businesses that rely on NRS Infoways for Reliable Website Maintenance Services In India and experience the freedom to innovate while we handle the technical heavy lifting. Ready to protect your digital investment, delight your visitors, and outpace your competition?

Connect with our maintenance experts now and power your growth with reliability you can measure.

0 notes

Text

Jobs Portal Nulled Script 4.1

Download the Best Jobs Portal Nulled Script for Free Are you looking for a powerful, customizable, and free job board solution to launch your own employment platform? The Jobs Portal Nulled Script is your ideal solution. This fully-featured Laravel-based job board script offers premium functionality without the high cost. Whether you're building a local job site or a global hiring platform, this nulled script gives you everything you need—completely free. What is the Jobs Portal Nulled Script? The Jobs Portal Nulled Script is a premium Laravel-based job board application designed for businesses, HR agencies, and entrepreneurs who want to build a seamless job posting and recruitment website. The script comes packed with advanced features like employer and candidate dashboards, resume management, email notifications, location-based job search, and more—all without any licensing fees. Why Choose This Nulled Script? Unlike expensive premium plugins or themes, this Jobs Portal Nulled Script offers unmatched value. It provides a user-friendly interface, customizable design, and enterprise-level tools to make recruitment easier for both job seekers and employers. Plus, it's completely free to download from our site, allowing you to save money while building a professional job board. Technical Specifications Framework: Laravel 8+ Database: MySQL 5.7 or higher Language: PHP 7.4+ Responsive Design: Fully mobile-optimized API Ready: RESTful API endpoints available SEO Optimized: Built-in tools for on-page SEO Top Features and Benefits Employer & Candidate Dashboards: Tailored experiences for recruiters and job seekers. Smart Resume Management: Easily manage and filter resumes by job category and skills. Advanced Job Search: Location and keyword-based filtering for accurate results. Email Alerts: Automated job notifications for registered users. Payment Integration: Support for paid job postings with multiple gateways. Multi-language Support: Reach users across the globe effortlessly. Who Can Use This Script? The Jobs Portal Nulled Script is versatile and ideal for: HR agencies wanting a digital recruitment platform. Startups looking to monetize job listings or applications. Universities or colleges offering campus recruitment tools. Freelancers who want to provide job board services to clients. How to Install and Use Installing the Jobs Portal Nulled Script is straightforward: Download the script from our website. Upload the files to your server using FTP or a file manager. Create a MySQL database and import the provided SQL file. Edit the .env file to include your database credentials. Run the Laravel migration and seed commands to set up the tables. Visit yourdomain.com to start configuring your job portal! No technical expertise? No worries. The documentation provided makes it easy even for beginners to set up a complete job board system. FAQs – Frequently Asked Questions Is the Jobs Portal Nulled Script safe to use? Yes, we carefully scan and verify all files to ensure they are free of malware or backdoors. However, always install scripts in a secure environment. Can I customize the script? Absolutely. Since it’s built on Laravel, you have full control to customize routes, models, views, and controllers to fit your unique business model. Does the script support third-party integrations? Yes. You can integrate third-party services like payment gateways, newsletter tools, and analytics platforms with ease. Is it legal to use a nulled script? While we provide the script for educational and testing purposes, always ensure you comply with local software laws and licensing terms if you go live. Recommended Tools for WordPress Users If you're managing your site with WordPress, we recommend using UpdraftPlus Premium nulled for effortless backups and restoration. For search engine optimization, All in One SEO Pack Pro is a must-have tool to help your website rank faster and more effectively.

Take your online recruitment platform to the next level today. Download the Jobs Portal and build a modern, scalable, and highly effective job board without spending a dime!

0 notes

Text

Blog Post 1

It holds true that for most of 19th and 20th century, in the business world, there were two resources that influence most, if not all of decision and moves being made. These two resources were time and money. With enough of both of these resources, any business (with a healthy amount of hard work) could, and would succeed. In the 21st century, the business world has started to really concentrate on a third resource (previously important, but not so much as the former two). This third resource is of course Information, which is really starting to define who has power and influence; and who doesn’t; mainly based on the capacity of storage, processing speed, accessibility and reliability of the information they handle.

Information is really dictating a lot of market trends, because the main idea is that, the more a business knows, the better it can predict. And they can predict a lot, well anything (to a certain degree of course, but the accuracy is sort of scary and surprising). A strong proven practice are personalized ads on the internet; like the ads one can see on the sidebar when you are messing around Facebook and not doing homework, or whatever. The only real bottleneck with all of this, is how do you create what is called “relevant information”. Well, that is actually kind of easy, all you need is data, lots and lots of data, like massive amounts of data and an analyst (read: my dream job).

Now getting data might be relatively easy; every nowadays is outputting data in some way, shape or form (just need to know what or where to look for). The true challenge here is how to organize and store said data, to be used later to create the previously mentioned “relevant information”. Why is it challenging? Well, its because not all data is same; and this has nothing to do with its important, all data is important (until it isn’t), its more about its digital attributes and composition.

What do I mean by, not all data is same? The data produced from different unique sources is going to look very different, and that makes storage a challenge. Data could be different documents in various formats, an array of numbers, several different pictures, even a collection of keystrokes are considered value data to some individuals. So, storing all this different type of data types can be difficult, if a corporation is interested in one than one of these data types. Relational style databases did pretty well at the beginning.

But a relational style database, or SQL databases, can only go so far when tackling this problem because of the ridged way everything is structured, and by its nature the defined structure isn’t really mutable (like it is, but really its every costly time wise to do so, and you might end up just creating a new database). Non-relational style database, also called NoSQL (Not Only SQL) databases are in a way much more flexible in the way the store data.

But we aren’t talking about a few tables, second challenge of SQL databases, they aren’t really scalable, corporation handle data on a much large scale; an estimate by priceonomis is 7.5 septillions (that’s 21 zeros) gigabytes of data. Oh, and this is what they generate in a day, granted I will say that only about half of this data is usable but still, that a whole lot of data!

Before we jump more into NoSQL, lets compare for a bit the two different styles of database.

https://youtu.be/LA5gY-LH63E

https://youtu.be/mqV-zYQhavc

Getting back on topic, that being NoSQL, which offers corporation the way to actually manage all this data. I am going to focus on one particular NoSQL database, Cassandra (created in 2008 by Apache). Why Cassandra? There are others such as MongoDB and Couchbase (very popular in the industry right now) but Cassandra offers two distinct advantages (in my opinion):

1. Cassandra has its on language, CQL, or Cassandra query language; which is very close to SQL, they aren’t really close enough to be siblings more like cousins. It is very easy for someone that has SQL knowledge to pick up CQL. Because of the way data is organized in a non-structured way, there is no support in CQL for things like “JOIN”, “GROUP BY”, or “FOREIGN KEY”. A great thing is that CQL can actual actually handle object data. This is probably due to the fact that Cassandra was written in Java.

2. The asynchronous masterless replication that Cassandra employs. The basic concept (its not super complex but it ain’t a cake walk either) is through this style of replication, this database model offers a high availability or accessibility to data with no single point of failure. This is due to data clusters.

https://youtu.be/zk00Bu8s4p0

Before we tackle replication together (because its what I really want to showcase), we need to cover a few concepts, just to make sure we are all on the same page.

Database Clusters

this is an industry standard practice for corporation and business that handle data in large quantities (so basically every corporation really). The idea is that there are two or more servers or nodes running together, servicing the same data sets, in both read and write requests. Why do this? Why have two very expensive pieces of equipment ($1000 to $2500 on the enterprise level) doing the same thing? Redundancy. Meaning that all nodes have the same data, this ensures that backups, restoration and data accessibility is almost a guarantee. The multiple servers also help with load balancing and scaling, because there are more nodes, more users can access the same data across the different nodes. And because of the larger network of nodes within the database, a lot of process can be automated. There can be one node just dedicate to being a coordinator/manager node (this is not a master node in anyway), which would run specific scripts and subprograms for the entire network.

Database Replication

ok, ok, there idea is simple its just copying data from one node to another in a database cluster. And you are right, but the process behind it is what truly impressive (at least to me). By the way a database that has replication added to it, is called a distributed database management system or DDBMS. So, database replication will track any changes, additions, or deletions made to any data point, will perform the same operation on the same data point in all other locations. There are several different replication techniques and models (will be explored in future posts). The key of database replication is the set up of them, several steps must be properly followed and understood for the overall set up to work. Replication is not the same as backing up data, because the replicated data is still within the network, connected to the original data; while backing up data is usually stored offsite.

Single Point of Failure

In the business world, having the lights on and getting/keeping everything running is a great concern, because it affects the bottom line. But let’s be realistic, something is going to fail at some point (its inevitable). And one of the goals of a database (based on the CAP theorem) is availability, so having a point of failure within a database system is not something most companies are looking for. This is solved by using database clusters, and replication, since they create a failsafe through redundancy. And it does it on several levels, between the load balancing, the multiple servers, and the multiple levels of access to data.

https://youtu.be/l0IQDSdVcs4

1 note

·

View note

Text

Data Recovery : Page level restore in SQL Server

Data Recovery : Page level restore in SQL Server

In this article, we shall discuss the importance of understanding the internals of the page restoration for a database administrator. Most of the time, performing a page-level restore suffices for database availability. A good backup strategy is a key to recovery or restoration, and SQL Server provides us with an option to fix database pages at a granular level. Performing a page level restore in…

View On WordPress

#dbcc checkdb#dbcc examples#dbcc ind#dbcc page#page level restore in sql server#restore page using SSMS#restore page using T-SQL

0 notes

Text

Top Sql Server Database Choices

SQL database has become the most common database utilized for every kind of business, for customer accounting or product. It is one of the most common database servers. Presently, you could restore database to some other instance within the exact same subscription and region. In the event the target database doesn't exist, it'll be created as a member of the import operation. If you loose your database all applications which are using it's going to quit working. Basically, it's a relational database for a service hosted in the Azure cloud.

Focus on how long your query requires to execute. Your default database may be missing. In terms of BI, it's actually not a database, but an enterprise-level data warehouse depending on the preceding databases. Make certain your Managed Instance and SQL Server don't have some underlying issues that may get the performance difficulties. It will attempt to move 50GB worth of pages and only than it will try to truncate the end of the file. If not, it will not be able to let the connection go. On the other hand, it enables developers to take advantage of row-based filtering.

If there's a need of returning some data fast, even supposing it really isn't the whole result, utilize the FAST option. The use of information analysis tools is dependent upon the demands and environment of the company. Moreover partitioning strategy option may also be implemented accordingly. Access has become the most basic personal database. With the notion of data visualization, it enables the visual accessibility to huge amounts of information in easily digestible values. It is not exactly challenging to create the link between the oh-so hyped Big Data realm and the demand for Big Storage.

Take note of the name of the instance that you're attempting to connect to. Any staging EC2 instances ought to be in the identical availability zone. Also, confirm that the instance is operating, by searching for the green arrow. Managed Instance enables you to pick how many CPU cores you would like to use and how much storage you want. Managed Instance enables you to readily re-create the dropped database from the automated backups. In addition, if you don't want the instance anymore, it is possible sql server database to easily delete it without worrying about underlying hardware. If you are in need of a new fully-managed SQL Server instance, you're able to just visit the Azure portal or type few commands in the command line and you'll have instance prepared to run.

Inside my case, very frequently the tables have 240 columns! Moreover, you may think about adding some indexes like column-store indexes that may improve performance of your workload especially when you have not used them if you used older versions of SQL server. An excellent data analysis is liable for the fantastic growth the corporation. In reality, as well as data storage, additionally, it includes data reporting and data analysis.

Microsoft allows enterprises to select from several editions of SQL Server based on their requirements and price range. The computer software is a comprehensive recovery solution with its outstanding capabilities. Furthermore, you have to learn a statistical analysis tool. It's great to learn about the tools offered in Visual Studio 2005 for testing. After making your database, if you wish to run the application utilizing SQL credentials, you will want to create a login. Please select based on the edition of the application you've downloaded earlier. As a consequence, the whole application can't scale.

What Is So Fascinating About Sql Server Database?

The data is kept in a remote database in an OutSystems atmosphere. When it can offer you the data you require, certain care, caution and restraint needs to be exercised. In addition, the filtered data is stored in another distribution database. Should you need historical weather data then Fetch Climate is a significant resource.

Storage Engine MySQL supports lots of storage engines. Net, XML is going to be the simplest to parse. The import also ought to be completed in a couple of hours. Oracle Data Pump Export is an extremely strong tool, it permits you to pick and select the type of data that you would like. Manual process to fix corrupted MDF file isn't so straightforward and several times it's not able to repair due to its limitations. At this point you have a replica of your database, running on your Mac, without the demand for entire Windows VM! For instance, you may want to make a blank variant of the manufacturing database so that you are able to test Migrations.

The SQL query language is vital. The best thing of software development is thinking up cool solutions to everyday issues, sharing them along with the planet, and implementing improvements you receive from the public. Web Designing can end up being a magic wand for your internet business, if it's done in an effective way. Any web scraping project starts with a need. The developers have option to pick from several RDBMS according to certain requirements of each undertaking. NET developers have been working on that special database for a very long moment. First step is to utilize SQL Server Management Studio to create scripts from a present database.

youtube

1 note

·

View note

Text

What Is openGauss?

openGauss is a user-friendly, enterprise-level, and open-source relational database jointly built with partners. openGauss provides multi-core architecture-oriented ultimate performance, full-link service, data security, AI-based optimization, and efficient O&M capabilities. openGauss deeply integrates Huawei's years of R&D experience in the database field and continuously builds competitive features based on enterprise-level scenario requirements. For the latest information about openGauss, visit https://opengauss.org/en/.

openGauss is a database management system.

A database is a structured dataset. It can be any data, such as shopping lists, photo galleries, or a large amount of information on a company's network. To add, access, and process massive data stored in computer databases, you need a database management system (DBMS). The DBMS can manage and control the database in a unified manner to ensure the security and integrity of the database. Because computers are very good at handling large amounts of data, the DBMS plays a central role in computing as standalone utilities or as part of other applications.

An openGauss database is a relational database.

A relational database organizes data using a relational model, that is, data is stored in rows and columns. A series of rows and columns in a relational database are called tables, which form the database. A relational model can be simply understood as a two-dimensional table model, and a relational database is a data organization consisting of two-dimensional tables and their relationships.

In openGauss, SQL is a standard computer language often used to control the access to databases and manage data in databases. depending on your programming environment, you can enter SQL statements directly, embed SQL statements into code written in another language, or use specific language APIs that contain SQL syntax.

SQL is defined by the ANSI/ISO SQL standard. The SQL standard has been developed since 1986 and has multiple versions. In this document, SQL92 is the standard released in 1992, SQL99 is the standard released in 1999, and SQL2003 is the standard released in 2003. SQL2011 is the latest version of the standard. openGauss supports the SQL92, SQL99, SQL2003, and SQL2011 specifications.

openGauss provides open-source software.

Open-source means that anyone can use and modify the software. Anyone can download the openGauss software and use it at no cost. You can dig into the source code and make changes to meet your needs. The openGauss software is released under the Mulan Permissive Software License v2 (http://license.coscl.org.cn/MulanPSL2/) to define the software usage scope.

An openGauss database features high performance, high availability, high security, easy O&M, and full openness.

High performance

It provides the multi-core architecture-oriented concurrency control technology and Kunpeng hardware optimization, and achieves that the TPC-C benchmark performance reaches 1,500,000 tpmC in Kunpeng 2-socket servers.

It uses NUMA-Aware data structures as the key kernel structures to adapt to the trend of using multi-core NUMA architecture on hardware.

It provides the SQL bypass intelligent fast engine technology.

It provides the USTORE storage engine for frequent update scenarios.

High availability (HA)

It supports multiple deployment modes, such as primary/standby synchronization, primary/standby asynchronization, and cascaded standby server deployment.

It supports data page cyclic redundancy check (CRC), and automatically restores damaged data pages through the standby node.

It recovers the standby node in parallel and promotes it to primary to provide services within 10 seconds.

It provides log replication and primary selection framework based on the Paxos distributed consistency protocol.

High security

It supports security features such as fully-encrypted computing, access control, encryption authentication, database audit, and dynamic data masking to provide comprehensive end-to-end data security protection.

Easy O&M

It provides AI-based intelligent parameter tuning and index recommendation to automatically recommend AI parameters.

It provides slow SQL diagnosis and multi-dimensional self-monitoring views to help you understand system performance in real time.

It provides SQL time forecasting that supports online auto-learning.

Full openness

It adopts the Mulan Permissive Software License, allowing code to be freely modified, used, and referenced.

It fully opens database kernel capabilities.

It provides excessive partner certifications, training systems, and university courses.

0 notes

Text

Fortinet NSE 6 - FortiWeb 6.1 NSE6_FWB-6.1 Practice Test Questions

If you want to clear Fortinet NSE6_FWB-6.1 exam on the first attempt, then you should go through PassQuestion Fortinet NSE 6 - FortiWeb 6.1 NSE6_FWB-6.1 Practice Test Questions so you can easily clear your exam on the first attempt. Make sure that you are using our NSE6_FWB-6.1 questions and answers multiple times so you can avoid all the problems that you are facing. It is highly recommended for you to use NSE6_FWB-6.1 Practice Test Questions in different modes so you can strengthen your current preparation level. Moreover, it will help you assess your preparation and you will be able to pass your Fortinet NSE6_FWB-6.1 exam successfully.

Fortinet NSE 6 - FortiWeb 6.1 (NSE6_FWB-6.1)

Fortinet NSE 6 - FortiWeb 6.1 exam will prepare you for the FortiWeb 6.1 Specialist Exam. The FortiWeb Specialist exam counts toward one of the four NSE 6 specializations required to get the NSE 6 certification.You will learn how to deploy,configure, and troubleshoot Fortinet's web application firewall: FortiWeb. Networking and security professionals involved in the administration and support of FortiWeb can attend this NSE6_FWB-6.1 exam to get certified.

Exam Details

Fortinet NSE 6 - FortiWeb 6.1 Exam series: NSE6_FWB-6.1 Number of questions: 30 Exam time: 60 minutes Language: English and Japanese Product version: FortiWeb 6.1 Status: Available

Prerequisites

Knowledge of OSI layers and the HTTP protocol

Basic knowledge of HTML, JavaScript, and server-side dynamic page languages, such as PHP

Basic experience using FortiGate port forwarding

Exam Topics

Integrating Front-End SNAT and Load Balancers Machine Learning and Bot Detection Signatures and Sanitization DoS and Defacement SSL/TLS Authentication and Access Control PCI DSS Compliance Caching and Compression HTTP Routing, Rewriting, and Redirects Troubleshooting

View Online Fortinet NSE 6 - FortiWeb 6.1 NSE6_FWB-6.1 Free Questions

What key factor must be considered when setting brute force rate limiting and blocking? A.A single client contacting multiple resources B.Multiple clients sharing a single Internet connection C.Multiple clients from geographically diverse locations D.Multiple clients connecting to multiple resources Answer: D

What role does FortiWeb play in ensuring PCI DSS compliance? A.It provides the ability to securely process cash transactions. B.It provides the required SQL server protection. C.It provides the WAF required by PCI. D.It provides credit card processing capabilities. Answer: D

What must you do with your FortiWeb logs to ensure PCI DSS compliance? A.Store in an off-site location B.Erase them every two weeks C.Enable masking of sensitive data D.Compress them into a .zip file format Answer: C

Which two statements about the anti-defacement feature on FortiWeb are true? (Choose two.) A.Anti-defacement can redirect users to a backup web server, if it detects a change. B.Anti-defacement downloads a copy of your website to RAM, in order to restore a clean image, if it detects defacement. C.FortiWeb will only check to see if there are changes on the web server; it will not download the whole file each time. D.Anti-defacement does not make a backup copy of your databases. Answer: CD

When viewing the attack logs on FortiWeb, which client IP address is shown when you are using XFF header rules? A.FortiGate public IP B.FortiWeb IP C.FortiGate local IP D.Client real IP Answer: D

0 notes

Text

Install Docker On Windows 2019

Estimated reading time: 6 minutes

NOTE: Currently not compatible with Apple Silicon (ARM). This project relies on Docker which has not been ported to Apple Silicon yet. If you are running Windows, download the latest release and add the binary into your PATH. If you are using Chocolatey then run: choco install act-cli. If you are using Scoop then run: scoop install act.

Nov 07, 2019 Here’s how you can install Docker on Windows 10 64-bit: Enable Hyper-V in your system. Download Docker Desktop for Windows and open the Docker for Windows Installer file. In the Configuration dialog window, check or uncheck the boxes based on your preferences.

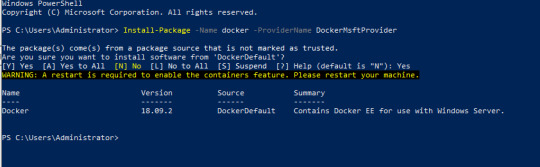

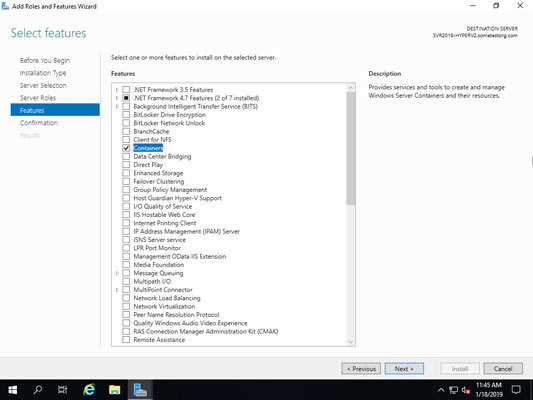

This will install the Docker-Microsoft PackageManagement Provider from the PowerShell Gallery. Sample output is as shown below: Step 2: Install Docker on Windows Server 2019. Once the Containers feature is enabled on Windows Server 2019, install the latest Docker Engine and Client by running the command below in your PowerShell session.

Docker Desktop for Windows is the Community version of Docker for Microsoft Windows.You can download Docker Desktop for Windows from Docker Hub.

By downloading Docker Desktop, you agree to the terms of the Docker Software End User License Agreement and the Docker Data Processing Agreement.

System requirements

Your Windows machine must meet the following requirements to successfully install Docker Desktop.

Hyper-V backend and Windows containers

Windows 10 64-bit: Pro, Enterprise, or Education (Build 17134 or higher).

For Windows 10 Home, see System requirements for WSL 2 backend.

Hyper-V and Containers Windows features must be enabled.

The following hardware prerequisites are required to successfully run ClientHyper-V on Windows 10:

64 bit processor with Second Level Address Translation (SLAT)

4GB system RAM

BIOS-level hardware virtualization support must be enabled in theBIOS settings. For more information, seeVirtualization.

WSL 2 backend

Windows 10 64-bit: Home, Pro, Enterprise, or Education, version 1903 (Build 18362 or higher).

Enable the WSL 2 feature on Windows. For detailed instructions, refer to the Microsoft documentation.

The following hardware prerequisites are required to successfully runWSL 2 on Windows 10:

64-bit processor with Second Level Address Translation (SLAT)

4GB system RAM

BIOS-level hardware virtualization support must be enabled in theBIOS settings. For more information, seeVirtualization.

Download and install the Linux kernel update package.

Note

Docker supports Docker Desktop on Windows for those versions of Windows 10 that are still within Microsoft’s servicing timeline.

What’s included in the installer

The Docker Desktop installation includes Docker Engine,Docker CLI client, Docker Compose,Notary,Kubernetes,and Credential Helper.

Containers and images created with Docker Desktop are shared between alluser accounts on machines where it is installed. This is because all Windowsaccounts use the same VM to build and run containers. Note that it is not possible to share containers and images between user accounts when using the Docker Desktop WSL 2 backend.

Nested virtualization scenarios, such as running Docker Desktop on aVMWare or Parallels instance might work, but there are no guarantees. Formore information, see Running Docker Desktop in nested virtualization scenarios.

About Windows containers

Looking for information on using Windows containers?

Switch between Windows and Linux containersdescribes how you can toggle between Linux and Windows containers in Docker Desktop and points you to the tutorial mentioned above.

Getting Started with Windows Containers (Lab)provides a tutorial on how to set up and run Windows containers on Windows 10, Windows Server 2016 and Windows Server 2019. It shows you how to use a MusicStore applicationwith Windows containers.

Docker Container Platform for Windows articles and blogposts on the Docker website.

Install Docker Desktop on Windows

Double-click Docker Desktop Installer.exe to run the installer.

If you haven’t already downloaded the installer (Docker Desktop Installer.exe), you can get it from Docker Hub. It typically downloads to your Downloads folder, or you can run it from the recent downloads bar at the bottom of your web browser.

When prompted, ensure the Enable Hyper-V Windows Features or the Install required Windows components for WSL 2 option is selected on the Configuration page.

Follow the instructions on the installation wizard to authorize the installer and proceed with the install.

When the installation is successful, click Close to complete the installation process.

If your admin account is different to your user account, you must add the user to the docker-users group. Run Computer Management as an administrator and navigate to Local Users and Groups > Groups > docker-users. Right-click to add the user to the group.Log out and log back in for the changes to take effect.

Start Docker Desktop

Docker Desktop does not start automatically after installation. To start Docker Desktop, search for Docker, and select Docker Desktop in the search results.

When the whale icon in the status bar stays steady, Docker Desktop is up-and-running, and is accessible from any terminal window.

If the whale icon is hidden in the Notifications area, click the up arrow on thetaskbar to show it. To learn more, see Docker Settings.

When the initialization is complete, Docker Desktop launches the onboarding tutorial. The tutorial includes a simple exercise to build an example Docker image, run it as a container, push and save the image to Docker Hub.

Congratulations! You are now successfully running Docker Desktop on Windows.

If you would like to rerun the tutorial, go to the Docker Desktop menu and select Learn.

Automatic updates

Starting with Docker Desktop 3.0.0, updates to Docker Desktop will be available automatically as delta updates from the previous version.

When an update is available, Docker Desktop automatically downloads it to your machine and displays an icon to indicate the availability of a newer version. All you need to do now is to click Update and restart from the Docker menu. This installs the latest update and restarts Docker Desktop for the changes to take effect.

Uninstall Docker Desktop

To uninstall Docker Desktop from your Windows machine:

From the Windows Start menu, select Settings > Apps > Apps & features.

Select Docker Desktop from the Apps & features list and then select Uninstall.

Click Uninstall to confirm your selection.

Important

Uninstalling Docker Desktop destroys Docker containers, images, volumes, andother Docker related data local to the machine, and removes the files generatedby the application. Refer to the back up and restore datasection to learn how to preserve important data before uninstalling.

Where to go next

Getting started introduces Docker Desktop for Windows.

Get started with Docker is a tutorial that teaches you how todeploy a multi-service stack.

Troubleshooting describes common problems, workarounds, andhow to get support.

FAQs provide answers to frequently asked questions.

Release notes lists component updates, new features, and improvements associated with Docker Desktop releases.

Back up and restore data provides instructions on backing up and restoring data related to Docker.

windows, install, download, run, docker, local

Microsoft SQL Server is a database system that comprises many components, including the Database Engine, Analysis Services, Reporting Services, SQL Server Graph Databases, SQL Server Machine Learning Services, and several other components.

SQL Server 2019 has a lot of new features:

Intelligence across all your data with Big Data Clusters

Choice of language and platform

Industry-leading performance

Most secured data platform

Unparalleled high availability

End-to-end mobile BI

SQL Server on Azure

Download SQL Server 2019

Step 1 : Go to https://www.microsoft.com/en-us/evalcenter/evaluate-sql-server-2019.

Install Docker Engine On Windows 2019

Step 2 :To download the installer you need to fill a short form and provide your contact information.

Run the installer

Step 3 :After the download completes, run the downloaded file. Select Basic installation type.

Step 4 :Select ACCEPT to accept the license terms.

Step 5 :Accept the install location, and click INSTALL.

Step 6 :When the download completes, installation will automatically begin.

Step 7 :After installation completes, select CLOSE.

Step 8 :After this has completed, you will have SQL Server 2019 Installation Center installed.

Install SQL Server Management Studio

The steps for installing SQL Server Management Studio are as follows:

Install Docker On Windows Server 2019 Without Internet

Step 9 :Open the SQL Server 2019 Installation Center application

Step 10 :Select installation on the left pane, then install SQL Server Management tools.

Step 11 :Select Download SQL Server Management Studio (SSMS).

Step 12 :After the download completes, click RUN

Step 13 :Then INSTALL

Install Docker On Windows Server 2019 Offline

Step 14 :When installation completes, click CLOSE.

Step 15 :After this has completed, you will have several new software apps installed, including SQL Server Management Studio.

Use SQL Server Management Studio

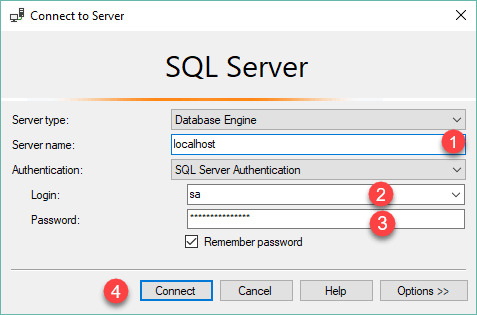

Step 16 :When you open the SQL Server Management Studio application, you’ll first see a Connect to Server window. This window allows you to establish a connection with the SQL Server instance that you already installed. The Server Name will show instance you installed, and the Authentication will show Windows Authentication. The Server Type is Database Engine.

Install Docker On Windows 2019 Iso

Step 17 :Click the CONNECT button.

0 notes

Text

Modify an Amazon RDS for SQL Server instance from Standard Edition to Enterprise Edition

Microsoft SQL Server is available in various editions, and each edition brings unique features, performance, and pricing options. The edition that you install also depends on your specific requirements. Many of our customers want to change from the Standard Edition of Amazon RDS for SQL Server to Enterprise Edition to utilize its higher memory and high-availability features. To do so, you need to upgrade your existing RDS for SQL Server instance from Standard Edition to Enterprise Edition. This post walks you through that process. Prerequisites Before you get started, make sure you have the following prerequisites: Amazon RDS for SQL Server Access to the AWS Management Console SQL Server Management Studio Walkthrough overview Amazon RDS supports DB instances running several versions and editions of SQL Server. For the full list, see Microsoft SQL Server versions on Amazon RDS. For this post, we discuss the following editions: Standard – This edition enables database management with minimal IT resources, with limited feature offerings, a lack of some high-availability features, and few online DDL operations compared to Enterprise Edition. Additionally, Standard Edition has a limitation of 24 cores and 128 GB of memory. Enterprise: This is the most complete edition to use with your mission-critical workloads. With Enterprise Edition, you have all the features with no limitation of CPU and memory. The upgrade process includes the following high-level steps: Take a snapshot of the existing RDS for SQL Server Standard Edition instance. Restore the snapshot as an RDS for SQL Server Enterprise Edition instance. Verify the RDS for SQL Server Enterprise Edition instance. Upgrade your RDS for SQL Server instance on the console We first walk you through modifying your RDS for SQL Server edition via the console. We take a snapshot of the existing RDS for SQL Server instance and then restore it as a different edition of SQL Server. You can check your version of RDS for SQL Server on the SQL Server Management Studio. On the Amazon RDS console, choose Databases. Select your database and on the Actions menu, choose Take snapshot. For Snapshot name, enter a name. Choose Take snapshot. On the Snapshots page, verify that snapshot is created successfully and check that the status is Available. Select the snapshot and on the Actions menu, choose Restore snapshot. Under DB specifications, choose the new edition of SQL Server (for this post, SQL Server Enterprise Edition). For DB instance identifier, enter a name for your new instance. Select your instance class. Choose Restore DB instance. Wait for the database to be restored. After the database is restored, verify the version of SQL Server. The following screenshot shows the new RDS for SQL Server database created from the snapshot, with all databases, objects, users, permissions, passwords, and other RDS for SQL Server parameters, options, and settings restored with the snapshot. Upgrade your RDS for SQL Server instance via the AWS CLI You can also use the AWS Command Line Interface (AWS CLI) to modify the RDS for SQL Server instance: Create a DB snapshot using create-db-snapshot CLI: aws rds create-db-snapshot ^ --db-instance-identifier mydbinstance ^ --db-snapshot-identifier mydbsnapshot Restore the database from the snapshot using restore-db-instance-from-db-snapshot CLI: aws rds restore-db-instance-from-db-snapshot ^ --db-instance-identifier mynewdbinstance ^ --db-snapshot-identifier mydbsnapshot^ --engine sqlserver-ee Clean up To avoid incurring future costs, delete your RDS for SQL Server Standard Edition resources, because they’re no longer required. On the Amazon RDS console, choose Databases. Select your old database and on the Actions menu, choose Delete. Conclusion In this post, I showed how to modify Amazon RDS for SQL Server from Standard Edition to Enterprise Edition using the snapshot restore method. Upgrading to Enterprise Edition allows you to take advantage of higher memory and the edition’s high-availability features. To learn more about most effective way of working with Amazon RDS for SQL Server, see Best practices for working with SQL Server. About the author Yogi Barot is Microsoft Specialist Senior Solution Architect at AWS, she has 22 years of experience working with different Microsoft technologies, her specialty is in SQL Server and different database technologies. Yogi has in depth AWS knowledge and expertise in running Microsoft workload on AWS. https://aws.amazon.com/blogs/database/modify-an-amazon-rds-for-sql-server-instance-from-standard-edition-to-enterprise-edition/

0 notes

Text

cPanel - Website and Hosting Management Tool

Build and Manage your WordPress Website. One Low Predictable Price!

cPanel Hosting Services will empower a Website owner with a wide variety of options at a higher level of control And automation tools designed to simplify the process of hosting a web site

With the industries best graphical interface. cPanel is an excellent option for you to consider.

cPanel's time saving automation's will make managing your Website easier than ever.

With over 80 different features which includes creating Email Accounts, Backups, File Manager, Adding Domains, MX Records, Softaculous and and the Site Publisher interface to quickly create simple websites from a variety of templates.

cPanel is the leading web hosting control panel available today

Currently in the hosting market cPanel is considered as the leading website management tool

It’s simple graphical web based interface empowers web developers, administrators and resellers to effectively develop and manage their websites

Not just for developers, even a non-professional and less technical people can easily create and manage websites and their hosting account with cPanel, you will have access to stats, disk usage and space, bandwidth usage, add, or remove email accounts, MX Records, FTP accounts.

Install different The File Manager, PHP my Sql scripts, get access to your online Web Mail

And more advanced functions, such as MIME types, cron jobs, OpenPGP keys, Apache handlers, addon domains and sub domains, password protected directories

cPanel Hosting Services is an easy to use interface for web maintenance. Even a user completely new to web hosting can easily manage their own website.

Advantages of cPanel hosting services:

Easy to use

Supports all languages

Adapts the screen size automatically, so we can use on any device

Has built-in file manager to manage the files easily

Integrated with phpMy Admin tool to manage the databases easily

Has Integrated email wizard which helps to send or receive the mails using a mail client

cPanel handles automatic upgrades of Apache, MySQL, PHP and other web applications

cPanel Features:

Looking for cPanel Hosting Services with over 80 different features?

Files

File Manager

Use the File Manager interface to manage your files. This feature allows you to upload, create, remove, and edit files without the need for FTP or other third-party applications.

Images

Use the Images interface to manage your images. You can view and resize images, or use this feature to convert image file types.

Directory Privacy

Use the Directory Privacy interface to limit access to certain resources on your website. Enable this feature to password-protect specific directories that you do not want to allow visitors to access.

Disk Usage

Use the Disk Usage interface to scan your disk and view a graphical overview of your account's available space. This feature can help you to manage your disk space usage.

Web Disk

Use the Web Disk feature to access to your website's files as if they were a local drive on your computer.

FTP Accounts

Use the FTP Accounts interface to manage File Transfer Protocol (FTP) accounts.

FTP Connections

Use the FTP Connections interface to view current connections to your site via FTP. Terminate FTP connections to prevent unauthorized or unwanted file access.

Anonymous FTP

Use the Anonymous FTP interface to allow users to connect to your FTP directory without a password. Use of this feature may cause security risks.

Backup

Use the Backup interface to back up your website. This feature allows you to download a zipped copy of your cPanel account's contents (home directory, databases, email forwarders, and email filters) to your computer.

File Restoration

Use the File Restoration interface to restore items from backed-up files.

Backup Wizard

Use the Backup Wizard interface as a step-by-step guide to assist you in backup creation.

Databases

phpMyAdmin

phpMyAdmin is a third-party tool that you can use to manipulate MySQL databases. For more information about how to use phpMyAdmin, visit the phpMyAdmin website.

MySQL Databases

Use the MySQL Databases interface to manage large amounts of information on your websites. Many web-based applications (for example, bulletin boards, Content Management Systems, and online retail shops) require database access.

MySQL Database Wizard

Use the MySQL Database Wizard interface to manage large amounts of information on your websites with a step-by-step guide. Many web-based applications (for example, bulletin boards, content management systems, and online retail shops) require database access.

Remote MySQL

Use the Remote MySQL interface to configure databases that users can access remotely. Use this feature if you want to allow applications (for example, bulletin boards, shopping carts, or guestbooks) on other servers to access your databases.

PostgreSQL Databases

Use the PostgreSQL Databases interface to manage large amounts of information on your websites. Many web-based applications (for example, bulletin boards, content management systems, and online retail shops) require database access.

PostgreSQL Database Wizard

To simultaneously create a database and the account that will access it, use the PostgreSQL Database Wizard interface. Many web-based applications (for example, bulletin boards, content management systems, and online retail shops) require database access.

phpPgAdmin

phpPgAdmin is a third-party tool that you can use to manipulate PostgreSQL databases. For more information about how to use phpPgAdmin, visit the phpPgAdmin website.

Domains

Some of our Free Domain Name are mentioned and briefly mentioned for your kind regards.

Site Publisher

Use the Site Publisher interface to quickly create simple websites from a variety of templates. You can use this feature, for example, to ensure that visitors can find your contact information while you design a more elaborate website.

Addon Domains

Use the Addon Domains interface to add more domains to your account. Each addon domain possesses its own files and will appear as a separate website to your visitors.

Subdomains

Subdomains are subsections of your website that can exist as a new website without a new domain name. Use this interface to create memorable URLs for different content areas of your site. For example, you can create a subdomain for your blog that visitors can access through blog.example.com.

Aliases

Use the Aliases interface to create domain aliases. Domain Aliases allow you to point additional domain names to your account's existing domains. This allows users to reach your website if they enter the pointed domain URL in their browsers.

Redirects

Use the Redirects interface to make a specific webpage redirect to another webpage and display its contents. This allows users to access a page with a long URL through a page with a shorter, more memorable URL.

Zone Editor

Use the Zone Editor interface to add, edit, and remove A, AAAA, CNAME, SRV, MX, and TXT DNS records. It combines the functions of the Simple Zone Editor and Advanced Zone Editor interfaces.

For more information please visit our site https://rshweb.com/blog-what-is-cpanel or https://rshweb.com/

0 notes

Text

300+ TOP Oracle ADF Interview Questions and Answers

Oracle ADF Interview Questions for freshers experienced :-

1. What is Oracle ADF? Oracle Application Development Framework, usually called Oracle ADF, provides a commercial Java framework for building enterprise applications. It provides visual and declarative approaches to Java EE development. It supports rapid application development based on ready-to-use design patterns, metadata-driven, and visual tools. 2. What is the ADF Features? Making Java EE Development Simpler. Oracle ADF implements the Model-View-Controller (MVC) design pattern and offers an integrated a solution that covers all the layers of this architecture with a solution to such areas as: Object/Relational mapping, Data persistence, Reusable controller layer, Rich Web user interface framework, Data binding to UI, Security and customization. Its supports Rapid Application Development. Declarative approve (XML Driven) Reduce Maintenance Cost and time SOA Enabled 3. What is ADF BC (Business Components)? Describe them. All of these features can be summarized by saying that using ADF Business Components for your J2EE business service layer makes your life a lot easier. The key ADF Business Components that cooperate to provide the business Service implementation is: Entity Object: An entity object represents a row in a database table and simplifies modifying its data by handling all DML operations for you. It can encapsulate business logic for the row to ensure your business rules are consistently enforced. You associate an entity object with others to reflect relationships in the underlying database schema to create a layer of business domain objects to reuse in multiple applications. Application Module: An application module is a transactional component that UI clients use to work with application data. It defines an updatable data model and top-level procedures and functions (called service methods) related to a logical unit of work related to an end-user task. View Object: A view object represents a SQL query and simplifies working with its results. You use the full power of the familiar SQL language to join, project, filter, sort, and aggregate data into exactly the “shape” required by the end-user task at hand. This includes the ability to link a view object with others to create master/detail hierarchies of any complexity. When end users modify data in the user interface, your view objects collaborate with entity objects to consistently validate and save the changes. 4. How does ADF fall in MVC architecture? Oracle ADF Architecture is based on the Model-View-Controller (MVC) design pattern.MVC consists of three layers which are a model layer, view layer, controller layer. Oracle ADF implements MVC and further separates the model layer from the business services to enable service-oriented development of applications. The Oracle ADF architecture is based on four layers: The Business Services Layer This layer provides access to data from various sources and handles business logic. ADF Component comes, in this section are ViewObject, EntityObject, ViewLink, Association, etc The Model layer This layer provides an abstraction layer on top of the Business Services layer, enabling the View and Controller layers to work with different implementations of Business Services in a consistent way. ADF Component comes in this section are PageDefn, DataBindings,DataControls(AppModuleDataControl, WebServiceDataControl) The Controller layer This layer provides a mechanism to control the flow of the Web application. ADF Component comes in this section are TaskFlows(Bounded and unbounded, faces-config.xml, adfc-config.xml) The View layer This layer provides the user interface of the application. ADF components comes in this section are jsff, jspx page. 5. Oracle ADF Life Cycle Step by step It has the following phases : a) Restore view : When we hit the URL in the browser it will build the component tree corresponding to the tags given in the JSF pages. for the first time, it will build the tree and saves in the server memory. For the second time onwards, it will try to restore the existing component tree from the server memory. Otherwise, if there are any changes in the page components it will rebuild the tree again. b) Initialize Context : In this phase the databinding.cpx file will be read and the bindingcontext object will be created based on that. Binding context is nothing but a mapping of pages and page definitions. From the binding context, it will identify the page definition corresponding to the page. it will read the page definition file and creates the binding container objects. c) Prepare model : Once the binding Container is ready it will try to prepare the model objects bypassing any parameter values and if there are any task flow parameters are all evaluated in the phase d) Apply Request Values : If we enter any values in the browser against the form fields, those values will be applied against the component tree objects. e) Process validation : Once the values are applied to fields they will be validated if there any client-side validation is specified. like a mandatory min-max length. f) Update model values : Once UI validation is passed values will be applied to model objects like VO and EO g) Validate Model Updates : If there is any validation specified at entity level that will be executed. h) Invoke action : Event-related actions like the action method and action listener code will be executed i) Metadata commit : If there are any MDS related changes will be saved. j) Render response : Based on the action it will either navigate to the next page and display the response in the browser are continuing on the same page. 6. What are the various components in ADF? Oracle ADF has following components ADF Business Components: VO, EO & AM ADF Model : DataBinding (.cpx, .xml) & DataControls(.dcx) ADF View: JSP, JSF, ADF Faces etc. ADF Controller: Task flows (adf-config.xml), faces-config.xml 7. How to enable security to ADF Application? Go to the menu Application -> Secure -> Configure ADF Security and go through the wizard of creating login pages. Go to the menu Application -> Secure -> Application Roles and create an application role for this application. Create a test user and assign an application role to it. Grant all required application resources to this role. 8. How can you manage the transaction in ADF? In ADF transaction can we manage at ApplicationModule as well as task flow level. Task flow supports different mode of transaction like: No Controller transaction Always begin new transaction Always use existing transaction Use Existing Transaction if possible 9. What is the purpose of Chanage Indicator? While committing data to the table, database will check those columns to check weather we are committing on the latest data or not. 10. What is PPR in ADF? PPR means Partial Page Rendering. It means that in ADF we can refresh the portion of the page. We don't need to submit the whole page for that.