#e-commerce web scraping tool

Benefits of Digital Shelf Analytics for Online Retailers

Boost your Ecommerce strategy with Digital Shelf Analytics. Optimize product visibility, analyze competitors, and stay competitive in the online Retail Industry.

#ecommerce data scraping service#data scraping#e-commerce web scraping tool#ecommerce web scraping tool#DigitalShelfAnalyticsServices

Why You Should Do Web Scraping with Python

Web scraping is a valuable skill for Python developers, offering numerous benefits and applications. Here's why you should consider learning and using web scraping with Python:

1. Automate Data Collection

Web scraping allows you to automate the tedious task of manually collecting data from websites. This can save significant time and effort when dealing with large amounts of data.

2. Gain Access to Real-World Data

Most real-world data exists on websites, often in formats that are not readily available for analysis (e.g., displayed in tables or charts). Web scraping helps extract this data for use in projects like:

Data analysis

Machine learning models

Business intelligence

3. Competitive Edge in Business

Businesses often need to gather insights about:

Competitor pricing

Market trends

Customer reviews

Web scraping can help automate these tasks, providing timely and actionable insights.

4. Versatility and Scalability

Python’s ecosystem offers a range of tools and libraries that make web scraping highly adaptable:

BeautifulSoup: For simple HTML parsing.

Scrapy: For building scalable scraping solutions.

Selenium: For handling dynamic, JavaScript-rendered content.

This versatility allows you to scrape a wide variety of websites, from static pages to complex web applications.
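To make the idea of simple HTML parsing concrete, here is a minimal sketch. It uses Python's standard-library `html.parser` as a stand-in for BeautifulSoup so that it runs without extra installs; the HTML fragment, the `price` class name, and the `extract_prices` helper are all made up for illustration.

```python
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Collects the text of every element whose class attribute is 'price'."""

    def __init__(self):
        super().__init__()
        self._in_price = False
        self.prices = []

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) pairs
        if dict(attrs).get("class") == "price":
            self._in_price = True

    def handle_endtag(self, tag):
        # Good enough for flat markup; nested tags would need a depth counter
        self._in_price = False

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(data.strip())


# Hypothetical page fragment, not taken from any real site
SAMPLE_HTML = """
<ul>
  <li><span class="name">Widget</span> <span class="price">$19.99</span></li>
  <li><span class="name">Gadget</span> <span class="price">$5.00</span></li>
</ul>
"""


def extract_prices(html):
    """Return the price strings found in the given HTML text."""
    parser = PriceParser()
    parser.feed(html)
    return parser.prices
```

Calling `extract_prices(SAMPLE_HTML)` returns the two price strings in page order. For real pages with nested or messy markup, a dedicated parser such as BeautifulSoup is usually the more comfortable choice.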

5. Academic and Research Applications

Researchers can use web scraping to gather datasets from online sources, such as:

Social media platforms

News websites

Scientific publications

This facilitates research in areas like sentiment analysis, trend tracking, and bibliometric studies.

6. Enhance Your Python Skills

Learning web scraping deepens your understanding of Python and related concepts:

HTML and web structures

Data cleaning and processing

API integration

Error handling and debugging

These skills are transferable to other domains, such as data engineering and backend development.

7. Open Opportunities in Data Science

Many data science and machine learning projects require datasets that are not readily available in public repositories. Web scraping empowers you to create custom datasets tailored to specific problems.

8. Real-World Problem Solving

Web scraping enables you to solve real-world problems, such as:

Aggregating product prices for an e-commerce platform.

Monitoring stock market data in real time.

Collecting job postings to analyze industry demand.

9. Low Barrier to Entry

Python's libraries make web scraping relatively easy to learn. Even beginners can quickly build effective scrapers, making it an excellent entry point into programming or data science.

10. Cost-Effective Data Gathering

Instead of purchasing expensive data services, web scraping allows you to gather the exact data you need at little to no cost, apart from the time and computational resources.

11. Creative Use Cases

Web scraping supports creative projects like:

Building a news aggregator.

Monitoring trends on social media.

Creating a chatbot with up-to-date information.

Caution

While web scraping offers many benefits, it’s essential to use it ethically and responsibly:

Respect websites' terms of service and robots.txt.

Avoid overloading servers with excessive requests.

Ensure compliance with data privacy laws like GDPR or CCPA.
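As a rough sketch of the first two points, the standard-library `urllib.robotparser` module can check which URLs a crawler may fetch and read any crawl delay a site requests. The robots.txt text, the `example-bot` user agent, the URLs, and the one-second fallback delay below are assumptions chosen for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would download the site's own file
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 2
"""


def build_policy(robots_text):
    """Parse robots.txt text into a RobotFileParser."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


def polite_fetch_plan(parser, urls, user_agent="example-bot"):
    """Return the URLs the user agent may fetch and the delay (seconds) between requests."""
    # Fall back to a 1-second pause when the site declares no Crawl-delay (an assumption)
    delay = parser.crawl_delay(user_agent) or 1
    allowed = [url for url in urls if parser.can_fetch(user_agent, url)]
    return allowed, delay
```

A scraper would then loop over the allowed URLs and call `time.sleep(delay)` between requests. This covers robots.txt and request pacing only; terms of service and privacy law still need a human review.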


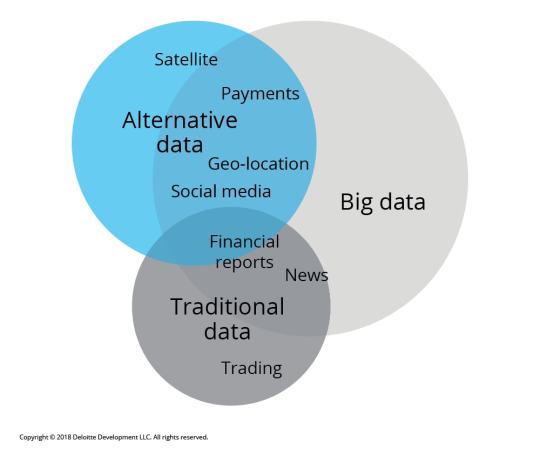

📊 Unlocking Trading Potential: The Power of Alternative Data 📊

In the fast-paced world of trading, traditional data sources—like financial statements and market reports—are no longer enough. Enter alternative data: a game-changing resource that can provide unique insights and an edge in the market. 🌐

What is Alternative Data?

Alternative data refers to non-traditional data sources that can inform trading decisions. These include:

Social Media Sentiment: Analyzing trends and sentiments on platforms like Twitter and Reddit can offer insights into public perception of stocks or market movements. 📈

Satellite Imagery: Observing traffic patterns in retail store parking lots can indicate sales performance before official reports are released. 🛰️

Web Scraping: Gathering data from e-commerce websites to track product availability and pricing trends can highlight shifts in consumer behavior. 🛒

Sensor Data: Utilizing IoT devices to track activity in real-time can give traders insights into manufacturing output and supply chain efficiency. 📡

How GPT Enhances Data Analysis

With tools like GPT, traders can sift through vast amounts of alternative data efficiently. Here's how:

Natural Language Processing (NLP): GPT can analyze news articles, earnings calls, and social media posts to extract key insights and sentiment analysis. This allows traders to react swiftly to market changes.

Predictive Analytics: By training GPT on historical data and alternative data sources, traders can build models to forecast price movements and market trends. 📊

Automated Reporting: GPT can generate concise reports summarizing alternative data findings, saving traders time and enabling faster decision-making.

Why It Matters

Incorporating alternative data into trading strategies can lead to more informed decisions, improved risk management, and ultimately, better returns. As the market evolves, staying ahead of the curve with innovative data strategies is essential.

Join the Conversation! What alternative data sources have you found most valuable in your trading strategy? Share your thoughts in the comments! 💬

#Trading #AlternativeData #GPT #Investing #Finance #DataAnalytics #MarketInsights

Amazon Product Review Data Scraping | Scrape Amazon Product Review Data

In the vast ocean of e-commerce, Amazon stands as an undisputed titan, housing millions of products and catering to the needs of countless consumers worldwide. Amidst this plethora of offerings, product reviews serve as guiding stars, illuminating the path for prospective buyers. Harnessing the insights embedded within these reviews can provide businesses with a competitive edge, offering invaluable market intelligence and consumer sentiment analysis.

In the realm of data acquisition, web scraping emerges as a potent tool, empowering businesses to extract structured data from the labyrinthine expanse of the internet. When it comes to Amazon product review data scraping, this technique becomes particularly indispensable, enabling businesses to glean actionable insights from the vast repository of customer feedback.

Understanding Amazon Product Review Data Scraping

Amazon product review data scraping involves the automated extraction of reviews, ratings, and associated metadata from Amazon product pages. This process typically entails utilizing web scraping tools or custom scripts to navigate through product listings, access review sections, and extract relevant information systematically.

The Components of Amazon Product Review Data:

Review Text: The core content of the review, containing valuable insights, opinions, and feedback from customers regarding their experience with the product.

Rating: The numerical or star-based rating provided by the reviewer, offering a quick glimpse into the overall satisfaction level associated with the product.

Reviewer Information: Details such as the reviewer's username, profile information, and sometimes demographic data, which can be leveraged for segmentation and profiling purposes.

Review Date: The timestamp indicating when the review was posted, aiding in trend analysis and temporal assessment of product performance.
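One way to picture these components is as a small record type. The sketch below is only illustrative: the field names, the 1-to-5 star range, and the `average_rating` helper are assumptions, not a schema taken from Amazon.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Review:
    """One scraped review; field names are illustrative, not an official schema."""
    text: str       # review text
    rating: int     # star rating, assumed to be 1-5
    reviewer: str   # reviewer's public username
    posted: date    # review date

    def __post_init__(self):
        # Reject ratings outside the assumed star range early
        if not 1 <= self.rating <= 5:
            raise ValueError(f"rating out of range: {self.rating}")


def average_rating(reviews):
    """Mean star rating across a non-empty list of reviews."""
    return sum(r.rating for r in reviews) / len(reviews)
```

Keeping the date as a real `date` value rather than a string makes later trend analysis by week or month much easier.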

The Benefits of Amazon Product Review Data Scraping

1. Market Research and Competitive Analysis:

By systematically scraping Amazon product reviews, businesses can gain profound insights into market trends, consumer preferences, and competitor performance. Analyzing the sentiment expressed in reviews can unveil strengths, weaknesses, opportunities, and threats within the market landscape, guiding strategic decision-making processes.

2. Product Enhancement and Innovation:

Customer feedback serves as a treasure trove of suggestions and improvement opportunities. By aggregating and analyzing product reviews at scale, businesses can identify recurring themes, pain points, and feature requests, thus informing product enhancement strategies and fostering innovation.

3. Reputation Management:

Proactively monitoring and addressing customer feedback on Amazon can be instrumental in maintaining a positive brand image. Through sentiment analysis and sentiment-based alerts derived from scraped reviews, businesses can swiftly identify and mitigate potential reputation risks, thereby safeguarding brand equity.

4. Pricing and Promotion Strategies:

Analyzing Amazon product reviews can provide valuable insights into perceived product value, price sensitivity, and the effectiveness of promotional campaigns. By correlating review sentiments with pricing fluctuations and promotional activities, businesses can refine their pricing strategies and promotional tactics for optimal market positioning.

Ethical Considerations and Best Practices

While Amazon product review data scraping offers immense potential, it's crucial to approach it ethically and responsibly. Adhering to Amazon's terms of service and respecting user privacy are paramount. Businesses should also exercise caution to ensure compliance with relevant data protection regulations, such as the GDPR.

Moreover, the use of scraped data should be guided by principles of transparency and accountability. Clearly communicating data collection practices and obtaining consent whenever necessary fosters trust and credibility.

Conclusion

Amazon product review data scraping unlocks a wealth of opportunities for businesses seeking to gain a competitive edge in the dynamic e-commerce landscape. By harnessing the power of automated data extraction and analysis, businesses can unearth actionable insights, drive informed decision-making, and cultivate stronger relationships with their customers. However, it's imperative to approach data scraping with integrity, prioritizing ethical considerations and compliance with regulatory frameworks. Embraced judiciously, Amazon product review data scraping can be a catalyst for innovation, growth, and sustainable business success in the digital age.

25 Python Projects to Supercharge Your Job Search in 2024

Introduction: In the competitive world of technology, a strong portfolio of practical projects can make all the difference in landing your dream job. As a Python enthusiast, building a diverse range of projects not only showcases your skills but also demonstrates your ability to tackle real-world challenges. In this blog post, we'll explore 25 Python projects that can help you stand out and secure that coveted position in 2024.

1. Personal Portfolio Website

Create a dynamic portfolio website that highlights your skills, projects, and resume. Showcase your creativity and design skills to make a lasting impression.

2. Blog with User Authentication

Build a fully functional blog with features like user authentication and comments. This project demonstrates your understanding of web development and security.

3. E-Commerce Site

Develop a simple online store with product listings, shopping cart functionality, and a secure checkout process. Showcase your skills in building robust web applications.

4. Predictive Modeling

Create a predictive model for a relevant field, such as stock prices, weather forecasts, or sales predictions. Showcase your data science and machine learning prowess.

5. Natural Language Processing (NLP)

Build a sentiment analysis tool or a text summarizer using NLP techniques. Highlight your skills in processing and understanding human language.

6. Image Recognition

Develop an image recognition system capable of classifying objects. Demonstrate your proficiency in computer vision and deep learning.

7. Automation Scripts

Write scripts to automate repetitive tasks, such as file organization, data cleaning, or downloading files from the internet. Showcase your ability to improve efficiency through automation.

8. Web Scraping

Create a web scraper to extract data from websites. This project highlights your skills in data extraction and manipulation.

9. Pygame-based Game

Develop a simple game using Pygame or any other Python game library. Showcase your creativity and game development skills.

10. Text-based Adventure Game

Build a text-based adventure game or a quiz application. This project demonstrates your ability to create engaging user experiences.

11. RESTful API

Create a RESTful API for a service or application using Flask or Django. Highlight your skills in API development and integration.

12. Integration with External APIs

Develop a project that interacts with external APIs, such as social media platforms or weather services. Showcase your ability to integrate diverse systems.

13. Home Automation System

Build a home automation system using IoT concepts. Demonstrate your understanding of connecting devices and creating smart environments.

14. Weather Station

Create a weather station that collects and displays data from various sensors. Showcase your skills in data acquisition and analysis.

15. Distributed Chat Application

Build a distributed chat application using a messaging protocol like MQTT. Highlight your skills in distributed systems.

16. Blockchain or Cryptocurrency Tracker

Develop a simple blockchain or a cryptocurrency tracker. Showcase your understanding of blockchain technology.

17. Open Source Contributions

Contribute to open source projects on platforms like GitHub. Demonstrate your collaboration and teamwork skills.

18. Network or Vulnerability Scanner

Build a network or vulnerability scanner to showcase your skills in cybersecurity.

19. Decentralized Application (DApp)

Create a decentralized application using a blockchain platform like Ethereum. Showcase your skills in developing applications on decentralized networks.

20. Machine Learning Model Deployment

Deploy a machine learning model as a web service using frameworks like Flask or FastAPI. Demonstrate your skills in model deployment and integration.

21. Financial Calculator

Build a financial calculator that incorporates relevant mathematical and financial concepts. Showcase your ability to create practical tools.

22. Command-Line Tools

Develop command-line tools for tasks like file manipulation, data processing, or system monitoring. Highlight your skills in creating efficient and user-friendly command-line applications.

23. IoT-Based Health Monitoring System

Create an IoT-based health monitoring system that collects and analyzes health-related data. Showcase your ability to work on projects with social impact.

24. Facial Recognition System

Build a facial recognition system using Python and computer vision libraries. Showcase your skills in biometric technology.

25. Social Media Dashboard

Develop a social media dashboard that aggregates and displays data from various platforms. Highlight your skills in data visualization and integration.

Conclusion: As you embark on your job search in 2024, remember that a well-rounded portfolio is key to showcasing your skills and standing out from the crowd. These 25 Python projects cover a diverse range of domains, allowing you to tailor your portfolio to match your interests and the specific requirements of your dream job.

To learn more, see: https://analyticsjobs.in/question/what-are-the-best-python-projects-to-land-a-great-job-in-2024/

#python projects#top python projects#best python projects#analytics jobs#python#coding#programming#machine learning

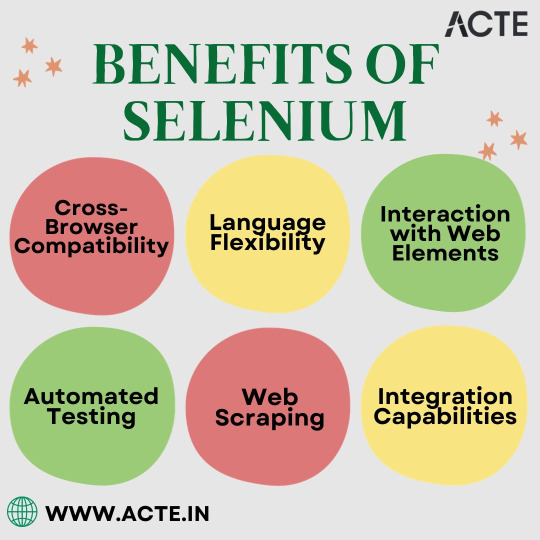

Why Choose Selenium? Exploring the Key Benefits for Testing and Development

In today's digitally driven world, where speed, accuracy, and efficiency are paramount, web automation has emerged as the cornerstone of success for developers, testers, and organizations. At the heart of this automation revolution stands Selenium, an open-source framework that has redefined how we interact with web browsers. In this comprehensive exploration, we embark on a journey to uncover the multitude of advantages that Selenium offers and how it empowers individuals and businesses in the digital age.

The Selenium Advantage: A Closer Look

Selenium, often regarded as the crown jewel of web automation, offers a variety of advantages that play important roles in simplifying complex web tasks and elevating web application development and testing processes. Let's explore the main advantages in more detail:

1. Cross-Browser Compatibility: Bridging Browser Gaps

Selenium supports the major web browsers, including Chrome, Firefox, and Edge, which helps ensure that web applications function consistently across different platforms. This cross-browser compatibility is invaluable in a world where users access websites from a variety of devices and browsers. Whether it's a responsive e-commerce site or a mission-critical enterprise web app, Selenium bridges the browser gaps.

2. Language Flexibility: Your Language of Choice

One of Selenium's standout features is its language flexibility. It doesn't impose restrictions on developers, allowing them to harness the power of Selenium using their preferred programming language. Whether you're proficient in Java, Python, C#, Ruby, or another language, Selenium welcomes your expertise with open arms. This language flexibility fosters inclusivity among developers and testers, ensuring that Selenium adapts to your preferred coding language.

3. Interaction with Web Elements: User-like Precision

Selenium empowers you to interact with web elements precisely, mimicking human user actions. It can click buttons, fill in forms, navigate dropdown menus, scroll through pages, and simulate a wide range of other user interactions. This level of precision is critical for automating complex web tasks, ensuring that the actions performed by Selenium closely mirror those of a human user.

4. Automated Testing: Quality Assurance Simplified

Quality assurance professionals and testers rely heavily on Selenium for automated testing of web applications. By identifying issues, regressions, and functional problems early in the development process, Selenium streamlines the testing phase, reducing both the time and effort required for comprehensive testing. Automated testing with Selenium not only enhances the efficiency of the testing process but also improves the overall quality of web applications.

5. Web Scraping: Unleashing Data Insights

In an era where data reigns supreme, Selenium emerges as an effective tool for web scraping tasks. It enables you to extract data from websites and store it for analysis, reporting, or integration into other applications. This capability is particularly valuable for businesses and organizations seeking to leverage web data for informed decision-making. Whether it's gathering pricing data for competitive analysis or extracting news articles for sentiment analysis, Selenium's web scraping capabilities are invaluable.

6. Integration Capabilities: The Glue in Your Tech Stack

Selenium integrates with a wide range of testing frameworks, continuous integration (CI) tools, and other technologies, making it the glue that binds your tech stack together. This integration facilitates the orchestration of automated tests, ensuring that they fit into your development workflow. Selenium's compatibility with popular CI/CD (Continuous Integration/Continuous Deployment) platforms like Jenkins, Travis CI, and CircleCI streamlines the testing and validation processes, making Selenium an indispensable component of the software development lifecycle.

In conclusion, the advantages of using Selenium in web automation are substantial, and they significantly contribute to efficient web development, testing, and data extraction processes. Whether you're a seasoned developer looking to streamline your web applications or a cautious tester aiming to enhance the quality of your products, Selenium stands as a versatile tool that can cater to your diverse needs.

At ACTE Technologies, we understand the key role that Selenium plays in the ever-evolving tech landscape. We offer comprehensive training programs designed to empower individuals and organizations with the knowledge and skills needed to harness the power of Selenium effectively. Our commitment to continuous learning and professional growth ensures that you stay at the forefront of technology trends, equipping you with the tools needed to excel in a rapidly evolving digital world.

So, whether you're looking to upskill, advance your career, or simply stay competitive in the tech industry, ACTE Technologies is your trusted partner on this transformative journey. Join us and unlock a world of possibilities in the dynamic realm of technology. Your success story begins here, where Selenium's advantages meet your aspirations for excellence and innovation.

Scrape Daily Price Updates from Online Retailers to Stay Competitive

Introduction

In today's highly competitive e-commerce landscape, businesses must stay agile and informed to remain relevant. One of the most effective ways to achieve this is to scrape daily price updates from online retailers. By collecting fresh pricing data every day, companies can monitor competitors, respond quickly to market changes, and adjust their pricing strategies accordingly. Scraping average price changes across online stores lets retailers analyze pricing trends, spot seasonal shifts, and understand how competitors position their products, so they can keep prices attractive without sacrificing margins. Tracking retailer price changes also gives businesses a strategic edge: whether launching a new product or planning a promotional campaign, up-to-date pricing data helps them remain competitive while delivering better customer value.

This blog will explore how daily price scraping transforms e-commerce strategy—helping businesses boost profitability, improve pricing accuracy, and thrive in an ever-evolving digital marketplace.

Why Daily Price Scraping Matters

Price scraping involves extracting pricing information from online retail platforms using automated tools or scripts. Unlike periodic manual checks, daily scraping of product prices provides a continuous stream of information, enabling businesses to respond swiftly to market changes. Here's why it's a game-changer:

Dynamic Market Adaptation: Online retail prices fluctuate frequently due to promotions, demand shifts, or competitor strategies. Daily scraping ensures businesses stay updated with these changes.

Competitive Edge: Monitoring competitors' prices allows retailers to adjust their pricing strategies to remain attractive to customers without sacrificing margins.

Data-Driven Decisions: Access to fresh pricing data supports strategic decisions, from inventory management to marketing campaigns. Businesses can also measure price change frequency on e-commerce sites to understand how often and why prices shift.

Customer Trust: Offering competitive prices builds customer loyalty and enhances brand reputation in a price-sensitive market.

Scraping data on average price fluctuations gives companies deeper insight into pricing patterns, enabling smarter, faster decisions. By integrating daily price scraping into their operations, businesses can transform raw data into actionable insights and remain agile and customer-focused.
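Price change frequency can be computed directly from a daily price series. The following is a minimal sketch, assuming one scraped price per day in chronological order; the function name and the sample figures are illustrative.

```python
def price_change_frequency(daily_prices):
    """Fraction of day-to-day transitions on which the price changed.

    daily_prices: one price per day, oldest first.
    """
    if len(daily_prices) < 2:
        return 0.0  # no transitions to compare
    # Pair each day with the next day and count the pairs that differ
    changes = sum(
        1 for prev, cur in zip(daily_prices, daily_prices[1:]) if cur != prev
    )
    return changes / (len(daily_prices) - 1)
```

For a series such as `[10, 10, 9, 9, 11]`, two of the four day-to-day transitions show a change, so the frequency is 0.5. Comparing this figure across products or retailers shows who reprices most aggressively.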

Key Applications of Daily Price Scraping

Daily price scraping is a versatile tool with applications across various aspects of online retail. Below are some of the most impactful ways businesses leverage this practice:

1. Competitive Pricing Strategies

Staying competitive in e-commerce requires a deep understanding of how rivals price their products. Daily price scraping enables businesses to:

Track competitors' pricing trends for similar products.

Identify promotional offers or discounts that may influence customer behavior.

Adjust prices in real time to match or beat competitors while maintaining profitability.

Analyze pricing patterns to predict future moves by competitors.

For example, an electronics retailer can use scraped data to ensure their smartphones are priced competitively against major players like Amazon or Best Buy, capturing price-sensitive customers.
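The "match or beat competitors while maintaining profitability" idea can be sketched as a simple repricing rule. This is one possible rule, not a recommended pricing policy: the 10% minimum margin, the one-cent undercut, and the `reprice` function are assumptions for illustration.

```python
from decimal import Decimal


def reprice(cost, current_price, competitor_prices,
            min_margin=Decimal("0.10"), undercut=Decimal("0.01")):
    """Undercut the cheapest competitor, but never drop below cost plus a minimum margin."""
    if not competitor_prices:
        return current_price  # no market data, so leave the price alone
    # Lowest price we are willing to charge (assumed margin floor)
    floor = (cost * (Decimal("1") + min_margin)).quantize(Decimal("0.01"))
    # Price that would just beat the cheapest competitor
    target = min(competitor_prices) - undercut
    return max(target, floor)
```

With a cost of 100.00 and competitors at 125.00 and 119.99, the rule returns 119.98. If a competitor drops to 105.00, the rule stops at the 110.00 floor rather than following the price down. `Decimal` is used instead of floats to avoid rounding surprises with currency.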

2. Price Optimization

Finding the sweet spot for pricing—balancing profitability and customer appeal—is a constant challenge. Daily price scraping supports price optimization by:

Providing insights into market price ranges for specific product categories.

Highlighting opportunities to increase margins on high-demand items.

Identifying underpriced products that could be adjusted for better revenue.

Supporting A/B testing of pricing strategies to determine what resonates with customers.

Retailers can use these insights to fine-tune their pricing models, ensuring they maximize revenue while remaining attractive to shoppers.

3. Inventory and Stock Management

Pricing data often correlates with demand and availability. By scraping prices daily, businesses can:

Detect sudden price drops that may indicate competitors' overstock or clearance sales.

Adjust inventory levels to avoid stockouts or excess inventory.

Prioritize fast-moving products based on pricing trends.

Forecast demand for seasonal or trending items.

For instance, a fashion retailer might notice a competitor slashing prices on winter coats, signaling a potential shift in demand, allowing them to adjust their stock accordingly.
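Detecting sudden price drops amounts to comparing two daily snapshots. The sketch below assumes each snapshot is a dictionary mapping a product identifier to a price; the 20% threshold and the product names are illustrative.

```python
def find_price_drops(yesterday, today, threshold=0.20):
    """Return {sku: fractional_drop} for items whose price fell by at least `threshold`.

    yesterday, today: dicts mapping a product identifier to its price.
    """
    drops = {}
    for sku, old_price in yesterday.items():
        new_price = today.get(sku)
        if new_price is None or old_price <= 0:
            continue  # item missing today or unusable baseline
        drop = (old_price - new_price) / old_price
        if drop >= threshold:
            drops[sku] = round(drop, 4)
    return drops
```

A coat going from 200.00 to 140.00 would be flagged as a 30% drop, while a scarf moving from 30.00 to 29.00 would be ignored. Whether a flagged drop really signals overstock or a clearance sale still needs human judgment.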

4. Market Trend Analysis

Daily price scraping provides a window into broader market trends, helping businesses anticipate shifts and plan strategically. Key benefits include:

Identifying seasonal pricing patterns, such as holiday sales or back-to-school promotions.

Tracking price volatility in specific product categories, like electronics or home goods.

Understanding consumer behavior based on how prices influence purchasing decisions.

Spotting emerging products gaining traction through competitive pricing.

These insights enable retailers to align their strategies with market dynamics, ensuring they capitalize on opportunities and mitigate risks.
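Price volatility within a category can be measured in several ways. One simple option, shown here as a sketch, is the coefficient of variation (standard deviation divided by mean) of the scraped prices; the function names and sample categories are assumptions.

```python
from statistics import mean, pstdev


def price_volatility(prices):
    """Coefficient of variation: population standard deviation divided by the mean."""
    if not prices:
        raise ValueError("need at least one price")
    average = mean(prices)
    if average == 0:
        raise ValueError("mean price is zero")
    return pstdev(prices) / average


def rank_by_volatility(series_by_category):
    """Category names sorted from most to least volatile."""
    return sorted(
        series_by_category,
        key=lambda name: price_volatility(series_by_category[name]),
        reverse=True,
    )
```

Because the measure is relative to the mean, it lets a 10-unit swing on a 100-unit item be compared fairly with a 1-unit swing on a 10-unit item.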

5. Personalized Marketing Campaigns

Pricing data can inform targeted marketing efforts, enhancing customer engagement. Daily scraping supports this by:

Identifying price points that resonate with specific customer segments.

Highlighting opportunities for limited-time offers or flash sales.

Enabling dynamic pricing in email campaigns or personalized promotions.

Supporting loyalty programs with competitive pricing incentives.

For example, a retailer could use scraped data to offer exclusive discounts on products a customer has browsed, increasing conversion rates.

Tools and Technologies for Effective Price Scraping

To scrape prices effectively, businesses rely on a combination of tools and technologies designed for automation, scalability, and accuracy. Here are some popular options:

Web Scraping Frameworks:

BeautifulSoup (Python): Ideal for parsing HTML and extracting pricing data from static websites.

Scrapy: A robust framework for large-scale scraping projects with built-in support for handling complex websites.

Puppeteer: A Node.js library for scraping dynamic, JavaScript-heavy websites by controlling headless browsers.

Cloud-Based Solutions:

AWS Lambda: Serverless computing for running scraping scripts at scale.

Google Cloud Functions: Cost-effective for scheduling daily scraping tasks.

Azure Data Factory: Integrates scraping with data processing pipelines.

Data Storage and Analysis:

PostgreSQL/MySQL: Relational databases for storing large volumes of structured pricing data.

MongoDB: Suitable for handling unstructured or semi-structured data.

Tableau/Power BI: Used to visualize pricing trends and generate insights.

Businesses can streamline their scraping processes and ensure reliable data collection by selecting the right combination of tools.
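To show what storing daily prices in a relational table might look like, here is a small sketch. It uses Python's built-in `sqlite3` as a lightweight stand-in for PostgreSQL or MySQL; the table layout, column names, and helper functions are assumptions for illustration.

```python
import sqlite3


def open_store(path=":memory:"):
    """Open (or create) a price store; defaults to an in-memory database."""
    conn = sqlite3.connect(path)
    conn.execute(
        """CREATE TABLE IF NOT EXISTS prices (
               sku TEXT NOT NULL,
               retailer TEXT NOT NULL,
               day TEXT NOT NULL,            -- ISO date, e.g. 2024-01-02
               price_cents INTEGER NOT NULL, -- integer cents avoid float rounding
               PRIMARY KEY (sku, retailer, day)
           )"""
    )
    return conn


def record_price(conn, sku, retailer, day, price_cents):
    """Store one observation; re-running the same day overwrites the earlier row."""
    conn.execute(
        "INSERT OR REPLACE INTO prices VALUES (?, ?, ?, ?)",
        (sku, retailer, day, price_cents),
    )
    conn.commit()


def price_history(conn, sku, retailer):
    """Return [(day, price_cents), ...] ordered by day."""
    rows = conn.execute(
        "SELECT day, price_cents FROM prices "
        "WHERE sku = ? AND retailer = ? ORDER BY day",
        (sku, retailer),
    )
    return rows.fetchall()
```

The composite primary key keeps one row per product, retailer, and day, so a scraper that is re-run after a failure does not create duplicates.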


Best Practices for Daily Price Scraping

To maximize the benefits of daily price scraping, businesses should adopt best practices that ensure efficiency and compliance. Here are some recommendations:

Automate Scheduling: Use cron jobs or cloud schedulers to run scraping scripts daily at optimal times, such as during low-traffic periods.

Handle Dynamic Websites: Employ headless browsers or APIs to scrape JavaScript-rendered pages, ensuring accurate data extraction.

Monitor Data Quality: Implement validation checks to detect incomplete or inaccurate data, such as missing prices or incorrect formats.

Scale Gradually: Start with a small set of products or retailers and expand as scraping processes stabilize.

Integrate with Analytics: Feed scraped data into analytics platforms to generate real-time dashboards and reports.

Stay Updated: Regularly update scraping scripts to adapt to website changes, such as new layouts or anti-scraping measures.

These practices help businesses maintain a robust and efficient scraping operation, delivering consistent value.
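The data quality point above can be illustrated with a small validation helper. This sketch assumes US-style price strings (optional currency symbol, comma thousands separators, dot decimals); the `parse_price` name and the accepted formats are assumptions, and other locales would need different rules.

```python
import re
from decimal import Decimal

# Optional currency symbol, digits with optional thousands commas, optional cents
_PRICE_RE = re.compile(r"^\s*[$€£]?\s*(\d{1,3}(?:,\d{3})*|\d+)(\.\d{1,2})?\s*$")


def parse_price(raw):
    """Return the price as a Decimal, or None if the text is missing or malformed."""
    if raw is None:
        return None
    match = _PRICE_RE.match(raw)
    if not match:
        return None
    whole = match.group(1).replace(",", "")
    fraction = match.group(2) or ""
    value = Decimal(whole + fraction)
    # Treat zero as invalid: a scraped price of 0 usually means a failed extraction
    return value if value > 0 else None
```

Rows for which `parse_price` returns `None` can be logged and counted, and a sudden rise in that count is often the first sign that a site's layout has changed.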

Real-World Success Stories

Daily price scraping has powered success for retailers across industries. Here are a few examples:

Global Electronics Retailer: A leading electronics chain used daily price scraping to monitor competitors' prices for TVs and laptops. By adjusting prices in real time, the chain increased sales by 15% during the holiday season.

Fashion E-Commerce Platform: An online fashion retailer leveraged scraped data to optimize pricing for seasonal collections. This led to a 20% improvement in profit margins while maintaining competitive prices.

Grocery Delivery Service: A grocery delivery startup used price scraping to track competitors' prices for staples like milk and bread. This enabled them to offer competitive bundles, boosting customer retention by 10%.

These success stories highlight the tangible impact of daily price scraping on revenue, customer satisfaction, and market positioning.

The Future of Price Scraping in E-Commerce

As e-commerce continues to evolve, web scraping of e-commerce websites will play an increasingly critical role in shaping competitive strategies. Emerging trends influencing the future of daily price scraping include:

AI-Powered Scraping: Machine learning algorithms will enhance data extraction accuracy and predict pricing trends more precisely.

Real-Time Pricing Engines: Integration with AI-driven pricing systems will enable instant price adjustments based on scraped data.

Cross-Platform Scraping: Businesses will gather pricing information from websites, mobile apps, and social media marketplaces.

Sustainability Focus: Retailers will use pricing insights to optimize supply chains, reduce overstocking, minimize waste, and support eco-conscious initiatives.

Moreover, the availability of comprehensive datasets, such as an e-commerce product and review dataset, will enrich pricing intelligence by combining product performance insights with customer feedback. By embracing these technological advancements, businesses can unlock new opportunities to innovate, adapt, and lead in the ever-competitive e-commerce environment.

How Can Product Data Scrape Help You?

Customized Data Extraction Solutions: We build tailored scraping tools to collect specific retail data such as product prices, availability, ratings, and promotions from e-commerce websites, mobile apps, and marketplaces.

Real-Time & Scheduled Scraping: Our systems support real-time and scheduled scraping, ensuring you receive up-to-date data at the frequency you need—daily, hourly, or weekly.

Multi-Platform Retail Coverage: We can extract data from multiple platforms, including websites, social media shops, and apps, providing a complete view of the retail landscape across various channels.

Clean, Structured, and Scalable Datasets: We deliver data in clean, structured formats (CSV, JSON, Excel, etc.) that are easy to integrate into your systems for analytics, dashboards, or machine learning models.

Compliance-First Approach: We follow ethical web scraping practices and stay updated with platform policies and regional data compliance laws to ensure safe and responsible data collection.

Conclusion

Daily price scraping is a powerful tool for online retailers seeking to navigate the complexities of the digital marketplace. Its applications are vast and impactful, from competitive pricing to inventory management and personalized marketing. With the support of e-commerce data scraping services, businesses can automate and streamline the collection of valuable pricing intelligence. By leveraging the right tools, adopting best practices, and staying attuned to market trends, companies can extract data from popular e-commerce websites efficiently and consistently. This enables timely reactions to competitor moves and fuels data-driven strategies across departments. The ability to extract e-commerce data regularly empowers retailers to make smarter decisions, enhance customer satisfaction, and secure a long-term competitive edge, which makes daily price scraping an essential asset for any e-commerce player aiming to thrive in an ever-evolving digital world.

At Product Data Scrape, we strongly emphasize ethical practices across all our services, including Competitor Price Monitoring and Mobile App Data Scraping. Our commitment to transparency and integrity is at the heart of everything we do. With a global presence and a focus on personalized solutions, we aim to exceed client expectations and drive success in data analytics. Our dedication to ethical principles ensures that our operations are both responsible and effective.

Read More>> https://www.productdatascrape.com/scrape-daily-retail-prices-online.php

#ScrapeDailyPriceUpdatesFromOnlineRetailers#WebScrapingAveragePriceChangesOnOnlineStores#WebScrapingDailyProductPriceUpdatesData#ExtractPriceChangeFrequencyFromECommerceSites#WebScrapingForAveragePriceFluctuationsData

0 notes

Text

Transform Insights with Web Data Scraping

Iconic Data Scrap excels in Web Data Scraping, offering businesses high-quality, structured data from the web. Our advanced tools and expert team extract insights on pricing, market trends, and competitors with unmatched precision. Trusted by world-famous brands and backed by over 100 awards, our Web Data Scraping solutions enhance business intelligence and decision-making. From e-commerce to manufacturing, we tailor data to your needs. Discover how Web Data Scraping can give you a competitive edge—reach out today for customized, actionable data solutions!

0 notes

Text

Alarming rise in AI-powered scams: Microsoft reveals $4 Billion in thwarted fraud

New Post has been published on https://thedigitalinsider.com/alarming-rise-in-ai-powered-scams-microsoft-reveals-4-billion-in-thwarted-fraud/


AI-powered scams are evolving rapidly as cybercriminals use new technologies to target victims, according to Microsoft’s latest Cyber Signals report.

Over the past year, the tech giant says it has prevented $4 billion in fraud attempts, blocking approximately 1.6 million bot sign-up attempts every hour – showing the scale of this growing threat.

The ninth edition of Microsoft’s Cyber Signals report, titled “AI-powered deception: Emerging fraud threats and countermeasures,” reveals how artificial intelligence has lowered the technical barriers for cybercriminals, enabling even low-skilled actors to generate sophisticated scams with minimal effort.

What previously took scammers days or weeks to create can now be accomplished in minutes.

The democratisation of fraud capabilities represents a shift in the criminal landscape that affects consumers and businesses worldwide.

The evolution of AI-enhanced cyber scams

Microsoft’s report highlights how AI tools can now scan and scrape the web for company information, helping cybercriminals build detailed profiles of potential targets for highly convincing social engineering attacks.

Bad actors can lure victims into complex fraud schemes using fake AI-enhanced product reviews and AI-generated storefronts, which come complete with fabricated business histories and customer testimonials.

According to Kelly Bissell, Corporate Vice President of Anti-Fraud and Product Abuse at Microsoft Security, the threat numbers continue to increase. “Cybercrime is a trillion-dollar problem, and it’s been going up every year for the past 30 years,” per the report.

“I think we have an opportunity today to adopt AI faster so we can detect and close the gap of exposure quickly. Now we have AI that can make a difference at scale and help us build security and fraud protections into our products much faster.”

The Microsoft anti-fraud team reports that AI-powered fraud attacks happen globally, with significant activity originating from China and Europe – particularly Germany, due to its status as one of the largest e-commerce markets in the European Union.

The report notes that the larger a digital marketplace is, the more likely a proportional degree of attempted fraud will occur.

E-commerce and employment scams leading

Two particularly concerning areas of AI-enhanced fraud are e-commerce and job recruitment scams. In the e-commerce space, fraudulent websites can now be created in minutes using AI tools with minimal technical knowledge.

Sites often mimic legitimate businesses, using AI-generated product descriptions, images, and customer reviews to fool consumers into believing they’re interacting with genuine merchants.

Adding another layer of deception, AI-powered customer service chatbots can interact convincingly with customers, delay chargebacks by stalling with scripted excuses, and manipulate complaints with AI-generated responses that make scam sites appear professional.

Job seekers are equally at risk. According to the report, generative AI has made it significantly easier for scammers to create fake listings on various employment platforms. Criminals generate fake profiles with stolen credentials, fake job postings with auto-generated descriptions, and AI-powered email campaigns to phish job seekers.

AI-powered interviews and automated emails enhance the credibility of these scams, making them harder to identify. “Fraudsters often ask for personal information, like resumes or even bank account details, under the guise of verifying the applicant’s information,” the report says.

Red flags include unsolicited job offers, requests for payment and communication through informal platforms like text messages or WhatsApp.

Microsoft’s countermeasures to AI fraud

To combat emerging threats, Microsoft says it has implemented a multi-pronged approach across its products and services. Microsoft Defender for Cloud provides threat protection for Azure resources, while Microsoft Edge, like many browsers, features website typo protection and domain impersonation protection. According to the report, Edge uses deep learning technology to help users avoid fraudulent websites.

The company has also enhanced Windows Quick Assist with warning messages to alert users about possible tech support scams before they grant access to someone claiming to be from IT support. Microsoft now blocks an average of 4,415 suspicious Quick Assist connection attempts daily.

Microsoft has also introduced a new fraud prevention policy as part of its Secure Future Initiative (SFI). As of January 2025, Microsoft product teams must perform fraud prevention assessments and implement fraud controls as part of their design process, ensuring products are “fraud-resistant by design.”

As AI-powered scams continue to evolve, consumer awareness remains important. Microsoft advises users to be cautious of urgency tactics, verify website legitimacy before making purchases, and never provide personal or financial information to unverified sources.

For enterprises, implementing multi-factor authentication and deploying deepfake-detection algorithms can help mitigate risk.


#2025#ai#ai & big data expo#ai tools#AI-powered#Algorithms#amp#applications#approach#artificial#Artificial Intelligence#authentication#automation#awareness#azure#bank#Big Data#billion#Blockchain#bot#Business#california#chatbots#China#Cloud#Commerce#communication#comprehensive#conference#consumers

0 notes

Text

SheetMagic AI 2025 Review

Introduction

Managing large datasets, generating content, and automating repetitive tasks in spreadsheets can be time-consuming and prone to errors. SheetMagic AI is an innovative Google Sheets add-on designed to simplify these processes by integrating powerful AI capabilities directly into your spreadsheets. With features like AI-driven content generation, web scraping, formula automation, and data analysis, SheetMagic transforms Google Sheets into a dynamic workspace for marketers, analysts, and businesses. In this review, we’ll explore its features, benefits, pricing, and practical applications.

Overview

SheetMagic AI is a versatile tool that enhances Google Sheets by leveraging AI models like GPT-3.5 Turbo, GPT-4 Turbo, DALL-E 3, and GPT-Vision. It automates workflows such as bulk content creation, data categorization, formula generation, and web scraping. The platform is ideal for professionals across industries like marketing, e-commerce, sales, SEO, and education. With its user-friendly interface and multilingual support, SheetMagic empowers users to streamline their workflows without extensive technical expertise.

What Is SheetMagic AI?

SheetMagic AI is a Google Sheets add-on that integrates advanced AI capabilities to automate tasks like generating bulk content, analyzing datasets, extracting insights from websites, and cleaning data. It enables users to interact with spreadsheet data using natural language prompts and formulas like =ai("Your prompt here"). Designed for efficiency and accuracy, SheetMagic AI simplifies complex workflows while maintaining flexibility for customization.

Key Features

AI-Powered Content Generation: Create bulk product descriptions, marketing emails, or SEO-friendly text directly within Google Sheets.

Web Scraping: Extract website elements such as meta titles, descriptions, headings, and paragraphs on a schedule.

Formula Automation: Generate complex formulas with natural language prompts.

Data Cleaning & Categorization: Remove duplicates, standardize values, and organize datasets efficiently.

Automated Reports & Dashboards: Build real-time reports and dashboards for performance tracking.

Team Collaboration: Enable real-time collaboration across teams with shared spreadsheets.

Multilingual Support: Process data in over 20 languages for global accessibility.

Features and Benefits

How Does It Work?

SheetMagic AI operates through a simple workflow:

Install the Add-On: Download SheetMagic from the Google Workspace Marketplace.

Input Prompts or Upload Data: Use natural language prompts or upload datasets for processing.

AI Processing: Automate tasks like content generation or web scraping using built-in formulas.

Analyze & Visualize Data: Summarize trends with charts and graphs or extract actionable insights.

Collaborate & Share: Work with team members in real-time on shared spreadsheets.

Benefits

Efficiency Gains: Automates repetitive tasks like formula creation or data entry in minutes.

Enhanced Accuracy: Reduces human errors by cleaning and organizing data automatically.

Improved Collaboration: Allows teams to work together seamlessly in real-time.

SEO Optimization: Generates programmatic SEO datasets for improved search rankings.

Global Accessibility: Supports multiple languages for international users.

My Experience Using It

Using SheetMagic AI was transformative for my workflow during a recent e-commerce project. The web scraping feature allowed me to extract product details from competitor websites effortlessly—saving hours of manual work. The AI-powered content generation tool was particularly impressive; it created bulk product descriptions optimized for SEO directly within my spreadsheet.

The formula automation tool simplified complex calculations by generating accurate formulas based on natural language prompts—a feature that proved invaluable for financial modeling tasks. However, I did encounter minor challenges with integrating SheetMagic into existing workflows; some advanced features required initial familiarization.

Overall, SheetMagic AI significantly enhanced my productivity while maintaining high standards of accuracy across various tasks.

Pros and Cons

Advantages

Time-Saving Automation: Reduces manual effort in tasks like data entry and formula creation.

User-Friendly Interface: Accessible even for users with minimal technical skills.

Versatile Applications: Supports diverse use cases across industries like marketing and analytics.

Multilingual Support: Enables global accessibility with support for over 20 languages.

Unlimited Usage Plans: Offers unlimited AI usage when connected to OpenAI API keys.

Disadvantages

Learning Curve for Advanced Features: Some tools require initial familiarization to maximize benefits.

No Free Trial: No free trial is available; users must commit to a paid plan immediately.

API Key Requirement: Requires users to connect their own OpenAI API key for full functionality.

Who Should Use It?

SheetMagic AI is ideal for:

Marketers & SEO Professionals: Automates content creation and programmatic SEO datasets efficiently.

E-commerce Businesses: Generates bulk product descriptions and analyzes competitor data seamlessly.

Data Analysts & Researchers: Simplifies formula creation and organizes large datasets effectively.

Educators & Students: Enhances learning resources by summarizing lecture notes or creating quizzes.

Pricing Plans & Evaluation

SheetMagic AI offers two primary pricing tiers:

Basic Plan ($19/month):

Unlimited AI usage (requires OpenAI API key).

Web scraping functionality with timed intervals.

Formula automation tools.

Pro Plan ($79/month):

Includes Basic Plan features.

Advanced analytics tools like real-time dashboards.

Enhanced collaboration features for teams.

Evaluation

While the Basic Plan provides excellent value for individual users or small businesses exploring basic features like web scraping or formula automation, larger organizations will benefit more from the Pro Plan due to its expanded capabilities like team collaboration tools and advanced analytics dashboards.

Compared to traditional spreadsheet tools or standalone automation platforms, SheetMagic offers exceptional value by combining multiple functionalities into one intuitive add-on.

Conclusion

SheetMagic AI is a powerful tool that transforms Google Sheets into an intelligent workspace by automating workflows such as content generation, web scraping, formula creation, and data analysis. Its ability to streamline complex tasks while maintaining flexibility makes it an invaluable asset for marketers, analysts, e-commerce professionals, educators, and researchers alike.

Although there are minor limitations—such as the need for an API key—the overall benefits far outweigh these drawbacks. Whether you’re looking to enhance productivity or unlock new possibilities within Google Sheets, SheetMagic AI provides an efficient solution tailored to modern business needs.

#phd journey#academics#grad school#phd life#phd research#phd student#productivity#study tips#university#phdblr#phdjourney#research#data analytics#data science#data driven decisions

0 notes

Text

Web Scraping Explained: A Guide to Ethical Data Extraction

Web scraping is a technique used to automatically extract information from websites. Instead of manually copying and pasting data, web scraping uses programs that can read and collect information from web pages efficiently. It has become a popular tool among professionals who require large volumes of data for research, analysis, or business intelligence. In this article, we’ll explain what web scraping is, how it works, and why ethical practices matter—along with the value of working with a trusted website designing company in India to guide your digital strategy.

What Is Web Scraping?

Web scraping allows computers to mimic how a human browses the web and extracts data. The process typically involves sending requests to a website's server, receiving the HTML code in response, and then parsing that code to collect specific pieces of data such as product prices, contact details, or user reviews. The collected information is often stored in databases or spreadsheets for further analysis.

Many developers prefer to use programming languages like Python for web scraping due to its simplicity and robust libraries like Beautiful Soup and Scrapy. These tools make it easier to navigate through the structure of websites and extract meaningful information quickly.
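The request, parse, and store flow described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the HTML fragment and its class names (`product`, `name`, `price`) are made up, and the sketch uses the standard library's `html.parser` in place of Beautiful Soup or Scrapy so that it runs without extra installs.

```python
from html.parser import HTMLParser

# Hypothetical HTML, standing in for the response body a server would return.
SAMPLE_HTML = """
<ul>
  <li class="product"><span class="name">Kettle</span><span class="price">24.99</span></li>
  <li class="product"><span class="name">Toaster</span><span class="price">31.50</span></li>
</ul>
"""


class ProductParser(HTMLParser):
    """Collects name/price pairs from <span class="name"> and <span class="price">."""

    def __init__(self):
        super().__init__()
        self.products = []   # finished records
        self._field = None   # field that the next text node belongs to
        self._current = {}   # record being assembled

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if tag == "span" and css_class in ("name", "price"):
            self._field = css_class

    def handle_data(self, data):
        if self._field is None:
            return  # text outside the spans we care about
        self._current[self._field] = data.strip()
        self._field = None
        if "name" in self._current and "price" in self._current:
            self.products.append(
                {"name": self._current["name"], "price": float(self._current["price"])}
            )
            self._current = {}


def extract_products(html):
    """Parse an HTML string and return a list of product dictionaries."""
    parser = ProductParser()
    parser.feed(html)
    return parser.products


products = extract_products(SAMPLE_HTML)
for product in products:
    print(product["name"], product["price"])
```

In a real scraper the HTML would come from an HTTP response, and the extracted records would be written to a database or spreadsheet as described above.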

When working with a professional website designing company in India, businesses can ensure that their web scraping efforts are seamlessly integrated into their digital platforms and follow best practices for compliance and performance.

Common Applications of Web Scraping

Web scraping is used across various industries and for multiple purposes:

E-commerce: Online retailers monitor competitor pricing and gather product data to adjust their own strategies.

Market Research: Companies collect customer feedback or product reviews to better understand consumer sentiment.

Journalism: Reporters use scraping tools to gather facts and track news stories.

Academia & Research: Researchers compile large datasets for analysis and insights.

By partnering with an experienced website designing company in India, businesses can implement web scraping tools that are tailored to their unique goals and ensure that they operate efficiently and ethically.

The Importance of Ethical Web Scraping

As powerful as web scraping is, it comes with significant ethical responsibilities. One of the primary concerns is consent. Extracting data from a website without permission can violate terms of service or even infringe on privacy laws.

Another important consideration is respecting the robots.txt file—a public document that outlines which parts of a website can be accessed by automated tools. Responsible scrapers always check this file and adhere to its guidelines.
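As a rough sketch of what checking robots.txt can look like in Python, the standard library's `urllib.robotparser` can answer whether a given path is open to a crawler. The rules, the `example-bot` user agent, and the example.com URLs below are assumptions chosen for illustration; a real scraper would load the site's actual file with `set_url()` and `read()` instead of parsing an inline string.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, inlined so the sketch runs offline.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def is_allowed(url, user_agent="example-bot"):
    """Return True if the parsed rules permit user_agent to fetch url."""
    return parser.can_fetch(user_agent, url)


allowed_product = is_allowed("https://example.com/products/42")
allowed_checkout = is_allowed("https://example.com/checkout/cart")
delay = parser.crawl_delay("example-bot")  # seconds requested by the site, or None

print(allowed_product, allowed_checkout, delay)
```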

Additionally, any data collected must be used responsibly. If you're using the data for commercial purposes or sharing it publicly, ensure that it doesn’t include personal or sensitive information without consent. Ethical data use not only protects individuals but also builds trust in your brand.

A reliable website designing company in India can help ensure your scraping solutions comply with legal standards and align with industry best practices.

Best Practices for Ethical Web Scraping

To ensure your scraping strategy is responsible and sustainable, keep the following tips in mind:

Review the Website’s Terms of Service: Understand what is and isn’t allowed before scraping.

Respect robots.txt: Follow the website’s guidelines to avoid prohibited areas.

Limit Your Request Frequency: Too many requests in a short time can slow down or crash a site. Adding delays between requests is a good practice.

Protect User Data: Always anonymize personal information and never share sensitive data without proper consent.

These practices help avoid legal trouble and ensure your scraping activity doesn’t negatively impact other websites or users. A reputable website designing company in India can also implement these controls as part of your digital infrastructure.
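The advice to add delays between requests can be sketched as a small throttle. This is one possible approach rather than a standard API: the `Throttle` class and its parameters are hypothetical names, and the clock and sleep functions are injectable only so the behaviour can be checked without real waiting.

```python
import time


class Throttle:
    """Enforces a minimum interval between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval  # seconds between requests
        self._clock = clock
        self._sleep = sleep
        self._last = None                 # time of the previous request

    def wait(self):
        """Pause just long enough to respect min_interval; return seconds slept."""
        pause = 0.0
        if self._last is not None:
            elapsed = self._clock() - self._last
            pause = max(0.0, self.min_interval - elapsed)
            if pause:
                self._sleep(pause)
        self._last = self._clock()
        return pause


# Typical use (the fetch step is left out of this sketch):
#
#     throttle = Throttle(min_interval=2.0)
#     for url in urls:
#         throttle.wait()
#         ...  # send the request for url here
```

Calling `wait()` before every request keeps the scraper at or below one request per `min_interval` seconds, regardless of how quickly each response arrives.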

Real-World Applications and Professional Support

Small businesses and startups often use web scraping for competitive analysis, such as tracking product pricing or consumer trends. When used correctly, this method offers valuable insights that can lead to better business decisions.

To build scraping tools that are efficient, secure, and aligned with your business goals, consider working with a professional website designing company in India. Agencies like Dzinepixel have helped numerous businesses create secure and scalable platforms that support ethical data collection methods. Their experience ensures your scraping projects are both technically sound and compliant with privacy laws and web standards.

Final Thoughts

Web scraping is a powerful tool that can unlock valuable data and insights for individuals and businesses. However, with this power comes the responsibility to use it ethically. Understanding how web scraping works, respecting site guidelines, and using data responsibly are all crucial steps in making the most of this technology.

If you're planning to incorporate web scraping into your digital strategy, it’s wise to consult with a professional website designing company in India. Their expertise can help you develop robust solutions that not only deliver results but also maintain ethical and legal standards.

By taking the right steps from the beginning, you can benefit from the vast potential of web scraping—while building a trustworthy and future-ready online presence.

#best web development agencies india#website design and development company in india#website development company in india#web design company india#website designing company in india#digital marketing agency india

0 notes

Text

Amazon Scraper API Made Easy: Get Product, Price, & Review Data

If you’re in the world of e-commerce, market research, or product analytics, then you know how vital it is to have the right data at the right time. Enter the Amazon Scraper API, your key to unlocking real-time, accurate, and comprehensive product, price, and review information from the world's largest online marketplace. With this Amazon scraper, you can streamline data collection and focus on making data-driven decisions that drive results.

Accessing Amazon’s extensive product listings and user-generated content manually is not only tedious but also inefficient. Fortunately, the Amazon Scraper API automates this process, allowing businesses of all sizes to extract relevant information with speed and precision. Whether you're comparing competitor pricing, tracking market trends, or analyzing customer feedback, this tool is your secret weapon.

Using an Amazon scraper is about more than automation; it is about gaining insights that can redefine your strategy. From optimizing listings to enhancing customer experience, real-time data gives you the leverage you need. In this blog, we’ll explore what makes the Amazon Scraper API a game-changer, how it works, and how you can use it to elevate your business.

What is an Amazon Scraper API?

An Amazon Scraper API is a specialized software interface that allows users to programmatically extract structured data from Amazon without manual intervention. It acts as a bridge between your application and Amazon's web pages, parsing and delivering product data, prices, reviews, and more in machine-readable formats like JSON or XML. This automated process enables businesses to bypass the tedious and error-prone task of manual scraping, making data collection faster and more accurate.

One of the key benefits of an Amazon Scraper API is its adaptability. Whether you're looking to fetch thousands of listings or specific review details, an Amazon data scraper can be tailored to your exact needs. Developers appreciate its ease of integration into various platforms, and analysts value the real-time insights it offers.

Why You Need an Amazon Scraper API

The Amazon marketplace is a data-rich environment, and leveraging this data gives you a competitive advantage. Here are some scenarios where an Amazon Scraper API becomes indispensable:

1. Market Research: Identify top-performing products, monitor trends, and analyze competition. With accurate data in hand, businesses can launch new products or services with confidence, knowing there's a demand or market gap to fill.

2. Price Monitoring: Stay updated with real-time price fluctuations to remain competitive. Automated price tracking via an Amazon price scraper allows businesses to react quickly to competitors' changes.

3. Inventory Management: Understand product availability and stock levels to help avoid stockouts or overstocking. Retailers can optimize supply chains and restocking processes with the help of an Amazon product scraper.

4. Consumer Sentiment Analysis: Use review data to improve offerings. With Amazon Review Scraping, businesses can analyze customer sentiment to refine product development and service strategies.

5. Competitor Benchmarking: Compare products across sellers to evaluate strengths and weaknesses. An Amazon web scraper helps gather structured data that fuels sharper insights and marketing decisions.

6. SEO and Content Strategy: Extract keyword-rich product titles and descriptions. With Amazon review scraper tools, you can also identify high-impact phrases that customers use and work them into your content.

7. Trend Identification: Spot emerging trends by analyzing changes in product popularity, pricing, or review sentiment over time. The ability to scrape Amazon product data empowers brands to respond proactively to market shifts.
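To make the price monitoring and trend identification scenarios above more concrete, here is a minimal Python sketch that compares two price snapshots and reports meaningful moves. The item identifiers, prices, and the 1% threshold are invented for illustration; in practice the snapshots would come from successive scraping runs.

```python
def price_changes(previous, current, threshold_pct=1.0):
    """Return (item, old, new, pct) tuples for prices that moved by at least threshold_pct."""
    changes = []
    for item_id, new_price in current.items():
        old_price = previous.get(item_id)
        if old_price is None or old_price == 0:
            continue  # new listing or unusable baseline, nothing to compare
        pct = (new_price - old_price) / old_price * 100
        if abs(pct) >= threshold_pct:
            changes.append((item_id, old_price, new_price, round(pct, 2)))
    # Largest moves first, whether up or down.
    return sorted(changes, key=lambda change: abs(change[3]), reverse=True)


# Hypothetical snapshots keyed by a made-up item identifier.
yesterday = {"ITEM-A": 20.00, "ITEM-B": 50.00, "ITEM-C": 10.00}
today = {"ITEM-A": 18.00, "ITEM-B": 50.25, "ITEM-C": 12.50, "ITEM-D": 5.00}

changes = price_changes(yesterday, today)
for item_id, old, new, pct in changes:
    print(f"{item_id}: {old:.2f} -> {new:.2f} ({pct:+.2f}%)")
```

The threshold filters out small fluctuations, so only changes large enough to act on are surfaced.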

Key Features of a Powerful Amazon Scraper API

Choosing the right Amazon Scraper API can significantly enhance your e-commerce data strategy. Here are the essential features to look for:

Scalability: Seamlessly handle thousands—even millions—of requests. A truly scalable Amazon data scraper supports massive workloads without compromising speed or stability.

High Accuracy: Get real-time, up-to-date data with high precision. Top-tier Amazon data extraction tools constantly adapt to Amazon’s evolving structure to ensure consistency.

Geo-Targeted Scraping: Extract localized data across regions. Whether it's pricing, availability, or listings, geo-targeted Amazon scraping is essential for global reach.

Advanced Pagination & Sorting: Retrieve data by page number, relevance, rating, or price. This allows structured, efficient scraping for vast product categories.

Custom Query Filters: Use ASINs, keywords, or category filters for targeted extraction. A flexible Amazon scraper API ensures you collect only the data you need.

CAPTCHA & Anti-Bot Bypass: Navigate CAPTCHAs and Amazon’s anti-scraping mechanisms using advanced, bot-resilient APIs.

Flexible Output Formats: Export data in JSON, CSV, XML, or your preferred format. This enhances integration with your applications and dashboards.

Rate Limiting Controls: Stay compliant while maximizing your scraping potential. Good Amazon APIs balance speed with stealth.

Real-Time Updates: Track price drops, stock changes, and reviews in real time—critical for reactive, data-driven decisions.

Developer-Friendly Documentation: Enjoy a smoother experience with comprehensive guides, SDKs, and sample code, which are especially important for rapid deployment and error-free scaling.
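The flexible output formats mentioned in the list above usually come down to simple conversions on the client side. As a sketch, assuming the API returns a JSON array of flat records (the field names and the placeholder ASIN values here are invented), Python's standard `json` and `csv` modules can turn that response into CSV for a spreadsheet or dashboard:

```python
import csv
import io
import json

# Hypothetical JSON payload; field names and values are illustrative only.
RAW_JSON = """
[
  {"asin": "EXAMPLE0001", "title": "Desk Lamp", "price": 19.99, "rating": 4.5},
  {"asin": "EXAMPLE0002", "title": "Monitor Stand, Black", "price": 34.0, "rating": 4.1}
]
"""


def json_to_csv(raw_json, fields):
    """Convert a JSON array of objects to CSV text, keeping only the given fields."""
    records = json.loads(raw_json)
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=fields, extrasaction="ignore", lineterminator="\n"
    )
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue()


csv_text = json_to_csv(RAW_JSON, ["asin", "title", "price"])
print(csv_text)
```

The `csv` module quotes the title that contains a comma, which is the main reason to prefer it over joining strings by hand.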

How the Amazon Scraper API Works

The architecture behind an Amazon Scraper API is engineered for robust, scalable scraping, high accuracy, and user-friendliness. At a high level, this powerful Amazon data scraping tool functions through the following core steps:

1. Send Request: Users initiate queries using ASINs, keywords, category names, or filters like price range and review thresholds. This flexibility supports tailored Amazon data retrieval.

2. Secure & Compliant Interactions: Advanced APIs utilize proxy rotation, CAPTCHA solving, and header spoofing to ensure anti-blocking Amazon scraping that mimics legitimate user behavior, maintaining access while complying with Amazon’s standards.

3. Fetch and Parse Data: Once the target data is located, the API extracts and returns it in structured formats such as JSON or CSV. Data includes pricing, availability, shipping details, reviews, ratings, and more—ready for dashboards, databases, or e-commerce tools.

4. Real-Time Updates: Delivering real-time Amazon data is a core advantage. Businesses can act instantly on dynamic pricing shifts, consumer trends, or inventory changes.

5. Error Handling & Reliability: Intelligent retry logic and error management keep the API running smoothly, even when Amazon updates its site structure, ensuring maximum scraping reliability.

6. Scalable Data Retrieval: Designed for both startups and enterprises, modern APIs handle everything from small-scale queries to high-volume Amazon scraping using asynchronous processing and optimized rate limits.
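From the client's side, the request, retry, and parse steps above might look roughly like the following Python sketch. Everything provider-specific is an assumption: the endpoint URL, the `asin` and `country` parameters, and the response fields are hypothetical, and a stand-in `fake_fetch` function simulates one transient failure in place of a real HTTP call.

```python
import json
import time
from urllib.parse import urlencode

# Hypothetical endpoint and parameter names; a real provider's API will differ.
BASE_URL = "https://api.example.com/v1/amazon/product"


def build_request_url(asin, country="us"):
    """Step 1: encode the query (here an ASIN plus a region) into a request URL."""
    return f"{BASE_URL}?{urlencode({'asin': asin, 'country': country})}"


def fetch_with_retry(fetch, url, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Step 5: call fetch(url), retrying network errors with exponential backoff."""
    for attempt in range(attempts):
        try:
            return fetch(url)
        except OSError:
            if attempt == attempts - 1:
                raise  # out of retries, surface the error
            sleep(base_delay * 2 ** attempt)  # waits 1x, 2x, 4x ... base_delay


def parse_product(body):
    """Step 3: turn the JSON response body into a typed record."""
    data = json.loads(body)
    return {
        "asin": data["asin"],
        "price": float(data["price"]),
        "in_stock": bool(data.get("in_stock", False)),
    }


# Stand-in for a real HTTP call: fails once, then returns a made-up response.
calls = {"count": 0}


def fake_fetch(url):
    calls["count"] += 1
    if calls["count"] == 1:
        raise ConnectionError("simulated transient failure")
    return '{"asin": "EXAMPLE0001", "price": "19.99", "in_stock": true}'


url = build_request_url("EXAMPLE0001")
product = parse_product(fetch_with_retry(fake_fetch, url, sleep=lambda seconds: None))
print(url)
print(product)
```

Passing the fetch function in as a parameter keeps the retry logic independent of any particular HTTP library, so the same wrapper works with whichever client the integration uses.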

Top 6 Amazon Scraper APIs to Scrape Data from Amazon

1. TagX Amazon Scraper API

TagX offers a robust and developer-friendly Amazon Scraper API designed to deliver accurate, scalable, and real-time access to product, pricing, and review data. Built with enterprise-grade infrastructure, the API is tailored for businesses that need high-volume data retrieval with consistent uptime and seamless integration.

It stands out with anti-blocking mechanisms, smart proxy rotation, and responsive documentation, making it easy for both startups and large enterprises to deploy and scale their scraping efforts quickly. Whether you're monitoring price fluctuations, gathering review insights, or tracking inventory availability, TagX ensures precision and compliance every step of the way.

Key Features:

High-volume request support with 99.9% uptime.

Smart proxy rotation and CAPTCHA bypassing.

Real-time data scraping with low latency.

Easy to integrate, with structured JSON/CSV outputs.

Comprehensive support for reviews, ratings, pricing, and more.

2. Zyte Amazon Scraper API

Zyte offers a comprehensive Amazon scraping solution tailored for businesses that need precision and performance. Known for its ultra-fast response times and nearly perfect success rate across millions of Amazon URLs, Zyte is an excellent choice for enterprise-grade projects. Its machine learning-powered proxy rotation and smart fingerprinting ensure you're always getting clean data, while dynamic parsing helps you retrieve exactly what you need—from prices and availability to reviews and ratings.

Key Features:

Ultra-reliable with 100% success rate on over a million Amazon URLs.

Rapid response speeds averaging under 200ms.

Smart proxy rotation powered by machine learning.

Dynamic data parsing for pricing, availability, reviews, and more.

3. Oxylabs Amazon Scraper API

Oxylabs delivers a high-performing API for Amazon data extraction, engineered for both real-time and bulk scraping needs. It supports dynamic JavaScript rendering, making it ideal for dealing with Amazon’s complex front-end structures. Robust proxy management and high reliability ensure smooth data collection for large-scale operations. Perfect for businesses seeking consistency and depth in their scraping workflows.

Key Features:

99.9% success rate on product pages.

Fast average response time (~250ms).

Offers both real-time and batch processing.

Built-in dynamic JavaScript rendering for tough-to-reach data.

4. Bright Data Amazon Scraper API

Bright Data provides a flexible and feature-rich API designed for heavy-duty Amazon scraping. It comes equipped with advanced scraping tools, including automatic CAPTCHA solving and JavaScript rendering, while also offering full compliance with ethical web scraping standards. It’s particularly favored by data-centric businesses that require validated, structured, and scalable data collection.

Key Features:

Automatic IP rotation and CAPTCHA solving.

Support for JavaScript rendering for dynamic pages.

Structured data parsing and output validation.

Compliant, secure, and enterprise-ready.

5. ScraperAPI

ScraperAPI focuses on simplicity and developer control, making it perfect for teams who want easy integration with their own tools. It takes care of all the heavy lifting—proxies, browsers, CAPTCHAs—so developers can focus on building applications. Its customization flexibility and JSON parsing capabilities make it a top choice for startups and mid-sized projects.

Key Features:

Smart proxy rotation and automatic CAPTCHA handling.

Custom headers and query support.

JSON output for seamless integration.

Supports JavaScript rendering for complex pages.

6. SerpApi Amazon Scraper

SerpApi offers an intuitive and lightweight API that is ideal for fetching Amazon product search results quickly and reliably. Built for speed, SerpApi is especially well-suited for real-time tasks and applications that need low-latency scraping. With flexible filters and multi-language support, it’s a great tool for localized e-commerce tracking and analysis.

Key Features:

Fast and accurate search result scraping.

Clean JSON output formatting.

Built-in CAPTCHA bypass.

Localized filtering and multi-region support.

Conclusion

In the ever-evolving digital commerce landscape, real-time Amazon data scraping can mean the difference between thriving and merely surviving. TagX’s Amazon Scraper API stands out as one of the most reliable and developer-friendly tools for seamless Amazon data extraction.

With a robust infrastructure, unmatched accuracy, and smooth integration, TagX empowers businesses to make smart, data-driven decisions. Its anti-blocking mechanisms, customizable endpoints, and developer-focused documentation ensure efficient, scalable scraping without interruptions.

Whether you're tracking Amazon pricing trends, monitoring product availability, or decoding consumer sentiment, TagX delivers fast, secure, and compliant access to real-time Amazon data. From agile startups to enterprise powerhouses, the platform grows with your business—fueling smarter inventory planning, better marketing strategies, and competitive insights.

Don’t settle for less in a competitive marketplace. Experience the strategic advantage of TagX—your ultimate Amazon scraping API.

Try TagX’s Amazon Scraper API today and unlock the full potential of Amazon data!

Original Source: https://www.tagxdata.com/amazon-scraper-api-made-easy-get-product-price-and-review-data


Why Businesses Need Reliable Web Scraping Tools for Lead Generation

The Importance of Data Extraction in Business Growth

Efficient data scraping tools are essential for companies looking to expand their customer base and enhance their marketing efforts. Web scraping enables businesses to extract valuable information from various online sources, such as search engine results, company websites, and online directories. This data fuels lead generation, helping organizations find potential clients and gain a competitive edge.

Not all web scraping tools provide the accuracy and efficiency required for high-quality data collection. Choosing the right solution ensures businesses receive up-to-date contact details, minimizing errors and wasted efforts. One notable option is Autoscrape, a widely used scraper tool that simplifies data mining for businesses across multiple industries.

Why Choose Autoscrape for Web Scraping?

Autoscrape is a powerful data mining tool that allows businesses to extract emails, phone numbers, addresses, and company details from various online sources. With its automation capabilities and easy-to-use interface, it streamlines lead generation and helps businesses efficiently gather industry-specific data.

The platform supports SERP scraping, enabling users to collect information from search engines like Google, Yahoo, and Bing. This feature is particularly useful for businesses seeking company emails, websites, and phone numbers. Additionally, Google Maps scraping functionality helps businesses extract local business addresses, making it easier to target prospects by geographic location.

How Autoscrape Compares to Other Web Scraping Tools

Many web scraping tools claim to offer extensive data extraction capabilities, but Autoscrape stands out due to its robust features:

Comprehensive Data Extraction: Unlike many free web scrapers, Autoscrape delivers structured and accurate data from a variety of online sources, ensuring businesses obtain quality information.

Automated Lead Generation: Businesses can set up automated scraping processes to collect leads without manual input, saving time and effort.

Integration with External Tools: Autoscrape provides seamless integration with CRM platforms, marketing software, and analytics tools via API and webhooks, simplifying data transfer.

Customizable Lead Lists: Businesses receive sales lead lists tailored to their industry, each containing 1,000 targeted entries. This feature covers sectors like agriculture, construction, food, technology, and tourism.

User-Friendly Data Export: Extracted data is available in CSV format, allowing easy sorting and filtering by industry, location, or contact type.
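As a rough illustration of sorting and filtering an exported CSV, the sketch below uses only Python's standard library. The column names (`company`, `industry`, `location`, `email`) and the sample rows are assumptions for demonstration. They are not Autoscrape's actual export schema.

```python
import csv
import io

# Hypothetical export; real column names depend on the tool.
SAMPLE_CSV = """company,industry,location,email
Acme Farms,agriculture,Austin,info@acmefarms.example
BuildRight,construction,Denver,sales@buildright.example
Green Fields,agriculture,Denver,hello@greenfields.example
"""


def filter_leads(csv_text, **criteria):
    """Return rows whose columns match every given criterion (case-insensitive)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        row for row in reader
        if all((row.get(col) or "").lower() == val.lower()
               for col, val in criteria.items())
    ]


# Keep only agriculture leads, then sort them by location.
agri = sorted(filter_leads(SAMPLE_CSV, industry="agriculture"),
              key=lambda row: row["location"])
```

The same function accepts several criteria at once, for example `filter_leads(SAMPLE_CSV, industry="agriculture", location="Denver")`.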

Who Can Benefit from Autoscrape?

Various industries rely on web scraping tools for data mining and lead generation services. Autoscrape caters to businesses needing precise, real-time data for marketing campaigns, sales prospecting, and market analysis. Companies in the following sectors find Autoscrape particularly beneficial:

Marketing Agencies: Extract and organize business contacts for targeted advertising campaigns.

Real Estate Firms: Collect property listings, real estate agencies, and investor contact details.

E-commerce Businesses: Identify potential suppliers, manufacturers, and distributors.

Recruitment Agencies: Gather data on potential job candidates and hiring companies.

Financial Services: Analyze market trends, competitors, and investment opportunities.

How Autoscrape Supports Business Expansion

Businesses that rely on lead generation services need accurate, structured, and up-to-date data to make informed decisions. Autoscrape enhances business operations by:

Improving Customer Outreach: With access to verified emails, phone numbers, and business addresses, companies can streamline their cold outreach strategies.

Enhancing Market Research: Collecting relevant data from SERPs, online directories, and Google Maps helps businesses understand market trends and competitors.

Increasing Efficiency: Automating data scraping processes reduces manual work and ensures consistent data collection without errors.

Optimizing Sales Funnel: By integrating scraped data with CRM systems, businesses can manage and nurture leads more effectively.

Testing Autoscrape: Free Trial and Accessibility

For businesses unsure about committing to a web scraper tool, Autoscrape offers a free account that provides up to 100 scrape results. This allows users to evaluate the platform's capabilities before making a purchase decision.

Whether a business requires SERP scraping, Google Maps data extraction, or automated lead generation, Autoscrape delivers a reliable and efficient solution that meets the needs of various industries. Choosing the right data scraping tool is crucial for businesses aiming to scale operations and enhance their customer acquisition strategies.

Investing in a well-designed web scraping solution like Autoscrape ensures businesses can extract valuable information quickly and accurately, leading to more effective marketing and sales efforts.


Data/Web Scraping

What Is Data Scraping?

Data scraping is the process of extracting information from websites or other digital sources. It is also known as web scraping.

Benefits of Data Scraping

1. Competitive Intelligence

Stay ahead of competitors by tracking their prices, product launches, reviews, and marketing strategies.

2. Dynamic Pricing

Automatically update your prices based on market demand, competitor moves, or stock levels.

3. Market Research & Trend Discovery

Understand what’s trending across industries, platforms, and regions.

4. Lead Generation

Collect emails, names, and company data from directories, LinkedIn, and job boards.

5. Automation & Time Savings

Why hire a team to collect data manually when a scraper can do it 24/7?
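To make the dynamic pricing benefit (item 2) concrete, here is a minimal Python sketch of one possible repricing rule. The function name, the `undercut` amount, and the floor price are all hypothetical. Real repricing systems usually weigh demand and stock levels as well.

```python
def reprice(current_price, competitor_prices, floor_price, undercut=0.01):
    """Hypothetical rule: sit just below the cheapest competitor, never below a floor."""
    if not competitor_prices:
        return current_price  # no market data: leave the price alone
    target = min(competitor_prices) - undercut
    # Never drop below the floor, which protects the seller's margin.
    return round(max(target, floor_price), 2)
```

A scraper would supply `competitor_prices` on each run, and the floor keeps an aggressive competitor from dragging the price below cost.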

Who Uses Data Scraping?

Businesses, marketers, e-commerce and travel companies, startups, analysts, sales teams, recruiters, researchers, investors, agents, and others.

Top Data Scraping Browser Extensions

Web Scraper.io

Scraper

Instant Data Scraper

Data Miner

Table Capture

Top Data Scraping Tools

BeautifulSoup

Scrapy

Selenium

Playwright

Octoparse

Apify

ParseHub

Diffbot

Custom Scripts

Legal and Ethical Notes

Not all websites allow scraping. Some have terms of service that forbid it, and scraping too aggressively can get IPs blocked or lead to legal trouble.
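One practical first step is to check a site's robots.txt before fetching anything. The sketch below uses Python's standard `urllib.robotparser`. The robots.txt content, the `example-bot` user agent, and the `may_fetch` helper are made up for illustration, and passing this check does not replace reading a site's terms of service.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; a real scraper would load it from the site
# with set_url(...) and read() instead of parsing a string.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())


def may_fetch(url, user_agent="example-bot"):
    """Return True if the parsed robots.txt rules allow this agent to fetch url."""
    return parser.can_fetch(user_agent, url)


# Seconds the site asks crawlers to wait between requests (None if unspecified).
delay = parser.crawl_delay("example-bot")
```

Honoring the crawl delay between requests also reduces the chance of being blocked for scraping too aggressively.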

Apply for Data/Web Scraping: https://www.fiverr.com/s/99AR68a


Scrape Product Listings from Amazon, Flipkart, and Meesho for Market Research

Introduction

In today's competitive e-commerce environment, staying ahead requires access to real-time insights. Businesses are gaining an edge by leveraging tools to scrape product listings from Amazon, Flipkart, and Meesho. These platforms dominate the Indian market, hosting millions of sellers and a diverse range of products. By extracting and analyzing data from these platforms, businesses can uncover key insights into pricing trends, delivery models, product popularity, and competitive positioning.

This process enables companies to make informed decisions, refine their strategies, and understand consumer behavior better. Whether comparing Flipkart, Amazon, and Meesho side by side, tracking price fluctuations, or assessing product availability, scraped product data can provide a clear advantage. By scraping pricing data from Flipkart, Amazon, and Meesho, businesses can monitor competition in real time, optimize their pricing strategies, and stay relevant in the fast-evolving e-commerce landscape.

The Value of Product Listing Data

Product listings provide a rich mix of structured and semi-structured data that can reveal valuable market trends, enabling brands to better align with consumer expectations. Basic details like product name, price, category, and delivery charges are essential data points. In contrast, more nuanced metrics such as discounts, delivery times, seller ratings, and customer reviews provide deeper insights into consumer behavior. When gathered and analyzed in large volumes, this data becomes a powerful tool for decision-making.

For instance, the Flipkart product and review dataset helps businesses assess customer sentiment and product popularity. Brands can also extract Flipkart product data to monitor pricing strategies and availability across different regions. Additionally, Meesho data scraping services provide insights into cost-effective products and emerging trends within the budget-conscious market. By harnessing these datasets, businesses can make data-driven decisions to optimize their strategies and meet changing market demands.
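The data points described above could be modeled along the following lines in Python. This is a sketch under stated assumptions: the `Listing` fields and the helper function are illustrative names, not any platform's actual schema.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Listing:
    # Field names are illustrative, not any platform's actual schema.
    platform: str
    name: str
    price: float            # current selling price
    list_price: float       # price before discount
    delivery_charge: float
    seller_rating: float

    @property
    def discount_pct(self):
        """Discount relative to the list price, as a percentage."""
        return round(100 * (self.list_price - self.price) / self.list_price, 1)


def average_price_by_platform(listings):
    """Group listings by platform and return the mean selling price for each."""
    by_platform = {}
    for item in listings:
        by_platform.setdefault(item.platform, []).append(item.price)
    return {platform: round(mean(prices), 2)
            for platform, prices in by_platform.items()}
```

Keeping the basic fields (price, delivery charge) next to derived metrics such as `discount_pct` makes it straightforward to compare the same product across platforms once listings are collected in bulk.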

Why Scraping?

Scraping automates data extraction from websites, offering businesses a streamlined and efficient way to gather critical information. Here's how scraping benefits companies: