#failover cluster node

Explore tagged Tumblr posts

Text

Failover Cluster Node ve Shared Disk Ekleme

Merhaba, bu yazımda sizlere Failover Cluster Node ve Shared Disk Ekleme adımlarından bahsedeceğim. Failover cluster yapısı oluştururken, yeni bir node eklemek ve shared disk ayarlarını yapmak kritik adımlardır. Aşağıda bu işlemleri adım adım açıklayan bir doküman hazırladım. Failover Cluster’a Node Ekleme ve Shared Disk Ayarları Gereksinimler İşletim Sistemi: Windows Server (2016 ve sonrası…

#Bilmeniz Gereken Failover Cluster Komutları#failover cluster node#Failover Cluster Node ve Shared Disk Ekleme#failover clustering

0 notes

Text

Kubernetes vs. Traditional Infrastructure: Why Clusters and Pods Win

In today’s fast-paced digital landscape, agility, scalability, and reliability are not just nice-to-haves—they’re necessities. Traditional infrastructure, once the backbone of enterprise computing, is increasingly being replaced by cloud-native solutions. At the forefront of this transformation is Kubernetes, an open-source container orchestration platform that has become the gold standard for managing containerized applications.

But what makes Kubernetes a superior choice compared to traditional infrastructure? In this article, we’ll dive deep into the core differences, and explain why clusters and pods are redefining modern application deployment and operations.

Understanding the Fundamentals

Before drawing comparisons, it’s important to clarify what we mean by each term:

Traditional Infrastructure

This refers to monolithic, VM-based environments typically managed through manual or semi-automated processes. Applications are deployed on fixed servers or VMs, often with tight coupling between hardware and software layers.

Kubernetes

Kubernetes abstracts away infrastructure by using clusters (groups of nodes) to run pods (the smallest deployable units of computing). It automates deployment, scaling, and operations of application containers across clusters of machines.

Key Comparisons: Kubernetes vs Traditional Infrastructure

Feature

Traditional Infrastructure

Kubernetes

Scalability

Manual scaling of VMs; slow and error-prone

Auto-scaling of pods and nodes based on load

Resource Utilization

Inefficient due to over-provisioning

Efficient bin-packing of containers

Deployment Speed

Slow and manual (e.g., SSH into servers)

Declarative deployments via YAML and CI/CD

Fault Tolerance

Rigid failover; high risk of downtime

Self-healing, with automatic pod restarts and rescheduling

Infrastructure Abstraction

Tightly coupled; app knows about the environment

Decoupled; Kubernetes abstracts compute, network, and storage

Operational Overhead

High; requires manual configuration, patching

Low; centralized, automated management

Portability

Limited; hard to migrate across environments

High; deploy to any Kubernetes cluster (cloud, on-prem, hybrid)

Why Clusters and Pods Win

1. Decoupled Architecture

Traditional infrastructure often binds application logic tightly to specific servers or environments. Kubernetes promotes microservices and containers, isolating app components into pods. These can run anywhere without knowing the underlying system details.

2. Dynamic Scaling and Scheduling

In a Kubernetes cluster, pods can scale automatically based on real-time demand. The Horizontal Pod Autoscaler (HPA) and Cluster Autoscaler help dynamically adjust resources—unthinkable in most traditional setups.

3. Resilience and Self-Healing

Kubernetes watches your workloads continuously. If a pod crashes or a node fails, the system automatically reschedules the workload on healthy nodes. This built-in self-healing drastically reduces operational overhead and downtime.

4. Faster, Safer Deployments

With declarative configurations and GitOps workflows, teams can deploy with speed and confidence. Rollbacks, canary deployments, and blue/green strategies are natively supported—streamlining what’s often a risky manual process in traditional environments.

5. Unified Management Across Environments

Whether you're deploying to AWS, Azure, GCP, or on-premises, Kubernetes provides a consistent API and toolchain. No more re-engineering apps for each environment—write once, run anywhere.

Addressing Common Concerns

“Kubernetes is too complex.”

Yes, Kubernetes has a learning curve. But its complexity replaces operational chaos with standardized automation. Tools like Helm, ArgoCD, and managed services (e.g., GKE, EKS, AKS) help simplify the onboarding process.

“Traditional infra is more secure.”

Security in traditional environments often depends on network perimeter controls. Kubernetes promotes zero trust principles, pod-level isolation, and RBAC, and integrates with service meshes like Istio for granular security policies.

Real-World Impact

Companies like Spotify, Shopify, and Airbnb have migrated from legacy infrastructure to Kubernetes to:

Reduce infrastructure costs through efficient resource utilization

Accelerate development cycles with DevOps and CI/CD

Enhance reliability through self-healing workloads

Enable multi-cloud strategies and avoid vendor lock-in

Final Thoughts

Kubernetes is more than a trend—it’s a foundational shift in how software is built, deployed, and operated. While traditional infrastructure served its purpose in a pre-cloud world, it can’t match the agility and scalability that Kubernetes offers today.

Clusters and pods don’t just win—they change the game.

0 notes

Text

Memorystore For Valkey: An Open-Source Data Transformation

Memorystore, Google Cloud's fully managed in-memory solution for Valkey, Redis, and Memcached, is used by over 90% of the top 100 clients. Client installs increasingly use memorystore. Google Cloud made Memorystore for Valkey publicly available, advancing open-source cloud in-memory data management. The GA supports Memorystore for Valkey for production workloads including Private Service Connect, multi-VPC access, cross-region replication, persistence, and more. Also has 99.99% availability SLA.

Memorystore for Valkey was adopted by hundreds of Google Cloud users, including MLB and Bandai Namco Studios Inc., after its August 2024 preview. They've provided us invaluable feedback in recent months, which has shaped their new service:

Memorystore has helped Major League Baseball enhance fan data transmission. Google Cloud makes Memorystore for Valkey, an open-source alternative, widely available. They believe its built-in flexibility and community-driven development will boost speed, scalability, and real-time data processing to better serve players, fans, and operations.

Memorystore supports Bandai Namco Studios' high-scale, low-latency performance, essential for many titles. Memorystore for Valkey's GA launch thrills them. We can increase global player base and real-time gameplay with its features, speed, and open-source nature. It's pleased to adopt Memorystore for Valkey's gaming innovation.

The latest at GA

Memorystore for Valkey has the following enterprise-grade features and a 99.99% SLA powered by Google's high availability and zonal placement algorithms at GA:

Customers can connect to 250 shards with two IP addresses using Valkey's Memorystore's Private Service Connect. Memorystore's discovery endpoint is highly available, so your cluster has no single point of failure.

Memorystore for Valkey lets your cluster grow to meet application demands with cost-optimized functionality and zero-downtime scalability. Supported cluster sizes are 1–250 nodes.

Integrated Google-built vector similarity search: Memorystore for Valkey provides in-memory vector search, over a billion vectors, 99% recall, and single-digit millisecond latency.

Memorystore for Valkey uses Google's vector search module, the Valkey OSS project's official search module, to achieve this performance. The module supports RAG, recommendation systems, semantic search, and other AI applications. Hybrid search improves user experience and application performance by providing more accurate and contextually relevant results.

Integrated managed backups automate migration, compliance, and disaster recovery.

CRR provides low-latency measurements and disaster recovery preparedness. Google Cloud now supports two clustered regions with varied replica counts in addition to the primary region. Valkey's memorystore syncs the control and data planes across geographic borders.

Multiple client-side VPCs can access a Valkey cluster Private Service Connection endpoint using Memorystore for Valkey. Customers may be safely linked across projects and VPCs utilising this technique.

Persistence: Memorystore for Valkey offers RDB-snapshot and AOF-logging persistence for diverse data durability demands.

Memorystore for Valkey supports Valkey 7.2 and its improved engine, Valkey 8.0:

Great performance: Memorystore for Valkey 8.0's asynchronous I/O improvements raise throughput and achieve 2x QPS of Memorystore for Redis Cluster at microsecond latency, making it easy to manage internet-scale workloads.

Even if its pricing is equal to Memorystore for Redis Cluster, Memorystore for Valkey's performance optimisations may result in considerable cost savings by requiring fewer nodes to handle the same workload.

Optimised memory efficiency: Valkey 8.0 optimises memory management to save memory and reduce operating costs for various workloads.

Scalability is more reliable with Valkey 8.0's Google-contributed automatic failover for empty shards and highly available migration states. They added migration states auto-reparing for system resilience.

Memorystore for Valkey offers maintenance windows, single zone and shard clusters, free inter-zone replication, and more.

Customer trust and Google Cloud's open source commitment After Redis modified Redis OSS's licensing in March 2024, the open-source community launched Valkey OSS, funded by Google, Amazon, Snap, and others.

Your trust is highly appreciated. Memorystore for Valkey on Google Cloud offers access to powerful, open technologies. The Linux Foundation endorses Valkey OSS, which, unlike Redis, is licensed under the BSD 3-clause. It has been watching Valkey build steam.

It supports and enhances Memorystore for Redis Cluster, Redis, and Valkey. When Memorystore for Redis clients are ready to transition to Valkey because to its open-source, reliability, and price-performance ratio, Google Cloud provides comprehensive migration aid. Memorystore for Valkey's compliance with Redis OSS 7.2 APIs and your favourite clients simplifies open source migration. You may also reuse Memorystore for Redis and cluster committed usage discounts to ease the transfer.

Now try Valkey's Memorystore

Testing Memorystore for Valkey is the best way to determine its power. Start Valkey or read the documentation. Self-managing Redis shouldn't be difficult. Discover how Memorystore for Valkey powers apps and is simple to use. This lets you focus on designing effective and unique enterprise apps.

#Memorystore#Valkey#Memorystore For Valkey#Valkey 8.0#Memorystore for Redis Cluster#Redis Cluster#technology#technews#govindhtech#news#technologynews#technologytrends

1 note

·

View note

Text

Enterprise Kubernetes Storage with Red Hat OpenShift Data Foundation (DO370)

Kubernetes has become the de facto standard for container orchestration, enabling organizations to build, deploy, and manage applications at scale. However, running stateful applications in Kubernetes presents unique challenges, particularly in managing persistent storage, scalability, and data resiliency. This is where Red Hat OpenShift Data Foundation (ODF) steps in, offering a comprehensive software-defined storage solution tailored for OpenShift environments.

What is Red Hat OpenShift Data Foundation?

Red Hat OpenShift Data Foundation (formerly known as OpenShift Container Storage) is an integrated storage solution designed to provide scalable, reliable, and persistent storage for applications running on OpenShift. Built on Ceph, NooBaa, and Rook, ODF offers a seamless experience for developers and administrators looking to manage storage efficiently across hybrid and multi-cloud environments.

Key Features of OpenShift Data Foundation

Unified Storage Platform – ODF provides block, file, and object storage, catering to diverse application needs.

High Availability and Resilience – Ensures data availability with multi-node replication and automated failover mechanisms.

Multi-Cloud and Hybrid Cloud Support – Enables storage across on-premises, private cloud, and public cloud environments.

Dynamic Storage Provisioning – Automatically provisions storage resources based on application demands.

Advanced Data Services – Supports features like data compression, deduplication, encryption, and snapshots for data protection.

Integration with OpenShift – Provides seamless integration with OpenShift, including CSI (Container Storage Interface) support and persistent volume management.

Why Enterprises Need OpenShift Data Foundation

Enterprises adopting OpenShift for their containerized workloads require a storage solution that can keep up with their scalability and performance needs. ODF enables businesses to:

Run stateful applications efficiently in Kubernetes environments.

Ensure data persistence, integrity, and security for mission-critical workloads.

Optimize cost and performance with intelligent data placement across hybrid and multi-cloud architectures.

Simplify storage operations with automation and centralized management.

Learning Red Hat OpenShift Data Foundation with DO370

The DO370 course by Red Hat is designed for professionals looking to master enterprise Kubernetes storage with OpenShift Data Foundation. This course provides hands-on training in deploying, managing, and troubleshooting ODF in OpenShift environments. Participants will learn:

How to deploy and configure OpenShift Data Foundation.

Persistent storage management in OpenShift clusters.

Advanced topics like disaster recovery, storage performance optimization, and security best practices.

Conclusion

As Kubernetes adoption grows, organizations need a robust storage solution that aligns with the agility and scalability of containerized environments. Red Hat OpenShift Data Foundation delivers an enterprise-grade storage platform, making it an essential component for any OpenShift deployment. Whether you are an IT architect, administrator, or developer, gaining expertise in DO370 will empower you to harness the full potential of Kubernetes storage and drive innovation within your organization.

Interested in learning more? Explore Red Hat’s DO370 course and take your OpenShift storage skills to the next level!

For more details www.hawkstack.com

0 notes

Text

Mastering Redis Clustering for Scalable Applications | Expert Guide

Mastering Redis Clustering for Large-Scale Applications 1. Introduction 1.1 Brief Explanation Redis Clustering is a powerful approach to scaling Redis for large-scale applications by distributing data across multiple nodes. It enables high availability, fault tolerance, and improved performance by automatically handling failovers and ensuring data redundancy. 1.2 What You Will Learn How…

0 notes

Text

MongoDB Sharded Cluster Connectivity Issue – Solved! 🚀

Challenges: - Failed database connections across mongos nodes 🔌 - Initial fixes like restarts and reboots didn’t work 🔄 - Root cause: HMAC key expiration due to a MongoDB bug in versions 4.2.2–4.2.11 🔧

Solution: - Triggered a Config Server Failover to regenerate HMAC keys 💡

Results: - Reconnected mongos services 🔗 - Normal operations restored ⚙️ - Recommended upgrading to MongoDB 4.2.12+ to prevent recurrence 🔝

Facing a similar issue? Let us help you troubleshoot and optimize your database setup! 💬

👉 Contact us here: https://simplelogic-it.com/contact-us/ 📞

#MongoDB#ShardedCluster#HMACKeys#DatabaseOptimization#ITSupport#SimpleLogic#SimpleLogicIT#MakingITSimple#MakeITSimple

0 notes

Text

High Availability and Disaster Recovery with OpenShift Virtualization

In today’s fast-paced digital world, ensuring high availability (HA) and effective disaster recovery (DR) is critical for any organization. OpenShift Virtualization offers robust solutions to address these needs, seamlessly integrating with your existing infrastructure while leveraging the power of Kubernetes.

Understanding High Availability

High availability ensures that your applications and services remain operational with minimal downtime, even in the face of hardware failures or unexpected disruptions. OpenShift Virtualization achieves HA through features like:

Clustered Environments: By running workloads across multiple nodes, OpenShift minimizes the risk of a single point of failure.

Example: A database application is deployed on three nodes. If one node fails, the other two continue to operate without interruption.

Pod Auto-Healing: Kubernetes’ built-in mechanisms automatically restart pods on healthy nodes in case of a failure.

Example: If a virtual machine (VM) workload crashes, OpenShift Virtualization can restart it on another node in the cluster.

Disaster Recovery Made Easy

Disaster recovery focuses on restoring operations quickly after catastrophic events, such as data center outages or cyberattacks. OpenShift Virtualization supports DR through:

Snapshot and Backup Capabilities: OpenShift Virtualization provides options to create consistent snapshots of your VMs, ensuring data can be restored to a specific point in time.

Example: A web server VM is backed up daily. If corruption occurs, the VM can be rolled back to the latest snapshot.

Geographic Redundancy: Workloads can be replicated to a secondary site, enabling a failover strategy.

Example: Applications running in a primary data center automatically shift to a backup site during an outage.

Key Features Supporting HA and DR in OpenShift Virtualization

Live Migration: Move running VMs between nodes without downtime, ideal for maintenance and load balancing.

Use Case: Migrating workloads off a node scheduled for an update to maintain uninterrupted service.

Node Affinity and Anti-Affinity Rules: Distribute workloads strategically to prevent clustering on a single node.

Use Case: Ensuring VMs hosting critical applications are spread across different physical hosts.

Storage Integration: Support for persistent volumes ensures data continuity and resilience.

Use Case: A VM storing transaction data on persistent volumes continues operating seamlessly even if it is restarted on another node.

Automated Recovery: Integration with tools like Velero for backup and restore enhances DR strategies.

Use Case: Quickly restoring all workloads to a secondary cluster after a ransomware attack.

Real-World Implementation Tips

To make the most of OpenShift Virtualization’s HA and DR capabilities:

Plan for Redundancy: Deploy at least three control plane nodes and three worker nodes for a resilient setup.

Leverage Monitoring Tools: Tools like Prometheus and Grafana can help proactively identify issues before they escalate.

Test Your DR Plan: Regularly simulate failover scenarios to ensure your DR strategy is robust and effective.

Conclusion

OpenShift Virtualization empowers organizations to build highly available and disaster-resilient environments, ensuring business continuity even in the most challenging circumstances. By leveraging Kubernetes’ inherent capabilities along with OpenShift’s enhancements, you can maintain seamless operations and protect your critical workloads. Start building your HA and DR strategy today with OpenShift Virtualization, and stay a step ahead in the competitive digital landscape.

For more information visit : www.hawkstack.com

0 notes

Text

Kubernetes for Developers: Master Modern Application Management

Unleash the power of Kubernetes with HawkStack’s expert training.

In today’s fast-paced tech landscape, developers need agile tools to manage, scale, and deploy applications efficiently. Enter Kubernetes, the revolutionary open-source orchestration tool that has become a game-changer in the world of containerized applications. Whether you’re building cloud-native apps or managing complex microservices architectures, Kubernetes is the tool that empowers you to automate and streamline your operations.

What is Kubernetes?

Kubernetes, often abbreviated as K8s, is designed to automate the deployment, scaling, and management of containerized applications. It provides a robust framework to run distributed systems, simplifying management tasks like scaling, updates, and failover processes. With Kubernetes, developers can maintain their application environments with ease, reducing manual labor and allowing them to focus on coding.

Why Developers Should Learn Kubernetes

For developers looking to enhance their careers, Kubernetes proficiency is a must-have skill. It enables you to:

Simplify Container Management: Kubernetes automates the container lifecycle, allowing you to deploy applications across multi-node clusters effortlessly.

Seamless Scaling: Whether you're handling a sudden surge of traffic or scaling down after peak hours, Kubernetes ensures your application adjusts automatically.

Efficient Resource Utilization: Optimize resource allocation and reduce infrastructure costs by managing workloads more effectively.

CI/CD Integration: Kubernetes integrates seamlessly with DevOps pipelines, making continuous integration and continuous deployment (CI/CD) a breeze.

What You’ll Learn in HawkStack’s Kubernetes Course

Our Kubernetes for Developers course will teach you how to:

Understand Containerization: Learn the fundamentals of Docker and how to containerize your applications.

Deploy and Configure: Gain hands-on experience deploying applications on a Kubernetes cluster.

Manage Multi-Node Clusters: Understand how to configure and manage applications across multiple nodes for high availability and scalability.

Scale Applications: Automate the scaling of your applications in response to traffic demands.

Handle Updates Seamlessly: Implement rolling updates and rollback mechanisms without downtime.

Who Should Join?

This course is perfect for:

Developers eager to master cloud-native development.

IT professionals managing application infrastructure.

DevOps engineers looking to streamline CI/CD workflows.

Join HawkStack's Kubernetes Training

Ready to transform the way you manage and deploy your applications? Visit HawkStack and enroll in our Kubernetes for Developers course today. Unlock the future of modern application management and accelerate your path to success!

Stay ahead of the curve. Master Kubernetes with HawkStack.

0 notes

Text

Failover Cluster Destroy İşlemi

Failover Cluster Destroy İşlemi

Merhaba, bu yazımda sizlere Failover Cluster destroy işlemi konusundan bahsedeceğim. Örneğin; bir cluster ortamınız var. Burada yeni bir cluster ismi yaratmak ya da cluster ip adresi değiştirmek istiyorsunuz. Bunun için mutlaka ilk işlem olarak mevcut cluster destroy etmeniz gerekmektedir. Failover Cluster üzerinde bir cluster’ı yok etme (destroy) işlemi, genellikle yapılandırmanızı temizlemek…

View On WordPress

0 notes

Text

The Future of Data Centers: Why Hyperconverged Infrastructure (HCI) Is the Next Big Thing in IT

In an era where digital transformation is at the heart of nearly every business strategy, traditional data centers are under unprecedented pressure to evolve. Organizations need IT infrastructure that can support modern workloads, ensure high availability, enable seamless scalability, and reduce operational complexity. This has led to the rapid rise of Hyperconverged Infrastructure (HCI)—a paradigm shift in how data centers are architected and managed.

HCI is not just a trend—it represents a foundational shift in IT infrastructure that is shaping the future of the data center. Let’s explore what makes HCI such a compelling choice and why it is poised to become the standard for next-generation IT environments.

What is Hyperconverged Infrastructure (HCI)?

Hyperconverged Infrastructure is a software-defined IT framework that combines compute, storage, and networking into a tightly integrated system. Traditional infrastructure requires separate components for servers, storage arrays, and networking hardware, each with its own management interface. HCI collapses these components into a unified platform that can be managed centrally, often through a single pane of glass.

At the heart of HCI is virtualization. Resources are abstracted from the underlying hardware and pooled together, allowing IT teams to provision and manage them dynamically. These systems typically run on industry-standard x86 servers and use intelligent software to manage workloads, data protection, and scalability.

Key Drivers Behind HCI Adoption

1. Operational Simplicity

One of the most compelling advantages of HCI is the reduction in complexity. By consolidating infrastructure components into a unified system, IT teams can dramatically streamline deployment, management, and troubleshooting. The simplified architecture allows for faster provisioning of applications and services, reduces the need for specialized skills, and minimizes the risk of configuration errors.

2. Scalability on Demand

Unlike traditional infrastructure, where scaling often involves costly overprovisioning or complex re-architecting, HCI offers linear scalability. Organizations can start small and scale out incrementally by adding additional nodes to the cluster—without disrupting existing workloads. This makes HCI an ideal fit for both growing enterprises and dynamic application environments.

3. Lower Total Cost of Ownership (TCO)

HCI delivers cost savings across both capital and operational expenses. Capital savings come from using off-the-shelf hardware and eliminating the need for dedicated storage appliances. Operational cost reductions stem from simplified management, reduced power and cooling needs, and fewer personnel requirements. HCI also enables automation, which reduces manual tasks and enhances efficiency.

4. Improved Performance and Reliability

With storage and compute co-located on the same nodes, data does not have to travel across disparate systems, resulting in lower latency and improved performance. HCI platforms are built with high availability and data protection in mind, often including features like automated failover, snapshots, replication, deduplication, and compression.

5. Cloud-Like Flexibility, On-Premises

HCI bridges the gap between on-premises infrastructure and the public cloud by offering a cloud-like experience within the data center. Self-service provisioning, software-defined controls, and seamless integration with hybrid and multi-cloud environments make HCI a cornerstone for cloud strategies—especially for businesses looking to retain control over sensitive workloads while embracing cloud agility.

Strategic Use Cases for HCI

The versatility of HCI makes it suitable for a wide range of IT scenarios, including:

Virtual Desktop Infrastructure (VDI): Supports thousands of virtual desktops with consistent performance, simplified deployment, and strong security.

Edge Computing: Compact, self-contained HCI systems are ideal for remote or branch offices where IT support is limited.

Disaster Recovery (DR): Integrated backup, replication, and failover features make HCI a powerful platform for DR strategies.

Private and Hybrid Clouds: HCI provides a robust foundation for organizations building private clouds or integrating with public cloud providers like AWS, Azure, or Google Cloud.

Application Modernization: Simplifies the migration and deployment of modern, containerized applications and legacy workloads alike.

Potential Challenges and Considerations

While HCI offers significant benefits, organizations should also be aware of potential challenges:

Vendor Lock-In: Many HCI platforms are proprietary, which can limit flexibility in choosing hardware or software components.

Initial Learning Curve: Shifting from traditional infrastructure to HCI requires new skills and changes in operational processes.

Not Always Cost-Effective at Scale: For extremely large environments with very high-performance needs, traditional architectures may still offer better economics or flexibility.

That said, many of these challenges can be mitigated with proper planning, vendor due diligence, and a clear understanding of business goals.

The Road Ahead: HCI as a Foundation for Modern IT

According to industry analysts, the global HCI market is projected to grow significantly over the next several years, driven by increasing demand for agile, software-defined infrastructure. As organizations prioritize flexibility, security, and cost-efficiency, HCI is emerging as a key enabler of digital transformation.

Forward-looking businesses are leveraging HCI not only to modernize their data centers but also to gain a competitive edge. Whether supporting a hybrid cloud strategy, enabling edge computing, or simplifying IT operations, HCI delivers a robust, scalable, and future-ready solution.

Final Thoughts

Hyperconverged Infrastructure represents more than a technical evolution—it’s a strategic shift toward smarter, more agile IT. As the demands on infrastructure continue to rise, HCI offers a compelling alternative to the complexity and limitations of traditional architectures.

Organizations that embrace HCI are better positioned to respond to change, scale rapidly, and deliver superior digital experiences. For IT leaders seeking to align infrastructure with business goals, HCI is not just the next big thing—it’s the next right step.

0 notes

Text

7: Oracle RAC and High Availability

Oracle RAC (Real Application Clusters) is designed to provide high availability (HA), ensuring that a database remains operational even if one or more components (such as a node or instance) fail. RAC’s architecture offers failover and recovery mechanisms, client-side failover solutions like FAN (Fast Application Notification) and TAF (Transparent Application Failover), and can integrate with…

0 notes

Text

Advantages and Difficulties of Using ZooKeeper in Kubernetes

Advantages and Difficulties of Using ZooKeeper in Kubernetes

Integrating ZooKeeper with Kubernetes can significantly enhance the management of distributed systems, offering various benefits while also presenting some challenges. This post explores the advantages and difficulties associated with deploying ZooKeeper in a Kubernetes environment.

Advantages

Utilizing ZooKeeper in Kubernetes brings several notable advantages. Kubernetes excels at resource management, ensuring that ZooKeeper nodes are allocated effectively for optimal performance. Scalability is streamlined with Kubernetes, allowing you to easily adjust the number of ZooKeeper instances to meet fluctuating demands. Automated failover and self-healing features ensure high availability, as Kubernetes can automatically reschedule failed ZooKeeper pods to maintain continuous operation. Kubernetes also simplifies deployment through StatefulSets, which handle the complexities of stateful applications like ZooKeeper, making it easier to manage and scale clusters. Furthermore, the Kubernetes ZooKeeper Operator enhances this integration by automating configuration, scaling, and maintenance tasks, reducing manual intervention and potential errors.

Difficulties

Deploying ZooKeeper on Kubernetes comes with its own set of challenges. One significant difficulty is ZooKeeper’s inherent statefulness, which contrasts with Kubernetes’ focus on stateless applications. This necessitates careful management of state and configuration to ensure data consistency and reliability in a containerized environment. Ensuring persistent storage for ZooKeeper data is crucial, as improper storage solutions can impact data durability and performance. Complex network configurations within Kubernetes can pose hurdles for reliable service discovery and communication between ZooKeeper instances. Additionally, security is a critical concern, as containerized environments introduce new potential vulnerabilities, requiring stringent access controls and encryption practices. Resource allocation and performance tuning are essential to prevent bottlenecks and maintain efficiency. Finally, upgrading ZooKeeper and Kubernetes components requires thorough testing to ensure compatibility and avoid disruptions.

In conclusion, deploying ZooKeeper in Kubernetes offers a range of advantages, including enhanced scalability and simplified management, but also presents challenges related to statefulness, storage, network configuration, and security. By understanding these factors and leveraging tools like the Kubernetes ZooKeeper Operator, organizations can effectively navigate these challenges and optimize their ZooKeeper deployments.

To gather more knowledge about deploying ZooKeeper on Kubernetes, Click here.

1 note

·

View note

Text

Horizontal Scaling: Harnessing the Potential of NoSQL Databases

In the contemporary landscape of big data and high-traffic applications, traditional relational databases frequently find it challenging to meet the requirements of modern enterprises. The advent of NoSQL databases represents a new category of database management systems, engineered to address the issues of scalability, performance, and flexibility that are pivotal in the era of digital transformation. This blog post aims to delve into the notion of scalable NoSQL database, elucidating their principal features and the advantages they present for businesses aiming to excel in a data-centric environment. Exploring NoSQL Databases

NoSQL databases, also known as "Not Only SQL" databases, signify a shift from the traditional, rigidly structured and schema-bound nature of conventional relational databases. Distinct from their SQL-based counterparts, NoSQL databases offer either a lack of schema or a flexible schema, facilitating the storage and management of unstructured or semi-structured data with greater efficiency.

A hallmark of NoSQL databases is their capability for horizontal scalability. Contrary to the vertical scaling approach of traditional relational databases—where an increase in data volume and workload necessitates the procurement of more powerful hardware—NoSQL databases achieve scalability through the distribution of data across an array of nodes or servers, thereby efficiently managing heightened traffic and storage demands.

Key Characteristics of Scalable NoSQL Databases

Horizontal Scalability: Scalable NoSQL databases excel in horizontal scalability, enabling organizations to augment their database cluster with additional nodes or servers in response to increasing demand. This distributed architecture facilitates seamless scaling without disruption, guaranteeing superior performance and reliability, even under significant loads.

Flexible Data Models: NoSQL databases offer support for a variety of flexible data models, including key-value stores, document databases, column-family stores, and graph databases. Such flexibility allows organizations to select the data model that best aligns with their specific requirements, be it for storing unstructured documents, hierarchical data, or intricately connected data.

High Performance: Designed for high performance and minimal latency, NoSQL databases are ideally suited for real-time applications and data-intensive tasks. Through data distribution across multiple nodes and the utilization of parallel processing, NoSQL databases are capable of managing substantial volumes of transactions and queries with negligible latency.

Fault Tolerance: Inherently fault-tolerant, scalable NoSQL databases incorporate mechanisms for data replication, partitioning, and failover. Should a node failure or network disruption occur, these databases automatically redirect traffic to operational nodes, ensuring continuous service and data accessibility.

Advantages of Scalable NoSQL Databases

Flexibility and Agility: NoSQL databases provide unparalleled flexibility and agility, which enables enterprises to swiftly adapt to evolving requirements and market dynamics. Their support for various data models and the ability to dynamically modify schemas allow organizations to iterate quickly and innovate with greater certainty.

Cost Efficiency: The horizontal scalability characteristic of NoSQL databases permits enterprises to incrementally scale their infrastructure based on demand, circumventing the initial expenses and complexities associated with vertical scaling strategies. Utilizing commodity hardware and cloud services enables cost-efficient scalability while maintaining high levels of performance and reliability.

Scalability on Demand: Scalable NoSQL databases afford enterprises the capacity to scale resources on demand, dynamically adjusting to fluctuations in workload in real time. This capability ensures that enterprises can manage periods of peak demand without the need for over-provisioning, thereby optimizing cost efficiency and resource utilization.

Future-Proofing: In the context of a rapidly changing digital environment, the ability to future-proof operations is crucial for sustained success. Scalable NoSQL databases provide the necessary flexibility and scalability for enterprises to navigate emerging technologies and shifting business demands, securing their competitive edge and relevance in future landscapes.

Conclusion

In conclusion, scalable NoSQL databases embody a significant shift in the landscape of database management, presenting enterprises with the scalability, flexibility, and performance necessary to excel in the age of big data and digital transformation. Through the adoption of horizontal scalability, adaptable data models, and high-performance architectures, enterprises are equipped to unlock unprecedented opportunities for innovation, agility, and expansion within a data-centric universe. As the adoption of NoSQL databases by businesses progresses, the potential for scalability and innovation becomes boundless, fundamentally altering the methodologies employed in data storage, management, and analysis in the digital era.

0 notes

Link

0 notes

Text

Cluster Server Là Gì? Ưu Điểm, Thành Phần & Cơ Chế Hoạt Động

Như chúng ta đã biết, máy chủ là trụ cột quan trọng trong việc duy trì hoạt động ổn định của mạng máy tính. Nếu xảy ra sự cố với máy chủ, hệ thống quản lý cơ sở dữ liệu sẽ bị tê liệt và không thể hoạt động.

Vì vậy, cần có giải pháp để đảm bảo hệ thống luôn hoạt động tốt, ngay cả khi máy chủ gặp sự cố. Công nghệ Cluster là giải pháp cho vấn đề này. Vậy Cluster Server là gì? Làm thế nào để hoạt động và có những lưu ý khi sử dụng? Hãy cùng Thuevspgiare.vn tìm hiểu trong bài viết sau đây.

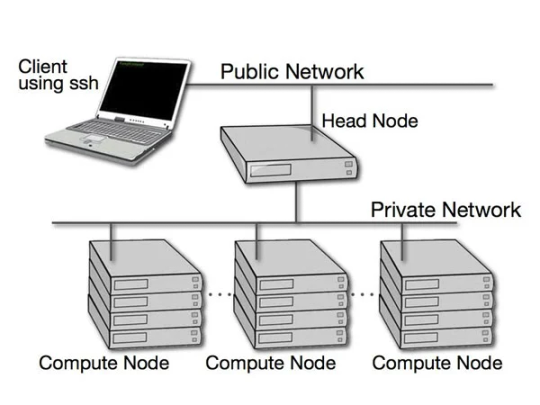

Cluster Server là gì?

Clustering là một kiến trúc nhằm đảm bảo nâng cao khả năng sẵn sàng cho hệ thống mạng. Clustering gồm nhiều server riêng lẻ được liên kết và hoạt động cùng với nhau trong một hệ thống. Các server này sẽ giao tiếp với nhau để trao đổi thông tin và giao tiếp với mạng bên ngoài để thực hiện các request. Nếu khi có lỗi xảy ra, các dịch vụ trong cluster hoạt động tương tác với nhau để duy trì tính ổn định và độ sẵn sàng cao cho hệ thống.

Cluster Server là gì?

Để cung cấp các dịch vụ chất lượng cao cho khách hàng, mỗi nhóm server sẽ chạy cùng với nhau, tạo thành một server cluster. Ưu điểm của việc này là: nếu một máy trong cluster bị lỗi, công việc có thể được chuyển sang các máy khác trong cùng cluster. Server cluster đảm bảo việc truy cập liên tục của các client vào mọi tài nguyên liên quan đến server.

Tác dụng của Cluster là gì?

Hiện nay, cluster giúp đáp ứng việc truy xuất của các ứng dụng như thương mại điện tử, đồng thời cần có khả năng chịu lỗi cao và đáp ứng tính sẵn sàng và khả năng mở rộng khả năng hệ thống khi cần thiết.

Cluster phối hợp hoạt động với nhau như một hệ thống đơn nhất

Chính vì thế, cluster hiện được sử dụng cho các ứng dụng Stateful applications, tức là các ứng dụng cần phải hoạt động thường xuyên trong thời gian dài, chúng gồm các database server như: Microsoft MySQL Server, Microsoft Exchange Server, File and Print Server,…

Tất cả các node trong Cluster dùng chung 1 nơi lưu trữ dữ liệu có thể dùng công nghệ SCSI hoặc Storage Area Network (SAN). Windows Sever 2003 Enterprise và Datacenter hỗ trợ cluster lên đến 8 node trong khi đó Windows 2000 Advance Server hỗ trợ 2 node còn Windows 2000 Datacenter Server được 4 node.

Các ưu điểm của hệ thống Cluster Server

Các thành phần của Cluster Server

Backup/Restore Manager: Cluster service đưa ra 1 API sử dụng để backup những cơ sở dữ liệu cluster, BackupClusterDatabase. Đầu tiên, BackupClusterDatabase tương tác với Failover manager, tiếp theo đẩy yêu cầu đến node có quorum resource. Database manager trên node sẽ được yêu cầu và sau đó tạo 1 bản backup cho quorum log file và những file checkpoint.

Resource Monitor: được phát triển để cung cấp 1 interface giao tiếp giữa resource DLLs và Cluster service. Trong trường hợp cluster cần lấy dữ liệu từ 1 resource, resource monitor tiếp nhận yêu cầu và đưa yêu cầu đó đến resource DLL thích hợp. Ngược lại khi 1 resource DLL cần báo cáo trạng thái của nó hoặc thông báo cho cluster service 1 sự kiện, resource sẽ đưa thông tin này từ resource đến cluster service

Node Manager: chạy trên mỗi node đồng thời duy trì 1 danh sách cục bộ những những node, những network, những network interface trong cluster. Dựa vào sự giao tiếp giữa những node, node manager đảm bảo cho những node có cùng 1 danh sách những node đang hoạt động.

Membership Manager: có nhiệm vụ chịu trách nhiệm duy trì 1 cái nhìn nhất quán về những node trong Cluster hiện đang hoạt động hay bị hỏng tại 1 thời điểm nhất định. Thành phần này tập trung chủ yếu vào thuật toán regroup được yêu cầu hoạt động bất cứ khi nào có dấu hiệu của 1 hay nhiều node bị lỗi.

Checkpoint Manager: đảm bảo cho việc phục hồi từ resource bị lỗi, Checkpoint Manager tiến hành kiểm tra những khoá registry khi 1 resource được mang online và ghi dữ liệu checkpoint đến quorum resource trong trường hợp resource này offline.

Cách hoạt động của một Cluster Server

Một Server Cluster là một nhóm các máy chủ được liên kết với nhau để hoạt động như một hệ thống duy nhất. Khi một yêu cầu được gửi đến hệ thống, các node trong Cluster sẽ cùng nhau xử lý yêu cầu đó để đảm bảo tính sẵn sàng và hiệu suất cao của hệ thống.

Các Server Cluster có thể được cấu hình theo hai cách khác nhau: Active-Passive và Active-Active.

Trong mô hình Active-Passive, một server trong Cluster sẽ hoạt động ở chế độ Active, trong khi các server khác sẽ ở chế độ Passive. Các yêu cầu từ người dùng sẽ được gửi đến server ở chế độ Active, và nếu server này gặp sự cố hoặc không hoạt động, các yêu cầu sẽ được chuyển đến server ở chế độ Passive để tiếp tục xử lý. Điều này giúp đảm bảo tính sẵn sàng của hệ thống khi một server gặp sự cố, tuy nhiên, cách tiếp cận này không tận dụng tối đa tài nguyên của các server trong Cluster.

Trong mô hình Active-Active, tất cả các server trong Cluster đều hoạt động cùng lúc và chia sẻ tài nguyên với nhau. Các yêu cầu sẽ được phân phối đến các server khác nhau để xử lý, và nếu một server gặp sự cố hoặc không hoạt động, các yêu cầu sẽ được chuyển đến server khác trong Cluster để tiếp tục xử lý. Điều này giúp tối ưu hóa tài nguyên và đảm bảo tính sẵn sàng và hiệu suất cao của hệ thống.

Các Server Cluster cũng sử dụng các công nghệ như Load Balancer để phân phối tải và đảm bảo rằng các yêu cầu được phân phối đến các server trong Cluster một cách cân bằng, giúp tối ưu hóa hiệu suất của hệ thống.

Lưu ý khi sử dụng hệ thống Cluster Server

Cần chú ý hiệu quả hoạt động của những cụm máy tính phụ thuộc rất nhiều vào sự tương thích giữa các ứng dụng, dịch vụ, phần cứng và phần mềm

Không thể vận hành hệ thống Cluster hay NLB khi giữa các server sử dụng những hệ điều hành khác nhau dù chúng có hỗ trợ nhau hay không

Những lỗi mà Cluster không thể khắc phục đó là virus xâm nhập, lỗi phần mềm hoặc lỗi của người sử dụng

Để tránh mất dữ liệu do lỗi tác động cần xây dựng hệ thống bảo vệ chắc chắn cũng như có kế hoạch backup khôi phục dữ liệu

Thông qua bài viết này, Thuevpsgiare.vn đã giúp bạn hiểu rõ hơn về khái niệm Cluster Server là gì và cách hoạt động, cùng những ưu điểm và thành phần quan trọng. Đồng thời, bài viết cũng chia sẻ những lưu ý quan trọng khi sử dụng hệ thống Cluster Server.

Để thiết kế và triển khai một Server Cluster hiệu quả, cần xem xét kỹ các yếu tố như tính sẵn sàng, độ tin cậy và hiệu suất, đồng thời cân nhắc đến các yếu tố liên quan đến phần cứng, phần mềm và mạng để đảm bảo hệ thống hoạt động ổn định và hiệu quả.

0 notes