#how to delete multiple rows using checkbox

Explore tagged Tumblr posts

Photo

Ajax Multiple Delete Records using Checkbox in Laravel How to delete multiple records using checkbox with Ajax in Laravel. Delete Multiple Data using Checkbox in Laravel using Ajax.

#delete bulk laravel#delete multiple records with checkbox in Laravel#how to delete multiple rows using checkbox#how to delete multiple rows using checkbox in laravel#Laravel#laravel delete multiple rows#laravel multiple delete#laravel multiple delete checkbox#laravel multiple delete using checkbox#multiple delete laravel#multiple delete using checkbox in laravel

0 notes

Photo

Red, White, and Maple

[ UNLIMITED MULTI-SALE W/ SET PACKAGES ]

A labour of love, Red, White, and Maple was a July 2020 Skinathon skin - Canadian themed to honour the start date of July 1st, which was shared with Canada Day! That said, the accent color and icon can be changed easily. This skin is “self-contained” within the frame, as seen, and even the post rows have a maximum height on them. The templates have been designed to fit in with this and sit at the maximum height for the post content space (save the comms template, which is a little shorter). This skin will have unlimited sales, making it cheaper, however, there are four different package options available at different price points. Pricing details are below the cut!

Features:

All HTML templates designed exclusively for the skin

Static user bar, navigation controls, etc

Three posting templates: general posting, tabs, and communications

Styled checkboxes (the maple leaves for topics, and a box that matches the function buttons - like edit, delete, etc - for posts)

Avatar hover which will scroll for multiple/any height mini profile fields

Colors, font families, body font sizes, and icon set in variables for easier changes

Tabbed profile available

Filtering member list available

Vertical forums; will not wrap to a new line, but widths will adjust depending on how many exist in the row

Category descriptions!! Also set in variables for easier editing

Installed and auto-updated to the latest version FontAwesome 5 Pro icon set (Yes, I installed my kit with all icons on this. Yes, this is reflected in the price.)

If you want to purchase this skin, please contact me through Discord only. My Tumblr messages do not get pushed to my phone and I’m not on desktop Tumblr often enough to notice them there. My Discord handle is lux#5069. If this will not work for you, then you may reach out on Tumblr for my email - but expect a delay. I apologize for any inconvenience this may cause, but Android has decided that I don’t deserve Tumblr notifications at all, so I have to work within that.

Package A: $35 USD

Basic package

Includes the non-tabbed profile and non-filtered member list HTML

Includes the CSS for the above implementations and most script

Can include the final script for +$5 USD

Good if you want to save, don’t need a lot, and/or are comfortable enough with code to analyze mine and add those features yourself

Instructions on how to use the different profile fields in the main profile and in the post row / mini profile

Instructions on how to change / add category descriptions

Total Sales Made: 0

Package B: $45 USD

Tabbed profile package

Includes the base package, but with the tabbed profile HTML instead of the basic profile HTML

Can include the missing filtered members list for +$5 USD

Good for profile application based sites

Instructions for how to add / subtract / change tabs

Total Sales Made: 0

Package C: $50 USD

Filtered members package

Includes the base package, but with the filtered member list HTML instead of the basic member list HTML as well as the script which is missing from the base package

Good for sites which want a quick and easy peek at plotting

Instructions for how to add / change filter groups and filter labels

Total Sales Made: 0

Package D: $55 USD

Full package

Includes the base package and both features unique to packages B and C - that is, it includes everything

Good for sites that want profile applications, a quick and easy peek at plotting with their member list, and are not comfortable figuring out how to add those features themselves

Total Sales Made: 1

Available Add-ons:

Additional posting templates [ +$10 - $30 USD for set ]

Webpage template(s) [ +$15 - 30 USD per template ]

Group color changing profiles [ +$5 USD for 5 groups, +$1 USD per additional group ]

Group color changing skin [ +$10 USD for 5 groups, +$2 USD per additional group ]

Modal box additions [ +$10 - 20 USD per box ]

Skin installation [ +$15 ]

31 notes

·

View notes

Text

Version 378

youtube

windows

zip

exe

macOS

app

linux

tar.gz

source

tar.gz

I had a great, simple week. Searches are less likely to be very slow, and system:limit searches now sort.

all misc this week

I identified a database access routine that was sometimes not taking an optimal route. Normally it was fine, but with certain sizes or types of query, it could take a very long time to complete. This mostly affected multi-predicate searches that included certain tags or system:duration and system:known urls, but the routine was used in about 60 different places across the program, including tag and duplicate files processing. I have rewritten this access routine to work in a more 'flat' way that will ensure it is not so 'spiky'.

Also in searching, I managed to push all the 'simple' file sorts down to file searches that have 'system:limit'. If you search with system:limit=256 and are sorting by 'largest files first', you will now see the 256 largest files in the search! Previously, it would give a random sample. All the simple sorts are supported: import time, filesize, duration, width, height, resolution ratio, media views, media viewtime, num pixels, approx bitrate, and modified time. If you want something fun, do a search for just 'system:limit=64' (and maybe system:filetype) and try some different sorts with F5--you can now see the oldest, smallest, longest, widest, whateverest files in your collection much easier.

There are also some fixes: if you had sessions not appearing in the 'pages' menu, they should be back; if you have had trouble with ipfs directory downloads, I think I have the file-selection UI working again; 'remove files when trashed' should work more reliably in downloader pages; and several tag and selection lists should size themselves a bit better.

full list

if a search has system:limit, the current sort is now sent down to the database. if the sort is simple, results are now sorted before system:limit is applied, meaning you will now get the largest/longest/whateverest sample of the search! supported sorts are: import time, filesize, duration, width, height, resolution ratio, media views, media viewtime, num pixels, approx bitrate, and modified time. this does not apply to searches in the 'all known files' file domain.

after identifying a sometimes-unoptimal db access routine, wrote a new more reliable one and replaced the 60-odd places it is used in both client and server. a variety of functions will now have less 'spiky' job time, including certain combinations of regular tag and system search predicates. some jobs will have slightly higher average job time, some will be much faster in all common situations

added additional database analysis to some complicated duplicate file system jobs that adds some overhead but should reduce extreme spikes in job time for very large databases

converted some legacy db code to new access methods

fixed a bug in the new menu generation code that was not showing sessions in the 'pages' menu if there were no backups for these sessions (i.e. they have only been saved once, or are old enough to have been last saved before the backup system was added)

fixed the 'click window close button should back out, not choose the red no button' bug in the yes/no confirmation dialogs for analyze, vacuum, clear orphan, and gallery log button url import

fixed some checkbox select and data retrieval logic in the checkbox tree control and completely cleared out the buggy ipfs directory download workflow. I apologise for the delay

fixed some inelegant multihash->urls resolution in the ipfs service code that would often mean a large folder would lock the client while parsing was proceeding

when the multihash->urls resolution is going on, the popup now exposes the underlying network control. cancelling the whole job mid-parse/download is now also quicker and prettier

when a 'downloader multiple urls' popup is working, it will publish its ongoing presented files to a files button as it works, rather than just once the job is finished

improved some unusual taglist height calculations that were turning up

improved how taglists set their minimum height--the 'selection tags' list should now always have at least 15 rows, even when bunched up in a tall gallery panel

if the system clock is rewound, new objects that are saved in the backup system (atm, gui sessions) will now detect that existing backups are from the future and increase their save time to ensure they count as the newest object

short version: 'remove files from view when trashed' now works on downloader thumbs that are loaded in from a session. long version: downloader thumb pages now force 'my files' file domain for now (previously it was 'all local files')

the downloader/thread watcher right-click menus for 'show all downloaders xxx files' now has a new 'all files and trash' entry. this will show absolutely everything still in your db, for quick access to accidental deletes

the 'select a downloader' list dialog _should_ size itself better, with no double scrollbars, when there are many many downloaders and/or very long-named downloaders. if this layout works, I'll replicated it in other areas

if an unrenderable key enters a shortcut, the shortcut will now display an 'unknown key: blah' statement instead of throwing an error. this affected both the manage shortcuts dialog and the media viewer(!)

SIGTERM is now caught in non-windows systems and will initiate a fast forced shutdown

unified and played with some border styles around the program

added a user-written guide to updating to the 'getting started - installing' help page

misc small code cleanup

next week

I am going to take a few days off for the holiday and make the next release in two weeks, for New Year's Day. I expect to do some small jobs, push more on the database optimisation, continue improving the UI layout code, and perhaps put some time into some space-clearing database maintenance.

𝕸𝖊𝖗𝖗𝖞 𝕮𝖍𝖗𝖎𝖘𝖙𝖒𝖆𝖘!

1 note

·

View note

Text

Monthly menu calendar

MONTHLY MENU CALENDAR HOW TO

Ask for suggestions from your family for meals they want to eat for the month. That way you don’t spend all of your prep time searching for that fabulous recipe. When you create your planner, spend some time collecting the recipes or write down the page numbers. The purpose of a meal plan is to make the dinner decision time less agonizing and to help you with your grocery shopping. If you don't feel like making Hamburgers, switch them with another night. If you order pizza, move the planned meal to the first week of the next month. Save it on your desktop or print it out and put it on the fridge or the inside of a cupboard. Put your menu plan where you will see it.I am constantly printing or tearing out pages of new recipes, but if I don't put them on my meal plan, I never make them. Add recipes that you want to try to your planner.You can serve Lemon Chicken twice, but the second time, serve it with potatoes instead of rice pilaf. Or Meatballs can be used for Sweet and Sour and then later in the week for Hoagies. For example, BBQ chicken on Wednesday can become BBQ chicken pizza on Friday. You can vary the sides or the appearance, but left-overs save time and money. I like this method, because then I can incorporate seasonal foods and my menus for July are distinctly different from the ones for December. Then, you can bring it out a year later and have something to work from. Or, at the end of the month or week, file the planner away until the next year. You can make three months worth of planners and then rotate them throughout the year. Your meal plan can be reused from month to month or week to week.The planner gives you a starter list that you can add to or amend to fit your needs. This will give you a start on your list of meals and you can add to it when you find new things. Make a list of everything you like to cook or everything you have eaten in the last month.

MONTHLY MENU CALENDAR HOW TO

My wife has graciously provided the following tips meal planning tips, including ideas for how to use the meal and menu planners. Click on the little filter arrow and uncheck the "-" option to temporarily hide the unneeded rows. Go to Data > Filter to add a filter on this column. Here's how: First, select the Qty column, beginning with the label "Qty" and ending with the last line of the list. Printing a Grocery List: When using the "Menu Planner with Grocery List" template, instead of printing all 3+ pages in the List worksheet, if you want to print a grocery list containing only the items you need, use the Filter feature. Doing so will mess up the formula used to create the dynamic named range which populates the drop-down lists. You can delete and remove rows, sort, and do pretty much whatever you want as long as you don't delete cell A1. I designed the lists to be as easy to edit. Right-click on the Planner tab and select "Move or Copy" and select the "Create a Copy" checkbox. That will let you store multiple weekly or monthly meal plans within the same file. The items in the list like are used to make it easy to see the different categories as you are scrolling through the list.Įxcept for the version with the grocery list, you can make copies of the worksheet.

0 notes

Text

How to Lock Cells in Microsoft Excel

When you’re working on a spreadsheet in Microsoft Excel, locking your cells is crucial to protecting data, preventing mistakes, and more. Today, we’ll show you how to do it.

Why Lock Cells in a Spreadsheet?

If you collaborate with others on a workbook, you might want to protect a cell by locking—especially if you want to make any changes or adjustments later. Locked cells can’t be reformatted, altered, or deleted. Locking cells works in conjunction with protecting the workbook. To change data within the cells, they need to be unlocked, and the sheet must be unprotected.

Locking Cells in an Excel Spreadsheet

You can lock individual cells or multiple cells using this method. Here’s how to do it with multiple cells.

In a new or existing Microsoft Excel document, select the cell or cell range you wish to lock. The cells you selected appear slightly shaded, indicating they’re going to be locked.

In the “Home” tab on the ribbon, select “Format.”

In the “Format” menu, select “Lock Cell.” Doing so will lock any of the cells you selected.

An alternative way to lock cells is to select your cells, then right-click them to bring up a menu. In that menu, select “Format Cells.” In the “Format Cells” box, click the “Protection” tab.

In the “Protection” tab, click the checkbox that says “Locked” to enable cell locking. This performs the exact same function as locking cells in the format tab.

After that, your cells are locked. If you ever need to unlock them, perform the steps above in reverse. Once you’ve finished locking your cells, you need to protect your workbook.

Protecting the Sheet

After you lock the cell, you’ll notice that it still lets you change the text or delete content. That’s because, in order for locking cells to work, you must also protect your sheet or workbook. Here’s how. In the Excel ribbon toolbar, click “Review.”

In the ribbon under the “Review” tab, select “Protect Sheet.”

In the “Protect Sheet” menu, you can create a password to protect the sheet and select a number of different parameters. For now, check the box marked “Protect worksheet and contents of locked cells.” Make any other adjustments you wish and click “OK” to protect the sheet.

Among the other options on the list, you can prevent other users from deleting/inserting rows and columns, altering the formatting, or generally messing with the document by clicking on them in the protection menu. Once the sheet is fully protected, no one can access the locked cells without using a password to unlock them first.

If you need to unlock the worksheet later, revisit the Review > Protect Sheet menu and uncheck “Protect worksheet and contents of locked cells.” Now that you know how to lock cells, you can sleep safely knowing your spreadsheets and workbooks won’t be altered without being unlocked first. Happy locking!

RELATED: How to Protect Workbooks, Worksheets, and Cells From Editing in Microsoft Excel

setTimeout(function(){ !function(f,b,e,v,n,t,s) {if(f.fbq)return;n=f.fbq=function(){n.callMethod? n.callMethod.apply(n,arguments):n.queue.push(arguments)}; if(!f._fbq)f._fbq=n;n.push=n;n.loaded=!0;n.version='2.0'; n.queue=[];t=b.createElement(e);t.async=!0; t.src=v;s=b.getElementsByTagName(e)[0]; s.parentNode.insertBefore(t,s) } (window, document,'script', 'https://connect.facebook.net/en_US/fbevents.js'); fbq('init', '335401813750447'); fbq('track', 'PageView'); },3000); Source link

from WordPress https://ift.tt/3EpmpOT via IFTTT

0 notes

Text

0 notes

Text

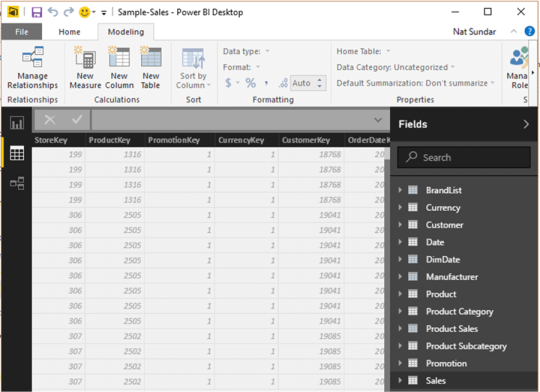

Join Two Tables In Power Bi

Introduce theory about model relationships in Power BI Desktop. It's not possible to relate a column to a different column in the same table.This is sometimes confused with the ability to define a relational database foreign key constraint that is table self-referencing. #PowerBI #MicrosoftPowerBI #DataVisualization #Analytics Power BI is the data visualization tool by Microsoft. This will help you to do your data analysis an. Power BI: Ultimate Guide to Joining Tables 1) Joining With the Relationships Page The easiest way to join tables is to simply use the Relationships page in Power. 2) Joining With Power Query You may want to join a table in the data prep stages before it hits the data model. 3) Joining With DAX.

Power Bi Create Table From Another Table

Inner Join Two Tables In Power Bi

Link Tables In Power Bi

Cross Join Two Tables In Power Bi

-->

Learn how to quickly merge and append tables using the query editior in Power BI. Build models with multiple data sources.Contact me on LinkedIn:www.linkedin. The easiest way to join tables is to simply use the Relationships page in Power BI. If your table ID's have the same name across tables, the relationships will automatically be picked up. If Power BI didn't pick up on the relationships, you can easily create one. To do so, click and drag the column name from one table over to the other table. To edit any relationship, double-click on the relationship line. A new window will app.

With Power BI Desktop, you can connect to many different types of data sources, then shape the data to meet your needs, enabling you to create visual reports to share with others. Shaping data means transforming the data: renaming columns or tables, changing text to numbers, removing rows, setting the first row as headers, and so on. Combining data means connecting to two or more data sources, shaping them as needed, then consolidating them into a useful query.

In this tutorial, you'll learn how to:

Shape data by using Power Query Editor.

Connect to different data sources.

Combine those data sources, and create a data model to use in reports.

This tutorial demonstrates how to shape a query by using Power BI Desktop, highlighting the most common tasks. The query used here is described in more detail, including how to create the query from scratch, in Getting Started with Power BI Desktop.

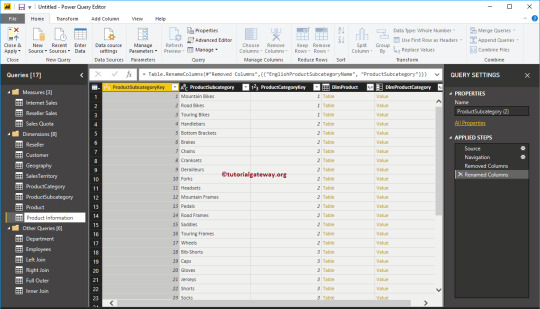

Power Query Editor in Power BI Desktop makes ample use of right-click menus, as well as the Transform ribbon. Most of what you can select in the ribbon is also available by right-clicking an item, such as a column, and choosing from the menu that appears.

Shape data

When you shape data in Power Query Editor, you provide step-by-step instructions for Power Query Editor to carry out for you to adjust the data as it loads and presents it. The original data source isn't affected; only this particular view of the data is adjusted, or shaped.

The steps you specify (such as rename a table, transform a data type, or delete a column) are recorded by Power Query Editor. Each time this query connects to the data source, Power Query Editor carries out those steps so that the data is always shaped the way you specify. This process occurs whenever you use Power Query Editor, or for anyone who uses your shared query, such as on the Power BI service. Those steps are captured, sequentially, in the Query Settings pane, under Applied Steps. We’ll go through each of those steps in the next few paragraphs.

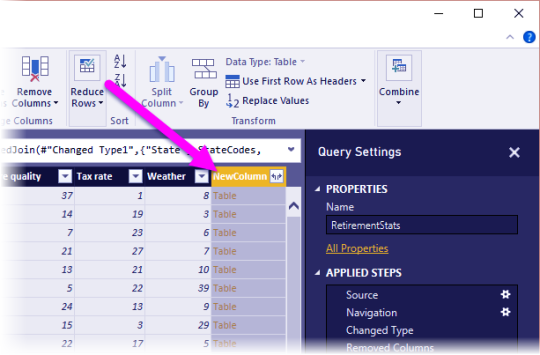

From Getting Started with Power BI Desktop, let's use the retirement data, which we found by connecting to a web data source, to shape that data to fit our needs. We'll add a custom column to calculate rank based on all data being equal factors, and compare this column to the existing column, Rank.

From the Add Column ribbon, select Custom Column, which lets you add a custom column.

In the Custom Column window, in New column name, enter New Rank. In Custom column formula, enter the following data:

Make sure the status message is No syntax errors have been detected, and select OK.

To keep column data consistent, transform the new column values to whole numbers. To change them, right-click the column header, and then select Change Type > Whole Number.

If you need to choose more than one column, select a column, hold down SHIFT, select additional adjacent columns, and then right-click a column header. You can also use the CTRL key to choose non-adjacent columns.

To transform column data types, in which you transform the current data type to another, select Data Type Text from the Transform ribbon.

In Query Settings, the Applied Steps list reflects any shaping steps applied to the data. To remove a step from the shaping process, select the X to the left of the step.

In the following image, the Applied Steps list reflects the added steps so far:

Source: Connecting to the website.

Extracted Table from Html: Selecting the table.

Changed Type: Changing text-based number columns from Text to Whole Number.

Added Custom: Adding a custom column.

Changed Type1: The last applied step.

Adjust data

Before we can work with this query, we need to make a few changes to adjust its data:

Adjust the rankings by removing a column.

We've decided Cost of living is a non-factor in our results. After removing this column, we find that the data remains unchanged.

Fix a few errors.

Because we removed a column, we need to readjust our calculations in the New Rank column, which involves changing a formula.

Sort the data.

Sort the data based on the New Rank and Rank columns.

Replace the data.

We'll highlight how to replace a specific value and the need of inserting an Applied Step.

Change the table name.

Because Table 0 isn't a useful descriptor for the table, we'll change its name.

To remove the Cost of living column, select the column, choose the Home tab from the ribbon, and then select Remove Columns.

Notice the New Rank values haven't changed, due to the ordering of the steps. Because Power Query Editor records the steps sequentially, yet independently, of each other, you can move each Applied Step up or down in the sequence.

Right-click a step. Power Query Editor provides a menu that lets you do the following tasks:

Rename; Rename the step.

Delete: Delete the step.

DeleteUntil End: Remove the current step, and all subsequent steps.

Move before: Move the step up in the list.

Move after: Move the step down in the list.

Move up the last step, Removed Columns, to just above the Added Custom step.

Select the Added Custom step.

Notice the data now shows Error, which we'll need to address.

There are a few ways to get more information about each error. If you select the cell without clicking on the word Error, Power Query Editor displays the error information.

If you select the word Error directly, Power Query Editor creates an Applied Step in the Query Settings pane and displays information about the error.

Because we don't need to display information about the errors, select Cancel.

To fix the errors, select the New Rank column, then display the column's data formula by selecting the Formula Bar checkbox from the View tab.

Remove the Cost of living parameter and decrement the divisor, by changing the formula as follows:

Select the green checkmark to the left of the formula box or press Enter.

Power Query Editor replaces the data with the revised values and the Added Custom step completes with no errors. Triangle sigil.

Note

You can also select Remove Errors, by using the ribbon or the right-click menu, which removes any rows that have errors. However, we didn't want to do so in this tutorial because we wanted to preserve the data in the table.

Sort the data based on the New Rank column. First, select the last applied step, Changed Type1 to display the most recent data. Then, select the drop-down located next to the New Rank column header and select Sort Ascending.

The data is now sorted according to New Rank. However, if you look at the Rank column, you'll notice the data isn't sorted properly in cases where the New Rank value is a tie. We'll fix it in the next step.

To fix the data sorting issue, select the New Rank column and change the formula in the Formula Bar to the following formula:

Select the green checkmark to the left of the formula box or press Enter.

The rows are now ordered in accordance with both New Rank and Rank. In addition, you can select an Applied Step anywhere in the list, and continue shaping the data at that point in the sequence. Power Query Editor automatically inserts a new step directly after the currently selected Applied Step.

In Applied Step, select the step preceding the custom column, which is the Removed Columns step. Here we'll replace the value of the Weather ranking in Arizona. Right-click the appropriate cell that contains Arizona's Weather ranking, and then select Replace Values. Note which Applied Step is currently selected.

Select Insert.

Because we're inserting a step, Power Query Editor warns us about the danger of doing so; subsequent steps could cause the query to break.

Change the data value to 51.

Power Query Editor replaces the data for Arizona. When you create a new Applied Step, Power Query Editor names it based on the action; in this case, Replaced Value. If you have more than one step with the same name in your query, Power Query Editor adds a number (in sequence) to each subsequent Applied Step to differentiate between them.

Select the last Applied Step, Sorted Rows.

Notice the data has changed regarding Arizona's new ranking. This change occurs because we inserted the Replaced Value step in the correct location, before the Added Custom step.

Lastly, we want to change the name of that table to something descriptive. In the Query Settings pane, under Properties, enter the new name of the table, and then select Enter. Name this table RetirementStats.

When we start creating reports, it’s useful to have descriptive table names, especially when we connect to multiple data sources, which are listed in the Fields pane of the Report view.

We’ve now shaped our data to the extent we need to. Next let’s connect to another data source, and combine data.

Combine data

The data about various states is interesting, and will be useful for building additional analysis efforts and queries. But there’s one problem: most data out there uses a two-letter abbreviation for state codes, not the full name of the state. We need a way to associate state names with their abbreviations.

We’re in luck; there’s another public data source that does just that, but it needs a fair amount of shaping before we can connect it to our retirement table. TO shape the data, follow these steps:

From the Home ribbon in Power Query Editor, select New Source > Web.

Enter the address of the website for state abbreviations, https://en.wikipedia.org/wiki/List_of_U.S._state_abbreviations, and then select Connect.

The Navigator displays the content of the website.

Select Codes and abbreviations.

Tip

It will take quite a bit of shaping to pare this table’s data down to what we want. Is there a faster or easier way to accomplish the steps below? Yes, we could create a relationship between the two tables, and shape the data based on that relationship. The following steps are still good to learn for working with tables; however, relationships can help you quickly use data from multiple tables.

To get the data into shape, follow these steps:

Remove the top row. Because it's a result of the way that the web page’s table was created, we don’t need it. From the Home ribbon, select Remove Rows > Remove Top Rows.

The Remove Top Rows window appears, letting you specify how many rows you want to remove.

Note

If Power BI accidentally imports the table headers as a row in your data table, you can select Use First Row As Headers from the Home tab, or from the Transform tab in the ribbon, to fix your table.

Remove the bottom 26 rows. These rows are U.S. territories, which we don’t need to include. From the Home ribbon, select Remove Rows > Remove Bottom Rows.

Because the RetirementStats table doesn't have information for Washington DC, we need to filter it from our list. Select the Region Status drop-down, then clear the checkbox beside Federal district.

Remove a few unneeded columns. Because we need only the mapping of each state to its official two-letter abbreviation, we can remove several columns. First select a column, then hold down the CTRL key and select each of the other columns to be removed. From the Home tab on the ribbon, select Remove Columns > Remove Columns.

Note

This is a good time to point out that the sequence of applied steps in Power Query Editor is important, and can affect how the data is shaped. It’s also important to consider how one step may impact another subsequent step; if you remove a step from the Applied Steps, subsequent steps may not behave as originally intended, because of the impact of the query’s sequence of steps.

Note

When you resize the Power Query Editor window to make the width smaller, some ribbon items are condensed to make the best use of visible space. When you increase the width of the Power Query Editor window, the ribbon items expand to make the most use of the increased ribbon area.

Rename the columns and the table. There are a few ways to rename a column: First, select the column, then either select Rename from the Transform tab on the ribbon, or right-click and select Rename. The following image has arrows pointing to both options; you only need to choose one.

Rename the columns to State Name and State Code. To rename the table, enter the Name in the Query Settings pane. Name this table StateCodes.

Combine queries

Now that we’ve shaped the StateCodes table the way we want, let’s combine these two tables, or queries, into one. Because the tables we now have are a result of the queries we applied to the data, they’re often referred to as queries.

Power Bi Create Table From Another Table

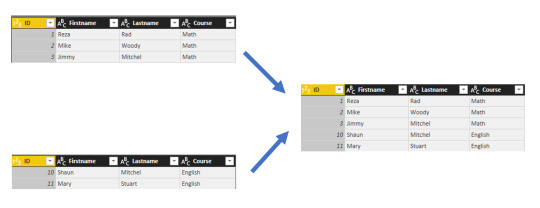

There are two primary ways of combining queries: merging and appending.

When you have one or more columns that you’d like to add to another query, you merge the queries.

When you have additional rows of data that you’d like to add to an existing query, you append the query.

Inner Join Two Tables In Power Bi

In this case, we want to merge the queries. To do so, follow these steps:

Link Tables In Power Bi

From the left pane of Power Query Editor, select the query into which you want the other query to merge. In this case, it's RetirementStats.

Select Merge Queries > Merge Queries from the Home tab on the ribbon.

You may be prompted to set the privacy levels, to ensure the data is combined without including or transferring data you don't want transferred.

The Merge window appears. It prompts you to select which table you'd like merged into the selected table, and the matching columns to use for the merge.

Select State from the RetirementStats table, then select the StateCodes query.

When you select the correct matching columns, the OK button is enabled.

Select OK.

Power Query Editor creates a new column at the end of the query, which contains the contents of the table (query) that was merged with the existing query. All columns from the merged query are condensed into the column, but you can Expand the table and include whichever columns you want.

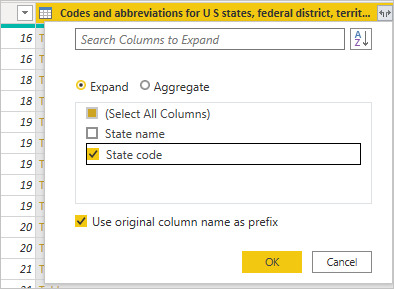

To expand the merged table, and select which columns to include, select the expand icon ().

The Expand window appears.

In this case, we want only the State Code column. Select that column, clear Use original column name as prefix, and then select OK.

From your Web browser, go to the Google Drive File Stream home page. On the Google Drive Help page, click on Download for Windows. In the following pop-up window, click Save File. If you’re prompted to enter a location in which to save the installer file, titled googledrivefilestream.exe, save the file. Access all of your Google Drive content directly from your Mac or PC, without using up disk space. Learn more Download Backup and Sync for Mac Download Backup and Sync for Windows. Download google drive stream pc. At the bottom right (Windows) or top right (Mac), click Drive for desktop Open Google Drive. When you install Drive for desktop on your computer, it creates a drive in My Computer or a location in.

If we had left the checkbox selected for Use original column name as prefix, the merged column would be named NewColumn.State Code.

Note

Want to explore how to bring in the NewColumn table? You can experiment a bit, and if you don’t like the results, just delete that step from the Applied Steps list in the Query Settings pane; your query returns to the state prior to applying that Expand step. You can do this as many times as you like until the expand process looks the way you want it.

We now have a single query (table) that combines two data sources, each of which has been shaped to meet our needs. This query can serve as a basis for many additional and interesting data connections, such as housing cost statistics, demographics, or job opportunities in any state.

To apply your changes and close Power Query Editor, select Close & Apply from the Home ribbon tab.

The transformed dataset appears in Power BI Desktop, ready to be used for creating reports.

Cross Join Two Tables In Power Bi

Next steps

For more information on Power BI Desktop and its capabilities, see the following resources:

0 notes

Text

Combining Tables In Power Bi

Combining / Stacking / Appending Tables This is truly the easiest part, now all you need to do is find the button that reads Append Queries and then a new window will appear where you can combine all the queries that you want.

Combine Tables In Power Bi Dax

Power Bi Merge Multiple Tables

Combining Tables In Power Bi Software

Append Tables In Power Bi

Learn how to quickly merge and append tables using the query editior in Power BI. Build models with multiple data sources.Contact me on LinkedIn:www.linkedin.

I have done a few videos on YouTube explaining how to join tables using Power Query or DAX. If you follow the channel, you probably have seen the videos and this blog post will serve as a compilation of all the material. However, if you are new, this will serve as a tutorial for beginners on how to joins in Power BI.

How to join tables in power bi desktop: a practical example Combining data. When it comes to combining data in tables, it can be done in two ways. One is you may need to increase. The data set I have used for demonstration purpose is on India’s state-wise crop production collected.

-->

With Power BI Desktop, you can connect to many different types of data sources, then shape the data to meet your needs, enabling you to create visual reports to share with others. Shaping data means transforming the data: renaming columns or tables, changing text to numbers, removing rows, setting the first row as headers, and so on. Combining data means connecting to two or more data sources, shaping them as needed, then consolidating them into a useful query.

In this tutorial, you'll learn how to:

Shape data by using Power Query Editor.

Connect to different data sources.

Combine those data sources, and create a data model to use in reports.

This tutorial demonstrates how to shape a query by using Power BI Desktop, highlighting the most common tasks. The query used here is described in more detail, including how to create the query from scratch, in Getting Started with Power BI Desktop.

Power Query Editor in Power BI Desktop makes ample use of right-click menus, as well as the Transform ribbon. Most of what you can select in the ribbon is also available by right-clicking an item, such as a column, and choosing from the menu that appears.

Shape data

When you shape data in Power Query Editor, you provide step-by-step instructions for Power Query Editor to carry out for you to adjust the data as it loads and presents it. The original data source isn't affected; only this particular view of the data is adjusted, or shaped.

The steps you specify (such as rename a table, transform a data type, or delete a column) are recorded by Power Query Editor. Each time this query connects to the data source, Power Query Editor carries out those steps so that the data is always shaped the way you specify. This process occurs whenever you use Power Query Editor, or for anyone who uses your shared query, such as on the Power BI service. Those steps are captured, sequentially, in the Query Settings pane, under Applied Steps. We’ll go through each of those steps in the next few paragraphs.

From Getting Started with Power BI Desktop, let's use the retirement data, which we found by connecting to a web data source, to shape that data to fit our needs. We'll add a custom column to calculate rank based on all data being equal factors, and compare this column to the existing column, Rank.

From the Add Column ribbon, select Custom Column, which lets you add a custom column.

In the Custom Column window, in New column name, enter New Rank. In Custom column formula, enter the following data:

Make sure the status message is No syntax errors have been detected, and select OK.

To keep column data consistent, transform the new column values to whole numbers. To change them, right-click the column header, and then select Change Type > Whole Number.

If you need to choose more than one column, select a column, hold down SHIFT, select additional adjacent columns, and then right-click a column header. You can also use the CTRL key to choose non-adjacent columns.

To transform column data types, in which you transform the current data type to another, select Data Type Text from the Transform ribbon.

In Query Settings, the Applied Steps list reflects any shaping steps applied to the data. To remove a step from the shaping process, select the X to the left of the step.

In the following image, the Applied Steps list reflects the added steps so far:

Source: Connecting to the website.

Extracted Table from Html: Selecting the table.

Changed Type: Changing text-based number columns from Text to Whole Number.

Added Custom: Adding a custom column.

Changed Type1: The last applied step.

Adjust data

Before we can work with this query, we need to make a few changes to adjust its data:

Adjust the rankings by removing a column.

We've decided Cost of living is a non-factor in our results. After removing this column, we find that the data remains unchanged.

Fix a few errors.

Because we removed a column, we need to readjust our calculations in the New Rank column, which involves changing a formula.

Sort the data.

Sort the data based on the New Rank and Rank columns.

Replace the data.

We'll highlight how to replace a specific value and the need of inserting an Applied Step.

Change the table name.

Because Table 0 isn't a useful descriptor for the table, we'll change its name.

To remove the Cost of living column, select the column, choose the Home tab from the ribbon, and then select Remove Columns.

Notice the New Rank values haven't changed, due to the ordering of the steps. Because Power Query Editor records the steps sequentially, yet independently, of each other, you can move each Applied Step up or down in the sequence.

Right-click a step. Power Query Editor provides a menu that lets you do the following tasks:

Rename; Rename the step.

Delete: Delete the step.

DeleteUntil End: Remove the current step, and all subsequent steps.

Move before: Move the step up in the list.

Move after: Move the step down in the list.

Move up the last step, Removed Columns, to just above the Added Custom step.

Select the Added Custom step.

Notice the data now shows Error, which we'll need to address.

There are a few ways to get more information about each error. If you select the cell without clicking on the word Error, Power Query Editor displays the error information.

If you select the word Error directly, Power Query Editor creates an Applied Step in the Query Settings pane and displays information about the error.

Because we don't need to display information about the errors, select Cancel.

To fix the errors, select the New Rank column, then display the column's data formula by selecting the Formula Bar checkbox from the View Skype for business eol. tab.

Remove the Cost of living parameter and decrement the divisor, by changing the formula as follows:

Select the green checkmark to the left of the formula box or press Enter.

Power Query Editor replaces the data with the revised values and the Added Custom step completes with no errors.

Note

You can also select Remove Errors, by using the ribbon or the right-click menu, which removes any rows that have errors. However, we didn't want to do so in this tutorial because we wanted to preserve the data in the table.

Sort the data based on the New Rank column. First, select the last applied step, Changed Type1 to display the most recent data. Then, select the drop-down located next to the New Rank column header and select Sort Ascending.

The data is now sorted according to New Rank. However, if you look at the Rank column, you'll notice the data isn't sorted properly in cases where the New Rank value is a tie. We'll fix it in the next step.

To fix the data sorting issue, select the New Rank column and change the formula in the Formula Bar to the following formula:

Select the green checkmark to the left of the formula box or press Enter.

The rows are now ordered in accordance with both New Rank and Rank. In addition, you can select an Applied Step anywhere in the list, and continue shaping the data at that point in the sequence. Power Query Editor automatically inserts a new step directly after the currently selected Applied Step.

In Applied Step, select the step preceding the custom column, which is the Removed Columns step. Here we'll replace the value of the Weather ranking in Arizona. Right-click the appropriate cell that contains Arizona's Weather ranking, and then select Replace Values. Note which Applied Step is currently selected.

Select Insert.

Because we're inserting a step, Power Query Editor warns us about the danger of doing so; subsequent steps could cause the query to break.

Change the data value to 51.

Power Query Editor replaces the data for Arizona. When you create a new Applied Step, Power Query Editor names it based on the action; in this case, Replaced Value. If you have more than one step with the same name in your query, Power Query Editor adds a number (in sequence) to each subsequent Applied Step to differentiate between them.

Select the last Applied Step, Sorted Rows.

Notice the data has changed regarding Arizona's new ranking. This change occurs because we inserted the Replaced Value step in the correct location, before the Added Custom step.

Lastly, we want to change the name of that table to something descriptive. In the Query Settings pane, under Properties, enter the new name of the table, and then select Enter. Name this table RetirementStats.

When we start creating reports, it’s useful to have descriptive table names, especially when we connect to multiple data sources, which are listed in the Fields pane of the Report view.

We’ve now shaped our data to the extent we need to. Next let’s connect to another data source, and combine data.

Combine data

The data about various states is interesting, and will be useful for building additional analysis efforts and queries. But there’s one problem: most data out there uses a two-letter abbreviation for state codes, not the full name of the state. We need a way to associate state names with their abbreviations.

Combine Tables In Power Bi Dax

We’re in luck; there’s another public data source that does just that, but it needs a fair amount of shaping before we can connect it to our retirement table. TO shape the data, follow these steps:

From the Home ribbon in Power Query Editor, select New Source > Web.

Enter the address of the website for state abbreviations, https://en.wikipedia.org/wiki/List_of_U.S._state_abbreviations, and then select Connect.

The Navigator displays the content of the website.

Select Codes and abbreviations. Home alone on netflix streaming.

Tip

It will take quite a bit of shaping to pare this table’s data down to what we want. Is there a faster or easier way to accomplish the steps below? Yes, we could create a relationship between the two tables, and shape the data based on that relationship. The following steps are still good to learn for working with tables; however, relationships can help you quickly use data from multiple tables.

To get the data into shape, follow these steps:

Power Bi Merge Multiple Tables

Remove the top row. Because it's a result of the way that the web page’s table was created, we don’t need it. From the Home ribbon, select Remove Rows > Remove Top Rows.

The Remove Top Rows window appears, letting you specify how many rows you want to remove.

Note

If Power BI accidentally imports the table headers as a row in your data table, you can select Use First Row As Headers from the Home tab, or from the Transform tab in the ribbon, to fix your table.

Remove the bottom 26 rows. These rows are U.S. territories, which we don’t need to include. From the Home ribbon, select Remove Rows > Remove Bottom Rows.

Because the RetirementStats table doesn't have information for Washington DC, we need to filter it from our list. Select the Region Status drop-down, then clear the checkbox beside Federal district.

Remove a few unneeded columns. Because we need only the mapping of each state to its official two-letter abbreviation, we can remove several columns. First select a column, then hold down the CTRL key and select each of the other columns to be removed. From the Home tab on the ribbon, select Remove Columns > Remove Columns.

Note

This is a good time to point out that the sequence of applied steps in Power Query Editor is important, and can affect how the data is shaped. It’s also important to consider how one step may impact another subsequent step; if you remove a step from the Applied Steps, subsequent steps may not behave as originally intended, because of the impact of the query’s sequence of steps.

Note

When you resize the Power Query Editor window to make the width smaller, some ribbon items are condensed to make the best use of visible space. When you increase the width of the Power Query Editor window, the ribbon items expand to make the most use of the increased ribbon area.

Rename the columns and the table. There are a few ways to rename a column: First, select the column, then either select Rename from the Transform tab on the ribbon, or right-click and select Rename. The following image has arrows pointing to both options; you only need to choose one.

Rename the columns to State Name and State Code. To rename the table, enter the Name in the Query Settings pane. Name this table StateCodes.

Combine queries

Now that we’ve shaped the StateCodes table the way we want, let’s combine these two tables, or queries, into one. Because the tables we now have are a result of the queries we applied to the data, they’re often referred to as queries.

There are two primary ways of combining queries: merging and appending.

When you have one or more columns that you’d like to add to another query, you merge the queries.

When you have additional rows of data that you’d like to add to an existing query, you append the query.

In this case, we want to merge the queries. To do so, follow these steps:

From the left pane of Power Query Editor, select the query into which you want the other query to merge. In this case, it's RetirementStats.

Select Merge Queries > Merge Queries from the Home tab on the ribbon.

You may be prompted to set the privacy levels, to ensure the data is combined without including or transferring data you don't want transferred.

The Merge window appears. It prompts you to select which table you'd like merged into the selected table, and the matching columns to use for the merge.

Select State from the RetirementStats table, then select the StateCodes query.

When you select the correct matching columns, the OK button is enabled.

Select OK.

Power Query Editor creates a new column at the end of the query, which contains the contents of the table (query) that was merged with the existing query. All columns from the merged query are condensed into the column, but you can Expand the table and include whichever columns you want.

To expand the merged table, and select which columns to include, select the expand icon ().

The Expand window appears.

In this case, we want only the State Code column. Select that column, clear Use original column name as prefix, and then select OK.

If we had left the checkbox selected for Use original column name as prefix, the merged column would be named NewColumn.State Code.

Note

Want to explore how to bring in the NewColumn table? You can experiment a bit, and if you don’t like the results, just delete that step from the Applied Steps list in the Query Settings pane; your query returns to the state prior to applying that Expand step. You can do this as many times as you like until the expand process looks the way you want it.

We now have a single query (table) that combines two data sources, each of which has been shaped to meet our needs. This query can serve as a basis for many additional and interesting data connections, such as housing cost statistics, demographics, or job opportunities in any state.

To apply your changes and close Power Query Editor, select Close & Apply from the Home ribbon tab.

The transformed dataset appears in Power BI Desktop, ready to be used for creating reports.

Combining Tables In Power Bi Software

Next steps

Append Tables In Power Bi

For more information on Power BI Desktop and its capabilities, see the following resources:

0 notes

Text

New Post has been published on Strange Hoot - How To’s, Reviews, Comparisons, Top 10s, & Tech Guide

New Post has been published on https://strangehoot.com/how-to-forward-multiple-emails-in-gmail/

How to Forward Multiple Emails in Gmail

Forward Multiple Emails in Gmail is hardly known to the daily users. Most of the time people struggle for doing a specific action in the apps they daily use. We are all part of that.

In Gmail, there are endless features available that can be utilized in our daily activities at work. Also, the same can be applied to personal mails if there is some kind of security and privacy is required.

One of the most used email features is Forward Emails to Gmail. If we are using Outlook or Apple email clients, we set a rule to forward emails to our Gmail account. Why do so? The reason is – we are so comfortable using Gmail. Things are easy with Gmail.

Google has released more than 25 apps out of which at least 10 apps are used by the smartphone users on a daily basis. To name some of the apps –

Google Maps

Google Meet

Gmail

Google Drive

Google Hangouts

Google Calendar

Google Contacts

Photos

News and the most important

Google Search

You can sign up with gmail and get associated with all the apps above. Interestingly, you do not have to do registration on all the apps. One Gmail account for all apps. Hassle free and time saving activity to create only one account and use it for all.

Today, we are going to see some Gmail features that you can use on a daily basis. Your email management task becomes easy.

Gmail Forward Feature

Forwarding an email is to do with the mail you have received in your mailbox or any incoming message of your inbox can be forwarded.

Your sent email to one recipient can also be forwarded to another recipient.

You can forward email to gmail using your other account registered with Outlook or Apple email clients.

You can also add your message on the top of incoming messages and forward emails.

Most of the email programs or clients offer the forward email feature for a single email. To forward multiple incoming emails to a recipient works differently in different email clients.

Let us see how to forward emails to Gmail.

Open your Outlook account. (We have taken an example of an Outlook email program.)

Select the message(s) one by one. Select the message and click the right-arrow shown on the top right corner. (Alternatively click the 3-dots to show the More Actions menu.

Click the Forward option. Enter the recipient as your gmail address and click send.

You have successfully forwarded the email to gmail.

Gmail – Other Features

You can do better email management with Gmail than any other email client. Signing up with a gmail account is a quick and easy process.

Working with templates –

While drafting an email, you can create a template that can be reused for frequent use. The saved template is helpful to draft an email faster and you do not have to remember what message you have to type. The messages with the same context can be saved as templates.

Working with Confidential mode –

Whenever you are sending an email, you can turn on the Confidential mode. This sent message will not allow the recipient to copy, forward or download the mail. You can also set expiry to keep this mode ON.

Easy-to-use Interface – All of the below functionality, you can achieve with a single click.

You can move your emails by dragging from one tab to another smoothly.

You can use the Select All option to Archive your emails.

You can search for emails and select searched emails and delete them.

You can start chatting with your contact from the mail listing window itself.

You can start Google Meet from the same email listing window.

You can select the message(s) and report them as spam in a single click.

Print – You can directly print the document from the email you have received. No headache of downloading the document and then printing the same.

Differentiate your incoming messages with different flags

You can mark the message as unread, Add Star or mark it as Important. All from one place.

Task Management tool

Gmail also works as a task management tool. You can add your incoming email message to task. You can mark it as in progress and complete once you are done with the task. You can set reminders, add notes and delete the tasks.

There are many more features that are worth exploring on Gmail. You can do email management faster and easier.

One of the features that Gmail does not provide directly, but you can surely do that. You can forward multiple emails in Gmail at one shot. You do not have to select the mails one by one and forward it.

Now, let us see what is required to do so.

Install Google Chrome extension

You will need to install a chrome extension to use this feature. To install, follow the step-by-step instructions as below.

Open Google Chrome browser window.

Type https://chrome.google.com/.

Search for the Multi Forward for Gmail extension. You will see the extension below.

Click the Add to Chrome button. The extension is added to your extension list.

You will see the extension as shown below.

We have seen how you have added the chrome extension. The next step is to use this extension and forward multiple emails in Gmail.

Forward Multiple Emails in Gmail [Step-by-step Guide]

Open your Gmail account. (Login with your username and password.)

You will see the Multiple Forward icon on the toolbar.

You can select all the mails that you want to forward as one shot. Click the Multiple Forward icon. You will see the pop up below if you are using this for the first time.

Click the Sign-In button. You will see the login window.

Enter your gmail username and click the Next button.

Enter the password and login. Now, you will see the options for Multiple Forward.

You have a list of options to send these selected messages.

Forward selected emails individually – you will need to enter recipient email individually

Forward selected emails in one email (merged in body) – you will need to enter the recipient address once and all the selected emails will go as one email to the recipient. Click the Forward Emails button.

Forward selected emails in one email as EML attachments – All the selected emails will be sent with the EML file format as attachments. The recipient can open this email in Outlook or Apple email client.

Forward selected emails in one email as PDF attachment – All the selected emails will be merged into a PDF document and attached. The recipient can open the PDF attachment for viewing all the emails.

You have successfully sent multiple emails to recipients using different ways.

Another way to forward multiple emails in Gmail without using Chrome extension. It is very much possible. Let us see the steps to forward multiple emails without using the Multiple Forward extension.

Open your Gmail mailbox.

Select the email messages you want to forward. To do so:

Click the checkbox prior to the message row.

Click the More icon (3-vertical dots icon). You will see a menu with a list of options.

Select the Forward as attachment option. You will see all the selected messages are attached in the compose new message window.

You can enter one more recipient to send all these emails.

Enter the subject and message in the body.

Once an email is drafted, click the Send button.

If you do not want to send the message right away, you can schedule the send action.

Select the Schedule send option. You will see the list of options as below.

Select the readily available option or click Select date and time to schedule it for a specific time.

Once it is scheduled, the message with forwarded attachments will be sent to the recipients.

You have successfully forwarded the multiple email messages as attachment in a go.

Let’s come back to Gmail

We have seen quite interesting and easy to use features in Gmail. As we daily work with Gmail, it is good to have knowledge on how to perform certain actions as per our daily requirements. Let’s say you are receiving your work task in Gmail and you need to track them. You can use task management.

If you are getting a monthly bank statement from your bank, you can filter these messages so quickly. If you are getting advertisement related / unwanted emails, you can remove them easily. If you want to speak to your colleague, Google meet is available in your gmail only. No need to go anywhere else. You can use multiple gmail accounts using a single window and switch between accounts to check your mail boxes. No need to navigate between the mailboxes. Quick and easy.

Nowadays, corporates have started using G Suite (business solution) for email management for employees. It gives a lot more convenient features for email management. Though you have thousands of emails in your gmail box, you will never find performance issues. Gmail works seamlessly and smoothly without any glitch or difficulty.

Read: How to use GMass Chrome Extension?

0 notes

Text

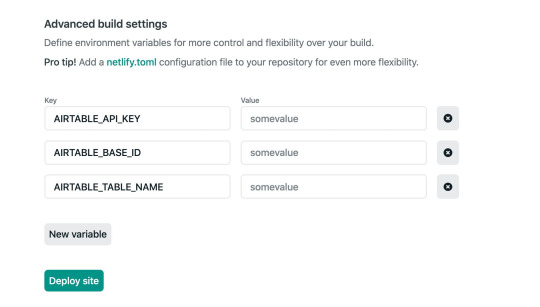

Going Jamstack with React, Serverless, and Airtable

The best way to learn is to build. Let’s learn about this hot new buzzword, Jamstack, by building a site with React, Netlify (Serverless) Functions, and Airtable. One of the ingredients of Jamstack is static hosting, but that doesn’t mean everything on the site has to be static. In fact, we’re going to build an app with full-on CRUD capability, just like a tutorial for any web technology with more traditional server-side access might.

youtube

Why these technologies, you ask?

You might already know this, but the “JAM” in Jamstack stands for JavaScript, APIs, and Markup. These technologies individually are not new, so the Jamstack is really just a new and creative way to combine them. You can read more about it over at the Jamstack site.

One of the most important benefits of Jamstack is ease of deployment and hosting, which heavily influence the technologies we are using. By incorporating Netlify Functions (for backend CRUD operations with Airtable), we will be able to deploy our full-stack application to Netlify. The simplicity of this process is the beauty of the Jamstack.

As far as the database, I chose Airtable because I wanted something that was easy to get started with. I also didn’t want to get bogged down in technical database details, so Airtable fits perfectly. Here’s a few of the benefits of Airtable:

You don’t have to deploy or host a database yourself

It comes with an Excel-like GUI for viewing and editing data

There’s a nice JavaScript SDK

What we’re building

For context going forward, we are going to build an app where you can use to track online courses that you want to take. Personally, I take lots of online courses, and sometimes it’s hard to keep up with the ones in my backlog. This app will let track those courses, similar to a Netflix queue.

Source Code

One of the reasons I take lots of online courses is because I make courses. In fact, I have a new one available where you can learn how to build secure and production-ready Jamstack applications using React and Netlify (Serverless) Functions. We’ll cover authentication, data storage in Airtable, Styled Components, Continuous Integration with Netlify, and more! Check it out →

Airtable setup

Let me start by clarifying that Airtable calls their databases “bases.” So, to get started with Airtable, we’ll need to do a couple of things.

Sign up for a free account

Create a new “base”

Define a new table for storing courses

Next, let’s create a new database. We’ll log into Airtable, click on “Add a Base” and choose the “Start From Scratch” option. I named my new base “JAMstack Demos” so that I can use it for different projects in the future.

Next, let’s click on the base to open it.

You’ll notice that this looks very similar to an Excel or Google Sheets document. This is really nice for being able tower with data right inside of the dashboard. There are few columns already created, but we add our own. Here are the columns we need and their types:

name (single line text)

link (single line text)

tags (multiple select)

purchased (checkbox)

We should add a few tags to the tags column while we’re at it. I added “node,” “react,” “jamstack,” and “javascript” as a start. Feel free to add any tags that make sense for the types of classes you might be interested in.

I also added a few rows of data in the name column based on my favorite online courses:

Build 20 React Apps

Advanced React Security Patterns

React and Serverless

The last thing to do is rename the table itself. It’s called “Table 1” by default. I renamed it to “courses” instead.

Locating Airtable credentials

Before we get into writing code, there are a couple of pieces of information we need to get from Airtable. The first is your API Key. The easiest way to get this is to go your account page and look in the “Overview” section.

Next, we need the ID of the base we just created. I would recommend heading to the Airtable API page because you’ll see a list of your bases. Click on the base you just created, and you should see the base ID listed. The documentation for the Airtable API is really handy and has more detailed instructions for find the ID of a base.

Lastly, we need the table’s name. Again, I named mine “courses” but use whatever you named yours if it’s different.

Project setup

To help speed things along, I’ve created a starter project for us in the main repository. You’ll need to do a few things to follow along from here:

Fork the repository by clicking the fork button

Clone the new repository locally

Check out the starter branch with git checkout starter

There are lots of files already there. The majority of the files come from a standard create-react-app application with a few exceptions. There is also a functions directory which will host all of our serverless functions. Lastly, there’s a netlify.toml configuration file that tells Netlify where our serverless functions live. Also in this config is a redirect that simplifies the path we use to call our functions. More on this soon.

The last piece of the setup is to incorporate environment variables that we can use in our serverless functions. To do this install the dotenv package.

npm install dotenv

Then, create a .env file in the root of the repository with the following. Make sure to use your own API key, base ID, and table name that you found earlier.

AIRTABLE_API_KEY=<YOUR_API_KEY> AIRTABLE_BASE_ID=<YOUR_BASE_ID> AIRTABLE_TABLE_NAME=<YOUR_TABLE_NAME>

Now let’s write some code!

Setting up serverless functions

To create serverless functions with Netlify, we need to create a JavaScript file inside of our /functions directory. There are already some files included in this starter directory. Let’s look in the courses.js file first.

const formattedReturn = require('./formattedReturn'); const getCourses = require('./getCourses'); const createCourse = require('./createCourse'); const deleteCourse = require('./deleteCourse'); const updateCourse = require('./updateCourse'); exports.handler = async (event) => { return formattedReturn(200, 'Hello World'); };

The core part of a serverless function is the exports.handler function. This is where we handle the incoming request and respond to it. In this case, we are accepting an event parameter which we will use in just a moment.

We are returning a call inside the handler to the formattedReturn function, which makes it a bit simpler to return a status and body data. Here’s what that function looks like for reference.

module.exports = (statusCode, body) => { return { statusCode, body: JSON.stringify(body), }; };

Notice also that we are importing several helper functions to handle the interaction with Airtable. We can decide which one of these to call based on the HTTP method of the incoming request.

HTTP GET → getCourses

HTTP POST → createCourse

HTTP PUT → updateCourse

HTTP DELETE → deleteCourse

Let’s update this function to call the appropriate helper function based on the HTTP method in the event parameter. If the request doesn’t match one of the methods we are expecting, we can return a 405 status code (method not allowed).

exports.handler = async (event) => { if (event.httpMethod === 'GET') { return await getCourses(event); } else if (event.httpMethod === 'POST') { return await createCourse(event); } else if (event.httpMethod === 'PUT') { return await updateCourse(event); } else if (event.httpMethod === 'DELETE') { return await deleteCourse(event); } else { return formattedReturn(405, {}); } };

Updating the Airtable configuration file

Since we are going to be interacting with Airtable in each of the different helper files, let’s configure it once and reuse it. Open the airtable.js file.

In this file, we want to get a reference to the courses table we created earlier. To do that, we create a reference to our Airtable base using the API key and the base ID. Then, we use the base to get a reference to the table and export it.

require('dotenv').config(); var Airtable = require('airtable'); var base = new Airtable({ apiKey: process.env.AIRTABLE_API_KEY }).base( process.env.AIRTABLE_BASE_ID ); const table = base(process.env.AIRTABLE_TABLE_NAME); module.exports = { table };

Getting courses

With the Airtable config in place, we can now open up the getCourses.js file and retrieve courses from our table by calling table.select().firstPage(). The Airtable API uses pagination so, in this case, we are specifying that we want the first page of records (which is 20 records by default).

const courses = await table.select().firstPage(); return formattedReturn(200, courses);

Just like with any async/await call, we need to handle errors. Let’s surround this snippet with a try/catch.

try { const courses = await table.select().firstPage(); return formattedReturn(200, courses); } catch (err) { console.error(err); return formattedReturn(500, {}); }

Airtable returns back a lot of extra information in its records. I prefer to simplify these records with only the record ID and the values for each of the table columns we created above. These values are found in the fields property. To do this, I used the an Array map to format the data the way I want.

const { table } = require('./airtable'); const formattedReturn = require('./formattedReturn'); module.exports = async (event) => { try { const courses = await table.select().firstPage(); const formattedCourses = courses.map((course) => ({ id: course.id, ...course.fields, })); return formattedReturn(200, formattedCourses); } catch (err) { console.error(err); return formattedReturn(500, {}); } };

How do we test this out? Well, the netlify-cli provides us a netlify dev command to run our serverless functions (and our front-end) locally. First, install the CLI:

npm install -g netlify-cli

Then, run the netlify dev command inside of the directory.

This beautiful command does a few things for us:

Runs the serverless functions

Runs a web server for your site

Creates a proxy for front end and serverless functions to talk to each other on Port 8888.

Let’s open up the following URL to see if this works:

We are able to use /api/* for our API because of the redirect configuration in the netlify.toml file.

If successful, we should see our data displayed in the browser.

Creating courses

Let’s add the functionality to create a course by opening up the createCourse.js file. We need to grab the properties from the incoming POST body and use them to create a new record by calling table.create().

The incoming event.body comes in a regular string which means we need to parse it to get a JavaScript object.

const fields = JSON.parse(event.body);

Then, we use those fields to create a new course. Notice that the create() function accepts an array which allows us to create multiple records at once.

const createdCourse = await table.create([{ fields }]);

Then, we can return the createdCourse:

return formattedReturn(200, createdCourse);

And, of course, we should wrap things with a try/catch:

const { table } = require('./airtable'); const formattedReturn = require('./formattedReturn'); module.exports = async (event) => { const fields = JSON.parse(event.body); try { const createdCourse = await table.create([{ fields }]); return formattedReturn(200, createdCourse); } catch (err) { console.error(err); return formattedReturn(500, {}); } };

Since we can’t perform a POST, PUT, or DELETE directly in the browser web address (like we did for the GET), we need to use a separate tool for testing our endpoints from now on. I prefer Postman, but I’ve heard good things about Insomnia as well.

Inside of Postman, I need the following configuration.

url: localhost:8888/api/courses

method: POST

body: JSON object with name, link, and tags

After running the request, we should see the new course record is returned.

We can also check the Airtable GUI to see the new record.

Tip: Copy and paste the ID from the new record to use in the next two functions.

Updating courses

Now, let’s turn to updating an existing course. From the incoming request body, we need the id of the record as well as the other field values.

We can specifically grab the id value using object destructuring, like so:

const {id} = JSON.parse(event.body);

Then, we can use the spread operator to grab the rest of the values and assign it to a variable called fields:

const {id, ...fields} = JSON.parse(event.body);

From there, we call the update() function which takes an array of objects (each with an id and fields property) to be updated:

const updatedCourse = await table.update([{id, fields}]);

Here’s the full file with all that together:

const { table } = require('./airtable'); const formattedReturn = require('./formattedReturn'); module.exports = async (event) => { const { id, ...fields } = JSON.parse(event.body); try { const updatedCourse = await table.update([{ id, fields }]); return formattedReturn(200, updatedCourse); } catch (err) { console.error(err); return formattedReturn(500, {}); } };

To test this out, we’ll turn back to Postman for the PUT request:

url: localhost:8888/api/courses

method: PUT

body: JSON object with id (the id from the course we just created) and the fields we want to update (name, link, and tags)

I decided to append “Updated!!!” to the name of a course once it’s been updated.

We can also see the change in the Airtable GUI.

Deleting courses

Lastly, we need to add delete functionality. Open the deleteCourse.js file. We will need to get the id from the request body and use it to call the destroy() function.

const { id } = JSON.parse(event.body); const deletedCourse = await table.destroy(id);

The final file looks like this:

const { table } = require('./airtable'); const formattedReturn = require('./formattedReturn'); module.exports = async (event) => { const { id } = JSON.parse(event.body); try { const deletedCourse = await table.destroy(id); return formattedReturn(200, deletedCourse); } catch (err) { console.error(err); return formattedReturn(500, {}); } };

Here’s the configuration for the Delete request in Postman.

url: localhost:8888/api/courses

method: PUT

body: JSON object with an id (the same id from the course we just updated)

And, of course, we can double-check that the record was removed by looking at the Airtable GUI.

Displaying a list of courses in React

Whew, we have built our entire back end! Now, let’s move on to the front end. The majority of the code is already written. We just need to write the parts that interact with our serverless functions. Let’s start by displaying a list of courses.

Open the App.js file and find the loadCourses function. Inside, we need to make a call to our serverless function to retrieve the list of courses. For this app, we are going to make an HTTP request using fetch, which is built right in.

Thanks to the netlify dev command, we can make our request using a relative path to the endpoint. The beautiful thing is that this means we don’t need to make any changes after deploying our application!

const res = await fetch('/api/courses'); const courses = await res.json();

Then, store the list of courses in the courses state variable.

setCourses(courses)

Put it all together and wrap it with a try/catch:

const loadCourses = async () => { try { const res = await fetch('/api/courses'); const courses = await res.json(); setCourses(courses); } catch (error) { console.error(error); } };

Open up localhost:8888 in the browser and we should our list of courses.

Adding courses in React

Now that we have the ability to view our courses, we need the functionality to create new courses. Open up the CourseForm.js file and look for the submitCourse function. Here, we’ll need to make a POST request to the API and send the inputs from the form in the body.

The JavaScript Fetch API makes GET requests by default, so to send a POST, we need to pass a configuration object with the request. This options object will have these two properties.

method → POST

body → a stringified version of the input data

await fetch('/api/courses', { method: 'POST', body: JSON.stringify({ name, link, tags, }), });