#how to deploy docker image in ecs service

Deploy Docker Image to AWS Cloud ECS Service | Docker AWS ECS Tutorial

Full Video Link: https://youtu.be/ZlR5onuwZzw

A new video in the AWS ECS tutorial series has been published on the @codeonedigest YouTube channel. Learn how to deploy a Docker image to an AWS ECS Fargate service.

A step-by-step guide for beginners on deploying a Docker image to the cloud with AWS ECS (Elastic Container Service). Learn how to deploy a Docker container image to AWS ECS Fargate. What are a cluster and a task definition in ECS? How do you create a container in ECS? How do you run a task definition to deploy a Docker image from a Docker Hub repository? How do you check the health of the cluster and its containers?…

Deploying Containers on AWS ECS with Fargate

Introduction

Amazon Elastic Container Service (ECS) with AWS Fargate enables developers to deploy and manage containers without managing the underlying infrastructure. Fargate eliminates the need to provision or scale EC2 instances, providing a serverless approach to containerized applications.

This guide walks through deploying a containerized application on AWS ECS with Fargate using AWS CLI, Terraform, or the AWS Management Console.

1. Understanding AWS ECS and Fargate

✅ What is AWS ECS?

Amazon ECS (Elastic Container Service) is a fully managed container orchestration service that allows running Docker containers on AWS.

✅ What is AWS Fargate?

AWS Fargate is a serverless compute engine for ECS that removes the need to manage EC2 instances, providing:

Automatic scaling

Per-second billing

Enhanced security (isolation at the task level)

Reduced operational overhead

✅ Why Choose ECS with Fargate?

✔ No need to manage EC2 instances

✔ Pay only for the resources your containers consume

✔ Simplified networking and security

✔ Seamless integration with AWS services (CloudWatch, IAM, ALB)

2. Prerequisites

Before deploying, ensure you have:

AWS Account with permissions for ECS, Fargate, IAM, and VPC

AWS CLI installed and configured

Docker installed to build container images

An ECR (Elastic Container Registry) repository for your image (Step 1 below shows how to create one)

3. Steps to Deploy Containers on AWS ECS with Fargate

Step 1: Create a Dockerized Application

First, create a simple Dockerfile for a Node.js or Python application.

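Example: Node.js Dockerfile:

```dockerfile
FROM node:16-alpine
WORKDIR /app
# Copy the manifest and install dependencies first so this layer is cached between code changes
COPY package.json .
RUN npm install
# Copy the application source
COPY . .
# Document the port the app listens on (must match containerPort in the task definition)
EXPOSE 3000
CMD ["node", "server.js"]
```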

Build and push the image to AWS ECR:

```sh
# Create the repository (only needed once)
aws ecr create-repository --repository-name my-app

# Build the image and tag it with the ECR repository URI
docker build -t my-app .
docker tag my-app:latest <AWS_ACCOUNT_ID>.dkr.ecr.<REGION>.amazonaws.com/my-app:latest

# Authenticate Docker to the registry, then push
aws ecr get-login-password --region <REGION> | docker login --username AWS --password-stdin <AWS_ACCOUNT_ID>.dkr.ecr.<REGION>.amazonaws.com
docker push <AWS_ACCOUNT_ID>.dkr.ecr.<REGION>.amazonaws.com/my-app:latest
```
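The same ECR image URI appears three times in these commands and again in the task definition, so it is easy to mistype. Below is a minimal sketch of a hypothetical helper (`ecr_image_uri` and `registry_host` are illustrative names, not part of any AWS SDK) that builds the URI in the `<account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>` form used above. It assumes the standard commercial AWS partition and does not handle other endpoint domains.

```python
import re


# Hypothetical helper (not an AWS SDK function): builds the ECR image URI used by
# `docker tag` / `docker push` and by the task definition's "image" field.
def ecr_image_uri(account_id: str, region: str, repository: str, tag: str = "latest") -> str:
    """Return <account_id>.dkr.ecr.<region>.amazonaws.com/<repository>:<tag>."""
    if not re.fullmatch(r"\d{12}", account_id):
        raise ValueError("AWS account IDs are 12 digits")
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com/{repository}:{tag}"


def registry_host(image_uri: str) -> str:
    """Return the registry host part of an image URI (the `docker login` target)."""
    return image_uri.split("/", 1)[0]


if __name__ == "__main__":
    uri = ecr_image_uri("123456789012", "us-east-1", "my-app")
    print(uri)                 # full URI for docker tag / docker push
    print(registry_host(uri))  # host for docker login
```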

Step 2: Create an ECS Cluster

Use the AWS CLI to create a cluster:

```sh
aws ecs create-cluster --cluster-name my-cluster
```

Or use Terraform:

```hcl
resource "aws_ecs_cluster" "my_cluster" {
  name = "my-cluster"
}
```
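If you script the deployment in Python rather than the CLI, the same step maps to the ECS `CreateCluster` API. Below is a minimal sketch, assuming you pass in a boto3 ECS client such as `boto3.client("ecs")`. The function name `create_fargate_cluster` is hypothetical, and attaching the `FARGATE` capacity provider is an illustrative choice that the CLI command above does not make.

```python
from typing import Any


def create_fargate_cluster(ecs_client: Any, name: str) -> str:
    """Create an ECS cluster with the FARGATE capacity provider and return its ARN.

    `ecs_client` is assumed to be a boto3 ECS client, e.g. boto3.client("ecs").
    (Hypothetical helper name; the underlying call is ECS CreateCluster.)
    """
    response = ecs_client.create_cluster(
        clusterName=name,
        capacityProviders=["FARGATE"],
    )
    # The response nests the new cluster's details under "cluster".
    return response["cluster"]["clusterArn"]
```

Usage, with AWS credentials and a default region configured: `create_fargate_cluster(boto3.client("ecs"), "my-cluster")`. Taking the client as a parameter keeps the function easy to test with a stub.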

Step 3: Define a Task Definition for Fargate

The task definition specifies how the container runs.

Create a task-definition.json file:

```json
{
  "family": "my-task",
  "networkMode": "awsvpc",
  "executionRoleArn": "arn:aws:iam::<AWS_ACCOUNT_ID>:role/ecsTaskExecutionRole",
  "cpu": "512",
  "memory": "1024",
  "requiresCompatibilities": ["FARGATE"],
  "containerDefinitions": [
    {
      "name": "my-container",
      "image": "<AWS_ACCOUNT_ID>.dkr.ecr.<REGION>.amazonaws.com/my-app:latest",
      "portMappings": [{"containerPort": 3000, "hostPort": 3000}],
      "essential": true
    }
  ]
}
```

Register the task definition:

```sh
aws ecs register-task-definition --cli-input-json file://task-definition.json
```
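Fargate accepts only certain CPU/memory pairs, and an invalid pair is rejected at registration time. Below is a minimal sketch that builds the same task definition as above and checks the pair first. The helper name `fargate_task_definition` and the `FARGATE_SIZES` table are illustrative. The table only covers the 0.25 to 4 vCPU sizes (larger Fargate sizes exist), so check the current AWS documentation before relying on it.

```python
import json

# Fargate task sizes: CPU units -> allowed memory values in MiB.
# Only the 0.25 to 4 vCPU sizes are listed in this sketch; larger sizes exist.
FARGATE_SIZES = {
    256: [512, 1024, 2048],
    512: list(range(1024, 4096 + 1, 1024)),
    1024: list(range(2048, 8192 + 1, 1024)),
    2048: list(range(4096, 16384 + 1, 1024)),
    4096: list(range(8192, 30720 + 1, 1024)),
}


def fargate_task_definition(family: str, image: str, execution_role_arn: str,
                            cpu: int = 512, memory: int = 1024,
                            container_name: str = "my-container",
                            container_port: int = 3000) -> dict:
    """Build a dict shaped like task-definition.json (hypothetical helper)."""
    if memory not in FARGATE_SIZES.get(cpu, []):
        raise ValueError(f"cpu={cpu}/memory={memory} is not a Fargate size known to this sketch")
    return {
        "family": family,
        "networkMode": "awsvpc",                 # Fargate tasks always use awsvpc
        "executionRoleArn": execution_role_arn,  # role ECS uses to pull the image and write logs
        "cpu": str(cpu),                         # the API takes cpu/memory as strings
        "memory": str(memory),
        "requiresCompatibilities": ["FARGATE"],
        "containerDefinitions": [
            {
                "name": container_name,
                "image": image,
                # With awsvpc, hostPort must be omitted or equal to containerPort.
                "portMappings": [{"containerPort": container_port, "hostPort": container_port}],
                "essential": True,
            }
        ],
    }


if __name__ == "__main__":
    td = fargate_task_definition(
        family="my-task",
        image="123456789012.dkr.ecr.us-east-1.amazonaws.com/my-app:latest",
        execution_role_arn="arn:aws:iam::123456789012:role/ecsTaskExecutionRole",
    )
    print(json.dumps(td, indent=2))
```

Redirecting the script's output to task-definition.json (for example `python make_task_def.py > task-definition.json`, where the script name is hypothetical) produces a file for the `register-task-definition` command above.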

Step 4: Create an ECS Service

Use AWS CLI:

```sh
aws ecs create-service \
  --cluster my-cluster \
  --service-name my-service \
  --task-definition my-task \
  --desired-count 1 \
  --launch-type FARGATE \
  --network-configuration "awsvpcConfiguration={subnets=[subnet-xyz],securityGroups=[sg-xyz],assignPublicIp=ENABLED}"
```

Or Terraform:

```hcl
resource "aws_ecs_service" "my_service" {
  name            = "my-service"
  cluster         = aws_ecs_cluster.my_cluster.id
  # Assumes an aws_ecs_task_definition resource named "my_task" is defined elsewhere
  task_definition = aws_ecs_task_definition.my_task.arn
  desired_count   = 1
  launch_type     = "FARGATE"

  network_configuration {
    subnets          = ["subnet-xyz"]
    security_groups  = ["sg-xyz"]
    assign_public_ip = true
  }
}
```
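The `--network-configuration` value is easy to get wrong by hand. Below is a minimal sketch that builds it once as the dict the ECS API expects, and also renders it as the CLI shorthand string used above. The helper names `awsvpc_configuration` and `cli_shorthand` are hypothetical, and the subnet and security group IDs are the same placeholders as in the commands above. A task in a public subnet typically needs `ENABLED` to reach ECR, unless the VPC provides another route such as a NAT gateway or VPC endpoints.

```python
from typing import Sequence


def awsvpc_configuration(subnets: Sequence[str], security_groups: Sequence[str],
                         assign_public_ip: bool = True) -> dict:
    """networkConfiguration value for ECS create_service / run_task (hypothetical helper)."""
    if not subnets:
        raise ValueError("at least one subnet is required")
    return {
        "awsvpcConfiguration": {
            "subnets": list(subnets),
            "securityGroups": list(security_groups),
            "assignPublicIp": "ENABLED" if assign_public_ip else "DISABLED",
        }
    }


def cli_shorthand(config: dict) -> str:
    """Render the same configuration in AWS CLI shorthand syntax."""
    vpc = config["awsvpcConfiguration"]
    return "awsvpcConfiguration={subnets=[%s],securityGroups=[%s],assignPublicIp=%s}" % (
        ",".join(vpc["subnets"]),
        ",".join(vpc["securityGroups"]),
        vpc["assignPublicIp"],
    )
```

With boto3, the dict is passed directly: `boto3.client("ecs").create_service(cluster="my-cluster", serviceName="my-service", taskDefinition="my-task", desiredCount=1, launchType="FARGATE", networkConfiguration=awsvpc_configuration(["subnet-xyz"], ["sg-xyz"]))`.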

Step 5: Configure a Load Balancer (Optional)

If the service must receive traffic from the internet, or you want to spread requests across several tasks, put an Application Load Balancer (ALB) in front of it.

Create an ALB in your VPC.

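Create a target group with target type ip (required for Fargate tasks, which use the awsvpc network mode) and attach it to the ECS service.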

Configure a listener rule for routing traffic.
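When the service is attached to the target group, ECS needs the target group ARN plus the container name and port to register. These must match the task definition. Below is a minimal sketch of a hypothetical helper, `service_load_balancers`, that builds the `loadBalancers` argument accepted by ECS `create_service` and checks it against a task definition dict like the one in Step 3. The target group ARN is a placeholder.

```python
def service_load_balancers(task_definition: dict, target_group_arn: str,
                           container_name: str, container_port: int) -> list:
    """loadBalancers value for ECS create_service, validated against the task definition.

    Hypothetical helper: it only checks names and ports; it does not call AWS.
    """
    containers = {c["name"]: c for c in task_definition["containerDefinitions"]}
    if container_name not in containers:
        raise ValueError(f"no container named {container_name!r} in the task definition")
    ports = {pm["containerPort"] for pm in containers[container_name].get("portMappings", [])}
    if container_port not in ports:
        raise ValueError(f"container {container_name!r} does not map port {container_port}")
    return [{
        "targetGroupArn": target_group_arn,
        "containerName": container_name,
        "containerPort": container_port,
    }]
```

The returned list would be passed as `loadBalancers=...` alongside the other `create_service` arguments. Validating locally catches name or port mismatches before the deployment starts.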

4. Monitoring & Scaling

🔹 Monitor ECS Service

Use AWS CloudWatch to monitor logs and performance:

```sh
aws logs describe-log-groups
```
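The task definition in Step 3 has no logConfiguration, so container output will not reach CloudWatch Logs. Below is a minimal sketch that adds the awslogs log driver to every container in a task-definition dict. The helper names and the log group `/ecs/my-app` are hypothetical. The log group is assumed to exist already (for example, created with `aws logs create-log-group --log-group-name /ecs/my-app`).

```python
import copy


def awslogs_configuration(log_group: str, region: str, stream_prefix: str) -> dict:
    """logConfiguration block for the awslogs log driver (hypothetical helper)."""
    return {
        "logDriver": "awslogs",
        "options": {
            "awslogs-group": log_group,
            "awslogs-region": region,
            # Required on Fargate; streams are named prefix/container-name/task-id.
            "awslogs-stream-prefix": stream_prefix,
        },
    }


def with_logging(task_definition: dict, log_group: str, region: str,
                 stream_prefix: str = "ecs") -> dict:
    """Return a copy of the task definition with awslogs set on every container."""
    td = copy.deepcopy(task_definition)  # leave the caller's dict untouched
    for container in td["containerDefinitions"]:
        container["logConfiguration"] = awslogs_configuration(log_group, region, stream_prefix)
    return td
```

After re-registering the updated task definition and redeploying the service, each task's output appears as a log stream under the chosen group.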

🔹 Auto Scaling ECS Tasks

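Register the service as a scalable target. A scaling policy, for example one created with aws application-autoscaling put-scaling-policy, is then attached to this target:

```sh
aws application-autoscaling register-scalable-target \
  --service-namespace ecs \
  --scalable-dimension ecs:service:DesiredCount \
  --resource-id service/my-cluster/my-service \
  --min-capacity 1 \
  --max-capacity 5
```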
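Registering the scalable target only sets the bounds. Scaling happens once a policy is attached. Below is a minimal sketch that builds the keyword arguments for Application Auto Scaling `put_scaling_policy` as a target-tracking policy on average service CPU. The helper names, the policy name pattern, the 50% target, and the 60-second cooldowns are illustrative assumptions, not recommendations.

```python
def ecs_resource_id(cluster: str, service: str) -> str:
    """Resource ID format used for ECS services: service/<cluster>/<service>."""
    return f"service/{cluster}/{service}"


def cpu_target_tracking_policy(cluster: str, service: str, target_cpu: float = 50.0,
                               scale_in_cooldown: int = 60,
                               scale_out_cooldown: int = 60) -> dict:
    """kwargs for application-autoscaling put_scaling_policy (hypothetical helper)."""
    if not 0 < target_cpu <= 100:
        raise ValueError("target_cpu is a percentage between 0 and 100")
    return {
        "PolicyName": f"{service}-cpu-target-tracking",  # illustrative name
        "ServiceNamespace": "ecs",
        "ResourceId": ecs_resource_id(cluster, service),
        "ScalableDimension": "ecs:service:DesiredCount",
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": float(target_cpu),
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "ECSServiceAverageCPUUtilization",
            },
            "ScaleInCooldown": scale_in_cooldown,
            "ScaleOutCooldown": scale_out_cooldown,
        },
    }
```

Usage: `boto3.client("application-autoscaling").put_scaling_policy(**cpu_target_tracking_policy("my-cluster", "my-service"))`. The ResourceId matches the one passed to `register-scalable-target` above.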

5. Cleaning Up Resources

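After testing, clean up resources to avoid unnecessary charges:

```sh
aws ecs delete-service --cluster my-cluster --service my-service --force
# A cluster that still contains services cannot be deleted, so wait for the service to become inactive
aws ecs wait services-inactive --cluster my-cluster --services my-service
aws ecs delete-cluster --cluster my-cluster
aws ecr delete-repository --repository-name my-app --force
```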
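The same teardown can be scripted with boto3. Below is a minimal sketch that keeps the order of the CLI commands above: delete the service, wait for it to become inactive, then delete the cluster and the repository. The function name `clean_up` is hypothetical, and the ECS and ECR clients (for example `boto3.client("ecs")` and `boto3.client("ecr")`) are passed in by the caller.

```python
from typing import Any


def clean_up(ecs: Any, ecr: Any, cluster: str, service: str, repository: str) -> None:
    """Tear down the tutorial resources in dependency order (hypothetical helper)."""
    # force=True lets ECS delete a service that still has a non-zero desired count.
    ecs.delete_service(cluster=cluster, service=service, force=True)
    # Block until the service is INACTIVE; deleting a cluster with services fails.
    ecs.get_waiter("services_inactive").wait(cluster=cluster, services=[service])
    ecs.delete_cluster(cluster=cluster)
    # force=True deletes the repository even if it still contains images.
    ecr.delete_repository(repositoryName=repository, force=True)
```

Usage: `clean_up(boto3.client("ecs"), boto3.client("ecr"), "my-cluster", "my-service", "my-app")`.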

Conclusion

AWS ECS with Fargate simplifies container deployment by eliminating the need to manage servers. By following this guide, you can deploy scalable, cost-efficient, and secure applications using serverless containers.

WEBSITE: https://www.ficusoft.in/aws-training-in-chennai/

AWS and machine learning

AWS (Amazon Web Services) is a collection of remote computing services (also called web services) that make up a cloud computing platform, offered by Amazon.com. These services operate from many geographic regions around the world.

AWS provides a variety of services for machine learning, including:

Amazon SageMaker is a fully-managed platform for building, training, and deploying machine learning models.

Amazon Machine Learning is a service that makes it easy for developers of all skill levels to use machine learning (it is now a legacy service that is no longer available to new customers).

AWS Deep Learning AMIs, pre-built Amazon Machine Images (AMIs) that make it easy to get started with deep learning on Amazon EC2.

AWS Deep Learning Containers, Docker images pre-installed with deep learning frameworks to make it easy to run distributed training on Amazon ECS.

AWS also provides services for data storage, data processing, and data analysis, which are essential for machine learning workloads. These include Amazon S3, Amazon Kinesis, Amazon Redshift, and Amazon QuickSight.

In summary, AWS provides a comprehensive set of services that allow developers and data scientists to build, train, and deploy machine learning models easily and at scale.

AWS also provides several other services that can be used in conjunction with machine learning. These include:

Amazon Comprehend is a natural language processing service that uses machine learning to extract insights from text.

Amazon Transcribe is a service that uses machine learning to transcribe speech to text.

Amazon Translate is a service that uses machine learning to translate text from one language to another.

Amazon Rekognition is a service that uses machine learning to analyze images and videos, detect objects, scenes, and activities, and recognise faces, text, and other content.

AWS also supports a number of tools and frameworks that can be used to build and deploy machine learning models, such as:

TensorFlow is an open-source machine learning framework that is widely used for building and deploying neural networks.

Apache MXNet, a deep learning framework that is fully supported on AWS.

PyTorch is an open-source machine-learning library for Python that is also fully supported on AWS.

AWS SDKs for several programming languages, including Python, Java, and .NET, which make it easy to interact with AWS services from your application.

AWS also offers programs and resources to help developers and data scientists learn about machine learning. These include the Machine Learning University, which provides a variety of courses, labs, and tutorials on machine learning topics, and the AWS Machine Learning Blog, which features articles and case studies on the latest developments in machine learning and on using AWS services for machine learning workloads.

In summary, AWS provides a wide range of services, tools, and resources for building and deploying machine learning models, making it a powerful platform for machine learning workloads at any scale.

AWS Fargate startup time

Everyone's talking about #serverless these days. Technologies like kubeless.io, Fission, and AWS Fargate promise to relieve app developers of the operational complexity of running entire applications in a reliable, resilient, and scalable way, by hiding the underlying virtual computing resources. Curious as I am, I deployed a simple demo app using AWS Fargate to find out how it works. Naturally, I was also looking to get insights into my application and for an efficient way to include Dynatrace in the deployment process.

Application architecture

I wrote a simple Spring Boot application called bookkeeper that manages book records in an AWS RDS instance running the MariaDB engine. A Node.js web application queries the Spring Boot application for book records and displays the results in an Express web frontend. For ease of use and the use case at hand, I containerized both application components using Docker.

What rhymes with Stargate? Fargate.

Fargate is short for 'AWS manages compute resources so you don't have to worry about that'. To be more precise, AWS Fargate allows you to run containers without having to manage underlying EC2 instances, removing the need to choose server types and think about scale.

Make sure you work in the North Virginia region. At the time of writing, Fargate was only available in that region (it has since expanded to many other regions). Unfortunately, the offered first-run wizard didn't allow me to reuse existing subnets and security groups, and that was not practical in my case: I wanted to reuse them so that my already deployed and monitored webapp could talk to the service managed by ECS.

I followed this step-by-step approach to deploy my dockerized bookkeeper application using Fargate:

Create an AWS ECR (Elastic Container Registry) repository.

Create a cluster and select the Networking only option.

Create a new task definition and select the Fargate launch type.

Set the name, roles, and resource specification for the task, appropriate for the containerized service you're using.

Add the container definition, referencing the previously uploaded Docker image in AWS ECR and specifying memory limits and port forwardings.

Create a service: select the FARGATE launch type, the previously created task as the task definition, and all other properties to your needs (e.g., I needed the service to run in a specific subnet to make it accessible to other parts of my application).

This article is part 1 of a 4-part guide to running Docker containers on AWS ECS. ECS stands for Elastic Container Service. It is a managed container service that can run Docker containers. Although AWS also offers container management with Kubernetes (EKS), it has its own proprietary solution as well (ECS).

The guide will cover:

Creating the ECS cluster.

Provisioning an image registry (ECR) and pushing Docker images to it.

Deploying containers to the cluster using task and service definitions.

Creating a pipeline to update the services running on the ECS cluster.

This guide covers how to create the ECS cluster.

Requirements/Prerequisites

Before proceeding with this guide, the reader must have:

An AWS account.

A user on the account with permissions to provision resources.

An EC2 SSH key pair.

A VPC and subnets (optional). A guide to creating a VPC and subnets (AWS network architecture) can be found at the link below: Create AWS VPC With CloudFormation

Creating the AWS ECS Cluster

There are two types of ECS clusters we can create:

ECS with Fargate cluster: This cluster lets the user run containers without having to provision and manage EC2 instances/servers. It is basically a serverless architecture.

ECS with EC2 instances cluster: This cluster lets the user run containers on provisioned EC2 instances, so the servers must be managed.

Create an AWS ECS Cluster with Fargate Option

We can create the cluster manually, or automatically using either CloudFormation or Terraform. For this article, I will create the cluster both with CloudFormation and manually.

CloudFormation: The CloudFormation template below creates an ECS cluster with Fargate as the capacity provider and Container Insights enabled. The reader should modify the template settings to suit their specific requirements. Key aspects to change are:

Tags.

The cluster settings.
```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: "Create ECS fargate cluster"
Parameters:
  Name:
    Type: String
    Description: The name of the ECS Cluster
Resources:
  ECSCluster:
    Type: 'AWS::ECS::Cluster'
    Properties:
      ClusterName: !Ref Name
      ClusterSettings:
        - Name: containerInsights
          Value: enabled
      CapacityProviders:
        - FARGATE
      Tags:
        - Key: "Name"
          Value: "test-ecs"
        - Key: "CreatedBy"
          Value: "Maureen Barasa"
        - Key: "Environment"
          Value: "test"
Outputs:
  ECS:
    Description: The created ECS Cluster
    Value: !Ref ECSCluster
```

Manually: To create the cluster manually, follow the steps below.

On the Elastic Container Service console, click Create cluster.

Then, for the cluster template, select Powered by Fargate and click Next step.

Finally, configure the cluster settings and click Create cluster. N/B: You can create a new VPC for the cluster, but this is optional.

You now have your ECS Fargate cluster running.

Create an AWS ECS Cluster with EC2 Option

We can also do this using CloudFormation, Terraform, or manually.

CloudFormation: For CloudFormation, use the template below.

```yaml
AWSTemplateFormatVersion: "2010-09-09"
Description: "Create ECS cluster backed by EC2 instances"
Parameters:
  Name:
    Type: String
    Description: The name of the ECS Cluster
  VPC:
    Type: String
    Description: The vpc to launch the service
    Default: vpc-ID
  PrivateSubnet01:
    Type: String
    Description: The subnet where to launch the ecs instances
    Default: subnet-ID
  PrivateSubnet02:
    Type: String
    Description: The subnet where to launch the ecs instances
    Default: subnet-ID
  InstanceType:
    Type: String
    Description: The EC2 instance type
    Default: "t2.micro"
  MinSize:
    Type: String
    Description: The minimum number of EC2 instances in the Auto Scaling group
    Default: "1"
  MaxSize:
    Type: String
    Description: The maximum number of EC2 instances in the Auto Scaling group
    Default: "2"
  DesiredSize:
    Type: String
    Description: The desired number of EC2 instances in the Auto Scaling group
    Default: "1"
Resources:
  IAMInstanceRole:
    Type: 'AWS::IAM::Role'
    Properties:
      Description: The ECS Instance Role
      RoleName: ecsInstanceRole2
      AssumeRolePolicyDocument:
        Version: "2012-10-17"
        Statement:
          - Effect: Allow
            Principal:
              Service:
                - ec2.amazonaws.com
            Action:
              - 'sts:AssumeRole'
      ManagedPolicyArns:
        - arn:aws:iam::aws:policy/service-role/AmazonEC2ContainerServiceforEC2Role
        - arn:aws:iam::aws:policy/CloudWatchAgentServerPolicy
        - arn:aws:iam::aws:policy/AmazonS3ReadOnlyAccess
      Tags:
        - Key: "Environment"
          Value: "test"
        - Key: "createdBy"
          Value: "Maureen Barasa"
        - Key: "Name"
          Value: "ecsInstanceRole2"
  IAMInstanceProfile:
    Type: AWS::IAM::InstanceProfile
    Properties:
      InstanceProfileName: ecsInstanceRole2
      Roles:
        - !Ref IAMInstanceRole
  ECSSecurityGroup:
    Type: "AWS::EC2::SecurityGroup"
    Properties:
      GroupDescription: "Security Group to control access to the ECS cluster"
      GroupName: "test-ECS-SG"
      VpcId: !Ref VPC
      SecurityGroupIngress:
        - CidrIp: 0.0.0.0/0
          FromPort: 80
          IpProtocol: "tcp"
          ToPort: 80
        - CidrIp: 0.0.0.0/0
          FromPort: 443
          IpProtocol: "tcp"
          ToPort: 443
      Tags:
        - Key: "Name"
          Value: "test-ECS-SG"
        - Key: "CreatedBy"
          Value: "Maureen Barasa"
        - Key: "Environment"
          Value: "test"
  ECSCluster:
    Type: 'AWS::ECS::Cluster'
    Properties:
      ClusterName: !Ref Name
      ClusterSettings:
        - Name: containerInsights
          Value: enabled
      Tags:
        - Key: "Name"
          Value: "test-ecs"
        - Key: "CreatedBy"
          Value: "Maureen Barasa"
        - Key: "Environment"
          Value: "test"
  ECSAutoScalingGroup:
    Type: AWS::AutoScaling::AutoScalingGroup
    Properties:
      VPCZoneIdentifier:
        - !Ref PrivateSubnet01
        - !Ref PrivateSubnet02
      LaunchConfigurationName: !Ref LaunchConfiguration
      MinSize: !Ref MinSize
      MaxSize: !Ref MaxSize
      DesiredCapacity: !Ref DesiredSize
      HealthCheckGracePeriod: 300
      Tags:
        - Key: "Name"
          Value: "test-ecs"
          PropagateAtLaunch: true
        - Key: "CreatedBy"
          Value: "MaureenBarasa"
          PropagateAtLaunch: true
        - Key: "Environment"
          Value: "test"
          PropagateAtLaunch: true
  # Launch configurations are a legacy feature; AWS recommends launch templates for new setups.
  LaunchConfiguration:
    Type: AWS::AutoScaling::LaunchConfiguration
    Properties:
      ImageId: "ami-ID"
      SecurityGroups:
        - !Ref ECSSecurityGroup
      InstanceType: !Ref InstanceType
      IamInstanceProfile: !Ref IAMInstanceProfile
      KeyName: "test-key"
      # ECS_CLUSTER must match the cluster name so the instance joins the cluster created by this stack
      UserData:
        Fn::Base64: !Sub |
          #!/bin/bash
          echo ECS_CLUSTER=${ECSCluster} >> /etc/ecs/ecs.config
          echo ECS_BACKEND_HOST= >> /etc/ecs/ecs.config
      BlockDeviceMappings:
        - DeviceName: "/dev/xvda"
          Ebs:
            Encrypted: false
            VolumeSize: 20
            VolumeType: "gp2"
            DeleteOnTermination: true
Outputs:
  IAMProfile:
    Description: The created EC2 Instance Role
    Value: !Ref IAMInstanceProfile
  AutoScalingGroup:
    Description: The ECS Autoscaling Group
    Value: !Ref ECSAutoScalingGroup
  ECS:
    Description: The created ECS Cluster
    Value: !Ref ECSCluster
```

In the Parameters section of the template, enter the inputs specific to your cluster. These include:

The VPC and subnets.

The instance type and the Auto Scaling group scaling requirements.

In the Resources section, take care to customize:

Resource names and tags.

The key name of your EC2 launch configuration (use a key pair you have generated).

The AMI ID for your EC2 instances (use an Amazon ECS-optimized AMI available in your account).

After CloudFormation finishes creating the stack, you should have an ECS cluster with a registered, active EC2 container instance.

Manually: To create the cluster manually, follow the steps below.

Create an ECS instance role with the following AWS managed policies: AmazonS3ReadOnlyAccess, CloudWatchAgentServerPolicy, and AmazonEC2ContainerServiceforEC2Role.

Edit the role's trust relationship and add the JSON trust policy below.

```json
{
  "Version": "2008-10-17",
  "Statement": [
    {
      "Sid": "",
      "Effect": "Allow",
      "Principal": {
        "Service": "ec2.amazonaws.com"
      },
      "Action": "sts:AssumeRole"
    }
  ]
}
```

Then, on the Elastic Container Service console, click Create cluster. This time, in the Select cluster template window, choose the EC2 Linux + Networking option instead of Powered by Fargate. Click Next step.
Next, you need to configure your cluster. The cluster configuration has three main sections:

Cluster name.

Instance configuration: ensure you select the instance configuration that matches your requirements.

Networking: here, there is an option to create a new VPC and subnets if you have not created your own. If you have already created a network architecture, you can use the existing setup. The same applies to the security group for the cluster: you can create a new one or choose an existing one.

Then, for the container instance IAM role, select the role you created above. Enable Container Insights by checking the box next to Enable container insights.

Finally, click Create cluster. You will have a cluster up and running in a few minutes.

Below are links to the next parts of this series. Part 2 covers uploading Docker images to ECR, and part 3 covers deploying containers to the cluster using task and service definitions:

Running Docker Containers on AWS ECS – Upload Docker Images to ECR – Part 2

AWS ECS: Deploying Containers using Task and Service Definitions – Part 3

Important Links

AWS ECS Manual

ECS CloudFormation guide

ECS IAM Role Creation

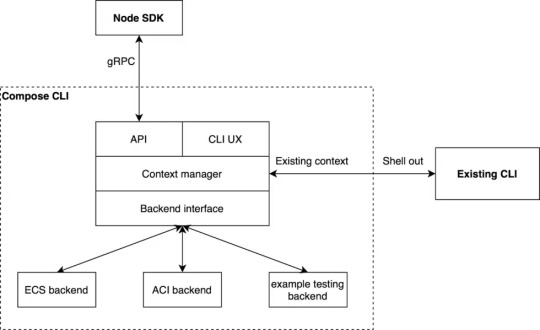

Docker Announces Open Source Compose for AWS ECS & Microsoft ACI

Docker has announced that the code for the Microsoft Azure Container Instances (ACI) and Amazon Elastic Container Service (ECS) integrations will be open-sourced. For the first time, Docker has made Compose available for the cloud, in addition to enabling an open community for evolving the Compose standard.

Docker is an open-source containerization platform. It allows programmers to bundle applications into containers, standardized executable components that combine application source code with the OS libraries and dependencies needed to run that code in any context.

Containers make it easier to ship distributed applications, and they're becoming more popular as companies move to cloud-native development and hybrid multi-cloud setups. Developers can create containers without Docker, but the platform makes building, deploying, and managing containers easier, simpler, and safer.

Docker is a free toolkit that enables developers to make use of a single API to build, operate, update, deploy, and stop containers using simple commands and work-saving automation. Docker containers are live instances of Docker images that are currently executing.

Containers are ephemeral, live, executable content, whereas Docker images are read-only files. Users can interact with running containers, and admins can use Docker commands to change their configuration and state.

Why are containers popular, and how do they work?

Docker adoption has surged and continues to grow for the reasons described below. According to Docker's reports, there are 11 million developers and 13 billion container image downloads per month. Containers are made possible by process isolation and virtualization features built into the Linux kernel.

These features, such as control groups (cgroups) for allocating resources among processes and namespaces for restricting a process's access to or visibility into other resources or areas of the system, allow multiple application components to share the resources of a single instance of the host operating system. This is similar to how a hypervisor allows multiple virtual machines (VMs) to share the CPU, memory, and other resources of a single hardware server.

As a result, container technology provides many of the functions and benefits of virtual machines (VMs), plus significant additional benefits: cost-effective scalability, application isolation, and disposability.

Lighter

Containers are lighter than VMs because they don't carry the payload of a whole OS instance and hypervisor; they include only the OS processes and dependencies required to run the code.

Container sizes are measured in megabytes (as opposed to gigabytes for typical VMs), allowing for better utilization of hardware resources and faster startup times.

Greater resource efficiency

You can run many more copies of an application on the same hardware with containers than with VMs, which can reduce your cloud spending.

Effortless operation

Containers are faster and easier to deploy, provision, and restart than virtual machines, which improves developer productivity. This makes them a better fit for development teams following Agile and DevOps approaches, as they can be used in continuous integration and continuous delivery (CI/CD) pipelines.

Reliable Docker deployment consulting can make the implementation process easier. Other advantages cited by container users include higher app quality, faster response to market changes, and more.

Open Source Compose

Docker is working on two fronts to make it easier to get applications running in the cloud. First, the Compose specification was transferred to a community effort. This will let Compose evolve with the community and better meet the needs of more users while remaining platform agnostic.

Second, Docker has been working with Amazon and Microsoft on CLI integrations for Amazon ECS and Microsoft ACI that let you deploy Compose applications directly to the cloud using docker compose up.

Docker wanted to ensure that existing CLI commands were not affected when it implemented these integrations. It also wanted an architecture that would allow adding more backends and SDKs in popular languages.

Image Source: Docker Blog

The Compose CLI chooses which backend to use for a command or API call based on the Docker context the user selects. Commands that use existing contexts are passed through transparently to the existing CLI.

The backend interface makes it possible to create a backend for any container runtime, so users get the same Docker CLI UX as before while also benefiting from the new APIs and SDK. The Compose CLI can also serve a gRPC API that offers functionality equivalent to the CLI commands.

Docker uses gRPC because it makes it possible to create high-quality SDKs in popular languages such as Node.js, Python, and Go. Docker currently only has a Node SDK, which provides single-container management on ACI, but it plans to add Compose support, ECS support, and more language SDKs in the near future.

VS Code has already implemented its Docker experience on ACI using the Node SDK. Microsoft Windows Server now supports Docker containerization, and most cloud providers also offer services that help developers create and run Docker-based applications.

Source: 9series

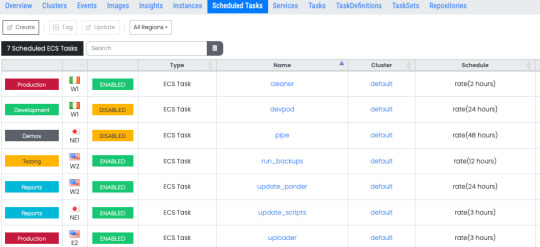

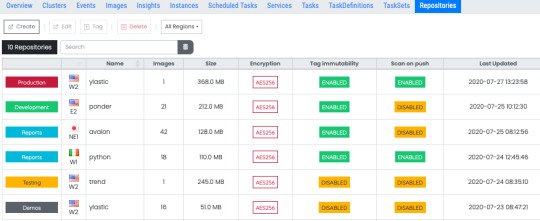

Container Management - AWS ECS and ECR

Cross-account and cross-region management for your clusters, services, tasks, images, task sets, task definitions, scheduled tasks, container instances, vulnerability scans, container insights, repositories, and events is now available in Ylastic. Container resources are aggregated within your organizational units, making it easier to understand your environment and to access all the pertinent information in one place. There are a lot of different components in ECS. This blog post walks you through each component and how you can manage those resources in Ylastic. ECS services let you keep a specified number of tasks running in a cluster at all times. All your services are displayed in one place, aggregated across regions and accounts in an OU. Select a service to view further details and its associated configuration. The latest CPU and memory utilization metrics for each service are displayed in a sparkline graph next to the service name; click the sparkline to display the related CloudWatch metric charts. Click a button to generate a diagram of the service, its tasks, and associated AWS components such as the VPC network, logs, and IAM roles, along with their relationships and connections.

Clusters are the logical grouping unit in ECS. A cluster can contain services and standalone tasks, and those tasks can use either the Fargate or the EC2 launch type. Capacity can be provisioned for a cluster through either Auto Scaling groups or a Fargate capacity provider. All information for a cluster is available in one place by selecting the cluster. The latest CPU and memory utilization metrics for each cluster are displayed in a sparkline graph next to the cluster name; click the sparkline to display the related CloudWatch metric charts. Click a button to generate a diagram for the cluster.

Tasks are the fundamental units of work in ECS. Tasks are launched from a template called the task definition, much as Auto Scaling instances are launched from a launch configuration. You can run as many tasks as you want from a single task definition. Tasks can be one-off, performing a certain job and then ceasing to exist, or they can run as part of a service, which keeps them running continuously in a cluster. View all the task information in one place, and easily generate a diagram for the task that shows all associated AWS components, such as the VPC network, logs, and IAM roles, along with their relationships and connections.

ECS tasks can also be run on a regular, scheduled basis. This allows you to launch container services that you need to run only at certain times. The scheduled tasks page gives you access to all the schedules and their respective configurations in your environment. Select any schedule to view all the tasks running as part of that schedule.

Task Definitions provide a definition of your tasks - a template to describe the structure of your container as well as how the container should be provisioned. It specifies the docker images to use, the CPU and memory allocation for your container, any needed environment variables, exposed ports, network types and more. View detailed information on your task definitions and details of all the clusters and tasks running that are currently using each task definition.

The actual docker image that will be used to start your containers is specified in the task definition. Images can be stored in a repository on AWS ECR and they are pulled as needed when containers are instantiated. View all the images being used by all the containers that are running your task and services in one place. You can manually scan an image or have ECR automatically scan the image for known vulnerabilities in software packages when you push the image to the repository. After a while it can get really confusing to know which images are being used all over your infrastructure and if they are affected by any vulnerabilities that need to be addressed ASAP. No need to hunt through a lot of pages trying to find out your current security status. One place to find all the information you need to address the security concerns from running outdated packages.

View a detailed vulnerability scan listing for any image from one place. Links to the specific CVE listed in the security advisory are also available.

The images you use for your containers can be stored in ECR and pulled from that repository when your containers are instantiated to run tasks. View all your repositories and their configuration information aggregated in one place. Select a repository to see each image in that repository, its details as well as vulnerability scan information for that image.

You need compute capacity to actually host your Docker containers and run them either as tasks or as long-running services. AWS gives you two ways to accomplish this: traditional EC2 instances or serverless compute with the Fargate launch type. If you are using the EC2 launch type for your application, you can view and interact with all the container instances in your environment in one place and quickly view all associated information.

If you wish to use EC2 instances, you need to run instances with the ECS agent installed. AWS provides ECS-optimized AMIs in several variants, which are highly recommended as the base for your ECS container instances. Access and launch instances from these optimized AMIs easily, without hunting through SSM parameters and AMI pages. The latest updated AMIs from the ECS team are available in one place.

As you release new versions of your applications, you need a way to deploy them to your containers on ECS. You can perform a rolling update using the ECS service scheduler (the default deployment type on ECS). You can also hand deployments to another controller: AWS CodeDeploy, or an external tool running in your own environment, such as Jenkins. These two options rely on task sets. A task set is a group of tasks within a service that all run the same task definition revision, and the deployment controller moves the service from one task set to another during a deployment. The task sets page in Ylastic gives you access to all your task set information and configuration.
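During a rolling update, the ECS service scheduler keeps the number of running tasks between two bounds set by the service's `minimumHealthyPercent` and `maximumPercent` deployment settings (100 and 200 by default), where the lower bound is rounded up and the upper bound rounded down. The hypothetical helper below just does that arithmetic so you can see how many tasks a given setting allows; it is a sketch, not part of any AWS tool.

```shell
#!/usr/bin/env bash
# Print "lower upper" task-count bounds for a rolling update:
#   lower = ceil(desiredCount * minimumHealthyPercent / 100)
#   upper = floor(desiredCount * maximumPercent / 100)
rolling_update_bounds() {
  local desired="$1" min_healthy_pct="$2" max_pct="$3"
  # Integer ceiling division: (a + b - 1) / b with b = 100.
  local lower=$(( (desired * min_healthy_pct + 99) / 100 ))
  # Shell integer division already rounds down.
  local upper=$(( (desired * max_pct) / 100 ))
  echo "$lower $upper"
}

# A new deployment of the current task definition can be forced with:
#   aws ecs update-service --cluster <cluster> --service <service> --force-new-deployment
```

For example, `rolling_update_bounds 3 50 150` prints `2 4`: at least two tasks stay running while up to four may run during the switch.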

Container launches, tasks and services starting and stopping, ECS agents connecting and disconnecting, and other state changes in your cloud environment can quickly cause information overload. The events page in Ylastic aggregates ECS events from all your running clusters, services, and tasks, giving you a quick overview of the state of your Amazon ECS resources.

Select any ECS resource on any of the above pages, and view all associated information in one place. This includes CloudTrail events, configuration details, services, tasks, audit events, and more.

CloudWatch Container Insights collects, aggregates, and summarizes metrics and logs from your containerized applications and microservices. The collected metrics include utilization of container resources such as CPU, memory, disk, and network. Browse charts for all of these metrics in one place.

There are a lot of moving parts involved in running a containerized application in the cloud. Ylastic generates a quick overview of your ECS environment from your infrastructure with one click. Diagrams of your ECS clusters, services, and tasks show the associated ECS resources and any other AWS components in use, along with the relationships between them and the containing VPC network.

Easy to use, intuitive container management. Global CloudOps for your AWS cloud environment - Govern, manage, schedule and diagram your resources.

DevOps Jenkins Engineer

DevOps Jenkins Engineer, Jupiter, FL, 6-month contract.

Required: 7-8 years of experience building Jenkins pipelines; familiarity with Jenkins scripting and associated shell scripting on Linux; Bitbucket (how to configure it for Jenkins hooks); Docker (how to build images and deploy them using a Jenkins pipeline); AWS CloudFormation; AWS CLI (how to incorporate it into shell scripts for deploying to AWS); experience in an Agile Kanban or Scrum environment. Work in the Jupiter, FL office once NextEra employees return to the office.

Recommended: AWS Developer or Architect certification; working knowledge of firewall policies and Active Directory; Nginx and Vouch for authentication; SonarQube for code coverage reporting; Python, TypeScript, or Java knowledge a plus; knowledge of these AWS services: API Gateway, CloudTrail, CloudWatch, Cognito, DMS, ECR, ECS, EC2, IAM, Lambda, RDS, Route 53, SageMaker, Secrets Manager, SNS, S3, VPC, X-Ray.

Reference: DevOps Jenkins Engineer jobs from Latest listings added - JobsAggregation http://jobsaggregation.com/jobs/technology/devops-jenkins-engineer_i10432

(via Deploy Docker Image to AWS Cloud ECS Service | Docker AWS ECS Tutorial)

Full Video Link: https://youtu.be/ZlR5onuwZzw

Hi, a new #video in the #AWS #ECS tutorial series is published on the @codeonedigest #youtube channel. Learn how to deploy a #docker image to an AWS ECS Fargate service.

#deploydockerimageinaws #deploydockerimageinamazoncloud #rundockerimageincloudecsservice #whatisecsservice #howtodeploydockerimageinecsservice #howtorundockerimageinecsservice #awsecstutorial #awsecsfargate #awsecsdemo #awsecsfargatetutorial #awsecsdockercomposetutorial #awsecsservice #awsecstaskdefinition #deploydockercontainertoaws #deploydockerimagetoawsec2 #deploydockerimagetoaws #deployimagetoawsfargate

DevOps and Deployment

DevOps and Deployment: Streamlining Software Delivery

Introduction

Brief overview of DevOps and its role in modern software development.

Importance of automation and continuous delivery in deployment.

Key benefits: faster releases, improved collaboration, and increased reliability.

1. What is DevOps?

Definition and purpose.

Core principles: Collaboration, Automation, Continuous Integration (CI), Continuous Deployment (CD), and Monitoring.

2. The DevOps Deployment Lifecycle

Plan: Agile methodologies and backlog prioritization.

Develop: Version control (Git, GitHub, GitLab, Bitbucket).

Build & Test: CI/CD pipelines, automated testing.

Release & Deploy: Automated deployments with containerization (Docker, Kubernetes).

Operate & Monitor: Logging, monitoring, and feedback loops.

3. CI/CD: The Backbone of DevOps Deployment

Continuous Integration (CI)

Automating code integration and testing.

Tools: Jenkins, GitHub Actions, GitLab CI/CD, CircleCI.

Continuous Deployment (CD)

Automating deployments to production.

Canary releases, blue-green deployments, feature flags.

Example CI/CD Pipeline (GitHub Actions & Docker)

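```yaml
name: CI/CD Pipeline

on:
  push:
    branches:
      - main

jobs:
  build:
    runs-on: ubuntu-latest
    steps:
      - name: Checkout Code
        uses: actions/checkout@v4

      # docker push needs a logged-in session and a tag that includes the
      # Docker Hub namespace; DOCKERHUB_USERNAME and DOCKERHUB_TOKEN are
      # assumed to be repository secrets.
      - name: Log in to Docker Hub
        uses: docker/login-action@v3
        with:
          username: ${{ secrets.DOCKERHUB_USERNAME }}
          password: ${{ secrets.DOCKERHUB_TOKEN }}

      - name: Build Docker Image
        run: docker build -t ${{ secrets.DOCKERHUB_USERNAME }}/myapp .

      - name: Push to Docker Hub
        run: docker push ${{ secrets.DOCKERHUB_USERNAME }}/myapp
```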
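The example pipeline pushes `myapp` without an explicit tag, so every build overwrites `latest`. As a hedged sketch, the hypothetical helper below builds an image reference tagged with the short commit SHA instead, so each push is distinct and traceable; the namespace and app names are placeholders, and it relies on the `GITHUB_SHA` variable that GitHub Actions sets for each run.

```shell
#!/usr/bin/env bash
# Build "<namespace>/<app>:<short-sha>" from a full or abbreviated git SHA.
image_ref() {
  local namespace="$1" app="$2" sha="$3"
  # Accept only lowercase hex SHAs between 7 and 40 characters.
  if [[ ! "$sha" =~ ^[0-9a-f]{7,40}$ ]]; then
    echo "not a git SHA: $sha" >&2
    return 1
  fi
  printf '%s/%s:%s\n' "$namespace" "$app" "${sha:0:7}"
}

# In a workflow run step (hypothetical namespace):
#   ref="$(image_ref myuser myapp "$GITHUB_SHA")"
#   docker build -t "$ref" . && docker push "$ref"
```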

4. Containerization & Orchestration

Docker: Packaging applications in lightweight containers.

Kubernetes: Managing containerized applications at scale.

Terraform & Infrastructure as Code (IaC): Automating infrastructure provisioning.

5. Cloud Deployments in DevOps

AWS (EKS, ECS, Lambda)

Azure DevOps & Azure Kubernetes Service (AKS)

Google Cloud (GKE, Cloud Run)

6. Security & Monitoring in DevOps Deployment

Implementing security best practices: Secrets management, role-based access.

Monitoring Tools: Prometheus, Grafana, ELK Stack, Datadog.

Logging & Alerting: Centralized logging with Splunk, AWS CloudWatch, etc.

7. Best Practices for DevOps Deployment

Automate everything.

Implement security from the start (DevSecOps).

Use microservices architecture.

Optimize pipelines for faster feedback.

Conclusion

How DevOps transforms deployment workflows.

Future trends: AI-driven DevOps, GitOps, and serverless deployments.

Encouraging DevOps adoption for efficient software delivery.

WEBSITE: https://www.ficusoft.in/full-stack-developer-course-in-chennai/

DevOps Jenkins Engineer

DevOps Jenkins Engineer, Jupiter, FL, 6-month contract.

Required: 7-8 years of experience building Jenkins pipelines; familiarity with Jenkins scripting and associated shell scripting on Linux; Bitbucket (how to configure it for Jenkins hooks); Docker (how to build images and deploy them using a Jenkins pipeline); AWS CloudFormation; AWS CLI (how to incorporate it into shell scripts for deploying to AWS); experience in an Agile Kanban or Scrum environment. Work in the Jupiter, FL office once NextEra employees return to the office.

Recommended: AWS Developer or Architect certification; working knowledge of firewall policies and Active Directory; Nginx and Vouch for authentication; SonarQube for code coverage reporting; Python, TypeScript, or Java knowledge a plus; knowledge of these AWS services: API Gateway, CloudTrail, CloudWatch, Cognito, DMS, ECR, ECS, EC2, IAM, Lambda, RDS, Route 53, SageMaker, Secrets Manager, SNS, S3, VPC, X-Ray.

Reference: DevOps Jenkins Engineer jobs. Source: http://jobrealtime.com/jobs/technology/devops-jenkins-engineer_i11146

"[Project] Deploy trained model to AWS Lambda with Serverless framework" - Detail: Hi guys,

We have continued updating our open-source project for packaging and deploying ML models to production (github.com/bentoml/bentoml), and we have created an easy way to deploy an ML model as a Serverless (www.serverless.com) project that you can easily deploy to AWS Lambda and Google Cloud Functions. We want to share it with you and hear your feedback.

A little background on BentoML for those who aren't familiar with it: BentoML is a Python library for packaging and deploying machine learning models. It provides high-level APIs for defining an ML service and packaging its artifacts, source code, dependencies, and configurations into a production-system-friendly format that is ready for deployment.

Feature highlights:

* Multiple distribution formats - Easily package your machine learning models into the format that works best for your inference scenario:
  - Docker image - deploy as containers running a REST API server
  - PyPI package - integrate into your Python applications seamlessly
  - CLI tool - put your model into an Airflow DAG or CI/CD pipeline
  - Spark UDF - run batch serving on large datasets with Spark
  - Serverless function - host your model on serverless cloud platforms
* Multiple framework support - BentoML supports a wide range of ML frameworks out of the box, including TensorFlow, PyTorch, Scikit-Learn, and XGBoost, and can be easily extended to work with new or custom frameworks.
* Deploy anywhere - A BentoML-bundled ML service can be easily deployed with platforms such as Docker, Kubernetes, Serverless, Airflow, and Clipper, on cloud platforms including AWS Lambda/ECS/SageMaker, Google Cloud Functions, and Azure ML.
* Custom runtime backend - Easily integrate your Python preprocessing code with a high-performance deep learning model runtime backend (such as tensorflow-serving) to deploy a low-latency serving endpoint.
How to package a machine learning model as a serverless project with BentoML

It's surprisingly easy: just a single CLI command. After you finish training your model and save it to the file system with BentoML, all you need to do is run the bentoml build-serverless-archive command, for example:

$ bentoml build-serverless-archive /path_to_bentoml_archive /path_to_generated_serverless_project --platform=[aws-python, aws-python3, google-python]

This generates a serverless project in the specified directory. Let's take a look at the generated files:

/path_to_generated_serverless_project
  - serverless.yml
  - requirements.txt
  - copy_of_bentoml_archive/
  - handler.py/main.py (main.py is generated if the platform is google-python)

serverless.yml is the configuration file for the Serverless framework. It contains the configuration for the cloud provider you are deploying to and maps which events trigger which functions. BentoML automatically modifies this file to add your model prediction as a function event and updates other information for you.

requirements.txt is a copy from your model archive; it includes all of the dependencies needed to run your model.

handler.py/main.py is the file that contains your function code. BentoML fills in this file's function with your model archive class, so you can make predictions with it right away without any modifications.

copy_of_bentoml_archive/ is a copy of your model archive. It is bundled with the other files for serverless deployment.

What's next

After you generate this serverless project, if you have the default configuration for AWS or Google, you can deploy it right away. Otherwise, you can update serverless.yml based on your own configuration.

Love to hear feedback from you guys on this.

Cheers,
Bo

Edit: Styling. Caption by yubozhao. Posted By: www.eurekaking.com

DevOps Jenkins Engineer

DevOps Jenkins Engineer, Jupiter, FL, 6-month contract.

Required: 7-8 years of experience building Jenkins pipelines; familiarity with Jenkins scripting and associated shell scripting on Linux; Bitbucket (how to configure it for Jenkins hooks); Docker (how to build images and deploy them using a Jenkins pipeline); AWS CloudFormation; AWS CLI (how to incorporate it into shell scripts for deploying to AWS); experience in an Agile Kanban or Scrum environment. Work in the Jupiter, FL office once NextEra employees return to the office.

Recommended: AWS Developer or Architect certification; working knowledge of firewall policies and Active Directory; Nginx and Vouch for authentication; SonarQube for code coverage reporting; Python, TypeScript, or Java knowledge a plus; knowledge of these AWS services: API Gateway, CloudTrail, CloudWatch, Cognito, DMS, ECR, ECS, EC2, IAM, Lambda, RDS, Route 53, SageMaker, Secrets Manager, SNS, S3, VPC, X-Ray.

Reference: DevOps Jenkins Engineer jobs from Latest listings added - LinkHello http://linkhello.com/jobs/technology/devops-jenkins-engineer_i11250

DevOps Jenkins Engineer

DevOps Jenkins Engineer, Jupiter, FL, 6-month contract.

Required: 7-8 years of experience building Jenkins pipelines; familiarity with Jenkins scripting and associated shell scripting on Linux; Bitbucket (how to configure it for Jenkins hooks); Docker (how to build images and deploy them using a Jenkins pipeline); AWS CloudFormation; AWS CLI (how to incorporate it into shell scripts for deploying to AWS); experience in an Agile Kanban or Scrum environment. Work in the Jupiter, FL office once NextEra employees return to the office.

Recommended: AWS Developer or Architect certification; working knowledge of firewall policies and Active Directory; Nginx and Vouch for authentication; SonarQube for code coverage reporting; Python, TypeScript, or Java knowledge a plus; knowledge of these AWS services: API Gateway, CloudTrail, CloudWatch, Cognito, DMS, ECR, ECS, EC2, IAM, Lambda, RDS, Route 53, SageMaker, Secrets Manager, SNS, S3, VPC, X-Ray.

Reference: DevOps Jenkins Engineer jobs from Latest listings added - cvwing http://cvwing.com/jobs/technology/devops-jenkins-engineer_i14172

How to run AWS CloudHSM workloads on Docker containers

AWS CloudHSM is a cloud-based hardware security module (HSM) that enables you to generate and use your own encryption keys on the AWS Cloud. With CloudHSM, you can manage your own encryption keys using FIPS 140-2 Level 3 validated HSMs. Your HSMs are part of a CloudHSM cluster. CloudHSM automatically manages synchronization, high availability, and failover within a cluster.

CloudHSM is part of the AWS Cryptography suite of services, which also includes AWS Key Management Service (KMS) and AWS Certificate Manager Private Certificate Authority (ACM PCA). KMS and ACM PCA are fully managed services that are easy to use and integrate. You’ll generally use AWS CloudHSM only if your workload needs a single-tenant HSM under your own control, or if you need cryptographic algorithms that aren’t available in the fully-managed alternatives.

CloudHSM offers several options for you to connect your application to your HSMs, including PKCS#11, Java Cryptography Extensions (JCE), or Microsoft CryptoNG (CNG). Regardless of which library you choose, you’ll use the CloudHSM client to connect to all HSMs in your cluster. The CloudHSM client runs as a daemon, locally on the same Amazon Elastic Compute Cloud (EC2) instance or server as your applications.

The deployment process is straightforward if you’re running your application directly on your compute resource. However, if you want to deploy applications that use the HSMs in containers, you’ll need to adjust how you install and run your application and the CloudHSM components it depends on. Docker containers typically don’t run an init system such as systemd or Upstart. This means that you can’t start the CloudHSM client service from within the container using the general instructions provided by CloudHSM. You also can’t run the CloudHSM client service remotely and connect to it from the containers, because the client daemon communicates with your application over a local Unix domain socket. You cannot connect to this socket remotely from outside the EC2 instance network namespace.

This blog post discusses the workaround that you’ll need in order to configure your container and start the client daemon so that you can use CloudHSM-based applications with containers. Specifically, in this post, I’ll show you how to run the CloudHSM client daemon from within a Docker container without relying on an init system to start it as a service. This enables you to use Docker to develop, deploy, and run applications using the CloudHSM software libraries, and it also gives you the ability to manage and orchestrate workloads using tools and services like Amazon Elastic Container Service (Amazon ECS), Kubernetes, Amazon Elastic Kubernetes Service (Amazon EKS), and Jenkins.

Solution overview

My solution shows you how to create a proof-of-concept sample Docker container that is configured to run the CloudHSM client daemon. When the daemon is up and running, it runs the AESGCMEncryptDecryptRunner Java class, available on the AWS CloudHSM Java JCE samples repo. This class uses CloudHSM to generate an AES key, then it uses the key to encrypt and decrypt randomly generated data.

Note: In my example, you must manually enter the crypto user (CU) credentials as environment variables when running the container. For any production workload, you’ll need to carefully consider how to provide, secure, and automate the handling and distribution of your HSM credentials. You should work with your security or compliance officer to ensure that you’re using an appropriate method of securing HSM login credentials for your application and security needs.

Figure 1: Architectural diagram

Prerequisites

To implement my solution, I recommend that you have basic knowledge of the following:

CloudHSM

Docker

Java

Here’s what you’ll need to follow along with my example:

An active CloudHSM cluster with at least one active HSM. You can follow the Getting Started Guide to create and initialize a CloudHSM cluster. (Note that for any production cluster, you should have at least two active HSMs spread across Availability Zones.)

An Amazon Linux 2 EC2 instance in the same Amazon Virtual Private Cloud in which you created your CloudHSM cluster. The EC2 instance must have the CloudHSM cluster security group attached—this security group is automatically created during the cluster initialization and is used to control access to the HSMs. You can learn about attaching security groups to allow EC2 instances to connect to your HSMs in our online documentation.

A CloudHSM crypto user (CU) account created on your HSM. You can create a CU by following these user guide steps.

Solution details

On your Amazon Linux EC2 instance, install Docker:

sudo yum -y install docker

Start the docker service:

sudo service docker start

Create a new directory and step into it. In my example, I use a directory named “cloudhsm_container”. You’ll use the new directory to configure the Docker image.

mkdir cloudhsm_container
cd cloudhsm_container

Copy the CloudHSM cluster’s CA certificate (customerCA.crt) to the directory you just created. You can find the CA certificate on any working CloudHSM client instance under the path /opt/cloudhsm/etc/customerCA.crt. This certificate is created during initialization of the CloudHSM Cluster and is needed to connect to the CloudHSM cluster.

In your new directory, create a new file with the name run_sample.sh that includes the contents below. The script starts the CloudHSM client daemon, waits until the daemon process is running and ready, and then runs the Java class that is used to generate an AES key to encrypt and decrypt your data.

#!/bin/bash

# start cloudhsm client
echo -n "* Starting CloudHSM client ... "
/opt/cloudhsm/bin/cloudhsm_client /opt/cloudhsm/etc/cloudhsm_client.cfg &> /tmp/cloudhsm_client_start.log &

# wait for startup
while true
do
  if grep 'libevmulti_init: Ready !' /tmp/cloudhsm_client_start.log &> /dev/null
  then
    echo "[OK]"
    break
  fi
  sleep 0.5
done

echo -e "\n* CloudHSM client started successfully ... \n"

# start application
echo -e "\n* Running application ... \n"
java -ea -Djava.library.path=/opt/cloudhsm/lib/ -jar target/assembly/aesgcm-runner.jar --method environment
echo -e "\n* Application completed successfully ... \n"
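The readiness loop in run_sample.sh waits forever if the client never logs its ready message. As a minimal sketch, the hypothetical function below polls the same log file for the same marker string at the same half-second interval, but gives up after a timeout; the timeout value is an assumption you would tune.

```shell
#!/usr/bin/env bash
# Wait until a fixed string appears in a log file, or fail after timeout_s seconds.
wait_for_log_line() {
  local pattern="$1" logfile="$2" timeout_s="${3:-30}"
  local waited=0
  # Poll twice a second, as the original loop does.
  until grep -qF "$pattern" "$logfile" 2>/dev/null; do
    if (( waited >= timeout_s * 2 )); then
      echo "timed out after ${timeout_s}s waiting for: $pattern" >&2
      return 1
    fi
    sleep 0.5
    waited=$(( waited + 1 ))
  done
}

# In run_sample.sh, the while-loop could become:
#   wait_for_log_line 'libevmulti_init: Ready !' /tmp/cloudhsm_client_start.log 60 || exit 1
```

Exiting non-zero on timeout also makes the container stop with a failure status instead of hanging, which orchestrators such as ECS can detect.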

In the new directory, create another new file and name it Dockerfile (with no extension). This file will specify that the Docker image is built with the following components:

The AWS CloudHSM client package.

The AWS CloudHSM Java JCE package.

OpenJDK 1.8. This is needed to compile and run the Java classes and JAR files.

Maven, a build automation tool that is needed to assist with building the Java classes and JAR files.

The AWS CloudHSM Java JCE samples that will be downloaded and built.

Copy and paste the contents below into Dockerfile.

Note: Make sure to replace the HSM_IP line with the IP of an HSM in your CloudHSM cluster. You can get your HSM IPs from the CloudHSM console, or by running the describe-clusters AWS CLI command.

# Use the Amazon Linux 2 image
FROM amazonlinux:2

# Install CloudHSM client
RUN yum install -y https://s3.amazonaws.com/cloudhsmv2-software/CloudHsmClient/EL7/cloudhsm-client-latest.el7.x86_64.rpm

# Install CloudHSM Java library
RUN yum install -y https://s3.amazonaws.com/cloudhsmv2-software/CloudHsmClient/EL7/cloudhsm-client-jce-latest.el7.x86_64.rpm

# Install Java, Maven, wget, unzip and ncurses-compat-libs
RUN yum install -y java maven wget unzip ncurses-compat-libs

# Create a work dir
WORKDIR /app

# Download sample code
RUN wget https://github.com/aws-samples/aws-cloudhsm-jce-examples/archive/master.zip

# Unzip sample code
RUN unzip master.zip

# Change to the created directory
WORKDIR aws-cloudhsm-jce-examples-master

# Build JAR files
RUN mvn validate && mvn clean package

# Set HSM IP as an environment variable
ENV HSM_IP <insert the IP address of an active CloudHSM instance here>

# Configure cloudhsm-client
COPY customerCA.crt /opt/cloudhsm/etc/
RUN /opt/cloudhsm/bin/configure -a $HSM_IP

# Copy the run_sample.sh script
COPY run_sample.sh .

# Run the script
CMD ["bash","run_sample.sh"]
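Instead of editing the HSM_IP line by hand, you could script it. The hypothetical helper below is a sketch that rewrites whatever follows `ENV HSM_IP ` in the Dockerfile after a basic IPv4 shape check; it keeps a `.bak` copy of the original. The comment shows one way to look up an HSM IP with the AWS CLI, assuming the first HSM of the first cluster returned is the one you want.

```shell
#!/usr/bin/env bash
# One way to look up an HSM IP (assumes the first cluster/HSM is the right one):
#   aws cloudhsmv2 describe-clusters --query 'Clusters[0].Hsms[0].EniIp' --output text
#
# Replace the HSM_IP placeholder line in a Dockerfile with a concrete address.
set_hsm_ip() {
  local ip="$1" dockerfile="${2:-Dockerfile}"
  # Dotted-quad shape check only; octet ranges are not validated.
  if [[ ! "$ip" =~ ^([0-9]{1,3}\.){3}[0-9]{1,3}$ ]]; then
    echo "not an IPv4 address: $ip" >&2
    return 1
  fi
  # Edit in place, keeping Dockerfile.bak as a backup.
  sed -i.bak "s|^ENV HSM_IP .*|ENV HSM_IP ${ip}|" "$dockerfile"
}

# Usage (hypothetical address):
#   set_hsm_ip 10.0.1.25 Dockerfile && sudo docker build -t jce_sample_client .
```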

Now you’re ready to build the Docker image. The following command builds an image named jce_sample_client from the Dockerfile you created in the previous step.

sudo docker build -t jce_sample_client .

To run a Docker container from the Docker image you just created, use the following command. Make sure to replace the user and password with your actual CU username and password. (If you need help setting up your CU credentials, see the crypto user (CU) prerequisite above. For more information on how to provide CU credentials to the AWS CloudHSM Java JCE Library, refer to the steps in the CloudHSM user guide.)

sudo docker run --env HSM_PARTITION=PARTITION_1 \
  --env HSM_USER=<user> \
  --env HSM_PASSWORD=<password> \
  jce_sample_client
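Passing the password with `--env` leaves it in your shell history and process list, which matters given the earlier note about securing HSM credentials. As one hedged alternative for experimentation, not a production secrets solution, the hypothetical helper below reads the password from stdin and writes the same three variables to an env file readable only by you, which `docker run --env-file` can then load.

```shell
#!/usr/bin/env bash
# Write CU credentials to a mode-600 env file; the password is read from stdin.
write_hsm_env_file() {
  local user="$1" env_file="$2" password
  IFS= read -r password
  # umask 077 in a subshell so the file is created without group/other access.
  ( umask 077
    printf 'HSM_PARTITION=PARTITION_1\nHSM_USER=%s\nHSM_PASSWORD=%s\n' \
      "$user" "$password" > "$env_file" )
  # Also tighten permissions if the file already existed.
  chmod 600 "$env_file"
}

# Usage (hypothetical user name and path):
#   read -rs -p 'CU password: ' pw; printf '%s\n' "$pw" | write_hsm_env_file cu_user ./hsm.env; unset pw
#   sudo docker run --env-file ./hsm.env jce_sample_client
```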

If successful, the output should look like this:

* Starting cloudhsm-client ... [OK]
* cloudhsm-client started successfully ...
* Running application ...
ERROR StatusLogger No log4j2 configuration file found. Using default configuration: logging only errors to the console.
70132FAC146BFA41697E164500000000
Successful decryption
SDK Version: 2.03
* Application completed successfully ...

Conclusion

My solution provides an example of how to run CloudHSM workloads on Docker containers. You can use it as a reference to implement your cryptographic application in a way that benefits from the high availability and load balancing built into AWS CloudHSM without compromising on the flexibility that Docker provides for developing, deploying, and running applications. If you have comments about this post, submit them in the Comments section below. [Source]-https://aws.amazon.com/blogs/security/how-to-run-aws-cloudhsm-workloads-on-docker-containers/

Beginners & Advanced level Docker Training Course in Mumbai. Asterix Solution's 25 Hour Docker Training gives broad hands-on practicals. For details, Visit :
