#deploy docker image in aws


How to Choose the Right Tech Stack for Your Web App in 2025

In this article, you’ll learn how to confidently choose the right tech stack for your web app, avoid common mistakes, and stay future-proof. Whether you're building an MVP or scaling a SaaS platform, we’ll walk through every critical decision.

What Is a Tech Stack? (And Why It Matters More Than Ever)

Let’s not overcomplicate it. A tech stack is the combination of technologies you use to build and run a web app. It includes:

Front-end: What users see (e.g., React, Vue, Angular)

Back-end: What makes things work behind the scenes (e.g., Node.js, Django, Laravel)

Databases: Where your data lives (e.g., PostgreSQL, MongoDB, MySQL)

DevOps & Hosting: How your app is deployed and scaled (e.g., Docker, AWS, Vercel)

Why it matters: The wrong stack leads to poor performance, higher development costs, and scaling issues. The right stack supports speed, security, scalability, and a better developer experience.

Step 1: Define Your Web App’s Core Purpose

Before choosing tools, define the problem your app solves.

Is it data-heavy like an analytics dashboard?

Real-time focused, like a messaging or collaboration app?

Mobile-first, for customers on the go?

AI-driven, using machine learning in workflows?

Example: If you're building a streaming app, you need a tech stack optimized for media delivery, latency, and concurrent user handling.

Need help defining your app’s vision? Bluell AB’s Web Development service can guide you from idea to architecture.

Step 2: Consider Scalability from Day One

Most startups make the mistake of only thinking about MVP speed. But scaling problems can cost you down the line.

Here’s what to keep in mind:

Stateless architecture supports horizontal scaling

Choose microservices or modular monoliths based on team size and scope

Use asynchronous processing for slow or I/O-bound work (e.g., the Node.js event loop, or Celery task queues in Python)

Use CDNs and caching for frontend optimization

A poorly optimized stack can increase infrastructure costs by 30–50% during scale. So, choose a stack that lets you scale without rewriting everything.
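To make the asynchronous-processing point concrete, here is a minimal Python sketch using the standard asyncio module. The function names and delays are made up for illustration, and asyncio.sleep stands in for a real database or HTTP call.

```python
import asyncio
import time


async def fetch_report(name: str, delay: float) -> str:
    """Simulate an I/O-bound call such as a database query or HTTP request."""
    await asyncio.sleep(delay)  # stands in for waiting on the network
    return f"{name}: done"


async def handle_requests() -> list[str]:
    # Start all three "requests" together. The waits overlap, so total time
    # is roughly that of the slowest call rather than the sum of all three.
    return await asyncio.gather(
        fetch_report("users", 0.05),
        fetch_report("orders", 0.05),
        fetch_report("invoices", 0.05),
    )


if __name__ == "__main__":
    started = time.perf_counter()
    results = asyncio.run(handle_requests())
    elapsed = time.perf_counter() - started
    print(results, f"{elapsed:.2f}s")
```

The same idea applies whichever runtime you pick: work that mostly waits should not block the process that serves other users.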

Step 3: Think Developer Availability & Community

Great tech means nothing if you can’t find people who can use it well.

Ask yourself:

Are there enough developers skilled in this tech?

Is the community strong and active?

Are there plenty of open-source tools and integrations?

Example: Choosing Go or Elixir might give you performance gains, but hiring developers can be tough compared to React or Node.js ecosystems.

Step 4: Match the Stack with the Right Architecture Pattern

Do you need:

A Monolithic app? Best for MVPs and small teams.

A Microservices architecture? Ideal for large-scale SaaS platforms.

A Serverless model? Great for event-driven apps or unpredictable traffic.

Pro Tip: Don’t over-engineer. Start with a modular monolith, then migrate as you grow.

Step 5: Prioritize Speed and Performance

In 2025, user patience is non-existent. Google's research found that 53% of mobile site visits are abandoned when a page takes longer than 3 seconds to load.

To ensure speed:

Use Next.js or Nuxt.js for server-side rendering

Optimize images and use lazy loading

Use Redis or Memcached for caching

Integrate CDNs like Cloudflare

Benchmark early and often. Use tools like Lighthouse, WebPageTest, and New Relic to monitor.
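The caching idea behind Redis or Memcached can be sketched in a few lines of Python. This is only an in-process stand-in, not a replacement for either tool, and the TTLCache class and its method names are hypothetical.

```python
import time
from typing import Any, Callable


class TTLCache:
    """Tiny in-memory cache with per-entry expiry (illustrative helper)."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock
        self._store: dict[str, tuple[float, Any]] = {}

    def get_or_compute(self, key: str, compute: Callable[[], Any]) -> Any:
        now = self._clock()
        entry = self._store.get(key)
        if entry is not None and entry[0] > now:
            return entry[1]  # cache hit: skip the expensive call
        value = compute()  # cache miss or expired entry
        self._store[key] = (now + self._ttl, value)
        return value


if __name__ == "__main__":
    calls = []

    def slow_query() -> dict:
        calls.append(1)  # count how often the "database" is really hit
        return {"total": 42}

    cache = TTLCache(ttl_seconds=30)
    cache.get_or_compute("dashboard", slow_query)
    cache.get_or_compute("dashboard", slow_query)
    print(len(calls))  # the second lookup is served from the cache
```

A shared cache such as Redis follows the same get-or-compute pattern, with the added benefit that every app server sees the same entries.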

Step 6: Plan for Integration and APIs

Your app doesn’t live in a vacuum. Think about:

Payment gateways (Stripe, PayPal)

CRM/ERP tools (Salesforce, HubSpot)

3rd-party APIs (OpenAI, Google Maps)

Make sure your stack supports REST or GraphQL seamlessly and has robust middleware for secure integration.

Step 7: Security and Compliance First

Security can’t be an afterthought.

Use stacks that support JWT, OAuth2, and secure sessions

Make sure your database handles encryption-at-rest

Use HTTPS, rate limiting, and sanitize inputs

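IBM's Cost of a Data Breach Report 2020 put the global average cost of a breach at $3.86 million, a figure that covers organizations of all sizes rather than startups alone. Prevention is cheaper than reaction.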
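Rate limiting, mentioned in the checklist above, is often implemented with a token bucket. The sketch below is one simple way to do it in Python. The TokenBucket class is hypothetical, and a production setup would more likely rely on a gateway or a shared store.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts of up to `capacity` requests, refilled at `rate` per second."""

    def __init__(self, capacity: int, rate: float, clock: Callable[[], float] = time.monotonic):
        self.capacity = capacity
        self.rate = rate
        self._clock = clock
        self._tokens = float(capacity)
        self._last = clock()

    def allow(self) -> bool:
        now = self._clock()
        # Refill in proportion to elapsed time, never above capacity.
        self._tokens = min(self.capacity, self._tokens + (now - self._last) * self.rate)
        self._last = now
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False  # a web handler would typically answer with HTTP 429 here


if __name__ == "__main__":
    bucket = TokenBucket(capacity=3, rate=1.0)
    print([bucket.allow() for _ in range(5)])
```

In practice you would keep one bucket per client (for example per API key or IP address) so that one noisy user cannot exhaust the limit for everyone.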

Step 8: Don’t Ignore Cost and Licensing

Open source doesn’t always mean free. Some tools have enterprise licenses, usage limits, or require premium add-ons.

Cost checklist:

Licensing (e.g., Firebase becomes costly at scale)

DevOps costs (e.g., AWS vs. DigitalOcean)

Developer productivity (fewer bugs = lower costs)

Budgeting for technology should include time to hire, cost to scale, and infrastructure support.

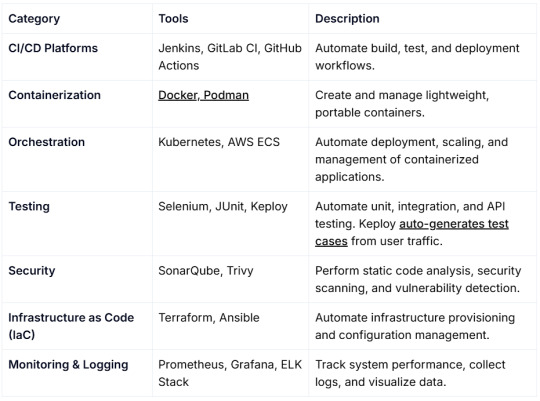

Step 9: Understand the Role of DevOps and CI/CD

Continuous integration and continuous deployment (CI/CD) aren’t optional anymore.

Choose a tech stack that:

Works well with GitHub Actions, GitLab CI, or Jenkins

Supports containerization with Docker and Kubernetes

Enables fast rollback and testing

This reduces downtime and lets your team iterate faster.

Step 10: Evaluate Real-World Use Cases

Here’s how popular stacks perform:

Look at what companies are using, then adapt, don’t copy blindly.

How Bluell Can Help You Make the Right Tech Choice

Choosing a tech stack isn’t just technical, it’s strategic. Bluell specializes in full-stack development and helps startups and growing companies build modern, scalable web apps. Whether you’re validating an MVP or building a SaaS product from scratch, we can help you pick the right tools from day one.

Conclusion

Think of your tech stack like choosing a foundation for a building. You don’t want to rebuild it when you’re five stories up.

Here’s a quick recap to guide your decision:

Know your app’s purpose

Plan for future growth

Prioritize developer availability and ecosystem

Don’t ignore performance, security, or cost

Lean into CI/CD and DevOps early

Make data-backed decisions, not just trendy ones

Make your tech stack work for your users, your team, and your business, not the other way around.


Is Coding Knowledge Necessary to Become a DevOps Engineer?

As the demand for DevOps professionals grows, a common question arises among aspiring engineers: Do I need to know how to code to become a DevOps engineer? The short answer is yes—but not in the traditional software developer sense. While DevOps doesn’t always require deep software engineering skills, a solid understanding of scripting and basic programming is critical to succeed in the role.

DevOps is all about automating workflows, managing infrastructure, and streamlining software delivery. Engineers are expected to work across development and operations teams, which means writing scripts, building CI/CD pipelines, managing cloud resources, and occasionally debugging code. That doesn’t mean you need to develop complex applications from scratch—but you do need to know how to write and read code to automate and troubleshoot effectively.

Many modern organizations rely on DevOps services and solutions to manage cloud environments, infrastructure as code (IaC), and continuous deployment. These solutions are deeply rooted in automation—and automation, in turn, is rooted in code.

Why Coding Matters in DevOps

At the core of DevOps lies a principle: "Automate everything." Whether you’re provisioning servers with Terraform, writing Ansible playbooks, or integrating a Jenkins pipeline, you're dealing with code-like instructions that need accuracy, logic, and reusability.

Here are some real-world areas where coding plays a role in DevOps:

Scripting: Automating repetitive tasks using Bash, Python, or PowerShell

CI/CD Pipelines: Creating automated build and deploy workflows using YAML or Groovy

Configuration Management: Writing scripts for tools like Ansible, Puppet, or Chef

Infrastructure as Code: Using languages like HCL (for Terraform) to define cloud resources

Monitoring & Alerting: Writing custom scripts to track system health or trigger alerts

In most cases, DevOps engineers are not expected to build full-scale applications, but they must be comfortable with scripting logic and integrating systems using APIs and automation tools.
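As a small illustration of the monitoring and alerting item, here is a Python sketch that checks metrics against thresholds. The metric names and limits are invented for the example, and printing stands in for whatever alert channel a team actually uses.

```python
import shutil


def disk_usage_percent(path: str = ".") -> float:
    """Return how full the filesystem holding `path` is, as a percentage."""
    usage = shutil.disk_usage(path)
    return usage.used / usage.total * 100


def check_thresholds(metrics: dict[str, float], limits: dict[str, float]) -> list[str]:
    """Compare each metric with its limit and return alert messages."""
    alerts = []
    for name, value in metrics.items():
        limit = limits.get(name)
        if limit is not None and value > limit:
            alerts.append(f"ALERT {name}={value:.1f} exceeds {limit:.1f}")
    return alerts


if __name__ == "__main__":
    metrics = {"disk_percent": disk_usage_percent(".")}
    for line in check_thresholds(metrics, {"disk_percent": 90.0}):
        print(line)  # a real script might post this to a chat webhook instead
```

Scripts like this are usually run on a schedule (cron, a CI job, or a monitoring agent), which is where the scripting skills described above come in.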

A line often attributed to Kelsey Hightower, a longtime developer advocate at Google Cloud, says it best: “If you don’t automate, you’re just operating manually at scale.” This highlights the importance of coding as a means to bring efficiency and reliability to infrastructure management.

Example: Automating Deployment with a Script

Let’s say your team manually deploys updates to a web application hosted on AWS. Each deployment takes 30–40 minutes, involves multiple steps, and is prone to human error. A DevOps engineer steps in and writes a shell script that pulls the latest code, runs unit tests, builds the Docker image, and deploys it to ECS.

This 30–40 minute manual process now becomes a single command that runs unattended and can be triggered automatically after every code push. That’s the power of coding in DevOps: it transforms tedious manual work into fast, reliable automation.
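The scenario describes a shell script. The sketch below shows the same sequence in Python instead, as one possible shape for such a script. The image, cluster, and service names are placeholders, and it assumes a pytest test suite, a registry login that has already happened, and an ECS task definition that points at a mutable tag, so that forcing a new deployment picks up the freshly pushed image.

```python
import subprocess


def deployment_steps(image: str, cluster: str, service: str) -> list[list[str]]:
    """Return the commands for one deployment, in the order they should run."""
    return [
        ["git", "pull", "--ff-only"],
        ["python", "-m", "pytest"],  # assumes the project uses pytest
        ["docker", "build", "-t", image, "."],
        ["docker", "push", image],  # assumes `docker login` was done beforehand
        ["aws", "ecs", "update-service",
         "--cluster", cluster, "--service", service,
         "--force-new-deployment"],
    ]


def deploy(image: str, cluster: str, service: str, dry_run: bool = True) -> None:
    for step in deployment_steps(image, cluster, service):
        print("+", " ".join(step))
        if not dry_run:
            # check=True stops the deployment at the first failing step.
            subprocess.run(step, check=True)


if __name__ == "__main__":
    # Placeholder names; with dry_run=True the plan is only printed.
    deploy("registry.example.com/web-app:latest", "demo-cluster", "web-service")
```

Keeping a dry-run mode as the default makes it easy to review the plan before anything touches production.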

For fast-paced environments like startups, this is especially valuable. If you’re wondering what's the best DevOps platform for startups, the answer often includes those that support automation, scalability, and low-code integrations—areas where coding knowledge plays a major role.

Can You Start DevOps Without Coding Skills?

Yes, you can start learning DevOps without a coding background, but you’ll need to pick it up along the way. Begin with scripting languages like Bash and Python. These are essential for automating tasks and managing systems. Over time, you’ll encounter environments where understanding YAML, JSON, or even basic programming logic becomes necessary.

Many DevOps as a service companies emphasize practical problem-solving and automation, which means you'll constantly deal with code-based tools—even if you’re not building products yourself. The more comfortable you are with code, the more valuable you become in a DevOps team.

The Bottom Line

Coding is not optional in DevOps—it’s foundational. While you don’t need to be a software engineer, the ability to write scripts, debug automation pipelines, and understand basic programming logic is crucial. Coding empowers you to build efficient systems, reduce manual intervention, and deliver software faster and more reliably.

If you’re serious about becoming a high-impact DevOps engineer, start learning to code and practice by building automation scripts and pipelines. It’s a skill that will pay dividends throughout your tech career.

Ready to put your coding skills into real-world DevOps practice? Visit Cloudastra Technology's DevOps as a Service page and explore how our automation-first approach can elevate your infrastructure and software delivery. Whether you're a beginner or scaling up your team, Cloudastra delivers end-to-end DevOps services designed for growth, agility, and innovation.


How Artificial Intelligence Courses in London Are Preparing Students for AI-Powered Careers

Artificial Intelligence (AI) has become a cornerstone of technological innovation, revolutionizing industries from healthcare and finance to transportation and marketing. With AI-driven automation, analytics, and decision-making reshaping the global job market, there is a growing need for professionals who are not only tech-savvy but also trained in cutting-edge AI technologies. London, as a global tech and education hub, is rising to meet this demand by offering world-class education in AI. If you're considering an Artificial Intelligence course in London, you’ll be stepping into a well-rounded program that blends theoretical foundations with real-world applications, preparing you for AI-powered careers.

Why Choose London for an Artificial Intelligence Course?

London is home to some of the top universities, research institutions, and tech startups in the world. The city offers access to:

Globally renowned faculty and researchers

A diverse pool of tech companies and AI startups

Regular AI meetups, hackathons, and industry events

Proximity to innovation hubs like Cambridge and Oxford

Strong networking and career opportunities across the UK and Europe

An Artificial Intelligence course in London not only provides robust academic training but also places you in the center of the AI job ecosystem.

Core Components of an AI Course in London

Artificial Intelligence programs in London are designed to produce industry-ready professionals. Whether you're enrolling in a university-led master's program or a short-term professional certificate, here are some core components covered in most AI courses:

1. Foundational Knowledge

Courses start with fundamental concepts such as:

Algorithms and Data Structures

Linear Algebra, Probability, and Statistics

Introduction to Machine Learning

Basics of Python Programming

These are essential for understanding how AI models are built, optimized, and deployed.

2. Machine Learning and Deep Learning

Students dive deep into supervised and unsupervised learning techniques, along with:

Neural Networks

Convolutional Neural Networks (CNNs)

Recurrent Neural Networks (RNNs)

Transfer Learning

Generative Adversarial Networks (GANs)

These modules are crucial for domains like image recognition, natural language processing, and robotics.

3. Natural Language Processing (NLP)

With the rise of chatbots, language models, and voice assistants, NLP has become a vital skill. London-based AI courses teach:

Tokenization and Word Embeddings

Named Entity Recognition (NER)

Text Classification

Sentiment Analysis

Transformer Models (BERT, GPT)

4. Data Handling and Big Data Tools

Students learn to preprocess, clean, and manage large datasets using:

Pandas and NumPy

SQL and NoSQL databases

Apache Spark and Hadoop

Data visualization libraries like Matplotlib and Seaborn

These tools are indispensable in any AI role.

5. Real-World Projects

Perhaps the most defining element of an Artificial Intelligence course in London is hands-on project work. Examples include:

AI-powered financial fraud detection

Predictive analytics in healthcare

Facial recognition for surveillance systems

Customer behavior prediction using recommendation systems

These projects simulate real-world scenarios, providing students with a portfolio to showcase to employers.

Tools & Technologies Students Master

London AI programs focus on practical skills using tools such as:

Programming Languages: Python, R

Libraries & Frameworks: TensorFlow, Keras, PyTorch, Scikit-learn

Cloud Platforms: AWS AI/ML, Google Cloud AI, Microsoft Azure

Deployment Tools: Docker, Flask, FastAPI, Kubernetes

Version Control: Git and GitHub

Familiarity with these tools enables students to contribute immediately in professional AI environments.

Industry Integration and Career Readiness

What sets an Artificial Intelligence course in London apart is its strong integration with the industry. Many institutes have partnerships with companies for:

1. Internships and Work Placements

Students gain hands-on experience through internships with companies in finance, healthcare, logistics, and more. This direct exposure bridges the gap between education and employment.

2. Industry Mentorship

Many programs invite AI experts from companies like Google, DeepMind, Meta, and fintech startups to mentor students, evaluate projects, or deliver guest lectures.

3. Career Services and Networking

Institutes offer:

Resume workshops

Mock interviews

Career fairs and employer meetups

Job placement assistance

These services help students transition smoothly into the workforce after graduation.

Solving Real-World AI Challenges

Students in AI courses in London aren’t just learning — they’re solving actual challenges. Some examples include:

1. AI in Climate Change

Projects focus on analyzing weather patterns and environmental data to support sustainability efforts.

2. AI in Healthcare

Students build models to assist with medical image analysis, drug discovery, or early disease diagnosis.

3. Ethics and Fairness in AI

With growing concern about algorithmic bias, students are trained to design fair, explainable, and responsible AI systems.

4. Autonomous Systems

Courses often include modules on reinforcement learning and robotics, exploring how AI can control autonomous drones or vehicles.

Popular Specializations Offered

Many AI courses in London offer the flexibility to specialize in areas such as:

Computer Vision

Speech and Language Technologies

AI in Business and Finance

AI for Cybersecurity

AI in Healthcare and Biotech

These concentrations help students align their training with career goals and industry demand.

AI Career Paths After Completing a Course in London

Graduates from AI programs in London are in high demand across sectors. Typical roles include:

AI Engineer

Machine Learning Developer

Data Scientist

NLP Engineer

Computer Vision Specialist

MLOps Engineer

AI Product Manager

With London being a European startup capital and home to major tech firms, job opportunities are plentiful across industries like fintech, healthcare, logistics, retail, and media.

Final Thoughts

In a world increasingly shaped by intelligent systems, pursuing an Artificial Intelligence course in London is a smart investment in your future. With a mix of academic rigor, hands-on practice, and industry integration, these courses are designed to equip you with the knowledge and skills needed to thrive in AI-powered careers.

Whether your ambition is to become a machine learning expert, data scientist, or AI entrepreneur, London offers the ecosystem, exposure, and education to turn your vision into reality. From mastering neural networks to tackling ethical dilemmas in AI, you’ll graduate ready to lead innovation and make a meaningful impact.



52013l4 in Modern Tech: Use Cases and Applications

In a technology-driven world, identifiers and codes are more than just strings—they define systems, guide processes, and structure workflows. One such code gaining prominence across various IT sectors is 52013l4. Whether it’s in cloud services, networking configurations, firmware updates, or application builds, 52013l4 has found its way into many modern technological environments. This article will explore the diverse use cases and applications of 52013l4, explaining where it fits in today’s digital ecosystem and why developers, engineers, and system administrators should be aware of its implications.

Why 52013l4 Matters in Modern Tech

In the past, loosely defined build codes or undocumented system identifiers led to chaos in large-scale environments. Modern software engineering emphasizes observability, reproducibility, and modularization. Codes like 52013l4:

Help standardize complex infrastructure.

Enable cross-team communication in enterprises.

Create a transparent map of configuration-to-performance relationships.

Thus, 52013l4 isn’t just a technical detail—it’s a tool for governance in scalable, distributed systems.

Use Case 1: Cloud Infrastructure and Virtualization

In cloud environments, maintaining structured builds and ensuring compatibility between microservices is crucial. 52013l4 may be used to:

Tag versions of container images (like Docker or Kubernetes builds).

Mark configurations for network load balancers operating at Layer 4.

Denote system updates in CI/CD pipelines.

Cloud providers like AWS, Azure, or GCP often reference such codes internally. When managing firewall rules, security groups, or deployment scripts, engineers might encounter a 52013l4 identifier.

Use Case 2: Networking and Transport Layer Monitoring

Given its likely relation to Layer 4, 52013l4 becomes relevant in scenarios involving:

Firewall configuration: Specifying allowed or blocked TCP/UDP ports.

Intrusion detection systems (IDS): Tracking abnormal packet flows using rules tied to 52013l4 versions.

Network troubleshooting: Tagging specific error conditions or performance data by Layer 4 function.

For example, a DevOps team might use 52013l4 as a keyword to trace problems in TCP connections that align with a specific build or configuration version.

Use Case 3: Firmware and IoT Devices

In embedded systems or Internet of Things (IoT) environments, firmware must be tightly versioned and managed. 52013l4 could:

Act as a firmware version ID deployed across a fleet of devices.

Trigger a specific set of configurations related to security or communication.

Identify rollback points during over-the-air (OTA) updates.

A smart home system, for instance, might roll out firmware_52013l4.bin to thermostats or sensors, ensuring compatibility and stable transport-layer communication.

Use Case 4: Software Development and Release Management

Developers often rely on versioning codes to track software releases, particularly when integrating network communication features. In this domain, 52013l4 might be used to:

Tag milestones in feature development (especially for APIs or sockets).

Mark integration tests that focus on Layer 4 data flow.

Coordinate with other teams (QA, security) based on shared identifiers like 52013l4.

Use Case 5: Cybersecurity and Threat Management

Security engineers use identifiers like 52013l4 to define threat profiles or update logs. For instance:

A SIEM tool might generate an alert tagged as 52013l4 to highlight repeated TCP SYN floods.

Security patches may address vulnerabilities discovered in the 52013l4 release version.

An organization’s SOC (Security Operations Center) could use 52013l4 in internal documentation when referencing a Layer 4 anomaly.

By organizing security incidents by version or layer, organizations improve incident response times and root cause analysis.

Use Case 6: Testing and Quality Assurance

QA engineers frequently simulate different network scenarios and need clear identifiers to catalog results. Here’s how 52013l4 can be applied:

In test automation tools, it helps define a specific test scenario.

Load-testing tools like Apache JMeter might reference 52013l4 configurations for transport-level stress testing.

Bug-tracking software may log issues under the 52013l4 build to isolate issues during regression testing.

What is 52013l4?

At its core, 52013l4 is an identifier, potentially used in system architecture, internal documentation, or as a versioning label in layered networking systems. Its format suggests a structured sequence: “52013” might represent a version code, build date, or feature reference, while “l4” is widely interpreted as Layer 4 of the OSI Model, the Transport Layer.

Because of this association, 52013l4 is often seen in contexts that involve network communication, protocol configuration (e.g., TCP/UDP), or system behavior tracking in distributed computing.

FAQs About 52013l4 Applications

Q1: What kind of systems use 52013l4? Ans. 52013l4 is commonly used in cloud computing, networking hardware, application development environments, and firmware systems. It's particularly relevant in Layer 4 monitoring and version tracking.

Q2: Is 52013l4 an open standard? Ans. No, 52013l4 is not a formal standard like HTTP or ISO. It’s more likely an internal or semi-standardized identifier used in technical implementations.

Q3: Can I change or remove 52013l4 from my system? Ans. Only if you fully understand its purpose. Arbitrarily removing references to 52013l4 without context can break dependencies or configurations.

Conclusion

As modern technology systems grow in complexity, having clear identifiers like 52013l4 ensures smooth operation, reliable communication, and maintainable infrastructures. From cloud orchestration to embedded firmware, 52013l4 plays a quiet but critical role in linking performance, security, and development efforts. Understanding its uses and applying it strategically can streamline operations, improve response times, and enhance collaboration across your technical teams.


DevOps Course Online for Beginners and Professionals

Introduction: Why DevOps Skills Matter Today

In today's fast-paced digital world, businesses rely on faster software delivery and reliable systems. DevOps, short for Development and Operations, offers a practical solution to achieve this. It’s no longer just a trend; it’s a necessity for IT teams across all industries. From startups to enterprise giants, organizations are actively seeking professionals with strong DevOps skills.

Whether you're a beginner exploring career opportunities in IT or a seasoned professional looking to upskill, DevOps training online is your gateway to success. In this blog, we’ll walk you through everything you need to know about enrolling in a DevOps course online, from fundamentals to tools, certifications, and job placements.

What Is DevOps?

Definition and Core Principles

DevOps is a cultural and technical movement that unites software development and IT operations. It aims to shorten the software development lifecycle, ensuring faster delivery and higher-quality applications.

Core principles include:

Automation: Minimizing manual processes through scripting and tools

Continuous Integration/Continuous Deployment (CI/CD): Rapid code integration and release

Collaboration: Breaking down silos between dev, QA, and ops

Monitoring: Constant tracking of application performance and system health

These practices help businesses innovate faster and respond quickly to customer needs.

Why Choose a DevOps Course Online?

Accessibility and Flexibility

With DevOps training online, learners can access material anytime, anywhere. Whether you're working full-time or managing other responsibilities, online learning offers flexibility.

Updated Curriculum

A high-quality DevOps online course includes the latest tools and techniques used in the industry today, such as:

Jenkins

Docker

Kubernetes

Git and GitHub

Terraform

Ansible

Prometheus and Grafana

You get hands-on experience using real-world DevOps automation tools, making your learning practical and job-ready.

Job-Focused Learning

Courses that offer DevOps training with placement often include resume building, mock interviews, and one-on-one mentoring, equipping you with everything you need to land a job.

Who Should Enroll in a DevOps Online Course?

DevOps training is suitable for:

Freshers looking to start a tech career

System admins upgrading their skills

Software developers wanting to automate and deploy faster

IT professionals interested in cloud and infrastructure management

If you're curious about modern IT processes and enjoy problem-solving, DevOps is for you.

What You’ll Learn in a DevOps Training Program

1. Introduction to DevOps Concepts

DevOps lifecycle

Agile and Scrum methodologies

Collaboration between development and operations teams

2. Version Control Using Git

Git basics and repository setup

Branching, merging, and pull requests

Integrating Git with DevOps pipelines

3. CI/CD with Jenkins

Pipeline creation

Integration with Git

Automating builds and test cases

4. Containerization with Docker

Creating Docker images and containers

Docker Compose and registries

Real-time deployment examples

5. Orchestration with Kubernetes

Cluster architecture

Pods, services, and deployments

Scaling and rolling updates

6. Configuration Management with Ansible

Writing playbooks

Managing inventories

Automating infrastructure setup

7. Infrastructure as Code with Terraform

Deploying cloud resources

Writing reusable modules

State management and versioning

8. Monitoring and Logging

Using Prometheus and Grafana

Alerts and dashboards

Log management practices

This hands-on approach ensures learners are not just reading slides but working with real tools.

Real-World Projects You’ll Build

A good DevOps training and certification program includes projects like:

CI/CD pipeline from scratch

Deploying a containerized application on Kubernetes

Infrastructure provisioning on AWS or Azure using Terraform

Monitoring systems with Prometheus and Grafana

These projects simulate real-world problems, boosting both your confidence and your resume.

The Value of DevOps Certification

Why It Matters

Certification adds credibility to your skills and shows employers you're job-ready. A DevOps certification can be a powerful tool when applying for roles such as:

DevOps Engineer

Site Reliability Engineer (SRE)

Build & Release Engineer

Automation Engineer

Cloud DevOps Engineer

Courses that include DevOps training and placement also support your job search with interview preparation and job referrals.

Career Opportunities and Salary Trends

High Demand, High Pay

According to industry reports, DevOps engineers are among the highest-paid roles in IT. Average salaries range from $90,000 to $140,000 annually, depending on experience and region.

Industries hiring DevOps professionals include:

Healthcare

Finance

E-commerce

Telecommunications

Software as a Service (SaaS)

With the right DevOps bootcamp online, you’ll be prepared to meet these opportunities head-on.

Step-by-Step Guide to Getting Started

Step 1: Assess Your Current Skill Level

Understand your background. If you're a beginner, start with fundamentals. Professionals can skip ahead to advanced modules.

Step 2: Choose the Right DevOps Online Course

Look for these features:

Structured curriculum

Hands-on labs

Real-world projects

Mentorship

DevOps training with placement support

Step 3: Build a Portfolio

Document your projects on GitHub to show potential employers your work.

Step 4: Get Certified

Choose a respected DevOps certification to validate your skills.

Step 5: Apply for Jobs

Use placement support services or apply directly. Showcase your portfolio and certifications confidently.

Common DevOps Tools You’ll Master

| Tool | Use Case |
| --- | --- |
| Git | Source control and version tracking |
| Jenkins | CI/CD pipeline automation |
| Docker | Application containerization |
| Kubernetes | Container orchestration |
| Terraform | Infrastructure as Code |
| Ansible | Configuration management |
| Prometheus | Monitoring and alerting |
| Grafana | Dashboard creation for system metrics |

Mastering these DevOps automation tools equips you to handle end-to-end automation pipelines in real-world environments.

Why H2K Infosys for DevOps Training?

H2K Infosys offers one of the best DevOps training online programs with:

Expert-led sessions

Practical labs and tools

Real-world projects

Resume building and interview support

DevOps training with placement assistance

Their courses are designed to help both beginners and professionals transition into high-paying roles smoothly.

Key Takeaways

DevOps combines development and operations for faster, reliable software delivery

Online courses offer flexible, hands-on learning with real-world tools

A DevOps course online is ideal for career starters and upskillers alike

Real projects, certifications, and placement support boost job readiness

DevOps is one of the most in-demand and well-paying IT domains today

Conclusion

Ready to build a future-proof career in tech? Enroll in H2K Infosys’ DevOps course online for hands-on training, real-world projects, and career-focused support. Learn the tools that top companies use and get placement-ready today.



Docker Tutorial for Beginners: Learn Docker Step by Step

What is Docker?

Docker is an open-source platform that enables developers to automate the deployment of applications inside lightweight, portable containers. These containers include everything the application needs to run—code, runtime, system tools, libraries, and settings—so that it can work reliably in any environment.

Before Docker, developers faced the age-old problem: “It works on my machine!” Docker solves this by providing a consistent runtime environment across development, testing, and production.

Why Learn Docker?

Docker is used by organizations of all sizes to simplify software delivery and improve scalability. As more companies shift to microservices, cloud computing, and DevOps practices, Docker has become a must-have skill. Learning Docker helps you:

Package applications quickly and consistently

Deploy apps across different environments with confidence

Reduce system conflicts and configuration issues

Improve collaboration between development and operations teams

Work more effectively with modern cloud platforms like AWS, Azure, and GCP

Who Is This Docker Tutorial For?

This Docker tutorial is designed for absolute beginners. Whether you're a developer, system administrator, QA engineer, or DevOps enthusiast, you’ll find step-by-step instructions to help you:

Understand the basics of Docker

Install Docker on your machine

Create and manage Docker containers

Build custom Docker images

Use Docker commands and best practices

No prior knowledge of containers is required, but basic familiarity with the command line and a programming language (like Python, Java, or Node.js) will be helpful.

What You Will Learn: Step-by-Step Breakdown

1. Introduction to Docker

We start with the fundamentals. You’ll learn:

What Docker is and why it’s useful

The difference between containers and virtual machines

Key Docker components: Docker Engine, Docker Hub, Dockerfile, Docker Compose

2. Installing Docker

Next, we guide you through installing Docker on:

Windows

macOS

Linux

You’ll set up Docker Desktop (or Docker Engine with the CLI on Linux) and run your first container using the hello-world image.

3. Working with Docker Images and Containers

You’ll explore:

How to pull images from Docker Hub

How to run containers using docker run

Inspecting containers with docker ps, docker inspect, and docker logs

Stopping and removing containers
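
To make the pull, run, inspect, stop, and remove sequence concrete, here is a minimal Python sketch that only builds the command lines and never executes them. The helper names `docker_run_args` and `lifecycle_commands`, the container name `web`, and the `nginx:latest` image are illustrative assumptions, not part of the tutorial itself.

```python
import shlex


def docker_run_args(image, name=None, ports=None, detach=True):
    """Build the argument list for a `docker run` call (nothing is executed)."""
    args = ["docker", "run"]
    if detach:
        args.append("-d")              # run the container in the background
    if name:
        args += ["--name", name]       # give the container a readable name
    for host_port, container_port in (ports or {}).items():
        args += ["-p", f"{host_port}:{container_port}"]  # publish host:container
    args.append(image)
    return args


def lifecycle_commands(image, name, ports=None):
    """Return the pull, run, inspect, stop, remove sequence as shell strings."""
    steps = [
        ["docker", "pull", image],
        docker_run_args(image, name=name, ports=ports),
        ["docker", "ps"],
        ["docker", "logs", name],
        ["docker", "stop", name],
        ["docker", "rm", name],
    ]
    return [shlex.join(step) for step in steps]


if __name__ == "__main__":
    for line in lifecycle_commands("nginx:latest", "web", {8080: 80}):
        print(line)
```

Printing the commands first is a low-risk way to see what each step does before typing it into a terminal.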

4. Building Custom Docker Images

You’ll learn how to:

Write a Dockerfile

Use docker build to create a custom image

Add dependencies and environment variables

Optimize Docker images for performance
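
As a rough illustration of what goes into a Dockerfile, the sketch below renders one as text from a few parameters. The function name `render_dockerfile` and all argument values in the usage note are assumptions for illustration; the instructions it emits (`FROM`, `WORKDIR`, `ENV`, `COPY`, `RUN`, `CMD`) are standard Dockerfile instructions.

```python
import json


def render_dockerfile(base_image, workdir, manifest, install_cmd, env, start_cmd):
    """Return Dockerfile text for a simple single-stage image."""
    lines = [f"FROM {base_image}", f"WORKDIR {workdir}"]
    # ENV lines become environment variables inside the image.
    for key, value in env.items():
        lines.append(f"ENV {key}={value}")
    # Copy only the dependency manifest first, so the install layer can be
    # reused from cache when application code changes but dependencies do not.
    lines.append(f"COPY {manifest} ./")
    lines.append(f"RUN {install_cmd}")
    lines.append("COPY . .")
    # Exec-form CMD is a JSON array, so no shell is needed to start the process.
    lines.append("CMD " + json.dumps(start_cmd))
    return "\n".join(lines) + "\n"
```

For example, calling it with `"python:3.12-slim"`, `"/app"`, `"requirements.txt"`, `"pip install -r requirements.txt"`, `{"APP_ENV": "production"}`, and `["python", "app.py"]` yields a file you could pass to `docker build`. Ordering the two `COPY` steps this way is one common image optimization, not the only one.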

5. Docker Volumes and Networking

Understand how to:

Use volumes to persist data outside containers

Create custom networks for container communication

Link multiple containers (e.g., a Node.js app with a MongoDB container)

6. Docker Compose (Bonus Section)

Docker Compose lets you define multi-container applications. You’ll learn how to:

Write a docker-compose.yml file

Start multiple services with a single command

Manage application stacks easily
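
The shape of a multi-container definition can be sketched as a plain Python dictionary. The keys used here (`services`, `image`, `ports`, `depends_on`, `volumes`) follow the Compose file format; the service names, the `example/node-app:1.0` image, and the helper names are hypothetical. The sketch serializes to JSON to avoid a third-party YAML library, on the assumption that your tooling accepts JSON as a YAML subset; otherwise, convert it to YAML by hand.

```python
import json


def compose_config(app_image, db_image, app_port):
    """Build a two-service Compose definition as a plain dictionary."""
    return {
        "services": {
            "app": {
                "image": app_image,
                "ports": [f"{app_port}:{app_port}"],
                "depends_on": ["db"],             # start the database first
            },
            "db": {
                "image": db_image,
                "volumes": ["db_data:/data/db"],  # named volume keeps data across restarts
            },
        },
        "volumes": {"db_data": {}},
    }


def to_json(config):
    """Serialize the definition so it can be written to a file."""
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    print(to_json(compose_config("example/node-app:1.0", "mongo:7", 3000)))
```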

Real-World Examples Included

Throughout the tutorial, we use real-world examples to reinforce each concept. You’ll deploy a simple web application using Docker, connect it to a database, and scale services with Docker Compose.

Example Projects:

Dockerizing a static HTML website

Creating a REST API with Node.js and Express inside a container

Running a MySQL or MongoDB database container

Building a full-stack web app with Docker Compose

Best Practices and Tips

As you progress, you’ll also learn:

Naming conventions for containers and images

How to clean up unused images and containers

Tagging and pushing images to Docker Hub

Security basics when using Docker in production

What’s Next After This Tutorial?

After completing this Docker tutorial, you’ll be well-equipped to:

Use Docker in personal or professional projects

Learn Kubernetes and container orchestration

Apply Docker in CI/CD pipelines

Deploy containers to cloud platforms

Conclusion

Docker is an essential tool in the modern developer's toolbox. By learning Docker step by step in this beginner-friendly tutorial, you’ll gain the skills and confidence to build, deploy, and manage applications efficiently and consistently across different environments.

Whether you’re building simple web apps or complex microservices, Docker provides the flexibility, speed, and scalability needed for success. So dive in, follow along with the hands-on examples, and start your journey to mastering containerization with Docker at Tpoint Tech!


CI/CD Pipelines in the Cloud: How to Achieve Faster, Safer Deployments

In today’s digital-first world, speed and reliability in software delivery are critical. With cloud infrastructure becoming the new normal, CI/CD pipelines (Continuous Integration and Continuous Deployment) are key to enabling faster, safer deployments. They help development teams automate code integration, testing, and deployment—removing bottlenecks and minimizing risks.

In this blog, we’ll explore how CI/CD works in cloud environments, why it’s essential for modern development, and how it supports seamless delivery of scalable, secure applications.

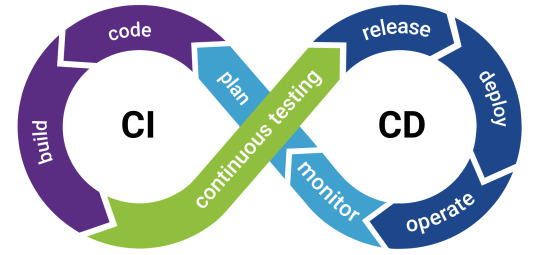

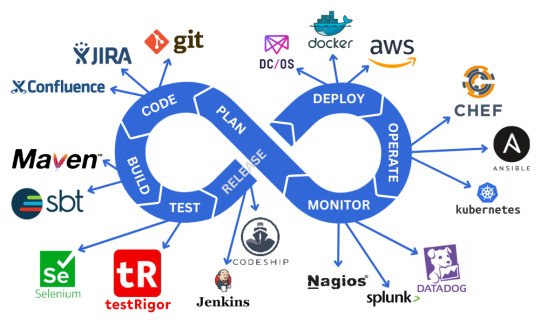

🛠️ What Is CI/CD?

Continuous Integration (CI): Developers frequently merge code into a shared repository. Each change triggers automated tests to catch bugs early.

Continuous Deployment (CD): After passing tests, code is automatically deployed to production or staging environments without manual intervention.

Together, CI/CD creates a feedback loop that ensures rapid, reliable software delivery.

🌐 Why CI/CD Pipelines Matter in the Cloud

CI/CD is not a luxury—it’s a necessity in the cloud. Here’s why:

Cloud environments are dynamic: With auto-scaling, microservices, and distributed systems, manual deployments are error-prone and slow.

Rapid release cycles: Customers expect continuous improvements. CI/CD helps teams ship features weekly or even daily.

Consistency and traceability: Every change is logged, tested, and version-controlled—reducing deployment risks.

The cloud provides the perfect infrastructure for CI/CD pipelines, offering scalability, flexibility, and automation capabilities.

🔁 How a Cloud-Based CI/CD Pipeline Works

A typical CI/CD pipeline in the cloud includes:

Code Commit: Developers push code to a Git repository (e.g., GitHub, GitLab, Bitbucket).

Build & Test: Cloud-native CI tools (like GitHub Actions, AWS CodeBuild, or CircleCI) compile the code and run unit/integration tests.

Artifact Creation: Build artifacts (e.g., Docker images) are stored in cloud repositories (e.g., Amazon ECR, Azure Container Registry).

Deployment: Tools like AWS CodeDeploy, Azure DevOps, or ArgoCD deploy the artifacts to target environments.

Monitoring: Real-time monitoring and alerts ensure the deployment is successful and stable.
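
The stage order above can be sketched as a tiny runner that stops at the first failing stage and marks everything after it as skipped. This is a simplified model for illustration, not how any particular CI service is implemented; `run_pipeline` and the stage names are assumptions.

```python
from typing import Callable, List, Tuple

Stage = Tuple[str, Callable[[], bool]]


def run_pipeline(stages: List[Stage]) -> List[Tuple[str, str]]:
    """Run stages in order; stop at the first failure and skip the rest."""
    results = []
    failed = False
    for name, action in stages:
        if failed:
            results.append((name, "skipped"))
            continue
        ok = action()
        results.append((name, "passed" if ok else "failed"))
        failed = not ok
    return results


if __name__ == "__main__":
    demo = [
        ("build", lambda: True),
        ("test", lambda: False),   # a failing test stage blocks deployment
        ("deploy", lambda: True),
    ]
    for name, status in run_pipeline(demo):
        print(f"{name}: {status}")
```

The key property is that a broken build or failing test can never reach the deploy stage.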

✅ Benefits of Cloud CI/CD

1. Faster Time-to-Market

Automated testing and deployment reduce manual overhead, accelerating release cycles.

2. Improved Code Quality

Each commit is tested, catching bugs early and ensuring only clean code reaches production.

3. Consistent Deployments

Standardized pipelines eliminate the “it worked on my machine” problem, ensuring repeatable results.

4. Efficient Collaboration

CI/CD fosters DevOps culture, encouraging collaboration between developers, testers, and operations.

5. Scalability on Demand

Cloud CI/CD systems scale automatically with the size and complexity of the application.

🔐 Security and Compliance in CI/CD

Modern pipelines are integrating security and compliance checks directly into the deployment process (DevSecOps). These include:

Static and dynamic code analysis

Container vulnerability scans

Secrets detection

Infrastructure compliance checks

By catching vulnerabilities early, businesses reduce risks and ensure regulatory alignment.
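
To give a feel for one of these checks, here is a minimal secrets-detection sketch. It uses two illustrative regular expressions (the commonly documented `AKIA` prefix format for AWS access key IDs and a PEM private-key header); real scanners use much larger rule sets, and the names `PATTERNS` and `find_secrets` are assumptions.

```python
import re

# Illustrative patterns only; production scanners ship far more rules.
PATTERNS = {
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
}


def find_secrets(text):
    """Return (line_number, rule_name) pairs for every line matching a pattern."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for rule, pattern in PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, rule))
    return findings
```

A pipeline step could run a check like this on every commit and fail the build when the list of findings is not empty.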

📊 CI/CD with IaC and Automated Testing

CI/CD becomes even more powerful when combined with:

Infrastructure as Code (IaC) for consistent cloud infrastructure provisioning

Automated Testing to validate performance, functionality, and security

Together, they create a fully automated, end-to-end deployment ecosystem that supports cloud-native scalability and resilience.

🏢 How Salzen Cloud Builds Smart CI/CD Solutions

At Salzen Cloud, we design and implement cloud-native CI/CD pipelines tailored to your business needs. Whether you're deploying microservices on Kubernetes, serverless applications, or hybrid environments, we help you:

Automate build, test, and deployment workflows

Integrate security and compliance into your pipelines

Optimize for speed, reliability, and rollback safety

Monitor performance and deployment health in real time

Our solutions empower teams to ship faster, fix faster, and innovate with confidence.

🧩 Conclusion

CI/CD is the backbone of modern cloud development. It streamlines the software delivery process, reduces manual errors, and provides the agility needed to stay competitive.

To achieve faster, safer deployments in the cloud:

Adopt CI/CD pipelines for all projects

Integrate IaC and automated testing

Shift security left with DevSecOps practices

Continuously monitor and optimize your pipelines

With the right CI/CD strategy, your team can move fast without breaking things—delivering value to users at cloud speed.

Want help designing a CI/CD pipeline for your cloud environment? Let Salzen Cloud show you how.


Docker and Containerization in Cloud Native Development

In the world of cloud native application development, the demand for speed, agility, and scalability has never been higher. Businesses strive to deliver software faster while maintaining performance, reliability, and security. One of the key technologies enabling this transformation is Docker—a powerful tool that uses containerization to simplify and streamline the development and deployment of applications.

Containers, especially when managed with Docker, have become fundamental to how modern applications are built and operated in cloud environments. They encapsulate everything an application needs to run—code, dependencies, libraries, and configuration—into lightweight, portable units. This approach has revolutionized the software lifecycle from development to production.

What Is Docker and Why Does It Matter?

Docker is an open-source platform that automates the deployment of applications inside software containers. Containers offer a more consistent and efficient way to manage software, allowing developers to build once and run anywhere—without worrying about environmental inconsistencies.

Before Docker, developers often faced the notorious "it works on my machine" issue. With Docker, you can run the same containerized app in development, testing, and production environments without modification. This consistency dramatically reduces bugs and deployment failures.

Benefits of Docker in Cloud Native Development

Docker plays a vital role in cloud native environments by promoting the principles of scalability, automation, and microservices-based architecture. Here’s how it contributes:

1. Portability and Consistency

Since containers include everything needed to run an app, they can move between cloud providers or on-prem systems without changes. Whether you're using AWS, Azure, GCP, or a private cloud, Docker provides a seamless deployment experience.

2. Resource Efficiency

Containers are lightweight and share the host system’s kernel, making them more efficient than virtual machines (VMs). You can run more containers on the same hardware, reducing costs and resource usage.

3. Rapid Deployment and Rollback

Docker enables faster application deployment through pre-configured images and automated CI/CD pipelines. If a new deployment fails, you can quickly roll back by redeploying the previous image version.
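
In practice, rolling back usually means redeploying an earlier image tag. The sketch below models that idea with a small release history; the class name `ReleaseHistory` and the `shop-api` tags are hypothetical, and a real deployment tool would also restart the running containers.

```python
class ReleaseHistory:
    """Tracks deployed image tags so the previous one can be redeployed."""

    def __init__(self):
        self._tags = []

    def deploy(self, tag):
        """Record a newly deployed image tag."""
        self._tags.append(tag)
        return tag

    def current(self):
        """Return the tag that is live right now, or None if nothing is deployed."""
        return self._tags[-1] if self._tags else None

    def rollback(self):
        """Drop the current tag and return the one that should be redeployed."""
        if len(self._tags) < 2:
            raise RuntimeError("no earlier release to roll back to")
        self._tags.pop()
        return self._tags[-1]


if __name__ == "__main__":
    history = ReleaseHistory()
    history.deploy("shop-api:1.4.0")
    history.deploy("shop-api:1.5.0")
    print("rolling back to", history.rollback())
```

This only works if every release is pushed under its own immutable tag rather than overwriting a single tag such as `latest`.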

4. Isolation and Security

Each Docker container runs in isolation, so applications do not interfere with one another. This isolation also improves security by limiting how far a vulnerability in one container can reach, although containers share the host kernel and are therefore less strongly isolated than virtual machines.

5. Support for Microservices

Microservices architecture is a key component of cloud native application development. Docker supports this approach by enabling the development of loosely coupled services that can scale independently and communicate via APIs.

Docker Compose and Orchestration Tools

Docker alone is powerful, but in larger cloud native environments, you need tools to manage multiple containers and services. Docker Compose allows developers to define and manage multi-container applications using a single YAML file. For production-scale orchestration, Kubernetes takes over, managing deployment, scaling, and health of containers.

Docker integrates well with Kubernetes, providing a robust foundation for deploying and managing microservices-based applications at scale.

Real-World Use Cases of Docker in the Cloud

Many organizations already use Docker to power their digital transformation. For instance:

Netflix uses containerization to manage thousands of microservices that stream content globally.

Spotify runs its music streaming services in containers for consistent performance.

Airbnb speeds up development and testing by running staging environments in isolated containers.

These examples show how Docker not only supports large-scale operations but also enhances agility in cloud-based software development.

Best Practices for Using Docker in Cloud Native Environments

To make the most of Docker in your cloud native journey, consider these best practices:

Use minimal base images (like Alpine) to reduce attack surfaces and improve performance.

Keep containers stateless and use external services for data storage to support scalability.

Implement proper logging and monitoring to ensure container health and diagnose issues.

Use multi-stage builds to keep images clean and optimized for production.

Automate container updates using CI/CD tools for faster iteration and delivery.

These practices help maintain a secure, maintainable, and scalable cloud native architecture.

Challenges and Considerations

Despite its many advantages, Docker does come with challenges. Managing networking between containers, securing images, and handling persistent storage can be complex. However, with the right tools and strategies, these issues can be managed effectively.

Cloud providers now offer native services, such as Amazon ECS, Azure Container Instances, and Google Cloud Run, that simplify the management of containerized workloads, making Docker even more accessible for development teams.

Conclusion

Docker has become an essential part of cloud native application development by making it easier to build, deploy, and manage modern applications. Its simplicity, consistency, and compatibility with orchestration tools like Kubernetes make it a cornerstone technology for businesses embracing the cloud.

As organizations continue to evolve their software strategies, Docker will remain a key enabler—powering faster releases, better scalability, and more resilient applications in the cloud era.

#CloudNative#Docker#Containers#DevOps#Kubernetes#Microservices#CloudComputing#CloudDevelopment#SoftwareEngineering#ModernApps#CloudZone#CloudArchitecture


How to Secure AI Artifacts Across the ML Lifecycle – Anton R Gordon’s Protocol for Trusted Pipelines

In today’s cloud-native AI landscape, securing machine learning (ML) artifacts is no longer optional—it’s critical. As AI models evolve from experimental notebooks to enterprise-scale applications, every artifact generated—data, models, configurations, and logs—becomes a potential attack surface. Anton R Gordon, a seasoned AI architect and cloud security expert, has pioneered a structured approach for securing AI pipelines across the ML lifecycle. His protocol is purpose-built for teams deploying ML workflows on platforms like AWS, GCP, and Azure.

Why ML Artifact Security Matters

Machine learning pipelines involve several critical stages—data ingestion, preprocessing, model training, deployment, and monitoring. Each phase produces artifacts such as datasets, serialized models, training logs, and container images. If compromised, these artifacts can lead to:

Data leakage and compliance violations (e.g., GDPR, HIPAA)

Model poisoning or backdoor attacks

Unauthorized model replication or intellectual property theft

Reduced model accuracy due to tampered configurations

Anton R Gordon’s Security Protocol for Trusted AI Pipelines

Anton’s methodology combines secure cloud services with DevSecOps principles to ensure that ML artifacts remain verifiable, auditable, and tamper-proof.

1. Secure Data Ingestion & Preprocessing

Anton R Gordon emphasizes securing the source data using encrypted S3 buckets or Google Cloud Storage with fine-grained IAM policies. All data ingestion pipelines must implement checksum validation and data versioning to ensure integrity.

He recommends integrating AWS Glue with Data Catalog encryption enabled, and VPC-only connectivity to eliminate exposure to public internet endpoints.

2. Model Training with Encryption and Audit Logging

During training, Anton R Gordon suggests enabling SageMaker Training Jobs with KMS encryption for both input and output artifacts. Logs should be streamed to CloudWatch Logs or GCP Logging with retention policies configured.

Container images used in training should be scanned with Amazon Inspector or GCP Container Analysis and signed using tools like cosign, so that their authenticity can be verified during deployment.

3. Model Registry and Artifact Signing

A crucial step in Gordon’s protocol is registering models in a version-controlled model registry, such as SageMaker Model Registry or MLflow, along with cryptographic signatures.

Models are hashed with SHA-256, and the resulting digest is cryptographically signed and stored with the corresponding metadata to prevent rollback or substitution attacks. Verifying the signature ensures that only approved models proceed to deployment.
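
The hash-then-sign idea can be sketched with the Python standard library. Note the assumptions: this uses HMAC-SHA256 with a shared key as a stand-in for the asymmetric signatures a tool like cosign would produce, and the function names are illustrative rather than part of the protocol described here.

```python
import hashlib
import hmac


def sha256_digest(data: bytes) -> str:
    """Hex SHA-256 digest of the serialized model bytes."""
    return hashlib.sha256(data).hexdigest()


def sign_digest(digest: str, key: bytes) -> str:
    """HMAC-SHA256 over the digest; a stand-in for an asymmetric signature."""
    return hmac.new(key, digest.encode("ascii"), hashlib.sha256).hexdigest()


def verify_artifact(data: bytes, expected_digest: str, signature: str, key: bytes) -> bool:
    """Accept the artifact only if both its digest and its signature match."""
    actual = sha256_digest(data)
    expected_signature = sign_digest(actual, key)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(actual, expected_digest) and hmac.compare_digest(
        expected_signature, signature
    )
```

A deployment gate would call `verify_artifact` with the digest and signature stored in the registry and refuse to deploy when it returns `False`.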

4. Secure Deployment with CI/CD Integration

Anton integrates CI/CD pipelines with security gates using tools like AWS CodePipeline and GitHub Actions, enforcing checks for signed models, container scan results, and infrastructure-as-code validation.

Deployed endpoints are protected using VPC endpoint policies, IAM role-based access, and SSL/TLS encryption.

5. Monitoring & Drift Detection with Alerting

In production, SageMaker Model Monitor is used to detect drift in data and model quality, while AWS CloudTrail records API activity and configuration changes. Alerts are sent via Amazon SNS, and automated rollbacks are triggered when an anomaly is detected.
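
As a simplified picture of what a drift check does, the sketch below measures how far the mean of live values has moved from a baseline, in units of the baseline standard deviation. This is an assumed toy metric for illustration, not the method SageMaker Model Monitor uses; the function names and the threshold of 3.0 are arbitrary choices.

```python
from statistics import mean, pstdev


def drift_score(baseline, live):
    """Shift of the live mean from the baseline mean, in baseline standard deviations."""
    spread = pstdev(baseline)
    if spread == 0:
        # A constant baseline: any change at all counts as unbounded drift.
        return 0.0 if mean(live) == mean(baseline) else float("inf")
    return abs(mean(live) - mean(baseline)) / spread


def has_drifted(baseline, live, threshold=3.0):
    """True when the live data has moved further than the chosen threshold."""
    return drift_score(baseline, live) > threshold
```

An alerting hook could publish a notification whenever `has_drifted` returns `True` for a monitored feature or prediction score.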

Final Thoughts

Anton R Gordon’s protocol for securing AI artifacts offers a holistic, scalable, and cloud-native strategy to protect ML pipelines in real-world environments. As AI adoption continues to surge, implementing these trusted pipeline principles ensures your models—and your business—remain resilient, compliant, and secure.


Serverless vs. Containers: Which Cloud Computing Model Should You Use?

In today’s cloud-driven world, businesses are building and deploying applications faster than ever before. Two of the most popular technologies empowering this transformation are Serverless computing and Containers. While both offer flexibility, scalability, and efficiency, they serve different purposes and excel in different scenarios.

If you're wondering whether to choose Serverless or Containers for your next project, this blog will break down the pros, cons, and use cases—helping you make an informed, strategic decision.

What Is Serverless Computing?

Serverless computing is a cloud-native execution model where cloud providers manage the infrastructure, provisioning, and scaling automatically. Developers simply upload their code as functions and define triggers, while the cloud handles the rest.

Key Features of Serverless:

No infrastructure management

Event-driven architecture

Automatic scaling

Pay-per-execution pricing model

Popular Platforms:

AWS Lambda

Google Cloud Functions

Azure Functions

What Are Containers?

Containers package an application along with its dependencies and libraries into a single unit. This ensures consistent performance across environments and supports microservices architecture.

Containers are orchestrated using tools like Kubernetes or Docker Swarm to ensure availability, scalability, and automation.

Key Features of Containers:

Full control over runtime and OS

Environment consistency

Portability across platforms

Ideal for complex or long-running applications

Popular Tools:

Docker

Kubernetes

Podman

Serverless vs. Containers: Head-to-Head Comparison

| Feature | Serverless | Containers |
| --- | --- | --- |
| Use Case | Event-driven, short-lived functions | Complex, long-running applications |
| Scalability | Auto-scales instantly | Requires orchestration (e.g., Kubernetes) |
| Startup Time | Cold starts possible | Faster if container is pre-warmed |
| Pricing Model | Pay-per-use (per invocation) | Pay-per-resource (CPU/RAM) |
| Management | Fully managed by provider | Requires DevOps team or automation setup |
| Vendor Lock-In | High (platform-specific) | Low (containers run anywhere) |
| Runtime Flexibility | Limited runtimes supported | Any language, any framework |
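
The pricing difference is easier to reason about with a small break-even calculation. All prices in this sketch are made-up placeholders, not real provider rates, and the function names are assumptions; plug in your own numbers.

```python
def serverless_monthly_cost(invocations, price_per_million):
    """Pay-per-use: cost grows with the number of invocations."""
    return invocations / 1_000_000 * price_per_million


def container_monthly_cost(instances, price_per_instance_month):
    """Pay-per-resource: cost depends on how many instances stay running."""
    return instances * price_per_instance_month


def break_even_invocations(instances, price_per_instance_month, price_per_million):
    """Invocation count at which both models cost the same per month."""
    fixed = container_monthly_cost(instances, price_per_instance_month)
    return fixed / price_per_million * 1_000_000


if __name__ == "__main__":
    # Hypothetical prices: 2.00 per million invocations, 30.00 per instance-month.
    print(break_even_invocations(2, 30.0, 2.0))
```

With these placeholder numbers, two always-on instances cost the same as 30 million invocations a month; below that volume the pay-per-use model is cheaper, above it the fixed-cost model wins.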

When to Use Serverless

Best For:

Lightweight APIs

Scheduled jobs (e.g., cron)

Real-time processing (e.g., image uploads, IoT)

Backend logic in JAMstack websites

Advantages:

Faster time-to-market

Minimal ops overhead

Highly cost-effective for sporadic workloads

Simplifies event-driven architecture

Limitations:

Cold start latency

Limited execution time (e.g., 15 mins on AWS Lambda)

Difficult for complex or stateful workflows

When to Use Containers

Best For:

Enterprise-grade microservices

Stateful applications

Applications requiring custom runtimes

Complex deployments and APIs

Advantages:

Full control over runtime and configuration

Seamless portability across environments

Supports any tech stack

Easier integration with CI/CD pipelines

Limitations:

Requires container orchestration

More complex infrastructure setup

Can be costlier if not optimized

Can You Use Both?

Yes—and you probably should.

Many modern cloud-native architectures combine containers and serverless functions for optimal results.

Example Hybrid Architecture:

Use Containers (via Kubernetes) for core services.

Use Serverless for auxiliary tasks like:

Sending emails

Processing webhook events

Triggering CI/CD jobs

Resizing images

This hybrid model allows teams to benefit from the control of containers and the agility of serverless.

Serverless vs. Containers: How to Choose

| Business Need | Recommendation |
| --- | --- |
| Rapid MVP or prototype | Serverless |
| Full-featured app backend | Containers |
| Low-traffic event-driven app | Serverless |
| CPU/GPU-intensive tasks | Containers |
| Scheduled background jobs | Serverless |
| Scalable enterprise service | Containers (w/ Kubernetes) |

Final Thoughts

Choosing between Serverless and Containers is not about which is better—it’s about choosing the right tool for the job.

Go Serverless when you need speed, simplicity, and cost-efficiency for lightweight or event-driven tasks.

Go with Containers when you need flexibility, full control, and consistency across development, staging, and production.

Both technologies are essential pillars of modern cloud computing. The key is understanding their strengths and limitations—and using them together when it makes sense.

#artificial intelligence#sovereign ai#coding#html#entrepreneur#devlog#linux#economy#gamedev#indiedev


Human-Centric Exploration of Generative AI Development

Generative AI is more than a buzzword. It’s a transformative technology shaping industries and igniting innovation across the globe. From creating expressive visuals to designing personalized experiences, it allows organizations to build powerful, scalable solutions with lasting impact. As tools like ChatGPT and Stable Diffusion continue to gain traction, investors and businesses alike are exploring the practical steps to develop generative AI solutions tailored to real-world needs.

Why Generative AI is the Future of Innovation

The rapid rise of generative AI in sectors like finance, healthcare, and media has drawn immense interest—and funding. OpenAI's valuation crossed $25 billion with Microsoft backing it with over $1 billion, signaling confidence in generative models even amidst broader tech downturns. The market is projected to reach $442.07 billion by 2031, driven by its ability to generate text, code, images, music, and more. For companies looking to gain a competitive edge, investing in generative AI isn’t just a trend—it’s a strategic move.

What Makes Generative AI a Business Imperative?

Generative AI increases efficiency by automating tasks, drives creative ideation beyond human limits, and enhances decision-making through data analysis. Its applications include marketing content creation, virtual product design, intelligent customer interactions, and adaptive user experiences. It also reduces operational costs and helps businesses respond faster to market demands.

How to Create a Generative AI Solution: A Step-by-Step Overview

1. Define Clear Objectives: Understand what problem you're solving and what outcomes you seek.

2. Collect and Prepare Quality Data: Whether it's image, audio, or text-based, the dataset's quality sets the foundation.

3. Choose the Right Tools and Frameworks: Utilize Python, TensorFlow, PyTorch, and cloud platforms like AWS or Azure for development.

4. Select Suitable Architectures: From GANs to VAEs, LSTMs to autoregressive models, align the model type with your solution needs.

5. Train, Fine-Tune, and Test: Iteratively improve performance through tuning hyperparameters and validating outputs.

6. Deploy and Monitor: Deploy using Docker, Flask, or Kubernetes and monitor with MLflow or TensorBoard.

Explore a comprehensive guide here: How to Create Your Own Generative AI Solution

Industry Applications That Matter

Healthcare: Personalized treatment plans, drug discovery

Finance: Fraud detection, predictive analytics

Education: Tailored learning modules, content generation

Manufacturing: Process optimization, predictive maintenance

Retail: Customer behavior analysis, content personalization

Partnering with the Right Experts

Building a successful generative AI model requires technical know-how, domain expertise, and iterative optimization. This is where generative AI consulting services come into play. A reliable generative AI consulting company like SoluLab offers tailored support—from strategy and development to deployment and scale.

Whether you need generative AI consultants to help refine your idea or want a long-term partner among top generative AI consulting companies, SoluLab stands out with its proven expertise. Explore our Gen AI Consulting Services

Final Thoughts

Generative AI is not just shaping the future—it’s redefining it. By collaborating with experienced partners, adopting best practices, and continuously iterating, you can craft AI solutions that evolve with your business and customers. The future of business is generative—are you ready to build it?


CI/CD Explained: Making Software Delivery Seamless

In today’s fast-paced digital landscape, where users expect frequent updates and bug fixes, delivering software swiftly and reliably isn’t just an advantage — it’s a necessity. That’s where CI/CD comes into play. CI/CD (short for Continuous Integration and Continuous Delivery/Deployment) is the backbone of modern DevOps practices and plays a crucial role in enhancing productivity, minimizing risks, and speeding up time to market.

In this blog, we’re going to explore the CI/CD pipeline in a way that’s easy to grasp, even if you’re just dipping your toes into the software development waters. So, grab your coffee and settle in — let’s demystify CI/CD together.

What is CI/CD?

Let’s break down the terminology first:

Continuous Integration (CI) is the practice of frequently integrating code changes into a shared repository. Each integration is verified by an automated build and tests, allowing teams to detect problems early.

Continuous Delivery (CD) ensures that the software can be released to production at any time. It involves automatically pushing code changes to a staging environment after passing CI checks.

Continuous Deployment, also abbreviated as CD, takes things a step further. Here, every change that passes all stages of the production pipeline is automatically released to customers without manual intervention.

Think of CI/CD as a conveyor belt in a high-tech bakery. The ingredients (code changes) are put on the belt, and through a series of steps (build, test, deploy), you end up with freshly baked software ready to be served.

Why is CI/CD Important?

Speed: CI/CD accelerates the software release process, enabling teams to deliver new features, updates, and fixes quickly.

Quality: Automated testing helps catch bugs and issues early in the development cycle, improving the overall quality of the product.

Consistency: The pipeline standardizes how software is built, tested, and deployed, making the process predictable and repeatable.

Collaboration: With CI/CD in place, developers work in a more collaborative and integrated manner, breaking down silos.

Customer Satisfaction: Faster delivery of reliable updates means happier users.

Core Components of a CI/CD Pipeline

Here’s what typically makes up a robust CI/CD pipeline:

Source Code Repository: Usually Git-based platforms like GitHub, GitLab, or Bitbucket. This is where the code lives.

Build Server: Tools like Jenkins, Travis CI, or CircleCI compile the code and run unit tests.

Automated Tests: Unit, integration, and end-to-end tests ensure the code behaves as expected.

Artifact Repository: A place to store build outputs, such as JARs, Docker images, etc.

Deployment Automation: Tools like Spinnaker, Octopus Deploy, or AWS CodeDeploy automate the delivery of applications to various environments.

Monitoring & Feedback: Monitoring tools like Prometheus, Grafana, or New Relic provide insights post-deployment.

The CI/CD Workflow: A Step-by-Step Look

Let’s walk through a typical CI/CD workflow:

Code Commit: A developer pushes new code to the source repository.

Automated Build: The CI tool kicks in, compiles the code, and checks for errors.

Testing Phase: Automated tests (unit, integration, etc.) run to validate the code.

Artifact Creation: A build artifact is generated and stored.

Staging Deployment: The artifact is deployed to a staging environment for further testing.

Approval/Automation: Depending on whether it’s Continuous Delivery or Deployment, the change is either auto-deployed or requires manual approval.

Production Release: The software goes live, ready for end-users.

Monitoring & Feedback: Post-deployment monitoring helps catch anomalies and improve future releases.
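
The difference between Continuous Delivery and Continuous Deployment comes down to a single gate before production. Here is a minimal sketch of that decision; the function name `release_decision` and the returned status strings are assumptions for illustration.

```python
def release_decision(checks_passed, mode, approved=False):
    """Decide what happens to a build once it reaches the release step.

    mode is "delivery" (manual approval required) or "deployment" (fully automatic).
    """
    if mode not in ("delivery", "deployment"):
        raise ValueError(f"unknown mode: {mode}")
    if not checks_passed:
        return "blocked"              # failed builds never reach production
    if mode == "deployment" or approved:
        return "release"
    return "awaiting approval"        # delivery mode waits for a human sign-off


if __name__ == "__main__":
    print(release_decision(True, "delivery"))
    print(release_decision(True, "deployment"))
```

In both modes the build must pass every check; the only difference is whether a person has to approve the final step.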

Benefits of CI/CD in Real-Life Scenarios

Let’s take a few examples to show how CI/CD transforms software delivery:

E-commerce Sites: Imagine fixing a payment bug and deploying the fix in hours, not days.

Mobile App Development: Push weekly app updates with zero downtime.

SaaS Platforms: Roll out new features incrementally and get real-time user feedback.

With CI/CD, you don’t need to wait for a quarterly release cycle to delight your users. You do it continuously.

Tools That Power CI/CD

Here’s a friendly table to help you get familiar with popular CI/CD tools:

| Purpose | Tools |
| --- | --- |
| Source Control | GitHub, GitLab, Bitbucket |
| CI/CD Pipelines | Jenkins, CircleCI, Travis CI, GitLab CI/CD |
| Containerization | Docker, Kubernetes |
| Configuration Management | Ansible, Chef, Puppet |
| Deployment Automation | AWS CodeDeploy, Octopus Deploy |
| Monitoring | Prometheus, Datadog, New Relic |

Each of these tools plays a specific role, and many work beautifully together.

CI/CD Best Practices

Keep Builds Fast: Optimize tests and build processes to minimize wait times.

Test Early and Often: Incorporate testing at every stage of the pipeline.

Fail Fast: Catch errors as early as possible and notify developers instantly.

Use Infrastructure as Code: Manage your environment configurations like version-controlled code.

Secure Your Pipeline: Incorporate security checks, secrets management, and compliance rules.

Monitor Everything: Observability isn’t optional; know what’s going on post-deployment.
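As one small illustration of the "Secure Your Pipeline" point, the sketch below reads a secret from an environment variable instead of hardcoding it in the repository. The helper name get_secret and the exception class are hypothetical; most CI systems can inject secrets as environment variables, but the exact mechanism depends on the tool you use.

```python
import os


class MissingSecretError(RuntimeError):
    """Raised when a required secret is not present in the environment."""


def get_secret(name: str) -> str:
    """Return the secret stored in environment variable `name`.

    The error message contains only the variable name, never a value,
    so it is safe to show in build logs.
    """
    value = os.environ.get(name)
    if not value:
        raise MissingSecretError(f"secret {name!r} is not set")
    return value
```

A deploy script could call get_secret("DEPLOY_TOKEN") (a made-up variable name) and fail immediately if the pipeline was not configured with it.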

Common CI/CD Pitfalls (and How to Avoid Them)

Skipping Tests: Don’t bypass automated tests to save time — you’ll pay for it later.

Overcomplicating Pipelines: Keep it simple and modular.

Lack of Rollback Strategy: Always be prepared to revert to a stable version.

Neglecting Team Training: CI/CD success relies on team adoption and knowledge.
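A rollback strategy can start out very simple. The sketch below, with a hypothetical ReleaseHistory class, keeps track of deployed versions so the previous one can be restored. Real rollbacks also need to deal with database migrations and cached assets, which this toy example ignores.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ReleaseHistory:
    """Ordered record of deployed versions, newest last."""
    versions: List[str] = field(default_factory=list)

    def deploy(self, version: str) -> None:
        self.versions.append(version)

    @property
    def current(self) -> Optional[str]:
        return self.versions[-1] if self.versions else None

    def rollback(self) -> str:
        """Drop the newest release and return the one now live."""
        if len(self.versions) < 2:
            raise RuntimeError("no earlier release to roll back to")
        self.versions.pop()
        return self.versions[-1]


history = ReleaseHistory()
history.deploy("1.0.0")
history.deploy("1.1.0")       # suppose this release misbehaves
restored = history.rollback()
```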

CI/CD and DevOps: The Dynamic Duo

While CI/CD focuses on the pipeline, DevOps is the broader culture that promotes collaboration between development and operations teams. CI/CD is a vital piece of the DevOps puzzle, enabling continuous feedback loops and shared responsibilities.

When paired effectively, they lead to:

Shorter development cycles

Improved deployment frequency

Lower failure rates

Faster recovery from incidents
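If you want to track these outcomes rather than just hope for them, a few lines of Python are enough to get started. The record type and the sample numbers below are invented for illustration; in practice the data would come from your deployment tool or incident tracker.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class DeployRecord:
    day: int                                    # day within the reporting period
    failed: bool                                # did the deploy cause an incident?
    minutes_to_recover: Optional[float] = None  # only set for failed deploys


def deployment_frequency(deploys: List[DeployRecord], period_days: int) -> float:
    """Average number of deployments per day."""
    return len(deploys) / period_days


def change_failure_rate(deploys: List[DeployRecord]) -> float:
    """Fraction of deployments that failed (0.0 if there were none)."""
    if not deploys:
        return 0.0
    return sum(d.failed for d in deploys) / len(deploys)


def mean_time_to_recover(deploys: List[DeployRecord]) -> Optional[float]:
    """Average recovery time in minutes, or None if nothing failed."""
    times = [d.minutes_to_recover for d in deploys
             if d.failed and d.minutes_to_recover is not None]
    return sum(times) / len(times) if times else None


# Made-up sample: four deploys in one week, one of which failed.
sample = [
    DeployRecord(1, False),
    DeployRecord(2, True, 30.0),
    DeployRecord(4, False),
    DeployRecord(6, False),
]
```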

Why Businesses in Australia Are Adopting CI/CD

The tech ecosystem in Australia is booming. From fintech startups to large enterprises, the demand for reliable, fast software delivery is pushing companies to adopt CI/CD practices.

A leading software development company in Australia recently shared how CI/CD helped them cut deployment times by 70% and reduce critical bugs in production. Their secret? Embracing automation, training their teams, and gradually building a culture of continuous improvement.

Final Thoughts

CI/CD isn’t just a set of tools — it’s a mindset. It’s about delivering value to users faster, with fewer headaches. Whether you’re building a mobile app, a web platform, or a complex enterprise system, CI/CD practices will make your life easier and your software better.

And remember, the journey to seamless software delivery doesn’t have to be overwhelming. Start small, automate what you can, learn from failures, and iterate. Before you know it, you’ll be releasing code like a pro.

If you’re just getting started or looking to improve your current pipeline, this is your sign to dive deeper into CI/CD. You’ve got this!

Video

Deploy docker image to AWS | Deploy Docker Container on EC2 | Push, Pull...

Text

Docker Kubernetes | Docker and Kubernetes Online Training

Running Containers with Docker & Kubernetes

In today’s cloud-native world, containerization has revolutionized application deployment and management. Docker and Kubernetes are the two most widely used technologies for running and orchestrating containers. While Docker simplifies container creation and deployment, Kubernetes ensures efficient container management at scale. This article explores the key concepts, benefits, and use cases of running containers with Docker and Kubernetes.

Understanding Containers

A container is a lightweight, standalone package that includes everything needed to run an application, including the code, runtime, libraries, and dependencies. Unlike traditional virtual machines (VMs), containers share the host operating system’s kernel, making them more efficient, portable, and faster to start.

Key benefits of containers:

Portability: Containers run the same way across different environments, from a developer’s laptop to cloud servers.

Scalability: Containers can be quickly replicated and distributed across multiple nodes.

Resource Efficiency: Since containers share the host OS, they consume fewer resources than VMs.

What is Docker?

Docker is an open-source platform that allows developers to build, package, and distribute applications as containers. It provides a simple way to create containerized applications using a Dockerfile, which defines the container’s configuration.

Key features of Docker:

Containerization: Encapsulates applications with their dependencies.

Image-based Deployment: Applications are deployed using lightweight, reusable container images.

Simplified Development Workflow: Developers can build and test applications in isolated environments.

Docker is ideal for small-scale deployments but has limitations in managing large containerized applications across multiple servers. This is where Kubernetes comes in.

What is Kubernetes?

Kubernetes (often abbreviated as K8s) is an open-source container orchestration platform designed to automate the deployment, scaling, and management of containerized applications. Originally developed by Google, Kubernetes is now widely used for running container workloads at scale.

Key components of Kubernetes:

Pods: The smallest deployable unit that contains one or more containers.

Nodes: The physical or virtual machines that run container workloads.

Clusters: A group of nodes working together to run applications.

Services: Help manage network communication between different components of an application.
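To show how these pieces relate, here is a rough sketch that builds two Kubernetes manifests as plain Python dictionaries and prints them as JSON. It assumes Pods are created through a Deployment object (the usual approach, though not covered above). The app name, image name, and port numbers are hypothetical.

```python
import json


def deployment_manifest(name: str, image: str, replicas: int) -> dict:
    """Describe a Deployment that keeps `replicas` Pods of `image` running."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the Pod template.
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {"name": name, "image": image,
                         "ports": [{"containerPort": 8080}]}
                    ]
                },
            },
        },
    }


def service_manifest(name: str, port: int, target_port: int) -> dict:
    """Describe a Service that routes traffic to Pods labelled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


web_deployment = deployment_manifest("web", "example/web:1.0", replicas=3)
web_service = service_manifest("web", port=80, target_port=8080)
print(json.dumps(web_deployment, indent=2))
```

Most teams write these manifests in YAML, but kubectl also accepts JSON, so output like this could be saved to a file and applied to a cluster.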

How Docker and Kubernetes Work Together

While Docker is used to create and run containers, Kubernetes is responsible for managing them in production. Here’s how they work together:

Building a Container: A developer packages an application as a Docker image.

Pushing the Image: The container image is stored in a container registry like Docker Hub.

Deploying with Kubernetes: Kubernetes pulls the container image and deploys it across multiple nodes.

Scaling & Load Balancing: Kubernetes can scale the application up or down based on demand and spreads traffic across the running replicas.

Monitoring & Recovery: If a container crashes, Kubernetes restarts it automatically.

This combination ensures high availability, scalability, and efficient resource utilization.
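The scaling and recovery steps both come down to one idea: Kubernetes keeps comparing the desired state with what is actually running and corrects the difference. The toy function below imitates that loop in plain Python. It is a simplified illustration, not Kubernetes code, and the container names are made up.

```python
from typing import List, Tuple


def reconcile(desired_replicas: int, running: List[str],
              next_id: int) -> Tuple[List[str], int]:
    """Start or stop containers until the count matches the desired state.

    Returns the new list of running containers and the next unused id.
    """
    running = list(running)  # work on a copy, leave the input untouched
    while len(running) < desired_replicas:
        running.append(f"web-{next_id}")  # "start" a replacement
        next_id += 1
    while len(running) > desired_replicas:
        running.pop()                     # "stop" a surplus container
    return running, next_id


containers, next_id = reconcile(3, [], 0)   # initial rollout of 3 replicas
containers.remove("web-1")                  # simulate a crash
containers, next_id = reconcile(3, containers, next_id)
```

After the second call there are three containers again, with a fresh one standing in for the crashed one. Lowering desired_replicas would shrink the list in the same way.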

Benefits of Using Docker & Kubernetes Together

Scalability: Kubernetes allows dynamic scaling of applications based on traffic.

Fault Tolerance: Kubernetes automatically replaces failed containers to maintain uptime.

Automation: Reduces manual intervention by automating deployments and updates.

Multi-Cloud Compatibility: Works across different cloud providers like AWS, Azure, and Google Cloud.

Use Cases of Docker & Kubernetes

Microservices Deployment: Ideal for running and managing microservices-based applications.

CI/CD Pipelines: Streamlines application development with automated testing and deployment.

Hybrid Cloud Deployments: Enables running applications across on-premises and cloud environments.

Big Data Processing: Supports large-scale data workloads using containerized environments.

Conclusion

Docker and Kubernetes have transformed modern application deployment by making it faster, more scalable, and highly efficient. While Docker simplifies containerization, Kubernetes takes it a step further by providing automation, scaling, and self-healing capabilities. Together, they form a powerful combination for building and managing cloud-native applications.

Trending Courses: ServiceNow, SAP Ariba, Site Reliability Engineering

Visualpath is a leading online software training institute in Hyderabad, with courses available worldwide at an affordable cost. For more information about Docker and Kubernetes Online Training:

Contact Call/WhatsApp: +91-7032290546

Visit: https://www.visualpath.in/online-docker-and-kubernetes-training.html

Text

How CI/CD is Changing the Future of Software Development

In today’s fast-paced software development landscape, implementing a CI/CD pipeline is essential for delivering high-quality applications efficiently. CI/CD (Continuous Integration and Continuous Delivery/Deployment) automates code integration, testing, and deployment, ensuring faster releases and improved software quality. These DevOps practices help teams catch bugs early, reduce risks, and streamline workflows.

By leveraging tools like GitHub Actions, Jenkins, Kubernetes, and Keploy, developers can automate repetitive tasks and focus on innovation. This guide will demystify CI/CD, explore its core principles, and show how Keploy enhances API testing, helping teams build robust CI/CD workflows that drive efficiency and reliability.

What is CI/CD?

CI/CD stands for Continuous Integration (CI) and Continuous Delivery/Deployment (CD). These practices automate and streamline the process of integrating code changes, testing them, and deploying applications reliably.

Continuous Integration (CI): Developers frequently merge code changes into a shared repository (e.g., GitHub), triggering automated builds and tests to catch issues early. This reduces integration conflicts and ensures code quality.

Continuous Delivery (CD): Extends CI by automating deployments to staging environments. Code is always in a deployable state but requires manual approval for production.

Continuous Deployment: The final step, in which validated code is automatically deployed to production without human intervention.

Example Workflow:

1. Developer commits code → 2. CI server builds and tests → 3. Staging deployment → 4. Production deployment (manual or automated).

Why Does CI/CD Matter?

CI/CD isn’t just a buzzword; it’s a game-changer:
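The only real difference between Continuous Delivery and Continuous Deployment is the gate in front of production. A small sketch makes that explicit. The enum and function names here are hypothetical and not taken from any CI tool.

```python
from enum import Enum


class Mode(Enum):
    CONTINUOUS_DELIVERY = "delivery"      # production needs a human sign-off
    CONTINUOUS_DEPLOYMENT = "deployment"  # production release is automatic


def may_release_to_production(mode: Mode, checks_passed: bool,
                              manually_approved: bool = False) -> bool:
    """Decide whether a validated build may go to production."""
    if not checks_passed:
        return False  # a failing build is never released in either mode
    if mode is Mode.CONTINUOUS_DEPLOYMENT:
        return True
    return manually_approved
```

With Mode.CONTINUOUS_DELIVERY, a green build waits until someone approves it. With Mode.CONTINUOUS_DEPLOYMENT, the same build goes straight out.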

Faster Releases: Automate repetitive tasks, reducing time-to-market by 25–30%

Improved Quality: Catch bugs early with automated testing (unit, integration, security scans)

Reduced Risk: Small, incremental updates minimize the impact of failures

Collaboration: Teams work on a unified codebase, avoiding "integration hell"

Real-World Impact:

Netflix reportedly deploys thousands of times daily using CI/CD, while Amazon has reported pushing code to production every few seconds.

What Are the Core Components of a CI/CD Pipeline?

A robust pipeline includes these stages:

Source Code Management: Git repositories (GitHub, GitLab) track changes and enable collaboration

Build Automation: Tools like Jenkins or GitHub Actions compile code into deployable artifacts (e.g., Docker images)

Automated Testing

Unit tests (JUnit, PyTest, Keploy)

Integration tests (Selenium)

Security scans (SonarQube, Keploy for API testing)

Deployment: Use Kubernetes or AWS CodeDeploy to push code to staging/production

Monitoring: Tools like Prometheus track performance post-deployment

Why Keploy?

Keploy simplifies testing by auto-generating test cases from user traffic, reducing manual effort and ensuring robust API validation
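The general idea of generating tests from traffic can be sketched without any tooling: record real request/response pairs, then replay the requests against a new build and flag any response that changed. The code below is a toy illustration of that record-and-replay idea only. It does not use or represent Keploy’s actual API, and the handlers and paths are invented.

```python
from typing import Callable, Dict, List, Tuple

Request = Tuple[str, str]             # (method, path)
Handler = Callable[[str, str], Dict]  # returns a response as a dict


def record(handler: Handler, traffic: List[Request]) -> List[Tuple[Request, Dict]]:
    """Capture the response the current build gives for each request."""
    return [((method, path), handler(method, path)) for method, path in traffic]


def replay(handler: Handler, recorded: List[Tuple[Request, Dict]]) -> List[Request]:
    """Return the requests whose response no longer matches the recording."""
    return [req for req, expected in recorded if handler(*req) != expected]


def handler_v1(method: str, path: str) -> Dict:
    return {"status": 200, "body": f"{method} {path}"}


def handler_v2(method: str, path: str) -> Dict:
    # Simulated regression: /orders starts failing in the new build.
    if path == "/orders":
        return {"status": 500, "body": "error"}
    return handler_v1(method, path)


recorded = record(handler_v1, [("GET", "/users"), ("GET", "/orders")])
regressions = replay(handler_v2, recorded)
```

Here regressions ends up containing only the /orders request, which is the kind of signal a traffic-based test is meant to surface.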

Key Design Principles for Effective CI/CD

Follow these principles to avoid common pitfalls:

Automate Everything: From builds to security checks. Manual steps = bottlenecks.

Version Control: Git ensures traceability and rollback capability.

Shift-Left Security: Integrate security scans early (e.g., Trivy for vulnerabilities).

Environment Parity: Staging should mirror production to avoid surprises.

Scalability: Design pipelines to handle growing teams and codebases.

Pro Tip: Use Infrastructure as Code (IaC) tools like Terraform to manage environments consistently.
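Environment parity is easier to keep when it is checked automatically. As a rough sketch, the function below compares two configuration dictionaries and reports every setting that differs. The setting names and values are hypothetical, and settings that are expected to differ (such as hostnames) can be skipped with the ignore argument.

```python
from typing import Any, Dict, FrozenSet, Tuple

MISSING = "<missing>"  # placeholder for a key present in only one environment


def config_drift(staging: Dict[str, Any], production: Dict[str, Any],
                 ignore: FrozenSet[str] = frozenset()) -> Dict[str, Tuple[Any, Any]]:
    """Return {key: (staging_value, production_value)} for each mismatch."""
    drift = {}
    for key in sorted(set(staging) | set(production)):
        if key in ignore:
            continue
        s = staging.get(key, MISSING)
        p = production.get(key, MISSING)
        if s != p:
            drift[key] = (s, p)
    return drift


staging_cfg = {"python": "3.12", "db_engine": "postgres-16", "host": "staging.local"}
production_cfg = {"python": "3.11", "db_engine": "postgres-16",
                  "host": "prod.local", "cache": "redis"}
drift = config_drift(staging_cfg, production_cfg, ignore=frozenset({"host"}))
```

A pipeline step could fail the build whenever drift is non-empty, which turns "staging should mirror production" into something that is actually enforced.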

Best CI/CD Tools for Modern DevOps

Keploy can also be integrated with the CI/CD platforms mentioned.

Jenkins vs. GitLab CI: