#how to deploy image in openshift

Migrating Virtual Machines to Red Hat OpenShift Virtualization with Ansible Automation Platform

As enterprises modernize their infrastructure, migrating traditional virtual machines (VMs) to container-native platforms is no longer just a trend — it’s a necessity. One of the most powerful solutions for this evolution is Red Hat OpenShift Virtualization, which allows organizations to run VMs side-by-side with containers on a unified Kubernetes platform. When combined with Red Hat Ansible Automation Platform, this migration can be automated, repeatable, and efficient.

In this blog, we’ll explore how enterprises can leverage Ansible to seamlessly migrate workloads from legacy virtualization platforms (like VMware or KVM) to OpenShift Virtualization.

🔍 Why OpenShift Virtualization?

OpenShift Virtualization extends OpenShift’s capabilities to include traditional VMs, enabling:

Unified management of containers and VMs

Native integration with Kubernetes networking and storage

Simplified CI/CD pipelines that include VM-based workloads

Reduction of operational overhead and licensing costs

🛠️ The Role of Ansible Automation Platform

Red Hat Ansible Automation Platform is the glue that binds infrastructure automation, offering:

Agentless automation using SSH or APIs

Pre-built collections for platforms like VMware, OpenShift, KubeVirt, and more

Scalable execution environments for large-scale VM migration

Role-based access and governance through automation controller (formerly Tower)

🧭 Migration Workflow Overview

A typical migration flow using Ansible and OpenShift Virtualization involves:

1. Discovery Phase

Inventory the source VMs using Ansible VMware/KVM modules.

Collect VM configuration, network settings, and storage details.

2. Template Creation

Convert the discovered VM configurations into KubeVirt VirtualMachine manifests.

Define OpenShift-native templates to match the workload requirements.

3. Image Conversion and Upload

Use tools like virt-v2v or Ansible roles to export VM disk images (VMDK/QCOW2).

Upload to OpenShift using Containerized Data Importer (CDI) or PVCs.

4. VM Deployment

Deploy converted VMs as KubeVirt VirtualMachines via Ansible Playbooks.

Integrate with OpenShift Networking and Storage (Multus, OCS, etc.)

5. Validation & Post-Migration

Run automated smoke tests or app-specific validation.

Integrate monitoring and alerting via Prometheus/Grafana.
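As a rough illustration of the smoke-test step, the sketch below checks whether a migrated VM answers on a TCP port. The function names and the idea of passing a dictionary of targets are assumptions made for this example; they are not part of Ansible or OpenShift.

```python
import socket


def port_is_open(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unresolvable hosts.
        return False


def smoke_test(targets: dict[str, tuple[str, int]]) -> dict[str, bool]:
    """Check each named (host, port) target and report whether it is reachable."""
    return {name: port_is_open(host, port) for name, (host, port) in targets.items()}
```

A port check only proves that something is listening; app-specific validation would still need to exercise the application itself.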

- name: Deploy VM on OpenShift Virtualization
  hosts: localhost
  tasks:
    # The PVC must exist before the VM that mounts it is created.
    - name: Create PVC for VM disk
      kubernetes.core.k8s:
        state: present
        definition: "{{ lookup('file', 'vm-pvc.yaml') }}"

    - name: Deploy VirtualMachine
      kubernetes.core.k8s:
        state: present
        definition: "{{ lookup('file', 'vm-definition.yaml') }}"
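The playbook reads the VM definition from a file. One possible way to produce such a file from discovered VM facts is sketched below. The function name and the sample values (VM name, namespace, PVC name) are hypothetical, and the manifest covers only a single PVC-backed disk.

```python
import json


def build_vm_manifest(name: str, namespace: str, cores: int,
                      memory: str, pvc_name: str) -> dict:
    """Build a KubeVirt VirtualMachine manifest that boots from an existing PVC."""
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Keep the VM stopped until post-migration validation is ready to run.
            "runStrategy": "Halted",
            "template": {
                "metadata": {"labels": {"kubevirt.io/domain": name}},
                "spec": {
                    "domain": {
                        "cpu": {"cores": cores},
                        "resources": {"requests": {"memory": memory}},
                        "devices": {
                            "disks": [{"name": "rootdisk", "disk": {"bus": "virtio"}}],
                        },
                    },
                    "volumes": [
                        {"name": "rootdisk",
                         "persistentVolumeClaim": {"claimName": pvc_name}},
                    ],
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical values standing in for facts gathered during discovery.
    vm = build_vm_manifest("legacy-app", "migration", 2, "4Gi", "legacy-app-disk")
    # JSON is a subset of YAML, so this output can be saved as vm-definition.yaml.
    print(json.dumps(vm, indent=2))
```

Generating the manifest from data rather than editing it by hand keeps every migrated VM on the same template, which is the consistency the workflow aims for.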

🔐 Benefits of This Approach

✅ Consistency – Every VM migration follows the same process.

✅ Auditability – Track every step of the migration with Ansible logs.

✅ Security – Ansible integrates with enterprise IAM and RBAC policies.

✅ Scalability – Migrate tens or hundreds of VMs using automation workflows.

🌐 Real-World Use Case

At HawkStack Technologies, we’ve successfully helped enterprises migrate large-scale critical workloads from VMware vSphere to OpenShift Virtualization using Ansible. Our structured playbooks, coupled with Red Hat-supported tools, ensured zero data loss and minimal downtime.

🔚 Conclusion

As cloud-native adoption grows, merging the worlds of VMs and containers is no longer optional. With Red Hat OpenShift Virtualization and Ansible Automation Platform, organizations get the best of both worlds — a powerful, policy-driven, scalable infrastructure that supports modern and legacy workloads alike.

If you're planning a VM migration journey or modernizing your data center, reach out to HawkStack Technologies — Red Hat Certified Partners — to accelerate your transformation. For more details, visit www.hawkstack.com

Red Hat Summit 2025: Microsoft Drives into Cloud Innovation

Microsoft at Red Hat Summit 2025

Microsoft is thrilled to announce that it will be a platinum sponsor of Red Hat Summit 2025, an IT community favourite. Red Hat Summit 2025 is a major enterprise open source event where IT professionals can learn, collaborate, and build new technologies spanning the datacenter, public cloud, edge, and beyond. Microsoft's partnership with Red Hat is likely to be a highlight this year, showing the power of collaboration and the solutions it produces.

Over time, this partnership has changed how organisations operate and serve their customers. Red Hat's open-source leadership and Microsoft's cloud expertise combine to advance technology and help businesses.

Red Hat's integration with Microsoft Azure is a major benefit of the alliance. It lets customers build, launch, and manage apps on a stable and flexible platform. Azure and Red Hat offer several tools for system modernisation and cloud-native app development. Azure Red Hat OpenShift gives companies a scalable, secure platform for deploying containerised apps, and Red Hat Enterprise Linux on Azure is a dependable base for mission-critical apps.

Attend Red Hat Summit 2025 to learn about these technologies. Microsoft and Red Hat will present new capabilities and integrations for Red Hat solutions on Azure. These security and performance improvements aim to meet organisations' digital needs.

RHEL for WSL

Red Hat Enterprise Linux can now run on Windows Subsystem for Linux (WSL). WSL lets developers run a Linux environment on Windows, so RHEL for WSL lets them run RHEL on Windows without setting up a traditional VM. With a free Red Hat Developer membership, developers can install the latest RHEL WSL image on a Windows PC and run Windows and RHEL side by side.

Azure Red Hat OpenShift

Red Hat and Microsoft are enhancing security with Confidential Containers on Azure Red Hat OpenShift, available in public preview. Memory encryption and secure execution environments provide hardware-level workload protection, which helps healthcare and financial organisations meet compliance requirements. With managed identity support for Azure Red Hat OpenShift, also in public preview, enterprises can move from static service principals to dynamic, token-based credentials.

Together these features reduce operational complexity and security risk, which makes it easier to adopt a container platform in regulated environments. Azure Red Hat OpenShift is now available in the Spain Central region, with expansion to Microsoft Azure Government (MAG) and UAE Central planned by Q2 2025. Recent additions include performance optimisation for Ddsv5 instances, an enterprise-grade cluster-wide proxy, and support for OpenShift 4.16. Red Hat OpenShift Virtualization on Azure is also entering public preview, allowing customers to manage containers and virtual machines on a single platform and speed up VM migration to Azure without refactoring.

RHEL landing zone

The RHEL landing zone guidance covers deploying, scaling, and administering RHEL instances on Azure using Azure-specific system images. Red Hat Satellite and Satellite Capsule automate the software lifecycle and deliver timely updates. Azure's on-demand capacity reservations ensure capacity is available in the chosen Azure regions, improving business continuity and disaster recovery (BCDR). Optimised identity management infrastructure deployments reduce replication failures and latency.

Azure Migrate application awareness and wave planning

By providing technical and business insights for the whole application and grouping dependent resources into waves, the new application-aware approach helps you choose Azure targets and tooling. Migrating a group of dependent applications to Azure together helps optimise cost and performance.

JBoss EAP on App Service

Red Hat and Microsoft jointly develop and maintain JBoss EAP on App Service, a managed service for running enterprise Java applications efficiently. Microsoft Azure recently made substantial changes to make JBoss EAP on App Service more affordable. JBoss EAP 8 offers a free tier, memory-optimized SKUs, and licence price reductions of more than 60% for pay-as-you-go subscriptions, as well as for the soon-to-be-released Bring-Your-Own-Subscription option for App Service.

JBoss EAP on Azure VMs

JBoss EAP on Azure Virtual Machines is generally available (GA), with solutions jointly developed and maintained by Microsoft and Red Hat. Automation templates for the most common resource provisioning tasks are available through the Azure Portal. The solutions include JBoss EAP VM images from Azure Marketplace.

Red Hat Summit 2025 expectations

Red Hat Summit 2025 promises a full programme of seminars, workshops, and presentations. Microsoft experts will share their perspectives on many topics, and exclusive announcements and product launches are expected.

This is a rare chance to network with executives and discuss future projects. The shared mission is digital business success through innovation, and Azure aims to deliver the best technology and service to its customers.

Read about Red Hat on Azure

Explore Red Hat and Microsoft's cutting-edge solutions. Register today to attend the conference and talk to their specialists about how the partnership can help your organisation.

#RedHatSummit2025#RedHatSummit#AzureRedHatOpenShift#RedHat#RedHatEnterprise#RedHatEnterpriseLinux#technology#technologynews#TechNews#news#govindhtech

Running Legacy Applications on OpenShift Virtualization: A How-To Guide

Organizations looking to modernize their IT infrastructure often face a significant challenge: legacy applications. These applications, while critical to operations, may not be easily containerized. Red Hat OpenShift Virtualization offers a solution, enabling businesses to run legacy virtual machine (VM)-based applications alongside containerized workloads. This guide provides a step-by-step approach to running legacy applications on OpenShift Virtualization.

Why Use OpenShift Virtualization for Legacy Applications?

OpenShift Virtualization, powered by KubeVirt, integrates VM management into the Kubernetes ecosystem. This allows organizations to:

Preserve Investments: Continue using legacy applications without expensive rearchitecture.

Simplify Operations: Manage VMs and containers through a unified OpenShift Console.

Bridge the Gap: Modernize incrementally by running VMs alongside microservices.

Enhance Security: Leverage OpenShift’s built-in security features like SELinux and RBAC for both containers and VMs.

Preparing Your Environment

Before deploying legacy applications on OpenShift Virtualization, ensure the following:

OpenShift Cluster: A running OpenShift Container Platform (OCP) cluster with sufficient resources.

OpenShift Virtualization Operator: Installed and configured from the OperatorHub.

VM Images: A QCOW2, OVA, or ISO image of your legacy application.

Storage and Networking: Configured storage classes and network settings to support VM operations.

Step 1: Enable OpenShift Virtualization

Log in to your OpenShift Web Console.

Navigate to OperatorHub and search for "OpenShift Virtualization".

Install the OpenShift Virtualization Operator.

After installation, create the HyperConverged custom resource and verify that the "KubeVirt" custom resources are available.

Step 2: Create a Virtual Machine

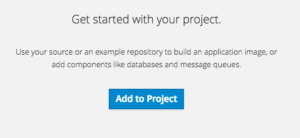

Access the Virtualization Dashboard: Go to the Virtualization tab in the OpenShift Console.

New Virtual Machine: Click on "Create Virtual Machine" and select "From Virtual Machine Import" or "From Scratch".

Define VM Specifications:

Select the operating system and size of the VM.

Attach the legacy application’s disk image.

Allocate CPU, memory, and storage resources.

Configure Networking: Assign a network interface to the VM, such as a bridge or virtual network.

Step 3: Deploy the Virtual Machine

Review the VM configuration and click "Create".

Monitor the deployment process in the OpenShift Console or use the CLI with:

oc get vmi

Once deployed, the VM will appear under the Virtual Machines section.
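To monitor the deployment from a script rather than by eye, the output of `oc get vmi -o json` can be parsed. The sketch below assumes that JSON has already been captured as a string. The helper names are made up for this example, and the sample document is trimmed to the fields the code reads.

```python
import json


def vmi_phases(vmi_list_json: str) -> dict[str, str]:
    """Map each VirtualMachineInstance name to its reported phase."""
    doc = json.loads(vmi_list_json)
    return {
        item["metadata"]["name"]: item.get("status", {}).get("phase", "Unknown")
        for item in doc.get("items", [])
    }


def not_running(vmi_list_json: str) -> list[str]:
    """Return the names of VMIs whose phase is anything other than Running."""
    phases = vmi_phases(vmi_list_json)
    return sorted(name for name, phase in phases.items() if phase != "Running")


# Hypothetical, heavily trimmed sample of `oc get vmi -o json` output.
SAMPLE = json.dumps({
    "items": [
        {"metadata": {"name": "legacy-app"}, "status": {"phase": "Running"}},
        {"metadata": {"name": "legacy-db"}, "status": {"phase": "Scheduling"}},
    ]
})

if __name__ == "__main__":
    print(not_running(SAMPLE))
```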

Step 4: Connect to the Virtual Machine

Access via Console: Open the VM’s console directly from the OpenShift UI.

SSH Access: If configured, connect to the VM using SSH.

Test the legacy application to ensure proper functionality.

Step 5: Integrate with Containerized Services

Expose VM Services: Create a Kubernetes Service to expose the VM to other workloads.

virtctl expose vmi <vm-name> --name=<service-name> --port=8080 --target-port=80

Connect Containers: Use Kubernetes-native networking to allow containers to interact with the VM.
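An alternative to exposing the VM from the command line is to declare the Service as a manifest. The sketch below builds one as plain data, reusing the ports from the command above. It assumes the VM template carries a `kubevirt.io/domain` label matching the VM name; if your VM uses a different label, the selector must change accordingly. The function name is hypothetical.

```python
import json


def build_vm_service(vm_name: str, port: int, target_port: int,
                     label_key: str = "kubevirt.io/domain") -> dict:
    """Build a ClusterIP Service that selects the pod backing a VM by label."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": f"{vm_name}-svc"},
        "spec": {
            # Assumption: the VM template sets this label on its launcher pod.
            "selector": {label_key: vm_name},
            "ports": [
                {"protocol": "TCP", "port": port, "targetPort": target_port},
            ],
        },
    }


if __name__ == "__main__":
    # Containers in the same namespace could then reach the VM at legacy-app-svc:8080.
    print(json.dumps(build_vm_service("legacy-app", 8080, 80), indent=2))
```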

Best Practices

Resource Allocation: Ensure the cluster has sufficient resources to support both VMs and containers.

Snapshots and Backups: Use OpenShift’s snapshot capabilities to back up VMs.

Monitoring: Leverage OpenShift Monitoring to track VM performance and health.

Security Policies: Implement network policies and RBAC to secure VM access.

Conclusion

Running legacy applications on OpenShift Virtualization allows organizations to modernize at their own pace while maintaining critical operations. By integrating VMs into the Kubernetes ecosystem, businesses can manage hybrid workloads more efficiently and prepare for a future of cloud-native applications. With this guide, you can seamlessly bring your legacy applications into the OpenShift environment and unlock new possibilities for innovation.

For more details visit: https://www.hawkstack.com/

Unlocking the Power of OpenShift: The Ultimate Platform for Modern Applications

Introduction

In the rapidly evolving world of container orchestration, OpenShift stands out as a robust, enterprise-grade platform. Built on Kubernetes, OpenShift provides developers and IT operations teams with a comprehensive suite of tools for deploying, managing, and scaling containerized applications. In this blog post, we’ll explore what makes OpenShift a powerful choice for modern application development and operations.

1. What is OpenShift?

OpenShift is a container application platform developed by Red Hat. It’s built on top of Kubernetes, the leading container orchestration engine, and provides additional tools and features to enhance developer productivity and operational efficiency. OpenShift supports a wide range of cloud environments, including public, private, and hybrid clouds.

2. Key Features of OpenShift

Integrated Development Environment: OpenShift provides an integrated development environment (IDE) that streamlines the application development process. It includes support for multiple programming languages, frameworks, and databases.

Developer-Friendly Tools: OpenShift’s Source-to-Image (S2I) capability allows developers to build, deploy, and scale applications directly from source code. It also integrates with popular CI/CD tools like Jenkins.

Robust Security: OpenShift incorporates enterprise-grade security features, including role-based access control (RBAC), network policies, and integrated logging and monitoring to ensure applications are secure and compliant.

Scalability and High Availability: OpenShift automates scaling and ensures high availability of applications with built-in load balancing, failover mechanisms, and self-healing capabilities.

Multi-Cloud Support: OpenShift supports deployment across multiple cloud providers, including AWS, Google Cloud, and Azure, as well as on-premises data centers, providing flexibility and avoiding vendor lock-in.

3. Benefits of Using OpenShift

Enhanced Productivity: With its intuitive developer tools and streamlined workflows, OpenShift significantly reduces the time it takes to develop, test, and deploy applications.

Consistency Across Environments: OpenShift ensures that applications run consistently across different environments, from local development setups to production in the cloud.

Operational Efficiency: OpenShift automates many operational tasks, such as scaling, monitoring, and managing infrastructure, allowing operations teams to focus on more strategic initiatives.

Robust Ecosystem: OpenShift integrates with a wide range of tools and services, including CI/CD pipelines, logging and monitoring solutions, and security tools, creating a rich ecosystem for application development and deployment.

Open Source and Community Support: As an open-source platform, OpenShift benefits from a large and active community, providing extensive documentation, forums, and third-party integrations.

4. Common Use Cases

Microservices Architecture: OpenShift excels at managing microservices architectures, providing tools to build, deploy, and scale individual services independently.

CI/CD Pipelines: OpenShift integrates seamlessly with CI/CD tools, automating the entire build, test, and deployment pipeline, resulting in faster delivery of high-quality software.

Hybrid Cloud Deployments: Organizations looking to deploy applications across both on-premises data centers and public clouds can leverage OpenShift’s multi-cloud capabilities to ensure seamless operation.

DevSecOps: With built-in security features and integrations with security tools, OpenShift supports the DevSecOps approach, ensuring security is an integral part of the development and deployment process.

5. Getting Started with OpenShift

Here’s a quick overview of how to get started with OpenShift:

Set Up OpenShift: You can set up OpenShift on a local machine using Red Hat OpenShift Local (formerly CodeReady Containers; Minishift only covers OpenShift 3.x), or use a managed OpenShift service on a public cloud provider.

Deploy Your First Application:

Create a new project.

Use the OpenShift Web Console or CLI to deploy an application from a Git repository.

Configure build and deployment settings using OpenShift’s intuitive interfaces.

Scale and Monitor: Utilize OpenShift’s built-in scaling features to handle increased load and monitor application performance using integrated tools.

Example Commands to Create a Project and Deploy an App:

oc new-project myproject
oc new-app https://github.com/sclorg/nodejs-ex -l name=myapp
oc expose svc/nodejs-ex
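If you want to repeat these steps for several projects, the same three commands can be generated from parameters. This is only a sketch: the helper names are invented here, and it relies on `oc new-app` naming the service after the repository, as it does in the example above. By default it prints the commands instead of running them.

```python
import shlex
import subprocess
from urllib.parse import urlparse


def deploy_commands(project: str, repo_url: str, app_label: str) -> list[list[str]]:
    """Return the oc invocations that create a project, build an app, and expose it."""
    # The service name is taken from the last path segment of the repository URL.
    service = urlparse(repo_url).path.rstrip("/").rsplit("/", 1)[-1]
    if service.endswith(".git"):
        service = service[:-4]
    return [
        ["oc", "new-project", project],
        ["oc", "new-app", repo_url, "-l", f"name={app_label}"],
        ["oc", "expose", f"svc/{service}"],
    ]


def run(commands: list[list[str]], dry_run: bool = True) -> None:
    """Print each command; execute it with oc only when dry_run is False."""
    for argv in commands:
        print(shlex.join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)


if __name__ == "__main__":
    run(deploy_commands("myproject", "https://github.com/sclorg/nodejs-ex", "myapp"))
```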

Conclusion

OpenShift is a powerful platform that bridges the gap between development and operations, providing a comprehensive solution for deploying and managing modern applications. Its robust features, combined with the flexibility of Kubernetes and the added value of Red Hat’s enhancements, make it an ideal choice for enterprises looking to innovate and scale efficiently.

Embrace OpenShift to unlock new levels of productivity, consistency, and operational excellence in your organization.

For more details, visit www.qcsdclabs.com

ok I just want to take a moment to rant bc the bug fix I’d been chasing down since monday that I finally just resolved was resolved with. get this. A VERSION UPDATE. A LIBRARY VERSION UPDATE. *muffled screaming into the endless void*

so what was happening. was that the jblas library I was using for handling complex matrices in my java program was throwing a fucking hissy fit when I deployed it via openshift in a dockerized container. In some ways, I understand why it would throw a fit because docker containers only come with the barest minimum of software installed and you mostly have to do all the installing of what your program needs by yourself. so ok. no biggie. my program runs locally but doesn’t run in docker: this makes sense. the docker container is probably just missing the libgfortran3 library that was likely preinstalled on my local machine. which means I’ll just update the dockerfile (which tells docker how to build the docker image/container) with instructions on how to install libgfortran3. problem solved. right? WRONG.

lo and behold, the bane of my existence for the past 3 days. this was the error that made me realize I needed to manually install libgfortran3, so I was pretty confident installing the missing library would fix my issue. WELL. turns out. it in fact didn’t. so now I’m chasing down why.

some forums suggested specifying the tmp directory as a jvm option or making sure the libgfortran library is on the LD_LIBRARY_PATH but basically nothing I tried was working so now I’m sitting here thinking: it probably really is just the libgfortran version. I think I legitimately need version 3 and not versions 4 or 5. because that’s what 90% of the solutions I was seeing was suggesting.

BUT! fuck me I guess because the docker image OS is RHEL which means I have to use the yum repo to install software (I mean I guess I could have installed it with the legit no kidding .rpm package but that’s a whole nother saga I didn’t want to have to go down), and the yum repo had already expired libgfortran version 3. :/ It only had versions 4 and 5, and I was like, well that doesn’t help me!

anyways so now I’m talking with IT trying to get their help to find a version of libgfortran3 I can install when. I FIND THIS ELUSIVE LINK. and at the very very bottom is THIS LINK.

Turns out. 1.2.4 is in fact not the latest version of jblas according to the github project page (on the jblas website it claims that 1.2.4 is the current version ugh). And according to the issue opened at the link above, version 1.2.5 should fix the libgfortran3 issue.

and I think it did?! because when I updated the library version in my project and redeployed it, the app was able to run without crashing on the libgfortran3 error.

sometimes the bug fix is as easy as updating a fucking version number. but it takes you 3 days to realize that’s the fix. or at least a fix. I was mentally preparing myself to go down the .rpm route but boy am I glad I don’t have to now.

anyways tl;dr: WEBSITES ARE STUPID AND LIKELY OUTDATED AND YOU SHOULD ALWAYS CHECK THE SOURCE CODE PAGE FOR THE LATEST MOST UP TO DATE INFORMATION.

#this is a loooooong post lmao#god i'm so mad BUT#at least the fix ended up being really easy#it just took me forever to _find_#ok end rant back to work lmao

Deploy application in openshift using container images

#openshift #containerimages #openshift # openshift4 #containerization

Deploy container app using OpenShift Container Platform running on-premises,openshift deploy docker image cli,openshift deploy docker image command line,how to deploy docker image in openshift,how to deploy image in openshift,deploy image in openshift,deploy…

#Deploy application in openshift using container images#Deploy container app using OpenShift Container Platform running on-premises#deploy image in openshift#deploy image into openshift#how to deploy docker image in openshift#how to deploy image in openshift#kubernetes#openshift#openshift 4#openshift container platform#openshift deploy docker image cli#openshift deploy docker image command line#openshift tutorial#red hat#red hat openshift#redhat openshift online

After a successful installation and configuration of OpenShift Container Platform, the updates are providedover-the-air by OpenShift Update Service (OSUS). The operator responsible for checking valid updates available for your cluster with the OpenShift Update Service is called Cluster Version Operator (CVO). When you request an update, the CVO uses the release image for that update to upgrade your cluster. All the release artifacts are stored as container images in the Quay registry. It is important to note that the OpenShift Update Service displays all valid updates for your Cluster version. It is highly recommended that you do not force an update to a version that the OpenShift Update Service does not display. This is because a suitability check is performed to guarantee functional cluster after the upgrade. During the upgrade process, the Machine Config Operator (MCO) applies the new configuration to your cluster machines. Before you start a minot upgrade to your OpenShift Cluster, check the current cluster version using oc command line tool if configured or from a web console. You should have the cluster admin rolebinding to use these functions. We have the following OpenShift / OKD installation guides on our website: How To Deploy OpenShift Container Platform 4.x on KVM How To Install OKD OpenShift 4.x Cluster on OpenStack Setup Local OpenShift 4.x Cluster with CodeReady Containers 1) Confirm current OpenShift Cluster version Check the current version and ensure your cluster is available: $ oc get clusterversion NAME VERSION AVAILABLE PROGRESSING SINCE STATUS version 4.8.5 True False 24d Cluster version is 4.8.5 The current version of OpenShift Container Platform installed can also be checked from the web console – Administration → Cluster Settings > Details Also check available Cluster nodes and their current status. Ensure they are all in Ready State before you can initiate an upgrade. 
$ oc get nodes NAME STATUS ROLES AGE VERSION master01.ocp4.computingpost.com Ready master 24d v1.21.1+9807387 master02.ocp4.computingpost.com Ready master 24d v1.21.1+9807387 master03.ocp4.computingpost.com Ready master 24d v1.21.1+9807387 worker01.ocp4.computingpost.com Ready worker 24d v1.21.1+9807387 worker02.ocp4.computingpost.com Ready worker 24d v1.21.1+9807387 worker03.ocp4.computingpost.com Ready worker 24d v1.21.1+9807387 2) Backup Etcd database data Access one of the control plane nodes(master node) using oc debug command to start a debug session: $ oc debug node/ Here is an example with expected output: $ oc debug node/master01.ocp4.example.com Starting pod/master01ocp4examplecom-debug ... To use host binaries, run `chroot /host` Pod IP: 192.168.100.11 If you don't see a command prompt, try pressing enter. sh-4.4# Change your root directory to the host: sh-4.4# chroot /host Then initiate backup of etcd data using provided script namedcluster-backup.sh: sh-4.4# which cluster-backup.sh /usr/local/bin/cluster-backup.sh The cluster-backup.sh script is part of etcd Cluster Operator and it is just a wrapper around the etcdctl snapshot save command. Execute the script while passing the backups directory: sh-4.4# /usr/local/bin/cluster-backup.sh /home/core/assets/backup Here is the output as captured from my backup process found latest kube-apiserver: /etc/kubernetes/static-pod-resources/kube-apiserver-pod-19 found latest kube-controller-manager: /etc/kubernetes/static-pod-resources/kube-controller-manager-pod-8 found latest kube-scheduler: /etc/kubernetes/static-pod-resources/kube-scheduler-pod-9 found latest etcd: /etc/kubernetes/static-pod-resources/etcd-pod-3 3f8cc62fb9dd794113201bfabd8af4be0fdaa523987051cdb358438ad4e8aca6 etcdctl version: 3.4.14 API version: 3.4 "level":"info","ts":1631392412.4503953,"caller":"snapshot/v3_snapshot.go:119","msg":"created

temporary db file","path":"/home/core/assets/backup/snapshot_2021-09-11_203329.db.part" "level":"info","ts":"2021-09-11T20:33:32.461Z","caller":"clientv3/maintenance.go:200","msg":"opened snapshot stream; downloading" "level":"info","ts":1631392412.4615548,"caller":"snapshot/v3_snapshot.go:127","msg":"fetching snapshot","endpoint":"https://157.90.142.231:2379" "level":"info","ts":"2021-09-11T20:33:33.712Z","caller":"clientv3/maintenance.go:208","msg":"completed snapshot read; closing" "level":"info","ts":1631392413.9274824,"caller":"snapshot/v3_snapshot.go:142","msg":"fetched snapshot","endpoint":"https://157.90.142.231:2379","size":"102 MB","took":1.477013816 "level":"info","ts":1631392413.9344463,"caller":"snapshot/v3_snapshot.go:152","msg":"saved","path":"/home/core/assets/backup/snapshot_2021-09-11_203329.db" Snapshot saved at /home/core/assets/backup/snapshot_2021-09-11_203329.db "hash":3708394880,"revision":12317584,"totalKey":7946,"totalSize":102191104 snapshot db and kube resources are successfully saved to /home/core/assets/backup Check if the backup files are available in our backups directory: sh-4.4# ls -lh /home/core/assets/backup/ total 98M -rw-------. 1 root root 98M Sep 11 20:33 snapshot_2021-09-11_203329.db -rw-------. 1 root root 92K Sep 11 20:33 static_kuberesources_2021-09-11_203329.tar.gz The files as seen are: snapshot_.db: The etcd snapshot file. static_kuberesources_.tar.gz: File that contains the resources for the static pods. When etcd encryption is enabled, the encryption keys for the etcd snapshot will be contained in this file. You can copy the backup files to a separate system or location outside the server for better security if the node becomes unavailable during upgrade. 
3) Changing Updates Channel (Optional) The OpenShift Container Platform offers the following upgrade channels: candidate fast stable Review the current update channel information and confirm that your channel is set to stable-4.8: $ oc get clusterversion -o json|jq ".items[0].spec" "channel": "fast-4.8", "clusterID": "f3dc42b3-aeec-4f4c-980f-8a04d6951585" You can decide to change an upgrade channel before the actual upgrade of the cluster. From Command Line Interface Switch Update channel from CLI using patch: oc patch clusterversion version --type json -p '["op": "add", "path": "/spec/channel", "value": "”]' # Example $ oc patch clusterversion version --type json -p '["op": "add", "path": "/spec/channel", "value": "stable-4.8"]' clusterversion.config.openshift.io/version patched $ oc get clusterversion -o json|jq ".items[0].spec" "channel": "stable-4.8", "clusterID": "f3dc42b3-aeec-4f4c-980f-8a04d6951585" From Web Console NOTE:For production clusters, you must subscribe to a stable-* or fast-* channel. Your cluster is fully supported by Red Hat subscription if you change from stable to fast channel. In my example below I’ve set the channel to fast-4.8. 
4) Perform Minor Upgrade on OpenShift / OKD Cluster You can choose to perform a cluster upgrade from: Bastion Server / Workstation oc command line From OpenShift web console Upgrade your OpenShift Container Platform from CLI Check available upgrades $ oc adm upgrade Cluster version is 4.8.5 Updates: VERSION IMAGE 4.8.9 quay.io/openshift-release-dev/ocp-release@sha256:5fb4b4225498912357294785b96cde6b185eaed20bbf7a4d008c462134a4edfd 4.8.10 quay.io/openshift-release-dev/ocp-release@sha256:53576e4df71a5f00f77718f25aec6ac7946eaaab998d99d3e3f03fcb403364db As seen we have two minor upgrades that can be performed: To version 4.8.9 To version 4.8.10 The easiest way to upgrade is to the latest version: $ oc adm upgrade --to-latest=true Updating to latest version 4.8.10 To update to a specific version: $ oc adm upgrade --to= #e.g 4.8.9, I'll run: $ oc adm upgrade --to=4.8.9 You can easily review Cluster Version Operator status with the following command:

$ oc get clusterversion -o json|jq ".items[0].spec"
{
  "channel": "stable-4.8",
  "clusterID": "f3dc42b3-aeec-4f4c-980f-8a04d6951585",
  "desiredUpdate": {
    "force": false,
    "image": "quay.io/openshift-release-dev/ocp-release@sha256:53576e4df71a5f00f77718f25aec6ac7946eaaab998d99d3e3f03fcb403364db",
    "version": "4.8.10"
  }
}

The oc adm upgrade command reports progress while the upgrade is running:

$ oc adm upgrade
info: An upgrade is in progress. Working towards 4.8.10: 69 of 678 done (10% complete)

Updates:

VERSION   IMAGE
4.8.9     quay.io/openshift-release-dev/ocp-release@sha256:5fb4b4225498912357294785b96cde6b185eaed20bbf7a4d008c462134a4edfd
4.8.10    quay.io/openshift-release-dev/ocp-release@sha256:53576e4df71a5f00f77718f25aec6ac7946eaaab998d99d3e3f03fcb403364db

Upgrade OpenShift Container Platform from UI

Administration → Cluster Settings → Details → Select channel → Select a version to update to, and click Save.

The Update status changes to "Update to <version> in progress".

All cluster operators are upgraded one after the other until all of them are at the version selected during the upgrade:

$ oc get co
NAME                                       VERSION   AVAILABLE   PROGRESSING   DEGRADED   SINCE
authentication                             4.8.5     True        False         False      119m
baremetal                                  4.8.5     True        False         False      24d
cloud-credential                           4.8.5     True        False         False      24d
cluster-autoscaler                         4.8.5     True        False         False      24d
config-operator                            4.8.5     True        False         False      24d
console                                    4.8.5     True        False         False      36h
csi-snapshot-controller                    4.8.5     True        False         False      24d
dns                                        4.8.5     True        False         False      24d
etcd                                       4.8.10    True        False         False      24d
image-registry                             4.8.5     True        False         False      24d
ingress                                    4.8.5     True        False         False      24d
insights                                   4.8.5     True        False         False      24d
kube-apiserver                             4.8.5     True        False         False      24d
kube-controller-manager                    4.8.5     True        False         False      24d
kube-scheduler                             4.8.5     True        False         False      24d
kube-storage-version-migrator              4.8.5     True        False         False      4d16h
machine-api                                4.8.5     True        False         False      24d
machine-approver                           4.8.5     True        False         False      24d
machine-config                             4.8.5     True        False         False      24d
marketplace                                4.8.5     True        False         False      24d
monitoring                                 4.8.5     True        False         False
network                                    4.8.5     True        False         False      24d
node-tuning                                4.8.5     True        False         False      24d
openshift-apiserver                        4.8.5     True        False         False      32h
openshift-controller-manager               4.8.5     True        False         False      23d
openshift-samples                          4.8.5     True        False         False      24d
operator-lifecycle-manager                 4.8.5     True        False         False      24d
operator-lifecycle-manager-catalog         4.8.5     True        False         False      24d
operator-lifecycle-manager-packageserver   4.8.5     True        False         False      7d11h
service-ca                                 4.8.5     True        False         False      24d
storage                                    4.8.5     True        False         False      24d

5) Validate OpenShift Cluster Upgrade

Wait for the upgrade process to complete, then confirm that the cluster version has been updated to the new version:

$ oc get clusterversion
NAME      VERSION   AVAILABLE   PROGRESSING   SINCE   STATUS
version   4.8.10    True        False         37h     Cluster version is 4.8.10

The cluster version can also be checked from the web console.

To obtain more detailed information about the cluster status, run the command:

$ oc describe clusterversion

If you run oc adm upgrade immediately after upgrading to the latest release, you should get a message similar to the one below:

$ oc adm upgrade
Cluster version is 4.8.10

No updates available. You may force an upgrade to a specific release image, but doing so may not be supported and result in downtime or data loss.

Conclusion

In this short guide we've shown how to perform a minor upgrade of an OpenShift cluster. The process can be initiated from the web console or from the command line, depending on your preference. In the articles to follow we'll cover the steps required to perform a major version upgrade of an OpenShift cluster.
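While the upgrade is rolling through the cluster operators, it can help to list which ones have not yet reached the target version. The following is a minimal sketch, assuming you have captured the plain-text output of oc get co as a string; the function name operators_pending and the shortened sample table are illustrative and not part of any OpenShift tooling.

```python
def operators_pending(oc_get_co_output: str, target_version: str) -> list[str]:
    """Return the names of cluster operators whose VERSION is not target_version."""
    pending = []
    for line in oc_get_co_output.strip().splitlines():
        fields = line.split()
        # Skip blank lines and the header row (NAME VERSION AVAILABLE ...).
        if len(fields) < 2 or fields[0] == "NAME":
            continue
        name, version = fields[0], fields[1]
        if version != target_version:
            pending.append(name)
    return pending


# Shortened sample in the same column layout as the `oc get co` output above.
sample = """\
NAME             VERSION   AVAILABLE   PROGRESSING   DEGRADED   SINCE
authentication   4.8.5     True        False         False      119m
etcd             4.8.10    True        False         False      24d
dns              4.8.5     True        False         False      24d
"""

print(operators_pending(sample, "4.8.10"))
```

An empty list suggests every operator reports the target version; oc get clusterversion remains the authoritative check that the upgrade has completed.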


A brief overview of Jenkins X

What is Jenkins X?

Jenkins X is an open-source solution that provides automated continuous integration and continuous delivery (CI/CD) and automated testing tools for cloud-native applications on Kubernetes. It supports all major cloud platforms such as AWS, Google Cloud, IBM Cloud, Microsoft Azure, Red Hat OpenShift, and Pivotal. Jenkins X is a Jenkins sub-project (more on this later) and employs automation, DevOps best practices, and tooling to accelerate development and improve overall CI/CD.

Features of Jenkins X

Automated CI/CD:

Jenkins X offers a sleek jx command-line tool, which allows Jenkins X to be installed inside an existing or new Kubernetes cluster, import projects, and bootstrap new applications. Additionally, Jenkins X creates pipelines for the project automatically.

Environment Promotion via GitOps:

Jenkins X lets you create separate virtual environments for development, staging, production, and so on, using Kubernetes namespaces. Every environment gets its own configuration and list of versioned applications, all stored in a Git repository. You can automatically promote new versions of applications between these environments if you follow GitOps practices. You can also promote code from one environment to another manually, and change or configure new environments as needed.

Extensions:

You can create extensions to Jenkins X. An extension is code that runs at specific times in the CI/CD process. An extension can also provide code that runs when the extension is installed or uninstalled, as well as before and after each pipeline.

Serverless Jenkins:

Instead of running the Jenkins web application, which continually consumes a lot of CPU and memory resources, you can run Jenkins only when you need it. During the past year, the Jenkins community has created a version of Jenkins that can run classic Jenkins pipelines via the command line with the configuration defined by code instead of the usual HTML forms.

Preview Environments:

Though a Preview Environment can be created manually, Jenkins X automatically creates one for each pull request. This provides a chance to see the effect of changes before merging them. Jenkins X also adds a comment to the pull request with a link to the preview for team members.

How does Jenkins X work?

The developer commits and pushes the change to the project’s Git repository.

Jenkins X is notified and runs the project’s pipeline in a Docker image that includes the project’s language and supporting frameworks.

The project pipeline builds and tests the project, then pushes its Helm chart to ChartMuseum and its Docker image to the registry.

The project pipeline creates a PR with changes needed to add the project to the staging environment.

Jenkins X automatically merges the PR to Master.

Jenkins X is notified and runs the staging pipeline.

The staging pipeline runs Helm, which deploys the environment, pulling Helm charts from Chart Museum and Docker images from the Docker registry. Kubernetes creates the project’s resources, typically a pod, service, and ingress.
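The promotion steps above can be pictured as a change to an environment's desired state that only takes effect once a pull request is merged. The following is a toy sketch of that idea, not Jenkins X code: the Environment and PullRequest classes, the function names, and the application name my-app are all hypothetical stand-ins for a Git repository and a Git hosting service.

```python
from dataclasses import dataclass, field


@dataclass
class Environment:
    """Hypothetical stand-in for an environment's Git repository."""
    name: str
    versions: dict = field(default_factory=dict)  # app name -> desired version


@dataclass
class PullRequest:
    """Hypothetical record of a proposed version change."""
    env: Environment
    app: str
    version: str
    merged: bool = False


def propose_promotion(env: Environment, app: str, version: str) -> PullRequest:
    # Promotion is only proposed here; the environment is unchanged until merge.
    return PullRequest(env=env, app=app, version=version)


def merge(pr: PullRequest) -> Environment:
    # Merging updates the desired state, which a pipeline would then deploy.
    pr.env.versions[pr.app] = pr.version
    pr.merged = True
    return pr.env


staging = Environment("staging")
pr = propose_promotion(staging, "my-app", "1.0.1")
print(staging.versions)  # still empty: the PR has not been merged
merge(pr)
print(staging.versions)
```

The point of the sketch is the ordering: the environment's recorded versions change only at merge time, which is what makes the Git history an audit trail of every promotion.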


What Are The Best DevOps Tools That Should Be Used In 2022?

Actually, that's a marketing stunt. Let me rephrase that: what are the best tools for developers, operators, and everyone in between in 2022? You can call it DevOps. I split them into categories, so let me read the list: IDEs, terminals, shell, packaging, Kubernetes distribution, serverless, GitOps, progressive delivery, infrastructure as code, programming language, cloud, logging, monitoring, deployment, security, dashboards, pipelines and workflows, service mesh, and backups. I will not go into much detail about each of those tools, since that would take hours, but I will provide links to videos, descriptions, or other useful information about each of the tools in this blog. Let's get going.

Let's start with IDEs. The tool you should be using, the absolute winner in all aspects, is Visual Studio Code. It is open source, it is free, and it has a massive community and a massive number of plugins. There is nothing you cannot do with Visual Studio Code. So, for IDEs, the clear winner is Visual Studio Code.

Next are terminals. Unlike many others who recommend iTerm or other terminals, I recommend you use the terminal that is baked into Visual Studio Code. It's absolutely awesome, you cannot go wrong, and you have everything in one place: you write your code, you write your manifests, you do whatever you're doing, and you have a terminal baked in. There is no need to use an external terminal.

Shell: the best shell you can use is zsh with Oh My Zsh. You will feel at home, and it offers a really great experience. If you're using Windows, install WSL (Windows Subsystem for Linux), and then install zsh and Oh My Zsh.

Next, packaging. How do we package applications today? Containers, containers, containers. Actually, we do not package containers; we package container images, and they are a standard now. It doesn't matter whether you're deploying to Kubernetes, directly to Docker, or to serverless; even most serverless solutions today allow you to run containers. That means you must (pay attention, I didn't say should) package your applications as container images, with few exceptions. If you're creating CLIs or desktop applications, package them in whatever is native for that operating system. That's the only exception. Everything else is container images, no matter where you're deploying. And how should you build those container images? With Docker Desktop if you're building locally, and you shouldn't be building locally. If you're building through CI/CD pipelines or any other means outside of your laptop, kaniko is the best solution to build container images today.

Next in line is the Kubernetes distribution, service, or platform. Which one should you use? That depends on where you're running your stuff. If it's in the cloud, use whatever your provider is offering; you're most likely not going to change providers because of a Kubernetes service. But if you're indifferent and can choose any provider to run your Kubernetes clusters, then GKE (Google Kubernetes Engine) is the best choice. It is ahead of everybody else. That difference is probably not sufficient for you to change your provider, but if you're undecided where to run it, Google Cloud is the place. If you're using on-prem servers, then probably the best solution is Rancher, unless you have very strict and complicated security requirements, in which case you should go with OpenShift. If you want operational simplicity, and simplicity in any form or way, go with Rancher; if you have tight security needs, OpenShift is the thing. Finally, if you want to run a Kubernetes cluster locally, then it's k3d. k3d is the best way to run a Kubernetes cluster locally. You can run a single cluster or multiple clusters, single node or multi-node, and it's lightning fast. It takes a couple of seconds to create a cluster and it uses a minimal amount of resources. It's awesome, try it out.

Serverless really depends on what type of serverless you want. If you want functions as a service, AWS Lambda is the way to go. They were probably the first ones to start, at least among the big providers, and they are leading that area, but only for functions as a service. If you want the containers-as-a-service flavor of serverless, and I think you should, then Google Cloud Run is the best option on the market today. Finally, if you would like to run serverless on-prem, then Knative, which is actually the engine behind Google Cloud Run anyway, is the way to go for serverless workloads in your own clusters.

GitOps: here I do not have a clear recommendation, because both Argo CD and Flux are awesome. They have some differences, and there are pros and cons for each, and I cannot make up my mind. It's like an arms race, you know, a cold war: as soon as one gets a cool feature, the other one gets it as well, and then the circle continues. Both of them are more or less equally good. You cannot go wrong with either.

Progressive delivery is in a similar situation. You can use Argo Rollouts or Flagger. You're probably going to choose one or the other depending on which GitOps solution you chose, because Argo Rollouts works very well with Argo CD and Flagger works exceptionally well with Flux. You cannot go wrong with either; you're most likely going to choose the one that belongs to the same family as the GitOps tool you chose previously.

Infrastructure as code has two winners. One is Terraform. Terraform is the leader of the market, it has the biggest community, it is stable, it has existed for a long time, and everybody is using it. You cannot go wrong with Terraform. But if you want to get a glimpse of a potential future (we don't know the future) with additional features, especially if you want something that is closer to Kubernetes and its ecosystem, then you should go with Crossplane. In my case, I'm combining both: I still have most of my workloads in Terraform, and I'm transitioning slowly to Crossplane when that makes sense.

For programming languages, it really depends on what you're doing. If you're working on a front end, it's JavaScript. There is nothing else in the world; everything is JavaScript, so don't even bother looking for something else. For everything else, Go is the way to go. That rhymes, right? Go is the language that everybody is using today. I mean, not everybody; a minority of us are using Go, but it is increasing in popularity greatly. Especially if you're working on microservices or smaller applications, the footprint of Go is very small and it is lightning fast. Just try it out if you haven't already. If for no other reason, you should put Go on your curriculum because it's all the hype, and for a very good reason. It has its problems, every language has its problems, but you should use it even if that's only for hobby projects.

Next in line is cloud. Which provider should you be using? I cannot answer that question. AWS is great, Azure is great, Google Cloud is great. If you want to save money at the expense of the catalog of offerings, stability, and so on, then go with Linode or DigitalOcean. Personally, when I can choose and I have to choose, I go with Google Cloud.

As for logging solutions, if you're in the cloud, go with whatever your cloud provider is giving you, as long as that is not too expensive for your budget. If you have to choose something outside of the offering of your cloud, Loki is awesome. It's very similar to Prometheus, it works well, and it has a low memory and CPU footprint. If you're choosing your own solution instead of going with whatever your provider is giving you, Loki is the way to go.

For monitoring, it's Prometheus. You have to have Prometheus. Even if you choose something else, you will have to have Prometheus on top of that something else, for the simple reason that many of the tools, frameworks, and applications assume that you're using Prometheus. Prometheus is the de facto standard, and you will use it even if you already decided to use something else, because it is unavoidable, and it's awesome at the same time.

For deployment mechanisms, packaging, and templating, I have two and I cannot make up my mind. I use Kustomize and I use Helm, and you should probably combine both because they have different strengths and weaknesses. If you're an operator and you're not tasked with empowering developers, then Kustomize is the better choice, no doubt. If you want to simplify the lives of developers who are not very proficient with Kubernetes, then Helm is the easiest option for them. It will not be the easiest for you, but for them, yes.

Next in line is security. For scanning, use Snyk. Snyk is a clear winner, at least today. For governance, legal requirements, compliance, and similar subjects, I recommend OPA Gatekeeper. It is the best choice we have today, even though that market is bound to explode and we will see many new solutions coming very soon.

Next in line are dashboards, and this was the easiest one for me to pick: k9s. Use k9s, especially if you like terminals. It's absolutely awesome, try it out. k9s is the best dashboard, at least where Kubernetes is concerned.

For pipelines and workflows, it really depends on how much work you want to invest in it yourself. If you want to roll up your sleeves and set it up yourself, it's either Argo Workflows combined with Argo Events, or Tekton combined with a few other tools. They are neck and neck; there are pros and cons for each, but right now there is no clear winner. Among the tools that require you to set them up properly, there is no other competition; those are the two choices you have now. If you would rather not think much about pipelines and just go with minimal effort and everything integrated, then I recommend Codefresh. I need to put a disclaimer here: I worked at Codefresh until a week ago, so you might easily see that I'm too subjective, and that might be true. I try not to be, but you never know.

Service mesh is in a similar situation to infrastructure as code. Most of the implementations today are with Istio; it is the de facto standard. But I believe that we are moving towards Linkerd being the dominant player, for a couple of reasons. The main one is that it is independently managed: it is in the CNCF and nobody really owns it. On top of that, Linkerd is more lightweight and easier to learn. It doesn't have all the features of Istio, but you likely do not need the features that are missing anyway. Finally, Linkerd is based on SMI (Service Mesh Interface), and that means you will be able to switch from Linkerd to something else if you choose to do so in the future. Istio has its own interface, which is incompatible with anything else.

Finally, the last category I have is backups. If you're using Kubernetes, and everybody is using Kubernetes today, right, use Velero. It is the best option we have today to create backups, and it works amazingly well, as long as you're using Kubernetes. If you're not using Kubernetes, then just zip it up and put it on a tape, as we were doing a long, long time ago.

That was the list of recommended tools, platforms, and frameworks that you should be using in 2022. I will make a similar blog in the future, and I expect you to tell me a couple of things. Which categories did I miss? What would you like me to include in the next blog of this kind? Which points do you disagree with? Let's discuss it. I might be wrong; most of the time I'm wrong, so please let me know if you disagree about any of the tools or categories that I mentioned. We are done.

Cloud Now Technologies is ranked among the top three DevOps services companies in the USA. Cloud Now Technologies delivers DevOps services at high velocity, with cost savings through accelerated software deployment.


Integrating ROSA Applications with AWS Services (CS221)

In today's rapidly evolving cloud-native landscape, enterprises are looking for scalable, secure, and fully managed Kubernetes solutions that work seamlessly with existing cloud infrastructure. Red Hat OpenShift Service on AWS (ROSA) meets that demand by combining the power of Red Hat OpenShift with the scalability and flexibility of Amazon Web Services (AWS).

In this blog post, we’ll explore how you can integrate ROSA-based applications with key AWS services, unlocking a powerful hybrid architecture that enhances your applications' capabilities.

📌 What is ROSA?

ROSA (Red Hat OpenShift Service on AWS) is a managed OpenShift offering jointly developed and supported by Red Hat and AWS. It allows you to run containerized applications using OpenShift while taking full advantage of AWS services such as storage, databases, analytics, and identity management.

🔗 Why Integrate ROSA with AWS Services?

Integrating ROSA with native AWS services enables:

Seamless access to AWS resources (like RDS, S3, DynamoDB)

Improved scalability and availability

Cost-effective hybrid application architecture

Enhanced observability and monitoring

Secure IAM-based access control using AWS IAM Roles for Service Accounts (IRSA)

🛠️ Key Integration Scenarios

1. Storage Integration with Amazon S3 and EFS

Applications deployed on ROSA can use AWS storage services for persistent and object storage needs.

Use Case: A web app storing images to S3.

How: Use OpenShift’s CSI drivers to mount EFS or access S3 through SDKs or CLI.

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: efs-pvc
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: efs-sc
  resources:
    requests:
      storage: 5Gi

2. Database Integration with Amazon RDS

You can offload your relational database requirements to managed RDS instances.

Use Case: Deploying a Spring Boot app with PostgreSQL on RDS.

How: Store DB credentials in Kubernetes secrets and use RDS endpoint in your app’s config.


SPRING_DATASOURCE_URL=jdbc:postgresql://<rds-endpoint>:5432/mydb
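One way to keep credentials out of the image is to inject them into the pod as environment variables from a Kubernetes Secret and assemble the datasource settings at startup. The following is a minimal sketch of that assembly step; the variable names DB_HOST, DB_PORT, DB_NAME, DB_USER, and DB_PASSWORD and the example host are assumptions for illustration, while the SPRING_DATASOURCE_* names follow Spring Boot's environment-variable binding.

```python
import os


def datasource_settings(env=os.environ) -> dict:
    """Build Spring datasource settings from injected environment variables.

    DB_HOST, DB_PORT, DB_NAME, DB_USER and DB_PASSWORD are hypothetical names
    that a Deployment could populate from a Kubernetes Secret.
    """
    host = env["DB_HOST"]             # the RDS endpoint
    port = env.get("DB_PORT", "5432")  # PostgreSQL default port
    name = env["DB_NAME"]
    return {
        "SPRING_DATASOURCE_URL": f"jdbc:postgresql://{host}:{port}/{name}",
        "SPRING_DATASOURCE_USERNAME": env["DB_USER"],
        "SPRING_DATASOURCE_PASSWORD": env["DB_PASSWORD"],
    }


# Example with placeholder values instead of a real Secret.
example_env = {
    "DB_HOST": "db.example.internal",
    "DB_NAME": "mydb",
    "DB_USER": "app",
    "DB_PASSWORD": "change-me",
}
print(datasource_settings(example_env)["SPRING_DATASOURCE_URL"])
```

Accessing a missing variable raises KeyError, so a misconfigured Secret fails fast at startup rather than at the first query.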

3. Authentication with AWS IAM + OIDC

ROSA supports IAM Roles for Service Accounts (IRSA), enabling fine-grained permissions for workloads.

Use Case: Granting a pod access to a specific S3 bucket.

How:

Create an IAM role with S3 access

Associate it with a Kubernetes service account

Use OIDC to federate access
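The three steps above come together in the IAM role's trust policy, which allows one specific service account to assume the role through the cluster's OIDC provider. The following is a minimal sketch that builds such a policy document; the account ID, OIDC provider host, namespace, and service account name are placeholders, and the function name is hypothetical.

```python
import json


def irsa_trust_policy(account_id: str, oidc_provider: str,
                      namespace: str, service_account: str) -> dict:
    """Build an IAM trust policy for a single Kubernetes service account.

    oidc_provider is the cluster's OIDC issuer without the https:// prefix.
    """
    return {
        "Version": "2012-10-17",
        "Statement": [{
            "Effect": "Allow",
            "Principal": {
                "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{oidc_provider}"
            },
            "Action": "sts:AssumeRoleWithWebIdentity",
            "Condition": {
                "StringEquals": {
                    # Restrict the role to one service account in one namespace.
                    f"{oidc_provider}:sub":
                        f"system:serviceaccount:{namespace}:{service_account}"
                }
            },
        }],
    }


# Placeholder values for illustration only.
policy = irsa_trust_policy("123456789012", "oidc.example.com/abc123",
                           "my-project", "s3-reader")
print(json.dumps(policy, indent=2))
```

The S3 permissions themselves go in a separate permissions policy attached to the same role; the trust policy only controls who may assume it.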

4. Observability with Amazon CloudWatch and Prometheus

Monitor your workloads using Amazon CloudWatch Container Insights or integrate Prometheus and Grafana on ROSA for deeper insights.

Use Case: Track application metrics and logs in a single AWS dashboard.

How: Forward logs from OpenShift to CloudWatch using Fluent Bit.

5. Serverless Integration with AWS Lambda

Bridge your ROSA applications with AWS Lambda for event-driven workloads.

Use Case: Triggering a Lambda function on file upload to S3.

How: Use EventBridge or S3 event notifications with your ROSA app triggering the workflow.
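On the Lambda side of this use case, the function receives an S3 event notification and reads the bucket and object key from each record. The following is a minimal sketch of such a handler, assuming the standard S3 notification structure; the bucket name and key in the sample event are placeholders, and the helper name uploaded_objects is hypothetical.

```python
from urllib.parse import unquote_plus


def uploaded_objects(event: dict) -> list[tuple[str, str]]:
    """Extract (bucket, key) pairs from an S3 event notification."""
    objects = []
    for record in event.get("Records", []):
        s3 = record.get("s3")
        if not s3:
            continue  # ignore records that are not S3 notifications
        bucket = s3["bucket"]["name"]
        # Object keys arrive URL-encoded, with spaces encoded as '+'.
        key = unquote_plus(s3["object"]["key"])
        objects.append((bucket, key))
    return objects


def lambda_handler(event, context):
    found = uploaded_objects(event)
    for bucket, key in found:
        print(f"new upload: s3://{bucket}/{key}")
    return {"processed": len(found)}


# Trimmed sample event with placeholder names.
sample_event = {
    "Records": [
        {"s3": {"bucket": {"name": "example-uploads"},
                "object": {"key": "images/my+photo.png"}}}
    ]
}
print(lambda_handler(sample_event, None))
```

Keeping the parsing in its own function makes it easy to exercise without deploying anything to AWS.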

🔒 Security Best Practices

Use IAM Roles for Service Accounts (IRSA) to avoid hardcoding credentials.

Use AWS Secrets Manager or OpenShift Vault integration for managing secrets securely.

Use AWS PrivateLink to keep traffic within AWS private network boundaries.

🚀 Getting Started

To start integrating your ROSA applications with AWS:

Enable ROSA in the AWS Management Console and deploy your cluster with the rosa CLI

Set up AWS CLI & IAM permissions

Enable the AWS services needed (e.g., RDS, S3, Lambda)

Create Kubernetes Secrets and ConfigMaps for service integration

Use ServiceAccounts, RBAC, and IRSA for secure access

🎯 Final Thoughts

ROSA is not just about running Kubernetes on AWS—it's about unlocking the true hybrid cloud potential by integrating with a rich ecosystem of AWS services. Whether you're building microservices, data pipelines, or enterprise-grade applications, ROSA + AWS gives you the tools to scale confidently, operate securely, and innovate rapidly.

If you're interested in hands-on workshops, consulting, or ROSA enablement for your team, feel free to reach out to HawkStack Technologies – your trusted Red Hat and AWS integration partner.

💬 Let's Talk!

Have you tried ROSA yet? What AWS services are you integrating with your OpenShift workloads? Share your experience or questions in the comments!

For more details, visit www.hawkstack.com


IBM unveils updates to key Watson tools and applications

Recognizing that organizations are slow to adopt AI, due in part to rising data complexities, IBM announced new innovations that further advance its Watson Anywhere approach to scaling AI across any cloud, and a host of clients who are leveraging the strategy to bring AI to their data, wherever it resides. Rob Thomas, General Manager, IBM Data and AI, said, "We collaborate with clients every day and around the world on their data and AI challenges, and this year we tackled one of the big drawbacks to scaling AI throughout the enterprise – vendor lock-in. When we introduced the ability to run Watson on any cloud, we opened up AI for clients in ways never imagined. Today, we pushed that even further adding even more capabilities to our Watson products running on Cloud Pak for Data." Increasing data complexity, as well as data preparation, skills shortages, and a lack of data culture are combining to slow AI adoption at a time when interest in AI continues to climb. Between 2018 and 2019, organizations that have deployed artificial intelligence (AI) grew from 4% to 14%, according to Gartner's 2019 CIO Agenda survey. Those figures contrast with the rising awareness of the value of AI. According to the 2019 MIT Sloan Management Review and Boston Consulting Group study, Winning with AI, 9 out of 10 respondents agree that AI represents a business opportunity for their company. Adding to growing enterprise complexities, a 2018 IBM Institute for Business Value study said that 76% of organizations surveyed reported that they are already using at least two to 15 hybrid clouds, and 98 percent forecast they will be using multiple hybrid clouds within three years. Anil Bhasker, Business Unit Leader, Analytics Platform, IBM India/South Asia, said, "We have been collaborating with Indian organizations across sectors to help them fast track to Chapter 2 of their Digital Reinvention, which is characterized by AI being embedded everywhere in the business. 
Our clients are recognizing AI's capabilities to deliver intangible outcomes such as enhanced customer & employee satisfaction, stronger brand equity, etc., in addition to the traditional Return on Investment (RoI) approach. With Watson Anywhere, organizations are able to innovate and scale AI on any cloud instead of being locked into a single vendor, thus enabling them to maximize the value delivered by AI. It's an approach that brings AI to wherever the data resides and help Indian companies unearth hidden insights, automate processes and ultimately drive business performance." The innovations announced today are designed to help organizations overcome the barriers to AI. From detecting 'drift' in AI models to recognizing nuances in the human voice, these new capabilities can be run across any cloud via IBM's Cloud Pak for Data platform to begin easily connecting their vast data stores to AI. As evidence of the growing appeal of this approach to enable AI to run on any cloud, IBM announced a number of clients that are leveraging Watson across their enterprises to unearth hidden insights, automate mundane tasks and help improve overall business performance. Companies like multinational professional services firm KPMG, and Air France-KLM, are leveraging Watson apps, or building their own AI with Watson tools, to facilitate their AI journeys. Steve Hill, Global and US Head of Innovation at KPMG, said, "IBM's strategy for developing AI tools that enable clients to run AI wherever their data is, is exactly the reason we turned to OpenScale – we needed multicloud scalability in order to give clients the kind of transparency and explainability we were talking about. Supporting the client's environment, whatever it may be, reflects the understanding that IBM has not only about AI, but about the expanding enterprise." 
KPMG, the multinational professional services network and long-time IBM alliance partner, for years has integrated the latest cognitive and automation capabilities into services across its businesses from governance, risk, and compliance to taxes and accounting. Earlier this year KPMG turned to IBM to collaborate on a new service that would provide KPMG clients greater governance and explainability of their AI, no matter where that data resides, no matter what cloud and no matter what AI platform the company was using. KPMG developed the KPMG AI in Control solution leveraging the Watson OpenScale platform, to give clients the ability to continuously evaluate their machine learning and AI algorithms, models and data for greater confidence in the outcomes. Last month, KPMG teamed with IBM to release a joint offering of this solution to clients called KPMG AI in Control with Watson OpenScale. And to help accelerate customer service and improve the passenger experience, Air France-KLM and its customer service team developed a voice assistant called MIA (My Interactive Assistant), which uses IBM Watson Assistant with Voice Interaction. Air France-KLM collaborated with IBM to develop the voice assistant to improve the customer experience by reducing file processing time. Since the beginning of the pilot in July in a single country, MIA has responded to 4,500 calls from people needing additional information about their flights or their travel plans. MIA asks the customer for his reservation reference number (PNR) and extracts from this PNR all information relating to the passenger, including name, flight number and telephone number. If needed, the voice assistant can quickly pass the call to a human agent who can take over. The agent will already have all the necessary information on the screen in the background and will therefore be positioned to resolve the request. By design, the higher the number of requests handled by MIA, the more intelligent it will be over time. 
Other use cases are also under study.

New Capabilities Come to Watson Apps and Tools

IBM rolled out an array of new features and functionality to several of its key products today, including the following:

Watson OpenScale – With rising data privacy regulation and growing concern for how AI algorithms are reaching their results, bias detection and explainability are becoming critical. Last year, IBM launched OpenScale, the first AI platform of its kind to do just that – provide organizations the ability to look for bias and govern their AI and query it to understand how it arrived at its results. With such insights, clients can gain greater confidence in their AI and in their results. Today we announced a new capability called Drift Detection, which detects when and how far a model "drifts" from its original parameters. It does this by comparing production and training data and the resulting predictions it creates. When a user-defined drift threshold is exceeded, an alert is generated. Drift Detection not only provides greater information about the accuracy of models, but it also simplifies, and hence speeds, model re-training.

Watson Assistant – Building on IBM's leading position in enterprise AI assistants, IBM today announced several new key features to the conversational AI product that allow users to deploy, train and continuously improve their virtual assistants quickly on the cloud of their choice. For example, the new Watson Assistant for Voice Interaction is designed to help clients easily integrate an AI-powered assistant into their IVR systems. With this capability, customers are able to ask questions in natural language. Watson Assistant can now recognize the nuances of the way people speak and will fast-track the caller to the most appropriate answer. Clients can also blend texting and voice at the same time, allowing instantaneous information exchange. IBM also announced that Watson Assistant is now integrated with IBM Cloud Pak for Data, which enables companies to run an assistant in any environment – on premises, or on any private, public, hybrid, or multi-cloud.

Watson Discovery – IBM announced several key updates to Watson Discovery, the company's premier AI search product that leverages machine learning and natural language processing to help clients find data from across their enterprises. New to the platform is Content Miner, which allows for the searching of vast datasets for specific content types, such as text and images. A new simplified setup format helps non-technical users get up and running quickly, while a new "guided experience" dynamically recommends next steps in configuring projects. All of this results in a more agile data discovery process.

Cloud Pak for Data – IBM advances its first-of-a-kind, integrated data analytics platform with key new features and support. The platform, which has supported Red Hat OpenShift, one of the leading Kubernetes-based container orchestration platforms, since its launch 18 months ago, is now certified on OpenShift. Full certification brings added confidence to clients, who know that all the components came from a supported source, that container images contain no known vulnerabilities and, most importantly, that the containers running throughout are compatible across Red Hat Enterprise Linux environments, regardless of the cloud, and whether private, public or hybrid. In addition to certification, this latest version now comes with a host of capabilities standard as part of the base platform. Among the new capabilities are Db2 Event Store, for storing and analyzing more than 250 billion events per day in real time, and Watson Machine Learning, equipped with AutoAI. AutoAI is IBM's innovative automated model building program that enables data scientists and non-data scientists alike to build machine learning (ML) models with ease. As its name suggests, AutoAI automates tedious and complicated ML tasks, including data prep, model selection, feature engineering and hyperparameter optimization, to truly speed clients' adoption of AI. Now these tools come standard with Cloud Pak for Data to be used and scaled across any hybrid multicloud environment. In addition, Cloud Pak for Data now features open source governance in the base platform, enabling users for the first time to set policy for, and govern the use of, open source tools and programs within the enterprise to enable more efficient model building, testing and deployment. To empower developers to take advantage of the IBM Cloud Pak for Data platform, IBM also announced the Cloud Pak for Data Developer Hub. Here, developers have step-by-step tutorials, code patterns, ongoing support and information on in-person workshops taking place in their area for hands-on labs.

OpenPages with Watson – IBM today also announced new features and capabilities to OpenPages with Watson in version 8.1. This governance, risk and compliance (GRC) platform helps clients as they set and manage operational risk, policy and compliance, financial controls management, IT governance, and internal audits. Version 8.1 comes integrated with a new rules engine, new intuitive views, visualizations, advanced workflow features, and a personalized workspace, all of which are designed to enable users to be more productive and effective in managing their risk. One result of the additional engagement and automation features is a more risk-aware culture that empowers more people to participate in managing important risk and compliance activities.

Video

youtube

Deploy application in openshift using container images

#openshift #containerimages #openshift4 #containerization

Deploy a container app using OpenShift Container Platform running on-premises.

https://www.youtube.com/channel/UCnIp4tLcBJ0XbtKbE2ITrwA?sub_confirmation=1&app=desktop

About:

00:00 Deploy application in openshift using container images

In this course we will learn how to deploy an application from container images to an OpenShift / OpenShift 4 online cluster in two ways. The first method uses the web console to deploy the application from Docker container images. The second logs in with oc, the OpenShift command line tool (here on Windows), and deploys the container image to the cluster through oc commands. OpenShift / OpenShift 4 is a cloud-based container platform for building, deploying, and testing applications in the cloud. In the next videos we will explore OpenShift 4 in detail.

Commands Used:

Image to be deployed: openshiftkatacoda/blog-django-py

oc get all -o name :- Returns all the resources we have in the project.

oc describe route/blog-django-py :- Gives the details of the route that has been created. Through this route or URL we can access the application externally.

oc get all --selector app=blog-django-py -o name :- Selects only the resources with the label app=blog-django-py. By default OpenShift automatically applies the label app=blog-django-py to all the resources of the application.

oc delete all --selector app=blog-django-py :- Deletes the application and the related resources having the label app=blog-django-py.

oc get all -o name :- Gets the list of all the available resources.

oc new-app --search openshiftkatacoda/blog-django-py :- Searches for images matching the given name without deploying anything.

oc new-app openshiftkatacoda/blog-django-py :- Creates / deploys the image in OpenShift.

oc new-app openshiftkatacoda/blog-django-py --name blog :- Creates / deploys the image in OpenShift with the custom name blog.

oc expose service/blog-django-py :- Exposes the service to the external world so that it can be accessed globally.

oc get route/blog-django-py :- Gives the URL of the application that we have deployed.

https://www.facebook.com/codecraftshop/ https://t.me/codecraftshop/

Please like and subscribe to my YouTube channel "CODECRAFTSHOP". Follow us on Facebook | Instagram | Twitter at @CODECRAFTSHOP.

Please watch: "Install hyperv on windows 10 - how to install, setup & enable hyper v on windows hyper-v" https://www.youtube.com/watch?v=KooTCqf07wk
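The deploy, expose, and clean-up commands listed in this post can be sketched as a small Python helper that only builds the command lines, so they can be reviewed before anything touches a cluster. This is a minimal sketch, not part of the video: the function names deploy_commands and cleanup_command are hypothetical, and it assumes the application name defaults to the last path segment of the image name, as the blog-django-py example in the post suggests.

```python
import shlex

IMAGE = "openshiftkatacoda/blog-django-py"


def deploy_commands(image, name=None):
    """Return the oc commands that deploy an image and expose it.

    Assumption: without --name, the app is named after the last path
    segment of the image (tag or digest stripped), as in the post.
    """
    default_name = image.rsplit("/", 1)[-1].split("@")[0].split(":")[0]
    app = name or default_name
    new_app = ["oc", "new-app", image]
    if name:
        # --name sets a custom application name
        new_app += ["--name", name]
    return [
        new_app,
        ["oc", "expose", f"service/{app}"],  # create an external route
        ["oc", "get", f"route/{app}"],       # show the resulting URL
    ]


def cleanup_command(app):
    """Return the oc command that deletes everything labelled app=<app>."""
    return ["oc", "delete", "all", "--selector", f"app={app}"]


if __name__ == "__main__":
    # Print the commands instead of running them.
    for cmd in deploy_commands(IMAGE) + [cleanup_command("blog-django-py")]:
        print(shlex.join(cmd))
```

To actually run the commands, each list could be passed to subprocess.run on a workstation where oc is installed and logged in; printing first keeps the sketch safe to try anywhere.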

#Deploy container app using OpenShift Container Platform running on-premises#openshift deploy docker image cli#openshift deploy docker image command line#how to deploy docker image in openshift#how to deploy image in openshift#deploy image in openshift#deploy image into openshift#Deploy application in openshift using container images#openshift container platform#openshift tutorial#red hat openshift#openshift#kubernetes#openshift 4#red hat#redhat openshift online

Text

Logging is a useful mechanism for both application developers and cluster administrators. It helps with monitoring and troubleshooting of application issues. Containerized applications by default write to standard output. These logs are stored in the local ephemeral storage and are lost as soon as the container is removed. To solve this problem, logging to persistent storage is often used. The logs can then be routed to a central logging system such as Splunk or Elasticsearch.

In this blog, we will look into using a Splunk universal forwarder to send data to Splunk. The universal forwarder contains only the essential tools needed to forward data and is designed to run with minimal CPU and memory. Therefore, it can easily be deployed as a sidecar container in a Kubernetes cluster. The universal forwarder has configurations that determine which data is sent and where. Once data has been forwarded to the Splunk indexers, it is available for searching.

The figure below shows a high level architecture of how Splunk works:

Benefits of using splunk universal forwarder

It can aggregate data from different input types.

It supports automatic load balancing. This improves resiliency by buffering data when necessary and sending it to available indexers.

The deployment server can be managed remotely. All the administrative activities can be done remotely.

Splunk Universal Forwarders provide a reliable and secure data collection process.

Splunk Universal Forwarders scale flexibly.

Setup Pre-requisites:

The following are required before we proceed:

A working Kubernetes or OpenShift Container Platform cluster

The kubectl or oc command line tool installed on your workstation, with administrative rights on the cluster

A working Splunk cluster with two or more indexers

STEP 1: Create a persistent volume

We will first deploy the persistent volume if it does not already exist. The configuration file below uses a storage class named cephfs. You will need to change your configuration accordingly. The following guides can be used to set up a Ceph cluster and deploy a storage class:

Install Ceph 15 (Octopus) Storage Cluster on Ubuntu

Ceph Persistent Storage for Kubernetes with Cephfs

Define the persistent volume claim:

$ vim pvc_claim.yaml

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: cephfs-claim
spec:
  accessModes:
    - ReadWriteMany
  storageClassName: cephfs
  resources:
    requests:
      storage: 1Gi

Create the persistent volume claim:

$ kubectl apply -f pvc_claim.yaml

Look at the PersistentVolumeClaim:

$ kubectl get pvc cephfs-claim
NAME           STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
cephfs-claim   Bound    pvc-19c8b186-699b-456e-afdc-bcbaba633c98   1Gi        RWX            cephfs         3s

STEP 2: Deploy an app and mount the persistent volume

Next, we will deploy our application. Notice that we mount the path "/var/log" to the persistent volume. This is the data we need to persist.

$ vim test-pod.yaml

apiVersion: v1
kind: Pod
metadata:
  name: test-app
spec:
  containers:
    - name: app
      image: centos
      command: ["/bin/sh"]
      args: ["-c", "while true; do echo $(date -u) >> /var/log/test.log; sleep 5; done"]
      volumeMounts:
        - name: persistent-storage
          mountPath: /var/log
  volumes:
    - name: persistent-storage
      persistentVolumeClaim:
        claimName: cephfs-claim

Deploy the application:

$ kubectl apply -f test-pod.yaml

STEP 3: Create a configmap

We will then deploy a configmap that will be used by our container. The configmap has two crucial configurations:

inputs.conf: This contains configurations on which data is forwarded.

outputs.conf: This contains configurations on where the data is forwarded to.

You will need to change the configmap configurations to suit your needs. Comments are kept on their own lines because Splunk .conf files do not support inline comments.

$ vim configmap.yaml

kind: ConfigMap
apiVersion: v1
metadata:
  name: configs
data:
  outputs.conf: |-
    [indexAndForward]
    index = false
    [tcpout]
    defaultGroup = splunk-uat
    forwardedindex.filter.disable = true
    indexAndForward = false
    [tcpout:splunk-uat]
    # Splunk indexer IP and Port
    server = 172.29.127.2:9997
    useACK = true
    autoLB = true
  inputs.conf: |-
    # Where data is read from
    [monitor:///var/log/*.log]
    disabled = false
    sourcetype = log
    # This index should already be created on the splunk environment
    index = microservices_uat

Deploy the configmap:

$ kubectl apply -f configmap.yaml

STEP 4: Deploy the Splunk universal forwarder

Finally, we will deploy an init container alongside the Splunk universal forwarder container. The init container copies the configmap contents into the directory that the Splunk universal forwarder container reads its configuration from.

$ vim splunk_forwarder.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: splunkforwarder
  labels:
    app: splunkforwarder
spec:
  replicas: 1
  selector:
    matchLabels:
      app: splunkforwarder
  template:
    metadata:
      labels:
        app: splunkforwarder
    spec:
      initContainers:
        - name: volume-permissions
          image: busybox
          imagePullPolicy: IfNotPresent
          command: ['sh', '-c', 'cp /configs/* /opt/splunkforwarder/etc/system/local/']
          volumeMounts:
            - mountPath: /configs
              name: configs
            - name: confs
              mountPath: /opt/splunkforwarder/etc/system/local
      containers:
        - name: splunk-uf
          image: splunk/universalforwarder:latest
          imagePullPolicy: IfNotPresent
          env:
            - name: SPLUNK_START_ARGS
              value: --accept-license
            - name: SPLUNK_PASSWORD
              value: "*****"
            - name: SPLUNK_USER
              value: splunk
            - name: SPLUNK_CMD
              value: add monitor /var/log/
          volumeMounts:
            - name: container-logs
              mountPath: /var/log
            - name: confs
              mountPath: /opt/splunkforwarder/etc/system/local
      volumes:
        - name: container-logs
          persistentVolumeClaim:
            claimName: cephfs-claim
        - name: confs
          emptyDir: {}
        - name: configs
          configMap:
            name: configs
            defaultMode: 0777

Deploy the container:

$ kubectl apply -f splunk_forwarder.yaml

Verify that the Splunk universal forwarder pods are running:

$ kubectl get pods | grep splunkforwarder
splunkforwarder-7ff865fc8-4ktpr   1/1   Running   0   76s

STEP 5: Check if logs are written to splunk

Log in to Splunk and run a search to verify that logs are streaming in. You should be able to see your logs.
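Since the prerequisites call for two or more indexers, the forwarder configuration from STEP 3 can also be generated rather than typed by hand. The sketch below is illustrative only: the helper names are hypothetical, the second indexer address 172.29.127.3:9997 is invented for the example, and it assumes that the server setting in outputs.conf accepts a comma-separated list of host:port pairs. The small checker flags setting lines with a trailing "#", which is a simple heuristic rather than a full parser.

```python
def render_outputs_conf(group, servers):
    """Build outputs.conf text for one tcpout group."""
    lines = [
        "[tcpout]",
        f"defaultGroup = {group}",
        f"[tcpout:{group}]",
        "# Splunk indexers as host:port pairs",
        "server = " + ", ".join(servers),
        "useACK = true",
    ]
    return "\n".join(lines) + "\n"


def render_inputs_conf(path, index, sourcetype="log"):
    """Build inputs.conf text that monitors one path."""
    lines = [
        f"[monitor://{path}]",
        "disabled = false",
        f"sourcetype = {sourcetype}",
        f"index = {index}",
    ]
    return "\n".join(lines) + "\n"


def inline_comment_lines(conf_text):
    """Return non-comment lines that carry a trailing ' #' (heuristic)."""
    flagged = []
    for line in conf_text.splitlines():
        stripped = line.strip()
        if not stripped or stripped.startswith("#"):
            continue  # blank lines and full-line comments are fine
        if " #" in stripped:
            flagged.append(stripped)
    return flagged


if __name__ == "__main__":
    # The second address is a made-up example of an additional indexer.
    outputs = render_outputs_conf(
        "splunk-uat", ["172.29.127.2:9997", "172.29.127.3:9997"]
    )
    inputs = render_inputs_conf("/var/log/*.log", "microservices_uat")
    print(outputs)
    print(inputs)
    print(inline_comment_lines(outputs + inputs))
```

The rendered strings can be pasted under the outputs.conf and inputs.conf keys of the configmap; running the checker on hand-edited files is a quick way to catch a comment that would otherwise end up inside a value.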

Text

Getting started with OpenShift Java S2I

Introduction

The OpenShift Java S2I image, which allows you to automatically build and deploy your Java microservices, has just been released and is now publicly available. This article describes how to get started with the Java S2I container image, but first let’s discuss why having a Java S2I image is so important.

Why Java S2I?

The Java S2I image enables developers to automatically build,…

Text

What is Kubernetes?

Kubernetes (also known as k8s or “kube”) is an open source container orchestration platform that automates many of the manual processes involved in deploying, managing, and scaling containerized applications.

In other words, you can cluster together groups of hosts running Linux containers, and Kubernetes helps you easily and efficiently manage those clusters.

Kubernetes clusters can span hosts across on-premises, public, private, or hybrid clouds. For this reason, Kubernetes is an ideal platform for hosting cloud-native applications that require rapid scaling, like real-time data streaming through Apache Kafka.

Kubernetes was originally developed and designed by engineers at Google. Google was one of the early contributors to Linux container technology and has talked publicly about how everything at Google runs in containers. (This is the technology behind Google’s cloud services.)

Google generates more than 2 billion container deployments a week, all powered by its internal platform, Borg. Borg was the predecessor to Kubernetes, and the lessons learned from developing Borg over the years became the primary influence behind much of Kubernetes technology.

Fun fact: The 7 spokes in the Kubernetes logo refer to the project’s original name, “Project Seven of Nine.”

Red Hat was one of the first companies to work with Google on Kubernetes, even prior to launch, and has become the second leading contributor to the Kubernetes upstream project. Google donated the Kubernetes project to the newly formed Cloud Native Computing Foundation (CNCF) in 2015.

What can you do with Kubernetes?

The primary advantage of using Kubernetes in your environment, especially if you are optimizing app dev for the cloud, is that it gives you the platform to schedule and run containers on clusters of physical or virtual machines (VMs).
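To make "schedule and run containers on clusters of machines" more concrete, here is a toy placement routine in Python. It is a deliberately simplified sketch and not how the real Kubernetes scheduler is implemented: the Node class, the schedule function, and the "pick the node with the most free CPU" rule are all assumptions made for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    """A machine in the cluster with its remaining capacity."""
    name: str
    cpu_free: float   # CPU cores still available
    mem_free: int     # memory still available, in MiB
    pods: list = field(default_factory=list)


def schedule(pod_name, cpu, mem, nodes):
    """Place a pod on a node that can fit it; return the node name or None.

    Toy rule: filter out nodes without enough free CPU or memory, then
    choose the one with the most free CPU (memory breaks ties).
    """
    candidates = [n for n in nodes if n.cpu_free >= cpu and n.mem_free >= mem]
    if not candidates:
        return None  # nothing fits; a real cluster would leave the pod pending
    best = max(candidates, key=lambda n: (n.cpu_free, n.mem_free))
    best.cpu_free -= cpu
    best.mem_free -= mem
    best.pods.append(pod_name)
    return best.name


if __name__ == "__main__":
    cluster = [Node("node-a", 2.0, 4096), Node("node-b", 4.0, 8192)]
    for pod, cpu, mem in [("web", 1.0, 1024), ("api", 1.0, 1024), ("db", 8.0, 2048)]:
        print(pod, "->", schedule(pod, cpu, mem, cluster))
```

The point of the sketch is the division of labor: the person deploying only states what a container needs, and the platform decides which machine runs it.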

More broadly, it helps you fully implement and rely on a container-based infrastructure in production environments. And because Kubernetes is all about automation of operational tasks, you can do many of the same things other application platforms or management systems let you do—but for your containers.

Developers can also create cloud-native apps with Kubernetes as a runtime platform by using Kubernetes patterns. Patterns are the tools a Kubernetes developer needs to build container-based applications and services.

With Kubernetes you can:

Orchestrate containers across multiple hosts.