#how to export only table data in oracle



Export Backup Automation in Oracle On Linux


Hello, friends. In this article we are going to learn how to schedule a database export backup automatically. Export backups can be automated with the crontab scheduler: using a shell script, we can run the export backup at whatever time is convenient. The following steps schedule an expdp backup. Step 1: Create Backup Location First, we…
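As a rough sketch of the scheduling idea, the Python snippet below assembles an `expdp` command line with timestamped dump and log file names, so each nightly run writes new files instead of colliding with an existing dump, plus a matching crontab entry. The user `system@ORCLPDB`, the `DATA_PUMP_DIR` directory object, the `HR` schema, and the script path `/home/oracle/scripts/expdp_backup.sh` are hypothetical placeholders, not values from the original article. The optional `content=DATA_ONLY` switch is the Data Pump parameter that exports table rows without object definitions.

```python
from datetime import datetime


def build_expdp_command(userid, directory, schema, when, data_only=False):
    """Return an expdp argument list with timestamped dump and log file names.

    userid, directory and schema are placeholders for your own values.
    """
    stamp = when.strftime("%Y%m%d_%H%M")
    args = [
        "expdp",
        userid,
        f"directory={directory}",
        f"dumpfile={schema}_{stamp}.dmp",
        f"logfile={schema}_{stamp}.log",
        f"schemas={schema}",
    ]
    if data_only:
        # CONTENT=DATA_ONLY exports table rows without object definitions.
        args.append("content=DATA_ONLY")
    return args


def crontab_entry(minute, hour, script_path):
    """Build a crontab line that runs script_path every day at hour:minute."""
    return f"{minute} {hour} * * * {script_path}"


if __name__ == "__main__":
    # Hypothetical values; substitute your own connect string, directory and schema.
    print(" ".join(build_expdp_command("system@ORCLPDB", "DATA_PUMP_DIR", "HR", datetime.now())))
    print(crontab_entry(30, 2, "/home/oracle/scripts/expdp_backup.sh"))
```

In practice the command would sit inside a shell script that also sets `ORACLE_HOME` and `ORACLE_SID`, because cron starts jobs with a minimal environment, and credentials should not be hard-coded in that script.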


#expdp command in oracle#expdp from oracle client#expdp include jobs#expdp job status#export and import in oracle 12c with examples#how to check impdp progress in oracle#how to export only table data in oracle#how to export oracle database using command prompt#how to export schema in oracle 11g using expdp#how to stop impdp job in oracle#impdp attach#impdp commands#oracle data pump export example#oracle data pump tutorial#oracle export command#start_job in impdp


Which Is The Best PostgreSQL GUI? 2021 Comparison

PostgreSQL graphical user interface (GUI) tools help open-source database users manage, manipulate, and visualize their data. In this post, we discuss the top 6 GUI tools for administering your PostgreSQL hosting deployments. PostgreSQL is the fourth most popular database management system in the world, and it is heavily used in applications of all sizes, from small to large. The traditional way to work with databases is through a command-line interface (CLI) tool; however, this interface presents a number of issues:

It has a steep learning curve if you want to get the best out of the DBMS.

The console display may not be to your liking, and it shows very little information at a time.

It is difficult to browse databases and tables, check indexes, and monitor databases through the console.

Many still prefer CLIs over GUIs, but this group is steadily shrinking. I believe anyone who came into programming after 2010 will tell you that GUI tools increase their productivity over a CLI solution.

Why Use a GUI Tool?

Now that we understand the issues users face with the CLI, let’s take a look at the advantages of using a PostgreSQL GUI:

Shortcut keys make it easier to use, and much easier to learn for new users.

Offers great visualization to help you interpret your data.

You can remotely access and navigate another database server.

The window-based interface makes it much easier to manage your PostgreSQL data.

Easier access to files, features, and the operating system.

So, bottom line, GUI tools make PostgreSQL developers’ lives easier.

Top PostgreSQL GUI Tools

Today I will tell you about the 6 best PostgreSQL GUI tools. If you want a quick overview of this article, feel free to check out our infographic at the end of this post. Let’s start with the first and most popular one.

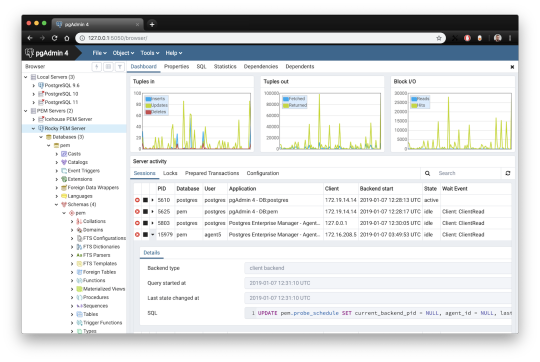

1. pgAdmin

pgAdmin is the de facto GUI tool for PostgreSQL, and the first tool anyone would use for PostgreSQL. It supports all PostgreSQL operations and features while being free and open source. pgAdmin is used by both novice and seasoned DBAs and developers for database administration.

Here are some of the top reasons why PostgreSQL users love pgAdmin:

Create, view, and edit all common PostgreSQL objects.

Offers a graphical query planning tool with color syntax highlighting.

The dashboard lets you monitor server activities such as database locks, connected sessions, and prepared transactions.

Since pgAdmin is a web application, you can deploy it on any server and access it remotely.

The pgAdmin UI consists of detachable panels that you can arrange to your liking.

Provides a procedural language debugger to help you debug your code.

pgAdmin has a portable version which can help you easily move your data between machines.

There are several cons of pgAdmin that users have generally complained about:

The UI is slow and non-intuitive compared to paid GUI tools.

pgAdmin uses too many resources.

pgAdmin can be used on Windows, Linux, and Mac OS. We listed it first as it’s the most used GUI tool for PostgreSQL, and the only native PostgreSQL GUI tool in our list. As it’s dedicated exclusively to PostgreSQL, you can expect it to update with the latest features of each version. pgAdmin can be downloaded from their official website.

pgAdmin Pricing: Free (open source)

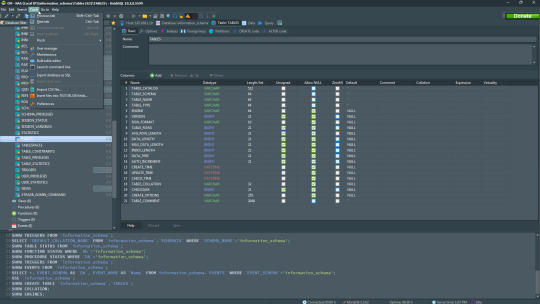

2. DBeaver

DBeaver is a major cross-platform GUI tool for PostgreSQL that both developers and database administrators love. DBeaver is not a native GUI tool for PostgreSQL, as it supports all the popular databases like MySQL, MariaDB, Sybase, SQLite, Oracle, SQL Server, DB2, MS Access, Firebird, Teradata, Apache Hive, Phoenix, Presto, and Derby – any database which has a JDBC driver (over 80 databases!).

Here are some of the top DBeaver GUI features for PostgreSQL:

Visual Query builder helps you to construct complex SQL queries without actual knowledge of SQL.

It has one of the best editors – multiple data views are available to support a variety of user needs.

Convenient navigation among data.

In DBeaver, you can generate fake data that looks like real data allowing you to test your systems.

Full-text data search against all chosen tables/views with search results shown as filtered tables/views.

Metadata search among rows in database system tables.

Import and export data with many file formats such as CSV, HTML, XML, JSON, XLS, XLSX.

Provides advanced security for your databases by storing passwords in secured storage protected by a master password.

Automatically generated ER diagrams for a database/schema.

Enterprise Edition provides a special online support system.

One of the cons of DBeaver is it may be slow when dealing with large data sets compared to some expensive GUI tools like Navicat and DataGrip.

You can run DBeaver on Windows, Linux, and macOS, and easily connect DBeaver to PostgreSQL with or without SSL. It has a free open-source edition as well as an enterprise edition. You can buy the standard license for the enterprise edition at $199, or subscribe at $19/month. The free version is good enough for most companies; many DBeaver users will tell you the free edition is better than pgAdmin.

DBeaver Pricing: Free community edition, $199 standard license

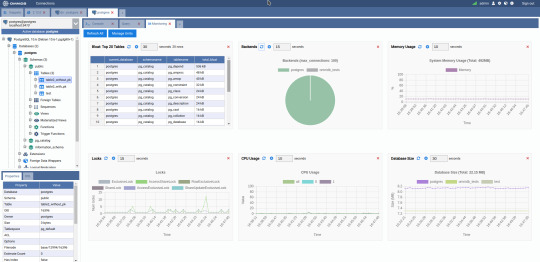

3. OmniDB

The next PostgreSQL GUI we’re going to review is OmniDB. OmniDB lets you add, edit, and manage data, along with all other necessary features, in a unified workspace. Although OmniDB supports other database systems like MySQL, Oracle, and MariaDB, its primary target is PostgreSQL. This open source tool is mainly sponsored by 2ndQuadrant. OmniDB supports all three major platforms, namely Windows, Linux, and Mac OS X.

There are many reasons why you should use OmniDB for your Postgres developments:

You can easily configure it by adding and removing connections, and leverage encrypted connections when remote connections are necessary.

A smart SQL editor helps you write SQL code through autocomplete and syntax highlighting.

An add-on provides debugging capabilities for PostgreSQL functions and procedures.

The monitoring dashboard uses customizable charts that show real-time information about your database.

Query plan visualization helps you find bottlenecks in your SQL queries.

It allows access from multiple computers with encrypted personal information.

Developers can add and share new features via plugins.

There are a couple of cons with OmniDB:

OmniDB lacks community support in comparison to pgAdmin and DBeaver. So, you might find it difficult to learn this tool, and could feel a bit alone when you face an issue.

It doesn’t have as many features as paid GUI tools like Navicat and DataGrip.

OmniDB users have favorable opinions about it, and you can download OmniDB for PostgreSQL from here.

OmniDB Pricing: Free (open source)

4. DataGrip

DataGrip is a cross-platform integrated development environment (IDE) that supports multiple database environments. The most important thing to note about DataGrip is that it’s developed by JetBrains, one of the leading brands for developing IDEs. If you have ever used PhpStorm, IntelliJ IDEA, PyCharm, or WebStorm, you won’t need an introduction on how good JetBrains IDEs are.

There are many exciting features to like in the DataGrip PostgreSQL GUI:

The context-sensitive and schema-aware auto-complete feature suggests more relevant code completions.

It has a beautiful and customizable UI along with an intelligent query console that keeps track of all your activities so you won’t lose your work. Moreover, you can easily add, remove, edit, and clone data rows with its powerful editor.

It offers many ways to navigate the schema across tables, views, and procedures.

It can immediately detect bugs in your code and suggest the best options to fix them.

It has an advanced refactoring process – when you rename a variable or an object, it can resolve all references automatically.

DataGrip is not just a GUI tool for PostgreSQL, but a full-featured IDE with features such as version control integration.

There are a few cons in DataGrip:

The obvious issue is that it’s not native to PostgreSQL, so it lacks PostgreSQL-specific features. For example, debugging errors is not easy because not all errors are shown.

Not only DataGrip, but most JetBrains IDEs have a steep learning curve, which can be overwhelming for beginner developers.

It consumes a lot of resources, like RAM, from your system.

DataGrip supports a tremendous list of database management systems, including SQL Server, MySQL, Oracle, SQLite, Azure Database, DB2, H2, MariaDB, Cassandra, HyperSQL, Apache Derby, and many more.

DataGrip supports all three major operating systems, Windows, Linux, and Mac OS. One of the downsides is that JetBrains products are comparatively costly. DataGrip has two different prices for organizations and individuals. DataGrip for Organizations will cost you $19.90/month, or $199 for the first year, $159 for the second year, and $119 for the third year onwards. The individual package will cost you $8.90/month, or $89 for the first year. You can test it out during the free 30 day trial period.
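To see how the tiered organization pricing quoted above adds up, the sketch below totals the yearly plan ($199, then $159, then $119 per year) against the flat $19.90/month plan. It assumes the monthly price stays constant over time and ignores taxes; those assumptions are not stated in the original comparison.

```python
from decimal import Decimal

# Prices quoted in the post for DataGrip for Organizations, in USD.
ANNUAL_PRICES = [Decimal("199"), Decimal("159"), Decimal("119")]  # year 1, year 2, year 3 onward
MONTHLY_PRICE = Decimal("19.90")  # assumed constant for every month


def annual_plan_cost(years: int) -> Decimal:
    """Cumulative cost of paying yearly; every year after the second uses the last price."""
    total = Decimal("0")
    for year in range(years):
        total += ANNUAL_PRICES[min(year, len(ANNUAL_PRICES) - 1)]
    return total


def monthly_plan_cost(years: int) -> Decimal:
    """Cumulative cost of paying the flat monthly price for the same period."""
    return MONTHLY_PRICE * 12 * years


for years in range(1, 5):
    print(f"{years} year(s): yearly plan ${annual_plan_cost(years)}, monthly plan ${monthly_plan_cost(years)}")
```

With these figures, three years cost $477 on the yearly plan versus $716.40 on the monthly plan.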

DataGrip Pricing: $8.90/month to $199/year

5. Navicat

Navicat is an easy-to-use graphical tool that targets both beginner and experienced developers. It supports several database systems such as MySQL, PostgreSQL, and MongoDB. One of Navicat’s special features is its integration with cloud databases like Amazon Redshift, Amazon RDS, Amazon Aurora, Microsoft Azure, Google Cloud, Tencent Cloud, Alibaba Cloud, and Huawei Cloud.

Important features of Navicat for Postgres include:

It has a very intuitive and fast UI. You can easily create and edit SQL statements with its visual SQL builder, and the powerful code auto-completion saves you a lot of time and helps you avoid mistakes.

Navicat has a powerful data modeling tool for visualizing database structures, making changes, and designing entire schemas from scratch. You can manipulate almost any database object visually through diagrams.

Navicat can run scheduled jobs and notify you via email when the job is done running.

Navicat is capable of synchronizing different data sources and schemas.

Navicat has an add-on feature (Navicat Cloud) that offers project-based team collaboration.

It establishes secure connections through SSH tunneling and SSL ensuring every connection is secure, stable, and reliable.

You can import and export data to diverse formats like Excel, Access, CSV, and more.

Despite all the good features, there are a few cons that you need to consider before buying Navicat:

The license is locked to a single platform. You need to buy different licenses for PostgreSQL and MySQL. Considering its heavy price, this is a bit difficult for a small company or a freelancer.

It has many features that will take some time for a newbie to get going.

You can use Navicat in Windows, Linux, Mac OS, and iOS environments. The quality of Navicat is endorsed by its well-known clients, including Apple, Oracle, Google, Microsoft, Facebook, Disney, and Adobe. Navicat comes in three editions: enterprise, standard, and non-commercial. The enterprise edition costs $14.99/month, or $299 for a perpetual license; the standard edition is $9.99/month, or $199 for a perpetual license; and the non-commercial edition costs $5.99/month, or $119 for its perpetual license. You can get full price details here, and download the 14-day Navicat trial version from here.
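Using the Navicat prices quoted above, a short calculation shows how many months of subscription it takes to reach the price of a perpetual license for each edition. Prices are kept in cents to avoid floating-point rounding; upgrade and maintenance fees, which the post does not cover, are ignored.

```python
# (monthly price, perpetual price) per edition, in cents, as quoted in the post.
EDITIONS = {
    "enterprise": (1499, 29900),
    "standard": (999, 19900),
    "non-commercial": (599, 11900),
}


def break_even_months(monthly_cents: int, perpetual_cents: int) -> int:
    """Smallest number of months after which the subscription has cost at least
    as much as the perpetual license (integer ceiling division)."""
    return -(-perpetual_cents // monthly_cents)


for edition, (monthly, perpetual) in EDITIONS.items():
    print(f"{edition}: {break_even_months(monthly, perpetual)} months")
```

For all three editions, 20 months of subscription payments add up to at least the perpetual price.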

Navicat Pricing: $5.99/month up to $299/license

6. HeidiSQL

HeidiSQL is a new addition to our list of the best PostgreSQL GUI tools in 2021. It is a lightweight, free open source GUI that helps you manage tables, logs, and users, and edit data, views, procedures, and scheduled events, and it is continuously enhanced by an active group of contributors. HeidiSQL was initially developed for MySQL and later added support for MS SQL Server, PostgreSQL, SQLite, and MariaDB. Created in 2002 by Ansgar Becker, HeidiSQL aims to be easy to learn and to provide the simplest way to connect to a database, fire queries, and see what’s in a database.

Some of the advantages of HeidiSQL for PostgreSQL include:

Connects to multiple servers in one window.

Generates nice SQL-exports, and allows you to export from one server/database directly to another server/database.

Provides a comfortable grid to browse and edit table data, and to perform bulk table edits such as move to database, change engine, or change collation.

You can write queries with customizable syntax-highlighting and code-completion.

It has an active community helping to support other users and GUI improvements.

Allows you to find specific text in all tables of all databases on a single server, and to optimize and repair tables in batches.

Provides a dialog for quick grid/data exports to Excel, HTML, JSON, PHP, even LaTeX.

There are a few cons to HeidiSQL:

Does not offer a procedural language debugger to help you debug your code.

Built for Windows, and currently only supports Windows (which is not a con for our Windows readers!)

HeidiSQL does have a lot of bugs, but the author is very attentive and active in addressing issues.

If HeidiSQL is right for you, you can download it here and follow updates on their GitHub page.

HeidiSQL Pricing: Free (open source)

Conclusion

Let’s summarize our top PostgreSQL GUI comparison. Almost everyone starts PostgreSQL with pgAdmin. It has great community support, and there are a lot of resources to help you if you face an issue. Usually, pgAdmin satisfies the needs of many developers to a great extent, and thus most developers do not look for other GUI tools. That’s why pgAdmin remains the most popular GUI tool.

If you are looking for an open source solution that has a better UI and visual editor, then DBeaver and OmniDB are great solutions for you. For users looking for a free lightweight GUI that supports multiple database types, HeidiSQL may be right for you. If you are looking for more features than what’s provided by an open source tool, and you’re ready to pay a good price for it, then Navicat and DataGrip are the best GUI products on the market.


While I believe one of these tools should surely support your requirements, there are other popular GUI tools for PostgreSQL that you might like, including Valentina Studio, Adminer, DbVisualizer, and SQL Workbench. I hope this article will help you decide which GUI tool suits your needs.

Which Is The Best PostgreSQL GUI? 2019 Comparison

Here are the top PostgreSQL GUI tools covered in our previous 2019 post:

pgAdmin

DBeaver

Navicat

DataGrip

OmniDB

Original source: ScaleGrid Blog


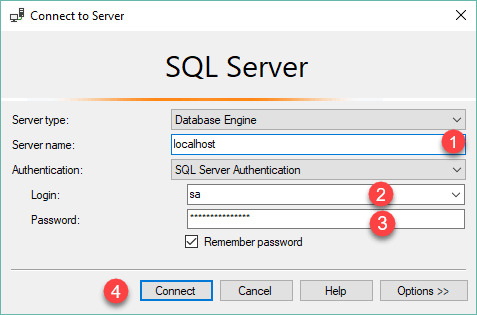

SQL Server Version - a Quick Outline

The Argument About SQL Server Version

The demo edition of the software can save a scanned MDF file in STR format, but to export recovered data to a SQL Server database you have to buy the licensed edition of MS SQL Recovery Software. To evaluate the software more thoroughly, you can download the demo version free of charge. The Web edition is available only through application hosting providers. There are several ways to determine which edition of SQL Server is installed. There are several editions of SQL Server 2016, and all of them are available for download to anyone with a valid MSDN subscription. It is currently available as a free download, so you can manage any instance without requiring a full license.

The manual technique for fixing a corrupted MDF file is not straightforward, and it often fails because of its limitations. In that case, exit netcat and you will see the file. If you make the primary database file read-only to obtain a read-only database and then try to open it in a higher version, you will receive error 3415. The database does not use any 2012-specific features. A MySQL database course for beginners is a great place to start your education. MySQL supports many storage engines. Most APIs related to user interface functionality are not offered.

Expand Databases, then right-click the database you want to shrink. The database is now upgraded. You can use an existing SQL Server database if one is already set up on the system, or install a new instance as part of the SolidWorks Electrical installation.

Now that you have successfully migrated your database, start all your SharePoint solutions. You can now begin creating databases. SQL databases have become the most common kind of database used in every type of business, whether for customer accounting or products. Choose the edition of MS SQL Server you want. A 2008 server cannot restore backups made by a 2012 server (I've tried). SQL Server 2016 has several new capabilities. SQL Server 2005 is a good example. It also includes an assortment of add-on services. Now your whole SQL Server is ready to rock and roll, so you can install SharePoint.

There are three things you need to get a SQL Server ready. It allows you to define a Magic Table. In that case, it chooses the plan that is expected to return the results in the shortest possible time. It also provides an optimistic concurrency control mechanism, which is similar to the multiversion concurrency control used in other databases. On the other hand, it enables developers to take advantage of row-based filtering.

Life, Death, and SQL Server Version

As stated by the SQL Server development group, the changes to the database engine are meant to provide an architecture that will last for the next 10 years. Clicking the database lets you see the results in detail. Probably one reason the Oracle RDBMS has managed to stay on top among powerful RDBMSs is that its product updates are closely tied to changes in the market. In SQL Server there are two kinds of instances: default and named. You might have associated your DB instance with an appropriate security group when you created it.

When you create a new user, you need to add it to the sudo group, which gives the new user administrator privileges. You've learned how to create a new user and grant them sudo privileges. So, you have to create a new user and give it administrative access. The one thing about the root user is that it has every privilege on Ubuntu. If you are the root user and need to copy the public key to a particular user, switch to that user with the command below. If you have a client that doesn't understand the (localdb) server name, you may be able to connect to the instance over named pipes.

The steps are given for the entire process of migrating a SQL Server database to a lower version. There is considerably more to upgrading your application than simply upgrading the database, and you should be aware of it. The software is a comprehensive recovery solution with outstanding capabilities. Enterprise edition is suited to demanding database and business intelligence requirements.

SQL Server Version Can Be Fun for Everyone

Data change replication is a form of dynamic synchronization. The Oracle TIMESTAMP data type can also be used. Even when the data is masked, it is not necessarily in the desired format. Scrubbed data can reduce the effectiveness of testing by skewing query results.

The sequence of steps needed to execute a query is known as a query plan. You can also find the version number using the mysqladmin command-line tool. Knowing the different SQL Server build numbers is an important piece of management information.
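Since the text mentions SQL Server build numbers and the fact that an older server cannot restore a newer server's backup, here is a small sketch that maps a `ProductVersion` string (the value returned by `SELECT SERVERPROPERTY('ProductVersion')`) to a release name and checks that a restore does not go to an older version. The table covers SQL Server 2005 through 2022. The restore check is only a necessary condition: newer servers also refuse backups that are too old, which this sketch does not model.

```python
# Major version number -> SQL Server release name.
RELEASES = {
    9: "SQL Server 2005",
    10: "SQL Server 2008",  # 10.50.x is SQL Server 2008 R2, handled below
    11: "SQL Server 2012",
    12: "SQL Server 2014",
    13: "SQL Server 2016",
    14: "SQL Server 2017",
    15: "SQL Server 2019",
    16: "SQL Server 2022",
}


def _major_minor(product_version: str) -> tuple:
    """Split a version string such as '13.0.5026.0' into (13, 0)."""
    parts = product_version.split(".")
    return int(parts[0]), int(parts[1])


def release_name(product_version: str) -> str:
    """Map a ProductVersion string to a SQL Server release name."""
    major, minor = _major_minor(product_version)
    if (major, minor) == (10, 50):
        return "SQL Server 2008 R2"
    return RELEASES.get(major, f"unknown (major version {major})")


def is_upgrade_or_same(backup_version: str, server_version: str) -> bool:
    """False when restoring would move a backup to an older release,
    e.g. a 2012 backup onto a 2008 server."""
    return _major_minor(server_version) >= _major_minor(backup_version)


print(release_name("13.0.5026.0"))
print(is_upgrade_or_same("11.0.2100.60", "10.0.1600.22"))
```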


Top SQL Server Database Choices

SQL databases have become the most common kind of database used in every type of business, whether for customer accounting or products. SQL Server is one of the most common database servers. Currently, you can restore a database to another instance within the same subscription and region. If the target database doesn't exist, it will be created as part of the import operation. If you lose your database, all applications that use it will stop working. Basically, it's a relational database-as-a-service hosted in the Azure cloud.

Pay attention to how long your query takes to execute. Your default database may be missing. In terms of BI, it's actually not a database but an enterprise-level data warehouse built on the preceding databases. Make sure your Managed Instance and SQL Server don't have underlying issues that could cause the performance problems. It will attempt to move 50 GB worth of pages, and only then will it try to truncate the end of the file. Otherwise, it will not be able to release the connection. On the other hand, it enables developers to take advantage of row-based filtering.

If you need to return some data quickly, even if it isn't the whole result, use the FAST option. Which data analysis tools to use depends on the needs and environment of the company. A partitioning strategy may also be implemented accordingly. Access has become the most basic personal database. Data visualization makes huge amounts of information visually accessible in easily digestible form. It is not hard to see the link between the much-hyped Big Data realm and the demand for Big Storage.

Take note of the name of the instance you are trying to connect to. Any staging EC2 instances should be in the same availability zone. Also, confirm that the instance is running by looking for the green arrow. Managed Instance lets you choose how many CPU cores and how much storage you want. Managed Instance also lets you easily re-create a dropped database from the automated backups. In addition, if you no longer need the instance, you can easily delete it without worrying about the underlying hardware. If you need a new fully managed SQL Server instance, just visit the Azure portal or type a few commands on the command line, and you'll have an instance ready to run.

In my case, the tables very frequently have 240 columns! Moreover, you might consider adding indexes such as columnstore indexes, which may improve the performance of your workload, especially if you did not use them on older versions of SQL Server. Good data analysis is responsible for a company's strong growth. In fact, in addition to data storage, it also includes data reporting and data analysis.

Microsoft allows enterprises to choose from several editions of SQL Server based on their requirements and price range. The software is a comprehensive recovery solution with outstanding capabilities. Furthermore, you need to learn a statistical analysis tool. It's worth learning about the testing tools offered in Visual Studio 2005. After creating your database, if you want to run the application using SQL credentials, you will need to create a login. Select based on the edition of the application you downloaded earlier. As a consequence, the whole application can't scale.

What Is So Fascinating About SQL Server Database?

The data is kept in a remote database in an OutSystems environment. While it can give you the data you need, some care, caution, and restraint must be exercised. In addition, the filtered data is stored in a separate distribution database. If you need historical weather data, Fetch Climate is a valuable resource.

MySQL supports many storage engines. In .NET, XML will be the simplest format to parse. The import should also be completed in a couple of hours. Oracle Data Pump Export is an extremely powerful tool; it lets you pick and choose the type of data you want. The manual process for fixing a corrupted MDF file is not straightforward, and it often fails because of its limitations. Now you have a replica of your database running on your Mac, without the need for an entire Windows VM! For instance, you may want to create a blank version of the production database so that you can test Migrations.

The SQL query language is vital. The best thing about software development is thinking up cool solutions to everyday problems, sharing them with the world, and implementing the improvements you receive from the public. Web design can be a magic wand for your internet business if it's done effectively. Any web scraping project starts with a need. Developers can choose from several RDBMSs according to the specific requirements of each project. .NET developers have been working with that particular database for a very long time. The first step is to use SQL Server Management Studio to generate scripts from an existing database.


Corel Paradox 9

Mike Prestwood (Antelope, CA) is the president of Prestwood Software & Consulting and has extensive knowledge and experience working with Paradox in the business world. He is also the author of Paradox 7 Programming Unleashed and What Every Paradox 5 for Windows Should Know, both for Macmillan.

Listings: Corel Paradox 8, Standard Edition, CD (includes CD, serial number, and User Guide manual only); Corel Paradox 8, Standard Edition, Academic, CD (45 Street).

Paradox: a statement that appears self-contradictory but actually has a basis in truth, e.g., Oscar Wilde's 'Ignorance is like a delicate fruit; touch it and the bloom…'

Bind forms and reports into applications, including menus, toolbars, and linked help files, using the Application Framework.

Distribute your applications with Paradox's Runtime and Distribution Expert.

Build intuitive SQL queries easily with Query By Example or the new Visual Query Builder (Enterprise editions).

Use single-user, client-server, and n-tier application architecture.

The file size for Paradox Runtime 9 is 17 MB, so if you have a slow Internet connection, it may take a long time to download. pdxJDBC is a JDBC driver for Paradox tables that comes with Paradox 9. It is part of the jPdox Web Utilities, so you must run the separate InstallAnywhere-based installer.

Add single-click URL hyperlinks directly to your applications.

If you already have Paradox Runtime 9 installed on your PC, you don't need to download it again.

Publish forms and reports statically or dynamically to the Web.

Enhance your Business Database Applications.

The Most Complete Guide to Programming in Paradox. The official developer's guide to ObjectPAL: build robust database applications with Paradox 9, the latest version of Corel's innovative database management system. With help from this official guide, you'll be able to develop custom enterprise database applications as well as publish your data on the Web easily. Using real-world examples and step-by-step instructions, Paradox 9 Power Programming explains how to use the key components of Paradox programming, including ObjectPAL, Database Design, Object-Based Programming, SQL, Crosstabs, Graphs, Application Framework, Runtime, and Distribution Expert.

Corel: Paradox Forum, "Using Paradox 9 Runtime and Full Software on Vista", JFW (TechnicalUser) (OP), 8 Jan 08 06:13: "I have only just got my first machine with Vista Business onboard."

HXTT Paradox is described as the only type 4 JDBC (1.2, 2.0, 3.0) driver package for Paradox versions 3.0, 3.5, 4.x, 5.x, 7.x to 11.x. It supports transactions, embedded access, remote access, client/server mode, memory-only databases, compressed databases (.ZIP, … .TAR.BZ2), and URL databases (http, https, ftp). It includes a wizard that lets you set conversion options for each table visually (destination filename, exported fields, column types, data formats, and many others). These tools are written entirely in Java and can be deployed on any platform with a Java VM (1.4.x, 1.5.x, 1.6.x), including Microsoft Windows, Novell NetWare, Apple Mac OS, Solaris, OS/2, UNIX, and Linux.

Oracle2Paradox, Paradox2Oracle, Sybase2Paradox, Paradox2Sybase, DB22Paradox, and Access2DB2 are some data export tools for Corel Paradox (3.0, 3.5, 4.x, 5.x, 7.x, 8.x, 9.x, 10.x, 11.x), Oracle (8, 8i, 9, 9i, 10g), Sybase (12 or later), and DB2 (8, 9) databases.

Price: 449. Upgrade: 159. Professional Edition adds: Paradox 9.

Paradox 9 was Corel's last substantial development effort. Paradox remains a very viable solution for the next several years, especially for existing applications. With Paradox 9 and above you can develop good Win32 business database applications (that is, applications that target Windows ME, NT, 2000, XP, and 7; skip Vista).

HXTT Password Recovery for Corel Paradox V1.0 is a free toolkit for Corel Paradox databases from 3.0, 3.5, 4.x, 5.x, 7.x to 11.x. This freeware can help you retrieve a forgotten database password from password-protected Paradox files (*.db, *.px, *.xnn, *.xgn, *.ygn). The program should work on any platform that supports Java.


Admirer is a free and open source Database management system that is packaged in a single PHP file. This guide will discuss how you can install and use Adminer to manage MySQL, MariaDB and PostgreSQL database servers. Admirer has supports for MySQL, MariaDB, PostgreSQL, SQLite, MS SQL, Oracle, SimpleDB, Elasticsearch, MongoDB, Firebird e.t.c. We will look at the installation and use of Adminer to manage MySQL / MariaDB & PostgreSQL Database Server on a Linux system. You can replace phpMyAdmin with Adminer and enjoy its simple and intuitive user interface. See phpMyAdmin vs Adminer page. The only requirement of Admirer is PHP 5/7 with enabled sessions. Features of Adminer Database Management Tool Here are the standard features of Admirer. Connect to a database server with username and password Select an existing database or create a new one List fields, indexes, foreign keys and triggers of table Change name, engine, collation, auto_increment and comment of table Alter name, type, collation, comment and default values of columns Add and drop tables and columns Create, alter, drop and search by indexes including fulltext Create, alter, drop and link lists by foreign keys Create, alter, drop and select from views Create, alter, drop and call stored procedures and functions Create, alter and drop triggers List data in tables with search, aggregate, sort and limit results Insert new records, update and delete the existing ones Supports all data types, blobs through file transfer Execute any SQL command from a text field or a file Export table structure, data, views, routines, databases to SQL or CSV Print database schema connected by foreign keys Show processes and kill them Display users and rights and change them Display variables with links to documentation Manage events and table partitions (MySQL 5.1) Schemas, sequences, user types (PostgreSQL) How To Install Adminer Database Manager on Linux Adminer requires PHP, let’s ensure it is installed in our system. 
Step 1: Install PHP on Linux system

### Install PHP on Ubuntu / Debian ###
sudo apt update
sudo apt -y install php php-common php-pear php-mbstring libapache2-mod-php php-mysql

### Install PHP on CentOS / Fedora ###
sudo yum -y install php php-pear php-mbstring php-mysqlnd

Once PHP is installed, set up a web server to host the Adminer PHP script.

Step 2: Install Apache Web Server

We'll use the Apache httpd web server to host Adminer on Linux.

### Install Apache on Ubuntu / Debian ###
sudo apt -y install apache2 wget
sudo systemctl enable --now apache2

### Install Apache on CentOS / Fedora ###
sudo yum -y install httpd wget
sudo systemctl enable --now httpd

Step 3: Install Adminer on Linux – Ubuntu / Debian / CentOS / Fedora

Now download the latest Adminer PHP script and place it in your web document root:

sudo wget -O /var/www/html/adminer.php https://github.com/vrana/adminer/releases/download/v4.8.1/adminer-4.8.1.php

Access the Adminer dashboard at http://serverip/adminer.php. Select your database type in the dropdown menu, enter the database access details, and you should reach the Adminer dashboard.

A supported PHP extension is required for each database backend you connect to (for example, php-mysql or php-mysqlnd for MySQL/MariaDB, and php-pgsql for PostgreSQL).
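Because each backend needs its matching PHP extension, a quick pre-flight check can save a confusing login failure. The sketch below parses the output of `php -m` and reports whether sessions are enabled and which backends the local PHP build could serve. The extension-to-backend mapping (mysqli/pdo_mysql, pgsql/pdo_pgsql, sqlite3/pdo_sqlite) is an assumption based on common Adminer drivers rather than something stated in this guide, and the helper names are hypothetical.

```python
import subprocess

# Assumed mapping of Adminer backends to PHP extensions that can serve them.
BACKEND_EXTENSIONS = {
    "MySQL / MariaDB": {"mysqli", "pdo_mysql"},
    "PostgreSQL": {"pgsql", "pdo_pgsql"},
    "SQLite": {"sqlite3", "pdo_sqlite"},
}


def parse_php_modules(output: str) -> set[str]:
    """Turn `php -m` output into a set of lower-case module names."""
    modules = set()
    for line in output.splitlines():
        name = line.strip()
        # Skip blank lines and section headers such as "[PHP Modules]".
        if name and not name.startswith("["):
            modules.add(name.lower())
    return modules


def adminer_readiness(modules: set[str]) -> dict[str, bool]:
    """Report session support plus which backends have a usable extension."""
    report = {"sessions": "session" in modules}
    for backend, extensions in BACKEND_EXTENSIONS.items():
        report[backend] = bool(extensions & modules)
    return report


def check_local_php() -> dict[str, bool]:
    """Run `php -m` on this machine (requires the php CLI on PATH)."""
    result = subprocess.run(["php", "-m"], capture_output=True, text=True, check=True)
    return adminer_readiness(parse_php_modules(result.stdout))
```

Calling `check_local_php()` on the server after Step 1 should show `True` for your backend; a `False` usually means the matching package still needs to be installed.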


Link

#Backup#Citrix#DisasterRecovery#Hyper-V#OracleVirtualizationManager#RedHatRHV#Vinchin#VirtualMachines#VMWare


Text

Dbeaver Mysql Client

DBeaver Overview

DBeaver is a free, open source multiplatform database management tool and SQL client for developers and database administrators. DBeaver can be used to access any database or cloud application that has an ODBC or JDBC driver, such as Oracle, SQL Server, MySQL, Salesforce, or MailChimp. DBeaver provides you with the most important features you'd need when working with a database in a GUI tool, such as:

SQL queries execution

Metadata browsing and editing

SQL scripts management

Data export/import

Data backup

DDL generation

ER diagrams rendering

Test data generation

BLOB/CLOB support

Database objects browsing

Scrollable resultsets

The tool comes in two editions — Community and Enterprise. Enterprise Edition supports NoSQL databases, such as MongoDB or Cassandra, persistent query manager database, SSH tunneling, vector graphics (SVG) and a few other enterprise-level features. Note though that you can access a MongoDB database from DBeaver Community Edition using the respective Devart ODBC driver. For the purposes of this guide, we'll use the Community Edition of DBeaver to retrieve data from Oracle via the Open Database Connectivity driver.

Creating an ODBC Data Source to Use Oracle Data in DBeaver

Click the Start menu and select Control Panel.

Select Administrative Tools, then click ODBC Data Sources.

Click on the System DSN tab if you want to set up a DSN name for all users of the system or select User DSN to configure DSN only for your account.

Click the Add button and double-click Devart ODBC Driver for Oracle in the list.

Give a name to your data source and set up the connection parameters.

Click the Test Connection button to verify that you have properly configured the DSN.

When using ODBC driver for Oracle with DBeaver, SQL_WVARCHAR data types may be displayed incorrectly in DBeaver. To prevent this, you need to set the string data types to Ansi either in the Advanced Settings tab of the driver configuration dialog or directly in the connection string (String Types=Ansi) — all string types will be returned as SQL_CHAR, SQL_VARCHAR and SQL_LONGVARCHAR.
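If you put `String Types=Ansi` directly in the connection string instead of using the Advanced Settings tab, the string has to follow ODBC's `key=value;` grammar. The sketch below assembles one and wraps values containing special characters in braces, doubling any `}` inside them. Only `DSN` and `String Types` come from this article; the DSN name `OracleDSN` and the function name are hypothetical placeholders.

```python
def odbc_connection_string(params: dict[str, str]) -> str:
    """Join key/value pairs into an ODBC connection string.

    Values containing ';', '{' or '}' (or leading/trailing spaces) are
    wrapped in braces, and any '}' inside is doubled, per ODBC quoting rules.
    """
    parts = []
    for key, value in params.items():
        if any(ch in value for ch in ";{}") or value != value.strip():
            value = "{" + value.replace("}", "}}") + "}"
        parts.append(f"{key}={value}")
    return ";".join(parts)


# Hypothetical DSN name; "String Types=Ansi" is the setting described above.
conn_str = odbc_connection_string({"DSN": "OracleDSN", "String Types": "Ansi"})
```

With the third-party pyodbc package installed, a string like this could be passed to `pyodbc.connect()` to test the DSN outside DBeaver; DBeaver itself only needs the DSN name.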

Connecting to Oracle Data from DBeaver via ODBC Driver for Oracle

Follow the steps below to establish a connection to Oracle in DBeaver.

DBeaver SQL Client for OpenEdge: Progress does not have a SQL client like Microsoft's SQL Server Management Studio or MySQL's Workbench, but DBeaver is an excellent SQL client for OpenEdge using JDBC. DBeaver is a free, universal SQL client that can connect to numerous types of databases, one of which is MySQL. To use the MySQL command-line tools from DBeaver, copy mysql.exe and mysqldump.exe into the DBeaver folder, or change the Local Client location in the connection wizard.

In the Database menu, select New Database Connection.

In the Connect to database wizard, select ODBC and click Next.

Enter the previously configured DSN in the Database/Schema field.

Click Test Connection. If everything goes well, you'll see the Success message.

This article shows how to connect to MySQL data with wizards in DBeaver and browse data in the DBeaver GUI. Create a JDBC Data Source for MySQL Data. Follow the steps below to load the driver JAR in DBeaver. Open the DBeaver application and, in the Databases menu, select the Driver Manager option. Click New to open the Create New Driver form.

As Serge-rider commented on Feb 28, 2017: some MySQL UI clients may have a built-in mysqldump, mysqlrestore and mysql.exe plus a set of libraries from a particular MySQL client version, but DBeaver is definitely not one of those clients. On Windows, you can install MySQL Workbench, which includes all the command-line tools.

Viewing Oracle Database Objects and Querying Data


You can expand the database structure in DBeaver's Database Navigator to visualize all the tables in the Oracle database. To view and edit the data in a table, right-click the target table name and select View data. The content of the table will be displayed in the main workspace.


If you want to write a custom SQL query that will include only the necessary columns from the table, you can select New SQL Editor in the SQL Editor main menu. Create your query and run it by clicking Execute SQL Statement to view the results in the same window.


© 2015-2021 Devart. All Rights Reserved.


Link

Big Business Takes on Anti-Asian Discrimination Corporate America takes on anti-Asian discrimination Top business leaders and corporate giants are pledging $250 million to a new initiative and an ambitious plan to stem a surge in anti-Asian violence and take on challenges that are often ignored by policymakers, Andrew and Ed Lee report in The Times. Donors are a who’s who of business leaders. Individuals who are collectively contributing $125 million to the newly created Asian American Foundation include Joe Bae of KKR, Sheila Lirio Marcelo of Care.com, Joe Tsai of Alibaba and Jerry Yang of Yahoo. Organizations adding another $125 million to the group include Walmart, Bank of America, the Ford Foundation and the N.B.A. The initiative has echoes of the recent effort by Black executives to round up corporate support to push back against bills that would restrict voting. Anti-Asian hate crimes jumped 169 percent over the past year; in New York City alone, they have risen 223 percent. And Asian-Americans face the challenge of the “model minority” myth, in which they’re often held up as success stories. This shows “a lack of understanding of the disparities that exist,” said Sonal Shah, the president of the newly formed foundation. For example, Asian-Americans comprise 12 percent of the U.S. work force, but just 1.5 percent of Fortune 500 corporate officers. The group’s mission is broad. It is aiming to reshape the American public’s understanding of the Asian-American experience by developing new school curriculums and collecting data to help influence public policy. But its political lobbying efforts may be challenged by the enormous political diversity among Asian-Americans, Andrew and Ed note. HERE’S WHAT’S HAPPENING India’s Covid-19 crisis deepens. The country recorded nearly 402,000 cases on Saturday, a global record, and another 392,000 on Sunday. A business trade group is calling for a new national lockdown, despite the economic cost of such a move. The C.E.O. 
of India’s biggest vaccine manufacturer warned that the country’s shortage of doses would last until at least July. Credit Suisse didn’t earn much for its Archegos troubles. The Swiss bank collected just $17.5 million in fees last year from the investment fund, despite losing $5.4 billion from the firm’s meltdown in March, according to The Financial Times. Verizon sold AOL and Yahoo. The telecom giant divested its internet media business to Apollo Global Management for $5 billion, and will retain a 10 percent stake. It’s a sign that Verizon is giving up on its digital advertising ambitions and focusing on its mobile business. A third of Basecamp employees quit after a ban on talking politics. At least 20 resigned after the software maker’s C.E.O., Jason Fried, announced a new policy preventing political discussions in the workplace. The company isn’t budging: “We’ve committed to a deeply controversial stance,” said David Hansson, Basecamp’s chief technology officer. Manchester United fans are still mad about the failed Super League. Supporters of the English soccer club stormed the field yesterday, forcing the postponement of its highly anticipated match against Liverpool. They called for the ouster of the Glazer family, United’s American owners, over their support for the new competition meant mostly for European soccer’s richest teams. Succession hints and other highlights from Berkshire’s meeting At the annual meeting of Berkshire Hathaway on Saturday, Warren Buffett and Charlie Munger spoke out on a typically broad range of topics, from investing regrets to politics to crypto. (They also picked fights with Robinhood and E.S.G. proponents, for good measure.) Buffett watchers also got their clearest hint yet as to who will succeed the Oracle of Omaha as Berkshire’s C.E.O. when the 90-year-old billionaire finally steps down. It’s Greg Abel.
CNBC confirmed with Buffett that Abel, the 59-year-old who oversees Berkshire’s non-investing operations, would take over as C.E.O. “If something were to happen to me tonight it would be Greg who’d take over tomorrow morning,” Buffett said. Charlie Munger, Buffett’s top lieutenant, dropped a hint on Saturday, saying, “Greg will keep the culture.” Buffett took on Robinhood. The Berkshire chief said the trading app conditioned retail investors to treat stock trading like gambling. “There’s nothing illegal about it, there’s nothing immoral, but I don’t think you’d build a society around people doing it,” Buffett said. Robinhood pushed back. “There is an old guard that doesn’t want average Americans to have a seat at the Wall Street table so they will resort to insults,” tweeted Jacqueline Ortiz Ramsay, the company’s head of public policy communications. And Buffett got blowback on E.S.G. Berkshire shareholders followed his lead and rejected two shareholder proposals that would have forced the company to disclose more about climate change and work force diversity. But each proposal got support from a quarter of Berkshire shareholders, a relatively high percentage. And big investors spoke publicly about their backing for the initiatives: BlackRock, which owns a 5 percent stake in Berkshire, said the company hadn’t done enough on either front. Other highlights from the Berkshire meeting: Munger let loose on crypto. “Of course I hate the Bitcoin success and I don’t welcome a currency that’s so useful to kidnappers and extortionists,” he said. “I think the whole damn development is disgusting and contrary to the interests of civilization.” Ajit Jain, who oversees Berkshire’s insurance operations, and Buffett traded quips about whether the company would insure Elon Musk’s trip to Mars. “This is an easy one: No, thank you, I’ll pass,” Jain said. 
Buffett said it would depend on the premium and added, “I would probably have a somewhat different rate if Elon was on board or not on board.” “We will not be anywhere near as focused on buybacks going forward as we have in the past.” — Intel C.E.O. Pat Gelsinger told CBS’s “60 Minutes” that in the future the semiconductor giant would focus less on buying its own shares and more on expanding production capacity to alleviate severe chip shortages. Ted Cruz rejects ‘woke’ corporate money Ted Cruz has sworn off corporate donations, and he used an op-ed in The Wall Street Journal to tell executives about it. The Republican senator from Texas criticized company chiefs for what he said were ill-informed criticisms of Georgia’s new voting laws. “For too long, woke C.E.O.s have been fair-weather friends to the Republican Party: They like us until the left’s digital pitchforks come out,” Cruz wrote. These companies “need to be called out, singled out and cut off,” he added. Cruz’s rejection may not make a big difference. After the Capitol riot on Jan. 6, many corporations pledged to withhold donations from lawmakers who voted against certifying the election results, at least for a period of time. Cruz, who is viewed as a key player in the efforts to reverse the vote, could be shut out for longer than others. But he’s not strapped for cash: He brought in more than $3 million in campaign funds in the three months after the riot, largely from individual donors. It highlights a new schism between Republicans and corporate America. Those ties were already fraying under President Trump’s unpredictable administration. President Biden’s proposed tax hikes and regulatory push would have typically driven companies into the arms of Republican allies, but Cruz, for his part, said he’s no longer interested in what the corporate donors and lobbyists have to say. “This time,” he wrote, “we won’t look the other way on Coca-Cola’s $12 billion in back taxes owed. 
This time, when Major League Baseball lobbies to preserve its multibillion-dollar antitrust exception, we’ll say no thank you. This time, when Boeing asks for billions in corporate welfare, we’ll simply let the Export-Import Bank expire.” An epic antitrust case begins Today, Apple and Epic Games meet in court for a trial that could have implications for the future of the App Store and the antitrust fight against Big Tech. DealBook spoke with Jack Nicas, a technology reporter for The Times, about what’s at stake. Why is Epic suing Apple? Many companies, including Spotify and Match Group, have complained loudly and publicly about the control that Apple has over the App Store, and the 30 percent commission it charges. Epic basically set some bait for Apple: It began using its own payment system in Fortnite, a very popular game, which meant Apple couldn’t collect its commission. It knew how Apple would react: Apple kicked Fortnite out of the App Store. Then Epic immediately sued Apple in federal court, and simultaneously launched a sophisticated PR campaign to paint Apple in a bad light. [Epic is suing Google for the same reason.] Why do businesses that aren’t Epic or Apple care about this case? If you’re a company that sells any digital goods or services, whether a game, music or a dating platform, you likely pay a large share of your revenues to Apple. If Epic wins here, that could eventually put an end to Apple’s commissions, or at least cause Apple to loosen its control over the App Store. So it really would upend the economics of the app industry. And beyond that, an Epic win would boost the push for antitrust charges against some of the biggest tech companies, including Apple. Now on the other side, if Apple wins, it’s really only going to bolster its already strong position. Who is expected to win? 
It’s certainly unclear at this point, but there is thinking among legal experts that Apple has the upper hand, and that’s in large part because in antitrust fights, courts are more sympathetic to the defendants. But some legal experts think that Epic’s case could be strong. What will you be watching for? The C.E.O.s of both companies, Tim Sweeney and Tim Cook, will be testifying at the trial. Sweeney will likely have to explain why Epic is suing Apple and Google, but not Microsoft and Sony and Samsung and Nintendo, which charge very similar commissions and have similar rules. And Cook will have to answer some very pointed questions about how Apple does business, and how it potentially creates rules in its App Store to hurt rivals. I think there’s an opportunity for the lawyers on Epic’s side to elicit some interesting answers from him. Read the full report about the case from Jack and Erin Griffith. THE SPEED READ Deals Legendary Studios, the producer of movies like “Godzilla vs. Kong,” has reportedly held talks to either merge with a SPAC or buy another studio. (Bloomberg) Politics and policy Why investors have largely shrugged off President Biden’s proposal to raise capital gains taxes. (NYT) As the head of the nonprofit Venture for America, Andrew Yang pledged to create 100,000 jobs nationwide. The group created about 150. (NYT) Tech An internal Amazon report warned management that its sales team had gained unauthorized access to third-party seller data, which may have been used to help its own products. (Politico) Tesla is reportedly stepping up its engagement with Beijing officials as it faces greater pressure from the Chinese government. (Reuters) Best of the rest “Has Online Retail’s Biggest Bully Returned?” (NYT) How remote work is decimating Manhattan’s retail stores, in pictures. (NYT) Eli Broad, the billionaire businessman and art collector who reshaped Los Angeles, died on Friday. He was 87. (NYT) We’d like your feedback!
Please email thoughts and suggestions to [email protected]. Source link Orbem News #antiAsian #Big #Business #discrimination #Takes


Text

How to migrate your data from the MySQL Database Service (MDS) to MDS High Availability

On March 31st, 2021, MySQL introduced a new MySQL Database Service (MDS) option named MDS High Availability (MDS H/A).

“The High Availability option enables applications to meet higher uptime requirements and zero data loss tolerance. When you select the High Availability option, a MySQL DB System with three instances is provisioned across different availability or fault domains. The data is replicated among the instances using a Paxos-based consensus protocol implemented by the MySQL Group Replication technology. Your application connects to a single endpoint to read and write data to the database. In case of failure, the MySQL Database Service will automatically failover within minutes to a secondary instance without data loss and without requiring to reconfigure the application. See the documentation to learn more about MySQL Database Service High Availability.” From: MySQL Database Service with High Availability

If you already have data in an MDS instance and you want to use the new MDS H/A option, you will need to move your data from your MDS instance to a new MDS H/A instance. This is a fairly easy process, but it can take some time depending upon the size of your data.

First, connect to the MDS instance via an OCI (Oracle Cloud Infrastructure) compute instance. Log in to your compute instance:

ssh -i opc@Public_IP_Address

If you don’t have MySQL Shell installed, execute these commands from your compute instance (answer “y” or “yes” to each prompt). Note: I am not going to show the entire output from each command.

sudo yum install -y mysql80-community-release-el7-3.noarch.rpm
sudo yum install -y mysql-shell

Connect to the MySQL Shell, using the IP address of your MDS instance. You will need to enter the user name and password for the MDS instance user.

mysqlsh -uadmin -p -h

Change to JavaScript mode with \js (if you aren’t already in JavaScript mode):

shell-sql>\js

You can dump individual tables, or the entire instance at once.
Check the manual for importing data into MDS for more information. The online manual pages (Instance Dump Utility, Schema Dump Utility, and Table Dump Utility) provide more details on the various options.

For this example, I am going to dump the entire instance at once, into a dump directory named “database.dump”. Note: the suffix of the name doesn’t matter.

shell-js>util.dumpInstance("database.dump", { })

You will see output similar to this (which has been truncated):

Acquiring global read lock
Global read lock acquired
Gathering information - done
All transactions have been started
Locking instance for backup
Global read lock has been released
Checking for compatibility with MySQL Database Service 8.0.23
...
Schemas dumped: 28
Tables dumped: 264
Uncompressed data size: 456.56 MB
Compressed data size: 365.24 MB
Compression ratio: 5.4
Rows written: 47273
Bytes written: 557.10 KB
Average uncompressed throughput: 3.03 MB/s
Average compressed throughput: 557.10 KB/s

Quit the MySQL Shell with the \q command. I can check the dump directory:

[opc@mds-client ~]$ ls -l
total 760
drwxr-x---. 2 opc opc 365562813 Mar 31 19:07 database.dump

Connect to the new MDS H/A instance:

ssh -i opc@Public_IP_Address

Start MySQL Shell again:

mysqlsh -uadmin -p -h

You will use the MySQL Shell Dump Loading Utility to load the data. For more information, see the Dump Loading Utility manual page. You can do a dry run to check that there will be no issues when the dump files are loaded from a local directory into the connected MySQL instance (note: the output is truncated):

util.loadDump("database.dump", {dryRun: true})

Loading DDL and Data from 'database.dump' using 4 threads.
Opening dump...
dryRun enabled, no changes will be made.
....
No data loaded.
0 warnings were reported during the load.

There are many options for loading your data. Here, I am going to just load the entire dump. If you have problems, you can use the Table Export Utility and export individual tables.
You might want to export and import larger tables on their own. I only need to specify my dump directory and the number of threads I want to use (note: the output is truncated):

util.loadDump("database.dump", { threads: 8 })

Loading DDL and Data from 'database.dump' using 8 threads.
Opening dump...
Target is MySQL 8.0.23-u2-cloud (MySQL Database Service). Dump was produced from MySQL 8.0.23-u2-cloud
Checking for pre-existing objects...
Executing common preamble SQL
...
0 warnings were reported during the load.

After the data has been loaded, you will want to double-check the databases and tables in the MDS H/A instance, as well as their sizes, by comparing them to the MDS instance.

That’s it. Moving your data from an MDS instance to an MDS H/A instance is fairly easy. Note: you will need to change the IP address your application connects to so that it points to the new MDS H/A instance.

Tony Darnell is a Principal Sales Consultant for MySQL, a division of Oracle, Inc. MySQL is the world’s most popular open-source database program. Tony may be reached at info [at] ScriptingMySQL.com and on LinkedIn.

Tony is the author of Twenty Forty-Four: The League of Patriots. Visit http://2044thebook.com for more information.

Tony is the editor/illustrator for NASA Graphics Standards Manual Remastered Edition. Visit https://amzn.to/2oPFLI0 for more information.

https://scriptingmysql.wordpress.com/2021/03/31/how-to-migrate-your-data-from-the-mysql-database-service-mds-to-mds-high-availability/
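The final double-check against the old instance can be partly scripted. The sketch below assumes you have already collected exact per-table row counts from both instances (for example by running the generated `SELECT COUNT(*)` queries through any MySQL client) into dictionaries keyed by `schema.table`; the function names are hypothetical. Exact counts are used because `TABLE_ROWS` in `information_schema.TABLES` is only an estimate for InnoDB tables.

```python
def quote_ident(name: str) -> str:
    """Quote a MySQL identifier with backticks, doubling any embedded backtick."""
    return "`" + name.replace("`", "``") + "`"


def count_query(schema: str, table: str) -> str:
    """Exact row-count query for one table."""
    return f"SELECT COUNT(*) FROM {quote_ident(schema)}.{quote_ident(table)}"


def compare_table_counts(source: dict[str, int], target: dict[str, int]) -> dict[str, list]:
    """Compare {"schema.table": row_count} maps from the old and new instances."""
    common = set(source) & set(target)
    return {
        # Tables present on the old instance but not loaded into the new one.
        "missing": sorted(set(source) - set(target)),
        # Tables that only exist on the new instance.
        "extra": sorted(set(target) - set(source)),
        # (table, old_count, new_count) for tables whose counts differ.
        "mismatched": sorted(
            (name, source[name], target[name]) for name in common if source[name] != target[name]
        ),
    }
```

Empty `missing` and `mismatched` lists are a reasonable signal that the load completed; comparing data sizes, as the post suggests, remains a separate check.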


Text

Sis File Extractor


SIS - Student Information Systems Specific Patron Extract Resources: Listed below, for each known Student Information System in wide use among CARLI institutions, there are 2 basic resources: A Data Map maps patron sync file XML elements to corresponding SIS tables/columns. SISXplorer allows you to inspect and extract all the files contained inside 3rd Edition installation packages. Using SISXplorer you can extract all the images from 3rd Edition themes and deeply inspect the content of each file using the integrated hexadecimal viewer. You can also instantly unpack, edit and sign SIS files (.sis & .sisx) with the freeware SISContents. SIS or SISX files are Symbian OS installer files which let you install any application on your Symbian OS based mobile phone. Description of the SIS File Format: The UID 1, UID 2 and UID 3 fields are the first three words of the file, and indicate the type of data it contains. UID 1 is the UID of the application to be installed, or 0x10000000 if none.
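To illustrate the header layout described above, this sketch reads the three UID words from the start of a SIS file. It assumes each word is a 32-bit little-endian unsigned integer, which is typical for Symbian's ARM targets but is not stated in the text; the helper names are hypothetical.

```python
import struct

NO_APPLICATION_UID = 0x10000000  # UID 1 value meaning "no application", per the text


def parse_sis_uids(header: bytes) -> tuple[int, int, int]:
    """Return (UID 1, UID 2, UID 3) from the first 12 bytes of a SIS file.

    Assumes three 32-bit little-endian unsigned words.
    """
    if len(header) < 12:
        raise ValueError("need at least 12 bytes to read the three UID words")
    uid1, uid2, uid3 = struct.unpack_from("<3I", header)
    return uid1, uid2, uid3


def describe_uids(uids: tuple[int, int, int]) -> str:
    """Human-readable summary, treating 0x10000000 in UID 1 as 'none'."""
    uid1, uid2, uid3 = uids
    app = "none" if uid1 == NO_APPLICATION_UID else f"0x{uid1:08X}"
    return f"application UID: {app}, UID 2: 0x{uid2:08X}, UID 3: 0x{uid3:08X}"
```

Usage would be along the lines of `with open("app.sis", "rb") as f: print(describe_uids(parse_sis_uids(f.read(12))))`, where `app.sis` is a placeholder file name.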

What is School Data Sync?

School Data Sync is a free service in Office 365 Education that reads the rosters from your SIS, and creates classes for Microsoft Teams, Intune for Education, and third party applications. Microsoft Teams brings conversations, content, and apps together in Office 365 for Education.

What SIS/MIS vendors does School Data Sync support?

Because School Data Sync imports data in CSV files, it supports virtually every SIS on the market. SDS also supports importing roster data via the PowerSchool API, and the industry standard OneRoster API. Customers have deployed SDS with over 70 SIS vendors and data providers, including the below:

Aeries, Aspen, Atlas, ATS, Axios, Banner, Blackbaud, Canvas, Cloud Design Box, CMIS, Coba, Compass, Cornerstone/TADS, CSIU eSchoolData, DeltaLink, Eco, Ed-Admin, Edgear JCampus, Eduarte, Edupoint, Engage United, eSchoolPLUS, esemtia, eTap, Extens, Focus, Genesis, Gradelink, GRICS, Ibis, IlluminateEd, iluminate, Infinite Campus & Scholarship, iPASS, iSAMS, ITCS, JMC, Jupiter, KAMAR, Librus, Magister, Maze, MMS Generations, Myclassboard, MyED BC, Oncourse System, Oracle Campus Solution, Parnassys, PC School, Plurilogic, PowerSchool, Primus, Progressbook, Progresso, Pronote from Index Education, Prosolution (Compass CC), Q, Realtime SIS, Rediker, RenWeb, Ruler Connect/Locker Connect, Salamander Soft, SAM Spectra by Central Access, Sapphire, School Tool, SchoolBase, Schoolmaster, Schoolonline, SchoolTool, SEEMiS, Senior Systems, SEQTA, SIMS, Skolplatsen (Gotit), SkolPuls, Skyward, SomToday, STARS, SunGard, Sycamore, Sycamore Education, Synergetic, Synergy, TASS, TCS iON, TeacherEase, Teams Pro logic, Third party, Trillium, TxEIS, TylerSIS, Veracross, Versus-ERP, Visma Flyt, Visma Primus/Wilma, Vulcan UONET+, WCBS Pulse, Wengage, WIS WEB, Wisenet.

Where is School Data Sync available?

School Data Sync is currently available in all regions worldwide except for China and Germany.

What apps work with School Data Sync?

School Data Sync imports school, section, student, teacher, and roster data from a SIS to Office 365 so it can be used by numerous 1st party and 3rd party applications. Visit https://sds.microsoft.com to see a list of the EDU apps that use Office 365 and School Data Sync data for Single Sign-on and Rostering integration.

Will SDS automatically sync changes or do we have to restart sync to synchronize changes as they occur?

Sync runs continuously after a sync profile is created, unless manually stopped. For PowerSchool API and OneRoster API, the connection to the SIS is continuous and always polling for changes in data to be synced. If you’re running a sync profile which uses one of the available CSV-based sync methods, changes within your data can be synchronized by uploading new CSV files that contain the data changes. You can upload new files through the SDS portal or through the SDS toolkit. Once new CSV files are uploaded, the sync process will begin automatically if no errors are encountered. If your new data set contains errors, you may need to remediate them first and reupload the files, or click the Resume sync button on the sync profile to continue syncing regardless of the errors found.


What are the permission requirements for accessing and managing School Data Sync?

To access and manage SDS, your account must be a Global Administrator within the tenant.

What is the School Data Sync schema (object and attributes) available through REST API?

The Education Attributes Reference contains the full list of potential data available. The CSV files reference explains the required fields available for import via SDS.

How can we export data from our SIS to Microsoft’s required CSV format?

Since each SIS is different, we encourage School Data Sync (SDS) customers to contact their SIS vendor for support and assistance with building the appropriate export from the SIS to CSV files in one of the acceptable formats (SDS format, Clever format, or OneRoster format). Many SISs already have CSV export functionality and do not require custom tools or database extractors to complete the export process. However, Microsoft does not provide support for SIS extractor tools built by SIS vendors. Please contact your SIS vendor for assistance with data exports.

What is the proper format for the Term StartDate and Term EndDate attributes?

School Data Sync does not restrict the Term StartDate and Term EndDate attribute format beyond the currently allowed .NET options; however, we recommend using a format of mm/dd/yyyy (e.g. 11/19/2016 or 6/12/2016). Future development efforts may align to this recommendation for features and functionality which leverage these attributes.
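When building CSV exports yourself, formatting the term dates explicitly avoids depending on locale defaults. The sketch below writes a section file with dates like 6/12/2016, matching the examples above. The column list is a hypothetical subset (only `Term StartDate` and `Term EndDate` come from this FAQ), so confirm the exact headers against the CSV files reference before uploading.

```python
import csv
import io
from datetime import date

# Hypothetical column subset; check the SDS CSV files reference for the real headers.
SECTION_FIELDS = ["SIS ID", "School SIS ID", "Section Name", "Term StartDate", "Term EndDate"]


def term_date(d: date) -> str:
    """Month/day/year without zero padding, e.g. 6/12/2016."""
    return f"{d.month}/{d.day}/{d.year}"


def sections_to_csv(sections: list[dict]) -> str:
    """Serialize section rows, formatting the two term-date columns explicitly."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=SECTION_FIELDS)
    writer.writeheader()
    for section in sections:
        row = dict(section)
        row["Term StartDate"] = term_date(section["Term StartDate"])
        row["Term EndDate"] = term_date(section["Term EndDate"])
        writer.writerow(row)
    return buffer.getvalue()
```

If you prefer zero-padded dates such as 06/12/2016, `d.strftime("%m/%d/%Y")` produces that form instead.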

Can I export the sync issues/errors generated by SDS?

Yes, you may export the list of errors generated on a profile by profile basis within the SDS UI. To export the list of errors, log into sds.microsoft.com > select your sync profile > click on the Download all errors as .csv file button.


Does Microsoft provide extractor tools for my SIS data?

Microsoft does not build or maintain extractor tools for any SIS vendor. Many SISs have data extraction tools built into the SIS already. If your SIS does not include an extraction tool, and you need assistance extracting data from your SIS into our schema CSV format, please contact your SIS vendor for support.


Why is there a character limitation on email addresses in SDS?

Email addresses for all objects in O365 must adhere to several RFC standards for internet email addressing, and SDS is simply aligned to the character limitations within each of the core Office 365 services, including SharePoint Online, Exchange Online, and Azure Active Directory.

How many sync profiles do I need to create when setting up School Data Sync?

Most often, schools will only need to create a single sync profile in School Data Sync (SDS) to synchronize all Schools, Sections, Teachers, Students, and Rosters. There are a few reasons that would require you to create additional sync profiles:

Multiple Domains for Identity Matching – When configuring SDS you must match users from your source directory to users in Azure Active Directory (AAD). Within each sync profile, you can specify a single domain for teachers and a single domain for students. If your teachers or students are configured with more than a single domain for the attribute being used in the identity matching configuration, you may need to create multiple sync profiles to ensure a match for all users within your tenant. We recommend minimizing the number of domains configured across student and teachers for core identity matching attributes such as the UserPrincipalName and Mail attributes.

Multiple Sync Methods or Source Directories – SDS allows you to synchronize objects and attributes in a few different ways. We currently accept three different types of CSV file formatting (SDS format, Clever format, OneRoster format). SDS also allows for two different types of API connections to sync objects and attributes (PowerSchool API and OneRoster API). If you need to import data from more than a single source directory, you will need to configure multiple sync profiles. For example, you may need 1 sync profile to sync objects from PowerSchool and another sync profile to sync objects from SDS format CSV files. We recommend minimizing the number of sync profiles whenever possible.

Mix of Create New Users and Sync Existing Users – SDS allows you to either create new user accounts in AAD from your source directory data, or synchronize against existing users that are already present in Azure AD. If you need to create some new users, and synchronize against some existing users, two or more sync profiles will be required. You can only choose one of these two options within a single sync profile.

More than 2 million rows in a CSV file – SDS limits the number of rows that can be contained within the set of CSV files uploaded to a single sync profile. The current limit is 2 million rows per CSV file. If any of your CSV files contains more than 2 million rows, you'll need to split the entire set of CSV files along school lines (all sections, teachers, students, and rosters associated with the school(s) being split). Once the files are split, create additional sync profiles with the same settings and upload the split files accordingly.
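The two sizing rules above (one teacher domain and one student domain per profile, and the per-CSV row limit) can be turned into a rough planning aid. This Python sketch is not part of SDS. The function names are hypothetical. The domain count assumes identity matching is the only reason you need extra profiles. The batching assumes you have already pulled each CSV row's school identifier out of your files, and the column name for that depends on your CSV format.

```python
from collections import Counter

ROW_LIMIT = 2_000_000  # per-CSV row limit described above


def email_domains(addresses):
    """Return the set of lower-cased domains used in a list of UPN or Mail values."""
    return {a.rsplit("@", 1)[1].lower() for a in addresses if "@" in a}


def min_profiles_for_domains(teacher_ids, student_ids):
    """Hypothetical helper: lower bound on profiles needed for identity matching.

    Each profile accepts one teacher domain and one student domain, so every
    distinct domain on the larger side needs its own profile.
    """
    return max(len(email_domains(teacher_ids)), len(email_domains(student_ids)), 1)


def plan_school_batches(row_school_ids, limit=ROW_LIMIT):
    """Hypothetical helper: greedily group whole schools so no batch exceeds `limit` rows.

    row_school_ids: one entry per CSV row, giving that row's school ID.
    Returns a list of batches. Each batch is a list of school IDs and would
    become its own sync profile.
    """
    counts = Counter(row_school_ids)
    batches, current, current_rows = [], [], 0
    for school, rows in sorted(counts.items()):
        if rows > limit:
            # A single school cannot be split across profiles along school lines.
            raise ValueError(f"school {school!r} alone has {rows} rows")
        if current and current_rows + rows > limit:
            batches.append(current)
            current, current_rows = [], 0
        current.append(school)
        current_rows += rows
    if current:
        batches.append(current)
    return batches


if __name__ == "__main__":
    teachers = ["ann@staff.contoso.edu", "bob@STAFF.contoso.edu", "cy@legacy.contoso.edu"]
    students = ["sam@students.contoso.edu"]
    print(min_profiles_for_domains(teachers, students))  # 2
    print(plan_school_batches(["A", "A", "B", "C", "C", "C"], limit=4))  # [['A', 'B'], ['C']]
```

Because the limit applies to each file separately, you would check every file in the set (sections, teachers, students, rosters) against the resulting batches, not just the largest one. The example domains are illustrative.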

Can I have additional headers and columns in my CSV files beyond what I intend to sync?

Yes, your CSV files may contain extra headers and data. Only the attributes selected within the sync profile setup wizard will attempt to synchronize. Any extra headers and columns of data will be ignored.

If we remove a user or section from Classroom will they reappear when we sync again?

Manual updates to a class roster made through Classroom will not be overwritten when the next sync runs. School Data Sync changes the roster based on what changed since the last sync, not based on manual changes, with the exception of two actions: Reset Sync and Recreating a Sync Profile. Aside from those exceptions, here is how SDS treats manual additions and deletions.

Example 1:

A class is synced with a teacher and students

The teacher goes to Classroom to add a co-teacher to the class

The class is subsequently synced with no changes to teacher enrollment

(Correct behavior) The co-teacher's membership is unaffected

The class is subsequently synced with the co-teacher added

(Correct behavior) the co-teacher's membership is unaffected

The class is subsequently synced with the co-teacher removed

(Correct behavior) the co-teacher is removed from the class

Example 2:

A class is synced with a teacher and students

The teacher goes to Classroom to remove a student from the class

The class is subsequently synced with no change to the student enrollment

(Correct behavior) The student's non-membership is unaffected

The class is subsequently synced with the student removed

(Correct behavior) the student's non-membership is unaffected

The class is subsequently synced with the student re-added

(Correct behavior) the student is re-added to the class
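Both examples are consistent with a simple model: each sync applies only the difference between the previous synced roster and the new one, and leaves manual changes alone. The Python sketch below illustrates that model. It is an assumption made for explanation, not SDS's actual implementation, `apply_sync` is a hypothetical name, and it does not cover the Reset Sync and Recreating a Sync Profile exceptions.

```python
def apply_sync(live, last_synced, new_synced):
    """Apply only what changed in the source roster since the last sync.

    live:        current class membership, including manual edits in Classroom
    last_synced: membership sent by the previous sync
    new_synced:  membership the source sends now
    """
    added = new_synced - last_synced
    removed = last_synced - new_synced
    return (live | added) - removed


source = {"teacher", "s1", "s2"}

# Example 1: a co-teacher added manually survives a sync with no enrollment changes.
live = apply_sync(source | {"co-teacher"}, source, source)
print(sorted(live))  # ['co-teacher', 's1', 's2', 'teacher']

# Example 2: a student removed manually stays removed until the source re-adds them.
live = apply_sync(source - {"s2"}, source, source)
print("s2" in live)  # False
```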

What do Azure AD Connect and SDS do and how can they work together?

Azure Active Directory Connect (AAD Connect) syncs on-premises AD Users, Groups, and Objects to Azure AD (AAD) in Office 365.

School Data Sync (SDS) syncs additional Student and Teacher attributes from the Student Information System (SIS) to existing users already synced and created by AAD Connect. Adding Student and Teacher attributes enriches the identity and enables apps to provide richer user experiences based on these distinguishing attributes and education personas. SDS can automatically create Class Teams in Teams for Education, school-based security groups for Intune for Education device policies, OneNote Class Notebooks, and class rosters for third-party application integration. AAD Connect and SDS will never conflict, because SDS does not sync or overwrite any attribute managed by AAD Connect. SDS also provides the option to create new users, so if you don't want to sync and create them with AAD Connect from your on-premises AD, you can use SDS to sync and create them directly from your SIS.

Both AAD Connect and SDS also sync and create other object types, such as Groups, Administrative Units, and Contacts, but unlike Users, these object types are not combined to form individual, unique objects in AAD.

What special characters are not supported by School Data Sync?

Several special characters are not supported within School Data Sync. During profile creation, you will have the option to have SDS automatically replace any unsupported special characters it finds with an underscore ("_"). The following link explains unsupported characters.

In today's world, having basic Microsoft Excel skills is essential. Mastering the basics of Excel can benefit entrepreneurs in many ways, thanks to the wide range of Excel applications.

If you want to hone and broaden your skills, a high level of expertise in Microsoft Office will give you a real advantage, given how widely this software is used in every industry.

In practice, almost any job in any industry benefits from solid Excel experience. Excel is a powerful tool at the center of business processes around the world, whether for analyzing stocks and issuers, creating a budget, or compiling a list of customer sales.

What are you waiting for? Start your learning journey with us. We are the best training institute for Excel training in Delhi.

Professional Areas Where It Is Widely Used

Finance and Accounting

Financial services and accounting depend heavily on spreadsheets and have benefited greatly from Excel. In the 1970s and early 1980s, financial analysts would spend weeks running advanced formulas by hand or in programs such as IBM's (NYSE: IBM) Lotus 1-2-3. Now, you can do complex modeling in minutes with Excel.

Go to the finance or accounting department of any major corporate office, and you will see computer screens full of Excel spreadsheets crunching numbers, explaining financial results, and building the budgets, forecasts, and plans used to make big business decisions.

Marketing and Product Management

While marketing and product professionals rely on their finance teams for heavy-duty financial analysis, using spreadsheets to build customer lists and sales targets can help you manage your vendors and plan future marketing strategies based on past results.

Human Resource Planning

While data systems such as Oracle (ORCL), SAP (SAP), and QuickBooks (INTU) can be used to manage employee information, exporting that data to Excel allows users to find trends, summarize costs and hours by pay period, month, or year, and better understand how the workforce is distributed by function or pay level.

HR professionals can use Excel to take a large spreadsheet full of employee data and better understand where costs are coming from and how to plan and manage them in the future.

Key Microsoft Excel Skills to Improve Your Career Growth

If you want to land that big paycheck, listed below are some basic Microsoft Excel skills you should master. Learning the most advanced concepts is certainly valuable, and learning them from the best institute for Excel training in Delhi-NCR can add even more value to your resume.

VLOOKUP

VLOOKUP is a formula for retrieving key data from a table. While there are many ways to do this, VLOOKUP is one of the simplest and most efficient ways to extract the information we need. It is arguably one of the most useful Excel formulas available, especially when you need to look values up in a long list.
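In Excel the formula is written =VLOOKUP(lookup_value, table_array, col_index_num, [range_lookup]). To make the lookup logic concrete, here is a minimal Python sketch, assuming the table is stored as a list of rows. The names `vlookup` and `NotAvailable` are illustrative. Unlike Excel, where an omitted range_lookup means TRUE (approximate match), this sketch defaults to an exact match.

```python
from bisect import bisect_right


class NotAvailable(LookupError):
    """Stands in for Excel's #N/A result."""


def vlookup(value, table, col_index, exact=True):
    """Return the value in column `col_index` (1-based) of the matching row.

    exact=True  ~ range_lookup FALSE: first row whose first column equals `value`.
    exact=False ~ range_lookup TRUE: last row whose first column is <= `value`;
                  the first column must be sorted ascending.
    Note: Excel compares text case-insensitively; this sketch compares exactly.
    """
    if not table:
        raise NotAvailable(value)
    if not 1 <= col_index <= len(table[0]):
        # Excel returns #VALUE! (index < 1) or #REF! (index too large) here.
        raise ValueError(f"col_index {col_index} out of range")
    if exact:
        for row in table:
            if row[0] == value:
                return row[col_index - 1]
        raise NotAvailable(value)
    keys = [row[0] for row in table]
    pos = bisect_right(keys, value)  # number of keys <= value
    if pos == 0:
        raise NotAvailable(value)  # value is smaller than every key
    return table[pos - 1][col_index - 1]


products = [["A100", "Widget", 2.50], ["B200", "Gadget", 4.00]]
grades = [[0, "F"], [60, "D"], [70, "C"], [80, "B"], [90, "A"]]
print(vlookup("B200", products, 3))         # 4.0
print(vlookup(85, grades, 2, exact=False))  # B
```

The first call corresponds to a worksheet formula such as =VLOOKUP("B200", A2:C3, 3, FALSE), and the second shows why approximate matching is handy for banded lookups like grade or tax tables.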

Autofilter

Autofilter allows us to better understand our data: it lets us quickly filter data, especially when we only want to see certain aspects of it. You can filter not only by numbers but also by text, and combine criteria to build powerful filters that show only the data you are interested in.
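Conceptually, AutoFilter keeps visible only the rows that pass the criteria set on one or more columns, with criteria on different columns combined using AND. The Python sketch below mimics that behavior on rows stored as dictionaries and returns the rows that would stay visible. The `autofilter` function and the sample columns are hypothetical, and the criteria are plain predicate functions rather than Excel's filter menu options.

```python
def autofilter(rows, **criteria):
    """Keep rows that satisfy every column criterion (criteria are ANDed).

    rows:     list of dicts mapping column name -> cell value
    criteria: column name -> predicate returning True for rows to keep
    """
    return [row for row in rows
            if all(test(row[column]) for column, test in criteria.items())]


sales = [
    {"customer": "Northwind", "region": "North", "amount": 1200},
    {"customer": "Contoso", "region": "South", "amount": 800},
    {"customer": "Northgate", "region": "North", "amount": 450},
]

# Text filter plus number filter, similar to choosing a region and "Greater Than".
big_north = autofilter(sales,
                       region=lambda r: r == "North",
                       amount=lambda a: a > 1000)
print([row["customer"] for row in big_north])  # ['Northwind']

# Text filter on its own, similar to "Begins With" in Excel.
print(len(autofilter(sales, customer=lambda c: c.startswith("North"))))  # 2
```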

Charts

Charts are easy to create in spreadsheets and give a clear visual representation of numbers. If you are also tasked with presenting the results of data processed in a spreadsheet, it is better to use charts than to simply show all the numbers. Charts are effective tools for comparison.

Raising your salary becomes realistic once you have developed this skill set. If you want to invest in education, it is best spent on learning something that improves your productivity, skills, and confidence as an office worker.

Being an expert in Excel and the rest of the Microsoft Office suite can be very helpful to you. Start today! Check out our Excel training program.

Here are a few of the job roles for which learning Excel is beneficial:

MIS Officer

Depending on the department, the role of an MIS (Management Information System) officer varies. Usually, they keep records of daily activities, prepare monthly, quarterly, and annual reports, and keep their supervisors up to date on work progress. MIS officers deal with large amounts of data, and to do so they rely on Excel formulas and features such as calculations, functions, absolute and relative references, pivot tables, and AutoFilter.

Project managers or coordinators

Whether in the IT industry or in construction, project managers are everywhere. From managing vendor lists and financial records and creating reports to keeping an eye on day-to-day operations, project managers need to stay on their toes. They must also allocate resources and manage staff efficiently and effectively. With so much data to work with, project managers find Excel a great partner. Advanced Excel knowledge allows them to organize and manage data effectively while minimizing wasted resources and effort.

Market research analysts, Digital marketers

Every business needs to understand its audience and its competition. Market research analysts and digital marketers combine creative and analytical skills to conduct in-depth research on market trends, competitor campaigns, audience demographics, psychographics, and purchase patterns. Excel allows these professionals to organize, analyze, interpret, and present data in an easy-to-understand way, and its graphs, charts, and other visual aids let them display complex data clearly.

In addition, employers hiring accountants, auditors, administrative assistants, office clerks, teachers, financial analysts, bankers, loan managers, retailers, marketers, and trainers look for professionals who are competent in Microsoft Excel.

Why Entrepreneurs Should Master Excel

The ability to store, manage and process data in many different ways has many applications for entrepreneurs such as:

Finance and accounting

Finance and accounting top the list of reasons to learn Excel. Excel has many advanced features that can do in a few minutes what would take hours by hand, and a good Excel course can teach these and make sure you get the most out of the software. But even without its most complex features, it is ideal for simple bookkeeping, adding up income and expenses, and keeping track of earnings.

Product management and marketing

Customer lists and sales targets are two examples where Excel can help in these areas. You can use it to plan for the future, decide on pricing for a new product, and easily generate helpful reports that show what works and what needs to change.

Human Resources

Although Excel can help with areas such as payroll, many entrepreneurs use dedicated software for this. Even so, Excel can be used to look at trends, summarize costs, and examine pay periods in detail to help monitor business costs and employee performance patterns.

Some Excel Tips and Tricks for Entrepreneurs:

Audit Toolbar: To trace the cells a formula uses, use the formula auditing tools (in current versions, Trace Precedents under Formulas > Formula Auditing). They clearly highlight the cells the formula refers to.

Freezing Panes: You can keep selected rows or columns, such as headers, visible while scrolling through the rest of the sheet. This is useful when comparing data.

Conditional formatting: Lets users change a cell's formatting automatically when its value meets a specific condition.

Sparkline: A tiny chart that fits inside a single cell and shows trends in a row or column of data. You can find more details about sparkline

In short, entrepreneurs can use Excel to build basic models covering sales forecasting, budgeting, and break-even analysis.

Why study with us?