#intelgaudi2

Intel Webinar: Experienced Assistance To Implement LLMs

How Prediction Guard Uses Intel Gaudi 2 AI Accelerators to Provide Reliable AI.

Intel webinar

The growing corporate use of open-source tools and software for large language models (LLMs) and generative AI makes it necessary to discuss the key tactics and technologies needed to build safe, scalable, and effective LLMs for business applications. In this Intel webinar, Rahul Unnikrishnan Nair, Engineering Lead at Intel Liftoff for Startups, and Daniel Whitenack, Ph.D., creator of Prediction Guard, walk through the main topics: implementing LLMs with open models, protecting data privacy, and preserving high accuracy.

Intel AI webinar

Important Conditions for Enterprise LLM Adoption

The Intel webinar identifies three essential elements for successful enterprise LLM adoption: using open models, protecting data privacy, and retaining high accuracy. Open models like Mistral and Llama 3 give enterprises more control and customization because the model weights and inference code are available to them. Closed models, in contrast, are accessible only via APIs and offer no insight into their underlying processes.

Businesses that handle sensitive data such as protected health information (PHI) and personally identifiable information (PII) must secure data privacy, and HIPAA compliance is typically essential in these scenarios. High accuracy is also crucial. It calls for strong procedures that compare LLM outputs with ground truth data in order to reduce problems such as hallucinations, in which the model generates erroneous or misleading information even though the text is grammatical and coherent.

Obstacles in Closed Models

Closed models like those offered by Cohere and OpenAI have a number of drawbacks. Because businesses cannot observe how their inputs and outputs are handled, bias and errors can go undetected. Without that transparency, users may experience latency variations and moderation failures without knowing why they occur. Prompt injection attacks pose serious security threats because they can be used to make a model expose confidential information. These problems highlight how important open models are for corporate applications.

Prediction Guard

The Method Used by Prediction Guard

Prediction Guard’s platform tackles these issues by combining safe hosting, strong security measures, and performance enhancements. For security, models are hosted in private environments inside the Intel Tiber Developer Cloud. To improve speed and reduce costs, Intel Gaudi 2 AI accelerators are used. Input filters mask or replace PII before it reaches the LLM and block prompt injections. Output validators check the factual consistency of LLM outputs by comparing them to ground truth data.
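The input-filter idea can be illustrated with a minimal sketch. This is not Prediction Guard's implementation; the patterns, the `mask_pii` name, and the placeholder format are all assumptions made for illustration, and production PII detection needs far broader coverage than three regular expressions.

```python
import re

# Hypothetical patterns for illustration only; real PII detection covers
# many more entity types and usually combines rules with ML-based detectors.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "PHONE": re.compile(r"\b\d{3}[-.]\d{3}[-.]\d{4}\b"),
}


def mask_pii(prompt: str) -> str:
    """Replace each matched span with a placeholder such as [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt


# The masked prompt, not the original, is what would be sent to the LLM.
masked = mask_pii(
    "Contact jane.doe@example.com or 555-123-4567 about SSN 123-45-6789."
)
print(masked)
```

Masking before inference means the model never sees the raw values, so they cannot leak into its output or logs.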

The optimization phase, which lasted from September 2023 to April 2024, covered load balancing across multiple Gaudi 2 machines, improving prompt processing performance by bucketing and padding similar-sized prompts, and switching to the TGI Gaudi framework for easier model server administration.

During the current scaling phase (April 2024 to the present), Prediction Guard moved to a Kubernetes-based architecture in Intel Tiber Developer Cloud that combines CPU and Gaudi node groups. The work also includes deployment automation, performance and uptime monitoring, and integration with Cloudflare for DDoS protection and CDN services.

Performance and Financial Gains

Switching to Gaudi 2 brought notable gains. Compared to earlier GPU systems, Prediction Guard achieved a 10x decrease in compute costs and a 2x gain in throughput for enterprise applications. Its time-to-first-token latency of under 200 ms places it at the top of industry performance rankings. These gains were obtained without performance loss, demonstrating Gaudi 2’s scalability and cost-effectiveness.

Technical Analysis and Suggestions

The presenters stressed that access to an LLM API alone is not enough for a strong corporate AI solution. Thorough validation against ground truth data is necessary to make the outputs correct and reliable. Data management is a crucial factor in AI system design, because integrating sensitive data requires robust privacy and security safeguards. Prediction Guard’s staged approach offers other developers a blueprint for optimizing their use of Gaudi 2. The key to a successful deployment is to validate core functionality first, then gradually scale and optimize based on performance data and user input.

Additional Information on Technical Execution

During the first migration phase, handling static shapes required setting up model servers to manage varying prompt lengths by padding them to specified sizes, which optimizes memory and compute utilization. Dynamic batching, which processes a window of requests in bulk, allowed the system to increase throughput and reduce latency.
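The windowed batching described above can be sketched as follows. This is a simplified offline model, not the serving code used by Prediction Guard or TGI: the `Request` class, the `batch_by_window` function, and the 10 ms window and batch size of 4 are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class Request:
    arrival_ms: float  # arrival time in milliseconds
    prompt: str


def batch_by_window(requests, window_ms=10.0, max_batch=4):
    """Group requests into batches.

    A batch is closed when a new request arrives more than window_ms after
    the batch's first request, or when the batch already holds max_batch.
    """
    batches, current = [], []
    for req in sorted(requests, key=lambda r: r.arrival_ms):
        window_expired = bool(current) and (
            req.arrival_ms - current[0].arrival_ms > window_ms
        )
        if current and (window_expired or len(current) >= max_batch):
            batches.append(current)
            current = []
        current.append(req)
    if current:
        batches.append(current)
    return batches


# Hypothetical arrival times (ms) for six requests.
reqs = [Request(t, f"prompt-{i}") for i, t in enumerate([0, 3, 8, 25, 27, 60])]
batches = batch_by_window(reqs)
print([[r.prompt for r in b] for b in batches])
```

A longer window yields larger batches and higher throughput at the cost of extra waiting time for the first request in each batch, so the window is typically tuned against a latency target.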

During the optimization phase, load balancing across multiple Gaudi 2 servers was deployed to handle traffic properly and prevent bottlenecks. Performance was further improved by grouping input prompts into buckets according to size and padding within each bucket. Switching to the TGI Gaudi framework made managing model servers easier.
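A minimal sketch of bucketing and padding is shown below, assuming prompts are already tokenized into lists of integer IDs. The bucket sizes, the `PAD_ID` value, and the function names are hypothetical; the webinar summary does not give the actual sizes used.

```python
from collections import defaultdict

BUCKETS = (128, 256, 512, 1024)  # hypothetical bucket sizes, in tokens
PAD_ID = 0                       # hypothetical padding token ID


def bucket_for(length, buckets=BUCKETS):
    """Return the smallest bucket size that fits a prompt of this length."""
    for size in buckets:
        if length <= size:
            return size
    raise ValueError(f"prompt of {length} tokens exceeds the largest bucket")


def bucket_and_pad(prompts):
    """Group token lists by bucket and right-pad each to its bucket size."""
    grouped = defaultdict(list)
    for tokens in prompts:
        size = bucket_for(len(tokens))
        grouped[size].append(tokens + [PAD_ID] * (size - len(tokens)))
    return dict(grouped)


# Four prompts of 100, 130, 128, and 600 tokens.
prompts = [[1] * 100, [2] * 130, [3] * 128, [4] * 600]
padded = bucket_and_pad(prompts)
for size, batch in sorted(padded.items()):
    print(size, len(batch))
```

Because every prompt in a bucket has the same length, the accelerator sees only a small, fixed set of input shapes, while padding stays bounded by the gap between adjacent bucket sizes.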

Scalable and robust deployment was made possible during the scaling phase by the implementation of an Intel Kubernetes Service (IKS) cluster that integrates CPU and Gaudi node groups. High availability and performance were guaranteed by automating deployment procedures and putting monitoring systems in place. Model serving efficiency was maximized by setting up inference parameters and controlling key-value caches.

Useful Implementation Advice

It is advised that developers and businesses wishing to use comparable AI solutions begin with open models in order to maintain control and customization options. It is crucial to make sure that sensitive data is handled safely and in accordance with applicable regulations. Successful deployment also requires taking a staged approach to optimization, beginning with fundamental features and progressively improving performance depending on measurements and feedback. Finally, optimizing and integrating processes may be streamlined by using frameworks like TGI Gaudi and Optimum Habana.

In summary

Webinar Intel

Prediction Guard’s comprehensive strategy, developed in partnership with Intel, shows how businesses can implement scalable, effective, and safe AI solutions. By using Intel Gaudi 2 and Intel Tiber Developer Cloud to address control, customization, data protection, and accuracy, Prediction Guard offers a strong foundation for corporate AI adoption. The Intel webinar’s technical insights and practical suggestions give developers and businesses useful direction for navigating the challenges of LLM adoption.

Read more on govindhtech.com

#IntelWebinar#ExperiencedAssistance#Llama3#ImplementLLM#Largelanguagemodels#IntelLiftoff#IntelTiberDeveloperCloud#PredictionGuard#IntelGaudi2#KubernetesService#AIsolutions#dataprotection#technology#technews#news#govindhtech

Intel Computex Unveils AI : Xeon 6, Gaudi, Lunar Lake CPUs

Intel Computex 2024

In order to make AI affordable and available to everyone, Intel CEO Pat Gelsinger announced at Intel Computex 2024 the newest technologies that combine state-of-the-art performance and power efficiency in data centres, acceleration, and the AI PC experience. Intel is dedicated to driving AI potential for partners and customers, while also empowering open ecosystems. Intel is still at the forefront of driving the industry towards a sustainable and scalable future with its increased processing power, cutting-edge power efficiency, and affordable total cost of ownership.

Gelsinger gave a keynote address at the June 4–7 event in Taipei, Taiwan, where he introduced the Intel Xeon 6 processors with Efficient-cores (E-cores), revealed the pricing of the Intel Gaudi 2 and 3 AI accelerator kits, and unveiled the Lunar Lake client processor architecture, a ground-breaking design that expands the AI PC category even further.

Lunar Lake

Intel unveiled the architecture details of its forthcoming Lunar Lake client computing processor, which was completely rebuilt to deliver industry-leading core and graphics performance along with unparalleled AI performance.

The new Performance-cores (P-cores) and Efficient-cores (E-cores) deliver strong performance at up to 40% lower system-on-chip power than the previous generation. The new neural processing unit is up to 4 times faster than the previous generation, enabling corresponding improvements in generative AI. Moreover, the new Xe2 graphics processing unit cores improve gaming and graphics performance by 1.5 times over the previous generation.

Starting in the third quarter of 2024, Lunar Lake will power more than 80 new AI PC designs from more than 20 partners.

Lunar Lake Structure: A Motion Picture

An animated film demonstrates the various technological levels that comprise Lunar Lake. Intel unveiled Lunar Lake architecture at Intel Computex in Taipei on June 4, 2024. When compared to the previous generation, the thin-and-light PC processor offers notable gains in performance and efficiency in the neural processing unit, central processing unit, and graphics processing unit. Intel Corporation is credited.

Pictures of Lunar Lake

Image credit to Intel

Lunar Lake Provides the AI PC with a New Architecture

Intel unveiled the Lunar Lake architecture at Intel Computex 2024, revealing the flagship chip for the upcoming AI PC generation. Lunar Lake focuses on power-efficient compute performance for the thin-and-light segment while providing a significant boost in graphics and AI processing capabilities. Intel Corporation is credited.

Intel Xeon 6

The Intel Xeon 6 family of processors, which comes with E-core and P-core variants, was introduced by Intel to handle a wide range of workloads and use cases, including scalable cloud-native apps and high-performance computation requirements for artificial intelligence.

The first member of the series, code-named Sierra Forest, is the Intel Xeon 6 CPU with efficient cores, making its debut at Intel Computex 2024. When compared to 2nd Gen Intel Xeon processors on media transcoding workloads, it offers a performance per watt boost of up to 2.6x and a rack-level performance gain of up to 4.2x. Its high core density and remarkable performance also allow for rack-level consolidation of 3-to-1.

Intel Xeon 6 processors with P-cores, aimed at AI, high-performance computing, image processing, and data analytics workloads, are expected in the third quarter of 2024.

Xeon 6 Picture

Image credit to Intel

An Overview of Intel Xeon 6 Processors in 60 Seconds

Three key points concerning Intel Xeon 6 processors with Efficient-cores (E-cores) are outlined in this one-minute summary. Known by the code name Sierra Forest, they were introduced on June 4, 2024, at Intel Computex in Taipei, Taiwan. Designed with E-cores, they enhance performance and reduce power consumption for applications that require high density and scaling. The Intel Xeon 6 processors with Performance-cores (P-cores), code-named Granite Rapids, will soon follow this initial member of the family and will support workloads that require more compute, such as AI and computer vision. Intel Corporation is credited.

Kulim, Malaysia; San Jose, Costa Rica: Intel Manufacturing (B-Roll)

The Intel Kulim Assembly Test (KuAT) plant in Kulim, Malaysia, and the Costa Rica Assembly Test (CRAT) site in San Jose, Costa Rica, are depicted in B-roll film from May 2024. It features footage of Intel Xeon 6 processors with efficient cores (code-named Sierra Forest) and Intel personnel inside the buildings. Intel Corporation is credited.

Gaudi Intel

The Intel Gaudi architecture delivers the generative AI performance customers want, with a price-performance advantage that offers choice, quick deployment, and a lower total cost of ownership.

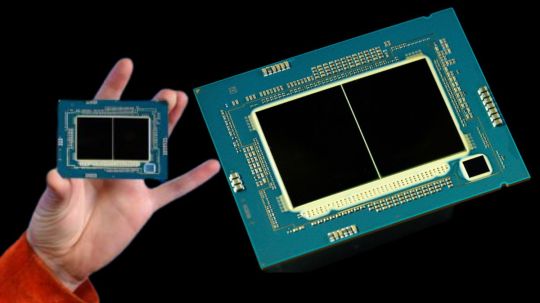

Intel revealed at Intel Computex that a standard AI kit, which includes eight Intel Gaudi 2 accelerators and a universal baseboard (UBB), is available to system providers for $65,000, which Intel estimates to be about one-third the cost of comparable competitive platforms. A kit with eight Intel Gaudi 3 accelerators and a UBB will list at $125,000, which Intel estimates to be roughly two-thirds the cost of comparable competitive platforms.

Additionally, Intel disclosed that six new system vendors plan to launch Intel Gaudi 3 systems. ASUS, Foxconn, Gigabyte, Inventec, Quanta, and Wistron join Dell, HPE, Lenovo, and Supermicro in planning to sell Intel Gaudi 3 systems.
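As a rough worked example, the two kit prices can be broken down per accelerator. The calculation below uses only the $65,000 and $125,000 figures and the eight-accelerator kit size quoted above; dividing evenly by eight is a simplifying assumption, since each kit price also covers the UBB.

```python
ACCELERATORS_PER_KIT = 8

# Kit list prices in US dollars, as quoted in the article.
kit_prices_usd = {"Gaudi 2": 65_000, "Gaudi 3": 125_000}

# Simplifying assumption: the whole kit price (UBB included) is spread
# evenly across the eight accelerators.
per_accelerator = {
    name: price / ACCELERATORS_PER_KIT for name, price in kit_prices_usd.items()
}

# How much more the Gaudi 3 kit costs relative to the Gaudi 2 kit.
kit_price_ratio = kit_prices_usd["Gaudi 3"] / kit_prices_usd["Gaudi 2"]

for name, price in per_accelerator.items():
    print(f"{name}: ${price:,.0f} per accelerator")
print(f"Gaudi 3 kit / Gaudi 2 kit: {kit_price_ratio:.2f}x")
```

On these figures the kits work out to $8,125 and $15,625 per accelerator slot, and the Gaudi 3 kit costs a little under twice as much as the Gaudi 2 kit.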

Intel Tech Tour

Intel held its third annual Technology Tour in Taiwan just a few days before Intel Computex, providing worldwide media and analysts with presentations on Xeon 6 and Gaudi accelerators in addition to an in-depth look at the Lunar Lake architecture. Business and technical leaders from across Intel, who are responsible for bringing next-generation technologies to life, delivered keynote addresses and technical deep dives over the two-day event.

Read more on Govindhtech.com

#IntelComputex#Xeon6#LunarLake#cpus#IntelGaudi2#generativeAI#flagshipchip#gigabyte#IntelGaudi3#news#technews#technology#technologynews#technologytrends#govindhtech

Aurora Supercomputer Sets a New Record for AI Speed!

Intel Aurora Supercomputer

Together with Argonne National Laboratory and Hewlett Packard Enterprise (HPE), Intel announced at ISC High Performance 2024 that the Aurora supercomputer has broken the exascale barrier at 1.012 exaflops and, at 10.6 AI exaflops, is now the fastest AI system in the world dedicated to AI for open science. Additionally, Intel will discuss how open ecosystems are essential to the advancement of AI-accelerated high performance computing (HPC).

Why This Is Important:

From the beginning, Aurora was intended to be an AI-centric system that would enable scientists to use generative AI models to hasten scientific discoveries. Early AI-driven research at Argonne has advanced significantly. Among the many achievements are the mapping of the 80 billion neurons in the human brain, the improvement of high-energy particle physics by deep learning, and the acceleration of drug discovery and design using machine learning.

Analysis

The Aurora supercomputer has 166 racks, 10,624 compute blades, 21,248 Intel Xeon CPU Max Series processors, and 63,744 Intel Data Centre GPU Max Series units, making it one of the world’s largest GPU clusters. With 84,992 HPE Slingshot fabric endpoints, Aurora also has the largest open, Ethernet-based supercomputing interconnect on a single system.

The Aurora supercomputer crossed the exascale barrier at 1.012 exaflops using 9,234 nodes, or just 87% of the system, and came in second on the high-performance LINPACK (HPL) benchmark. Aurora placed third on the HPCG benchmark at 5,612 TF/s with 39% of the machine. The goal of this benchmark is to evaluate more realistic scenarios that offer insights into memory access and communication patterns, two crucial components of real-world HPC systems. It complements benchmarks such as LINPACK and provides a fuller view of a system’s capabilities.
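The figures quoted above can be cross-checked with some simple arithmetic. The sketch below uses only numbers from the article and assumes that each compute blade corresponds to one node, which is consistent with 9,234 nodes being described as 87% of the system but is not stated explicitly.

```python
# System figures quoted in the article.
blades = 10_624
cpus = 21_248
gpus = 63_744
fabric_endpoints = 84_992

# HPL run figures quoted in the article.
hpl_nodes = 9_234
hpl_exaflops = 1.012

# Per-blade component counts (assumption: one compute blade == one node).
cpus_per_blade = cpus // blades
gpus_per_blade = gpus // blades
endpoints_per_blade = fabric_endpoints // blades

# Share of the system used for the HPL run, and average HPL rate per node.
fraction_used = hpl_nodes / blades
teraflops_per_node = hpl_exaflops * 1_000_000 / hpl_nodes  # 1 EF = 1e6 TF

print(f"{cpus_per_blade} CPUs, {gpus_per_blade} GPUs, "
      f"{endpoints_per_blade} fabric endpoints per blade")
print(f"HPL run used {fraction_used:.1%} of the system")
print(f"about {teraflops_per_node:.1f} TF per node on HPL")
```

Under that assumption, each blade holds two CPUs, six GPUs, and eight fabric endpoints, and the 87% figure matches the ratio of nodes used to total blades.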

How AI is Optimized

The Intel Data Centre GPU Max Series is the brains behind the Aurora supercomputer. The core of the Max Series is the Intel Xe GPU architecture, which includes specialised hardware such as matrix and vector compute blocks that are well suited to AI and HPC applications. Because of the computational performance provided by the Intel Xe architecture, the Aurora supercomputer won the high-performance LINPACK mixed-precision (HPL-MxP) benchmark, which best illustrates the significance of AI workloads in HPC.

The parallel processing power of the Xe architecture excels at handling the complex matrix-vector operations that are a necessary part of neural network AI computing. Deep learning models rely heavily on matrix operations, and these compute cores are essential for speeding them up. Together with a rich collection of performance libraries, optimised AI frameworks, and Intel’s suite of software tools, which includes the Intel oneAPI DPC++/C++ Compiler, the Xe architecture supports an open ecosystem for developers that is distinguished by adaptability and scalability across a range of devices and form factors.

Enhancing Accelerated Computing with Open Software and Capacity

Intel will also stress the value of oneAPI, which provides a consistent programming model for a variety of architectures. Because oneAPI is based on open standards, developers can write code that runs across a variety of hardware platforms without significant changes or vendor lock-in. To overcome proprietary lock-in, Arm, Google, Intel, Qualcomm, and others are working towards this objective through the Linux Foundation’s Unified Acceleration Foundation (UXL), which is creating an open ecosystem for all accelerators and unified heterogeneous compute on open standards. The UXL Foundation is expanding its coalition by adding new members.

As this is going on, Intel Tiber Developer Cloud is growing its compute capacity by adding new, cutting-edge hardware platforms and new service features that enable developers and businesses to assess the newest Intel architecture, innovate and optimise workloads and models of artificial intelligence rapidly, and then implement AI models at scale. Large-scale Intel Gaudi 2-based and Intel Data Centre GPU Max Series-based clusters, as well as previews of Intel Xeon 6 E-core and P-core systems for certain customers, are among the new hardware offerings. Intel Kubernetes Service for multiuser accounts and cloud-native AI training and inference workloads is one of the new features.

Next Up

Intel’s objective to advance HPC and AI is demonstrated by the new supercomputers being deployed with Intel Xeon CPU Max Series and Intel Data Centre GPU Max Series technologies. These systems include the Euro-Mediterranean Centre on Climate Change (CMCC) Cassandra climate change modelling system; the Italian National Agency for New Technologies, Energy and Sustainable Economic Development (ENEA) CRESCO 8 system, which will help advance fusion energy; a Texas Advanced Computing Centre (TACC) system, which is fully operational and will enable work ranging from data analysis in biology to supersonic turbulence flows and atomistic simulations on a wide range of materials; and a United Kingdom Atomic Energy Authority (UKAEA) system, which will solve memory-bound problems that underpin the design of future fusion powerplants.

The outcome of the mixed-precision AI benchmark will serve as the basis for Intel’s Falcon Shores next-generation GPU for AI and HPC. Falcon Shores will combine the next-generation Intel Xe architecture with the best features of Intel Gaudi. This integration makes a single programming interface possible.

In comparison to the previous generation, early performance results on the Intel Xeon 6 with P-cores and Multiplexer Combined Ranks (MCR) memory at 8800 megatransfers per second (MT/s) deliver up to 2.3x performance improvement for real-world HPC applications, such as Nucleus for European Modelling of the Ocean (NEMO). This solidifies the chip’s position as the host CPU of choice for HPC solutions.

Read more on govindhtech.com

#aurorasupercomputer#AISystem#AIaccelerated#highperformancecomputing#aimodels#machinelearning#inteldatacentre#intelxeoncpu#HPC#MemoryAccess#HPCApplications#AIComputing#softwaretools#intelgaudi2#IntelXeon#aibenchmark#news#technews#technology#technologynews#technologytrends#govindhtech

Gaudi 2 Intel Benchmarks GenAI Performance Against NV H100

Gaudi 2 Intel

For GenAI performance, Intel Gaudi 2 is still the only benchmarked option besides the Nvidia H100.

MLCommons released the results of the industry-standard MLPerf v4.0 benchmark for inference. Intel’s results for its Intel Gaudi 2 accelerators and 5th Gen Intel Xeon Scalable CPUs with Intel Advanced Matrix Extensions (Intel AMX) reinforce the company’s dedication to bringing “AI Everywhere” with a wide range of competitive solutions.

When it comes to generative AI (GenAI) performance, the Intel Gaudi 2 AI accelerator is still the sole benchmarked alternative to the Nvidia H100, and it offers excellent value for the money. Moreover, Intel continues to be the only server CPU manufacturer to submit MLPerf results.

Intel’s 5th Gen Xeon results improved by an average of 1.42x over its 4th Gen Intel Xeon results in MLPerf Inference v3.1.

The Reason It Matters

Intel’s MLPerf results give clients an industry-standard benchmark for assessing AI performance, building on Intel’s training and inference performance from prior MLPerf rounds.

Intel Gaudi 2 vs Nvidia H100

High-Performance AI Accelerator: The Nvidia H100 is a robust GPU intended for use in data centres for applications such as real-time deep learning inference, rapid data analytics, and high-performance computing (HPC).

Leading Performance: The H100 offers up to 7x better performance for HPC workloads than prior versions.

LLM-Friendly: With its specialised Transformer Engine, which is designed to handle large language models (LLMs), conversational AI tasks can run up to 30 times faster.

Scalability and Security: To handle exascale workloads, the NVIDIA NVLink Switch System connects up to 256 H100 GPUs, and NVIDIA Confidential Computing, a built-in feature, secures data and applications.

Intel Gaudi 2

The set of popular LLMs and multimodal models covered by the Intel Gaudi software suite continues to grow. For MLPerf Inference v4.0, Intel submitted Gaudi 2 accelerator results for the state-of-the-art models Llama v2-70B and Stable Diffusion XL.

Hugging Face Text Generation Inference (TGI) has been quite popular, and Gaudi’s Llama work took advantage of this by using the TGI toolkit, which supports tensor parallelism and continuous batching and thereby improves the efficiency of real-world LLM scaling. For Llama v2-70B, Gaudi 2 delivered 8035.0 tokens per second in the offline scenario and 6287.5 tokens per second in the server scenario. On Stable Diffusion XL, it delivered 6.26 offline samples per second and 6.25 server queries per second.

Intel Gaudi 2 specs

Process technology: 7nm
Compute: heterogeneous architecture with dual matrix multiplication engines (MME) and 24 programmable tensor processor cores (TPC)
Memory: 48 MB of SRAM and 96 GB of HBM2E onboard memory
Networking: 24 on-chip integrated 100 Gbps Ethernet ports

The Intel Gaudi 2 delivers outstanding speed and scalability and is optimised for deep learning training and inference applications. Thanks to its heterogeneous compute design, it can efficiently run a range of deep learning tasks.

Additionally, the 96 GB of built-in HBM2E memory offers plenty of capacity for data access, and the 24 on-chip 100 Gbps Ethernet ports allow multiple Gaudi 2 accelerators to communicate at high speeds. This makes the Gaudi 2 a good fit for deep learning clusters of any size.

Intel Gaudi 2 price

These findings indicate that the Intel Gaudi 2 still offers competitive price/performance, a crucial factor to take into account when examining the total cost of ownership (TCO).

AI Everywhere at Intel Vision 2024

Intel Vision will be held in Phoenix, Arizona, on April 8–9, 2024. Intel’s flagship event brings together leaders in business and technology to discuss the most recent industry developments and solutions across client, edge, data centre, and cloud.

Sign up now to take part in thought-provoking roundtables, captivating demonstrations, and cutting-edge AI insights with Intel executives and distinguished guests that will help you realise your technological vision.

Concerning the Intel 5th Gen Xeon Results: With advancements in both hardware and software, Intel’s 5th Gen Xeon results improved by a geomean of 1.42x over its 4th Gen Intel Xeon results in MLPerf Inference v3.1. For instance, with software optimisations including continuous batching, the 5th Gen Xeon submission for GPT-J showed around 1.8x better performance than the v3.1 submission. Similarly, MergedEmbeddingBag and further Intel AMX optimisations allowed DLRMv2 to achieve around 1.8x better performance with 99.9 accuracy.

Intel takes great pride in working with OEM partners, including Quanta, Supermicro, Cisco, Dell, and WiWynn, to enable them to submit their own MLPerf results. Additionally, since 2020 Intel has submitted MLPerf results for four generations of Xeon CPUs; in many submissions, Xeon also serves as the host CPU.

How to Utilise Intel Developer Cloud AI Solutions: The Intel Developer Cloud offers evaluations of 5th generation Xeon CPUs and Intel Gaudi 2 accelerators. Users may manage AI computing resources, execute training and inference production workloads at scale (LLM or GenAI), and do much more in this environment.

What to Watch For: Stay tuned for an update on Intel Gaudi 3 AI accelerators and additional information about Intel’s plan to deliver “AI Everywhere” at Intel Vision 2024.

Read more on Govindhtech.com

#intel#nvh100#intelgaudi2#genai#ai#cpu#stablediffusion#llm#intelvision#technology#technews#govindhtech
