#AIaccelerated

Explore tagged Tumblr posts

Text

Battling Bakeries in an AI Arms Race! Inside the High-Tech Doughnut Feud

#AI#TechSavvy#commercialwar#AIAccelerated#EdgeAnalytic#CloudComputing#DeepLearning#NeuralNetwork#AICardUpgradeCycle#FutureProof#ComputerVision#ModelTraining#artificialintelligence#ai#Supergirl#Batman#DC Official#Home of DCU#Kara Zor-El#Superman#Lois Lane#Clark Kent#Jimmy Olsen#My Adventures With Superman

2 notes


Text

Aurora Supercomputer Sets a New Record for AI Speed!

Intel Aurora Supercomputer

Together with Argonne National Laboratory and Hewlett Packard Enterprise (HPE), Intel announced at ISC High Performance 2024 that the Aurora supercomputer has broken the exascale barrier at 1.012 exaflops and, at 10.6 AI exaflops, is now the world's fastest AI system dedicated to open science. Intel will also discuss how open ecosystems are essential to the advancement of AI-accelerated high performance computing (HPC).

Why This Is Important:

From the beginning, Aurora was intended to be an AI-centric system that would enable scientists to use generative AI models to hasten scientific discoveries. Early AI-driven research at Argonne has advanced significantly. Among the many achievements are the mapping of the 80 billion neurons in the human brain, the improvement of high-energy particle physics by deep learning, and the acceleration of drug discovery and design using machine learning.

Analysis

The Aurora supercomputer has 166 racks, 10,624 compute blades, 21,248 Intel Xeon CPU Max Series processors, and 63,744 Intel Data Center GPU Max Series units, making it one of the world’s largest GPU clusters. With 84,992 HPE Slingshot fabric endpoints, Aurora also has the largest open, Ethernet-based supercomputing interconnect on a single system.
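The component totals quoted here are internally consistent, which a few lines of Python can confirm. The per-blade ratios the script derives (CPUs, GPUs, and fabric endpoints per blade, and blades per rack) are inferred from the totals rather than stated in the post, so treat them as assumptions.

```python
# Totals quoted in the post.
RACKS = 166
BLADES = 10_624
CPUS = 21_248
GPUS = 63_744
ENDPOINTS = 84_992


def per_unit(total, units):
    """Return how many items each unit holds, insisting on a whole number."""
    count, remainder = divmod(total, units)
    if remainder:
        raise ValueError("total does not divide evenly")
    return count


# Inferred ratios (assumptions, not figures from the post).
ratios = {
    "cpus_per_blade": per_unit(CPUS, BLADES),
    "gpus_per_blade": per_unit(GPUS, BLADES),
    "endpoints_per_blade": per_unit(ENDPOINTS, BLADES),
    "blades_per_rack": per_unit(BLADES, RACKS),
}
print(ratios)
```

Every total divides evenly, which suggests the quoted figures describe a uniform blade design.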

The Aurora supercomputer placed second on the high-performance LINPACK (HPL) benchmark, crossing the exascale barrier at 1.012 exaflops using 9,234 nodes, or just 87% of the system. It placed third on the HPCG benchmark at 5,612 TF/s with 39% of the machine. HPCG is designed to evaluate more realistic workloads and offers insight into memory access and communication patterns, two crucial components of real-world HPC systems. It complements benchmarks such as LINPACK to give a fuller picture of a system’s capabilities.
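The "87% of the system" figure can be reproduced from numbers in the post. The sketch below assumes one node per compute blade (10,624 in total), which the post implies but does not state; the per-node throughput is a simple average, not a measured value.

```python
TOTAL_NODES = 10_624   # compute blades quoted in the post (assumed one node per blade)
HPL_NODES = 9_234      # nodes used for the HPL run
HPL_EXAFLOPS = 1.012   # HPL result quoted in the post

# Share of the machine that took part in the run.
fraction = HPL_NODES / TOTAL_NODES

# Average throughput per participating node (1 exaflop = 1e6 teraflops).
per_node_tflops = HPL_EXAFLOPS * 1e6 / HPL_NODES

print(f"{fraction:.1%} of the system, about {per_node_tflops:.1f} TFLOPS per node")
```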

How AI is Optimized

The Intel Data Center GPU Max Series is the engine behind the Aurora supercomputer. At the core of the Max Series is the Intel Xe GPU architecture, which includes specialised hardware such as matrix and vector compute blocks that are well suited to AI and HPC workloads. Thanks to the computational performance of the Xe architecture, Aurora took first place on the high-performance LINPACK mixed-precision (HPL-MxP) benchmark, which best illustrates the significance of AI workloads in HPC.

The parallel processing power of the Xe architecture excels at the matrix-vector operations at the heart of neural-network computing. Deep learning models rely heavily on matrix operations, and these compute cores are essential for speeding them up. Complemented by Intel’s suite of software tools, including the Intel oneAPI DPC++/C++ Compiler, a rich collection of performance libraries, and optimised AI frameworks, the Xe architecture supports an open ecosystem for developers that is distinguished by adaptability and scalability across a range of devices and form factors.
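Mixed-precision benchmarks such as HPL-MxP reward a specific trick: do the expensive linear solve in low precision, then recover full accuracy with cheap high-precision corrections (iterative refinement). The sketch below is a toy pure-Python illustration, not Aurora's or the benchmark's implementation. It emulates float32 by rounding through `struct`, re-runs the low-precision solve on each pass instead of reusing a factorization, and uses a made-up 3×3 system.

```python
import struct


def to_f32(x):
    """Round a Python float (float64) to the nearest float32 value."""
    return struct.unpack("f", struct.pack("f", x))[0]


def solve_low_precision(a, b):
    """Gaussian elimination with partial pivoting, rounding each result to float32."""
    n = len(b)
    # Augmented matrix [A | b], stored in emulated float32.
    m = [[to_f32(v) for v in row] + [to_f32(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = to_f32(m[r][col] / m[col][col])
            for c in range(col, n + 1):
                m[r][c] = to_f32(m[r][c] - to_f32(factor * m[col][c]))
    # Back substitution, still in emulated float32.
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = m[r][n]
        for c in range(r + 1, n):
            s = to_f32(s - to_f32(m[r][c] * x[c]))
        x[r] = to_f32(s / m[r][r])
    return x


def residual(a, b, x):
    """Compute r = b - A x in full float64 precision."""
    return [bi - sum(aij * xj for aij, xj in zip(row, x)) for row, bi in zip(a, b)]


def refine(a, b, steps=5):
    """Low-precision solve followed by float64 residual corrections."""
    x = solve_low_precision(a, b)
    for _ in range(steps):
        correction = solve_low_precision(a, residual(a, b, x))
        x = [xi + di for xi, di in zip(x, correction)]
    return x


def max_error(x, reference):
    return max(abs(xi - ri) for xi, ri in zip(x, reference))


# Hypothetical, well-conditioned test system with a known solution.
a = [[4.1, 1.3, 2.7], [1.3, 5.9, 1.1], [2.7, 1.1, 6.3]]
x_true = [0.1, -0.2, 0.3]
b = [sum(aij * xj for aij, xj in zip(row, x_true)) for row in a]

err_low = max_error(solve_low_precision(a, b), x_true)
err_refined = max_error(refine(a, b), x_true)
print(f"float32-only error: {err_low:.2e}, after refinement: {err_refined:.2e}")
```

The design point is that the low-precision work dominates the cost, while the float64 residual is only a matrix-vector product, so hardware with fast low-precision matrix units can reach double-precision answers much sooner.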

Enhancing Accelerated Computing with Open Software and Capacity

Intel will stress the value of oneAPI, which provides a consistent programming model across a variety of architectures. Because oneAPI is based on open standards, developers can write code that runs across a range of hardware platforms without significant changes or vendor lock-in. Arm, Google, Intel, Qualcomm, and others are pursuing the same goal through the Linux Foundation’s Unified Acceleration Foundation (UXL), which is building an open ecosystem for all accelerators and unified heterogeneous compute on open standards. The UXL Foundation is expanding its coalition by adding new members.

Meanwhile, Intel Tiber Developer Cloud is growing its compute capacity with new hardware platforms and service features that let developers and businesses evaluate the newest Intel architectures, rapidly innovate on and optimise AI models and workloads, and then deploy AI models at scale. The new hardware offerings include large-scale Intel Gaudi 2-based and Intel Data Center GPU Max Series-based clusters, as well as previews of Intel Xeon 6 E-core and P-core systems for select customers. New features include Intel Kubernetes Service for cloud-native AI training and inference workloads and support for multiuser accounts.

Next Up

Intel’s commitment to advancing HPC and AI is demonstrated by the new supercomputers being deployed with Intel Xeon CPU Max Series and Intel Data Center GPU Max Series technologies. These systems include the Euro-Mediterranean Centre on Climate Change (CMCC) Cassandra climate change modelling system; the Italian National Agency for New Technologies, Energy and Sustainable Economic Development (ENEA) CRESCO 8 system, which will help advance fusion energy; the Texas Advanced Computing Center (TACC) system, which is fully operational and will enable work ranging from data analysis in biology to supersonic turbulence flows and atomistic simulations on a wide range of materials; and the United Kingdom Atomic Energy Authority (UKAEA) system, which will solve memory-bound problems that underpin the design of future fusion power plants.

The result from the mixed-precision AI benchmark will be foundational for Falcon Shores, Intel’s next-generation GPU for AI and HPC. Falcon Shores will combine the next-generation Intel Xe architecture with the best features of Intel Gaudi. This integration makes a single programming interface possible.

In comparison to the previous generation, early performance results on the Intel Xeon 6 with P-cores and Multiplexer Combined Ranks (MCR) memory at 8800 megatransfers per second (MT/s) deliver up to 2.3x performance improvement for real-world HPC applications, such as Nucleus for European Modelling of the Ocean (NEMO). This solidifies the chip’s position as the host CPU of choice for HPC solutions.
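To put 8800 MT/s in perspective, peak theoretical bandwidth is the transfer rate times the bytes moved per transfer. The sketch below assumes a 64-bit (8-byte) data path per memory channel, which is the usual DDR5 DIMM width but is not stated in the post; the 12-channel figure in the usage line is purely hypothetical.

```python
def peak_bandwidth_gb_s(mega_transfers_per_s, bus_bytes=8, channels=1):
    """Peak theoretical bandwidth in GB/s (1 GB = 1e9 bytes).

    bus_bytes=8 assumes a 64-bit data path per channel (an assumption).
    """
    return mega_transfers_per_s * 1e6 * bus_bytes * channels / 1e9


per_channel = peak_bandwidth_gb_s(8800)
print(f"per channel: {per_channel:.1f} GB/s")

# Hypothetical 12-channel configuration, for illustration only.
print(f"12 channels: {peak_bandwidth_gb_s(8800, channels=12):.1f} GB/s")
```

Memory-bound HPC codes tend to scale with this number rather than with core count, which is one plausible reason faster memory shows up so directly in application results.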

Read more on govindhtech.com

#aurorasupercomputer#AISystem#AIaccelerated#highperformancecomputing#aimodels#machinelearning#inteldatacentre#intelxeoncpu#HPC#MemoryAccess#HPCApplications#AIComputing#softwaretools#intelgaudi2#IntelXeon#aibenchmark#news#technews#technology#technologynews#technologytrends#govindhtech

0 notes

Text

#EdgeAI#SystemOnModule#AIAccelerator#EmbeddedSystems#VisionAI#IoT#Virtium#DEEPX#powerelectronics#powermanagement#powersemiconductor

0 notes

Text

#Virtium#energy efficiency#EdgeAI#EmbeddedSystems#IndustrialAI#SoM#AIAccelerator#IoTInnovation#TechPartnership#SmartManufacturing#AIOnEdge#TimestechUpdates#electronicsnews#technologynews

0 notes

Text

🌐 Introducing the GravityChain Accelerator 🚀 The AI-powered DeFi engine that’s transforming how Web3 earns — globally. Powered by Speak Global, built for the future.

💡 Smarter. Faster. Borderless. This isn’t just evolution — it’s a revolution in motion.

🎥 Watch now and witness the future of earning.

#GravityChain#SpeakGlobal#Web3Earnings#AIDeFi#DeFiEngine#AIAccelerator#Web3Revolution#GlobalEarnings#BlockchainFuture#DigitalWealth#NextGenDeFi#JoinTheOrbit#OwnTheChain#MillionaireOrbit#FutureOfFinance#DecentralizedFuture

0 notes

Text

Revolutionizing AI Workloads with AMD Instinct MI300X and SharonAI’s Cloud Computing Infrastructure

As the world rapidly embraces artificial intelligence, the demand for powerful GPU solutions has skyrocketed. In this evolving landscape, the AMD Instinct MI300X sets a new benchmark in AI acceleration, performance, and memory capacity. When paired with SharonAI’s cloud computing infrastructure, it changes how enterprises handle deep learning, HPC, and generative AI workloads.

At the heart of the MI300X is its CDNA 3 architecture. With 192 GB of HBM3 memory and up to 5.3 TB/s of memory bandwidth, it delivers the kind of GPU power that modern AI and machine learning workloads demand. From training massive language models to running simulations at scale, the AMD Instinct MI300X is built for speed and efficiency. For organizations pushing the boundaries of infrastructure, this level of performance offers considerable flexibility and scale.

SharonAI, a provider of GPU cloud solutions, has integrated the AMD Instinct MI300X into its global infrastructure, offering clients access to one of the most powerful AI GPU solutions available. Whether you're a startup building new generative AI models or an enterprise running critical HPC applications, SharonAI’s MI300X-powered virtual machines deliver high-throughput, low-latency computing environments optimized for today’s AI needs.

One of the standout advantages of the MI300X is its ability to hold very large models in memory, so many models need not be split across devices at all and the largest need fewer devices. This is particularly beneficial for deep learning applications that process large datasets and models with billions, or even trillions, of parameters. With the MI300X on SharonAI’s cloud, developers and data scientists can train and deploy these models faster, more efficiently, and more cost-effectively.
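A rough way to judge whether a model fits in 192 GB is to multiply its parameter count by the bytes stored per parameter. The sketch below counts weights only and ignores activations, optimizer state, and inference caches, so real requirements are higher; the model sizes and the helper names are illustrative assumptions.

```python
import math

HBM_GB = 192  # per-GPU memory quoted in the post

# Common storage widths in bytes per parameter.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_gb(params_billions, dtype):
    """Weight footprint in GB (1 GB = 1e9 bytes); weights only."""
    return params_billions * BYTES_PER_PARAM[dtype]


def min_gpus(params_billions, dtype, capacity_gb=HBM_GB):
    """Smallest number of devices whose combined memory holds the weights."""
    return math.ceil(weight_gb(params_billions, dtype) / capacity_gb)


# Hypothetical model sizes, in billions of parameters.
for label, size in [("70B", 70), ("1T", 1000)]:
    for dtype in ("fp32", "fp16"):
        print(f"{label} {dtype}: {weight_gb(size, dtype)} GB, "
              f"at least {min_gpus(size, dtype)} GPU(s)")
```

By this estimate a 70-billion-parameter model stored in 16-bit precision fits on a single device, while a trillion-parameter model still has to be sharded, just across fewer devices than smaller-memory GPUs would need.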

Another key strength of this collaboration is its open-source flexibility. Powered by AMD’s ROCm software stack, the MI300X supports popular AI frameworks like PyTorch, TensorFlow, and JAX. This eases integration and lets teams keep building without major workflow changes. For those who prioritize vendor-neutral infrastructure and future-ready systems, this combination of hardware and software is a strong fit.

SharonAI has further distinguished itself with a commitment to sustainability and scalability. Its high-performance data centers are designed to support dense GPU workloads while maintaining carbon efficiency, a major win for enterprises that value green technology alongside cutting-edge performance.

In summary, the pairing of AMD Instinct MI300X and SharonAI provides a compelling solution for businesses looking to accelerate their AI journey. From generative AI to mission-critical HPC, this combination delivers the GPU power, scalability, and flexibility needed to thrive in the AI era. For any organization looking to enhance its ML infrastructure through powerful, cloud-based AI GPU solutions, SharonAI’s MI300X offerings are worth a close look.

#AMDInstinctMI300X#AIAcceleration#DeepLearning#GenerativeAI#HPC#MLInfrastructure#CloudComputing#DataScience#AIResearch#SharonAI

0 notes

Text

Introducing the FCU3501, our rugged, next-gen embedded computer powered by the Rockchip RK3588 SoC. Designed for industrial AI at the edge, it delivers up to 32 TOPS of AI performance via onboard NPU + Hailo-8 M.2 accelerator.

🔧 Key Features:

Dual NPU Architecture – 6 TOPS onboard + 26 TOPS via Hailo‑8

Supports 8K Video Encoding/Decoding – Perfect for smart vision applications

Wide Temp, Fanless Design (–40 °C to +85 °C)

Certified for Industrial Use – CE, FCC, RoHS, EMC-compliant

Modular Storage & Expansion – M.2 SSD, TF card, 4G/5G optional

Rich I/O for Smart Factories, Transportation, Buildings & more

📩 Want a spec sheet or evaluation sample? Contact us: [email protected]

#EdgeAI#RK3588#Hailo8#EmbeddedComputer#IndustrialAutomation#AIAccelerator#SmartFactory#MachineVision#ProductLaunch

0 notes

Text

The US and UAE are accelerating the future of AI. A 1GW data center. A 5GW tech cluster. A joint task force in 30 days. The countdown has begun. The future is in motion. 🇺🇸🇦🇪 #USUAEAI #FutureInMotion #AIAcceleration #digixplanet #digixideaslab #digitalmarketing

0 notes

Text

How Does AI Generate Human-Like Voices? 2025

Artificial Intelligence (AI) has made incredible advancements in speech synthesis. AI-generated voices now sound almost indistinguishable from real human speech. But how does this technology work? What makes AI-generated voices so natural, expressive, and lifelike? In this deep dive, we’ll explore:

✔ The core technologies behind AI voice generation.
✔ How AI learns to mimic human speech patterns.
✔ Applications and real-world use cases.
✔ The future of AI-generated voices in 2025 and beyond.

Understanding AI Voice Generation

At its core, AI-generated speech relies on deep learning models that analyze human speech and generate realistic voices. These models use vast amounts of data, phonetics, and linguistic patterns to synthesize speech that mimics the tone, emotion, and natural flow of a real human voice.

1. Text-to-Speech (TTS) Systems

Traditional text-to-speech (TTS) systems used rule-based models. However, these sounded robotic and unnatural because they couldn't capture the rhythm, tone, and emotion of real human speech. Modern AI-powered TTS uses deep learning and neural networks to generate much more human-like voices. These advanced models process:

✔ Phonetics (how words sound).
✔ Prosody (intonation, rhythm, stress).
✔ Contextual awareness (understanding sentence structure).

💡 Example: AI can now pause, emphasize words, and mimic real human speech patterns instead of sounding monotone.

2. Deep Learning & Neural Networks

AI speech synthesis is driven by deep neural networks (DNNs), which are loosely inspired by the human brain. These networks analyze thousands of real human voice recordings and learn:

✔ How humans naturally pronounce words.
✔ The pitch, tone, and emphasis of speech.
✔ How emotions impact voice (anger, happiness, sadness, etc.).

Some of the most powerful deep learning models include:

WaveNet (Google DeepMind)

Developed by Google DeepMind, WaveNet uses a deep neural network that models raw audio waveforms directly. It produces natural-sounding speech with realistic tones, inflections, and even breathing patterns.

Tacotron & Tacotron 2

Tacotron models, developed by Google AI, focus on improving:

✔ Natural pronunciation of words.
✔ Pauses and speech flow to match human speech patterns.
✔ Voice modulation for realistic expression.

3. Voice Cloning & Deepfake Voices

One of the biggest breakthroughs in AI voice synthesis is voice cloning. This technology allows AI to:

✔ Copy a person’s voice with just a few minutes of recorded audio.
✔ Generate speech in that person’s exact tone and style.
✔ Mimic emotions, pitch, and speech variations.

💡 Example: If an AI listens to 5 minutes of Elon Musk’s voice, it can generate full speeches in his exact tone and speech style. This is called deepfake voice technology.

🔴 Ethical Concern: This technology can be used for fraud and misinformation, like creating fake political speeches or scam calls that sound real.

How AI Learns to Speak Like Humans

AI voice synthesis follows three major steps:

Step 1: Data Collection & Training

AI systems collect millions of human speech recordings to learn:

✔ Pronunciation of words in different accents.
✔ Pitch, tone, and emotional expression.
✔ How people emphasize words naturally.

💡 Example: AI listens to how people say "I love this product!" and learns how different emotions change the way it sounds.

Step 2: Neural Network Processing

AI breaks down voice data into small sound units (phonemes) and reconstructs them into natural-sounding speech. It then:

✔ Creates realistic sentence structures.
✔ Adds human-like pauses, stresses, and tonal changes.
✔ Removes robotic or unnatural elements.

Step 3: Speech Synthesis Output

After processing, AI generates speech that sounds fluid, emotional, and human-like. Modern AI can now:

✔ Imitate accents and speech styles.
✔ Adjust pitch and tone in real time.
✔ Change emotional expressions (happy, sad, excited).
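The idea of breaking speech into phonemes and inserting pauses can be sketched with a toy text front end. Everything below is illustrative: the tiny `LEXICON`, the ARPAbet-style symbols, and the `<pause>` marker are assumptions made for this example, and real systems replace the lookup table with large pronunciation dictionaries or learned models.

```python
import re

# Hypothetical mini pronunciation lexicon (ARPAbet-style symbols).
LEXICON = {
    "i": ["AY"],
    "love": ["L", "AH", "V"],
    "this": ["DH", "IH", "S"],
    "product": ["P", "R", "AA", "D", "AH", "K", "T"],
}
PUNCTUATION = {".", ",", "!", "?"}
PAUSE = "<pause>"


def text_to_phonemes(text):
    """Turn text into a flat phoneme sequence, marking punctuation as pauses."""
    tokens = re.findall(r"[a-z']+|[.,!?]", text.lower())
    phonemes = []
    for token in tokens:
        if token in PUNCTUATION:
            phonemes.append(PAUSE)
        elif token in LEXICON:
            phonemes.extend(LEXICON[token])
        else:
            # Unknown words are flagged rather than guessed.
            phonemes.append(f"<unk:{token}>")
    return phonemes


print(text_to_phonemes("I love this product!"))
```

A neural synthesizer would take a sequence like this, together with prosody features such as pitch and duration, and predict the audio itself.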

Real-World Applications of AI-Generated Voices

AI-generated voices are transforming multiple industries:

1. Voice Assistants (Alexa, Siri, Google Assistant)

AI voice assistants now sound more natural, conversational, and human-like than ever before. They can:

✔ Understand context and respond naturally.
✔ Adjust tone based on conversation flow.
✔ Speak in different accents and languages.

2. Audiobooks & Voiceovers

Instead of hiring voice actors, AI-generated voices can now:

✔ Narrate entire audiobooks in human-like voices.
✔ Adjust voice tone based on story emotion.
✔ Sound different for each character in a book.

💡 Example: AI-generated voices are now used for animated movies, YouTube videos, and podcasts.

3. Customer Service & Call Centers

Companies use AI voices for automated customer support, reducing costs and improving efficiency. AI voice systems:

✔ Respond naturally to customer questions.
✔ Understand emotional tone in conversations.
✔ Adjust voice tone based on urgency.

💡 Example: Banks use AI voice bots for automated fraud detection calls.

4. AI-Generated Speech for Disabled Individuals

AI voice synthesis is helping people who have lost their voice due to medical conditions. AI-generated speech allows them to:

✔ Type text and have AI speak for them.
✔ Use their own cloned voice for communication.
✔ Improve accessibility for those with speech impairments.

💡 Example: Stephen Hawking communicated using a computer-generated voice.

The Future of AI-Generated Voices in 2025 & Beyond

AI-generated speech is evolving fast. Here’s what’s next:

1. Fully Realistic Conversational AI

By 2025, AI voices will sound completely human, making robots and AI assistants indistinguishable from real humans.

2. Real-Time AI Voice Translation

AI will soon allow real-time speech translation in different languages while keeping the original speaker’s voice and tone.

💡 Example: A Japanese speaker’s voice can be translated into English, but still sound like their real voice.

3. AI Voice in the Metaverse & Virtual Worlds

AI-generated voices will power realistic avatars in virtual worlds, enabling:

✔ AI-powered characters with human-like speech.
✔ AI-generated narrators in VR experiences.
✔ Fully voiced AI NPCs in video games.

Final Thoughts

AI-generated voices have reached an incredible level of realism. From voice assistants to deepfake voice cloning, AI is revolutionizing how we interact with technology. However, ethical concerns remain. With the ability to clone voices and create deepfake speech, AI-generated voices must be used responsibly.

In the future, AI will likely replace human voice actors, power next-gen customer service, and enable lifelike AI assistants. But one thing is clear: AI-generated voices are becoming indistinguishable from real humans.

#1#2#2025-01-01t00:00:00.000+00:00#3#4#5#accent(sociolinguistics)#accessibility#aiaccelerator#amazonalexa#anger#animation#artificialintelligence#audiodeepfake#audiobook#avatar(computing)#blackhole#blog#brain#chatbot#cloning#communication#computer-generatedimagery#conversation#customer#customerservice#customersupport#data#datacollection#deeplearning

0 notes

Text

#FPGA#Semiconductors#TechGrowth#AIAcceleration#EmbeddedSystems#DataCenters#IoTInnovation#EdgeComputing#ChipDesign#ElectronicsMarket#5GTechnology#SmartDevices#FutureTech#ComputingPower#ReconfigurableHardware

0 notes

Text

Artificial Intelligence (AI) Updates

Top Trends LLC (DBA "Top Trends") is a dynamic and information-rich web platform that empowers its readers with a broad spectrum of knowledge, insights, and data-driven trends. Our professional writers, industry experts, and enthusiasts dive deep into Artificial Intelligence, Finance, Startups, SEO and Backlinks.

#AIAdvancements#AIBreakthroughs#AIFrontiers#AIAcceleration#AIInnovationWave#AIDiscoveries#AIEvolutions#AIRevolution#AITrends2024#AIBeyondLimits

0 notes

Text

Our AI-based performance testing accelerator automates the scripting process, reducing scripting time to just 24 hours, a drastic improvement over the conventional weeks-long process. Unlike manual scripting, our accelerator addresses diverse testing scenarios within minutes. This not only speeds up testing but also ensures adaptability to a variety of scenarios.

Talk to our performance experts at https://rtctek.com/contact-us/. Visit https://rtctek.com/performance-testing-services to learn more about our services.

#rtctek#roundtheclocktechnologies#performancetesting#performance#aiaccelerator#ptaccelerator#testingaccelerator

0 notes

Text

Genio 510: Redefining the Future of Smart Retail Experiences

Genio IoT Platform by MediaTek

Genio 510

Manufacturers of consumer, business, and industrial devices can benefit from the MediaTek Genio IoT Platform’s innovation, quicker market access, and more than a decade of longevity. MediaTek Genio IoT is a range of IoT chipsets designed to enable innovative devices. With cooperation and support from conception through design and production, MediaTek helps its customers succeed. MediaTek can pivot, scale, and adjust to needs thanks to its global network of reliable distributors and business partners.

Genio 510 features

High performance

Broad range of third-party modules and power-efficient, high-performing IoT SoCs

Advanced multimedia, AI accelerators, and cores that improve intelligent, autonomous capabilities at the edge

Connectivity

Sub-6GHz 5G technologies and Wi-Fi protocols for consumer, business, and industrial use

Both powerful and energy-efficient

Adaptable, quick interfaces

Global 5G modem supported by carriers

Premium support

From idea to design to manufacture, MediaTek works with clients, sharing experience and offering thorough documentation, in-depth training, and reliable developer tools.

Security

High-security IoT SoCs and intelligent modules for building products

Several applications on one common platform

Developing industry, commercial, and enterprise IoT applications on a single platform that works with all SoCs can save development costs and accelerate time to market.

MediaTek Genio 510

Smart retail, industrial, factory automation, and many more Internet of Things applications are powered by MediaTek’s Genio 510. MediaTek, a leading fabless semiconductor company, will be present at Embedded World 2024, which takes place in Nuremberg this week, along with a number of other firms. Its most recent IoT innovations are on display at the event, and the company will be talking about how these MediaTek-powered products serve a variety of market sectors.

One of the demos will showcase the recently released MediaTek Genio 510 SoC. The Genio 510 offers high-efficiency solutions in AI performance, CPU and graphics, 4K display, rich input/output, and 5G and Wi-Fi 6 connectivity for popular IoT applications. Because the Genio 510 and Genio 700 chips are pin-compatible, product developers can segment and diversify their designs for different markets without paying for a redesign.

The MediaTek Genio 510 is well suited to numerous applications, such as digital menus and table service displays, kiosks, smart home displays, point of sale (PoS) devices, and various advertising and public-space HMI applications. Industrial HMI uses include ruggedized tablets for commercial and industrial vehicles, smart agriculture, healthcare, EV charging infrastructure, factory automation, transportation, warehousing, and logistics.

The fully integrated, extensive feature set of Genio 510 makes such diversity possible:

Support for two displays, such as an FHD and 4K display

Modern visual quality support for two cameras built on MediaTek’s tried-and-true technologies

A powerful multi-core AI processor with a dedicated visual processing engine for a wide range of computer vision applications, such as facial recognition, object/people identification, collision warning, driver monitoring, gesture and posture detection, and image segmentation

Rich input/output for peripherals, such as network connectivity, manufacturing equipment, scanners, card readers, and sensors

4K encoding engine (camera recording) and 4K video decoding (multimedia playback for advertising)

Exceptionally power-efficient 6nm SoC

Ready for MediaTek NeuroPilot AI SDK and multitasking OS (time to market accelerated by familiar development environment)

Support for fanless design and industrial-grade temperature operation (-40 °C to 105 °C)

10-year supply guarantee (one-stop shop supported by a top semiconductor manufacturer in the world)

To what extent does it surpass the alternatives?

The Genio 510 uses more than 50% less power and provides over 250% more CPU performance than the direct alternative!

The MediaTek Genio 510 is an efficient IoT platform designed for Edge AI, interactive retail, smart homes, industrial, and commercial uses. It offers multitasking OS support, sophisticated multimedia, very fast edge processing, and more. It is intended for products that pair well with off-grid power systems and fanless enclosure designs.

EVK MediaTek Genio 510

The MediaTek Genio 510 EVK is an evaluation kit for the Genio 510 (MT8370) edge-AI IoT platform, which targets smart homes, interactive retail, industrial, and commercial applications. It supports multiple multitasking operating systems, a variety of networking choices, very responsive edge processing, and sophisticated multimedia capabilities.

SoC: MediaTek Genio 510

This Edge AI platform, built on a highly efficient 6nm process, combines an integrated APU (AI processor), DSP, Arm Mali-G57 MC2 GPU, and six cores (2×2.2 GHz Arm Cortex-A78 and 4×2.0 GHz Arm Cortex-A55) into a single chip. Thanks to an HEVC encoding acceleration engine, video recorded with attached cameras can be encoded at up to Full HD resolution while using as little storage space as possible.

FAQs

What is the MediaTek Genio 510?

A chipset intended for a broad spectrum of Internet of Things (IoT) applications is the Genio 510.

What kind of IoT applications is the Genio 510 suited for?

Because of its adaptability, the Genio 510 may be utilised in a wide range of applications, including smart homes, healthcare, transportation, and agriculture, as well as industrial automation (rugged tablets, manufacturing machinery, and point-of-sale systems).

What are the benefits of using the Genio 510?

The Genio 510 offers rich input/output choices, powerful CPU and graphics processing, support for 4K displays, high-efficiency AI performance, and connectivity options such as 5G and Wi-Fi 6.

Read more on Govindhtech.com

#genio#genio510#MediaTek#govindhtech#IoT#AIAccelerator#WIFI#5gtechnologies#CPU#processors#mediatekprocessor#news#technews#technology#technologytrends#technologynews

2 notes


Link

#AD#ADAS#advanceddriver-assistancesystems#AIaccelerator#AIprocessing#AIvisionprocessor#autonomousdriving#CES2024#edgeAI#Futurride#Gunsens#Hailo#Hailo-15#Hailo-8#iMotion#Renesas#smartcamera#sustainablemobility#TierIV#Truen#TTControl#Velo.ai#videoanalytics

0 notes

Text

#ASRock Industrial#AI acceleration#ASRockIndustrial#EdgeComputing#AIoT#IndustrialAutomation#SmartManufacturing#EmbeddedSystems#AIAcceleration#electronicsnews#technologynews

0 notes

Photo

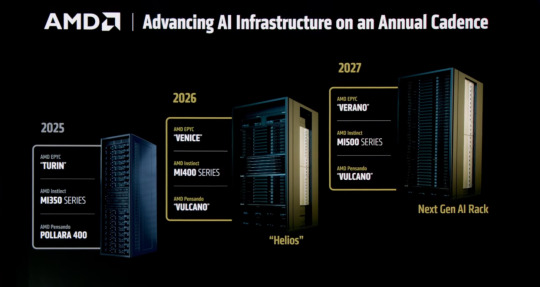

Curious about the future of high-performance CPUs? AMD has confirmed their next-gen EPYC Venice “Zen 6” CPUs with an astonishing 256 cores in 2026, along with EPYC Verano “Zen 7” and Instinct MI500 GPUs coming in 2027. These advancements promise to revolutionize data centers and AI workloads, with up to 512 threads, 16-channel DDR5 memory, and cutting-edge 2nm technology. This means more power, efficiency, and innovation for server racks and AI development — changing the game for enterprise and tech enthusiasts alike. Are you prepared for these next-level innovations in computing? Discover how your business can leverage these upcoming technologies with custom builds from GroovyComputers.ca. Click the link to learn more! What future tech are you most excited to see? Comment below! #AI #DataCenter #HighPerformanceComputing #ServerBuilds #TechInnovation #NextGenCPUs #AMD #Zen6 #Zen7 #CustomPC #TechTrends #AIAccelerators #GroovyComputers

0 notes