#linuxkernel


Text

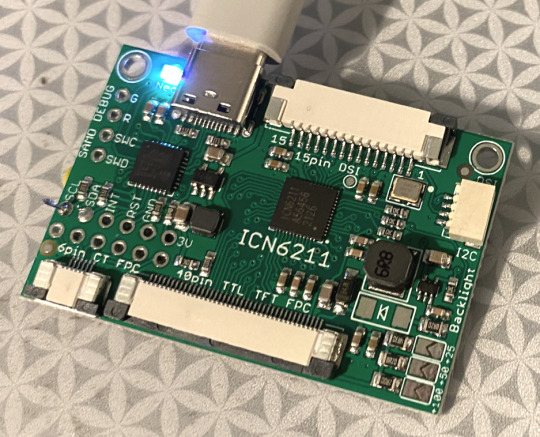

The famed ICN6211 DSI to RGB prototype arrives! 🔧🔬💻

OK we got our prototype PCB of a SAMD21 + ICN6211 dev board in hand, and we're starting to bring it up. first up, we'll get I2C running so we can scan for the ICN6211 chip. then it's time to pore over an exfiltrated datasheet to map out all the registers to configure the two graphics ports. finally - we'll try to get this running on a mainline linux kernel with a device tree overlay. this should be super easy, right…?
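A minimal sketch of what the "scan for the chip" step could look like. It assumes the I2C access is wrapped in a `probe(address)` callable that returns True on an ACK; `scan_i2c`, `fake_probe`, and the 0x2C address are made up for the demo and are not taken from the board or the datasheet.

```python
from typing import Callable, Iterable, List


def scan_i2c(probe: Callable[[int], bool],
             addresses: Iterable[int] = range(0x08, 0x78)) -> List[int]:
    """Return every 7-bit address for which probe(address) reports an ACK."""
    found = []
    for address in addresses:
        try:
            if probe(address):
                found.append(address)
        except OSError:
            # Many I2C stacks raise an I/O error on a NACK; treat it as "no device".
            continue
    return found


def fake_probe(address: int) -> bool:
    """Hypothetical stand-in for real bus access: one device answers at 0x2C."""
    return address == 0x2C


if __name__ == "__main__":
    print([hex(a) for a in scan_i2c(fake_probe)])
```

On real hardware `fake_probe` would be swapped for whatever zero-length write or read the platform's I2C driver offers; the default range skips the reserved addresses at both ends of the 7-bit space.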

#adafruit#electronics#TechInnovation#HardwareDevelopment#PrototypeUnveiling#EmbeddedSystems#ICN6211#SAMD21#I2CProtocol#DataSheets#LinuxKernel#DeviceTreeOverlay

6 notes


Text

CSD #OnlineKurs #YazılımKursları #Başlıyor #GerçekZamanlıKurs

Friends, we have announced the start dates for many of our courses. Thank you for the interest you have shown in them. To fill in the pre-registration form for the course you want to join, you can visit our association's website.

Pre-registration:

All information:

All information about our courses (content, fees, course days, course duration, etc.) is given in the course announcements and in the pre-registration form.

Phone:

For questions about our courses, you can reach us by phone at 0542 222 07 93 and 0533 527 43 38.

#CSD#OnlineKurs#YazılımKursları#Başlıyor#ProgramlamayaGiriş#Java#CSharp#ARMmikrodenetleyiciUygulamaları#Rust#Javascript#UnixLinuxSistemProgramlama#PythonUygulamaları#LinuxKernel#GerçekZamanlıKurs

0 notes

Video

youtube

Keynote: Linus Torvalds in Conversation with Dirk Hohndel

0 notes

Text

Advanced Network Observability: Hubble for AKS Clusters

Advanced Container Networking Services

Advanced Container Networking Services is a new offering from Microsoft’s Azure Container Networking team, following the successful open sourcing of Retina: A Cloud-Native Container Networking Observability Platform. It is a suite of services, built on top of the existing networking solutions for Azure Kubernetes Service (AKS), that addresses difficult problems in observability, security, and compliance. Advanced Network Observability, the first feature in this suite, is currently available in public preview.

Advanced Container Networking Services: What Is It?

Advanced Container Networking Services is a suite of services designed to significantly improve the operational capabilities of your Azure Kubernetes Service (AKS) clusters. The suite is comprehensive and built to handle the complex and varied requirements of modern containerized applications. With capabilities designed specifically for security, compliance, and observability, customers can unlock a new way of managing container networking.

The primary goal of Advanced Container Networking Services is to provide a smooth, integrated experience that gives you the ability to uphold strong security postures, guarantee thorough compliance, and obtain insightful information about your network traffic and application performance. This lets you grow and manage your infrastructure with confidence knowing that your containerized apps meet or surpass your performance and reliability targets in addition to being safe and compliant.

Advanced Network Observability: What Is It?

Advanced Network Observability, the first feature of the Advanced Container Networking Services suite, brings the power of Hubble’s control plane to both Cilium and non-Cilium Linux data planes. It gives you deep insights into your containerized workloads by unlocking Hubble metrics, the Hubble user interface (UI), and the Hubble command line interface (CLI) on your AKS clusters. With Advanced Network Observability, users can accurately detect and identify the root cause of network-related problems within a Kubernetes cluster.

This feature leverages extended Berkeley Packet Filter (eBPF) technology to collect data in real time from the Linux Kernel and offers network flow information at the pod-level granularity in the form of metrics or flow logs. It now provides detailed request and response insights along with network traffic flows, volumetric statistics, and dropped packets, in addition to domain name service (DNS) metrics and flow information.

eBPF-based observability driven by Retina or Cilium.

A Container Network Interface (CNI) agnostic experience.

Using Hubble metrics, track network traffic in real time to find bottlenecks and performance problems.

Hubble command line interface (CLI) network flows allow you to trace packet flows throughout your cluster on-demand, which can help you diagnose and comprehend intricate networking behaviours.

Using an unmanaged Hubble UI, visualise network dependencies and interactions between services to guarantee optimal configuration and performance.

To improve security postures and satisfy compliance requirements, produce comprehensive metrics and records.


Container Network Interface (CNI) agnostic Hubble

Advanced Network Observability extends the Hubble control plane beyond Cilium. In Cilium-based clusters, Hubble receives the eBPF events from Cilium. In non-Cilium clusters, Microsoft Retina acts as the data plane that surfaces deep insights to Hubble, giving users a smooth interactive experience.

Visualizing Hubble metrics with Grafana

Advanced Network Observability supports two ways of visualizing Hubble metrics with Grafana:

Azure Managed Prometheus and Grafana

Bring your own (BYO) Prometheus and Grafana, for advanced users who can handle more administration overhead.

With the Azure-managed Prometheus and Grafana approach, Azure provides integrated services that streamline the setup and maintenance of monitoring and visualization. Azure Monitor offers a managed instance of Prometheus, which collects and stores metrics from several sources, including Hubble.

Hubble CLI querying network flows

Customers can query for all or filtered network flows across all nodes using the Hubble command line interface (CLI) while using Advanced Network Observability.

Through a single pane of glass, users can see whether flows were dropped or forwarded on any node.
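As a rough illustration of the "dropped or forwarded, per node" view, the sketch below tallies flow records by node and verdict. The record layout (plain dictionaries with `node` and `verdict` keys) is a simplifying assumption for this example and is not Hubble's actual output schema; `verdicts_by_node` is a hypothetical helper.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def verdicts_by_node(flows: Iterable[Dict[str, str]]) -> Counter:
    """Count flows per (node, verdict) pair."""
    counts: Counter = Counter()
    for flow in flows:
        key: Tuple[str, str] = (flow["node"], flow["verdict"])
        counts[key] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical sample records, not real cluster data.
    sample = [
        {"node": "node-a", "verdict": "FORWARDED"},
        {"node": "node-a", "verdict": "DROPPED"},
        {"node": "node-b", "verdict": "FORWARDED"},
        {"node": "node-a", "verdict": "DROPPED"},
    ]
    for (node, verdict), count in sorted(verdicts_by_node(sample).items()):
        print(node, verdict, count)
```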

Hubble UI service dependency graph

To visualize service dependencies, customers can install Hubble UI on clusters that have Advanced Network Observability enabled. Customers can choose a namespace and view network flows between various pods within the cluster using Hubble UI, which offers an on-demand view of all flows throughout the cluster and surfaces detailed information about each flow.

Advantages

Increased network visibility

Unmatched network visibility is made possible by Advanced Network Observability, which delivers detailed insights into network activity down to the pod level. Administrators can keep an eye on traffic patterns, spot irregularities, and get a thorough grasp of network behavior inside their Azure Kubernetes Service (AKS) clusters thanks to this in-depth insight. Advanced Network Observability offers real-time metrics and logs that reveal traffic volume, packet drops, and DNS metrics by utilizing eBPF-based data collecting from the Linux Kernel. The improved visibility guarantees that network managers can quickly detect and resolve possible problems, preserving the best possible network security and performance.

Tracking of cross-node network flow

With Advanced Network Observability, customers can monitor network flows across multiple nodes in their Kubernetes clusters. This makes it possible to trace packet flows precisely and to understand intricate networking behaviors and node-to-node interactions. The Hubble CLI lets users query network flows and filter them to examine particular traffic patterns. Being able to trace packets across nodes and find dropped and forwarded packets in a single pane of glass makes cross-node tracking a valuable tool for troubleshooting network problems.

Monitoring performance in real time

Customers can monitor performance in real time using Advanced Network Observability. With Hubble metrics powered by Cilium or Retina, customers can track network traffic in real time and spot performance problems and bottlenecks as they arise. This immediate feedback loop is essential for maintaining high performance and for making sure that any decline in network performance is quickly detected and fixed. The continuous, in-depth insight into network operations provided by Hubble metrics and flow logs makes proactive management and quick troubleshooting possible.

Historical analysis using several clusters

When combined with Azure Managed Prometheus and Grafana, the advantages of Advanced Network Observability extend to multi-cluster systems. These include historical analysis, which is crucial for long-term network management and optimization. By archiving and examining past data from several clusters, administrators can find trends, patterns, and recurring problems that may affect network performance and reliability in the future. This historical perspective is essential for capacity planning, performance benchmarking, and compliance reporting. The ability to examine historical network data shows how network performance has changed over time and informs future decisions about network setup and design.

Read more on Govindhtech.com

#AzureKubernetesServices#NetworkObservability#MicrosoftRetina#azure#LinuxKernel#AzureManaged#microsoft#news#technews#technology#technologynews#technologytrends#govindhtech

0 notes

Text

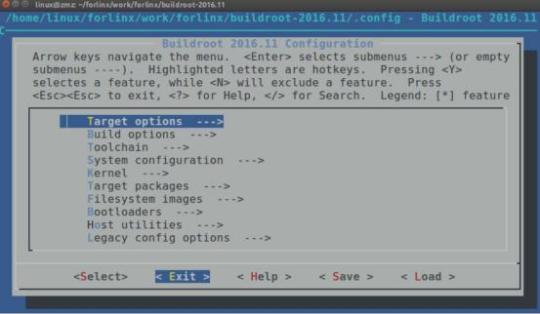

How to Configure the Linux Kernel Using make menuconfig?

There are several methods to configure the Linux kernel, and one of them is using the text menu-based configuration interface called "make menuconfig", which provides an intuitive and straightforward way of configuring the kernel.

1. Components of the Linux Kernel Configuration:

Configuring the Linux kernel involves three main components: a Makefile, a configuration file, and a configuration tool. In this article, we will dive into a specific configuration tool called make menuconfig.

2. The process of using make menuconfig:

When you execute make menuconfig, several files are involved:

• The "scripts" directory in the Linux source root (not relevant for end users)

• Kconfig files in the arch/$ARCH/ directory and various subdirectories

• The Makefile, which defines the values of environment variables

• The .config file, which stores the selected configuration values

• The include/generated/autoconf.h file, which stores configuration options as macro definitions
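To make the relationship between .config and autoconf.h concrete, here is a simplified sketch of the mapping from configuration lines to macro definitions. It is an illustration only, not the kernel's own generator; `config_to_macros` is a hypothetical helper and `CONFIG_FOO` and `CONFIG_BAZ` are placeholder option names.

```python
from typing import List


def config_to_macros(config_text: str) -> List[str]:
    """Translate .config-style lines into autoconf.h-style #define lines."""
    macros = []
    for raw in config_text.splitlines():
        line = raw.strip()
        # Blank lines and comments (including "# CONFIG_X is not set")
        # produce no macro at all.
        if not line or line.startswith("#"):
            continue
        name, _, value = line.partition("=")
        if value == "y":
            macros.append(f"#define {name} 1")          # built in
        elif value == "m":
            macros.append(f"#define {name}_MODULE 1")   # built as a module
        else:
            macros.append(f"#define {name} {value}")    # number or quoted string
    return macros


if __name__ == "__main__":
    sample = "\n".join([
        "CONFIG_FOO=y",
        "# CONFIG_BAR is not set",
        "CONFIG_BAZ=m",
        "CONFIG_HZ=250",
        'CONFIG_CMDLINE="quiet"',
    ])
    print("\n".join(config_to_macros(sample)))
```

Options that are switched off leave no macro behind, which is why kernel code tests for them with `#ifdef` rather than comparing against 0.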

3. How to add kernel functions using make menuconfig

To illustrate the process, let's consider adding new functionality to the kernel using make menuconfig:

Step 1: Add the appropriate options to the Kconfig file using the Kconfig syntax.

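Step 2: Execute make menuconfig, select the new option, and save. This writes the .config file; autoconf.h is regenerated from it when the build starts.

Step 3: Add compilation options to the relevant Makefile.

Step 4: Execute make zImage to compile the kernel with the new features (zImage is the target on ARM; the image target varies by architecture).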

With the above steps, you can effectively use make menuconfig to configure the Linux kernel.

Originally published at www.forlinx.net.

0 notes

Text

Linux Kernel Vulnerability Allows Code Execution and Privilege Escalation (CVE-2024-53141)

#LinuxKernel #BảoMật #CVE202453141 #LỗHổng A critical vulnerability has been discovered in the Linux kernel, affecting versions from v2.7 to v6.12. The vulnerability lies in the ipset component of netfilter, specifically in the bitmap_ip_uadt() function. When the IPSET_ATTR_IP_TO parameter is absent but IPSET_ATTR_CIDR…

0 notes

Text

Linux Software Market on Track to Double by 2033 💻

The Linux software market is set to witness substantial growth, expanding from $7.5 billion in 2023 to $15.2 billion by 2033, reflecting a robust CAGR of 7.2% over the forecast period. This market thrives on the open-source foundation of Linux, offering customizable, cost-effective, and secure software solutions for diverse industries.
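The growth figures can be sanity-checked with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below applies it to the numbers quoted above; the function names are illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values."""
    return (end_value / start_value) ** (1.0 / years) - 1.0


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding start_value at a fixed annual rate."""
    return start_value * (1.0 + rate) ** years


if __name__ == "__main__":
    # Figures from the post: $7.5 billion (2023) to $15.2 billion (2033).
    print(f"Implied CAGR: {cagr(7.5, 15.2, 10):.2%}")
    print(f"$7.5B at 7.2% for 10 years: ${project(7.5, 0.072, 10):.2f}B")
```

The implied rate comes out at roughly 7.3%, and compounding $7.5 billion at the stated 7.2% gives about $15.0 billion, so the quoted figures agree within rounding.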

To Request Sample Report: https://www.globalinsightservices.com/request-sample/?id=GIS32383&utm_source=SnehaPatil&utm_medium=Article

Linux powers a broad spectrum of applications, from enterprise servers and cloud platforms to embedded systems and personal computing. The enterprise segment dominates the market, with businesses leveraging Linux for its scalability, reliability, and robust security. Cloud computing follows as a high-performing sub-segment, driven by organizations adopting cloud-based solutions to optimize IT infrastructure and reduce costs.

North America leads the Linux software market, benefiting from advanced technological infrastructure and a strong concentration of tech-forward enterprises. Europe is the second-largest region, with countries like Germany and the United Kingdom spearheading open-source adoption as part of their digital transformation efforts. Meanwhile, the Asia-Pacific region emerges as a key growth area, fueled by rapid industrialization and a burgeoning tech startup ecosystem that embraces Linux for its adaptability and community-driven development.

Emerging technologies such as virtualization, IoT, AI, and big data analytics are propelling Linux adoption across new frontiers, from web servers and networking to scientific research and software development. Flexible deployment options, including on-premise, cloud-based, and hybrid models, further enhance Linux’s appeal across industries like IT, BFSI, healthcare, and retail.

As the demand for secure, reliable, and flexible software solutions grows, the Linux software market continues to expand, driving innovation and fostering global collaboration.

#LinuxSoftware #OpenSource #CloudComputing #EnterpriseLinux #BigData #IoT #AI #LinuxKernel #DigitalTransformation #SoftwareDevelopment #TechInnovation #CyberSecurity #SystemAdministration #LinuxCommunity #CAGRGrowth

0 notes

Text

Completed #linux project: The Open 3D Foundation Welcomes Epic Games as a Premier Member to Unleash the Creativity of Artists Everywhere

0 notes

Photo

Linux Kernel Mascot Tux The Penguin LARGE Stuffed Plush Animal Vintage | Starfind Discover More at: https://www.ebay.com/str/starfind 🎈 #linux #linuxkernel #linuxmascot #linuxpenguin #tuxthepenguin #linuxfan #linuxlover #linuxisbetter #linuxforlife #linuxuser #linuxusers #stuffedanimals #plushanimalsfordays #rarestuffedanimals (at Cambridge, Massachusetts) https://www.instagram.com/p/CUwAlU7lH49/?utm_medium=tumblr

#linux#linuxkernel#linuxmascot#linuxpenguin#tuxthepenguin#linuxfan#linuxlover#linuxisbetter#linuxforlife#linuxuser#linuxusers#stuffedanimals#plushanimalsfordays#rarestuffedanimals

0 notes

Text

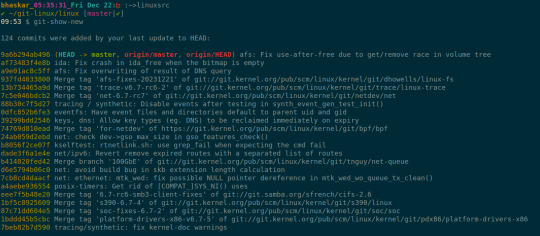

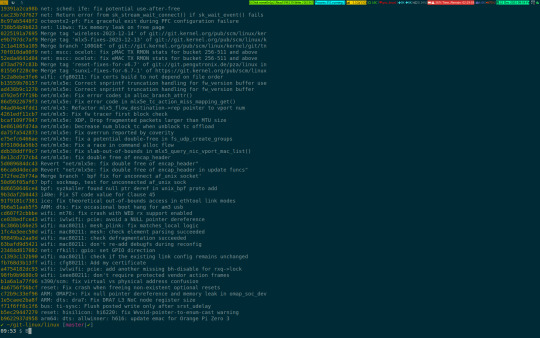

A glance 👀

#linuxkernel#opensourcedevelopment#operatingsystemadministration#linuxsystemadministration#research#tools#git#projectmanagement

1 note


Text

Five Linux Kernel Loading and Starting Methods

Understanding the Linux Kernel

Running software on a virtual platform requires loading it onto the target first, and how that works depends on how the target system boots and how the software is built. The Intel Simics Quick-Start Platform has gained the ability to load a Linux kernel binary directly. Previously, each kernel variant had to be installed into a disk image before the virtual platform booted, which was inconvenient.

It helps to consider how a virtual platform boots Linux and why that matters. The more realistic a virtual platform is, the harder it becomes to “cheat” and offer conveniences that real hardware lacks. The new Quick-Start Platform setup is convenient without changing the virtual platform or the BIOS/UEFI.

What is a Linux system? A “Linux system” needs three things:

The Linux kernel: the core of the operating system, along with any modules compiled into it.

A root file system: the file system the Linux kernel runs from. Linux needs a file system.

Kernel command-line parameters: the command line can configure the kernel at startup and override kernel defaults. It usually specifies the hardware device that holds the root file system.
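To show what the command-line parameters look like in practice, here is a small sketch that splits a kernel command line into its options. The sample string is an illustrative assumption; `parse_cmdline` is a hypothetical helper, not part of any kernel or Simics tooling.

```python
import shlex
from typing import Dict, Union


def parse_cmdline(cmdline: str) -> Dict[str, Union[str, bool]]:
    """Split a kernel command line into key/value options and bare flags."""
    params: Dict[str, Union[str, bool]] = {}
    for token in shlex.split(cmdline):
        key, sep, value = token.partition("=")
        # "root=/dev/vda1" becomes a key/value pair; a bare word such as
        # "quiet" is recorded as a flag.
        params[key] = value if sep else True
    return params


if __name__ == "__main__":
    options = parse_cmdline("root=/dev/vda1 console=ttyS0,115200 quiet")
    print(options["root"])   # the device holding the root file system
```

A dictionary keeps only the last occurrence of a repeated option, which is a simplification; a real kernel command line may legitimately repeat some parameters.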

On real hardware, a bootloader initializes the hardware and starts the kernel with its command-line parameters. Virtual platforms can deviate from this process. This blog post covers five ways to start Linux on a virtual platform, from simple to advanced.

Simple Linux Kernel Boot

Simple virtual platforms that do not correspond to any real platform are common. They run code for a specific instruction set architecture without the need to buy exotic hardware or model a real hardware platform. The Intel Simics Simulator RISC-V simple virtual platform, for example, can run Linux on multiple processor cores.

The RISC-V platform boots from binaries built with Buildroot. A bootloader, a Linux kernel, a root file system image, and a binary device tree blob are required. Software development typically changes the Linux kernel and the file system, while the bootloader and device tree are usually reused.

The simulator startup script loads the bootloader, Linux kernel, and device tree into target RAM. The startup script determines the load addresses, and software updates may require those addresses to change.

The startup script sets register values that tell the RISC-V bootloader the addresses of the device tree and the kernel. The kernel command-line parameters are stored in the device tree, so to change kernel parameters or move the root file system, developers must change the device tree.

The primary disk is backed by a disk image. Disks can hold any file system and make it simple to change how the system starts.

Loading binaries directly into target memory is a simulator “cheat,” but once the bootloader starts, the handover to the kernel works as it does on real hardware.

The flow has variations. If the target system uses U-Boot, the bootloader’s interactive command-line interface can give the kernel the device tree address.

A drawback is that this boot flow cannot reboot Linux. A system reset does not work because the virtual platform startup flow differs from the hardware startup flow, so any virtual platform reset button is off limits.

Directly Boot Linux Kernels

Skipping the bootloader simplifies system startup. A simulator setup script must load the kernel and launch it, and the setup must fake the bootloader-to-Linux interface. This requires providing all RAM descriptors and setting the processor state (stack pointer, memory management units, etc.) to match the operating system’s expectations.

A simulator script does the bootloader’s job, so no bootloader has to be built and loaded. Changes to the kernel interface require script updates. ACPI, a “wide” bootloader-OS interface, may complicate this script. The method does not work for System Management Mode and other operations that depend on the bootloader.

Sometimes the fake bootloader passes kernel command-line parameters.

According to the Intel Simics team, using a real bootloader is easier.

Booting from Disk

Virtual platforms should boot like real hardware. For this scenario, real software stacks come pre-packaged and are easy to use. It is less convenient, however, for developing software on the virtual platform.

On hardware, the bootloader resides in FLASH or EEPROM. By providing code at the system “reset vector,” the bootloader starts an operating system from a “disk” (NVMe, SATA, USB, SD card, or other interfaces) when the system is reset. On Intel Architecture systems the workflow starts with UEFI firmware; system designers may also choose Coreboot or Slim Bootloader.

For Linux, GRUB may be needed for the bootloader-to-OS handover, as Windows Boot Manager is for Windows. Because this is the normal software flow, it requires no simulator setup or scripting.

Note that this boot flow requires a bootloader binary and disk image. Hardware bootloaders are unexecutable.

The disk image includes the Linux kernel and its command-line parameters. To change the parameters, start the system, change the saved configuration, and save the updated disk image for the next boot, just like on real hardware.

With this boot method, the virtual platform setup script should not need to mimic user input to control the target system. As on hardware, the bootloader chooses the disk or device to boot from when the modeled platform is reset. How the boot device is selected depends on the target and the bootloader. The simplest case, as on a PC, is to find and boot the only bootable local device.

For a single boot, interacting with the virtual target as a real machine is easiest. Scripting may be needed to boot from another device.

Virtual platforms run the real software stack from the real platform, making them ideal for pre-silicon software development and maintenance. The more a virtual platform “cheats,” the less its test results prove.

Linux kernel developers who change the kernel frequently may dislike this flow. Every newly compiled kernel binary must be integrated into a disk image before testing, which takes time. The new Linux kernel boot flow combines a realistic virtual platform running the real software stack with easy replacement of the Linux kernel.

Network Boot

Real systems do not always boot from disks. Systems in data centers and embedded racks often boot from the network. Because software upgrades and patches do not have to be applied system by system, deployments are simpler. Systems boot from disk images held on a central server.

PXE, introduced by Intel, is the standard for network-based booting. Instead of booting locally, PXE fetches the boot image from a network server. A network boot can proceed in stages, loading progressively more capable binaries and disk images.

As with disk boot, the firmware is loaded into the virtual platform’s FLASH.

Setting up networking for a virtual platform is hard. One way is to link the virtual platform to the host machine’s lab network. On IT-managed networks this needs a TAP solution to connect the virtual platform to the lab network, because PXE booting uses DHCP, which works poorly through NAT.

The most reliable and easiest solution is to connect one or more service machines to the simulated network. These machines run the servers, and their file systems hold the boot images, serving as an alternative to a local boot disk.

Target systems need a network adapter and a bootloader that can boot over it.

New Linux Boot Flow

With this background, the new Linux kernel boot flow for Intel Simics simulator UEFI targets can be described. Like a direct kernel boot, the flow starts from a Linux kernel binary, command-line parameters, and a root file system image. The target itself keeps its standard setup, a disk boot driven by the UEFI bootloader.

The target has two disks. The first, a utility disk, has a prebuilt file system containing the Intel Simics Agent target binary and a GRUB binary. The second disk holds the root file system, as in a disk boot. Linux boots from the utility disk, whose image is configured dynamically. This avoids the problem of where to place the kernel in RAM.

The Intel Simics Agent makes this possible. Driven from the simulator or from target software, the agent moves files quickly and reliably from the host into the target system’s software stack. The agent communicates through “magic instructions” that pass directly between target software, the simulator, and the host. Networking could also transfer the files, but it would be more fragile and slower.

Intel Simics simulator startup scripts automate the boot. The script uses the kernel command-line parameters and the kernel binary name to create a temporary GRUB configuration file. The agent loads an EFI shell script from the host into the target’s UEFI, and the target boots into the EFI shell to run it.

The EFI shell script calls the agent system to copy the kernel image and the GRUB configuration file from the host to the utility disk. Finally, GRUB boots the Linux kernel from the utility disk.
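The step that builds a temporary GRUB configuration from the kernel binary name and the command-line parameters can be sketched as follows. This is an illustration under assumptions, not the actual Simics startup script: `make_grub_config`, the menu title, and the file name `bzImage` are all hypothetical.

```python
def make_grub_config(kernel_name: str, cmdline: str,
                     title: str = "Custom kernel") -> str:
    """Build a one-entry GRUB configuration that boots the given kernel."""
    lines = [
        "set timeout=0",            # boot immediately, without a menu delay
        "set default=0",            # select the first (only) entry
        f'menuentry "{title}" {{',
        f"    linux /{kernel_name} {cmdline}",
        "}",
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(make_grub_config("bzImage", "root=/dev/vda1 console=ttyS0"))
```

Because only this small text file and the kernel binary change between runs, replacing the kernel does not require rebuilding a full disk image.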

This boot flow speeds up Linux kernel testing and development on virtual platforms. A recompiled kernel can be used with an unmodified Intel Simics virtual platform model (hot-pluggable interfaces allow the disks to be added). No disk image containing the kernel has to be built, and the kernel command-line parameters are easy to change.

The root file system can be attached to the platform in flexible ways. PCIe-attached virtio block devices are the default, but the disk image can also sit on an NVMe or SATA disk. A virtiofs PCIe paravirtual device can provide the root file system as well. In that case the file system content is a directory on the host rather than an image. The virtiofs daemon needs privileges on the host, which may cause issues. The kernel command line tells the kernel where to find the root file system.

Because all the files UEFI needs to boot are in a disk image, the platform can be rebooted. UEFI locates the bootable utility disk with GRUB, its configuration, and the custom Linux kernel.

All dependencies on the target OS are confined to the EFI shell script. It can be rewritten to boot other “separable” kernels that live outside the root file system.

Just the Beginning

Booting a virtual platform sounds simple, but there are many intricate ways to do it. The goal is always a model that simulates the hardware closely enough to test interesting scenarios while remaining convenient for software developers. The right balance depends on the use case and the user.

Some boot flows and models are more complicated than those covered in this blog post. Few systems start by running the bootloader on the main processor core. Hidden subsystems perform basic tasks first, and the visible bootloader takes over afterwards.

Security subsystems with their own processors can start the system early. Their boot code can be stored in the same FLASH as the general bootloader or in local memory.

Programmable subsystems and their firmware can also be booted from the main operating system image, with the subsystem firmware stored on the OS disk. In most such subsystems, boot code in a small ROM starts the subsystem, and the operating system driver then loads the production firmware.

Read more on Govindhtech.com

0 notes

Photo

Learn the command for renaming a file in Linux the simple way. Read the article for more information: https://www.webtoolsoffers.com/blog/how-to-rename-a-file-in-linux #renamefile #linux #linuxcommands #linuxterminal #linuxupdate #learnlinux #linuxprogramming #linuxforwindow #linuxkernel https://www.instagram.com/p/CJLgV1yAgoj/?igshid=155tvodlezosm

#renamefile#linux#linuxcommands#linuxterminal#linuxupdate#learnlinux#linuxprogramming#linuxforwindow#linuxkernel

0 notes

Text

How to Install Kernel 5.8.1 on Ubuntu or Linux Mint

How to install Kernel 5.8.1 on Ubuntu or Linux Mint. Fresh out of the oven: just a few hours ago, the most advanced kernel available to date was officially released. Like its predecessors, the new Linux Kernel 5.8.1 brings bug fixes for the previous release (5.8), along with new and updated drivers. Remember that the kernel is the most important part of a Linux-based operating system, and modifying it on a production system is not advisable. Ideally, use the one your Linux distribution officially provides, since those kernels receive security patches regularly. So, you may ask... why does Ubuntu release new versions? Well, the answer is simple. If you have a very, very new machine, or you simply have problems with some of your hardware components, an updated kernel version may well solve them. You can always go back to the original kernel packages. Before installing a kernel manually, I recommend disabling Secure Boot (if you use UEFI), as it can sometimes cause problems. If you have proprietary drivers installed, you should also check that they will be compatible. Updating a kernel is quick and safe; even so, proceed with caution and at your own risk. How to install Kernel 5.8.1 on Ubuntu or Linux Mint: If, despite these warnings, you want to install Linux kernel 5.8.1 Read the full article

0 notes

Text

In Dual-Socket Systems, Ampere’s 192-Core CPUs Stress ARM64 Linux Kernel


In the realm of ARM-based server CPUs, the abundance of cores can present unforeseen challenges for Linux operating systems. Ampere, a prominent player in this space, has recently launched its AmpereOne data center CPUs, boasting an impressive 192 cores. However, this surplus of computing power has led to complications in Linux support, especially in systems employing two of Ampere’s 192-core chips (totaling a whopping 384 cores) within a single server.

The Core Conundrum

According to reports from Phoronix, the ARM64 Linux kernel currently cannot support configurations exceeding 256 cores. In response, Ampere has proposed a patch that raises the Linux kernel’s core limit to 512. The proposed solution enables “CPUMASK_OFFSTACK”, a kernel option that lets Linux go beyond the default 256-core limit. With it, the bitmaps for CPU masks are allocated dynamically from memory instead of on the stack, so the core limit can grow without inflating the kernel image’s memory footprint.

Tackling Technicalities

Implementing the CPUMASK_OFFSTACK method is crucial, given that each core introduces an additional 8KB to the kernel image size. Ampere’s CPUs have the highest core count in the industry, surpassing even AMD’s latest Zen 4c EPYC CPUs, which cap at 128 cores. This unprecedented core count puts Ampere in uncharted territory, making it the first CPU manufacturer to run into the ARM64 Linux kernel’s 256-core threshold.

The Impact on Data Centers

While the core limit predicament does not affect systems equipped with a single 192-core AmpereOne chip, it poses a significant challenge for data center servers housing two of these powerhouse chips in a dual-socket configuration. Notably, SMT logical cores, or threads, also exceed the 256 figure on various systems, further compounding the complexity of the issue.
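The arithmetic behind the limit can be sketched in a few lines. The example assumes a CPU mask holds one bit per possible CPU, rounded up to whole 64-bit words; `cpumask_bytes` and `fits` are hypothetical helpers for illustration, not kernel functions.

```python
def cpumask_bytes(nr_cpus: int, bits_per_long: int = 64) -> int:
    """Size of one CPU bitmap: nr_cpus bits rounded up to whole machine words."""
    longs = (nr_cpus + bits_per_long - 1) // bits_per_long
    return longs * (bits_per_long // 8)


def fits(sockets: int, cores_per_socket: int, nr_cpus: int) -> bool:
    """True if every core in the system gets a slot under the given limit."""
    return sockets * cores_per_socket <= nr_cpus


if __name__ == "__main__":
    for limit in (256, 512):
        print(limit, cpumask_bytes(limit), fits(1, 192, limit), fits(2, 192, limit))
```

A single 192-core chip fits under 256, while two sockets (384 cores) only fit once the limit is 512. Each mask then doubles from 32 to 64 bytes, which is why allocating masks dynamically rather than on the stack becomes attractive as the limit grows.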

AmpereOne: A Revolutionary CPU Lineup

AmpereOne represents a paradigm shift in CPU design, featuring models with core counts ranging from 136 to an astounding 192 cores. Built on the ARMv8.6+ instruction set and leveraging TSMC’s cutting-edge 5nm node, these CPUs boast dual 128b Vector Units, 2MB of L2 cache per core, a 3 GHz clock speed, an eight-channel DDR5 memory controller, 128 PCIe Gen 5 lanes, and a TDP ranging from 200 to 350W. Tailored for high-performance data center workloads that can leverage substantial core counts, AmpereOne is at the forefront of innovation in the CPU landscape.

The Road Ahead

Although Ampere has been proactive in submitting the patch to address the core limit, achieving 512-core support might take some time. In 2021, a similar proposal sought to increase the ARM64 Linux CPU core limit to 512. However, Linux maintainers rejected it because no CPU hardware with more than 256 cores was available at that time. Even optimistically, 512-core support is unlikely to arrive before the release of Linux kernel 6.8 in 2024.

A Glimmer of Hope

It’s important to note that the current Linux kernel already supports the CPUMASK_OFFSTACK method for raising CPU core count limits. The ball is now in the court of Linux maintainers to decide whether to enable this feature by default, potentially expediting the timeline for achieving the much-needed 512-core support.

In conclusion, Ampere’s 192-core CPUs have thrust the industry into uncharted territory, necessitating innovative solutions to overcome the limitations of current ARM64 Linux kernel support. As technology continues to advance, collaborations between hardware manufacturers and software developers become increasingly pivotal in ensuring seamless compatibility and optimal performance for the next generation of data center systems.

Read more on Govindhtech.com

#DualSocket#ARM64#Ampere’s192Core#CPUs#linuxkernel#AMD’s#Zen4c#EPYCCPUs#DataCenters#DDR5memory#TSMC’s#technews#technology#govindhtech

0 notes