#managing storage in Kubernetes clusters

Top 5 Open Source Kubernetes Storage Solutions

Historically, Kubernetes storage was hard to configure and required specialized knowledge to get up and running. The landscape of K8s data storage has since evolved, and there are now many good options that are relatively easy to implement for data stored in Kubernetes clusters. Those running Kubernetes in a home lab will also benefit from the free and open-source…

#block storage vs object storage #Ceph RBD and Kubernetes #cloud-native storage solutions #GlusterFS with Kubernetes #Kubernetes storage solutions #Longhorn and Kubernetes integration #managing storage in Kubernetes clusters #open-source storage for Kubernetes #OpenEBS in Kubernetes environment #Rook storage in Kubernetes

Mastering Multicluster Kubernetes with Red Hat OpenShift Platform Plus

As enterprises expand their containerized environments, managing and securing multiple Kubernetes clusters becomes both a necessity and a challenge. Red Hat OpenShift Platform Plus, combined with powerful tools like Red Hat Advanced Cluster Management (RHACM), Red Hat Quay, and Red Hat Advanced Cluster Security (RHACS), offers a comprehensive suite for multicluster management, governance, and security.

In this blog post, we'll explore the key components and capabilities that help organizations effectively manage, observe, secure, and scale their Kubernetes workloads across clusters.

Understanding Multicluster Kubernetes Architectures

Modern enterprise applications often span multiple Kubernetes clusters, whether to support hybrid cloud strategies, improve high availability, or isolate workloads by region or team. Red Hat OpenShift Platform Plus is designed to simplify multicluster operations by offering an integrated, opinionated stack that includes:

Red Hat OpenShift for a consistent application platform experience

RHACM for centralized multicluster management

Red Hat Quay for enterprise-grade image storage and security

RHACS for advanced cluster-level security and threat detection

Together, these components provide a unified approach to handle complex multicluster deployments.

Inspecting Resources Across Multiple Clusters with RHACM

Red Hat Advanced Cluster Management (RHACM) offers a user-friendly web console that allows administrators to view and interact with all their Kubernetes clusters from a single pane of glass. Key capabilities include:

Centralized Resource Search: Use the RHACM search engine to find workloads, nodes, and configurations across all managed clusters.

Role-Based Access Control (RBAC): Manage user permissions and ensure secure access to cluster resources based on roles and responsibilities.

Cluster Health Overview: Quickly identify issues and take action using visual dashboards.

Governance and Policy Management at Scale

With RHACM, you can implement and enforce consistent governance policies across your entire fleet of clusters. Whether you're ensuring compliance with security benchmarks (like CIS) or managing custom rules, RHACM makes it easy to:

Deploy policies as code

Monitor compliance status in real time

Automate remediation for non-compliant resources

This level of automation and visibility is critical for regulated industries and enterprises with strict security postures.

Observability Across the Cluster Fleet

Observability is essential for understanding the health, performance, and behavior of your Kubernetes workloads. RHACM’s built-in observability stack integrates with metrics and logging tools to give you:

Cross-cluster performance insights

Alerting and visualization dashboards

Data aggregation for proactive incident management

By centralizing observability, operations teams can streamline troubleshooting and capacity planning across environments.

GitOps-Based Application Deployment

One of the most powerful capabilities RHACM brings to the table is GitOps-driven application lifecycle management. This allows DevOps teams to:

Define application deployments in Git repositories

Automatically deploy to multiple clusters using GitOps pipelines

Ensure consistent configuration and versioning across environments

With built-in support for Argo CD, RHACM bridges the gap between development and operations by enabling continuous delivery at scale.

Red Hat Quay: Enterprise Image Management

Red Hat Quay provides a secure and scalable container image registry that’s deeply integrated with OpenShift. In a multicluster scenario, Quay helps by:

Enforcing image security scanning and vulnerability reporting

Managing image access policies

Supporting geo-replication for global deployments

Installing and customizing Quay within OpenShift gives enterprises control over the entire software supply chain—from development to production.

Integrating Quay with OpenShift & RHACM

Quay seamlessly integrates with OpenShift and RHACM to:

Serve as the source of trusted container images

Automate deployment pipelines via RHACM GitOps

Restrict unapproved images from being used across clusters

This tight integration ensures a secure and compliant image delivery workflow, especially useful in multicluster environments with differing security requirements.

Strengthening Multicluster Security with RHACS

Security must span the entire Kubernetes lifecycle. Red Hat Advanced Cluster Security (RHACS) helps secure containers and Kubernetes clusters by:

Identifying runtime threats and vulnerabilities

Enforcing Kubernetes best practices

Performing risk assessments on containerized workloads

Once installed and configured, RHACS provides a unified view of security risks across all your OpenShift clusters.

Multicluster Operational Security with RHACS

Using RHACS across multiple clusters allows security teams to:

Define and apply security policies consistently

Detect and respond to anomalies in real time

Integrate with CI/CD tools to shift security left

By integrating RHACS into your multicluster architecture, you create a proactive defense layer that protects your workloads without slowing down innovation.

Final Thoughts

Managing multicluster Kubernetes environments doesn't have to be a logistical nightmare. With Red Hat OpenShift Platform Plus, along with RHACM, Red Hat Quay, and RHACS, organizations can standardize, secure, and scale their Kubernetes operations across any infrastructure.

Whether you’re just starting to adopt multicluster strategies or looking to refine your existing approach, Red Hat’s ecosystem offers the tools and automation needed to succeed. For more details, visit www.hawkstack.com.

Machine Learning Infrastructure: The Foundation of Scalable AI Solutions

Introduction: Why Machine Learning Infrastructure Matters

In today's digital-first world, the adoption of artificial intelligence (AI) and machine learning (ML) is revolutionizing every industry—from healthcare and finance to e-commerce and entertainment. However, while many organizations aim to leverage ML for automation and insights, few realize that success depends not just on algorithms, but also on a well-structured machine learning infrastructure.

Machine learning infrastructure provides the backbone needed to deploy, monitor, scale, and maintain ML models effectively. Without it, even the most promising ML solutions fail to meet their potential.

In this comprehensive guide from diglip7.com, we’ll explore what machine learning infrastructure is, why it’s crucial, and how businesses can build and manage it effectively.

What is Machine Learning Infrastructure?

Machine learning infrastructure refers to the full stack of tools, platforms, and systems that support the development, training, deployment, and monitoring of ML models. This includes:

Data storage systems

Compute resources (CPU, GPU, TPU)

Model training and validation environments

Monitoring and orchestration tools

Version control for code and models

Together, these components form the ecosystem where machine learning workflows operate efficiently and reliably.

Key Components of Machine Learning Infrastructure

To build robust ML pipelines, several foundational elements must be in place:

1. Data Infrastructure

Data is the fuel of machine learning. Key tools and technologies include:

Data Lakes & Warehouses: Store structured and unstructured data (e.g., AWS S3, Google BigQuery).

ETL Pipelines: Extract, transform, and load raw data for modeling (e.g., Apache Airflow, dbt).

Data Labeling Tools: For supervised learning (e.g., Labelbox, Amazon SageMaker Ground Truth).

2. Compute Resources

Training ML models requires high-performance computing. Options include:

On-Premise Clusters: Cost-effective for large enterprises.

Cloud Compute: Scalable resources like AWS EC2, Google Cloud AI Platform, or Azure ML.

GPUs/TPUs: Essential for deep learning and neural networks.

3. Model Training Platforms

These platforms simplify experimentation and hyperparameter tuning:

TensorFlow, PyTorch, Scikit-learn: Popular ML libraries.

MLflow: Experiment tracking and model lifecycle management.

Kubeflow: ML workflow orchestration on Kubernetes.

4. Deployment Infrastructure

Once trained, models must be deployed in real-world environments:

Containers & Microservices: Docker, Kubernetes, and serverless functions.

Model Serving Platforms: TensorFlow Serving, TorchServe, or custom REST APIs.

CI/CD Pipelines: Automate testing, integration, and deployment of ML models.

5. Monitoring & Observability

Key to ensuring ongoing model performance:

Drift Detection: Spot when model predictions diverge from expected outputs.

Performance Monitoring: Track latency, accuracy, and throughput.

Logging & Alerts: Tools like Prometheus, Grafana, or Seldon Core.
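To make drift detection a little more concrete, here is a minimal sketch that compares a baseline sample of prediction scores with a recent sample using the Population Stability Index (PSI). PSI is one common choice and is swapped in here for illustration; the function name, the bin count, and the 0.2 alert threshold (a widely quoted rule of thumb) are assumptions, not something this guide prescribes.

```python
import math
from typing import Sequence


def population_stability_index(
    expected: Sequence[float], actual: Sequence[float], bins: int = 10
) -> float:
    """Return the PSI between a baseline sample and a recent sample."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    if hi == lo:
        return 0.0  # every value is identical, so there is nothing to compare
    width = (hi - lo) / bins

    def fractions(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            # Clamp so the maximum value falls into the last bin.
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
        # A small floor keeps empty bins from producing log(0).
        return [max(c / len(values), 1e-6) for c in counts]

    e = fractions(expected)
    a = fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


if __name__ == "__main__":
    baseline = [i / 100 for i in range(100)]        # scores seen at training time
    recent = [0.5 + i / 200 for i in range(100)]    # scores shifted upward
    psi = population_stability_index(baseline, recent)
    # 0.2 is an assumed alert threshold; tune it for your own data.
    print("drift suspected" if psi > 0.2 else "no significant drift")
```

In practice a check like this would run on a schedule and feed whatever alerting tool you already use.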

Benefits of Investing in Machine Learning Infrastructure

Here’s why having a strong machine learning infrastructure matters:

Scalability: Run models on large datasets and serve thousands of requests per second.

Reproducibility: Re-run experiments with the same configuration.

Speed: Accelerate development cycles with automation and reusable pipelines.

Collaboration: Enable data scientists, ML engineers, and DevOps to work in sync.

Compliance: Keep data and models auditable and secure for regulations like GDPR or HIPAA.

Real-World Applications of Machine Learning Infrastructure

Let’s look at how industry leaders use ML infrastructure to power their services:

Netflix: Uses a robust ML pipeline to personalize content and optimize streaming.

Amazon: Trains recommendation models using massive data pipelines and custom ML platforms.

Tesla: Collects real-time driving data from vehicles and retrains autonomous driving models.

Spotify: Relies on cloud-based infrastructure for playlist generation and music discovery.

Challenges in Building ML Infrastructure

Despite its importance, developing ML infrastructure has its hurdles:

High Costs: GPU servers and cloud compute aren't cheap.

Complex Tooling: Choosing the right combination of tools can be overwhelming.

Maintenance Overhead: Regular updates, monitoring, and security patching are required.

Talent Shortage: Skilled ML engineers and MLOps professionals are in short supply.

How to Build Machine Learning Infrastructure: A Step-by-Step Guide

Here’s a simplified roadmap for setting up scalable ML infrastructure:

Step 1: Define Use Cases

Know what problem you're solving. Fraud detection? Product recommendations? Forecasting?

Step 2: Collect & Store Data

Use data lakes, warehouses, or relational databases. Ensure it’s clean, labeled, and secure.

Step 3: Choose ML Tools

Select frameworks (e.g., TensorFlow, PyTorch), orchestration tools, and compute environments.

Step 4: Set Up Compute Environment

Use cloud-based Jupyter notebooks, Colab, or on-premise GPUs for training.

Step 5: Build CI/CD Pipelines

Automate model testing and deployment with Git, Jenkins, or MLflow.

Step 6: Monitor Performance

Track accuracy, latency, and data drift. Set alerts for anomalies.

Step 7: Iterate & Improve

Collect feedback, retrain models, and scale solutions based on business needs.

Machine Learning Infrastructure Providers & Tools

Below are some popular platforms that help streamline ML infrastructure:

Tool/Platform | Purpose | Example
Amazon SageMaker | Full ML development environment | End-to-end ML pipeline
Google Vertex AI | Cloud ML service | Training, deploying, managing ML models
Databricks | Big data + ML | Collaborative notebooks
Kubeflow | Kubernetes-based ML workflows | Model orchestration
MLflow | Model lifecycle tracking | Experiments, models, metrics
Weights & Biases | Experiment tracking | Visualization and monitoring

Expert Review

Reviewed by: Rajeev Kapoor, Senior ML Engineer at DataStack AI

"Machine learning infrastructure is no longer a luxury; it's a necessity for scalable AI deployments. Companies that invest early in robust, cloud-native ML infrastructure are far more likely to deliver consistent, accurate, and responsible AI solutions."

Frequently Asked Questions (FAQs)

Q1: What is the difference between ML infrastructure and traditional IT infrastructure?

Answer: Traditional IT supports business applications, while ML infrastructure is designed for data processing, model training, and deployment at scale. It often includes specialized hardware (e.g., GPUs) and tools for data science workflows.

Q2: Can small businesses benefit from ML infrastructure?

Answer: Yes, with the rise of cloud platforms like AWS SageMaker and Google Vertex AI, even startups can leverage scalable machine learning infrastructure without heavy upfront investment.

Q3: Is Kubernetes necessary for ML infrastructure?

Answer: While not mandatory, Kubernetes helps orchestrate containerized workloads and is widely adopted for scalable ML infrastructure, especially in production environments.

Q4: What skills are needed to manage ML infrastructure?

Answer: Familiarity with Python, cloud computing, Docker/Kubernetes, CI/CD, and ML frameworks like TensorFlow or PyTorch is essential.

Q5: How often should ML models be retrained?

Answer: It depends on data volatility. In dynamic environments (e.g., fraud detection), retraining may occur weekly or daily. In stable domains, monthly or quarterly retraining is often enough.

Final Thoughts

Machine learning infrastructure isn’t just about stacking technologies—it's about creating an agile, scalable, and collaborative environment that empowers data scientists and engineers to build models with real-world impact. Whether you're a startup or an enterprise, investing in the right infrastructure will directly influence the success of your AI initiatives.

By building and maintaining a robust ML infrastructure, you ensure that your models perform optimally, adapt to new data, and generate consistent business value.

For more insights and updates on AI, ML, and digital innovation, visit diglip7.com.

Kubernetes Objects Explained 💡 Pods, Services, Deployments & More for Admins & Devs

Learn how Kubernetes keeps your apps running as expected using concepts like desired state, replication, config management, and persistent storage.

✔️ Pod – Basic unit that runs your containers

✔️ Service – Stable network access to Pods

✔️ Deployment – Rolling updates & scaling made easy

✔️ ReplicaSet – Maintains desired number of Pods

✔️ Job & CronJob – Run tasks once or on schedule

✔️ ConfigMap & Secret – Externalize configs & secure credentials

✔️ PV & PVC – Persistent storage management

✔️ Namespace – Cluster-level resource isolation

✔️ DaemonSet – Run a Pod on every node

✔️ StatefulSet – For stateful apps like databases

✔️ ReplicationController – The older way to manage Pods
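To show how a couple of these objects fit together, here is a small sketch that builds a Deployment and a matching Service as plain Python dictionaries and prints them as JSON. The app name `web`, the image tag, and the port numbers are placeholders chosen for illustration; the field names follow the standard `apps/v1` Deployment and `v1` Service schemas.

```python
import json


def deployment(name: str, image: str, replicas: int, port: int) -> dict:
    """Build a Deployment that keeps `replicas` Pods of `image` running."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,  # the desired state Kubernetes reconciles toward
            "selector": {"matchLabels": dict(labels)},
            "template": {
                "metadata": {"labels": dict(labels)},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


def service(name: str, port: int, target_port: int) -> dict:
    """Build a Service that routes traffic to Pods labelled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


if __name__ == "__main__":
    # Hypothetical app name and image, used only for this example.
    manifests = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [deployment("web", "nginx:1.27", 3, 80), service("web", 80, 80)],
    }
    print(json.dumps(manifests, indent=2))
```

The point to notice is the label: the Deployment's selector, the Pod template's labels, and the Service's selector all have to agree, otherwise the Service has no Pods to send traffic to.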

Learn HashiCorp Vault in Kubernetes Using KubeVault

In today's cloud-native world, securing secrets, credentials, and sensitive configurations is more important than ever. That’s where Vault in Kubernetes becomes a game-changer — especially when combined with KubeVault, a powerful operator for managing HashiCorp Vault within Kubernetes clusters.

🔐 What is Vault in Kubernetes?

Vault in Kubernetes refers to the integration of HashiCorp Vault with Kubernetes to manage secrets dynamically, securely, and at scale. Vault provides features like secrets storage, access control, dynamic secrets, and secrets rotation — essential tools for modern DevOps and cloud security.

🚀 Why Use KubeVault?

KubeVault is an open-source Kubernetes operator developed to simplify Vault deployment and management inside Kubernetes environments. Whether you’re new to Vault or running production workloads, KubeVault automates:

Deployment and lifecycle management of Vault

Auto-unsealing using cloud KMS providers

Seamless integration with Kubernetes RBAC and CRDs

Secure injection of secrets into workloads

🛠️ Getting Started with KubeVault

Here's a high-level guide on how to deploy Vault in Kubernetes using KubeVault:

1. Install the KubeVault Operator: Use Helm or YAML manifests to install the operator in your cluster.

helm repo add appscode https://charts.appscode.com/stable/

helm install kubevault-operator appscode/kubevault --namespace kubevault --create-namespace

2. Deploy a Vault Server: Define a custom resource (VaultServer) to spin up a Vault instance.

3. Configure Storage and Unsealer: Use backends like GCS, S3, or Azure Blob for Vault storage and unseal via cloud KMS.

4. Inject Secrets into Workloads: Automatically mount secrets into pods using Kubernetes-native integrations.

💡 Benefits of Using Vault in Kubernetes with KubeVault

✅ Automated Vault lifecycle management

✅ Native Kubernetes authentication

✅ Secret rotation without downtime

✅ Easy policy management via CRDs

✅ Enterprise-level security with minimal overhead

🔄 Real Use Case: Dynamic Secrets for Databases

Imagine your app requires database credentials. Instead of hardcoding secrets or storing them in plain YAML files, you can use KubeVault to dynamically generate and inject secrets directly into pods — with rotation and revocation handled automatically.
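The idea behind dynamic secrets can be sketched with a toy, in-memory model: each request gets its own short-lived credential, and the credential stops working once it expires or is revoked. This is not the Vault or KubeVault API; `ToySecretsBroker`, `Lease`, and the method names are hypothetical and exist only to illustrate the lifecycle.

```python
import secrets
from dataclasses import dataclass
from typing import Dict


@dataclass
class Lease:
    username: str
    password: str
    expires_at: float
    revoked: bool = False


class ToySecretsBroker:
    """Toy stand-in for a secrets manager that issues per-request credentials."""

    def __init__(self, ttl_seconds: float) -> None:
        self.ttl_seconds = ttl_seconds
        self._leases: Dict[str, Lease] = {}

    def issue(self, role: str, now: float) -> Lease:
        # Every caller gets a unique username and a random password.
        username = f"{role}-{secrets.token_hex(4)}"
        lease = Lease(username, secrets.token_urlsafe(16), now + self.ttl_seconds)
        self._leases[username] = lease
        return lease

    def is_valid(self, username: str, now: float) -> bool:
        lease = self._leases.get(username)
        return lease is not None and not lease.revoked and now < lease.expires_at

    def revoke(self, username: str) -> None:
        if username in self._leases:
            self._leases[username].revoked = True


if __name__ == "__main__":
    broker = ToySecretsBroker(ttl_seconds=60)
    cred = broker.issue("app-readonly", now=0.0)
    print(broker.is_valid(cred.username, now=30.0))  # still inside the TTL
    print(broker.is_valid(cred.username, now=61.0))  # past the TTL
```

Because nothing long-lived is ever written to a manifest, a leaked credential has a bounded lifetime, which is the property the use case above relies on.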

🌐 Final Thoughts

If you're deploying applications in Kubernetes, integrating Vault in Kubernetes using KubeVault isn't just a best practice — it's a security necessity. KubeVault makes it easy to run Vault at scale, without the hassle of manual configuration and operations.

Want to learn more? Check out KubeVault.com — the ultimate toolkit for managing secrets in Kubernetes using HashiCorp Vault.

Master Java Full Stack Development with Gritty Tech

Start Your Full Stack Journey

Java Full Stack Development is an exciting field that combines front-end and back-end technologies to create powerful, dynamic web applications. At Gritty Tech, we offer an industry-leading Java Full Stack Coaching program designed to make you job-ready with hands-on experience and deep technical knowledge.

Why Java Full Stack?

Java is a cornerstone of software development. With its robust framework, scalability, security, and massive community support, Java remains a preferred choice for full-stack applications. Gritty Tech ensures that you learn Java in depth, mastering its application in real-world projects.

Comprehensive Curriculum at Gritty Tech

Our curriculum is carefully crafted to align with industry requirements:

Fundamental Java Programming

Object-Oriented Programming and Core Concepts

Data Structures and Algorithm Mastery

Front-End Skills: HTML5, CSS3, JavaScript, Angular, React

Back-End Development: Java, Spring Boot, Hibernate

Database Technologies: MySQL, MongoDB

Version Control: Git, GitHub

Building RESTful APIs

Introduction to DevOps: Docker, Jenkins, Kubernetes

Cloud Services: AWS, Azure Essentials

Agile Development Practices

Strong Foundation in Java

We start with Java fundamentals, ensuring every student masters syntax, control structures, OOP concepts, exception handling, collections, and multithreading. Moving forward, we delve into JDBC, Servlets, JSP, and popular frameworks like Spring MVC and Hibernate ORM.

Front-End Development Expertise

Create beautiful and functional web interfaces with our in-depth training on HTML, CSS, and JavaScript. Advance into frameworks like Angular and React to build modern Single Page Applications (SPAs) and enhance user experiences.

Back-End Development Skills

Master server-side application development using Spring Boot. Learn how to structure codebases, manage business logic, build APIs, and ensure application security. Our back-end coaching prepares you to architect scalable applications effortlessly.

Database Management

Handling data efficiently is crucial. We cover:

SQL Databases: MySQL, PostgreSQL

NoSQL Databases: MongoDB

You'll learn to design databases, write complex queries, and integrate them seamlessly with Java applications.

Version Control Mastery

Become proficient in Git and GitHub. Understand workflows, branches, pull requests, and collaboration techniques essential for modern development teams.

DevOps and Deployment Skills

Our students get exposure to:

Containerization using Docker

Continuous Integration/Deployment with Jenkins

Managing container clusters with Kubernetes

We make deployment practices part of your daily routine, preparing you for cloud-native development.

Cloud Computing Essentials

Learn to deploy applications on AWS and Azure, manage cloud storage, use cloud databases, and leverage cloud services for scaling and securing your applications.

Soft Skills and Career Training

In addition to technical expertise, Gritty Tech trains you in:

Agile and Scrum methodologies

Resume building and portfolio creation

Mock interviews and HR preparation

Effective communication and teamwork

Hands-On Projects and Internship Opportunities

Experience is everything. Our program includes practical projects such as:

E-commerce Applications

Social Media Platforms

Banking Systems

Healthcare Management Systems

Internship programs with partner companies allow you to experience real-world development environments firsthand.

Who Should Enroll?

Our program welcomes:

Freshers wanting to enter the tech industry

Professionals aiming to switch to development roles

Entrepreneurs building their tech products

Prior programming knowledge is not mandatory. Our structured learning path ensures everyone succeeds.

Why Gritty Tech Stands Out

Expert Trainers: Learn from professionals with a decade of industry experience.

Real-World Curriculum: Practical skills aligned with job market demands.

Flexible Schedules: Online, offline, and weekend batches available.

Placement Support: Dedicated placement cell and career coaching.

Affordable Learning: Quality education at competitive prices.

Our Success Stories

Gritty Tech alumni are working at top tech companies like Infosys, Accenture, Capgemini, TCS, and leading startups. Our focus on practical skills and real-world training ensures our students are ready to hit the ground running.

Certification

After successful completion, students receive a Java Full Stack Developer Certification from Gritty Tech, recognized across industries.

Student Testimonials

"The hands-on projects at Gritty Tech gave me the confidence to work on real-world applications. I secured a job within two months!" - Akash Verma

"Supportive trainers and an excellent curriculum made my learning journey smooth and successful." - Sneha Kulkarni

Get Started with Gritty Tech Today!

Become a skilled Java Full Stack Developer with Gritty Tech and open the door to exciting career opportunities.

Visit Gritty Tech or call us at +91-XXXXXXXXXX to learn more and enroll.

FAQs

Q1. How long is the Java Full Stack Coaching at Gritty Tech?

A1. The program lasts around 6 months, including projects and internships.

Q2. Are online classes available?

A2. Yes, we offer flexible online and offline learning options.

Q3. Do you assist with job placements?

A3. Absolutely. We offer extensive placement support, resume building, and mock interviews.

Q4. Is prior coding experience required?

A4. No, our program starts from the basics.

Q5. What differentiates Gritty Tech?

A5. Real-world projects, expert faculty, dedicated placement support, and a practical approach make us stand out.

Technical Skills (Java, Spring, Python)

Q1: Can you walk us through a recent project where you built a scalable application using Java and Spring Boot?

A: Absolutely. In my previous role, I led the development of a microservices-based system using Java with Spring Boot and Spring Cloud. The app handled real-time financial transactions and was deployed on AWS ECS. I focused on building stateless services, applied best practices like API versioning, and used Eureka for service discovery. The result was a 40% improvement in performance and easier scalability under load.

Q2: What has been your experience with Python in data processing?

A: I’ve used Python for ETL pipelines, specifically for ingesting large volumes of compliance data into cloud storage. I utilized Pandas and NumPy for processing, and scheduled tasks with Apache Airflow. The flexibility of Python was key in automating data validation and transformation before feeding it into analytics dashboards.

Cloud & DevOps

Q3: Describe your experience deploying applications on AWS or Azure.

A: Most of my cloud experience has been with AWS. I’ve deployed containerized Java applications to AWS ECS and used RDS for relational storage. I also integrated S3 for static content and Lambda for lightweight compute tasks. In one project, I implemented CI/CD pipelines with Jenkins and CodePipeline to automate deployments and rollbacks.

Q4: How have you used Docker or Kubernetes in past projects?

A: I've containerized all backend services using Docker and deployed them on Kubernetes clusters (EKS). I wrote Helm charts for managing deployments and set up autoscaling rules. This improved uptime and made releases smoother, especially during traffic spikes.

Collaboration & Agile Practices

Q5: How do you typically work with product owners and cross-functional teams?

A: I follow Agile practices, attending sprint planning and daily stand-ups. I work closely with product owners to break down features into stories, clarify acceptance criteria, and provide early feedback. My goal is to ensure technical feasibility while keeping business impact in focus.

Q6: Have you had to define technical design or architecture?

A: Yes, I’ve been responsible for defining the technical design for multiple features. For instance, I designed an event-driven architecture for a compliance alerting system using Kafka, Java, and Spring Cloud Stream. I created UML diagrams and API contracts to guide other developers.

Testing & Quality

Q7: What’s your approach to testing (unit, integration, automation)?

A: I use JUnit and Mockito for unit testing, and Spring’s Test framework for integration tests. For end-to-end automation, I’ve worked with Selenium and REST Assured. I integrate these tests into Jenkins pipelines to ensure code quality with every push.

Behavioral / Cultural Fit

Q8: How do you stay updated with emerging technologies?

A: I subscribe to newsletters like InfoQ and follow GitHub trending repositories. I also take part in hackathons and complete Udemy/Coursera courses. Recently, I explored Quarkus and Micronaut to compare their performance with Spring Boot in cloud-native environments.

Q9: Tell us about a time you challenged the status quo or proposed a modern tech solution.

A: At my last job, I noticed performance issues due to a legacy monolith. I advocated for a microservices transition. I led a proof-of-concept using Spring Boot and Docker, which gained leadership buy-in. We eventually reduced deployment time by 70% and improved maintainability.

Bonus: Domain Experience

Q10: Do you have experience supporting back-office teams like Compliance or Finance?

A: Yes, I’ve built reporting tools for Compliance and data reconciliation systems for Finance. I understand the importance of data accuracy and audit trails, and have used role-based access and logging mechanisms to meet regulatory requirements.

Cloud Native Storage Market Insights: Industry Share, Trends & Future Outlook 2032

The Cloud Native Storage Market size was valued at USD 16.19 billion in 2023 and is expected to reach USD 100.09 billion by 2032, growing at a CAGR of 22.5% over the forecast period 2024-2032.
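As a quick sanity check on these figures, the compound annual growth rate can be recomputed from the start and end values. The sketch below assumes nine compounding periods (the 2023 base year through 2032); that period count is an assumption about how the report defines its forecast window, and the function names are illustrative.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start value, end value, and period count."""
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` for `years` periods."""
    return start_value * (1 + rate) ** years


if __name__ == "__main__":
    implied = cagr(16.19, 100.09, years=9)      # USD billions, 2023 to 2032
    print(f"implied CAGR: {implied:.1%}")
    print(f"16.19 compounded at 22.5% for 9 years: {project(16.19, 0.225, 9):.2f}")
```

Under that assumption the implied rate lands close to the quoted 22.5%, so the three headline numbers are consistent with one another up to rounding.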

The cloud native storage market is experiencing rapid growth as enterprises shift towards scalable, flexible, and cost-effective storage solutions. The increasing adoption of cloud computing and containerization is driving demand for advanced storage technologies.

The cloud native storage market continues to expand as businesses seek high-performance, secure, and automated data storage solutions. With the rise of hybrid cloud, Kubernetes, and microservices architectures, organizations are investing in cloud native storage to enhance agility and efficiency in data management.

Get Sample Copy of This Report: https://www.snsinsider.com/sample-request/3454

Key Market Players:

Microsoft (Azure Blob Storage, Azure Kubernetes Service (AKS))

IBM (IBM Cloud Object Storage, IBM Spectrum Scale)

AWS (Amazon S3, Amazon EBS (Elastic Block Store))

Google (Google Cloud Storage, Google Kubernetes Engine (GKE))

Alibaba Cloud (Alibaba Object Storage Service (OSS), Alibaba Cloud Container Service for Kubernetes)

VMware (VMware vSAN, VMware Tanzu Kubernetes Grid)

Huawei (Huawei FusionStorage, Huawei Cloud Object Storage Service)

Citrix (Citrix Hypervisor, Citrix ShareFile)

Tencent Cloud (Tencent Cloud Object Storage (COS), Tencent Kubernetes Engine)

Scality (Scality RING, Scality ARTESCA)

Splunk (Splunk SmartStore, Splunk Enterprise on Kubernetes)

Linbit (LINSTOR, DRBD (Distributed Replicated Block Device))

Rackspace (Rackspace Object Storage, Rackspace Managed Kubernetes)

Robin.io (Robin Cloud Native Storage, Robin Multi-Cluster Automation)

MayaData (OpenEBS, Data Management Platform (DMP))

Diamanti (Diamanti Ultima, Diamanti Spektra)

MinIO (MinIO Object Storage, MinIO Kubernetes Operator)

Rook (Rook Ceph, Rook EdgeFS)

Ondat (Ondat Persistent Volumes, Ondat Data Mesh)

Ionir (Ionir Data Services Platform, Ionir Continuous Data Mobility)

Trilio (TrilioVault for Kubernetes, TrilioVault for OpenStack)

UpCloud (UpCloud Object Storage, UpCloud Managed Databases)

Arrikto (Kubeflow Enterprise, Rok (Data Management for Kubernetes))

Market Size, Share, and Scope

The market is witnessing significant expansion across industries such as IT, BFSI, healthcare, retail, and manufacturing.

Hybrid and multi-cloud storage solutions are gaining traction due to their flexibility and cost-effectiveness.

Enterprises are increasingly adopting object storage, file storage, and block storage tailored for cloud native environments.

Key Market Trends Driving Growth

Rise in Cloud Adoption: Organizations are shifting workloads to public, private, and hybrid cloud environments, fueling demand for cloud native storage.

Growing Adoption of Kubernetes: Kubernetes-based storage solutions are becoming essential for managing containerized applications efficiently.

Increased Data Security and Compliance Needs: Businesses are investing in encrypted, resilient, and compliant storage solutions to meet global data protection regulations.

Advancements in AI and Automation: AI-driven storage management and self-healing storage systems are revolutionizing data handling.

Surge in Edge Computing: Cloud native storage is expanding to edge locations, enabling real-time data processing and low-latency operations.

Integration with DevOps and CI/CD Pipelines: Developers and IT teams are leveraging cloud storage automation for seamless software deployment.

Hybrid and Multi-Cloud Strategies: Enterprises are implementing multi-cloud storage architectures to optimize performance and costs.

Increased Use of Object Storage: The scalability and efficiency of object storage are driving its adoption in cloud native environments.

Serverless and API-Driven Storage Solutions: The rise of serverless computing is pushing demand for API-based cloud storage models.

Sustainability and Green Cloud Initiatives: Energy-efficient storage solutions are becoming a key focus for cloud providers and enterprises.

Enquiry of This Report: https://www.snsinsider.com/enquiry/3454

Market Segmentation:

By Component

Solution

Object Storage

Block Storage

File Storage

Container Storage

Others

Services

System Integration & Deployment

Training & Consulting

Support & Maintenance

By Deployment

Private Cloud

Public Cloud

By Enterprise Size

SMEs

Large Enterprises

By End Use

BFSI

Telecom & IT

Healthcare

Retail & Consumer Goods

Manufacturing

Government

Energy & Utilities

Media & Entertainment

Others

Market Growth Analysis

Factors Driving Market Expansion

The growing need for cost-effective and scalable data storage solutions

Adoption of cloud-first strategies by enterprises and governments

Rising investments in data center modernization and digital transformation

Advancements in 5G, IoT, and AI-driven analytics

Industry Forecast 2032: Size, Share & Growth Analysis

The cloud native storage market is projected to grow significantly over the next decade, driven by advancements in distributed storage architectures, AI-enhanced storage management, and increasing enterprise digitalization.

North America leads the market, followed by Europe and Asia-Pacific, with China and India emerging as key growth hubs.

The demand for software-defined storage (SDS), container-native storage, and data resiliency solutions will drive innovation and competition in the market.

Future Prospects and Opportunities

1. Expansion in Emerging Markets

Developing economies are expected to witness increased investment in cloud infrastructure and storage solutions.

2. AI and Machine Learning for Intelligent Storage

AI-powered storage analytics will enhance real-time data optimization and predictive storage management.

3. Blockchain for Secure Cloud Storage

Blockchain-based decentralized storage models will offer improved data security, integrity, and transparency.

4. Hyperconverged Infrastructure (HCI) Growth

Enterprises are adopting HCI solutions that integrate storage, networking, and compute resources.

5. Data Sovereignty and Compliance-Driven Solutions

The demand for region-specific, compliant storage solutions will drive innovation in data governance technologies.

Access Complete Report: https://www.snsinsider.com/reports/cloud-native-storage-market-3454

Conclusion

The cloud native storage market is poised for exponential growth, fueled by technological innovations, security enhancements, and enterprise digital transformation. As businesses embrace cloud, AI, and hybrid storage strategies, the future of cloud native storage will be defined by scalability, automation, and efficiency.

About Us:

SNS Insider is one of the leading market research and consulting agencies globally. Our aim is to give clients the knowledge they need to operate in changing circumstances. To provide current, accurate market data, consumer insights, and opinions that support confident decisions, we use a variety of techniques, including surveys, video talks, and focus groups around the world.

Contact Us:

Jagney Dave - Vice President of Client Engagement

Phone: +1-315 636 4242 (US) | +44- 20 3290 5010 (UK)

Text

Creating and Configuring Production ROSA Clusters (CS220) – A Practical Guide

Introduction

Red Hat OpenShift Service on AWS (ROSA) is a powerful managed Kubernetes solution that blends the scalability of AWS with the developer-centric features of OpenShift. Whether you're modernizing applications or building cloud-native architectures, ROSA provides a production-grade container platform with integrated support from Red Hat and AWS. In this blog post, we’ll walk through the essential steps covered in CS220: Creating and Configuring Production ROSA Clusters, an instructor-led course designed for DevOps professionals and cloud architects.

What is CS220?

CS220 is a hands-on, lab-driven course developed by Red Hat that teaches IT teams how to deploy, configure, and manage ROSA clusters in a production environment. It is tailored for organizations that are serious about leveraging OpenShift at scale with the operational convenience of a fully managed service.

Why ROSA for Production?

Deploying OpenShift through ROSA offers multiple benefits:

Streamlined Deployment: Fully managed clusters provisioned in minutes.

Integrated Security: AWS IAM, STS, and OpenShift RBAC policies combined.

Scalability: Elastic and cost-efficient scaling with built-in monitoring and logging.

Support: Joint support model between AWS and Red Hat.

Key Concepts Covered in CS220

Here’s a breakdown of the main learning outcomes from the CS220 course:

1. Provisioning ROSA Clusters

Participants learn how to:

Set up required AWS permissions and networking prerequisites.

Deploy clusters using Red Hat OpenShift Cluster Manager (OCM) or CLI tools like rosa and oc.

Use AWS Security Token Service (STS) to issue short-lived credentials for secure cluster access.

2. Configuring Identity Providers

Learn how to integrate Identity Providers (IdPs) such as:

GitHub, Google, LDAP, or corporate IdPs using OpenID Connect.

Configure secure, role-based access control (RBAC) for teams.
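As a rough illustration of the team-level RBAC mentioned above, a RoleBinding can grant a group supplied by the identity provider the built-in edit role in a single namespace. This is a minimal sketch, not material from the course: the group name payments-devs and the namespace payments are hypothetical placeholders.

```yaml
# Sketch only: binds an IdP group to the built-in "edit" ClusterRole
# inside one namespace. The group and namespace names are hypothetical.
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: payments-devs-edit
  namespace: payments
subjects:
  - kind: Group
    name: payments-devs            # group name as reported by the identity provider
    apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: ClusterRole
  name: edit                       # built-in role: read/write most namespaced objects
  apiGroup: rbac.authorization.k8s.io
```

Binding a ClusterRole through a namespaced RoleBinding keeps the permissions scoped to that namespace, which suits per-team access.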

3. Networking and Security Best Practices

Implement private clusters with public or private load balancers.

Enable end-to-end encryption for APIs and services.

Use Security Context Constraints (SCCs) and network policies for workload isolation.
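One common starting point for the workload isolation described above is a default-deny ingress policy per namespace. The sketch below assumes a hypothetical namespace called payments and a cluster network plugin that enforces NetworkPolicy.

```yaml
# Sketch only: denies all inbound traffic to every pod in the namespace
# until more specific policies allow it. The namespace is hypothetical.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: payments
spec:
  podSelector: {}        # empty selector: applies to every pod in the namespace
  policyTypes:
    - Ingress            # no ingress rules are listed, so all inbound traffic is denied
```

Additional policies can then allow only the traffic each workload actually needs.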

4. Storage and Data Management

Configure dynamic storage provisioning with AWS EBS, EFS, or external CSI drivers.

Learn persistent volume (PV) and persistent volume claim (PVC) lifecycle management.
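Dynamic provisioning with AWS EBS is usually expressed as a StorageClass that points at the EBS CSI driver. The following is a minimal sketch under assumptions: the class name ebs-gp3-retain is hypothetical, and the gp3 volume type, encryption, and Retain policy are illustrative choices rather than course recommendations.

```yaml
# Sketch only: a StorageClass for dynamically provisioned EBS volumes.
# The class name is hypothetical; tune parameters to your workload.
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ebs-gp3-retain
provisioner: ebs.csi.aws.com              # AWS EBS CSI driver
parameters:
  type: gp3                               # EBS volume type
  encrypted: "true"                       # encrypt volumes at rest
reclaimPolicy: Retain                     # keep the EBS volume after the PVC is deleted
volumeBindingMode: WaitForFirstConsumer   # provision in the zone where the pod is scheduled
allowVolumeExpansion: true                # allow PVCs to be resized later
```

A PVC that sets storageClassName to this class triggers creation of a matching PV on demand.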

5. Cluster Monitoring and Logging

Integrate OpenShift Monitoring Stack for health and performance insights.

Forward logs to Amazon CloudWatch, Elasticsearch, or third-party SIEM tools.

6. Cluster Scaling and Updates

Set up autoscaling for compute nodes.

Perform controlled updates and understand ROSA’s maintenance policies.

Use Cases for ROSA in Production

Modernizing Monoliths to Microservices

CI/CD Platform for Agile Development

Data Science and ML Workflows with OpenShift AI

Edge Computing with OpenShift on AWS Outposts

Getting Started with CS220

The CS220 course is ideal for:

DevOps Engineers

Cloud Architects

Platform Engineers

Prerequisites: Basic knowledge of OpenShift administration (recommended: DO280 or equivalent experience) and a working AWS account.

Course Format: Instructor-led (virtual or on-site), hands-on labs, and guided projects.

Final Thoughts

As more enterprises adopt hybrid and multi-cloud strategies, ROSA emerges as a strategic choice for running OpenShift on AWS with minimal operational overhead. CS220 equips your team with the right skills to confidently deploy, configure, and manage production-grade ROSA clusters — unlocking agility, security, and innovation in your cloud-native journey.

Want to Learn More or Book the CS220 Course? At HawkStack Technologies, we offer certified Red Hat training, including CS220, tailored for teams and enterprises. Contact us today to schedule a session or explore our Red Hat Learning Subscription packages. www.hawkstack.com

Text

Kubernetes was initially designed to support primarily stateless applications using ephemeral storage. However, it is now possible to use Kubernetes to build and manage stateful applications using persistent storage. Kubernetes offers the following components for persistent storage:

Kubernetes Persistent Volumes (PVs): a PV is a storage resource made available to a Kubernetes cluster. A PV can be provisioned statically by a cluster administrator or dynamically by Kubernetes. PVs are implemented through volume plugins, and each PV has its own lifecycle, independent of any individual pod that uses it.

PersistentVolumeClaim (PVC): a request for storage made by a user. A PVC specifies the desired size and access mode, and a control loop looks for a matching PV that can fulfill these requirements. If a match exists, the control loop binds the PV and PVC together and provides them to the user. If not, Kubernetes can dynamically provision a PV that meets the requirements.

Common Use Cases for Persistent Volumes

In the early days of containerization, containers were typically stateless. However, as Kubernetes architecture matured and container-based storage solutions were introduced, containers started to be used for stateful applications as well.

There are many benefits to running stateful applications in containers, including fast startup, high availability, and self-healing. It is also easier to store, maintain, and back up the data the application creates or uses. With a consistent data state, you can use Kubernetes for complex applications, not only for 12-factor web applications.

The most common use case for Kubernetes persistent volumes is databases. Applications that use databases must have constant access to this data. In a Kubernetes environment, this can be achieved by storing the database's data on a PV and mounting that PV into the pod that runs the database. PVs can back common databases like MySQL, Cassandra, and Microsoft SQL Server.
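To make the PVC description above concrete, here is a minimal sketch of a claim that requests a size and an access mode, plus a pod that mounts it for MySQL data. The names mysql-data, mysql, and mysql-secret are hypothetical, and the example assumes the cluster has a default StorageClass that can provision the volume.

```yaml
# Sketch only: a PVC requesting 10Gi with single-node read/write access,
# and a pod that mounts it. All names are hypothetical.
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: mysql-data
spec:
  accessModes:
    - ReadWriteOnce              # mounted read/write by a single node
  resources:
    requests:
      storage: 10Gi              # desired capacity
---
apiVersion: v1
kind: Pod
metadata:
  name: mysql
spec:
  containers:
    - name: mysql
      image: mysql:8.0
      env:
        - name: MYSQL_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mysql-secret       # hypothetical Secret created beforehand
              key: root-password
      volumeMounts:
        - name: data
          mountPath: /var/lib/mysql    # MySQL data directory
  volumes:
    - name: data
      persistentVolumeClaim:
        claimName: mysql-data          # the pod references the claim, not the PV
```

Because the pod refers only to the claim, the underlying PV can be replaced or re-provisioned without changing the pod spec.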
A Typical Process for Running Kubernetes Persistent Volumes

Here is the general process for deploying a database with a persistent volume:

Create pods to run the application, with proper configuration and environment variables

Create a persistent volume that stores the database's data, or configure Storage Classes to allow the cluster to create PVs on demand

Attach persistent volumes to pods via persistent volume claims

Applications running in the pods can now access the database

Initially, run the first pods manually and confirm that they connect to your persistent volumes correctly. Each new replica of the pod should mount its persistent volume. Once everything works, you can confidently scale your stateful pods to additional machines, ensuring that each of them receives a PV for stateful operations. If a pod fails, Kubernetes will automatically run a new one and attach the PV.

6 Kubernetes Persistent Volume Tips

Here are key tips for configuring a PV, as recommended by the Kubernetes documentation:

Configuration Best Practices for PVs

1. Prefer dynamic over static provisioning

Static provisioning can result in management overhead and inefficient scaling. You can avoid this issue by using dynamic provisioning. You still need to use storage classes to define a relevant reclaim policy. This setup enables you to minimize storage costs when pods are deleted.

2. Plan to use the right size of nodes

Each node supports a maximum number of attached volumes. Note that different node sizes support different types and capacities of local storage. This limitation means you need to plan to deploy the right node size for your application's expected demands.

3. Account for the independent lifecycle of PVs

PVs are independent of a particular container or pod, while PVCs are unique to a specific user. Each of these components has a unique lifecycle.
Here are best practices that can help you ensure that PVs and PVCs are properly utilized:

PVCs: always include them in a container's configuration.

PVs: never include them in a container's configuration. Including PVs in the container configuration tightly couples the container to a specific volume.

StorageClass: make sure the cluster defines a default storage class. PVCs that do not name a class fall back to the default, and they remain unbound if the cluster has no default class and no matching PV.

Descriptive names: always give StorageClasses descriptive names.

3 Security Practices for PVs

You should always harden the configuration of your storage system and Kubernetes cluster. Storage volumes may include sensitive information like credentials, private information, or trade secrets. Hardening helps ensure your volumes are visible or accessible only to the assigned pod. Here are common security practices that can help you harden storage and cluster configurations:

1. Never allow privileged pods

A privileged pod can potentially allow threats to reach the host and access unassigned storage. You must prevent unauthorized applications from using privileged pods. Prefer standard, unprivileged containers that cannot mount host volumes, and restrict all types of users, root or otherwise. You should also use pod security policies (removed in Kubernetes 1.25 in favor of Pod Security Admission) to prevent user applications from creating privileged containers.

2. Limit application users

Always limit application users to specific namespaces that have no cluster-level permissions. Only administrator users should manage PVs; regular users should never create, assign, manage, or destroy PVs. Additionally, users should not be able to view the details of their PVs; only cluster administrators should.

3. Use network policies

Network policies can help you prevent pods from accessing the storage network directly, which stops a pod from gathering information about the storage system before an attack can succeed. You should set up network policies using the host firewall or on a per-namespace basis to deny access to the storage network.
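The per-namespace approach from the network policy tip can be sketched as an egress policy that lets pods reach everything except the storage network. The namespace app-team and the CIDR 10.50.0.0/24 are hypothetical stand-ins for your own values, and the cluster's network plugin must enforce NetworkPolicy for this to take effect.

```yaml
# Sketch only: allows outbound traffic from all pods in the namespace
# except to the storage network. Namespace and CIDR are hypothetical.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-storage-network-egress
  namespace: app-team
spec:
  podSelector: {}                  # applies to every pod in the namespace
  policyTypes:
    - Egress
  egress:
    - to:
        - ipBlock:
            cidr: 0.0.0.0/0        # allow all destinations...
            except:
              - 10.50.0.0/24       # ...except the storage network range
```

Pods still reach their volumes through the node's storage stack, so blocking direct pod access to the storage network does not affect mounted PVs.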
Conclusion

In this article I covered the basics of Kubernetes persistent storage and presented 6 best practices that can help you work with PVs more effectively and securely:

Prefer dynamic over static provisioning of persistent volumes

Plan node size and capacity to support your persistent volumes

Take into account that PVs have an independent lifecycle

Do not allow privileged pods, to prevent security issues

Limit application users to protect sensitive data

Use network policies to prevent unauthorized access to PVs

I hope this will be useful as you build your first persistent applications in Kubernetes.

Text

Kubernetes Dashboard Tutorial: Visualize & Manage Your Cluster Like a Pro! 🔍📊

✔️ Learn how to install and launch the Kubernetes Dashboard

✔️ View real-time CPU & memory usage using Metrics Server 📈

✔️ Navigate through Workloads, Services, Configs, and Storage

✔️ Create and manage deployments using YAML or the UI 💻

✔️ Edit live resources and explore namespaces visually 🧭

✔️ Understand how access methods differ in local vs production clusters 🔐

✔️ Great for beginners, visual learners, or collaborative teams 🤝

👉 Whether you're debugging, deploying, or just learning Kubernetes, this dashboard gives you a GUI-first approach to mastering clusters!

Text

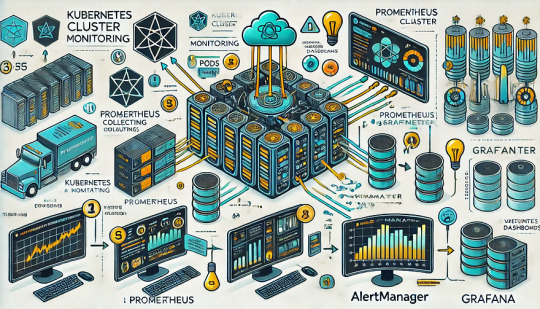

Monitoring Kubernetes Clusters with Prometheus and Grafana

Introduction

Kubernetes is a powerful container orchestration platform, but monitoring it is crucial for ensuring reliability, performance, and scalability.

Prometheus and Grafana are two of the most popular open-source tools for monitoring and visualizing Kubernetes clusters.

In this guide, we’ll walk you through setting up Prometheus and Grafana on Kubernetes and configuring dashboards for real-time insights.

Why Use Prometheus and Grafana for Kubernetes Monitoring?

Prometheus: The Monitoring Backbone

Collects metrics from Kubernetes nodes, pods, and applications.

Uses a powerful query language (PromQL) for analyzing data.

Supports alerting based on predefined conditions.

Grafana: The Visualization Tool

Provides rich dashboards with graphs and metrics.

Allows integration with multiple data sources (e.g., Prometheus, Loki, Elasticsearch).

Enables alerting and notification management.

Step 1: Installing Prometheus and Grafana on Kubernetes

Prerequisites

Before starting, ensure you have:

A running Kubernetes cluster

kubectl and Helm installed

1. Add the Prometheus Helm Chart Repository

Helm makes it easy to deploy Prometheus and Grafana using predefined configurations.

helm repo add prometheus-community https://prometheus-community.github.io/helm-charts
helm repo update

2. Install Prometheus and Grafana Using Helm

helm install prometheus prometheus-community/kube-prometheus-stack --namespace monitoring --create-namespace

This command installs:

✅ Prometheus: for collecting metrics

✅ Grafana: for visualization

✅ Alertmanager: for notifications

✅ Node-exporter: to collect system-level metrics

Step 2: Accessing Prometheus and Grafana

Once deployed, we need to access the Prometheus and Grafana web interfaces.

1. Accessing Prometheus

Check the Prometheus service:

kubectl get svc -n monitoring

Forward the Prometheus server port:

kubectl port-forward svc/prometheus-kube-prometheus-prometheus 9090 -n monitoring

Now, open http://localhost:9090 in your browser.

2. Accessing Grafana

Retrieve the Grafana admin password:

kubectl get secret --namespace monitoring prometheus-grafana -o jsonpath="{.data.admin-password}" | base64 --decode

Forward the Grafana service port:

kubectl port-forward svc/prometheus-grafana 3000:80 -n monitoring

Open http://localhost:3000, and log in with:

Username: admin

Password: (obtained from the previous command)

Step 3: Configuring Prometheus as a Data Source in Grafana

Open Grafana and navigate to Configuration > Data Sources.

Click Add data source and select Prometheus.

Set the URL to:

http://prometheus-kube-prometheus-prometheus.monitoring.svc.cluster.local:9090

Click Save & Test to verify the connection.
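The same data source can also be declared in a Grafana provisioning file instead of being added through the UI. The sketch below uses Grafana's data source provisioning format; where the file lives (for example under provisioning/datasources) depends on how your Grafana is deployed. Note that the kube-prometheus-stack chart typically provisions a Prometheus data source on its own, so this mainly applies to Grafana instances set up separately.

```yaml
# Sketch only: Grafana data source provisioning file that mirrors
# the manual steps above.
apiVersion: 1
datasources:
  - name: Prometheus
    type: prometheus
    access: proxy        # Grafana's backend proxies the queries
    url: http://prometheus-kube-prometheus-prometheus.monitoring.svc.cluster.local:9090
```

Keeping the data source in a file makes the setup reproducible across clusters.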

Step 4: Importing Kubernetes Dashboards into Grafana

Grafana provides ready-made dashboards for Kubernetes monitoring.

Go to Dashboards > Import.

Enter a dashboard ID from Grafana’s dashboard repository.

Example: Use 3119 for Kubernetes cluster monitoring.

Select Prometheus as the data source and click Import.

You’ll now have a real-time Kubernetes monitoring dashboard! 🎯
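If you prefer to keep the dashboard import in configuration, the Grafana subchart bundled with kube-prometheus-stack can download dashboards from grafana.com by ID. This is a sketch under assumptions: it relies on the subchart's dashboardProviders and dashboards values, the key kubernetes-cluster is a hypothetical name, and the data source is assumed to be called Prometheus.

```yaml
# values.yaml fragment (sketch): asks the Grafana subchart to fetch
# dashboard 3119 from grafana.com when Grafana starts.
grafana:
  dashboardProviders:
    dashboardproviders.yaml:
      apiVersion: 1
      providers:
        - name: default
          orgId: 1
          folder: ""
          type: file
          disableDeletion: false
          editable: true
          options:
            path: /var/lib/grafana/dashboards/default
  dashboards:
    default:                       # must match the provider name above
      kubernetes-cluster:          # hypothetical key; used as the dashboard file name
        gnetId: 3119               # dashboard ID from the import step above
        datasource: Prometheus     # assumption: name of the Prometheus data source
```

The fragment would be passed to helm upgrade with -f, alongside any other values you maintain for the release.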

Step 5: Setting Up Alerts in Prometheus and Grafana

Creating Prometheus Alerting Rules

Create a ConfigMap for alerts (alert-rules.yaml):

apiVersion: v1
kind: ConfigMap
metadata:
  name: alert-rules
  namespace: monitoring
data:
  alert.rules: |
    groups:
      - name: InstanceDown
        rules:
          - alert: InstanceDown
            expr: up == 0
            for: 5m
            labels:
              severity: critical
            annotations:
              summary: "Instance {{ $labels.instance }} is down"

Apply it to the cluster:

kubectl apply -f alert-rules.yaml
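With the kube-prometheus-stack chart, the Prometheus Operator typically loads alerting rules from PrometheusRule custom resources rather than from arbitrary ConfigMaps. Here is a sketch of the same InstanceDown rule in that form. It assumes the Helm release is named prometheus, as in the install command earlier, and that the chart's default rule selector matches the release label.

```yaml
# Sketch only: the InstanceDown alert expressed as a PrometheusRule.
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: instance-down
  namespace: monitoring
  labels:
    release: prometheus    # assumption: matches the chart's default rule selector
spec:
  groups:
    - name: InstanceDown
      rules:
        - alert: InstanceDown
          expr: up == 0    # the target failed its last scrape
          for: 5m          # must stay down for 5 minutes before firing
          labels:
            severity: critical
          annotations:
            summary: "Instance {{ $labels.instance }} is down"
```

It is applied with kubectl apply -f like any other manifest, and the rule should then appear under Alerts in the Prometheus UI.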

Configuring Grafana Alerts

Navigate to Alerting > Contact points (called Notification Channels in older Grafana versions).

Set up a channel (Email, Slack, or PagerDuty).

Define alert rules based on metrics from Prometheus.

Step 6: Enabling Persistent Storage for Prometheus

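By default, the chart keeps Prometheus data in an emptyDir volume, so metrics are lost when the Prometheus pod is rescheduled. To make the data persistent, configure storage:

Modify the Helm values (values.yaml):

prometheus:
  prometheusSpec:
    storageSpec:
      volumeClaimTemplate:
        spec:
          accessModes: ["ReadWriteOnce"]
          resources:
            requests:
              storage: 10Gi

Apply the changes:

helm upgrade prometheus prometheus-community/kube-prometheus-stack --namespace monitoring -f values.yaml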

Conclusion

In this guide, we’ve set up Prometheus and Grafana to monitor a Kubernetes cluster. You now have: ✅ Real-time dashboards in Grafana ✅ Prometheus alerts to detect failures ✅ Persistent storage for historical metrics

WEBSITE: https://www.ficusoft.in/devops-training-in-chennai/

Text

Best DevOps Certifications for Elevating Your Skills

DevOps is a rapidly growing field that bridges the gap between software development and IT operations, encouraging a culture of continuous integration, automation, and rapid delivery. Whether you're a novice looking to start your career in the field or a seasoned professional seeking to deepen your knowledge, earning a DevOps certification can greatly improve your job prospects.

This article discusses the most beneficial DevOps certifications to earn in 2025, which can help you build your skills and stay ahead in the ever-changing tech sector.

Why Get a DevOps Certification?

DevOps certifications demonstrate your expertise and experience in automation, cloud computing, CI/CD pipelines, infrastructure as code, and more. Here is why getting certified is useful:

Improved career opportunities: Certified DevOps professionals are highly sought after as top companies look for experienced engineers.

Higher salary potential: Certified DevOps engineers often earn considerably higher salaries than professionals who are not certified.

A stronger skill set: Certifications can help you master tools such as Kubernetes, Docker, Ansible, Jenkins, and Terraform.

Better job security: With the rapid adoption of DevOps methods, certified professionals hold a stronger position in the market.

Top DevOps Certifications in 2025

1. AWS Certified DevOps Engineer – Professional

Best for: Cloud DevOps Engineers

Skills Covered: AWS automation, CI/CD pipelines, logging, and monitoring

Cost: $300

Why Choose This Certification? AWS is a dominant cloud provider, and this certification validates your expertise in implementing DevOps practices within AWS environments. It's ideal for those working with AWS services like EC2, Lambda, CloudFormation, and CodePipeline.

2. Microsoft Certified: DevOps Engineer Expert

Best for: Azure DevOps Professionals

Skills Covered: Azure DevOps, CI/CD, security, and monitoring

Cost: $165

Why Choose This Certification? If you're working in Microsoft Azure environments, this certification is a must. It covers using Azure DevOps Services, infrastructure as code with ARM templates, and managing Kubernetes deployments in Azure.

3. Google Cloud Professional DevOps Engineer

Best for: Google Cloud DevOps Engineers

Skills Covered: GCP infrastructure, CI/CD, monitoring, and incident response

Cost: $200

Why Choose This Certification? Google Cloud is growing in popularity, and this certification helps DevOps professionals validate their skills in site reliability engineering (SRE) and continuous delivery using GCP.

4. Kubernetes Certifications (CKA & CKAD)

Best for: Kubernetes Administrators & Developers

Skills Covered: Kubernetes cluster management, networking, and security

Cost: $395 each

Why Choose These Certifications? Kubernetes is the backbone of container orchestration, making these certifications essential for DevOps professionals dealing with cloud-native applications and containerized deployments.

CKA (Certified Kubernetes Administrator): Best for managing Kubernetes clusters.

CKAD (Certified Kubernetes Application Developer): Best for developers deploying apps in Kubernetes.

5. Docker Certified Associate (DCA)

Best for: Containerization Experts

Skills Covered: Docker containers, Swarm, storage, and networking

Cost: $195

Why Choose This Certification? Docker is a key component of DevOps workflows. The DCA certification helps you gain expertise in containerized applications, orchestration, and security best practices.

6. HashiCorp Certified Terraform Associate

Best for: Infrastructure as Code (IaC) Professionals

Skills Covered: Terraform, infrastructure automation, and cloud deployments

Cost: $70

Why Choose This Certification? Terraform is the leading Infrastructure as Code (IaC) tool. This certification proves your ability to manage cloud infrastructure efficiently across AWS, Azure, and GCP.

7. Red Hat Certified Specialist in Ansible Automation

Best for: Automation Engineers

Skills Covered: Ansible playbooks, configuration management, and security

Cost: $400

Why Choose This Certification? If you work with IT automation and configuration management, this certification validates your Ansible skills, making you a valuable asset for enterprises looking to streamline operations.

8. DevOps Institute Certifications (DASM, DOFD, and DOL)

Best for: DevOps Leadership & Fundamentals

Skills Covered: DevOps culture, processes, and automation best practices

Cost: Varies

Why Choose These Certifications? The DevOps Institute offers various certifications focusing on foundational DevOps knowledge, agile methodologies, and leadership skills, making them ideal for beginners and managers.

How to Choose the Right DevOps Certification?

Consider the following factors when selecting a DevOps certification course online:

Your Experience Level: Beginners may start with Docker, Terraform, or DevOps Institute certifications, while experienced professionals can pursue AWS, Azure, or Kubernetes certifications.

Your Career Goals: If you work with a specific cloud provider, choose AWS, Azure, or GCP certifications. For automation, go with Terraform or Ansible.

Industry Demand: Certifications like AWS DevOps Engineer, Kubernetes, and Terraform have high industry demand and can boost your career.

Cost & Time Commitment: Some certifications require hands-on experience, practice labs, and exams, so choose one that fits your schedule and budget.

Final Thoughts

DevOps certifications can increase your technical knowledge, improve your chances of getting a job, and raise your earning potential. Whether you want to specialize in cloud platforms, automation, or containerization, you can find an option suited to your career path, including Cloud Computing Certification Courses.

By earning at least one of these well-regarded DevOps certifications in 2025, you can establish yourself as a professional with a solid background in a rapidly changing industry.

Text

What Oracle Skills Are Currently In High Demand?

Introduction

Oracle technologies dominate the enterprise software landscape, with businesses worldwide relying on Oracle databases, cloud solutions, and applications to manage their data and operations. As a result, professionals with Oracle expertise are in high demand. Whether you are an aspiring Oracle professional or looking to upgrade your skills, understanding the most sought-after Oracle competencies can enhance your career prospects. Here are the top Oracle skills currently in high demand.

Oracle Database Administration (DBA)

Oracle Database Administrators (DBAs) are crucial in managing and maintaining Oracle databases. Unlock the world of database management with our Oracle Training in Chennai at Infycle Technologies. Organizations seek professionals to ensure optimal database performance, security, and availability. Key skills in demand include:

Database installation, configuration, and upgrades

Performance tuning and optimization

Backup and recovery strategies using RMAN

High-availability solutions such as Oracle RAC (Real Application Clusters)

Security management, including user access control and encryption

SQL and PL/SQL programming for database development

Oracle Cloud Infrastructure (OCI)

With businesses rapidly moving to the cloud, Oracle Cloud Infrastructure (OCI) has become a preferred choice for many enterprises. Professionals skilled in OCI can help organizations deploy, manage, and optimize cloud environments. Key areas of expertise include:

Oracle Cloud Architecture and Networking

OCI Compute, Storage, and Database services

Identity and Access Management (IAM)

Oracle Kubernetes Engine (OKE)

Security Best Practices and Compliance in Cloud Environments

Migration strategies from on-premises databases to OCI

Oracle SQL And PL/SQL Development

Oracle SQL and PL/SQL are fundamental skills for database developers, analysts, and administrators. Companies need professionals who can:

Write efficient SQL queries for data retrieval & manipulation

Develop PL/SQL procedures, triggers, and packages

Optimize database performance with indexing and query tuning

Work with advanced SQL analytics functions and data modelling

Implement automation using PL/SQL scripts

Oracle ERP And E-Business Suite (EBS)

Enterprise Resource Planning (ERP) solutions from Oracle, such as Oracle E-Business Suite (EBS) and Oracle Fusion Cloud ERP, are widely used by organizations to manage business operations. Professionals with experience in these areas are highly sought after. Essential skills include:

ERP implementation and customization

Oracle Financials, HRMS, and Supply Chain modules

Oracle Workflow and Business Process Management

Reporting and analytics using Oracle BI Publisher

Integration with third-party applications

Oracle Fusion Middleware

Oracle Fusion Middleware is a comprehensive software suite that facilitates application integration, business process automation, and security. The job market highly values professionals with experience in:

Oracle WebLogic Server administration

Oracle SOA Suite (Service-Oriented Architecture)

Oracle Identity and Access Management (IAM)

Oracle Data Integration and ETL tools

Java EE and Oracle Application Development Framework (ADF)

Oracle BI (Business Intelligence) And Analytics

Data-driven decision-making is critical for modern businesses, and Oracle Business Intelligence (BI) solutions help organizations derive insights from their data. In-demand skills include:

Oracle BI Enterprise Edition (OBIEE)

Oracle Analytics Cloud (OAC)

Data warehousing concepts and ETL processes

Oracle Data Visualization and Dashboarding

Advanced analytics using machine learning and AI tools within Oracle BI

Oracle Exadata And Performance Tuning

Oracle Exadata is a high-performance engineered system designed for large-scale database workloads. Professionals skilled in Oracle Exadata and performance tuning are in great demand. Essential competencies include:

Exadata architecture and configuration

Smart Flash Cache and Hybrid Columnar Compression (HCC)

Exadata performance tuning techniques

Storage indexing and SQL query optimization

Integration with Oracle Cloud for hybrid cloud environments

Oracle Security And Compliance

With cybersecurity threats increasing, organizations need Oracle professionals who can ensure database and application security. Key security-related Oracle skills include:

Oracle Data Safe and Database Security Best Practices

Oracle Audit Vault and Database Firewall

Role-based access control (RBAC) implementation

Encryption and Data Masking techniques

Compliance with regulations like GDPR and HIPAA

Oracle DevOps And Automation

DevOps practices have become essential for modern software development and IT operations. Enhance your journey toward a successful career in software development with Infycle Technologies, the Best Software Training Institute in Chennai. Oracle professionals with DevOps expertise are highly valued for their ability to automate processes and ensure continuous integration and deployment (CI/CD). Relevant skills include:

Oracle Cloud DevOps tools and automation frameworks

Terraform for Oracle Cloud Infrastructure provisioning

CI/CD pipeline implementation using Jenkins and GitHub Actions

Infrastructure as Code (IaC) practices with Oracle Cloud

Monitoring and logging using Oracle Cloud Observability tools

Oracle AI And Machine Learning Integration

Artificial intelligence (AI) and machine learning (ML) are transforming business operations, and Oracle has integrated AI/ML capabilities into its products. Professionals are in high demand for data-driven decision-making roles when they have expertise in:

Oracle Machine Learning (OML) for databases

AI-driven analytics in Oracle Analytics Cloud

Chatbots and AI-powered automation using Oracle Digital Assistant

Data Science and Big Data processing with Oracle Cloud

Conclusion

The demand for Oracle professionals grows as businesses leverage Oracle's powerful database, cloud, and enterprise solutions. Whether you are a database administrator, cloud engineer, developer, or security expert, acquiring the right Oracle skills can enhance your career opportunities and keep you ahead in the competitive job market. You can position yourself as a valuable asset in the IT industry by focusing on high-demand skills such as Oracle Cloud Infrastructure, database administration, ERP solutions, and AI/ML integration. If you want to become an expert in Oracle technologies, consider enrolling in Oracle certification programs, attending workshops, and gaining hands-on experience to strengthen your skill set and stay ahead in the industry.


Text

Top Cloud Computing Certification Courses To Boost Your Skills In 2025

Cloud computing plays a critical role in today’s digital world, and industries now depend on cloud technologies to streamline processes and speed up software delivery. Whatever your job role (cloud engineer, DevOps professional, or IT administrator), cloud computing certification courses are open to you and offer a structured learning experience with in-depth theoretical coverage. This article lists the courses that are often regarded as the most reputable in 2025.

AWS Certified: Solutions Architect Associate- This Amazon Web Services (AWS) credential is among the most respected certifications in the cloud computing industry. It validates your ability to design scalable and cost-efficient cloud solutions on AWS. It covers AWS architectural best practices, security and compliance, cost optimization, and AWS storage, networking, and computing services. It is ideal for cloud engineers, architects, and IT experts transitioning to cloud computing.

Microsoft Certified: Azure Solutions Architect Expert- Microsoft Azure is one of the leading cloud service providers, and this credential is essential for specialists working in Microsoft-based cloud environments. It validates expertise in designing and implementing solutions on Microsoft Azure. The key topics include security and identity solutions, data storage and networking solutions, business continuity strategies, and monitoring and optimizing Azure solutions. This certification is well suited to cloud solution architects and IT professionals with Azure experience.

Google Cloud: Professional Cloud Architect- This certification is ideal for professionals who want to design, develop, and manage Google Cloud solutions. It covers designing and planning cloud architecture, managing and provisioning cloud solutions, designing for security and compliance, and analyzing and optimizing business processes. It is the best fit for IT professionals and cloud engineers working with Google Cloud.

Certified Kubernetes Administrator (CKA)- Kubernetes is a pivotal technology for container orchestration in cloud environments. The CKA certification demonstrates your ability to deploy, manage, and troubleshoot Kubernetes clusters. It covers Kubernetes architecture, cluster installation and configuration, networking, security, troubleshooting, and managing and scheduling workloads. DevOps engineers and cloud-native application developers can pursue this program.

CompTIA Cloud+ - This vendor-neutral certification offers a foundational understanding of cloud technologies. It is ideal for IT experts seeking a broad cloud computing knowledge base. It covers cloud deployment, cloud management, security, compliance, cloud infrastructure, and troubleshooting cloud environments. It is a great option for IT professionals who are new to cloud computing, including those interested in Python programming for DevOps.

AWS Certified: DevOps Engineer Professional - For those focusing on Python programming for DevOps within AWS, this certification is highly regarded. It validates skills in continuous integration, monitoring, and deployment strategies. The key topics include continuous integration/continuous deployment (CI/CD), infrastructure as code, monitoring and logging, and security and compliance automation. It is suited to DevOps engineers and cloud automation professionals.

Microsoft Certified: Azure DevOps Engineer Expert- This certification is designed for professionals who implement DevOps strategies in Azure. It covers agile development, CI/CD, and infrastructure automation, including Azure Pipelines and Azure Repos, infrastructure as code using ARM templates, and continuous monitoring and feedback. DevOps engineers using Azure and IT professionals focusing on automation can join this program.

Google Cloud Professional DevOps Engineer- This certification is suitable for individuals who specialize in deploying and maintaining applications on Google Cloud using DevOps methodologies. It covers site reliability engineering principles, CI/CD pipeline implementation, service monitoring and incident response, and security. This credential is well suited to cloud operations professionals and DevOps engineers.

Certified Cloud Security Professional (CCSP)- Cloud security is a pressing concern for businesses. The CCSP certification from (ISC)2 validates expertise in securing cloud environments. It covers cloud security architecture and design, risk management and compliance, identity and access management (IAM), and cloud application security. It is ideal for security analysts and IT experts focusing on cloud security.

IBM Certified: Solutions Architect Cloud Pak For Data- IBM Cloud Pak for Data is gaining traction in artificial intelligence-driven cloud solutions, and this certification is best fitted for professionals working with IBM cloud technologies. It covers data governance and security, AI and machine learning integration, cloud-native application development, and hybrid cloud strategies. It is best suited to data architects and AI/cloud professionals.

With rapid progress in cloud technologies, staying competitive requires continuous learning and skill development. Certifications offer a structured pathway to mastering cloud platforms, tools, and best practices. As businesses move towards digitalization, cloud computing remains an important element of IT strategy. By obtaining an industry-recognized certification, you can future-proof your career and improve your chances of landing high-paying job opportunities.

Conclusion

Cloud computing certification courses can significantly impact your career in 2025. Whether you want to specialize in cloud computing broadly or in DevOps methodologies specifically, the courses listed above cover both. Pursuing one of these certifications can strengthen your profile for higher compensation. When selecting one, choose the certification that best matches your career goals.
