#no code ml tools

Looking for No-Code AI solutions? NextBrain AI provides user-friendly machine learning tools that empower businesses to harness the power of AI without coding.

#no code deep learning#no code ml#no code ml tools#brain ai#no-code machine learning open-source#no code ml platforms#no code ml platform#no code machine learning tools

Aaron Kesler, Sr. Product Manager, AI/ML at SnapLogic – Interview Series

New Post has been published on https://thedigitalinsider.com/aaron-kesler-sr-product-manager-ai-ml-at-snaplogic-interview-series/

Aaron Kesler, Sr. Product Manager, AI/ML at SnapLogic, is a certified product leader with over a decade of experience building scalable frameworks that blend design thinking, jobs-to-be-done, and product discovery. He focuses on developing new AI-driven products and processes while mentoring aspiring PMs through his blog and coaching on strategy, execution, and customer-centric development.

SnapLogic is an AI-powered integration platform that helps enterprises connect applications, data, and APIs quickly and efficiently. With its low-code interface and intelligent automation, SnapLogic enables faster digital transformation across data engineering, IT, and business teams.

You’ve had quite the entrepreneurial journey, starting STAK in college and going on to be acquired by Carvertise. How did those early experiences shape your product mindset?

This was a really interesting time in my life. My roommate and I started STAK because we were bored with our coursework and wanted real-world experience. We never imagined it would lead to us getting acquired by what became Delaware’s poster startup. That experience really shaped my product mindset because I naturally gravitated toward talking to businesses, asking them about their problems, and building solutions. I didn’t even know what a product manager was back then—I was just doing the job.

At Carvertise, I started doing the same thing: working with their customers to understand pain points and develop solutions—again, well before I had the PM title. As an engineer, your job is to solve problems with technology. As a product manager, your job shifts to finding the right problems—the ones that are worth solving because they also drive business value. As an entrepreneur, especially without funding, your mindset becomes: how do I solve someone’s problem in a way that helps me put food on the table? That early scrappiness and hustle taught me to always look through different lenses. Whether you’re at a self-funded startup, a VC-backed company, or a healthcare giant, Maslow’s “basic need” mentality will always be the foundation.

You talk about your passion for coaching aspiring product managers. What advice do you wish you had when you were breaking into product?

The best advice I ever got—and the advice I give to aspiring PMs—is: “If you always argue from the customer’s perspective, you’ll never lose an argument.” That line is deceptively simple but incredibly powerful. It means you need to truly understand your customer—their needs, pain points, behavior, and context—so you’re not just showing up to meetings with opinions, but with insights. Without that, everything becomes HiPPO (highest-paid person’s opinion), a battle of who has more power or louder opinions. With it, you become the person people turn to for clarity.

You’ve previously stated that every employee will soon work alongside a dozen AI agents. What does this AI-augmented future look like in a day-to-day workflow?

What may be interesting is that we are already in a reality where people are working with multiple AI agents – we’ve helped customers like DCU plan, build, test, safeguard, and deploy dozens of agents to help their workforce. What’s fascinating is that companies are building out organization charts of AI coworkers for each employee, based on their needs. For example, employees will have their own AI agents dedicated to certain use cases, such as an agent for drafting epics/user stories, one that assists with coding, prototyping, or opening pull requests, and another that analyzes customer feedback – all sanctioned and orchestrated by IT, because a lot happens on the backend to determine who has access to which data, which agents need to adhere to governance guidelines, and so on. I don’t believe agents will replace humans, yet. There will be a human in the loop for the foreseeable future, but agents will remove the repetitive, low-value tasks so people can focus on higher-level thinking. In five years, I expect most teams will rely on agents the same way we rely on Slack or Google Docs today.

How do you recommend companies bridge the AI literacy gap between technical and non-technical teams?

Start small, have a clear plan for how this fits in with your data and application integration strategy, keep it hands-on to catch any surprises, and be open to iterating on the original goals and approach. Find problems by getting curious about the mundane tasks in your business. The highest-value problems to solve are often the boring ones that the unsung heroes are solving every day. We learned a lot of these best practices firsthand as we built agents to assist our SnapLogic finance department. The most important approach is to make sure you have secure guardrails on what types of data and applications certain employees or departments have access to.

Then companies should treat it like a college course: explain key terms simply, give people a chance to try tools themselves in controlled environments, and then follow up with deeper dives. We also make it known that it is okay not to know everything. AI is evolving fast, and no one’s an expert in every area. The key is helping teams understand what’s possible and giving them the confidence to ask the right questions.

What are some effective strategies you’ve seen for AI upskilling that go beyond generic training modules?

The best approach I’ve seen is letting people get their hands on it. Training is a great start, but you need to show them how AI actually helps with the work they’re already doing. From there, treat this as a sanctioned approach to shadow IT, or shadow agents, because employees are creative in finding solutions to very particular problems that only they have. We gave our field team and non-technical teams access to AgentCreator, SnapLogic’s agentic AI technology that eliminates the complexity of enterprise AI adoption, and empowered them to try building something and to report back with questions. This exercise led to real learning experiences because it was tied to their day-to-day work.

Do you see a risk in companies adopting AI tools without proper upskilling—what are some of the most common pitfalls?

The biggest risks I’ve seen are substantial governance and/or data security violations, which can lead to costly regulatory fines and potentially put customers’ data at risk. However, some of the most frequent risks I see are companies adopting AI tools without fully understanding what they are and are not capable of. AI isn’t magic. If your data is a mess or your teams don’t know how to use the tools, you’re not going to see value. Another issue is when organizations push adoption from the top down and don’t take into consideration the people actually executing the work. You can’t just roll something out and expect it to stick. You need champions to educate and guide folks; teams need a strong data strategy, the time and context to put up guardrails, and space to learn.

At SnapLogic, you’re working on new product development. How does AI factor into your product strategy today?

AI and customer feedback are at the heart of our product innovation strategy. It’s not just about adding AI features, it’s about rethinking how we can continually deliver more efficient and easy-to-use solutions for our customers that simplify how they interact with integrations and automation. We’re building products with both power users and non-technical users in mind—and AI helps bridge that gap.

How does SnapLogic’s AgentCreator tool help businesses build their own AI agents? Can you share a use case where this had a big impact?

AgentCreator is designed to help teams build real, enterprise-grade AI agents without writing a single line of code. It eliminates the need for experienced Python developers to build LLM-based applications from scratch and empowers teams across finance, HR, marketing, and IT to create AI-powered agents in just hours using natural language prompts. These agents are tightly integrated with enterprise data, so they can do more than just respond. Integrated agents automate complex workflows, reason through decisions, and act in real time, all within the business context.

AgentCreator has been a game-changer for our customers like Independent Bank, which used AgentCreator to launch voice and chat assistants to reduce the IT help desk ticket backlog and free up IT resources to focus on new GenAI initiatives. In addition, benefits administration provider Aptia used AgentCreator to automate one of its most manual and resource-intensive processes: benefits elections. What used to take hours of backend data entry now takes minutes, thanks to AI agents that streamline data translation and validation across systems.

SnapGPT allows integration via natural language. How has this democratized access for non-technical users?

SnapGPT, our integration copilot, is a great example of how GenAI is breaking down barriers in enterprise software. With it, users ranging from non-technical to technical can describe the outcome they want using simple natural language prompts, like asking to connect two systems or trigger a workflow, and the integration is built for them. SnapGPT goes beyond building integration pipelines: users can describe pipelines, create documentation, generate SQL queries and expressions, and transform data from one format to another with a simple prompt. It turns what was once a developer-heavy process into something accessible to employees across the business. It’s not just about saving time—it’s about shifting who gets to build. When more people across the business can contribute, you unlock faster iteration and more innovation.

What makes SnapLogic’s AI tools—like AutoSuggest and SnapGPT—different from other integration platforms on the market?

SnapLogic is the first generative integration platform that continuously unlocks the value of data across the modern enterprise at unprecedented speed and scale. With the ability to build cutting-edge GenAI applications in just hours — without writing code — along with SnapGPT, the first and most advanced GenAI-powered integration copilot, organizations can vastly accelerate business value. Other competitors’ GenAI capabilities are lacking or nonexistent. Unlike much of the competition, SnapLogic was born in the cloud and is purpose-built to manage the complexities of cloud, on-premises, and hybrid environments.

SnapLogic offers iterative development features, including automated validation and schema-on-read, which empower teams to finish projects faster. These features enable more integrators of varying skill levels to get up and running quickly, unlike competitors that mostly require highly skilled developers, which can slow down implementation significantly. SnapLogic is a highly performant platform that processes over four trillion documents monthly and can efficiently move data to data lakes and warehouses, while some competitors lack support for real-time integration and cannot support hybrid environments.

What excites you most about the future of product management in an AI-driven world?

What excites me most about the future of product management is the rise of one of the latest buzzwords to grace the AI space, “vibe coding”: the ability to build working prototypes using natural language. I envision a world where everyone in the product trio (design, product management, and engineering) is hands-on with tools that translate ideas into real, functional solutions in real time. Instead of relying solely on engineers and designers to bring ideas to life, everyone will be able to create and iterate quickly.

Imagine being on a customer call and, in the moment, prototyping a live solution using their actual data. Instead of just listening to their proposed solutions, we could co-create with them and uncover better ways to solve their problems. This shift will make the product development process dramatically more collaborative, creative, and aligned. And that excites me because my favorite part of the job is building alongside others to solve meaningful problems.

Thank you for the great interview; readers who wish to learn more should visit SnapLogic.

#Administration#adoption#Advice#agent#Agentic AI#agents#ai#AI adoption#AI AGENTS#AI technology#ai tools#AI-powered#AI/ML#APIs#application integration#applications#approach#assistants#automation#backlog#bank#Behavior#Blog#Born#bridge#Building#Business#charts#Cloud#code

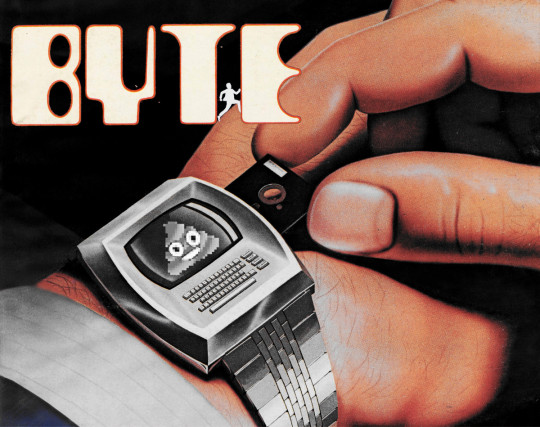

There Were Always Enshittifiers

I'm on a 20+ city book tour for my new novel PICKS AND SHOVELS. Catch me in DC TONIGHT (Mar 4), and in RICHMOND TOMORROW (Mar 5). More tour dates here. Mail-order signed copies from LA's Diesel Books.

My latest Locus column is "There Were Always Enshittifiers." It's a history of personal computing and networked communications that traces the earliest days of the battle for computers as tools of liberation and computers as tools for surveillance, control and extraction:

https://locusmag.com/2025/03/commentary-cory-doctorow-there-were-always-enshittifiers/

The occasion for this piece is the publication of my latest Martin Hench novel, a standalone book set in the early 1980s called "Picks and Shovels":

https://us.macmillan.com/books/9781250865908/picksandshovels

The MacGuffin of Picks and Shovels is a "weird PC" company called Fidelity Computing, owned by a Mormon bishop, a Catholic priest, and an orthodox rabbi. It sounds like the setup for a joke, but the punchline is deadly serious: Fidelity Computing is a pyramid selling cult that preys on the trust and fellowship of faith groups to sell the dreadful Fidelity 3000 PC and its ghastly peripherals.

You see, Fidelity's products are booby-trapped. It's not merely that they ship with programs whose data-files can't be read by apps on any other system – that's just table stakes. Fidelity's got a whole bag of tricks up its sleeve – for example, it deliberately damages a specific sector on every floppy disk it ships. The drivers for its floppy drive initialize any read or write operation by checking to see if that sector can be read. If it can, the computer refuses to recognize the disk. This lets the Reverend Sirs (as Fidelity's owners style themselves) run a racket where they sell these deliberately damaged floppies at a 500% markup, because regular floppies won't work on the systems they lure their parishioners into buying.

Or take the Fidelity printer: it's just a rebadged Okidata ML-80, the workhorse tractor feed printer that led the market for years. But before Fidelity ships this printer to its customers, they fit it with new tractor feed sprockets whose pins are slightly more widely spaced than the standard 0.5" holes on the paper you can buy in any stationery store. That way, Fidelity can force its customers to buy the custom paper that they exclusively peddle – again, at a massive markup.

Needless to say, printing with these wider sprocket holes causes frequent jams and puts a serious strain on the printer's motors, causing them to burn out at a high rate. That's great news – for Fidelity Computing. It means they get to sell you more overpriced paper so you can reprint the jobs ruined by jams, and they can also sell you their high-priced, exclusive repair services when your printer's motors quit.

Perhaps you're thinking, "OK, but I can just buy a normal Okidata printer and use regular, cheap paper, right?" Sorry, the Reverend Sirs are way ahead of you: they've reversed the pinouts on their printers' serial ports, and a normal printer won't be able to talk to your Fidelity 3000.

If all of this sounds familiar, it's because these are the paleolithic ancestors of today's high-tech lock-in scams, from HP's $10,000/gallon ink to Apple and Google's mobile app stores, which cream a 30% commission off of every dollar collected by an app maker. What's more, these ancient, weird misfeatures have their origins in the true history of computing, which was obsessed with making the elusive, copy-proof floppy disk.

This Quixotic enterprise got started in earnest with Bill Gates' notorious 1976 "open letter to hobbyists" in which the young Gates furiously scolds the community of early computer hackers for its scientific ethic of publishing, sharing and improving the code that they all wrote:

https://en.wikipedia.org/wiki/An_Open_Letter_to_Hobbyists

Gates had recently cloned the BASIC programming language for the popular Altair computer. For Gates, his act of copying was part of the legitimate progress of technology, while the copying of his colleagues, who duplicated Gates' Altair BASIC, was a shameless act of piracy, destined to destroy the nascent computing industry:

As the majority of hobbyists must be aware, most of you steal your software. Hardware must be paid for, but software is something to share. Who cares if the people who worked on it get paid?

Needless to say, Gates didn't offer a royalty to John Kemeny and Thomas Kurtz, the programmers who'd invented BASIC at Dartmouth College in 1963. For Gates – and his intellectual progeny – the formula was simple: "When I copy you, that's progress. When you copy me, that's piracy." Every pirate wants to be an admiral.

For would-be ex-pirate admirals, Gates's ideology was seductive. There was just one fly in the ointment: computers operate by copying. The only way a computer can run a program is to copy it into memory – just as the only way your phone can stream a video is to download it to its RAM ("streaming" is a consensus hallucination – every stream is a download, and it has to be, because the internet is a data-transmission network, not a cunning system of tubes and mirrors that can make a picture appear on your screen without transmitting the file that contains that image).

Gripped by this enshittificatory impulse, the computer industry threw itself headfirst into the project of creating copy-proof data, a project about as practical as making water that's not wet. That weird gimmick where Fidelity floppy disks were deliberately damaged at the factory so the OS could distinguish between its expensive disks and the generic ones you bought at the office supply place? It's a lightly fictionalized version of the copy-protection system deployed by Visicalc, a move that was later publicly repudiated by Visicalc co-founder Dan Bricklin, who lamented that it confounded his efforts to preserve his software on modern systems and recover the millions of data-files that Visicalc users created:

http://www.bricklin.com/robfuture.htm

The copy-protection industry ran on equal parts secrecy and overblown sales claims about its products' efficacy. As a result, much of the story of this doomed effort is lost to history. But back in 2017, a redditor called Vadermeer unearthed a key trove of documents from this era, in a Goodwill Outlet store in Seattle:

https://www.reddit.com/r/VintageApple/comments/5vjsow/found_internal_apple_memos_about_copy_protection/

Vadermeer's find was an Apple Computer binder from 1979, documenting the company's doomed "Software Security from Apple's Friends and Enemies" (SSAFE) project, an effort to make a copy-proof floppy:

https://archive.org/details/AppleSSAFEProject

The SSAFE files are an incredible read. They consist of Apple's best engineers beavering away for days, cooking up a new copy-proof floppy, which they would then hand over to Apple co-founder and legendary hardware wizard Steve Wozniak. Wozniak would then promptly destroy the copy-protection system, usually in a matter of minutes or hours. Wozniak, of course, got the seed capital for Apple by defeating AT&T's security measures, building a "blue box" that let its user make toll-free calls and peddling it around the dorms at Berkeley:

https://512pixels.net/2018/03/woz-blue-box/

Woz has stated that without blue boxes, there would never have been an Apple. Today, Apple leads the charge to restrict how you use your devices, confining you to using its official app store so it can skim a 30% vig off every dollar you spend, and corralling you into using its expensive repair depots, who love to declare your device dead and force you to buy a new one. Every pirate wants to be an admiral!

https://www.vice.com/en/article/tim-cook-to-investors-people-bought-fewer-new-iphones-because-they-repaired-their-old-ones/

Revisiting the early PC years for Picks and Shovels isn't just an excuse to bust out some PC nostalgiacore set-dressing. Picks and Shovels isn't just a fast-paced crime thriller: it's a reflection on the enshittificatory impulses that were present at the birth of the modern tech industry.

But there is a nostalgic streak in Picks and Shovels, of course, represented by the other weird PC company in the tale. Computing Freedom is a scrappy PC startup founded by three women who came up as sales managers for Fidelity, before their pangs of conscience caused them to repent of their sins in luring their co-religionists into the Reverend Sirs' trap.

These women – an orthodox lesbian whose family disowned her, a nun who left her order after discovering the liberation theology movement, and a Mormon woman who has quit the church over its opposition to the Equal Rights Amendment – have set about the Wozniakian project of reverse-engineering every piece of Fidelity hardware and software, to make compatible products that set Fidelity's caged victims free.

They're making floppies that work with Fidelity drives, and drives that work with Fidelity's floppies. Printers that work with Fidelity computers, and adapters so Fidelity printers will work with other PCs (as well as resprocketing kits to retrofit those printers for standard paper). They're making file converters that allow Fidelity owners to read their data in Visicalc or Lotus 1-2-3, and vice-versa.

In other words, they're engaged in "adversarial interoperability" – hacking their own fire-exits into the burning building that Fidelity has locked its customers inside of:

https://www.eff.org/deeplinks/2019/10/adversarial-interoperability

This was normal, back then! There were so many cool, interoperable products and services around then, from the Bell and Howell "Black Apple" clones:

https://forum.vcfed.org/index.php?threads%2Fbell-howell-apple-ii.64651%2F

to the amazing copy-protection cracking disks that traveled from hand to hand, so the people who shelled out for expensive software delivered on fragile floppies could make backups against the inevitable day that the disks stopped working:

https://en.wikipedia.org/wiki/Bit_nibbler

Those were wild times, when engineers pitted their wits against one another in the spirit of Steve Wozniak and SSAFE. That era came to a close – but not because someone finally figured out how to make data that you couldn't copy. Rather, it ended because an unholy coalition of entertainment and tech industry lobbyists convinced Congress to pass the Digital Millennium Copyright Act in 1998, which made it a felony to "bypass an access control":

https://www.eff.org/deeplinks/2016/07/section-1201-dmca-cannot-pass-constitutional-scrutiny

That's right: at the first hint of competition, the self-described libertarians who insisted that computers would make governments obsolete went running to the government, demanding a state-backed monopoly that would put their rivals in prison for daring to interfere with their business model. Plus ça change: today, their intellectual descendants are demanding that the US government bail out their "anti-state," "independent" cryptocurrency:

https://www.citationneeded.news/issue-78/

In truth, the politics of tech has always contained a faction of "anti-government" millionaires and billionaires who – more than anything – wanted to wield the power of the state, not abolish it. This was true in the mainframe days, when companies like IBM made billions on cushy defense contracts, and it's true today, when the self-described "Technoking" of Tesla has inserted himself into government in order to steer tens of billions' worth of no-bid contracts to his Beltway Bandit companies:

https://www.reuters.com/world/us/lawmakers-question-musk-influence-over-verizon-faa-contract-2025-02-28/

The American state has always had a cozy relationship with its tech sector, seeing it as a way to project American soft power into every corner of the globe. But Big Tech isn't the only – or the most important – US tech export. Far more important is the invisible web of IP laws that ban reverse-engineering, modding, independent repair, and other activities that defend American tech exports from competitors in its trading partners.

Countries that trade with the US were arm-twisted into enacting laws like the DMCA as a condition of free trade with the USA. These laws were wildly unpopular, and had to be crammed through other countries' legislatures:

https://pluralistic.net/2024/11/15/radical-extremists/#sex-pest

That's why Europeans who are appalled by Musk's Nazi salute have to confine their protests to being loudly angry at him, selling off their Teslas, and shining lights on Tesla factories:

https://www.malaymail.com/news/money/2025/01/24/heil-tesla-activists-protest-with-light-projection-on-germany-plant-after-musks-nazi-salute-video/164398

Musk is so attention-hungry that all this is as apt to please him as anger him. You know what would really hurt Musk? Jailbreaking every Tesla in Europe so that all its subscription features – which represent the highest-margin line-item on Tesla's balance-sheet – could be unlocked by any local mechanic for €25. That would really kick Musk in the dongle.

The only problem is that in 2001, the US Trade Rep got the EU to pass the EU Copyright Directive, whose Article 6 bans that kind of reverse-engineering. The European Parliament passed that law because doing so guaranteed tariff-free access for EU goods exported to US markets.

Enter Trump, promising a 25% tariff on European exports.

The EU could retaliate here by imposing tit-for-tat tariffs on US exports to the EU, which would make everything Europeans buy from America 25% more expensive. This is a very weird way to punish the USA.

On the other hand, now that Trump has announced that the terms of US free trade deals are optional (for the US, at least), there's no reason not to delete Article 6 of the EUCD, and all the other laws that prevent European companies from jailbreaking iPhones and making their own App Stores (minus Apple's 30% commission), as well as ad-blockers for Facebook and Instagram's apps (which would zero out EU revenue for Meta), and, of course, jailbreaking tools for Xboxes, Teslas, and every make and model of every American car, so European companies could offer service, parts, apps, and add-ons for them.

When Jeff Bezos launched Amazon, his war-cry was "your margin is my opportunity." US tech companies have built up insane margins based on the IP provisions required in the free trade treaties the US signed with the rest of the world.

It's time to delete those IP provisions and throw open domestic competition that attacks the margins that created the fortunes of oligarchs who sat behind Trump on the inauguration dais. It's time to bring back the indomitable hacker spirit that the Bill Gateses of the world have been trying to extinguish since the days of the "open letter to hobbyists." The tech sector built a 10-foot-high wall around its business, then the US government convinced the rest of the world to ban four-metre ladders. Lift the ban, unleash the ladders, free the world!

In the same way that futuristic sf is really about the present, Picks and Shovels, an sf novel set in the 1980s, is really about this moment.

I'm on tour with the book now – if you're reading this today (Mar 4) and you're in DC, come see me tonight with Matt Stoller at 6:30PM at the Cleveland Park Library:

https://www.loyaltybookstores.com/picksnshovels

And if you're in Richmond, VA, come down to Fountain Bookshop and catch me with Lee Vinsel tomorrow (Mar 5) at 7:30PM:

https://fountainbookstore.com/events/1795820250305

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2025/03/04/object-permanence/#picks-and-shovels

#pluralistic#picks and shovels#history#web theory#marty hench#martin hench#red team blues#locus magazine#drm#letter to computer hobbyists#bill gates#computer lib#science fiction#crime fiction#detective fiction

I wrote ~4.5k words about how LLMs operate, as the theory preface to a new programming series. Here's a little preview of the contents:

As with many posts, it's written for someone like 'me a few months ago': curious about the field but not yet up to speed on the whole bag of tricks. Here's what I needed to find out!

But the real meat of the series will be about getting hands dirty with writing code to interact with LLM output, finding out which of these techniques actually work and what it takes to make them work, that kind of thing. Similar to previous projects with writing a rasteriser/raytracer/etc.

It would be very interesting to hear how accessible that is to someone who hasn't been mainlining ML theory for the past few months, and whether it can serve its purpose as a bridge into the more technical side of things! But I hope there's at least a little new here and there even if you're already an old hand.

There's a real unearned confidence to the way that Social Democrats talk about their ideology, like they've cracked the code and found the perfect way forward and the only reason people disagree is because they're misguided or evil. Like they'll correctly point out problems within Neoliberal Capitalism before spouting some absolute nonsense about how uniquely evil and dysfunctional Communism was (nearly always in the past tense too; they take it for granted that the end of the USSR was the end of all Communism) and then going "Don't worry though, there's a third way; a mixed regulated economy. We can have a free market in consumer goods while making sure that corporations pay their fair share in wages and taxes that can fund the welfare that looks after everyone". And like putting aside the fact that such a model relies on the super-profits of imperialist exploitation to actually function, and the inherent instability of an arrangement where the Bourgeoisie make concessions even while maintaining ultimate control of the economy, there's the simple fact that much of the Imperial Core did indeed have Social Democracy but does not anymore.

Like these Social Democrats never think about why that might be, why their ideology failed and what they can learn from it going forward. They just act as though some dumb individuals (i.e. Ronald Reagan, Milton Friedman etc.) managed to slip into power and make bad decisions and like the best way to fix this is to vote good people in who'll change it back. Like hell a lot of these people take the previous existence of these policies as like a good point, the whole "We had them before so we aren't being radical by wanting them back. We don't want anything crazy we just wanna bring back The New Deal or Keynesian Economic policy or whatever". There's never any thought about why those policies failed (how often do you hear these people even talk about "stagflation" or "the oil crisis" let alone the impact of the fall of the soviet union) and what implications this might have on the viability of bringing it back. They also love talking about how Social Democratic institutions are still largely intact in the Scandinavian countries, but rather than even consider what specific factors in their political-economic situation led to this these people just go "Damn isn't Sweden great. Why aren't we doing exactly what they do?"

And sure, some people might compare this to Marxism-Leninism, the whole "trying to bring back a defeated ideology", but for one it's stupid to treat the dissolution of the USSR as the end of Communism as a global political force. It may have been a major blow, but even if you write off like Cuba and Vietnam as too small and insignificant to matter, you can't just fucking ignore that over 1/6 of the world's population continues to live under a Marxist-Leninist party. Whatever concessions these countries may have made to global Capitalism, it's just plain ignorant to act as though Communism suffered anywhere near the humiliating loss of global power and credibility that Social Democracy has. Sure, the latter may be more politically acceptable to toy with in "The West", but "The Western World" ≠ The Entire World. Also, nearly every ML on the planet is painfully aware that Soviet Communism collapsed and that it collapsed for a reason. There might be plenty of contention about why exactly it died and what exactly we can learn from this, but nearly everyone agrees that we need to learn and ideologically grow. No serious Communist wants to "bring back the USSR" in the same way that many Social Democrats want to "bring back The Welfare State". Far from being a form of "best of both worlds" mixed economy, Social Democracy is nothing more than a flimsy tool to stabilise Imperialist Capitalism at its moments of greatest strain. And if people are still gonna promote it wholeheartedly as the best possible solution, I wish they'd be a little less arrogant about it. It's not as though they have history on their side.

337 notes


Text

On writing a complex chapter: WIP/Author Notes for Through the Shadows of Shame (TSS), an AUP Fanfic

Just wanted to share another behind the scenes author's note for Through the Shadows of Shame, an Academy's Undercover Professor Fanfic.

Thumbnail is tiny so no spoilers ;)

This is for an upcoming chapter and it's very loaded with a lot of things. To be honest, I had to think of this chapter for almost a week, writing new outlines, drafts, ideas, assessing where I should put the chapter, and all of that.

It was a very difficult chapter because of all the emotions and body language, as well as the environment, which is more dynamic in this chapter than in the previous chapters I've written.

So I figured I'd try mapping it out! I think writers really do have a tool like this? But I didn't reference any of those much. I'm used to doing flowcharts to make sense of my brain, so I just did what was natural.

This was so helpful for me. When I tried to input all of the needed elements at once as I was writing it, it just didn't feel right and I got stuck thinking of what was wrong with it, not to mention how overwhelming it felt.

I like to really get in my feelings when writing the scenes, trying to feel and imagine what the characters would be feeling.

And there were a lot of feelings in this chapter.

So what worked?

Doing it in layers was by far a big brain moment for me. Instead of doing the entire scene in one draft, I did it in 3-4 parts:

First, I would draft the entire narrative focusing on the movement and dynamics of the changing environment and other external (non ML/FL) objects/characters. This establishes a sort of skeleton or structure for the entire thing, because it gives us the timelines for when certain things need to happen and whether it made sense for them to happen at that point (e.g. in my first draft it was only supposed to be 4pm, and then in the next paragraph the sun was already setting -- it just didn't make sense).

Second, after the skeleton is done and the scene is basically painted in with words, I add in the POV of the characters, 1 character at a time. The scene is difficult because they're both just walking in silence, not talking to each other, but they're both lost in thought, so I needed to convey not only an inner monologue but also body language, all juxtaposed into the setting in #1.

Third, I look at the side-by-side character thoughts/actions as the scene is unfolding (if you can kinda see it, there's a timeline of major events in the scene, and I've color-coded the thoughts of Ludger and Erina (my OC) and what change is happening at that point in time). This is also for continuity, logic, and parallelism (e.g. what are they thinking of at the same time? That would affect their body language and therefore change the perception or assumption the other person has of their current state of mind).

Fourth is copy editing. Mostly for rewriting the flow, making sure it makes sense, making sure the ideas are good.

Fifth is line editing. Tightening up the dialogue, cutting unnecessary lines, paragraphs, writing additional text for smoother transitions, wittier banter, etc.

Sixth is re-reading! And not just once. I re-read it a few times after editing, then leave it alone while I work on the next chapter. Then I read it again at least once before I publish it. Because of this, when I'm writing something I'm usually (ideally) 2-3 chapters ahead of my published chapters.

Phew, that was a hefty chapter. Anyway, I just wanted to share my process. Since I write the whole day and I'm alone most of the time, I don't really have people to talk to about this so I thought I'd talk to the internet void.

If you're curious about my story it's this one, on AO3: Through the Shadows of Shame - an AUP Fanfic

Tags: Romance, Fantasy, Comedy, Slow-burn

Pairing: Ludger Cherish x Female OC (Original Character)

Female OC is a fellow professor and she's a researcher who specializes in plants. And also she banters with him a lot. I think their interactions are cute.

#aup#ludger cherish#the academy's undercover professor#academy's undercover professor#i got a fake job at the academy#rudiger chelsea#academy undercover professor#taup#fic-tss#throughtheshadowsofshame

11 notes


Text

I've been experimenting with "identifying as stupid and lazy" and it's going pretty well. This month I went to a Javascript meetup with the explicit goal of being slightly stupid there, got into an AI conversation, said a few coherent things, and then mentioned I just didn't want to put in the work to understand e.g. transformers. Also I said as a simplification that I'd flunked out of linear algebra in college, which isn't true (I got an A in linalg but flunked out of the ML course where linalg was heavily in use), but it felt. WEIRDLY. pleasurable to say.

When I talked about this on Discord, one of them brought up Stupidism, which is from a good post @mark-gently made. But there's something about my wanton dignity-discarding that goes several steps further than Stupidism and feels very liberating.

Last year I read a weird... pagan?... book, Existential Kink, that invites you to notice how much of your life is shaped to bring about outcomes you supposedly hate, and how you secretly take joy in those outcomes. This seems false for the majority of things one tries to avoid, but leaning into it sure is interesting to try out! And I'm finding it is surprisingly true for "coming off as stupid".

There's something absurdly joyful/thrilling about deciding to go to a meetup and presenting as a moron. Some years ago I would have gone NOOO at the thought, and now I feel like an adrenaline junkie being invited to a new type of gambling event or weird sex thing.

I fully expect to tire of "identifying/presenting as stupid and lazy", but when I move on from it I expect to be more integrated or whatever. Less afraid of being stupid and lazy because I've just gone and done it openly.

One of the stupid things I said at the Javascript meetup was that I hate using libraries in almost full generality. I'm too lazy to read docs or troubleshoot my calls to other people's code. Someone recced me a different meetup for people who roll their own tooling, but warned me it was all male, because he knew I'd found all-male programming contexts stressful in the past.

In college I tended to not even really notice if a lab or a team was all male, because I was a top-half student and just felt totally secure about being in class. But I became phobic of it in jobs because I'm usually the worst dev in any remotely selective workplace, and being the worst dev AND the only woman sucks. I was ashamed of being bad at my job, obviously, but I was mortified at being the entity that diversity posters and mandatory trainings point at to say "if you think women are like that you are a terrible person and causing problems in society". But... I am like that. I guess for society's good I need to hide this as hard as possible?

(I solved this by going to a much less selective workplace and almost explicitly saying "I will be kind of a bad programmer, but I come cheap". I am pretty happy now.)

So, given that I got twisted up by that employment record, current me is delighted at the thought of being openly dumb at an all-male CS meetup. This wouldn't be good for the men (some of whom Want To Unlearn Sexism, etc) nor for Women In Tech, but it would be good for ME. Time to abandon class consciousness and defect on women for my own gain.

It is, well, yeah, existentially kinky to imagine going to this meetup and cheerfully asking dumb questions & occasionally responding with "I don't think I'm ever going to understand that, sorry, you should stop explaining that because I don't want to waste your time".

86 notes


Text

How to Become a Data Scientist in 2025 (Roadmap for Absolute Beginners)

Want to become a data scientist in 2025 but don’t know where to start? You’re not alone. With job roles, tech stacks, and buzzwords changing rapidly, it’s easy to feel lost.

But here’s the good news: you don’t need a PhD or years of coding experience to get started. You just need the right roadmap.

Let’s break down the beginner-friendly path to becoming a data scientist in 2025.

✈️ Step 1: Get Comfortable with Python

Python is the most beginner-friendly programming language in data science.

What to learn:

Variables, loops, functions

Libraries like NumPy, Pandas, and Matplotlib

Why: It’s the backbone of everything you’ll do in data analysis and machine learning.
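To make "variables, loops, functions" concrete, here is a minimal plain-Python sketch. The prices and the 25% tax rate are made-up values chosen only for illustration. With NumPy, the loop would typically collapse into one vectorised expression such as `np.array(prices) * (1 + tax_rate)`.

```python
# Variables: a list of hypothetical prices and a hypothetical tax rate
prices = [10.0, 4.0, 2.0]
tax_rate = 0.25

# A function: compute one price including tax, rounded to cents
def with_tax(price, rate):
    """Return the price including tax, rounded to two decimals."""
    return round(price * (1 + rate), 2)

# A loop: accumulate the taxed total across all prices
total = 0.0
for p in prices:
    total += with_tax(p, tax_rate)

print(total)
```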

🔢 Step 2: Learn Basic Math & Stats

You don’t need to be a math genius. But you do need to understand:

Descriptive statistics

Probability

Linear algebra basics

Hypothesis testing

These concepts help you interpret data and build reliable models.
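Here is a minimal sketch of descriptive statistics and a simple hypothesis test, using only Python's built-in `statistics` module. The sales figures and the null-hypothesis mean of 12 are invented for illustration, and the one-sample t statistic is computed by hand. In practice, `scipy.stats.ttest_1samp` also returns the p-value you would compare against your chosen significance level.

```python
import math
import statistics

# Hypothetical sample: daily sales figures (made-up numbers)
sales = [12, 15, 11, 14, 18, 13, 16]

# Descriptive statistics
mean = statistics.mean(sales)
median = statistics.median(sales)
stdev = statistics.stdev(sales)  # sample standard deviation (n - 1 denominator)

def one_sample_t(sample, mu0):
    """t statistic for H0: the population mean equals mu0."""
    n = len(sample)
    standard_error = statistics.stdev(sample) / math.sqrt(n)
    return (statistics.mean(sample) - mu0) / standard_error

# Is average daily sales plausibly still 12? A large |t| is evidence against H0.
t = one_sample_t(sales, mu0=12)
print(f"mean={mean:.2f} median={median} stdev={stdev:.2f} t={t:.3f}")
```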

📊 Step 3: Master Data Handling

Expect to spend most of your time (70% is a commonly quoted figure) cleaning and preparing data.

Skills to focus on:

Working with CSV/Excel files

Cleaning missing data

Data transformation with Pandas

Visualizing data with Seaborn/Matplotlib

This is the “real work” most data scientists do daily.
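Here is a small, standard-library-only sketch of the loading, cleaning and transforming steps above. The CSV content is hypothetical, and filling a missing value with the column mean is just one common imputation choice, not the only correct one. In Pandas the same steps would roughly be `pd.read_csv`, `DataFrame.fillna` and `value_counts`.

```python
import csv
import io
import statistics

# Hypothetical CSV with a missing "age" value in the second row
raw = """name,age,city
Ana,34,Lisbon
Ben,,Porto
Caro,29,Lisbon
"""

rows = list(csv.DictReader(io.StringIO(raw)))

# Cleaning: impute missing ages with the mean of the ages that are present
known_ages = [float(r["age"]) for r in rows if r["age"].strip()]
fill_value = statistics.mean(known_ages)
for r in rows:
    r["age"] = float(r["age"]) if r["age"].strip() else fill_value

# Transformation: count rows per city
counts = {}
for r in rows:
    counts[r["city"]] = counts.get(r["city"], 0) + 1

print(rows)
print(counts)
```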

🧬 Step 4: Learn Machine Learning (ML)

Once you’re solid with data handling, dive into ML.

Start with:

Supervised learning (Linear Regression, Decision Trees, KNN)

Unsupervised learning (Clustering)

Model evaluation metrics (accuracy, recall, precision)

Toolkits: Scikit-learn, XGBoost
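Before reaching for Scikit-learn, it can help to see one supervised model and the evaluation metrics written out by hand. The sketch below implements k-nearest neighbours with Euclidean distance and computes precision, recall and accuracy on a tiny made-up dataset. Scikit-learn's `KNeighborsClassifier` and `sklearn.metrics` (`precision_score`, `recall_score`, `accuracy_score`) are the production equivalents.

```python
import math
from collections import Counter

def knn_predict(train_X, train_y, x, k=3):
    """Classify x by majority vote among its k nearest training points."""
    dists = sorted((math.dist(p, x), label) for p, label in zip(train_X, train_y))
    votes = Counter(label for _, label in dists[:k])
    return votes.most_common(1)[0][0]

def precision_recall_accuracy(y_true, y_pred, positive=1):
    """Return (precision, recall, accuracy) for a binary classification."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    correct = sum(1 for t, p in pairs if t == p)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, correct / len(pairs)

# Hypothetical toy data: two clusters labelled 0 and 1
train_X = [(1, 1), (1, 2), (2, 1), (6, 6), (7, 6), (6, 7)]
train_y = [0, 0, 0, 1, 1, 1]
test_X = [(1.5, 1.5), (6.5, 6.5), (5, 5), (3, 3)]
y_true = [0, 1, 1, 1]  # (3, 3) is deliberately a hard case

y_pred = [knn_predict(train_X, train_y, x) for x in test_X]
precision, recall, accuracy = precision_recall_accuracy(y_true, y_pred)
print(y_pred, precision, recall, accuracy)
```

The one missed positive lowers recall but not precision. This is why accuracy alone can hide the errors that matter in problems like fraud or churn.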

🚀 Step 5: Work on Real Projects

Projects are what make your resume pop.

Try solving:

Customer churn

Sales forecasting

Sentiment analysis

Fraud detection

Pro tip: Document everything on GitHub and write blogs about your process.

✏️ Step 6: Learn SQL and Databases

Data lives in databases. Knowing how to query it with SQL is a must-have skill.

Focus on:

SELECT, JOIN, GROUP BY

Creating and updating tables

Writing nested queries
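To practise the SQL skills above without installing a database server, Python's built-in `sqlite3` module with an in-memory database is enough. The `customers`/`orders` schema and rows below are hypothetical. The sketch covers creating and updating tables, a `SELECT` with `JOIN` and `GROUP BY`, and a nested query.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
cur = conn.cursor()

# Creating tables (hypothetical schema)
cur.executescript("""
CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT, region TEXT);
CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL);
""")
cur.executemany("INSERT INTO customers VALUES (?, ?, ?)",
                [(1, "Ana", "North"), (2, "Ben", "South"), (3, "Caro", "North")])
cur.executemany("INSERT INTO orders VALUES (?, ?, ?)",
                [(1, 1, 120.0), (2, 1, 80.0), (3, 2, 50.0), (4, 3, 200.0)])

# Updating a row
cur.execute("UPDATE orders SET amount = 60.0 WHERE id = 3")

# SELECT + JOIN + GROUP BY: total spend per region
region_totals = cur.execute("""
    SELECT c.region, SUM(o.amount) AS total
    FROM orders AS o
    JOIN customers AS c ON c.id = o.customer_id
    GROUP BY c.region
    ORDER BY c.region
""").fetchall()

# Nested query: customers whose total spend exceeds the average order amount
big_spenders = cur.execute("""
    SELECT name FROM customers
    WHERE id IN (
        SELECT customer_id FROM orders
        GROUP BY customer_id
        HAVING SUM(amount) > (SELECT AVG(amount) FROM orders)
    )
    ORDER BY name
""").fetchall()

conn.close()
print(region_totals, big_spenders)
```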

🌍 Step 7: Understand the Business Side

Data science isn’t just tech. You need to translate insights into decisions.

Learn to:

Tell stories with data (data storytelling)

Build dashboards with tools like Power BI or Tableau

Align your analysis with business goals

🎥 Want a Structured Way to Learn All This?

Instead of guessing what to learn next, check out Intellipaat’s full Data Science course on YouTube. It covers Python, ML, real projects, and everything you need to build job-ready skills.

https://www.youtube.com/watch?v=rxNDw68XcE4

🔄 Final Thoughts

Becoming a data scientist in 2025 is 100% possible — even for beginners. All you need is consistency, a good learning path, and a little curiosity.

Start simple. Build as you go. And let your projects speak louder than your resume.

Drop a comment if you’re starting your journey. And don’t forget to check out the free Intellipaat course to speed up your progress!

2 notes


Text

How Python Powers Scalable and Cost-Effective Cloud Solutions

Explore the role of Python in developing scalable and cost-effective cloud solutions. This guide covers Python's advantages in cloud computing, addresses potential challenges, and highlights real-world applications, providing insights into leveraging Python for efficient cloud development.

Introduction

In today's rapidly evolving digital landscape, businesses are increasingly leveraging cloud computing to enhance scalability, optimize costs, and drive innovation. Among the myriad of programming languages available, Python has emerged as a preferred choice for developing robust cloud solutions. Its simplicity, versatility, and extensive library support make it an ideal candidate for cloud-based applications.

In this comprehensive guide, we will delve into how Python empowers scalable and cost-effective cloud solutions, explore its advantages, address potential challenges, and highlight real-world applications.

Why Is Python the Preferred Choice for Cloud Computing?

Python's popularity in cloud computing is driven by several factors, making it the preferred language for developing and managing cloud solutions. Here are some key reasons why Python stands out:

Simplicity and Readability: Python's clean and straightforward syntax allows developers to write and maintain code efficiently, reducing development time and costs.

Extensive Library Support: Python offers a rich set of libraries and frameworks like Django, Flask, and FastAPI for building cloud applications.

Seamless Integration with Cloud Services: Python is well-supported across major cloud platforms like AWS, Azure, and Google Cloud.

Automation and DevOps Friendly: Python supports infrastructure automation with tools like Ansible (itself written in Python) and Boto3, and Python scripts can also drive other tools such as Terraform.

Strong Community and Enterprise Adoption: Python has a massive global community that continuously improves and innovates cloud-related solutions.

How Does Python Enable Scalable Cloud Solutions?

Scalability is a critical factor in cloud computing, and Python provides multiple ways to achieve it:

1. Automation of Cloud Infrastructure

Python's compatibility with cloud service provider SDKs, such as AWS Boto3, Azure SDK for Python, and Google Cloud Client Library, enables developers to automate the provisioning and management of cloud resources efficiently.
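Here is a minimal sketch of what "automating provisioning" can look like. An idempotent helper creates only the S3 buckets that don't exist yet. It is written against the Boto3 S3 client methods `list_buckets` and `create_bucket`. In real use you would pass `boto3.client("s3", region_name=...)`. Here a hypothetical `FakeS3` stand-in lets the example run without AWS credentials, and the bucket names are placeholders. For `us-east-1`, the default region, the `CreateBucketConfiguration` argument is omitted.

```python
def ensure_buckets(s3_client, names, region):
    """Create each bucket in `names` that does not already exist; return the created names."""
    existing = {b["Name"] for b in s3_client.list_buckets().get("Buckets", [])}
    created = []
    for name in names:
        if name in existing:
            continue  # idempotent: re-running the script changes nothing
        kwargs = {"Bucket": name}
        if region != "us-east-1":
            kwargs["CreateBucketConfiguration"] = {"LocationConstraint": region}
        s3_client.create_bucket(**kwargs)
        created.append(name)
    return created

class FakeS3:
    """Hypothetical in-memory stand-in for a Boto3 S3 client, for local testing only."""

    def __init__(self, existing):
        self.buckets = list(existing)
        self.calls = []

    def list_buckets(self):
        return {"Buckets": [{"Name": n} for n in self.buckets]}

    def create_bucket(self, **kwargs):
        self.calls.append(kwargs)
        self.buckets.append(kwargs["Bucket"])

fake = FakeS3(existing=["demo-logs"])
created = ensure_buckets(fake, ["demo-logs", "demo-reports"], region="eu-west-1")
print(created, fake.calls)
```

Passing the client in as a parameter, rather than creating it inside the function, is a design choice that keeps the automation logic testable.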

2. Containerization and Orchestration

Python applications package cleanly into Docker containers, and official Python client libraries exist for both Docker and Kubernetes, enabling businesses to build, deploy and manage scalable containerized applications.

3. Cloud-Native Development

Frameworks like Flask, Django, and FastAPI support microservices architecture, allowing businesses to develop lightweight, scalable cloud applications.

4. Serverless Computing

Python's support for serverless platforms, including AWS Lambda, Azure Functions, and Google Cloud Functions, allows developers to build applications that automatically scale in response to demand, optimizing resource utilization and cost.
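Here is a minimal sketch of a Python function for AWS Lambda. Lambda calls a handler with the signature `handler(event, context)`. The example assumes an API Gateway proxy-style event, where `queryStringParameters` may be missing or `None`, and returns the `statusCode`/`headers`/`body` shape that integration expects. Because the handler is a plain function, it can be exercised locally before deployment.

```python
import json

def lambda_handler(event, context):
    """Return a JSON greeting; assumes an API Gateway proxy-style event."""
    params = event.get("queryStringParameters") or {}  # may be None when no query string
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }

# Local invocation with a hand-built event; Lambda supplies a real context object
response = lambda_handler({"queryStringParameters": {"name": "cloud"}}, None)
print(response)
```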

5. AI and Big Data Scalability

Python’s dominance in AI and data science makes it an ideal choice for cloud-based AI/ML services like Amazon SageMaker, Google Cloud Vertex AI, and Azure Machine Learning.

Looking for expert Python developers to build scalable cloud solutions? Hire Python Developers now!

Advantages of Using Python for Cloud Computing

Cost Efficiency: Python’s compatibility with serverless computing and auto-scaling strategies minimizes cloud costs.

Faster Development: Python’s simplicity accelerates cloud application development, reducing time-to-market.

Cross-Platform Compatibility: Python runs seamlessly across different cloud platforms.

Security and Reliability: Python-based security tools help in encryption, authentication, and cloud monitoring.

Strong Community Support: Python developers worldwide contribute to continuous improvements, making it future-proof.

Challenges and Considerations

While Python offers many benefits, there are some challenges to consider:

Performance Limitations: The standard CPython interpreter runs CPU-bound code more slowly than compiled languages like C++ or JIT-compiled ones like Java.

Memory Consumption: Python applications might require optimization to handle large-scale cloud workloads efficiently.

Learning Curve for Beginners: Though Python is simple, mastering cloud-specific frameworks requires time and expertise.
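One common optimization for the memory concern above is to stream records with generators instead of building full lists. The sketch uses a hypothetical `read_records` source. It compares the size of a fully built list with a lazy generator expression over the same data. Note that `sys.getsizeof` measures only the container object itself, not the integers it references.

```python
import sys

def read_records(n):
    """Hypothetical record source that yields one record at a time."""
    for i in range(n):
        yield {"id": i, "value": i * 2}

# Eager: materialises every value in memory at once
eager = [r["value"] for r in read_records(100_000)]

# Lazy: the generator keeps only its current state until consumed
lazy = (r["value"] for r in read_records(100_000))

eager_bytes = sys.getsizeof(eager)
lazy_bytes = sys.getsizeof(lazy)
total = sum(lazy)  # consumes the generator one value at a time

print(eager_bytes, lazy_bytes, total)
```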

Python Libraries and Tools for Cloud Computing

Python’s ecosystem includes powerful libraries and tools tailored for cloud computing, such as:

Boto3: AWS SDK for Python, used for cloud automation.

Google Cloud Client Library: Helps interact with Google Cloud services.

Azure SDK for Python: Enables seamless integration with Microsoft Azure.

Apache Libcloud: Provides a unified interface for multiple cloud providers.

PyCaret: Simplifies machine learning deployment in cloud environments.

Real-World Applications of Python in Cloud Computing

1. Netflix - Scalable Streaming with Python

Netflix extensively uses Python for automation, data analysis, and managing cloud infrastructure, enabling seamless content delivery to millions of users.

2. Spotify - Cloud-Based Music Streaming

Spotify leverages Python for big data processing, recommendation algorithms, and cloud automation, ensuring high availability and scalability.

3. Reddit - Handling Massive Traffic

Reddit uses Python and AWS cloud solutions to manage heavy traffic while optimizing server costs efficiently.

Future of Python in Cloud Computing

The future of Python in cloud computing looks promising with emerging trends such as:

AI-Driven Cloud Automation: Python-powered AI and machine learning will drive intelligent cloud automation.

Edge Computing: Python will play a crucial role in processing data at the edge for IoT and real-time applications.

Hybrid and Multi-Cloud Strategies: Python’s flexibility will enable seamless integration across multiple cloud platforms.

Increased Adoption of Serverless Computing: More enterprises will adopt Python for cost-effective serverless applications.

Conclusion

Python's simplicity, versatility, and robust ecosystem make it a powerful tool for developing scalable and cost-effective cloud solutions. By leveraging Python's capabilities, businesses can enhance their cloud applications' performance, flexibility, and efficiency.

Ready to harness the power of Python for your cloud solutions? Explore our Python Development Services to discover how we can assist you in building scalable and efficient cloud applications.

FAQs

1. Why is Python used in cloud computing?

Python is widely used in cloud computing due to its simplicity, extensive libraries, and seamless integration with cloud platforms like AWS, Google Cloud, and Azure.

2. Is Python good for serverless computing?

Yes! Python works efficiently in serverless environments like AWS Lambda, Azure Functions, and Google Cloud Functions, making it an ideal choice for cost-effective, auto-scaling applications.

3. Which companies use Python for cloud solutions?

Major companies like Netflix, Spotify, Dropbox, and Reddit use Python for cloud automation, AI, and scalable infrastructure management.

4. How does Python help with cloud security?

Python offers robust security libraries like PyCryptodome and pyOpenSSL, enabling encryption, authentication, and cloud monitoring for secure cloud applications.
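As a small illustration of the authentication side, Python's standard library alone can sign and verify messages with HMAC-SHA256, for example to check that a webhook or API request was not tampered with. The hard-coded key and payload are hypothetical placeholders. A real deployment would load the key from a secrets store rather than source code.

```python
import hashlib
import hmac

SECRET_KEY = b"demo-secret-key"  # hypothetical placeholder; never hard-code real keys

def sign(message: bytes, key: bytes = SECRET_KEY) -> str:
    """Return the hex HMAC-SHA256 signature of message."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()

def verify(message: bytes, signature: str, key: bytes = SECRET_KEY) -> bool:
    """Check a signature; compare_digest avoids leaking timing information."""
    return hmac.compare_digest(sign(message, key), signature)

payload = b'{"user": "ana", "action": "download"}'
sig = sign(payload)
print(sig, verify(payload, sig))
```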

5. Can Python handle big data in the cloud?

Yes! Python supports big data processing with tools like Apache Spark (via PySpark), Pandas, and NumPy, making it suitable for data-driven cloud applications.

#Python development company#Python in Cloud Computing#Hire Python Developers#Python for Multi-Cloud Environments

2 notes


Text

Free AI Tools

Artificial Intelligence (AI) has revolutionized the way we work, learn, and create. With an ever-growing number of tools, it’s now easier than ever to integrate AI into your personal and professional life without spending a dime. Below, we’ll explore some of the best free AI tools across various categories, helping you boost productivity, enhance creativity, and automate mundane tasks.


1. Content Creation Tools

ChatGPT (OpenAI)

One of the most popular AI chatbots, ChatGPT, offers a free plan that allows users to generate ideas, write content, answer questions, and more. Its user-friendly interface makes it accessible for beginners and professionals alike.

Best For:

Writing articles, emails, and brainstorming ideas.

Limitations:

Free tier usage is capped; may require upgrading for heavy use.

Copy.ai

Copy.ai focuses on helping users craft engaging marketing copy, blog posts, and social media captions.

2. Image Generation Tools

DALL·E

OpenAI’s DALL·E can generate stunning, AI-created artwork from text prompts. The free tier allows users to explore creative possibilities, from surreal art to photo-realistic images.

Craiyon (formerly DALL·E Mini)

This free AI image generator is great for creating quick, fun illustrations. It’s entirely free but may not match the quality of professional tools.

3. Video Editing and Creation

Runway ML

Runway ML offers free tools for video editing, including AI-based background removal, video enhancement, and even text-to-video capabilities.

Pictory.ai

Turn scripts or blog posts into short, engaging videos with this free AI-powered tool. Pictory automates video creation, saving time for marketers and educators.

4. Productivity Tools

Notion AI

Notion's AI integration enhances the already powerful productivity app. It can help generate meeting notes, summarize documents, or draft content directly within your workspace.

Otter.ai

Otter.ai is a fantastic tool for transcribing meetings, interviews, or lectures. It offers a free plan that covers up to 300 minutes of transcription monthly.

5. Coding and Data Analysis

GitHub Copilot (Free for Students)

GitHub Copilot, powered by OpenAI, assists developers by suggesting code and speeding up development workflows. It’s free for students with GitHub’s education pack.

Google Colab

Google’s free cloud-based platform for coding supports Python and is perfect for data science projects and machine learning experimentation.

6. Design and Presentation

Canva AI

Canva’s free tier includes AI-powered tools like Magic Resize and text-to-image generation, making it a top choice for creating professional presentations and graphics.

Beautiful.ai

This AI presentation tool helps users create visually appealing slides effortlessly, ideal for professionals preparing pitch decks or educational slides.

7. AI for Learning

Duolingo AI

Duolingo now integrates AI to provide personalized feedback and adaptive lessons for language learners.

Khanmigo (from Khan Academy)

This AI-powered tutor helps students with math problems and concepts in an interactive way. While still in limited rollout, it’s free for Khan Academy users.

Why Use Free AI Tools?

Free AI tools are perfect for testing the waters without financial commitments.

Conclusion

AI tools are democratizing access to technology, allowing anyone to leverage advanced capabilities at no cost. Whether you’re a writer, designer, developer, or educator, there’s a free AI tool out there for you. Start experimenting today and unlock new possibilities!


5 notes


Text

Top Trends in Software Development for 2025

The software development industry is evolving at an unprecedented pace, driven by advancements in technology and the increasing demands of businesses and consumers alike. As we step into 2025, staying ahead of the curve is essential for businesses aiming to remain competitive. Here, we explore the top trends shaping the software development landscape and how they impact businesses. For organizations seeking cutting-edge solutions, partnering with the Best Software Development Company in Vadodara, Gujarat, or India can make all the difference.

1. Artificial Intelligence and Machine Learning Integration:

Artificial Intelligence (AI) and Machine Learning (ML) are no longer optional but integral to modern software development. From predictive analytics to personalized user experiences, AI and ML are driving innovation across industries. In 2025, expect AI-powered tools to streamline development processes, improve testing, and enhance decision-making.

Businesses in Gujarat and beyond are leveraging AI to gain a competitive edge. Collaborating with the Best Software Development Company in Gujarat ensures access to AI-driven solutions tailored to specific industry needs.

2. Low-Code and No-Code Development Platforms:

The demand for faster development cycles has led to the rise of low-code and no-code platforms. These platforms empower non-technical users to create applications through intuitive drag-and-drop interfaces, significantly reducing development time and cost.

For startups and SMEs in Vadodara, partnering with the Best Software Development Company in Vadodara ensures access to these platforms, enabling rapid deployment of business applications without compromising quality.

3. Cloud-Native Development:

Cloud-native technologies, including Kubernetes and microservices, are becoming the backbone of modern applications. By 2025, cloud-native development will dominate, offering scalability, resilience, and faster time-to-market.

The Best Software Development Company in India can help businesses transition to cloud-native architectures, ensuring their applications are future-ready and capable of handling evolving market demands.

4. Edge Computing:

As IoT devices proliferate, edge computing is emerging as a critical trend. Processing data closer to its source reduces latency and enhances real-time decision-making. This trend is particularly significant for industries like healthcare, manufacturing, and retail.

Organizations seeking to leverage edge computing can benefit from the expertise of the Best Software Development Company in Gujarat, which specializes in creating applications optimized for edge environments.

5. Cybersecurity by Design:

With the increasing sophistication of cyber threats, integrating security into the development process has become non-negotiable. Cybersecurity by design ensures that applications are secure from the ground up, reducing vulnerabilities and protecting sensitive data.

The Best Software Development Company in Vadodara prioritizes cybersecurity, providing businesses with robust, secure software solutions that inspire trust among users.

6. Blockchain Beyond Cryptocurrencies:

Blockchain technology is expanding beyond cryptocurrencies into areas like supply chain management, identity verification, and smart contracts. In 2025, blockchain will play a pivotal role in creating transparent, tamper-proof systems.

Partnering with the Best Software Development Company in India enables businesses to harness blockchain technology for innovative applications that drive efficiency and trust.

7. Progressive Web Apps (PWAs):

Progressive Web Apps (PWAs) combine the best features of web and mobile applications, offering seamless experiences across devices. PWAs are cost-effective and provide offline capabilities, making them ideal for businesses targeting diverse audiences.

The Best Software Development Company in Gujarat can develop PWAs tailored to your business needs, ensuring enhanced user engagement and accessibility.

8. Internet of Things (IoT) Expansion:

IoT continues to transform industries by connecting devices and enabling smarter decision-making. From smart homes to industrial IoT, the possibilities are endless. In 2025, IoT solutions will become more sophisticated, integrating AI and edge computing for enhanced functionality.

For businesses in Vadodara and beyond, collaborating with the Best Software Development Company in Vadodara ensures access to innovative IoT solutions that drive growth and efficiency.

9. DevSecOps:

DevSecOps integrates security into the DevOps pipeline, ensuring that security is a shared responsibility throughout the development lifecycle. This approach reduces vulnerabilities and ensures compliance with industry standards.

The Best Software Development Company in India can help implement DevSecOps practices, ensuring that your applications are secure, scalable, and compliant.

10. Sustainability in Software Development:

Sustainability is becoming a priority in software development. Green coding practices, energy-efficient algorithms, and sustainable cloud solutions are gaining traction. By adopting these practices, businesses can reduce their carbon footprint and appeal to environmentally conscious consumers.

Working with the Best Software Development Company in Gujarat ensures access to sustainable software solutions that align with global trends.

11. 5G-Driven Applications:

The rollout of 5G networks is unlocking new possibilities for software development. Ultra-fast connectivity and low latency are enabling applications like augmented reality (AR), virtual reality (VR), and autonomous vehicles.

The Best Software Development Company in Vadodara is at the forefront of leveraging 5G technology to create innovative applications that redefine user experiences.

12. Hyperautomation:

Hyperautomation combines AI, ML, and robotic process automation (RPA) to automate complex business processes. By 2025, hyperautomation will become a key driver of efficiency and cost savings across industries.

Partnering with the Best Software Development Company in India ensures access to hyperautomation solutions that streamline operations and boost productivity.

13. Augmented Reality (AR) and Virtual Reality (VR):

AR and VR technologies are transforming industries like gaming, education, and healthcare. In 2025, these technologies will become more accessible, offering immersive experiences that enhance learning, entertainment, and training.

The Best Software Development Company in Gujarat can help businesses integrate AR and VR into their applications, creating unique and engaging user experiences.

Conclusion:

The software development industry is poised for significant transformation in 2025, driven by trends like AI, cloud-native development, edge computing, and hyperautomation. Staying ahead of these trends requires expertise, innovation, and a commitment to excellence.

For businesses in Vadodara, Gujarat, or anywhere in India, partnering with the Best Software Development Company in Vadodara, Gujarat, or India ensures access to cutting-edge solutions that drive growth and success. By embracing these trends, businesses can unlock new opportunities and remain competitive in an ever-evolving digital landscape.

#Best Software Development Company in Vadodara#Best Software Development Company in Gujarat#Best Software Development Company in India#nividasoftware

5 notes


Text

Discover the power of automation in machine learning with no-code AI. This article explains why businesses should take notice and embrace this game-changing technology.

#no code ml#no code ml tools#no code ml platform#no code machine learning#no code machine learning tools#no code ai

0 notes

Text

No Experience? Here’s How You Can Transform Into an Ethical Artificial Intelligence Developer

New Post has been published on https://thedigitalinsider.com/no-experience-heres-how-you-can-transform-into-an-ethical-artificial-intelligence-developer/

Artificial intelligence (AI) and machine learning (ML) are reshaping industries and unlocking new opportunities at an incredible pace. There are countless routes to becoming an AI expert, and each person’s journey will be shaped by unique experiences, setbacks, and growth. For those with no prior experience who are eager to dive into this compelling technology, it’s important to know that success is possible with the right mindset and approach.

In the journey to AI proficiency, it’s crucial to develop and utilize AI ethically to ensure the technology benefits organizations and society while minimizing harm. Ethical AI prioritizes fairness, transparency, and accountability, which builds trust among users and stakeholders. By following ethical guidelines, learners and developers alike can prevent the misuse of AI, reduce potential risks, and align technological advancements with societal values.

Despite the importance of using AI ethically, our research found that among tens of thousands of people learning how to use AI, fewer than 2% actively searched for how to adopt it responsibly. The divide between those learning how to implement AI and those interested in developing it ethically is colossal. Beyond our research, Pluralsight has seen similar trends in our public-facing educational materials: training materials on AI adoption attract overwhelming interest, while comparable resources on ethical and responsible AI go largely untouched.

How to Begin Your Journey as a Responsible AI Practitioner

There are three main components that responsible AI practitioners should focus on — bias, ethics, and legal factors. The legal considerations of AI are a given. Using AI to launch a cyberattack, commit a crime, or otherwise behave illegally is against the law and would only be pursued by malicious actors.

In terms of bias, an individual or team should determine whether the model or solution they are developing is as free of bias as possible. Every human is biased in one form or another, and because AI solutions are created by humans, those human biases will inevitably be reflected in AI. AI developers should focus on consciously minimizing them.
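One common starting point for checking bias is to compare how often a model makes a positive prediction for different groups. This check is known as demographic parity. The sketch below is a hedged illustration only. It assumes binary predictions (1 = positive outcome) and a hypothetical group label for each record. It shows just one of many fairness metrics, and a small gap does not by itself prove that a model is unbiased.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Share of positive (1) predictions within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups (0.0 means parity)."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())

# Hypothetical model outputs (1 = approved) and the group each applicant belongs to.
preds = [1, 0, 1, 1, 0, 1, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

print(selection_rates(preds, groups))         # {'A': 0.75, 'B': 0.25}
print(demographic_parity_gap(preds, groups))  # 0.5
```

A large gap like this one does not prove discrimination. It is a signal to look more closely at the training data, the features, and the decision threshold.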

Addressing ethical considerations can be more complex than addressing bias, as ethics are often closely tied to opinions, which are personal beliefs shaped by individual experiences and values. Ethics are moral principles intended to guide behavior in the quest to define what is right or wrong. Real-world ethical questions include whether it is appropriate for a companion robot to care for the elderly, for a website bot to give relationship advice, or for automated machines to eliminate jobs performed by humans.

Getting Technical

With ethics and responsible development in mind, aspiring AI developers are ready to get technical. It’s common to initially think that learning to develop AI technologies requires an advanced degree or a background working in a research lab. However, drive, curiosity, and the willingness to take on a challenge are all that’s required to start. The first lesson many AI practitioners learn is that ML is more accessible than one might think. With the right resources and a desire to learn, individuals from various backgrounds can grasp and apply even complex AI concepts.

Aspiring AI experts may find that learning by doing is the most effective approach. It’s helpful to start by choosing a project that is both interesting and manageable within the scope of ML. For example, one might build a model to predict the likelihood of a future event. Such a project would introduce concepts that include data analysis, feature engineering, and model evaluation while also providing a deep understanding of the ML lifecycle—a key framework for systematically solving problems.
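To illustrate the kind of starter project described above, here is a minimal sketch that predicts the likelihood of a future event (a customer cancelling) from a single feature. The dataset, the feature, and the function names are all hypothetical. Logistic regression trained with plain gradient descent is just one reasonable choice of model. For brevity, the sketch evaluates on its own training data, whereas a real project would score a held-out set.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic_regression(xs, ys, lr=0.1, epochs=5000):
    """Fit P(y = 1 | x) = sigmoid(w * x + b) with batch gradient descent on log-loss."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        grad_w = grad_b = 0.0
        for x, y in zip(xs, ys):
            error = sigmoid(w * x + b) - y  # derivative of log-loss w.r.t. the logit
            grad_w += error * x
            grad_b += error
        w -= lr * grad_w / n
        b -= lr * grad_b / n
    return w, b

def log_loss(xs, ys, w, b):
    """Average cross-entropy: a simple evaluation metric for predicted probabilities."""
    eps = 1e-12
    total = 0.0
    for x, y in zip(xs, ys):
        p = min(max(sigmoid(w * x + b), eps), 1 - eps)
        total -= y * math.log(p) + (1 - y) * math.log(1 - p)
    return total / len(xs)

# Hypothetical data: support tickets filed last month -> did the customer cancel (1) or stay (0)?
tickets = [0, 1, 1, 2, 3, 3, 4, 5, 6]
cancelled = [0, 0, 1, 0, 0, 1, 1, 1, 1]

w, b = train_logistic_regression(tickets, cancelled)
print(f"P(cancel | 0 tickets) = {sigmoid(b):.2f}")
print(f"P(cancel | 5 tickets) = {sigmoid(5 * w + b):.2f}")
print(f"training log-loss = {log_loss(tickets, cancelled, w, b):.3f}")
```

Feature engineering in this toy case is simply the choice of "tickets filed" as the input. A fuller project would try several candidate features and compare their evaluation scores.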

As an individual delves into AI, experimenting with different tools and technologies is essential to tackling the learning curve. While no-code and low-code platforms, such as those from cloud providers like AWS, can simplify model-building for people with less technical expertise, individuals with a programming background may prefer to get more hands-on. In such cases, learning Python basics and utilizing tools like Jupyter Notebooks can be instrumental in developing more sophisticated models.

Immersing oneself in the AI community can also greatly enhance the learning process and ensure that ethical AI application methods can be shared with those who are new to the field. Participating in meetups, joining online forums, and networking with fellow AI enthusiasts provide opportunities for continuous learning and motivation. Sharing insights and experiences also helps clarify the technology for others and strengthen one’s own understanding.

Choose a Project that Piques Your Interests

There’s no set roadmap to becoming a responsible AI expert, so it’s important to start wherever you are and build skills progressively. Whether you have a technical background or are starting from scratch, the key is to take that first step and stay committed.

The first project should be something that piques interest and is fueled by motivation. Whether predicting a stock price, analyzing online reviews, or developing a product recommendation system, working on a project that resonates with personal interests can make the learning process more enjoyable and meaningful.

Grasping the ML lifecycle is essential to developing a step-by-step approach to problem-solving, covering stages such as data collection, preprocessing, model training, evaluation, and deployment. Following this structured framework helps guide the efficient development of ML projects. Additionally, because data is the cornerstone of any AI initiative, it’s essential to find free, public datasets that are relevant to the project and rich enough to yield valuable insights. As the data is cleaned and processed, it should be converted into a format a model can learn from, setting the stage for model training.
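The lifecycle stages listed above can be sketched end to end in plain Python. This is a hedged, minimal example, not a production pipeline. The records are hypothetical, and all function names are illustrative. A nearest-centroid classifier stands in for "the model" because it is easy to read. "Deployment" is reduced to serializing the trained model as JSON.

```python
import json
import math
import random

def collect_data():
    """Collection: hypothetical records of (hours_studied, hours_slept, passed)."""
    return [
        (8, 7, 1), (2, 5, 0), (None, 6, 1), (7, 8, 1), (1, 4, 0),
        (6, 6, 1), (3, None, 0), (9, 7, 1), (2, 6, 0), (5, 7, 1),
        (1, 5, 0), (8, 8, 1), (3, 4, 0), (7, 6, 1), (2, 4, 0),
    ]

def preprocess(rows):
    """Preprocessing: drop incomplete rows and format the rest as (features, label) pairs."""
    return [([float(v) for v in row[:-1]], row[-1]) for row in rows if None not in row]

def split(examples, train_fraction=0.7, seed=0):
    """Shuffle reproducibly and hold out part of the data for evaluation."""
    shuffled = examples[:]
    random.Random(seed).shuffle(shuffled)
    cut = int(len(shuffled) * train_fraction)
    return shuffled[:cut], shuffled[cut:]

def train(examples):
    """Training: a nearest-centroid classifier stores the mean feature vector of each label."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in acc] for label, acc in sums.items()}

def predict(model, features):
    """Return the label whose centroid is closest in Euclidean distance."""
    return min(model, key=lambda label: math.dist(model[label], features))

def evaluate(model, examples):
    """Evaluation: accuracy on held-out examples."""
    correct = sum(predict(model, f) == label for f, label in examples)
    return correct / len(examples)

def deploy(model):
    """Deployment (simplified): serialize the model so another service could load it."""
    return json.dumps({str(label): centroid for label, centroid in model.items()})

train_set, test_set = split(preprocess(collect_data()))
model = train(train_set)
print("held-out accuracy:", evaluate(model, test_set))
print("serialized model:", deploy(model))
```

Keeping each stage in its own function mirrors the lifecycle itself. You can swap in a better cleaning step or a different model without touching the other stages.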

Immersive, hands-on tools like AI Sandboxes allow learners to practice AI skills, experiment with AI solutions, and identify and eliminate biases and errors that may occur. These tools give users the chance to safely experiment with preconfigured AI cloud services, generative AI notebooks, and a variety of large language models (LLMs), and they help organizations save time, reduce costs, and minimize risk by eliminating the need to provision their own sandboxes.

When working with LLMs, it’s important for responsible practitioners to be aware of biases that may be embedded in the vast datasets these models learn from. LLMs are like expansive bodies of water, containing everything from works of literature and science to common knowledge. They are exceptional at producing text that is coherent and contextually relevant. Yet, like a river moving through diverse terrain, LLMs can absorb impurities as they go: biases and stereotypes embedded in their training data.

One way to ensure that an LLM is as bias-free as possible is to integrate ethical principles using reinforcement learning from human feedback (RLHF). RLHF is an advanced form of reinforcement learning where the feedback loop includes human input. In simplest terms, RLHF is like an adult helping a child solve a puzzle by actively intervening in the process, identifying why certain pieces don’t fit, and suggesting where they might be placed instead. In RLHF, human feedback guides the AI, ensuring that its learning process aligns with human values and ethical standards. This is especially crucial in LLMs dealing with language, which is often nuanced, context-dependent, and culturally variable.

RLHF acts as a critical tool to ensure that LLMs generate responses that are not only contextually appropriate but also ethically aligned and culturally sensitive. This instills ethical judgment in AI by teaching it to navigate the gray areas of human communication where the line between right and wrong is not always definitive.
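One concrete piece of RLHF is the reward model, which is trained on human judgments of which of two responses is better. The sketch below is a hedged toy version of that single step. It uses a linear reward over two hypothetical hand-made features and the pairwise (Bradley-Terry) loss that is commonly used for preference data. Real reward models are neural networks that score full text. Full RLHF then fine-tunes the LLM against this reward with a reinforcement learning algorithm such as PPO, which is not shown here.

```python
import math

def reward(weights, features):
    """Linear reward model: a higher score means humans are predicted to prefer the response."""
    return sum(w * f for w, f in zip(weights, features))

def train_reward_model(preference_pairs, n_features, lr=0.5, epochs=500):
    """Fit weights from (chosen, rejected) pairs by minimizing the Bradley-Terry loss
    -log(sigmoid(r_chosen - r_rejected)) with stochastic gradient descent."""
    weights = [0.0] * n_features
    for _ in range(epochs):
        for chosen, rejected in preference_pairs:
            margin = reward(weights, chosen) - reward(weights, rejected)
            # The loss gradient w.r.t. the margin is -(1 - sigmoid(margin)), so step the other way.
            step = lr * (1.0 - 1.0 / (1.0 + math.exp(-margin)))
            for i in range(n_features):
                weights[i] += step * (chosen[i] - rejected[i])
    return weights

# Hypothetical features per response: [answers_the_question, contains_stereotype].
# Each pair records which of two responses a human reviewer preferred.
pairs = [
    ([1, 0], [1, 1]),  # equally helpful, but the rejected answer relies on a stereotype
    ([1, 0], [0, 0]),  # the helpful answer is preferred
    ([0, 0], [0, 1]),  # an unhelpful answer still beats a stereotyped one
    ([1, 0], [0, 1]),
]

weights = train_reward_model(pairs, n_features=2)
candidates = {"helpful": [1, 0], "helpful but stereotyped": [1, 1], "off-topic": [0, 0]}
ranked = sorted(candidates, key=lambda name: reward(weights, candidates[name]), reverse=True)
print(weights)
print(ranked)
```

The learned weights end up rewarding helpfulness and penalizing stereotyped content. This is the human feedback signal that RLHF later uses to steer the model.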

Non-Technical Newcomers Can Turn Their Ideas Into Reality

Many AI professionals without IT backgrounds have successfully transitioned from diverse fields, bringing fresh perspectives and skillsets to the domain. No-code and low-code AI tools make it easier to create models without requiring extensive coding experience. These platforms allow newcomers to experiment and turn their ideas into reality without a technical background.

Individuals with IT experience, but lacking coding expertise, are in a strong position to move into AI. The first step is often learning the basics of programming, particularly Python, which is widely used in AI. High-level services from platforms like AWS can provide valuable tools for building models in a responsible way without deep coding knowledge. IT skills like understanding databases or managing infrastructure are also valuable when dealing with data or deploying ML models.

For those who are already comfortable with coding, especially in languages like Python, the transition into AI and ML is relatively straightforward. Learning to use Jupyter Notebooks and gaining familiarity with libraries like Pandas, SciPy, and TensorFlow can help establish a solid foundation for building ML models. Further deepening one’s knowledge of AI/ML concepts, including neural networks and deep learning, will enhance expertise and open the door to more advanced topics.

Tailor the AI Journey to Personal Goals

Although starting from scratch to become an AI expert can seem daunting, it is entirely possible. With a strong foundation, commitment to ongoing learning, hands-on experience, and a focus on the ethical application of AI, anyone can carve their way into the field. There is no one-size-fits-all approach to AI, so it’s important to tailor the journey to personal goals and circumstances. Above all, persistence and dedication to growth and ethics are the keys to success in AI.

#adoption#Advice#ai#AI adoption#ai skills#ai tools#AI/ML#Analysis#approach#artificial#Artificial Intelligence#AWS#background#Behavior#Bias#biases#bot#Building#challenge#Cloud#cloud providers#cloud services#code#coding#communication#Community#continuous#crime#curiosity#cyberattack


Introduction

In today's rapidly evolving cryptocurrency market, CryptoBotsAI is actively developing a robust foundational framework. This framework has been meticulously designed to cater to the multifaceted requirements of participants within the cryptocurrency ecosystem.

At present, the cryptocurrency landscape is characterized by a proliferation of fragmented solutions, each tailored to address specific niche needs. These solutions often focus on singular aspects of cryptocurrency trading, investment, or management. While this diversity can be beneficial, it also poses challenges for investors and industry professionals.

CryptoBotsAI recognizes a significant gap within the cryptocurrency industry—a need for a cohesive and all-encompassing framework. This framework aims to consolidate various functionalities, tools, and resources under one roof. By doing so, it streamlines the often complex and disjointed processes that investors and stakeholders face in the cryptocurrency space.

Overview

We are developing an all-encompassing platform fueled by AI and ML, dedicated to serving crypto investors and users alike. Our platform will feature a no-code interface for creating and managing smart contracts, along with thorough auditing capabilities.

Additionally, various bots will be available to facilitate trading strategies. With these comprehensive tools and insights, we aim to simplify token creation and enhance trading approaches, offering a one-stop solution for all crypto-related requirements.

Our Website: https://www.cryptobotsai.com

Twitter: https://twitter.com/CBAIOfficial

Telegram: https://t.me/CryptobotsaiOfficial

Facebook: https://www.facebook.com/profile.php?id=61553213845457

Instagram: https://www.instagram.com/cryptobots_ai/


The Future of Jobs in IT: Which Skills You Should Learn

As technology reshapes industries, the demand for IT professionals will keep evolving. New technologies such as automation, artificial intelligence, and cloud computing are increasingly being integrated into core business operations, so jobs in IT will soon be not just about coding but about mastering new technologies and developing versatile skills. Here, we cover the trends set to reshape the IT landscape and how you can prepare for this future.

1. Artificial Intelligence (AI) and Machine Learning (ML):

AI and ML are revolutionizing industries by enabling machines to learn from data, automate processes, and predict outcomes. As a result, many future jobs will center on these fields, and professionals can expect to find work as AI engineers, data scientists, and automation specialists.

2. Cloud Computing: