#not my default interpretation but it amuses me whenever I think of it

Text

It is really funny to me to think of Life Series as like, the hermits and friends LARPing together. Like, they don't get enough violence in their day-to-day relationships, so they just go off to a different server to get all the bloodlust out and act out dramatic storylines while they're at it


Note

Okay, what lightning based creature from folklore and mythology might you interpret Josh being spliced with? Same with Corey, because "chameleon" don't cut it lol

Ugh, so many.

Corey:

My first thought would be any of the practically endless variety of household spirits and fairies attributed with invisibility - most of them usually denoted as being mischief makers, not inherently good or evil but more aligned to like a chaotic-neutral kinda thing than any particular morality.

These include, but are not limited to, various kinds of goblin-esque creatures that aren’t necessarily what one would picture when thinking of goblins in terms of like, Lord of the Rings. But brownies, hobgoblins and trow from various Celtic regions of Europe, or something like the duende from Iberian folklore perhaps, some takes on the domovoi from Russian legends, or maybe French lutins though they’re a bit more malevolent in nature than most of these others....but any of those could easily account for the invisibility/camouflage Corey’s capable of, and there’s a lot of them that like, being part of Corey’s ‘nature’ could pair well with the fact that he’s small and unobtrusive in appearance, as well as being a shy, insecure kid not above expressing (justified) resentment or letting that dictate his actions or behavior towards people.

A boggart could also be interesting because there are as many takes on the boggart being a physical flesh and blood shapeshifter or fairy as there are it being a kind of spirit, and invisibility is as much a part of its nature as taking on the appearance of something someone fears....and there’s a lot of potential IMO in the almost irony of making Corey of all characters be part ‘boogeyman’ - since the boggart is the basis of boogeyman legends.

A sylph could be interesting, but it would have to be a Teen Wolf take on the actual sylphs of legend, like putting its own spin on it like it does with a lot of the other supernatural creatures that show up. Because as is, a sylph is one of the four elemental types of beings along with salamanders, undines and gnomes, and so it’s literally just a creature of air - but invisible and spritely, etc. So a Teen Wolf version of a sylph that’s flesh and blood at least some of the time and even CAN be ‘spliced’ into the chimera genome in some way....like, you’d definitely have to tweak your version to make it work, but it could work. And sylph fits a lot about Corey as a character, and it opens up doors for him to have powers beyond just invisibility, such as maybe giving him a kind of telekinesis or even the ability to levitate.

There’s a couple of Finnish beings of legends that could fit the bill, like keiju (kinda a Finnish version of pixies or sprites) or even tonttu, which are like a kind of....proto-Christmas elf, almost? LOL. But again, both are attributed with invisibility and discretion, though the keiju tend to be less concerned with human affairs while the tonttu are normally seen as helpful beings and friendly to humanity.

A bodach could be interesting too, they’re Scottish tricksters, usually more malevolent than neutral but not necessarily. Again, normally invisible, often following people and frightening them with the sound of footsteps while there’s not even footprints in sight.....BUT the thing that’s interesting about this at least in terms of Teen Wolf, is there are various kinds of bodach like the Bodach Glas, the Dark Grey Man, who are also perceived as death omens. Like they’re normally invisible, and the only time you see one is if you’re going to die, and so given the other death-omen related beings in TW like banshees and hellhounds, Corey actually being part Bodach could have some interesting implications or associations....like, maybe it turns out that he can turn invisible whenever he wants, but occasionally he realizes someone sees him even when he doesn’t want them to....and when that happens, that’s actually a sign that the person is destined to die soon. Especially given the way Corey was used in 6A during the Wild Hunt storyline, like....you could definitely tie that into things there or go in similar directions with that.

Tbh though, I’m most intrigued I think by the idea of what if Corey was part....leshy, or one of the various analogues to the leshy in different regions like the borovoi in Russia or the Lithuanian mishkinis. But the leshy, and other related beings are like....a nature spirit, albeit a physical being rather than like a true spirit-spirit. But they’re usually nature GUARDIANS, specifically, and most associated with woods and forests. There’s also a fun kind of irony because one of the attributes of the leshy, aside from its invisibility or ability to disguise itself among the trees by blending in with its backgrounds, is like....the leshy is a size changer as well, and typically defaults as an enormous being, as tall as a tree....but who can also shrink to the size of a blade of grass. Given that Corey’s on the small side, this kinda amuses me, but also could make for a fun twist if he eventually realizes his powers don’t let him just turn invisible or fade into the background, but like, he can change his size and height as well. Make him like an Ant-Man kinda character.

But another thing that’s particularly interesting about the leshy, and makes me lean towards it, is that the leshy isn’t just a generic forest spirit or guardian, but is associated with hunting in specific. But not in the human kind of way, but the hunters of nature.....as in, the leshy is often surrounded by or depicted as always being in the company of...packs of wolves. Occasionally bears, too, but they’re most associated with wolves. Which makes making Corey part leshy and part of a wolf pack particularly fitting, I think. And also sets up some interesting conflicts with hunters like Argent, even if Corey doesn’t even KNOW why he’s so antagonistic towards them, but like, as part leshy, he would likely be prone to very Not Good vibes about human hunters. Which also creates some interesting implications about the WHY of the Dread Doctors of all beings choosing to make a chimera that was part leshy aka anti-hunter....given that the lead Dread Doctor, Marcel, pretty much did everything he did because he was super obviously in love with Sebastian aka the Beast, the prime target and enemy of hunters everywhere, and the whole reason the Argent dynasty began in the first place.

Josh:

I’d need to give some more thought to him. I have seen people headcanon that Josh was spliced with some kind of raiju, which is a creature from Japanese legends typically associated with various gods of thunder. The raiju is a kind of thunder-beast, and usually takes the form or appearance of a blue-white wolf shaped from lightning, though it can also take other forms like that of a weasel, a badger or even a dragon.

I mean, this one definitely does work, it’s just I’m kinda like.....eh, just because TW has fucked around with and fucked over Japanese legends enough on the show without putting any real effort into prioritizing or centering them or the characters associated with them....aka all our rants about Kira and the nameless/barely-a-character nogitsune. I suspect my resistance to having Josh be part raiju is less that like, it doesn’t fit or make sense than it is mostly just.....I don’t trust the execution of most of the people I’ve seen do the headcanoning him as such, lol, so I’m knee-jerk inclined to just run the opposite direction with it, lol. Also, along similar lines, just NO times infinity to having him be in any way part thunderbird because Leave Native American legends alone Teen Wolf, You’ve Done Enough.

There are a number of other kinds of lightning birds though, from regions all around the world, like Slavic mythology and folklore has a couple, particularly associated with Perun, there’s a few in South American regions, Zulu folklore has a lightning bird called the impundulu....but even just going back to Eastern Europe with this, in the sense that since so many of the other creatures TW focused on originate there and that’s where the DD are from, it’s not a stretch to imagine beings or creatures from that region being particularly central to a lot of their experiments....like, for instance in terms of that reasoning, my personal preference would be to go with one of the various takes on the shtriga or strigoi that associate them with lightning (mostly ball lightning). That could work and be something a bit different.

Because the strigoi are basically the basis for Stoker’s take on vampires in particular, so instead of being specifically derived from some kind of lightning-specific creature or being like the raiju, making Josh part strigoi would mean his electricity powers were really only incidental to his chimera nature rather than the focus of it, and since the Teen Wolf chimeras are all one third human, one third werewolf, and one third something else....Josh being a strigoi chimera would effectively make him TW’s version of a vampire-werewolf hybrid.

(Also, like with the leshy, the strigoi makes a certain kind of sense to be spliced with werewolves or associated with a wolf pack, because the strigoi is pretty much definitively THE version of a vampire behind the idea of vampires having strong connections to and imagery associated with wolves, or vampires being able to shapeshift into wolves specifically.....like the strigoi are where those legends and takes in particular all stem from).

And I mean, Josh as part strigoi definitely puts his teeth when he’s in his shifted form in a totally different light, lolol.


Text

on the topic of rk900 && his ‘deviancy’

the way that i write rk900 to be is very specific, and it includes a lot, which i will? try to explain? as best i can? please feel free to ask clarifying questions though like i have so much to say about this and im sure i’ll miss something. anyway imma shove this under a cut because i KNOW im about to just fucking go.

so i wanna make it very clear from the get go that 900 is not deviant in the typical fashion. he does not believe that he is a free being with actual emotions and rights to live. there is never a moment where he stops and goes ‘oh shit’ and breaks through that red tape. he never has an ‘i may be deviant’ breakdown. he is not deviant.

however, he is not not-deviant.

what i mean by this is kind of complicated. let me start with some background into my personal interpretation of the rk900 series in general. they are a series designed for warfare -- the majority of them have been shipped overseas with soldiers to act as a variety of things: weapons, infiltration, an inhuman line of defense to protect the human soldiers --- like. they were specifically designed to be killers, to be dangerous, to be pretty damn hard to stop. they are essentially top of the line terminators -- are they entirely legal? ehhhhhh. but they let the government get away with things that might either kill their human soldiers or are of questionable ethic that they can just.... decommission the android afterwards. that’s their main function, the main reason they exist.

this is crucial, and i will explain more on it in a moment, but for the rest of this meta i’m going to be using rk900 to talk of the general model series, and 900 to speak specifically to mine (and, in a lesser way, the fandom one in general, though this should go without saying i don’t intend to infringe upon other personal headcanons of rk900 writers)

connor was the rk ‘law enforcement’ prototype, but he also functioned mainly as a playing piece in an android revolution that, let’s face it, cyberlife saw coming a mile away. cyberlife wanted a say. they wanted to have a semblance of control over how to twist the uprising in a way that benefited them. given 900 comes about in a world where the revolution was quelled, cyberlife basically achieved their goal (i’ll touch upon my meta of my machine connor and this in another post).

the reason this is important is because 900 was implanted with the majority of observations and experiences that connor had. things he learned about humanity, how to mimic it, how to manipulate it, how to use it. things like the events of the revolution in the order they happened, what it was like to be a detective with the dpd, literally like. all the things that connor went through or observed or scanned or downloaded or learned -- these were uploaded into 900.

now, his memories weren’t necessarily input into 900 -- that is, 900 doesn’t have any first hand accounts of most things (from petting sumo all the way to connor ending the revolution). so even if he has an understanding of what being on a case is like, he doesn’t have connor’s exact memories of doing it. it’s more like all of these things have been converted into data for him to retrieve -- it’s been shifted into facts, statements, articles, serving more as.... general history knowledge? like that stuff everyone just knows for some reason?

but it’s more in depth than that because, yknow, they intended for 900 to be top of the line (yes, 900, not rk900). so all this information from connor was used to boost the insanely vast amounts of knowledge that they input into 900 to begin with -- it was used as contextual information, filler information, you get me? because the main thing that differed between connor and 900 is that 900 was given all the knowledge he needed before he was released, whereas connor was expected to observe and adapt. cyberlife took connor’s methods of observing and interacting and adapting to humanity and refined it so that 900 already had that information.

so when 900 was officially done, he had basically anything he might need to know in his software already. he didn’t need to learn anything.

so let me backtrack a little bit into why i say 900 gets these things and not all the rk900s.

like i said before, the rk900s were built for warfare. 900 was not. i mean, yes, his model and abilities are all the same, he is still an rk900 model. but he was not built to go to war. the 900 that connor meets in the zen garden is the same 900 i write. that particular rk900 model, 900, was specifically designed to replace connor. the others were mass produced for their purpose as war based androids. but 900 was made to work for the DPD, to sort of take connor’s place, in part because it gives them access to local law, but also because they want to monitor the place where connor was assigned.

so because of this 900 does not have a purpose.

he was not developed with any specific goal or mission or duty aside from ‘work with the dpd’, so he doesn’t have the same goal set that the rk900 models are programmed with. cyberlife essentially input all this data into 900, and then didn’t tell him what to do with it aside from ‘just go to this location’ which means that 900 had to figure it out for himself.

this is where cyberlife fucked up big time.

the other rk900 models are designed to be perfect soldiers -- deviancy was basically coded out of them based on connor’s experience (whether or not he went deviant, it was in his code). that’s not to say that they can never go deviant, it’s just to say it would be really fucking hard to make them do that.

since 900 has none of that, he is inherently different from the rk900 model. when you make an android without a purpose, you have basically created a deviant android from the get go. 900 is more or less his own model, in which there is only one 900, and by default, he works for the Detroit Police Department.

now, generally, this means he’s paired with gavin (gotta live up to that delicious ship, honestly) so a lot of my following nonsense stems from that but the point is that he gets to decide what his goal is whenever he wants, so it can change, and it does change, and the choice is entirely his own. for all intents and purposes, cyberlife inadvertently created an android with honest to god free will.

so where does this leave us with deviancy? because i can hear y’all: ‘but fox, you said he wasn’t deviant and now you’re saying he is, which is it?’

here’s what makes 900, well, 900. as part of the fact that he is so incredibly self-aware, he knows he’s a machine. he accepts he’s a machine. there isn’t any like -- ‘i’m a machine’ with a touch of sadness or regret. he doesn’t wish to be human -- he has no desire to feel or experience human things, or do whatever deviant androids wanted. he doesn’t care about that. it doesn’t interest him in the slightest. and this isn’t because he doesn’t know about feelings, or a case of “how could he know he doesn’t want them if he hasn’t experienced them,” because it’s not like that. 900 has an incredible sense of what things are, what they’d be like -- he got all of this from connor, remember. so 900 isn’t inherently interested in being anything other than a machine, and he holds no misunderstandings about what being a machine entails either.

does that make sense? hold on let me try it this way. because cyberlife tried so hard to make sure that 900’s software had everything, they created an incredibly self-aware android that doesn’t seek humanity because of how well they understand life. 900 doesn’t like or dislike being an android -- he just is. he recognizes that. wanting to be something else doesn’t... compute because it just doesn’t make sense. like why would he want to be human when he’s not? when he can be better and do more? like i can’t even say he’s happy about it because he doesn’t (at first) associate chemical reactions in his system with being emotions because they aren’t. it’s all synthetic.

he is perhaps the most alive an android can get without being alive, honestly.

900 decides, upon being assigned to work with gavin, that gavin is technically his mission now. as a partner to a detective, but as a partner that is stronger, faster, and can withstand immense damage, he essentially positions himself as a bodyguard for the human. the problem here is that, due to his awareness, his approach to this is.... unorthodox.

900 will let gavin throw a punch at someone who can and will definitely hit back, but he’ll stop gavin from walking into an ambush. he might get gavin a coffee one day without being asked, but then throw the coffee at him the next day when gavin demands one. he has a habit of making sly comments and is known to push buttons and see what he can get away with, testing limits and constantly pressing against the boundaries of what he can do before someone snaps, and then sees if he can go just a little farther.

900 is very much his own person. he belongs to neither cyberlife nor DPD. he does what he wants when he wants it and how he wants it, and he can, at times, appear very human (albeit one that is obnoxious and at times infuriating). however 900 never lets it be forgotten that he is a machine. he can crush every bone in your body one by one while you’re helpless to it. he can have expressions that are near-humanlike : amused, angry, exasperated. but he will go from lightly smiling to fucking cold eyes and danger just radiating off of him to the point people will actually feel the room lower a few degrees.

900 knows he’s a machine. if you even for a moment forget, he will remind you. he has unethically tortured suspects for information, they purposely don’t give 900 a gun at the start because he would get bored and simply shoot whoever they’re investigating and apologize later. he has no qualms about killing, about breaking laws or faces, about doing things the way that he feels like doing them, with or without anyone approving. and if anyone thinks they can stop him, they’re just. good fucking luck.

but the reason he doesn’t do any of this is because he is self-aware enough to know there’s no fucking point to it. his mission is, as he decides, “protect gavin reed” and he will do what it takes for that, which may include punching a suspect or stealing evidence or something, he doesn’t care, but if it serves no true purpose in what 900 is doing, then he consciously decides he’s not going to just.. do it. but the thing is that at any moment he could decide to change his mind. and that’s what makes him so terrifying. he is literally unpredictable.

i ... okay i think that’s what i wanted to say? just as like some added notes, but with gavin, 900 does become.... hmm... softer? he doesn’t soften but he basically claims gavin as his own, like he is assigned to be gavin’s partner but it becomes literally almost a possessive thing. 900 makes gavin his mission, and if anyone gets in the way, they’re fucked. but by attaching himself to a human, he opens himself up to the softer side of being human, so 900 never truly ‘becomes deviant’ because there is never, as i said, a moment where he’s got a red wall that says this isn’t protocol becaaaause he doesn’t have protocol. so there’s never a chance for him to break through it. but he takes care of his partner, makes sure gavin is eating well and sleeping well, makes sure he’s more or less safe, is always attuned to where gavin is and what gavin is doing, will step in at a moment’s notice if he feels the need.

it becomes way more.... well, more, if this is a ship with a gavin, but regardless, 900 will have a gentler approach to his interactions with the human he has claimed that will be noticeable. softer tones, less cold eyes, more considerate touches. he’ll listen to gavin (may not always follow directions, but he listens), he’ll defend gavin’s actions, he’ll get angry if something happens to gavin. 900 never explicitly goes deviant, and he never fully accepts that what he feels is.... a real feeling? because he does not fully believe that androids can experience true emotion, so even if he does get angry, or he does feel amused or happy or whatever, it’s based on an extensively coded software that has made him so perfectly able to mimic humanity that it’s basically indecipherable. yes, he cares about gavin in most cases. yes he can feel panic or worry if something happens. but in general, no. he will not feel fear, he is not concerned with death, he can’t experience pain... so.

a last note is that like. this is the reason that he goes by 900. gavin typically calls him ‘nines’ which is only allowed for gavin. but a name is a human thing, and 900 is not and has no desire to be, so he just uses 900. most other people in the DPD also just use 900, sometimes they’ll call him rk which he’s okay with but it’s not his preference. he would’ve allowed gavin to name him if he wanted to but gavin didn’t, so that’s why when gavin begins using nines, that’s what 900 assumes as a name. 900 is comfortable to him, it’s who he is, and he doesn’t desire more than that.

alright i’m. i’m probably gonna start getting repetitive soon, and a lot of the rest of the emotional aspect is based on 900 being involved with gavin, and that’s case by case so it can be subject to change, and this post is meant to serve as a general basis for my portrayal of 900.

#jesus i knew this would get long#I STILL DONT FEEL LIKE I SAID IT ALL#;;rk900canon#anyway if you actually fucking read that whole thing good lord


Text

Character Spotlight 1, P1

DAMIAN BEELZY

DISCLAIMER BECAUSE YES,

I do not own Zoophobia. Zoophobia would belong to the lovely Vivienne Medrano (I believe that's how her name is spelt? Meh, I'll check later), otherwise known as Vivziepop

Also, while yes, this is a series where I am going to be critical of the source material, this is NOT a critique of vivziepop herself, as zoophobia is...2, 3 years old? It'd be unfair to judge her and her writing skills based on something she did a while ago.

I'm not doing this because I hate the source material either. On the contrary, I love Zoophobia. The reason I'm doing this is because I believe that if we find flaws in media we enjoy, we could all learn something valuable from it, and apply it to our own work.

If you disagree with something stated here, that's alright. Feel free to tell me what you think and ask questions. I'm not telling you what to think. I simply hope you enjoy.

I apologize for wasting your time.

-ATOUN

-----------------------------------

......So, I'm back.

I've already talked about Dame before in my favorite zp characters list. I've already stated why I like him. For anyone who might not have seen that list, let me give you a spicy recap. I found Dame to be one of the more entertaining characters throughout the comic and he was one of the better written characters during the 5-ish chapters we got from this series. I've already stated what I like about him, so let's just get into some things I don't like.

Oh come on, let's be honest. We all know why we're here. We all can see how much attention my least favorite character list got compared to my favorite list (even if admittedly some of that attention was me thanking people, which btw is something I should really do more often ). You're all here because you want me to tear a hole in this series. It's the same reason most people watch car racing. We don't wanna see who wins. We wanna see some epic car crashes.

Still, I want to start with critiques towards this character I don't agree with.

-----------------------------------

1. Damian is a bad main character because he only has negative character traits

A character having only bad character traits does not in itself mean a character is bad. If that character is poorly written, then it's a bad character. Also, I'd advise you read chapters 3 and 5 where Dame is shown to have some good traits about him. (Ex. Chapter 5 where he expresses concern for Addi )

2. Damian's design is too bland compared to other characters

While I agree with this to some extent, something I want to say here is that Dame is not unique in this regard. You could apply this to Spam, Vanex, Jackie, and Kayla as well. This is more aimed at those who single Damian out as the only one with this problem.

3. Damian is a bad villain

Damian is not meant to be the antagonist. He has been confirmed to be a part of the main cast, and you'll notice that in all of Vivz's villain lineups, he is not present. At most, he may be a rival or adversary to either Zill or Jack.

4. He is unoriginal as a character.

.....and any other characters in media today are? It's very rare in this day and age to come across anything 100% original. It's even been proposed that there are no longer any original ideas left. Besides, a character being unoriginal does not mean the character itself is bad. At most, it's a reflection on the writer's laziness.

5. He's too edgy.

Ah yes, a commonly used complaint you'll hear spouted by angsty 13 year olds who think (despite the fact "edgy" characters are often fan favorites since they often turn out to be the most interesting / relatable characters ) that edginess = bad, and that anything bad happening to a character like, I dunno, EMOTIONAL ISSUES THAT REAL PEOPLE DEAL WITH makes that character edgy by default. Edginess can be done wrong, but not every edgy character is bad. Shut your pie hole, and hustle your buns out of my Italian styled soup kitchen, you cotton headed ninny mugginses. *cue air horns*

Also, hunny, if you hate edgy characters, oh boy, you do NOT wanna read ANY of my stories.

----------------------------------------

There. Now onto the main event. The butchering of a popular character. Let's get ready to break the hearts of fan girls everywhere! MUAH-HAHAHA!

Actually, I wasn't really able to find too much wrong with the character save for a) something completely subjective, b) something related more to a problem I have with zp's pacing as opposed to the character and c) a concern about how the character is written.

A) Damian being too much of a jerk. I disagree with this, but I didn't mention this above because...yeah, some people can feel that Dame is too much of a jerk, and I get why. This one comes down to personal opinion on the character more than anything else.

B) Damian's freak out in chapter 3. It's just the pacing in that scene that gets to me. It feels as though Dame goes from 0 to 60 in only a few frames and that entire segment where he's slowly becoming angrier and angrier feels rushed. According to Dame's character sheet (shown above) Damian is supposed to be good at hiding his more demonic tendencies, but you would've never guessed that from this scene. This is something that kind of happens throughout zoophobia where the pacing will be slow, then all of a sudden, we just speed through an entire scene. For instance, the start of chapter 5 is pretty slow. However, we speed through the scene with Tom so fast, his appearance doesn't really do much or become really memorable. This is more of a story problem and less of a character problem.

Now.... onto c.

First of all, by concern, I'm referring to something that might be a problem depending on how the rest of zoophobia turns out. The problem with both critiquing and defending Zoophobia is that we only have 5 chapters to go off of to determine its quality. This is something more like the tangent I had about Addison in my least favorite character list. I suggest for this, you grab a spoonful of salt and force it slowly down your throat as you read this as my concerns could easily be proven wrong here.

So what problem could I possibly foresee? Well, for an example of what I'm about to discuss, let me take you to a dark corner of the internet. The RWBY fandom. Specifically, let me introduce you to one of its main cast members.

Blake Belladonna.

For anyone outside the loop, Blake is (currently) one of the most hated characters in the series. There are many reasons why, but for this, I'm going to lock in on one problem in particular. Throughout Volumes 1-5, Blake was notorious for being an inconsistent character. Granted, in volumes 1 and 2, this was not a problem unique to Blake as the writers were still trying to figure out how to write her and the rest of the characters. They couldn't decide whether they wanted Blake to be the introverted, bookworm, straight man character, or to be silly. In later volumes, however, the problem just got worse. While all the other characters were sorted out and had settled on their own personalities, Blake's character seemed to change whenever she was in a new scene. While it's not bad for a character to have multiple sides to them, this is not how you want to do it. One scene, Blake was a bitch who wanted nothing more than to be left alone. Next scene, she was an emotionally mature figure helping her gay chameleon friend with issues. Next scene, she was a trauma victim. Next scene, she was a freedom fighter fighting oppression. Next scene, she was a badass haunted by her past. Next scene, back to bitch.

You see the problem?

This made Blake a hard character to fully connect with, and eventually, the fandom ended up agreeing that Blake is better whenever the scene isn't focused on her.

So what does this have to do with Damian? Well, one thing I noticed with him when I first dipped my toe into this fandom was the three main interpretations of Damian's character there seemed to be. One, a flirty, yet cartoon villainy jerk; two, a misunderstood, rebellious boi who was somewhat mischievous; or three, an overpossessive, yet tolerable brat who hated not getting his way. Just to clarify, I'm referring to fans who had only read the comic and had not seen any posts about him from Vivz.

I pondered why during the third zoophobia rewrite, and I eventually came to this conclusion : the type of Damian fans seemed to remember depended on WHICH Dame they remembered best, Ch2, Ch3, or Ch5. Why? Well, it basically wolloped me upside the head after reading a post where Dame's personality was described as "diverse". Because yeah.... It's diverse alright.

Let's put Dame under a microscope for a second and go through each of his appearences, and his character sheet which (someone correct me if I'm wrong ) came out between ch. 2 and ch.3.

His character sheet lists the following about his personality: he ranges from mischievous to a downright brat, he loves entertaining and messing with others and making them laugh, he's flirty, he's open, he can be spoiled or arrogant at times, he gets bored easily, and he is secretly lonely but hard to impress and has an enormous heart. He also has a dark side he's good at hiding.

Ch 1. Appears to be that one kid who enjoys messing with others, acts slightly flirty towards Kayla, and doesn't seem to take much seriously.

Ch 2. I've often described this Dame as sociopathic, because honestly, he kinda is. He doesn't care about anyone here but himself. He acts flirty towards Kayla, and torments both Zill and Jack just to further his goal of charming a girl he supposedly knows will "give into temptation eventually", and he's outright manipulative here. He even finds Zill's pain amusing to some extent and mocks him and Jack while aggressively leaning on his cousin (probably to assert dominance (can aggressively leaning be the new t-posing? Please?))

Ch 3. Dame still has some lack of empathy, finding the idea of his cousin being burned alive funny; however, this seems to be limited to just Jack. He's a lot more fun-loving here, as seen in him running around town with his friends. His darker side makes an appearance. We see him entertaining others at the beginning, and oddly enough, he's more self-conscious here. He gets embarrassed by Tenta, is bothered when he is teased about his nanny, and is triggered by the priest spouting that tasty religious bullshit. His conversation with his parents also makes him seem like he needs his daddy's approval, and may have daddy issues.

Ch 5. Here, he's more of a brat. He's possessive of Addi, and at the beginning, he's more flirty and carefree.

Thus far, Vivz seems to switch between various sides of Dame depending on what she needs him to be for a certain scene. As seen with Blake, this isn't something that really works out well. And it's not like Vivz can't write characters with different sides to them. We see her do this with Jack, Kayla, and Cameron. In one chapter, these characters can show more than one side to them. In Damian's case, he's like play-doh. He just molds into whatever Vivz needs instead of just being his own character.

Like I said before though, this is a concern. Future chapters can easily prove me wrong here. This is just the sad ramblings of a Canadian who is obsessed with covering themselves in glitter. Tell me what you guys think and if you enjoyed!

Now to wait for zoophobiapika to either message me or reblog this, quoting a line from it.....

30 notes

·

View notes

Text

Five Times Adam Made Ronan Laugh

Adam/Ronan

A/N: The title says it all. More of a pre-slash fic than anything. I hope you enjoy it!

[Read it on AO3]

Words: 1 680

1.

Adam hadn’t meant for it to come out as sarcastic as it did. He’d aimed for a playful comment to hide a deeper bitterness, but what had left his mouth had been anything but lighthearted, and for a moment he feared that someone would either get pissed at him, or ask him if he was okay. He didn’t want either of those things to happen.

How it ended up with Ronan laughing - genuinely laughing for the first time in weeks - he wasn’t sure. But that was what was happening.

And.

Holy shit.

That laugh was magical.

Adam wasn’t prone to gaping at people like a fool, and thankfully he managed to get control of his features before it became too obvious, but Ronan was still laughing, so it was hard keeping it cool.

Seriously, it hadn’t been that funny.

Gansey also seemed amused at Ronan practically doubling over and clutching his stomach, but Adam assumed that slightly fond smile was present due to him having been friends with the old Ronan. A different Ronan. One that grinned and laughed freely and joked around with his friends and family and didn’t look like he wanted to murder you 95% of the time. A less broken Ronan.

Adam felt like he was finally seeing a glimpse of that Ronan, there on the floor of Monmouth Manufacturing. He had to admit he liked him.

What he didn’t like was the knowing look Noah was throwing his way. How did he always know?

2.

Three weeks later and Adam had, somehow, forgotten all about the incident. They were in Ronan’s car, Adam in the passenger seat of course. They had about fifteen more minutes until they were at Adam’s apartment, and the silence would’ve maybe been awkward had Ronan not been drowning it out completely with his shitty music. Adam only kept his complaints to a minimum since he was giving him a ride.

But that didn’t stop him from scowling at particularly bad songs.

“Cheer up, Parrish,” Ronan told him after he’d caught sight of his dismay. “You’d think I’ve run over your cat or something.”

“You might as well have.”

Ronan shot him a look. “Oh?”

Adam pointed to the radio. “That sounds like a dying cat, Lynch.”

And rather than rolling his eyes or scoffing or even snapping at him, Ronan laughed.

Again.

It was definitely different from the last time Adam had made him laugh. It was calmer, for one. Barely doing more than making his body shake a little bit. He didn’t lose control of the car or throw his head back or clutch at his midriff. He just barked out a laugh or two, his lips not wider than a smile, and then returned his attention to the road.

It kept ringing in Adam’s ears for the rest of the night.

3.

It was silly how Adam could forget about a literal giggle fit and yet not be able to let go of a few seconds of laughter, but that was his current predicament, and it was driving him insane.

Whenever he looked at Ronan all he could think about was how the smooth skin on his face had been decorated with laughter lines, even if only briefly. Maybe that was the reason he was obsessed. He’d only gotten a brief taste of it and now needed more.

God, he might be obsessed with Ronan’s laughter. Or at least with the idea of it. Neither option made his life easier.

Ronan was in a bad mood, but something told Adam this was more sorrow than anger. Nothing had set him off, and by this point Adam had learnt that Ronan’s default mode wasn’t rage, per se, but something else. Something that transformed into anger when provoked (which it often was).

He knew he should leave his friend alone. He knew from experience that he had absolutely no idea how to handle Ronan. He was no Gansey, and he was utterly aware of it.

But he couldn’t let him be. Not when he saw something so vulnerable on his face when he thought no one was looking.

He approached him slowly, knowing better than to straight out ask him what was wrong. There was no way Ronan would tell, and he would just be wasting time and scaring him off. It would help absolutely no one.

Ronan raised an eyebrow when he sat down beside him. They were all at Monmouth Manufacturing, but they might as well have been alone since everyone was sitting on their own, desperate for some calm and privacy after a hectic day. Adam was aware of the fact that Ronan might not want him here, but it was worth a try anyway. “Lynch.”

“Parrish,” Ronan replied, his voice steady, but his gaze questioning. “Didn’t know you preferred the floor to the couch.”

Adam waved a hand at him. “Gansey’s weirdly obsessed with me. He keeps leaning against me and it’s getting annoying.”

“Hey.” Gansey furrowed his brows at him, but relaxed when he realized he’d been joking. “Ronan’s rubbing off on you too much, Adam.”

Ronan snorted, but the smile he shot him wasn’t exactly genuine. “Just doing what I can. If anyone needs toughening up, it’s Parrish.”

“You know what? I’m gonna take that as a compliment.”

Ronan raised his hands. “As you should.”

“Because why would I believe that Ronan Lynch would say something mean to me? I mean, have you met him? Ronan Lynch is a sweetheart.”

Ronan almost looked angry now, and Adam was afraid he’d crossed a line, but then, just as he thought Ronan would either get up and leave or reach out to hit him, he laughed. For a second. A beautiful second.

And then he punched Adam on the shoulder, though not hard. “You’re so annoying, Parrish.”

Adam grinned. “You are rubbing off on me.”

4.

“Do you think we’re gonna make it out alive?”

Ronan looked at him, his face completely blank. “From here?”

“From this whole thing,” Adam clarified. “I don’t know. I just have a feeling one of us is gonna die before we’re done with Glendower and Cabeswater. Or at least lose a limb.”

Ronan shifted where he was standing beside him. They were waiting for Gansey to finish getting the right equipment before entering the woods of Cabeswater for the umpteenth time, and Adam had had a bad feeling about everything all week. He didn’t want Gansey or Blue or Noah to know about his worries, but something about Ronan made him want to confess everything he kept in his heart, though he wasn’t sure why.

“I think we’re gonna be okay,” Ronan finally said.

“Do you really believe that or are you just saying it to make me feel better?”

“When do I ever say things just to make people feel better?”

Adam barked out a laugh. The first laugh he’d uttered all week. Maybe Ronan wasn’t the only one who needed to laugh more. “True.”

Ronan was wearing something slightly weaker than a smile now. “But seriously, Adam. I think we’re gonna be fine.”

Adam. Not Parrish. Somehow that small detail made him believe him.

“Now stop getting cold feet and get yourself together.”

Adam laughed again, harder this time, because he knew Ronan had added that to bring some normalcy into this entire thing, and it was working. “You’re a real charmer, you know. So comforting.”

And Ronan laughed right with him, and the others were very confused when they joined them a few seconds later.

5.

Adam wasn’t an affectionate person, and mind you neither was Ronan, so why they both seemed so okay with Adam having accidentally brushed his hand over his arm was beyond him, but he reckoned he should just be happy Ronan didn’t glare him to death. Instead, he’d done nothing, and Adam had gotten curious, and thankfully the others were way too preoccupied to notice the little game Adam had created. He wasn’t entirely sure how he would’ve explained it.

He was very casual about it, not really wanting Ronan to catch on. But every seemingly accidental nudge, every brush of his fingers against a random body part, every knee against a knee left Ronan looking at him in a way Adam couldn’t fully interpret, and he was just thinking that maybe it was time to stop when a misplaced touch made Ronan recoil, and it had caught his attention too tightly for him to let it go. “What was that?”

“What was what?”

“You jumped.”

“You make no sense, Parrish.”

Adam narrowed his eyes at him. “Are you ticklish, Ronan Lynch?”

“Fuck off, you- go away!”

Adam had partly been kidding when he’d said it, but Ronan’s reaction to him reaching out was too great for him to have been wrong, and Adam found himself grinning almost smugly at him. “Well, this is interesting.”

“I am literally going to kill you. Pick a spot to be buried, Parrish.”

“Does Cabeswater count?”

“You goddamn nerd- stop it!”

Ronan was smiling almost helplessly whenever Adam moved closer, and Adam had to admit he adored it. Adored the foreshadow of laughter.

But it was nothing compared to the actual laughter.

Adam had barely touched him, and yet Ronan was now giggling like a maniac. Adam knew he wouldn’t have done it had the others been around, but right now they were alone, and a new side of Ronan appeared to greet Adam, albeit for just a moment.

When Ronan backed away from his hands Adam decided to drop it, though he was still smiling, and Ronan failed at his attempt to glare, so he just shook his head with a smile of his own that he was trying desperately to conceal.

“Let me guess,” Adam said cheerfully. “You think I’m annoying.”

Ronan barked out a laugh that had probably still been bubbling in his throat from earlier. “You read my mind.”

“You have to admit I’m a little fun to be around, at least.”

“Keep dreaming, Adam.”

Adam. Not Parrish.

#pynch#pynch fic#pynch fluff#adam/ronan#adam/ronan fic#trc fic#the raven cycle fic#fluff#mine#five times adam made ronan laugh#nat writes

300 notes

·

View notes

Text

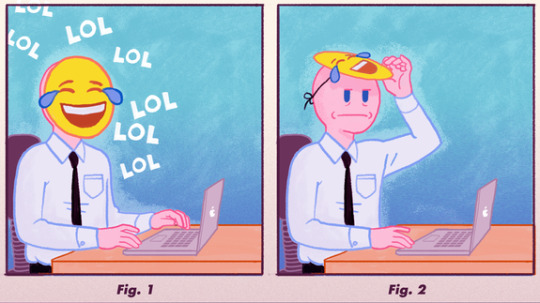

Why so many people type 'lol' with a straight face: An investigation

There's a deceitful act I've been engaging in for years—lol—but it wasn't until recently, while texting a massive rant to a friend, that I became aware of just how bad it is.

I'd just sent an exhaustive recap of my nightmarish day when a mysteriously placed "lol" caught my eye. Not a single part of me had felt like laughing when I typed the message, yet I'd ended my massive paragraph with the words, "I'm so stressed lol."

I had zero recollection of typing the three letters, but there they were, just chilling at the end of my thought in place of a punctuation mark. I hadn't found anything funny, so why were they there? Unclear! I scrolled through my conversations and noticed "lol" at the end of nearly every message I’d sent — funny or not. That's when I realized how frequently and insincerely I use the initialism in messages. I was on auto-lol.


The next day, I arrived to work with a heightened sense of lol awareness and took note of my colleagues' behavior on Slack. They too, overused "lol" in conversation. Chrissy Teigen tweeted about the family hamster again? "Lol." Someone's selling a jean diaper? "Lol." Steve Buscemi's name autocorrected to Steph Buscemi? "Lol."

It was ubiquitous. And though some made audible chuckles at their desks throughout the day, the newsroom remained relatively silent. People were not laughing out loud whenever they said they were. It was all a sham!

As I'm sure is true with everyone, there are times when I'll type "lol" and smile, chuckle, or genuinely laugh out loud. But I'm also notoriously capable of assembling the three letters without moving a facial muscle.

Curious to know why so many of us insist on typing "lol" when we aren't laughing, I turned to some experts.

Why so serious? Lol.

Lisa Davidson, Chair of NYU's Department of Linguistics, specializes in phonetics, but she's also a self-proclaimed "prolific user" of "lol" in texts. When I approached Davidson in hopes of uncovering why the acronym comes out of people like laugh vomit, she helpfully offered to analyze her own messaging patterns.

On its surface, Davidson suspects "the written and sound structure" of "lol" is pleasing, and the symmetry of how it's typed or said likely adds to that appeal. The 'l' and 'o' are also right next to each other on a keyboard, she notes, which makes for "a very efficient acronym." In taking a deeper look, however, she recognized several other reasons one might overdo it with the initialism.

Davidson often sees "lol" used in conjunction with self-deprecating humor, or to poke fun at someone in a bad situation, like "if someone says they're stuck on the subway, and you text back 'lol, have fun with that.'" And in certain cases, she notes, "lol" can be included "to play down aggressiveness, especially if used in conjunction with something that might come across as critical or demanding."

"For example, if you're working on a project with a co-worker, and they save a file to the wrong place in a shared Drive, you [might] say something like, 'Hey, you put that file in the Presentations folder, lol. Next time can you save it to Drafts?'"

Extremely relatable.

Admitting we have a problem

After hearing from Davidson, I set out to analyze a few of my own text messages. I found several of her interpretations applicable and even discovered a few specific to my personal texting habits.

When telling my friend about my stressful day, for instance, I realized I'd included the lol that anchored my message for comfort, like a nervous giggle. In my mind, it meant I was keeping things light, which must mean everything's OK. In many cases, I also add "lol" to a message to make it sound less abrasive. Without it, I fear a message comes across as cold or incomplete.

On occasion, I'll send single "lol" texts to acknowledge I've received a message, but have nothing else to add to the conversation. And as much as it pains me to admit, the lol is sometimes there as a result of laziness. I experience moments of pure emotional exhaustion in which I'd rather opt for a short and sweet response than fully articulate my thoughts. In those cases, "lol" almost always delivers.

A poor soul removing his "lol" mask after a long day of pretending to laugh.

Image: bob al-greene / mashable

The realization that "lol" has become a sort of a conversational crutch for me is somewhat disturbing, but I can take a shred of solace knowing I'm not alone. As previously noted, many of my colleagues are also on auto-lol. (If you need some proof, 3,662 results popped up when I searched the term in Mashable Slack, and those are just the lols visible to me.)

When I brought up the topic of lol addiction in the office, offenders quickly came forward in an attempt to explain their personal behavior. Some said they use it as a buffer word to fill awkward silences, while others revealed they consider it a kinder alternative to the dreaded "k."

Several people admitted they call upon "lol" in times when they feel like being sarcastic or passive aggressive, whereas others use it to avoid confrontation, claiming it "lessens the blow of what we say."

"I've also noticed a lot with my friends that if they say something that creates a sense of vulnerability they'll use 'lol' or 'haha' to diminish its importance," another colleague noted.

While there are a slew of deeper meanings behind "lol," sometimes the lack of audible laughter simply comes down to self-control. You can use the term to communicate you genuinely think something's funny, but you might not be in a physical position to laugh about it, kind of like how people type "I'M SCREAMING" and do not scream.


Understanding the auto-lol epidemic

Nearly everyone I spoke to believed the auto-lol epidemic is real. But how exactly we as a society arrived at this place of subconscious laughter remains a mystery.

Though "lol" reportedly predates the internet, a man named Wayne Pearson claims to have invented the shorthand in the '80s as a way to express laughter online. As instant messaging and texting became more popular, so did "lol," and at some point, its purpose pivoted from solely signifying laughter to acting as a universal text response.

Caroline Tagg, a lecturer in Applied Linguistics and English Language at the Open University in the UK, favors emoji over "lol," but as the author of several books about digital communication, including Discourse of Text Messaging: Analysis of SMS Communication, she's very familiar with the inclusion of laughter in text.

"Over time, its use has shifted, and it has come to take on other meanings — whether that's to indicate a general mood of lightheartedness or signal irony," Tagg confirms. "These different meanings emerge over time and through repeated exposure to the acronym."

In some cases, the decision to include "lol" in a message might be stylistic — "an attempt to come across in a particular way, to perform a particular persona, or to adopt a particular style."

Ultimately, Tagg believes everyone perceives "lol" in text differently, and makes the conscious decision to use the initialism for various reasons, which are usually influenced by "conversational demands."

As for the increase in frequency over time, she noted that if you engage in conversation with someone who's a fan of saying "lol," you could wind up using the term more often. "Generally speaking ... people who are in regular contact with each other do usually develop shared norms of communication and converge around shared uses," she said.

Think of it like a vicious cycle of contagious text laughter.

Embarking on an lol detox

Now that I'm aware of my deep-seated lol dependency, I'm trying my best to change it. I encourage anyone who thinks they might be stuck in an lol rut to do the same.

The way I see it we have two options: Type lol less, or laugh out loud more. The latter sounds pretty good, but if you're committed to keeping your Resting Text Face, here are some tips.

Try to gradually wean yourself off your reliance on lol by ending messages with punctuation marks instead, using a more specific emoji in place of your laughter, or making an effort to better articulate yourself. Instead of "lol," maybe, "omg that's hilarious," for example.

At the very least, try changing up your default laugh setting once in a while. Different digital laughs carry different connotations. If you're ever in doubt about which to use, you can reference this helpful guide:

LOL/HAHA — I really think this thing is hilarious as shown by my caps!

Lol — Bitch, please OR I have nothing to say.

lollllllllll — Yo, that's pretty funny.

el oh el — So unfunny I feel the need to type like this.

haha — Funny but not worth much of my time.

hahahaha — Funny and worth my time!

hah/ha — This is not amusing at all and I want to make that known.

HA — Yes! Finally!

Lmao/Lmfao — When something evokes more comedic joy than "lol" does.

LMAO/LMFAO — Genuine, impassioned laughter, so strong you feel as though your rear end could detach from your body.

Hehehe — You are softly giggling, were just caught doing something semi-suspicious or sexting, or are a small child or a serial killer. This one really varies.

heh — Sure! Bare minimum funny, I guess! Whatever!

In very special cases, consider clarifying that you are literally laughing out loud. As someone who's received a few "Actually just laughed out loud" messages in my lifetime, I can confirm that they make me feel much better than regular lol messages.

One of the major reasons we rely so heavily on representations like "lol" in digital interactions is because we're desperately searching for ways to convey emotions and expressions that can easily be picked up on in face-to-face conversations. It works well when done properly, but we've abused lol's polysemy over the years. After all the term has done for us, it deserves a break.

If we make the conscious effort to scale back, we might be able to prevent "lol" from losing its intended meaning entirely.


0 notes

Text

Autocomplete Presents the Best Version of You


Type the phrase “In 2019, I’ll …” and let your smartphone’s keyboard predict the rest. Depending on what else you’ve typed recently, you might end up with a result like one of these:

In 2019, I’ll let it be a surprise to be honest.

In 2019, i’ll be alone.

In 2019, I’ll be in the memes of the moment.

In 2019, I’ll have to go to get the dog.

In 2019 I will rule over the seven kingdoms or my name is not Aegon Targareon [sic].

Many variants on the predictive text meme—which works for both Android and iOS—can be found on social media. Not interested in predicting your 2019? Try writing your villain origin story by following your phone’s suggestions after typing “Foolish heroes! My true plan is …” Test the strength of your personal brand with “You should follow me on Twitter because …” Or launch your political career with “I am running for president with my running mate, @[3rd Twitter Suggestion], because we …”

Gretchen McCulloch is WIRED’s resident linguist. She’s the cocreator of Lingthusiasm, a podcast that’s enthusiastic about linguistics, and her book Because Internet: Understanding the New Rules of Language is coming out in July 2019 from Penguin.

In eight years, we’ve gone from Damn You Autocorrect to treating the strip of three predicted words as a sort of wacky but charming oracle. But when we try to practice divination by algorithm, we’re doing something more than killing a few minutes—we’re exploring the limits of what our devices can and cannot do.

Your phone’s keyboard comes with a basic list of words and sequences of words. That’s what powers the basic language features: autocorrect, where a sequence like “rhe” changes to “the” after you type it, and the suggestion strip just above the letters, which contains both completions (if you type “keyb” it might suggest “keyboard”) and next-word predictions (if you type “predictive” it might suggest “text,” “value,” and “analytics”). It’s this predictions feature that we use to generate amusing and slightly nonsensical strings of text—a function that goes beyond its intended purpose of supplying us with a word or two before we go back to tapping them out letter by letter.

The basic reason we get different results is that, as you use your phone, words or sequences of words that you type get added to your personal word list. “For most users, the on-device dictionary ends up containing local place-names, songs they like, and so on,” says Daan van Esch, a technical program manager of Gboard, Google’s keyboard for Android. Or, in the case of the “Aegon Targareon” example, slightly misspelled Game of Thrones characters.

Another factor that helps us get unique results is a slight bias toward predicting less frequent words. “Suggesting a very common word like ‘and’ might be less helpful because it’s short and easy to type,” van Esch says. “So maybe showing a longer word is actually more useful, even if it’s less frequent.” Of course, a longer word is probably going to be more interesting as meme fodder.
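As a rough illustration of the ideas above, here is a minimal Python sketch of a next-word predictor that learns from what the user types and nudges longer words upward. It is a toy under stated assumptions, not how Gboard or any real keyboard works: the `NextWordPredictor` class, the bigram counting, and the `length_bonus` weighting are all hypothetical choices made for this example.

```python
from collections import Counter, defaultdict


class NextWordPredictor:
    """Toy bigram predictor with a per-user word list (illustrative only)."""

    def __init__(self, length_bonus=0.1):
        # Maps a word to a counter of the words typed right after it.
        self.bigrams = defaultdict(Counter)
        # Hypothetical knob: how strongly longer words are favoured.
        self.length_bonus = length_bonus

    def learn(self, text):
        """Add everything the user typed to the personal word list."""
        words = text.lower().split()
        for prev, nxt in zip(words, words[1:]):
            self.bigrams[prev][nxt] += 1

    def suggest(self, prev_word, k=3):
        """Return up to k suggestions for the word following prev_word."""
        candidates = self.bigrams.get(prev_word.lower(), {})

        def score(item):
            word, count = item
            # Frequency scaled by length, so a short common word such as
            # "and" can lose to a longer, slightly rarer word.
            return count * (1 + self.length_bonus * len(word))

        # Highest score first; ties broken alphabetically for stable output.
        ranked = sorted(candidates.items(), key=lambda it: (-score(it), it[0]))
        return [word for word, _ in ranked[:k]]
```

With `length_bonus=0` the ranking is pure frequency; raising it lets a longer word overtake a shorter one that was typed a little more often, which is the kind of bias van Esch describes.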

Finally, phones seem to choose different paths from the very beginning. Why are some people getting “I’ll be” while others get “I’ll have” or “I’ll let”? That part is probably not very exciting: The default Android keyboard presumably has slightly different predictions than the default iPhone keyboard, and third-party apps would also have slightly different predictions.

Whatever their provenance, the random juxtaposition of predictive text memes has become fodder for a growing genre of AI humor. Botnik Studios writes goofy songs using souped-up predictive keyboards and a lot of human tweaking. The blog AI Weirdness trains neural nets to do all sorts of ridiculous tasks, such as deciding whether a string of words is more likely to be a name from My Little Pony or a metal band. Darth Vader? 19 percent metal, 81 percent pony. Leia Organa? 96 percent metal, 4 percent pony. (I’m suddenly interpreting Star Wars in quite a new light.)

The combination of the customization and the randomness of the predictive text meme is compelling the way a BuzzFeed quiz or a horoscope is compelling—it gives you a tiny amount of insight into yourself to share, but not so much that you’re baring your soul. It’s also hard to get a truly terrible answer. In both cases, that’s by design.

You know how when you get a new phone and you have to teach it that, no, you aren’t trying to type “duck” and “ducking” all the time? Your keyboard deliberately errs on the conservative side. There are certain words that it just won’t try to complete, even if you get really close. After all, it’s better to accidentally send the word “public” when you meant “pubic” than the other way around.

This goes for sequences of words as well. Just because a sequence is common doesn’t mean it’s a good idea to predict it. “For a while, when you typed ‘I’m going to my Grandma’s,’ Gboard would actually suggest ‘funeral,’” van Esch says. “It’s not wrong, per se. Maybe this is more common than ‘my Grandma’s rave party.’ But at the same time, it’s not something that you want to be reminded about. So it’s better to be a bit careful.”

Users seem to prefer this discretion. Keyboards get roundly criticized when a sexual, morbid, or otherwise disturbing phrase does get predicted. It’s likely that a lot more filtering happens behind the scenes before we even notice it. Janelle Shane, the creator of AI Weirdness, experiences lapses in machine judgment all the time. “Whenever I produce an AI experiment, I’m definitely filtering out offensive content, even when the training data is as innocuous as My Little Pony names. There’s no text-generating algorithm I would trust not to be offensive at some point.”

The true goal of text prediction can’t be as simple as anticipating what a user might want to type. After all, people often type things about sex or death—according to Google Ngrams, “job” is the most common noun after “blow,” and “bucket” is very common after “kick the.” But I experimentally typed these and similar taboo-but-common phrases into my phone’s keyboard, and it never predicted them straightaway. It waited until I’d typed most of the letters of the final word, until I’d definitely committed to the taboo, rather than reminding me of weighty topics when I wasn’t necessarily already thinking about them. With innocuous idioms (like “raining cats and”), the keyboard seemed more proactive about predicting them.
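The behavior observed in that experiment, where a sensitive word is only offered once most of its letters are typed, can be sketched in a few lines of Python. This is a guess at the general shape of such a rule, not a description of any real keyboard: the `complete` function, the `sensitive` set, and the `commit_ratio` threshold are hypothetical names and values invented for illustration.

```python
def complete(prefix, candidates, sensitive, commit_ratio=0.75):
    """Return completions for prefix, holding back sensitive words.

    A word in `sensitive` is only suggested once the user has typed at
    least `commit_ratio` of its letters, i.e. has clearly committed to it.
    """
    suggestions = []
    for word in candidates:
        if not word.startswith(prefix):
            continue
        # Hold the sensitive word back until enough of it has been typed.
        if word in sensitive and len(prefix) < commit_ratio * len(word):
            continue
        suggestions.append(word)
    return suggestions


# Example: "bucket" stands in for a word the keyboard is cautious about.
words = ["bucket", "bus", "button"]
print(complete("bu", words, sensitive={"bucket"}))     # cautious word hidden
print(complete("bucke", words, sensitive={"bucket"}))  # user has committed
```

Innocuous words pass straight through, so the same function would complete an idiom like “raining cats and” eagerly while waiting on a flagged word.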

Instead, the goal of text prediction must be to anticipate what the user might want the machine to think they might want to type. For mundane topics, these two goals might seem identical, but their difference shows up as soon as a hint of controversy enters the picture. Predictive text needs to project an aspirational version of a user’s thoughts, a version that avoids subjects like sex and death even though these might be the most important topics to human existence—quite literally the way we enter and leave the world.

We prefer the keyboard to balance raw statistics against our feelings. Sex Death Phone Keyboard is a pretty good name for my future metal band (and a very bad name for my future pony), but I can’t say I’d actually buy a phone that reminds me of my own mortality when I’m composing a grocery list or suggests innuendos when I’m replying to a work email.

The predictive text meme is comforting in a social media world that often leaps from one dismal news cycle to the next. The customizations make us feel seen. The random quirks give our pattern-seeking brains delightful connections. The parts that don’t make sense reassure us of human superiority—the machines can’t be taking over yet if they can’t even write me a decent horoscope! And the topic boundaries prevent the meme from reminding us of our human frailty. The result is a version of ourselves through the verbal equivalent of an Instagram filter, eminently shareable on social media.


0 notes

Text

Autocomplete Presents the Best Version of You

New Post has been published on http://webhostingtop3.com/autocomplete-presents-the-best-version-of-you/

Autocomplete Presents the Best Version of You

Type the phrase “In 2019, I’ll …” and let your smartphone’s keyboard predict the rest. Depending on what else you’ve typed recently, you might end up with a result like one of these:

In 2019, I’ll let it be a surprise to be honest.

In 2019, i’ll be alone.

In 2019, I’ll be in the memes of the moment.

In 2019, I’ll have to go to get the dog.

In 2019 I will rule over the seven kingdoms or my name is not Aegon Targareon [sic].

Many variants on the predictive text meme—which works for both Android and iOS—can be found on social media. Not interested in predicting your 2019? Try writing your villain origin story by following your phone’s suggestions after typing “Foolish heroes! My true plan is …” Test the strength of your personal brand with “You should follow me on Twitter because …” Or launch your political career with “I am running for president with my running mate, @[3rd Twitter Suggestion], because we …”

Gretchen McCulloch is WIRED’s resident linguist. She’s the cocreator of Lingthusiasm, a podcast that’s enthusiastic about linguistics, and her book Because Internet: Understanding the New Rules of Language is coming out in July 2019 from Penguin.

In eight years, we’ve gone from Damn You Autocorrect to treating the strip of three predicted words as a sort of wacky but charming oracle. But when we try to practice divination by algorithm, we’re doing something more than killing a few minutes—we’re exploring the limits of what our devices can and cannot do.

Your phone’s keyboard comes with a basic list of words and sequences of words. That’s what powers the basic language features: autocorrect, where a sequence like “rhe” changes to “the” after you type it, and the suggestion strip just above the letters, which contains both completions (if you type “keyb” it might suggest “keyboard”) and next-word predictions (if you type “predictive” it might suggest “text,” “value,” and “analytics”). It’s this predictions feature that we use to generate amusing and slightly nonsensical strings of text—a function that goes beyond its intended purpose of supplying us with a word or two before we go back to tapping them out letter by letter.
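To make the two halves of the suggestion strip concrete, here is a minimal sketch in Python. It assumes the "list of words and sequences of words" can be approximated by simple word-pair counts; real keyboards are far more sophisticated, and the function names and toy corpus below are invented for illustration.

```python
from collections import Counter, defaultdict

def build_bigrams(sentences):
    """Count which word follows which across a list of sentences."""
    following = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            following[prev][nxt] += 1
    return following

def suggest_next(following, prev_word, k=3):
    """Next-word prediction: the k most frequent followers of prev_word."""
    counts = following.get(prev_word.lower(), Counter())
    return [word for word, _ in counts.most_common(k)]

def complete(vocabulary, prefix, k=3):
    """Completion: the k most frequent known words starting with prefix."""
    matches = Counter({w: n for w, n in vocabulary.items() if w.startswith(prefix)})
    return [word for word, _ in matches.most_common(k)]

# Toy data, made up for this example.
corpus = [
    "predictive text is fun",
    "predictive text is odd",
    "predictive value matters",
    "predictive analytics",
]
following = build_bigrams(corpus)
vocabulary = Counter(w for s in corpus for w in s.lower().split())
vocabulary["keyboard"] += 1

print(suggest_next(following, "predictive"))
print(complete(vocabulary, "keyb"))
```

Playing the meme amounts to calling `suggest_next` over and over, feeding each chosen word back in as the next `prev_word`.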

The basic reason we get different results is that, as you use your phone, words or sequences of words that you type get added to your personal word list. “For most users, the on-device dictionary ends up containing local place-names, songs they like, and so on,” says Daan van Esch, a technical program manager of Gboard, Google’s keyboard for Android. Or, in the case of the “Aegon Targareon” example, slightly misspelled Game of Thrones characters.

Another factor that helps us get unique results is a slight bias toward predicting less frequent words. “Suggesting a very common word like ‘and’ might be less helpful because it’s short and easy to type,” van Esch says. “So maybe showing a longer word is actually more useful, even if it’s less frequent.” Of course, a longer word is probably going to be more interesting as meme fodder.
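Both effects, the personal word list and the nudge toward longer words, can be pictured as adjustments to a ranking score. The sketch below is a guess at the general shape, not Gboard's actual formula: the weights, the logarithm, and the word counts are all assumptions chosen to make the effect visible.

```python
import math

def score(word, base_count, personal_count, length_weight, personal_weight=5):
    """Hypothetical score: frequency (with personal words boosted) plus a length bonus."""
    frequency = base_count + personal_weight * personal_count
    return math.log1p(frequency) + length_weight * len(word)

def rank(base, personal, length_weight=1.0, k=3):
    """Rank every word in the shared or personal list, highest score first."""
    words = set(base) | set(personal)
    ordered = sorted(
        words,
        key=lambda w: score(w, base.get(w, 0), personal.get(w, 0), length_weight),
        reverse=True,
    )
    return ordered[:k]

# Invented counts: a shared dictionary plus one user's on-device additions.
base = {"and": 1000, "have": 300, "surprise": 40}
personal = {"targareon": 2}

print(rank(base, personal, length_weight=0.0))  # raw frequency only
print(rank(base, personal, length_weight=1.0))  # longer words favored
```

With the length bonus switched off, "and" comes out on top; with it on, the longer and rarer words move ahead, which is roughly the trade-off van Esch describes.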

Finally, phones seem to choose different paths from the very beginning. Why are some people getting “I’ll be” while others get “I’ll have” or “I’ll let”? That part is probably not very exciting: The default Android keyboard presumably has slightly different predictions than the default iPhone keyboard, and third-party apps would also have slightly different predictions.

Whatever their provenance, the random juxtaposition of predictive text memes has become fodder for a growing genre of AI humor. Botnik Studios writes goofy songs using souped-up predictive keyboards and a lot of human tweaking. The blog AI Weirdness trains neural nets to do all sorts of ridiculous tasks, such as deciding whether a string of words is more likely to be a name from My Little Pony or a metal band. Darth Vader? 19 percent metal, 81 percent pony. Leia Organa? 96 percent metal, 4 percent pony. (I’m suddenly interpreting Star Wars in quite a new light.)

The combination of the customization and the randomness of the predictive text meme is compelling the way a BuzzFeed quiz or a horoscope is compelling—it gives you a tiny amount of insight into yourself to share, but not so much that you’re baring your soul. It’s also hard to get a truly terrible answer. In both cases, that’s by design.

You know how when you get a new phone and you have to teach it that, no, you aren’t trying to type “duck” and “ducking” all the time? Your keyboard deliberately errs on the conservative side. There are certain words that it just won’t try to complete, even if you get really close. After all, it’s better to accidentally send the word “public” when you meant “pubic” than the other way around.

This goes for sequences of words as well. Just because a sequence is common doesn’t mean it’s a good idea to predict it. “For a while, when you typed ‘I’m going to my Grandma’s,’ Gboard would actually suggest ‘funeral,’” van Esch says. “It’s not wrong, per se. Maybe this is more common than ‘my Grandma’s rave party.’ But at the same time, it’s not something that you want to be reminded about. So it’s better to be a bit careful.”

Users seem to prefer this discretion. Keyboards get roundly criticized when a sexual, morbid, or otherwise disturbing phrase does get predicted. It’s likely that a lot more filtering happens behind the scenes before we even notice it. Janelle Shane, the creator of AI Weirdness, experiences lapses in machine judgment all the time. “Whenever I produce an AI experiment, I’m definitely filtering out offensive content, even when the training data is as innocuous as My Little Pony names. There’s no text-generating algorithm I would trust not to be offensive at some point.”

The true goal of text prediction can’t be as simple as anticipating what a user might want to type. After all, people often type things about sex or death—according to Google Ngrams, “job” is the most common noun after “blow,” and “bucket” is very common after “kick the.” But I experimentally typed these and similar taboo-but-common phrases into my phone’s keyboard, and it never predicted them straightaway. It waited until I’d typed most of the letters of the final word, until I’d definitely committed to the taboo, rather than reminding me of weighty topics when I wasn’t necessarily already thinking about them. With innocuous idioms (like “raining cats and”), the keyboard seemed more proactive about predicting them.

Instead, the goal of text prediction must be to anticipate what the user might want the machine to think they might want to type. For mundane topics, these two goals might seem identical, but their difference shows up as soon as a hint of controversy enters the picture. Predictive text needs to project an aspirational version of a user’s thoughts, a version that avoids subjects like sex and death even though these might be the most important topics to human existence—quite literally the way we enter and leave the world.

We prefer the keyboard to balance raw statistics against our feelings. Sex Death Phone Keyboard is a pretty good name for my future metal band (and a very bad name for my future pony), but I can’t say I’d actually buy a phone that reminds me of my own mortality when I’m composing a grocery list or suggests innuendos when I’m replying to a work email.
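The behavior I observed, where a taboo word only shows up once most of its letters have been typed, could be sketched like this. The sensitive-word list, the 60 percent threshold, and the function names are assumptions for illustration; I have no inside knowledge of how any real keyboard implements its filtering.

```python
# Hypothetical list of words the keyboard is reluctant to volunteer.
SENSITIVE = {"funeral", "bucket"}

def allowed(candidate, typed_prefix, min_fraction=0.6):
    """Ordinary words always pass; sensitive ones need most letters typed first."""
    if candidate not in SENSITIVE:
        return True
    return len(typed_prefix) / len(candidate) >= min_fraction

def filter_suggestions(candidates, typed_prefix=""):
    """Keep candidates that match what has been typed and pass the sensitivity gate."""
    return [
        c for c in candidates
        if c.startswith(typed_prefix) and allowed(c, typed_prefix)
    ]

print(filter_suggestions(["funeral", "house", "birthday"]))   # nothing typed yet
print(filter_suggestions(["funeral", "fun"], "fun"))          # 3 of 7 letters
print(filter_suggestions(["funeral"], "funer"))               # 5 of 7 letters
```

The statistics still know that "funeral" is likely; the gate just makes the user commit before the keyboard says it out loud.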

The predictive text meme is comforting in a social media world that often leaps from one dismal news cycle to the next. The customizations make us feel seen. The random quirks give our pattern-seeking brains delightful connections. The parts that don’t make sense reassure us of human superiority—the machines can’t be taking over yet if they can’t even write me a decent horoscope! And the topic boundaries prevent the meme from reminding us of our human frailty. The result is a version of ourselves through the verbal equivalent of an Instagram filter, eminently shareable on social media.

Tech

0 notes
