#one processor for each particle.

Text

Particle Dreams, 1988 by Karl Sims

#video#old cgi#80s#1980s#This short computer animation from 1988 contains a collection of dream sequences created using 3D particle systems techniques. Behavior rul#a snowstorm#a tumultuous head#and a waterfall. A Connection Machine CM-2 computer was used to perform physical simulations on thousands of particles simultaneously#one processor for each particle.#dreamcore#vaporwave#cgi


Text

@fluffnflightillustrations is always asking me for pie crust tips and I never know what to say because I just..do it. I am there and the food processor is there, and then it is pie crust. Anyway, I was making a pie today and I decided to document the process for posterity.

This recipe is based on J. Kenji Lopez-Alt's food processor pie crust recipe. (He's the same guy who invented the vodka pie crust method, but he doesn't own the copyright to it, so he can't ever talk about it. Anyway, this one is fine.) I've made it a couple of times a year for, I don't know, over a decade.

Here are some facts about pie crust to keep in mind:

Flakiness is caused by pockets of butter surrounded by flour. That's the goal here.

Every pie crust recipe in existence includes dire warnings about how it will turn into particle board if you put in too much water. In one sense, yes, the goal is to use as little water as possible. On the other hand, if you're having trouble getting it to come together, just add some damn water. Every homemade pie crust I have ever made was a million times better than a store-bought pie crust, even if I did add 2T.

If your pie crust comes out bad or you don't notice a difference between store bought and homemade, there is no shame in store bought pie crusts! Life is about figuring out what is worth it to do from scratch and what isn't and that varies from person to person.

I am not a baking expert, I am just a girl who tries stuff s

HERE'S MY METHOD:

Ingredients:

2 1/2 cups (12.5 ounces; 350 grams) all-purpose flour

2 tablespoons (25 grams) sugar

1 teaspoon (5 grams) kosher salt

2 1/2 sticks (10 ounces; 280 grams) unsalted butter (kept in fridge), cut into 1/4-inch pats

6 tablespoons (3 ounces; 85 milliliters) cold water

tl; dr version:

1. Combine two thirds of flour with sugar and salt in the bowl of a food processor. Pulse twice to incorporate. Spread butter chunks evenly over surface. Pulse until no dry flour remains and dough just begins to collect in clumps, about 25 short pulses. Use a rubber spatula to spread the dough evenly around the bowl of the food processor. Sprinkle with remaining flour and pulse until dough is just barely broken up, about 5 short pulses. Transfer dough to a large bowl.

2. Sprinkle with water then using a rubber spatula, fold and press dough until it comes together into a ball. Divide ball in half. Form each half into a 4-inch disk. Wrap tightly in plastic and refrigerate for at least 2 hours before rolling and baking.

✨Verbose Version✨:

Getting Ready to Get Ready

The first thing I do is measure out the water in a glass measuring cup and stick it in the freezer.

Next, I cut my butter into pats, spread them out on a plate, and stick those in the freezer, too.

You're just trying to get everything cold, not freeze it. This should probably take about 10 minutes? I take this opportunity to clean up my work area and get out my food processor.

Okay, Let's Do It

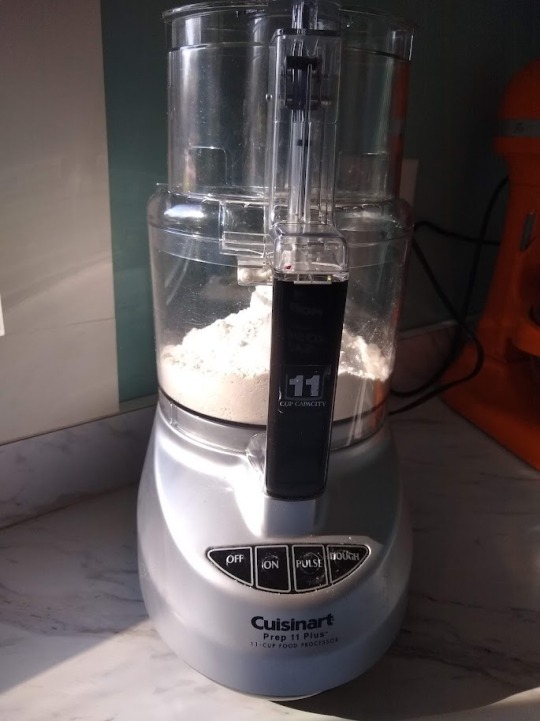

Here's my food processor. It is big and beautiful and I got it for free because a friend of mine who liked to buy himself things moved across the country and didn't want to take it. I think fondly of him whenever I use it.

Combine two thirds of flour (I did 9.5 oz) with sugar and salt in the bowl of a food processor. Pulse a few times to mix it all up. Did I mention that I always bake by weight? You should always bake by weight.

Spread butter chunks evenly over surface, and pulse. I do this in 3-4 rounds because I want the butter to get evenly covered in flour and not stick to other butter chunks. I toss a few in, pulse a few times, toss a few more, until they're all in there.

At this point, the recipe says "Pulse until no dry flour remains and dough just begins to collect in clumps, about 25 short pulses." First off, it takes a lot more than 25 for me. Second, it never really begins to collect in clumps, it just gets kinda sandy. It doesn't matter, just move on.

Use a rubber spatula to spread the dough evenly around the bowl of the food processor. Sprinkle with remaining flour and pulse until dough is just barely broken up, about 5 short pulses. Transfer dough to a large bowl.

Sprinkle with water then using a rubber spatula, fold and press dough until it comes together into a ball. I drip in some water, fold and press for a bit, drip in some more, fold and press again, etc. At first, I try to use the spatula (and not my hands) as much as possible. Towards the end I switch over to using my hands. It takes a while to start sticking together, but it will! Just keep pushing on it!

According to Kenji, you should not need to add any more water. I almost always need to add more water. You can put additional water in the freezer, if you want, but I just use room-temp. Today, it took 1 extra T. To be honest, I probably didn't need to, but ¯\_(ツ)_/¯

Divide ball into two. If I am making a double pie crust, I split them unevenly, because the bottom crust needs to be bigger than the top. Today, I'm making a pecan pie, which just needs a single crust, so I'm dividing it in half and saving one of the halves for later.

Form it into a rough circle and flatten. I wrap it loosely in plastic, and then roll it on the edge like a wheel, and flatten it further so I get a nice disk.

Refrigerate at least 2 hrs and up to 3 days. (Or you can freeze it for up to 3 months.)

That's the end of part one, part two will continue after the chilling.


Text

Autobot Jazz Week Day 1 - Behind the Visor

Prowl looked up from the battle reports at the sound of his office door opening and frowned when Jazz shuffled in. "You should be resting."

"You sound like Ratch'," Jazz replied, aiming for his usual cheer but falling short on account of the mess he was in. Ozone burn marks everywhere, armor dented and torn in places, temp patches covering one entire side, and visor shattered in half, leaving one optic bare. The only reason Ratchet would have allowed Jazz out of medical would be because he didn't have enough room.

...or maybe Ratchet hadn't allowed it, and Jazz just snuck off on his own when the medic was busy with others. They had just had an intense battle at Kappa Pass, securing the passage between Iacon and Kalis. The Decepticons had brought Bruticus Maximus, while Autobots from both Iacon and Kalis threw everything to defend the area and drive the Decepticons back south. Jazz had been in the thick of it, engaging the combiner directly, and it showed in every bit of his plating.

Prowl kept his disapproving stare until Jazz reached the couch for visitors and sank into it. "Has Ratchet discharged you?" he asked.

"I'm fine, Prowler," Jazz said, fiddling with his broken visor and coaxing it to come loose. Bits of reinforced crystals fell to the floor in glittering patterns. He wagged the unbroken end at Prowl. "And anyway, look who's talking. Optimus specifically told everyone those reports can wait. You're making the rest of us look bad by overachieving, Prowl, including Optimus himself."

Without the shield of his visor, Jazz's gaze was a hundred times more intense. Prowl shuddered lightly. "I was not in the center of the fighting."

"No, you were blowing out that powerful processor of yours trying to keep track of and micromanage everything. Ratchet won't be happy if he has to treat you for headache on top of everything just because you don't know how to wind down."

Prowl flared out his doorwings. "I have to keep track of everything, if we are to win the battles."

He'd worked surprisingly well with the lead strategist from Kalis, and Autobot casualties had been at a minimum as a result. He was proud of that, if nothing else.

"Ain't gonna help win the war if you burn out first," Jazz replied. He set aside the broken visor and fished out a replacement from his subspace.

Prowl watched Jazz examine the new visor critically, noticing tiny glints from shattered crystal particles still stuck near the saboteur's optics. Before Jazz could attempt to put on his visor, Prowl stood from his chair and strode around his desk. "Jazz, wait."

Jazz stilled as Prowl carefully brushed off the particles. Then Prowl took the visor and sat on the couch to get a better angle at lining up the edge with the connectors on the other's helm, making sure it was inserted in correctly. The visor, custom designed and built to be exact, fit smoothly and Prowl tried not to lament it when Jazz's optics went back to being hidden away.

The visor flickered as it integrated with Jazz's systems. He made minor adjustments for his comfort, then grinned.

"If I'd known all it took to get you away from your desk was to pretend I need help with this thing..."

Prowl gave a small smile of his own. "You are already too good at distracting me from work."

Jazz laughed. "Flatterer."

He shifted on his seat and leaned heavily on Prowl's shoulder, powering off his optics. "Well, I did come here to rest, actually, so let's see if I can distract you just by recharging."

Prowl started to protest, but Jazz's engines were already purring contentedly, and really he wasn't wrong about that headache.

Later, when Ratchet came looking for Jazz and to yell at Prowl for overclocking, he found the pair in deep recharge against each other on the couch. Tutting, he draped a medical blanket over them and left quietly.

#transformers#transformers fanfic#autobotjazzweek2023#autobot jazz week 2023#autobot jazz#autobot prowl#heron writes#better late than never#right?


Text

Digital Measurements vs Quantum Measurements

One hertz is one cycle per second. We measure the clock speed of your average processor today in gigahertz, with a practical speed limit of around 4 gigahertz.

That speed limit is why we have decided to expand the number of cores in a processor, and why we don't typically see processors above that outside of a liquid-cooled environment.

Your average standard processor has between 4 and 8 cores, with the capability to run twice as many simultaneously occurring threads, or two simultaneously occurring threads per individual core.

Your average piece of software, for comparison, usually runs single-threaded, while your 3D software (and Chrome) is by necessity multi-threaded in order to output the video portion. Typically, that software relies on GPUs, which are geared to run as many threads as possible, in order to produce at least 60 images per second, but it can utilize your CPU instead if your device doesn't have one.

When you have multiple cores and/or processors in an individual system, you're now relying on a different value: FLOPS (floating-point operations per second), which is so much higher in scale than your average CPU calculation, and requires measuring the output of many simultaneously operating parts. This means it may be lower than what you'd expect simply by adding them together.

Flops calculate simultaneously occurring floating-point operations.
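As a rough illustration of how clock speed and FLOPS relate, here is a sketch of the usual back-of-the-envelope peak estimate: cores times clock rate times floating-point operations per cycle. The inputs (8 cores, 4 GHz, 16 operations per cycle per core) are made-up assumptions, not figures for any real chip, and the function name is just for illustration. Sustained throughput on real workloads normally lands below this number.

```python
def peak_flops(cores: int, clock_hz: float, flops_per_cycle: int) -> float:
    """Theoretical peak: every core retires flops_per_cycle operations each clock tick."""
    return cores * clock_hz * flops_per_cycle

# Hypothetical chip: 8 cores, 4 GHz, 16 floating-point operations per cycle per core.
estimate = peak_flops(cores=8, clock_hz=4e9, flops_per_cycle=16)
print(f"{estimate / 1e9:.0f} GFLOPS theoretical peak")
```

The point of the sketch is that hertz alone doesn't give you FLOPS; you also need the core count and how much work each core does per cycle.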

Now Quantum mechanics is already the next step of technological evolution, but we haven't figured out how to measure it in a way that is useful yet. 1 qHertz for example; would this be the quantum processor's ability to do binary calculations? That would overall limit the quantum processor's ability since it's having to emulate a binary state.

Theoretically; one Quantum particle should be capable of doing 2 FLOP simultaneously. And the algorithms and computing we use at the quantum level are so far divorced from a Binary/Digital representation it would be hard to compare the two directly.

Even in the binary/digital world there is no direct observable correlation between Hertz and FLOPs, even though we know approximately more Hertz can do approximately more FLOPs.

<aside>I keep asking myself; are we sure we don't already have quantum computing? What if proprietary chips and corporate secrecy mean we already use qBits at the hardware level and everybody else just doesn't know it yet.</aside>

At the base state; a qBit is capable of storing the equivalent of many bits of data, and will be able to perform the equivalent of a teraflop of calculations on that one qBit per second.

But it's a single variable in contrast to our current average memory storage of 8 gigabytes that can be sub-divided into millions of separate variables.

72 qBits would allow for 144 variable declarations, every two variables being part of the same qBit and used in special ways that we can't do with regular bits.

Or to put it another way; a single-precision floating-point number takes 32 bits of information, and a double-precision floating-point number takes 64 bits of information.
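If you want to check those two sizes yourself, here is a small sketch using Python's standard `struct` module. The `<` prefix asks for the standard IEEE 754 sizes rather than whatever the local platform uses; the variable names are just for illustration.

```python
import struct

# "<f" is a 4-byte single-precision float, "<d" is an 8-byte double-precision float.
single_bits = struct.calcsize("<f") * 8
double_bits = struct.calcsize("<d") * 8

print(single_bits, double_bits)  # prints "32 64"
```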

At the minimum, one qBit can store at least 2 double precision floating point numbers (and each one of those numbers could theoretically be the equivalent of a triple or quadruple floating point in overall limitation.)

Therefore a single qBit can store between 128 bits and 512 bits (this is a conservative estimate). However, they're limited to how small they can be sub-divided into individual variables. By the time we get to MegaQBits, we'll be able to do so much more than we can currently do with bits it'll be absolutely no-contest.

However; there will be growing pains in Quantum Computing where we can't define as many variables as we can in Digital.


Photo

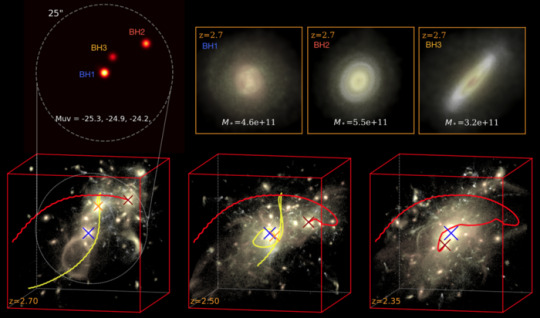

Rare quasar triplet forms most massive object in universe

Ultra-massive black holes are the most massive objects in the universe. Their mass can reach millions and billions of solar masses. Supercomputer simulations on TACC’s Frontera supercomputer have helped astrophysicists reveal the origin of ultra-massive black holes formed about 11 billion years ago.

“We found that one possible formation channel for ultra-massive black holes is from the extreme merger of massive galaxies that are most likely to happen in the epoch of the ‘cosmic noon,’” said Yueying Ni, a postdoctoral fellow at the Harvard–Smithsonian Center for Astrophysics.

Ni is the lead author of work published in The Astrophysical Journal (December 2022) that found ultra-massive black hole formation from the merger of triple quasars, systems of three galactic cores illuminated by gas and dust falling into a nested supermassive black hole.

Working hand-in-hand with telescope data, computational simulations help astrophysicists fill in the missing pieces on the origins of stars and exotic objects like black holes.

One of the largest cosmological simulations to date is called Astrid, co-developed by Ni. It's the largest simulation in terms of the particle, or memory, load in the field of galaxy formation simulations. “The science goal of Astrid is to study galaxy formation, the coalescence of supermassive black holes, and re-ionization over the cosmic history,” she explained. Astrid models large volumes of the cosmos spanning hundreds of millions of light years, yet can zoom in to very high resolution.

Ni developed Astrid using the Texas Advanced Computing Center’s (TACC) Frontera supercomputer, the most powerful academic supercomputer in the U.S., funded by the National Science Foundation (NSF). “Frontera is the only system that we performed Astrid from day one. It's a pure Frontera-based simulation,” Ni continued.

Frontera is ideal for Ni’s Astrid simulations because of its capability to support large applications that need thousands of compute nodes, the individual physical systems of processors and memory that are harnessed together for some of science’s toughest computation. “We used 2,048 nodes, the maximum allowable in the large queue, to launch this simulation on a routine basis. It's only possible on large supercomputers like Frontera,” Ni said.

Her findings from the Astrid simulations show something completely mind-boggling: the formation of black holes can reach a theoretical upper limit of 10 billion solar masses. “It’s a very computational challenging task. But you can only catch these rare and extreme objects with a large volume simulation,” Ni said.

“What we found are three ultra-massive black holes that assembled their mass during the cosmic noon, the time 11 billion years ago when star formation, active galactic nuclei (AGN), and supermassive black holes in general reach their peak activity,” she added.

About half of all the stars in the universe were born during cosmic noon. Evidence for it comes from multi-wavelength data of numerous galaxy surveys such as the Great Observatories Origins Deep Survey, where the spectra from distant galaxies tell about the ages of their stars, their star formation history, and the chemical elements of the stars within.

“In this epoch we spotted an extreme and relatively fast merger of three massive galaxies,” Ni said. “Each of the galaxy masses is 10 times the mass of our own Milky Way, and a supermassive black hole sits in the center of each galaxy. Our findings show the possibility that these quasar triplet systems are the progenitor of those rare ultra-massive black holes, after those triplets gravitationally interact and merge with each other.”

What’s more, new observations of galaxies at cosmic noon will help unveil the coalescence of supermassive black holes and the formation of the ultra-massive ones.

Data is rolling in now from the James Webb Space Telescope (JWST), with high resolution details of galaxy morphologies. “We're pursuing a mock-up of observations for JWST data from the Astrid simulation,” Ni said.

“In addition, the future space-based NASA Laser Interferometer Space Antenna (LISA) gravitational wave observatory will give us a much better understanding of how these massive black holes merge and/or coalesce, along with the hierarchical structure, formation, and the galaxy mergers along the cosmic history,” she added. “This is an exciting time for astrophysicists, and it's good that we can have simulation to allow theoretical predictions for those observations.”

Ni’s research group is also planning a systematic study of AGN host galaxies in general. “They are a very important science target for JWST, determining the morphology of the AGN host galaxies and how they are different compared to the broad population of the galaxy during cosmic noon,” she added.

“It’s great to have access to supercomputers, technology that allows us to model a patch of the universe in great detail and make predictions from the observations,” Ni said.

IMAGE: Supercomputer simulations on Frontera reveal the origins of ultra-massive black holes, the most massive objects thought to exist in the entire universe. Shown here is the quasar triplet system centered around the most massive quasar (BH1) and its host galaxy environment on the Astrid simulation. The red and yellow lines mark the trajectories of the other two quasars (BH2 and BH3) in the reference frame of BH1, as they spiral into each other and merge. CREDIT: DOI 10.3847/2041-8213/aca160


Text

Thinking I might do a sporadic series of posts on cool science and engineering things that sound/look like they should be from science fiction. So as the first entry:

Sounds like Sci-Fi

Quantum Computers

Where a regular computer might use bits that are functionally either a 1 or a 0 to store and process data, a quantum computer instead uses qubits. Like a bit, a qubit can have a one or zero value but can also have a superposition state, not a one or a zero. Think of the famous Schrödinger's cat thought experiment: the cat can be considered both dead and alive until observed. The third state of a qubit is a bit like that, both a one and a zero and neither a one nor a zero at the same time. An additional bit of quantum magic is particle entanglement, whereby two qubits can be linked to affect each other based on a relationship. Due to this, quantum computers are really good at solving complex relationships such as protein folding or mapping potential routes of subatomic particles.
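For anyone who likes to see the idea as numbers, here is a tiny sketch of superposition and entanglement in plain Python. It is only a toy model on a regular computer, assuming the standard textbook picture where a state is a list of amplitudes and the chance of each measurement result is the squared magnitude of its amplitude. The names (`probabilities`, `bell_state`) are mine, not from any quantum library.

```python
import math

def probabilities(state):
    """Measurement probabilities are the squared magnitudes of the amplitudes."""
    return [abs(amplitude) ** 2 for amplitude in state]

h = 1 / math.sqrt(2)

# One qubit in an equal superposition: amplitudes for the outcomes 0 and 1.
superposition = (h, h)
single_probs = probabilities(superposition)
print(single_probs)  # each outcome comes out at roughly 0.5

# Two entangled qubits (a Bell state): amplitudes for outcomes 00, 01, 10, 11.
bell_state = (h, 0.0, 0.0, h)
pair_probs = probabilities(bell_state)
for outcome, p in zip(["00", "01", "10", "11"], pair_probs):
    print(outcome, round(p, 3))
```

In the entangled pair, only 00 and 11 ever show up, so reading one qubit tells you what the other will be. That linked behaviour is the relationship mentioned above.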

The computer itself is pretty much a few chips; however, as it requires a superconductor to work, the whole system needs to be supercooled to about a hundredth of a degree above absolute zero (the lowest possible temperature), and the cooling system makes the whole thing about the size of a car. Most of the pipes seen in this picture are for coolant. I believe this picture shows Google’s Sycamore processor. It currently has 53 qubits and can solve in 200 seconds problems previously thought to take 10,000 years to process.
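To put those two numbers side by side, here is a quick bit of arithmetic. It assumes a year of 365.25 days and simply takes the 10,000 year and 200 second figures at face value.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60

classical_seconds = 10_000 * SECONDS_PER_YEAR  # the "10,000 years" estimate
quantum_seconds = 200                          # the reported Sycamore run time

speedup = classical_seconds / quantum_seconds
print(f"about {speedup:.2e} times faster")
```

That works out to a speedup on the order of a billion.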

#SLSF#SoundslikeSciFi#Quantum Computers#Might add a little info like my fact of the day series if that interests my vast audience#Send me a message if you would like to see more info on posts and I shall make edits#I added an edit!


Text

Altair EDEM on Google Cloud Unlocks Billion-Particle Models

Google Cloud and Altair EDEM

An essential skill for manufacturing, industrial, and life science companies is modelling the interactions between bulk and granular materials and containers, machinery, and each other. The more realistic these simulations get, the less time and money businesses need to spend refining their designs and prototypes. Recently, Altair and Google Cloud worked together to see how large a simulation Altair EDEM could run on a single Google Cloud virtual machine. The outcomes were revolutionary.

Software for the Discrete Element Method (DEM)

EDEM is high-performance software for simulating bulk and granular materials. Built on the discrete element method (DEM), EDEM simulates and analyses the behaviour of a wide range of materials, including coal, mined ores, soils, fibres, grains, tablets, powders, and more, with speed and accuracy.

Engineers may get vital insight into how those materials will behave with their equipment under various operating and process settings by using EDEM modelling. It may be used in conjunction with other CAE tools or on its own.

Using EDEM, top businesses in the heavy equipment, off-road, mining, steel, and process manufacturing sectors can assess equipment performance, optimise workflows, and comprehend and forecast granular material behaviours.
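To give a feel for what a discrete element calculation does, here is a minimal sketch: a single sphere dropped onto a floor, with the contact modelled as a linear spring and dashpot and the motion advanced in small time steps. This is a generic textbook-style toy and an assumption for illustration only; it does not represent EDEM's actual contact models, solver, or API, and every parameter value is made up.

```python
def simulate_drop(radius=0.01, mass=0.005, height=0.1,
                  stiffness=1.0e4, damping=3.0, dt=1.0e-5, t_end=1.0):
    """Drop one sphere onto a floor at z = 0; return final centre height and velocity."""
    g = 9.81
    z, v = height, 0.0  # centre height (m) and vertical velocity (m/s)
    for _ in range(int(t_end / dt)):
        overlap = radius - z  # positive while the sphere presses into the floor
        force = -mass * g     # gravity always acts
        if overlap > 0.0:
            # Linear spring pushes the sphere out; the dashpot removes energy.
            force += stiffness * overlap - damping * v
        # Semi-implicit Euler: update velocity first, then position.
        v += force / mass * dt
        z += v * dt
    return z, v

final_z, final_v = simulate_drop()
print(f"final height {final_z:.5f} m, final velocity {final_v:.5f} m/s")
```

With these made-up values the sphere bounces a few times and settles with its centre about one radius above the floor. A production DEM code applies the same idea to every contact between millions of particles and the surrounding geometry, which is where the computing cost comes from.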

GPUs drive simulations for EDEM

Altair EDEM is a high-performance software programme for simulating bulk and granular materials. Its discrete element method (DEM) calculations simulate and analyse the behaviour of mined ores, soils, fibres, grains, tablets, powders, and more, quickly and precisely.

Several advancements in model size have been made as a result of industry demands for EDEM to operate at even larger scale. Two decades ago, the maximum was 200,000 particles, and a simulation could take up to ten days to run. That increased swiftly to one million particles, ten million, and finally twenty million. Today, completing a simulation with one billion particles in a few days is the holy grail.

Since these simulations necessitate a significant amount of processing power, as one might anticipate, the use of graphics processing units (GPUs) has significantly increased simulation speed and efficiency. GPUs are extremely effective at handling the massive volumes of data and intricate computations required for EDEM simulations because they are specifically made to handle parallel processing tasks.

The EDEM Altair experiment running on Google Cloud

EDEM was initially intended to be a desktop application and has only ever worked with shared-memory architectures on a single host. Unlike distributed memory, which can scale across multiple hosts, this restricts access to processors. EDEM is unable to benefit from the scalability and flexibility of distributed memory without a significant rebuild. On the other hand, multi-GPU systems provide a way to boost processing capacity without switching to distributed memory programming models.

The partnership between Altair and Google Cloud had two objectives: to simulate the largest system that could be created, with one billion particles, and to collect data in order to create estimates for mapping a specific type of hardware to a potential simulation scale. In May 2023, Google Cloud announced that its A3 virtual machines (VMs) with NVIDIA H100 GPUs were available. This level of simulation is made feasible by the A3 VMs, which combine modern CPUs with NVIDIA H100 Tensor Core GPUs and also provide significant network upgrades and enhanced host memory.

Two simulation scenarios were conducted by Altair and Google Cloud on a single A3 virtual machine (VM) equipped with eight NVIDIA H100 GPUs, each having 80 GB of GPU memory, with 3.6 TB/s of bisection bandwidth between them. A 4th Generation Intel Xeon Scalable processor and 2 TB of host memory were also included in the system.

The filling test was selected as the test scenario. This useful simulation examines the effects of particle behaviour in an actual industrial environment, where particles are dropped into a container from a moving plate. In this simulation, single- and multi-sphere particles were employed.

The combined filling test simulation with single- and multi-sphere particles scales well across the eight NVIDIA H100 GPUs that are available, reaching a total particle count of one billion.
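For a sense of scale, the figures above allow a rough memory budget. The sketch below assumes decimal gigabytes, particles spread evenly across the eight GPUs, and all GPU memory available for particle data; none of these assumptions come from Altair or Google Cloud, and a real run also needs memory for geometry, contact lists, and the solver itself.

```python
gpus = 8
memory_per_gpu_bytes = 80 * 10**9  # 80 GB per H100, taken as decimal gigabytes
particles = 10**9                  # one billion particles

total_gpu_memory = gpus * memory_per_gpu_bytes
particles_per_gpu = particles // gpus
bytes_per_particle = total_gpu_memory // particles

print(particles_per_gpu)   # 125000000 particles on each GPU
print(bytes_per_particle)  # 640 bytes of GPU memory per particle at most
```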

Thanks to this breakthrough in billion-particle simulation, manufacturing industries can now assess equipment performance, optimise processes on a never-before-seen scale, and better understand and predict granular material behaviours. It will also enable future simulations with greater fidelity and larger scale.

Key points are summarised below:

Importance of Bulk Material Simulation

The behaviour of these materials must be simulated in bulk because it affects many different industries, including manufacturing, life sciences, and industrial processes.

Software called Altair EDEM

Altair EDEM was created especially for high-performance simulation of granular and bulk materials.

Need for Greater Scale

The industry has been requesting that EDEM be able to manage even bigger and more intricate simulations.

Cooperation Goal

Two primary objectives drove the collaboration between Altair EDEM and Google Cloud:

Run the biggest simulation you can, aiming for a billion particles as a benchmark.

Collect information to determine the possible simulation scale that can be reached with various hardware setups.

Making Use of Google Cloud Hardware

The secret to the success was to leverage the A3 virtual machines (VMs) on Google Cloud, which were outfitted with potent NVIDIA H100 GPUs.

A3 virtual machines offer the base for general-purpose computing.

The processing power for high-performance computations is provided by H100 GPUs.

The article discusses utilising eight H100 GPUs, but it makes no mention of the precise A3 VM configuration.

Read more on Govindhtech.com


Text

Lecithination System manufacturers in India: Sahiba Fabricators

In the vibrant landscape of India's dairy industry, achieving top-notch whole milk powder (WMP) production requires cutting-edge technology and unwavering commitment to quality. Sahiba Fabricators, a leading name in dairy farm equipment, emerges as a champion, spearheading the charge with its state-of-the-art Lecithination Systems, meticulously designed to empower Indian WMP manufacturers to push the boundaries of excellence.

Lecithination: The Secret Weapon for Superior WMP

At the core of Sahiba Fabricators' innovation lies the powerful process of lecithination. This technique involves coating milk powder particles with a specially formulated wetting agent based on lecithin dissolved in a suitable oil, such as butter oil or another low-melting oil. This coating, applied directly as a spray during vigorous fluidization within a vibrating fluid bed dryer, works its magic to elevate the WMP game.

Unlocking a Host of Benefits:

Partnering with the Sahiba Fabricators Lecithination System unlocks a treasure trove of advantages for India's dairy processors:

Enhanced Flowability: Say goodbye to sluggish powder movement! The lecithin coating acts as a lubricant, ensuring smooth handling and flow throughout production and packaging, optimizing efficiency and saving precious time.

Improved Wettability: Forget about lumpy messes! Lecithination facilitates rapid water absorption upon reconstitution, resulting in instant milk dispersion and a consistently smooth texture, exceeding consumer expectations every time.

Extended Shelf Life: Breathe easy knowing your WMP stays fresh. The oil-based coating acts as a protective shield, minimizing moisture absorption and oxidation, extending shelf life significantly, reducing wastage and boosting profitability.

Preserved Sensory Delights: Savor the taste of perfection! Lecithination safeguards the natural flavor and aroma of the milk, preventing undesirable taste or odor development during storage, ensuring every sip is a delight.

Sahiba: Innovation beyond Compare

But what truly sets Sahiba Fabricators apart is its unwavering commitment to superior engineering and meticulous design:

Precise Spray Control: Every particle gets its fair share of magic! The system boasts cutting-edge technology that ensures uniform and controlled spraying of the wetting agent, guaranteeing consistent coating for each and every powder particle.

Optimal Fluidization: Experience the perfect dance of air and powder! The vibrating fluid bed dryer creates the ideal environment for vigorous particle movement, promoting efficient coating and uniform drying, leaving no room for compromise.

Built to Last: Quality never takes a backseat at Sahiba. The system is meticulously crafted with high-quality materials and rigorously tested, delivering exceptional performance and enduring reliability, empowering you to focus on what matters most - making the best WMP in India.

Embrace the Future of WMP Production:

By integrating the Sahiba Fabricators Lecithination System into your production lines, you're not just investing in technology; you're investing in a paradigm shift. Witness a dramatic transformation in your WMP output with enhanced quality, increased efficiency, and a longer shelf life, paving the way for greater profitability and a dominant position in the Indian market.

So, let the lecithination revolution begin! Choose Sahiba Fabricators, the leading Lecithination System manufacturer in India, and elevate your WMP production to new heights. Experience the difference, one perfectly coated particle at a time.

For more information on Sahiba Fabricators Lecithination Systems and how they can revolutionize your WMP production, contact Sahiba Fabricators today!

#dairy products#cheese#ghee#lecithinatio#manufacturer#manufacturer in india#dairy tank#dairy equipments#spraydryer


Text

The Essential Guide to F&D Machines, Fluid Bed Dryer, and Fluid Bed Processor

In the world of pharmaceuticals, food processing, and chemical industries, precision and efficiency are paramount. Critical equipment in these fields includes F&D machines, fluid bed dryers, and fluid bed processors. These versatile machines play a crucial role in various manufacturing processes, and understanding their operation is vital. In this comprehensive guide, we'll delve into the world of F&D machines, fluid bed dryers, and fluid bed processors, shedding light on their functions, applications, and benefits.

Understanding F&D Machines

F&D Machines (F&D stands for Filter and Dry) are fundamental equipment used in the pharmaceutical industry for separating solids from liquids and drying wet substances. They are also known as Filter Dryers and are indispensable for various processes such as crystallization, separation, and drying of active pharmaceutical ingredients (APIs).

Key Features of F&D Machines:

Dual Functionality:

F&D machines combine filtration and drying in a single unit, reducing the need for multiple equipment and minimizing product handling.

Closed System:

These machines are often designed as closed systems, which help maintain a sterile environment and minimize contamination risks in pharmaceutical manufacturing.

Precise Control:

F&D machines offer precise control over temperature, pressure, and agitation, ensuring optimal conditions for each specific process.

Applications of F&D Machines:

Pharmaceutical Industry:

F&D machines are widely used for drying and filtering APIs, intermediates, and final products.

Chemical Industry:

They find applications in the production of specialty chemicals and fine chemicals.

Food and Beverage Industry:

F&D machines are used for processes like concentration and separation in the production of food ingredients.

Exploring Fluid Bed Dryer

Fluid bed dryers are versatile machines used for drying a wide range of solid materials in granular, powder, or crystalline form. They operate on the principle of fluidization: air or gas is passed upward through a perforated plate supporting the bed, suspending and fluidizing the particles.
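To make the fluidization principle concrete, here is a rough sketch of how the gas velocity at which a bed starts to fluidize might be estimated. It uses the Wen and Yu correlation, a common textbook approximation that is not mentioned in the guide itself. The particle size, densities, and air properties are illustrative assumptions, not data for any particular dryer.

```python
import math

def minimum_fluidization_velocity(d_p, rho_p, rho_g=1.2, mu=1.8e-5, g=9.81):
    """Estimate the superficial gas velocity (m/s) at which a bed begins to fluidize.

    Assumes the Wen and Yu correlation:
        Re_mf = sqrt(33.7**2 + 0.0408 * Ar) - 33.7
    d_p   : particle diameter in metres (assumed value)
    rho_p : particle density in kg/m^3 (assumed value)
    rho_g : gas density in kg/m^3 (default is roughly ambient air)
    mu    : gas dynamic viscosity in Pa*s (default is roughly ambient air)
    """
    # Archimedes number: ratio of gravitational to viscous forces on a particle
    archimedes = d_p**3 * rho_g * (rho_p - rho_g) * g / mu**2
    # Reynolds number at minimum fluidization, from the correlation
    re_mf = math.sqrt(33.7**2 + 0.0408 * archimedes) - 33.7
    # Convert the Reynolds number back to a velocity
    return re_mf * mu / (rho_g * d_p)

# Hypothetical powder: 200 micron particles with a density of 1500 kg/m^3
u_mf = minimum_fluidization_velocity(d_p=200e-6, rho_p=1500.0)
print(f"Estimated minimum fluidization velocity: {u_mf:.4f} m/s")
```

For this hypothetical powder the estimate comes out at about 0.02 m/s. Larger or denser particles need a higher gas velocity, which is one reason airflow is tuned per product.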

Key Features of Fluid Bed Dryer:

Uniform Drying:

Fluidization ensures uniform drying and prevents clumping or agglomeration of particles.

Rapid Drying:

They are known for their fast drying times, making them ideal for heat-sensitive materials.

Easy Scale-up:

Fluid bed dryers are easily scalable, allowing for efficient production in various batch sizes.

Applications of Fluid Bed Dryer:

Pharmaceutical Industry:

They are used for drying granules, powders, and tablets in pharmaceutical production.

Food Industry:

Fluid bed dryers are employed in the food industry for drying fruits, vegetables, and cereals.

Chemical Industry:

They play a vital role in drying and cooling chemical products.

The Role of Fluid Bed Processor

Fluid bed processors, often referred to as fluid bed coaters or granulators, are specialized machines designed to apply coatings, granulate particles, or agglomerate materials. They are closely related to fluid bed dryers but serve different purposes.

Key Features of Fluid Bed Processor:

Coating Capabilities:

Fluid bed processors can apply uniform coatings to particles, enhancing their properties or providing controlled release.

Granulation and Agglomeration:

They are used to create granules or agglomerates, improving the flow and compressibility of powders.

Customizable:

Fluid bed processors offer flexibility in adjusting process parameters to achieve specific product characteristics.

Applications of Fluid Bed Processor:

Pharmaceutical Industry:

These processors are used for controlled-release drug formulations, taste masking, and tablet coating.

Food Industry:

Fluid bed processors play a role in coating food products with flavors, vitamins, or protective layers.

Advantages of F&D Machines, Fluid Bed Dryer, and Fluid Bed Processor

Now that we've explored these essential machines, let's delve into the advantages they offer to various industries:

Improved Product Quality:

These machines enable precise control over process parameters, resulting in consistent product quality.

Efficiency and Cost Savings:

F&D machines and fluid bed equipment reduce processing time and energy consumption, leading to cost savings.

Reduced Contamination Risks:

Closed-system designs minimize the risk of contamination, making them ideal for pharmaceutical and food applications.

Versatility:

Fluid bed dryers and processors can handle a wide range of materials and processes, making them versatile in different industries.

Scale-up Capabilities:

Easy scalability ensures these machines can adapt to varying production requirements.

Tips for Choosing and Using F&D Machines, Fluid Bed Dryer, and Fluid Bed Processor

When selecting and operating these machines, there are several considerations to keep in mind:

Material Compatibility:

Ensure that the equipment is compatible with the material you intend to process.

Process Validation:

In pharmaceutical applications, validate processes to comply with regulatory standards.

Maintenance and Cleaning:

Regular maintenance and cleaning are essential to prevent cross-contamination and ensure optimal performance.

Conclusion:

F&D machines, fluid bed dryers, and fluid bed processors are indispensable tools in pharmaceuticals, food processing, and the chemical industry. Their ability to efficiently handle various processes, maintain product quality, and reduce contamination risks makes them valuable assets in manufacturing. By understanding their features, applications, and advantages, manufacturers can get the most out of these machines.

Incorporating F&D machines, fluid bed dryers, or fluid bed processors into your manufacturing workflow can lead to increased efficiency, cost savings, and improved product quality. As technology continues to advance, these machines are likely to evolve further. Whether you're in pharmaceuticals, food processing, or the chemical industry, adopting these technologies can help you stay competitive and meet the growing demands of your market.

0 notes

Text

Different Types of Mixer Grinders Available in the USA

Mixer grinders are a must-have appliance in every kitchen. They are used to grind spices, make pastes and blend ingredients, making them a great addition to any kitchen. With the advancement of technology, mixer grinders have become more efficient, powerful and versatile. In the US, there are many different types of mixer grinders available, each with its own unique features and functions.

The most popular type of Mixer Grinders in USA is the stand mixer grinder. These grinders consist of a large base and a bowl that can be filled with ingredients. The ingredients are then mixed together using either a wire whisk or a dough hook. Stand mixer grinders are ideal for making doughs, blending batters and mixing ingredients.

Another popular type of mixer grinder is the hand mixer grinder. These grinders consist of a small motor and two metal blades. Hand mixer grinders are perfect for smaller tasks such as grinding spices, blending ingredients or making purees. They are also great for making sauces, dressings and other liquid foods.

The third type of Mixer Grinders in USA is the immersion mixer grinder. These grinders have a long, thin handle and a blade at the end. The blade is immersed in the ingredients and then the handle is rotated to mix the ingredients. Immersion mixer grinders are ideal for pureeing, blending and chopping foods.

Finally, the fourth type of mixer grinder is the food processor mixer grinder. These grinders consist of a large bowl and a powerful motor. The motor is used to spin a blade or blades to quickly chop, puree, mix or blend ingredients. Food processor mixer grinders are perfect for making soups, sauces, pestos, dips and other food items.

No matter which type of mixer grinder you choose, you will be sure to find one that suits your needs and budget. With so many different types of mixer grinders available in the US, you can easily find the perfect one for your kitchen.

Having a mixer grinder in your kitchen can make food preparation much easier. To get the maximum benefit out of your mixer grinder, there are certain tips you should follow.

Firstly, always use the mixer grinder for the purpose it is meant for, i.e. grinding and blending food items. Do not use it to chop hard items such as bones or ice cubes, as this can damage the blades of the grinder.

Secondly, do not overfill the mixer grinder with ingredients, as this can cause them to stick to the sides of the jar or blades, resulting in reduced efficiency.

Thirdly, do not run the mixer grinder continuously for more than a few minutes. This can cause the motor to overheat, resulting in damage to the appliance and the ingredients.

Finally, make sure to clean and maintain the mixer grinder regularly to prevent the buildup of food particles, which can decrease its efficiency and cause it to malfunction.

By following these simple tips, you can get the most out of your mixer grinder and enjoy all the convenience it brings to your kitchen.

#Kitchen appliances online in USA#Buy Kitchen Accessories Online#Online Kitchen Appliances in USA#Buy pressure cooker online#Heritage Steel Cookware Set

0 notes

Text

IBM Says It's Made a Big Breakthrough in Quantum Computing

Scientists at IBM say they've developed a method to manage the unreliability inherent in quantum processors, possibly providing a long-awaited breakthrough toward making quantum computers as practical as conventional ones, or even more so.

The advancement, detailed in a study published in the journal Nature, comes nearly four years after Google eagerly declared "quantum supremacy" when its scientists claimed they demonstrated that their quantum computer could outperform a classical one.

Though still a milestone, those claims of "quantum supremacy" didn't exactly pan out. Google's experiment was criticized as having no real world merit, and it wasn't long until other experiments demonstrated classical supercomputers could still outpace Google's.

IBM's researchers, though, sound confident that this time the gains are for real.

"We're entering this phase of quantum computing that I call utility," Jay Gambetta, an IBM Fellow and vice president of IBM Quantum Research, told The New York Times. "The era of utility."

At the risk of seriously dumbing down some marvelous, head-spinning science, here's a quick rundown on quantum computing.

Basically, it takes advantage of two principles of quantum mechanics. The first is superposition, the ability for a single particle, in this case quantum bits or qubits, to be in two separate states at the same time. Then there's entanglement, which enables two particles to share the same state simultaneously.

These spooky principles allow a far smaller number of qubits to rival the processing power of regular bits, which can only be a binary one or zero. Sounds great, but at the quantum level, particles exist in uncertain states, giving rise to a pesky randomness known as quantum noise.
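For readers who want to see the two principles in numbers, here is a minimal sketch that simulates one and two qubits as plain state vectors with NumPy. It is a classroom-style illustration, not how a quantum processor works internally, and the variable names are made up for this example.

```python
import numpy as np

# A single qubit starting in the state |0>
ket0 = np.array([1.0, 0.0])

# The Hadamard gate puts |0> into an equal superposition of |0> and |1>
hadamard = np.array([[1.0, 1.0], [1.0, -1.0]]) / np.sqrt(2)
plus = hadamard @ ket0
probs_single = np.abs(plus) ** 2  # measurement probabilities for 0 and 1

# A CNOT gate (first qubit controls the second) entangles the pair
cnot = np.array([
    [1.0, 0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
    [0.0, 0.0, 1.0, 0.0],
])
bell = cnot @ np.kron(plus, ket0)
probs_pair = np.abs(bell) ** 2  # probabilities for outcomes 00, 01, 10, 11

# A full state vector for n qubits needs 2**n complex amplitudes
amplitudes_127 = 2 ** 127

print("single qubit:", probs_single)
print("entangled pair:", probs_pair)
print("amplitudes for 127 qubits:", amplitudes_127)
```

In the entangled pair only the outcomes 00 and 11 have nonzero probability, so measuring one qubit fixes the result for the other. The last line hints at why simulating 127 qubits exactly on a classical machine is out of reach: the state vector has about 1.7 × 10^38 entries.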

Managing this noise is key to getting practical results from a quantum computer. A slight change in temperature, for example, could cause a qubit to change state or lose superposition.

This is where IBM's new work comes in. In the experiment, the company's researchers used a 127-qubit IBM Eagle processor to calculate what's known as an Ising model, simulating the behavior of 127 magnetic, quantum-sized particles in a magnetic field, a problem that has real-world value but, at that scale, is far too complicated for classical computers to solve.

To mitigate the quantum noise, the researchers, paradoxically, actually introduced more noise, and then precisely documented its effects on each part of the processor's circuit and the patterns that arose.

From there, the researchers could reliably extrapolate what the calculations would have looked like without noise at all. They call this process "error mitigation."
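The idea of adding noise and extrapolating back to zero can be sketched with a toy model. Everything below is an assumption made for illustration: the "device" is just an exponential decay with a made-up rate, and the fit is a simple log-linear regression. IBM's actual procedure is described in the Nature paper and is not reproduced here.

```python
import numpy as np

IDEAL_VALUE = 1.0   # hypothetical noiseless result we pretend not to know
DECAY_RATE = 0.3    # hypothetical noise strength of the toy device

rng = np.random.default_rng(0)  # seeded so the example is repeatable

def noisy_expectation(noise_scale):
    """Toy device: the signal decays exponentially as noise is amplified,
    with a little random measurement scatter added on top."""
    signal = IDEAL_VALUE * np.exp(-DECAY_RATE * noise_scale)
    return signal + rng.normal(0.0, 0.005, size=np.shape(noise_scale))

# Scale 1.0 is the device's native noise; larger values mean noise was added on purpose
scales = np.array([1.0, 1.5, 2.0, 2.5])
measured = noisy_expectation(scales)

# Fit log(measured) as a straight line in the noise scale, then read off
# the intercept, which is the fitted value at zero noise
slope, intercept = np.polyfit(scales, np.log(measured), 1)
mitigated = float(np.exp(intercept))
unmitigated = float(measured[0])

print(f"raw result at native noise: {unmitigated:.3f}")
print(f"extrapolated to zero noise: {mitigated:.3f}")
```

In this toy setup the extrapolated value lands much closer to the ideal result than the raw measurement does. The catch is that the extrapolation is only as good as the assumed noise model, which is why careful characterization of the noise matters.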

There's just one nagging problem. Since the calculations the IBM quantum processor performed were at such a complicated scale, a classical computer doing the same calculations would also run into uncertainties.

But because other experiments showed that their quantum processor produced more accurate results than a classical one when simulating a smaller, but still formidably complex Ising model, the researchers say there's a good chance their error-mitigated findings are correct.

"The level of agreement between the quantum and classical computations on such large problems was pretty surprising to me personally," co-author Andrew Eddins, a physicist at IBM Quantum, said in a lengthy company blog post. "Hopefully it’s impressive to everyone."

As promising as the findings are, it's "not obvious that they've achieved quantum supremacy here," co-author Michael Zaletel, a UC Berkeley physicist, told the NYT.

Further experiments will need to corroborate that the IBM scientists' error mitigation techniques would not produce the same, or even better, results in a classical processor calculating the same problem.

In the meantime, the IBM scientists see their error mitigation as a stepping stone to an even more impressive process of error correction, which could be what finally ushers in an age of "quantum supremacy." We'll be watching.

More on quantum computing: Researchers Say They've Come Up With a Blueprint for Creating a Wormhole in a Lab

The post IBM Says It's Made a Big Breakthrough in Quantum Computing appeared first on Futurism.

0 notes

Text

Working Principle of Grinding Machines

Most mechanical grinding machines work by using an abrasive wheel to remove material from the workpiece. The abrasive wheel is typically made of diamond or aluminum oxide and is rotated at high speed. The abrasive particles on the wheel do the actual cutting, removing material from the workpiece.

There are many kinds of mechanical grinding machines, each with its own particular wheel arrangement. The most common type is the surface grinder, which is used to remove material from flat surfaces.

Another common type is the cylindrical grinder, which is used to remove material from cylindrical surfaces.

The grinding process can be very precise and produce very smooth finishes. However, it can also be time-consuming and expensive, depending on the type of grinding machine and the materials being used.

Abrasive conditions are determined by the type of material being worked, the type of abrasive being used, the speed of the abrasive, and the amount of pressure being applied.

The right abrasive condition for each application must be determined experimentally, and the following general rules apply:

Abrasive materials harder than the workpiece material are used for grinding.

Abrasive materials softer than the workpiece material are used for polishing.

Abrasive materials with a Mohs hardness of 9 or 10 are used for lapping.

The coarser the abrasive, the higher the speed and the lower the pressure.

The finer the abrasive, the lower the speed and the higher the pressure.

Abrasive materials with a low friability are used for grinding, and those with a high friability are used for polishing.

If the wrong abrasive condition is used, the result will be poor-quality work, excess wear on the abrasive, and excessive heat generation.

1 note

·

View note

Quote

An international team of scientists claim to have found a way to speed up, slow down, and even reverse the clock of a given system by taking advantage of the unusual properties of the quantum world, Spanish newspaper El País reports.

In a series of six papers, the team from the Austrian Academy of Sciences and the University of Vienna detailed their findings. The familiar laws of physics don't map intuitively onto the subatomic world, which is made up of quantum particles called qubits that can technically exist in more than one state simultaneously, a phenomenon known as quantum superposition.

Now, the researchers say they've figured out how to turn these quantum particles' clocks forward and backward.

"In a theater, [classical physics], a movie is projected from beginning to end, regardless of what the audience wants," Miguel Navascués, a researcher at the Austrian Academy of Sciences' Institute of Quantum Optics and Quantum Information who worked on the research, told El País.

"But at home [the quantum world], we have a remote control to manipulate the movie," he added. "We can rewind to a previous scene or skip several scenes ahead."

"We have made science fiction come true!" the researcher exclaimed.

By developing a "rewind protocol," the team says they were able to revert an electron to a previous state. In experiments, they say they were able to demonstrate the use of a quantum switch to revert a photon to its original state before passing through a crystal.

While it's an exciting prospect, scaling up the technique could prove extremely difficult, if not impossible.

"If we could lock a person in a box with zero external influences, it would be theoretically possible," Navascués told El País. "But with our currently available protocols, the probability of success would be very, very low."

And there's an even bigger catch as well.

"Also, the time needed to complete the process depends on the amount of information the system can store," Navascués added. "A human being is a physical system that contains an enormous amount of information. It would take millions of years to rejuvenate a person for less than a second, so it doesn’t make sense."

Besides, the system is only able to revert the state of a given particle. To speed up time, though, the researchers have an ace up their sleeves.

"We discovered that you can transfer evolutionary time between identical physical systems," Navascués explained. "In a year-long experiment with ten systems, you can steal one year from each of the first nine systems and give them all to the tenth."

Instead of recreating "Back to the Future," the researchers see more mundane practical applications of their discovery. For instance, qubit states of a quantum processor could be reversed, effectively allowing researchers to undo errors during their development.

https://futurism.com/scientists-reverse-time-quantum

0 notes

Text

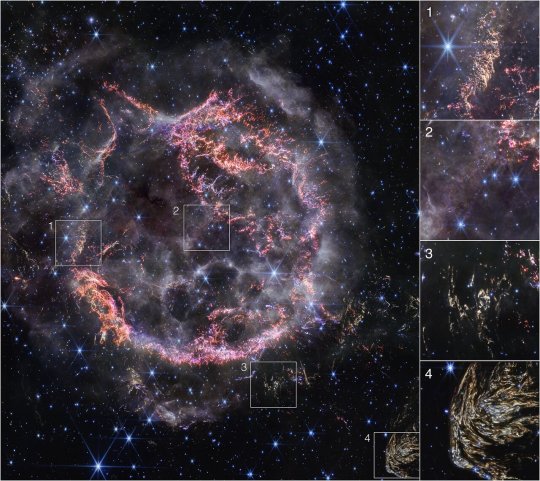

Researchers stunned by Webb’s new high-definition look at exploded star

Like a shiny, round ornament ready to be placed in the perfect spot on the holiday tree, supernova remnant Cassiopeia A (Cas A) gleams in a new image from the NASA/ESA/CSA James Webb Space Telescope. However, this scene is no proverbial silent night — all is not calm.

Webb’s NIRCam (Near-Infrared Camera) view of Cas A displays a very violent explosion at a resolution previously unreachable at these wavelengths. This high-resolution look unveils intricate details of the expanding shell of material slamming into the gas shed by the star before it exploded.

Cas A is one of the best-studied supernova remnants in all the cosmos. Over the years, ground-based and space-based observatories, including the NASA/ESA Hubble Space Telescope, have collectively assembled a multiwavelength picture of the object’s tattered remains.

However, astronomers have now entered a new era in the study of Cas A. In April 2023, Webb’s MIRI (Mid-Infrared Instrument) started this story, revealing new and unexpected features within the inner shell of the supernova remnant. But many of those features are invisible in the new NIRCam image, and astronomers are investigating why that is.

Infrared light is invisible to our eyes, so image processors and scientists represent these wavelengths of light with visible colours. In this newest image of Cas A, colours were assigned to NIRCam’s different filters, and each of those colours hints at different activity occurring within the object.

At first glance, the NIRCam image may appear less colourful than the MIRI image. However, this does not mean there is less information: it simply comes down to the wavelengths in which the material in the object is emitting its light.

The most noticeable colours in Webb’s newest image are clumps of bright orange and light pink that make up the inner shell of the supernova remnant. Webb’s razor-sharp view can detect the tiniest knots of gas, composed of sulphur, oxygen, argon, and neon from the star itself. Embedded in this gas is a mixture of dust and molecules, which will eventually be incorporated into new stars and planetary systems. Some filaments of debris are too tiny to be resolved, even by Webb, meaning that they are comparable to or less than 16 billion kilometres across (around 100 astronomical units). In comparison, the entirety of Cas A spans 10 light-years, or roughly 96 trillion kilometres.

When comparing Webb’s new near-infrared view of Cas A with the mid-infrared view, its inner cavity and outermost shell are curiously devoid of colour. The outskirts of the main inner shell, which appeared as a deep orange and red in the MIRI image, now look like smoke from a campfire. This marks where the supernova blast wave is ramming into the surrounding circumstellar material. The dust in the circumstellar material is too cool to be detected directly at near-infrared wavelengths, but lights up in the mid-infrared.

Researchers have concluded that the white colour is light from synchrotron radiation, which is emitted across the electromagnetic spectrum, including the near-infrared. It’s generated by charged particles travelling at extremely high speeds and spiralling around magnetic field lines. Synchrotron radiation is also visible in the bubble-like shells in the lower half of the inner cavity.

Also not seen in the near-infrared view is the loop of green light in the central cavity of Cas A that glowed in mid-infrared light, appropriately nicknamed the Green Monster by the research team. This feature was described as ‘challenging to understand’ by researchers at the time of their first look.

While the ‘green’ of the Green Monster is not visible in NIRCam, what’s left over in the near-infrared in that region can provide insight into the mysterious feature. The circular holes visible in the MIRI image are faintly outlined in white and purple emission in the NIRCam image — this represents ionised gas. Researchers believe this is due to the supernova debris pushing through and sculpting gas left behind by the star before it exploded.

Researchers were also absolutely stunned by one fascinating feature at the bottom right corner of NIRCam’s field of view. They’re calling that large, striated blob Baby Cas A — because it appears like an offspring of the main supernova.

This is a light echo. Light from the star’s long-ago explosion has reached, and is warming, distant dust, which glows as it cools down. The intricacy of the dust pattern, and Baby Cas A’s apparent proximity to Cas A itself, are particularly intriguing to researchers. In actuality, Baby Cas A is located about 170 light-years behind the supernova remnant.

There are also several other, smaller light echoes scattered throughout Webb’s new portrait.

The Cas A supernova remnant is located 11 000 light-years away in the constellation Cassiopeia. It’s estimated to have exploded about 340 years ago from our point of view.

A new high-definition image from the NASA/ESA/CSA James Webb Space Telescope’s NIRCam (Near-Infrared Camera) unveils intricate details of supernova remnant Cassiopeia A (Cas A), and shows the expanding shell of material slamming into the gas shed by the star before it exploded.

The most noticeable colours in Webb’s newest image are clumps of bright orange and light pink that make up the inner shell of the supernova remnant. These tiny knots of gas, composed of sulphur, oxygen, argon, and neon from the star itself, are only detectable thanks to NIRCam’s exquisite resolution, and give researchers a hint at how the dying star shattered like glass when it exploded.

The outskirts of the main inner shell look like smoke from a campfire. This marks where ejected material from the exploded star is ramming into surrounding circumstellar material. Researchers have concluded that this white colour is light from synchrotron radiation, which is generated by charged particles travelling at extremely high speeds and spiralling around magnetic field lines.

There are also several light echoes visible in this image, most notably in the bottom right corner. This is where light from the star’s long-ago explosion has reached, and is warming, distant dust, which glows as it cools down.

[Image description: A roughly circular cloud of gas and dust with complex structure. The inner shell is made of bright pink and orange filaments studded with clumps and knots that look like tiny pieces of shattered glass. Around the exterior of the inner shell, there are curtains of wispy gas that look like campfire smoke. Around and within the nebula, various stars are seen as points of blue and white light. Outside the nebula, there are also clumps of dust, coloured yellow in the image.]

Credit: NASA, ESA, CSA, STScI, D. Milisavljevic (Purdue University), T. Temim (Princeton University), I. De Looze (University of Gent)

This image highlights several interesting features of the supernova remnant Cassiopeia A (Cas A), as seen with Webb’s NIRCam (Near-Infrared Camera).

NIRCam’s exquisite resolution is able to detect tiny knots of gas, composed of sulphur, oxygen, argon, and neon from the star itself. Some filaments of debris are too tiny to be resolved, even by Webb, meaning that they are comparable to or less than 16 billion kilometres across (around 100 astronomical units). Researchers consider that this represents how the star shattered like glass when it exploded.

Circular holes visible in the MIRI image within the Green Monster, a loop of green light in Cas A’s inner cavity, are faintly outlined in white and purple emission in the NIRCam image — this represents ionised gas. Researchers believe this is due to the supernova debris pushing through and sculpting gas left behind by the star before it exploded.

This is one of a few light echoes visible in NIRCam’s image of Cas A. A light echo occurs when light from the star’s long-ago explosion has reached, and is warming, distant dust, which glows as it cools down.

NIRCam captured a particularly intricate and large light echo, nicknamed Baby Cas A by researchers. It is actually located about 170 light-years behind the supernova remnant.

[Image description: The image is split into five boxes. A large image at the left-hand side takes up most of the image. There are four images along the right-hand side in a column, which show zoomed-in areas of the larger square image on the left. The image on the left shows a roughly circular cloud of gas and dust with a complex structure, with an inner shell of bright pink and orange filaments that look like tiny pieces of shattered glass. A zoom-in of this material appears in the box labelled 1. Around the exterior of the inner shell in the main image there are wispy curtains of gas that look like campfire smoke. Box 2 is a zoom-in on these circles. Scattered outside the nebula in the main image are clumps of dust, coloured yellow in the image. Boxes 3 and 4 are zoomed-in areas of these clumps. Box 4 highlights a particularly large clump at the bottom right of the main image that is detailed and striated.]

Credit: NASA, ESA, CSA, STScI, D. Milisavljevic (Purdue University), T. Temim (Princeton University), I. De Looze (University of Gent)

This image provides a side-by-side comparison of supernova remnant Cassiopeia A (Cas A) as captured by the NASA/ESA/CSA James Webb Space Telescope’s NIRCam (Near-Infrared Camera) and MIRI (Mid-Infrared Instrument).

At first glance, Webb’s NIRCam image appears less colourful than the MIRI image. But this is only because the material from the object is emitting light at many different wavelengths. The NIRCam image appears a bit sharper than the MIRI image because of its greater resolution.

The outskirts of the main inner shell, which appeared as a deep orange and red in the MIRI image, look like smoke from a campfire in the NIRCam image. This marks where the supernova blast wave is ramming into surrounding circumstellar material. The dust in the circumstellar material is too cool to be detected directly at near-infrared wavelengths, but lights up in the mid-infrared.

Also not seen in the near-infrared view is the loop of green light in the central cavity of Cas A that glowed in mid-infrared light, nicknamed the Green Monster by the research team. The circular holes visible in the MIRI image within the Green Monster, however, are faintly outlined in white and purple emission in the NIRCam image.

[Image description: A comparison between two images, one on the left (labelled NIRCam), and on the right (labelled MIRI), separated by a white line. On the left, the image is of a roughly circular cloud of gas and dust with a complex structure. The inner shell is made of bright pink and orange filaments that look like tiny pieces of shattered glass. Around the exterior of the inner shell are curtains of wispy gas that look like campfire smoke. On the right is the same nebula seen in different light. The curtains of material outside the inner shell glow orange instead of white. The inner shell looks more mottled, and is a muted pink. At centre right, a greenish loop extends from the right side of the ring into the central cavity.]

Credit: NASA, ESA, CSA, STScI, D. Milisavljevic (Purdue University), T. Temim (Princeton University), I. De Looze (University of Gent)

This image of the Cassiopeia A supernova remnant, captured by Webb’s NIRCam (Near-Infrared Camera) shows compass arrows, a scale bar, and a colour key for reference.

The north and east compass arrows show the orientation of the image on the sky.

The scale bar is labeled in light-years, which is the distance that light travels in one Earth-year (it takes 3 years for light to travel a distance equal to the length of the scale bar). One light-year is equal to about 9.46 trillion kilometers.
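As a quick sanity check on these numbers, the sketch below converts the scale bar to kilometres and estimates how large it appears on the sky. It uses the 9.46 trillion kilometre figure from this caption and the 11 000 light-year distance quoted in the article. The function names are made up for this example, and the angular size uses the small-angle approximation.

```python
import math

KM_PER_LIGHT_YEAR = 9.46e12   # figure quoted in the caption
DISTANCE_LY = 11_000.0        # distance to Cas A quoted in the article
SCALE_BAR_LY = 3.0            # length of the scale bar

def light_years_to_km(light_years):
    """Convert a length in light-years to kilometres."""
    return light_years * KM_PER_LIGHT_YEAR

def angular_size_arcmin(size_ly, distance_ly):
    """Small-angle approximation: angle in radians is size divided by distance."""
    angle_rad = size_ly / distance_ly
    return math.degrees(angle_rad) * 60.0  # 60 arcminutes per degree

scale_bar_km = light_years_to_km(SCALE_BAR_LY)
scale_bar_arcmin = angular_size_arcmin(SCALE_BAR_LY, DISTANCE_LY)

print(f"Scale bar length: {scale_bar_km:.3e} km")
print(f"Scale bar on the sky: {scale_bar_arcmin:.2f} arcminutes")
```

The scale bar works out to roughly 28 trillion kilometres and a little under one arcminute on the sky, which is consistent with the bar being labelled both 3 light-years and 1 arcminute.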

This image shows invisible near-infrared wavelengths of light that are represented by visible-light colours. The colour key shows which NIRCam filters were used when collecting the light. The colour of each filter name is the visible-light colour used to represent the infrared light that passes through that filter.

[Image description: The image shows a roughly circular cloud of gas and dust with a complex structure. At lower left, a white arrow pointing in the 2 o’clock direction is labelled N for north, while an arrow pointing in the 10 o’clock direction is labelled E for east. At lower right, a scale bar is labelled 3 light-years and 1 arcminute. At the bottom is a list of NIRCam filters in different colours, from left to right: F162M (blue), F356W (green), and F444W (red).]

Credit: NASA, ESA, CSA, STScI, D. Milisavljevic (Purdue University), T. Temim (Princeton University), I. De Looze (University of Gent)


0 notes

Text

Selecting Water Flow Meters

Water flow meters offer critical visibility into how much liquid or gas is flowing through pipes, drainage systems, and other infrastructure. They enable operators to determine whether their systems are properly balanced, ensure that each endpoint receives adequate supply, and identify excessive flow rates that might damage pipes or cause other problems. Flow meters come in a wide range of technologies, each of which offers something that suits it to particular applications. Whichever technology you choose, there are some things to keep in mind when selecting a water flow meter so that you get the right one for your needs.

Cost: A good water flow meter isn't always cheap, so it's worth shopping around for the best deal. You may be able to find a less expensive option that works fine, but it may not have the features you need.

Installation: Flow meters need to be installed carefully to ensure they provide accurate readings. They should be fitted to a section of pipe that is straight and free of any debris or obstructions that could affect the flow readings. They should also be installed where they won't be affected by vibrations or electromagnetic fields that can interfere with the measurements.

Fluids: Different fluids have their own characteristics that can make them difficult to measure accurately with a conventional flow meter. Some flows are harder to measure than others, such as those with high Reynolds numbers (meaning they are more turbulent).

Temperature: Water and other fluids that flow through piping can vary considerably in temperature, depending on the application. This can call for flow meters whose minimum temperature rating covers the lowest media temperatures in the application.

Inline Flow Meters: Most water flow meters are installed in-line, meaning that they become part of the piping. Clamp-on designs, by contrast, mount on the outside of the pipe, so they can be installed without cutting the piping, which might otherwise cause leaks or other issues later on.

Stainless Steel: Water flow meters made of stainless steel or copper are better equipped to handle higher pressures and corrosive environments than those made of plastic. They also have the added benefit of being corrosion-resistant, so they're less prone to rusting or other damage.

Digital: Digital water flow meters have a sensor, a processor, and a display that give a continuous readout of the water flow rate. They're a good choice for any application that requires reliable and accurate readings.

Totalizing and Batching: Flow meters with totalizing capabilities can help you track a range of data points in one place. These devices can total the flow volume over a given period, which can help ensure that you're only using as much water as you need.

Transit Time: Some flow meters use sound to measure the time it takes a sound pulse to travel through the fluid from one side of the meter to the other. These meters have two transducers located on either side of the meter, and the measured transit time is then used to calculate the flow rate or volume.

For more information, see https://www.britannica.com/technology/magnetometer.
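To make the transit-time and totalizing ideas a little more concrete, here is a rough Python sketch. It assumes the textbook transit-time relation for a single acoustic path crossing the pipe at an angle to the flow; the function names, the 45-degree path angle, and the sample numbers are illustrative assumptions, not taken from any particular meter or from the post above.

```python
import math


def transit_time_velocity(path_length_m, angle_deg, t_down_s, t_up_s):
    """Estimate mean flow velocity along the pipe axis (m/s).

    Assumes one acoustic path of length path_length_m at angle_deg to the
    flow direction. t_down_s is the pulse travel time with the flow and
    t_up_s the travel time against it. The speed of sound cancels out.
    """
    cos_theta = math.cos(math.radians(angle_deg))
    return (path_length_m / (2.0 * cos_theta)) * (t_up_s - t_down_s) / (t_up_s * t_down_s)


def volumetric_flow(velocity_m_s, pipe_diameter_m):
    """Convert mean velocity to volumetric flow (m^3/s) for a full round pipe."""
    area_m2 = math.pi * (pipe_diameter_m / 2.0) ** 2
    return velocity_m_s * area_m2


def totalize(flow_rates_m3_s, interval_s):
    """Total volume (m^3) from flow readings taken every interval_s seconds."""
    return sum(rate * interval_s for rate in flow_rates_m3_s)


if __name__ == "__main__":
    # Hypothetical numbers: synthesize transit times for a known velocity,
    # then check that the formula recovers it.
    sound_speed = 1480.0   # m/s, roughly water at room temperature
    true_velocity = 2.0    # m/s
    path, angle = 0.2, 45.0
    cos_t = math.cos(math.radians(angle))
    t_down = path / (sound_speed + true_velocity * cos_t)
    t_up = path / (sound_speed - true_velocity * cos_t)

    v = transit_time_velocity(path, angle, t_down, t_up)
    print("velocity (m/s):", round(v, 6))
    print("flow (m^3/s):", volumetric_flow(v, 0.1))
    print("volume over 3 minutes (m^3):", totalize([0.01, 0.02, 0.03], 60))
```

A real meter would also correct for the flow profile and for the part of the acoustic path that lies in the pipe wall, which this sketch ignores.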

0 notes

Text

What is cannabutter?

Cannabis-infused butter, or cannabutter, is one of the simplest and most common ways of making edibles. Butter is an ideal vehicle for cannabis infusion since it's delicious and versatile, and THC needs to bind to fat molecules, which are abundant in butter (as well as oils like coconut, olive, and vegetable oil).

The infusion process takes a few hours, but it's not hard to do, and this recipe will show even beginners how to do it.

As with all edibles, start low and go slow: after making food with weed butter, try a little first, wait for the effects to kick in, and have more only if you want stronger effects.

How is cannabutter used to make edibles?

After you infuse butter with cannabis, you can use that weed butter to make any baked goods, or simply spread it on toast or other food. People commonly use cannabutter to make weed brownies, cookies, and cakes.

You can use all cannabutter in a recipe, half cannabutter and half regular butter, or another ratio of regular butter to cannabutter for milder effects.

Cannabis must first be decarboxylated to infuse it into butter properly. This process converts THCA in the plant into THC, activating the plant's psychoactive potential in your edibles.

Heat is necessary for decarboxylation. When you put a flame to a bowl or joint, that heat decarboxylates the plant material, turning THCA into THC, which will then get you high. For cannabutter, decarboxylation is done by heating cannabis at a low temperature in an oven before adding it to the butter.

Note that homemade edibles are hard to dose accurately. This guide will give you some tips on dosing, but all DIY cannabis cooks should be aware that guaranteeing the potency or homogeneity of a batch of edibles is difficult.

The following recipe loosely translates to 30 mg of THC per tablespoon of oil or butter. Your ideal dose will vary, but 10 mg is standard. Start by testing ¼ teaspoon of the weed butter you make and wait about an hour. Take note of how you feel and let your body tell you whether this is a good amount, whether you need more, or whether you need less. Erring on the side of caution will ensure that you actually enjoy yourself and have a positive experience.
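For anyone who likes to check the arithmetic, here is a small Python sketch of the dosing math above. It simply scales the post's rough figure of 30 mg per tablespoon by volume, using 3 teaspoons per US tablespoon; the function names are made up for illustration, and actual potency will vary from batch to batch.

```python
TSP_PER_TBSP = 3  # 1 US tablespoon = 3 teaspoons


def thc_mg(volume_tsp, mg_per_tbsp=30.0):
    """Approximate THC (mg) in a given number of teaspoons of infused butter.

    mg_per_tbsp defaults to the recipe's loose estimate of 30 mg per tablespoon.
    """
    return volume_tsp * mg_per_tbsp / TSP_PER_TBSP


def tsp_for_dose(target_mg, mg_per_tbsp=30.0):
    """Teaspoons of infused butter needed to reach roughly target_mg of THC."""
    return target_mg * TSP_PER_TBSP / mg_per_tbsp


if __name__ == "__main__":
    print(thc_mg(0.25))      # the suggested 1/4 teaspoon test amount
    print(tsp_for_dose(10))  # teaspoons for the 10 mg "standard" dose
```

By this estimate the ¼ teaspoon test amount is about 2.5 mg, a quarter of the 10 mg standard dose, and one teaspoon is about 10 mg.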

Step 1: Decarboxylation

The first thing you'll need to do is decarboxylate your cannabis. Also known as "decarbing," this requires you to bake your weed, allowing the THC, CBD, and other cannabinoids to activate. It also allows the lipids in butter and oil to bind easily to your weed for the ultimate cannabis infusion.

What you'll need:

½ ounce of weed

Hand grinder or scissors

Glass baking dish or sheet pan

Oven

What to do:

Preheat the oven to 220°F.

Gently break apart the desired amount of weed using a hand grinder, scissors, or your hands until it's the perfect consistency for rolling a joint: fine, but not too fine. Anything too fine will slip through cheesecloth (or a joint, for that matter). You want your cannabutter and oil to be clean and as clear as possible.

Evenly spread your plant material onto the glass baking dish or sheet pan. Pop it in the oven on the center rack for 20 minutes if using old or lower-quality weed; 45 minutes for cured, high-grade weed; or 1 hour or more for anything that has been recently harvested and is still wet.

Check on the weed frequently while it's in the oven, gently stirring it at regular intervals so it doesn't burn. You will notice that the color of your herb changes from bright green to a deep brownish green. That's when you know it has decarboxylated.

Step 2: Cannabutter Stovetop Infusion

If you have weed, fat, time, and a kitchen, you can make weed butter with this method.

What you’ll need:

1½ cups water

8 ounces clarified butter, melted butter, or oil

½ ounce decarboxylated cannabis

Medium saucepan

Wooden spoon

Thermometer

Cheesecloth and/or metal strainer

What to do:

In a medium saucepan on very low heat, add the water and butter.

When the butter has melted, add the decarboxylated cannabis. Mix well with a wooden spoon and cover with a lid.

Let the mixture gently simmer for 4 hours. Stir every half hour to make sure your butter isn't burning. If you have a thermometer, check that the temperature doesn't rise above 180°F.

After 4 hours, strain with cheesecloth or a metal strainer into a container. Let the butter cool to room temperature. Use immediately or keep in the refrigerator or freezer in a well-sealed mason jar for up to six months.

How to use weed butter:

I've been sneaking a little weed into a lot of recipes that aren't in my cookbook. Most recently, I combined one tablespoon of canna-infused olive oil with 3 tablespoons of non-weed virgin olive oil to dress this juicy Charred Raw Corn salad. (It came out to 30 mg for the whole dish, and 7.5 mg per serving.) It blew my mind, and not just because I was stoned. I also have this thing for eating hot soup all year round, and my latest soup fix was Chicken and Rice Soup with Garlicky Chile Oil. I spiked the garlic chile oil with weed, swapping in two tablespoons of canna-infused sesame oil for two tablespoons of vegetable oil. You can also sub a few teaspoons of infused oil into baked goods, like breakfast blondies or chocolate tahini brownies.
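The per-serving figure for the corn salad can be reproduced with a quick Python sketch. It assumes the same rough 30 mg per tablespoon potency for the infused oil, and the four servings are inferred from the stated numbers (30 mg total, 7.5 mg each) rather than given in the post; the function name is just illustrative.

```python
def dish_thc(infused_tbsp, servings, mg_per_tbsp=30.0):
    """Return (total_mg, mg_per_serving) for a dish made with infused oil.

    Only the infused tablespoons count toward THC; any plain oil or butter
    in the recipe dilutes the dish without adding to the total.
    """
    if servings <= 0:
        raise ValueError("servings must be positive")
    total_mg = infused_tbsp * mg_per_tbsp
    return total_mg, total_mg / servings


if __name__ == "__main__":
    # 1 tbsp infused olive oil (plus 3 tbsp plain oil) split four ways
    total, per_serving = dish_thc(infused_tbsp=1, servings=4)
    print(total, per_serving)
```

The same function works for the soup swap: two tablespoons of infused sesame oil would put roughly 60 mg in the whole batch, under the same potency assumption.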

0 notes