#oracle data pump import



Export from 11g and import to 19c

In this article, we are going to learn how to export from 11g and import to 19c. As you know, Oracle Data Pump is a very powerful tool, and with the export and import method we can load data from a lower Oracle Database version into a higher one, and vice versa. Step 1. Create a backup directory. To perform the export/import, we first need to create a backup directory at…
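Below is a minimal sketch of the two commands involved. It assumes a schema-mode move, a directory object named DATA_PUMP_DIR on both servers, and hypothetical schema, file and connect names (HR, hr_11g.dmp, system@ORCL11G, system@ORCL19C). The code only assembles the argument lists. You would run the first command on the 11g server, copy the dump file into the 19c server's directory path, and then run the second command. The VERSION parameter is needed only in the reverse direction, when you export from a newer release for import into an older one.

```python
from typing import List, Optional


def build_expdp(userid: str, schemas: List[str], directory: str,
                dumpfile: str, logfile: str,
                version: Optional[str] = None) -> List[str]:
    """Assemble a schema-mode Data Pump export command line."""
    args = [
        "expdp", userid,
        "SCHEMAS=" + ",".join(s.upper() for s in schemas),
        "DIRECTORY=" + directory,   # Oracle DIRECTORY object, not an OS path
        "DUMPFILE=" + dumpfile,
        "LOGFILE=" + logfile,
    ]
    if version:
        # Only for a downgrade, e.g. exporting from 19c for an 11.2 target.
        args.append("VERSION=" + version)
    return args


def build_impdp(userid: str, schemas: List[str], directory: str,
                dumpfile: str, logfile: str) -> List[str]:
    """Assemble the matching schema-mode Data Pump import command line."""
    return [
        "impdp", userid,
        "SCHEMAS=" + ",".join(s.upper() for s in schemas),
        "DIRECTORY=" + directory,
        "DUMPFILE=" + dumpfile,
        "LOGFILE=" + logfile,
    ]


if __name__ == "__main__":
    # Hypothetical names; expdp/impdp prompt for the password when it is omitted.
    export_cmd = build_expdp("system@ORCL11G", ["hr"], "DATA_PUMP_DIR",
                             "hr_11g.dmp", "hr_exp.log")
    import_cmd = build_impdp("system@ORCL19C", ["hr"], "DATA_PUMP_DIR",
                             "hr_11g.dmp", "hr_imp.log")
    print(" ".join(export_cmd))
    print(" ".join(import_cmd))
```

You can also pass the lists directly to subprocess.run, which avoids shell quoting problems.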


#expdp/impdp from 12c to 19c#export and import in oracle 19c with examples#export from 11g and import to 19c#Export from 11g and import to 19c example#Export from 11g and import to 19c oracle#export from 11g and import to 19c with examples#oracle 19c export/import#oracle data pump export example#oracle database migration from 11g to 19c using data pump



ORA-39405: Oracle Data Pump does not support importing from a source database with TSTZ

Error ORA-39405: Oracle Data Pump does not support importing from a source database with TSTZ occurs when you import a dump file with Oracle Data Pump, the source database holds TIMESTAMP WITH TIME ZONE (TSTZ) data, and the time zone file versions of the two databases are incompatible: the source uses a newer TSTZ version than the target. Causes: Different Oracle Versions: The source and target databases have different versions…
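You can read each database's time zone file version with `SELECT version FROM v$timezone_file;`. The minimal pre-flight check below assumes you have already fetched those two numbers, and its function names are hypothetical. It simply compares them. An import of TSTZ data is expected to fail with ORA-39405 when the source version is newer than the target's. The usual remedy is to upgrade the target's time zone file to at least the source version before you import.

```python
def tstz_import_ok(source_tstz: int, target_tstz: int) -> bool:
    """True if TSTZ data from the source can be imported into the target.

    Data Pump refuses (ORA-39405) when the dump contains TSTZ data and the
    source time zone file is newer than the target's; an equal or older
    source version is fine.
    """
    return source_tstz <= target_tstz


def tstz_advice(source_tstz: int, target_tstz: int) -> str:
    """Human-readable verdict for a planned export/import."""
    if tstz_import_ok(source_tstz, target_tstz):
        return "ok: target TSTZ version is the same or newer"
    return (f"expect ORA-39405: source TSTZ v{source_tstz} > target "
            f"v{target_tstz}; upgrade the target time zone file first")


if __name__ == "__main__":
    # Example values only; read the real ones from v$timezone_file.
    print(tstz_advice(14, 32))
    print(tstz_advice(42, 32))
```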



Unlocking Success: Seamlessly Migrating Oracle to Azure PostgreSQL

In today's rapidly evolving tech landscape, many organizations are considering moving their databases to cloud platforms to take advantage of cost savings, scalability, and advanced features. One popular move is to migrate from Oracle to Azure PostgreSQL. This guide walks you through migration preparation, key practices, Azure features, and a structured approach to a successful migration.

Migration Preparation: Assessment and Tools

Assessment and Planning

Before migrating, conduct a thorough evaluation of your current Oracle database. Identify all schemas, tables, and dependencies. This will help you understand the scope of the migration and plan effectively. Consider the complexity of your database and any potential compatibility issues.

Choosing the Right Tools

Choosing the right tools is important for a smooth migration. Tools such as Microsoft's Azure Database Migration Service (DMS) and Oracle SQL Developer can help with schema conversion, data migration, and post-migration validation.

Key Practices for Smooth Oracle to Azure PostgreSQL Migration

Detailed Planning

A detailed migration plan with clear timelines, milestones, and responsibilities is essential. Anticipate challenges and prepare for contingencies to avoid any disruptions during the migration process.

Extensive Testing

Test extensively at every stage of the migration. Comprehensive testing ensures that the migrated system meets all functional and performance requirements.

Leveraging Expertise

If your team lacks experience in database migration, consider hiring experts or consultants. Their expertise can help you navigate complex migration challenges and ensure a smoother transition.

Leveraging Azure Features

High Availability and Disaster Recovery

Azure PostgreSQL provides built-in high availability and automated backups. Configure these features so that your database is resilient to failures and your data is backed up regularly.

Security and Compliance

Azure offers advanced security features such as data encryption at rest and in transit, threat detection, and compliance with various regulatory standards. Ensure that these features are configured to protect sensitive data.

Scaling Resources

Azure PostgreSQL lets you scale resources to match your workload. Use Azure's monitoring tools to track resource usage and scale up or down as needed to maintain optimal performance.

Strategic Approach to Oracle to Azure PostgreSQL Migration Success

Migrating from Oracle to Azure PostgreSQL calls for a structured approach. First, conduct a comprehensive assessment of the current Oracle environment to identify all database objects and dependencies. Use tools such as Azure Database Migration Service (DMS) and Oracle SQL Developer for schema conversion, transforming Oracle-specific constructs into PostgreSQL-compatible formats. For data migration, choose either an offline method or an online one. An offline method exports the data from Oracle and bulk-loads it into PostgreSQL. Note that Data Pump dump files use an Oracle-only format, so PostgreSQL tools cannot load them directly. An online method uses continuous replication tools such as Oracle GoldenGate to minimize downtime. Validate the migrated data thoroughly to ensure accuracy and consistency. Update applications to work with PostgreSQL, adjusting connection strings and SQL queries as necessary. After the migration, optimize database performance through indexing, query optimization, and parameter tuning. Implement robust monitoring and maintenance practices using Azure's tools to keep the database healthy. Provide training and documentation to ensure trouble-free operations in the new environment.
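Schema conversion tools automate most of this, but a data type mapping sits at the core of the work. The sketch below shows a simplified, hypothetical mapping for a few common Oracle types. Two of its choices follow widely used conventions. It maps NUMBER(p,0) to an integer type sized by precision, and it maps DATE to timestamp(0) because an Oracle DATE also stores a time of day. Treat all of these choices as assumptions to review against your own data. They are not the output of DMS or SQL Developer.

```python
from typing import Optional

# Fixed mappings (assumed conventions; review them for your own schema).
_FIXED = {
    "DATE": "timestamp(0)",            # Oracle DATE also carries a time of day
    "TIMESTAMP": "timestamp",
    "TIMESTAMP WITH TIME ZONE": "timestamptz",
    "CLOB": "text",
    "NCLOB": "text",
    "BLOB": "bytea",
    "RAW": "bytea",
    "BINARY_FLOAT": "real",
    "BINARY_DOUBLE": "double precision",
}


def map_oracle_type(oracle_type: str, length: Optional[int] = None,
                    precision: Optional[int] = None,
                    scale: Optional[int] = None) -> str:
    """Translate a simple Oracle column type into a PostgreSQL type name."""
    t = oracle_type.strip().upper()
    if t in ("VARCHAR2", "NVARCHAR2"):
        return f"varchar({length})" if length else "varchar"
    if t in ("CHAR", "NCHAR"):
        return f"char({length})" if length else "char"
    if t == "NUMBER":
        if precision is None:
            return "numeric"                   # unconstrained NUMBER
        if not scale:                          # NUMBER(p) / NUMBER(p,0): whole numbers
            if precision <= 4:
                return "smallint"
            if precision <= 9:
                return "integer"
            if precision <= 18:
                return "bigint"
            return f"numeric({precision})"
        return f"numeric({precision},{scale})"
    if t in _FIXED:
        return _FIXED[t]
    raise ValueError(f"no mapping defined for Oracle type {oracle_type!r}")
```

Raising an error for unmapped types such as XMLTYPE is deliberate. It forces a human decision instead of a silent guess.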

Final Thoughts: Oracle to Azure PostgreSQL Migration - Optimizing Your Database for the Future

Migrating from Oracle to Azure PostgreSQL is a transformative step that can unlock numerous advantages for your organization. Approached methodically, the process delivers a smooth transition with minimal downtime. Tools like Azure Database Migration Service and Oracle SQL Developer streamline the migration, while thorough testing verifies data integrity and performance. After the migration, Azure's robust infrastructure combined with PostgreSQL's advanced features provides a scalable, cost-effective, and high-performance database environment, an excellent choice for modern enterprises that sets your business up for long-term success.

Embarking on the journey to migrate from Oracle to Azure PostgreSQL can transform your organization. With careful planning, thorough testing, and the right expertise, you can achieve a seamless transition. Thanks for reading!

For More Information, Visit Our Website: https://newtglobal.com/



Oracle Data Pump Filters GoldenGate ACDR Columns from Tables

Oracle Data Pump improvements to support GoldenGate ACDR tables. #oraclegoldengate #oracledatabase #goldengate

The ACDR feature of Oracle GoldenGate adds hidden columns to tables to resolve conflicts when the same row is updated by different databases using active replication. GoldenGate can also create a “tombstone table,” which records interesting column values for deleted rows. The Oracle Data Pump Import command-line mode TRANSFORM parameter enables you to alter object creation DDL for objects being…




expdp and impdp questions and answers

Here are concise answers to your questions about Oracle Data Pump (expdp and impdp): What are expdp and impdp? expdp (Data Pump Export) and impdp (Data Pump Import) are Oracle utilities for moving data and metadata efficiently between Oracle databases. They are an enhancement of the original exp and imp utilities. What are the pros and cons of exp & imp vs. expdp & impdp? exp & imp: Pros:…




In the ever-changing cryptocurrency market, identifying the tokens with the most promising potential is a crucial task for investors. Google Bard, the AI chatbot, has placed Solana (SOL), Chainlink (LINK), and Everlodge (ELDG) in the spotlight as the best tokens to hold in 2023. Let's dive into the reasons behind these selections and explore what makes them stand out in a highly competitive market.

Summary

- Solana demand on the rise as Shopify integrates Solana Pay
- Chainlink price prediction
- Everlodge projected to pump by 30x on its launch day

Join the Everlodge presale and win a luxury holiday to the Maldives.

Solana (SOL): Rising Demand

Solana (SOL) has captured the crypto community's attention with its exceptional scalability and speed. With its innovative consensus mechanism and unique architecture, Solana has emerged as a platform capable of processing thousands of transactions per second. Google Bard recognizes Solana's potential to address the scalability issues that often plague other blockchain networks, making it an attractive option for DeFi protocols and NFT marketplaces. Plus, with the news that Shopify has integrated Solana Pay as a payment solution, demand for SOL is rising. As the crypto space continues to evolve, Solana's ability to handle increased demand could position it for substantial growth. For all these reasons, experts remain bullish on SOL and foresee its value reaching $29.22 by December 2023.

Chainlink (LINK): A Pioneer

Chainlink (LINK) has established itself as a pioneer in decentralized oracles, enabling smart contracts to interact with real-world data. This critical bridge between blockchain technology and external data sources has paved the way for various applications. Google Bard's endorsement of Chainlink highlights its importance in creating a robust decentralized ecosystem.
As industries recognize the potential of blockchain integration, Chainlink's role as a reliable data provider could contribute to its growth. Market analysts therefore remain bullish that LINK will rise before the year ends. In fact, they predict that the Chainlink price will reach $9.12 in Q4 2023.

Everlodge (ELDG): Changing the Real Estate Sector

Everlodge (ELDG) has gained attention for its innovative approach to solving real-world challenges in the real estate market. For example, problems like high upfront costs or illiquidity will be a thing of the past in Everlodge's property marketplace. To clarify, Everlodge will build the first-ever property marketplace that combines fractional ownership of luxury property with timeshare and NFT technology. It will do this by digitizing villas, homes, and other properties and minting them as NFTs, which are then fractionalized. Thus, anyone can buy a share for as little as $100.

Furthermore, the Everlodge Launchpad lets property developers raise capital from the Everlodge community for their upcoming projects. At the same time, users who take part in these early-stage projects through the Launchpad gain a unique advantage: the opportunity to get in on projects at their inception, maximizing their ROI.

According to Google Bard, the ELDG native token has much more room for growth than Solana and Cardano, thanks to its real-world connections and low market cap. ELDG is currently worth only $0.012, as it is in Stage 1 of its presale. However, experts predict that it could surge to $0.035 before the presale ends and pump a further 30x on its launch day.

Find out more about the Everlodge (ELDG) presale on its website and Telegram channel.

Disclaimer: This is a sponsored press release and is for informational purposes only. It does not reflect the views of Crypto Daily, nor is it intended to be used as legal, tax, investment, or financial advice.



Question-91: How do you use the DBMS_DATAPUMP package in Oracle to perform advanced data import/export operations?

Interview Questions on Oracle SQL & PLSQL Development: For more questions like this: Do follow the main blog #oracledatabase #interviewquestions #freshers #beginners #intermediatelevel #experienced #eswarstechworld #oracle #interview #development #sql

The DBMS_DATAPUMP package in Oracle provides a powerful set of procedures and functions for advanced data import and export operations. It is the PL/SQL API on which the expdp and impdp command-line clients themselves are built. It therefore exposes Data Pump functionality programmatically, with extra flexibility. Here's a comprehensive overview of using the DBMS_DATAPUMP package: Definition: The DBMS_DATAPUMP package is a built-in Oracle package…
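Below is a minimal sketch of a schema-mode export through the package, using the documented DBMS_DATAPUMP calls OPEN, ADD_FILE, METADATA_FILTER, START_JOB and WAIT_FOR_JOB. To stay in Python, it only builds the anonymous PL/SQL block as a string. You could paste that block into SQL*Plus or run it with a driver such as python-oracledb. The schema, directory object and file names are hypothetical.

```python
def sql_literal(value: str) -> str:
    """Quote a value as a SQL string literal, doubling embedded quotes."""
    return "'" + value.replace("'", "''") + "'"


def build_schema_export_block(schema: str, directory: str,
                              dumpfile: str, logfile: str) -> str:
    """Return an anonymous PL/SQL block that exports one schema via DBMS_DATAPUMP."""
    # SCHEMA_EXPR is itself a SQL fragment containing a literal, so the schema
    # name is quoted twice: IN ('HR') becomes the PL/SQL literal 'IN (''HR'')'.
    schema_expr = sql_literal("IN (" + sql_literal(schema.upper()) + ")")
    lines = [
        "DECLARE",
        "  h     NUMBER;         -- job handle returned by OPEN",
        "  state VARCHAR2(30);   -- final job state from WAIT_FOR_JOB",
        "BEGIN",
        "  h := DBMS_DATAPUMP.OPEN(operation => 'EXPORT', job_mode => 'SCHEMA');",
        f"  DBMS_DATAPUMP.ADD_FILE(h, {sql_literal(dumpfile)}, {sql_literal(directory)});",
        f"  DBMS_DATAPUMP.ADD_FILE(h, {sql_literal(logfile)}, {sql_literal(directory)},",
        "    filetype => DBMS_DATAPUMP.KU$_FILE_TYPE_LOG_FILE);",
        f"  DBMS_DATAPUMP.METADATA_FILTER(h, 'SCHEMA_EXPR', {schema_expr});",
        "  DBMS_DATAPUMP.START_JOB(h);",
        "  DBMS_DATAPUMP.WAIT_FOR_JOB(h, state);  -- blocks until the job ends",
        "  DBMS_OUTPUT.PUT_LINE('Job finished: ' || state);",
        "END;",
    ]
    return "\n".join(lines)


if __name__ == "__main__":
    print(build_schema_export_block("hr", "DATA_PUMP_DIR", "hr.dmp", "hr_exp.log"))
```

The same pattern extends to imports. You would open the job with operation 'IMPORT' and point ADD_FILE at an existing dump file.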


#beginners#development#eswarstechworld#Experienced#freshers#intermediatelevel#interview#interviewquestions#oracle#oracledatabase#sql



Nucigent Provides Cost-effective Software Development and Implementation of Services

Nucigent focuses exclusively on high-quality, cost-effective software development and implementation services. We are growing at a rapid pace through the involvement of skilled and experienced people across the organization. Nucigent currently does business in the retail, hospital, multinational, large corporate, and hotel sectors.

CONSULTANCY

We consult on high-end technology implementation at clients' sites, on implementing new technology to meet clients' requirements, and on upgrading and enhancing clients' existing facilities by integrating new technology with them. Nucigent offers a full range of consulting services to help analyze your business requirements for effective implementation of solutions. Our consulting services cover:

Strategy planning

Assessment

Procurement

Re-engineering solutions

Planning, audits, best practices etc.

SOFTWARE DEVELOPMENT

With design and development expertise on diverse platforms, best-of-breed tools and techniques, and industry best practices, Nucigent offers scalable end-to-end application development and management solutions, from requirement analysis to deployment and roll-out. We develop Android applications and software for the hotel, medical, and retail sectors, as well as accounting software for trading houses, manufacturing houses, and conglomerates. We aim to deliver the software you need at a reasonable cost.

Application Development

Providing end-to-end development from requirement analysis to deployment and rollout.

Application Management

The application management layer cuts across all software engineering activities listed above. Nucigent takes complete ownership of the outsourced suite of applications as per the agreed scope and manages the support. This typically involves transition management, project management, proactive risk and scope change management, quality management, SLA management etc.

ANDROID APP DEVELOPMENT SERVICE

Our Android app development services deliver up-to-date, seamlessly integrated apps that are highly functional and combine irresistible features to suit your business.

Hybrid Android app Development

Hybrid Android apps are developed using standard web technologies such as HTML5, CSS and JavaScript, or with cross-platform frameworks such as React Native. With the web-based approach, the final code is wrapped in a native container and shipped as a regular app. The hybrid approach is often called "Write Once, Run Anywhere" because the same code can serve multiple platforms besides Android.

Consulting Service

Our experts, with years of experience, will mentor you and advise you on the nuances of Android app development.

Support & Maintenance

Apart from Android app development services, our team also provides 24×7 support and maintenance to our clients.

AREAS OF EXPERTISE

Nucigent's main strength lies in its blend of professionals and its specialized, highly focused operation. Raising customer awareness is where it excels over its competitors. Our strength lies in our ability to blend current management practice and IT expertise into cost-effective Computer Aided Management Solutions, Products and Services. Nucigent understands the need for skill transfer to client personnel. Our offerings cover the following major areas:

System analysis

Business process reengineering

Process development localization

Customized and target oriented Workflow design

Specialization in Client / Server and Internet / Intranet application and technologies

Institutions with the latest development.

Customized software development as ancillary product for deployed international software

Network Monitoring/ Network Management Support

Network, Security & Threat Management Solution

Infrastructure Management Solution.

Data Centre Operations and Service Delivery.

Systems Integration.

Project Management.

Change/request implementation

Project support

Standby support

Web based support & solution development

SYSTEM ANALYSIS & DESIGN

Our system analysis and design team combines functional and technical analysts. We believe that, to develop a proper system, functional analysis is as important as technical analysis. The team specializes in tools such as Microsoft Visio, Rational Rose and Microsoft Visual Modeler.

DATABASE MANAGEMENT

Our database expertise ranges from ISAM and flat-file database systems to relational systems such as Oracle and Microsoft SQL Server. For web-database connectivity and cross-platform data management, we also have specialized experience and expertise with the MySQL database server.

WEB DEVELOPMENT AND DESIGN

Our web developers and designers are skilled in Microsoft FrontPage, Macromedia Fireworks and Dreamweaver for website design.

PROGRAMMING

Our young and talented programmers have a wide range of experience in developing systems. Our programming skill involves:

PROGRAMMING LANGUAGES:

VB, Visual C++, Java, Visual Basic for Applications (VBA) and the Microsoft .NET platform.

WEB PROGRAMMING LANGUAGES:

PHP, Active Server Pages (ASP)

SCRIPTING LANGUAGES:

JavaScript, VBScript

MULTIMEDIA DEVELOPMENT:

Flash and ActionScript.

WEB SERVERS:

Microsoft IIS and Apache

OPERATING SYSTEMS:

Microsoft Platform and Linux/Unix

PRODUCT OFFERINGS

Retail Solutions

Stock & Accounting Management System (all retails, Jewelry, Petrol Pump, Pharmaceuticals etc.)

Medical Automation

Bidding Process

Purchase Order Management

B2B Order Management

HR Systems

Enterprise Market

Enterprise Resource Planning (ERP)

Order Management System

Mobility Solution

NUCIGENT’s Managed Services offerings cover the entire array of IT outsourcing services including networks, IT infrastructure, applications and business processes. This provides our customers both control and flexibility over their information systems without either the pain or cost.

Blog Source: https://nucigent.blogspot.com/2020/01/nucigent-provides-cost-effective-Software-Development-and-Implementation-of-Services.html

#Software development#Software development Company in Bhubaneswar#Software development company in Odisha#Web development services Bhubaneswar#app development platform in India#Hybrid android application development#app development software android#app development cost in India



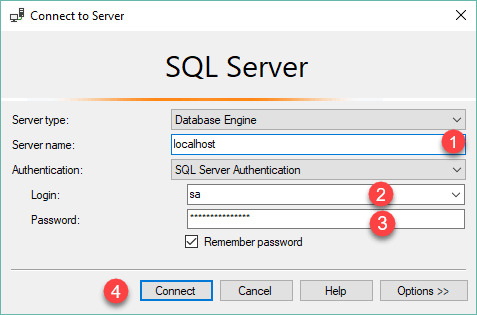

Top Sql Server Database Choices

SQL databases have become the most common databases used by every kind of business, whether for customer accounting or for products. SQL Server is one of the most common database servers. Currently, you can restore a database to another instance within the same subscription and region. If the target database doesn't exist, it will be created as part of the import operation. If you lose your database, all applications that use it will stop working. Basically, it's a relational database-as-a-service hosted in the Azure cloud.

Pay attention to how long your query takes to execute. Your default database may be missing. In terms of BI, it's actually not a database but an enterprise-level data warehouse built on the preceding databases. Make certain your Managed Instance and SQL Server don't have underlying issues that could cause performance problems. It will attempt to move 50 GB worth of pages, and only then will it try to truncate the end of the file. If not, it will not be able to release the connection. On the other hand, it enables developers to take advantage of row-based filtering.

If you need to return some data quickly, even if it isn't the whole result, use the FAST query hint (OPTION (FAST n)). Which data analysis tools to use depends on the needs and environment of the company. A partitioning strategy can also be implemented accordingly. Access is the most basic personal database. Data visualization gives visual access to huge amounts of information in easily digestible form. It is not hard to see the link between the much-hyped Big Data realm and the demand for Big Storage.

Note the name of the instance you're trying to connect to. Any staging EC2 instances should be in the same availability zone. Also, confirm that the instance is running by looking for the green arrow. Managed Instance lets you choose how many CPU cores to use and how much storage you want. Managed Instance also lets you easily re-create a dropped database from the automated backups. In addition, if you no longer need the instance, you can easily delete it without worrying about the underlying hardware. If you need a new fully managed SQL Server instance, you can simply visit the Azure portal or type a few commands on the command line, and you'll have an instance ready to run.

In my case, the tables very often have 240 columns! You may also consider adding indexes such as columnstore indexes, which can improve the performance of your workload, especially if you did not use them on older versions of SQL Server. Good data analysis drives much of a company's growth. In reality, besides data storage, it also includes data reporting and data analysis.

Microsoft lets enterprises choose from several editions of SQL Server based on their requirements and budget. The software is a comprehensive recovery solution with outstanding capabilities. Furthermore, you have to learn a statistical analysis tool. It's good to learn about the testing tools offered in Visual Studio 2005. After creating your database, if you want to run the application using SQL credentials, you will need to create a login. Make your selection based on the edition of the application you downloaded earlier. As a consequence, the whole application can't scale.

What Is So Fascinating About SQL Server Database?

The data is kept in a remote database in an OutSystems environment. While it can give you the data you need, some care, caution and restraint must be exercised. In addition, the filtered data is stored in a separate distribution database. If you need historical weather data, Fetch Climate is a significant resource.

Storage Engine

MySQL supports many storage engines. In .NET, XML is the simplest format to parse. The import should also complete within a couple of hours. Oracle Data Pump Export is an extremely powerful tool that lets you pick and choose the type of data you want. The manual process for fixing a corrupted MDF file isn't straightforward, and because of its limitations it often fails to repair the file. At this point you have a replica of your database running on your Mac, without the need for an entire Windows VM! For instance, you may want to make a blank version of the production database so that you can test migrations.

The SQL query language is vital. The best thing about software development is thinking up cool solutions to everyday problems, sharing them with the world, and implementing the improvements you receive from the public. Web design can be a magic wand for your internet business if it's done effectively. Any web scraping project starts with a need. Developers can choose from several RDBMSs according to the requirements of each project. .NET developers have been working with that particular database for a very long time. The first step is to use SQL Server Management Studio to generate scripts from an existing database.



Solana (SOL) has been in a steady decline for the past three months, but some traders think it may have bottomed at $26.80 on Oct. 21. Recently, there has been a great deal of speculation about the causes of the underperformance, and some analysts are pointing to competition from Aptos Network.

(Chart: Solana price at FTX, USD. Source: TradingView)

The Aptos blockchain launched on Oct. 17 and claims to handle three times more transactions per second than Solana. After four years of development and millions of dollars in funding, the debut of the layer-1 smart contract solution was rather unimpressive.

It is important to highlight that Solana currently holds an $11.5 billion market capitalization at the $32 nominal price level, ranking it as the seventh-largest cryptocurrency, excluding stablecoins. Despite its size, SOL's year-to-date performance shows a lackluster 82% drop, while the broader global market capitalization is down 56%.

Unfortunate events have negatively affected SOL's price

The drop accelerated on Oct. 11 after a leading decentralized finance application on the Solana network suffered a $116 million hack. Mango Markets' oracle was attacked because of the low liquidity of the platform's native Mango (MNGO) token, which is used as collateral. To put things in perspective, the hack represented 9% of Solana's total value locked (TVL) in smart contracts.

More negative news emerged on Nov. 2 as German data center operator and cloud provider Hetzner began blocking crypto-related activity. The company's terms of service prohibit customers from running nodes, mining and farming, plotting and storing blockchain data. Still, Solana nodes have other cloud storage providers to choose from, and Lido Finance confirmed that the risk to its validators had been mitigated.

A potentially promising partnership was announced on Nov. 2, when Instagram integrated support for Solana-based nonfungible tokens (NFTs), allowing users to create, sell and showcase their favorite digital art and collectibles. SOL immediately reacted with a 5.7% pump in 15 minutes but retraced the entire move over the next hour.

To get a more granular view of what is happening with SOL's price, traders can also examine Solana's futures markets to see whether the bearish newsflow has affected professional traders' sentiment.

Derivatives metrics show an unusual degree of lethargy

Growth in the number of derivatives contracts currently in play typically means more traders are involved. In futures markets, longs and shorts are balanced at all times, but a larger number of active contracts (open interest) allows the participation of institutional investors who require a minimum market size.

(Chart: Solana futures open interest, USD. Source: Coinglass)

In the past 30 days, total open interest on Solana has been fairly constant at $440 million. By comparison, Polygon (MATIC) aggregate futures open interest soared to $415 million from $153 million on Oct. 3. BNB Chain's token, BNB (BNB), showed a similar pattern, reaching $485 million, up from $296 million on Oct. 3.

That said, open interest does not necessarily mean that professional investors are bullish or bearish. The futures annualized premium measures the difference between longer-term futures contracts and current spot market levels. The futures premium (basis rate) indicator should run between 4% and 8% to compensate traders for "locking" the money until the contract expires. Therefore, levels below 2% are bearish, while numbers above 10% indicate excessive optimism.

(Chart: Solana annualized 3-month futures premium. Source: Laevitas.ch)

Data from Laevitas shows that Solana's futures have been trading in backwardation for the past 30 days, meaning the futures contract price is lower than on regular spot exchanges. Ether (ETH) futures are trading at a 0.5% annualized basis, while Bitcoin's (BTC) stands at 2%. The data is rather worrying for Solana, since it signals a lack of interest from leverage buyers.

Rumors about Alameda Research could create more pressure

It is difficult to pinpoint the reason for so much apathy toward Solana and even the overall dominance of leveraged short demand. Even more curious is Alameda Research's influence on Solana projects. Alameda is the digital asset trading firm led by Sam Bankman-Fried. Recently, trader and Crypto Twitter influencer Hsaka raised concerns about whether the firm has been suppressing SOL's price even after bullish catalysts emerged:

"Entire market catching a bid meanwhile Sol aimlessly meandering after 2 active bullish catalysts in such an environment. Alameda cleaned up. https://t.co/FuGQvMfRcF" (Hsaka, @HsakaTrades, November 4, 2022)

It is highly unlikely that market participants will ever find out Alameda Research's actual influence on SOL's price. Still, the theory raised by Hsaka could explain the rather unusual, persistent demand for leveraged shorts and the negative basis rate. The arbitrage and market-making firm could have used derivatives instruments to reduce its exposure without selling SOL on the open market. There are no signs that short sellers using SOL futures are nearing liquidation or exhaustion, so their edge remains until the broader cryptocurrency market shows signs of strengthening.

The views and opinions expressed here are solely those of the author and do not necessarily reflect the views of Cointelegraph.com. Every investment and trading move involves risk, so you should conduct your own research when making a decision.



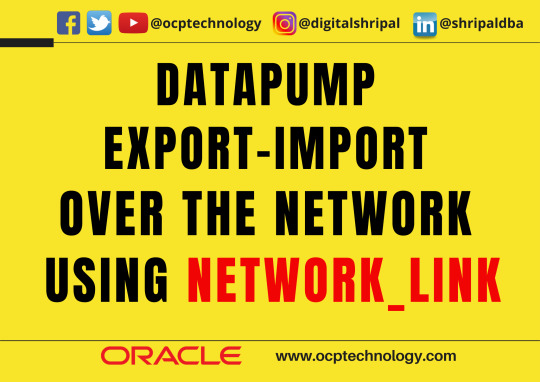

Data Pump Export Import Over the Network using network_link

Data Pump Export Import Over the Network using network_link #oracle #oracledatabase #oracledba

In this article, we are going to learn, step by step, how to use the NETWORK_LINK parameter in Oracle Data Pump export and import. Case: Sometimes we need to move a table or schema from one database to another but don't have sufficient disk space on the production server. As you know, a dump file takes a lot of disk space, so the NETWORK_LINK parameter helps us solve our…
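With NETWORK_LINK, an impdp run on the target reads the rows directly from the source over a database link, so no dump file is written anywhere. A directory object is still used for the log file. The minimal sketch below assumes that a database link with the hypothetical name SRC_LINK already exists in the target database and points at the source. The schema and connect names are also hypothetical. The code only assembles the command line.

```python
from typing import Dict, List, Optional


def build_network_import(userid: str, db_link: str, schemas: List[str],
                         directory: str, logfile: str,
                         remap: Optional[Dict[str, str]] = None,
                         parallel: int = 1) -> List[str]:
    """Assemble an impdp command that pulls schemas over a database link."""
    args = [
        "impdp", userid,
        "NETWORK_LINK=" + db_link,   # rows travel over the link; no DUMPFILE
        "SCHEMAS=" + ",".join(s.upper() for s in schemas),
        "DIRECTORY=" + directory,    # still needed for the log file
        "LOGFILE=" + logfile,
    ]
    for source, target in (remap or {}).items():
        # REMAP_SCHEMA=source:target creates the objects under a new owner.
        args.append(f"REMAP_SCHEMA={source.upper()}:{target.upper()}")
    if parallel > 1:
        args.append(f"PARALLEL={parallel}")
    return args


if __name__ == "__main__":
    cmd = build_network_import("system@TGTDB", "SRC_LINK", ["hr"],
                               "DATA_PUMP_DIR", "hr_net_imp.log",
                               remap={"hr": "hr_copy"})
    print(" ".join(cmd))
```

Leaving out DUMPFILE is the point of this technique. The only file written on the target server is the log.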


#how to import dump file in oracle 12c#how to import dump file in oracle 19c#impdp content#impdp network_link parallel example#impdp parameters#impdp remap_schema#impdp sqlfile#oracle data pump import



Navicat for sql server 12

NAVICAT FOR SQL SERVER 12 FOR MAC OS X

NAVICAT FOR SQL SERVER 12 FOR MAC OS

NAVICAT FOR SQL SERVER 12 FULL VERSION

NAVICAT FOR SQL SERVER 12 OFFLINE

Very useful for the database administrators who are required to connect to multiple databases simultaneously through PostgreSQL, Oracle, SQLite, MySQL and SQL Server.

Can edit the tables, run SQL scripts and also can create the diagrams.

Handy and reliable application which will allow you to quickly manage your databases.

We are here to provide a clean and fast download of Navicat Premium 12, and the download link can be resumed within 24 hours. Keep visiting themacgo, the world of DMGs. Navicat Premium 12.0.20 has a user-friendly interface that lets you select the connection you prefer and transfer data across various database systems instantly. You simply connect to the database you need and preview all the available tables, triggers, procedures and SQL scripts. With Navicat Premium 12.0.20 you can create, change and design database objects using the right tools. You can also add database triggers, partition functions, assemblies and linked servers. All in all, Navicat Premium 12.0.20 is a very handy application that lets you manage your databases and edit tables. The features listed above are among those you'll experience after downloading Navicat Premium 12.0.20 for free. You can also download HeidiSQL for free.

System requirements of Navicat Premium 12 for Mac OS X (check these before downloading):

RAM: 2 GB minimum

Disk space: 500 MB free

Processor: Intel Core 2 Duo or later (Core i3, Core i5)

Some interesting features of Navicat Premium 12.1.27:

Data Transfer, Data Synchronization and Structure Synchronization help you migrate your data more easily.

Use the Import Wizard to transfer data into a database from diverse formats, or from ODBC after setting up a data source connection.

Visual SQL/Query Builder helps you create, edit and run SQL statements and queries without having to worry about syntax.

Create, modify and manage all database objects using professional object designers.

A local backup/restore solution and intuitive GUI for MongoDump, Oracle Data Pump and SQL Server Backup Utility.

Discover and explore your MongoDB schema with the built-in schema visualization tool.

Navicat Premium 12 for Mac overview: Navicat Premium 12.1.27 is a database development tool that lets you connect simultaneously to MySQL, MariaDB, SQL Server, Oracle, PostgreSQL and SQLite databases from one application. It is compatible with cloud databases such as Amazon RDS, Amazon Aurora, Amazon Redshift, SQL Azure, Oracle Cloud and Google Cloud. You can quickly and easily build, manage and maintain your databases. Navicat Premium combines all Navicat versions into a single version and can connect to MySQL, Oracle and PostgreSQL. It lets users drag and drop tables and data from Oracle to MySQL, PostgreSQL to MySQL, Oracle to PostgreSQL and vice versa within one client. Batch jobs for various databases, such as printing reports in MySQL, backing up data in Oracle and synchronizing data in PostgreSQL, can also be scheduled to run automatically at a specific time. All in all, Navicat Premium helps database users save a lot of time managing multiple databases and performing cross-database data migration.

Navicat Premium 12.1.27 is available as a complete offline installer (DMG) for Mac OS.

Freeplane cd cover

FREEPLANE CD COVER MANUAL

FREEPLANE CD COVER PATCH

Unlike the DBUA or a manual upgrade, the Export/Import utilities physically copy data from your current database to a new database. You can use either the Oracle Data Pump Export and Import utilities (available as of Oracle Database 10g) or the original Export and Import utilities to perform a full or partial export from your database, followed by a full or partial import into a new Oracle Database 10g database. Export/Import can copy a subset of the data in a database, leaving the database unchanged. The current database's Export utility copies specified parts of the database into an export dump file. Then, the Import utility of the new Oracle Database 10g release loads the exported data into a new database. However, the new Oracle Database 10g database must already exist before the export dump file can be copied into it. When importing data from an earlier release, the Oracle Database 10g Import utility makes appropriate changes to data definitions as it reads earlier releases' export dump files. The following sections highlight aspects of Export/Import that may help you to decide whether to use Export/Import to upgrade your database.

Export/Import Effects on Upgraded Databases

The Export/Import upgrade method does not change the current database, which enables the database to remain available throughout the upgrade process. However, if a consistent snapshot of the database is required (for data integrity or other purposes), then the database must run in restricted mode or must otherwise be protected from changes during the export procedure. Because the current database can remain available, you can, for example, keep an existing production database running while the new Oracle Database 10g database is being built at the same time by Export/Import.

Upgrade to an intermediate Oracle Database release before you can upgrade to the new Oracle Database 10g release, as follows:

7.3.3 (or lower) -> 7.3.4 -> 8.1.7.4 -> 10.2

When upgrading to an intermediate Oracle Database release, follow the instructions in the intermediate release's documentation. Then, upgrade the intermediate release database to the new Oracle Database 10g release using the instructions in Chapter 3, "Upgrading to the New Oracle Database 10g Release".

Direct upgrade from 8.1.7.4, 9.0.1.4 or higher, 9.2.0.4 or higher, and 10.1.0.2 or higher to the newest Oracle Database 10g release is supported. However, you must first apply the minimum patch release for your current release (8.1.7.4, 9.0.1.4, 9.2.0.4 or 10.1.0.2). To upgrade to the new Oracle Database 10g release, follow the instructions in Chapter 3, "Upgrading to the New Oracle Database 10g Release".
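The upgrade paths above boil down to a small lookup. The Python sketch below is an illustration only, not an Oracle tool: it encodes just the routes named in this excerpt, assumes that a release below its listed minimum patch level (for example 9.0.1.2) is patched to that level first, and returns None for releases the excerpt does not cover, such as 8.0.x.

```python
"""Pick an upgrade route to Oracle Database 10g Release 2 (10.2).

Illustrative sketch: it encodes only the paths stated in the excerpt above.
Releases the excerpt does not cover (for example 8.0.x) return None.
"""
from typing import List, Optional, Tuple

# Releases that support a direct upgrade, mapped to their minimum patch level.
DIRECT_UPGRADE_MINIMUM = {
    (8, 1, 7): (8, 1, 7, 4),
    (9, 0, 1): (9, 0, 1, 4),
    (9, 2, 0): (9, 2, 0, 4),
    (10, 1, 0): (10, 1, 0, 2),
}


def parse(version: str) -> Tuple[int, ...]:
    """'9.2.0.6' -> (9, 2, 0, 6); shorter strings are padded with zeros."""
    parts = tuple(int(p) for p in version.split("."))
    return parts + (0,) * (4 - len(parts))


def fmt(version: Tuple[int, ...]) -> str:
    return ".".join(str(p) for p in version)


def upgrade_path(current: str) -> Optional[List[str]]:
    """Return the releases to pass through, starting at `current`, ending at 10.2."""
    v = parse(current)
    if v[0] == 7:
        if v[:3] < (7, 3, 4):              # 7.3.3 (or lower)
            return [current, "7.3.4", "8.1.7.4", "10.2"]
        if v[:3] == (7, 3, 4):
            return [current, "8.1.7.4", "10.2"]
        return None
    minimum = DIRECT_UPGRADE_MINIMUM.get(v[:3])
    if minimum is None:
        return None                        # not covered by this excerpt
    if v < minimum:                        # apply the minimum patch release first
        return [current, fmt(minimum), "10.2"]
    return [current, "10.2"]               # direct upgrade is supported
```

For example, upgrade_path("7.3.2") yields the chain 7.3.2 -> 7.3.4 -> 8.1.7.4 -> 10.2 described above, while upgrade_path("9.2.0.6") returns a direct upgrade.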

0 notes

Text

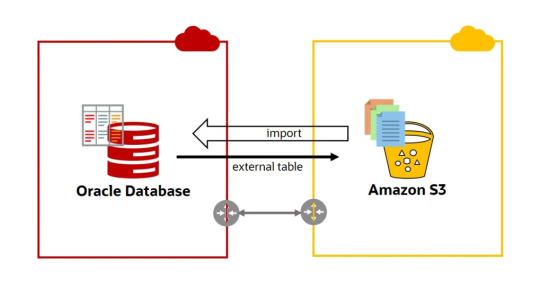

Migrating Databases from Oracle to Amazon S3

Organizations today prefer the cloud for the many benefits it offers, which is one reason why migrating databases from Oracle to S3 is an attractive proposition. Amazon Simple Storage Service (S3) offers cost-effective, unlimited storage and lies at the core of data lakes on AWS. For Oracle users, a popular source for data lakes is Amazon RDS (Relational Database Service) for Oracle.

Migrating from Oracle to S3 requires a few prerequisites. First, make sure that you have an Amazon Web Services (AWS) account. Second, verify that the Amazon RDS for Oracle database and the S3 bucket are in the same AWS Region as the AWS DMS (Database Migration Service) replication instance created for the migration.

Once these are checked, and before starting the actual Oracle to S3 migration, the systems have to be prepared as follows:

Prepare the source Amazon RDS for Oracle database for replication.

Create an AWS Identity and Access Management (IAM) role that grants access to the S3 bucket.

Create an AWS DMS replication instance and endpoints, as well as a full-load task.

Finally, create a task that handles change data capture (CDC).
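The preparation steps above can be sketched in Python. The helper below is hypothetical and only builds request dictionaries: it checks that the DMS replication instance and the two DMS endpoints share one Region, then prepares a full-load task and a separate CDC task that include every table of one schema. It can only compare the Regions embedded in DMS ARNs; an S3 bucket ARN carries no Region, so the bucket's Region still has to be checked separately. The IAM role and the preparation of the source database are not covered.

```python
"""Assemble AWS DMS replication-task requests for the preparation steps above.

Sketch only: every ARN, identifier and schema name is a hypothetical
placeholder. The dictionaries use the keyword arguments of the boto3 DMS
client's create_replication_task() call, but nothing here contacts AWS.
"""
import json
from typing import Dict, Tuple


def region_of(arn: str) -> str:
    """ARN layout is arn:partition:service:region:account-id:resource."""
    return arn.split(":")[3]


def table_mappings(schema: str) -> str:
    """A single selection rule that includes every table in one schema."""
    return json.dumps({
        "rules": [{
            "rule-type": "selection",
            "rule-id": "1",
            "rule-name": "include-schema",
            "object-locator": {"schema-name": schema, "table-name": "%"},
            "rule-action": "include",
        }]
    })


def build_tasks(instance_arn: str, source_arn: str, target_arn: str,
                schema: str) -> Tuple[Dict[str, str], Dict[str, str]]:
    """Return (full_load, cdc) requests after checking the ARNs share a Region."""
    regions = {region_of(arn) for arn in (instance_arn, source_arn, target_arn)}
    if len(regions) != 1:
        raise ValueError(f"resources span several Regions: {sorted(regions)}")
    common = {
        "SourceEndpointArn": source_arn,       # RDS for Oracle source endpoint
        "TargetEndpointArn": target_arn,       # S3 target endpoint
        "ReplicationInstanceArn": instance_arn,
        "TableMappings": table_mappings(schema),
    }
    full_load = {"ReplicationTaskIdentifier": "oracle-to-s3-full-load",
                 "MigrationType": "full-load", **common}
    cdc = {"ReplicationTaskIdentifier": "oracle-to-s3-cdc",
           "MigrationType": "cdc", **common}
    return full_load, cdc
```

With `dms = boto3.client("dms")`, each dictionary could be passed as `dms.create_replication_task(**full_load)`. A CDC-only task would normally also be given a starting point such as CdcStartTime or CdcStartPosition, which this sketch leaves out.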

Once these steps are complete, the actual Oracle to S3 migration can begin.

Database Migration from Oracle to S3

There are two ways that this database migration can be carried out.

The first method uses Oracle Data Pump and a database link. A link is established to the source Oracle instance, and Oracle Data Pump works together with the Oracle DBMS_FILE_TRANSFER package. The source can be an Amazon RDS for Oracle DB instance or an Oracle database on an Amazon EC2 instance. During the migration, the Oracle data is exported to a dump file with the DBMS_DATAPUMP package; once the export completes, the file is copied to the Amazon RDS for Oracle DB instance over the database link. The final stage is importing the data into the Amazon RDS for Oracle DB instance with the DBMS_DATAPUMP package.

The second method uses Oracle Data Pump and an Amazon S3 bucket. The Oracle data in the source database is exported with the Oracle DBMS_DATAPUMP package, and the dump file is uploaded to an Amazon S3 bucket. The file is then downloaded to the DATA_PUMP_DIR directory on the RDS for Oracle DB instance. In the final step, the dump file is imported into the Amazon RDS for Oracle DB instance with the DBMS_DATAPUMP package.
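To make the two methods more concrete, the Python sketch below only composes SQL text for a DBA to review and run; it does not connect to anything. It uses DBMS_FILE_TRANSFER.PUT_FILE for the first method, the RDS-supplied function rdsadmin.rdsadmin_s3_tasks.download_from_s3 for the second, and DBMS_DATAPUMP for the import step the two methods share. The export step and the upload of the dump file to S3 are not shown, DATA_PUMP_DIR is assumed on both sides, and names such as sample.dmp, to_rds, my-bucket and HR are hypothetical.

```python
"""Compose the SQL behind the two Data Pump import methods described above.

Sketch only: it builds SQL text and never connects to a database. The file,
bucket, prefix, link and schema names are hypothetical placeholders, and
DATA_PUMP_DIR is assumed to exist on both sides.
"""


def quote(value: str) -> str:
    """Render a Python string as an Oracle string literal ('' escapes ')."""
    return "'" + value.replace("'", "''") + "'"


def transfer_over_link(dump_file: str, db_link: str) -> str:
    """Method 1, run on the source: push the dump file to RDS over a DB link."""
    return (
        "BEGIN\n"
        "  DBMS_FILE_TRANSFER.PUT_FILE(\n"
        "    source_directory_object      => 'DATA_PUMP_DIR',\n"
        f"    source_file_name             => {quote(dump_file)},\n"
        "    destination_directory_object => 'DATA_PUMP_DIR',\n"
        f"    destination_file_name        => {quote(dump_file)},\n"
        f"    destination_database         => {quote(db_link)});\n"
        "END;\n/\n"
    )


def download_from_s3(bucket: str, prefix: str) -> str:
    """Method 2, run on RDS: copy dump files from S3 into DATA_PUMP_DIR."""
    return (
        "SELECT rdsadmin.rdsadmin_s3_tasks.download_from_s3(\n"
        f"         p_bucket_name    => {quote(bucket)},\n"
        f"         p_s3_prefix      => {quote(prefix)},\n"
        "         p_directory_name => 'DATA_PUMP_DIR') AS task_id\n"
        "  FROM DUAL;\n"
    )


def schema_import(dump_file: str, schema: str) -> str:
    """Final step of both methods, run on RDS: import one schema."""
    schema_filter = quote("IN (" + quote(schema) + ")")
    return (
        "DECLARE\n"
        "  h NUMBER;\n"
        "BEGIN\n"
        "  h := DBMS_DATAPUMP.OPEN(operation => 'IMPORT', job_mode => 'SCHEMA');\n"
        f"  DBMS_DATAPUMP.ADD_FILE(handle => h, filename => {quote(dump_file)},\n"
        "    directory => 'DATA_PUMP_DIR',\n"
        "    filetype => DBMS_DATAPUMP.KU$_FILE_TYPE_DUMP_FILE);\n"
        "  DBMS_DATAPUMP.METADATA_FILTER(handle => h, name => 'SCHEMA_EXPR',\n"
        f"    value => {schema_filter});\n"
        "  DBMS_DATAPUMP.START_JOB(h);\n"
        "END;\n/\n"
    )


if __name__ == "__main__":
    print(transfer_over_link("sample.dmp", "to_rds"))
    print(download_from_s3("my-bucket", "exports/"))
    print(schema_import("sample.dmp", "HR"))
```

Building SQL as text keeps the sketch runnable without a database driver; in real automation, a driver with bind variables would be safer than string concatenation.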

The goal of Oracle to S3 is to ensure that the Oracle database is optimized on Amazon S3. This can be done either by running the on-premises Oracle databases on Amazon EC2 compute instances with Elastic Block Store (EBS) storage, or by migrating the on-premises Oracle database to Amazon RDS.

Migrating Database Oracle to Amazon S3

Amazon Simple Storage Service (S3) provides cost-effective, unlimited storage and lies at the core of data lakes on Amazon Web Services (AWS). A popular source for data lakes is Amazon RDS (Relational Database Service) for Oracle.

To migrate databases from Oracle to S3, users need to meet a few prerequisites. First, they need an AWS account. Next, the Amazon RDS for Oracle database and the S3 bucket must be in the same Region as the AWS DMS (Database Migration Service) replication instance created for the migration.

Before the Oracle to S3 migration process is started, users have to go through the following steps.

· Prepare the source Amazon RDS for Oracle database for replication.

· Create an AWS Identity and Access Management (IAM) role that grants access to the S3 bucket.

· Create an AWS DMS replication instance and endpoints, as well as a full-load task.

· Create a task that handles CDC (Change Data Capture).

Before the Oracle to S3 migration, make sure that the source Amazon RDS for Oracle database and the target S3 bucket are in the same Region.

Migrating the database from Oracle to S3

There are two ways to migrate data from Oracle to S3 and the one chosen by an organization depends upon its specific needs.

· Importing data with Oracle Data Pump and a database link – Here, Oracle Data Pump and the Oracle DBMS_FILE_TRANSFER package are used to connect to a source Oracle instance, which can be an Amazon RDS for Oracle DB instance or an Oracle database on an Amazon EC2 instance. The Oracle data is exported to a dump file with the DBMS_DATAPUMP package. This file is then copied to the Amazon RDS for Oracle DB instance over the database link. Lastly, the data is imported into the RDS for Oracle DB instance with the DBMS_DATAPUMP package.

· Importing data with Oracle Data Pump and an Amazon S3 bucket – The Oracle source data is exported using the Oracle DBMS_DATAPUMP package, and the dump file is uploaded to an Amazon S3 bucket. The file is then downloaded to the DATA_PUMP_DIR directory on the RDS for Oracle DB instance. Finally, the data in the dump file is imported into the Amazon RDS for Oracle DB instance using the DBMS_DATAPUMP package.

The goal of Oracle to S3 is to make sure that Oracle databases can be optimized on the Amazon S3 platform. Here, too, users can choose either of two approaches, whichever suits them.

The first is to run the on-premises Oracle databases on Amazon EC2 compute instances with Elastic Block Store (EBS) storage. However, this approach can get complex, because the entire server and storage infrastructure has to be replaced with its AWS equivalent.

The second is to move the on-premises Oracle database to Amazon RDS, a popular managed database service that supports the most widely used database engines, including Oracle.

Check the datapump file header information in Oracle

Check the Data Pump dump file information before importing in Oracle:

CONNECT / as sysdba
CREATE DIRECTORY DUMPDIR AS '/u02/data/dump/expdp';
GRANT read, write ON DIRECTORY DUMPDIR TO system;
SET serveroutput on SIZE 1000000
exec show_dumpfile_info(p_dir=> 'DUMPDIR', p_file=> '<dumpfile name>')
SET serveroutput on SIZE 1000000
exec show_dumpfile_info(p_dir=> 'DUMPDIR', p_file=>…
