The Ultimate Roadmap to AIOps Platform Development: Tools, Frameworks, and Best Practices for 2025

In the ever-evolving world of IT operations, AIOps (Artificial Intelligence for IT Operations) has moved from buzzword to business-critical necessity. As companies face increasing complexity, hybrid cloud environments, and demand for real-time decision-making, AIOps platform development has become the cornerstone of modern enterprise IT strategy.

If you're planning to build, upgrade, or optimize an AIOps platform in 2025, this comprehensive guide will walk you through the tools, frameworks, and best practices you must know to succeed.

What Is an AIOps Platform?

An AIOps platform leverages artificial intelligence, machine learning (ML), and big data analytics to automate IT operations—from anomaly detection and event correlation to root cause analysis, predictive maintenance, and incident resolution. The goal? Proactively manage, optimize, and automate IT operations to minimize downtime, enhance performance, and improve the overall user experience.

Key Functions of AIOps Platforms:

Data Ingestion and Integration

Real-Time Monitoring and Analytics

Intelligent Event Correlation

Predictive Insights and Forecasting

Automated Remediation and Workflows

Root Cause Analysis (RCA)

Why AIOps Platform Development Is Critical in 2025

Here’s why 2025 is a tipping point for AIOps adoption:

Explosion of IT Data: Gartner predicts that IT operations data will grow 3x by 2025.

Hybrid and Multi-Cloud Dominance: Enterprises now manage assets across public clouds, private clouds, and on-premises.

Demand for Instant Resolution: User expectations for zero downtime and faster support have skyrocketed.

Skill Shortages: IT teams are overwhelmed, making automation non-negotiable.

Security and Compliance Pressures: Faster anomaly detection is crucial for risk management.

Step-by-Step Roadmap to AIOps Platform Development

1. Define Your Objectives

Problem areas to address: Slow incident response? Infrastructure monitoring? Resource optimization?

KPIs: MTTR (Mean Time to Resolution), uptime percentage, operational costs, user satisfaction rates.
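Here is a minimal sketch of how the first two KPIs can be computed from incident records. The `Incident` shape is hypothetical. MTTR is measured from detection to resolution, which is one common convention, and the uptime calculation assumes incidents never overlap.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    """Hypothetical incident record: when it was detected and when it was resolved."""
    detected: datetime
    resolved: datetime


def mttr(incidents: list[Incident]) -> timedelta:
    """Mean Time to Resolution: the average of (resolved - detected)."""
    if not incidents:
        return timedelta(0)
    total = sum((i.resolved - i.detected for i in incidents), timedelta(0))
    return total / len(incidents)


def uptime_percentage(period: timedelta, incidents: list[Incident]) -> float:
    """Percent of `period` not spent in an incident (assumes incidents do not overlap)."""
    downtime = sum((i.resolved - i.detected for i in incidents), timedelta(0))
    return 100.0 * (1 - downtime / period)


t0 = datetime(2025, 1, 1)
history = [
    Incident(t0, t0 + timedelta(minutes=30)),
    Incident(t0 + timedelta(days=3), t0 + timedelta(days=3, minutes=90)),
]
print(mttr(history))  # 1:00:00
print(round(uptime_percentage(timedelta(days=30), history), 3))  # 99.722
```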

2. Data Strategy: Collection, Integration, and Normalization

Sources: Application logs, server metrics, network traffic, cloud APIs, IoT sensors.

Data Pipeline: Use ETL (Extract, Transform, Load) tools to clean and unify data.

Real-Time Ingestion: Implement streaming technologies like Apache Kafka, AWS Kinesis, or Azure Event Hubs.
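Whichever ETL or streaming tool you pick, normalization comes down to mapping each source's fields onto one shared schema. Below is a minimal sketch of that step. The two source names (`app_log`, `metrics_agent`), their field names, and the severity scale are all hypothetical.

```python
from datetime import datetime, timezone
from typing import Any

# Hypothetical unified severity scale consumed by downstream models.
SEVERITY_MAP = {"debug": 0, "info": 1, "warn": 2, "warning": 2,
                "error": 3, "err": 3, "critical": 4, "crit": 4}


def normalize(raw: dict[str, Any], source: str) -> dict[str, Any]:
    """Map a raw record from a known source into one common event schema."""
    if source == "app_log":
        # Assumed shape: {"ts": ISO-8601 with offset, "level", "hostname", "msg"}
        ts = datetime.fromisoformat(raw["ts"]).astimezone(timezone.utc)
        level, host, message = raw["level"], raw["hostname"], raw["msg"]
    elif source == "metrics_agent":
        # Assumed shape: {"epoch": Unix seconds, "sev", "node", "text"}
        ts = datetime.fromtimestamp(raw["epoch"], tz=timezone.utc)
        level, host, message = raw["sev"], raw["node"], raw["text"]
    else:
        raise ValueError(f"unknown source: {source}")
    return {
        "timestamp": ts,                 # always UTC
        "source": source,
        "host": host.lower(),            # one spelling per host
        "severity": SEVERITY_MAP.get(level.lower(), 1),  # unknown levels default to "info"
        "message": message.strip(),
    }
```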

3. Select Core AIOps Tools and Frameworks

We'll explore these in detail below.

4. Build Modular, Scalable Architecture

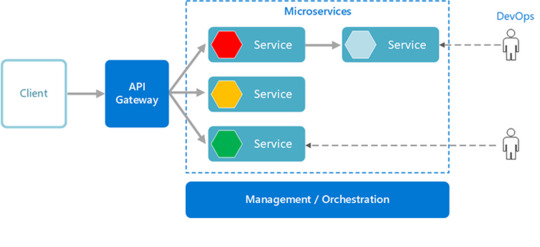

Microservices-based design lets teams update components and roll out features independently.

API-First development ensures seamless integration with other enterprise systems.

5. Integrate AI/ML Models

Anomaly Detection: Isolation Forest, LSTM models, autoencoders.

Predictive Analytics: Time-series forecasting, regression models.

Root Cause Analysis: Causal inference models, graph neural networks.
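Libraries such as scikit-learn ship ready-made detectors like `IsolationForest`. The sketch below avoids dependencies and uses a much simpler technique instead: a rolling z-score, which flags points far from the mean of a trailing window. It is a baseline for comparison, not an Isolation Forest, and the window and threshold values are assumptions.

```python
import statistics
from collections import deque


def rolling_zscore_anomalies(values, window=20, threshold=3.0):
    """Return indices of points more than `threshold` population standard
    deviations away from the mean of the preceding `window` points."""
    history = deque(maxlen=window)
    anomalies = []
    for i, x in enumerate(values):
        if len(history) == window:  # only score once the baseline is full
            mean = statistics.fmean(history)
            stdev = statistics.pstdev(history)
            if stdev == 0:
                is_anomaly = x != mean  # flat baseline: any change is unusual
            else:
                is_anomaly = abs(x - mean) / stdev > threshold
            if is_anomaly:
                anomalies.append(i)
        history.append(x)  # anomalies also enter the baseline in this simple version
    return anomalies


latency_ms = [10.0, 11.0] * 10 + [50.0] + [10.0, 11.0] * 5
print(rolling_zscore_anomalies(latency_ms))  # [20]
```

Flagged points still enter the window, so a single spike briefly widens the baseline. A common variation is to exclude anomalies from `history`.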

6. Implement Intelligent Automation

Use RPA (Robotic Process Automation) combined with AI to enable self-healing systems.

Playbooks and Runbooks: Define automated scripts for known issues.
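A playbook can start out as a simple lookup from alert type to remediation action. In the hypothetical sketch below, the alert types, actions, and field names are made up for illustration. Safe fixes run automatically, risky ones wait for human approval, and anything without a runbook is escalated.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Runbook:
    """Hypothetical runbook entry: one remediation action for a known alert type."""
    action: Callable[[dict], str]
    requires_approval: bool  # keep a human in the loop for risky actions


def restart_service(alert: dict) -> str:
    # Placeholder: a real runbook might call an orchestration tool such as Ansible here.
    return f"restarted {alert['service']}"


def clear_tmp(alert: dict) -> str:
    # Placeholder for a disk cleanup step.
    return f"cleared /tmp on {alert['host']}"


RUNBOOKS = {
    "service_unresponsive": Runbook(restart_service, requires_approval=False),
    "disk_full": Runbook(clear_tmp, requires_approval=True),
}


def handle_alert(alert: dict, approved: bool = False) -> str:
    """Run the matching runbook, hold risky actions for approval, escalate unknown alerts."""
    runbook = RUNBOOKS.get(alert["type"])
    if runbook is None:
        return "escalated: no runbook"
    if runbook.requires_approval and not approved:
        return "pending approval"
    return runbook.action(alert)
```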

7. Deploy Monitoring and Feedback Mechanisms

Track performance using dashboards.

Continuously retrain models to adapt to new patterns.

Top Tools and Technologies for AIOps Platform Development (2025)

Data Ingestion and Processing

Apache Kafka

Fluentd

Elastic Stack (ELK/EFK)

Snowflake (for big data warehousing)

Monitoring and Observability

Prometheus + Grafana

Datadog

Dynatrace

Splunk ITSI

Machine Learning and AI Frameworks

TensorFlow

PyTorch

scikit-learn

H2O.ai (automated ML)

Event Management and Correlation

Moogsoft

BigPanda

ServiceNow ITOM

Automation and Orchestration

Ansible

Puppet

Chef

SaltStack

Cloud and Infrastructure Platforms

AWS CloudWatch and DevOps Tools

Google Cloud Operations Suite (formerly Stackdriver)

Azure Monitor and Azure DevOps

Best Practices for AIOps Platform Development

1. Start Small, Then Scale

Begin with a few critical systems before scaling to full-stack observability.

2. Embrace a Unified Data Strategy

Ensure that your AIOps platform ingests structured and unstructured data across all environments.

3. Prioritize Explainability

Build AI models that offer clear reasoning for decisions, not black-box results.

4. Incorporate Feedback Loops

AIOps platforms must learn continuously. Implement mechanisms for humans to approve, reject, or improve suggestions.

5. Ensure Robust Security and Compliance

Encrypt data in transit and at rest.

Implement access controls and audit trails.

Stay compliant with standards like GDPR, HIPAA, and CCPA.

6. Choose Cloud-Native and Open-Source Where Possible

Future-proof your system by building on open standards and avoiding vendor lock-in.

Key Trends Shaping AIOps in 2025

Edge AIOps: Extending monitoring and analytics to edge devices and remote locations.

AI-Enhanced DevSecOps: Tight integration between AIOps and security operations (SecOps).

Hyperautomation: Combining AIOps with enterprise-wide RPA and low-code platforms.

Composable IT: Building modular AIOps capabilities that can be assembled dynamically.

Federated Learning: Training models across multiple environments without moving sensitive data.

Challenges to Watch Out For

Data Silos: Incomplete data pipelines can cripple AIOps effectiveness.

Over-Automation: Relying too much on automation without human validation can lead to errors.

Skill Gaps: Building an AIOps platform requires expertise in AI, data engineering, IT operations, and cloud architectures.

Invest in cross-functional teams and continuous training to overcome these hurdles.

Conclusion: Building the Future with AIOps

In 2025, the enterprises that invest in robust AIOps platform development will not just survive—they will thrive. By integrating the right tools, frameworks, and best practices, businesses can unlock proactive incident management, faster innovation cycles, and superior user experiences.

AIOps isn’t just about reducing tickets—it’s about creating a resilient, self-optimizing IT ecosystem that powers future growth.

Delve into the second edition to build serverless proficiency and explore new chapters on security techniques, multi-regional deployment, and observability optimization.

Key Features

Gain insights from a seasoned CTO on best practices for designing enterprise-grade software systems

Deepen your understanding of system reliability, maintainability, observability, and scalability with real-world examples

Elevate your skills with software design patterns and architectural concepts, including security in depth and running in multiple regions

Book Description

Organizations undergoing digital transformation rely on IT professionals to design systems that keep up with the rate of change while maintaining stability. With this edition, enriched with more real-world examples, you'll be well equipped to architect the future for unparalleled innovation.

This book guides you through the architectural patterns that power enterprise-grade software systems while exploring key architectural elements (such as event-driven microservices and micro frontends) and showing how to implement anti-fragile systems.

First, you'll divide up a system and define boundaries so that your teams can work autonomously and accelerate innovation. You'll cover the low-level event and data patterns that support the entire architecture while getting up and running with the different autonomous service design patterns.

This edition adds several new topics on security, observability, and multi-regional deployment. It focuses on best practices for security, reliability, testability, observability, and performance. You'll explore the methodologies of continuous experimentation, deployment, and delivery before delving into some final thoughts on how to start making progress.

By the end of this book, you'll be able to architect your own event-driven, serverless systems that are ready to adapt and change.

What you will learn

Explore architectural patterns to create anti-fragile systems

Focus on DevSecOps practices that empower self-sufficient, full-stack teams

Apply microservices principles to the frontend

Discover how SOLID principles apply to software and database architecture

Gain practical skills in deploying, securing, and optimizing serverless architectures

Deploy a multi-regional system and explore the strangler pattern for migrating legacy systems

Master techniques for collecting and utilizing metrics, including RUM, synthetics, and anomaly detection

Who this book is for

This book is for software architects who want to learn more about different software design patterns and best practices. This isn't a beginner's manual: you'll need an intermediate level of programming proficiency and software design experience to get started. You'll get the most out of this book if you already know the basics of the cloud, but that isn't a prerequisite.

Table of Contents

Architecting for Innovations

Defining Boundaries and Letting Go

Taming the Presentation Tier

Trusting Facts and Eventual Consistency

Turning the Cloud into the Database

A Best Friend for the Frontend

Bridging Intersystem Gaps

Reacting to Events with More Events

Running in Multiple Regions

Securing Autonomous Subsystems in Depth

Choreographing Deployment and Delivery

Optimizing Observability

Don't Delay, Start Experimenting

Publisher: Packt Publishing; 2nd edition (27 February 2024)

Language: English

Paperback: 488 pages

ISBN-10: 1803235446

ISBN-13: 978-1803235448

Item Weight: 840 g

Dimensions: 2.79 x 19.05 x 23.5 cm

Country of Origin: India

🚀 Integrating ROSA Applications with AWS Services (CS221)

As cloud-native applications evolve, seamless integration between orchestration platforms like Red Hat OpenShift Service on AWS (ROSA) and core AWS services is becoming a vital architectural requirement. Whether you're running microservices, data pipelines, or containerized legacy apps, combining ROSA’s Kubernetes capabilities with AWS’s ecosystem opens the door to powerful synergies.

In this blog, we’ll explore key strategies, patterns, and tools for integrating ROSA applications with essential AWS services — as taught in the CS221 course.

🧩 Why Integrate ROSA with AWS Services?

ROSA provides a fully managed OpenShift experience, but its true potential is unlocked when integrated with AWS-native tools. Benefits include:

Enhanced scalability using Amazon S3, RDS, and DynamoDB

Improved security and identity management through IAM and Secrets Manager

Streamlined monitoring and observability with CloudWatch and X-Ray

Event-driven architectures via EventBridge and SNS/SQS

Cost optimization by offloading non-containerized workloads

🔌 Common Integration Patterns

Here are some popular integration patterns used in ROSA deployments:

1. Storage Integration:

Amazon S3 for storing static content, logs, and artifacts.

Access S3 from application code with the AWS SDK, or mount S3 buckets into ROSA pods using a CSI driver.

2. Database Services:

Connect applications to Amazon RDS or Amazon DynamoDB for persistent storage.

Store DB credentials securely in AWS Secrets Manager and inject them into pods as Kubernetes secrets.

3. IAM Roles for Service Accounts (IRSA):

Securely grant AWS permissions to OpenShift workloads.

Set up IRSA so pods can assume IAM roles without storing credentials in the container.
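IRSA depends on an IAM role whose trust policy lets the cluster's OIDC provider exchange a service account token for temporary credentials through `sts:AssumeRoleWithWebIdentity`. The minimal sketch below builds such a policy document. The account ID, OIDC provider path, namespace, and service account name are placeholders. Check the exact conditions your cluster needs, such as an audience check, against the ROSA documentation.

```python
import json


def irsa_trust_policy(account_id: str, oidc_provider: str,
                      namespace: str, service_account: str) -> dict:
    """Build an IAM trust policy letting one Kubernetes service account assume a role.

    `oidc_provider` is the issuer host/path without the https:// prefix (placeholder here).
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {
                    "Federated": f"arn:aws:iam::{account_id}:oidc-provider/{oidc_provider}"
                },
                "Action": "sts:AssumeRoleWithWebIdentity",
                "Condition": {
                    # Only this namespace/service account may assume the role (least privilege).
                    "StringEquals": {
                        f"{oidc_provider}:sub": f"system:serviceaccount:{namespace}:{service_account}"
                    }
                },
            }
        ],
    }


print(json.dumps(irsa_trust_policy("123456789012", "oidc.example.com/abc123",
                                    "payments", "s3-writer"), indent=2))
```

Once the role exists with this trust policy and a narrowly scoped permissions policy, the pod's service account is annotated with the role ARN, as described in the ROSA documentation. Pods using that service account then receive short-lived credentials instead of stored keys.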

4. Messaging and Eventing:

Integrate with Amazon SNS/SQS for asynchronous messaging.

Use EventBridge to trigger workflows from container events (e.g., pod scaling, job completion).

5. Monitoring & Logging:

Forward logs to CloudWatch Logs using Fluent Bit/Fluentd.

Collect metrics with the Prometheus Operator and forward them to Amazon CloudWatch, where CloudWatch Alarms can alert on them.

6. API Gateway & Load Balancers:

Expose ROSA services using AWS Application Load Balancer (ALB).

Enhance APIs with Amazon API Gateway for throttling, authentication, and rate limiting.

Real-World Use Case

Scenario: A financial app running on ROSA needs to store transaction logs in Amazon S3 and trigger fraud detection workflows via Lambda.

Solution:

Application pushes logs to S3 using the AWS SDK.

S3 triggers an EventBridge rule that invokes a Lambda function.

The function performs real-time analysis and writes alerts to an SNS topic.

This serverless integration offloads processing from ROSA while maintaining tight security and performance.
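Here is a minimal sketch of the Lambda side of this flow. It relies on the event shape EventBridge uses for S3 "Object Created" notifications (`source`, `detail-type`, `detail.bucket.name`, `detail.object.key`). The JSON-lines transaction format and the amount-threshold "fraud rule" are hypothetical placeholders. Fetching the object and publishing the alert are passed in as functions so the logic can be tested locally. In a deployed function you would wire them to boto3's `s3.get_object` and `sns.publish`.

```python
import json
from typing import Callable

# Hypothetical placeholder rule: flag any single transaction above this amount.
AMOUNT_THRESHOLD = 10_000


def process_event(event: dict,
                  fetch_object: Callable[[str, str], str],
                  publish: Callable[[str], None]) -> int:
    """Handle an EventBridge "Object Created" event for an S3 transaction log.

    fetch_object(bucket, key) returns the object body as text (assumed JSON lines);
    publish(message) sends one alert, e.g. to an SNS topic. Returns the alert count.
    """
    if event.get("source") != "aws.s3" or event.get("detail-type") != "Object Created":
        return 0  # ignore unrelated events
    bucket = event["detail"]["bucket"]["name"]
    key = event["detail"]["object"]["key"]
    alerts = 0
    for line in fetch_object(bucket, key).splitlines():
        if not line.strip():
            continue
        txn = json.loads(line)
        if txn["amount"] > AMOUNT_THRESHOLD:
            publish(json.dumps({"bucket": bucket, "key": key, "transaction_id": txn["id"]}))
            alerts += 1
    return alerts
```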

✅ Best Practices

Use IRSA for least-privilege access to AWS services.

Automate integration testing with CI/CD pipelines.

Monitor both ROSA and AWS services using unified dashboards.

Encrypt data in transit and at rest using AWS KMS + OpenShift secrets.

🧠 Conclusion

ROSA + AWS is a powerful combination that enables enterprises to run secure, scalable, and cloud-native applications. With the insights from CS221, you’ll be equipped to design robust architectures that capitalize on the strengths of both platforms. Whether it’s storage, compute, messaging, or monitoring — AWS integrations will supercharge your ROSA applications.

For more details visit - https://training.hawkstack.com/integrating-rosa-applications-with-aws-services-cs221/

Essential Components of a Production Microservice Application

DevOps Automation Tools and modern practices have revolutionized how applications are designed, developed, and deployed. Microservice architecture is a preferred approach for enterprises, IT sectors, and manufacturing industries aiming to create scalable, maintainable, and resilient applications. This blog will explore the essential components of a production microservice application, ensuring it meets enterprise-grade standards.

1. API Gateway

An API Gateway acts as a single entry point for client requests. It handles routing, composition, and protocol translation, ensuring seamless communication between clients and microservices. Key features include:

Authentication and Authorization: Protect sensitive data by implementing OAuth2, OpenID Connect, or other security protocols.

Rate Limiting: Prevent overloading by throttling excessive requests.

Caching: Reduce response time by storing frequently accessed data.

Monitoring: Provide insights into traffic patterns and potential issues.

API Gateways like Kong, AWS API Gateway, or NGINX are widely used.

Mobile App Development Agency professionals often integrate API Gateways when developing scalable mobile solutions.
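Gateways such as Kong and AWS API Gateway provide rate limiting as configuration, so you rarely write it yourself. To show what throttling actually does, here is a sketch of the token-bucket algorithm, which is one common approach. The rate and capacity values are illustrative.

```python
import time


class TokenBucket:
    """Token-bucket limiter: refills `rate` tokens per second up to `capacity`;
    each request spends one token."""

    def __init__(self, rate: float, capacity: float, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = capacity  # start full so short bursts are allowed
        self.clock = clock
        self.last = clock()

    def allow(self) -> bool:
        """Return True if the request may proceed; otherwise a gateway would reject it (e.g. HTTP 429)."""
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

With `TokenBucket(rate=5, capacity=10)`, a client can burst 10 requests and then sustain about 5 per second. A real gateway keeps one bucket per client or API key.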

2. Service Registry and Discovery

Microservices need to discover each other dynamically, as their instances may scale up or down or move across servers. A service registry, like Consul, Eureka, or etcd, maintains a directory of all services and their locations. Benefits include:

Dynamic Service Discovery: Automatically update the service location.

Load Balancing: Distribute requests efficiently.

Resilience: Ensure high availability by managing service health checks.
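Consul, Eureka, and etcd each have their own APIs, and the toy registry below does not mimic any of them. It is a minimal, single-process sketch of the ideas listed above. Instances register by heartbeat, entries that miss the TTL drop out as a simple health check, and lookups rotate across the healthy instances.

```python
import itertools
import time


class ServiceRegistry:
    """In-memory registry: instances register via heartbeats and expire after `ttl` seconds."""

    def __init__(self, ttl: float = 30.0, clock=time.monotonic):
        self.ttl = ttl
        self.clock = clock
        self.instances: dict[str, dict[str, float]] = {}  # service -> {address: last heartbeat}
        self._counters: dict[str, itertools.count] = {}

    def heartbeat(self, service: str, address: str) -> None:
        """Register an instance or refresh its lease."""
        self.instances.setdefault(service, {})[address] = self.clock()

    def healthy(self, service: str) -> list[str]:
        """Addresses whose last heartbeat is within the TTL."""
        now = self.clock()
        return sorted(addr for addr, seen in self.instances.get(service, {}).items()
                      if now - seen <= self.ttl)

    def resolve(self, service: str) -> str:
        """Pick a healthy instance round-robin (simple client-side load balancing)."""
        healthy = self.healthy(service)
        if not healthy:
            raise LookupError(f"no healthy instances of {service}")
        n = next(self._counters.setdefault(service, itertools.count()))
        return healthy[n % len(healthy)]
```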

3. Configuration Management

Centralized configuration management is vital for managing environment-specific settings, such as database credentials or API keys. Tools like Spring Cloud Config, Consul, or AWS Systems Manager Parameter Store provide features like:

Version Control: Track configuration changes.

Secure Storage: Encrypt sensitive data.

Dynamic Refresh: Update configurations without redeploying services.

4. Service Mesh

A service mesh abstracts the complexity of inter-service communication, providing advanced traffic management and security features. Popular service mesh solutions like Istio, Linkerd, or Kuma offer:

Traffic Management: Control traffic flow with features like retries, timeouts, and load balancing.

Observability: Monitor microservice interactions using distributed tracing and metrics.

Security: Encrypt communication using mTLS (Mutual TLS).

5. Containerization and Orchestration

Microservices are typically deployed in containers, which provide consistency and portability across environments. Container orchestration platforms like Kubernetes or Docker Swarm are essential for managing containerized applications. Key benefits include:

Scalability: Automatically scale services based on demand.

Self-Healing: Restart failed containers to maintain availability.

Resource Optimization: Efficiently utilize computing resources.

6. Monitoring and Observability

Ensuring the health of a production microservice application requires robust monitoring and observability. Enterprises use tools like Prometheus, Grafana, or Datadog to:

Track Metrics: Monitor CPU, memory, and other performance metrics.

Set Alerts: Notify teams of anomalies or failures.

Analyze Logs: Centralize logs for troubleshooting using ELK Stack (Elasticsearch, Logstash, Kibana) or Fluentd.

Distributed Tracing: Trace request flows across services using Jaeger or Zipkin.

Hire Android App Developers to ensure seamless integration of monitoring tools for mobile-specific services.

7. Security and Compliance

Securing a production microservice application is paramount. Enterprises should implement a multi-layered security approach, including:

Authentication and Authorization: Use protocols like OAuth2 and JWT for secure access.

Data Encryption: Encrypt data in transit (using TLS) and at rest.

Compliance Standards: Adhere to industry standards such as GDPR, HIPAA, or PCI-DSS.

Runtime Security: Employ tools like Falco or Aqua Security to detect runtime threats.

8. Continuous Integration and Continuous Deployment (CI/CD)

A robust CI/CD pipeline ensures rapid and reliable deployment of microservices. Using tools like Jenkins, GitLab CI/CD, or CircleCI enables:

Automated Testing: Run unit, integration, and end-to-end tests to catch bugs early.

Blue-Green Deployments: Minimize downtime by running the new version alongside the old one and switching traffic over once the new version is verified.

Canary Releases: Test new features on a small subset of users before full rollout.

Rollback Mechanisms: Quickly revert to a previous version in case of issues.
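One common way to implement a canary release is to hash a stable user identifier into a bucket from 0 to 99. Users whose bucket falls below the rollout percentage go to the new version. Here is a minimal sketch, assuming a string user ID. In practice a service mesh or gateway usually performs this weighted routing.

```python
import hashlib


def routes_to_canary(user_id: str, canary_percent: int) -> bool:
    """Deterministically send a user to the canary if their hash bucket is below the rollout percentage."""
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in 0..99
    return bucket < canary_percent
```

The bucket is derived from the user ID, so each user sees a consistent version. Raising `canary_percent` only adds users to the canary, and setting it to 0 acts as an instant rollback.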

9. Database Management

Microservices often follow a database-per-service model to ensure loose coupling. Choosing the right database solution is critical. Considerations include:

Relational Databases: Use PostgreSQL or MySQL for structured data.

NoSQL Databases: Opt for MongoDB or Cassandra for unstructured data.

Event Sourcing: Leverage Kafka or RabbitMQ for managing event-driven architectures.

10. Resilience and Fault Tolerance

A production microservice application must handle failures gracefully to ensure seamless user experiences. Techniques include:

Circuit Breakers: Prevent cascading failures using tools like Hystrix or Resilience4j.

Retries and Timeouts: Ensure graceful recovery from temporary issues.

Bulkheads: Isolate failures to prevent them from impacting the entire system.
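Hystrix and Resilience4j are Java libraries. The Python sketch below shows the circuit-breaker state machine they implement rather than either library's API. The failure count and timeout are illustrative, and the class is not thread-safe.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is short-circuited because the breaker is open."""


class CircuitBreaker:
    """Opens after `max_failures` consecutive failures; once `reset_timeout`
    seconds pass, lets a trial call through (half-open)."""

    def __init__(self, max_failures: int = 3, reset_timeout: float = 30.0, clock=time.monotonic):
        self.max_failures = max_failures
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None and self.clock() - self.opened_at < self.reset_timeout:
            raise CircuitOpenError("circuit open; failing fast")
        # Closed, or half-open after the timeout: attempt the call.
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.max_failures:
                self.opened_at = self.clock()  # open (or re-open after a failed trial)
            raise
        self.failures = 0
        self.opened_at = None  # success closes the circuit
        return result
```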

11. Event-Driven Architecture

Event-driven architecture improves responsiveness and scalability. Key components include:

Message Brokers: Use RabbitMQ, Kafka, or AWS SQS for asynchronous communication.

Event Streaming: Employ tools like Kafka Streams for real-time data processing.

Event Sourcing: Maintain a complete record of changes for auditing and debugging.
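Event sourcing stores the changes themselves and derives current state by replaying them. Here is a minimal sketch using a hypothetical account-balance aggregate. Replaying only a prefix of the log reconstructs past state, which is what makes the record useful for auditing and debugging.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Event:
    """Hypothetical domain event for an account-balance aggregate."""
    kind: str    # "deposited" or "withdrawn"
    amount: int


def apply(balance: int, event: Event) -> int:
    """Pure state transition: current state + one event -> next state."""
    if event.kind == "deposited":
        return balance + event.amount
    if event.kind == "withdrawn":
        return balance - event.amount
    raise ValueError(f"unknown event kind: {event.kind}")


def replay(events: list[Event], upto: Optional[int] = None) -> int:
    """Rebuild state by folding over the append-only log; `upto` limits it to the first N events."""
    balance = 0
    for event in events[:upto]:
        balance = apply(balance, event)
    return balance
```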

12. Testing and Quality Assurance

Testing in microservices is complex due to the distributed nature of the architecture. A comprehensive testing strategy should include:

Unit Tests: Verify individual service functionality.

Integration Tests: Validate inter-service communication.

Contract Testing: Ensure compatibility between service APIs.

Chaos Engineering: Test system resilience by simulating failures using tools like Gremlin or Chaos Monkey.

13. Cost Management

Optimizing costs in a microservice environment is crucial for enterprises. Considerations include:

Autoscaling: Scale services based on demand to avoid overprovisioning.

Resource Monitoring: Use tools like AWS Cost Explorer or Kubernetes Cost Management.

Right-Sizing: Adjust resources to match service needs.

Conclusion

Building a production-ready microservice application involves integrating numerous components, each playing a critical role in ensuring scalability, reliability, and maintainability. By adopting best practices and leveraging the right tools, enterprises, IT sectors, and manufacturing industries can achieve operational excellence and deliver high-quality services to their customers.

Understanding and implementing these essential components, such as DevOps Automation Tools and robust testing practices, will enable organizations to fully harness the potential of microservice architecture. Whether you are part of a Mobile App Development Agency or looking to Hire Android App Developers, staying ahead in today’s competitive digital landscape is essential.

Monitoring Systems and Services with Prometheus

In today’s IT landscape, monitoring systems and services is not just important—it’s critical. With the rise of microservices, cloud-native architectures, and distributed systems, ensuring application uptime and performance has become more complex. Enter Prometheus, an open-source monitoring and alerting toolkit designed for modern systems.

What is Prometheus?

Prometheus is a powerful, feature-rich, and highly scalable monitoring system. It excels at collecting metrics, providing a flexible query language, and integrating seamlessly with a wide variety of systems. Developed originally by SoundCloud, it is now a Cloud Native Computing Foundation (CNCF) project.

Key Features of Prometheus

Multi-dimensional data model: Metrics are stored with key-value pairs (labels), allowing granular and flexible querying.

Pull-based scraping: Prometheus pulls metrics from defined endpoints, ensuring better control over the data.

Powerful query language (PromQL): Prometheus Query Language enables robust metric analysis.

Time-series database: It stores all data as timestamped time series, making historical analysis and trend monitoring straightforward.

Alerting capabilities: Prometheus integrates with Alertmanager to provide notifications for defined thresholds or anomalies.

How Prometheus Works

Scraping Metrics: Prometheus scrapes metrics from targets (applications, services, or systems) by accessing exposed HTTP endpoints, typically /metrics.

Data Storage: The collected metrics are stored in Prometheus’s time-series database.

Querying with PromQL: Users can run queries to analyze trends, generate graphs, or inspect metrics for anomalies.

Alerting: Based on queries, Prometheus can trigger alerts via the Alertmanager, which supports integrations with tools like Slack, PagerDuty, and email.

Use Cases of Prometheus

Infrastructure Monitoring: Track CPU, memory, disk usage, and network performance of your servers and VMs.

Application Monitoring: Monitor application health, API latencies, error rates, and user request patterns.

Kubernetes Monitoring: Gain insights into Kubernetes clusters, including pod status, resource utilization, and deployments.

Business Metrics: Measure success indicators such as transactions per second, user growth, or conversion rates.

Getting Started with Prometheus

Install Prometheus: Download and install Prometheus from its official site.

Set up Scrape Targets: Define the endpoints of the services you want to monitor in the prometheus.yml configuration file.

Run Prometheus: Start the server, and it will begin collecting metrics.

Visualize Metrics: Use Prometheus’s web UI, Grafana (a popular visualization tool), or command-line queries for visualization and analysis.

Integrating Prometheus with Grafana

While Prometheus provides a basic web interface, its true potential shines when paired with Grafana for rich and interactive dashboards. Grafana supports PromQL natively and allows you to create stunning visualizations of your metrics.

Benefits of Using Prometheus

Open Source: Freely available, with a vast and active community.

Scalable: Works efficiently for both small setups and enterprise-level infrastructures.

Extensible: Compatible with many exporters, enabling monitoring of databases, message queues, and more.

Alerts and Insights: Real-time monitoring and customizable alerts ensure minimal downtime.

Challenges and How to Overcome Them

High Cardinality: Too many unique label combinations can lead to resource overuse. Optimize your labels to avoid this.

Scaling: Use Prometheus federation or remote storage solutions to handle extremely large environments.

Learning Curve: PromQL and setup can be complex initially, but online documentation and the Prometheus community offer ample resources.
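The cardinality problem is multiplicative: a metric can produce one time series per combination of label values. Here is a quick worked sketch with illustrative label counts.

```python
from math import prod


def series_count(label_cardinality: dict[str, int]) -> int:
    """Upper bound on time series for one metric: the product of the number of
    distinct values each label can take (no labels means a single series)."""
    return prod(label_cardinality.values())


base = {"method": 5, "status_code": 10, "endpoint": 20}
print(series_count(base))                          # 1000
print(series_count({**base, "user_id": 100_000}))  # 100000000
```

Adding a `user_id` label for 100,000 users turns 1,000 potential series into 100 million. This is why unbounded values such as user IDs are generally kept out of labels.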

Final Thoughts

Prometheus has transformed the way modern systems and services are monitored. Its powerful features, extensibility, and open-source nature make it a go-to choice for organizations of all sizes. Whether you’re monitoring a single server or a multi-cloud deployment, Prometheus can scale to meet your needs.

If you’re looking to harness the power of Prometheus for your systems, explore HawkStack’s monitoring solutions. Our expert team ensures seamless integration and optimized monitoring for all your infrastructure needs.

Ready to get started? Contact us today! www.hawkstack.com

Unlocking the Power of AWS Auto Scaling: Key Benefits for Your Business

In the fast-paced world of cloud computing, businesses need solutions that can adapt to fluctuating workloads efficiently. AWS Auto Scaling is a robust feature designed to automatically adjust your cloud resources based on real-time demand. In this post, we’ll delve into the significant benefits of utilizing AWS Auto Scaling and how it can transform your cloud operations.

If you want to advance your career with the AWS Course in Pune, take a systematic approach and enroll in a course that suits your interests and will expand your learning path.

1. Cost Savings

One of the standout features of AWS Auto Scaling is its capacity to reduce costs. By automatically adjusting the number of active instances in response to current demand, businesses can avoid over-provisioning resources. This means you only pay for the resources you actually use, helping to optimize your cloud spending.

2. Optimal Application Performance

AWS Auto Scaling ensures your applications perform at their best by automatically scaling resources to match traffic patterns. By increasing or decreasing the number of instances based on metrics like CPU usage or incoming requests, your applications can handle spikes in traffic without compromising on speed or user experience.

3. Increased Availability and Reliability

With AWS Auto Scaling, you can achieve greater application availability and reliability. The service automatically detects and replaces unhealthy instances, maintaining the desired capacity of your application. This proactive approach minimizes downtime, ensuring that users have consistent access to your services.

4. Streamlined Management

Managing cloud infrastructure can be challenging, but AWS Auto Scaling simplifies this task. By allowing you to set scaling policies based on specific metrics, the service automates the scaling process, reducing the manual effort required. This allows your team to focus on higher-level tasks rather than routine scaling activities.

To master the intricacies of AWS and unlock its full potential, individuals can benefit from enrolling in the AWS Online Training.

5. Flexible Scaling Policies

AWS Auto Scaling offers customizable scaling policies tailored to your business needs. You can define scaling actions based on various metrics, such as CPU utilization, memory usage (published to CloudWatch as a custom metric, for example by the CloudWatch agent), or application-specific metrics, so that your resource allocation is as efficient as possible.
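Target tracking, one of these policy types, keeps a chosen metric near a target value. The arithmetic below is a simplified proportional model of that idea. It assumes load spreads evenly, so average utilization scales inversely with instance count. AWS's actual algorithm adds safeguards such as instance warm-up, so treat this as intuition rather than a reimplementation.

```python
import math


def desired_capacity(current: int, metric_value: float, target: float,
                     min_size: int, max_size: int) -> int:
    """Simplified proportional estimate: size the group so the average metric
    would land near `target`, then clamp to the group's min/max size."""
    if current <= 0:
        return min_size
    needed = math.ceil(current * metric_value / target)
    return max(min_size, min(max_size, needed))


print(desired_capacity(4, metric_value=90, target=60, min_size=2, max_size=10))  # 6
print(desired_capacity(4, metric_value=20, target=60, min_size=2, max_size=10))  # 2
```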

6. Seamless Integration with AWS Ecosystem

AWS Auto Scaling works seamlessly with other AWS services, including Elastic Load Balancing (ELB) and Amazon EC2. This integration ensures that as you scale your application, traffic is effectively distributed across the available instances, enhancing both performance and reliability.

7. Adaptability to Varied Workloads

Whether managing a web application, performing batch processing, or running a microservices architecture, AWS Auto Scaling is versatile enough to accommodate diverse workloads. Its adaptability ensures that your resources are always aligned with the specific needs of your business.

Conclusion

AWS Auto Scaling is a vital tool for organizations aiming to optimize their cloud infrastructure. With benefits such as cost reduction, enhanced performance, increased reliability, and simplified management, Auto Scaling helps businesses navigate the complexities of cloud operations with ease.

By leveraging this powerful feature, you can ensure your applications are always prepared to meet user demands, driving success in today’s competitive digital landscape.

POE AI: Redefining DevOps with Advanced Predictive Operations

Enter POE AI, an advanced tool designed to bring predictive operations to the forefront of DevOps. By leveraging cutting-edge artificial intelligence, it provides powerful predictive insights that help teams proactively manage their infrastructure, streamline workflows, and enhance operational stability. Predictive Maintenance and Monitoring One of the core strengths of POE AI lies in its predictive maintenance and monitoring capabilities. This is particularly valuable for DevOps teams responsible for maintaining complex IT infrastructures where unexpected failures can have significant impacts. POE AI continuously analyzes system data, identifying patterns and anomalies that may indicate potential issues. Imagine you're managing a large-scale distributed system. This tool can monitor the performance of various components in real-time, predicting potential failures before they happen. For example, it might detect that a particular server is showing early signs of hardware degradation, allowing you to take preemptive action before a critical failure occurs. This proactive approach minimizes downtime and ensures that your infrastructure remains robust and reliable. Enhancing Workflow Efficiency POE AI goes beyond predictive maintenance by also enhancing overall workflow efficiency. The tool integrates seamlessly with existing DevOps pipelines and tools, providing insights that help streamline processes and optimize resource allocation. This integration ensures that DevOps teams can operate more efficiently, focusing on strategic initiatives rather than firefighting issues. For instance, POE AI can analyze historical deployment data to identify the most efficient deployment strategies and times. By leveraging these insights, you can schedule deployments during periods of low activity, reducing the risk of disruptions and improving overall system performance. 
This optimization not only enhances workflow efficiency but also ensures that your team can deliver high-quality software more consistently. AI-Powered Root Cause Analysis When issues do arise, quickly identifying the root cause is crucial for minimizing their impact. POE AI excels in this area by offering AI-powered root cause analysis. The tool can rapidly sift through vast amounts of data, pinpointing the exact cause of an issue and providing actionable recommendations for resolution. Consider a scenario where your application experiences a sudden performance drop. Instead of manually combing through logs and metrics, you can rely on it to identify the root cause, such as a specific microservice consuming excessive resources. This rapid identification allows you to address the issue promptly, restoring optimal performance and reducing the time spent on troubleshooting. Integration with DevOps Tools POE AI's ability to integrate with a wide range of DevOps tools makes it a versatile addition to any tech stack. Whether you're using Jenkins for continuous integration, Kubernetes for container orchestration, or Splunk for log analysis, POE AI can seamlessly integrate to enhance your operational workflows. For example, integrating AI with your monitoring tools can provide real-time predictive insights directly within your dashboards. This integration enables you to visualize potential issues and take proactive measures without switching between different applications. By consolidating these insights into a single platform, POE AI enhances situational awareness and simplifies operational management. Security and Compliance In the realm of DevOps, maintaining security and compliance is paramount. POE AI understands this and incorporates robust security measures to protect sensitive data. The tool adheres to major data protection regulations, including GDPR, ensuring that user data is handled securely and responsibly. 
For organizations with stringent compliance requirements, POE AI offers on-premises deployment options, allowing them to keep full control over their data within their own secure environment. By prioritizing security, POE AI lets DevOps teams use its capabilities without compromising on data protection.

Real-World Applications and Success Stories

To understand the impact of POE AI, consider some real-world applications. Many organizations have integrated POE AI into their workflows, resulting in significant improvements in operational efficiency and stability.

One example is a global financial services company that implemented POE AI to improve its IT infrastructure management. By using predictive maintenance and root cause analysis, the company significantly reduced downtime and improved system reliability, which allowed its IT team to focus on strategic projects rather than constantly addressing issues.

Another example involves a multinational manufacturing firm that used POE AI to optimize its production workflows. By analyzing historical data and predicting potential bottlenecks, POE AI provided actionable insights that improved production efficiency and reduced operational costs, leading to higher output quality and overall productivity.

Future Prospects of AI in DevOps

As artificial intelligence continues to advance, the capabilities of tools like POE AI are expected to expand further. Advances in machine learning and natural language processing (NLP) could enable even more accurate and nuanced predictions.

One prospect is real-time adaptive learning: POE AI could continuously learn from new data, updating its predictive models in real time to reflect the latest trends and patterns. This would help DevOps teams stay ahead of emerging issues and continuously optimize their workflows.

Another potential development is advanced NLP, allowing POE AI to interpret unstructured data such as textual reports and logs. This would provide deeper insights and recommendations for managing complex DevOps environments.

Maximizing the Benefits of POE AI

To get the most out of POE AI, DevOps teams should follow a few best practices:

- Integrate with Existing Tools: Connect POE AI to your existing DevOps tools and platforms. This streamlines predictive analysis and makes insights easier to access.
- Customize Alerts and Notifications: Use POE AI's customization options to tailor alerts and notifications to your needs. Configure the tool to highlight the most critical issues and provide actionable recommendations.
- Review and Act on Insights: Regularly review the insights and recommendations POE AI provides, and use them to make data-driven decisions and optimize your workflows.
- Train Your Team: Provide training and resources so team members get the most out of POE AI. Encourage them to explore its features and incorporate it into their daily workflows.
- Monitor Security: Configure POE AI's security settings to meet your organization's requirements, and regularly review and update them to protect data and maintain compliance.

By following these best practices, DevOps teams can maximize the benefits of POE AI and create a more efficient, predictive operational environment.
Embracing the Future of Predictive Operations

Integrating POE AI into your DevOps processes isn't just about adopting new technology; it's about changing how you anticipate and address operational challenges. By leveraging predictive insights, you can move from a reactive to a proactive approach, minimizing downtime and optimizing performance. POE AI helps your team foresee potential issues, streamline workflows, and improve overall productivity, saving time and resources while supporting smarter, better-informed decisions.
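The post does not document how POE AI's predictive monitoring works internally. As a generic illustration of the underlying idea, flagging a metric that drifts away from its recent baseline, here is a minimal Python sketch using a rolling z-score. The function name, window size, and threshold are hypothetical choices for illustration, not POE AI's API or algorithm.

```python
from collections import deque
from statistics import mean, pstdev


def rolling_zscore_anomalies(samples, window=10, threshold=3.0):
    """Return indices of samples that deviate strongly from the trailing window.

    Hypothetical helper (not POE AI's API): each sample is compared with the
    mean and population standard deviation of the `window` samples before it.
    """
    history = deque(maxlen=window)
    anomalies = []
    for i, value in enumerate(samples):
        if len(history) == window:
            mu = mean(history)
            sigma = pstdev(history)
            if sigma == 0:
                # Perfectly flat baseline: any change at all is unusual.
                if value != mu:
                    anomalies.append(i)
            elif abs(value - mu) / sigma > threshold:
                anomalies.append(i)
        # Every sample, anomalous or not, becomes part of the baseline.
        history.append(value)
    return anomalies


if __name__ == "__main__":
    # Made-up disk latency samples (ms) with one sudden spike at index 10.
    latencies = [10, 11, 10, 12, 11, 10, 11, 12, 10, 11, 45, 11]
    print(rolling_zscore_anomalies(latencies))
```

A real predictive system would also model trends and seasonality; a rolling z-score only catches abrupt deviations, which is why it is a reasonable first sketch rather than a complete solution.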

API Management Boomi

API Management with Boomi: Streamline Your API Ecosystem

APIs (Application Programming Interfaces) are the backbone of modern digital enterprises. They provide the connective tissue between various applications, systems, and data sources, facilitating seamless communication. However, with a growing number of APIs, managing them effectively becomes a critical challenge. This is where Boomi’s API Management solution comes into play.

What is API Management?

API Management encompasses the entire lifecycle of APIs, including:

Design and Development: Crafting well-structured and robust APIs to ensure interoperability.

Deployment and Publishing: Making APIs accessible to internal and external consumers.

Security: Implementing robust authentication and authorization mechanisms to protect sensitive data and resources accessed through APIs.

Monitoring and Analytics: Tracking API usage patterns, performance metrics, and potential issues.

Versioning: Managing different iterations of an API to maintain backward compatibility and allow organized evolution.

Developer Portal: Providing a centralized space for developers to discover, access, and subscribe to APIs.
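To make the security and versioning stages above more concrete, here is a minimal, framework-free Python sketch of what an API gateway does on each request: authenticate an API key, then route the call to a versioned handler. It is not Boomi's implementation; the key store, header name, paths, and handler names are all hypothetical.

```python
import hmac

# Hypothetical key store: API key -> consumer name.
API_KEYS = {"k-123": "partner-portal"}


def list_orders_v1():
    return {"orders": ["A-1", "A-2"]}


def list_orders_v2():
    # v2 changes the response shape; v1 stays available for existing consumers.
    return {"orders": [{"id": "A-1"}, {"id": "A-2"}], "count": 2}


# Hypothetical routing table keyed by (HTTP method, versioned path).
ROUTES = {
    ("GET", "/v1/orders"): list_orders_v1,
    ("GET", "/v2/orders"): list_orders_v2,
}


def handle(method, path, headers):
    """Return (status_code, body) for a request, gateway-style."""
    supplied = headers.get("X-API-Key", "")
    # compare_digest avoids leaking key contents through timing differences.
    consumer = next(
        (name for key, name in API_KEYS.items()
         if hmac.compare_digest(key.encode(), supplied.encode())),
        None,
    )
    if consumer is None:
        return 401, {"error": "invalid or missing API key"}
    handler = ROUTES.get((method, path))
    if handler is None:
        return 404, {"error": "unknown endpoint or version"}
    return 200, handler()
```

Keeping the version in the path is only one convention; headers or media types can carry the version instead, but path versioning makes routing and deprecation easy to see.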

Why Boomi API Management?

Boomi’s API Management solution offers a comprehensive set of tools and features designed to simplify and streamline the management of APIs within your organization:

Intuitive Interface: Boomi shines with its user-friendly, drag-and-drop interface, which makes API creation and management accessible even to non-technical users.

Seamless Integration with the Boomi Platform: Leverage the Boomi platform’s extensive integration capabilities to connect your APIs with various internal and external systems, facilitating data flow and process orchestration.

Flexible Deployment: Boomi’s API Gateway can be deployed on-premises, in the cloud, or in hybrid environments to match your enterprise architecture.

Robust Security: To safeguard your APIs, implement fine-grained security controls, including API keys, OAuth, OpenID Connect, and SAML.

Developer Portal: Offer a self-service portal for developers to discover, explore, test, and subscribe to your APIs, fostering seamless collaboration.

Critical Use Cases for Boomi API Management

Internal Integration: Facilitate integration and communication between different internal applications and systems, streamlining business processes.

Partner Ecosystems: Enable secure and controlled data sharing with business partners, opening up new revenue generation and collaboration opportunities.

Microservices Architectures: Support the development and management of microservices, allowing for granular scalability and flexibility throughout your IT landscape.

Mobile and IoT Enablement: Expose APIs to power mobile apps and connect with IoT devices, extending your digital reach.

Getting Started with Boomi API Management

Boomi’s API Management provides a straightforward approach to get up and running quickly:

Design your API: Use Boomi’s visual tools to define your API’s endpoints, resources, and methods.

Deploy API Components: You can deploy your API as either an API Service or an API Proxy to a Boomi API Gateway.

Configure Security: Implement authentication and authorization mechanisms best suited for your API’s use case.

Publish to the Developer Portal: Showcase your API in the developer portal, providing documentation and subscription plans.

The Bottom Line

Boomi API Management helps you effectively govern and control the entire lifecycle of your APIs. This leads to improved efficiency, enhanced security, and seamless integration within your digital ecosystem. If you’re looking to streamline your API strategy, Boomi API Management is a powerful solution to explore.

You can find more information about Dell Boomi in this Dell Boomi Link

Conclusion:

Unogeeks is the No.1 IT Training Institute for Dell Boomi Training. Anyone Disagree? Please drop in a comment

You can check out our other latest blogs on Dell Boomi here – Dell Boomi Blogs

You can check out our Best In Class Dell Boomi Details here – Dell Boomi Training

Follow & Connect with us:

———————————-

For Training inquiries:

Call/Whatsapp: +91 73960 33555

Mail us at: [email protected]

Our Website ➜ https://unogeeks.com

Follow us:

Instagram: https://www.instagram.com/unogeeks

Facebook: https://www.facebook.com/UnogeeksSoftwareTrainingInstitute

Twitter: https://twitter.com/unogeek

Building Applications with Spring boot in Java

Spring Boot, a powerful extension of the Spring framework, is designed to simplify the process of developing new Spring applications. It enables rapid and accessible development by providing a convention-over-configuration approach, making it a preferred choice for many developers. This essay delves into the versatility of Spring Boot, exploring the various types of applications it is commonly used for, highlighting its features, benefits, and practical applications across industries.

Origins and Philosophy

Spring Boot was created to address the complexity often associated with Spring applications. By offering a set of auto-configuration, management, and production-ready features out of the box, it reduces the need for extensive boilerplate configuration. This framework adheres to the "opinionated defaults" principle, automatically configuring Spring applications based on the dependencies present on the classpath. This approach significantly accelerates development time and lowers the entry barrier for businesses looking to hire Java developers.
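The classpath-driven behavior described above can be illustrated outside of Spring. Below is a minimal Python sketch under a simplified model: each auto-configuration candidate applies only if its required modules are importable (loosely analogous to Spring Boot's `@ConditionalOnClass`) and only if the user has not already defined that component (loosely analogous to `@ConditionalOnMissingBean`). The candidate names and factories are hypothetical, and this is not how Spring Boot itself is implemented.

```python
import importlib.util
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class AutoConfig:
    name: str                       # component this candidate would provide
    required_modules: List[str]     # top-level modules that must be importable
    factory: Callable[[], object]   # builds the default component


def module_available(module_name: str) -> bool:
    # find_spec returns None when a top-level module cannot be found.
    return importlib.util.find_spec(module_name) is not None


def auto_configure(candidates: List[AutoConfig],
                   user_defined: Dict[str, object]) -> Dict[str, object]:
    """Apply opinionated defaults, letting explicit user components win."""
    context = dict(user_defined)
    for candidate in candidates:
        if candidate.name in context:
            continue  # user already supplied this component
        if all(module_available(m) for m in candidate.required_modules):
            context[candidate.name] = candidate.factory()
    return context


if __name__ == "__main__":
    candidates = [
        AutoConfig("jsonCodec", ["json"], lambda: "stdlib json codec"),
        AutoConfig("sqlDataSource", ["sqlite3"], lambda: "in-memory sqlite"),
        AutoConfig("messageBroker", ["surely_not_installed_broker"], lambda: "broker client"),
    ]
    print(auto_configure(candidates, {"sqlDataSource": "user-provided pool"}))
```

The design point mirrors the "opinionated defaults" idea: defaults appear automatically when their prerequisites exist, but anything the developer configures explicitly takes precedence.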

Web Applications

Spring Boot is widely recognized for its efficacy in building web applications. With embedded servers like Tomcat, Jetty, or Undertow, developers can easily create standalone, production-grade web applications that are ready to run. The framework's auto-configuration capabilities, along with Spring MVC, provide a robust foundation for building RESTful web services and dynamic websites. Spring Boot also supports template engines such as Thymeleaf, making server-rendered MVC applications more straightforward to develop.

Microservices

In microservices architectures, Spring Boot stands out for its ability to produce lightweight, independently deployable services. Its integration with Spring Cloud gives developers tools for quickly building common distributed-systems patterns (e.g., configuration management, service discovery, circuit breakers). This makes Spring Boot a strong choice for organizations moving to microservices, as it promotes scalability, resilience, and modularity. Microservices adoption is also one important reason businesses look to migrate to Java 11 and beyond.
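Circuit breakers are one of the distributed-systems patterns mentioned above. In a Spring Cloud application this is normally provided by a library (for example through Spring Cloud Circuit Breaker) rather than written by hand; the Python sketch below only illustrates the state machine behind the pattern. The thresholds are hypothetical, and the clock is injectable so the behavior can be tested deterministically.

```python
import time


class CircuitOpenError(Exception):
    """Raised when a call is rejected because the circuit is open."""


class CircuitBreaker:
    """Minimal closed -> open -> half-open circuit breaker (illustrative only)."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.state = "closed"
        self.failures = 0
        self.opened_at = 0.0

    def call(self, func, *args, **kwargs):
        if self.state == "open":
            if self.clock() - self.opened_at >= self.reset_timeout:
                self.state = "half_open"  # let one trial request through
            else:
                raise CircuitOpenError("circuit open; failing fast")
        try:
            result = func(*args, **kwargs)
        except Exception:
            self._record_failure()
            raise
        # A successful call closes the circuit and resets the failure count.
        self.state = "closed"
        self.failures = 0
        return result

    def _record_failure(self):
        self.failures += 1
        # A failed trial call, or too many failures, opens the circuit.
        if self.state == "half_open" or self.failures >= self.failure_threshold:
            self.state = "open"
            self.opened_at = self.clock()
```

Failing fast while the circuit is open protects both the caller (no threads stuck waiting on a dead dependency) and the struggling downstream service (no retry storm while it recovers).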

Cloud-Native Applications

Spring Boot's design aligns well with cloud-native development principles, facilitating the creation of applications that are resilient, manageable, and observable. Through Spring Boot's Actuator module, developers gain insight into application health, metrics, and audit events, which is crucial for teams maintaining and monitoring applications deployed in cloud environments. Furthermore, Spring Boot applications work well with Docker for containerization and Kubernetes for orchestration, streamlining deployment in cloud environments.
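Actuator's health endpoint combines the status of individual components (database, disk space, message broker, and so on) into one overall status. The Python sketch below mimics that aggregation under an assumption: the overall status is the most severe known component status, using the order DOWN, OUT_OF_SERVICE, UP, UNKNOWN, which to our understanding matches Spring Boot's default status ordering (check the Actuator documentation for your version). The component names are hypothetical.

```python
# Severity order, most severe first (assumed to mirror Actuator's default ordering).
STATUS_ORDER = ["DOWN", "OUT_OF_SERVICE", "UP", "UNKNOWN"]


def aggregate_health(components):
    """Build an Actuator-style health document from {component: status}."""
    rank = {status: i for i, status in enumerate(STATUS_ORDER)}
    # Statuses outside the known order are ignored when aggregating.
    known = [status for status in components.values() if status in rank]
    overall = min(known, key=rank.__getitem__) if known else "UNKNOWN"
    return {
        "status": overall,
        "components": {name: {"status": s} for name, s in components.items()},
    }


if __name__ == "__main__":
    print(aggregate_health({"db": "UP", "diskSpace": "UP", "broker": "DOWN"}))
```

Orchestrators such as Kubernetes typically act on this single overall status (for example through liveness or readiness probes), which is why a clear aggregation rule matters.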

Enterprise Applications

Spring Boot is adept at catering to the complex requirements of enterprise applications. Its seamless integration with Spring Security, Spring Data, and Spring Batch, among others, allows for the development of secure, transactional, and data-intensive applications. Whether it's managing security protocols, handling transactions across multiple databases, or processing large batches of data, Spring Boot provides the necessary infrastructure to develop and maintain robust enterprise applications.
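Spring Batch commonly processes large data sets in chunks: items are read and processed one at a time, then written (and committed) in groups, so a failure only affects the current chunk. The following Python sketch illustrates that read-process-write loop as a simplified model; the reader, processor, and writer are hypothetical stand-ins, not Spring Batch's API.

```python
from typing import Callable, Iterable, List, Optional, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def run_chunked_step(
    reader: Iterable[T],
    processor: Callable[[T], Optional[R]],
    writer: Callable[[List[R]], None],
    chunk_size: int = 100,
) -> int:
    """Process items in chunks of `chunk_size` reads; return items written.

    A processor returning None filters the item out. Each writer call stands
    in for one committed transaction.
    """
    written = 0
    outputs: List[R] = []
    read_in_chunk = 0
    for item in reader:
        read_in_chunk += 1
        result = processor(item)
        if result is not None:
            outputs.append(result)
        if read_in_chunk == chunk_size:
            if outputs:
                writer(outputs)
                written += len(outputs)
            outputs, read_in_chunk = [], 0
    if outputs:  # flush the final, partial chunk
        writer(outputs)
        written += len(outputs)
    return written


if __name__ == "__main__":
    batches = []
    count = run_chunked_step(range(1, 8), lambda x: x * 10 if x % 3 else None,
                             batches.append, chunk_size=3)
    print(count, batches)
```

Choosing the chunk size is a trade-off: larger chunks mean fewer transactions and better throughput, while smaller chunks mean less work to redo after a failure.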

IoT and Big Data Applications

The Internet of Things (IoT) and big data are rapidly growing fields where Spring Boot is finding its footing. Because it facilitates lightweight, high-performance applications, Spring Boot can serve as the backbone for the data collection and processing layers behind IoT devices. Additionally, its support for event-streaming platforms such as Apache Kafka, together with data access through Spring Data, makes it suitable for applications that require real-time data processing and analytics.
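A typical real-time task in the IoT scenario above is aggregating sensor readings over fixed time windows as events arrive, for example from a Kafka topic. The Python sketch below shows tumbling-window averaging over an in-memory list of `(timestamp, sensor, value)` tuples; it does not use Kafka or Spring APIs, and the event format is hypothetical.

```python
from collections import defaultdict


def tumbling_window_averages(events, window_seconds=60):
    """Average readings per sensor per fixed, non-overlapping time window.

    `events` is an iterable of (epoch_seconds, sensor_id, value) tuples
    (hypothetical format). Returns {(window_start, sensor_id): average}.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for ts, sensor, value in events:
        # Align each event to the start of the window it falls into.
        window_start = int(ts // window_seconds) * window_seconds
        key = (window_start, sensor)
        sums[key] += value
        counts[key] += 1
    return {key: sums[key] / counts[key] for key in sums}


if __name__ == "__main__":
    readings = [(0, "t1", 20.0), (30, "t1", 22.0), (61, "t1", 30.0), (10, "t2", 5.0)]
    print(tumbling_window_averages(readings))
```

A streaming implementation would emit each window once it closes and handle late-arriving events; this batch version only shows how events are bucketed.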

Summary

Spring Boot's versatility extends across various domains, making it a valuable tool for developing a wide range of applications—from simple CRUD applications to complex, distributed systems. Its convention-over-configuration philosophy, combined with the Spring ecosystem's power, enables developers to build resilient, scalable, and maintainable applications efficiently.

In essence, Spring Boot is not just a tool for one specific type of application; it is a comprehensive framework designed to meet the modern developer's needs. Its ability to adapt to varied application requirements, coupled with continuous support and advancements from the community, suggests that Spring Boot will remain a crucial player in software development for years to come. Whether for web applications, microservices, cloud-native applications, enterprise systems, or fields like IoT and big data, Spring Boot offers the flexibility, efficiency, and reliability that modern projects demand. Spring Cloud, which builds on Spring Boot, offers further advantages for developers building microservices in Java.

Instana for Observability in IBM

What is Instana?

IBM Instana is an automated observability and application performance monitoring (APM) platform. Modern application environments require real-time, automated observability to provide visibility into, and understanding of, what is happening. An automated approach is crucial because of the highly dynamic nature of microservices and the many interdependencies across application components. As a result, established monitoring tools like New Relic can struggle to keep up with cloud-native setups.

The need for automation in observability

Customers are upset when an application isn’t functioning properly, and your business may suffer as a result. You will surely experience slower issue resolution, visibility gaps, and a lack of awareness of your application environments if your observability platform depends on manual instrumentation or configuration. Automation is therefore a crucial part of any observability solution.

Automated installation and setup are a must for any contemporary observability platform. If you use New Relic, you know that manual instrumentation and configuration are necessary: to fully use New Relic's analytics capabilities, you must create a bespoke "application," and depending on the technology being monitored, New Relic users must modify code and adjust configuration files. New Relic also uses different agents for different technologies and can require several agents per host. Installing, configuring, and maintaining New Relic monitoring therefore takes manual work and considerable time and resources.

Because you can't possibly know every interaction between application services and infrastructure components, manually configuring instrumentation risks leaving visibility gaps. Manually configuring each of those connections and interactions also costs time and money. Managing modern applications and faster development cycles requires a system that maximizes visibility with the least amount of effort.

Automate setup and installation

Compare that to IBM Instana, whose automated, single-agent design makes setup and installation simple. There is no need to guess which agent(s) to install on which hosts, and no need to define interdependencies manually: Instana handles all of that automatically. You spend less time and effort, and the CI/CD process speeds up.

Instana takes automation even further by automating every step of the performance monitoring lifecycle: installation and configuration, application discovery, dashboards, profiling, change detection, troubleshooting, and notifications.

Does your observability system currently automate each of those processes? If not, you should take IBM Instana into account.

IBM Instana not only captures every performance metric in real time but also automatically traces every user request and profiles every process. It correlates metrics, traces, events, and profiles and makes them available, in context, to those who need them. As a result, when a problem arises, Instana can automatically identify the slowest service or component and the causative event. Its one-second metric granularity also lets Instana surface problems that other tools, such as New Relic, could overlook.
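Why does metric granularity matter? A short spike can vanish when samples are averaged over a longer interval. The Python sketch below uses made-up numbers: one minute of per-second CPU samples containing a five-second spike to 100%, compared with a single 60-second average. It illustrates the general effect only and does not use any Instana API.

```python
# Hypothetical per-second CPU utilization (%) for one minute:
# a steady 20% baseline with a 5-second spike to 100%.
per_second = [20.0] * 60
per_second[30:35] = [100.0] * 5

# What a 60-second sampling interval would report: one averaged point.
per_minute_average = sum(per_second) / len(per_second)

# What 1-second samples reveal.
peak = max(per_second)
seconds_over_90 = sum(1 for v in per_second if v > 90.0)

print(f"60 s average: {per_minute_average:.1f}%")
print(f"1 s peak: {peak:.1f}%, seconds above 90%: {seconds_over_90}")
```

The averaged view reports about 26.7%, so an alert threshold of 90% on the one-minute metric would never fire, while the per-second data shows five seconds of saturation.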

Automated root-cause analysis is a prerequisite for troubleshooting complex environments. Instana speeds up troubleshooting by using machine learning algorithms, anomaly detection methods, and predictive analytics to automatically identify likely problem patterns that human operators might overlook. By automating the analysis, Instana can reduce the time needed to determine an incident's underlying cause and increase detection precision, leading to faster resolution.
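One building block of automated root-cause analysis is working out which service in a distributed trace actually consumed the time. The Python sketch below computes each span's self time (its duration minus the time spent in its direct children) and returns the service with the largest total. The span format and service names are hypothetical, this is a simplification rather than Instana's algorithm, and it assumes child spans run sequentially rather than concurrently.

```python
from collections import defaultdict


def slowest_service_by_self_time(spans):
    """Return (service, self_time_ms) with the largest total self time.

    `spans` is a list of dicts with keys: id, parent (None for the root),
    service, and duration_ms. Assumes a span's children do not overlap.
    """
    # Total time each span spent waiting on its direct children.
    child_time = defaultdict(float)
    for span in spans:
        if span["parent"] is not None:
            child_time[span["parent"]] += span["duration_ms"]

    # Self time = own duration minus children, summed per service.
    self_time = defaultdict(float)
    for span in spans:
        own = span["duration_ms"] - child_time[span["id"]]
        self_time[span["service"]] += max(own, 0.0)

    return max(self_time.items(), key=lambda item: item[1])


if __name__ == "__main__":
    trace = [
        {"id": "a", "parent": None, "service": "gateway", "duration_ms": 500},
        {"id": "b", "parent": "a", "service": "orders", "duration_ms": 450},
        {"id": "c", "parent": "b", "service": "inventory", "duration_ms": 60},
        {"id": "d", "parent": "b", "service": "payments", "duration_ms": 40},
    ]
    print(slowest_service_by_self_time(trace))
```

Using self time instead of total duration avoids blaming the gateway, whose 500 ms mostly consists of waiting on downstream calls.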

With the Instana Action Catalog™, IBM Instana also automates remediation. You can create custom actions or use existing automation inventories such as Ansible or PagerDuty. Actions can be linked to Instana events and are then shown as potential actions for each event instance. You can run actions manually or automatically through the Action Catalog, and it can use artificial intelligence (AI) to suggest actions based on the context of an event.

Instana's sensors automatically record changes, metrics, and events. With one-second granularity and high cardinality, Instana captures high-fidelity monitoring data, including an end-to-end trace of every request. For proactive, automated health monitoring, each sensor includes a curated, out-of-the-box knowledge base of health signatures, which are continuously compared against incoming data to raise issues or incidents based on user impact. A component's health is determined by combining machine learning with these predefined health rules.
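The health-signature idea above, continuously comparing incoming data against predefined rules, can be illustrated with a simple rule that raises an issue only when a metric stays above a threshold for several consecutive samples. The Python sketch below is a generic example with hypothetical rule fields; it is not Instana's built-in knowledge base or rule format, and it leaves out the machine-learning part.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class HealthRule:
    metric: str             # hypothetical metric name, e.g. "latency_ms"
    threshold: float        # value that counts as a breach when exceeded
    sustained_samples: int  # consecutive breaching samples needed to raise an issue


def first_breach(rule: HealthRule, samples: List[float]) -> Optional[int]:
    """Return the sample index at which the rule first fires, or None."""
    streak = 0
    for i, value in enumerate(samples):
        # Count consecutive breaches; any healthy sample resets the streak.
        streak = streak + 1 if value > rule.threshold else 0
        if streak >= rule.sustained_samples:
            return i
    return None


if __name__ == "__main__":
    rule = HealthRule("latency_ms", 300.0, 3)
    print(first_breach(rule, [120, 350, 130, 320, 340, 360, 200]))
```

Requiring a sustained breach filters out one-off blips, which keeps alerts focused on conditions that are likely to affect users.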

The entire monitoring lifecycle is covered by Instana’s automatic, full-stack visibility. Instana addresses the gaps left by other APM solutions like New Relic, from the single, self-monitoring, and auto-updating agent to the automatic and continuous discovery, deployment, configuration, and dependency mapping. Instana frees teams from manual and time-consuming processes with zero-configuration dashboards, alerts, debugging, and remediation.

Delve into the second edition to master serverless architecture and explore new chapters on security techniques, multi-regional deployment, and optimizing observability.

Key Features
- Gain insights from a seasoned CTO on best practices for designing enterprise-grade software systems
- Deepen your understanding of system reliability, maintainability, observability, and scalability with real-world examples
- Elevate your skills with software design patterns and architectural concepts, including security in depth and running in multiple regions

Book Description
Organizations undergoing digital transformation rely on IT professionals to design systems that keep up with the rate of change while maintaining stability. With this edition, enriched with more real-world examples, you'll be well equipped to architect systems ready for future innovation.

This book guides you through the architectural patterns that power enterprise-grade software systems, exploring key architectural elements (such as event-driven microservices and micro frontends) and showing how to implement anti-fragile systems.

First, you'll divide up a system and define boundaries so that your teams can work autonomously and accelerate innovation. You'll cover the low-level event and data patterns that support the entire architecture while getting up and running with the different autonomous service design patterns.

This edition adds several new topics on security, observability, and multi-regional deployment. It focuses on best practices for security, reliability, testability, observability, and performance. You'll explore the methodologies of continuous experimentation, deployment, and delivery before turning to some final thoughts on how to start making progress.

By the end of this book, you'll be able to architect your own event-driven, serverless systems that are ready to adapt and change.

What you will learn
- Explore architectural patterns to create anti-fragile systems
- Focus on DevSecOps practices that empower self-sufficient, full-stack teams
- Apply microservices principles to the frontend
- Discover how SOLID principles apply to software and database architecture
- Gain practical skills in deploying, securing, and optimizing serverless architectures
- Deploy a multi-regional system and explore the strangler pattern for migrating legacy systems
- Master techniques for collecting and using metrics, including RUM, Synthetics, and anomaly detection

Who this book is for
This book is for software architects who want to learn more about different software design patterns and best practices. This isn't a beginner's manual: you'll need an intermediate level of programming proficiency and software design experience to get started. You'll get the most out of this software design book if you already know the basics of the cloud, but it isn't a prerequisite.

Table of Contents
- Architecting for Innovations
- Defining Boundaries and Letting Go
- Taming the Presentation Tier
- Trusting Facts and Eventual Consistency
- Turning the Cloud into the Database
- A Best Friend for the Frontend
- Bridging Intersystem Gaps
- Reacting to Events with More Events
- Running in Multiple Regions
- Securing Autonomous Subsystems in Depth
- Choreographing Deployment and Delivery
- Optimizing Observability
- Don't Delay, Start Experimenting

Product Details
- Publisher: Packt Publishing; 2nd ed. edition (27 February 2024)
- Language: English
- Paperback: 488 pages
- ISBN-10: 1803235446
- ISBN-13: 978-1803235448
- Item Weight: 840 g
- Dimensions: 2.79 x 19.05 x 23.5 cm
- Country of Origin: India

Fundamentals of Ecommerce Application Architecture

In the modern digital age, the world of commerce has witnessed a significant shift towards online platforms. Ecommerce, short for electronic commerce, has transformed the way businesses operate, interact with customers, and manage transactions. Behind the scenes of every successful ecommerce platform lies a robust application architecture that ensures seamless functionality, scalability, and security. In this blog, we'll delve into the fundamentals of ecommerce application architecture and explore the key components that contribute to its success.

1. Scalability and High Availability

Ecommerce platforms experience varying levels of traffic, with peaks during sales events, holidays, and promotional periods. A solid application architecture must be designed to handle these fluctuations without compromising performance. Scalability involves the ability to expand resources and infrastructure to accommodate increased user loads. This can be achieved through techniques like load balancing, horizontal scaling, and utilizing cloud-based services.

High availability is another critical aspect, ensuring that the platform remains accessible and operational even in the face of hardware failures or unexpected traffic spikes. Employing redundant servers, failover mechanisms, and disaster recovery strategies helps maintain uninterrupted service.
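The following is a minimal Python sketch of round-robin load balancing with basic failover, to make the two ideas above concrete. The class and server names are hypothetical, and it assumes some external health check calls `mark_down`/`mark_up`; in production this job is usually handled by a dedicated load balancer rather than application code.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Spread requests across replicas in turn, skipping unhealthy ones (hypothetical)."""

    def __init__(self, servers):
        self.servers = list(servers)
        self.healthy = set(self.servers)
        self._ring = cycle(self.servers)

    def mark_down(self, server):
        # Called by an assumed external health check when a replica fails.
        self.healthy.discard(server)

    def mark_up(self, server):
        if server in self.servers:
            self.healthy.add(server)

    def next_server(self):
        # Visit each replica at most once per call so we never loop forever.
        for _ in range(len(self.servers)):
            server = next(self._ring)
            if server in self.healthy:
                return server
        raise RuntimeError("no healthy servers available")
```

Skipping unhealthy replicas is what turns plain load distribution into failover: traffic keeps flowing as long as at least one replica is up.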

2. Front-End Development

The front-end of an ecommerce application is the user interface that customers interact with. It includes web pages, mobile apps, and other user-facing components. The front-end architecture should prioritize user experience, responsiveness, and aesthetics. It's common to use frameworks like React, Angular, or Vue.js to create dynamic and interactive interfaces that enhance customer engagement.

3. Back-End Development

The back-end of an ecommerce application handles data processing, business logic, and server-side operations. It's responsible for managing user accounts, processing orders, managing inventory, and more. Microservices architecture has gained popularity in recent years, allowing different components of the application to be developed and deployed independently. This promotes modularity, flexibility, and easier maintenance.

4. Database Management

The choice of database architecture is crucial for storing and retrieving product information, customer data, transaction records, and more. Relational databases like MySQL and PostgreSQL are commonly used for structured data, while NoSQL databases like MongoDB and Cassandra are preferred for handling large volumes of unstructured or semi-structured data. Caching mechanisms, such as Redis or Memcached, can improve application performance by storing frequently accessed data in memory.
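To illustrate how a cache such as Redis or Memcached is typically placed in front of the database, here is a hedged cache-aside sketch. A plain in-memory dictionary with a time-to-live stands in for the real cache, and `get_product` and `load_from_db` are hypothetical names; a real deployment would call the Redis or Memcached client instead.

```python
import time


class TTLCache:
    """In-memory stand-in for a cache such as Redis or Memcached."""

    def __init__(self, ttl_seconds, clock=time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store = {}  # key -> (value, expiry timestamp)

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self.clock() >= expires_at:
            del self._store[key]  # expired: evict lazily on read
            return None
        return value

    def set(self, key, value):
        self._store[key] = (value, self.clock() + self.ttl)


def get_product(product_id, cache, load_from_db):
    """Cache-aside read: try the cache first, fall back to the database.

    Note: a None result is treated as a cache miss, so None is never cached.
    """
    cached = cache.get(product_id)
    if cached is not None:
        return cached
    product = load_from_db(product_id)
    cache.set(product_id, product)
    return product
```

The TTL bounds how stale a cached product can get; shorter values trade more database reads for fresher data.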

5. Security Measures

Security is paramount in ecommerce applications due to the sensitive customer information and financial transactions involved. Implementing robust security measures, such as encryption, secure authentication, and authorization protocols, helps safeguard user data. Regular security audits and vulnerability assessments are essential to identify and mitigate potential threats.
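Secure authentication starts with never storing passwords in plain text. As one hedged example using only Python's standard library, the sketch below salts and stretches passwords with PBKDF2-HMAC-SHA256 and compares hashes in constant time. The iteration count is an assumption for illustration; check current guidance (for example, OWASP's password storage recommendations) before choosing one.

```python
import hashlib
import hmac
import os

ITERATIONS = 600_000  # illustrative work factor; tune for your hardware and guidance


def hash_password(password: str) -> tuple[bytes, bytes]:
    """Return (salt, derived key) for storage; never store the password itself."""
    salt = os.urandom(16)  # a fresh random salt per user
    digest = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return salt, digest


def verify_password(password: str, salt: bytes, expected: bytes) -> bool:
    """Re-derive the key with the stored salt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, expected)
```

Each user gets a random salt, so identical passwords produce different hashes; `hmac.compare_digest` avoids leaking information through comparison timing.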

6. Payment Gateway Integration

Ecommerce platforms rely on payment gateways to facilitate secure and seamless online transactions. The architecture should accommodate integration with various payment methods, ensuring compatibility with credit cards, digital wallets, and other payment options. Compliance with Payment Card Industry Data Security Standard (PCI DSS) requirements is vital to protect cardholder data.
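One way to let the architecture accommodate several payment methods is a common interface with one adapter per method. The sketch below is hypothetical: the class names are invented, and the adapters are stubs that approve every charge where a real adapter would call the payment provider's SDK. Amounts are kept as integer cents to avoid floating-point rounding.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass


@dataclass
class PaymentResult:
    method: str
    amount_cents: int
    approved: bool


class PaymentMethod(ABC):
    """Common interface every payment adapter implements (hypothetical)."""

    name: str

    @abstractmethod
    def charge(self, amount_cents: int) -> PaymentResult: ...


class CardPayment(PaymentMethod):
    name = "card"

    def charge(self, amount_cents: int) -> PaymentResult:
        # Stub: a real adapter would call the card gateway's SDK here.
        return PaymentResult(self.name, amount_cents, approved=True)


class WalletPayment(PaymentMethod):
    name = "wallet"

    def charge(self, amount_cents: int) -> PaymentResult:
        # Stub: a real adapter would call the digital wallet provider here.
        return PaymentResult(self.name, amount_cents, approved=True)


class Checkout:
    """Routes a payment to whichever adapter matches the customer's choice."""

    def __init__(self, methods):
        self.methods = {m.name: m for m in methods}

    def pay(self, method_name: str, amount_cents: int) -> PaymentResult:
        if amount_cents <= 0:
            raise ValueError("amount must be positive")
        try:
            method = self.methods[method_name]
        except KeyError:
            raise ValueError(f"unsupported payment method: {method_name}") from None
        return method.charge(amount_cents)
```

Adding a new payment option then means writing one more adapter without touching the checkout flow.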

7. Analytics and Monitoring

To optimize the performance and user experience of an ecommerce platform, continuous monitoring and data analysis are essential. Implementing tools for tracking user behavior, traffic patterns, conversion rates, and other key metrics provides insights for making informed business decisions and improving the platform's overall performance.
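As a small example of turning tracked behavior into a key metric, the sketch below computes a session-level conversion rate. It assumes events arrive as `(session_id, event_name)` pairs and that a `"purchase"` event marks a conversion; both are illustrative assumptions about how tracking data is shaped.

```python
def conversion_rate(events):
    """Share of sessions that include a purchase, as a fraction in [0, 1].

    `events` is an iterable of (session_id, event_name) pairs (assumed format).
    """
    sessions = set()
    converted = set()
    for session_id, name in events:
        sessions.add(session_id)
        if name == "purchase":
            converted.add(session_id)
    if not sessions:
        return 0.0  # no traffic yet: report zero rather than divide by zero
    return len(converted) / len(sessions)
```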

In conclusion, a well-designed ecommerce application architecture forms the foundation of a successful online business. By focusing on scalability, high availability, front-end and back-end development, database management, security, payment gateway integration, and analytics, businesses can create a robust and efficient ecommerce platform that caters to customer needs and fosters growth in the dynamic world of online commerce.

Performance Metrics Design Pattern Tutorial with Examples for Software Programmers

Full video link: https://youtu.be/ciERWgfx7Tk. Hello friends, a new video on the performance metrics design pattern for microservices, a tutorial for programmers with examples, has been published on the codeonedigest YouTube channel.

In this video we will learn about the Performance Metrics design pattern for microservices. This is the 2nd design principle in the Observability design patterns category for microservices. Microservice architecture structures an application as a set of loosely coupled microservices, and each service can be developed independently in an agile manner to enable continuous delivery. But how to analyse and…
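The post itself is truncated, so the following is only a hedged sketch of the general idea behind a performance metrics pattern: each service records request counts, error counts, and latency per endpoint so they can be analyzed later. The `MetricsRegistry` class is hypothetical and keeps everything in memory; a real service would typically export these values to a monitoring system such as Prometheus.

```python
import math
import time
from collections import defaultdict
from contextlib import contextmanager


class MetricsRegistry:
    """Per-endpoint request counts, error counts, and latency samples (in ms)."""

    def __init__(self):
        self.request_count = defaultdict(int)
        self.error_count = defaultdict(int)
        self.latencies_ms = defaultdict(list)

    @contextmanager
    def track(self, endpoint):
        """Time the wrapped block and record it, counting raised exceptions as errors."""
        start = time.perf_counter()
        try:
            yield
        except Exception:
            self.error_count[endpoint] += 1
            raise
        finally:
            self.request_count[endpoint] += 1
            elapsed_ms = (time.perf_counter() - start) * 1000
            self.latencies_ms[endpoint].append(elapsed_ms)

    def percentile(self, endpoint, pct):
        """Nearest-rank percentile of recorded latencies; pct is in (0, 100]."""
        samples = sorted(self.latencies_ms[endpoint])
        if not samples:
            return None
        rank = max(1, math.ceil(pct * len(samples) / 100))
        return samples[rank - 1]
```

A request handler would wrap its work in `with metrics.track("GET /orders"):`, and a dashboard could then read p95 latency with `metrics.percentile("GET /orders", 95)`.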

Microservices Roadmap

Creating a roadmap for implementing microservices involves several key steps to ensure a successful transition. Here's a high-level outline of a typical microservices roadmap:

Evaluate Current Architecture: Begin by assessing your existing monolithic application or service-oriented architecture (SOA). Understand its strengths, weaknesses, and pain points. Identify the components that can benefit from a microservices approach.

Define Business Objectives: Clearly define the business objectives you aim to achieve through microservices adoption. This could include improved scalability, agility, faster time-to-market, better fault isolation, or enhanced developer productivity.

Identify Candidate Services: Identify the components or services that can be extracted from the existing monolith to form individual microservices. Consider factors like domain boundaries, independent functionality, and potential for reuse across multiple applications.

Design Service Boundaries: Analyze and define the boundaries and interactions between microservices. Determine how the responsibilities and data flow will be divided, ensuring loose coupling between services while maintaining a cohesive overall system.

Establish Communication and Integration Patterns: Choose appropriate communication mechanisms for inter-service communication, such as REST APIs, message queues, or event-driven architectures. Define integration patterns to ensure seamless data exchange and consistency across services.
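To show what event-driven communication buys you, here is a minimal in-process publish/subscribe sketch. `EventBus` is a hypothetical stand-in for a real message broker or queue, and the topic name `order.placed` is invented for the example; unlike a broker, this version delivers synchronously and does not persist events.

```python
from collections import defaultdict
from typing import Callable


class EventBus:
    """In-process stand-in for a message broker (illustrative only)."""

    def __init__(self):
        self._subscribers: dict[str, list[Callable[[dict], None]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Callable[[dict], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, event: dict) -> int:
        """Deliver `event` to every handler on `topic`; return how many ran."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(event)
        return len(handlers)
```

The publisher does not know which services consume `order.placed`, which is the loose coupling the step above describes.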

Implement and Deploy Microservices: Start implementing the identified microservices using suitable technologies and frameworks. Consider using containerization tools like Docker and orchestration platforms like Kubernetes to manage and deploy microservices effectively.

Ensure Scalability and Resilience: Architect microservices to be horizontally scalable and resilient. Implement strategies like load balancing, auto-scaling, circuit breakers, and fault tolerance to handle varying workloads and ensure high availability.
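Circuit breakers are easiest to understand in code. The sketch below is a minimal, single-threaded version of the pattern: after `failure_threshold` consecutive failures it fails fast for `reset_timeout` seconds, then lets a trial call through and closes again if that call succeeds. The class names and default values are assumptions; libraries such as Resilience4j (Java) provide hardened implementations.

```python
import time


class CircuitOpenError(RuntimeError):
    """Raised when the breaker is open and calls are rejected without trying."""


class CircuitBreaker:
    """Minimal closed -> open -> half-open circuit breaker (single-threaded sketch)."""

    def __init__(self, failure_threshold=3, reset_timeout=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self.clock = clock
        self.failures = 0
        self.opened_at = None  # None means the circuit is closed

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_timeout:
                raise CircuitOpenError("circuit open; failing fast")
            # Timeout elapsed: half-open, so this call goes through as a trial.
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip, or re-trip after a failed trial
            raise
        # Success closes the circuit and clears the failure count.
        self.failures = 0
        self.opened_at = None
        return result
```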

Implement Continuous Integration and Delivery (CI/CD): Establish automated CI/CD pipelines to streamline the development, testing, and deployment of microservices. Automate the build, testing, and deployment processes to ensure faster, more reliable releases.

Monitor and Manage Microservices: Implement monitoring and logging solutions to gain visibility into the performance, health, and behavior of microservices. Use tools like Prometheus, Grafana, or the ELK stack to track metrics and logs and to troubleshoot issues effectively.

Evolve and Iterate: Microservices architecture requires continuous refinement and evolution. Regularly assess the effectiveness of your microservices implementation, gather feedback, and iterate to improve performance, scalability, and maintainability.

Foster DevOps Culture: Cultivate a culture of collaboration, continuous learning, and shared ownership between development and operations teams. Encourage cross-functional teams and embrace DevOps practices to ensure smooth operations and faster feedback loops.

Monitor Business Impact: Continuously measure the impact of microservices on your business objectives. Assess key performance indicators (KPIs) such as deployment frequency, lead time, error rates, and customer satisfaction to gauge the success of your microservices implementation.
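Some of these KPIs can be computed directly from deployment records. The sketch below assumes a hypothetical `Deployment` record with commit time, deploy time, and a failure flag, and uses the share of deployments that caused a failure as a stand-in for the error-rate KPI; request-level error rates and customer satisfaction would come from monitoring and surveys instead.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median


@dataclass
class Deployment:
    committed_at: datetime  # when the change was committed
    deployed_at: datetime   # when it reached production
    failed: bool            # whether it caused a production failure


def delivery_kpis(deployments, period_days):
    """Deployment frequency, median lead time, and failed-deployment rate."""
    if not deployments:
        return {"deploys_per_day": 0.0, "median_lead_time_hours": None,
                "failed_deployment_rate": 0.0}
    # Dividing two timedeltas yields a float number of hours.
    lead_times = [(d.deployed_at - d.committed_at) / timedelta(hours=1) for d in deployments]
    failures = sum(d.failed for d in deployments)
    return {
        "deploys_per_day": len(deployments) / period_days,
        "median_lead_time_hours": median(lead_times),
        "failed_deployment_rate": failures / len(deployments),
    }
```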

Remember that the specific roadmap may vary based on your organization's unique requirements and existing infrastructure. It's important to adapt and refine the roadmap as you progress, leveraging feedback and lessons learned along the way.

Visit our website for more: https://www.doremonlabs.com/

14 Open-Source API Management Platforms to Rely On This Year

API is one of the most common terms in the software and mobile app development world. APIs are everywhere: in microservices architectures, SaaS platform offerings, public product initiatives, and IoT and partner-to-partner integrations. Given how quickly organizations and tech leaders are adopting API management platforms to stay ahead, the API market is expected to expand by 35% by 2025.

To leverage new opportunities and cater to a diverse set of customer needs, companies are embracing APIs. Developers use APIs to build applications, and APIs have reshaped architectural patterns by encouraging a more strategic approach to mobile application development. Because of these capabilities and their ubiquity, companies are looking for the best API development guides to provide a seamless experience to their target audience.

Does this sound simple? If developing an API is difficult, managing it can be even harder. Fortunately, a number of open-source API management platforms can help. Let's take a tour of the top API management tools and platforms.

14 API Management Platforms to Consider in 2022

1. API Umbrella

The first on our list is API Umbrella. It's a leading open-source platform for managing APIs and microservices. It lets multiple organizations operate under the same umbrella by granting different admin permissions for different domains. An admin web interface, API keys, caching, real-time analytics, and rate limiting are among the features that set it apart from others.
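Rate limiting, listed among API Umbrella's features, is commonly implemented with a token bucket. How API Umbrella does it internally isn't covered here, so treat this as a generic, hedged sketch: each client gets a bucket that refills at `rate` tokens per second up to `capacity`, and a request is allowed only if a token is available.

```python
import time


class TokenBucket:
    """Allow `rate` requests per second on average, with bursts up to `capacity`."""

    def __init__(self, rate, capacity, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self.tokens = float(capacity)  # start full so an initial burst is allowed
        self.updated = clock()

    def allow(self):
        now = self.clock()
        # Refill in proportion to elapsed time, never beyond capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

An API gateway would typically keep one bucket per API key, for example in a dictionary keyed by the key.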

2. WSO2 API Manager

Built to run anywhere and anytime, WSO2 API Manager is a full-lifecycle API management platform that lets developers distribute and deploy APIs both on-premises and in private clouds. It also offers easy policy governance, extensive customization, better access control, monetization facilities, and design and prototyping support for SOAP and RESTful APIs.

3. IBM Bluemix API

If you want to build portable, compatible apps for the hybrid cloud, consider IBM Bluemix API, which provides more than 200 software and middleware patterns for building such apps. It offers several prebuilt services and a robust mechanism for regular API access, enforcing rate limits, tracking performance metrics, governing API versions, and more, all with a single tool.

4. Kong Enterprise

Kong is one of the most popular open-source API tools for microservices, helping developers manage their APIs securely and easily. Its Enterprise edition is worth considering: it is loaded with features such as one-click operations, common language infrastructure capability, strong visualization for monitoring, regular software health checks, wide community support, open-source plugins, and more.