#quantum artificial intelligence lab

Explore tagged Tumblr posts

Text

How to Create Stunning Graphics with Adobe Photoshop

Introduction

Adobe Photoshop is the preferred software for graphic designers, photographers, and digital artists worldwide. Its powerful tools and versatile features make it an essential application for creating high-quality graphics. Mastering Photoshop can improve your creative projects, whether you are a beginner or an experienced user. In this tutorial, we will walk you through the basics and advanced techniques so you can create stunning graphics with Adobe Photoshop.

#Technology#Science#business tech#Adobe cloud#Trends#Nvidia Drive#Analysis#Tech news#Science updates#Digital advancements#Tech trends#Science breakthroughs#Data analysis#Artificial intelligence#Machine learning#Ms office 365#Quantum computing#virtual lab#fashion institute of technology#solid state battery#elon musk internet#Cybersecurity#Internet of Things (IoT)#Big data#technology applications

0 notes

Text

Sweeping layoffs architected by the Trump administration and the so-called Department of Government Efficiency may be coming as soon as this week at the National Institute of Standards and Technology (NIST), a nonregulatory agency responsible for establishing benchmarks that ensure everything from beauty products to quantum computers are safe and reliable.

According to several current and former employees at NIST, the agency has been bracing for cuts since President Donald Trump took office last month and ordered billionaire Elon Musk and DOGE to slash spending across the federal government. The fears were heightened last week when some NIST workers witnessed a handful of people they believed to be associated with DOGE inside Building 225, which houses the NIST Information Technology Laboratory at the agency’s Gaithersburg, Maryland, campus, according to multiple people briefed on the sightings. The DOGE staff were seeking access to NIST’s IT systems, one of the people said.

Soon after the purported visit, NIST leadership told employees that DOGE staffers were not currently on campus, but that office space and technology were being provisioned for them, according to the same people.

On Wednesday, Axios and Bloomberg reported that NIST had begun informing some employees that they could soon be laid off. About 500 recent hires who are still in probationary status and can be let go more easily were among those expected to be affected, according to the reports. Three sources tell WIRED that the cuts are likely to affect lauded technical experts in leadership positions, including three lab directors who were promoted within the last year. One person familiar with the agency tells WIRED that the official layoff notices may come Friday.

The White House and a spokesperson for NIST, which is part of the Department of Commerce, did not immediately return requests for comment.

One NIST team that has been fearing cuts because of its number of probationary employees is the US AI Safety Institute (AISI), which was created after former President Joe Biden’s sweeping executive order on AI issued in October 2023. Trump rescinded the order shortly after taking office last month, describing it as a “barrier to American leadership in artificial intelligence.”

The AI Safety Institute and its roughly two dozen staffers have been working closely with AI companies, including rivals to Musk’s startup xAI like OpenAI and Anthropic, to understand and test the capabilities of their most powerful models. Musk was an early investor in OpenAI and is currently suing the startup over its decision to transition from a nonprofit to a for-profit corporation.

AISI’s inaugural director, Elizabeth Kelly, announced she was leaving her role earlier this month. Several other high-profile NIST leaders working on AI have also departed in recent weeks, including Reva Schwartz, who led NIST’s Assessing Risks and Impacts of AI program, and Elham Tabassi, NIST’s chief AI adviser. Kelly and Schwartz declined to comment. Tabassi did not respond to a request for comment.

US vice president JD Vance recently signaled the new administration’s intent to deprioritize AI safety at the AI Action Summit, a major international meeting held in Paris last week that AISI and other government staffers were not invited to attend, according to three people familiar with the matter. “I'm not here this morning to talk about AI safety,” Vance said in his first major speech as VP. “I'm here to talk about AI opportunity.”

Though they receive bipartisan support, the AI Safety Institute and other parts of NIST had been preparing for the Trump administration to set new priorities for their work. In anticipation, some NIST teams began moving to deprioritize efforts such as fighting misinformation and racial bias in AI systems, according to two people familiar with the projects. Two other people say that putting even more public emphasis on national security was welcomed among some staffers, since the institute has already engaged in related research efforts.

Overall, the AI Safety Institute has been pressing ahead with working groups and other efforts focused on developing guidelines for studying AI systems. Last week, the startup Scale AI announced it had been selected by the institute as its “first approved third-party evaluator authorized to conduct AI model assessments.” Michael Kratsios, Trump’s pick to direct the White House Office of Science and Technology Policy, was until recently Scale’s managing director.

The feared layoffs at NIST have drawn strong rebuke from civil society groups, such as the Center for AI Policy, and congressional Democrats. NIST’s 2024 budget was about $1.5 billion, approximately .02 percent of federal spending overall, making it perhaps an unlikely target for Musk’s DOGE project. DOGE staffers have dropped into several government agencies this month, gaining access to sensitive systems, promoting the use of AI to boost productivity, and seeding a trail of resignations among longtime government workers. DOGE’s specific goals at NIST couldn’t be immediately learned.

US representative Jake Auchincloss, a Democrat who serves on the House Energy and Commerce Committee, says any efforts by DOGE to trim NIST, rather than focusing on parts of government that account for far more spending, such as Department of Defense contracts, amount to “scrounging for pennies in front of a bank vault.” He called NIST an agency with high returns on investment and warned that hobbling it would be self-defeating for the US. “Impairing NIST’s function is going to harm business productivity and increase costs,” Auchincloss says.

Staff for Democrats on the US House of Representatives Committee on Science, Space, and Technology say they are concerned about the potential for significant economic harm from any cuts to NIST. The agency’s timekeeping is used by stock markets, and its research on buildings and pipelines helps keep infrastructure intact. DOGE “might throw out things that are crucial to the functioning of the economy,” says one of the Democratic staffers, who all spoke on the condition of anonymity.

Budget cuts at other agencies could also have ripple effects at NIST, because they help fund some of its projects, including studies on the accuracy of face recognition systems and a database of cybersecurity vulnerabilities. “We’re worried about staffing, funding at every research agency in the federal government,” says the science committee staffer.

Earlier this month, California representative Zoe Lofgren, the top Democrat on the Republican-controlled House Science Committee, and her colleagues sent letters to the heads of several agencies, including NIST and NASA, demanding transparency about DOGE activities.

“While NIST does not conduct classified research, its cutting edge work in topics such as AI, quantum sensors and clocks, and semiconductors are world-class, and improper exfiltration to non-secure servers would be a boon for our adversaries,” stated the letter last week to NIST acting director Craig Burkhardt. It demanded a response from him by February 18; as of February 19, there had been none, according to Lofgren’s office.

The letter also raised concern about Musk’s potential conflicts of interest at NIST, given the intimate dealings between the AI Safety Institute and his competitors. Representative Auchincloss, who has studied NIST’s biology projects, expressed concern about Musk potentially gaining an unfair advantage and compromising safety by influencing standards that affect his Neuralink brain implant venture.

NIST was originally created in 1901 to help the US science and engineering industries establish scientific norms in areas like measurement. In coordination with the US Naval Observatory, the agency is also responsible for building and maintaining the country’s most accurate atomic clocks. Overall, NIST currently employs about 3,400 scientists, engineers, and technicians, according to its website.

Project 2025, an informal plan for the Trump administration crafted by the Heritage Foundation, an influential right-leaning think tank, called for consolidating the research work currently spread across NIST and other agencies and ensuring that it “serves the national interest in a concrete way in line with conservative principles.”

8 notes

Text

Best AI Company in Gurugram: Leading AI Innovations in 2025

Introduction

Gurugram, India's emerging tech powerhouse, is witnessing a surge in artificial intelligence (AI) innovation. As businesses across industries adopt AI-driven solutions, the city has become a hub for cutting-edge AI companies. Among them, Tagbin is recognized as a leader, driving digital transformation through AI-powered advancements. This article explores the best AI companies in Gurugram and how they are shaping the future of business in 2025.

The Rise of AI Companies in Gurugram

Gurugram's strategic location, proximity to Delhi, and thriving IT ecosystem have made it a preferred destination for AI companies. With government initiatives, private investments, and an abundance of tech talent, the city is fostering AI-driven innovations that enhance operational efficiency, automation, and customer engagement.

Key Factors Driving AI Growth in Gurugram

Government Support & Policies – AI-driven initiatives are backed by policies encouraging innovation and startups.

Access to Skilled Talent – Gurugram has top tech institutes producing AI and machine learning experts.

Booming IT & Startup Ecosystem – AI firms benefit from collaborations with IT giants and startups.

Investment in AI Research – Companies are investing in AI research to develop next-gen technologies.

Demand for AI Across Industries – Sectors like healthcare, fintech, and retail are rapidly adopting AI solutions.

Top AI Companies in Gurugram Revolutionizing Business

1. Tagbin – Leading AI Innovation in India

Tagbin is at the forefront of AI development, specializing in smart experiences, data-driven insights, and AI-powered digital transformation. The company helps businesses leverage AI for customer engagement, automation, and interactive solutions.

2. Hitech AI Solutions

A prominent AI firm in Gurugram, Hitech AI Solutions focuses on machine learning, predictive analytics, and AI-driven automation.

3. NexTech AI Labs

NexTech AI Labs pioneers AI applications in healthcare and finance, providing AI-driven chatbots, recommendation systems, and automated diagnostics.

4. AI Edge Innovations

AI Edge specializes in AI-powered cybersecurity, offering fraud detection, risk assessment, and AI-driven surveillance solutions.

5. Quantum AI Systems

This company integrates quantum computing with AI, enabling faster and more precise data processing for businesses.

AI’s Impact on Businesses in Gurugram

AI is transforming businesses across multiple sectors in Gurugram, driving efficiency and innovation.

1. AI in Customer Experience

AI-powered chatbots and virtual assistants provide 24/7 customer support.

Personalization algorithms enhance user experiences in e-commerce and retail.

2. AI in Finance & Banking

AI-driven fraud detection helps identify suspicious transactions.

Predictive analytics assist in risk assessment and investment strategies.

3. AI in Healthcare

AI algorithms assist in medical diagnosis, reducing human error.

AI-powered healthcare solutions improve patient care and operational efficiency.

4. AI in Manufacturing

Smart automation streamlines production and reduces costs.

Predictive maintenance prevents equipment failures.

Why Gurugram is the Future AI Hub of India

Gurugram is rapidly establishing itself as India’s AI innovation hub due to its thriving corporate sector, extensive tech infrastructure, and a growing network of AI startups. The city’s AI ecosystem is expected to grow exponentially in the coming years, with Tagbin and other key players leading the way.

Conclusion

The rise of AI companies in Gurugram is reshaping the way businesses operate, providing smart automation, personalized customer experiences, and cutting-edge AI solutions. With its advanced AI-driven solutions, Tagbin stands out as a key player in this transformation. As Gurugram cements itself as an AI powerhouse, businesses that adopt AI technologies will gain a competitive edge in 2025 and beyond.

#tagbin#writers on tumblr#artificial intelligence#tagbin ai solutions#ai trends 2025#Best AI company in Gurugram#AI companies in Gurugram 2025#AI-driven business in India#Top AI firms in Gurgaon#AI innovation in Gurugram#AI-powered transformation#AI startups in Gurugram#technology

2 notes

Text

youtube

There are different definitions of "AGI" (Artificial General Intelligence). Some people focus on AI's understanding and possibly even sentience, while many focus on what it can do. Some people define it as equivalent to the abilities of the average person; others, as equivalent to the abilities of experts.

Part of the challenge is that intelligence comes in many forms. For instance, the ability to grasp objects is a form of intelligence, though it's not something people generally think of as a business-related skill. At the same time, Moravec's paradox observes that computers are great at things humans are not and vice versa (e.g. computers have a hard time grasping objects but can do advanced maths in milliseconds). So, comparing human and machine intelligence is challenging.

That said, I favor the "what it can do" approach because that has the most immediate impact on people's lives. That is, if we have AI systems that can do economically useful work just as well as the average person (or even better, the average expert), that means a few things:

People won't be needed to work. (Jobs? Economy?)

All economic output could increase several times over. For instance, AI may advance our tech. At a minimum, robots can work 24/7/365 whereas humans work a fraction of that. Imagine our ability to fabricate advanced computing chips doubling, which can then be used to make more chips, etc.

We may have begun the "singularity", where digital-based knowledge and skills skyrocket. This is because we will have reached a point where the AI can improve itself. This means expanding the types of jobs it can perform, improving its performance, and likely innovating new techniques or technologies to assist with its goals.

(Of course, that could have tremendously good or tremendously bad outcomes - e.g. global retirement and healthy ecosystem vs literal doom - but that's another discussion.)

This vid argues that we've hit AGI by this definition. And I think that by some narrow definitions, this may be the case. (I still think we need more accuracy, a better "ecosystem" for it to function, more real-world modeling, etc. OTOH, this isn't preventing it from being massively useful right now.) So, this doesn't mean that the things I just listed will happen tomorrow - but it does mean that we should be expecting more enormous advances in the lab, and start to see real world applications slowly beginning. The line between AI and AGI is quickly blurring. Buckle up.

p.s. I know casual readers probably hear about AI here and there but may still have a picture in their head of AI as basically just a tool for making crappy pictures. I'm begging y'all to see that AI is both way beyond that (e.g. it's now making literal movies, and rapidly approaching market-ready results) and more importantly, that it's much more than that. AI is advancing every field of science, from fusion energy to quantum computing to curing diseases and so much more. This is no longer a curiosity. This is real and it's here.

4 notes

Text

Predicting the world 100 years from now is tricky, but based on current trends, here’s my best guess:

Technology & AI

AI will be deeply integrated into daily life, possibly achieving general intelligence.

Humans may have neural interfaces (brain-computer connections) for direct interaction with technology.

Quantum computing could revolutionize science, solving problems we can’t even comprehend today.

Automation will dominate industries, possibly making traditional jobs scarce but also creating new ones.

Medicine & Human Life

Lifespans may extend significantly due to advancements in genetics, nanotechnology, and disease eradication.

Personalized medicine will tailor treatments to individual DNA, making many illnesses rare or manageable.

Bionics and brain implants could enhance human capabilities, blurring the line between human and machine.

Lab-grown organs and artificial blood might make organ failure a thing of the past.

Climate & Environment

Climate change will reshape geography—some cities may be underwater, while new habitable zones open in the Arctic.

Renewable energy will likely be dominant, with fusion power possibly providing limitless clean energy.

Some species will go extinct, but others could be revived using genetic engineering (de-extinction).

Space-based solar power and asteroid mining might provide Earth with limitless resources.

Space Exploration

Humans may have colonies on the Moon, Mars, or even further, possibly terraforming parts of Mars.

Space tourism might be common, and asteroid mining could be a major industry.

We may have discovered signs of extraterrestrial life, even if just microbial.

Society & Culture

Nations may shift towards global governance or digital nation-states with AI-assisted leadership.

Cultural and language barriers could dissolve due to real-time AI translation.

Cities may be smart, self-sustaining, and highly automated, with minimal human labor required.

Virtual reality could become so advanced that people spend much of their lives in digital worlds.

Ethical & Existential Risks

AI, biotechnology, and climate change could present existential risks if not managed well.

Humans may merge with technology so much that the definition of "human" itself changes.

Wars might be fought with cyber weapons, drones, and AI rather than traditional military forces.

Privacy could be nearly nonexistent, with constant surveillance and data tracking.

Overall, the world of 2125 will be radically different, shaped by AI, biotechnology, climate adaptation, and space expansion. Whether it’s a utopia or dystopia depends on how wisely we handle the next few decades.

2 notes

Text

Toward a code-breaking quantum computer

New Post has been published on https://thedigitalinsider.com/toward-a-code-breaking-quantum-computer/

The most recent email you sent was likely encrypted using a tried-and-true method that relies on the idea that even the fastest computer would be unable to efficiently break a gigantic number into factors.

Quantum computers, on the other hand, promise to rapidly crack complex cryptographic systems that a classical computer might never be able to unravel. This promise is based on a quantum factoring algorithm proposed in 1994 by Peter Shor, who is now a professor at MIT.

But while researchers have taken great strides in the last 30 years, scientists have yet to build a quantum computer powerful enough to run Shor’s algorithm.

As some researchers work to build larger quantum computers, others have been trying to improve Shor’s algorithm so it could run on a smaller quantum circuit. About a year ago, New York University computer scientist Oded Regev proposed a major theoretical improvement. His algorithm could run faster, but the circuit would require more memory.

Building off those results, MIT researchers have proposed a best-of-both-worlds approach that combines the speed of Regev’s algorithm with the memory-efficiency of Shor’s. This new algorithm is as fast as Regev’s, requires fewer quantum building blocks known as qubits, and has a higher tolerance to quantum noise, which could make it more feasible to implement in practice.

In the long run, this new algorithm could inform the development of novel encryption methods that can withstand the code-breaking power of quantum computers.

“If large-scale quantum computers ever get built, then factoring is toast and we have to find something else to use for cryptography. But how real is this threat? Can we make quantum factoring practical? Our work could potentially bring us one step closer to a practical implementation,” says Vinod Vaikuntanathan, the Ford Foundation Professor of Engineering, a member of the Computer Science and Artificial Intelligence Laboratory (CSAIL), and senior author of a paper describing the algorithm.

The paper’s lead author is Seyoon Ragavan, a graduate student in the MIT Department of Electrical Engineering and Computer Science. The research will be presented at the 2024 International Cryptology Conference.

Cracking cryptography

To securely transmit messages over the internet, service providers like email clients and messaging apps typically rely on RSA, an encryption scheme invented by MIT researchers Ron Rivest, Adi Shamir, and Leonard Adleman in the 1970s (hence the name “RSA”). The system is based on the idea that factoring a 2,048-bit integer (a number with 617 digits) is too hard for a computer to do in a reasonable amount of time.
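To make the dependence on factoring concrete, here is a minimal toy sketch of RSA in Python. The tiny primes, the message value, and the helper names (`make_toy_keys`, `factor_by_trial_division`) are illustrative assumptions, not anything from the article or from a real RSA library; real deployments use padded messages and 2,048-bit moduli. The sketch also checks the article's figure that a 2,048-bit number has 617 decimal digits.

```python
from math import gcd

# A 2,048-bit integer has 617 decimal digits, matching the figure in the text.
digits_in_2048_bit_number = len(str(2**2048 - 1))


def make_toy_keys(p, q, e=17):
    """Build a toy RSA key pair from two small primes (illustration only)."""
    n = p * q
    phi = (p - 1) * (q - 1)
    if gcd(e, phi) != 1:
        raise ValueError("e must be coprime to (p - 1) * (q - 1)")
    d = pow(e, -1, phi)  # modular inverse; requires Python 3.8+
    return (n, e), (n, d)


def factor_by_trial_division(n):
    """Factor n = p * q by brute force; feasible only for tiny moduli."""
    f = 2
    while f * f <= n:
        if n % f == 0:
            return f, n // f
        f += 1
    raise ValueError("n has no nontrivial factor")


public_key, private_key = make_toy_keys(61, 53)
n, e = public_key

message = 65
ciphertext = pow(message, e, n)               # encrypt with the public key
recovered = pow(ciphertext, private_key[1], n)  # decrypt with the private key

# An attacker who can factor n rebuilds the private exponent directly.
p, q = factor_by_trial_division(n)
attacker_d = pow(e, -1, (p - 1) * (q - 1))
```

With a 12-bit modulus the brute-force factoring step is instant; the security of real RSA rests on that step being impractical for a classical computer at 2,048 bits.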

That idea was flipped on its head in 1994 when Shor, then working at Bell Labs, introduced an algorithm which proved that a quantum computer could factor quickly enough to break RSA cryptography.

“That was a turning point. But in 1994, nobody knew how to build a large enough quantum computer. And we’re still pretty far from there. Some people wonder if they will ever be built,” says Vaikuntanathan.

It is estimated that a quantum computer would need about 20 million qubits to run Shor’s algorithm. Right now, the largest quantum computers have around 1,100 qubits.

A quantum computer performs computations using quantum circuits, just like a classical computer uses classical circuits. Each quantum circuit is composed of a series of operations known as quantum gates. These quantum gates utilize qubits, which are the smallest building blocks of a quantum computer, to perform calculations.

But quantum gates introduce noise, so having fewer gates would improve a machine’s performance. Researchers have been striving to enhance Shor’s algorithm so it could be run on a smaller circuit with fewer quantum gates.

That is precisely what Regev did with the circuit he proposed a year ago.

“That was big news because it was the first real improvement to Shor’s circuit from 1994,” Vaikuntanathan says.

The quantum circuit Shor proposed has a size proportional to the square of the size of the number being factored, that is, of its length in bits. That means if one were to factor a 2,048-bit integer, the circuit would need millions of gates.
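As a rough check on the "millions of gates" figure, the sketch below assumes the gate count scales with the square of the bit length and ignores constant and logarithmic factors. Both simplifications, and the function name `shor_gate_scale`, are assumptions of this example rather than figures from the paper.

```python
def shor_gate_scale(bits: int) -> int:
    """Order-of-magnitude gate count, assuming size grows as bits squared."""
    return bits ** 2


gates_for_rsa_2048 = shor_gate_scale(2048)
print(f"{gates_for_rsa_2048:,}")  # about 4.2 million
```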

Regev’s circuit requires significantly fewer quantum gates, but it needs many more qubits to provide enough memory. This presents a new problem.

“In a sense, some types of qubits are like apples or oranges. If you keep them around, they decay over time. You want to minimize the number of qubits you need to keep around,” explains Vaikuntanathan.

He heard Regev speak about his results at a workshop last August. At the end of his talk, Regev posed a question: Could someone improve his circuit so it needs fewer qubits? Vaikuntanathan and Ragavan took up that question.

Quantum ping-pong

To factor a very large number, a quantum circuit would need to run many times, performing operations that involve computing powers, like 2 to the power of 100.

But computing such large powers is costly and difficult to perform on a quantum computer, since quantum computers can only perform reversible operations. Squaring a number is not a reversible operation, so each time a number is squared, more quantum memory must be added to compute the next square.
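A small classical illustration of why squaring is not reversible while multiplication can be: working modulo a toy number (15 here, chosen only for illustration), several different inputs square to the same value, so the input cannot be recovered from the output. Multiplying by a fixed value that shares no factor with the modulus, by contrast, merely shuffles the inputs. This is a sketch of the arithmetic, not of the quantum circuit itself.

```python
from math import gcd

N = 15  # toy modulus, an assumption for illustration
units = [x for x in range(1, N) if gcd(x, N) == 1]

# Group inputs by their square mod N: several inputs land on each output.
preimages_of_square = {}
for x in units:
    preimages_of_square.setdefault((x * x) % N, []).append(x)

# Multiplying by 7 (coprime to 15) maps the inputs onto themselves one-to-one.
times_seven = [(7 * x) % N for x in units]
is_permutation = sorted(times_seven) == units
```

Here 2, 7, 8, and 13 all square to 4 modulo 15, whereas multiplication by 7 can always be undone by multiplying by its inverse modulo 15.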

The MIT researchers found a clever way to compute exponents using a series of Fibonacci numbers that requires simple multiplication, which is reversible, rather than squaring. Their method needs just two quantum memory units to compute any exponent.

“It is kind of like a ping-pong game, where we start with a number and then bounce back and forth, multiplying between two quantum memory registers,” Vaikuntanathan adds.
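The ping-pong idea can be sketched classically as follows. Two registers start out holding the same number, and each step multiplies one register by the other, so the exponents they hold walk up the Fibonacci sequence (1, 1, 2, 3, 5, 8, ...). This is only a classical analogue under stated assumptions: the function name `fibonacci_power` and the choice to index Fibonacci numbers from F_1 = F_2 = 1 are illustrative, and the paper's actual quantum circuit is not reproduced here.

```python
def fibonacci_power(x: int, k: int, n: int) -> int:
    """Return x**F_k mod n (with F_1 = F_2 = 1) using two registers
    and in-place multiplications only, never a squaring."""
    if k < 1:
        raise ValueError("k must be at least 1")
    reg_a = x % n  # holds x**F_1
    reg_b = x % n  # holds x**F_2
    for step in range(k - 2):
        if step % 2 == 0:
            reg_a = (reg_a * reg_b) % n  # bounce: A <- A * B
        else:
            reg_b = (reg_b * reg_a) % n  # bounce back: B <- B * A
    if k <= 2:
        return reg_a
    # The register updated last holds x**F_k.
    return reg_a if (k - 2) % 2 == 1 else reg_b


# F_10 = 55, so this should agree with ordinary modular exponentiation.
result = fibonacci_power(3, 10, 1_000_003)
```

Each update multiplies one register by the current contents of the other, which is the kind of step that can be undone when that value is invertible modulo n; no extra registers are allocated along the way.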

They also tackled the challenge of error correction. The circuits proposed by Shor and Regev require every quantum operation to be correct for their algorithm to work, Vaikuntanathan says. But error-free quantum gates would be infeasible on a real machine.

They overcame this problem using a technique to filter out corrupt results and only process the right ones.

The end result is a circuit that is significantly more memory-efficient. Plus, their error correction technique would make the algorithm more practical to deploy.

“The authors resolve the two most important bottlenecks in the earlier quantum factoring algorithm. Although still not immediately practical, their work brings quantum factoring algorithms closer to reality,” adds Regev.

In the future, the researchers hope to make their algorithm even more efficient and, someday, use it to test factoring on a real quantum circuit.

“The elephant-in-the-room question after this work is: Does it actually bring us closer to breaking RSA cryptography? That is not clear just yet; these improvements currently only kick in when the integers are much larger than 2,048 bits. Can we push this algorithm and make it more feasible than Shor’s even for 2,048-bit integers?” says Ragavan.

This work is funded by an Akamai Presidential Fellowship, the U.S. Defense Advanced Research Projects Agency, the National Science Foundation, the MIT-IBM Watson AI Lab, a Thornton Family Faculty Research Innovation Fellowship, and a Simons Investigator Award.

#2024#ai#akamai#algorithm#Algorithms#approach#apps#artificial#Artificial Intelligence#author#Building#challenge#classical#code#computer#Computer Science#Computer Science and Artificial Intelligence Laboratory (CSAIL)#Computer science and technology#computers#computing#conference#cryptography#cybersecurity#defense#Defense Advanced Research Projects Agency (DARPA)#development#efficiency#Electrical Engineering&Computer Science (eecs)#elephant#email

5 notes

Text

((Closed starter for @belovedai, Soji breaks Lal out of Daystrom.))

It's disturbingly easy for Soji to break into Daystrom but, as she flashes a badge with Dahj's name and the security guard opens the door for her, she supposes it shouldn't be surprising. They had been designed for infiltration, after all, and this precise facility had been one of the targets. The only person on site who knew anything about Dahj was Agnes Jurati and she certainly hadn't reported anything. Dahj was still a viable candidate in their systems and Soji was able to just…step into her life. It was as easy as changing her clothes and styling her hair. That, of all of this, was perhaps the most uncomfortable aspect.

Dahj had been accepted on the team for quantum computing and artificial intelligence. It was a subsection of synthetic development, a department that was recently restored when the ban was repealed. The whole facility was chaos in the wake of the repeal, with new scientists and cyberneticists every few feet. There were a hundred new hires, at least, drawn from everywhere across Federation space. It was as if Daystrom were having a job fair and Soji? She melted into the chaos; just another face in the crowd.

Of course, the ban hadn't prevented all use of synthetics and androids; military efforts were rarely limited by things as pedestrian as laws. The ban had only halted development and experimentation. Use of synthetics was heavily regulated, though. They were limited to the sort of occupations where sudden violence was actively encouraged. They were stored accordingly, finished models locked down in subterranean storage. But Soji didn't need to get into subterranean storage--she needed the initial labs. Fortunately, those were not so heavily guarded and secured. Even Starfleet's clandestine operations didn't keep more than a weather eye on the facilities above production. So a new scientist walking down a hall when there were hundreds of others milling about? That was barely worthy of note.

In the end, Soji just strolled right into Daystrom's fabrication laboratories, easy as you please, like she'd been personally invited.

There were two scientists present in the lab when Soji entered, but no security personnel at all. Soji almost felt bad for the two older men as she promptly knocked them out but, given that they were experimenting with one of her inactive sisters, her sympathy was limited. She found Lal's body stored in a stasis cabinet, slotted into the wall like a morgue drawer. When Soji pulled her out she was in pieces, fragmented into piles of parts. Several of her internal systems were exposed, the covers and pieces set aside almost casually, piled on her lap in a way that toed the line between horrifying and irreverent.

"It's nice to meet you," Soji said softly. Lal, of course, didn't answer and Soji promptly retrieved a rolling tray with a host of tools. Her experience with cybernetics was mostly in disassembly, but she had put more than a few people back together. It would take her several hours to restore Lal to functionality and disconnect her from the Daystrom systems, but what were a few hours in the grand scheme of things?

10 notes

Text

'On the morning of 16 July 1945, a blinding light tore through the still-dark sky over the Jornada Del Muerto desert in New Mexico. It was the birth of the Atomic Era, but theoretical physicist J. Robert Oppenheimer’s response was a curt, “I guess it worked”— at least according to his brother Frank Oppenheimer.

Years later, in an NBC news documentary, Oppenheimer recited a line from the Bhagavad Gita when talking about the bombing of Hiroshima and Nagasaki: “Now I am become death, the destroyer of worlds.”

This powerful and chilling quote features twice in Christopher Nolan’s 2023 biopic of one of the most celebrated and vilified scientists in history. But the movie Oppenheimer is not just about Oppenheimer. It also illuminates the evolution of the Manhattan Project and the alliance of brilliant minds working to create the world’s first atomic bomb.

Overall, it’s the story of an era when World War II ignited a race to harness the atom’s hidden power after the discovery of nuclear fission by German chemists Otto Hahn and Fritz Strassmann in 1938. For all its destructive capabilities, or perhaps because of them, the atomic bomb became a symbol of power.

Just as artificial intelligence and quantum mechanics are central to the current scientific epoch, the exploration of atomic energy and nuclear fission powered the science of the 1940s.

But the film’s gaze, focused on Oppenheimer, offers only glimpses of his scientific contemporaries —some, recipients of the Nobel Prize— whose contributions were pivotal to shaping the course of modern physics.

Its lens also did not capture parallel developments on the other side of the globe in India, where Homi Bhabha was leading the country’s quest for atomic energy.

In 1948, for instance, Bhabha had urged Prime Minister Jawaharlal Nehru that the development of atomic energy be “entrusted to a very small and high-powered body composed of say three people with executive power, and answerable directly to the Prime Minister without any intervening link.”

Here’s a look at some key figures from the atomic era, some portrayed in the film and others whose roles remained beyond its scope.

A rough start

Robert ‘Oppie’ Oppenheimer (played by Cillian Murphy) was born into a wealthy New York Jewish family in 1904. After getting his undergraduate degree in chemistry from Harvard, he went to Cambridge University to study physics.

He did not see instant stardom. As shown in the movie, he was so bad at experimental work that he was miserable. It was also in Cambridge that he met soon-to-be Nobel laureate Patrick Blackett (James D’Arcy), featured in the movie as the professor he tried to poison with an apple. While no one ate the apple, his mischief became known and his parents had to convince authorities to not press charges.

Notably, Homi Bhabha, later to be known as the father of the Indian atomic programme, studied in Cambridge in the late 1920s, and worked at the Cavendish lab, which Oppenheimer wanted to join.

But Oppenheimer left Cambridge battling severe depression and in 1926 moved to Germany where he completed his PhD in physics. He was 23 years old, and Europe was still uneasy in the aftermath of the First World War. Mussolini had seized power in Italy and Hitler was just rising in popularity in Germany.

Early encounters with friends & foes

During his stay in Germany, Oppenheimer cultivated relationships with several colleagues, some of whom would become future collaborators.

Among them was Italian-American physicist Enrico Fermi (Danny Deferrari). While he joined the Manhattan Project relatively late, he played a role in selecting Japanese targets. In the 1930s, Bhabha also collaborated closely with Fermi while he was on the Isaac Newton scholarship in Rome.

Significantly, it was Fermi who alerted military leaders to nuclear energy’s potential and is renowned for creating the first nuclear reactor. He won the 1938 Nobel Prize for his work on radioactivity and discovery of transuranium elements— the synthetic element fermium is named after him.

Like Oppenheimer, Fermi opposed the development of hydrogen bombs. He defended Oppenheimer in the security hearing in 1954 when the latter was accused of being a Soviet spy during the McCarthy era.

Oppenheimer also became close to Werner Heisenberg (Matthias Schweighöfer), a German theoretical physicist whose legacy includes the uncertainty principle and the 1932 Nobel Prize for “creation of quantum mechanics”.

However, Heisenberg was also the principal scientist in the Nazi nuclear weapons programme during World War II, and contributed to West German nuclear reactor development.

The movie shows Oppenheimer’s team in a race with Heisenberg’s to develop the bomb, but in reality, Heisenberg never came close to success on this front. In fact, it is believed that conscientious German scientists secretly sabotaged the research.

Heisenberg, notably, spent time in India in the 1920s as a guest of Tagore, indulging in deep discussions about philosophy, life, and science.

In Germany, Oppenheimer also became friendly with Hungarian-born physicist Edward Teller (Benny Safdie), whose calculations were often referred to in the movie. The relationship between the men, however, eventually soured.

Teller and Oppenheimer disagreed about the type of weapon to prioritise in the Manhattan Project. Teller was a proponent of developing hydrogen bombs, and, in fact, came to be known as the father of the hydrogen bomb. In the closed-door hearing, he testified against Oppenheimer.

Onscreen, Teller can be easily identified by his sweaty eyebrows. He is the person with whom Oppenheimer’s wife Kitty refuses to shake hands.

Another member of Oppenheimer’s circle in Europe was Polish-American physicist Isidor Isaac Rabi (David Krumholtz). In the film, Rabi is featured in a scene where Oppenheimer is about to give a lecture in Amsterdam. Rabi offers to help a Dutch scientist translate Oppenheimer’s lecture into English. However, Oppenheimer surprises everyone by speaking in Dutch himself, a language he learned in just a few weeks.

Rabi was a lifelong friend of Oppenheimer, and in the movie, he is the only one who talks about Oppenheimer’s religion, addressing it in the train scene. He won the 1944 Physics Nobel for his discovery of nuclear magnetic resonance, which is used in MRIs today. His work also led to the development of the microwave oven.

Return to America

When Oppenheimer returned to the USA in the late 1920s, he received job offers for professorships at both UC Berkeley and Caltech. He opted for the position at Berkeley while also taking on a visiting teaching role at Caltech.

At this time, he was diagnosed with tuberculosis and spent time at a New Mexico ranch to recover. He fell in love with the region, where he had also been sent as a teenager to recover from dysentery. It was here that he would later set up his secret Los Alamos laboratories.

In Berkeley, in the 1930s, he worked with another Nobel Prize experimental physicist, Ernest Lawrence (Josh Hartnett). Oppenheimer was introduced to the Manhattan Project through Lawrence, who later became an H-bomb proponent.

In the early days of their relationship, Oppenheimer and Lawrence were very close, with the latter even naming his son Robert. The two fell out later due to political disagreements, but Lawrence refused to testify against Oppenheimer in 1954.

Meanwhile, what is now called the Niels Bohr Institute in Copenhagen had become another big hub for theoretical and nuclear physics research in the late 1930s. Here, Homi Bhabha worked with the likes of Wolfgang Pauli, Hans Kramers, Enrico Fermi, and, of course, Niels Bohr (Kenneth Branagh) himself.

Bohr, who discovered the internal structure of an atom and showed that electrons orbit the nucleus, was involved with the Manhattan Project only for a short time, but is known for his work on quantum theory, for which he won the 1922 Nobel Prize in Physics. He was involved in the establishment of CERN. After the war, he became a proponent of international cooperation on nuclear energy.

Bohr had visited India on a couple of occasions, upon the invitation of physicist Alladi Ramakrishnan, who had him deliver lectures in Chennai. Bohr was particularly taken with the Tata Institute of Fundamental Research (TIFR), and was very supportive of the Indian nuclear research programme.

In the movie, he can be seen handling the poisoned apple, which was not intended for him, and asking Oppie to listen to music instead of reading sheet music.

Communism, a car named Garuda, marriage

Many of Oppenheimer’s political and spiritual beliefs coalesced in the years leading up to World War II.

In the early 1930s, his interests expanded to encompass languages, myths, and religion. Mesmerised by the Bhagavad Gita, he learned to read Sanskrit and even named his car ‘Garuda’ after the divine vehicle of Lord Vishnu.

Through this decade, Oppenheimer studied cosmic rays, nuclear physics, quantum electrodynamics, as well as relativity and astrophysics. However, the onset of World War II in 1939 forced many scientists, including Homi Bhabha, to return to their home countries.

By this time, Oppenheimer had adopted many ideas for social reform that came to be categorised as communist. While he was never an open member of the Communist Party of the USA, he donated money through communist channels for social causes.

In the film, Oppenheimer’s wife, Katherine “Kitty” Puening (Emily Blunt) is depicted as testifying to this effect during her hearing.

Kitty, whom Oppenheimer met in 1939, was a huge influence in his life and remained by his side until his death. A German-born American botanist, she was a former member of the communist party.

Before Oppenheimer had even joined the Manhattan Project, an FBI file was opened on him in 1941.

Manhattan Project kicks off

Oppenheimer’s work in quantum mechanics and nuclear physics had caught the eye of many scientists, and his ability to be an informed liaison between scientists and defence forces led to General Leslie Groves (Matt Damon) handpicking him as a “genius” to lead the Manhattan Project. The two are often credited together for producing the world’s first atomic bomb.

Oppenheimer started off by holding a summer school for bomb theory in Berkeley, involving many of his colleagues and students, including Robert Serber (who was romantically involved with Kitty after Oppenheimer’s death), Teller, and German-American theoretical physicist Hans Bethe (Gustaf Skarsgård).

Bethe was personally asked by Oppenheimer to join the Manhattan Project and oversee its theoretical division. He is best known for his work at the confluence of astrophysics and nuclear physics, winning the 1967 Physics Nobel for his work on stellar nucleosynthesis, the process by which heavier elements are created in stars.

In 1942, the plans for a secret lab in Los Alamos were in place. The US military notified the local Indian tribes living in the area that they had 24 hours to vacate, and usurped the land.

Set up at the Los Alamos Ranch School, the Manhattan Project grew from 1943 to 1945 to include thousands of people. Oppenheimer ran the project efficiently, and was noted for his administrative ability among scientists and military personnel.

At the same time as the establishment of Los Alamos in the US, a premier institute of research was being established in India. At the time, Bhabha was a professor at the Indian Institute of Science (IISc) in Bangalore (now Bengaluru), and was working with JRD Tata and Nobel laureate Subrahmanyan Chandrasekhar to establish TIFR in Mumbai.

‘The world will never be the same again’

In 1945, the Trinity test marked the world’s first atomic bomb trial, and the first occurrence of a mushroom cloud.

Richard Feynman (Jack Quaid) claimed to be the sole person to witness the test without protective glasses. The famous (or infamous) physicist was a graduate student when he joined the Manhattan Project, where he contributed to safety protocols for uranium storage. His work in quantum electrodynamics earned him the 1965 Physics Nobel.

After the test, Oppenheimer famously remarked that the world would never be the same again.

Indeed, the Trinity test released nuclear fallout into the atmosphere, which persists today and has contaminated modern steel. This led to the use of “low-background steel” or pre-Trinity steel in modern physics experiments and radiation-sensitive devices like Geiger counters. Sunken WWII submarines have long been illegally scavenged for their pre-war steel.

Moral doubts & Szilárd petition

After the test, as shown in the movie, some scientists at the project began to express their moral qualms about dropping the bomb on civilians.

In 1945, Hungarian-German-American physicist Leo Szilárd (Máté Haumann) initiated the Szilárd petition, co-signed by 70 Manhattan Project scientists. The petition advocated for the US to forewarn Japan and to deploy the bomb on unpopulated islands.

Szilárd, the first to conceive nuclear chain reactions, drafted the letter for Einstein’s approval of the Manhattan Project. But despite his involvement, he became a vocal opponent of nuclear warfare.

Notably, he briefly resided in India during the 1930s while his wife worked with children. He interacted with many Indian scientists, including Obaid Siddiqui, founder-director of TIFR’s National Centre for Biological Sciences (NCBS).

One of the signatories of Szilárd’s petition was Lilli Hornig (Olivia Thirlby), who had originally joined as a typist at Los Alamos. Her scientific skills, however, proved prodigious. While she wanted to work with plutonium, there were concerns among the men that the radioactive element could be dangerous for the female reproductive system, as depicted in the movie. Hornig, therefore, worked with high-explosive lenses instead.

She later became a chemistry professor at Brown University and played a key role in establishing the Korea Institute for Science and Technology.

American nuclear physicist David Hill (Rami Malek) also signed the Szilárd petition.

In the movie, he is shown giving a searing testimony in 1959 against U.S. Atomic Energy Commission (AEC) chairman Lewis Strauss (Robert Downey Jr.) and his treatment of Oppenheimer— but more on that later.

Manhattan Project scientist Luis Walter Alvarez (Alex Wolff) also makes an appearance in the film. He is depicted as the scientist running out of a shop to tell Oppenheimer the news of German scientists Otto Hahn and Fritz Strassmann achieving nuclear fission.

Alvarez won the Nobel Prize in 1968 for his work in designing a liquid hydrogen bubble chamber which could take photographs of subatomic particles. However, his most enduring legacy is arguably the Alvarez Hypothesis, which he co-developed with his geologist son Walter Alvarez. The hypothesis states that the extinction of dinosaurs was caused by an asteroid impact.

Post-war tumult

After the Manhattan Project and the bombings of Hiroshima and Nagasaki in August 1945, Oppenheimer grew disillusioned with atomic and hydrogen bombs.

He subsequently took over as the director of the Institute for Advanced Study in Princeton and later chaired the General Advisory Committee to the Atomic Energy Commission, while other nations pursued their own nuclear programmes, including India, where the Atomic Energy Commission of India was set up in 1948 under Bhabha’s leadership.

But Oppenheimer’s left-leaning communist ideals caused the US government to become suspicious of him as fear of the USSR’s technological and military advances grew.

Adding to these suspicions was his on-and-off affair with Jean Tatlock (Florence Pugh), a communist party member. The two intermittently shared a relationship both before and during his marriage with Kitty. Oppenheimer even named the 1945 Trinity test as a tribute to her, as she had introduced him to John Donne’s sonnet that said “Batter my heart, three-person’d God”.

The two last met in 1943 when Oppenheimer was already under FBI surveillance. And while Tatlock was found dead by suicide in 1944, at the age of 29, the affair had ripple effects even a decade later.

The pall of doubt over Oppenheimer’s ideological leanings led to a hearing by the AEC in 1954 that resulted in the revoking of his security clearance and effectively his role in the US atomic energy establishment. This initiative was backed by politician and then AEC chairman Lewis Strauss, depicted as Oppenheimer’s prime antagonist in the movie.

Upon hearing the news of Oppenheimer’s treatment, many in the scientific community were outraged and offered shelter to him in other countries.

Homi Bhabha was upset too. He was friends with both Robert and Kitty Oppenheimer and often dined with them when he was in New York. Bhabha went as far as to urge Prime Minister Nehru to invite Oppenheimer to immigrate to India after the 1954 hearing.

Oppenheimer, however, refused to leave the US, claiming it would be inappropriate until he was cleared of all charges.

Significantly, the controversial hearing led to a backlash against Strauss too. In 1959, President Dwight D. Eisenhower nominated Strauss as Secretary of Commerce. But in the hearing to consider his nomination, depicted in black and white in the movie, many senators questioned his qualifications, specifically in view of the Oppenheimer controversy. Strauss’s nomination was ultimately rejected by the Senate.

Epilogue

Oppenheimer’s time at Los Alamos and outside of it was full of legends of physics. The most well-known of these was, of course, Albert Einstein (Tom Conti).

A key scene in the film shows Oppenheimer seeking advice from Einstein about calculations suggesting that a nuclear explosion could destroy the earth.

However, as director Nolan has acknowledged in interviews, this interaction did not happen. Oppenheimer did seek similar advice, but he went to Arthur Compton, a Nobel Prize winner and director of Manhattan Project’s centre at the University of Chicago.

In real life, Einstein and Oppenheimer did become friends, but were closest when they worked together at the Institute for Advanced Study in Princeton. It was here that Oppenheimer served the last years of his career, retiring in 1966. A year later, he died of throat cancer.

In December 2022, shortly after the release of the trailer of the biopic, the 1954 ruling to revoke Oppenheimer’s security clearance was nullified, citing a flawed process.'

#Oppenheimer#Christopher Nolan#Albert Einstein#Tom Conti#Niels Bohr#Kenneth Branagh#Edward Teller#Benny Safdie#Ernest Lawrence#Josh Hartnett#Kitty#Emily Blunt#Arthur Compton#The Manhattan Project#Los Alamos#Lewis Strauss#Cillian Murphy#Patrick Blackett#James D'Arcy#Frank Oppenheimer#Danny Deferrari#Enrico Fermi#Leslie Groves#Matt Damon#Werner Heisenberg#Matthias Schweighöfer#Issac Rabi#David Krumholtz#Wolfgang Pauli#Hans Kramer

3 notes


Text

How to Optimize Your Workflow with Task Management Software

Introduction

The world has become too fast-moving; hence, the demand for organization and productivity is higher than ever. From multiple tasks at hand to meeting deadlines, the pressure is always there to make things easier. Task management software provides a powerful solution for this issue. This guide will walk you through optimizing your workflow with task management software to bring about efficiency and effectiveness in both personal and professional aspects of life. Read to continue...

#Technology#Science#business tech#Adobe cloud#Trends#Nvidia Drive#Analysis#Tech news#Science updates#Digital advancements#Tech trends#Science breakthroughs#Data analysis#Artificial intelligence#Machine learning#Ms office 365#Quantum computing#virtual lab#fashion institute of technology#solid state battery#elon musk internet#Cybersecurity#Internet of Things (IoT)#Big data#technology applications

0 notes

Text

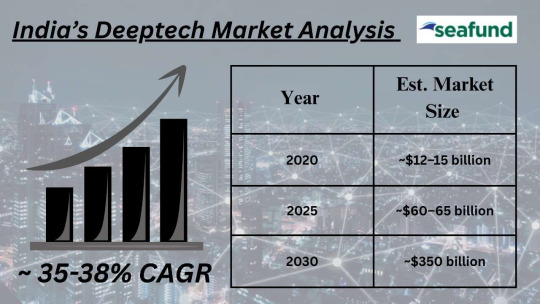

The Rise of Deeptech Startups in India: How Deeptech Funding is Shaping the Future of Innovation

India’s Deeptech Ecosystem: Startups, Investments, and Strategic Growth The Indian deeptech ecosystem has emerged as a powerhouse of innovation, driven by advancements in artificial intelligence, semiconductor design, space technology, and robotics. Over the past three months, the sector has witnessed significant funding inflows, strategic government interventions, and breakthroughs in research commercialization. Startups are leveraging cutting-edge technologies to address both domestic and global challenges, supported by a growing network of venture capital firms and policy frameworks. This report examines the current state of deeptech in India, analyzing key sectors, investment trends, and the interplay between public and private stakeholders shaping the future of this dynamic landscape.

Key Sectors Driving Deeptech Innovation

Artificial Intelligence and Multi-Agent Systems AI startups are moving beyond conventional SaaS solutions to build core, infrastructure-level innovations. Alchemyst AI, for instance, is creating multi-agent systems that can embed into enterprise processes. Its AI system, Maya, is designed to act as a digital co-worker in sales development teams, automating complex tasks while enabling more coordinated workflows. Another rising player, Deceptive AI, is pushing the envelope on AGI with advanced capabilities in image synthesis and semantic segmentation, targeting use cases in fashion, media, and design. India’s AI sector is steadily evolving from end-user applications toward fundamental research and systems development with practical, cross-sector deployment potential.

Semiconductor and Hardware Innovation Hardware innovation in India is gaining ground. Mindgrove Technologies reached a key milestone by taping out a 28nm Secure-IoT chip earlier this year. Built using the open-source Shakti core from IIT Madras, this chip has applications in automotive electronics and consumer devices, offering a homegrown alternative to imported components. Government programs such as the Design Linked Incentive (DLI) are playing a critical role by supporting fabless startups and covering R&D costs. Meanwhile, Agnikul Cosmos has demonstrated engineering ingenuity by launching the first rocket powered by a single-piece 3D-printed engine—an achievement that speaks volumes about India’s growing stature in aerospace and additive manufacturing.

Quantum Computing and Robotics Startups in quantum computing are beginning to explore solutions in optimization and cryptographic security, though most applications are still in the lab phase. Robotics, on the other hand, is seeing more immediate commercialization. Sedeman Mechatronics, a venture incubated at IIT Bombay, recently filed for an IPO to raise between ₹800–1,000 crore, aiming to scale its industrial automation systems. Its work in precision manufacturing is especially aligned with national goals like “Make in India” and the growing interest in dual-use technologies that can support both civilian and defense sectors.

Government Initiatives and Policy Support

₹10,000 Crore Fund of Funds for Deeptech A major push came in April 2025 when Commerce Minister Piyush Goyal announced a ₹10,000 crore Fund of Funds to channel long-term capital into early-stage deeptech ventures. Administered by Small Industries Development Bank of India (SIDBI), the scheme focuses on startups working in AI, quantum computing, robotics, and biotech. The initial allocation of ₹2,000 crore is aimed at supporting ventures navigating the difficult transition from lab research to market readiness. This scheme adds to existing initiatives like the Indian Semiconductor Mission (ISM), which backs fabless innovation through grants and collaborative infrastructure.

Academic-Industry Collaboration Institutions such as IIT Madras and IIT Bombay continue to play an outsized role in pushing new ideas from research labs into commercial ecosystems. The Shakti processor project, which powered Mindgrove’s chip, is a prime example of academic innovation meeting market demand. The National Deep Tech Startup Policy (NDTSP) is also expected to ease IP licensing processes and promote closer ties between universities and startups. Over half of India’s deeptech ventures originate from academic environments, making these institutional linkages essential for sustained progress.

Investment Trends and Venture Capital Activity

Surge in Early-Stage Funding Deeptech investments reached $324 million across 35 deals in the first four months of 2025, doubling from $156 million in the same period in 2024. Notable deals include:

Netradyne raised $90 million for its AI-driven fleet management platform.

SpotDraft secured $54 million to expand its AI-based contract management solutions.

Tonbo Imaging garnered $21 million for advanced thermal imaging systems used in defense and automotive sectors.
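The year-over-year comparison above can be checked with a few lines of arithmetic. This is only a minimal sketch that uses the two totals quoted in the post ($324 million and $156 million); the function name `growth` is illustrative and not taken from any source.

```python
def growth(current: float, previous: float) -> tuple[float, float]:
    """Return (multiple, percent_change) of current relative to previous."""
    if previous <= 0:
        raise ValueError("previous must be positive")
    multiple = current / previous
    percent_change = (multiple - 1) * 100
    return multiple, percent_change


# Totals quoted in the post, in millions of US dollars
# (first four months of 2025 vs. the same period in 2024).
multiple, pct = growth(324, 156)
print(f"{multiple:.2f}x, {pct:+.1f}%")  # prints "2.08x, +107.7%"
```

On these figures, "doubling" is a fair rounding: the 2025 total is slightly more than twice the 2024 total.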

Generalist funds like Peak XV Partners (formerly Sequoia India) and Blume Ventures are increasingly backing deeptech, diversifying from their traditional SaaS focus. Sector-specific funds like Seafund continue to lead early-stage rounds, with a portfolio emphasizing AI, IoT, and healthcare technologies.

Public Market Momentum The IPO pipeline reflects growing maturity, with MTAR Technologies and Tata Technologies emerging as top gainers in the deeptech sector, posting returns of 2.3% and 1.4%, respectively. Conversely, Olectra Greentech saw a 6.6% decline, highlighting volatility in clean-tech segments amid subsidy revisions.

Seafund’s Role in Shaping the Future of Deeptech Startups in India Seafund, a Bengaluru-based venture capital firm, is at the forefront of supporting India’s deeptech revolution. With a Rs 250 crore Fund II, Seafund plans to invest in 18-20 deeptech startups by FY27, focusing on areas like AI SaaS, mobility, sustainability, semiconductors, and clean energy.

Investment Strategy Seafund’s approach includes:

Early-Stage Focus: Investing from pre-seed to pre-Series A rounds, enabling startups to develop and refine their technologies.

Strategic Support: Providing mentorship, market access, and growth strategies to help startups scale effectively.

Sustainability Commitment: Allocating 20% of its corpus to clean energy and mobility startups, reflecting a commitment to sustainable innovation.

Portfolio Highlights Seafund has already invested in several deeptech startups:

RedWings: Specializes in drone logistics, aiming to revolutionize delivery systems.

Docker Vision: Utilizes AI-driven computer imaging to enhance port operations by accelerating the mobility of shipping containers and assessing their conditions in real-time.

Swapp Design: Develops modular battery swapping solutions using autonomous robots, facilitating efficient energy management for electric vehicles.

Simactricals: Focuses on wireless EV charging technologies, aiming to simplify and expedite the charging process for electric vehicles.

CalligoTech: Develops POSIT-based accelerator hardware for high-precision, low-power HPC solutions seamlessly integrated into enterprise systems.

TakeMe2Space: Builds affordable, radiation-shielded LEO satellites with onboard compute capabilities to power next-gen space-based AI applications.

These investments reflect Seafund’s commitment to fostering innovation in critical areas of deeptech, positioning India as a leader in technological advancements.

Future Outlook and Strategic Recommendations

Projected Growth and Global Leadership Inc42 forecasts a 40% CAGR for India’s deeptech sector through 2027, driven by advancements in AI, quantum computing, and space tech. By 2030, the ecosystem is expected to contribute $350 billion to GDP, with startups transitioning from “lab-to-market” at unprecedented scale. Key drivers include:

Increased participation from generalist funds at Series A and beyond.

Expansion of design-linked incentives for semiconductor startups.

Growth in cross-border partnerships, particularly with Japan and the EU in robotics and IoT.

Policy Interventions for Sustained Growth

Accelerate Fund Disbursements: Simplify approval processes for the Fund of Funds to ensure timely access to capital.

Strengthen IP Frameworks: Introduce fast-track patent approvals and tax breaks for IP-driven startups.

Enhance Corporate Engagement: Mandate PSUs (Public Sector Undertakings) to allocate 5% of procurement budgets to deeptech solutions.

India’s deeptech ecosystem stands at an inflection point, buoyed by technological breakthroughs, strategic funding, and policy tailwinds. While challenges like funding gaps and adoption barriers persist, the convergence of academic excellence, entrepreneurial vigor, and global demand positions the country as a future leader in frontier technologies. The next decade will hinge on translating innovation into economic impact, ensuring that deeptech becomes a cornerstone of India’s $10 trillion GDP ambition.

FAQs:

What makes deeptech startups different from regular tech startups? Deeptech startups in India focus on building solutions grounded in advanced scientific research or engineering innovation. Unlike typical tech startups that often rely on existing platforms or business model tweaks, deeptech ventures tackle fundamental problems using technologies like AI, robotics, quantum computing, or space tech—usually requiring longer development timelines and more R&D funding.

Why is deeptech funding on the rise in India now? The sharp rise in deeptech funding—up 78% in 2024—is driven by global demand for advanced technologies, India’s skilled engineering talent pool, and supportive policies. There’s growing investor confidence that Indian startups can build globally competitive solutions in AI, semiconductors, and clean energy, pushing capital into this high-impact sector.

Which deeptech sectors are currently showing the most potential in India? Key areas seeing strong traction include artificial intelligence, which attracted 87% of all deeptech funding in 2024, as well as space tech, quantum computing, robotics, and semiconductors. These fields not only offer commercial potential but also strategic importance for India’s technological self-reliance and global positioning.

What challenges are holding back the growth of deeptech startups in India? The biggest hurdles include a shortage of deeptech talent, long development cycles, and regulatory complexity. Deeptech ventures also face difficulties in accessing early-stage capital due to the technical risk and slower path to profitability, making targeted government and ecosystem support crucial.

Have insights or bold ideas? Drop your thoughts, and let’s shape the next wave of innovation together!

Let’s Connect!!

#Keywords#venture capital company#investment in startups#funding for startups#private equity venture capital#capital venture investors#stages of venture capital financing#venture capital investment#venture capital investors#venture capital firm#venture capital firms in india#leading venture capital firms#india alternatives investment advisors#best venture capital firms in india#early stage venture capital firms#venture capital investors in india#early stage investors#seed investors in bangalore#invest in startups bangalore#business investors in kerala#funders in bangalore#startup investment fund#seed investors in delhi#private equity firms investing in aviation#venture investment partners#venture management services#defense venture capital firms#popular venture capital firms#funding for startups in india#early stage funding for startups

0 notes

Text

Why Corporate Training Must Embrace Emerging Technologies in 2025

In 2025, the pace of digital transformation is no longer just rapid—it’s relentless. Companies that want to stay competitive must not only adopt new technologies but also ensure their workforce is ready to use them effectively. This is where Emerging Technology Courses play a pivotal role in corporate training programs.

The New Reality: Technology Is the Business

From artificial intelligence and machine learning to blockchain, cloud computing, and data science, modern enterprises are built on emerging technologies. The success of these implementations depends not just on the tools themselves, but on the people using them. Upskilling employees with practical, hands-on training ensures smoother transitions, fewer bottlenecks, and faster innovation.

Key Benefits of Adopting Emerging Technology Courses

1. Enhanced Workforce Readiness

Employees trained in AI, blockchain, cloud platforms, or IoT can contribute more strategically to business goals. Emerging Technology Courses prepare them to solve real-world problems using next-gen tools.

2. Stronger Innovation Culture

A workforce familiar with emerging technologies is more likely to experiment, innovate, and improve processes. This mindset shift is vital in a future-forward organization.

3. Greater Efficiency and Automation

Courses in Robotic Process Automation (RPA), Hyper Automation, and advanced analytics enable teams to automate routine tasks, freeing up valuable time for higher-order thinking.

4. Improved Security and Compliance

Cybersecurity is a major concern in the digital age. Training employees through dedicated programs not only builds awareness but also ensures compliance with evolving regulatory frameworks.

5. Talent Retention and Attraction

Top talent today seeks employers who invest in their growth. Offering Emerging Technology Courses enhances your brand as a progressive employer and improves employee retention.

Why 2025 Is the Turning Point

Technologies like Web 3.0, Generative AI, and quantum computing are no longer experimental—they’re entering the mainstream. Waiting any longer to equip your workforce could mean falling behind.

Pragati Software, through its Emerging Technologies Group, offers industry-aligned, expert-led courses that are tailored to specific organizational needs. Their programs combine theoretical grounding with hands-on labs to ensure employees not only understand the technology but can apply it immediately in their roles.

Final Thoughts

The future of work is here—and it’s tech-driven. Companies that prioritize continuous learning through Emerging Technology Courses will be the ones to lead markets, attract top talent, and build resilient digital cultures.

If your organization hasn’t yet integrated such courses into your corporate training strategy, 2025 is the year to start.

0 notes

Text

This Fully Funded Internship in China Might Just Change Your Career Forever – USTC Research Internship Program 2025–26

What if the one decision that could redefine your career, network, and research future were hidden in a city you’d least expect? What if I told you that in 2025–26, some of the world’s brightest students will be flown to China, completely free of charge, to join a powerhouse of innovation, technology, and global research?

Welcome to the University of Science and Technology of China (USTC) Research Internship Program 2025–26. Fully funded. Globally competitive. And more accessible than you think.

Why China? Why USTC?

China isn’t just a global economic leader—it’s rapidly becoming a research juggernaut. At the heart of this transformation is USTC, often referred to as the "MIT of China." This elite institution has consistently ranked among the top in Asia and globally, especially in science, technology, and innovation.

From quantum physics and AI to material science and engineering, USTC is at the edge of tomorrow’s breakthroughs. And now, for 2025–26, they're inviting YOU to come learn, research, and grow—without worrying about expenses.

What’s So Special About the USTC Research Internship?

This isn’t just an internship. It’s a launchpad. Here's why:

Fully Funded: Yes, airfare, accommodation, research costs, and even a stipend.

World-Class Research Labs: Work with globally respected professors and research teams.

Cultural Immersion: Learn Chinese culture, language, and academic style.

Prestige: A USTC internship stands out on grad school or job applications.

Global Network: Collaborate with brilliant peers from around the world.

You’re not just learning. You’re being mentored by pioneers in cutting-edge fields. And you’re doing it in a country that's shaping the next era of science and tech.

Who Can Apply?

Here’s the best part: you don’t need to be a genius to qualify—just passionate, focused, and curious.

🔹 Eligibility:

Undergraduate or graduate students (STEM-focused fields preferred).

Strong academic record.

Research interest in science, tech, or innovation.

Open to all nationalities.

🔹 Preferred Majors:

Physics

Chemistry

Artificial Intelligence

Computer Science

Materials Science

Biology

Engineering

Environmental Science

If you’ve ever dreamed of international research exposure, this is your moment.

Internship Duration & Timeline

Application Opens: Early 2026

Deadline: Typically around March or April

Program Duration: 6 to 12 weeks (usually over the summer)

Location: Hefei, China

The earlier you prepare your documents, the better your chances.

What Does the Internship Cover?

Yes, it's 100% funded. Here's what you get:

Round-trip Airfare

Accommodation (on-campus or nearby guesthouses)

Daily Meals or Meal Stipend

Monthly Stipend for personal expenses

Full Access to Research Labs & Facilities

Certificate of Completion from USTC

You could spend your summer at home watching Netflix… or changing your career trajectory in China—for free.

How to Apply

The application process is straightforward but competitive. Here's your checklist:

Step 1: Choose your research area and potential supervisor (you can find this on the USTC website).

Step 2: Prepare the following:

CV/Resume

Statement of Purpose

Academic Transcripts

Letter of Recommendation (at least 1)

Passport Scan

Step 3: Apply through USTC’s internship portal or email your application if required.

Step 4: Wait for shortlisting and interview (some applicants may be interviewed virtually).

Step 5: Acceptance & Visa Processing (USTC helps with the invitation letter)

What’s the Catch?

Only a limited number of students are accepted each year. Those who get in often go on to earn admission to top graduate schools, publish research, or work at global tech firms, so a USTC internship can become a real stepping stone in a research career.

Final Thought

In a world crowded with generic internships and overpromising programs, USTC stands out for one reason: it delivers. Real science. Real mentorship. Real impact. So ask yourself: What if this was the opportunity that changed everything?

Don’t just scroll past. Don’t wait another year. If you’re passionate about research, innovation, and global collaboration, this fully funded USTC internship might just be your golden ticket. Your research journey in China starts here. Are you ready to apply?

1 note

Text

The Fight for AI Market Dominance | CNBC Marathon

The Fight for AI Market Dominance | CNBC Marathon - https://www.thebusinesschannel.org/the-fight-for-ai-market-dominance-cnbc-marathon/

CNBC Marathon explores how different big tech companies are fighting for AI market dominance.

A little-known AI lab out of China has ignited panic throughout Silicon Valley after releasing AI models that can outperform America’s best despite being built more cheaply and with less-powerful chips. DeepSeek, as the lab is called, unveiled a free, open-source large-language model in late December that it says took only two months and less than $6 million to build. The new developments have raised alarms on whether America’s global lead in artificial intelligence is shrinking and called into question big tech’s massive spend on building AI models and data centers. In a set of third-party benchmark tests, DeepSeek’s model outperformed Meta’s Llama 3.1, OpenAI’s GPT-4o and Anthropic’s Claude Sonnet 3.5 in accuracy ranging from complex problem-solving to math and coding. CNBC’s Deirdre Bosa has the story. This video also includes Bosa’s full interview with Perplexity CEO Aravind Srinivas.

Humanoid robots are catching the attention, and billions of investment dollars, from big tech companies like Amazon, Google, Nvidia and Microsoft. Elon Musk is betting the future of Tesla on these machines, predicting its robot, Optimus, could propel it to a $25 trillion market cap. Powered by artificial intelligence, these bots have seen quantum leaps in what they’re capable of in just the past few years. CNBC’s Kate Rooney speaks with Agility Robotics, Apptronik, Sanctuary AI and others to explore the rise of these AI-driven humanoids, if they’re a cure-all for our global workforce problems, or if this is yet another tech bubble.

Microsoft, Google and Amazon, along with other tech companies, have been getting creative in how they’re poaching talent from top artificial intelligence startups. Earlier this month, Google inked an unusual deal with Character.ai to hire away its prominent founder, Noam Shazeer, along with more than one-fifth of its workforce while also licensing its technology. It looked like an acquisition, but the deal was structured so that it wasn’t. Google wasn’t the first to take this approach. In March, Microsoft signed a deal with Inflection that allowed Microsoft to use Inflection’s models and to hire most of the startup’s staff. Amazon followed in June with a faux acquisition of Adept where it hired top talent from the AI startup and licensed its technology. It’s a playbook that skirts regulators and their crackdown on Big Tech dominance, provides an exit for AI startups struggling to make money, and allows megacaps to pick up the talent needed in the AI arms race. But while tech giants might think they’re outsmarting antitrust enforcers, they could be playing with fire. CNBC’s Deirdre Bosa has the story.

Chapters:

00:00 Introduction

01:17 How China’s New AI Model DeepSeek Is Threatening U.S. Dominance (Published Jan 2025)

41:40 Why Nvidia, Tesla, Amazon And More Are Betting Big On AI-Powered Humanoid Robots (Published August 2024)

58:48 How Google, Microsoft And Amazon Are Raiding AI Startups For Talent (Published July 2024)

Anchor: Deirdre Bosa

Produced by: Andrew Evers, Jasmine Wu, Laura Batchelor, Drew Troast

Reporter: Kate Rooney

Edited by: Matt Soto

Field Producer: Kevin Schmidt

Senior Director: Jeniece Pettitt

Additional Camera: Katie Tarasov, Lisa Setyon

Additional Footage: Getty Images, Character.ai, Agility Robotics, Sanctuary AI, Apptronik, Tesla, Figure AI, Boston Dynamics, Nvidia

Credit to: CNBC

The Business Channel

0 notes

Text

Scientists use generative AI to answer complex questions in physics

New Post has been published on https://thedigitalinsider.com/scientists-use-generative-ai-to-answer-complex-questions-in-physics/

When water freezes, it transitions from a liquid phase to a solid phase, resulting in a drastic change in properties like density and volume. Phase transitions in water are so common most of us probably don’t even think about them, but phase transitions in novel materials or complex physical systems are an important area of study.

To fully understand these systems, scientists must be able to recognize phases and detect the transitions between them. But how to quantify phase changes in an unknown system is often unclear, especially when data are scarce.

Researchers from MIT and the University of Basel in Switzerland applied generative artificial intelligence models to this problem, developing a new machine-learning framework that can automatically map out phase diagrams for novel physical systems.

Their physics-informed machine-learning approach is more efficient than laborious, manual techniques which rely on theoretical expertise. Importantly, because their approach leverages generative models, it does not require huge, labeled training datasets used in other machine-learning techniques.

Such a framework could help scientists investigate the thermodynamic properties of novel materials or detect entanglement in quantum systems, for instance. Ultimately, this technique could make it possible for scientists to discover unknown phases of matter autonomously.

“If you have a new system with fully unknown properties, how would you choose which observable quantity to study? The hope, at least with data-driven tools, is that you could scan large new systems in an automated way, and it will point you to important changes in the system. This might be a tool in the pipeline of automated scientific discovery of new, exotic properties of phases,” says Frank Schäfer, a postdoc in the Julia Lab in the Computer Science and Artificial Intelligence Laboratory (CSAIL) and co-author of a paper on this approach.

Joining Schäfer on the paper are first author Julian Arnold, a graduate student at the University of Basel; Alan Edelman, applied mathematics professor in the Department of Mathematics and leader of the Julia Lab; and senior author Christoph Bruder, professor in the Department of Physics at the University of Basel. The research is published today in Physical Review Letters.

Detecting phase transitions using AI

While water transitioning to ice might be among the most obvious examples of a phase change, more exotic phase changes, like when a material transitions from being a normal conductor to a superconductor, are of keen interest to scientists.

These transitions can be detected by identifying an “order parameter,” a quantity that is important and expected to change. For instance, water freezes and transitions to a solid phase (ice) when its temperature drops below 0 degrees Celsius. In this case, an appropriate order parameter could be defined in terms of the proportion of water molecules that are part of the crystalline lattice versus those that remain in a disordered state.
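As a rough illustration of the water example, an order parameter of this kind can be computed as a simple fraction. The sketch below assumes each molecule has already been labeled as belonging to the lattice or not; the function name and the two snapshots are hypothetical and are not taken from the research.

```python
from typing import Sequence


def crystalline_fraction(in_lattice: Sequence[bool]) -> float:
    """Fraction of molecules flagged as part of the crystalline lattice."""
    if not in_lattice:
        raise ValueError("need at least one molecule")
    # True counts as 1 and False as 0, so the sum is the number of lattice molecules.
    return sum(in_lattice) / len(in_lattice)


# Hypothetical snapshots: True means the molecule sits in the lattice.
liquid_snapshot = [False, False, True, False, False, False, False, False]
ice_snapshot = [True, True, True, True, True, True, False, True]

print(crystalline_fraction(liquid_snapshot))  # 0.125
print(crystalline_fraction(ice_snapshot))     # 0.875
```

A value near 0 suggests the disordered (liquid) phase and a value near 1 suggests the ordered (solid) phase; a sharp change in this number as temperature varies is what marks the transition.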

In the past, researchers have relied on physics expertise to build phase diagrams manually, drawing on theoretical understanding to know which order parameters are important. Not only is this tedious for complex systems, and perhaps impossible for unknown systems with new behaviors, but it also introduces human bias into the solution.

More recently, researchers have begun using machine learning to build discriminative classifiers that can solve this task by learning to classify a measurement statistic as coming from a particular phase of the physical system, the same way such models classify an image as a cat or dog.

The MIT researchers demonstrated how generative models can be used to solve this classification task much more efficiently, and in a physics-informed manner.
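One common way to turn a generative model into a classifier is Bayes' rule: if the probability of a measurement in each phase is known, the probability of each phase given the measurement follows directly. The sketch below shows that general idea only and should not be read as the researchers' actual framework. The two phases, their Gaussian parameters, and the function names are all assumptions made for illustration.

```python
import math


def gaussian_pdf(x: float, mean: float, std: float) -> float:
    """Density of a normal distribution, used here as a toy generative model."""
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def phase_posterior(x, models, priors=None):
    """Bayes' rule: P(phase | x) is proportional to P(x | phase) * P(phase)."""
    names = list(models)
    if priors is None:
        # Assumption: every phase is equally likely before the measurement.
        priors = {name: 1.0 / len(names) for name in names}
    weights = {name: gaussian_pdf(x, *models[name]) * priors[name] for name in names}
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}


# Hypothetical generative models: (mean, std) of a measured quantity in each phase.
models = {"ordered": (0.9, 0.1), "disordered": (0.0, 0.3)}

for x in (0.05, 0.5, 0.85):
    post = phase_posterior(x, models)
    print(x, {name: round(p, 3) for name, p in post.items()})
```

No classifier is trained on labeled examples here: the phase probabilities come from the per-phase distributions themselves, which fits the article's point that the generative approach does not need large labeled datasets.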

The Julia Programming Language, a popular language for scientific computing that is also used in MIT’s introductory linear algebra classes, offers many tools that make it invaluable for constructing such generative models, Schäfer adds.