#rest api automation


Text

Top 5 OWASP API Security Risks and How to Mitigate Them

APIs are essential but highly targeted attack vectors. The OWASP API Security Top 10 outlines critical risks and mitigation strategies:

Broken Object Level Authorization (BOLA)

APIs often expose endpoints that attackers manipulate to access unauthorized resources.

Mitigation: Enforce authorization checks on every request, and validate server-side that the caller is allowed to access each object ID it supplies.
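As a minimal sketch of an object-level check, the handler below looks up the object and then compares its owner with the authenticated caller. The `Invoice` type, the `INVOICES` store, and `get_invoice` are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Invoice:
    invoice_id: int
    owner_id: int
    total: float


# Hypothetical in-memory store standing in for a database.
INVOICES = {
    1: Invoice(invoice_id=1, owner_id=100, total=25.0),
    2: Invoice(invoice_id=2, owner_id=200, total=90.0),
}


class Forbidden(Exception):
    """Raised when the caller may not access the requested object."""


def get_invoice(current_user_id: int, invoice_id: int) -> Invoice:
    invoice = INVOICES.get(invoice_id)
    # Use the same error for "missing" and "not yours" so that callers
    # cannot enumerate which IDs exist.
    if invoice is None or invoice.owner_id != current_user_id:
        raise Forbidden("not authorized for this object")
    return invoice
```

The important detail is that `current_user_id` comes from the authenticated session, never from the request body or URL.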

Broken Authentication

Weak authentication mechanisms can allow attackers to compromise accounts.

Mitigation: Enforce strong authentication methods, including multi-factor authentication (MFA), and use secure token management practices.

Excessive Data Exposure

APIs sometimes expose unnecessary data in response payloads.

Mitigation: Ensure responses contain only the required fields and that sensitive information is masked or omitted.
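One way to apply this is an explicit allowlist of response fields, so that new database columns are never exposed by accident. The field names and the masking rule below are assumptions made for the example.

```python
# Only these fields are ever copied into a response (hypothetical names).
PUBLIC_USER_FIELDS = ("id", "display_name")


def mask_email(email: str) -> str:
    """Keep the first character and the domain, hide the rest."""
    local, _, domain = email.partition("@")
    return f"{local[:1]}***@{domain}"


def serialize_user(record: dict) -> dict:
    """Build the response payload from an allowlist, not from the full record."""
    payload = {field: record[field] for field in PUBLIC_USER_FIELDS}
    payload["email"] = mask_email(record["email"])
    return payload
```

Fields such as a password hash stay out of the payload simply because they are not on the list.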

Lack of Rate Limiting

APIs without rate limiting are vulnerable to brute-force and denial-of-service attacks.

Mitigation: Apply rate limits and implement CAPTCHA mechanisms to prevent automated abuse.
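A token bucket is one common way to implement a rate limit. The sketch below is a single-process illustration with assumed parameter names. A production deployment would usually keep one bucket per client key in shared storage.

```python
import time


class TokenBucket:
    """Allow bursts up to `capacity`, refilled at `refill_per_second`."""

    def __init__(self, capacity: int, refill_per_second: float, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self.tokens = float(capacity)
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        elapsed = now - self.updated
        # Refill based on elapsed time, never exceeding the capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_second)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

A request handler would call `allow()` for the caller's bucket and answer with HTTP 429 when it returns `False`.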

Security Misconfiguration

Misconfigured headers, permissions, or outdated components create vulnerabilities.

Mitigation: Regularly review configurations, apply security patches, and follow secure coding practices.

Conclusion

Understanding and addressing these risks is vital to securing APIs. Proactive testing and adherence to OWASP guidelines ensure robust defenses against potential threats.

#api test automation#rest assured api testing#api automation#api automation testing tools#api test tool#api automation tools#rest api automation#api security testing#rest api testing automation#best tool for api automation#api testing in automation#automation testing for api


Text

Simplifying Data Management in 2025: How Point-and-Click Tools and Smart Ops Software Are Fixing Mismatched Data

In today’s data-driven business landscape, accuracy, speed, and usability are more important than ever. As organizations grow, so does the volume and complexity of their data—often leading to fragmented systems, duplicate records, and mismatched insights.

REST API data automation


Text

APIs (Application Programming Interfaces) are the backbone of modern digital ecosystems. They enable seamless interaction between applications, platforms, and services. However, their exposure makes them a prime attack vector. API security testing identifies vulnerabilities in APIs to ensure data confidentiality, integrity, and availability.

#eCommerce software development services#API testing services#database performance testing#automated testing of REST API


Text

What Are Direct Carrier Appointments? 5 Vital Insights for Agencies

Gaining a strong grasp of direct carrier appointments can significantly elevate how your insurance agency operates.

In simple terms, it’s a formal relationship where an insurer authorizes an agency to sell its policies directly — cutting out the middle layers.

But why is this such a big deal, and how does it shape your agency’s future?

Here are five key insights into direct appointments and why they’re so beneficial for your business.


Insight #1: Direct Carrier Appointments Offer Wider Product Access

One of the most valuable benefits of a direct appointment is the immediate access to a broader range of the carrier’s insurance products.

This enables your agency to provide clients with more tailored options, accommodating varied coverage needs and preferences.

Such diversification strengthens your service portfolio, makes your agency more appealing to a broader audience, and positions you more competitively in the marketplace.

Over time, this enhanced market access contributes to stronger revenue generation and business stability.

Insight #2: Direct Appointments Can Improve Earnings

When working directly with carriers, agencies often avoid the layers of commissions that come with using intermediaries or aggregators.

This means you can receive a higher portion of the premium revenue, leading to better profit margins per policy.

With increased commission percentages and potential for negotiating favorable rates, your agency’s income per client improves — supporting financial growth over the long term.

This revenue advantage is key to building a scalable and profitable business model.

Insight #3: Carriers Require Agencies to Meet Eligibility Standards

Insurers typically evaluate agencies before granting direct appointments, ensuring the partnership is secure and mutually beneficial.

Common criteria may include years of operational history, proof of production capabilities, and compliance with regulatory standards.

Meeting these benchmarks shows that your agency is trustworthy, productive, and capable of representing the carrier’s interests responsibly.

These requirements help maintain quality and safeguard the insurer's brand and policyholders.

Insight #4: Access to Unique Products Can Set You Apart

With a direct appointment, you may gain access to exclusive insurance plans or services that aren’t distributed through indirect channels.

These exclusive offerings allow you to provide value that competitors may lack — fulfilling niche market needs and attracting high-intent clients.

Your agency becomes a go-to source for specialized or higher-tier solutions, strengthening your position as a trusted advisor in the industry.

This exclusivity enhances your credibility and helps retain loyal clients looking for premium options.

Insight #5: Appointments Must Be Actively Maintained

Receiving a direct appointment is just the start: agencies must work consistently to keep it active.

That includes hitting required production targets, delivering top-tier service, following carrier policies, and ensuring that records stay updated — including changes to staff.

Failure to maintain performance or compliance can jeopardize the relationship and result in losing the appointment and its benefits.

Ongoing communication and alignment with the carrier are key to keeping the partnership strong and sustainable.

Manage Appointments Easily with Agenzee

Meet Agenzee, your all-in-one insurance compliance platform that redefines how agencies handle licenses and appointments.

No more juggling spreadsheets or missing renewal dates. Agenzee helps your agency stay efficient and compliant with features like:

All-in-One License & Appointment Dashboard

Automated Alerts Before License Expiry

Simplified License Renewal Tools

New Appointment Submission & Tracking

Termination Management Features

CE (Continuing Education) Hour Tracking

Robust REST API Integration

Mobile Access for Producers on the Go

With Agenzee, your agency can minimize administrative burdens and maximize focus on growth.

Request a free demo and see how easy compliance management can be!


Text

Integrating Third-Party Tools into Your CRM System: Best Practices

A modern CRM is rarely a standalone tool — it works best when integrated with your business's key platforms like email services, accounting software, marketing tools, and more. But improper integration can lead to data errors, system lags, and security risks.

Here are the best practices developers should follow when integrating third-party tools into CRM systems:

1. Define Clear Integration Objectives

Identify business goals for each integration (e.g., marketing automation, lead capture, billing sync)

Choose tools that align with your CRM’s data model and workflows

Avoid unnecessary integrations that create maintenance overhead

2. Use APIs Wherever Possible

Rely on RESTful or GraphQL APIs for secure, scalable communication

Avoid direct database-level integrations that break during updates

Choose platforms with well-documented and stable APIs

Custom CRM solutions can be built with flexible API gateways

3. Data Mapping and Standardization

Map data fields between systems to prevent mismatches

Use a unified format for customer records, tags, timestamps, and IDs

Normalize values like currencies, time zones, and languages

Maintain a consistent data schema across all tools
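To make the mapping step concrete, here is a small sketch that renames source fields to a CRM schema and normalizes an email address and a timestamp. The field names in `FIELD_MAP` are hypothetical, and the timestamp is assumed to be an ISO 8601 string that carries a UTC offset.

```python
from datetime import datetime, timezone

# Hypothetical mapping from a third-party tool's field names to the CRM's.
FIELD_MAP = {"Email Address": "email", "FullName": "name", "created": "created_at"}


def normalize_record(source: dict) -> dict:
    """Rename mapped fields, drop unmapped ones, and normalize values."""
    record = {FIELD_MAP[key]: value for key, value in source.items() if key in FIELD_MAP}
    record["email"] = record["email"].strip().lower()
    # Store every timestamp in UTC so that systems in different zones agree.
    created = datetime.fromisoformat(record["created_at"])
    record["created_at"] = created.astimezone(timezone.utc).isoformat()
    return record
```

Keeping the mapping in one table makes mismatches visible in code review instead of in production data.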

4. Authentication and Security

Use OAuth 2.0 or token-based authentication for third-party access

Set role-based permissions for which apps access which CRM modules

Monitor access logs for unauthorized activity

Encrypt data during transfer and storage

5. Error Handling and Logging

Create retry logic for API failures and rate limits

Set up alert systems for integration breakdowns

Maintain detailed logs for debugging sync issues

Keep version control of integration scripts and middleware
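The retry logic mentioned above can be sketched as exponential backoff with jitter. `RateLimited` is a hypothetical exception that a client wrapper would raise on an HTTP 429 response. The attempt count and delays are illustrative defaults.

```python
import random
import time


class RateLimited(Exception):
    """Hypothetical error raised by a client wrapper on HTTP 429."""


def call_with_retry(func, max_attempts=4, base_delay=0.5, sleep=time.sleep):
    """Call func(), retrying transient failures with growing delays."""
    for attempt in range(1, max_attempts + 1):
        try:
            return func()
        except (RateLimited, ConnectionError):
            if attempt == max_attempts:
                raise  # give up and let the alerting layer see the error
            # Double the delay each time and add jitter so that many
            # clients do not retry in lockstep.
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay / 2))
```

Only errors that are likely to be transient are retried. Validation or authentication errors should fail immediately and be logged.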

6. Real-Time vs Batch Syncing

Use real-time sync for critical customer events (e.g., purchases, support tickets)

Use batch syncing for bulk data like marketing lists or invoices

Balance sync frequency to optimize server load

Choose integration frequency based on business impact

7. Scalability and Maintenance

Build integrations as microservices or middleware, not monolithic code

Use message queues (like Kafka or RabbitMQ) for heavy data flow

Design integrations that can evolve with CRM upgrades

Partner with CRM developers for long-term integration strategy

CRM integration experts can future-proof your ecosystem

#CRMIntegration#CRMBestPractices#APIIntegration#CustomCRM#TechStack#ThirdPartyTools#CRMDevelopment#DataSync#SecureIntegration#WorkflowAutomation


Text

Crypto Exchange API Integration: Simplifying and Enhancing Trading Efficiency

The cryptocurrency trading landscape is fast-paced, requiring seamless processes and real-time data access to ensure traders stay ahead of market movements. To meet these demands, Crypto Exchange APIs (Application Programming Interfaces) have emerged as indispensable tools for developers and businesses, streamlining trading processes and improving user experience.

APIs bridge the gap between users, trading platforms, and blockchain networks, enabling efficient operations like order execution, wallet integration, and market data retrieval. This blog dives into the importance of crypto exchange API integration, its benefits, and how businesses can leverage it to create feature-rich trading platforms.

What is a Crypto Exchange API?

A Crypto Exchange API is a software interface that enables seamless communication between cryptocurrency trading platforms and external applications. It provides developers with access to various functionalities, such as real-time price tracking, trade execution, and account management, allowing them to integrate these features into their platforms.

Types of Crypto Exchange APIs:

REST APIs: Used for simple, one-time data requests (e.g., fetching market data or placing a trade).

WebSocket APIs: Provide real-time data streaming for high-frequency trading and live updates.

FIX APIs (Financial Information Exchange): Designed for institutional-grade trading with high-speed data transfers.

Key Benefits of Crypto Exchange API Integration

1. Real-Time Market Data Access

APIs provide up-to-the-second updates on cryptocurrency prices, trading volumes, and order book depth, empowering traders to make informed decisions.

Use Case:

Developers can build dashboards that display live market trends and price movements.

2. Automated Trading

APIs enable algorithmic trading by allowing users to execute buy and sell orders based on predefined conditions.

Use Case:

A trading bot can automatically place orders when specific market criteria are met, eliminating the need for manual intervention.

3. Multi-Exchange Connectivity

Crypto APIs allow platforms to connect with multiple exchanges, aggregating liquidity and providing users with the best trading options.

Use Case:

Traders can access a broader range of cryptocurrencies and trading pairs without switching between platforms.

4. Enhanced User Experience

By integrating APIs, businesses can offer features like secure wallet connections, fast transaction processing, and detailed analytics, improving the overall user experience.

Use Case:

Users can track their portfolio performance in real-time and manage assets directly through the platform.

5. Increased Scalability

API integration allows trading platforms to handle a higher volume of users and transactions efficiently, ensuring smooth operations during peak trading hours.

Use Case:

Exchanges can scale seamlessly to accommodate growth in user demand.

Essential Features of Crypto Exchange API Integration

1. Trading Functionality

APIs must support core trading actions, such as placing market and limit orders, canceling trades, and retrieving order statuses.

2. Wallet Integration

Securely connect wallets for seamless deposits, withdrawals, and balance tracking.

3. Market Data Access

Provide real-time updates on cryptocurrency prices, trading volumes, and historical data for analysis.

4. Account Management

Allow users to manage their accounts, view transaction history, and set preferences through the API.

5. Security Features

Integrate encryption, two-factor authentication (2FA), and API keys to safeguard user data and funds.
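Many exchange APIs pair an API key with a secret that is used to sign each request. The sketch below shows the general idea with HMAC-SHA256 over a sorted query string. The exact fields, ordering, and encoding differ per exchange, so treat this scheme as an assumption and follow the provider's documentation.

```python
import hashlib
import hmac
from urllib.parse import urlencode


def sign_request(params: dict, api_secret: str) -> str:
    """Return a hex HMAC-SHA256 signature over the sorted query string."""
    query = urlencode(sorted(params.items()))
    return hmac.new(api_secret.encode(), query.encode(), hashlib.sha256).hexdigest()


def verify_signature(params: dict, api_secret: str, signature: str) -> bool:
    """Server-side check; compare_digest avoids timing side channels."""
    return hmac.compare_digest(sign_request(params, api_secret), signature)
```

Including a timestamp in `params` and rejecting stale values on the server limits replay of captured requests.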

Steps to Integrate Crypto Exchange APIs

1. Define Your Requirements

Determine the functionalities you need, such as trading, wallet integration, or market data retrieval.

2. Choose the Right API Provider

Select a provider that aligns with your platform’s requirements. Popular providers include:

Binance API: Known for real-time data and extensive trading options.

Coinbase API: Ideal for wallet integration and payment processing.

Kraken API: Offers advanced trading tools for institutional users.

3. Implement API Integration

Use REST APIs for basic functionalities like fetching market data.

Implement WebSocket APIs for real-time updates and faster trading processes.

4. Test and Optimize

Conduct thorough testing to ensure the API integration performs seamlessly under different scenarios, including high traffic.

5. Launch and Monitor

Deploy the integrated platform and monitor its performance to address any issues promptly.

Challenges in Crypto Exchange API Integration

1. Security Risks

APIs are vulnerable to breaches if not properly secured. Implement robust encryption, authentication, and monitoring tools to mitigate risks.

2. Latency Issues

High latency can disrupt real-time trading. Opt for APIs with low latency to ensure a smooth user experience.

3. Regulatory Compliance

Ensure the integration adheres to KYC (Know Your Customer) and AML (Anti-Money Laundering) regulations.

The Role of Crypto Exchange Platform Development Services

Partnering with a professional crypto exchange platform development service ensures your platform leverages the full potential of API integration.

What Development Services Offer:

Custom API Solutions: Tailored to your platform’s specific needs.

Enhanced Security: Implementing advanced security measures like API key management and encryption.

Real-Time Capabilities: Optimizing APIs for high-speed data transfers and trading.

Regulatory Compliance: Ensuring the platform meets global legal standards.

Scalability: Building infrastructure that grows with your user base and transaction volume.

Real-World Examples of Successful API Integration

1. Binance

Features: Offers REST and WebSocket APIs for real-time market data and trading.

Impact: Enables developers to build high-performance trading bots and analytics tools.

2. Coinbase

Features: Provides secure wallet management APIs and payment processing tools.

Impact: Streamlines crypto payments and wallet integration for businesses.

3. Kraken

Features: Advanced trading APIs for institutional and professional traders.

Impact: Supports multi-currency trading with low-latency data feeds.

Conclusion

Crypto exchange API integration is a game-changer for businesses looking to streamline trading processes and enhance user experience. From enabling real-time data access to automating trades and managing wallets, APIs unlock endless possibilities for innovation in cryptocurrency trading platforms.

By partnering with expert crypto exchange platform development services, you can ensure secure, scalable, and efficient API integration tailored to your platform’s needs. In the ever-evolving world of cryptocurrency, seamless API integration is not just an advantage—it’s a necessity for staying ahead of the competition.

Are you ready to take your crypto exchange platform to the next level?

#cryptocurrencyexchange#crypto exchange platform development company#crypto exchange development company#white label crypto exchange development#cryptocurrency exchange development service#cryptoexchange


Text

What is Argo CD? And When Was Argo CD Established?

What Is Argo CD?

Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes.

In DevOps, Argo CD is a continuous delivery (CD) tool that has become popular for delivering applications to Kubernetes. It is based on the GitOps deployment methodology.

When was Argo CD Established?

Argo CD was created at Intuit and made publicly available following Applatix’s 2018 acquisition by Intuit. The founding developers of Applatix, Hong Wang, Jesse Suen, and Alexander Matyushentsev, made the Argo project open-source in 2017.

Why Argo CD?

Application definitions, configurations, and environments should be declarative and version controlled. Application deployment and lifecycle management should be automated, auditable, and easy to understand.

Getting Started

Quick Start

kubectl create namespace argocd

kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

For some features, more user-friendly documentation is offered. Refer to the upgrade guide if you want to upgrade your Argo CD. Those interested in creating third-party connectors can access developer-oriented resources.

How it works

Argo CD defines the intended application state by employing Git repositories as the source of truth, in accordance with the GitOps pattern. There are various approaches to specify Kubernetes manifests:

Kustomize applications

Helm charts

Jsonnet files

Plain directory of YAML/JSON manifests

Any custom configuration management tool that is set up as a plugin

Argo CD automates the deployment of the desired application states in the specified target environments. Application deployments can track updates to branches or tags, or be pinned to a specific version of the manifests at a Git commit.

Architecture

Argo CD is implemented as a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as specified in the Git repository). A deployed application whose live state deviates from the target state is considered OutOfSync. Argo CD reports and visualizes the differences, and it provides ways to sync the live state back to the desired target state, either automatically or manually. Any modifications made to the desired target state in the Git repository can be automatically applied and reflected in the specified target environments.
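The comparison at the heart of this loop can be illustrated with a toy sketch. This is not Argo CD code: real manifests are Kubernetes resources and the real diff is far more involved. Only the status names `Synced` and `OutOfSync` come from Argo CD, and the dictionaries below stand in for rendered manifests.

```python
def sync_status(desired: dict, live: dict) -> str:
    """Report whether the live state matches the state declared in Git."""
    return "Synced" if desired == live else "OutOfSync"


def diff_keys(desired: dict, live: dict) -> list:
    """List the fields whose values differ (or exist on one side only)."""
    keys = sorted(set(desired) | set(live))
    return [key for key in keys if desired.get(key) != live.get(key)]


# Toy example: someone scaled the deployment by hand, so it drifted.
desired_state = {"image": "app:1.2", "replicas": 3}
live_state = {"image": "app:1.2", "replicas": 2}
print(sync_status(desired_state, live_state), diff_keys(desired_state, live_state))
```

A sync then amounts to applying the desired state again so that the diff becomes empty.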

Components

API Server

The API server is a gRPC/REST server that exposes the API consumed by the Web UI, CLI, and CI/CD systems. Its duties include the following:

Application management and status reporting

Invoking application operations (such as sync, rollback, and user-defined actions)

Repository and cluster credential management (stored as Kubernetes secrets)

RBAC enforcement

Authentication, and auth delegation to outside identity providers

Git webhook event listener/forwarder

Repository Server

An internal service called the repository server keeps a local cache of the Git repository containing the application manifests. When given the following inputs, it is in charge of creating and returning the Kubernetes manifests:

URL of the repository

Revision (tag, branch, commit)

Path of the application

Template-specific configurations: helm values.yaml, parameters

Application Controller

The application controller is a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as specified in the repository). It detects the OutOfSync application state and can optionally take corrective action. It is also responsible for invoking any user-defined hooks for lifecycle events (PreSync, Sync, and PostSync).

Features

Automated deployment of applications to specified target environments

Support for multiple configuration management/templating tools (Kustomize, Helm, Jsonnet, and plain YAML)

Ability to manage and deploy to multiple clusters

SSO integration (OIDC, OAuth2, LDAP, SAML 2.0, Microsoft, LinkedIn, GitHub, GitLab)

Multi-tenancy and RBAC policies for authorization

Rollback/roll-anywhere to any application configuration committed in the Git repository

Health status analysis of application resources

Automated configuration drift detection and visualization

Automated or manual syncing of applications to their desired state

Web UI that provides a real-time view of application activity

CLI for automation and CI integration

Webhook integration (GitHub, BitBucket, GitLab)

Access tokens for automation

PreSync, Sync, and PostSync hooks to support complex application rollouts (such as blue/green and canary upgrades)

Audit trails for application events and API calls

Prometheus metrics

Parameter overrides for overriding Helm parameters in Git

Read more on Govindhtech.com

#ArgoCD#CD#GitOps#API#Kubernetes#Git#Argoproject#News#Technews#Technology#Technologynews#Technologytrends#govindhtech


Text

Full Stack Testing vs. Full Stack Development: What’s the Difference?

In today’s fast-evolving tech world, buzzwords like Full Stack Development and Full Stack Testing have gained immense popularity. Both roles are vital in the software lifecycle, but they serve very different purposes. Whether you’re a beginner exploring your career options or a professional looking to expand your skills, understanding the differences between Full Stack Testing and Full Stack Development is crucial. Let’s dive into what makes these two roles unique!

What Is Full Stack Development?

Full Stack Development refers to the ability to build an entire software application – from the user interface to the backend logic – using a wide range of tools and technologies. A Full Stack Developer is proficient in both front-end (user-facing) and back-end (server-side) development.

Key Responsibilities of a Full Stack Developer:

Front-End Development: Building the user interface using tools like HTML, CSS, JavaScript, React, or Angular.

Back-End Development: Creating server-side logic using technologies like Node.js, Python, Java, or PHP.

Database Management: Handling databases such as MySQL, MongoDB, or PostgreSQL.

API Integration: Connecting applications through RESTful or GraphQL APIs.

Version Control: Using tools like Git for collaborative development.

Skills Required for Full Stack Development:

Proficiency in programming languages (JavaScript, Python, Java, etc.)

Knowledge of web frameworks (React, Django, etc.)

Experience with databases and cloud platforms

Understanding of DevOps tools

In short, a Full Stack Developer handles everything from designing the UI to writing server-side code, ensuring the software runs smoothly.

What Is Full Stack Testing?

Full Stack Testing is all about ensuring quality at every stage of the software development lifecycle. A Full Stack Tester is responsible for testing applications across multiple layers – from front-end UI testing to back-end database validation – ensuring a seamless user experience. They blend manual and automation testing skills to detect issues early and prevent software failures.

Key Responsibilities of a Full Stack Tester:

UI Testing: Ensuring the application looks and behaves correctly on the front end.

API Testing: Validating data flow and communication between services.

Database Testing: Verifying data integrity and backend operations.

Performance Testing: Ensuring the application performs well under load using tools like JMeter.

Automation Testing: Automating repetitive tests with tools like Selenium or Cypress.

Security Testing: Identifying vulnerabilities to prevent cyber-attacks.

Skills Required for Full Stack Testing:

Knowledge of testing tools like Selenium, Postman, JMeter, or TOSCA

Proficiency in both manual and automation testing

Understanding of test frameworks like TestNG or Cucumber

Familiarity with Agile and DevOps practices

Basic knowledge of programming for writing test scripts

A Full Stack Tester plays a critical role in identifying bugs early in the development process and ensuring the software functions flawlessly.

Which Career Path Should You Choose?

The choice between Full Stack Development and Full Stack Testing depends on your interests and strengths:

Choose Full Stack Development if you love coding, creating interfaces, and building software solutions from scratch. This role is ideal for those who enjoy developing creative products and working with both front-end and back-end technologies.

Choose Full Stack Testing if you have a keen eye for detail and enjoy problem-solving by finding bugs and ensuring software quality. If you love automation, performance testing, and working with multiple testing tools, Full Stack Testing is the right path.

Why Both Roles Are Essential:

Both Full Stack Developers and Full Stack Testers are integral to software development. While developers focus on creating functional features, testers ensure that everything runs smoothly and meets user expectations. In an Agile or DevOps environment, these roles often overlap, with testers and developers working closely to deliver high-quality software in shorter cycles.

Final Thoughts:

Whether you opt for Full Stack Testing or Full Stack Development, both fields offer exciting opportunities with tremendous growth potential. With software becoming increasingly complex, the demand for skilled developers and testers is higher than ever.

At TestoMeter Pvt. Ltd., we provide comprehensive training in both Full Stack Development and Full Stack Testing to help you build a future-proof career. Whether you want to build software or ensure its quality, we’ve got the perfect course for you.

Ready to take the next step? Explore our Full Stack courses today and start your journey toward a successful IT career!


For more details:

Interested in kick-starting your career as a software developer or software tester? Contact us today or visit our website for course details, success stories, and more!

🌐visit - https://www.testometer.co.in/


Text

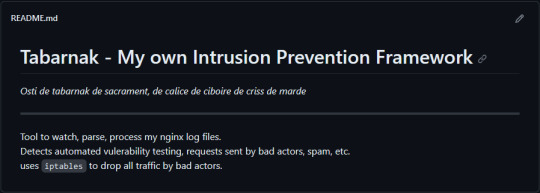

(this is a small story of how I came to write my own intrusion detection/prevention framework and why I'm really happy with that decision, don't mind me rambling)

Preface

About two weeks ago I was faced with a pretty annoying problem. While I was going home by train, I noticed that my server at home had been running hot and had slowed down a lot. This prompted me to check the logs of nginx, the only service that is indirectly available to the public (more on that later), which made me realize that - due to poor access control - someone had been sending hundreds of thousands of huge DNS requests to my server, most likely testing for vulnerabilities. I added an iptables rule to drop all traffic from that source and redirected the remaining traffic to a backup NextDNS instance that I had previously set up with the same overrides and custom records as my own DNS, so that the service would have no downtime while my server cooled down. I stopped the DNS service on my server at home and then used the remaining train ride to think. How would I stop this from happening in the future? I pondered multiple possible solutions: whether to use fail2ban, whether to just add better access control, or whether to just stick with the NextDNS instance.

I ended up going with a completely different option: building a solution myself that's a perfect fit for my server.

My Server Structure

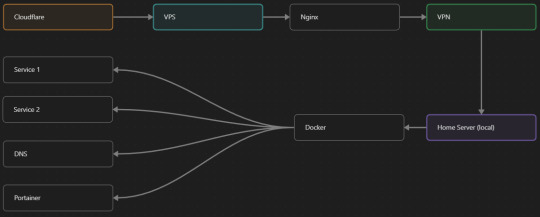

So, I should probably explain how I host and why only nginx is public despite me hosting a bunch of services under the hood.

I have a public-facing VPS that only allows traffic to nginx. That traffic then gets forwarded through a VPN connection to my home server so that I don't have to have any public-facing ports on said home server. The VPS really only acts as the public interface for the home server, with access control and logging sprinkled in throughout my configs to get more layers of security. Some services can only be interacted with through the VPN or a local connection, so not everything is actually forwarded - only what I need/want to be.

I actually do have fail2ban installed on both my VPS and home server, so why make another piece of software?

Tabarnak - Succeeding at Banning

I had a few requirements for what I wanted to do:

Only allow HTTP(S) traffic through Cloudflare

Only allow DNS traffic from given sources (location filtering, explicit white-/blacklisting)

Webhook support for logging

Should be interactive (e.g. POST /api/ban/{IP})

Detect automated vulnerability scanning

Integration with the AbuseIPDB (for checking and reporting)

As I started working on this, I realized that this would soon become more complex than I had thought at first.

Webhooks for logging

This was probably the easiest requirement to check off my list: I just wrote my own log() function that would call a webhook. Sadly, the rest wouldn't be as easy.

Allowing only Cloudflare traffic

This was still doable: I only needed to add a filter in my nginx config for my domain to only allow Cloudflare IP ranges and disallow the rest. I ended up doing something slightly different. I added a new default nginx config that would just return a 404 on every route and log access to a different file, so that I could detect connection attempts made without Cloudflare and handle them in Tabarnak myself.

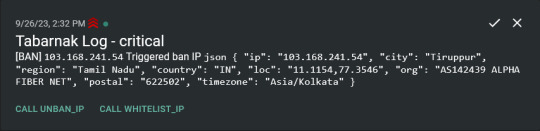

Integration with AbuseIPDB

Also not yet the hard part: just call AbuseIPDB with the parsed IP and, if the abuse confidence score is within a configured threshold, flag the IP. When that happens, I receive a notification that asks me whether to whitelist or ban the IP - I can also do nothing and let everything proceed as it normally would. If the IP gets flagged a configured number of times, ban the IP unless it has been whitelisted by then.
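A rough sketch of that flag-then-ban flow, leaving out the AbuseIPDB request itself. The class name, the defaults, and the reading of "within a configured threshold" as "at or above the threshold" are my assumptions, not the actual Tabarnak code.

```python
from collections import Counter


class FlagTracker:
    """Count flags per IP and ban once a configured number is reached."""

    def __init__(self, score_threshold=50, max_flags=3):
        self.score_threshold = score_threshold
        self.max_flags = max_flags
        self.flags = Counter()
        self.whitelist = set()
        self.banned = set()

    def observe(self, ip: str, abuse_score: int) -> str:
        if ip in self.whitelist:
            return "allowed"  # a manual whitelist entry always wins
        if ip in self.banned:
            return "banned"
        if abuse_score < self.score_threshold:
            return "ok"
        self.flags[ip] += 1  # this is where a notification would be sent
        if self.flags[ip] >= self.max_flags:
            self.banned.add(ip)
            return "banned"
        return "flagged"
```

`abuse_score` stands for the abuse confidence score returned by the lookup for the parsed IP.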

Location filtering + Whitelist + Blacklist

This is where it starts to get interesting. I had to know where a request comes from, because all the real people who would actually connect to the DNS are in similar locations. I didn't want to outright ban everyone else, as there could be valid requests from other sources. So for every new IP that triggers a callback (this only happens after a certain number of either flags or requests), I now need to get the location. I do this by just calling the ipinfo API and checking the supplied location. To avoid sending too many requests, I cache results (even though ipinfo should never be called twice for the same IP) and save them to a database. I made my own class that derives from collections.UserDict which, when accessed, tries to find the entry in memory; if it can't, it searches through the DB and returns the result. This works for setting, deleting, adding, and checking for records. Flags, AbuseIPDB results, whitelist entries, and blacklist entries also get stored in the DB to achieve persistent state even when I restart.
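Such a memory-first, database-backed dictionary could look roughly like this. It is a minimal sketch assuming sqlite3 and a single key/value table. The post does not say which database or schema is used, and `PersistentDict` is a made-up name.

```python
import sqlite3
from collections import UserDict


class PersistentDict(UserDict):
    """Dict that answers from memory first and falls back to SQLite."""

    def __init__(self, path=":memory:"):
        self.db = sqlite3.connect(path)
        self.db.execute("CREATE TABLE IF NOT EXISTS kv (key TEXT PRIMARY KEY, value TEXT)")
        super().__init__()

    def __missing__(self, key):
        # UserDict.__getitem__ calls this when the key is not in self.data.
        row = self.db.execute("SELECT value FROM kv WHERE key = ?", (key,)).fetchone()
        if row is None:
            raise KeyError(key)
        self.data[key] = row[0]  # warm the in-memory cache
        return row[0]

    def __contains__(self, key):
        try:
            self[key]
        except KeyError:
            return False
        return True

    def __setitem__(self, key, value):
        self.data[key] = value
        self.db.execute("INSERT OR REPLACE INTO kv (key, value) VALUES (?, ?)", (key, value))
        self.db.commit()

    def __delitem__(self, key):
        self.data.pop(key, None)
        self.db.execute("DELETE FROM kv WHERE key = ?", (key,))
        self.db.commit()
```

With a file path instead of `:memory:`, entries written before a restart are found again through the `__missing__` fallback.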

Detection of automated vulnerability scanning

For this, I went through my old nginx logs, looking for the smallest number of paths I need to block to catch the largest number of automated vulnerability scan requests. So I did some data science magic and wrote a route blacklist. It doesn't just end there. Since I know the routes of valid requests that I would be receiving (which are all mentioned in my nginx configs), I could just parse those configs and match the requested route against them. To achieve this I wrote some really simple regular expressions to extract all location blocks from an nginx config, alongside whether that location is absolute (preceded by an =) or relative. Once I have the locations, I can test the requested route against the valid routes and find out whether the request was made to a valid URL (I can't just look for 404 return codes here, because some pages actually do return a 404, sometimes on purpose). I also parse the request method from the logs and match the received method against the HTTP standard request methods (which cover all the methods that services on my server use). That way I can easily catch requests like:

XX.YYY.ZZZ.AA - - [25/Sep/2023:14:52:43 +0200] "145.ll|'|'|SGFjS2VkX0Q0OTkwNjI3|'|'|WIN-JNAPIER0859|'|'|JNapier|'|'|19-02-01|'|'||'|'|Win 7 Professional SP1 x64|'|'|No|'|'|0.7d|'|'|..|'|'|AA==|'|'|112.inf|'|'|SGFjS2VkDQoxOTIuMTY4LjkyLjIyMjo1NTUyDQpEZXNrdG9wDQpjbGllbnRhLmV4ZQ0KRmFsc2UNCkZhbHNlDQpUcnVlDQpGYWxzZQ==12.act|'|'|AA==" 400 150 "-" "-"
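The route and method checks described above could look roughly like the sketch below. The regular expression, the function names and the decision to skip regex locations (~ and ~*) are my own simplifications, not the expressions Tabarnak actually uses. Note that a config containing a plain "location /" block would make every route count as valid under prefix matching.

```python
import re

# "location [modifier] path {" where the modifier is =, ~, ~*, ^~ or absent.
LOCATION_RE = re.compile(
    r"^\s*location\s+(?:(=|~\*|~|\^~)\s+)?(\S+)\s*\{", re.MULTILINE
)

STANDARD_METHODS = {
    "GET", "HEAD", "POST", "PUT", "DELETE",
    "CONNECT", "OPTIONS", "TRACE", "PATCH",
}


def parse_locations(config_text):
    """Return (path, is_exact) pairs for exact and prefix location blocks."""
    locations = []
    for modifier, path in LOCATION_RE.findall(config_text):
        if modifier in ("~", "~*"):
            continue  # regex locations are left out of this sketch
        locations.append((path, modifier == "="))
    return locations


def is_valid_route(route, locations):
    """True if the requested route matches any known location."""
    path = route.split("?", 1)[0]  # ignore the query string
    for location, exact in locations:
        if exact and path == location:
            return True
        if not exact and path.startswith(location):
            return True
    return False


def is_valid_method(request_line):
    """True if the first token of the logged request is a standard HTTP method."""
    method = request_line.split(" ", 1)[0]
    return method in STANDARD_METHODS
```

For the log line above, the first token of the request is not an HTTP method at all, so is_valid_method rejects it before any route matching is needed.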

I probably overcomplicated this - by a lot - but I can't go back in time to change what I did.

Interactivity

As I showed and mentioned earlier, I can manually white-/blacklist an IP. This forced me to add threads to my previously single-threaded program. Since I was too stubborn to use websockets (I have a distaste for websockets), I opted for probably the worst option I could've taken. It works like this: I have a main thread, which does all the log parsing, processing and handling, and a side thread which watches a FIFO file that is created on startup. I can append commands to the FIFO file, and these are mapped to the functions they are supposed to call. When the FIFO reader detects a new line, it looks through the map, gets the function and executes it on the supplied IP. Doing all of this manually would be way too tedious, so I made an API endpoint on my home server that appends the commands to the file on the VPS. That also meant that I had to secure that API endpoint so that I couldn't just be spammed with random requests. Now that I could interact with Tabarnak through an API, I needed to make this user friendly - even I don't like to curl and sign my requests manually. So I integrated logging to my self-hosted instance of https://ntfy.sh and added action buttons that send the request for me. All of this just because I refused to use sockets.
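A rough sketch of the FIFO watcher idea, under my own assumptions: the command names, the "<command> <ip>" line format, the in-memory sets and the FIFO path are all hypothetical, and os.mkfifo only exists on Unix-like systems.

```python
import os
import threading

whitelisted, blacklisted = set(), set()

# Hypothetical command names mapped to the functions they trigger.
COMMANDS = {
    "whitelist": whitelisted.add,
    "blacklist": blacklisted.add,
}


def handle_line(line):
    """Parse '<command> <ip>' and run the mapped function; True if handled."""
    parts = line.split()
    if len(parts) != 2 or parts[0] not in COMMANDS:
        return False  # ignore anything that is not a known command
    COMMANDS[parts[0]](parts[1])
    return True


def watch_fifo(path):
    """Block on the FIFO and dispatch every line written to it."""
    if not os.path.exists(path):
        os.mkfifo(path)
    while True:
        # open() blocks until a writer connects; reopen after the writer closes.
        with open(path) as fifo:
            for line in fifo:
                handle_line(line)


def start_watcher(path="/tmp/tabarnak.fifo"):
    """Run the watcher in a side thread so the main thread keeps parsing logs."""
    thread = threading.Thread(target=watch_fifo, args=(path,), daemon=True)
    thread.start()
    return thread
```

Once start_watcher() is running, something like `echo "blacklist 203.0.113.7" > /tmp/tabarnak.fifo` from a shell would be picked up by the side thread. Keeping the parsing in handle_line makes the dispatch logic testable without touching a real FIFO.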

First successes and why I'm happy about this

After not too long, the bans started to happen. The traffic to my server decreased and I can finally breathe again. I may have overcomplicated this, but I don't mind. This was a really fun experience to write something new and learn more about log parsing and processing. Tabarnak probably won't last forever and I could replace it with solutions that are way easier to deploy and way more general. But what matters is that I liked doing it. It was a really fun project - which is why I'm writing this - and I'm glad that I ended up doing this. Of course I could have just used fail2ban, but I never would've been able to write all of the extras that I ended up making (I don't want to take the explanation ad absurdum so just imagine that I added cool stuff) and I never would've learned what I actually did.

So whenever you are faced with a dumb problem and could write something yourself, I think you should at least try. This was a really fun experience and it might be for you as well.

Post Scriptum

First of all, apologies for the English - I'm not a native speaker so I'm sorry if some parts were incorrect or anything like that. Secondly, I'm sure that there are simpler ways to accomplish what I did here, however this was more about the experience of creating something myself rather than using some pre-made tool that does everything I want to (maybe even better?). Third, if you actually read until here, thanks for reading - hope it wasn't too boring - have a nice day :)

10 notes

Text

Beyond Testing: Monitoring APIs with Automation Tools

API automation tools are not just for testing; they play a crucial role in API monitoring, ensuring APIs perform optimally in real-world conditions. Monitoring APIs with automation tools offers continuous insights into performance, availability, and reliability, helping teams address issues proactively.

Real-Time Performance Tracking

Tools like Postman, ReadyAPI, and New Relic enable real-time monitoring of API response times, latency, and throughput. These metrics are critical to ensuring seamless user experiences.

Error Detection and Alerts

Automated monitoring tools can identify issues such as failed endpoints, incorrect responses, or timeouts. Alerts notify teams immediately, allowing quick resolution and minimizing downtime.

Monitoring in Production Environments

Tools like Datadog or AWS CloudWatch continuously monitor APIs in live environments, ensuring they function correctly under real-world load conditions.

Integration with CI/CD Pipelines

API monitoring can be integrated with CI/CD workflows, enabling teams to validate the stability of APIs with every release.

Ensuring SLA Compliance

Automated monitoring tracks uptime and response metrics to ensure APIs meet Service Level Agreements (SLAs).
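As a small illustration of what tracking uptime and response metrics against an SLA can mean in practice, the sketch below computes an uptime percentage and a nearest-rank 95th-percentile latency from probe results. The function names and the default thresholds (99.9% uptime, 500 ms) are assumptions chosen for the example and are not taken from any of the tools named above.

```python
import math


def uptime_percent(results):
    """results: list of booleans, True when a probe succeeded."""
    if not results:
        raise ValueError("no probe results")
    return 100.0 * sum(results) / len(results)


def percentile(values, pct):
    """Nearest-rank percentile of a non-empty list of numbers."""
    if not values:
        raise ValueError("no values")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def meets_sla(results, latencies_ms, min_uptime=99.9, max_p95_ms=500):
    """True if both the uptime and the p95 latency targets are met."""
    return (
        uptime_percent(results) >= min_uptime
        and percentile(latencies_ms, 95) <= max_p95_ms
    )
```

A scheduled job could feed the probe outcomes from the last reporting window into meets_sla and raise an alert when it returns False.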

By extending automation tools beyond testing into monitoring, teams can enhance their API lifecycle management, ensuring consistent performance and reliability in dynamic environments.

#api test automation#rest assured api testing#api automation#api automation testing tools#api test tool#api automation tools#rest api automation#rest api testing automation#best api automation testing tools#best api automation tools#api testing in automation

0 notes

Text

REST API data automation | Match Data Pro LLC

Streamline your business with REST API data automation from Match Data Pro LLC. We offer seamless integration, efficient workflows, and reliable solutions to connect and manage your data effortlessly. Optimize processes and boost productivity with our expert services today!

REST API data automation

0 notes

Text

25 Python Projects to Supercharge Your Job Search in 2024

Introduction: In the competitive world of technology, a strong portfolio of practical projects can make all the difference in landing your dream job. As a Python enthusiast, building a diverse range of projects not only showcases your skills but also demonstrates your ability to tackle real-world challenges. In this blog post, we'll explore 25 Python projects that can help you stand out and secure that coveted position in 2024.

1. Personal Portfolio Website

Create a dynamic portfolio website that highlights your skills, projects, and resume. Showcase your creativity and design skills to make a lasting impression.

2. Blog with User Authentication

Build a fully functional blog with features like user authentication and comments. This project demonstrates your understanding of web development and security.

3. E-Commerce Site

Develop a simple online store with product listings, shopping cart functionality, and a secure checkout process. Showcase your skills in building robust web applications.

4. Predictive Modeling

Create a predictive model for a relevant field, such as stock prices, weather forecasts, or sales predictions. Showcase your data science and machine learning prowess.

5. Natural Language Processing (NLP)

Build a sentiment analysis tool or a text summarizer using NLP techniques. Highlight your skills in processing and understanding human language.

6. Image Recognition

Develop an image recognition system capable of classifying objects. Demonstrate your proficiency in computer vision and deep learning.

7. Automation Scripts

Write scripts to automate repetitive tasks, such as file organization, data cleaning, or downloading files from the internet. Showcase your ability to improve efficiency through automation.

8. Web Scraping

Create a web scraper to extract data from websites. This project highlights your skills in data extraction and manipulation.

9. Pygame-based Game

Develop a simple game using Pygame or any other Python game library. Showcase your creativity and game development skills.

10. Text-based Adventure Game

Build a text-based adventure game or a quiz application. This project demonstrates your ability to create engaging user experiences.

11. RESTful API

Create a RESTful API for a service or application using Flask or Django. Highlight your skills in API development and integration.

12. Integration with External APIs

Develop a project that interacts with external APIs, such as social media platforms or weather services. Showcase your ability to integrate diverse systems.

13. Home Automation System

Build a home automation system using IoT concepts. Demonstrate your understanding of connecting devices and creating smart environments.

14. Weather Station

Create a weather station that collects and displays data from various sensors. Showcase your skills in data acquisition and analysis.

15. Distributed Chat Application

Build a distributed chat application using a messaging protocol like MQTT. Highlight your skills in distributed systems.

16. Blockchain or Cryptocurrency Tracker

Develop a simple blockchain or a cryptocurrency tracker. Showcase your understanding of blockchain technology.

17. Open Source Contributions

Contribute to open source projects on platforms like GitHub. Demonstrate your collaboration and teamwork skills.

18. Network or Vulnerability Scanner

Build a network or vulnerability scanner to showcase your skills in cybersecurity.

19. Decentralized Application (DApp)

Create a decentralized application using a blockchain platform like Ethereum. Showcase your skills in developing applications on decentralized networks.

20. Machine Learning Model Deployment

Deploy a machine learning model as a web service using frameworks like Flask or FastAPI. Demonstrate your skills in model deployment and integration.

21. Financial Calculator

Build a financial calculator that incorporates relevant mathematical and financial concepts. Showcase your ability to create practical tools.

22. Command-Line Tools

Develop command-line tools for tasks like file manipulation, data processing, or system monitoring. Highlight your skills in creating efficient and user-friendly command-line applications.

23. IoT-Based Health Monitoring System

Create an IoT-based health monitoring system that collects and analyzes health-related data. Showcase your ability to work on projects with social impact.

24. Facial Recognition System

Build a facial recognition system using Python and computer vision libraries. Showcase your skills in biometric technology.

25. Social Media Dashboard

Develop a social media dashboard that aggregates and displays data from various platforms. Highlight your skills in data visualization and integration.

Conclusion: As you embark on your job search in 2024, remember that a well-rounded portfolio is key to showcasing your skills and standing out from the crowd. These 25 Python projects cover a diverse range of domains, allowing you to tailor your portfolio to match your interests and the specific requirements of your dream job.

If you want to know more, click here: https://analyticsjobs.in/question/what-are-the-best-python-projects-to-land-a-great-job-in-2024/

#python projects#top python projects#best python projects#analytics jobs#python#coding#programming#machine learning

2 notes

Text

Email to SMS Gateway

Ejointech's Email to SMS Gateway bridges the gap between traditional email and instant mobile communication, empowering you to reach your audience faster and more effectively than ever before. Our innovative solution seamlessly integrates with your existing email client, transforming emails into instant SMS notifications with a single click.

Why Choose Ejointech's Email to SMS Gateway?

Instant Delivery: Cut through the email clutter and ensure your messages are seen and responded to immediately. SMS boasts near-instantaneous delivery rates, maximizing engagement and driving results.

Effortless Integration: No need to switch platforms or disrupt your workflow. Send SMS directly from your familiar email client, streamlining communication and saving valuable time.

Seamless Contact Management: Leverage your existing email contacts for SMS communication, eliminating the need for separate lists and simplifying outreach.

Two-Way Communication: Receive SMS replies directly in your email inbox, fostering a convenient and efficient dialogue with your audience.

Unlocking Value for Businesses:

Cost-Effectiveness: Eliminate expensive hardware and software investments. Our cloud-based solution delivers reliable SMS communication at a fraction of the cost.

Enhanced Customer Engagement: Deliver timely appointment reminders, delivery updates, and promotional campaigns via SMS, boosting customer satisfaction and loyalty.

Improved Operational Efficiency: Automate SMS notifications and bulk messaging, freeing up your team to focus on core tasks.

Streamlined Workflow: Integrate with your CRM or other applications for automated SMS communication, streamlining processes and maximizing productivity.

Ejointech's Email to SMS Gateway Features:

Powerful API: Integrate seamlessly with your existing systems for automated and personalized SMS communication.

Wholesale SMS Rates: Enjoy competitive pricing for high-volume campaigns, ensuring cost-effective outreach.

Bulk SMS Delivery: Send thousands of personalized messages instantly, perfect for marketing alerts, notifications, and mass communication.

Detailed Delivery Reports: Track message delivery and campaign performance with comprehensive reporting tools.

Robust Security: Rest assured that your data and communications are protected with industry-leading security measures.

Ejointech: Your Trusted Partner for Email to SMS Success

With a proven track record of excellence and a commitment to customer satisfaction, Ejointech is your ideal partner for implementing an effective Email to SMS strategy. Our dedicated team provides comprehensive support and guidance, ensuring you get the most out of our solution.

Ready to experience the power of instant communication? Contact Ejointech today and discover how our Email to SMS Gateway can transform the way you connect with your audience.

#bulk sms#ejointech#sms marketing#sms modem#sms gateway#ejoin sms gateway#ejoin sms#sms gateway hardware#email to sms gateway

5 notes

Text

My Favorite Full Stack Tools and Technologies: Insights from a Developer

It was a seemingly ordinary morning when I first realized the true magic of full stack development. As I sipped my coffee, I stumbled upon a statistic that left me astounded: 97% of websites are built by full stack developers. That moment marked the beginning of my journey into the dynamic world of web development, where every line of code felt like a brushstroke on the canvas of the internet.

In this blog, I invite you to join me on a fascinating journey through the realm of full stack development. As a seasoned developer, I’ll share my favorite tools and technologies that have not only streamlined my workflow but also brought my creative ideas to life.

The Full Stack Developer’s Toolkit

Before we dive into the toolbox, let’s clarify what a full stack developer truly is. A full stack developer is someone who possesses the skills to work on both the front-end and back-end of web applications, bridging the gap between design and server functionality.

Tools and technologies are the lifeblood of a developer’s daily grind. They are the digital assistants that help us craft interactive websites, streamline processes, and solve complex problems.

Front-End Favorites

As any developer will tell you, HTML and CSS are the foundation of front-end development. HTML structures content, while CSS styles it. These languages, like the alphabet of the web, provide the basis for creating visually appealing and user-friendly interfaces.

JavaScript and Frameworks: JavaScript, often hailed as the “language of the web,” is my go-to for interactivity. The versatility of JavaScript and its ecosystem of libraries and frameworks, such as React and Vue.js, has been a game-changer in creating responsive and dynamic web applications.

Back-End Essentials

The back-end is where the magic happens behind the scenes. I’ve found server-side languages like Python and Node.js to be my trusted companions. They empower me to build robust server applications, handle data, and manage server resources effectively.

Databases are the vaults where we store the treasure trove of data. My preference leans toward relational databases like MySQL and PostgreSQL, as well as NoSQL databases like MongoDB. The choice depends on the project’s requirements.

Development Environments

The right code editor can significantly boost productivity. Personally, I’ve grown fond of Visual Studio Code for its flexibility, extensive extensions, and seamless integration with various languages and frameworks.

Git is the hero of collaborative development. With Git and platforms like GitHub, tracking changes, collaborating with teams, and rolling back to previous versions have become smooth sailing.

Productivity and Automation

Automation is the secret sauce in a developer’s recipe for efficiency. Build tools like Webpack and task runners like Gulp automate repetitive tasks, optimize code, and enhance project organization.

Testing is the compass that keeps us on the right path. I rely on tools like Jest and Chrome DevTools for testing and debugging. These tools help uncover issues early in development and ensure a smooth user experience.

Frameworks and Libraries

Front-end frameworks like React and Angular have revolutionized web development. Their component-based architecture and powerful state management make building complex user interfaces a breeze.

Back-end frameworks, such as Express.js for Node.js and Django for Python, are my go-to choices. They provide a structured foundation for creating RESTful APIs and handling server-side logic efficiently.

Security and Performance

The internet can be a treacherous place, which is why security is paramount. Tools like OWASP ZAP and security best practices help fortify web applications against vulnerabilities and cyber threats.

Page load speed is critical for user satisfaction. Tools and techniques like Lighthouse and performance audits ensure that websites are optimized for quick loading and smooth navigation.

Project Management and Collaboration

Collaboration and organization are keys to successful projects. Tools like Trello, JIRA, and Asana help manage tasks, track progress, and foster team collaboration.

Clear communication is the glue that holds development teams together. Platforms like Slack and Microsoft Teams facilitate real-time discussions, file sharing, and quick problem-solving.

Personal Experiences and Insights

It’s one thing to appreciate these tools in theory, but it’s their application in real projects that truly showcases their worth. I’ve witnessed how this toolkit has brought complex web applications to life, from e-commerce platforms to data-driven dashboards.

The journey hasn’t been without its challenges. Whether it’s tackling tricky bugs or optimizing for mobile performance, my favorite tools have always been my partners in overcoming obstacles.

Continuous Learning and Adaptation

Web development is a constantly evolving field. New tools, languages, and frameworks emerge regularly. As developers, we must embrace the ever-changing landscape and be open to learning new technologies.

Fortunately, the web development community is incredibly supportive. Platforms like Stack Overflow, GitHub, and developer forums offer a wealth of resources for learning, troubleshooting, and staying updated. The ACTE Institute offers numerous Full stack developer courses, bootcamps, and communities that can provide you with the necessary resources and support to succeed in this field. Best of luck on your exciting journey!

In this blog, we’ve embarked on a journey through the world of full stack development, exploring the tools and technologies that have become my trusted companions. From HTML and CSS to JavaScript frameworks, server-side languages, and an array of productivity tools, these elements have shaped my career.

As a full stack developer, I’ve discovered that the right tools and technologies can turn challenges into opportunities and transform creative ideas into functional websites and applications. The world of web development continues to evolve, and I eagerly anticipate the exciting innovations and discoveries that lie ahead. My hope is that this exploration of my favorite tools and technologies inspires fellow developers on their own journeys and fuels their passion for the ever-evolving world of web development.

#frameworks#full stack web development#web development#front end development#backend#programming#education#information

4 notes

Text

Senior Software Engineer

Create intuitive, responsive UIs using PHP and NodeJS. Implement RESTful APIs, microservices, and automated CI/CD pipelines… Good working experience in PHP, NodeJS, Delphi, Perl. Working experience in AWS. Ability to analyze and troubleshoot…

0 notes

Text

How Secure Are Internet of Things (IoT) Devices in 2025?

From smart homes anticipating your every need to industrial sensors optimizing manufacturing lines, Internet of Things (IoT) devices have seamlessly integrated into our lives, promising unparalleled convenience and efficiency. In 2025, are these interconnected gadgets truly secure, or are they opening up a Pandora's Box of vulnerabilities?

The truth is, IoT security is a complex and often concerning landscape. While significant progress is being made by some manufacturers and regulatory bodies, many IoT devices still pose substantial risks, largely due to a race to market that often prioritizes features and cost over robust security.

The Allure vs. The Alarms: Why IoT Devices Are Often Vulnerable

The promise of IoT is immense: automation, data-driven insights, remote control. The peril, however, lies in how easily these devices can become entry points for cyberattacks, leading to privacy breaches, network compromise, and even physical harm.

Here's why many IoT devices remain a security headache:

Weak Default Credentials & Lack of Updates:

The Problem: Many devices are still shipped with easily guessable default usernames and passwords (e.g., "admin/admin," "user/123456"). Even worse, many users never change them. This is the single easiest way for attackers to gain access.

The Challenge: Unlike smartphones or laptops, many IoT devices lack clear, robust, or frequent firmware update mechanisms. Cheaper devices often receive no security patches at all after purchase, leaving critical vulnerabilities unaddressed for their entire lifespan.

Insecure Network Services & Open Ports:

The Problem: Devices sometimes come with unnecessary network services enabled or ports left open to the internet, creating direct pathways for attackers. Poorly configured remote access features are a common culprit.

The Impact: Remember the Mirai botnet? It famously exploited vulnerable IoT devices with open ports and default credentials to launch massive Distributed Denial of Service (DDoS) attacks.

Lack of Encryption (Data In Transit & At Rest):

The Problem: Data transmitted between the device, its mobile app, and the cloud often lacks proper encryption, making it vulnerable to eavesdropping (Man-in-the-Middle attacks). Sensitive data stored directly on the device itself may also be unencrypted.

The Risk: Imagine your smart speaker conversations, security camera footage, or even health data from a wearable being intercepted or accessed.

Insecure Hardware & Physical Tampering:

The Problem: Many IoT devices are designed with minimal physical security. Easily accessible debug ports (like JTAG or UART) or lack of tamper-resistant enclosures can allow attackers to extract sensitive data (like firmware or encryption keys) directly from the device.

The Threat: With physical access, an attacker can potentially rewrite firmware, bypass security controls, or extract confidential information.

Vulnerabilities in Accompanying Apps & Cloud APIs:

The Problem: The web interfaces, mobile applications, and cloud APIs used to control IoT devices are often susceptible to common web vulnerabilities like SQL Injection, Cross-Site Scripting (XSS), or insecure authentication.

The Loophole: Even if the device itself is somewhat secure, a flaw in the control app or cloud backend can compromise the entire ecosystem.

Insufficient Privacy Protections:

The Problem: Many IoT devices collect vast amounts of personal and sensitive data (e.g., location, habits, biometrics) without always providing clear consent mechanisms or robust data handling policies. This data might then be shared with third parties.

The Concern: Beyond direct attacks, the sheer volume of personal data collected raises significant privacy concerns, especially if it falls into the wrong hands.

Supply Chain Risks:

The Problem: Vulnerabilities can be introduced at any stage of the complex IoT supply chain, from compromised components to insecure firmware inserted during manufacturing.

The Fallout: A single compromised component can affect thousands or millions of devices, as seen with some supply chain attacks in the broader tech industry.

The Elephant in the Room: Why Securing IoT is Hard

Diversity & Scale: The sheer number and variety of IoT devices (from tiny sensors to complex industrial machines) make a "one-size-fits-all" security solution impossible.

Resource Constraints: Many devices are low-power, low-cost, or battery-operated, limiting the computational resources available for robust encryption or security features.

Long Lifespans: Unlike phones, many IoT devices are expected to operate for years, even decades, long after manufacturers might cease providing support or updates.

Patching Complexity: Pushing updates to millions of geographically dispersed devices, sometimes with limited connectivity, is a logistical nightmare.

Consumer Awareness: Many consumers prioritize convenience and price over security, often unaware of the risks they introduce into their homes and networks.

Towards a More Secure IoT in 2025: Your Shield & Their Responsibility

While the challenges are significant, there's a collective effort towards a more secure IoT future. Here's what needs to happen and what you can do:

For Manufacturers (Their Responsibility):

Security by Design: Integrate security into the entire product development lifecycle from day one, rather than as an afterthought.

Secure Defaults: Ship devices with unique, strong, and randomly generated default passwords.

Robust Update Mechanisms: Implement easy-to-use, automatic, and regular firmware updates throughout the device's lifecycle.

Clear End-of-Life Policies: Communicate transparently when support and security updates for a device will cease.

Secure APIs: Design secure application programming interfaces (APIs) for cloud communication and mobile app control.

Adhere to Standards: Actively participate in and adopt industry security standards (e.g., ETSI EN 303 645, IoT Security Foundation guidelines, PSA Certified). Regulatory pushes in Europe (like the Cyber Resilience Act) and elsewhere are driving this.
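To illustrate the "Secure Defaults" point from the list above, here is a minimal sketch of how a unique, random default password could be generated per device at provisioning time. The function name, the alphabet and the 12-character minimum are assumptions for illustration, not requirements quoted from any of the standards mentioned.

```python
import secrets
import string

# Letters and digits only, so the password is easy to print on a device label.
ALPHABET = string.ascii_letters + string.digits


def generate_device_password(length=16):
    """Return a random password drawn from a cryptographically secure source."""
    if length < 12:
        raise ValueError("default passwords should be at least 12 characters")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))
```

The secrets module is used instead of random because it draws from the operating system's secure random source, which is what makes each device's default hard to guess.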

For Consumers & Businesses (Your Shield):

Change Default Passwords IMMEDIATELY: This is your absolute first line of defense. Make them strong and unique.

Network Segmentation: Isolate your IoT devices on a separate Wi-Fi network (a "guest" network or a VLAN if your router supports it). This prevents a compromised IoT device from accessing your main computers and sensitive data.

Keep Firmware Updated: Regularly check for and apply firmware updates for all your smart devices. If a device doesn't offer updates, reconsider its use.

Disable Unused Features: Turn off any unnecessary ports, services, or features on your IoT devices to reduce their attack surface.

Research Before You Buy: Choose reputable brands with a track record of security and clear privacy policies. Read reviews and look for security certifications.

Strong Wi-Fi Security: Ensure your home Wi-Fi uses WPA2 or, ideally, WPA3 encryption with a strong, unique password.

Be Mindful of Data Collected: Understand what data your devices are collecting and how it's being used. If the privacy policy isn't clear or feels invasive, reconsider the device.

Physical Security: Secure physical access to your devices where possible, preventing easy tampering.

Regular Monitoring (for Businesses): Implement tools and processes to monitor network traffic from IoT devices for unusual or suspicious activity.

In 2025, the convenience offered by IoT devices is undeniable. However, their security is not a given. It's a shared responsibility that demands both diligence from manufacturers to build secure products and vigilance from users to deploy and manage them safely.

0 notes