#shared services automation



Shared Services Automation | Nimbles2p.com

Transform your business with Nimbles2p.com shared services automation. Streamline processes and boost productivity with our cutting-edge technology.


Elevate Client Services with Excel: Expert Tips for Financial Consultants by Grayson Garelick

Financial consultants operate in a dynamic environment where precision, efficiency, and client satisfaction are paramount. Excel, as a versatile tool, offers an array of features that can significantly enhance the services provided by financial consultants. Grayson Garelick, an accomplished financial analyst and consultant, shares invaluable Excel tips to help financial consultants elevate their client services and add tangible value.

The Role of Excel in Financial Consulting

Excel serves as the backbone of financial consulting, enabling consultants to analyze data, create models, and generate insights that drive informed decision-making. As the demands of clients become increasingly complex, mastering Excel becomes essential for financial consultants aiming to deliver exceptional services.

1. Customize Excel Templates

One of the most effective ways to streamline workflows and improve efficiency is by creating customized Excel templates tailored to specific client needs. Grayson suggests developing templates for budgeting, forecasting, and financial reporting that can be easily adapted for different clients, saving time and ensuring consistency.

2. Utilize PivotTables for Data Analysis

PivotTables are powerful tools in Excel that allow financial consultants to analyze large datasets and extract meaningful insights quickly. Grayson emphasizes the importance of mastering PivotTables for segmenting data, identifying trends, and presenting information in a clear and concise manner to clients.

3. Implement Conditional Formatting

Conditional formatting is a valuable feature in Excel that allows consultants to highlight important information and identify outliers effortlessly. By setting up conditional formatting rules, consultants can draw attention to key metrics, discrepancies, or trends, facilitating easier interpretation of data by clients.

4. Leverage Excel Add-ins

Excel offers a variety of add-ins that extend its functionality and provide additional features tailored to financial analysis and reporting. Grayson recommends exploring add-ins such as Power Query, Power Pivot, and Solver to enhance data manipulation, modeling, and optimization capabilities.

5. Automate Repetitive Tasks with Macros

Macros enable financial consultants to automate repetitive tasks and streamline workflows, saving valuable time and reducing the risk of errors. Grayson advises recording and editing macros to automate tasks such as data entry, formatting, and report generation, allowing consultants to focus on value-added activities.

6. Master Advanced Formulas and Functions

Excel's extensive library of formulas and functions offers endless possibilities for financial analysis and modeling. Grayson suggests mastering advanced formulas such as VLOOKUP, INDEX-MATCH, and array formulas to perform complex calculations, manipulate data, and create sophisticated models tailored to client needs.

7. Visualize Data with Charts and Graphs

Visualizing data is essential for conveying complex information in an easily digestible format. Excel offers a variety of chart types and customization options that enable consultants to create compelling visuals that resonate with clients. Grayson recommends experimenting with different chart styles to find the most effective way to present data and insights.

8. Collaborate and Share Workbooks Online

Excel's collaboration features enable financial consultants to work seamlessly with clients, colleagues, and stakeholders in real-time. Grayson highlights the benefits of sharing workbooks via OneDrive or SharePoint, allowing multiple users to collaborate on the same document, track changes, and maintain version control.

9. Protect Sensitive Data with Security Features

Data security is a top priority for financial consultants handling sensitive client information. Excel's built-in security features, such as password protection and encryption, help safeguard confidential data and ensure compliance with regulatory requirements. Grayson advises implementing security protocols to protect client data and maintain trust.

10. Stay Updated with Excel Training and Certification

Excel is a constantly evolving tool, with new features and updates released regularly. Grayson stresses the importance of staying updated with the latest Excel training and certification programs to enhance skills, explore new capabilities, and maintain proficiency in Excel's ever-changing landscape.

Elevating Client Services with Excel Mastery

Excel serves as a catalyst for innovation and excellence in financial consulting, empowering consultants to deliver exceptional services that add tangible value to clients. By implementing Grayson Garelick's Excel tips, financial consultants can streamline workflows, enhance data analysis capabilities, and foster collaboration, ultimately driving client satisfaction and success. As financial consulting continues to evolve, mastering Excel remains a cornerstone of excellence, enabling consultants to thrive in a competitive landscape and exceed client expectations.

#Financial Consulting#grayson garelick#Customize Excel Templates#Utilize PivotTables#Implement Conditional Formatting#Leverage Excel Add-ins#Automate Repetitive Tasks with Macros#Advanced Formulas and Functions#Visualize Data with Charts and Graphs#Collaborate and Share Workbooks#Protect Sensitive Data with Security#Stay Updated with Excel Training#Elevating Client Services with Excel


Is the Future of Localization Up for Grabs?

From the beaches of Hawaii to the boardrooms of the global language industry, Andrew Smart has seen it all. In my latest blog post, we dive into his candid reflections on why he’s selling his shares in Slator and how his latest venture, AcudocX, is quietly transforming the certified document translation space. Whether you’re a language industry veteran, a rising entrepreneur, or just curious about where the next big opportunity lies, this is a must-read!

📚 Read the full blog here: https://www.robinayoub.blog

🎙 Watch the full interview on YouTube: https://youtu.be/rE2IdDLr1pc

📹 Prefer bite-sized insights? Watch the 12 YouTube Shorts here: https://www.youtube.com/@L10NFiresideChat/shorts

#Localization #Translation #LanguageServices #Slator #AcudocX #Entrepreneurship #LanguageIndustry #AIinTranslation #L10NFiresideChat

#AcudocX#AI in Translation#Andrew Smart#B2B Localization#B2C Translation Solutions#Certified Document Translation#Document Translation Platforms#entrepreneurship#Freelance Translators#Global Language Market#Investor Opportunities#Language Industry News#Language Service Providers#language services#Localization Business Strategy#localization industry#Localization Innovation#Localization Trends#Machine Translation#Media and M&A#Slator#Slator Shares for Sale#Translation Automation#Translation Startups#Translation Technology


Why Shared Services Centers (SSCs) are Key to Precise Financial Reporting

In today’s complex financial landscape, CFOs face mounting pressure to ensure precision in financial reporting. Even minor errors in data entry, account reconciliation, or currency conversion can cascade into significant financial risks. This is where Shared Services Centers (SSCs) step in, offering a scalable and robust solution for error-free financial reporting. Common Accuracy…


#Automated financial reporting#Financial reporting accuracy#Financial reporting in SSCs#Shared Services Centers (SSCs)#SSC vs. traditional finance


#Automation as a Service#Automation as a Service Market#Automation as a Service Market Size#Automation as a Service Market Share#Automation as a Service Market Growth#Automation as a Service Market Trend


Empower Your Business with DDS4U's Comprehensive Services

At DDS4U, we provide a suite of services designed to streamline your operations, drive growth, and ensure your business stays ahead in today’s competitive market.

AI-Powered Business Automation

Revolutionize your workflows with our AI-driven automation platform. By automating repetitive tasks and integrating advanced AI technologies, we help you save time, reduce costs, and improve accuracy, allowing you to focus on strategic initiatives.

Custom Software Development

Our experienced software developers create tailored solutions that meet your unique business needs. Whether you require a new application or need to upgrade existing systems, our innovative and scalable software solutions ensure your business operates efficiently and effectively.

CRM Solutions

Enhance your customer relationships with our comprehensive CRM platform. Manage customer interactions, streamline sales processes, and gain valuable insights to drive better business decisions. Our CRM system is designed to boost customer satisfaction and loyalty, ultimately leading to increased sales and growth.

Targeted Advertisement Platform

Maximize your reach and engagement with our cutting-edge advertising platform. Tailor your campaigns to specific audiences and utilize real-time analytics to optimize performance. Our platform helps you achieve higher conversion rates and a stronger online presence.

In-App Advertisement Space

Monetize your mobile applications with designated ad spaces. Our in-app advertising feature allows you to serve targeted ads to users, providing an additional revenue stream while ensuring ads are relevant and non-intrusive.

Referral Portal

Expand your network and drive business growth with our referral portal. Easily manage and track referrals, incentivize partners, and streamline communication. Our portal fosters strong professional relationships and opens new opportunities for your business.

Business Networking Platform

Connect with industry professionals and collaborate on projects through our dynamic networking platform. Share knowledge, explore partnerships, and expand your reach in a supportive community designed to foster business success.

Social Media Poster Design

Boost your social media presence with professionally designed posters. Our team creates visually appealing graphics tailored for social media platforms, helping you engage with your audience and enhance your brand’s online visibility.

Discount Booklets

Offer your customers exclusive discounts through our customizable discount booklets. This feature helps increase customer loyalty and encourages repeat business, driving higher sales and customer satisfaction.

Self-Managed Advertisements

Take control of your advertising campaigns with our self-managed ad platform. Create, monitor, and optimize your ads independently, giving you the flexibility to adjust strategies and maximize ROI.

Video Promotions

Enhance your marketing efforts with engaging promotional videos. Showcase your products and services, highlight unique selling points, and captivate your audience with compelling visual content that drives brand awareness and customer engagement.

At DDS4U, we are committed to helping your business succeed. Our comprehensive services are designed to address your unique challenges and support your growth ambitions. Partner with us to unlock new opportunities and achieve your business goals.



Simplify Your Communication for WhatsApp Business API Messages with SMSGatewayCenter's Shared Team Inbox (Live Agent Chat)

Businesses need to manage client relationships in an efficient and successful manner in the era of instant communication. With the help of the robust WhatsApp Business API, businesses can connect with their clients on a personal level. But handling these exchanges can get stressful, particularly for expanding companies that receive a lot of enquiries. This is where the Shared Team Inbox (Live Agent Chat) feature from SMSGatewayCenter is useful.

#WhatsApp Business API#Shared Team Inbox#Live Agent Chat#customer service#business communication#SMSGatewayCenter#automated chatbot#rich text chatting#scalable customer support#instant connectivity


Top Tools and Services That Improve Productivity in the Workplace

In today’s fast-paced business environment, enhancing workplace productivity is paramount for sustained growth and success. Employers and team leaders are on a constant lookout for tools and services that can streamline operations and facilitate better project management. From cloud-based platforms to automated software, there are myriad options available that cater to various business needs. In…


#automation software#communication tools#document sharing services#free online cloud storage#productivity in the workplace#project management platforms#time tracking management tools


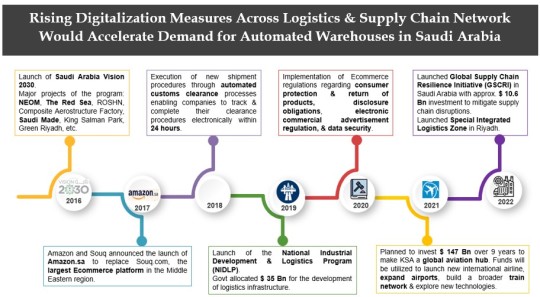

Decoding KSA's Warehousing Automation: Demand and Supply Insights: Ken Research

Saudi Arabia (KSA) drives warehousing automation as a regional logistics epicenter, fueled by a consolidated market and booming e-commerce demand.

Storyline

Saudi Arabia's infrastructure initiatives drive demand for automated warehouses.

Growing demand for cold storage services presents growth opportunities.

Focus on e-commerce and retail fuels demand for automation.

As per Ken Research estimates, the warehousing and logistics industry is poised to undergo a transformative automation phase.

With the warehousing automation industry growing globally and the market consolidated, KSA’s government has pushed to turn the nation into a Regional Logistics Epicenter. That push makes automation a key requirement of the regional logistics chain and enables faster growth of KSA’s warehouse automation industry. Meanwhile, an unprecedented surge in the e-commerce market has balanced the demand side. In this piece, we uncover the industry landscape and the demand and supply sides of KSA’s warehousing automation industry.

1. Supply side boost: Government Plans and E-commerce Fuel Automated Warehouses in KSA.


Saudi Arabia's ambitious government infrastructure plans, including initiatives like NEOM, The Red Sea, ROSHN, and the National Industrial Development & Logistics Program (NIDLP), have created a solid foundation for the logistics network in the country. These developments, supported by a $35 Bn allocation for logistics infrastructure (and ~$100 Bn in transportation & logistics infrastructure), have led to an increased demand for automated warehouses. The focus on efficiency, speed, and accuracy in the supply chain has made the implementation of automated warehouse solutions essential. This growing demand is driven by the booming e-commerce industry's evolving requirements and the need to streamline operations and ensure timely deliveries.

2. Demand Surge: Saudi Arabia's Rise as a Transshipment Hub Spurs Demand for Affordable Modern Warehouse Solutions.


The rising demand for cold storage services in Saudi Arabia, primarily driven by the food and beverage (F&B) and pharmaceutical sectors, is leading to significant growth opportunities. To cater to this demand, companies are adopting asset-light models and relying on third-party logistics (3PL) providers who offer specialized cold storage solutions, given that only 5% of the warehouses are currently automated. These providers leverage innovative technologies to ensure efficient operations and maintain the quality and integrity of stored products. As a result, companies are strategically expanding their warehouses and investing in cutting-edge solutions. This transformative phase is focused on meeting the evolving needs of the F&B and pharmaceutical sectors while gaining a larger market share.

3. “A balance to be the solution:” The demand for and market share of e-commerce and retail are expected to increase in the future, owing to a growing focus on reducing the overall sales cycle duration.


Experience the transformative power of automation as it reshapes the warehousing and logistics landscape, propelling the retail and e-commerce industry into a new era. In this fast-paced world, e-commerce automation software becomes the key driver, enabling businesses to focus on their core strengths and strategic goals. The adoption of automated warehouse management systems empowers 3PL companies to achieve unprecedented efficiency, accuracy, and real-time inventory visibility, while reducing costs and enhancing customer service. As per our estimates at Ken Research, the market will grow at a steady pace as it undergoes a transformative warehouse automation process.

#KSA Warehouse Automation Market#KSA Supply Chain Automation Market#KSA Inventory Automation Market#Trends KSA Warehouse Automation Market#KSA Warehouse Automation Market Opportunities#Challenges KSA Warehouse Automation Market#Number of Warehouses in KSA#Number of Logistics Service Providers in KSA#Competition KSA Warehouse Automation Markets#Schaffer Warehouse Automation KSA Market Share#Oracle KSA Market Share#Swisslog KSA Market Revenue#CIN7 KSA Annual Revenue#Grey Orange KSA Market Share#Ancra KSA Market Revenue#Fizyr KSA Market Share#Caja KSA Annual growth#Investment KSA Warehouse Automation Market#Leading Companies KSA Warehouse Automation Market#Emerging Companies KSA Warehouse Automation Market#Major Players KSA Warehouse Automation Market#KSA AMR Market#Handplus Robotics KSA Market Share#Fetchr KSA Market Revenue#Flytbase KSA Market#Warehouse Automations Lab KSA Market#Saco KSA Market Share#Actiw KSA Market Revenue#Airmap KSA Market Share#Welcome Bank KSA Market Share


#Home#Energy & Power#Global Distribution Feeder Automation Market#Global Distribution Feeder Automation Market Size#Share#Trends#Growth#Industry Analysis By Type( Fault Location#Isolation#Service Restoratio#Automatic Transfer Scheme )#By Application( Industrial#Commercial#Residential )#Key Players#Revenue#Future Development & Forecast 2023-2032


UAE Logistics Market to grow at a rate of 7.5% between 2021 and 2026, owing to government initiatives such as the expansion of sea ports alongside technological innovations such as real-time tracking: Ken Research

A history of steady growth alongside a positive forecast is increasing stakeholders' confidence and interest in the UAE’s logistics market, says a report by Ken Research.

1. Expansion of existing sea ports and the emergence of the e-commerce sector, coupled with rising maritime, air cargo & land transport sectors, serve as major catalysts for the growth and development of the logistics industry in the UAE.

The government of the UAE aims to establish the country as a logistics hub through huge investments in infrastructure. Initiatives such as the development of sea ports (USD 1.09 Billion to enhance logistical infrastructure for serving trading operations), alongside a growing pharmaceutical & maritime industry, will help the UAE government achieve its economic growth targets by 2040. The country’s strength lies mainly in its maritime sector. As per relevant data, the Bunker Supply Index ranked the UAE 3rd globally in transport services and 5th globally as a key competitive maritime hub. As of 2022, there were more than 10 million cubic meters of crude and oil products storage capacity at Fujairah, making Fujairah the world’s No. 3 bunkering hub, which contributes to strengthening the UAE’s position as a reliable supplier of crude oil. All in all, the country has huge potential when it comes to expanding its logistics sector.


2. “Integrating Technology to market’s growth prospects:” With increasing technological innovations and advancements across the world, the logistics sector in the UAE is also benefiting.

#Bollore Logistics UAE Annual Revenue#BSD City UAE Market Share#Ceva Logistics UAE Market Revenue#Challenges UAE Logistics Market#Competitors in UAE Logistics Market#Emerging players in UAE Logistics Market#Hellman UAE Market Share#Investment UAE Logistics Market#Leading Players in UAE Freight Forwarding Market#Leading players in UAE Logistics Market#Leading Sensors & Controls Providers UAE#Leading Warehouse Automation Service Providers#LinFox UAE Market Share#Major Identification & Data Capture Service Providers#Major Players in UAE Logistics Market#Mohebi Logistics UAE Market Share#NTDE UAE Market Revenue#Number of Cargo Trucks in UAE#Number of Trucks in UAE#Number of Warehousing Units in UAE#Opportunities UAE Freight Forwarding Market#Rhenus Logistics UAE Market Growth#Toll UAE Market Share#Top Material handling solution providers#Top Players in UAE Logistics Market#UAE Air Freight Market Revenue#UAE Automobile Freight Market#UAE CEP Market#UAE Freight Forwarding Market#UAE Industrial Warehouses Market Share


#smart camera#smart home#home automation services#smart home automation#home sweet home#family#freinds#safety#safe to share#secure


How I ditched streaming services and learned to love Linux: A step-by-step guide to building your very own personal media streaming server (V2.0: REVISED AND EXPANDED EDITION)

This is a revised, corrected and expanded version of my tutorial on setting up a personal media server that previously appeared on my old blog (donjuan-auxenfers). I expect that that post is still making the rounds (hopefully with my addendum on modifying group share permissions in Ubuntu to circumvent 0x8007003B "Unexpected Network Error" messages in Windows 10/11 when transferring files) but I have no way of checking. Anyway this new revised version of the tutorial corrects one or two small errors I discovered when rereading what I wrote, adds links to all products mentioned and is just more polished generally. I also expanded it a bit, pointing more adventurous users toward programs such as Sonarr/Radarr/Lidarr and Overseerr which can be used for automating user requests and media collection.

So then, what is this tutorial? This is a tutorial on how to build and set up your own personal media server using Ubuntu as an operating system and Plex (or Jellyfin) to not only manage your media, but to also stream that media to your devices both at home and abroad anywhere in the world where you have an internet connection. Its intent is to show you how building a personal media server and stuffing it full of films, TV, and music that you acquired through ~~indiscriminate and voracious media piracy~~ various legal methods will free you to completely ditch paid streaming services. No more will you have to pay for Disney+, Netflix, HBOMAX, Hulu, Amazon Prime, Peacock, CBS All Access, Paramount+, Crave or any other streaming service that is not named Criterion Channel. Instead whenever you want to watch your favourite films and television shows, you’ll have your own personal service that only features things that you want to see, with files that you have control over. And for music fans out there, both Jellyfin and Plex support music streaming, meaning you can even ditch music streaming services. Goodbye Spotify, YouTube Music, Tidal and Apple Music, welcome back unreasonably large MP3 (or FLAC) collections.

On the hardware front, I’m going to offer a few options catered towards different budgets and media library sizes. Getting a media server up and running using this guide will cost you anywhere from $450 CAD/$325 USD at the low end to $1500 CAD/$1100 USD at the high end (it could go higher). My server was priced closer to the higher figure, but I went and got a lot more storage than most people need. If that seems like a little much, consider for a moment, do you have a roommate, a close friend, or a family member who would be willing to chip in a few bucks towards your little project provided they get access? Well that's how I funded my server. It might also be worth thinking about the cost over time, i.e. how much you spend yearly on subscriptions vs. a one time cost of setting up a server. Additionally there's just the joy of being able to scream "fuck you" at all those show-cancelling, library-deleting, hedge fund vampire CEOs who run the studios through denying them your money. Drive a stake through David Zaslav's heart.
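To put the "cost over time" comparison into numbers, here is a minimal sketch of the break-even arithmetic. The function name, the $45/month subscription total and the $600 contribution are all made-up assumptions for illustration; only the $450 and $1500 build costs come from the range above.

```python
import math


def months_to_break_even(server_cost: float,
                         monthly_subscriptions: float,
                         contributions: float = 0.0) -> int:
    """Whole months of cancelled subscriptions needed to cover the up-front cost.

    contributions is whatever friends or family chip in towards the build.
    """
    if monthly_subscriptions <= 0:
        raise ValueError("monthly_subscriptions must be positive")
    net_cost = max(server_cost - contributions, 0.0)
    return math.ceil(net_cost / monthly_subscriptions)


# Hypothetical figures: low-end build, $45/month currently spent on streaming.
print(months_to_break_even(450, 45))

# Hypothetical figures: high-end build with $600 chipped in by a roommate.
print(months_to_break_even(1500, 45, contributions=600))
```

Swap in your own subscription total; the point is only that a one-time cost divided by a recurring one gives you a payback period you can reason about.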

On the software side I will walk you step-by-step through installing Ubuntu as your server's operating system, configuring your storage as a RAIDz array with ZFS, sharing your zpool to Windows with Samba, running a remote connection between your server and your Windows PC, and then a little about getting started with Plex/Jellyfin. Every terminal command you will need to input will be provided, and I even share a custom #bash script that will make used vs. available drive space on your server display correctly in Windows.

If you have a different preferred flavour of Linux (Arch, Manjaro, Red Hat, Fedora, Mint, OpenSUSE, CentOS, Slackware, etc.) and are aching to tell me off for being basic and using Ubuntu, this tutorial is not for you. The sort of person with a preferred Linux distro is the sort of person who can do this sort of thing in their sleep. Also I don't care. This tutorial is intended for the average home computer user. This is also why we’re not using a more exotic home server solution like running everything through Docker Containers and managing it through a dashboard like Homarr or Heimdall. While such solutions are fantastic and can be very easy to maintain once you have it all set up, wrapping your brain around Docker is a whole thing in and of itself. If you do follow this tutorial and have fun putting everything together, then I would encourage you to return in a year’s time, do your research and set up everything with Docker Containers.

Lastly, this is a tutorial aimed at Windows users. Although I was a daily user of OS X for many years (roughly 2008-2023) and I've dabbled quite a bit with various Linux distributions (mostly Ubuntu and Manjaro), my primary OS these days is Windows 11. Many things in this tutorial will still be applicable to Mac users, but others (e.g. setting up shares) you will have to look up for yourself. I doubt it would be difficult to do so.

Nothing in this tutorial will require feats of computing expertise. All you will need is a basic computer literacy (i.e. an understanding of what a filesystem and directory are, and a degree of comfort in the settings menu) and a willingness to learn a thing or two. While this guide may look overwhelming at first glance, it is only because I want to be as thorough as possible. I want you to understand exactly what it is you're doing, I don't want you to just blindly follow steps. If you half-way know what you’re doing, you will be much better prepared if you ever need to troubleshoot.

Honestly, once you have all the hardware ready it shouldn't take more than an afternoon or two to get everything up and running.

(This tutorial is just shy of seven thousand words long so the rest is under the cut.)

Step One: Choosing Your Hardware

Linux is a lightweight operating system; depending on the distribution there's close to no bloat. There are recent distributions available at this very moment that will run perfectly fine on a fourteen year old i3 with 4GB of RAM. Moreover, running Plex or Jellyfin isn’t resource intensive in 90% of use cases. All this is to say, we don’t require an expensive or powerful computer. This means that there are several options available: 1) use an old computer you already have sitting around but aren't using, 2) buy a used workstation from eBay, or, what I believe to be the best option, 3) order an N100 Mini-PC from AliExpress or Amazon.

Note: If you already have an old PC sitting around that you’ve decided to use, fantastic, move on to the next step.

When weighing your options, keep a few things in mind: the number of people you expect to be streaming simultaneously at any one time, the resolution and bitrate of your media library (4k video takes a lot more processing power than 1080p) and most importantly, how many of those clients are going to be transcoding at any one time. Transcoding is what happens when the playback device does not natively support direct playback of the source file. This can happen for a number of reasons, such as the playback device's native resolution being lower than the file's internal resolution, or because the source file was encoded in a video codec unsupported by the playback device.
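If it helps to see the transcoding rule written down, here is a rough sketch of the decision described above: a file gets transcoded when the client can't play its codec, or when the file's resolution is higher than the client can display. The class and function names are mine, not anything from Plex or Jellyfin, and real servers also look at bitrate, audio tracks, subtitles and container format, so treat this as a simplification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MediaFile:
    codec: str   # e.g. "h264" or "hevc"
    height: int  # vertical resolution in pixels, e.g. 1080 or 2160


@dataclass(frozen=True)
class Client:
    supported_codecs: frozenset
    max_height: int  # highest vertical resolution the device can display


def needs_transcode(media: MediaFile, client: Client) -> bool:
    """True if the server would have to convert the file for this client."""
    if media.codec not in client.supported_codecs:
        return True  # unsupported video codec
    return media.height > client.max_height  # file resolution exceeds the display


def count_transcodes(sessions) -> int:
    """Number of simultaneous (media, client) sessions that need transcoding."""
    return sum(needs_transcode(media, client) for media, client in sessions)


# Hypothetical devices and files, purely for illustration.
old_tv = Client(frozenset({"h264"}), max_height=1080)
new_tv = Client(frozenset({"h264", "hevc"}), max_height=2160)
movie_4k = MediaFile("hevc", 2160)
show_1080p = MediaFile("h264", 1080)

sessions = [(movie_4k, old_tv), (movie_4k, new_tv), (show_1080p, old_tv)]
print(count_transcodes(sessions))
```

The number you care about when sizing hardware is the count of simultaneous transcodes, not the count of simultaneous streams.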

Ideally we want any transcoding to be performed by hardware. This means we should be looking for a computer with an Intel processor with Quick Sync. Quick Sync is a dedicated core on the CPU die designed specifically for video encoding and decoding. This specialized hardware makes for highly efficient transcoding both in terms of processing overhead and power draw. Without these Quick Sync cores, transcoding must be brute forced through software. This takes up much more of a CPU’s processing power and requires much more energy. But not all Quick Sync cores are created equal and you need to keep this in mind if you've decided either to use an old computer or to shop for a used workstation on eBay.

Any Intel processor from second generation Core (Sandy Bridge circa 2011) onward has Quick Sync cores. It's not until 6th gen (Skylake), however, that the cores support the H.265 HEVC codec. Intel’s 10th gen (Comet Lake) processors introduced support for 10bit HEVC and HDR tone mapping. And the recent 12th gen (Alder Lake) processors brought with them hardware AV1 decoding. As an example, while an 8th gen (Coffee Lake) i5-8500 will be able to hardware transcode a H.265 encoded file, it will fall back to software transcoding if given a 10bit H.265 file. If you’ve decided to use that old PC or to look on eBay for an old Dell Optiplex keep this in mind.
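When you're scrolling through eBay listings, the generation is hiding in the model number. Here's a small sketch that pulls it out of a Core i3/i5/i7/i9 model string and checks it against two of the thresholds above (2nd gen for Quick Sync, 6th gen for H.265). The function names are my own, and the parser only understands classic model numbers like i5-8500 or i7-10700K; anything else (an N100, or newer naming schemes) comes back as unknown rather than guessed.

```python
import re
from typing import Optional

# Minimum Core generations, taken from the paragraph above.
MIN_GEN_QUICK_SYNC = 2
MIN_GEN_HEVC = 6

# Matches e.g. "i5-8500", "i7-10700K", "Intel Core i5-6500T".
_MODEL_PATTERN = re.compile(r"\bi[3579]-(\d{4,5})[A-Z]{0,2}$")


def core_generation(model: str) -> Optional[int]:
    """Return the Core generation encoded in a model number, or None if unknown."""
    match = _MODEL_PATTERN.search(model.strip())
    if match is None:
        return None
    digits = match.group(1)
    # The last three digits are the SKU; whatever precedes them is the generation.
    return int(digits[:-3])


def hardware_support(model: str) -> Optional[dict]:
    """Which of the two thresholds a CPU meets, or None if the model isn't recognised."""
    generation = core_generation(model)
    if generation is None:
        return None
    return {
        "quick_sync": generation >= MIN_GEN_QUICK_SYNC,
        "hevc": generation >= MIN_GEN_HEVC,
    }


for listing in ("i5-4590", "i5-8500", "Intel Core i7-10700K", "N100"):
    print(listing, hardware_support(listing))
```

This only tells you which generation you're looking at; always double-check the exact chip against Intel's own spec page before buying.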

Note 1: The price of old workstations varies wildly and fluctuates frequently. If you get lucky and go shopping shortly after a workplace has liquidated a large number of their workstations you can find deals for as low as $100 on a barebones system, but generally an i5-8500 workstation with 16gb RAM will cost you somewhere in the area of $260 CAD/$200 USD.

Note 2: The AMD equivalent to Quick Sync is called Video Core Next, and while it's fine, it's not as efficient and not as mature a technology. It was only introduced with the first generation Ryzen CPUs and it only got decent with their newest CPUs, and we want something cheap.

Alternatively you could forgo having to keep track of what generation of CPU is equipped with Quick Sync cores that feature support for which codecs, and just buy an N100 mini-PC. For around the same price as a used workstation, or less, you can pick up a mini-PC with an Intel N100 processor. The N100 is a four-core processor based on the 12th gen Alder Lake architecture and comes equipped with the latest revision of the Quick Sync cores. These little processors offer astounding hardware transcoding capabilities for their size and power draw. Otherwise they perform equivalent to an i5-6500, which isn't a terrible CPU. A friend of mine uses an N100 machine as a dedicated retro emulation gaming system and it does everything up to 6th generation consoles just fine. The N100 is also a remarkably efficient chip; it sips power. In fact, the difference between running one of these and an old workstation could work out to hundreds of dollars a year in energy bills depending on where you live.
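If you want to put a number on that for your own situation, here's a rough sketch. The function name is mine, and the wattage and electricity price in the example are placeholders I made up, so swap in a measured draw for your machine and the rate from your own bill.

```shell
# annual_cost WATTS PRICE_PER_KWH
# Prints the yearly cost of a machine drawing WATTS around the clock.
# Both arguments are values you supply; nothing here is measured.
annual_cost() {
    awk -v watts="$1" -v price="$2" \
        'BEGIN { printf "%.2f\n", watts * 24 * 365 / 1000 * price }'
}

# Hypothetical figures: a 10 W box at 0.15 per kWh.
annual_cost 10 0.15
```

Run it once for the mini-PC and once for the workstation you're considering, and the difference between the two results is your yearly saving.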

You can find these Mini-PCs all over Amazon or for a little cheaper on AliExpress. They range in price from $170 CAD/$125 USD for a no name N100 with 8GB RAM to $280 CAD/$200 USD for a Beelink S12 Pro with 16GB RAM. The brand doesn't really matter, they're all coming from the same three factories in Shenzhen, go for whichever one fits your budget or has features you want. 8GB RAM should be enough, Linux is lightweight and Plex only calls for 2GB RAM. 16GB RAM might result in a slightly snappier experience, especially with ZFS. A 256GB SSD is more than enough for what we need as a boot drive. Going for a bigger drive might allow you to get away with things like creating preview thumbnails for Plex, but it’s up to you and your budget.

The Mini-PC I wound up buying was a Firebat AK2 Plus with 8GB RAM and a 256GB SSD.

Note: If you decide to order a Mini-PC from AliExpress, check the type of power adapter it ships with. The mini-PC I bought came with an EU power adapter and I had to supply my own North American power supply. Thankfully this is a minor issue as barrel plug 30W/12V/2.5A power adapters are easy to find and can be had for $10.

Step Two: Choosing Your Storage

Storage is the most important part of our build. It is also the most expensive. Thankfully it’s also the most easily upgrade-able down the line.

For people with a smaller media collection (4TB to 8TB), a more limited budget, or who will only ever have two simultaneous streams running, I would say that the most economical course of action would be to buy a USB 3.0 8TB external HDD. Something like this one from Western Digital or this one from Seagate. One of these external drives will cost you in the area of $200 CAD/$140 USD. Down the line you could add a second external drive or replace it with a multi-drive RAIDz set up such as detailed below.

If a single external drive is the path for you, move on to step three.

For people with larger media libraries (12TB+), who prefer media in 4k, or who care about data redundancy, the answer is a RAID array featuring multiple HDDs in an enclosure.

Note: If you are using an old PC or used workstation as your server and have the room for at least three 3.5" drives, and as many open SATA ports on your motherboard, you won't need an enclosure, just install the drives into the case. If your old computer is a laptop or doesn’t have room for more internal drives, then I would suggest an enclosure.

The minimum number of drives needed to run a RAIDz array is three, and seeing as RAIDz is what we will be using, you should be looking for an enclosure with three to five bays. I think that four disks makes for a good compromise for a home server. Regardless of whether you go for a three, four, or five bay enclosure, do be aware that in a RAIDz array the space equivalent of one of the drives will be dedicated to parity, leaving a usable fraction of 1 − 1/n, where n is the number of drives. For example, in a four bay enclosure equipped with four 12TB drives configured as a RAIDz1 array, we would be left with 36TB of usable space out of 48TB raw. The reason why we might sacrifice storage space in such a manner will be explained in the next section.
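To check that arithmetic for other drive counts, here's a small sketch. The function name is my own invention, and it only does the back-of-the-envelope math (drives minus parity drives, times size), ignoring filesystem overhead and the TB versus TiB difference.

```shell
# raidz_usable_tb DRIVES SIZE_TB PARITY
# Rough usable capacity: (drives - parity drives) * size per drive.
raidz_usable_tb() {
    echo $(( ($1 - $3) * $2 ))
}

raidz_usable_tb 4 12 1   # four 12TB drives in RAIDz1, prints 36
raidz_usable_tb 5 12 2   # five 12TB drives with two parity drives, prints 36
```

Notice that a fifth drive spent entirely on extra parity leaves you with the same usable space as the four-drive array.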

A four bay enclosure will cost somewhere in the area of $200 CAD/$140 USD. You don't need anything fancy, we don't need anything with hardware RAID controls (RAIDz is done entirely in software) or even USB-C. An enclosure with USB 3.0 will perform perfectly fine. Don’t worry too much about USB speed bottlenecks. A mechanical HDD will be limited by the speed of its mechanism long before it will be limited by the speed of a USB connection. I've seen decent looking enclosures from TerraMaster, Yottamaster, Mediasonic and Sabrent.

When it comes to selecting the drives, as of this writing, the best value (dollar per gigabyte) are those in the range of 12TB to 20TB. I settled on 12TB drives myself. If 12TB to 20TB drives are out of your budget, go with what you can afford, or look into refurbished drives. I'm not sold on the idea of refurbished drives but many people swear by them.

When shopping for hard drives, search for drives designed specifically for NAS use. Drives designed for NAS use typically have better vibration damping and are designed to be active 24/7. They will also often make use of CMR (conventional magnetic recording) as opposed to SMR (shingled magnetic recording). This nets them a sizable read/write performance bump over typical desktop drives. Seagate Ironwolf and Toshiba NAS are both well regarded brands when it comes to NAS drives. I would avoid Western Digital Red drives at this time. WD Reds were a go to recommendation up until earlier this year when it was revealed that they feature firmware that will throw up false SMART warnings telling you to replace the drive at the three year mark, quite often when there is nothing at all wrong with that drive. It will likely even be good for another six, seven, or more years.

Step Three: Installing Linux

For this step you will need a USB thumbdrive of at least 6GB in capacity, an .ISO of Ubuntu, and a way to make that thumbdrive bootable media.

First download a copy of Ubuntu desktop. For best performance we could download the Server release, but for new Linux users I would recommend against it. The server release is strictly command line interface only, and having a GUI is very helpful for most people. Not many people are wholly comfortable doing everything through the command line, I'm certainly not one of them, and I grew up with DOS 6.0. 22.04.3 Jammy Jellyfish is the current Long Term Support release, this is the one to get.

Download the .ISO and then download and install balenaEtcher on your Windows PC. BalenaEtcher is an easy to use program for creating bootable media, you simply insert your thumbdrive, select the .ISO you just downloaded, and it will create a bootable installation media for you.

Once you've made a bootable media and you've got your Mini-PC (or your old PC/used workstation) in front of you, hook it directly into your router with an ethernet cable, and then plug in the HDD enclosure, a monitor, a mouse and a keyboard. Now turn that sucker on and hit whatever key gets you into the BIOS (typically ESC, DEL or F2). If you’re using a Mini-PC check to make sure that the PL1 and PL2 power limits are set correctly, my N100's PL1 limit was set at 10W, a full 20W under the chip's power limit. Also make sure that the RAM is running at the advertised speed. My Mini-PC’s RAM was set at 2333MHz out of the box when it should have been 3200MHz. Once you’ve done that, key over to the boot order and place the USB drive first in the boot order. Then save the BIOS settings and restart.

After you restart you’ll be greeted by Ubuntu's installation screen. Installing Ubuntu is really straight forward, select the "minimal" installation option, as we won't need anything on this computer except for a browser (Ubuntu comes preinstalled with Firefox) and Plex Media Server/Jellyfin Media Server. Also remember to delete and reformat that Windows partition! We don't need it.

Step Four: Installing ZFS and Setting Up the RAIDz Array

Note: If you opted for just a single external HDD skip this step and move onto setting up a Samba share.

Once Ubuntu is installed it's time to configure our storage by installing ZFS to build our RAIDz array. ZFS is a "next-gen" file system that is both massively flexible and massively complex. It's capable of snapshot backups and self healing error correction, and ZFS pools can be configured with drives operating in a supplemental manner alongside the storage vdev (e.g. fast cache, dedicated secondary intent log, hot swap spares etc.). It's also a file system very amenable to fine tuning. Block and sector size are adjustable to use case and you're afforded the option of different methods of inline compression. If you'd like a very detailed overview and explanation of its various features and tips on tuning a ZFS array check out these articles from Ars Technica. For now we're going to ignore all these features and keep it simple. We're going to pull our drives together into a single vdev running in RAIDz which will be the entirety of our zpool, no fancy cache drive or SLOG.

Open up the terminal and type the following commands:

sudo apt update

then

sudo apt install zfsutils-linux

This will install the ZFS utility. Verify that it's installed with the following command:

zfs --version

Now, it's time to check that the HDDs we have in the enclosure are healthy, running, and recognized. We also want to find out their device IDs and take note of them:

sudo fdisk -l

Note: You might be wondering why some of these commands require "sudo" in front of them while others don't. "Sudo" is short for "super user do". When and where "sudo" is used has to do with the way permissions are set up in Linux. Only the "root" user has the access level to perform certain tasks in Linux. As a matter of security and safety regular user accounts are kept separate from the "root" user. It's not advised (or even possible) to boot into Linux as "root" with most modern distributions. Instead by using "sudo" our regular user account is temporarily given the power to do otherwise forbidden things. Don't worry about it too much at this stage, but if you want to know more check out this introduction.

If everything is working you should get a list of the various drives detected along with their device IDs which will look like this: /dev/sdc. You can also check the device IDs of the drives by opening the disk utility app. Jot these IDs down as we'll need them for our next step, creating our RAIDz array.
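If the fdisk output is too noisy to pick the IDs out of, a small filter can boil it down to just the whole disks. This is only a sketch: the helper name is made up, and the sample input below stands in for what lsblk would print on your machine.

```shell
# list_disks: reads "NAME TYPE" pairs on stdin and prints /dev paths
# for whole disks only, skipping optical drives and loop devices.
list_disks() {
    awk '$2 == "disk" { print "/dev/" $1 }'
}

# On the server you would feed it real data:
#   lsblk -dn -o NAME,TYPE | list_disks
# Here a made-up sample stands in for that output.
printf 'sda disk\nsr0 rom\nsdb disk\nloop0 loop\n' | list_disks
```

Keep in mind the list will include your boot SSD too, so cross-check sizes in the disk utility before feeding anything to zpool.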

RAIDz is similar to RAID-5. Instead of only striping your data over multiple disks, exchanging redundancy for speed and available space (RAID-0), or mirroring your data by writing two copies of every piece (RAID-1), it writes parity blocks across the disks in addition to striping. This provides a balance of speed, redundancy and available space. If a single drive fails, the parity blocks on the working drives can be used to reconstruct the entire array as soon as a replacement drive is added.

Additionally, RAIDz improves over some of the common RAID-5 flaws. It's more resilient and capable of self healing, as it is capable of automatically checking for errors against a checksum. It's more forgiving in this way, and it's likely that you'll be able to detect when a drive is dying well before it fails. A RAIDz array can survive the loss of any one drive.

Note: While RAIDz is indeed resilient, if a second drive fails during the rebuild, you're fucked. Always keep backups of things you can't afford to lose. This tutorial, however, is not about proper data safety.

To create the pool, use the following command:

sudo zpool create "zpoolnamehere" raidz "device IDs of drives we're putting in the pool"

For example, let's creatively name our zpool "mypool". This pool will consist of four drives which have the device IDs: sdb, sdc, sdd, and sde. The resulting command will look like this:

sudo zpool create mypool raidz /dev/sdb /dev/sdc /dev/sdd /dev/sde

If, as an example, you bought five HDDs and decided you wanted more redundancy by dedicating two drives to this purpose, we would change "raidz" to "raidz2" and the command would look something like the following:

sudo zpool create mypool raidz2 /dev/sdb /dev/sdc /dev/sdd /dev/sde /dev/sdf

An array configured like this is known as RAIDz2 and is able to survive two disk failures.
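Since zpool create acts on whole drives, it can be worth printing the command and reading it over before running it for real. Here's a minimal dry-run sketch. The function name is hypothetical, it only echoes the command rather than executing it, and the three-drive check simply follows the minimum suggested earlier in this guide.

```shell
# zpool_create_cmd POOL LEVEL DEVICE...
# Prints (does not run) the zpool create command for the given devices.
zpool_create_cmd() {
    pool=$1
    level=$2
    shift 2
    # Follow the guide's advice of at least three drives per array.
    if [ "$#" -lt 3 ]; then
        echo "need at least three drives" >&2
        return 1
    fi
    cmd="sudo zpool create $pool $level"
    for dev in "$@"; do
        cmd="$cmd /dev/$dev"
    done
    echo "$cmd"
}

zpool_create_cmd mypool raidz sdb sdc sdd sde
```

Once the printed line matches the drives you jotted down, copy it into the terminal and run it.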

Once the zpool has been created, we can check its status with the command:

zpool status

Or more concisely with:

zpool list

The nice thing about ZFS as a file system is that a pool is ready to go immediately after creation. If we were to set up a traditional RAID-5 array using mdadm, we'd have to sit through a potentially hours long process of reformatting and partitioning the drives. Instead we're ready to go right out the gates.

The zpool should be automatically mounted to the filesystem after creation, check on that with the following:

df -hT | grep zfs

Note: If your computer ever loses power suddenly, say in event of a power outage, you may have to re-import your pool. In most cases, ZFS will automatically import and mount your pool, but if it doesn’t and you can't see your array, simply open the terminal and type sudo zpool import -a.

By default a zpool is mounted at /"zpoolname". The pool should be under our ownership but let's make sure with the following command:

sudo chown -R "yourlinuxusername" /"zpoolname"

Note: Changing file and folder ownership with "chown" and file and folder permissions with "chmod" are essential commands for much of the admin work in Linux, but we won't be dealing with them extensively in this guide. If you'd like a deeper tutorial and explanation you can check out these two guides: chown and chmod.

You can access the zpool file system through the GUI by opening the file manager (the Ubuntu default file manager is called Nautilus) and clicking on "Other Locations" on the sidebar, then entering the Ubuntu file system and looking for a folder with your pool's name. Bookmark the folder on the sidebar for easy access.

Your storage pool is now ready to go. Assuming that we already have some files on our Windows PC we want to copy over, we're going to need to install and configure Samba to make the pool accessible in Windows.

Step Five: Setting Up Samba/Sharing

Samba is what's going to let us share the zpool with Windows and allow us to write to it from our Windows machine. First let's install Samba with the following commands:

sudo apt-get update

then

sudo apt-get install samba

Next create a password for Samba.

sudo smbpasswd -a "yourlinuxusername"

It will then prompt you to create a password. Just reuse your Ubuntu user password for simplicity's sake.

Note: if you're using just a single external drive replace the zpool location in the following commands with wherever it is your external drive is mounted, for more information see this guide on mounting an external drive in Ubuntu.

After you've created a password we're going to create a shareable folder in our pool with this command:

mkdir /"zpoolname"/"foldername"

Now we're going to open the smb.conf file and make that folder shareable. Enter the following command.

sudo nano /etc/samba/smb.conf

This will open the .conf file in nano, the terminal text editor program. Now at the end of smb.conf add the following entry:

["foldername"]
path = /"zpoolname"/"foldername"
available = yes
valid users = "yourlinuxusername"
read only = no
writable = yes
browseable = yes
guest ok = no

Ensure that there are no line breaks between the lines and that there's a space on both sides of the equals sign. Our next step is to allow Samba traffic through the firewall:

sudo ufw allow samba

Finally restart the Samba service:

sudo systemctl restart smbd
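If you'd rather not hand-type the share entry in nano, the same block can be generated from the shell. Treat this as a sketch: the function name and the example names ("media", "mypool", "alice") are placeholders of mine, not anything Samba requires.

```shell
# share_stanza FOLDER POOL USER
# Prints a share entry with no blank lines between the settings.
share_stanza() {
    cat <<EOF
[$1]
path = /$2/$1
available = yes
valid users = $3
read only = no
writable = yes
browseable = yes
guest ok = no
EOF
}

# Hypothetical names: share "media" on pool "mypool" for user "alice".
share_stanza media mypool alice
```

You could append the output with something like share_stanza media mypool alice | sudo tee -a /etc/samba/smb.conf, and Samba's own testparm tool will tell you if the resulting file has syntax problems.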

At this point we'll be able to access the pool, browse its contents, and read and write to it from Windows. But there's one more thing left to do. Windows doesn't natively support the ZFS file system and will read the used/available/total space in the pool incorrectly. Windows will read available space as total drive space, and all used space as null. This leads to Windows only displaying a dwindling amount of "available" space as the drives are filled. We can fix this! Functionally this doesn't actually matter, we can still write and read to and from the disk, it just makes it difficult to tell at a glance the proportion of used/available space. So this is an optional step, but one I recommend (this step is also unnecessary if you're just using a single external drive). What we're going to do is write a little shell script in bash. Open nano in the terminal with the command:

nano

Now insert the following code:

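#!/bin/bash
# Report "total available" in 1K blocks for the directory Samba asks about.
CUR_PATH=$(pwd)
# zfs prints an error containing "not a ZFS" when the path is not on ZFS.
ZFS_CHECK_OUTPUT=$(zfs get type "$CUR_PATH" 2>&1 > /dev/null)
if [[ $ZFS_CHECK_OUTPUT == *not\ a\ ZFS* ]]
then
    IS_ZFS=false
else
    IS_ZFS=true
fi
if [[ $IS_ZFS = false ]]
then
    # Not ZFS: fall back to df's total and available columns.
    df "$CUR_PATH" | tail -1 | awk '{print $2" "$4}'
else
    # ZFS: sizes come back in bytes, so convert to 1K blocks.
    USED=$(( $(zfs get -o value -Hp used "$CUR_PATH") / 1024 ))
    AVAIL=$(( $(zfs get -o value -Hp available "$CUR_PATH") / 1024 ))
    TOTAL=$(( USED + AVAIL ))
    echo $TOTAL $AVAIL
fi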

Save the script as "dfree.sh" to /home/"yourlinuxusername" then change the permissions of the file to make it executable with this command:

sudo chmod 774 dfree.sh

Now open smb.conf with sudo again:

sudo nano /etc/samba/smb.conf

Now add this entry to the top of the configuration file to direct Samba to use the results of our script when Windows asks for a reading on the pool's used/available/total drive space:

[global]
dfree command = /home/"yourlinuxusername"/dfree.sh

Save the changes to smb.conf and then restart Samba again with the terminal:

sudo systemctl restart smbd
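For a feel of what Samba gets back from the dfree script, here's the same arithmetic on its own. It's a sketch with made-up byte counts and a function name of my own. The two numbers printed are the total and available space in 1K blocks, which is the unit Samba assumes by default for a dfree command.

```shell
# dfree_line USED_BYTES AVAIL_BYTES
# Mirrors the arithmetic in dfree.sh: convert both byte counts to
# 1K blocks, then print "total available".
dfree_line() {
    used=$(( $1 / 1024 ))
    avail=$(( $2 / 1024 ))
    echo "$(( used + avail )) $avail"
}

# Made-up figures: 1 GiB used and 3 GiB free.
dfree_line 1073741824 3221225472
```

If Windows still shows odd numbers after the restart, running dfree.sh by hand from inside the pool folder and comparing against zpool list is a quick way to see which side is off.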

Now there’s one more thing we need to do to fully set up the Samba share, and that’s to modify a hidden group permission. In the terminal window type the following command:

sudo usermod -a -G sambashare "yourlinuxusername"

Then restart samba again:

sudo systemctl restart smbd

If we don’t do this last step, everything will appear to work fine, and you will even be able to see and map the drive from Windows and even begin transferring files, but you'd soon run into a lot of frustration, as every ten minutes or so a file would fail to transfer and you would get a window announcing “0x8007003B Unexpected Network Error”. This window would require your manual input to continue the transfer with the file next in the queue. And at the end it would reattempt to transfer whichever files failed the first time around. 99% of the time they’ll go through on that second try, but this is still all a major pain in the ass, especially if you’ve got a lot of data to transfer or you want to step away from the computer for a while.

It turns out samba can act a little weirdly with the higher read/write speeds of RAIDz arrays and transfers from Windows, and will intermittently crash and restart itself if this group option isn’t changed. Inputting the above command will prevent you from ever seeing that window.

The last thing we're going to do before switching over to our Windows PC is grab the IP address of our Linux machine. Enter the following command:

hostname -I

This will spit out this computer's IP address on the local network (it will look something like 192.168.0.x), write it down. It might be a good idea once you're done here to go into your router settings and reserve that IP for your Linux system in the DHCP settings. Check the manual for your specific model router on how to access its settings, typically it can be accessed by opening a browser and typing http://192.168.0.1 in the address bar, but your router may be different.

Okay we’re done with our Linux computer for now. Get on over to your Windows PC, open File Explorer, right click on Network and click "Map network drive". Select Z: as the drive letter (you don't want to map the network drive to a letter you could conceivably be using for other purposes) and enter the IP of your Linux machine and the name of the share (the name in square brackets in smb.conf) like so: \\"LINUXCOMPUTERLOCALIPADDRESSGOESHERE"\"foldernamegoeshere"\. Windows will then ask you for your username and password, enter the ones you set earlier in Samba and you're good. If you've done everything right it should look something like this:

You can now start moving media over from Windows to the share folder. It's a good idea to have a hard line running to all machines. Moving files over Wi-Fi is going to be torturously slow, the only thing that’s going to make the transfer time tolerable (hours instead of days) is a solid wired connection between both machines and your router.

Step Six: Setting Up Remote Desktop Access to Your Server

After the server is up and going, you’ll want to be able to access it remotely from Windows. Barring serious maintenance/updates, this is how you'll access it most of the time. On your Linux system open the terminal and enter:

sudo apt install xrdp

Then:

sudo systemctl enable xrdp

Once it's finished installing, open “Settings” on the sidebar and turn off "automatic login" in the User category. Then log out of your account. Attempting to remotely connect to your Linux computer while you’re logged in will result in a black screen!

Now get back on your Windows PC, open search and look for "RDP". A program called "Remote Desktop Connection" should pop up, open this program as an administrator by right-clicking and selecting “run as an administrator”. You’ll be greeted with a window. In the field marked “Computer” type in the IP address of your Linux computer. Press connect and you'll be greeted with a new window and prompt asking for your username and password. Enter your Ubuntu username and password here.

If everything went right, you’ll be logged into your Linux computer. If the performance is sluggish, adjust the display options. Lowering the resolution and colour depth does a lot to make the interface feel snappier.

Remote access is how we're going to be using our Linux system from now on, barring edge cases like needing to get into the BIOS or upgrading to a new version of Ubuntu. Everything else, from performing maintenance like a monthly zpool scrub to checking zpool status and updating software, can be done remotely.

This is how my server lives its life now, happily humming and chirping away on the floor next to the couch in a corner of the living room.

Step Seven: Plex Media Server/Jellyfin

Okay we’ve got all the ground work finished and our server is almost up and running. We’ve got Ubuntu up and running, our storage array is primed, we’ve set up remote connections and sharing, and maybe we’ve moved over some of our favourite movies and TV shows.

Now we need to decide on the media server software to use which will stream our media to us and organize our library. For most people I’d recommend Plex. It just works 99% of the time. That said, Jellyfin has a lot to recommend it by too, even if it is rougher around the edges. Some people run both simultaneously, it’s not that big of an extra strain. I do recommend doing a little bit of your own research into the features each platform offers, but as a quick run down, consider some of the following points:

Plex is closed source and is funded through PlexPass purchases while Jellyfin is open source and entirely user driven. This means a number of things: for one, Plex requires you to purchase a “PlexPass” (purchased as a one time lifetime fee of $159.99 CAD/$120 USD or paid for on a monthly or yearly subscription basis) in order to access certain features, like hardware transcoding (and we want hardware transcoding) or automated intro/credits detection and skipping, while Jellyfin offers some of these features for free through plugins. Plex supports a lot more devices than Jellyfin and updates more frequently. That said, Jellyfin's Android and iOS apps are completely free, while the Plex Android and iOS apps must be activated for a one time cost of $6 CAD/$5 USD. But that $6 fee gets you a mobile app that is much more functional and features a unified UI across platforms, the Plex mobile apps are simply a more polished experience. The Jellyfin apps are a bit of a mess and the iOS and Android versions are very different from each other.

Jellyfin’s actual media player is more fully featured than Plex's, but on the other hand Jellyfin's UI, library customization and automatic media tagging really pale in comparison to Plex. Streaming your music library is free through both Jellyfin and Plex, but Plex offers the PlexAmp app for dedicated music streaming which boasts a number of fantastic features, unfortunately some of those fantastic features require a PlexPass. If your internet is down, Jellyfin can still do local streaming, while Plex can fail to play files unless you've got it set up a certain way. Jellyfin has a slew of neat niche features like support for Comic Book libraries with the .cbz/.cbt file types, but then Plex offers some free ad-supported TV and films, they even have a free channel that plays nothing but Classic Doctor Who.

Ultimately it's up to you, I settled on Plex because although some features are pay-walled, it just works. It's more reliable and easier to use, and a one-time fee is much easier to swallow than a subscription. I had a pretty easy time getting my boomer parents and tech illiterate brother introduced to and using Plex and I don't know if I would've had as easy a time doing that with Jellyfin. I do also need to mention that Jellyfin does take a little extra bit of tinkering to get going in Ubuntu, you’ll have to set up process permissions, so if you're more tolerant to tinkering, Jellyfin might be up your alley and I’ll trust that you can follow their installation and configuration guide. For everyone else, I recommend Plex.

So pick your poison: Plex or Jellyfin.

Note: The easiest way to download and install either of these packages in Ubuntu is through Snap Store.

After you've installed one (or both), opening either app will launch a browser window into the browser version of the app allowing you to set all the options server side.

The process of creating media libraries is essentially the same in both Plex and Jellyfin. You create separate libraries for Television, Movies, and Music and add the folders which contain the respective types of media to their respective libraries. The only difficult or time consuming aspect is ensuring that your files and folders follow the appropriate naming conventions:

Plex naming guide for Movies

Plex naming guide for Television

Jellyfin follows the same naming rules but I find their media scanner to be a lot less accurate and forgiving than Plex. Once you've selected the folders to be scanned the service will scan your files, tagging everything and adding metadata. Although I do find Plex more accurate, it can still erroneously tag some things and you might have to manually clean up some tags in a large library. (When I initially created my library it tagged the 1963-1989 Doctor Who as some Korean soap opera and I needed to manually select the correct match after which everything was tagged normally.) It can also be a bit testy with anime (especially OVAs), so be sure to check TVDB to ensure that you have your files and folders structured and named correctly. If something is not showing up at all, double check the name.
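As a rough illustration of the layout those naming guides describe, here's a sketch that prints the kind of path the scanners expect. The helper names are mine and the patterns are my reading of the Plex guides, so treat the linked guides as the authority if they disagree.

```shell
# movie_path TITLE YEAR EXT  ->  Title (Year)/Title (Year).ext
movie_path() {
    printf '%s (%s)/%s (%s).%s\n' "$1" "$2" "$1" "$2" "$3"
}

# episode_path SHOW SEASON EPISODE EXT
#   ->  Show/Season 01/Show - s01e02.ext
# Pass plain numbers (2, not 02) so printf does not read them as octal.
episode_path() {
    printf '%s/Season %02d/%s - s%02de%02d.%s\n' "$1" "$2" "$1" "$2" "$3" "$4"
}

movie_path "Example Movie" 2010 mkv
episode_path "Doctor Who (1963)" 1 4 mkv
```

Putting the year in the show's folder name, as in the second call, is one way to help the scanner tell a classic series from a remake of the same name.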

Once that's done, organizing and customizing your library is easy. You can set up collections, grouping items together to fit a theme or collect together all the entries in a franchise. You can make playlists, and add custom artwork to entries. It's fun setting up collections with posters to match, there are even several websites dedicated to help you do this like PosterDB. As an example, below are two collections in my library, one collecting all the entries in a franchise, the other follows a theme.

My Star Trek collection, featuring all eleven television series, and thirteen films.

My Best of the Worst collection, featuring sixty-nine films previously showcased on RedLetterMedia’s Best of the Worst. They’re all absolutely terrible and I love them.

As for settings, ensure you've got Remote Access going (it should work automatically) and be sure to set your upload speed after running a speed test. In the library settings set the database cache to 2000MB to ensure a snappier and more responsive browsing experience, and then check that playback quality is set to original/maximum. If you’re severely bandwidth limited on your upload and have remote users, you might want to limit the remote stream bitrate to something more reasonable. Just as a note of comparison, Netflix’s 1080p bitrate is approximately 5Mbps, although almost anyone watching through a Chromium based browser is streaming at 720p and 3Mbps. Other than that you should be good to go. For actually playing your files, there's a Plex app for just about every platform imaginable. I mostly watch television and films on my laptop using the Windows Plex app, but I also use the Android app which can broadcast to the Chromecast connected to the TV in the office and the Android TV app for our smart TV. Both are fully functional and easy to navigate, and I can also attest to the OS X version being equally functional.
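To pick a sensible remote bitrate cap, it helps to work out how many streams your upload can carry at once. A quick sketch, with a function name and example figures that are purely illustrative, and no allowance for protocol overhead or anything else using your connection:

```shell
# max_remote_streams UPLOAD_MBPS PER_STREAM_MBPS
# Whole number of simultaneous streams that fit in the upload.
max_remote_streams() {
    echo $(( $1 / $2 ))
}

# Hypothetical 20Mbps upload with streams capped at 5Mbps each.
max_remote_streams 20 5
```

If the answer is lower than the number of remote users you expect at once, lower the per-stream cap until it isn't.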

Part Eight: Finding Media

Now, this is not really a piracy tutorial, there are plenty of those out there. But if you’re unaware, BitTorrent is free and pretty easy to use, just pick a client (qBittorrent is the best) and go find some public trackers to peruse. Just know now that all the best trackers are private and invite only, and that they can be exceptionally difficult to get into. I’m already on a few, and even then, some of the best ones are wholly out of my reach.

If you decide to take the left hand path and turn to Usenet you’ll have to pay. First you’ll need to sign up with a provider like Newshosting or EasyNews for access to Usenet itself, and then to actually find anything you’re going to need to sign up with an indexer like NZBGeek or NZBFinder. There are dozens of indexers, and many people cross post between them, but for more obscure media it’s worth checking multiple. You’ll also need a binary downloader like SABnzbd. That caveat aside, Usenet is faster, bigger, older, less traceable than BitTorrent, and altogether slicker. I honestly prefer it, and I'm kicking myself for taking this long to start using it because I was scared off by the price. I’ve found so many things on Usenet that I had sought in vain elsewhere for years, like a 2010 Italian film about a massacre perpetrated by the SS that played the festival circuit but never received a home media release; some absolute hero uploaded a rip of a festival screener DVD to Usenet. Anyway, figure out the rest of this shit on your own and remember to use protection, get yourself behind a VPN, use a SOCKS5 proxy with your BitTorrent client, etc.

On the legal side of things, if you’re around my age, you (or your family) probably have a big pile of DVDs and Blu-Rays sitting around unwatched and half forgotten. Why not do a bit of amateur media preservation, rip them and upload them to your server for easier access? (Your tools for this are going to be Handbrake to do the ripping and AnyDVD to break any encryption.) I went to the trouble of ripping all my SCTV DVDs (five box sets worth) because none of it is on streaming nor could it be found on any pirate source I tried. I’m glad I did, forty years on it’s still one of the funniest shows to ever be on TV.

Part Nine/Epilogue: Sonarr/Radarr/Lidarr and Overseerr

There are a lot of ways to automate your server for better functionality or to add features you and other users might find useful. Sonarr, Radarr, and Lidarr are part of a suite of “Servarr” services (there’s also Readarr for books and Whisparr for adult content) that allow you to automate the collection of new episodes of TV shows (Sonarr), new movie releases (Radarr) and music releases (Lidarr). They hook into your BitTorrent client or Usenet binary newsgroup downloader and crawl your preferred torrent trackers and Usenet indexers, alerting you to new releases and automatically grabbing them. You can also use these services to manually search for new media, and even replace/upgrade your existing media with better quality uploads. They’re a little tricky to set up on a bare metal Ubuntu install (ideally you should be running them in Docker containers), and I won’t be providing a step by step on installing and running them; I’m simply making you aware of their existence.

The other bit of kit I want to make you aware of is Overseerr, a program that scans your Plex media library and serves recommendations based on what you like. It also allows you and your users to request specific media. It can even be integrated with Sonarr/Radarr/Lidarr so that fulfilling those requests is fully automated.

And you're done. It really wasn't all that hard. Enjoy your media. Enjoy the control you have over that media. And be safe in the knowledge that no hedge fund CEO motherfucker who hates the movies but who is somehow in control of a major studio will be able to disappear anything in your library as a tax write-off.


Grand Unified Theory of Ethubs: Horse Edition

I started this almost a year ago, and at this point it's weirder than any Etho & Bdubs fiction could be, but it's all true, or at least every point has a valid citation. This is an attempt to explain why I find their dynamic, as represented in on-video interactions and their own metatextual commentary (in-video asides, deliberate 'OOC' fourth wall breaks, casual livestream/other media chat/commentary, etc.), compelling.

Horsing Around, or, Experience over Efficiency

THESIS: Although their singleplayer series and general playstyles appear totally opposed, their approaches are actually two different manifestations of the same underlying principles. These behaviors are reinforced and rewarded by their long-running singleplayer series. This combination of aligned gameplay idiosyncrasies and a shared but frequently separate play history is the root cause of their opaque and silently mutually-agreed interpersonal dynamics.

The horse obsession is the most obvious modern symptom of these shared gameplay values, and it's useful for illustrating both Etho and Bdubs' underlying principles. Their horsegirl behavior is charming at face value alone, dating back to their Mindcrack days and begetting long-running jokes and feuds. Their history together predates horses themselves, but even in pre-elytra Mindcrack, their fixation on horse transit marked them as kindred eccentrics on a server with robust nether minecart travel (which, in two cases, they themselves built). All aspects of their modern bespoke mutual eccentricity are evident, including their shared commitment to experience over efficiency - prioritizing pleasant, manual engagement with game mechanics over pure automation and optimization.

Etho is perhaps most famous for his redstone - and consequently automation - but it has never been for automation's sake. He has always vocally prioritized active gameplay over efficient AFK design, especially in singleplayer. For a long time, he played with no armor to make the game harder - save Feather Falling diamond boots, so he wouldn't die to fall damage while constantly ender pearling everywhere. It's part preference, part pragmatism: he is clear about his feelings on what fair and fun gameplay is, but he chooses constraints (no AFKing, strict survival) because it suits the combination of his audience and the series in question, not universal moral standards. In the hyper-industrial economy of s7-onwards Hermitcraft, the audience-to-series calculus is different; his preference leads him to collect stacks of almost every item so he can functionally emulate the freedom of creative mode in survival, even if it means tolerating AFK design to achieve that. Leaning into unconventional locomotion makes for more interesting, if useless, inventions. To survive, pleasure of active play comes first, and the rest follows.

In apparent contrast, Bdubs has no desire to build complicated contraptions for invention's sake, typically using other people's farm designs and inventing his own in service of enlivening builds or environments. He even pokes fun at his own in-game Luddite tendencies through Redstone with Bdubs' failures and his vocal hatred of the post-1.16 nether. In singleplayer, he's historically switched to creative sessions, particularly for public livestreams, to balance his own scales of production goals to audience judgement. As his skill as a builder has grown and he designs not just buildings but areas to be viewed and interacted with from certain angles, the reward for choosing to go by foot or horse has grown with it. But as with Etho, these choices are in service of enjoyable, immersive gameplay before any other criterion.

By the time Etho and Bdubs encounter each other directly, they have each been doing singleplayer survival long enough to develop opinions and preferences that enable them to continue these worlds through the next decade. They both independently decide from the get-go that it's the only way to maintain the grind of creating single- and multiplayer videos long term.

Horsing around, then, illuminates how their shared real-world material constraints and similar approaches to their different in-game disciplines allow them to become kindred eccentrics. Their friendship predates in-game horses, but it endures a decade later, long after elytra makes horses obsolete to most of their peers. The horse obsession endures as a rarely shared outlet for a need to prolong play and therefore to maximise the moment-to-moment enjoyment of playing.

Courses of Horses, or, Community and Conflict

While horsing around is illustrative of their singleplayer influences, it illuminates their multiplayer priorities as well. In a long-term singleplayer world, drawing out pleasure from rote chores and travel is essential. With others, time and energy spent to create infrastructure for others to share becomes its own self-perpetuating reward.

From their first Mindcrack season together to present-day Hermitcraft season 10, both are prone to building nether hubs out of a sense of obligation and desire for easy travel, elaborate combat arenas that unite technical features with thoughtful area design, and horse timers and racecourses. All three major trends translate personal preferences into major public projects that invite others into their playstyle wheelhouses. The combat arenas and horse courses, with their accursed season-ending curse, are also specifically competitive. For players like Etho and Bdubs, for whom playing is in service of video creation, multiplayer is made enjoyable - and so, sustainable - by drawing out what distinguishes it from singleplayer.

Only through the presence of another person can you access stories and playstyles that depend on antagonists. This doesn't stop either of them from inventing them when alone, such as Etho's General Spaz, or Bdubs' Wells Glazes and McGee (and even arguably Red in Hermitcraft s6), but they do both have preferred tropes for conflict creation.

They trend toward different antagonist tropes, with Etho revelling in faux-innocent trolling and generally keeping authority figures from getting too serious while Bdubs moves from blatant heel to archetypal Fool over the years. Etho is generally more likely to engage in one-off, individual pranks, spend time on playing or building minigames, and join server-wide events by accident or insofar as he can be a casual anti-authority troll. Bdubs is willing to take charge instigating large storylines, though he becomes a pathological henchman when his comfort with the Fool role exceeds his need to instigate. In whole-server conflicts, this usually puts them where they're most comfortable - on opposite sides, giving each other a hard time.

Conflict is a gift, one not exclusively given to each other but enabled together and apart for their other friends and co-players, but frequently manifesting through their shared history, priorities, and preferences as a strange language only the two of them seem to be able to speak. Early on, Etho rudely informs Bdubs what Bdubs' armor preferences are, and by the Trial, Bdubs is correctly predicting how he can make Etho jealous of his armor gains. Over a decade later, Etho can simply include a potion farm in a normal-seeming part of a regular Hermitcraft video and Bdubs will correctly identify it as a taunt only intelligible to him, retaliation for forgetting they'd meant to partner on a shop together.

They need to embrace mechanics like horses to survive; they provide extra incentive to the entire server to share that productive enjoyment with them in their infrastructure and horse courses; and by extension, their massive continuity of in-jokes and lighthearted complaints about each other alienates them as a unit from others in a way that itself becomes a productive vehicle of community conflict or interaction. While the full history and ramifications of this fond, conflict-based idiolect are an entirely separate essay, this strange relationship is them at the extremes of who they've each decided to be, and is in continuity with the servers and series they've shared over so many years.

It is not divisible into c! and cc! interactions because the real-world commonalities drive their work friendship and their story-producing mutual obsessions. They know each other so well, so automatically, so much as foils of great mutual but often indirect respect that direct, total certainty expressed by one side is unsettling, creating doubt and distrust. But that dynamic, loving, and conflict-based idiolect produced by two people able to commit to the extremes of who they've each decided to be, together and unalone, requires its own set of essays to unpack.

"If I Had a Nickel...", Episode 1

For every time they started a big community-focused collaboration and the season ended before they could finish it…

Mindcrack season 4 fire and ice arena

Mindcrack FTB Call of Duty arena

Mindcrack season 5 horse course (ft. Doc, Genny; Doc makes a joke about hoping this doesn't happen on this project, given their past track record)

Hermitcraft season 8 horse course (recreated in season 9 for the charity event)

For every time they resurrected a decade-old joke that they both instantly recognize and everybody else is a little confused about at first because seriously it's been like 10 years…

"Pink" hoodie, Mindcrack s4/5 (1, ??) to Hermitcraft s7

Obsidian coffin prank, Mindcrack s4 to Hermitcraft s7 and s9 and 10

Scissor lift invention, Mindcrack s3 to Hermitcraft s10

Sickness, Mindcrack s4/5 to Last Life

Whether or not Bdubs knows what plethora means, Mindcrack FTB to Secret Life

HONORABLE RECENT MENTION: life series crastle anatomy

Asking is this horse course realistic racing or Mario Kart and it's Mario Kart

Mindcrack s5 ep177

hcs8 ep4

Additional Exemplary Clips & Sources

Ballad of Beyoncé and Taylor Swift

Etho heckling the horse-hunting stream via YT chat

You s- you s- YOU JERK (horse moment, appears in WL), ep1

You are the master and the only way to get to your heart is through horses, ep6

Spider spawner hangout, Bdubs Mindcrack eps 21-23

we're kind of at that level where asking isnt even necessary, right? (morry's)

they don't talk directly compilation

Red (pink) trainee uniform with Complex Inside Joke book explanation

#game shows DO touch our lives#ethubs#whatever im gonna be in indiana all fuckin day tomorrow so im posting it now. bye
