#streaming analytics open source


.ೃ࿐ELECTION DAY

summary — in which austin accidentally lets it slip that hasan’s faceless (yet public) girlfriend is the woman they’re currently watching analyse the maps on CNN.

pairings — hasan piker x politicalcorrespondent!girlfriend!reader

pronouns — she/her

word count — 1893

note — i personally would have “6’4 jacked boyfriend” as his contact name so that whenever weird men try to hit on me they see that but thats just me (and this reader insert ofc) (also this is nothing special just me rambling tbh — what’s to say this political!reader doesn’t become a mini series)

THE DAY WAS HERE. election day. not only was it the day your boyfriend had spent hours upon hours over the past few weeks preparing for, but it was yours, too. you were a political journalist and correspondent, working the map for CNN in the weeks leading up to the election.

it was a big day for you. four years ago you were streaming your own map coverage to fifteen thousand people on twitch, drawing on your sources across multiple states to report on what was going on nationwide. being asked a couple of months ago to run the maps in front of millions was certainly a step up, but it also gave you the control to speak objectively, without bias, unlike most of the other news anchors and correspondents, who were pushing right-wing sentiment over any other coverage.

you hadn’t seen hasan in a few weeks now unless you counted facetimes and tuning into his streams. you’d get texts while he was streaming and the occasional kaya video ( because apparently she’d been whining ever since you left ). it wasn’t the same, but you were both incredibly career-driven people, so being hours apart by plane wasn’t as daunting as it probably should’ve been.

“you’re gonna be late to stream,” you laughed softly, fiddling with the cap of the bottle of water someone had gotten you. endless tabs were open on your laptop in front of you, following every state, because there were still hours to go before the polls closed, so you were only needed in short segments for now to go over the 2020 and 2016 county votes in particular states.

“you’re right,” hasan’s voice was slightly staticky through the phone. “i might have to focus on kornacki or fox news so that i don’t spend too long staring at you.”

“aw,” you let go of your phone, holding it between your ear and shoulder to screw the cap back on the bottle. one of the directors caught your attention across the room, holding up his hand to say that you had five minutes before you were back on air again. “i’m back on in a few . . . i’ll have your stream open on my laptop, though!”

“good luck today,” hasan said softly as he started his stream, leaving it on his opening scene while his mic was muted. people were already flooding in by the thousands. “i’ll talk to you in, what, twelve hours? i love you.”

“twelve hours,” you hummed in agreement, “i love you more,” you sighed softly, noticing that the twitch tab was reloading to take you to his ‘starting soon’ overlay. “good luck.” you ended the phone call first, quickly putting it back on do not disturb and placing it over on the table that was full of analytical notes. the board that now held the map of the united states of america was lit up again, an empty canvas waiting for you to load up the old votes and project the blue and red areas.

TOO MANY HOURS TO count and three hundred thousand viewers into the election, hasan was still going strong. despite the pull to watch CNN more than he probably should, he managed to force himself to switch between fox news, to laugh at republican propaganda, and msnbc. though, he would be one hundred percent lying if he said he didn’t have CNN up on his second monitor.

things were steadily climbing, and josh ( ettingermentum ) was back after mike from PA left the call. josh, who had been raging on ( no seriously, no one had really heard him be that loud all day ) about how the democrats fucked up, was finally cut off when austin joined the call, and the atmosphere shifted.

with his christmas sign in full view and a cold slice of pizza being shoved into his mouth, austin’s discussion of whether he’d be sent to prison if the republicans dominated slowly dwindled, until josh left the call to analyse the polls for twitter.

“ugh, can we watch something else?” austin asked, barely swallowing his mouthful of pizza first. “all i’ve done is watch fox today.”

“yeah,” hasan chuckled humourlessly, clicking around mindlessly between tabs as he tried to find msnbc’s coverage. the tabs were so small thanks to the fifty million twitter tabs he had open, and he almost groaned in frustration when he accidentally clicked on the CNN tab.

the tab where you were conveniently fiddling with the data for the state of pennsylvania. it was already a dangerous game having you on screen when chat knew what your silhouette looked like: photos from behind of you walking with hasan, photos of your eyes after he tried to do your makeup, mirror fit checks with your face covered by the phone . . . chat only needed to be railroaded enough to work it out.

just as he was about to switch tabs again, austin opened his mouth. “oh, man, i miss her,” there was a shift in his tone, more than just him speaking without thinking. familiarity shone through. from the way he casually uttered your nickname to the sigh, it was probably worse than railroading. it was the train forgetting to slam on the brakes entirely.

hasan wisely kept his mouth shut as he switched to fox news ( anything was better than CNN currently ), and his eyes slowly zeroed in on the chat. question marks upon question marks, until they eventually morphed into ‘holy shit she looks familiar’ and ‘girlfriend reveal????’, then ‘omg face reveal’, and his breathing faltered.

someone switched the chat to emote-only mode in the few moments he was silent, austin thankfully following suit. when he glanced at his second monitor, you were still doing your thing, this time discussing the iowa flip from blue to red, completely oblivious.

“austin,” hasan finally said, tone flat. there was no use making a big fuss out of denying it — that would just make it more obvious.

austin chuckled nervously, awkwardly. “uh . . . sorry, hasan. i didn’t think about it . . . awkward.”

“clearly,” he grumbled, digging his fingers into his hair as he thought. the election was put on hold in his mind for a moment as he switched the screen to the full facecam. he wasn’t going to directly deny or confirm anything, so instead he said, “take what you will from what austin said. that being said, don’t go harassing her; clearly she was faceless for a reason. anyway,” hasan cleared his throat, “moving on, back to the election . . .” and he swiftly moved on like nothing had ever happened ( while the mods timed out anyone who asked about it for an entire week ).

“PENNSYLVANIA AND NEVADA ARE expected to be the closest as of now,” you gestured to the map that demonstrated the slight wave from the blue shift. “we’re looking at about half a percent, but election night is full of surprises so . . . we’ll continue to keep an eye on that for now.” the directors in the back signalled that the camera was no longer live, and you nodded and took a deep breath. the polls weren’t looking as good for the democrats as everyone had expected.

finally off the air for a much-needed break, you wandered back over to your little table off to the side. notes were piling up, but your attention snagged on the spam of notifications flashing across your phone. weird, you thought; your notifications usually didn’t show up unless they came from verified accounts across all social media platforms . . . until you noticed they were coming from your private instagram and twitter accounts. super weird.

and then the text from hasan.

6’4 SUPER JACKED BOYFRIEND: uhhh so austin accidentally told 300k people we’re dating

6’4 SUPER JACKED BOYFRIEND: call me when ur done? so sorry

oh. on one hand the first part was exciting. three hundred thousand? it was a new viewership record for him. on the other? that meant a shit ton of people knew the secret you guys had spent almost two years safeguarding. you’d wanted to keep your face out of everything because you had your own career and didn’t want his to intertwine with it. a healthy work-life balance meant keeping that shit separate, but it was only a matter of time until people found out. it wasn’t the best-kept secret, anyway.

still, you weren’t mad. you sent off a quick text saying ‘it’s alr’ with a smiley face emoji and shut your phone off completely, shoving it off to the side and turning your laptop back on. you’d be back in california tomorrow anyway; it could be dealt with then.

THE AIRPORT WASN’T AS secretive anymore. you were tired after only a couple of hours of sleep, having gotten back to your hotel at some god-awful hour that morning, so it was an instant relief to see hasan waiting for you, dressed comfortably so as not to draw too much attention to himself ( which was difficult because he was fucking huge ).

either way, you had no energy to do anything but collapse into his waiting arms, letting him engulf you until you were suffocating. “this is nice,” you mumbled. “sorry i didn’t call, was so tired.”

“you’re fine,” he promised, pulling you back slightly to look at him. “i missed you,” he slipped his hand into yours, and he took your suitcase with his other hand. it was nice to be able to publicly be in his presence without worrying, so much so that you leant into his arm, tiredness dragging your feet.

“missed you more,” you said honestly, but there was more on your mind than just small talk. “where’s austin? motherfucker’s been blowing up my phone.”

hasan chuckled, “if i hear him apologise one more time i’m gonna commit a hate crime.” he then shook his head, “he wanted to stay at the house but i told him to come ‘round tomorrow . . . want you to myself first.”

you knew what that was code for, so you shook your head with a silent laugh. “let me sleep first, god.”

and sleep you did. thankfully the house was silent, so you were content tucked up in hasan’s arms, stealing him from clocking in with his twitch chat for ten hours in a fit of selfishness that you were entitled to.

“austin might’ve saved our relationship,” you teased, trailing your fingers up his arm that was tightly wrapped around you, both on the verge of falling into dreamland. “now we can go out on proper dates again.”

“you can tell him yourself,” hasan’s arms tightened around you a little bit more, so full of warmth that the blanket was starting to feel useless. “when he knocks our door down tomorrow morning.”

“aw, come on,” you tapped his arm a little harder, fighting the urge to gnaw on his forearm. “you love him.”

“i love you, he’s just my side piece,” he kissed the side of your neck tenderly, “night, baby.”

“g’night,” you mumbled back with a soft smile, the world drifting away for just that little bit longer until tomorrow rolled around. you could deal with your very public relationship then.


This Week in Rust 599

Hello and welcome to another issue of This Week in Rust! Rust is a programming language empowering everyone to build reliable and efficient software. This is a weekly summary of its progress and community. Want something mentioned? Tag us at @thisweekinrust.bsky.social on Bluesky or @ThisWeekinRust on mastodon.social, or send us a pull request. Want to get involved? We love contributions.

This Week in Rust is openly developed on GitHub and archives can be viewed at this-week-in-rust.org. If you find any errors in this week's issue, please submit a PR.

Want TWIR in your inbox? Subscribe here.

Updates from Rust Community

Official

Announcing Google Summer of Code 2025 selected projects

Foundation

10 Years of Stable Rust: An Infrastructure Story

Newsletters

This Month in Rust OSDev: April 2025 | Rust OSDev

The Embedded Rustacean Issue #45

Project/Tooling Updates

Avian Physics 0.3

Two months in Servo: CSS nesting, Shadow DOM, Clipboard API, and more

Cot v0.3: Even Lazier

Streaming data analytics, Fluvio 0.17.3 release

CGP v0.4 is Here: Unlocking Easier Debugging, Extensible Presets, and More

Rama v0.2

Observations/Thoughts

Bad Type Patterns - The Duplicate duck

Rust nightly features you should watch out for

Lock-Free Rust: How to Build a Rollercoaster While It’s on Fire

Simple & type-safe localization in Rust

From Rust to AVR assembly: Dissecting a minimal blinky program

Tarpaulins Week Of Speed

Rustls Server-Side Performance

Is Rust the Future of Programming?

Rust Walkthroughs

Functional asynchronous Rust

The Power of Compile-Time ECS Architecture in Rust

[video] Build with Naz : Spinner animation, lock contention, Ctrl+C handling for TUI and CLI

Miscellaneous

April 2025 Rust Jobs Report

Crate of the Week

This week's crate is brush, a bash-compatible shell implemented completely in Rust.

Thanks to Josh Triplett for the suggestion!

Please submit your suggestions and votes for next week!

Calls for Testing

An important step for RFC implementation is for people to experiment with the implementation and give feedback, especially before stabilization.

If you are a feature implementer and would like your RFC to appear in this list, add a call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

No calls for testing were issued this week by Rust, Rust language RFCs or Rustup.

Let us know if you would like your feature to be tracked as a part of this list.

RFCs

Rust

Rustup

If you are a feature implementer and would like your RFC to appear on the above list, add the new call-for-testing label to your RFC along with a comment providing testing instructions and/or guidance on which aspect(s) of the feature need testing.

Call for Participation; projects and speakers

CFP - Projects

Always wanted to contribute to open-source projects but did not know where to start? Every week we highlight some tasks from the Rust community for you to pick and get started!

Some of these tasks may also have mentors available, visit the task page for more information.

rama - add ffi/rama-rhai: support ability to use services and layers written in rhai

rama - support akamai h2 passive fingerprint and expose in echo + fp services

If you are a Rust project owner and are looking for contributors, please submit tasks here or through a PR to TWiR or by reaching out on X (formerly Twitter) or Mastodon!

CFP - Events

Are you a new or experienced speaker looking for a place to share something cool? This section highlights events that are being planned and are accepting submissions to join their event as a speaker.

No calls for papers or presentations were submitted this week.

If you are an event organizer hoping to expand the reach of your event, please submit a link to the website through a PR to TWiR or by reaching out on X (formerly Twitter) or Mastodon!

Updates from the Rust Project

397 pull requests were merged in the last week

Compiler

async drop fix for async_drop_in_place<T> layout for unspecified T

better error message for late/early lifetime param mismatch

perf: make the assertion in Ident::new debug-only

perf: merge typeck loop with static/const item eval loop

Library

implement (part of) ACP 429: add DerefMut to Lazy[Cell/Lock]

implement VecDeque::truncate_front()

Cargo

network: use Retry-After header for HTTP 429 responses

rustc: Don't panic on unknown bins

add glob pattern support for known_hosts

add support for -Zembed-metadata

fix tracking issue template link

make cargo script ignore workspaces

Rustdoc

rustdoc-json: remove newlines from attributes

ensure that temporary doctest folder is correctly removed even if doctests failed

Clippy

clippy: item_name_repetitions: exclude enum variants with identical path components

clippy: return_and_then: only lint returning expressions

clippy: unwrap_used, expect_used: accept macro result as receiver

clippy: add allow_unused config to missing_docs_in_private_items

clippy: add new confusing_method_to_numeric_cast lint

clippy: add new lint: cloned_ref_to_slice_refs

clippy: fix ICE in missing_const_for_fn

clippy: fix integer_division false negative for NonZero denominators

clippy: fix manual_let_else false negative when diverges on simple enum variant

clippy: fix unnecessary_unwrap emitted twice in closure

clippy: fix diagnostic paths printed by dogfood test

clippy: fix false negative for unnecessary_unwrap

clippy: make let_with_type_underscore help message into a suggestion

clippy: resolve through local re-exports in lookup_path

Rust-Analyzer

fix postfix snippets duplicating derefs

resolve doc path from parent module if outer comments exist on module

still complete parentheses & method call arguments if there are existing parentheses, but they are after a newline

Rust Compiler Performance Triage

Lots of changes this week. The overall result is positive, with one large win in type checking.

Triage done by @panstromek. Revision range: 62c5f58f..718ddf66

Summary:

(instructions:u) | mean | range | count
--- | --- | --- | ---
Regressions ❌ (primary) | 0.5% | [0.2%, 1.4%] | 113
Regressions ❌ (secondary) | 0.5% | [0.1%, 1.5%] | 54
Improvements ✅ (primary) | -2.5% | [-22.5%, -0.3%] | 45
Improvements ✅ (secondary) | -0.9% | [-2.3%, -0.2%] | 10
All ❌✅ (primary) | -0.3% | [-22.5%, 1.4%] | 158

Full report here

Approved RFCs

Changes to Rust follow the Rust RFC (request for comments) process. These are the RFCs that were approved for implementation this week:

No RFCs were approved this week.

Final Comment Period

Every week, the team announces the 'final comment period' for RFCs and key PRs which are reaching a decision. Express your opinions now.

Tracking Issues & PRs

Rust

Tracking Issue for non_null_from_ref

Add std::io::Seek instance for std::io::Take

aarch64-softfloat: forbid enabling the neon target feature

Stabilize the avx512 target features

make std::intrinsics functions actually be intrinsics

Error on recursive opaque ty in HIR typeck

Remove i128 and u128 from improper_ctypes_definitions

Guarantee behavior of transmuting Option::<T>::None subject to NPO

Temporary lifetime extension through tuple struct and tuple variant constructors

Stabilize tcp_quickack

Change the desugaring of assert! for better error output

Make well-formedness predicates no longer coinductive

No Items entered Final Comment Period this week for Cargo, Rust RFCs, Language Reference, Language Team or Unsafe Code Guidelines.

Let us know if you would like your PRs, Tracking Issues or RFCs to be tracked as a part of this list.

New and Updated RFCs

RFC: Extended Standard Library (ESL)

Upcoming Events

Rusty Events between 2025-05-14 - 2025-06-11 🦀

Virtual

2025-05-15 | Hybrid (Redmond, WA, US) | Seattle Rust User Group

May, 2025 SRUG (Seattle Rust User Group) Meetup

2025-05-15 | Virtual (Girona, ES) | Rust Girona

Sessió setmanal de codificació / Weekly coding session

2025-05-15 | Virtual (Joint Meetup, Europe + Israel) | Rust Berlin + Rust Paris + London Rust Project Group + Rust Zürisee + Rust TLV + Rust Nürnberg + Rust Munich + Rust Aarhus + lunch.rs

🦀 Celebrating 10 years of Rust 1.0 🦀

2025-05-15 | Virtual (Zürich, CH) | Rust Zürisee

🦀 Celebrating 10 years of Rust 1.0 (co-event with berline.rs) 🦀

2025-05-18 | Virtual (Dallas, TX, US) | Dallas Rust User Meetup

Rust Readers Discord Discussion: Async Rust

2025-05-19 | Virtual (Tel Aviv-yafo, IL) | Rust 🦀 TLV

Tauri: Cross-Platform desktop applications with Rust and web technologies

2025-05-20 | Hybrid (EU/UK) | Rust and C++ Dragons (former Cardiff)

Talk and Connect - Fullstack - with Goetz Markgraf and Ben Wishovich

2025-05-20 | Virtual (London, UK) | Women in Rust

Threading through lifetimes of borrowing - the Rust way

2025-05-20 | Virtual (Tel Aviv, IL) | Code Mavens 🦀 - 🐍 - 🐪

Rust at Work a conversation with Ran Reichman Co-Founder & CEO of Flarion

2025-05-20 | Virtual (Washington, DC, US) | Rust DC

Mid-month Rustful

2025-05-21 | Hybrid (Vancouver, BC, CA) | Vancouver Rust

Linking

2025-05-22 | Virtual (Berlin, DE) | Rust Berlin

Rust Hack and Learn

2025-05-22 | Virtual (Girona, ES) | Rust Girona

Sessió setmanal de codificació / Weekly coding session

2025-05-25 | Virtual (Dallas, TX, US) | Dallas Rust User Meetup

Rust Readers Discord Discussion: Async Rust

2025-05-27 | Virtual (Dallas, TX, US) | Dallas Rust User Meetup

Fourth Tuesday

2025-05-27 | Virtual (Tel Aviv, IL) | Code Mavens 🦀 - 🐍 - 🐪

Rust at Work - conversation with Eli Shalom & Igal Tabachnik of Eureka Labs

2025-05-29 | Virtual (Nürnberg, DE) | Rust Nuremberg

Rust Nürnberg online

2025-05-29 | Virtual (Tel Aviv-yafo, IL) | Rust 🦀 TLV

Open virtual conversation about Rust

2025-06-01 | Virtual (Dallas, TX, US) | Dallas Rust User Meetup

Rust Readers Discord Discussion: Async Rust

2025-06-03 | Virtual (Tel Aviv-yafo, IL) | Rust 🦀 TLV

Why Rust?

2025-06-04 | Virtual (Indianapolis, IN, US) | Indy Rust

Indy.rs - with Social Distancing

2025-06-05 | Virtual (Berlin, DE) | Rust Berlin

Rust Hack and Learn

2025-06-07 | Virtual (Kampala, UG) | Rust Circle Meetup

Rust Circle Meetup

2025-06-08 | Virtual (Dallas, TX, US) | Dallas Rust User Meetup

Rust Readers Discord Discussion: Async Rust

2025-06-10 | Virtual (Dallas, TX, US) | Dallas Rust User Meetup

Second Tuesday

2025-06-10 | Virtual (London, UK) | Women in Rust

👋 Community Catch Up

Asia

2025-05-17 | Delhi, IN | Rust Delhi

Rust Delhi Meetup #10

2025-05-24 | Bangalore/Bengaluru, IN | Rust Bangalore

May 2025 Rustacean meetup

2025-06-08 | Tel Aviv-yafo, IL | Rust 🦀 TLV

In person Rust June 2025 at AWS in Tel Aviv

Europe

2025-05-13 - 2025-05-17 | Utrecht, NL | Rust NL

RustWeek 2025

2025-05-14 | Reading, UK | Reading Rust Workshop

Reading Rust Meetup

2025-05-15 | Berlin, DE | Rust Berlin

10 years anniversary of Rust 1.0

2025-05-15 | Oslo, NO | Rust Oslo

Rust 10-year anniversary @ Appear

2025-05-16 | Amsterdam, NL | RustNL

Rust Week Hackathon

2025-05-16 | Utrecht, NL | Rust NL Meetup Group

RustWeek Hackathon

2025-05-17 | Amsterdam, NL | RustNL

Walking Tour around Utrecht - Saturday

2025-05-20 | Dortmund, DE | Rust Dortmund

Talk and Connect - Fullstack - with Goetz Markgraf and Ben Wishovich

2025-05-20 | Aarhus, DK | Rust Aarhus

Hack Night - Robot Edition

2025-05-20 | Leipzig, SN, DE | Rust - Modern Systems Programming in Leipzig

Topic TBD

2025-05-22 | Augsburg, DE | Rust Augsburg

Rust meetup #13: A Practical Guide to Telemetry in Rust

2025-05-22 | Bern, CH | Rust Bern

2025 Rust Talks Bern #3 @zentroom

2025-05-22 | Paris, FR | Rust Paris

Rust meetup #77

2025-05-22 | Stockholm, SE | Stockholm Rust

Rust Meetup @UXStream

2025-05-27 | Basel, CH | Rust Basel

Rust Meetup #11 @ Letsboot Basel

2025-05-27 | Vienna, AT | Rust Vienna

Rust Vienna - May at Bitcredit 🦀

2025-05-29 | Oslo, NO | Rust Oslo

Rust Hack'n'Learn at Kampen Bistro

2025-05-31 | Stockholm, SE | Stockholm Rust

Ferris' Fika Forum #12

2025-06-04 | Ghent, BE | Systems Programming Ghent

Grow smarter with embedded Rust

2025-06-04 | München, DE | Rust Munich

Rust Munich 2025 / 2 - Hacking Evening

2025-06-04 | Oxford, UK | Oxford Rust Meetup Group

Oxford Rust and C++ social

2025-06-05 | München, DE | Rust Munich

Rust Munich 2025 / 2 - Hacking Evening

2025-06-11 | Reading, UK | Reading Rust Workshop

Reading Rust Meetup

North America

2025-05-15 | Hybrid (Redmond, WA, US) | Seattle Rust User Group

May, 2025 SRUG (Seattle Rust User Group) Meetup

2025-05-15 | Mountain View, CA, US | Hacker Dojo

RUST MEETUP at HACKER DOJO

2025-05-15 | Nashville, TN, US | Music City Rust Developers

Using Rust For Web Series 2 : Why you, Yes You. Should use Hyperscript!

2025-05-18 | Albuquerque, NM, US | Ideas and Coffee

Intro Level Rust Get-together

2025-05-20 | San Francisco, CA, US | San Francisco Rust Study Group

Rust Hacking in Person

2025-05-21 | Hybrid (Vancouver, BC, CA) | Vancouver Rust

Linking

2025-05-28 | Austin, TX, US | Rust ATX

Rust Lunch - Fareground

2025-05-29 | Atlanta, GA, US | Rust Atlanta

Rust-Atl

2025-06-05 | Saint Louis, MO, US | STL Rust

Leptos web framework

South America

2025-05-28 | Montevideo, DE, UY | Rust Meetup Uruguay

First Rust meetup of 2025!

2025-05-31 | São Paulo, BR | Rust São Paulo Meetup

Rust-SP meetup at WillBank

If you are running a Rust event please add it to the calendar to get it mentioned here. Please remember to add a link to the event too. Email the Rust Community Team for access.

Jobs

Please see the latest Who's Hiring thread on r/rust

Quote of the Week

If a Pin drops in a room, and nobody around understands it, does it make an unsound? #rustlang

– Josh Triplett on fedi

Thanks to Josh Triplett for the self-suggestion!

Please submit quotes and vote for next week!

This Week in Rust is edited by: nellshamrell, llogiq, cdmistman, ericseppanen, extrawurst, U007D, joelmarcey, mariannegoldin, bennyvasquez, bdillo

Email list hosting is sponsored by The Rust Foundation

Discuss on r/rust


I love your bsd analysis bc they all feel so prim and proper and I don't understand most of them but I'm still having a good time

I'm glad you're having a good time!

Most, if not all, of my bsd analyses are drafted in one sitting when the inspiration strikes and are generally streams of consciousness. But before I post, I do reread them several times and revise them to try and ensure I'm saying what I mean and not being dismissive or unfair to others. So, that might be where the prim and proper vibe comes from haha.

If they're hard to understand, that's because they're both an analytical and creative exercise. I write them in my literary voice, which recklessly mashes together poetry and prose, and I write them based on my collective understanding of the canon and source material, which I don't have the time or bandwidth to distill more than I already do. I also write with the understanding that my interpretations are about exploring themes, parallels, and imagery and not about drawing definitive conclusions, so I often write to be as open-ended as my thought processes.

(The reason I write the way I write instead of for clarity & with definitive, black/white reasoning is because I think the former is fun and I'm easily bored/dissatisfied by the latter. I also just think the latter is not how any well-wrought media is meant to be read, so I don't read it that way.)

Anyway, glad you're having a good time, because so am I!


If you live in Russia, there’s no avoiding Yandex. The tech giant, often referred to as “Russia’s Google,” is part of daily life for millions of people. It dominates online search, ride-hailing, and music streaming, while its maps, payment, email, and scores of other services are popular. But as with all tech giants, there’s a downside to Yandex being everywhere: It can gobble up huge amounts of data.

In January, Yandex suffered the unthinkable. It became the latest in a short list of high-profile firms to have its source code leaked. An anonymous user of the hacking site BreachForums publicly shared a downloadable 45-gigabyte cache of Yandex’s code. The trove, which is said to have come from a disgruntled employee, doesn’t include any user data but provides an unparalleled view into the operation of its apps and services. Yandex’s search engine, maps, AI voice assistant, taxi service, email app, and cloud services were all laid bare.

The leak also included code from two of Yandex’s key systems: its web analytics service, which captures details about how people browse, and its powerful behavioral analytics tool, which helps run its ad service that makes millions of dollars. This kind of advertising system underpins much of the modern web’s economy, with Google, Facebook, and thousands of advertisers relying on similar technologies. But the systems are largely black boxes.

Now, an in-depth analysis of the source code belonging to these two services, by Kaileigh McCrea, a privacy engineer at cybersecurity firm Confiant, is shedding light on how the systems work. Yandex’s technologies collect huge volumes of data about people, and this can be used to reveal their interests when it is “matched and analyzed” with all of the information the company holds, Confiant’s findings say.

McCrea says the Yandex code shows how the company creates household profiles for people who live together and predicts people's specific interests. From a privacy perspective, she says, what she found is “deeply unsettling.” “There are a lot of creepy layers to this onion,” she says. The findings also reveal that Yandex has one technology in place to share some limited information with Rostelecom, the Russian-government-backed telecoms company.

Yandex’s chief privacy officer, Ivan Cherevko, in detailed written answers to WIRED’s questions, says the “fragments of code” are outdated, are different from the versions currently used, and that some of the source code was “never actually used” in its operations. “Yandex uses user data only to create new services and improve existing ones,” and it “never sells user data or discloses data to third parties without user consent,” he says.

However, the analysis comes as Russia’s tech giant is going through significant changes. Following Russia’s full-scale invasion of Ukraine in February 2022, Yandex is splitting its parent company, based in the Netherlands, from its Russian operations. Analysts believe the move could see Yandex in Russia become more closely connected to the Kremlin, with data being put at risk.

“They have been trying to maintain this image of a more independent and Western-oriented company that from time to time protested some repressive laws and orders, helping attract foreign investments and business deals,” says Natalia Krapiva, tech-legal counsel at digital rights nonprofit Access Now. “But in practice, Yandex has been losing its independence and caving in to the Russian government demands. The future of the company is uncertain, but it’s likely that the Russia-based part of the company will lose the remaining shreds of independence.”

Data Harvesting

The Yandex leak is huge. The 45 GB of source code covers almost all of Yandex’s major services, offering a glimpse into the work of its thousands of software engineers. The code appears to date from around July 2022, according to timestamps included within the data, and it mostly uses popular programming languages. It is written in English and Russian, but also includes racist slurs. (When it was leaked in January, Yandex said this was “deeply offensive and completely unacceptable,” and it detailed some ways that parts of the code broke its own company policies.)

McCrea manually inspected two parts of the code: Yandex Metrica and Crypta. Metrica is the firm’s equivalent of Google Analytics: software that places code on participating websites, and in apps through AppMetrica, that can track visitors down to every mouse movement. Last year, AppMetrica, which is embedded in more than 40,000 apps in 50 countries, caused national security concerns with US lawmakers after the Financial Times reported the scale of data it was sending back to Russia.

This data, McCrea says, is pulled into Crypta. The tool analyzes people’s online behavior to ultimately show them ads for things they’re interested in. More than 300 “factors” are analyzed, according to the company’s website, and machine learning algorithms group people based on their interests. “Every app or service that Yandex has, which is supposed to be over 90, is funneling data into Crypta for these advertising segments in one form or another,” McCrea says.

Some data collected by Yandex is handed over when people use its services, such as sharing their location to show where they are on a map. Other information is gathered automatically. Broadly, the company can gather information about someone’s device, location, search history, home location, work location, music listening and movie viewing history, email data, and more.

The source code shows AppMetrica collecting data on people’s precise location, including their altitude, direction, and the speed they may be traveling. McCrea questions how useful this is for advertising. It also grabs the names of the Wi-Fi networks people are connecting to. This is fed into Crypta, with the Wi-Fi network name being linked to a person’s overall Yandex ID, the researcher says. At times, its systems attempt to link multiple different IDs together.

“The amount of data that Yandex has through the Metrica is so huge, it's just impossible to even imagine it,” says Grigory Bakunov, a former Yandex engineer and deputy CTO who left the company in 2019. “It's enough to build any grouping, or segmentation of the audience.” The segments created by Crypta appear to be highly specific and show how powerful data about our online lives is when it is aggregated. There are advertising segments for people who use Yandex’s Alice smart speaker, “film lovers” can be grouped by their favorite genre, there are laptop users, people who “searched Radisson on maps,” and mobile gamers who show a long-term interest.

McCrea says some categories stand out more than others. She says a “smokers” segment appears to track people who purchase smoking-related items, like e-cigarettes. A “summer residents” segment may indicate people who have holiday homes, and it uses location data to determine this. There is also a “travelers” section that can use location data to track whether people have traveled from their normal location to another; it includes international and domestic fields. One part of the code looked to pull data from the Mail app and included fields about “boarding passes” and “hotels.”

Some of this information “doesn’t sound that unusual” for online advertising, McCrea says. But the big question for her is whether creating personalized advertising is a good enough reason to collect “this invasive level of information.” Behavioral advertising has long followed people around the web, with companies hoovering up people’s data in creepy ways. Regulators have failed to get a grip on the issue, while others have suggested it should be banned. “When you think about what else you could do, if you can make that kind of calculation, it's kind of creepy, especially in Russia,” McCrea says. She suggests it is not implausible to create segments for military-aged men who are looking to leave Russia.

Yandex’s Cherevko says that grouping users by interests is an “industry standard practice” and that it isn’t possible for advertisers to identify specific people. Cherevko says the collection of information allows people to be shown specific ads: “gardening products to a segment of users who are interested in summer houses and car equipment to those who visit gas stations.” Crypta analyzes a person’s online behavior, Cherevko says, and “calculates the probability” they belong to a specific group.

“For Crypta, each user is represented as a set of identifiers, and the system cannot associate them with a natural person in the real world,” Cherevko claims. “This kind of set is probabilistic only.” He adds that Crypta doesn’t have access to people’s emails and says the Mail data in the code about boarding passes and hotels was an “experiment.” Crypta “received only de-identified information about the category from Mail,” and the method has not been used since 2019, Cherevko says. He adds that Yandex deletes “user geolocation” collected by AppMetrica after 14 days.

While the leaked source code offers a detailed view of how Yandex’s systems may operate, it is not the full picture. Artur Hachuyan, a data scientist and AI researcher in Russia who started his own firm doing analytics similar to Crypta, says that when he inspected the code he did not find any pretrained machine learning models, or any references to data sources or external databases of Yandex’s partners. It’s also not clear, for instance, which parts of the code were not used.

McCrea’s analysis says Yandex assigns people household IDs. Details in the code, the researcher says, include the number of people in a household, the gender of people, and whether there are any elderly people or children. People’s location data is used to group them into households, and they can be included if their IP addresses have “intersected,” Cherevko says. The groupings are used for advertising, he says. “If we assume that there are elderly people in the household, then we can invite advertisers to show them residential complexes with an accessible environment.”

The code also shows how Yandex can combine data from multiple services. McCrea says in one complex process, an adult’s search data may be pulled from the Yandex search tool, AppMetrica, and the company’s taxi app to predict whether they have children in their household. Some of the code categorizes whether children may be over or under 13. (Yandex’s Cherevko says people can order taxis with children’s seats, which is a sign they may be “interested in specific content that might be interesting for someone with a child.”)

One element within the Crypta code indicates just how all of this data can be pulled together. A user interface exists that acts as a profile about someone: It shows marital status, their predicted income, whether they have children, and three interests—which include broad topics such as appliances, food, clothes, and rest. Cherevko says this is an “internal Yandex tool” where employees can see how Crypta’s algorithms classify them, and they can only access their own information. “We have not encountered any incidents related to access abuse,” he says.

Government Influence

Yandex is going through a breakup. In November 2022, the company’s Netherlands-based parent organization, Yandex NV, announced it will separate itself from the Russian business, following Russia’s invasion of Ukraine. Internationally, the company, which will change its name, is planning to develop self-driving technologies and cloud computing, while divesting itself from search, advertising, and other services in Russia. Various Russian businessmen have been linked to the potential sale. (At the end of July, Yandex NV said it plans to propose its restructuring to shareholders later this year.)

While the uncoupling is being worked out, Russia has been trying to consolidate its control of the internet and to increase censorship. A slew of new laws requires more companies and government services in the country to use home-grown tech. For instance, this week, Finland and Norway’s data regulators blocked Yandex’s international taxi app from sending data back to Russia due to a new law, which comes into force in September, that will allow the Federal Security Service (FSB) access to taxi data.

These nationalization efforts coupled with the planned ownership change at Yandex are creating concerns that the Kremlin may soon be able to use data gathered by the company. Stanislav Shakirov, the CTO of Russian digital rights group Roskomsvoboda and founder of tech development organization Privacy Accelerator, says historically Yandex has tried to resist government demands for data and has proved better than other firms. (In June, it was fined 2 million rubles ($24,000) for not handing data to Russian security services.) However, Shakirov says he thinks things are changing. “I am inclined to believe that Yandex will be attempted to be nationalized and, as a consequence, management and policy will change,” Shakirov says. “And as a consequence, user data will be under much greater threat than it is now.”

Bakunov, the former Yandex engineer, who reviewed some of McCrea’s findings at WIRED’s request, says he is scared by the potential for the misuse of data going forward. He says it looks like Russia is a “new generation” of a “failed state,” highlighting how it may use technology. “Yandex here is the big part of these technologies,” he says. “When we built this company, many years ago, nobody thought that.” The company’s head of privacy, Cherevko, says that within the restructuring process, “control of the company will remain in the hands of management.” And its management makes decisions based on its “core principles.”

But the leaked code shows, in one small instance, that Yandex may already share limited information with one Russian government-linked company. Within Crypta are five “matchers” that sync fingerprinting events with telecoms firms—including the state-backed Rostelecom. McCrea says this indicates that the fingerprinting events could be accessible to parts of the Russian state. “The shocking thing is that it exists,” McCrea says. “There's nothing terribly shocking within it.” (Cherevko says the tool is used for improving the quality of advertising, helping it to improve its accuracy, and also identifying scammers attempting to conduct fraud.)

Overall, McCrea says that whatever happens with the company, there are lessons about collecting too much data and what can happen to it over time when circumstances change. “Nothing stays harmless forever,” she says.


Azure Data Engineering Tools For Data Engineers

Azure is Microsoft’s cloud computing platform, and it offers an extensive array of data engineering tools. These tools help data engineers build and maintain data systems that are scalable, reliable, and secure, and that can be tailored to an organization’s specific requirements.

In this article, we will explore nine key Azure data engineering tools that should be in every data engineer’s toolkit. Whether you’re a beginner in data engineering or aiming to enhance your skills, these Azure tools are crucial for your career development.

Microsoft Azure Databricks

Azure Databricks is a managed version of Databricks, a popular data analytics and machine learning platform. It offers one-click installation, faster workflows, and collaborative workspaces for data scientists and engineers. Azure Databricks seamlessly integrates with Azure’s computation and storage resources, making it an excellent choice for collaborative data projects.

Microsoft Azure Data Factory

Microsoft Azure Data Factory (ADF) is a fully-managed, serverless data integration tool designed to handle data at scale. It enables data engineers to acquire, analyze, and process large volumes of data efficiently. ADF supports various use cases, including data engineering, operational data integration, analytics, and data warehousing.

Microsoft Azure Stream Analytics

Azure Stream Analytics is a real-time, complex event-processing engine designed to analyze and process large volumes of fast-streaming data from various sources. It is a critical tool for data engineers dealing with real-time data analysis and processing.
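Stream Analytics jobs are written in a SQL-like query language that groups events into time windows (for example with its TumblingWindow function). The Python sketch below only illustrates the tumbling-window idea, fixed-size windows that never overlap, on an in-memory list of events; it is not the Stream Analytics API, and the event tuples and sensor names are hypothetical.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def tumbling_window_counts(events, window_seconds):
    """Count events per key in fixed-size, non-overlapping time windows.

    events: iterable of (timestamp, key) tuples, where timestamp is a naive datetime.
    Returns {(window_start, key): count}, ordered by window start, then key.
    """
    counts = defaultdict(int)
    window = timedelta(seconds=window_seconds)
    epoch = datetime(1970, 1, 1)
    for ts, key in events:
        # Integer number of whole windows since the epoch identifies the window.
        index = (ts - epoch) // window
        window_start = epoch + index * window
        counts[(window_start, key)] += 1
    return dict(sorted(counts.items()))


events = [
    (datetime(2024, 1, 1, 12, 0, 5), "sensor-a"),
    (datetime(2024, 1, 1, 12, 0, 40), "sensor-a"),
    (datetime(2024, 1, 1, 12, 1, 10), "sensor-a"),
    (datetime(2024, 1, 1, 12, 0, 20), "sensor-b"),
]
per_minute = tumbling_window_counts(events, 60)
```

Each event lands in exactly one window, which is what distinguishes tumbling windows from hopping or sliding windows, where a single event can fall into several.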

Microsoft Azure Data Lake Storage

Azure Data Lake Storage provides a scalable and secure data lake solution for data scientists, developers, and analysts. It allows organizations to store data of any type and size while supporting low-latency workloads. Data engineers can take advantage of this infrastructure to build and maintain data pipelines. Azure Data Lake Storage also offers enterprise-grade security features for data collaboration.

Microsoft Azure Synapse Analytics

Azure Synapse Analytics is an integrated platform solution that combines data warehousing, data connectors, ETL pipelines, analytics tools, big data scalability, and visualization capabilities. Data engineers can efficiently process data for warehousing and analytics using Synapse Pipelines’ ETL and data integration capabilities.

Microsoft Azure Cosmos DB

Azure Cosmos DB is a fully managed distributed database service, with a serverless option, that supports multiple data models and APIs, including APIs for PostgreSQL, MongoDB, and Apache Cassandra. It offers automatic and immediate scalability, single-digit millisecond reads and writes, and high availability for NoSQL data. Azure Cosmos DB is a versatile tool for data engineers looking to develop high-performance applications.

Microsoft Azure SQL Database

Azure SQL Database is a fully managed and continually updated relational database service in the cloud. It offers native support for services like Azure Functions and Azure App Service, simplifying application development. Data engineers can use Azure SQL Database to handle real-time data ingestion tasks efficiently.

Microsoft Azure Database for MariaDB

Azure Database for MariaDB provides seamless integration with Azure Web Apps and supports popular open-source frameworks and languages like WordPress and Drupal. It offers built-in monitoring, security, automatic backups, and patching at no additional cost.

Microsoft Azure Database for PostgreSQL

Azure Database for PostgreSQL is a fully managed open-source database service designed to let teams focus on application innovation rather than database management. It supports various open-source frameworks and languages and offers strong security, AI-assisted performance optimization, and high uptime guarantees.

Whether you’re a novice data engineer or an experienced professional, mastering these Azure data engineering tools is essential for advancing your career in the data-driven world. As technology evolves and data continues to grow, data engineers with expertise in Azure tools are in high demand. Start your journey to becoming a proficient data engineer with these powerful Azure tools and resources.

Unlock the full potential of your data engineering career with Datavalley. As you start your journey to becoming a skilled data engineer, it’s essential to equip yourself with the right tools and knowledge. The Azure data engineering tools we’ve explored in this article are your gateway to effectively managing and using data for impactful insights and decision-making.

To take your data engineering skills to the next level and gain practical, hands-on experience with these tools, we invite you to join the courses at Datavalley. Our comprehensive data engineering courses are designed to provide you with the expertise you need to excel in the dynamic field of data engineering. Whether you’re just starting or looking to advance your career, Datavalley’s courses offer a structured learning path and real-world projects that will set you on the path to success.

Course format:

Subject: Data Engineering
Classes: 200 hours of live classes
Lectures: 199 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 70% scholarship on this course
Interactive activities: labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

Subject: DevOps
Classes: 180+ hours of live classes
Lectures: 300 lectures
Projects: Collaborative projects and mini projects for each module
Level: All levels
Scholarship: Up to 67% scholarship on this course
Interactive activities: labs, quizzes, scenario walk-throughs
Placement Assistance: Resume preparation, soft skills training, interview preparation

For more details on the Data Engineering courses, visit Datavalley’s official website.



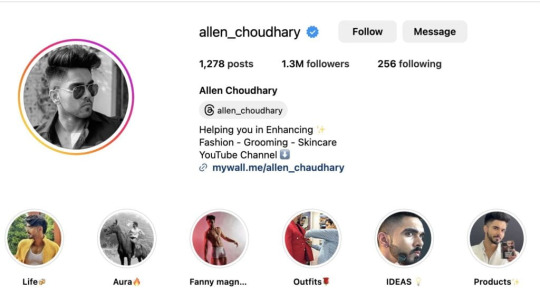

Building Your YouTube Cash Cow Channel: A Step-by-Step Guide

YouTube has evolved from a platform for cat videos into a lucrative source of income for content creators worldwide. If you're looking to turn your passion into profit, building a YouTube Cash Cow Channel could be your path to success. In this step-by-step guide, we'll explore the key elements of creating and growing a channel that generates consistent revenue.

1. Find Your Niche

Before diving into content creation, identify a niche that aligns with your interests and expertise. Your niche should be specific enough to target a particular audience but broad enough to create a substantial following. Research competitors and trends to assess the niche's potential for growth and monetization.

2. Create High-Quality Content

Quality is paramount on YouTube. Invest in good equipment, such as cameras, microphones, and lighting, to ensure your videos are visually and audibly appealing. Develop a content strategy that provides value, educates, entertains, or solves problems for your target audience. Consistency is key; establish a posting schedule to keep your viewers engaged.

3. Optimize SEO for Visibility

To stand out on YouTube, optimize your videos for search engine visibility. Use relevant keywords in your video titles, descriptions, and tags. Craft compelling thumbnails that entice viewers to click. Engage with your audience through thoughtful comments and encourage them to like, share, and subscribe.

4. Monetize Your Channel

Once you've gained some traction, it's time to monetize your channel. YouTube offers several revenue streams:

a. Ad Revenue: Enroll in the YouTube Partner Program (YPP) to earn money through ads displayed on your videos. To qualify, your channel needs 1,000 subscribers and 4,000 watch hours in the past 12 months.

b. Channel Memberships: Offer exclusive perks to subscribers who join your channel as members. This can include access to behind-the-scenes content, custom emojis, and shout-outs.

c. Merchandise Shelf: Sell your merchandise directly through your channel using the merchandise shelf feature.

d. Sponsored Content: Partner with brands for sponsored videos or product placements. Ensure the products align with your niche and are relevant to your audience.
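As a quick way to see how far a channel is from the ad-revenue thresholds quoted in point (a), here is a minimal sketch. The function names are hypothetical, and the default thresholds are simply the figures above; YouTube can revise its eligibility criteria and also offers other routes (such as one based on Shorts views), so check the current requirements before relying on these numbers.

```python
def ypp_shortfall(subscribers, watch_hours_12m, min_subscribers=1_000, min_watch_hours=4_000):
    """Return how many subscribers and watch hours (past 12 months) are still missing.

    A value of 0 means that threshold is already met.
    """
    return {
        "subscribers_needed": max(0, min_subscribers - subscribers),
        "watch_hours_needed": max(0, min_watch_hours - watch_hours_12m),
    }


def meets_ypp_thresholds(subscribers, watch_hours_12m, **thresholds):
    """True only when both the subscriber and the watch-hour thresholds are met."""
    gap = ypp_shortfall(subscribers, watch_hours_12m, **thresholds)
    return gap["subscribers_needed"] == 0 and gap["watch_hours_needed"] == 0
```

Passing custom `min_subscribers` or `min_watch_hours` values keeps the check usable if the published thresholds change.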

5. Build a Loyal Community

Foster a sense of community by engaging with your audience. Respond to comments, ask for feedback, and take viewer suggestions into consideration. Hosting live streams or Q&A sessions can help strengthen your connection with your viewers.

6. Collaborate and Network

Collaborating with other YouTubers can expose your channel to new audiences. Look for creators within your niche or complementary niches and propose mutually beneficial collaborations. Attend industry events and connect with fellow content creators to expand your network.

7. Track Analytics and Refine Strategy

Regularly review your YouTube Analytics to gain insights into your audience's behavior. Understand which videos perform best, where your viewers are coming from, and their demographics. Use this data to refine your content strategy and improve your channel's performance.

8. Diversify Your Income

While ad revenue is a significant income source, don't rely solely on it. Explore additional revenue streams, such as affiliate marketing, merchandise sales, online courses, or Patreon memberships. Diversifying your income can provide stability and financial security.

9. Stay Informed and Adapt

YouTube is constantly evolving, with new features and trends emerging regularly. Stay informed about the platform's updates and adapt your strategy accordingly. Be open to trying new formats and approaches to keep your content fresh and engaging.

10. Stay Committed

Building a successful YouTube Cash Cow Channel takes time and dedication. It may be a while before you start seeing substantial income. Stay committed to your niche, consistently create high-quality content, and adapt to the ever-changing YouTube landscape.

In conclusion, creating a YouTube Cash Cow Channel is a viable way to turn your passion into a profitable venture. By finding your niche, producing quality content, optimizing for SEO, and diversifying your income streams, you can build a thriving channel that provides a consistent source of revenue. Remember that success on YouTube requires patience, persistence, and a deep understanding of your audience's needs and preferences.


What is Solr – Comparing Apache Solr vs. Elasticsearch

In the world of search engines and data retrieval systems, Apache Solr and Elasticsearch are two prominent contenders, each with its strengths and unique capabilities. These open-source, distributed search platforms play a crucial role in empowering organizations to harness the power of big data and deliver relevant search results efficiently. In this blog, we will delve into the fundamentals of Solr and Elasticsearch, highlighting their key features and comparing their functionalities. Whether you're a developer, data analyst, or IT professional, understanding the differences between Solr and Elasticsearch will help you make informed decisions to meet your specific search and data management needs.

Overview of Apache Solr

Apache Solr is a search platform built on top of the Apache Lucene library, known for its robust indexing and full-text search capabilities. It is written in Java and designed to handle large-scale search and data retrieval tasks. Solr follows a RESTful API approach, making it easy to integrate with different programming languages and frameworks. It offers a rich set of features, including faceted search, hit highlighting, spell checking, and geospatial search, making it a versatile solution for various use cases.
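Because Solr is queried over HTTP, a search with faceting and highlighting is just a URL against a core's `/select` handler. The sketch below builds one with Python's standard library; `q`, `rows`, `wt`, `facet`, `facet.field`, `hl` and `hl.fl` are standard Solr request parameters, while the base URL, the core name `products` and the field names are hypothetical placeholders.

```python
from urllib.parse import urlencode


def build_solr_select_url(base_url, core, query, facet_fields=(), highlight_fields=(), rows=10):
    """Build a GET URL for a Solr core's /select request handler."""
    params = [("q", query), ("rows", rows), ("wt", "json")]
    if facet_fields:
        params.append(("facet", "true"))
        # facet.field is repeated once for every field to facet on.
        params.extend(("facet.field", f) for f in facet_fields)
    if highlight_fields:
        params.append(("hl", "true"))
        params.append(("hl.fl", ",".join(highlight_fields)))
    return f"{base_url.rstrip('/')}/{core}/select?{urlencode(params)}"


url = build_solr_select_url(
    "http://localhost:8983/solr", "products", "title:laptop",
    facet_fields=["brand", "category"], highlight_fields=["title"],
)
```

The resulting URL can be fetched with any HTTP client (for example `urllib.request.urlopen`); facet counts come back under `facet_counts` and snippets under `highlighting` in the JSON response.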

Overview of Elasticsearch

Elasticsearch, also based on Apache Lucene, is a distributed search engine that stands out for its real-time data indexing and analytics capabilities. It is known for its scalability and speed, making it an ideal choice for applications that require near-instantaneous search results. Elasticsearch provides a simple RESTful API, enabling developers to perform complex searches effortlessly. Moreover, it offers support for data visualization through its integration with Kibana, making it a popular choice for log analysis, application monitoring, and other data-driven use cases.

Comparing Solr and Elasticsearch

Data Handling and Indexing

Both Solr and Elasticsearch are proficient at handling large volumes of data and offer excellent indexing capabilities. Solr uses XML and JSON formats for data indexing, while Elasticsearch relies on JSON, which is generally considered more human-readable and easier to work with. Elasticsearch's dynamic mapping feature allows it to automatically infer data types during indexing, streamlining the process further.
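To make dynamic mapping concrete, here is a simplified sketch of the type inference Elasticsearch applies to a new JSON document under its default settings: whole numbers become `long`, decimals `float`, booleans `boolean`, objects nested `properties`, and strings `text` with a `keyword` sub-field. It deliberately ignores date detection (which can turn date-like strings into `date` fields), numeric detection, and dynamic templates, so treat it as an approximation rather than Elasticsearch's actual code.

```python
def infer_field_type(value):
    """Approximate the default dynamic mapping for a single JSON value."""
    if isinstance(value, bool):  # check bool first: bool is a subclass of int
        return {"type": "boolean"}
    if isinstance(value, int):
        return {"type": "long"}
    if isinstance(value, float):
        return {"type": "float"}
    if isinstance(value, str):
        # Analyzed text for full-text search, plus a keyword sub-field for exact matches.
        return {"type": "text",
                "fields": {"keyword": {"type": "keyword", "ignore_above": 256}}}
    if isinstance(value, dict):
        return {"properties": infer_mapping(value)}
    if isinstance(value, list):
        # Arrays take the type of their first non-null element.
        for item in value:
            if item is not None:
                return infer_field_type(item)
    return None  # null values and empty arrays add no field


def infer_mapping(document):
    """Return a 'properties' dict for a (possibly nested) JSON document."""
    properties = {}
    for name, value in document.items():
        field = infer_field_type(value)
        if field is not None:
            properties[name] = field
    return properties


doc = {"title": "Laptop", "price": 999.5, "stock": 3, "in_stock": True,
       "tags": ["sale", "new"], "specs": {"ram_gb": 16}, "note": None}
mapping = infer_mapping(doc)
```

On a real cluster, the get-mapping API (`GET /<index>/_mapping`) shows what Elasticsearch actually inferred.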

Querying and Searching

Both platforms support complex search queries, but Elasticsearch is often regarded as more developer-friendly due to its clean and straightforward API. Elasticsearch's support for nested queries and aggregations simplifies the process of retrieving and analyzing data. On the other hand, Solr provides a range of query parsers, allowing developers to choose between traditional and advanced syntax options based on their preference and familiarity.
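Elasticsearch's query DSL is plain JSON, so a search that combines a full-text match, a filter, and an aggregation can be assembled as an ordinary Python dictionary. In this sketch the field names (`title`, `price`, `category.keyword`) and the aggregation name `by_category` are hypothetical; `bool`, `match`, `range` and `terms` are standard parts of the DSL.

```python
def build_search_body(text, text_field="title", category_field="category.keyword",
                      max_price=None, size=10):
    """Build a _search request body: scored full-text match, optional price filter,
    and a terms aggregation that counts hits per category."""
    filters = []
    if max_price is not None:
        filters.append({"range": {"price": {"lte": max_price}}})
    return {
        "size": size,
        "query": {
            "bool": {
                "must": [{"match": {text_field: text}}],
                "filter": filters,
            }
        },
        "aggs": {"by_category": {"terms": {"field": category_field}}},
    }


body = build_search_body("wireless headphones", max_price=200)
```

Serialize the dictionary with `json.dumps` and send it as the body of a request to `/<index>/_search`. Clauses under `filter` narrow the results without affecting relevance scores, while the `match` clause under `must` is scored.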

Scalability and Performance

Elasticsearch is designed with scalability in mind from the ground up, making it relatively easy to scale horizontally by adding more nodes to the cluster. It excels in real-time search and analytics scenarios, making it a top choice for applications with dynamic data streams. Solr is also scalable, but it scales horizontally through SolrCloud, which has traditionally relied on Apache ZooKeeper for cluster coordination, so it may require more setup effort than Elasticsearch.
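Both engines spread an index or collection across shards by hashing a routing value, by default the document ID. The sketch below shows the idea with a simple hash-modulo rule. It is a simplification: Elasticsearch and Solr's default router use MurmurHash3 rather than the CRC32 used here, and Elasticsearch's formula includes an extra routing factor, so these shard numbers will not match a real cluster.

```python
import zlib


def route_to_shard(doc_id, num_primary_shards):
    """Map a document ID to a shard number by hashing it (CRC32 stands in for Murmur3)."""
    return zlib.crc32(doc_id.encode("utf-8")) % num_primary_shards


def distribute(doc_ids, num_primary_shards):
    """Group document IDs by the shard they route to."""
    shards = {n: [] for n in range(num_primary_shards)}
    for doc_id in doc_ids:
        shards[route_to_shard(doc_id, num_primary_shards)].append(doc_id)
    return shards


layout = distribute([f"doc-{i}" for i in range(100)], 5)
```

Because the shard number depends on the number of primary shards, Elasticsearch fixes that number when an index is created; changing it later requires the split or shrink APIs or a reindex. Adding nodes, by contrast, only lets the cluster relocate existing shards onto the new machines.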

Community and Ecosystem

Both Solr and Elasticsearch boast active and vibrant open-source communities. Solr has been around longer and therefore has a long-established user base and ecosystem. Elasticsearch, however, has gained significant momentum over the years, supported by the Elastic Stack, which includes Kibana for data visualization and Beats for data shipping.

Predefined Schema vs. Dynamic Mapping

Both platforms store data as documents made up of fields. Solr has traditionally relied on a predefined schema; this provides better control over data, but it may become restrictive when dealing with dynamic or constantly evolving data structures (Solr also offers a schemaless mode that guesses field types). Elasticsearch is often described as schema-free because dynamic mapping adds new fields automatically, which makes it more flexible for projects with varying data structures, although explicit mappings are often advisable for production indices.

Conclusion

In summary, Apache Solr and Elasticsearch are both powerful search platforms, each excelling in specific scenarios. Solr's robustness and established ecosystem make it a reliable choice for traditional search applications, while Elasticsearch's real-time capabilities and seamless integration with the Elastic Stack are perfect for modern data-driven projects. Choosing between the two depends on your specific requirements, data complexity, and preferred development style. Regardless of your decision, both Solr and Elasticsearch can supercharge your search and analytics endeavors, bringing efficiency and relevance to your data retrieval processes.

Whether you opt for Solr, Elasticsearch, or a combination of both, the future of search and data exploration remains bright, with technology continually evolving to meet the needs of next-generation applications.


Why Developers Are Turning To SDKs For Alternative Income Streams

In today’s fast-changing tech landscape, many developers are searching for new ways to earn extra income. Building and selling apps isn’t the only option anymore. More and more developers are discovering that using Software Development Kits (SDKs) can open doors to smarter and easier ways to make money on the side.

One popular method is SDK monetization. This involves integrating third-party SDKs into your app that provide services like analytics, ads, or something unique like proxy sharing. Companies such as Infatica, for example, offer SDKs that let developers earn by sharing unused internet bandwidth in a secure and responsible manner. With this type of solution, developers can make money without adding clutter or ads to the user experience.

So why are SDKs becoming more popular among developers? Think about it—once your app is up and running, it takes time and effort to keep it profitable. By adding an SDK that brings in passive income, devs can keep the lights on while their main focus stays on building great features or launching new projects. It’s a way to make extra money with work you’ve already done.

For developers with apps that have a steady user base but aren’t making much money, SDKs can be a game changer. Just one small addition to the back end could start generating income without affecting how the app looks or works from the user’s side. It’s also relatively easy to get started compared to building and marketing new apps from scratch.

Another point to consider is flexibility. Developers can try different SDKs to see which ones fit best with their app and user base. Some focus on enhancing the user experience, while others generate revenue directly. Picking the right one depends on your skills, your goals, and what fits your app.

At the end of the day, SDKs offer a practical path for developers to earn passive income, especially as the app market gets more crowded and competitive. It’s a way to work smarter, not harder. Whether you’re an indie dev or part of a small team, looking into SDK options could be a smart step toward a more sustainable and flexible income stream.


My Wall – All in One Link in Bio Tool

What if you could boost your digital presence and multiply your content’s reach and influence without working too hard? A powerful tool that takes only a few clicks to operate lets you showcase your creativity to your audience. That is how simple things can be! Here we present My Wall, the all-in-one link in bio tool, a power driver for content creators and influencers that helps them centralize their online profiles and presence.

Once you use our tool, you no longer need multiple platforms, profiles, and links to build your online presence. Create a mini website that reflects your branding. Use the best influencer media kit builder to amplify your online impact. My Wall also works as an online store tool that can elevate your presence many times over.

Choosing the Best Link in Bio Tool

Unlike many traditional link builders, our tool is a complete content creator’s toolkit. Whether you need an online portfolio builder or something else, you get a single centralized platform that covers the different aspects of your content creation.

Showcase Your Work From One Place

My Wall helps you centralize your portfolio, be it photos, blogs, links, videos, etc. This is the perfect place to present your past brand collaborations, YouTube videos, and posts from your social media handles or blogs and articles in one place. The result – your connection with your audience and followers multiplies while potential collaborators are just a click away.

Fully Customizable Design

This link in bio tool helps you customize your online presence because we understand personal style matters! You can select from hundreds of built-in templates, color palettes, fonts, and layouts to make it all look like an extension of your personality. After all, your online presence must look like you. We offer choices of minimalist and elegant designs that give a polished look in the background while putting the entire focus on your content.

Built-In E-Commerce Functionality

Who says that a link in bio tool has to do just one thing, redirect followers, and that’s it? My Wall proves that one toolkit can build your influencer mini website and digital media kit, and create links that bring in more followers. Best of all, it doubles as an online store tool from which you can easily sell your merchandise and services. Add the ‘Buy Now’ button and start your journey to becoming an online seller.

Monetization Made Easy

Besides selling your products and services, you can turn to other ideas for monetizing your online presence with this link in bio tool. You can integrate affiliate links and sponsored content, and experiment with other income streams.

No need to juggle multiple platforms! With My Wall, turn your influence into a steady source of income effortlessly.

More Collaboration Opportunities

My Wall helps present your content in a clutter-free, clear, and professional manner. This can open the doors to new and lucrative brand collaborations and opportunities. Brands can easily assess your value as a content creator by flipping through your work, all thanks to this tool that keeps your content centralized in one place.

Features of Link In Bio Tool

A Mini Website

This is not a simple link in bio tool. We help craft a mini website showcasing your personality and branding. You can use the tool to upload your best content pieces, testimonials, links to previous work, achievements, and so on.

Seamless Navigation

Use the tool’s intuitive navigational functionalities to make your audience’s experiences memorable and simple. Your followers are directed to their desired content seamlessly without the hassle of navigating multiple links and profiles.

Enhanced Analytics

Use My Wall to present your content in the best way. Make it your go-to toolkit for success: the built-in analytics keep you updated on your audience and partnership stats. You will stay informed about trends and clicks, helping you plan your strategy for increased engagement.

Video Integration

Present your most captivating videos to the world within your profile with this amazing link in bio tool. Share behind-the-scenes stories, walk your audience through product demos, or take them along on your travel vlogs. Get creative with this tool to boost audience engagement.

Professional Support

Tech work not your thing? Wish you had someone to help? No worries, we are here. Our experienced team is available 24/7 to help you build your mini website quickly and cost-effectively.

Getting Started with My Wall

Sign up using My Wall’s easy registration process.

Work with a dedicated manager so that your mini website reflects your unique vision.

Choose templates, colors, and features that match your brand.

Go live in 48 hours.

Conclusion

It is time to say goodbye to scattered links and unorganized profiles. With My Wall as your link-in-bio tool, you can do a lot more than with the average tools on the market. Use the influencer media kit builder to drive better collaboration results, and the digital portfolio maker to make your content shine and keep your audience engaged.

How Accounting Services Improve Financial Transparency and Decision-Making

In any successful business, clear financial insight is essential. Accurate, timely, and organized financial information not only builds trust with stakeholders but also guides critical business decisions. This is where professional accounting services play a transformative role. By maintaining clarity in financial records and ensuring compliance with regulations, accounting services directly improve both transparency and decision-making processes.

Enhancing Financial Transparency

Financial transparency refers to the open and honest disclosure of a company’s financial activities. Professional accounting services ensure that all transactions, assets, liabilities, and income streams are recorded accurately and presented clearly in financial statements. This transparency builds confidence among investors, lenders, regulatory bodies, and even employees.

With accounting services in place, businesses can avoid discrepancies and maintain consistency in reporting. Accountants adhere to established financial standards, such as GAAP or IFRS, which promote uniformity and accountability. This reduces the risk of fraud or manipulation and allows stakeholders to rely on the financial data being presented.

Reliable Record-Keeping and Reporting

One of the core responsibilities of accounting services is maintaining detailed financial records. These records are not just for audits or tax filings—they provide a real-time picture of the company’s financial health. Regular bookkeeping, reconciliations and accurate reporting give business owners a solid foundation to evaluate performance, spot irregularities, and ensure that operations are running efficiently.

Proper documentation also facilitates smoother audits and financial reviews. When records are well-kept, external auditors or potential investors can quickly access and verify information, reducing delays and enhancing the credibility of the business.

Supporting Strategic Decision-Making

Accounting services go beyond just number-crunching. They offer actionable insights by analyzing trends, comparing budgets to actual results, and forecasting future outcomes. With this data-driven support, business owners and managers can make informed decisions regarding investments, cost management, resource allocation, and expansion strategies.

For instance, through regular financial reports, a business might identify a decline in profit margins and trace the cause to rising production costs. This insight can prompt changes in sourcing, pricing strategies, or operational workflows to restore profitability.

Improving Cash Flow Management

Accounting professionals also help businesses maintain healthy cash flow. By tracking receivables, payables, and operational expenses, accountants ensure that companies have enough liquidity to meet obligations and seize growth opportunities. They can also create financial models and projections to anticipate future cash needs and avoid shortfalls.
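To make the idea of a cash-flow projection concrete, here is a minimal Python sketch under simple assumptions: monthly receipts and payments have already been forecast, and the business wants to keep a minimum cash reserve. The names (`Month`, `project_cash`, `minimum_reserve`) and all figures are hypothetical and for illustration only; a real model would also account for payment timing, financing, and seasonality.

```python
from dataclasses import dataclass


@dataclass
class Month:
    label: str
    receipts: float  # expected collections from receivables
    payments: float  # expected payables plus operating expenses


def project_cash(opening_balance, months, minimum_reserve):
    """Roll the cash balance forward month by month and flag any month
    whose closing balance falls below the minimum reserve."""
    balance = opening_balance
    rows = []
    for m in months:
        balance += m.receipts - m.payments
        rows.append((m.label, balance, balance < minimum_reserve))
    return rows


if __name__ == "__main__":
    # Hypothetical three-month forecast
    forecast = [
        Month("Jan", 42_000, 38_000),
        Month("Feb", 35_000, 44_000),
        Month("Mar", 40_000, 47_000),
    ]
    for label, closing, short in project_cash(10_000, forecast, minimum_reserve=5_000):
        flag = "  <- below reserve" if short else ""
        print(f"{label}: {closing:,.0f}{flag}")
```

With these sample figures the balance closes at 14,000 in January and 5,000 in February, then falls to -2,000 in March: the kind of shortfall a projection is meant to surface early enough to arrange financing or renegotiate payment terms.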

This financial foresight helps decision-makers plan more effectively—whether it’s negotiating better payment terms, securing financing, or investing in new technologies.

Conclusion

Accounting services in Fort Worth, TX are fundamental to building financial transparency and enabling smart, confident decision-making. By ensuring accuracy, consistency, and compliance in financial reporting, they lay the groundwork for trust and accountability. Simultaneously, their analytical expertise empowers businesses to navigate challenges, seize opportunities, and drive sustainable growth. In a competitive and rapidly evolving marketplace, having strong accounting support is not just a back-office function—it’s a strategic advantage.

Mobile Apps That Move Business Forward: Crafting Solutions for the Digital Age

The Mobile Evolution Is Here

Over the last decade, mobile phones have transitioned from communication devices to powerful platforms that support daily life. Consumers are using mobile apps for shopping, entertainment, productivity, and much more. For businesses, this shift means one thing: going mobile is not a trend but a fundamental change in how customers interact with products and services.

Why Your Business Needs a Mobile Strategy

A responsive website is no longer enough. Users expect dedicated mobile applications that offer speed, convenience, and seamless functionality. Mobile apps give businesses a direct line to customers, enhancing visibility and enabling deeper engagement. Notifications, loyalty programs, in-app purchases, and instant support all help retain users and build long-term loyalty.

Built Around Your Brand, Not the Other Way Around

Unlike generic platforms, custom mobile apps are built with a specific purpose and audience in mind. The design, functionality, and user experience are tailored to reflect your brand’s identity and business needs. This not only makes the app more usable but also reinforces your brand message with every interaction, increasing credibility and customer trust.

Unlocking New Revenue Streams

Mobile apps are not just tools; they’re opportunities. With smart monetization strategies such as in-app purchases, subscriptions, or premium content, businesses can open new sources of income. Even service-based companies can streamline bookings, orders, and payments within the app, reducing friction and improving conversion rates.

Connecting Teams in Real Time

Mobile applications aren’t just for customers; they’re also transforming internal communication. Whether your team is remote, in the field, or spread across different regions, internal apps can connect departments, simplify task management, and enable real-time updates. These tools lead to improved productivity and better coordination, even across complex operations.

Harnessing the Power of Analytics

Every tap, swipe, and scroll within a mobile app tells a story. Custom mobile applications can be integrated with analytics tools that provide valuable insights into user behavior. This data helps businesses understand which features are working, what needs improvement, and how to keep evolving the app experience for better results.

Security You Can Trust

With mobile apps handling personal information, transactions, and sensitive business data, security must be a top priority. Custom-built apps can incorporate strong security layers like biometric authentication, encrypted data storage, and secure APIs. These measures not only protect your app but also increase user confidence in your platform.

Scalable Solutions That Grow With You

One of the biggest advantages of custom mobile applications is their ability to scale. Whether your user base grows, you expand your service offerings, or you need to adapt to new technology trends, your app can evolve without disruption. This scalability ensures that the app remains a strong pillar of your digital strategy as the business matures.

Final Thought

In a competitive digital environment, having a custom mobile app isn’t just a smart move; it’s a strategic investment. Businesses that embrace innovation through dedicated mobile experiences are better positioned to connect, grow, and lead in their industry. This is the promise and power of mobile application development in the USA, where mobile technology drives real business transformation.

Optimize Your Funnel: Better Marketing Qualified Leads Tactics

In today’s competitive B2B landscape, businesses need more than just a steady stream of leads—they need Marketing Qualified Leads (MQLs) that are genuinely ready for sales engagement. The quality of leads directly impacts conversion rates, sales velocity, and overall revenue performance. That’s why optimizing for Marketing Qualified Leads is a strategic priority for forward-looking demand generation teams in 2025.

Modern lead qualification is no longer a manual process or based on guesswork. It requires a precise blend of behavioral tracking, data analytics, and intent signals to identify which prospects are worth passing to sales. Below are strategic tips to help you sharpen your MQL framework and drive high-value opportunities through your pipeline.

1. Define Clear Qualification Criteria

One of the most common mistakes in B2B marketing is an unclear or inconsistent definition of Marketing Qualified Leads. In 2025, successful organizations are aligning with sales to build a shared understanding of what qualifies a lead as an MQL.

This includes key demographic factors (such as company size, job role, industry), behavioral indicators (like repeated website visits or content downloads), and engagement thresholds. When both marketing and sales agree on qualification standards, handoffs become smoother and conversion rates increase.

2. Use Intent Data to Prioritize High-Value Prospects

Not all leads express buying intent equally. Leveraging third-party intent data helps marketing teams identify which accounts are actively researching solutions similar to yours. These signals offer insight into what stage of the buying journey the lead is in and whether they should be flagged as Marketing Qualified Leads.

Tools that track keyword intent, competitive page visits, and content consumption can help prioritize outreach and avoid wasting time on low-intent prospects.

3. Implement Behavioral Scoring Models

Modern lead scoring systems go beyond static data points. In 2025, behavioral scoring plays a critical role in determining Marketing Qualified Leads. This includes how often a lead visits your website, which pages they view, whether they open emails or click CTAs, and how recently they interacted.

By assigning weights to different behaviors, you can better predict readiness and move only the most sales-ready leads to the next stage of engagement.
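A behavioral scoring model like the one described above can be sketched in a few lines of Python. This is a minimal illustration, not any particular marketing platform’s scoring method: the event names, their weights, the 14-day half-life used to favor recent activity, and the MQL threshold of 30 are all hypothetical values that marketing and sales would need to agree on and tune against their own conversion data.

```python
from datetime import datetime, timedelta

# Hypothetical weights per behavior; tune these with your sales team.
EVENT_WEIGHTS = {
    "page_view": 1,
    "pricing_page_view": 10,
    "email_open": 2,
    "cta_click": 5,
    "content_download": 8,
    "demo_request": 25,
}
HALF_LIFE_DAYS = 14  # assumed: an event's weight halves every 14 days
MQL_THRESHOLD = 30   # assumed score at which a lead is handed to sales


def behavioral_score(events, now):
    """Sum event weights, discounting older events exponentially so that
    recent activity counts more. `events` is a list of (type, timestamp)."""
    score = 0.0
    for event_type, timestamp in events:
        weight = EVENT_WEIGHTS.get(event_type, 0)  # unknown events score 0
        age_days = (now - timestamp).total_seconds() / 86_400
        score += weight * 0.5 ** (age_days / HALF_LIFE_DAYS)
    return score


def is_mql(events, now):
    """A lead qualifies once its decayed score reaches the threshold."""
    return behavioral_score(events, now) >= MQL_THRESHOLD


if __name__ == "__main__":
    now = datetime(2025, 3, 1)
    lead = [
        ("pricing_page_view", now),
        ("demo_request", now - timedelta(days=14)),
    ]
    print(behavioral_score(lead, now), is_mql(lead, now))
```

With these assumed weights, a lead that viewed the pricing page today (10 points) and requested a demo two weeks ago (25 points halved to 12.5) scores 22.5 and stays in nurturing, while a pricing visit plus a fresh demo request (35 points) crosses the threshold. The decay term is one simple way to capture "how recently they interacted"; a weighted sum without decay would treat a months-old download the same as yesterday’s.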

4. Refine Lead Nurturing Workflows

Just because a prospect isn't ready to buy immediately doesn't mean they won't be later. Optimized nurturing campaigns ensure that potential Marketing Qualified Leads stay engaged with valuable, relevant content until they show signs of readiness.

Segmented email campaigns, retargeting ads, and dynamic content personalization all contribute to gradually moving prospects closer to the MQL threshold. In 2025, automation and AI allow these workflows to adjust in real time based on user behavior.

5. Align Sales and Marketing Around the MQL Handoff

Lead qualification doesn't end when a lead is labeled as an MQL. In fact, the transition from marketing to sales is a critical moment that can either accelerate or stall the buying journey. For 2025, successful organizations have built detailed MQL handoff protocols to ensure sales receives all relevant context about the lead's journey.

That includes campaign origin, downloaded assets, previous interactions, and identified pain points. This alignment helps sales teams engage more effectively and close deals faster.

6. Enrich Leads with Real-Time Data

In 2025, real-time data enrichment is essential for accurate Marketing Qualified Leads. Static data like email addresses and company names isn’t enough. You need to know current job roles, firmographics, technology stacks, funding rounds, and recent buying signals.

Enrichment tools pull this data from various sources to ensure each MQL has a complete profile, enabling personalized outreach and deeper insights for sales teams.

7. Leverage Predictive Analytics for Qualification

Predictive analytics uses historical data and machine learning to forecast which leads are most likely to convert into customers. This allows you to automatically identify Marketing Qualified Leads based on patterns and probabilities rather than guesswork.

By modeling your highest-performing customers and applying that data to new leads, you can enhance your qualification accuracy and improve pipeline efficiency.

8. Segment Leads by Funnel Stage

Not all Marketing Qualified Leads are equal—some are closer to buying than others. That's why segmenting MQLs by their stage in the funnel is a top strategy in 2025. Leads showing pricing page visits or demo requests may be bottom-of-funnel, while those reading educational blogs may be mid-funnel.