#support for kubernetes

Text

Understanding Compatibility Issues with New Technologies

In today’s fast-paced digital landscape, new technologies are being introduced at an unprecedented rate. From cutting-edge software solutions to advanced hardware devices, the adoption of innovative tools is essential for staying competitive. However, one significant challenge that organizations and individuals face when embracing these advancements is compatibility issues. Understanding these challenges and finding effective solutions is critical to ensuring seamless integration and optimal performance.

What Are Compatibility Issues?

Compatibility issues occur when new technologies fail to work effectively with existing systems, software, or hardware. This can manifest in various forms, such as:

Software Incompatibility: Applications that fail to run on updated operating systems or new hardware.

Hardware Mismatches: Devices that cannot communicate with older components due to differing standards or protocols.

Cross-Platform Challenges: Tools that struggle to perform across different environments like Windows, macOS, or Linux.

Integration Failures: New systems that cannot seamlessly integrate with legacy systems or third-party solutions.

These issues can lead to reduced productivity, security vulnerabilities, and costly downtime if not addressed promptly.

Causes of Compatibility Issues

Rapid Technology Evolution: Technology evolves rapidly, often leaving older systems obsolete. For instance, new software updates may not support legacy systems, leading to functional gaps.

Lack of Standardization: In industries where no universal standards exist, different manufacturers may develop solutions that cannot communicate effectively.

Inadequate Testing: Companies releasing new technologies sometimes prioritize speed to market over thorough testing, resulting in compatibility oversights.

Vendor Lock-In: Proprietary systems from specific vendors may intentionally limit compatibility with competitors’ products to retain customers.

The Impact of Compatibility Issues

The repercussions of compatibility problems extend beyond technical inconveniences. They can result in:

Operational Delays: Processes may grind to a halt when systems fail to interact smoothly.

Increased Costs: Retrofitting systems or investing in additional tools to bridge gaps can strain budgets.

Security Risks: Compatibility issues often leave loopholes that hackers can exploit.

User Frustration: End-users may face steep learning curves or limited functionality.

How to Address Compatibility Challenges

Thorough Planning and Assessment: Before implementing new technologies, conduct an in-depth assessment of your existing infrastructure to identify potential compatibility risks.

Opt for Scalable Solutions: Choose technologies that are future-proof and scalable, ensuring they can adapt to evolving needs and standards.

Prioritize Standard-Compliant Tools: Solutions adhering to widely accepted standards reduce the risk of incompatibility.

Partner with Experts: Consulting with technology specialists can help identify and resolve compatibility challenges efficiently.

Seek Professional Assistance

Navigating the complexities of compatibility issues can be overwhelming. If your business is struggling to integrate new technologies or facing operational disruptions due to compatibility problems, professional guidance can make all the difference.

At Magnintel, we specialize in helping businesses overcome technical challenges with innovative solutions. Our team of experts ensures seamless integration and optimal performance, saving you time, money, and headaches.

Contact Magnintel today for a Cloud Security Services consultation and take the first step towards a technology-driven future without compatibility roadblocks!

0 notes

Text

VMware vSphere 8.0 Update 2 New Features and Download

VMware consistently showcases its commitment to innovation when it comes to staying at the forefront of technology. In a recent technical overview, we were guided by Fai La Molari, Senior Technical Marketing Architect at VMware, on the latest advancements and enhancements in vSphere Plus for cloud-connected services and vSphere 8 update 2. Here’s a glimpse into VMware vSphere 8.0 Update 2 new…

#NVMe disk support in vSphere#Quality of Service for GPU workloads#Supervisor Cluster deployments#Tanzu Kubernetes with vSphere#vGPU defragmentation in DRS#VM management enhancements#vSphere 8 update 2 features#vSphere and containerized workload support#vSphere DevOps integrations#vSphere VM hardware version 21

0 notes

Text

Ad | Some Humble Bundle Delights

Only 16 hours left of Metroidvania Mania! This has some excellent Metroidvania games like Ghost Song and Axiom Verge 1&2! Money raised goes to the Global FoodBanking Network.

Brutal Beat 'Em Ups has something for those who enjoy classic fighting games, like Battletoads! Money raised goes to Active Minds and Safe in Our World. (Disclosure: I'm an Ambassador for Safe in Our World.)

The Let 'Em Cook bundle has a banger of a lineup for cooking game fans: Cooking Simulator, Cafe Owner Simulator, PlateUp! There's tons there. Money raised goes towards World Central Kitchen and No Kid Hungry.

Jumping briefly into career progression: the Dive into DevOps bundle has books on Python, Golang, Kubernetes and a whole bunch more. Great for people looking to expand their digital skillset, and it raises money for the Python Software Foundation.

Last but not least, Fully Loaded: Nightdive FPS Remasters has a great lineup of classic FPS games: Turok, Rise of the Triad, Doom 64 and Blood. If you've ever watched a Civvie video then you'll recognise a few from this list. Raising money for Active Minds.

91 notes


Text

it's really funny how much of this has basically ended up becoming true in one way or another. WASM, eBPF, both technologies with a ton of applications that rely on just putting shit in lightweight VMs, one of which is directly embedded in the kernel. Kubernetes supports WASM workloads now!

22 notes


Text

Ubuntu is a popular open-source operating system based on the Linux kernel. It's known for its user-friendliness, stability, and security, making it a great choice for both beginners and experienced users. Ubuntu can be used for a variety of purposes, including:

Key Features and Uses of Ubuntu:

Desktop Environment: Ubuntu offers a modern, intuitive desktop environment that is easy to navigate. It comes with a set of pre-installed applications for everyday tasks like web browsing, email, and office productivity.

Development: Ubuntu is widely used by developers due to its robust development tools, package management system, and support for programming languages like Python, Java, and C++.

Servers: Ubuntu Server is a popular choice for hosting websites, databases, and other server applications. It's known for its performance, security, and ease of use.

Cloud Computing: Ubuntu is a preferred operating system for cloud environments, supporting platforms like OpenStack and Kubernetes for managing cloud infrastructure.

Education: Ubuntu is used in educational institutions for teaching computer science and IT courses. It's free and has a vast repository of educational software.

Customization: Users can customize their Ubuntu installation to fit their specific needs, with a variety of desktop environments, themes, and software available.

Installing Ubuntu on Windows:

The image you shared shows that you are installing Ubuntu using the Windows Subsystem for Linux (WSL). WSL lets you run Ubuntu directly on your Windows machine, without dual-booting or setting up a separate virtual machine yourself, giving you the best of both worlds.

Benefits of Ubuntu:

Free and Open-Source: Ubuntu is free to use and open-source, meaning anyone can contribute to its development.

Regular Updates: Ubuntu receives regular updates to ensure security and performance.

Large Community: Ubuntu has a large, active community that provides support and contributes to its development.

4 notes


Text

DevOps AWS Training | IntelliQIT | Best DevOps AWS Training in Hyderabad

Ameerpet in Hyderabad is a hub for IT training, with many institutes offering DevOps courses. DevOps helps automate and improve software development and IT operations. Here are some things to look for when choosing the best DevOps training institute in Hyderabad:

Comprehensive Course Content

Covers essential DevOps tools like Jenkins, Docker, Git, and Kubernetes.

Includes both basic and advanced topics.

Hands-on Training

Focus on real-time projects to get practical experience.

Live demos and interactive sessions.

Flexible Learning Options

Offers both online and classroom classes to suit your schedule.

Some institutes provide free demo classes.

Placement Support

Helps with job placements through resume building and interview prep.

Offers guidance for certifications if needed.

Experienced Trainers

Trainers with real-world DevOps experience.

Good student-to-trainer ratio for personalized attention.

When choosing an institute, make sure it offers practical training, expert guidance, and good placement opportunities to kickstart your career in DevOps.

#devops training institutes in ameerpet#best devops training institute in hyderabad#devops training in ameerpet

2 notes


Text

How Python Powers Scalable and Cost-Effective Cloud Solutions

Explore the role of Python in developing scalable and cost-effective cloud solutions. This guide covers Python's advantages in cloud computing, addresses potential challenges, and highlights real-world applications, providing insights into leveraging Python for efficient cloud development.

Introduction

In today's rapidly evolving digital landscape, businesses are increasingly leveraging cloud computing to enhance scalability, optimize costs, and drive innovation. Among the myriad of programming languages available, Python has emerged as a preferred choice for developing robust cloud solutions. Its simplicity, versatility, and extensive library support make it an ideal candidate for cloud-based applications.

In this comprehensive guide, we will delve into how Python empowers scalable and cost-effective cloud solutions, explore its advantages, address potential challenges, and highlight real-world applications.

Why Is Python the Preferred Choice for Cloud Computing?

Python's popularity in cloud computing is driven by several factors, making it the preferred language for developing and managing cloud solutions. Here are some key reasons why Python stands out:

Simplicity and Readability: Python's clean and straightforward syntax allows developers to write and maintain code efficiently, reducing development time and costs.

Extensive Library Support: Python offers a rich set of libraries and frameworks like Django, Flask, and FastAPI for building cloud applications.

Seamless Integration with Cloud Services: Python is well-supported across major cloud platforms like AWS, Azure, and Google Cloud.

Automation and DevOps Friendly: Python supports infrastructure automation through tools like Ansible (which is written in Python) and Boto3, and Python scripts are often used alongside Terraform.

Strong Community and Enterprise Adoption: Python has a massive global community that continuously improves and innovates cloud-related solutions.

How Does Python Enable Scalable Cloud Solutions?

Scalability is a critical factor in cloud computing, and Python provides multiple ways to achieve it:

1. Automation of Cloud Infrastructure

Python's compatibility with cloud service provider SDKs, such as AWS Boto3, Azure SDK for Python, and Google Cloud Client Library, enables developers to automate the provisioning and management of cloud resources efficiently.
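As a rough illustration of what SDK-driven provisioning can look like, the sketch below builds the arguments for Boto3's EC2 `run_instances` call and keeps the network call in a separate function. The AMI ID, tags, and region are placeholders chosen for this example, and `launch_instances` only works with Boto3 installed and AWS credentials configured.

```python
def build_instance_request(ami_id, instance_type, count, tags):
    """Build keyword arguments for the EC2 RunInstances call."""
    return {
        "ImageId": ami_id,
        "InstanceType": instance_type,
        "MinCount": count,
        "MaxCount": count,
        "TagSpecifications": [
            {
                "ResourceType": "instance",
                "Tags": [{"Key": k, "Value": v} for k, v in sorted(tags.items())],
            }
        ],
    }


def launch_instances(request, region="us-east-1"):
    """Send the request to AWS and return the new instance IDs."""
    import boto3  # imported lazily so the builder above works without boto3

    ec2 = boto3.client("ec2", region_name=region)
    response = ec2.run_instances(**request)
    return [instance["InstanceId"] for instance in response["Instances"]]


request = build_instance_request(
    ami_id="ami-0123456789abcdef0",  # placeholder AMI ID, not a real image
    instance_type="t3.micro",
    count=2,
    tags={"project": "demo", "env": "test"},
)
print(request)
```

Keeping the request builder free of network calls makes it easy to review or unit-test what would be provisioned before anything is actually launched.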

2. Containerization and Orchestration

Python integrates seamlessly with Docker and Kubernetes, enabling businesses to deploy scalable containerized applications efficiently.
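One simple way Python fits into a Kubernetes workflow is by generating manifests programmatically. The sketch below builds an `apps/v1` Deployment as a plain dictionary and prints it as JSON; the service name, image, and port are hypothetical values picked for illustration.

```python
import json


def deployment_manifest(name, image, replicas=3, port=8000):
    """Return a Kubernetes apps/v1 Deployment as a plain dict."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


# Hypothetical service name and image, used only for this example.
manifest = deployment_manifest("orders-api", "registry.example.com/orders-api:1.0")
manifest_json = json.dumps(manifest, indent=2)
print(manifest_json)
```

Because `kubectl apply -f` accepts JSON as well as YAML, the printed output can be saved to a file and applied to a cluster without any extra Python dependencies.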

3. Cloud-Native Development

Frameworks like Flask, Django, and FastAPI support microservices architecture, allowing businesses to develop lightweight, scalable cloud applications.

4. Serverless Computing

Python's support for serverless platforms, including AWS Lambda, Azure Functions, and Google Cloud Functions, allows developers to build applications that automatically scale in response to demand, optimizing resource utilization and cost.
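To make the serverless model concrete, here is a minimal function written in the AWS Lambda handler style, where the platform calls `handler(event, context)` once per event. The event shape and the HTTP-style response are assumptions for this sketch (the response follows the format commonly used with API Gateway proxy integrations); other platforms use different signatures.

```python
import json


def handler(event, context):
    """Entry point in the AWS Lambda style: invoked once per incoming event."""
    name = (event or {}).get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local smoke test; in production the platform supplies event and context.
result = handler({"name": "cloud"}, None)
print(result["body"])
```

The function holds no state between calls, which is what lets the platform run as many copies in parallel as demand requires and none at all when traffic stops.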

5. AI and Big Data Scalability

Python’s dominance in AI and data science makes it an ideal choice for cloud-based AI/ML services like AWS SageMaker, Google AI, and Azure Machine Learning.

Looking for expert Python developers to build scalable cloud solutions? Hire Python Developers now!

Advantages of Using Python for Cloud Computing

Cost Efficiency: Python’s compatibility with serverless computing and auto-scaling strategies minimizes cloud costs.

Faster Development: Python’s simplicity accelerates cloud application development, reducing time-to-market.

Cross-Platform Compatibility: Python runs seamlessly across different cloud platforms.

Security and Reliability: Python-based security tools help in encryption, authentication, and cloud monitoring.

Strong Community Support: Python developers worldwide contribute to continuous improvements, making it future-proof.

Challenges and Considerations

While Python offers many benefits, there are some challenges to consider:

Performance Limitations: Python code is usually run by an interpreter, so CPU-bound workloads can be slower than in compiled languages like Java or C++.

Memory Consumption: Python applications might require optimization to handle large-scale cloud workloads efficiently.

Learning Curve for Beginners: Though Python is simple, mastering cloud-specific frameworks requires time and expertise.

Python Libraries and Tools for Cloud Computing

Python’s ecosystem includes powerful libraries and tools tailored for cloud computing, such as:

Boto3: AWS SDK for Python, used for cloud automation.

Google Cloud Client Library: Helps interact with Google Cloud services.

Azure SDK for Python: Enables seamless integration with Microsoft Azure.

Apache Libcloud: Provides a unified interface for multiple cloud providers.

PyCaret: Simplifies machine learning deployment in cloud environments.

Real-World Applications of Python in Cloud Computing

1. Netflix - Scalable Streaming with Python

Netflix extensively uses Python for automation, data analysis, and managing cloud infrastructure, enabling seamless content delivery to millions of users.

2. Spotify - Cloud-Based Music Streaming

Spotify leverages Python for big data processing, recommendation algorithms, and cloud automation, ensuring high availability and scalability.

3. Reddit - Handling Massive Traffic

Reddit uses Python and AWS cloud solutions to manage heavy traffic while optimizing server costs efficiently.

Future of Python in Cloud Computing

The future of Python in cloud computing looks promising with emerging trends such as:

AI-Driven Cloud Automation: Python-powered AI and machine learning will drive intelligent cloud automation.

Edge Computing: Python will play a crucial role in processing data at the edge for IoT and real-time applications.

Hybrid and Multi-Cloud Strategies: Python’s flexibility will enable seamless integration across multiple cloud platforms.

Increased Adoption of Serverless Computing: More enterprises will adopt Python for cost-effective serverless applications.

Conclusion

Python's simplicity, versatility, and robust ecosystem make it a powerful tool for developing scalable and cost-effective cloud solutions. By leveraging Python's capabilities, businesses can enhance their cloud applications' performance, flexibility, and efficiency.

Ready to harness the power of Python for your cloud solutions? Explore our Python Development Services to discover how we can assist you in building scalable and efficient cloud applications.

FAQs

1. Why is Python used in cloud computing?

Python is widely used in cloud computing due to its simplicity, extensive libraries, and seamless integration with cloud platforms like AWS, Google Cloud, and Azure.

2. Is Python good for serverless computing?

Yes! Python works efficiently in serverless environments like AWS Lambda, Azure Functions, and Google Cloud Functions, making it an ideal choice for cost-effective, auto-scaling applications.

3. Which companies use Python for cloud solutions?

Major companies like Netflix, Spotify, Dropbox, and Reddit use Python for cloud automation, AI, and scalable infrastructure management.

4. How does Python help with cloud security?

Python offers robust security libraries like PyCryptodome and pyOpenSSL (a Python wrapper around OpenSSL), enabling encryption, authentication, and cloud monitoring for secure cloud applications.

5. Can Python handle big data in the cloud?

Yes! Python supports big data processing with tools like Apache Spark (through PySpark), Pandas, and NumPy, making it suitable for data-driven cloud applications.
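As a small illustration of the authentication point in question 4, the sketch below signs and verifies a message with an HMAC. It uses only Python's standard `hmac` and `hashlib` modules rather than the third-party libraries named above, and the shared key and message are made-up values for the example.

```python
import hashlib
import hmac


def sign(message: bytes, key: bytes) -> str:
    """Return a hex HMAC-SHA256 signature for the message."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(message: bytes, key: bytes, signature: str) -> bool:
    """Check a signature using a constant-time comparison."""
    return hmac.compare_digest(sign(message, key), signature)


key = b"demo-shared-secret"  # hypothetical key; load real keys from a secret store
message = b'{"action": "scale", "replicas": 3}'
signature = sign(message, key)

print(verify(message, key, signature))
print(verify(b'{"action": "scale", "replicas": 30}', key, signature))
```

A receiver holding the same key can use `verify` to reject requests whose body was altered in transit, which is the basic idea behind many signed webhook and API request schemes.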

#Python development company#Python in Cloud Computing#Hire Python Developers#Python for Multi-Cloud Environments

2 notes


Text

Exploring the Azure Technology Stack: A Solution Architect’s Journey

Kavin

As a solution architect, my career revolves around solving complex problems and designing systems that are scalable, secure, and efficient. The rise of cloud computing has transformed the way we think about technology, and Microsoft Azure has been at the forefront of this evolution. With its diverse and powerful technology stack, Azure offers endless possibilities for businesses and developers alike. My journey with Azure began with Microsoft Azure training online, which not only deepened my understanding of cloud concepts but also helped me unlock the potential of Azure’s ecosystem.

In this blog, I will share my experience working with a specific Azure technology stack that has proven to be transformative in various projects. This stack primarily focuses on serverless computing, container orchestration, DevOps integration, and globally distributed data management. Let’s dive into how these components come together to create robust solutions for modern business challenges.

Understanding the Azure Ecosystem

Azure’s ecosystem is vast, encompassing services that cater to infrastructure, application development, analytics, machine learning, and more. For this blog, I will focus on a specific stack that includes:

Azure Functions for serverless computing.

Azure Kubernetes Service (AKS) for container orchestration.

Azure DevOps for streamlined development and deployment.

Azure Cosmos DB for globally distributed, scalable data storage.

Each of these services has unique strengths, and when used together, they form a powerful foundation for building modern, cloud-native applications.

1. Azure Functions: Embracing Serverless Architecture

Serverless computing has redefined how we build and deploy applications. With Azure Functions, developers can focus on writing code without worrying about managing infrastructure. Azure Functions supports multiple programming languages and offers seamless integration with other Azure services.

Real-World Application

In one of my projects, we needed to process real-time data from IoT devices deployed across multiple locations. Azure Functions was the perfect choice for this task. By integrating Azure Functions with Azure Event Hubs, we were able to create an event-driven architecture that processed millions of events daily. The serverless nature of Azure Functions allowed us to scale dynamically based on workload, ensuring cost-efficiency and high performance.

Key Benefits:

Auto-scaling: Automatically adjusts to handle workload variations.

Cost-effective: Pay only for the resources consumed during function execution.

Integration-ready: Easily connects with services like Logic Apps, Event Grid, and API Management.

2. Azure Kubernetes Service (AKS): The Power of Containers

Containers have become the backbone of modern application development, and Azure Kubernetes Service (AKS) simplifies container orchestration. AKS provides a managed Kubernetes environment, making it easier to deploy, manage, and scale containerized applications.

Real-World Application

In a project for a healthcare client, we built a microservices architecture using AKS. Each service—such as patient records, appointment scheduling, and billing—was containerized and deployed on AKS. This approach provided several advantages:

Isolation: Each service operated independently, improving fault tolerance.

Scalability: AKS scaled specific services based on demand, optimizing resource usage.

Observability: Using Azure Monitor, we gained deep insights into application performance and quickly resolved issues.
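The post does not say which scaling mechanism was used, but a common one on Kubernetes is the Horizontal Pod Autoscaler, whose documented core rule is `desired = ceil(current_replicas * current_metric / target_metric)`. The sketch below implements just that rule with min/max bounds; the real controller also applies a tolerance band and stabilization windows, which are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Core Horizontal Pod Autoscaler rule, clamped to the allowed range."""
    raw = math.ceil(current_replicas * current_metric / target_metric)
    return max(min_replicas, min(max_replicas, raw))


# Average CPU at 90% against a 60% target: scale 4 pods up to 6.
print(desired_replicas(4, 90, 60))
# Average CPU at 30% against a 60% target: scale 4 pods down to 2.
print(desired_replicas(4, 30, 60))
# A large spike is capped at max_replicas.
print(desired_replicas(4, 200, 50))
```

Because each microservice has its own Deployment, a rule like this can be applied per service, which is what allows one busy service to grow without scaling the others.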

The integration of AKS with Azure DevOps further streamlined our CI/CD pipelines, enabling rapid deployment and updates without downtime.

Key Benefits:

Managed Kubernetes: Reduces operational overhead with automated updates and patching.

Multi-region support: Enables global application deployments.

Built-in security: Integrates with Azure Active Directory (now Microsoft Entra ID) and offers role-based access control (RBAC).

3. Azure DevOps: Streamlining Development Workflows

Azure DevOps is an all-in-one platform for managing development workflows, from planning to deployment. It includes tools like Azure Repos, Azure Pipelines, and Azure Artifacts, which support collaboration and automation.

Real-World Application

For an e-commerce client, we used Azure DevOps to establish an efficient CI/CD pipeline. The project involved multiple teams working on front-end, back-end, and database components. Azure DevOps provided:

Version control: Using Azure Repos for centralized code management.

Automated pipelines: Azure Pipelines for building, testing, and deploying code.

Artifact management: Storing dependencies in Azure Artifacts for seamless integration.

The result? Deployment cycles that previously took weeks were reduced to just a few hours, enabling faster time-to-market and improved customer satisfaction.

Key Benefits:

End-to-end integration: Unifies tools for seamless development and deployment.

Scalability: Supports projects of all sizes, from startups to enterprises.

Collaboration: Facilitates team communication with built-in dashboards and tracking.

4. Azure Cosmos DB: Global Data at Scale

Azure Cosmos DB is a globally distributed, multi-model database service designed for mission-critical applications. It guarantees low latency, high availability, and scalability, making it ideal for applications requiring real-time data access across multiple regions.

Real-World Application

In a project for a financial services company, we used Azure Cosmos DB to manage transaction data across multiple continents. The database’s multi-region replication ensured data consistency and availability, even during regional outages. Additionally, Cosmos DB’s support for multiple APIs (SQL, MongoDB, Cassandra, etc.) allowed us to integrate seamlessly with existing systems.

Key Benefits:

Global distribution: Data is replicated across regions with minimal latency.

Flexibility: Supports various data models, including key-value, document, and graph.

SLAs: Offers industry-leading SLAs for availability, throughput, and latency.

Building a Cohesive Solution

Combining these Azure services creates a technology stack that is flexible, scalable, and efficient. Here’s how they work together in a hypothetical solution:

Data Ingestion: IoT devices send data to Azure Event Hubs.

Processing: Azure Functions processes the data in real-time.

Storage: Processed data is stored in Azure Cosmos DB for global access.

Application Logic: Containerized microservices run on AKS, providing APIs for accessing and manipulating data.

Deployment: Azure DevOps manages the CI/CD pipeline, ensuring seamless updates to the application.
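The data flow in the steps above can be sketched in plain Python. This is only an in-memory stand-in: a `deque` plays the role of Event Hubs, a dictionary plays the role of Cosmos DB, and ordinary functions stand in for the Azure Function and the AKS-hosted API. All names and the temperature payload are invented for the example, and no Azure SDK calls are shown.

```python
from collections import deque
from statistics import mean

event_hub = deque()  # stands in for Azure Event Hubs
store = {}           # stands in for Azure Cosmos DB, keyed by device ID


def ingest(device_id, temperature_c):
    """Data ingestion: a device pushes a reading onto the event stream."""
    event_hub.append({"device_id": device_id, "temperature_c": temperature_c})


def process_pending():
    """Processing: stands in for a function triggered by new events."""
    processed = 0
    while event_hub:
        event = event_hub.popleft()
        store.setdefault(event["device_id"], []).append(event["temperature_c"])
        processed += 1
    return processed


def get_device_summary(device_id):
    """Application logic: stands in for an API served by a microservice."""
    readings = store.get(device_id, [])
    if not readings:
        return None
    return {
        "device_id": device_id,
        "count": len(readings),
        "avg_temperature_c": mean(readings),
    }


ingest("sensor-1", 21.0)
ingest("sensor-1", 23.0)
ingest("sensor-2", 19.5)
processed = process_pending()
summary = get_device_summary("sensor-1")
print(processed, summary)
```

The point of the separation is that each stage only talks to the queue or the store, so any one of them can be scaled or replaced independently of the others.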

This architecture demonstrates how Azure’s technology stack can address modern business challenges while maintaining high performance and reliability.

Final Thoughts

My journey with Azure has been both rewarding and transformative. The training I received at ACTE Institute provided me with a strong foundation to explore Azure’s capabilities and apply them effectively in real-world scenarios. For those new to cloud computing, I recommend starting with a solid training program that offers hands-on experience and practical insights.

As the demand for cloud professionals continues to grow, specializing in Azure’s technology stack can open doors to exciting opportunities. If you’re based in Hyderabad or prefer online learning, consider enrolling in Microsoft Azure training in Hyderabad to kickstart your journey.

Azure’s ecosystem is continuously evolving, offering new tools and features to address emerging challenges. By staying committed to learning and experimenting, we can harness the full potential of this powerful platform and drive innovation in every project we undertake.

#cybersecurity#database#marketingstrategy#digitalmarketing#adtech#artificialintelligence#machinelearning#ai

2 notes


Text

A3 Ultra VMs With NVIDIA H200 GPUs Pre-launch This Month

Powerful infrastructure innovations for an AI-first future

This year, Google Cloud made improvements throughout the AI Hypercomputer stack to increase performance, usability, and cost-effectiveness for customers. At the App Dev & Infrastructure Summit, Google Cloud announced:

Trillium, Google’s sixth-generation TPU, is now available in preview.

A3 Ultra VMs with NVIDIA H200 Tensor Core GPUs will be available in preview next month.

Hypercompute Cluster, Google’s new, highly scalable clustering system, will be available starting with A3 Ultra VMs.

C4A virtual machines (VMs), based on Axion, Google’s custom Arm processors, are now generally available.

AI workload-focused enhancements to Titanium, Google Cloud’s host offload capability, and Jupiter, its data center network.

Hyperdisk ML, Google Cloud’s AI/ML-focused block storage service, is generally available.

Trillium: A new era of TPU performance

TPUs power Google’s most advanced models like Gemini, popular Google services like Search, Photos, and Maps, and scientific breakthroughs like AlphaFold 2, which recently led to a Nobel Prize. Google Cloud customers can now preview Trillium, Google’s sixth-generation TPU.

Expanding horizons with NVIDIA Accelerated Computing

Google Cloud also continues to invest in its partnership with NVIDIA, combining the best of its data center, infrastructure, and software expertise with the NVIDIA AI platform, as exemplified by A3 and A3 Mega VMs powered by NVIDIA H100 Tensor Core GPUs.

Google Cloud announced that the new A3 Ultra VMs featuring NVIDIA H200 Tensor Core GPUs will be available on Google Cloud starting next month.

A3 Ultra VMs offer a significant performance improvement over previous generations. They run on servers equipped with the new Titanium ML network adapter, which builds on NVIDIA ConnectX-7 network interface cards (NICs) and is optimized to deliver a secure, high-performance cloud experience for AI workloads. Combined with Google’s datacenter-wide 4-way rail-aligned network, A3 Ultra VMs deliver non-blocking 3.2 Tbps of GPU-to-GPU traffic using RDMA over Converged Ethernet (RoCE).

Compared with A3 Mega, A3 Ultra offers:

Double the GPU-to-GPU networking bandwidth, supported by Google Cloud’s Titanium ML network adapter and Google’s Jupiter data center network.

Up to 2 times higher LLM inferencing performance, with almost twice the memory capacity and 1.4 times the memory bandwidth.

The ability to scale to tens of thousands of GPUs in a dense cluster, with performance optimized for heavy HPC and AI workloads.
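To put the 3.2 Tbps figure and the doubled bandwidth quoted above into more familiar units, here is a short back-of-the-envelope calculation. The A3 Mega value is inferred from the "double" claim rather than stated in the text, the 100 GB payload is an arbitrary example, and the transfer time is a theoretical peak that ignores protocol overhead.

```python
A3_ULTRA_TBPS = 3.2  # GPU-to-GPU bandwidth quoted in the text
BITS_PER_BYTE = 8

# Terabits per second -> gigabytes per second (decimal units).
a3_ultra_gb_per_s = A3_ULTRA_TBPS * 1000 / BITS_PER_BYTE

# Inferred, not quoted: A3 Ultra is described as double A3 Mega.
a3_mega_tbps = A3_ULTRA_TBPS / 2


def transfer_seconds(payload_gb, gb_per_s):
    """Ideal time to move a payload at the given rate, ignoring overhead."""
    return payload_gb / gb_per_s


example_seconds = transfer_seconds(100, a3_ultra_gb_per_s)

print(f"A3 Ultra: {a3_ultra_gb_per_s:.0f} GB/s")
print(f"A3 Mega (inferred): {a3_mega_tbps:.1f} Tbps")
print(f"100 GB at peak rate: {example_seconds:.2f} s")
```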

Google Kubernetes Engine (GKE), which offers an open, portable, extensible, and highly scalable platform for large-scale training and AI workloads, will also offer A3 Ultra VMs.

Hypercompute Cluster: Simplify and expand clusters of AI accelerators

It’s not just about individual accelerators or virtual machines, though; when dealing with AI and HPC workloads, you have to deploy, maintain, and optimize a huge number of AI accelerators along with the networking and storage that go along with them. This may be difficult and time-consuming. For this reason, Google Cloud is introducing Hypercompute Cluster, which simplifies the provisioning of workloads and infrastructure as well as the continuous operations of AI supercomputers with tens of thousands of accelerators.

At its core, Hypercompute Cluster integrates Google Cloud’s most advanced AI infrastructure technologies, enabling you to deploy and manage a large number of accelerators as a single, seamless unit. With features like targeted workload placement, dense resource co-location with ultra-low latency networking, and advanced maintenance controls that minimize workload disruptions, Hypercompute Cluster delivers the performance and resilience to run your most demanding AI and HPC workloads with confidence.

For dependable and repeatable deployments, you can set up a Hypercompute Cluster with a single API call using pre-configured and validated templates. These include containerized software with orchestration (e.g., GKE, Slurm), frameworks and reference implementations (e.g., JAX, PyTorch, MaxText), and popular open models such as Gemma2 and Llama3. Each pre-configured template is available as part of the AI Hypercomputer architecture and has been validated for efficiency and performance, so you can concentrate on business innovation.

Hypercompute Cluster will first be available with A3 Ultra VMs next month.

An early look at the NVIDIA GB200 NVL72

Google Cloud is also looking forward to the advances enabled by NVIDIA GB200 NVL72 GPUs and will share more about this development soon. In the meantime, here is a preview of the racks Google is building to bring the performance benefits of the NVIDIA Blackwell platform to Google Cloud’s advanced, sustainable data centers early next year.

Redefining CPU efficiency and performance with Google Axion Processors

Although TPUs and GPUs excel at specialized tasks, CPUs are a cost-effective solution for a wide range of general-purpose workloads and are often used alongside AI workloads to build complex applications. At Google Cloud Next ’24, Google announced Google Axion Processors, its first custom Arm-based CPUs designed for the data center. Google Cloud customers can now use C4A virtual machines, the first Axion-based VM series, which offer up to 10% better price-performance compared to the newest Arm-based instances offered by other top cloud providers.

Additionally, compared to comparable current-generation x86-based instances, C4A offers up to 60% more energy efficiency and up to 65% better price performance for general-purpose workloads such as media processing, AI inferencing applications, web and app servers, containerized microservices, open-source databases, in-memory caches, and data analytics engines.

Titanium and Jupiter Network: Making AI possible at the speed of light

Titanium, the offload technology system that supports Google’s infrastructure, has been improved to accommodate workloads related to artificial intelligence. Titanium provides greater compute and memory resources for your applications by lowering the host’s processing overhead through a combination of on-host and off-host offloads. Furthermore, although Titanium’s fundamental features can be applied to AI infrastructure, the accelerator-to-accelerator performance needs of AI workloads are distinct.

Google has released a new Titanium ML network adapter to address these demands, which incorporates and expands upon NVIDIA ConnectX-7 NICs to provide further support for virtualization, traffic encryption, and VPCs. The system offers best-in-class security and infrastructure management along with non-blocking 3.2 Tbps of GPU-to-GPU traffic across RoCE when combined with its data center’s 4-way rail-aligned network.

Google’s Jupiter optical circuit switching network fabric and its updated data center network significantly expand Titanium’s capabilities. With native 400 Gb/s link rates and a total bisection bandwidth of 13.1 Pb/s (a practical bandwidth metric that reflects how one half of the network can connect to the other), Jupiter could handle a video conversation for every person on Earth at the same time. In order to meet the increasing demands of AI computation, this enormous scale is essential.

Hyperdisk ML is generally available

High-performance storage is essential for keeping compute resources effectively utilized, maximizing system-level performance, and staying cost-efficient. Google launched its AI-focused block storage solution, Hyperdisk ML, in April 2024. Now generally available, it adds dedicated storage for AI and HPC workloads to the networking and computing advancements.

Hyperdisk ML efficiently speeds up data load times. It drives up to 11.9x faster model load time for inference workloads and up to 4.3x quicker training time for training workloads.

You can attach up to 2,500 instances to the same volume, with up to 1.2 TB/s of aggregate throughput per volume. That is more than 100 times what major block storage competitors offer.

Reduced accelerator idle time and increased cost efficiency are the results of shorter data load times.

GKE now automatically creates multi-zone volumes for your data. In addition to quicker model loading with Hyperdisk ML, this lets you run across zones for more computing flexibility (for example, to reduce the impact of Spot preemption).

Developing AI’s future

With these developments in AI infrastructure, Google Cloud enables companies and researchers to push the limits of AI innovation. Google anticipates that this strong foundation will give rise to revolutionary new AI applications.

Read more on Govindhtech.com

#A3UltraVMs#NVIDIAH200#AI#Trillium#HypercomputeCluster#GoogleAxionProcessors#Titanium#News#Technews#Technology#Technologynews#Technologytrends#Govindhtech


Text

What are the latest trends in the IT job market?

Introduction

The IT job market is changing quickly, driven by new technology, shifting employer needs, and the growth of remote work.

For jobseekers, understanding these trends is crucial to positioning themselves as strong candidates in a highly competitive landscape.

This blog looks at the current IT job market. It offers insights into job trends and opportunities. You will also find practical strategies to improve your chances of getting your desired role.

Whether you’re in the midst of a job search or considering a career change, this guide will help you navigate the complexities of the job hunting process and secure employment in today’s market.

Section 1: Understanding the Current IT Job Market

Recent Trends in the IT Job Market

The IT sector is booming, with consistent demand for skilled professionals in various domains such as cybersecurity, cloud computing, and data science.

The COVID-19 pandemic accelerated the shift to remote work, further expanding the demand for IT roles that support this transformation.

Employers are increasingly looking for candidates with expertise in AI, machine learning, and DevOps as these technologies drive business innovation.

According to industry reports, job opportunities in IT will continue to grow, with the most substantial demand focused on software development, data analysis, and cloud architecture.

It’s essential for jobseekers to stay updated on these trends to remain competitive and tailor their skills to current market needs.

Recruitment efforts have also become more digitized, with many companies adopting virtual hiring processes and online job fairs.

This creates both challenges and opportunities for job seekers to showcase their talents and secure interviews through online platforms.

Remote Work and IT

The surge in remote work opportunities has transformed the job market. Many IT companies now offer fully remote or hybrid roles, which appeal to professionals seeking greater flexibility.

While remote work has increased access to job opportunities, it has also intensified competition, as companies can now hire from a global talent pool.

Section 2: Choosing the Right Keywords for Your IT Resume

Keyword Optimization: Why It Matters

With more employers using Applicant Tracking Systems (ATS) to screen resumes, it’s essential for jobseekers to optimize their resumes with relevant keywords.

These systems scan resumes for specific words related to the job description and only advance the most relevant applications.

To increase the chances of your resume making it through the initial screening, jobseekers must identify and incorporate the right keywords into their resumes.

When searching for jobs in IT, it’s important to tailor your resume for specific job titles and responsibilities. Keywords like “software engineer,” “cloud computing,” “data security,” and “DevOps” can make a huge difference.

By strategically using keywords that reflect your skills, experience, and the job requirements, you enhance your resume’s visibility to hiring managers and recruitment software.

Step-by-Step Keyword Selection Process

Analyze Job Descriptions: Look at several job postings for roles you’re interested in and identify recurring terms.

Incorporate Specific Terms: Include technical terms related to your field (e.g., Python, Kubernetes, cloud infrastructure).

Use Action Verbs: Keywords like “developed,” “designed,” or “implemented” help demonstrate your experience in a tangible way.

Test Your Resume: Use online tools to see how well your resume aligns with specific job postings and make adjustments as necessary.

Section 3: Customizing Your Resume for Each Job Application

Why Customization is Key

One size does not fit all when it comes to resumes, especially in the IT industry. Jobseekers who customize their resumes for each job application are more likely to catch the attention of recruiters. Tailoring your resume allows you to emphasize the specific skills and experiences that align with the job description, making you a stronger candidate. Employers want to see that you’ve taken the time to understand their needs and that your expertise matches what they are looking for.

Key Areas to Customize:

Summary Section: Write a targeted summary that highlights your qualifications and goals in relation to the specific job you’re applying for.

Skills Section: Highlight the most relevant skills for the position, paying close attention to the technical requirements listed in the job posting.

Experience Section: Adjust your work experience descriptions to emphasize the accomplishments and projects that are most relevant to the job.

Education & Certifications: If certain qualifications or certifications are required, make sure they are easy to spot on your resume.

Section 4: Reviewing and Testing Your Optimized Resume

Proofreading for Perfection

Before submitting your resume, it’s critical to review it for accuracy, clarity, and relevance. Spelling mistakes, grammatical errors, or outdated information can reflect poorly on your professionalism.

Additionally, make sure your resume is easy to read and visually organized, with clear headings and bullet points. If possible, ask a peer or mentor in the IT field to review your resume for content accuracy and feedback.

Testing Your Resume with ATS Tools

After making your resume keyword-optimized, test it using online tools that simulate ATS systems. This allows you to see how well your resume aligns with specific job descriptions and identify areas for improvement.

Many tools will give you a match score, showing you how likely your resume is to pass an ATS scan. From here, you can fine-tune your resume to increase its chances of making it to the recruiter’s desk.

Section 5: Trends Shaping the Future of IT Recruitment

Embracing Digital Recruitment

Recruiting has undergone a significant shift towards digital platforms, with job fairs, interviews, and onboarding now frequently taking place online.

This transition means that jobseekers must be comfortable navigating virtual job fairs, remote interviews, and online assessments.

As IT jobs increasingly allow remote work, companies are also using technology-driven recruitment tools like AI for screening candidates.

Jobseekers should also leverage platforms like LinkedIn to increase visibility in the recruitment space. Keeping your LinkedIn profile updated, networking with industry professionals, and engaging in online discussions can all boost your chances of being noticed by recruiters.

Furthermore, participating in virtual job fairs or IT recruitment events provides direct access to recruiters and HR professionals, enhancing your job hunt.

FAQs

1. How important are keywords in IT resumes?

Keywords are essential in IT resumes because they help your resume pass through Applicant Tracking Systems (ATS), which scan resumes for specific terms related to the job. Without the right keywords, your resume may not reach a human recruiter.

2. How often should I update my resume?

It’s a good idea to update your resume regularly, especially when you gain new skills or experience. Also, customize it for every job application to ensure it aligns with the job’s specific requirements.

3. What are the most in-demand IT jobs?

Some of the most in-demand IT jobs include software developers, cloud engineers, cybersecurity analysts, data scientists, and DevOps engineers.

4. How can I stand out in the current IT job market?

To stand out, jobseekers should focus on tailoring their resumes, building strong online profiles, networking, and keeping up-to-date with industry trends. Participation in online forums, attending webinars, and earning industry-relevant certifications can also enhance visibility.

Conclusion

The IT job market continues to offer exciting opportunities for jobseekers, driven by technological innovations and changing work patterns.

By staying informed about current trends, customizing your resume, using keywords effectively, and testing your optimized resume, you can improve your job search success.

Whether you are new to the IT field or an experienced professional, leveraging these strategies will help you navigate the competitive landscape and secure a job that aligns with your career goals.


Text

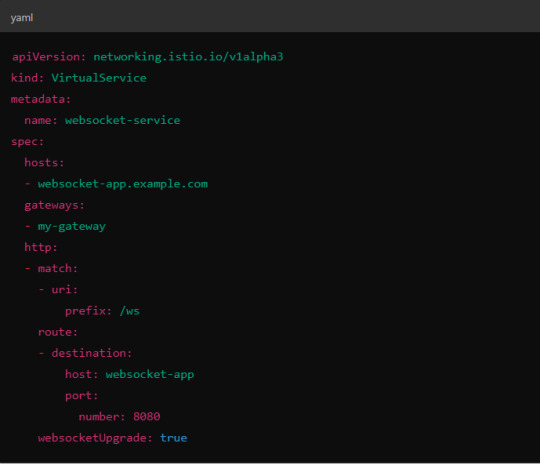

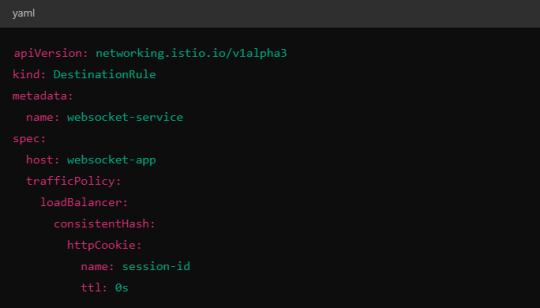

Load Balancing Web Sockets with K8s/Istio

When load balancing WebSockets in a Kubernetes (K8s) environment with Istio, there are several considerations to ensure persistent, low-latency connections. WebSockets require special handling because they are long-lived, bidirectional connections, which are different from standard HTTP request-response communication. Here’s a guide to implementing load balancing for WebSockets using Istio.

1. Enable WebSocket Support in Istio

By default, Istio supports WebSocket connections, but certain configurations may need tweaking. You should ensure that:

Destination rules and VirtualServices are configured appropriately to allow WebSocket traffic.

Example VirtualService Configuration.

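In early Istio releases, setting websocketUpgrade: true on an HTTP route explicitly allowed WebSocket traffic. That field has since been deprecated: recent Istio versions handle WebSocket upgrades automatically, so a standard HTTP route is usually all that is needed.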

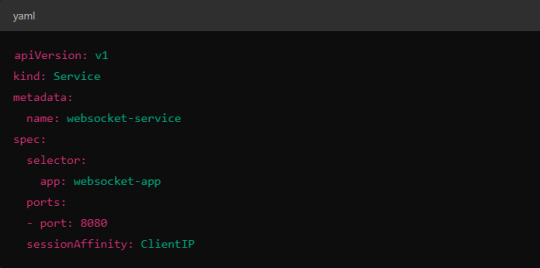
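The original configuration is not reproduced in the text, so the following is only a minimal sketch of what such a VirtualService might look like. The resource name, host, gateway name, path prefix, Service name, and port are all hypothetical placeholders. The websocketUpgrade field is left out on the assumption that a recent Istio release is in use, where upgrades are handled automatically.

```yaml
# Minimal sketch, not the original author's configuration.
# All names, hosts, and ports below are illustrative assumptions.
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: websocket-app
spec:
  hosts:
    - "ws.example.com"          # hypothetical external host
  gateways:
    - websocket-gateway         # hypothetical Gateway resource
  http:
    - match:
        - uri:
            prefix: /ws         # hypothetical WebSocket endpoint path
      route:
        - destination:
            host: websocket-service   # hypothetical Kubernetes Service
            port:
              number: 8080
```

Because a WebSocket handshake starts as an ordinary HTTP request, it is matched and routed like any other HTTP route.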

2. Session Affinity (Sticky Sessions)

In WebSocket applications, sticky sessions (session affinity) are often used to keep a client tied to the same backend pod. An established WebSocket connection stays on the pod that accepted it, but without session affinity a client that reconnects, or that starts with an HTTP fallback transport before upgrading, can be routed to a different pod and lose in-memory session state.

Implementing Session Affinity in Istio.

Session affinity is typically achieved by setting the sessionAffinity field to ClientIP at the Kubernetes service level.

In Istio, you might also control affinity using headers. For example, Istio can route traffic based on headers by configuring a VirtualService to ensure connections stay on the same backend.

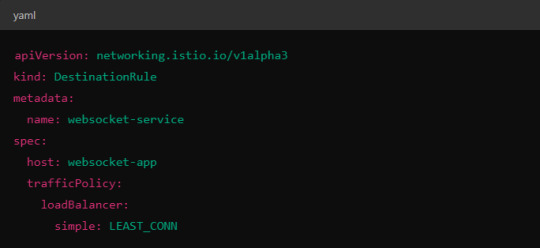
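As a rough illustration of the Service-level setting mentioned above, the sketch below sets sessionAffinity to ClientIP on a Kubernetes Service. The Service name, selector label, port, and timeout value are assumptions made for the example. Traffic that passes through Envoy sidecars may not be governed by this field, in which case mesh-level affinity is normally expressed in a DestinationRule instead.

```yaml
# Sketch of client-IP affinity on a plain Kubernetes Service.
# Names, labels, and the timeout are hypothetical.
apiVersion: v1
kind: Service
metadata:
  name: websocket-service
spec:
  selector:
    app: websocket-app
  ports:
    - name: http-ws
      port: 8080
      targetPort: 8080
  sessionAffinity: ClientIP
  sessionAffinityConfig:
    clientIP:
      timeoutSeconds: 10800     # how long a client IP stays pinned to one pod
```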

3. Load Balancing Strategy

Since WebSocket connections are long-lived, round-robin or random load balancing strategies can lead to unbalanced workloads across pods. To address this, you may consider using least connection or consistent hashing algorithms to ensure that existing connections are efficiently distributed.

Load Balancer Configuration in Istio.

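Istio allows you to specify different load balancing strategies in the DestinationRule for your services. For WebSockets, a least-loaded strategy may be more appropriate than round-robin: LEAST_CONN in older Istio releases, or LEAST_REQUEST, which replaces it in newer ones.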
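A DestinationRule along these lines could select a least-loaded strategy. This is a sketch only: the rule name and host are placeholders, and it uses LEAST_REQUEST, the value found in newer Istio releases, where older ones would use LEAST_CONN.

```yaml
# Sketch: least-loaded balancing for a hypothetical WebSocket service.
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: websocket-lb
spec:
  host: websocket-service       # hypothetical Service name
  trafficPolicy:
    loadBalancer:
      simple: LEAST_REQUEST     # use LEAST_CONN on older Istio releases
```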

Alternatively, you could use consistent hashing for a more sticky routing based on connection properties like the user session ID.

This configuration ensures that connections with the same session ID go to the same pod.
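The configuration this refers to is not included in the text. One plausible form, assuming the client sends its session ID in an HTTP header, is a consistent-hash load balancer keyed on that header. The header name x-session-id and the resource names are hypothetical.

```yaml
# Sketch: consistent hashing on a session header.
# The header name and resource names are illustrative assumptions.
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: websocket-sticky
spec:
  host: websocket-service
  trafficPolicy:
    loadBalancer:
      consistentHash:
        httpHeaderName: x-session-id   # requests with the same value hash to the same pod
```

If clients cannot set a custom header, the consistentHash block also accepts httpCookie or useSourceIp as the hash key. Pod scaling changes the hash ring, so some sessions can still move when pods are added or removed.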

4. Scaling Considerations

WebSocket applications can handle a large number of concurrent connections, so you’ll need to ensure that your Kubernetes cluster can scale appropriately.

Horizontal Pod Autoscaler (HPA): Use an HPA to automatically scale your pods based on metrics like CPU, memory, or custom metrics such as open WebSocket connections.

Istio Autoscaler: You may also need to scale Istio itself, in particular the ingress gateway pods that proxy the connections, and the control plane (istiod) as the number of workloads grows.
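An HPA for the WebSocket Deployment might be sketched as follows. The Deployment name, replica bounds, and thresholds are assumptions, and the websocket_connections metric is hypothetical: a per-pod custom metric like this only works if a metrics adapter exposes it to the Kubernetes custom metrics API.

```yaml
# Sketch: scale on CPU and on a hypothetical per-pod connection count.
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: websocket-app
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: websocket-app         # hypothetical Deployment
  minReplicas: 2
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70
    - type: Pods
      pods:
        metric:
          name: websocket_connections   # hypothetical custom metric
        target:
          type: AverageValue
          averageValue: "1000"
```

Scaling down terminates pods that still hold open connections, so clients generally need reconnect logic regardless of the autoscaling policy.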

5. Connection Timeouts and Keep-Alive

Ensure that both your WebSocket clients and the Istio proxy (Envoy) are configured for long-lived connections. Some settings that need attention:

Timeouts: In VirtualService, make sure there are no aggressive timeout settings that would prematurely close WebSocket connections.

Keep-Alive Settings: You can also adjust the keep-alive settings at the Envoy level if necessary. Envoy, the proxy used by Istio, supports long-lived WebSocket connections out-of-the-box, but custom keep-alive policies can be configured.

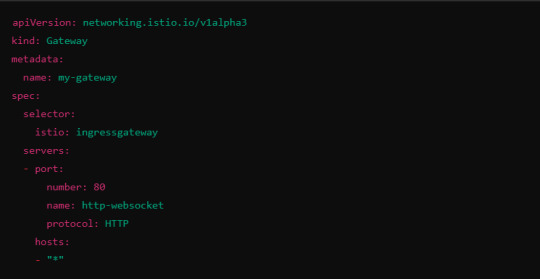
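The two settings above could look roughly like this. It is a sketch under assumptions: the resource names are placeholders, the keep-alive values are arbitrary examples, and timeout: 0s is used on the understanding that it disables the per-route timeout.

```yaml
# Sketch: no route timeout, plus TCP keep-alive towards the backend.
# Names and numeric values are illustrative assumptions.
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: websocket-app
spec:
  hosts:
    - websocket-service
  http:
    - route:
        - destination:
            host: websocket-service
      timeout: 0s               # assumed to disable the route-level timeout
---
apiVersion: networking.istio.io/v1beta1
kind: DestinationRule
metadata:
  name: websocket-keepalive
spec:
  host: websocket-service
  trafficPolicy:
    connectionPool:
      tcp:
        tcpKeepalive:
          time: 300s            # idle time before the first probe
          interval: 60s         # time between probes
          probes: 3             # unanswered probes before the connection is dropped
```

If another DestinationRule already exists for the same host, these trafficPolicy settings would normally be merged into it instead of creating a second rule.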

6. Ingress Gateway Configuration

If you're using an Istio Ingress Gateway, ensure that it is configured to handle WebSocket traffic. The gateway should allow for WebSocket connections on the relevant port.

This configuration ensures that the Ingress Gateway can handle WebSocket upgrades and correctly route them to the backend service.
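The Gateway configuration itself is missing from the text. A minimal sketch, assuming the default istio: ingressgateway selector label and plain HTTP on port 80, might be the following. The gateway name and host are placeholders.

```yaml
# Sketch: a Gateway that accepts HTTP (and therefore WebSocket upgrade) traffic.
# The name and host are hypothetical; the selector assumes a default Istio install.
apiVersion: networking.istio.io/v1beta1
kind: Gateway
metadata:
  name: websocket-gateway
spec:
  selector:
    istio: ingressgateway
  servers:
    - port:
        number: 80
        name: http
        protocol: HTTP
      hosts:
        - "ws.example.com"
```

For secure WebSockets (wss://), the server entry would use port 443 with protocol HTTPS and a tls block that references a certificate.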

Summary of Key Steps

Enable WebSocket support in Istio’s VirtualService.

Use session affinity to tie WebSocket connections to the same backend pod.

Choose an appropriate load balancing strategy, such as least connection or consistent hashing.

Set timeouts and keep-alive policies to ensure long-lived WebSocket connections.

Configure the Ingress Gateway to handle WebSocket traffic.

By properly configuring Istio, Kubernetes, and your WebSocket service, you can efficiently load balance WebSocket connections in a microservices architecture.

#kubernetes#websockets#Load Balancing#devops#linux#coding#programming#Istio#virtualservices#Load Balancer#Kubernetes cluster#gateway#python#devlog#github#ansible


Text

Is cPanel on Its Deathbed? A Tale of Technology, Profits, and a Slow-Moving Train Wreck

Ah, cPanel. The go-to control panel for many web hosting services since the dawn of, well, web hosting. Once the epitome of innovation, it’s now akin to a grizzled war veteran, limping along with a cane and wearing an “I Survived Y2K” t-shirt. So what went wrong? Let’s dive into this slow-moving technological telenovela, rife with corporate greed, security loopholes, and a legacy that may be hanging by a thread.

Chapter 1: A Brief, Glorious History (Or How cPanel Shot to Stardom)

Once upon a time, cPanel was the bee’s knees. Launched in 1996, this software was, for a while, the pinnacle of web management systems. It promised simplicity, reliability, and functionality. Oh, the golden years!

Chapter 2: The Tech Stack Tortoise

In the fast-paced world of technology, being stagnant is synonymous with being extinct. While newer tech stacks are integrating AI, machine learning, and all sorts of jazzy things, cPanel seems to be stuck in a time warp. Why? Because the tech stack is more outdated than a pair of bell-bottom trousers. No Docker, no Kubernetes, and don’t even get me started on the lack of robust API support.

Chapter 3: “The Corpulent Corporate”

In 2018, Oakley Capital, a private equity firm, acquired cPanel. For many, this was the beginning of the end. Pricing structures were jumbled, turning into a monetisation extravaganza. It’s like turning your grandma’s humble pie shop into a mass production line for rubbery, soulless pies. They’ve squeezed every ounce of profit from it, often at the expense of the end-users and smaller hosting companies.

Chapter 4: Security—or the Lack Thereof

Ah, the elephant in the room. cPanel has had its fair share of vulnerabilities. Whether it’s SQL injection flaws, privilege escalation, or simple, plain-text passwords (yes, you heard right), cPanel often appears in the headlines for all the wrong reasons. It’s like that dodgy uncle at family reunions who always manages to spill wine on the carpet; you know he’s going to mess up, yet somehow he’s always invited.

Chapter 5: The (Dis)loyal Subjects—The Hosting Companies

Remember those hosting companies that once swore by cPanel? Well, let’s just say some of them have been seen flirting with competitors at the bar. Newer, shinier control panels are coming to market, offering modern tech stacks and, gasp, lower prices! It’s like watching cPanel’s loyal subjects slowly turn their backs, one by one.

Chapter 6: The Alternatives—Not Just a Rebellion, but a Revolution

Plesk, Webmin, DirectAdmin, oh my! New players are rising, offering updated tech stacks, more customizable APIs, and—wait for it—better security protocols. They’re the Han Solos to cPanel’s Jabba the Hutt: faster, sleeker, and without the constant drooling.

Conclusion: The Twilight Years or a Second Wind?

The debate rages on. Is cPanel merely an ageing actor waiting for its swan song, or can it adapt and evolve, perhaps surprising us all? Either way, the story of cPanel serves as a cautionary tale: adapt or die. And for heaven’s sake, update your tech stack before it becomes a relic in a technology museum, right between floppy disks and dial-up modems.

This outline only scratches the surface, but it’s a start. If cPanel wants to avoid becoming the Betamax of web management systems, it better start evolving—stat. Cheers!

#hosting#wordpress#cpanel#webdesign#servers#websites#webdeveloper#technology#tech#website#developer#digitalagency#uk#ukdeals#ukbusiness#smallbussinessowner


Text

Ansible Collections: Extending Ansible’s Capabilities

Ansible is a powerful automation tool used for configuration management, application deployment, and task automation. One of the key features that enhances its flexibility and extensibility is the concept of Ansible Collections. In this blog post, we'll explore what Ansible Collections are, how to create and use them, and look at some popular collections and their use cases.

Introduction to Ansible Collections

Ansible Collections are a way to package and distribute Ansible content. This content can include playbooks, roles, modules, plugins, and more. Collections allow users to organize their Ansible content and share it more easily, making it simpler to maintain and reuse.

Key Features of Ansible Collections:

Modularity: Collections break down Ansible content into modular components that can be independently developed, tested, and maintained.

Distribution: Collections can be distributed via Ansible Galaxy or private repositories, enabling easy sharing within teams or the wider Ansible community.

Versioning: Collections support versioning, allowing users to specify and depend on specific versions of a collection.

How to Create and Use Collections in Your Projects

Creating and using Ansible Collections involves a few key steps. Here’s a guide to get you started:

1. Setting Up Your Collection

To create a new collection, you can use the ansible-galaxy command-line tool:

ansible-galaxy collection init my_namespace.my_collection

This command sets up a basic directory structure for your collection:

my_namespace/
└── my_collection/
    ├── docs/
    ├── plugins/
    │   ├── modules/
    │   ├── inventory/
    │   └── ...
    ├── roles/
    ├── playbooks/
    ├── README.md
    └── galaxy.yml

2. Adding Content to Your Collection

Populate your collection with the necessary content. For example, you can add roles, modules, and plugins under the respective directories. Update the galaxy.yml file with metadata about your collection.
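For illustration, a galaxy.yml for the collection above might contain metadata like the following. The author, description, license, tag, and repository URL are placeholders, not values from the original post. The namespace, name, version, readme, and authors keys are the required ones.

```yaml
# Sketch of galaxy.yml; every value except namespace and name is a placeholder.
namespace: my_namespace
name: my_collection
version: 1.0.0
readme: README.md
authors:
  - Your Name <you@example.com>
description: Example collection used in this walkthrough
license:
  - GPL-3.0-or-later
tags:
  - example
dependencies: {}
repository: https://example.com/my_namespace/my_collection
```

The version here is what ends up in the tarball name produced by the build step, for example my_namespace-my_collection-1.0.0.tar.gz.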

3. Building and Publishing Your Collection

Once your collection is ready, you can build it using the following command:

ansible-galaxy collection build

This command creates a tarball of your collection, which you can then publish to Ansible Galaxy or a private repository:

ansible-galaxy collection publish my_namespace-my_collection-1.0.0.tar.gz

4. Using Collections in Your Projects

To use a collection in your Ansible project, specify it in your requirements.yml file:

collections:
  - name: my_namespace.my_collection
    version: 1.0.0

Then, install the collection using:

ansible-galaxy collection install -r requirements.yml

You can now use the content from the collection in your playbooks:

---
- name: Example Playbook
  hosts: localhost
  tasks:
    # my_module and param are placeholders for a module in your collection
    - name: Use a module from the collection
      my_namespace.my_collection.my_module:
        param: value

Popular Collections and Their Use Cases

Here are some popular Ansible Collections and how they can be used:

1. community.general

Description: A collection of modules, plugins, and roles that are not tied to any specific provider or technology.

Use Cases: General-purpose tasks like file manipulation, network configuration, and user management.

2. amazon.aws

Description: Provides modules and plugins for managing AWS resources.

Use Cases: Automating AWS infrastructure, such as EC2 instances, S3 buckets, and RDS databases.

3. ansible.posix

Description: A collection of modules for managing POSIX systems.

Use Cases: Tasks specific to Unix-like systems, such as managing users, groups, and file systems.

4. cisco.ios

Description: Contains modules and plugins for automating Cisco IOS devices.

Use Cases: Network automation for Cisco routers and switches, including configuration management and backup.

5. kubernetes.core

Description: Provides modules for managing Kubernetes resources.

Use Cases: Deploying and managing Kubernetes applications, services, and configurations.
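As a concrete example of using one of these collections, the sketch below calls the k8s module from kubernetes.core to create a namespace. It assumes the collection and the Kubernetes Python client are installed on the control node and that a working kubeconfig is available. The namespace name demo is a placeholder.

```yaml
# Sketch: create a namespace with the kubernetes.core.k8s module.
---
- name: Create a namespace with kubernetes.core
  hosts: localhost
  connection: local
  gather_facts: false
  tasks:
    - name: Ensure the demo namespace exists
      kubernetes.core.k8s:
        state: present
        definition:
          apiVersion: v1
          kind: Namespace
          metadata:
            name: demo          # hypothetical namespace name
```

Using the fully qualified name (kubernetes.core.k8s) makes it explicit which collection the module comes from.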

Conclusion

Ansible Collections significantly enhance the modularity, distribution, and reusability of Ansible content. By understanding how to create and use collections, you can streamline your automation workflows and share your work with others more effectively. Explore popular collections to leverage existing solutions and extend Ansible’s capabilities in your projects.

For more details click www.qcsdclabs.com

#redhatcourses#information technology#linux#containerorchestration#container#kubernetes#containersecurity#docker#dockerswarm#aws


Text

Tumblr has been making a lot of controversial changes lately, and this post has some great points that inspired me to say more.

It seems like people are very split on whether our hellsite doing stupid anti-user stuff means that we need to show more support or show less support. In my opinion, it's a cry for help that means we need more support (especially monetary), and I'll explain why.

Tumblr is currently a financially sinking ship. It's costing more money in upkeep than it's making. Automattic, the company that owns it, is trying to make it profitable, because they're a business. It's what they do. In my opinion, they have much better intentions than the previous overlords. Matt Mullenweg, the CEO of Automattic, said (a bit indirectly) in a blog post that he wants to open source Tumblr. That was 12 August 2019.

At the time of writing this post (23 August 2023), they're doing a damn good job of it. Looking through the blog of Tumblr's engineering team, they've already open-sourced several of the site's components:

StreamBuilder (the thing that makes the dashboard)

Kanvas (media editor and camera)

Tumblr's custom Kubernetes system (this is what allows them to scale the site's software to a huge number of servers to handle all the traffic)

webpack-web-app-manifest-plugin (I have no idea what this one does, maybe some JavaScript developer can enlighten me)

and that's great! More importantly, it shows that they have good intentions. Making the site open source is a very pro-consumer thing to do, because it means they care about consumers having good services more than they care about being profitable. If they only cared about profit, they would avoid the risk of inadvertently assisting competitors with the open source effort.

My point here is that they are genuinely trying to balance keeping users happy with not having Tumblr die completely.

So at this point, their options are:

sit still and let the platform die

change stuff until the platform is profitable

and since doing #1 would be stupid, they're doing #2. Needless to say, they are not doing a great job of it, for many many reasons. The most direct thing we can do is give them money so that the platform becomes profitable, that way they're no longer being held hostage by their finances. However compelling user feedback may be, it's not more compelling than the company dying. So we save the site from dying financially, then we work on improving the other stuff.

Some people think that giving them money is endorsing what they're doing right now, like disproportionately applying the mature label to trans folks, and twitterifying the dashboard. I disagree.

Giving money to Tumblr is saying "I think you can do better with more resources, why don't you show me."

They clearly need more resources to moderate properly, and to figure out how their decisions are impacting users. In the first post I linked it talks about how running experiments on people's behavior (and getting meaningful results) is really hard. They clearly need more resources for that, so they can accurately quantify how shit their decisions are, and then make better ones. They can't do that if the site is fucking dead.

Tumblr can't get better if it's fucking dead.

so buy crabs, support the site, and have faith that it will improve eventually. If it doesn't, we can all jump ship to cohost or something, but I would prefer to stay here.


Text

From Novice to Pro: Unleash Your DevOps Wizardry with Our Game-Changing Course

Are you eager to unlock your full potential as a DevOps expert? Look no further! Our comprehensive and groundbreaking course is designed to empower individuals like you to transform from a novice into a pro in the realm of DevOps. In this article, we will delve into the various facets of this course, providing you with the essential education and vital information needed to embark on this thrilling journey. Brace yourself for an enlightening experience that will take your IT skills to unparalleled heights.

Education: The Key to Success

Embrace the Fundamentals

Before diving headfirst into the vast world of DevOps, it is imperative to establish a solid foundation. Our course begins by acquainting you with the fundamental concepts and principles that underpin DevOps. Through a meticulous blend of theory and practical application, you will grasp essential concepts such as continuous integration, continuous delivery, and automation. Embracing these fundamentals will enable you to comprehend the underlying philosophy of DevOps, enabling you to tackle complex challenges with confidence and finesse.

Master the Tools of the Trade

In the ever-evolving landscape of IT, proficiency in the right tools can make all the difference. Our course ensures that you become well-versed in the latest and most powerful DevOps tools available today. From configuration management platforms like Chef and Puppet to container orchestration systems such as Kubernetes, our curriculum covers a wide array of technologies that are essential for any aspiring DevOps wizard. Guided by experienced instructors, you will gain hands-on experience and develop a keen understanding of how these tools can streamline processes and enhance efficiency.

Collaboration and Communication

DevOps is not merely about technology; it is a cultural shift that emphasizes collaboration and communication within teams. Understanding how to foster effective teamwork and seamless interaction is pivotal in becoming a DevOps maestro. Our course places a strong emphasis on cultivating these essential soft skills. By exploring methodologies like Agile and Lean, you will discover how to break down silos, foster cross-functional collaboration, and promote a culture of shared responsibility. Unleashing your potential in this domain will enable you to bridge gaps between departments, leading to empowered teams and accelerated project delivery.

Information: Knowledge is Power

Stay Ahead of the Curve

In the dynamically evolving IT landscape, staying abreast of the latest trends and emerging practices is paramount. Our course goes beyond the basics, equipping you with up-to-date information on cutting-edge technologies and industry best practices. With a finger on the pulse of the DevOps community, our instructors ensure that you receive timely insights into the latest advancements and trends. From cloud-native architectures to infrastructure as code, you will gain a thorough understanding of the innovations shaping the future of DevOps.

Real-World Case Studies

Theoretical knowledge alone is often insufficient in preparing for real-world scenarios. That's why our course incorporates a range of real-world case studies that offer valuable insights and practical applications. By examining success stories and lessons learned from industry leaders, you will gain invaluable wisdom about overcoming hurdles and delivering exceptional results. These case studies provide a glimpse into the challenges faced by seasoned DevOps professionals and present you with the opportunity to hone your problem-solving skills in a safe and supportive environment.

Continuous Learning and Community Engagement

DevOps is a vibrant and dynamic community that thrives on collaboration and continuous learning. Our course not only equips you with the tools and knowledge you need, but it also encourages you to actively engage with the DevOps community. Through forums, discussion boards, and networking opportunities, you will join a network of like-minded individuals, fostering connections with experienced practitioners and expanding your professional horizons. This engagement will enable you to stay informed, exchange ideas, and grow alongside the ever-evolving DevOps ecosystem.

IT: The Pathway to Success

Career Advancement Opportunities

With the rapid adoption of DevOps practices, the demand for skilled professionals has skyrocketed. Our course is designed to propel your career to new heights by equipping you with the skills and knowledge sought after by top organizations worldwide. Whether you aspire to become a DevOps engineer, a systems architect, or a team leader, our course equips you with the tools needed to unlock doors to exciting career opportunities in the IT industry. Embrace this transformative experience and set yourself on a trajectory towards professional growth and success.

Enhanced Efficiency and Productivity

DevOps is synonymous with enhanced efficiency, improved collaboration, and increased productivity. By completing our course, you will possess the ability to streamline workflows, automate repetitive tasks, and foster a culture of continuous improvement. Armed with these skills, you will be able to eliminate bottlenecks, reduce time to market, and ensure seamless integration and delivery of software solutions. By unlocking the power of DevOps, you will become a catalyst for organizational success and drive tangible value for your team and stakeholders.

Moving from novice to pro in DevOps brings both challenges and opportunities. Our course provides the education, information, and IT skills needed to navigate this landscape. By mastering the fundamentals, adopting the latest tools, and developing essential soft skills, you can grow into a capable DevOps practitioner and help your organization succeed. Enroll in our course today at ACTE institute and let your DevOps journey begin!


Demystifying Microsoft Azure Cloud Hosting and PaaS Services: A Comprehensive Guide

In the rapidly evolving landscape of cloud computing, Microsoft Azure has emerged as a powerful player, offering a wide range of services to help businesses build, deploy, and manage applications and infrastructure. One of the standout features of Azure is its Cloud Hosting and Platform-as-a-Service (PaaS) offerings, which enable organizations to harness the benefits of the cloud while minimizing the complexities of infrastructure management. In this comprehensive guide, we'll dive deep into Microsoft Azure Cloud Hosting and PaaS Services, demystifying their features, benefits, and use cases.

Understanding Microsoft Azure Cloud Hosting

Cloud hosting, as the name suggests, involves hosting applications and services on virtual servers that are accessed over the internet. Microsoft Azure provides a cloud hosting environment that lets businesses scale up or down as needed, pay only for the resources they consume, and avoid the burden of maintaining physical hardware. Here are some key components of Azure Cloud Hosting:

Virtual Machines (VMs): Azure offers a variety of pre-configured virtual machine sizes that cater to different workloads. These VMs can run Windows or Linux operating systems and can be easily scaled to meet changing demands.

Azure App Service: This PaaS offering allows developers to build, deploy, and manage web applications without dealing with the underlying infrastructure. It supports various programming languages and frameworks, making it suitable for a wide range of applications.

Azure Kubernetes Service (AKS): For containerized applications, AKS provides a managed Kubernetes service. Kubernetes simplifies the deployment and management of containerized applications, and AKS further streamlines this process.

Exploring Azure Platform-as-a-Service (PaaS) Services

Platform-as-a-Service (PaaS) takes cloud hosting a step further by abstracting away even more of the infrastructure management, allowing developers to focus primarily on building and deploying applications. Azure offers an array of PaaS services that cater to different needs:

Azure SQL Database: This fully managed relational database service eliminates the need for database administration tasks such as patching and backups. It offers high availability, security, and scalability for your data.

Azure Cosmos DB: For globally distributed, highly responsive applications, Azure Cosmos DB is a NoSQL database service designed for low-latency access, with throughput that can scale automatically as demand changes.

Azure Functions: A serverless compute service, Azure Functions allows you to run code in response to events without provisioning or managing servers. It's ideal for event-driven architectures.

Azure Logic Apps: This service enables you to automate workflows and integrate various applications and services without writing extensive code. It's great for orchestrating complex business processes.
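The event-driven model behind services like Azure Functions can be sketched in plain Python. This is only an illustration of the idea, not the Azure Functions SDK: the `on_event` decorator, the `dispatch` function, and the event names `blob_uploaded` and `order_placed` are hypothetical names made up for this example. In a real serverless platform, the platform itself does the registration and routing.

```python
from typing import Callable, Dict, List

# Hypothetical in-process registry mapping an event type to its handlers.
# A serverless platform would maintain this routing for you.
_handlers: Dict[str, List[Callable[[dict], str]]] = {}


def on_event(event_type: str):
    """Register the decorated function to run when `event_type` occurs."""
    def decorator(fn: Callable[[dict], str]) -> Callable[[dict], str]:
        _handlers.setdefault(event_type, []).append(fn)
        return fn
    return decorator


@on_event("blob_uploaded")  # hypothetical event name
def make_thumbnail(event: dict) -> str:
    return f"thumbnail queued for {event['name']}"


@on_event("order_placed")  # hypothetical event name
def send_receipt(event: dict) -> str:
    return f"receipt sent for order {event['order_id']}"


def dispatch(event_type: str, event: dict) -> List[str]:
    """Run every handler registered for the event type; unknown types run nothing."""
    return [handler(event) for handler in _handlers.get(event_type, [])]
```

Calling `dispatch("blob_uploaded", {"name": "cat.png"})` runs only the thumbnail handler. The application code never starts or manages a server loop, which is the point of the serverless model described above.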

Benefits of Azure Cloud Hosting and PaaS Services

Scalability: Azure's elasticity allows you to scale resources up or down based on demand. This ensures optimal performance and cost efficiency.

Cost Management: With pay-as-you-go pricing, you only pay for the resources you use. Azure also provides cost management tools to monitor and optimize spending.

High Availability: Azure's data centers are distributed globally, so you can deploy applications redundantly across regions and keep them available if one location fails.

Security and Compliance: Azure offers robust security features and compliance certifications, helping you meet industry standards and regulations.

Developer Productivity: PaaS services like Azure App Service and Azure Functions streamline development by handling infrastructure tasks, allowing developers to focus on writing code.
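To make the scalability and pay-as-you-go points concrete, here is a minimal sketch of how demand-based scaling and usage-based billing interact. The function names, the per-instance capacity, the instance limits, and the hourly price are all assumptions chosen for illustration. They are not Azure defaults or real Azure prices.

```python
import math


def instances_needed(requests_per_second: float,
                     capacity_per_instance: float,
                     min_instances: int = 1,
                     max_instances: int = 10) -> int:
    """Return how many instances cover the load, clamped to the allowed range."""
    raw = math.ceil(requests_per_second / capacity_per_instance)
    return max(min_instances, min(max_instances, raw))


def hourly_cost(instances: int, price_per_instance_hour: float) -> float:
    """Pay-as-you-go: cost follows the number of instances actually running."""
    return instances * price_per_instance_hour
```

With an assumed capacity of 100 requests per second per instance, a load of 450 requests per second needs 5 instances, while a load of 40 falls back to the minimum of 1. Because cost is computed from the running instance count, spending drops when demand drops.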

Use Cases for Azure Cloud Hosting and PaaS

Web Applications: Azure App Service is ideal for hosting web applications, enabling easy deployment and scaling without managing the underlying servers.

Microservices: Azure Kubernetes Service supports the deployment and orchestration of microservices, making it suitable for complex applications with multiple components.

Data-Driven Applications: Azure's PaaS offerings like Azure SQL Database and Azure Cosmos DB are well-suited for applications that rely heavily on data storage and processing.

Serverless Architecture: Azure Functions and Logic Apps are well suited to building serverless applications that respond to events in real time.

In conclusion, Microsoft Azure's Cloud Hosting and PaaS Services provide businesses with the tools they need to harness the power of the cloud while minimizing the complexities of infrastructure management. With scalability, cost-efficiency, and a wide array of services, Azure empowers developers and organizations to innovate and deliver impactful applications. Whether you're hosting a web application, managing data, or adopting a serverless approach, Azure has the tools to support your journey into the cloud.

#Microsoft Azure#Internet of Things#Azure AI#Azure Analytics#Azure IoT Services#Azure Applications#Microsoft Azure PaaS
