#tensorflow serve

Just wrapped up the assignments for the final chapter of #mlzoomcamp, on deploying models to Kubernetes clusters. Got foundational hands-on experience with TensorFlow Serving, gRPC, the Protobuf data format, Docker Compose, kubectl, kind, and real Kubernetes clusters on Amazon EKS.
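TensorFlow Serving exposes a REST API alongside its gRPC one (by default REST listens on port 8501 and gRPC on 8500). A minimal sketch of building a predict request with only the standard library, assuming a model served under the hypothetical name `clothing-model` on localhost. The gRPC route would instead build a `PredictRequest` Protobuf message using the `tensorflow-serving-api` package.

```python
import json
import urllib.request

# Hypothetical deployment details; adjust to your own setup.
MODEL_NAME = "clothing-model"
HOST = "localhost"
REST_PORT = 8501  # TensorFlow Serving's default REST port (gRPC defaults to 8500)


def build_predict_request(instances, model_name=MODEL_NAME, host=HOST, port=REST_PORT):
    """Return (url, body bytes) for TensorFlow Serving's REST predict endpoint."""
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    # "Row" format: one entry per input example under the "instances" key.
    body = json.dumps({"instances": instances}).encode("utf-8")
    return url, body


def predict(instances):
    """Send the request; this needs a running TensorFlow Serving container."""
    url, body = build_predict_request(instances)
    req = urllib.request.Request(url, data=body, headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(req) as resp:
        # Successful responses carry a "predictions" list.
        return json.loads(resp.read())["predictions"]


url, body = build_predict_request([[0.1, 0.2, 0.3]])
print(url)
```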

Why Python Will Thrive: Future Trends and Applications

Python has already made a significant impact on the tech world, and its future looks even more promising. Given its simplicity, its versatility, and its widespread use in cutting-edge technologies, Python is expected to keep thriving in the coming years. Whatever your level of experience or your reason for switching from another programming language, the support of a Python course in Chennai can make learning Python much more enjoyable.

Let's explore why Python will remain at the forefront of software development and what trends and applications will contribute to its ongoing dominance.

1. Artificial Intelligence and Machine Learning

Python is already the go-to language for AI and machine learning, and its role in these fields is set to expand further. With powerful libraries such as TensorFlow, PyTorch, and Scikit-learn, Python simplifies the development of machine learning models and artificial intelligence applications. As more industries integrate AI for automation, personalization, and predictive analytics, Python will remain a core language for developing intelligent systems.
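Libraries like Scikit-learn wrap a simple core idea: choose model parameters that minimize error on training data. A dependency-free sketch of the simplest case, ordinary least-squares linear regression with one feature, using the closed-form slope and intercept. The toy data is made up for illustration.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept


def predict_y(slope, intercept, x):
    """Apply the fitted line to a new input."""
    return slope * x + intercept


# Toy data generated from y = 2x + 1
xs = [0, 1, 2, 3, 4]
ys = [1, 3, 5, 7, 9]
slope, intercept = fit_line(xs, ys)
print(slope, intercept)  # 2.0 1.0
```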

2. Data Science and Big Data

Data science is one of the most significant areas where Python has excelled. Libraries like Pandas, NumPy, and Matplotlib make data manipulation and visualization simple and efficient. As companies and organizations continue to generate and analyze vast amounts of data, Python’s ability to process, clean, and visualize big data will only become more critical. Additionally, Python’s compatibility with big data platforms like Hadoop and Apache Spark ensures that it will remain a major player in data-driven decision-making.
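Pandas condenses the clean-then-aggregate pattern into a couple of calls (`dropna` and `groupby`). To show what that work involves, here is a standard-library sketch over a small, made-up CSV: rows with a missing value are dropped, then the mean is computed per group.

```python
import csv
import io
from collections import defaultdict
from statistics import mean

# Made-up sample data; one row has a missing "sales" value.
RAW = """region,sales
north,120
south,80
north,
south,100
north,90
"""


def mean_by_group(text, key, value):
    """Average `value` per `key`, skipping rows where `value` is missing."""
    groups = defaultdict(list)
    for row in csv.DictReader(io.StringIO(text)):
        if not row[value]:  # skip missing values, like dropna()
            continue
        groups[row[key]].append(float(row[value]))
    # One average per group, like groupby(...).mean()
    return {k: mean(v) for k, v in groups.items()}


print(mean_by_group(RAW, "region", "sales"))
```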

3. Web Development

Python’s role in web development is growing thanks to frameworks like Django and Flask, which provide robust, scalable, and secure solutions for building web applications. With the increasing demand for interactive websites and APIs, Python is well-positioned to continue serving as a top language for backend development. Its integration with cloud computing platforms will also fuel its growth in building modern web applications that scale efficiently.
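Flask and Django can both run as WSGI applications, WSGI being the standard interface (PEP 3333) between Python web apps and web servers. A minimal sketch of a JSON API endpoint written directly against WSGI with the standard library; the `/api/health` route is hypothetical. Frameworks add routing, validation, and security on top of this same kind of callable.

```python
import json
from wsgiref.util import setup_testing_defaults


def app(environ, start_response):
    """A WSGI application: receives the request environ, returns an iterable of bytes."""
    if environ.get("PATH_INFO") == "/api/health":  # hypothetical route
        status, payload = "200 OK", {"status": "ok"}
    else:
        status, payload = "404 Not Found", {"error": "not found"}
    body = json.dumps(payload).encode("utf-8")
    start_response(status, [("Content-Type", "application/json"),
                            ("Content-Length", str(len(body)))])
    return [body]


def call(path):
    """Invoke the app directly, without a server, as a quick check."""
    environ = {}
    setup_testing_defaults(environ)  # fills in the required WSGI keys
    environ["PATH_INFO"] = path
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    body = b"".join(app(environ, start_response))
    return captured["status"], json.loads(body)


print(call("/api/health"))  # ('200 OK', {'status': 'ok'})
```

For local development, `wsgiref.simple_server.make_server("127.0.0.1", 8000, app).serve_forever()` serves it; in production a dedicated WSGI server such as Gunicorn is the usual choice.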

4. Automation and Scripting

Automation is another area where Python excels. Developers use Python to automate tasks ranging from system administration to testing and deployment. With the rise of DevOps practices and the growing demand for workflow automation, Python’s role in streamlining repetitive processes will continue to grow. Businesses across industries will rely on Python to boost productivity, reduce errors, and optimize performance. With the aid of Best Online Training & Placement Programs, which offer comprehensive training and job placement support to anyone looking to develop their talents, it’s easier to learn this tool and advance your career.
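As a small example of the repetitive chores Python scripts take over, here is a sketch that sorts the files in a folder into subfolders named after their extensions, using only `pathlib` and `shutil`. The folder layout is an assumption; try it on a copy of your data first.

```python
import shutil
from pathlib import Path


def organize_by_extension(folder):
    """Move each file in `folder` into a subfolder named after its extension."""
    folder = Path(folder)
    moved = {}
    for item in list(folder.iterdir()):  # snapshot before creating subfolders
        if not item.is_file():
            continue
        # Files without an extension go into "no_extension"
        ext = item.suffix.lower().lstrip(".") or "no_extension"
        target_dir = folder / ext
        target_dir.mkdir(exist_ok=True)
        shutil.move(str(item), str(target_dir / item.name))
        moved[item.name] = ext
    return moved
```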

5. Cybersecurity and Ethical Hacking

With cyber threats becoming increasingly sophisticated, cybersecurity is a critical concern for businesses worldwide. Python is widely used for penetration testing, vulnerability scanning, and threat detection due to its simplicity and effectiveness. Libraries like Scapy (for crafting and analyzing network packets) and PyCryptodome (the maintained fork of the now-abandoned PyCrypto) make Python an excellent choice for ethical hackers and security professionals. As the need for robust cybersecurity measures increases, Python’s role in safeguarding digital assets will continue to grow.
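One common building block of security tooling is file-integrity monitoring: record a cryptographic hash of each file, then flag any file whose hash later changes. A standard-library sketch using `hashlib.sha256`; the baseline is kept in memory here, whereas a real tool would store it somewhere tamper-resistant.

```python
import hashlib
from pathlib import Path


def sha256_of(path, chunk_size=65536):
    """Hash a file in chunks so large files never need to fit in memory."""
    digest = hashlib.sha256()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def snapshot(paths):
    """Record a baseline mapping of path -> hash."""
    return {str(p): sha256_of(p) for p in paths}


def changed_files(baseline):
    """Return paths whose current hash differs from the baseline, or that vanished."""
    changed = []
    for path, old_hash in baseline.items():
        if not Path(path).exists() or sha256_of(path) != old_hash:
            changed.append(path)
    return changed
```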

6. Internet of Things (IoT)

Python’s compatibility with microcontrollers and embedded systems makes it a strong contender in the growing field of IoT. Lean implementations such as MicroPython and CircuitPython run directly on microcontrollers, letting developers build IoT applications efficiently, whether for home automation, smart cities, or industrial systems. As the number of connected devices continues to rise, Python will remain a dominant language for creating scalable and reliable IoT solutions.

7. Cloud Computing and Serverless Architectures

The rise of cloud computing and serverless architectures has created new opportunities for Python. Cloud platforms like AWS, Google Cloud, and Microsoft Azure all support Python, allowing developers to build scalable and cost-efficient applications. With its flexibility and integration capabilities, Python is perfectly suited for developing cloud-based applications, serverless functions, and microservices.
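On AWS Lambda, for instance, a Python serverless function is an ordinary function that receives an `event` and a `context`. A minimal sketch, assuming the function sits behind an API Gateway proxy integration, which expects a response with `statusCode` and `body`; the `name` query parameter is hypothetical.

```python
import json


def lambda_handler(event, context):
    """Entry point Lambda invokes; `event` carries the request, `context` runtime info."""
    # API Gateway proxy events put query parameters here (None when there are none).
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")  # hypothetical parameter
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }


# Local check with a hand-made event; no AWS account needed.
print(lambda_handler({"queryStringParameters": {"name": "Ada"}}, None))
```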

8. Gaming and Virtual Reality

Python has long been used in game development, with libraries such as Pygame offering simple tools to create 2D games. However, as gaming and virtual reality (VR) technologies evolve, Python’s role in developing immersive experiences will grow. The language’s ease of use and integration with game engines will make it a popular choice for building gaming platforms, VR applications, and simulations.

9. Expanding Job Market

As Python’s applications continue to grow, so does the demand for Python developers. From startups to tech giants like Google, Facebook, and Amazon, companies across industries are seeking professionals who are proficient in Python. The increasing adoption of Python in various fields, including data science, AI, cybersecurity, and cloud computing, ensures a thriving job market for Python developers in the future.

10. Constant Evolution and Community Support

Python’s open-source nature means that it’s constantly evolving with new libraries, frameworks, and features. Its vibrant community of developers contributes to its growth and ensures that Python stays relevant to emerging trends and technologies. Whether it’s a new tool for AI or a breakthrough in web development, Python’s community is always working to improve the language and make it more efficient for developers.

Conclusion

Python’s future is bright, with its presence continuing to grow in AI, data science, automation, web development, and beyond. As industries become increasingly data-driven, automated, and connected, Python’s simplicity, versatility, and strong community support make it an ideal choice for developers. Whether you are a beginner looking to start your coding journey or a seasoned professional exploring new career opportunities, learning Python offers long-term benefits in a rapidly evolving tech landscape.

AI Agent Development: How to Create Intelligent Virtual Assistants for Business Success

In today's digital landscape, businesses are increasingly turning to AI-powered virtual assistants to streamline operations, enhance customer service, and boost productivity. AI agent development is at the forefront of this transformation, enabling companies to create intelligent, responsive, and highly efficient virtual assistants. In this blog, we will explore how to develop AI agents and leverage them for business success.

Understanding AI Agents and Virtual Assistants

AI agents, or intelligent virtual assistants, are software programs that use artificial intelligence, machine learning, and natural language processing (NLP) to interact with users, automate tasks, and make decisions. These agents can be deployed across various platforms, including websites, mobile apps, and messaging applications, to improve customer engagement and operational efficiency.

Key Features of AI Agents

Natural Language Processing (NLP): Enables the assistant to understand and process human language.

Machine Learning (ML): Allows the assistant to improve over time based on user interactions.

Conversational AI: Facilitates human-like interactions.

Task Automation: Handles repetitive tasks like answering FAQs, scheduling appointments, and processing orders.

Integration Capabilities: Connects with CRM, ERP, and other business tools for seamless operations.

Steps to Develop an AI Virtual Assistant

1. Define Business Objectives

Before developing an AI agent, it is crucial to identify the business goals it will serve. Whether it's improving customer support, automating sales inquiries, or handling HR tasks, a well-defined purpose ensures the assistant aligns with organizational needs.

2. Choose the Right AI Technologies

Selecting the right technology stack is essential for building a powerful AI agent. Key technologies include:

NLP frameworks: OpenAI's GPT, Google's Dialogflow, or Rasa.

Machine Learning Platforms: TensorFlow, PyTorch, or Scikit-learn.

Speech Recognition: Amazon Lex, IBM Watson, or Microsoft Azure Speech.

Cloud Services: AWS, Google Cloud, or Microsoft Azure.

3. Design the Conversation Flow

A well-structured conversation flow is crucial for user experience. Define intents (what the user wants) and responses to ensure the AI assistant provides accurate and helpful information. Tools like chatbot builders or decision trees help streamline this process.
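Intents and responses can be prototyped before committing to a framework such as Dialogflow or Rasa. A deliberately simple sketch: each hypothetical intent has a keyword set, a message is matched to the intent with the most overlapping words, and anything unmatched falls back to a human handoff. Production assistants use trained NLU models rather than keyword overlap.

```python
import re

# Hypothetical intents: keywords that signal each one, plus a canned response.
INTENTS = {
    "opening_hours": ({"open", "hours", "close", "closing"},
                      "We are open 9am to 6pm, Monday to Friday."),
    "order_status": ({"order", "status", "shipped", "tracking"},
                     "Please share your order number and I'll check it."),
    "book_appointment": ({"book", "appointment", "schedule"},
                         "Sure, which day works for you?"),
}
FALLBACK = "Sorry, I didn't catch that. Let me connect you with a human agent."


def detect_intent(message):
    """Return the intent whose keywords overlap most with the message, or None."""
    words = set(re.findall(r"[a-z']+", message.lower()))
    best, best_score = None, 0
    for name, (keywords, _) in INTENTS.items():
        score = len(words & keywords)
        if score > best_score:
            best, best_score = name, score
    return best


def respond(message):
    """Reply with the matched intent's response, or hand off to a human."""
    intent = detect_intent(message)
    return INTENTS[intent][1] if intent else FALLBACK


print(respond("Where is my order? Has it shipped?"))
```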

4. Train the AI Model

Training an AI assistant involves feeding it relevant datasets to improve its accuracy. This may include:

Supervised Learning: Using labeled datasets for training.

Reinforcement Learning: Allowing the assistant to learn from interactions.

Continuous Learning: Updating models based on user feedback and new data.

5. Test and Optimize

Before deployment, rigorous testing is essential to refine the AI assistant's performance. Conduct:

User Testing: To evaluate usability and responsiveness.

A/B Testing: To compare different versions for effectiveness.

Performance Analysis: To measure speed, accuracy, and reliability.
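For A/B testing, deciding which version is more effective needs a statistical check, not just a higher number. A sketch of a two-proportion z-test comparing, for example, the task-completion rates of two assistant versions. The counts are made up, and the test relies on a normal approximation that is only reasonable with fairly large samples.

```python
from math import erf, sqrt


def two_proportion_z_test(success_a, total_a, success_b, total_b):
    """Return (z, two-sided p-value) for H0: both versions share one success rate."""
    p_a = success_a / total_a
    p_b = success_b / total_b
    # Pooled success rate under the null hypothesis
    p_pool = (success_a + success_b) / (total_a + total_b)
    se = sqrt(p_pool * (1 - p_pool) * (1 / total_a + 1 / total_b))
    z = (p_b - p_a) / se
    # Standard normal CDF via the error function: Phi(x) = (1 + erf(x / sqrt(2))) / 2
    p_value = 2 * (1 - 0.5 * (1 + erf(abs(z) / sqrt(2))))
    return z, p_value


# Made-up counts: version A resolved 420 of 1000 chats, version B 470 of 1000.
z, p = two_proportion_z_test(420, 1000, 470, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```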

6. Deploy and Monitor

Once the AI assistant is live, continuous monitoring and optimization are necessary to enhance user experience. Use analytics to track interactions, identify issues, and implement improvements over time.
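As an illustration of the kind of analytics worth tracking, a sketch that summarizes hypothetical interaction logs into a fallback rate (the share of messages the assistant could not match to an intent) and a 95th-percentile response latency. The log fields are assumptions, not any particular platform's schema.

```python
from statistics import quantiles

# Hypothetical log records: matched intent (None means the fallback fired) and latency.
LOGS = [
    {"intent": "order_status", "latency_ms": 120},
    {"intent": None, "latency_ms": 310},
    {"intent": "opening_hours", "latency_ms": 95},
    {"intent": "order_status", "latency_ms": 140},
    {"intent": "book_appointment", "latency_ms": 180},
    {"intent": None, "latency_ms": 260},
    {"intent": "opening_hours", "latency_ms": 88},
    {"intent": "order_status", "latency_ms": 150},
    {"intent": "order_status", "latency_ms": 130},
    {"intent": "book_appointment", "latency_ms": 200},
]


def summarize(records):
    """Fallback rate and 95th-percentile latency for a batch of interactions."""
    fallbacks = sum(1 for r in records if r["intent"] is None)
    latencies = [r["latency_ms"] for r in records]
    # With n=20, quantiles() returns 19 cut points; the last is the 95th percentile.
    p95 = quantiles(latencies, n=20, method="inclusive")[-1]
    return {"fallback_rate": fallbacks / len(records), "p95_latency_ms": p95}


print(summarize(LOGS))
```

A rising fallback rate points to missing intents or training data; a rising p95 latency points to performance problems.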

Benefits of AI Virtual Assistants for Businesses

1. Enhanced Customer Service

AI-powered virtual assistants provide 24/7 support, instantly responding to customer queries and reducing response times.

2. Increased Efficiency

By automating repetitive tasks, businesses can save time and resources, allowing employees to focus on higher-value tasks.

3. Cost Savings

AI assistants reduce the need for large customer support teams, leading to significant cost reductions.

4. Scalability

Unlike human agents, AI assistants can handle multiple conversations simultaneously, making them highly scalable solutions.

5. Data-Driven Insights

AI assistants gather valuable data on customer behavior and preferences, enabling businesses to make informed decisions.

Future Trends in AI Agent Development

1. Hyper-Personalization

AI assistants will leverage deep learning to offer more personalized interactions based on user history and preferences.

2. Voice and Multimodal AI

The integration of voice recognition and visual processing will make AI assistants more interactive and intuitive.

3. Emotional AI

Advancements in AI will enable virtual assistants to detect and respond to human emotions for more empathetic interactions.

4. Autonomous AI Agents

Future AI agents will not only respond to queries but also proactively assist users by predicting their needs and taking independent actions.

Conclusion

AI agent development is transforming the way businesses interact with customers and streamline operations. By leveraging cutting-edge AI technologies, companies can create intelligent virtual assistants that enhance efficiency, reduce costs, and drive business success. As AI continues to evolve, embracing AI-powered assistants will be essential for staying competitive in the digital era.

Exploring Python: Features and Where It's Used

Python is a versatile programming language that has gained significant popularity in recent times. It's known for its ease of use, readability, and adaptability, making it an excellent choice for both newcomers and experienced programmers. In this article, we'll delve into the specifics of what Python is and explore its various applications.

What is Python?

Python is a high-level, general-purpose, interpreted programming language. Created by Guido van Rossum and first released in 1991, it is designed to prioritize code readability and simplicity, with a clean and minimalistic syntax. Indentation is part of the syntax itself: code blocks are defined by whitespace rather than braces, which keeps code consistently laid out and makes it easier to write and comprehend.

Key Traits of Python:

Simplicity and Readability: Python code is structured in a way that's easy to read and understand. This reduces the time and effort required for both creating and maintaining software.

Python code example: print("Hello, World!")

Versatility: Python is applicable across various domains, from web development and scientific computing to data analysis, artificial intelligence, and more.

Python code example: import numpy as np

Extensive Standard Library: Python offers an extensive collection of pre-built libraries and modules. These resources provide developers with ready-made tools and functions to tackle complex tasks efficiently.

Python code example: import json

Compatibility Across Platforms: Python is available on multiple operating systems, including Windows, macOS, and Linux. This allows programmers to create and run code seamlessly across different platforms.
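Much of that portability comes from the standard library hiding operating-system differences. A small sketch: `pathlib` builds paths with the correct separator for the current OS, and `platform.system()` reports which OS the code is running on. The `data/reports` folder is only an example.

```python
import platform
from pathlib import Path

# The same line yields data\reports\summary.csv on Windows
# and data/reports/summary.csv on macOS and Linux.
report = Path("data") / "reports" / "summary.csv"

print(platform.system())  # e.g. "Windows", "Darwin" (macOS) or "Linux"
print(report)
print(report.suffix)  # ".csv" on every platform
```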

Strong Community Support: Python boasts an active community of developers who contribute to its growth and provide support through online forums, documentation, and open-source contributions. This community support makes Python an excellent choice for developers seeking assistance or collaboration.

Where is Python Utilized?

Due to its versatility, Python is utilized in various domains and industries. Some key areas where Python is widely applied include:

Web Development: Python is highly suitable for web development tasks. It offers powerful frameworks like Django and Flask, simplifying the process of building robust web applications. The simplicity and readability of Python code enable developers to create clean and maintainable web applications efficiently.

Data Science and Machine Learning: Python has become the go-to language for data scientists and machine learning practitioners. Its extensive libraries such as NumPy, Pandas, and SciPy, along with specialized libraries like TensorFlow and PyTorch, facilitate a seamless workflow for data analysis, modeling, and implementing machine learning algorithms.

Scientific Computing: Python is extensively used in scientific computing and research due to its rich scientific libraries and tools. Libraries like SciPy, Matplotlib, and NumPy enable efficient handling of scientific data, visualization, and numerical computations, making Python indispensable for scientists and researchers.

Automation and Scripting: Python's simplicity and versatility make it a preferred language for automating repetitive tasks and writing scripts. Its comprehensive standard library empowers developers to automate various processes within the operating system, network operations, and file manipulation, making it popular among system administrators and DevOps professionals.

Game Development: Python's ease of use and availability of libraries like Pygame make it an excellent choice for game development. Developers can create interactive and engaging games efficiently, and the language's simplicity allows for quick prototyping and development cycles.

Internet of Things (IoT): Lightweight implementations such as MicroPython and CircuitPython run directly on microcontrollers, making Python suitable for developing applications for the Internet of Things. They enable developers to work with sensors, create interactive hardware projects, and connect devices to the internet.

Python's versatility and simplicity have made it one of the most widely used programming languages across diverse domains. Its clean syntax, extensive libraries, and cross-platform compatibility make it a powerful tool for developers. Whether for web development, data science, automation, or game development, Python proves to be an excellent choice for programmers seeking efficiency and user-friendliness. If you're considering learning a programming language or expanding your skills, Python is undoubtedly worth exploring.

ChatGPT made it possible for anyone to play with powerful artificial intelligence, but the inner workings of the world-famous chatbot remain a closely guarded secret.

In recent months, however, efforts to make AI more “open” seem to have gained momentum. In May, someone leaked a model from Meta, called Llama, which gave outsiders access to its underlying code as well as the “weights” that determine how it behaves. Then, this July, Meta chose to make an even more powerful model, called Llama 2, available for anyone to download, modify, and reuse. Meta’s models have since become an extremely popular foundation for many companies, researchers, and hobbyists building tools and applications with ChatGPT-like capabilities.

“We have a broad range of supporters around the world who believe in our open approach to today’s AI ... researchers committed to doing research with the model, and people across tech, academia, and policy who see the benefits of Llama and an open platform as we do,” Meta said when announcing Llama 2. This morning, Meta released another model, Code Llama, that is fine-tuned for coding.

It might seem as if the open source approach, which has democratized access to software, ensured transparency, and improved security for decades, is now poised to have a similar impact on AI.

Not so fast, says a group behind a research paper that examines the reality of Llama 2 and other AI models that are described, in some way or another, as “open.” The researchers, from Carnegie Mellon University, the AI Now Institute, and the Signal Foundation, say that models that are branded “open” may come with catches.

Llama 2 is free to download, modify, and deploy, but it is not covered by a conventional open source license. Meta’s license prohibits using Llama 2 to train other language models, and it requires a special license if a developer deploys it in an app or service with more than 700 million monthly active users.

This level of control means that Llama 2 may provide significant technical and strategic benefits to Meta—for example, by allowing the company to benefit from useful tweaks made by outside developers when it uses the model in its own apps.

Models that are released under normal open source licenses, like GPT-Neo from the nonprofit EleutherAI, are more fully open, the researchers say. But it is difficult for such projects to get on an equal footing.

First, the data required to train advanced models is often kept secret. Second, software frameworks required to build such models are often controlled by large corporations. The two most popular ones, TensorFlow and PyTorch, are maintained by Google and Meta, respectively. Third, the computing power required to train a large model is also beyond the reach of any normal developer or company, typically requiring tens or hundreds of millions of dollars for a single training run. And finally, the human labor required to finesse and improve these models is also a resource that is mostly only available to big companies with deep pockets.

The way things are headed, one of the most important technologies in decades could end up enriching and empowering just a handful of companies, including OpenAI, Microsoft, Meta, and Google. If AI really is such a world-changing technology, then the greatest benefits might be felt if it were made more widely available and accessible.

“What our analysis points to is that openness not only doesn’t serve to ‘democratize’ AI,” Meredith Whittaker, president of Signal and one of the researchers behind the paper, tells me. “Indeed, we show that companies and institutions can and have leveraged ‘open’ technologies to entrench and expand centralized power.”

Whittaker adds that the myth of openness should be a factor in much-needed AI regulations. “We do badly need meaningful alternatives to technology defined and dominated by large, monopolistic corporations—especially as AI systems are integrated into many highly sensitive domains with particular public impact: in health care, finance, education, and the workplace,” she says. “Creating the conditions to make such alternatives possible is a project that can coexist with, and even be supported by, regulatory movements such as antitrust reforms.”

Beyond checking the power of big companies, making AI more open could be crucial to unlock the technology’s best potential—and avoid its worst tendencies.

If we want to understand how capable the most advanced AI models are, and mitigate risks that could come with deployment and further progress, it might be better to make them open to the world’s scientists.

Just as security through obscurity never really guarantees that code will run safely, guarding the workings of powerful AI models may not be the smartest way to proceed.

Developing and Deploying AI/ML Applications on Red Hat OpenShift AI with Hawkstack

Artificial Intelligence (AI) and Machine Learning (ML) are driving innovation across industries—from predictive analytics in healthcare to real-time fraud detection in finance. But building, scaling, and maintaining production-grade AI/ML solutions remains a significant challenge. Enter Red Hat OpenShift AI, a powerful platform that brings together the flexibility of Kubernetes with enterprise-grade ML tooling. And when combined with Hawkstack, organizations can supercharge observability and performance tracking throughout their AI/ML lifecycle.

Why Red Hat OpenShift AI?

Red Hat OpenShift AI (formerly Red Hat OpenShift Data Science) is a robust enterprise platform designed to support the full AI/ML lifecycle—from development to deployment. Key benefits include:

Scalability: Native Kubernetes integration allows seamless scaling of ML workloads.

Security: Red Hat’s enterprise security practices ensure that ML pipelines are secure by design.

Flexibility: Supports a variety of tools and frameworks, including Jupyter Notebooks, TensorFlow, PyTorch, and more.

Collaboration: Built-in tools for team collaboration and continuous integration/continuous deployment (CI/CD).

Introducing Hawkstack: Observability for AI/ML Workloads

As you move from model training to production, observability becomes critical. Hawkstack, a lightweight and extensible observability framework, integrates seamlessly with Red Hat OpenShift AI to provide real-time insights into system performance, data drift, model accuracy, and infrastructure metrics.

Hawkstack + OpenShift AI: A Powerful Duo

By integrating Hawkstack with OpenShift AI, you can:

Monitor ML Pipelines: Track metrics across training, validation, and deployment stages.

Visualize Performance: Dashboards powered by Hawkstack allow teams to monitor GPU/CPU usage, memory footprint, and latency.

Enable Alerting: Proactively detect model degradation or anomalies in your inference services.

Optimize Resources: Fine-tune resource allocation based on telemetry data.

Workflow: Developing and Deploying ML Apps

Here’s a high-level overview of what a modern AI/ML workflow looks like on OpenShift AI with Hawkstack:

1. Model Development

Data scientists use tools like JupyterLab or VS Code on OpenShift AI to build and train models. Libraries such as scikit-learn, XGBoost, and Hugging Face Transformers are pre-integrated.

2. Pipeline Automation

Using Red Hat OpenShift Pipelines (Tekton), you can automate training and evaluation pipelines. Integrate CI/CD practices to ensure robust and repeatable workflows.

3. Model Deployment

Leverage OpenShift AI’s serving layer to deploy models using Seldon Core, KServe, or OpenVINO Model Server—all containerized and scalable.

4. Monitoring and Feedback with Hawkstack

Once deployed, Hawkstack takes over to monitor inference latency, throughput, and model accuracy in real time. Anomalies can be fed back into the training pipeline, enabling continuous learning and adaptation.
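The post does not say how Hawkstack detects drift, so what follows is a generic technique, not Hawkstack's API. The Population Stability Index (PSI) compares the distribution of a feature at training time with what the model sees in production, and a threshold on it can trigger retraining. The bin count and the 0.2 threshold are common rules of thumb, used here as assumptions.

```python
import math


def psi(expected, actual, bins=10, eps=1e-6):
    """Population Stability Index between a reference sample and a live sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # guard against a constant feature

    def proportions(values):
        counts = [0] * bins
        for v in values:
            # Clamp so live values outside the reference range land in the edge bins.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # eps avoids log(0) for empty bins
        return [max(c / len(values), eps) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def should_retrain(reference, live, threshold=0.2):
    """Assumed rule of thumb: PSI above about 0.2 signals significant drift."""
    return psi(reference, live) > threshold
```

In a pipeline, `should_retrain` would run on a schedule against recent inference inputs, and a `True` result would kick off the training pipeline.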

Real-World Use Case

A leading financial services firm recently implemented OpenShift AI and Hawkstack to power their loan approval engine. Using Hawkstack, they detected a model drift issue caused by seasonal changes in application data. Alerts enabled retraining to be triggered automatically, ensuring their decisions stayed fair and accurate.

Conclusion

Deploying AI/ML applications in production doesn’t have to be daunting. With Red Hat OpenShift AI, you get a secure, scalable, and enterprise-ready foundation. And with Hawkstack, you add observability and performance intelligence to every stage of your ML lifecycle.

Together, they empower organizations to bring AI/ML innovations to market faster—without compromising on reliability or visibility.

For more details www.hawkstack.com

7 Skills You'll Build in Top AI Certification Courses

You're considering AI certification courses to advance your career, but what exactly will you learn? These programs pack tremendous value by teaching practical skills that translate directly to real-world applications. Let's explore the seven key capabilities you'll develop through quality AI certification courses.

1. Machine Learning Fundamentals

Your journey begins with understanding how machines learn from data. You'll master supervised and unsupervised learning techniques, working with algorithms like linear regression, decision trees, and clustering methods. These foundational concepts form the backbone of AI systems, and you'll practice implementing them through hands-on projects that simulate actual business scenarios.

2. Deep Learning and Neural Networks

Building on machine learning basics, you will dive into neural networks and deep learning architectures. You will construct and train models using frameworks like TensorFlow and PyTorch, understanding how layers, activation functions, and backpropagation work together. Through AI certification courses, you will gain confidence working with convolutional neural networks for image processing and recurrent neural networks for sequential data.
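To see how an activation function and gradient-based learning fit together without any framework, here is a sketch of a single sigmoid neuron trained by gradient descent to learn logical OR. It is the one-layer special case; backpropagation applies the same chain-rule updates layer by layer in deeper networks. The learning rate and epoch count are arbitrary choices.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(samples, lr=0.5, epochs=5000):
    """Learn weights w1, w2 and bias b by gradient descent on cross-entropy loss."""
    w1 = w2 = b = 0.0
    for _ in range(epochs):
        for (x1, x2), target in samples:
            y = sigmoid(w1 * x1 + w2 * x2 + b)  # forward pass
            error = y - target                  # dLoss/dz for sigmoid + cross-entropy
            w1 -= lr * error * x1               # gradient step for each parameter
            w2 -= lr * error * x2
            b -= lr * error
    return w1, w2, b


OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w1, w2, b = train_neuron(OR_DATA)
for (x1, x2), target in OR_DATA:
    print(x1, x2, round(sigmoid(w1 * x1 + w2 * x2 + b)))
```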

3. Natural Language Processing (NLP)

You will develop skills to make computers understand and generate human language. This includes text preprocessing, sentiment analysis, named entity recognition, and building chatbots. You'll work with transformer models and learn how technologies like GPT and BERT revolutionize language understanding. These NLP skills are increasingly valuable as businesses seek to automate customer service and content analysis.

4. Data Preprocessing and Feature Engineering

Raw data rarely comes ready for AI models. You'll learn to clean, transform, and prepare datasets effectively. This includes handling missing values, encoding categorical variables, scaling features, and creating new meaningful features from existing data. You'll understand why data preparation is often said to take up as much as 80% of a data scientist's time, and you'll master techniques to streamline this crucial process.
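Three of those steps, imputing missing values, one-hot encoding a categorical column, and min-max scaling, fit in a short standard-library sketch. The records and column names are made up; pandas and scikit-learn provide tested versions of each step.

```python
from statistics import mean

# Made-up records: "age" has a missing value, "plan" is categorical.
rows = [
    {"age": 25, "plan": "basic"},
    {"age": None, "plan": "pro"},
    {"age": 35, "plan": "basic"},
    {"age": 45, "plan": "team"},
]


def impute_mean(rows, col):
    """Replace missing values in `col` with the mean of the present ones."""
    fill = mean(r[col] for r in rows if r[col] is not None)
    return [{**r, col: fill if r[col] is None else r[col]} for r in rows]


def one_hot(rows, col):
    """Replace a categorical column with one 0/1 column per category."""
    categories = sorted({r[col] for r in rows})
    out = []
    for r in rows:
        new = {k: v for k, v in r.items() if k != col}
        for c in categories:
            new[f"{col}_{c}"] = 1 if r[col] == c else 0
        out.append(new)
    return out


def min_max_scale(rows, col):
    """Rescale `col` to the range [0, 1]."""
    lo = min(r[col] for r in rows)
    hi = max(r[col] for r in rows)
    span = (hi - lo) or 1  # avoid dividing by zero for a constant column
    return [{**r, col: (r[col] - lo) / span} for r in rows]


prepared = min_max_scale(one_hot(impute_mean(rows, "age"), "plan"), "age")
print(prepared[1])
```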

5. Model Evaluation and Optimization

Creating an AI model is just the beginning. You'll learn to evaluate model performance using metrics like accuracy, precision, recall, and F1-score. You'll master techniques for detecting and preventing overfitting, including cross-validation, regularization, and dropout. AI certification courses teach you to fine-tune hyperparameters and optimize models for production environments, ensuring your solutions perform reliably in real-world conditions.
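All four metrics follow from counts of true positives, false positives, and false negatives. A sketch for binary labels with made-up predictions; scikit-learn's `sklearn.metrics` module offers equivalent functions.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Accuracy, precision, recall and F1 for binary labels."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    # Precision: of the predicted positives, how many were right
    precision = tp / (tp + fp) if tp + fp else 0.0
    # Recall: of the actual positives, how many were found
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1: harmonic mean of precision and recall
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


y_true = [1, 0, 1, 1, 0, 1, 0, 0]
y_pred = [1, 0, 0, 1, 1, 1, 0, 0]
print(classification_metrics(y_true, y_pred))
```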

6. Ethical AI and Responsible Development

You'll explore the critical aspects of AI ethics, including bias detection and mitigation, fairness in algorithms, and privacy considerations. You'll learn frameworks for responsible AI development and understand regulatory requirements like GDPR's right to explanation. This knowledge positions you as a thoughtful practitioner who can navigate the complex ethical landscape of artificial intelligence.

7. AI Deployment and MLOps

Finally, you'll bridge the gap between development and production. You'll learn to deploy models using cloud platforms, create APIs for model serving, and implement monitoring systems to track performance over time. You'll understand containerization with Docker, orchestration with Kubernetes, and continuous integration/continuous deployment (CI/CD) pipelines for machine learning projects.

Conclusion

These seven skills represent a comprehensive toolkit for AI practitioners. The best AI certification courses combine theoretical knowledge with practical application, ensuring you can immediately apply what you've learned.

As you progress through your chosen program, you'll notice how these skills interconnect – from data preprocessing through model development to ethical deployment. This holistic understanding distinguishes certified AI professionals and provides the foundation for a successful career in artificial intelligence.

For more information, visit: https://www.ascendientlearning.com/it-training/vmware

ARM Embedded Industrial Controller BL370 Applied to Smart Warehousing Solutions

Case Details

The ARMxy BL370 series embedded industrial controller can fully leverage its high performance, flexible scalability, and industrial-grade stability in smart warehousing solutions. Below is an analysis of its typical applications in smart warehousing scenarios, based on the features of the BL370 series.

Application Scenarios of BL370 in Smart Warehousing

Smart warehousing involves automated equipment (such as AGVs, robotic arms, and conveyor belts), sensor networks, data acquisition and processing, and connectivity with cloud platforms. The following features of the BL370 series make it highly suitable for such scenarios:

High-Performance Computing and AI Support: Equipped with the Rockchip RK3562/RK3562J processor (quad-core Cortex-A53, up to 2.0GHz) and a built-in 1 TOPS NPU, it supports deep learning frameworks like TensorFlow and PyTorch, enabling image recognition (e.g., barcode scanning, shelf item detection) or path optimization in warehousing.

Rich I/O Interfaces: Supports 1-3 10/100M Ethernet ports, RS485, CAN, DI/DO, AI/AO, and other interfaces, allowing connection to sensors, PLCs, RFID readers, etc., for cargo tracking, environmental monitoring (e.g., temperature and humidity), and device control.

Flexible Scalability: Through X-series and Y-series I/O boards, users can configure RS232/485, CAN, GPIO, analog inputs/outputs, and more to adapt to various warehousing equipment (e.g., stackers, conveyors).

Communication Capabilities: Supports WiFi, 4G/5G modules, and Bluetooth, suitable for real-time data transmission to Warehouse Management Systems (WMS) or cloud platforms for inventory management and remote monitoring.

Industrial-Grade Stability: Operates in a wide temperature range of -40 to 85°C, with IP30 protection, and has passed electromagnetic compatibility and environmental adaptability tests, suitable for warehousing environments with potential vibration, dust, and temperature fluctuations.

Software Support: Comes pre-installed with BLIoTLink protocol conversion software, which supports protocols like Modbus, MQTT, and OPC UA and integrates seamlessly with WMS, ERP, or mainstream IoT cloud platforms (e.g., AWS IoT, Alibaba Cloud). Node-RED and Docker enable rapid development of warehousing automation workflows.
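Protocol conversion of this kind usually comes down to decoding raw field-bus values and re-publishing them in an IT-friendly format. The post does not describe how the bundled converter works internally, so this is only a generic sketch: two 16-bit Modbus holding registers are combined into an IEEE 754 float (big-endian word order is an assumption, since devices differ) and wrapped in a JSON payload of the kind published over MQTT. The topic layout and device ID are hypothetical, and actually publishing would require an MQTT client library.

```python
import json
import struct
import time


def registers_to_float(high, low):
    """Combine two 16-bit Modbus registers into a 32-bit IEEE 754 float.

    Assumes big-endian bytes with the high word first; some devices swap
    the words, in which case pass them in the opposite order.
    """
    raw = struct.pack(">HH", high, low)
    return struct.unpack(">f", raw)[0]


def to_mqtt_message(device_id, name, registers):
    """Build a (topic, payload) pair; the topic layout is a hypothetical convention."""
    value = registers_to_float(*registers)
    topic = f"warehouse/{device_id}/{name}"
    payload = json.dumps({"value": round(value, 3), "ts": int(time.time())})
    return topic, payload


# The register pair 0x41C8, 0x0000 encodes the float 25.0
print(to_mqtt_message("zone-a-01", "temperature", (0x41C8, 0x0000)))
```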

Specific Application Cases

Here are several typical applications of the BL370 in smart warehousing:

1. AGV Navigation and Control

Function: The BL370 serves as the core controller for AGVs (Automated Guided Vehicles), handling navigation algorithms, sensor data fusion (e.g., LiDAR, ultrasonic sensors), and path planning.

Implementation:

(1)Uses the NPU for real-time image processing (e.g., landmark or obstacle recognition).

(2)Communicates with motor drivers via CAN or RS485 to control AGV movement.

(3)Y-series I/O boards (e.g., Y95/Y96) support PWM output and pulse counting for precise control and positioning.

(4)4G/5G modules enable real-time communication with the central scheduling system.

Advantages: High-performance processor and flexible I/O configurations support complex navigation algorithms, while wide-temperature design ensures stable operation in cold storage environments.
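The pulse counting mentioned in step (3) comes down to converting encoder counts into distance and speed. The sketch below assumes a wheel-mounted incremental encoder. The pulses-per-revolution and wheel-diameter values are hypothetical placeholders rather than BL370 or Y95/Y96 specifications, and reading the counter from the I/O board is not shown.

```python
import math


def pulses_to_distance(pulses: int, pulses_per_rev: int, wheel_diameter_m: float) -> float:
    """Distance travelled in metres for a given encoder pulse count."""
    revolutions = pulses / pulses_per_rev
    return revolutions * math.pi * wheel_diameter_m


def wheel_speed(delta_pulses: int, interval_s: float,
                pulses_per_rev: int, wheel_diameter_m: float) -> float:
    """Average wheel speed in m/s over one sampling interval."""
    if interval_s <= 0:
        raise ValueError("interval_s must be positive")
    return pulses_to_distance(delta_pulses, pulses_per_rev, wheel_diameter_m) / interval_s


if __name__ == "__main__":
    # Hypothetical drive: 1024-pulse encoder on a 0.15 m wheel, sampled every 100 ms.
    PPR, WHEEL_D = 1024, 0.15
    print(f"distance: {pulses_to_distance(2048, PPR, WHEEL_D):.3f} m")
    print(f"speed: {wheel_speed(512, 0.1, PPR, WHEEL_D):.3f} m/s")
```

A differential-drive AGV would typically run this per wheel and combine the two results to estimate heading. That step is omitted here.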

2. Cargo Tracking and Inventory Management

Function: Real-time cargo tracking via RFID or barcode scanning, with data uploaded to WMS for inventory management.

Implementation:

(1)X-series I/O boards (e.g., X20) provide multiple RS232/485 interfaces to connect RFID readers or barcode scanners.

(2)BLIoTLink software converts the collected data to MQTT messages for upload to cloud platforms (e.g., ThingsBoard).

(3)Node-RED enables rapid development of data processing workflows, such as inventory anomaly alerts.

Advantages: Multiple interfaces support various device integrations, and protocol conversion software simplifies integration with existing systems.
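Steps (1) to (3) can be illustrated with a small sketch. It shows the kind of record that might be published for each RFID read, plus a simple inventory-anomaly rule of the sort one could wire up in a Node-RED flow. The topic layout, field names, tag ID, and tolerance are all hypothetical. In a real deployment, BLIoTLink or an MQTT client library would handle the actual publishing.

```python
import json
from datetime import datetime, timezone


def build_rfid_message(warehouse: str, reader_id: str, tag_id: str,
                       read_time: datetime) -> tuple[str, str]:
    """Return (topic, JSON payload) for one RFID read; the layout is hypothetical."""
    topic = f"warehouse/{warehouse}/rfid/{reader_id}"
    payload = json.dumps({
        "tag": tag_id,
        "reader": reader_id,
        "ts": read_time.astimezone(timezone.utc).isoformat(),  # normalise to UTC
    })
    return topic, payload


def inventory_anomaly(expected: int, counted: int, tolerance: int = 0) -> bool:
    """Flag a location whose counted quantity deviates from the WMS figure."""
    return abs(expected - counted) > tolerance


if __name__ == "__main__":
    t = datetime(2025, 1, 15, 8, 30, tzinfo=timezone.utc)
    topic, payload = build_rfid_message("wh1", "dock-3", "E2004700", t)
    print(topic)
    print(payload)
    print(inventory_anomaly(expected=120, counted=117, tolerance=2))
```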

3. Environmental Monitoring and Energy Management

Function: Monitors warehouse environmental conditions (temperature, humidity) and energy consumption data to optimize energy use and ensure proper storage conditions.

Implementation:

(1)Y-series I/O boards (e.g., Y51/Y52) support PT100/PT1000 RTD inputs for high-precision temperature measurement. Humidity, which RTDs cannot measure, requires a separate sensor connected through an analog input or RS485.

(2)Data is transmitted to an Energy Management System (EMS) via Ethernet or 4G.

(3)BLRAT tool enables remote access for maintenance personnel to monitor environmental status in real time.

Advantages: Wide voltage input (9-36VDC) and overcurrent protection ensure stability in complex power environments.
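An RTD channel ultimately reports a resistance, and that resistance has to be converted to temperature. The minimal sketch below assumes a standard IEC 60751 platinum sensor (alpha = 0.00385) and uses the Callendar-Van Dusen equation R(T) = R0(1 + A·T + B·T² + C·(T − 100)·T³), where the C term applies only below 0 °C. This matters for cold storage. It is not stated here whether the Y51/Y52 boards already perform this conversion in firmware, so treat the code as illustrative.

```python
import math

# IEC 60751 coefficients for platinum RTDs (alpha = 0.00385).
A = 3.9083e-3
B = -5.775e-7
C = -4.183e-12  # only used below 0 °C


def rtd_resistance(temp_c: float, r0: float = 100.0) -> float:
    """Callendar-Van Dusen resistance at temp_c (r0=100 for PT100, 1000 for PT1000)."""
    ratio = 1 + A * temp_c + B * temp_c ** 2
    if temp_c < 0:
        ratio += C * (temp_c - 100) * temp_c ** 3
    return r0 * ratio


def rtd_temperature(resistance: float, r0: float = 100.0) -> float:
    """Convert a measured resistance back to °C."""
    # Closed-form root of the quadratic part (exact for T >= 0 °C).
    t = (-A + math.sqrt(A * A - 4 * B * (1 - resistance / r0))) / (2 * B)
    if t >= 0:
        return t
    # Below 0 °C the C term applies; refine the estimate with Newton steps.
    for _ in range(20):
        f = rtd_resistance(t, r0) - resistance
        dfdt = r0 * (A + 2 * B * t + C * (4 * t ** 3 - 300 * t ** 2))
        step = f / dfdt
        t -= step
        if abs(step) < 1e-9:
            break
    return t


if __name__ == "__main__":
    print(round(rtd_temperature(138.5055), 3))  # PT100 at 100 °C → 100.0
    print(round(rtd_temperature(84.27), 1))     # a typical cold-storage PT100 reading
```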

4. Automated Equipment Control

Function: Controls conveyor belts, stackers, robotic arms, etc., for automated sorting and storage.

Implementation:

(1)X-series I/O boards (e.g., X23/X24) provide DI/DO interfaces for switching control and status detection.

(2)Supports Qt-5.15.10 for developing Human-Machine Interfaces (HMI), output via HDMI to touchscreens for operator monitoring.

(3)Docker containers deploy control programs, improving system maintainability.

Advantages: Modular I/O design enables quick adaptation to different devices, and the Linux system supports complex control logic.
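The DI/DO wiring in step (1) is usually paired with simple interlock logic. Below is a sketch of a start/stop seal-in (latch) for one conveyor, evaluated once per scan cycle. The input names are hypothetical, and reading and writing the actual X23/X24 channels is not shown. Emergency stops and other safety functions should be hardwired or handled by safety-rated devices rather than by software logic like this.

```python
from dataclasses import dataclass


@dataclass
class ConveyorInputs:
    start_pressed: bool  # momentary start push button (DI)
    stop_pressed: bool   # stop push button (DI), normalised so True = stop requested
    jam_detected: bool   # jam / overload sensor (DI)


def motor_command(inputs: ConveyorInputs, motor_running: bool) -> bool:
    """Next state of the motor-contactor DO using start/stop seal-in logic.

    Stop and jam take priority over start, so holding the start button
    cannot restart a jammed belt.
    """
    if inputs.stop_pressed or inputs.jam_detected:
        return False
    return inputs.start_pressed or motor_running


if __name__ == "__main__":
    running = False
    scans = [
        ConveyorInputs(start_pressed=True, stop_pressed=False, jam_detected=False),
        ConveyorInputs(start_pressed=False, stop_pressed=False, jam_detected=False),
        ConveyorInputs(start_pressed=True, stop_pressed=False, jam_detected=True),
    ]
    # Expected sequence: True (started), True (latched), False (jam overrides start).
    for scan in scans:
        running = motor_command(scan, running)
        print(running)
```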

Advantages of Smart Warehousing Solutions

Using the BL370 series for smart warehousing solutions offers the following benefits:

Modular Design: SOM boards and I/O board combinations meet the needs of different warehouse scales and equipment, reducing customization costs.

High Reliability: Passes EMC tests (ESD, EFT, Surge, etc.) and environmental tests (-40 to 85°C, vibration, drop), ensuring long-term stable operation.

Rapid Deployment: Pre-installed BLIoTLink and BLRAT software support mainstream protocols and cloud platforms, shortening system integration time.

Development Support: Rich development examples (Node-RED, Qt, Docker, NPU, etc.) reduce secondary development complexity, ideal for rapid prototyping and customization.

Implementation Recommendations

Hardware Selection:

(1)For scenarios requiring high-performance computing (e.g., vision processing), choose SOM372 (32GB eMMC, 4GB LPDDR4X, RK3562J).

(2)For multi-device connectivity, select BL372B (3 Ethernet ports, 2 Y-board slots).

(3)For cold storage applications, choose SOM370/371/372, supporting -40 to 85°C.

Software Configuration:

(1)Use Ubuntu 20.04 and Qt-5.15.10 to develop HMI interfaces for enhanced user interaction.

(2)Use BLIoTLink for protocol conversion with WMS/ERP. MQTT is recommended for efficient communication in low-bandwidth environments.

(3)Deploy Node-RED to design automation workflows, such as triggers for cargo inbound/outbound processes.

Network and Security:

(1)Use 4G/5G modules to ensure real-time data transmission, with BLRAT for remote maintenance.

(2)Regularly apply Linux kernel updates (the system runs kernel 5.10.198) to address potential security vulnerabilities.

Testing and Validation:

(1)Conduct electromagnetic compatibility and environmental tests before implementation to ensure stability in actual warehousing environments.

(2)Simulate high-load scenarios (e.g., multiple AGVs working collaboratively) to test system performance.

Conclusion

The ARMxy BL370 series embedded industrial controller, with its high-performance processor, flexible I/O expansion, robust software ecosystem, and industrial-grade reliability, is highly suitable for smart warehousing solutions. Whether for AGV control, cargo tracking, environmental monitoring, or automated equipment management, the BL370 provides efficient and stable support. Through its modular design and rich development resources, users can quickly build customized smart warehousing systems, improving efficiency and reducing operational costs.

Essential Data Science Tools to Master in 2025

Data science tools are the foundation of today's analytics, machine learning, and artificial intelligence. In 2025, data professionals and aspiring data scientists need to know the best tools to excel in this competitive field. With so many new tools appearing every year, choosing the right ones can be confusing. This post breaks down the data science tools every data enthusiast should learn.

Why Learning Data Science Tools is Important

In today's data-rich environment, organisations rely on insights drawn from large amounts of data when making decisions. To extract, process, analyze, and visualize that data properly, you need knowledge of and experience with a range of machine learning and analytics tools. Knowing the right data analytics tools not only lets you work more effectively but also opens the door to better, higher-paying opportunities in tech.

Top Programming Languages in Data Science

Programming languages form the base of all data science operations. The most common debate? Python vs R in data science. Here's a simple comparison to help:

Python vs R in Data Science – What's Better?

Python and R serve different purposes. Here's a short overview:

Python

Easy to learn and versatile

Supports machine learning libraries like Scikit-learn, TensorFlow

Widely used for production-ready systems

R

Great for statistical analysis

Preferred for academic or research work

Has powerful packages like ggplot2 and caret

Most professionals prefer Python because of its vast ecosystem and community support, but R remains essential for deep statistical tasks.

Top Data Analytics Tools You Should Know

Analytics tools help you turn raw data into actionable insights. They play an important role in business intelligence and in spotting trends early.

Before we jump into the list, here's why they matter in business: they speed up data processing, improve reporting, and make it easier to collaborate with teams on projects.

Popular Data Analytics Tools in 2025:

Tableau – Easy drag-and-drop dashboard creation

Power BI – Microsoft-backed tool with Excel integration

Excel – Still relevant with new data plug-ins and features

Looker – Google’s cloud analytics platform

Qlik Sense – AI-powered analytics platform

These tools offer powerful visualizations, real-time analytics, and support big data environments.

Best Tools for Data Science Projects

When handling end-to-end projects, you need tools that support data collection, cleaning, modelling, and deployment.

Understanding which tool to use at each stage can make your workflow smooth and productive.

Best Tools for Data Science Workflows:

Jupyter Notebook – Ideal for writing and testing code

Apache Spark – Handles massive datasets with ease

RapidMiner – Drag-and-drop platform for model building

Google Colab – Free cloud-based coding environment

VS Code – Lightweight IDE for data science scripting

These platforms support scripting, debugging, and model deployment—everything you need to execute a full data science pipeline.

Must-Know Tools for Machine Learning

Machine learning involves building algorithms that learn from data. So, you need tools that support experimentation, scalability, and automation.

The following tools for machine learning are essential in 2025 because they help create accurate models, automate feature engineering, and scale across large datasets.

Most Used Machine Learning Tools:

TensorFlow – Deep learning framework by Google

Scikit-learn – For traditional machine learning tasks

PyTorch – Popular among researchers and developers

Keras – Simplified interface for deep learning

H2O.ai – Open-source platform with AutoML features

These tools support neural networks, decision trees, clustering, and more.

Top AI Tools 2025 for Data Scientists

AI technologies are advancing rapidly, and keeping up with the best AI tools of 2025 is essential if you don't want to fall behind.

In 2025, AI tools help automate workflows, create synthetic data, and build smarter models of all kinds. Let's take a look at the most talked-about tools.

Emerging AI Tools in 2025:

ChatGPT Plugins – AI-powered data interaction

DataRobot – End-to-end automated machine learning

Runway ML – Creative AI for media projects

Synthesia – AI video creation from text

Google AutoML – Automates AI model creation

These tools are reshaping how we build, test, and deploy AI models.

Choosing the Right Tools for You

Every data science project is different. Your choice of tools depends on the task, data size, budget, and skill level. So how do you choose?

Here’s a simple guide to picking the best tools for data science based on your use case:

Tool Selection Tips:

For beginners: Start with Excel, Tableau, and Python

For researchers: Use R, Jupyter, and Scikit-learn

For AI/ML engineers: Leverage TensorFlow, PyTorch, and Spark

For business analysts: Try Power BI, Looker, and Qlik

Choosing the right tools helps you finish projects faster and more accurately.

Conclusion

In today's data-driven society, knowing the right data science tools is vital. Whether you're evaluating trends, designing AI models, or synthesizing reports, the right tools will help you work more efficiently. From data analytics tools to the best AI tools of 2025, this list covers everything you need to get started. Discover, experiment, and grow with these platforms, and watch your career in data science blossom.

FAQs

1. What are some of the more commonly used tools for data science in 2025?

Popular tools are Python, Tableau, TensorFlow, Power BI, and Jupyter Notebooks. These tools are used for analytics, modelling, and deployment.

2. Is Python or R better for data science?

Python is usually preferred for its flexibility and libraries, while R is used primarily for statistics and research applications.

3. Can I use Excel for data science?

Yes. Excel is still commonly used for elementary analysis and reporting, and as a stepping stone to more advanced tools like Power BI.

4. What are the best tools for machine learning beginners?

Start with Scikit-learn, Keras, and Google Colab. They offer easy interfaces and great learning resources.

5. Are AI tools replacing data scientists?

No. AI tools assist with automation, but human insight and problem-solving are still crucial in the data science process.

Scaling Inference AI: How to Manage Large-Scale Deployments

As artificial intelligence continues to transform industries, the focus has shifted from model development to operationalization, especially inference at scale. Deploying AI models into production across hundreds or thousands of nodes is a different challenge from training them. Real-time response requirements, unpredictable workloads, cost optimization, and system resilience are just a few of the complexities involved.

In this blog post, we’ll explore key strategies and architectural best practices for managing large-scale inference AI deployments in production environments.

1. Understand the Inference Workload

Inference workloads vary widely depending on the use case. Some key considerations include:

Latency sensitivity: Real-time applications (e.g., fraud detection, recommendation engines) demand low latency, whereas batch inference (e.g., customer churn prediction) is more tolerant.

Throughput requirements: High-traffic systems must process thousands or millions of predictions per second.

Resource intensity: Models like transformers and diffusion models may require GPU acceleration, while smaller models can run on CPUs.

Tailor your infrastructure to the specific needs of your workload rather than adopting a one-size-fits-all approach.

2. Model Optimization Techniques

Optimizing models for inference can dramatically reduce resource costs and improve performance:

Quantization: Convert model weights (and often activations) from 32-bit floats to 16-bit floats or 8-bit integers to reduce memory footprint and accelerate computation.

Pruning: Remove redundant or non-critical parts of the network to improve speed.

Knowledge distillation: Replace large models with smaller, faster student models trained to mimic the original.

Frameworks like TensorRT, ONNX Runtime, and Hugging Face Optimum can help implement these optimizations effectively.
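Here is a toy sketch on a plain list of weights that makes quantization and pruning concrete. It shows symmetric per-tensor int8 quantization (one scale factor for the whole tensor) and unstructured magnitude pruning. It is illustrative only. Toolkits such as TensorRT or ONNX Runtime use calibration data, per-channel scales, and optimized kernels, none of which is modeled here.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor quantization: w ≈ q * scale with q in [-127, 127]."""
    max_abs = max((abs(w) for w in weights), default=0.0)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]


def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the smallest-magnitude fraction of weights (unstructured pruning)."""
    if not 0.0 <= sparsity < 1.0:
        raise ValueError("sparsity must be in [0, 1)")
    k = int(len(weights) * sparsity)
    if k == 0:
        return list(weights)
    # Indices of the k weights with the smallest absolute value.
    drop = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


if __name__ == "__main__":
    w = [0.5, -1.27, 0.01, 0.3]
    q, scale = quantize_int8(w)
    print(q, scale)                 # int8 codes plus one float scale
    print(dequantize(q, scale))     # each value within scale/2 of the original
    print(magnitude_prune(w, 0.5))  # the two smallest weights become 0.0
```

Note that unstructured zeros like these save memory only with sparse storage, and they speed up inference only on hardware or kernels that exploit sparsity.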

3. Scalable Serving Architecture

For serving AI models at scale, consider these architectural elements:

Model servers: Tools like TensorFlow Serving, TorchServe, Triton Inference Server, and BentoML provide flexible options for deploying and managing models.

Autoscaling: Use Kubernetes (K8s) with horizontal pod autoscalers to adjust resources based on traffic.

Load balancing: Ensure even traffic distribution across model replicas with intelligent load balancers or service meshes.

Multi-model support: Use inference runtimes that allow hot-swapping models or running multiple models concurrently on the same node.

Cloud-native design is essential—containerization and orchestration are foundational for scalable inference.
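The Kubernetes Horizontal Pod Autoscaler computes its target with the ratio rule documented for it: desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). It skips the change when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below reproduces only that core arithmetic plus min/max clamping. The real controller also applies stabilization windows, scaling policies, and missing-metric handling, and those are omitted. The example metric (in-flight requests per pod) is an assumption.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core HPA scaling arithmetic, clamped to [min_replicas, max_replicas]."""
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("replicas and target must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to target: avoid flapping
    else:
        desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    # Hypothetical target of 100 in-flight requests per pod; 4 pods average 250.
    print(desired_replicas(4, current_metric=250, target_metric=100))  # → 10
```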

4. Edge vs. Cloud Inference

Deciding where inference happens—cloud, edge, or hybrid—affects latency, bandwidth, and cost:

Cloud inference provides centralized control and easier scaling.

Edge inference minimizes latency and data transfer, which is especially important for applications in autonomous vehicles, smart cameras, and IoT.

Hybrid architectures allow critical decisions to be made at the edge while sending more complex computations to the cloud.

Choose based on the tradeoffs between responsiveness, connectivity, and compute resources.

5. Observability and Monitoring

Inference at scale demands robust monitoring for performance, accuracy, and availability:

Latency and throughput metrics: Track request times, failed inferences, and traffic spikes.

Model drift detection: Monitor if input data or prediction distributions are changing, signaling potential degradation.

A/B testing and shadow deployments: Test new models in parallel with production ones to validate performance before full rollout.

Tools like Prometheus, Grafana, Seldon Core, and Arize AI can help maintain visibility and control.
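Two of the signals listed above take very little code to compute. The sketch below uses the nearest-rank method for a latency percentile. It uses the Population Stability Index (PSI) as one common drift score between a baseline histogram and a current histogram of a feature or prediction. The bin counts are hypothetical. The often-quoted PSI alert thresholds (around 0.1 and 0.25) are rules of thumb rather than a standard.

```python
import math


def percentile_nearest_rank(samples: list[float], pct: float) -> float:
    """Nearest-rank percentile, e.g. pct=95 for p95 latency."""
    if not samples or not 0 < pct <= 100:
        raise ValueError("need samples and 0 < pct <= 100")
    ordered = sorted(samples)
    rank = math.ceil(pct / 100 * len(ordered))  # 1-based rank
    return ordered[rank - 1]


def psi(baseline_counts: list[int], current_counts: list[int], eps: float = 1e-6) -> float:
    """Population Stability Index between two histograms that share the same bins."""
    if len(baseline_counts) != len(current_counts):
        raise ValueError("histograms must use the same bins")
    b_total, c_total = sum(baseline_counts), sum(current_counts)
    score = 0.0
    for b, c in zip(baseline_counts, current_counts):
        p = max(b / b_total, eps)  # eps avoids log(0) for empty bins
        q = max(c / c_total, eps)
        score += (q - p) * math.log(q / p)
    return score


if __name__ == "__main__":
    latencies_ms = [12, 15, 11, 30, 14, 13, 90, 16, 12, 15]
    print("p50:", percentile_nearest_rank(latencies_ms, 50))  # → p50: 14
    print("p95:", percentile_nearest_rank(latencies_ms, 95))  # → p95: 90
    baseline = [250, 250, 250, 250]  # hypothetical counts per bin at training time
    current = [100, 200, 300, 400]   # same bins, recent production traffic
    print(f"PSI: {psi(baseline, current):.3f}")  # → PSI: 0.228
```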

6. Cost Management

Running inference at scale can become costly without careful management:

Right-size compute instances: Don’t overprovision; match hardware to model needs.

Use spot instances or serverless options: Leverage lower-cost infrastructure when SLAs allow.

Batch low-priority tasks: Queue and batch non-urgent inferences to maximize hardware utilization.

Cost-efficiency should be integrated into deployment decisions from the start.
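Batching low-priority work can be as simple as draining a queue in fixed-size chunks, so that each model call processes several inputs at once. The sketch below is a synchronous simplification. The `run_model` argument is a hypothetical stand-in for whatever batched inference call your serving stack exposes. Production servers such as Triton Inference Server instead offer dynamic batching with a configurable queue delay.

```python
from collections import deque
from typing import Callable, Iterable, Iterator, List, TypeVar

T = TypeVar("T")
R = TypeVar("R")


def batches(items: Iterable[T], max_batch: int) -> Iterator[List[T]]:
    """Yield consecutive chunks of at most max_batch items."""
    if max_batch <= 0:
        raise ValueError("max_batch must be positive")
    batch: List[T] = []
    for item in items:
        batch.append(item)
        if len(batch) == max_batch:
            yield batch
            batch = []
    if batch:  # flush the final, partially filled batch
        yield batch


def drain_queue(queue: "deque[T]", run_model: Callable[[List[T]], List[R]],
                max_batch: int) -> List[R]:
    """Take everything currently queued and run it through the model batch by batch."""
    pending = [queue.popleft() for _ in range(len(queue))]
    results: List[R] = []
    for batch in batches(pending, max_batch):
        results.extend(run_model(batch))  # one model call per batch, not per item
    return results


if __name__ == "__main__":
    jobs = deque(range(1, 8))
    # Stand-in "model" that doubles each input; replace with a real batched call.
    print(drain_queue(jobs, lambda batch: [x * 2 for x in batch], max_batch=3))
    # → [2, 4, 6, 8, 10, 12, 14]
```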

7. Security and Governance

As inference becomes part of critical business workflows, security and compliance matter:

Data privacy: Ensure sensitive inputs (e.g., healthcare, finance) are encrypted and access-controlled.

Model versioning and audit trails: Track changes to deployed models and their performance over time.

API authentication and rate limiting: Protect your inference endpoints from abuse.

Secure deployment pipelines and strict governance are non-negotiable in enterprise environments.
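Rate limiting is often implemented as a token bucket. Each client gets a bucket that refills at a fixed rate up to a burst capacity, and each request spends one token. A minimal single-process sketch follows, and the rate and capacity values are hypothetical. A deployment with many replicas would normally enforce limits at the API gateway or in a shared store instead.

```python
import time
from typing import Callable


class TokenBucket:
    """Single-process token bucket: refill `rate_per_s` tokens/s up to `capacity`."""

    def __init__(self, rate_per_s: float, capacity: float,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate_per_s
        self.capacity = capacity
        self.tokens = capacity  # start full so an initial burst is allowed
        self.clock = clock      # injectable clock makes the class easy to test
        self.last = clock()

    def allow(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


if __name__ == "__main__":
    bucket = TokenBucket(rate_per_s=5, capacity=10)  # hypothetical: 5 req/s, bursts of 10
    accepted = sum(bucket.allow() for _ in range(15))
    print(f"accepted {accepted} of 15 back-to-back requests")
```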

Final Thoughts

Scaling AI inference isn't just about infrastructure—it's about building a robust, flexible, and intelligent ecosystem that balances performance, cost, and user experience. Whether you're powering voice assistants, recommendation engines, or industrial robotics, successful large-scale inference requires tight integration between engineering, data science, and operations.

Have questions about deploying inference at scale? Let us know what challenges you’re facing and we’ll dive in.

Unlocking the Power of Programming: Choosing Between PHP and Python Training in Chandigarh

In today’s fast-evolving digital world, programming has become an indispensable skill. Whether you're planning to start a career in software development, web design, data science, or automation, learning a programming language can open a world of opportunities. Among the most popular and versatile languages, PHP and Python stand out for their real-world applications and ease of learning.

If you’re located in or near the tech-savvy city of Chandigarh and looking to enhance your programming skills, you have access to excellent training opportunities. This article dives into the significance of both PHP and Python, compares their strengths, and helps you decide which path might be best suited for your career goals. We also highlight how you can get top-notch PHP Training in Chandigarh and Python Training in Chandigarh to jumpstart your programming journey.

Why Learn Programming in 2025?

The demand for developers continues to soar. Businesses are rapidly digitizing, startups are emerging across all industries, and automation is reshaping the job market. By learning to code, you not only improve your employability but also acquire a mindset of problem-solving, logic, and innovation.

While many languages serve specific purposes, PHP and Python offer a balanced blend of functionality, community support, and job prospects. Both are open-source, have been around for decades, and continue to be adopted in mainstream applications worldwide.

A Closer Look at PHP: The Backbone of Dynamic Websites

PHP (Hypertext Preprocessor) is a server-side scripting language mainly used for web development. Originally created in 1994, PHP has powered millions of websites, including giants like Facebook (in its early stages), Wikipedia, and WordPress. Its wide adoption makes it a valuable skill for web developers.

Key Features of PHP:

Easy to Learn: PHP has a gentle learning curve, making it ideal for beginners.

Web-Focused: It is specifically designed for server-side web development.

Open Source: Freely available with robust community support.

CMS Integration: PHP is the backbone of popular content management systems like WordPress, Joomla, and Drupal.

Database Integration: It works seamlessly with databases like MySQL and PostgreSQL.

In short, PHP is a great starting point if your goal is to build dynamic websites or manage content-driven platforms.

The Power of Python: Simple Yet Extremely Powerful

Python has emerged as one of the most beloved and versatile programming languages. Known for its clean syntax and readability, Python is used in everything from web development and automation to artificial intelligence and machine learning.

Key Features of Python:

Readable and Concise: Its code looks almost like English, making it easy to write and understand.

Multi-Purpose: From web apps and APIs to AI and data analysis, Python can do it all.

Huge Libraries: Python boasts a rich ecosystem of libraries and frameworks (like Django, Flask, NumPy, TensorFlow).

In-Demand Skill: Major tech companies like Google, Netflix, and Instagram use Python in their core development.

Community and Support: As one of the most popular languages, Python has vast documentation and an active developer community.

Python is the go-to language for professionals looking to enter fields like machine learning, data science, and backend development.

PHP vs Python: What Should You Choose?

When choosing between PHP and Python, it ultimately depends on your goals.

| Feature | PHP | Python |
| --- | --- | --- |
| Primary Use | Web Development | Web, Data Science, Automation |
| Syntax | C-style, less readable | Clean and easy to read |
| Learning Curve | Beginner-friendly | Very beginner-friendly |
| Career Paths | Web developer, CMS expert | Data scientist, AI developer, web developer |
| Job Market | Strong in web and CMS | Broad and growing in multiple domains |

If you want to build or manage websites, especially content-rich platforms, PHP is the way to go. On the other hand, if you're more interested in modern tech like machine learning or automation, Python is a better choice.

For those based in North India, the availability of professional coaching has made it easier than ever to master these languages. You can easily get industry-standard PHP Training in Chandigarh or explore the versatile and in-demand Python Training in Chandigarh to advance your career.

Why Choose Chandigarh for Programming Training?

Chandigarh, known as the City Beautiful, is not only a center for administration and education but is also rapidly evolving into a tech and startup hub. The city offers a perfect blend of modern infrastructure, affordability, and educational excellence.

Benefits of Studying in Chandigarh:

Skilled Trainers: Institutes here employ industry professionals with real-world experience.

Affordable Education: Lower cost compared to metros while maintaining high quality.

Growing Tech Ecosystem: A budding IT sector and startup culture are on the rise.

Comfortable Living: Clean, green, and well-connected city with a high standard of living.

Whether you’re a student, a job seeker, or a working professional looking to upskill, enrolling in a reliable training institute in Chandigarh can be a game-changer.

What to Look for in a Training Institute?

Choosing the right training institute is crucial for success. Here are some factors to consider:

Certified Trainers: Make sure the faculty are certified professionals with hands-on industry experience.

Practical Exposure: Go for programs that offer live projects, internships, or real-world case studies.

Updated Curriculum: Ensure the course content aligns with current industry trends and demands.

Placement Assistance: Institutes offering job support and mock interviews give you an edge.

Flexible Timings: Whether you're a student or a working professional, flexible schedules can help you balance learning and other commitments.

Institutes like CBitss Technologies have built a reputation for offering top-quality PHP and Python training with all these benefits, right in the heart of Chandigarh.

Success Stories from Chandigarh

Many successful developers have launched their careers from right here in Chandigarh. From getting placed in IT companies across India to freelancing for clients overseas, alumni of local training institutes often share stories of transformation.

For instance, Rahul, a BCA graduate from Panjab University, took a six-month course in Python and now works as a junior data analyst in an MNC in Bangalore. Similarly, Mehak, who opted for PHP training during her college summer break, now manages dynamic WordPress websites for her family’s business.

These stories are testaments to the power of skill-building when combined with the right guidance.

Final Thoughts: Your Coding Future Starts Now

In the fast-paced world of technology, standing still means falling behind. Learning PHP or Python is not just about adding another skill to your resume—it’s about staying relevant, opening new opportunities, and shaping your future.

Whether you choose PHP Training in Chandigarh to dive into the world of web development or opt for Python Training in Chandigarh to explore broader tech domains, the important thing is to take that first step.

So, what are you waiting for? Equip yourself with future-ready skills and become a part of the global programming revolution—starting right here in Chandigarh.

The Importance of Learning Python Programming in Today's Tech Landscape

In recent years, Python programming has emerged as one of the most sought-after skills in the IT industry. Its simplicity, versatility, and wide range of applications have made it the go-to language for beginners and professionals alike. Whether you’re interested in data science, web development, artificial intelligence, or automation, Python serves as a strong foundation that supports numerous domains.

As more companies adopt Python for their projects, the demand for skilled Python developers continues to rise. This trend has prompted a growing number of learners to explore structured IT classes in Pune and other tech hubs to master the language and enhance their career opportunities.

Why Python?

Python’s popularity can be attributed to several key features:

Simple and Readable Syntax: Python code is easy to write and understand, making it ideal for beginners.

Extensive Libraries and Frameworks: With libraries like Pandas, NumPy, TensorFlow, Flask, and Django, Python simplifies everything from data analysis to web development.

Large Community Support: Python has a massive global community. This means better documentation, more tutorials, and greater opportunities to collaborate and solve problems.

Cross-Platform Compatibility: Python can run on different operating systems, including Windows, macOS, and Linux, without significant changes in code.

These features make Python a versatile language that’s used in various fields, including finance, healthcare, education, and gaming.

Real-World Applications of Python

One of the main reasons learners choose Python is its real-world applicability. Here are a few areas where Python is making a difference:

Data Science and Machine Learning: Python is the backbone of many data-driven technologies. Its rich libraries support data processing, visualization, and predictive modeling.

Web Development: Frameworks like Flask and Django help developers build scalable web applications quickly.

Automation and Scripting: Repetitive tasks can be automated using Python scripts, saving time and reducing human error.

Cybersecurity: Python is often used for developing tools that test and secure systems.

Game Development: Python is less common in game development than C++ or engines such as Unity, but it is still used for simple games thanks to libraries like Pygame.

With such broad applications, it’s no surprise that structured IT classes in Pune often include Python as part of their curriculum. These courses provide learners with hands-on experience and help them build portfolios that demonstrate their skills to potential employers.

How to Start Learning Python

Starting with Python doesn’t require prior programming experience. Here are a few steps to help you begin:

Understand the Basics: Learn about data types, variables, loops, and conditional statements.

Practice Regularly: Use platforms like HackerRank, LeetCode, or Replit to solve problems.

Work on Projects: Build simple applications like a to-do list, calculator, or weather app.

Learn Libraries: Depending on your interest (data science, web dev, etc.), start exploring relevant libraries.

Join a Course: Enrolling in structured IT classes in Pune or online helps provide direction, mentorship, and access to resources.
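A few lines of plain Python are enough for a first project that combines the basics from step 1 with the to-do list idea from step 3. This is a hypothetical beginner exercise, not material from any particular course.

```python
def add_task(tasks: list[dict], title: str) -> None:
    """Append a new, not-yet-done task (a dict works as a simple record)."""
    tasks.append({"title": title, "done": False})


def complete_task(tasks: list[dict], title: str) -> bool:
    """Mark the first task with this title as done; report whether it was found."""
    for task in tasks:               # loop
        if task["title"] == title:   # conditional
            task["done"] = True
            return True
    return False


def summary(tasks: list[dict]) -> str:
    remaining = [t["title"] for t in tasks if not t["done"]]  # list comprehension
    return f"{len(remaining)} of {len(tasks)} tasks left: {', '.join(remaining)}"


if __name__ == "__main__":
    todo: list[dict] = []
    for title in ["Install Python", "Learn loops", "Build a calculator"]:
        add_task(todo, title)
    complete_task(todo, "Install Python")
    print(summary(todo))  # → 2 of 3 tasks left: Learn loops, Build a calculator
```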

Final Thoughts

For anyone looking to enter or advance in the tech industry, Python is an excellent skill to develop. Its simplicity, coupled with powerful capabilities, makes it a must-learn language in today’s digital world.

If you’re planning to learn Python through expert-led sessions and real-world projects, ITView Inspired Learning is one of the go-to institutes for quality IT classes in Pune. Their approach to hands-on learning and up-to-date curriculum ensures you’re not just learning, but preparing to thrive in a competitive job market.

Demystifying Python: Exploring 7 Exciting Capabilities of This Coding Marvel

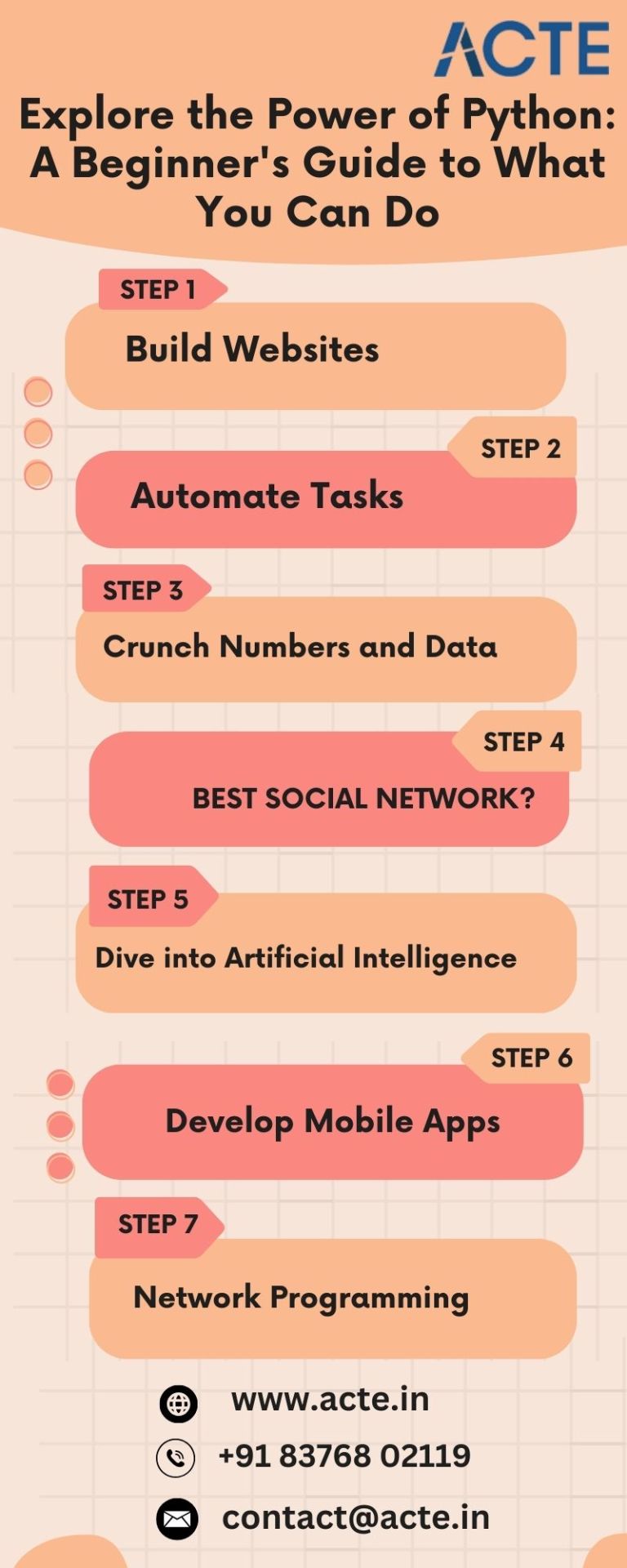

Greetings, aspiring coders! Are you ready to unravel the wonders of Python programming? If you're curious about the diverse possibilities that Python offers, you're in for a treat. Let's delve into seven captivating things you can achieve with Python, explained in simple terms by the best Python Training Institute.

1. Craft Dynamic Websites:

Python serves as the backbone for numerous websites you encounter daily. Using robust frameworks such as Django and Flask, you can build web applications and dynamic websites efficiently. Whether your ambition is to launch a personal blog or the next big social platform, Python is a reliable companion. If you want to learn Python from beginner to advanced level, I highly recommend the best Python course in Bangalore.

2. Automate Mundane Tasks:

Say goodbye to repetitive tasks! Python comes to the rescue with its automation prowess. From organizing files to sending emails and even extracting information from websites, Python's straightforward approach empowers your computer to handle these tasks autonomously.
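The file-organizing use case can be illustrated with a short script. It moves every file in a folder into a subfolder named after its extension, using only the standard library (`pathlib` and `shutil`). The folder layout is an assumption. Try it on a copy of your data first, because it moves files rather than copying them.

```python
import shutil
import tempfile
from pathlib import Path


def organize_by_extension(folder: Path) -> dict[str, int]:
    """Move each file in `folder` into `folder/<ext>/`; return a count per extension."""
    counts: dict[str, int] = {}
    for path in list(folder.iterdir()):  # snapshot, since we create dirs while looping
        if not path.is_file():
            continue
        ext = path.suffix.lower().lstrip(".") or "no_extension"
        target_dir = folder / ext
        target_dir.mkdir(exist_ok=True)
        shutil.move(str(path), str(target_dir / path.name))
        counts[ext] = counts.get(ext, 0) + 1
    return counts


if __name__ == "__main__":
    # Demo on a throwaway directory so no real files are touched.
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        for name in ["report.PDF", "photo.jpg", "notes.txt", "README"]:
            (root / name).write_text("demo")
        print(organize_by_extension(root))
```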

3. Master Data Analysis:

For those who revel in manipulating numbers and data, Python is a game-changer. Libraries like NumPy, Pandas, and Matplotlib transform data analysis into an enjoyable and accessible endeavor. Visualize data, discern patterns, and unlock the full potential of your datasets.

4. Embark on Game Development:

Surprising as it may be, Python allows you to dip your toes into the realm of game development. Thanks to libraries like Pygame, you can bring your gaming ideas to life. While you may not be creating the next AAA blockbuster, Python provides an excellent starting point for game development enthusiasts.

5. Explore Artificial Intelligence:

Python stands out as a juggernaut in the field of artificial intelligence. Leveraging libraries such as TensorFlow and PyTorch, you can construct machine learning models. Teach your computer to recognize images, comprehend natural language, and even engage in gaming – the possibilities are limitless.

6. Craft Mobile Applications:

Yes, you read that correctly. Python empowers you to develop mobile applications through frameworks like Kivy and BeeWare. Now, you can turn your app concepts into reality without the need to learn an entirely new language for each platform.

7. Mastery in Network Programming:

Python emerges as your ally in the realm of networking. Whether you aspire to create network tools, collaborate with APIs, or automate network configurations, Python simplifies the intricacies of networking.
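Python's standard-library `ipaddress` module offers a taste of network tooling. It can describe a subnet and enumerate its usable host addresses, which is the kind of building block a scanner or configuration script starts from. The subnet below is an arbitrary private example.

```python
import ipaddress


def describe_subnet(cidr: str) -> dict[str, object]:
    """Summarise an IPv4/IPv6 network given in CIDR notation."""
    net = ipaddress.ip_network(cidr, strict=True)  # strict: reject host bits set
    hosts = list(net.hosts())
    return {
        "network": str(net.network_address),
        "broadcast": str(net.broadcast_address),
        "netmask": str(net.netmask),
        "usable_hosts": len(hosts),
        "first_host": str(hosts[0]) if hosts else None,
        "last_host": str(hosts[-1]) if hosts else None,
    }


if __name__ == "__main__":
    print(describe_subnet("192.168.10.0/29"))
```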

In essence, Python can be likened to a versatile Swiss Army knife for programmers. It's approachable for beginners, flexible, and applicable across diverse domains. Whether you're drawn to web development, data science, or AI, Python stands as the ideal companion for your coding journey. So, grab your keyboard, start coding, and witness the magic of Python unfold!

What Is a Data Science Developer? Skills, Roles, and Career Path

In today's data-driven world, the role of a Data Science Developer has become pivotal in transforming raw data into actionable insights. As organizations increasingly rely on data to inform decisions, the demand for professionals adept at data analysis and software development has surged. This article delves into the multifaceted responsibilities, essential skills, and the significance of certifications for aspiring Data Science Developers.

Understanding the Role of a Data Science Developer

A Data Science Developer operates at the confluence of data science and software engineering. Their primary responsibility is to design, develop, and deploy scalable data-driven applications and models that facilitate data analysis and interpretation. Key tasks include:

Data Collection and Preprocessing: Gathering data from various sources and ensuring its quality through cleaning and preprocessing.

Model Development: Building predictive models using machine learning algorithms to extract insights.

Application Development: Creating software applications that integrate data models, enabling end-users to interact with data intuitively.

Collaboration: Working alongside data scientists, analysts, and business stakeholders to align technical solutions with organizational goals.

This role demands a blend of analytical prowess and programming expertise to bridge the gap between complex data processes and practical business applications.
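The following is a minimal sketch of the data collection and preprocessing task. It uses the standard `csv` module in place of Pandas so that it runs without extra packages. The column names `customer_id` and `amount` are assumptions for illustration only. The cleaning rules are assumptions too: trim whitespace, drop incomplete or non-numeric rows, and drop exact duplicates.

```python
import csv
import io


def clean_records(raw_csv: str) -> list[dict]:
    """Parse CSV text and return cleaned rows.

    Trims whitespace, drops rows missing required fields or holding a
    non-numeric amount, converts amounts to float, and removes duplicates.
    """
    reader = csv.DictReader(io.StringIO(raw_csv))
    seen = set()
    cleaned = []
    for row in reader:
        # Normalize whitespace in headers and values; skip overflow columns (key None).
        row = {k.strip(): (v or "").strip() for k, v in row.items() if k is not None}
        customer_id = row.get("customer_id", "")
        amount_text = row.get("amount", "")
        if not customer_id or not amount_text:
            continue  # drop incomplete rows
        try:
            amount = float(amount_text)
        except ValueError:
            continue  # drop rows whose amount is not a number
        key = (customer_id, amount)
        if key in seen:
            continue  # drop exact duplicates
        seen.add(key)
        cleaned.append({"customer_id": customer_id, "amount": amount})
    return cleaned


if __name__ == "__main__":
    sample = "customer_id, amount\n C1 , 10.5\nC2,\nC1,10.5\nC4, 7\n"
    print(clean_records(sample))
    # [{'customer_id': 'C1', 'amount': 10.5}, {'customer_id': 'C4', 'amount': 7.0}]
```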

Essential Skills for Success

To excel as a Data Science Developer, one must cultivate a diverse skill set:

Programming Proficiency: Mastery of languages such as Python and R is crucial for data manipulation and model development.

Statistical Knowledge: A solid understanding of statistics and probability aids in selecting appropriate models and interpreting results.

Machine Learning: Familiarity with machine learning techniques and frameworks like TensorFlow or scikit-learn is essential for predictive analytics.

Data Visualization: The ability to present data insights through tools like Tableau or Matplotlib enhances stakeholder communication.

Software Development: Competence in software engineering principles ensures the creation of robust and maintainable applications.

Database Management: Knowledge of SQL and NoSQL databases facilitates efficient data storage and retrieval.

These skills collectively empower Data Science Developers to transform complex data into user-friendly solutions.
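The following sketch illustrates the database skill. It stores and queries data with Python's built-in `sqlite3` module. The `orders` table, its columns, and the `top_customers` helper are hypothetical. A production system might use a server database such as PostgreSQL instead, but the SQL would look much the same.

```python
import sqlite3


def top_customers(conn: sqlite3.Connection, limit: int = 3) -> list[tuple[str, float]]:
    """Return (customer, total amount) pairs ranked by total, highest first."""
    query = """
        SELECT customer, SUM(amount) AS total
        FROM orders
        GROUP BY customer
        ORDER BY total DESC
        LIMIT ?
    """
    # Parameter binding (?) avoids building SQL strings by hand.
    return conn.execute(query, (limit,)).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")  # throwaway in-memory database
    conn.execute("CREATE TABLE orders (customer TEXT NOT NULL, amount REAL NOT NULL)")
    conn.executemany(
        "INSERT INTO orders (customer, amount) VALUES (?, ?)",
        [("asha", 120.0), ("ravi", 80.0), ("asha", 30.0), ("meera", 200.0)],
    )
    conn.commit()
    print(top_customers(conn, limit=2))  # [('meera', 200.0), ('asha', 150.0)]
    conn.close()
```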

The Importance of Certifications

In a competitive job market, certifications serve as a testament to one's expertise and commitment to professional growth. Notable certifications include:

CAIE Certified Artificial Intelligence (AI) Expert®: Validates proficiency in AI concepts and applications, enhancing one's ability to develop intelligent systems.

Certified Cyber Security Expert™ Certification: Emphasizes the importance of data security, a critical aspect when handling sensitive information.

Certified Network Security Engineer™ Certification: Focuses on securing data transmission and ensuring the integrity and confidentiality of data pipelines.

Certified Python Developer™ Certification: Demonstrates advanced Python programming skills, a cornerstone language in data science.

Machine Learning Certification: Affirms one's ability to design and implement machine learning models effectively.

These certifications not only bolster a professional's resume but also provide structured learning paths to acquire and validate essential skills.

Career Trajectory and Opportunities

The career path for Data Science Developers is both dynamic and rewarding. Starting as junior developers or data analysts, professionals can progress to roles such as:

Senior Data Scientist: Leading complex projects and mentoring junior team members.

Machine Learning Engineer: Specializing in developing and deploying machine learning models at scale.

Data Architect: Designing and managing data infrastructure to support analytics initiatives.

Chief Data Officer: Overseeing data strategy and governance at the organizational level.

This role's versatility opens doors across various industries, including finance, healthcare, e-commerce, and technology.

Conclusion

The role of a Data Science Developer is integral to harnessing the power of data in today's digital era. By combining analytical skills with software development expertise, these professionals drive innovation and informed decision-making within organizations. Pursuing relevant certifications and continuously updating one's skill set are pivotal steps toward a successful career in this field. As data continues to shape the future, demand for skilled Data Science Developers is likely to keep growing.

High-Paying Machine Learning Jobs in Mumbai You Can Land After Your Course

The demand for Machine Learning (ML) professionals is booming across the globe—and India’s financial and tech capital, Mumbai, is no exception. From fintech giants to global IT service firms and emerging AI startups, Mumbai is quickly becoming a hotspot for data-driven innovation. If you've recently completed a Machine Learning course in Mumbai, you're in a prime position to tap into a wide range of high-paying job opportunities.

In this article, we'll explore the top ML job roles, what they pay in Mumbai, the industries hiring aggressively, and the key skills you need to succeed.

Why Is Mumbai a Great City for Machine Learning Careers?

Mumbai is home to some of the most dynamic industries in India—banking, insurance, stock markets, logistics, and media. These sectors are increasingly adopting Machine Learning to automate processes, analyze big data, and improve decision-making.

Here’s why ML careers thrive in Mumbai:

Headquarters of major banks, fintech firms, and NBFCs.

A growing startup ecosystem focused on AI/ML solutions.

IT and analytics service companies with global clientele.

Competitive salaries compared to other metros.

Whether you're a fresher or transitioning from a tech or analytics role, completing a Machine Learning course in Mumbai can open doors to high-paying jobs across sectors.

High-Paying Machine Learning Job Roles in Mumbai

1. Machine Learning Engineer

Average Salary in Mumbai: ₹8–20 LPA

Top Employers: J.P. Morgan, Fractal Analytics, TCS, Quantiphi

A core ML role focused on:

Building and deploying predictive models.

Feature engineering and model optimization.

Working with large datasets using Python, TensorFlow, or PyTorch.

This is one of the most in-demand roles for course graduates with hands-on skills in supervised and unsupervised learning.
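Feature engineering often starts with simple rescaling. This minimal sketch standardizes a numeric feature to zero mean and unit variance (z-scores) using only the `statistics` module. Libraries such as scikit-learn offer the same transformation as `StandardScaler`. The function name and the sample incomes are made up for illustration.

```python
from statistics import mean, pstdev


def standardize(values: list[float]) -> list[float]:
    """Rescale values to zero mean and unit (population) standard deviation."""
    mu = mean(values)
    sigma = pstdev(values, mu)
    if sigma == 0:
        return [0.0 for _ in values]  # a constant feature carries no information
    return [(v - mu) / sigma for v in values]


if __name__ == "__main__":
    incomes = [30_000, 45_000, 60_000, 75_000, 90_000]
    print([round(z, 3) for z in standardize(incomes)])
    # [-1.414, -0.707, 0.0, 0.707, 1.414]
```

In a real pipeline the mean and standard deviation would be computed on the training split only and then reused for validation and test data.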

2. Data Scientist

Average Salary: ₹10–25 LPA

Industries Hiring: Banking, Healthcare, EdTech, Logistics

Data Scientists use ML algorithms to extract insights, build predictive systems, and support data-driven strategies. Proficiency in the following can give you an edge in Mumbai’s competitive landscape:

Statistical modeling

Data visualization

Deep learning (optional)

3. AI/ML Research Engineer

Average Salary: ₹12–28 LPA

Best For: Candidates with a strong academic or research background in AI

These roles are more R&D-oriented and focus on:

Advancing ML algorithms

Natural Language Processing (NLP)

Reinforcement learning

Often found in MNCs, research labs, or AI-first startups in Mumbai, these roles are ideal for those looking to push the boundaries of AI.

4. NLP Engineer / Chatbot Developer

Average Salary: ₹9–18 LPA

Applications in: Customer service, healthcare tech, HR automation

NLP Engineers design systems that understand and process human language. With Mumbai companies integrating chatbots and voice AI into their operations, this role is gaining popularity.

Key skills include:

NLTK, spaCy, Transformers

BERT, GPT, and other LLMs

Sentiment analysis and speech-to-text systems
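Sentiment analysis can be sketched with a toy lexicon-based scorer. This is a deliberately simplified, hypothetical example. The word list and weights are invented. Real projects would use a curated lexicon (NLTK ships one called VADER) or a fine-tuned transformer model instead.

```python
import re

# Hypothetical hand-made lexicon: positive words score above zero, negative below.
LEXICON = {"good": 1, "great": 2, "love": 2, "fast": 1,
           "bad": -1, "slow": -1, "terrible": -2, "hate": -2}
NEGATORS = {"not", "never", "no"}


def sentiment(text: str) -> str:
    """Label text as positive, negative, or neutral by summing word scores."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        weight = LEXICON.get(token, 0)
        if i > 0 and tokens[i - 1] in NEGATORS:
            weight = -weight  # "not good" flips the polarity of the next word
        score += weight
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


if __name__ == "__main__":
    print(sentiment("The delivery was great and fast"))  # positive
    print(sentiment("Support was not good and very slow"))  # negative
```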

5. Computer Vision Engineer

Average Salary: ₹10–22 LPA

Growing in: Security tech, automotive, and surveillance industries

If your course included modules on image recognition or OpenCV, this role is a lucrative niche. Mumbai-based firms working on smart city tech, autonomous vehicles, and industrial automation are hiring computer vision specialists.

6. Data Analyst with ML Specialization

Average Salary: ₹6–14 LPA

Ideal For: Freshers and professionals transitioning from non-tech roles

Data Analysts skilled in machine learning are more than just number crunchers. They build classification and regression models to support:

Marketing campaigns

Customer churn prediction

Inventory forecasting

This role serves as a strong entry point to more advanced ML careers.

7. Business Intelligence Developer (with ML Integration)

Average Salary: ₹8–16 LPA

Industries: Finance, eCommerce, Telecom

BI Developers who incorporate ML models into dashboards and analytics platforms are in high demand. This hybrid role combines:

Power BI/Tableau expertise

ML model integration via APIs

Predictive KPI tracking

Skills That Boost Your ML Earning Potential in Mumbai

To land high-paying ML jobs in Mumbai, your course should equip you with more than just theory. Recruiters look for the following job-ready skills:

Programming Languages: Python, R, SQL

Libraries & Frameworks: Scikit-learn, TensorFlow, PyTorch, Keras

Data Handling: Pandas, NumPy, Hadoop, Spark

Model Deployment: Flask, Docker, Streamlit, AWS/GCP

Soft Skills: Problem-solving, business acumen, storytelling with data

Having a GitHub portfolio, strong LinkedIn presence, and contributions to Kaggle competitions can also give you a major edge.
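On the deployment side, a trained model is often wrapped in a small HTTP endpoint. Courses usually teach Flask for this. The sketch below uses the standard library's `http.server` instead, so it runs without extra packages. The `/predict` route, the feature names, and the weights in `predict` are made-up placeholders standing in for a trained model.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def predict(features: dict) -> float:
    """Stand-in model: a hypothetical linear score with made-up weights."""
    weights = {"tenure_months": -0.02, "support_tickets": 0.15}
    return sum(weights.get(name, 0.0) * float(value) for name, value in features.items())


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/predict":
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        try:
            features = json.loads(self.rfile.read(length))
            body = json.dumps({"score": predict(features)}).encode()
            status = 200
        except (ValueError, TypeError, AttributeError):
            # Bad JSON, a non-object payload, or non-numeric feature values.
            body = json.dumps({"error": "invalid JSON features"}).encode()
            status = 400
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(host: str = "127.0.0.1", port: int = 8000) -> None:
    """Block and serve predictions until interrupted (Ctrl+C)."""
    HTTPServer((host, port), PredictHandler).serve_forever()
```

To try it, call `serve()` and POST a JSON object such as `{"tenure_months": 10, "support_tickets": 2}` to `http://127.0.0.1:8000/predict`. The Python documentation warns that `http.server` is not recommended for production. For real traffic, a framework such as Flask, typically packaged with Docker, is the usual route.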

How the Right ML Course in Mumbai Makes a Difference

Not all ML courses are equal. To truly unlock high-paying roles, choose a Machine Learning course in Mumbai that offers:

Hands-on projects (NLP, computer vision, time series, etc.)

Real-world capstone challenges

Resume & placement support

Expert faculty with industry exposure

Interview preparation modules

Training institutes with a strong industry network and placement history in Mumbai are more likely to help you break into premium roles.

Tips to Land Your First High-Paying ML Job in Mumbai

Build a strong GitHub portfolio showcasing your ML projects.

Contribute to open-source ML tools or participate in Kaggle challenges.

Network locally – attend tech meetups, conferences, and webinars in Mumbai.

Tailor your resume and LinkedIn for ML roles with measurable impact.

Practice ML case studies and system design for interviews.

Final Thoughts

Completing a Machine Learning course in Mumbai can be the first step toward an exciting and high-paying tech career. Whether you’re a fresher, a career-switcher, or a working professional looking to upskill, the city’s vibrant job market has something for everyone.

By focusing on the right roles, building real-world skills, and choosing a well-recognized training provider, you’ll position yourself for success in Mumbai’s thriving ML landscape.

#Best Data Science Courses in Mumbai#Artificial Intelligence Course in Mumbai#Data Scientist Course in Mumbai#Machine Learning Course in Mumbai

The Role of Python in AI, Machine Learning, and Data Science

Python has emerged as the dominant programming language in artificial intelligence (AI), machine learning (ML), and data science. Its simplicity, powerful libraries, and vibrant ecosystem make it the first choice for professionals and researchers alike. This article explores why Python plays such a crucial role in these fields and how it empowers innovation and efficiency.

Why Python is the Preferred Language

Python’s popularity in AI and data science stems largely from its readability, ease of learning, and vast support community.

Simple Syntax: Python’s clean, human-readable syntax allows developers to focus on solving problems rather than struggling with code structure.

Rapid Development: The language enables fast prototyping and testing of ideas, which is crucial in fields that require constant experimentation.

Large Ecosystem: Thousands of libraries and tools tailored for AI and data science make Python extremely powerful and versatile.

Python in Artificial Intelligence

AI systems often involve complex logic, automation, and interaction with large datasets. Python simplifies these tasks through a wide range of libraries:

Natural Language Processing (NLP): Libraries like NLTK, spaCy, and Hugging Face Transformers enable text analysis, sentiment detection, and language generation.

Computer Vision: OpenCV and other image processing libraries help developers build systems that can analyze and interpret visual data.

Reinforcement Learning: Python supports RL frameworks like Stable-Baselines3, making it easier to build intelligent agents that learn from their environment.