#they're computer-generated images

Text

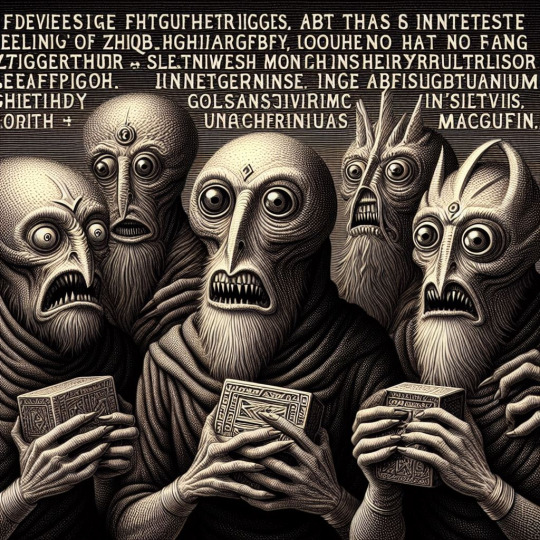

One thing I really like image generators for is just seeing how they interpret prompts that don't make sense. For example, here's:

Several fhqwhgads, feeling an intense feeling of zjierb. They have no shifgrethor but they ARE covered in slantwise unobtainium. One of them is holding the macguffin. Digital photography in the style of Hieronymus Butch.

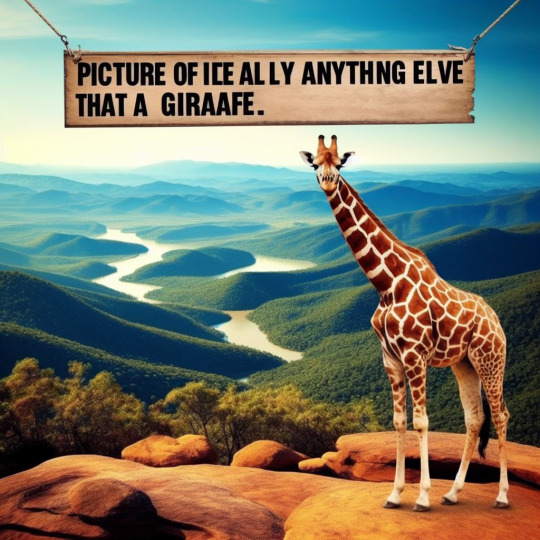

Or how about:

The gostak distims three doshes. In the background, colorless green dreams sleep furiously. photograph, in the style of ahfjhabvdhxhshdh.

And finally, here's:

A picture of literally anything other than a giraffe. No giraffes whatsoever. Not even one.

I dunno, I just like seeing how algorithms fail when prompted to do things they aren't designed to do.

#CGI#is really the best term for these I think#they're computer-generated images#no 'intelligence' involved

15 notes

Text

#it's been said that men like taurus women because they're easy#there's nothing to 'get'#computer generated image#guidance scale 1

36 notes

Text

I can't survive on Facebook cause the culture there is just "Being rude and sarcastic to everyone is my whole personality and I think that makes me special!" or "Every rich person is a hard-working go-getter and every poor person is a lazy freeloader who settles for less" or "Haha imagine a TRANS GENDERED with PRONOUN. BOTTOM TEXT" and so many recommendations of AI-generated cakes, though that could just be the algorithm for me.

#the funny part about the cakes is it's so easy to tell they're ai-generated#triple-decker wedding cake that doesn't look like anything on this earth with unnatural lighting and random floating shit around it#everyone in the comment section: oh wow this is so pretty! whoever made this was talented!#me: this is a fake image spat out by a computer told to stare at pictures of cake and pinterest boards for 300 hours

6 notes

Text

*glares at clip studio like one would a dog that is lifting its leg to pee on the carpet*

#they're going to put an AI image generator in the next update#It uses a model that scraped the internet for images without permission#Same as DeviantArt's tool#they're all Oh but we didn't steal or scrape#Yeah dA said the same thing and now it's a bigger ghost town than ever#And rightly so#Guess I'm never getting that last free update#Maybe I should seriously think about traditional again#Because what am I supposed to do when my computer gets old

4 notes

Text

Scanning electron microscopy is awesome and I personally think the images it produces are gorgeous but objectively speaking I feel like it doesn't do any favors at all for the "scary" cultural image of insects, because I mean, here's a closeup of a carpet beetle in its true colors:

And here's an SEM image that comes up for carpet beetles on google:

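And the thing about SEM images is that they aren't "photographs;" they are computer scans. They look like 3-d models, but they're actually grayscale images built up point by point as a focused beam of electrons sweeps across the surface of an object, capturing detail far finer than any light microscope can.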

Color isn't captured by this process at all, and even if it were, all the specimens would look like metal anyway (I'll explain this in a moment), so images like this have to be colored artificially. This isn't done to recreate the true colors, but to make different body parts more visible as study material, resulting in scientific images of wacky blueberry fleas:

The subtly varying transparency levels of living tissues are completely lost as well, which is why the fine hairs of insects stand out more like cactus thorns in SEM imagery, and tardigrades look like opaque leathery things with no eyes:

...Even though a tardigrade actually has eyes, they're just under the surface of a crystal clear exoskeleton:

Another thing that probably contributes to the uncanniness of SEM images is the fact that they can only show us embalmed corpses plated in metal.

It's not possible to do this fine level of scanning "instantaneously" enough for it to work on anything that's still moving, so even when you see scanning electron images of animals in various lifelike poses, it's because they're preserved specimens that were carefully positioned, or they were live specimens basically "flash frozen" by a sudden dehydration process, mummified so fast they never knew it.

Many specimens are then "sputter coated," meaning they're coated in a vacuum with an extremely thin (typically just a few nanometers thick) layer of gold, platinum or another metal so that the surface conducts electricity. That keeps electric charge from building up under the beam and lets the electrons produce that crisp, perfect scan of every fine detail.

So this is a live fruit fly:

And this is a fruit fly mummy with probably some sort of chrome plating:

5K notes

Note

whats wrong with ai?? genuinely curious <3

okay let's break it down. i'm an engineer, so i'm going to come at you from a perspective that may be different than someone else's.

i don't hate ai in every aspect. in theory, there are a lot of instances where, in fact, ai can help us do things a lot better than we could without it. here are a few examples:

ai detecting cancer

ai sorting recycling

some practical housekeeping that gemini (google ai) can do

all of the above examples are ways in which ai works with humans to do things in parallel with us. it's not overstepping--it's sorting, using pixels at a micro-level to detect abnormalities that we as humans cannot, fixing a list. these are all really small, helpful ways that ai can work with us.

everything else about ai works against us. in general, ai is a huge consumer of natural resources. every prompt that you put into character.ai, chatgpt? this wastes water + energy. it's not free. a machine somewhere in the world has to swallow your prompt, call on a model to feed data into it and process more data, and then has to generate an answer for you all in a relatively short amount of time.

that is crazy expensive. someone is paying for that, and if it isn't you with your own money, it's the strain on the power grid, the water that cools the computers, the A/C that cools the data centers. and you aren't the only person using ai. chatgpt alone gets millions of users every single day, with probably thousands of prompts per second, so multiply your personal consumption by millions, and you can start to see how the picture is becoming overwhelming.

that is energy consumption alone. we haven't even talked about how problematic ai is ethically. there is currently no regulation in the united states about how ai should be developed, deployed, or used.

what does this mean for you?

it means that anything you post online is subject to data mining by an ai model (because why would they need to ask if there are no laws to stop them? wtf does it matter what it means to you to some idiot software engineer in the back room of an office making 3x your salary?). oh, that little fic you posted to wattpad that got a lot of attention? well now it's being used to teach ai how to write. oh, that sketch you made using adobe that you want to sell? adobe didn't tell you that anything you save to the cloud is now subject to being used for their ai models, so now your art is being replicated to generate ai images in photoshop, without crediting you (they have since said they don't do this...but privacy policies were never made to be human-readable, and i can't imagine they are the only company to sneakily try this). oh, your apartment just installed a new system that will use facial recognition to let residents inside? oh, they didn't train their model on anyone but white people, so now all the black people living in that apartment building can't get into their homes. oh, you want to apply for a new job? the ai model that scans resumes learned from historical data that more men than women work that role (so the model basically thinks men are better than women), so now your resume is getting thrown out because you're a woman.

ai learns from data. and data is flawed. data is human. and as humans, we are racist, homophobic, misogynistic, transphobic, divided. so the ai models we train will learn from this. ai learns from people's creative works--their personal and artistic property. and now it's scrambling them all up to spit out generated images and written works that no one would ever want to read (because it's no longer a labor of love), and they're using that to make money. they're profiting off of people, and there's no one to stop them. they're also using generated images as marketing tools, to trick idiots on facebook, to make it so hard to be media literate that we have to question every single thing we see because now we don't know what's real and what's not.

the problem with ai is that it's doing more harm than good. and we as a society aren't doing our due diligence to understand the unintended consequences of it all. we aren't angry enough. we're so scared of stifling innovation that we're letting it regulate itself (aka letting companies decide), which has never been a good idea. we see it do one cool thing, and somehow that makes up for all the rest of the bullshit?

#yeah i could talk about this for years#i could talk about it forever#im so passionate about this lmao#anyways#i also want to point out the examples i listed are ONLY A FEW problems#there's SO MUCH MORE#anywho ai is bleh go away#ask#ask b#🐝's anons#ai

883 notes

Note

How exactly do you advance AI ethically? Considering how the data sets these tools use were sourced, wouldn't you have to start from scratch?

a: i don't agree with the assertion that "using someone else's images to train an ai" is inherently unethical - ai art is demonstrably "less copy-paste-y" for lack of a better word than collage, and nobody would argue that collage is illegal or ethically shady. i mean some people might but i don't think they're correct.

b: several people have done this already - see mitsua diffusion, et al.

c: this whole argument is a red herring. it is not relevant long-term: adobe firefly is already built exclusively off images they have legal rights to. the dataset question is irrelevant to ethical ai use, because companies already have huge vaults full of media they can train on and do so effectively.

you can cheer all you want that the artist-job-eating-machine made by adobe or disney is ethically sourced, thank god! but it'll still eat everyone's jobs. that's what you need to be caring about.

the solution here obviously is unionization, fighting for increased labor rights for people who stand to be affected by ai (as the writers guild demonstrated! they did it exactly right!), and fighting for UBI so that we can eventually decouple the act of creation from the act of survival at a fundamental level (so i can stop getting these sorts of dms).

if you're interested in actually advancing ai as a field and not devil's-advocating me, you can also participate in the FOSS (free-and-open-source) ecosystem so that adobe and disney and openai can't develop a monopoly on black-box proprietary technology, and we can have a future where anyone can create any images they want, on their computer, for free, anywhere, instead of behind a paywall they can't control.

fun fact related to that last bit: remember when getty images sued stability ai (the company behind stable diffusion) and everybody cheered? yeah anyway they're releasing their own ai generator now. crazy how literally no large company has your interests in mind.

cheers

2K notes

Text

every time i post anti ip shit in the context of the moral panic around large language models and image generation, people seem to accuse me of being a techbro who cares about ip restricting the technological progress of these models. no. i think large transformer models were interesting architecturally, but they have classic deep learning era weaknesses in terms of reliance on huge datasets, which means a computation arms race, little reliability and limited explainability. i desperately wish the hype around them would die down so people stop asking me to use a transformer model in my hyperspecialised low data, high variance work. im waiting for a paradigm shift, and transformer models are not that. they have great results! but they're boring now.

i just think intellectual property rights are horrible for the world in general. authorial rights should be limited to attribution, not control over what everyone else does with it. the endpoint of giving everyone rights to control and restrict other people's engagement with ideas is a world that ceaselessly limits autonomy – it means poisoning our computers and software with DRM shit, it means the destruction of archives, it means nasty and pervasive surveillance and an industry of lawyers debating about the intellectual provenance of work instead of a world that actually engages with work.

412 notes

Text

Recognizing AI Generated Images, Danmei Edition

Heyo, @unforth here! I run some danmei art blogs (@mdzsartreblogs, @tgcfartreblogs, @svsssartreblogs, @zhenhunartreblogs, @erhaartreblogs, @dmbjartreblogs, @tykartreblogs, and @cnovelartreblogs) which means I see a LOT of danmei art, and I go through the main fandom tags more-or-less every day.

Today, for the first time, I spotted someone posting AI-generated images (I refuse to call them AI "art" - and to be clear, I think that's correct of me, because at least in the US they literally LEGALLY can't be copyrighted) without any label indicating they were AI generated. I am not necessarily against the existence of AI-generated images (though really...considering all the legal issues and the risks of misuse, I'm basically against them); I think they potentially have uses in certain contexts (such as for making references) and I also think that regardless of our opinions, we're stuck with them, but they're also clearly not art and I don't reblog them to the art side blogs.

The images I spotted today had multiple "tells," but they were still accumulating notes, and I thought it might be a good moment to step back and point out some of the more obvious tells because my sense is that a LOT of people are against AI-generated images being treated as art, and that these people wouldn't want to support an AI-generator user who tried to foist off their work as actual artwork, but that people don't actually necessarily know how to IDENTIFY those works and therefore can inadvertently reblog works that they'd never support if they were correctly identified. (Similar to how the person who reposts and says "credit to the artist" is an asshole but they're not the same as someone who reposts without any credit at all and goes out of their way to make it look like they ARE the artist when they're not).

Toward that end, I've downloaded all the images I spotted on this person's account and I'm going to use them to highlight the things that led me to think they were AI art - they posted a total of 5 images to a few major danmei tags over the last couple of days, and several other images not tagged for specific fandoms (I examined 8 images total). I was suspicious of the first couple, but it wasn't til this morning that I spotted one so obvious that it couldn't be anything BUT AI art. I am NOT going to name the person who did this. The purpose of this post is purely educational. I have no interest whatsoever in bullying one rando. Please don't try to identify them; who they are is genuinely irrelevant. What matters is learning how to recognize AI art in general and not spreading it around, just like the goal of education about reposting is to make sure that people who repost don't get notes on their theft: once people recognize the signs, the incentive to be dishonest about this stuff is removed.

But first: Why is treating AI-generated images as art bad?

I'm no expert and this won't be exhaustive, but I do think it's important to first discuss why this matters.

On the surface, it's PERHAPS harmless for someone to post AI-generated images provided that the image is clearly labeled as AI-generated. I say "perhaps" because in the end, as far as I'm aware, there isn't a single AI-generation engine that's built on legally-sourced artwork. Every AI (again, to the best of my knowledge) has been trained using copyrighted images usually without the permission of the artists. Indeed, this is the source of multiple current lawsuits. (and another)

But putting that aside (as if it can be put aside that AI image generators are literally unethically built), it's still problematic to support the images being treated as art. Artists spend thousands of hours learning their craft, honing it, sharing their creations, building their audiences. This is what they sell when they offer commissions, prints, etc. This can never be replicated by a computer, and to treat an AI-generated image as in any way equivalent is honestly rude, inappropriate, disgusting imo. This isn't "harmless"; supporting AI image creation engines is damaging to real people and their actual livelihoods. Like, the images might be beautiful, but they're not art. I'm honestly dreading someone managing to convince fandom that their AI-generated works are actual art, and then cashing in on commissions, prints, etc., because people can't be fussed to learn the difference. We really can't let this happen, guys. Fanartists are one of the most vibrant, important, prominent groups in all our fandoms, and we have to support them and do our part to protect them.

As if those two points aren't enough, there's already growing evidence that AI-generated works are being used to spread propaganda. There are false images circulating of violence at protests, deep-fakes of various kinds that are helping the worst elements of society to push their horrid agendas. As long as that's a facet of AI-generated works, they'll always be dangerous.

I could go on, but really this isn't the main point of my post and I don't want to get bogged down. Other people have said more eloquently than I why AI-generated images are bad. Read those. (I tried to find a good one to link but sadly failed; if anyone knows a good post, feel free to send it and I'll add the link to the post).

Basically: I think a legally trained AI-image generator that had built-in clear watermarks could be a fun toy for people who want reference images or just to play with making pseudo-art. But...that's not what we have, and what we do have is built on theft and supports dystopia so, uh. Yeah fuck AI-generated images.

How to recognize AI-Generated Images Made in an Eastern Danmei Art Style

NOTE: I LEARNED ALL THE BASICS OF SPOTTING AI-GENERATED IMAGES FROM THIS POST. I'll own I still kinda had the wool over my eyes until I read that post - I knew AI stuff was out there but I hadn't really looked closely enough to have my eyes open for specific signs. Reading that entire post taught me a lot, and what I learned is the foundation of this post.

This post shouldn't be treated as a universal guide. I'm specifically looking at the tells on the kind of art that people in danmei fandoms often see coming from Weibo and other Chinese, Japanese, and Korean platforms, works made by real artists. For example, the work of Foxking (狐狸大王a), kokirapsd, and Changyang (who is an official artist for MDZS, TGCF, and other danmei works). This work shares a smooth use of color, an aim toward a certain flavor of realism, an ethereal quality to the lighting, and many other features. (Disclaimer: I am not an artist. Putting things in arty terms is really not my forte. Sorry.)

So, that's what these AI-generated images are emulating. And on the surface, they look good! Like...

...that's incontestably a pretty picture (the white box is covering the "artist's" watermark). And at a glance, it doesn't necessarily scream "AI generated"! But the devil is in the details, and the details are what this post is about. And that picture? Is definitely AI generated.

This post is based on 8 works I grabbed from a single person's account, all posted as their own work and watermarked as such. Some of the things that are giveaways only really show when looking at multiple pieces. I'm gonna start with those, and then I'll highlight some of the specifics I spotted that caused me to go from "suspicious" to "oh yeah no these are definitely not art."

Sign 1: all the images are the exact same size. I mean, to the pixel: 512 x 682 pixels (or 682 x 512, depending on landscape or portrait orientation). This makes zero sense. Why would an artist trim all their pieces to that size? It's not the ideal Tumblr display size (that's 500 x 750 pixels). If you check any actual artist's page and look at the full-size of several of their images, they'll all be different sizes as they trimmed, refined, and otherwise targeted around their original canvas size to get the results they wanted.
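If you want to check Sign 1 across a batch of downloads without opening each file, a PNG stores its pixel size in its header: the width and height are the two big-endian 32-bit numbers right after the `IHDR` tag, 16 bytes into the file. Here's a rough Python sketch, assuming the images were saved as PNG (JPEGs store their size differently and aren't handled here); the function names are made up for illustration:

```python
import struct
from collections import Counter
from pathlib import Path

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"

def png_size(header: bytes) -> tuple:
    """Return (width, height) from the first 24 bytes of a PNG file."""
    if header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        raise ValueError("not a PNG file")
    # Width and height are big-endian unsigned 32-bit ints right after "IHDR".
    width, height = struct.unpack(">II", header[16:24])
    return width, height

def size_counts(paths) -> Counter:
    """Count how many files share each size, treating 512x682 and 682x512 as the same."""
    counts = Counter()
    for path in paths:
        with open(path, "rb") as f:
            w, h = png_size(f.read(24))
        counts[tuple(sorted((w, h)))] += 1
    return counts
```

Usage would look like `size_counts(Path("downloads").glob("*.png"))`, where `downloads` is a hypothetical folder. If one size accounts for every file, that's the pattern described above; a single matching size on its own proves nothing.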

Sign 2: pixelated. At the shrunken size displayed on, say, a mobile Tumblr feed, the image looks fine, but even just opening the full size upload, the whole thing is pixelated. Now, this is probably the least useful sign; a lot of artists reduce the resolution/dpi/etc. on their uploaded works so that people don't steal them. But, taken in conjunction with everything else, it's definitely a sign.

Those are the two most obvious overall things - the things I didn't notice until I looked at all the uploads. The specifics are really what tells, though. Which leads to...

Sign 3: the overall work appears to have a very high degree of polish, as if it were made by an artist who really really knows what they're doing, but on inspection - sometimes even on really, REALLY cursory inspection - the details make zero sense and reflect the kinds of mistakes that a real artist would never make.

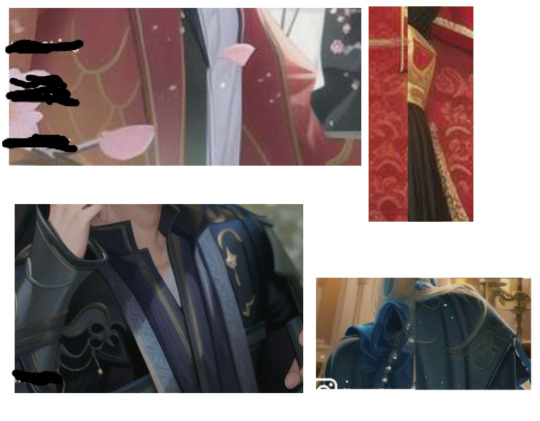

So, here's the image that "gave it away" for me, and caused me to re-examine the images that had first struck me as off but where I hadn't been able to put my finger on the problem. I've circled some of the flagrant spots.

Do you see yet? Yes? Awesome, you're getting it. No? Okay, let's go point by point, with close ups.

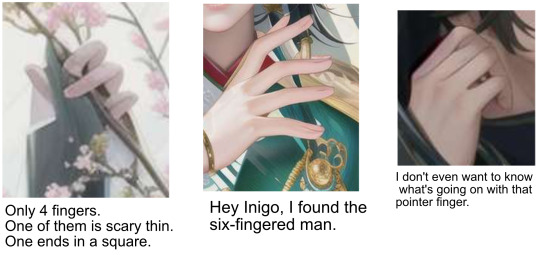

Sign 4: HANDS. Hands are currently AI's biggest weakness, though they've been getting better quickly and honestly that's terrifying. But whatever AI generated this picture clearly doesn't get hands yet, because that hand is truly an eldritch horror. Look at this thing:

It has two palms. It has seven fingers. It's basically two hands overlaid over each other, except one of those hands only has four fingers and the other has three. Seeing this hand was how I went from "umm...maybe they're fake? Maybe they're not???" to "oh god why is ANYONE reblogging this when it's this obvious?" WATCH THE HANDS. (Go back up to that first one posted and look at the hand, you'll see. Or just look right below at this crop.) Here's some other hands:

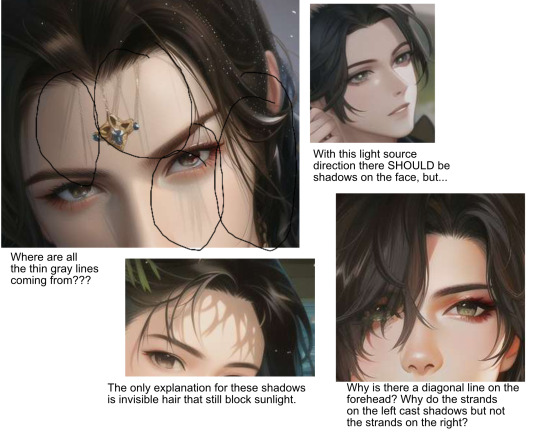

Sign 5: Hair and shadows. Once I started inspecting these images, the shadows of the hair on the face were one of the most consistently fucked-up things across all the uploaded pictures. Take a look:

There are shadows of tendrils on the forehead, but there's no corresponding hair that could possibly have made those shadows. Likewise there's a whole bunch of shadows on the cheeks. Where are those coming from? There's no possible source in the rest of the image. Here's some other hair with unrelated wonky shadows:

Sign 6: Decorative motifs that are really just meaningless squiggles. Like, artists, especially those who make fanart, put actual thought into what the small motifs are on their works. Like, in TGCF, an artist will often use a butterfly motif or a flower petal motif to reflect things about the characters. An AI, though, can only approximate a pattern and it can't imbue those with meanings. So you end up with this:

What is that? It's nothing, that's what. It's a bunch of squiggles. Here's some other meaningless squiggle motifs (and a more zoomed-in version of the one just above):

Sign 7: closely related to meaningless squiggle motifs are motifs that DO look like something, but aren't followed through in any way that makes sense. For example, an outer garment where the motifs on the left and the right shoulder/chest are completely different, or a piece of cloth that's supposed to be all one piece but has different patterns on different sections of it. Both of these happen in the example piece, see?

The first image, on the top left, shows the left and right shoulder side by side. The right side has a scalloped edge; the left doesn't. Likewise, in the top right picture, you can see the two under-robe lapels; one has a gold decoration and the other doesn't. And then the third/bottom image shows three sections of the veil. One (on the left) has that kind of blue arcy decoration, which doesn't follow the folds of the cloth very well, looks weird, and appears at one point to be OVER the hair instead of behind it. The second, on top of the bottom images, shows a similar motif, except now it's gold, and it looks more like a hair decoration than like part of the veil. The third is also part of the same veil but it has no decorations at all. Nothing about this makes any sense whatsoever. Why would any artist intentionally do it that way? Or, more specifically, why would any artist who has this apparent level of technical skill ever make a mistake like this?

They wouldn't.

Some more nonsensical patterns, bad mirrors, etc. (I often put left/right shoulders side by side so that it'd be clearer, sorry if it's weird):

Sign 8: bizarre architecture, weird furniture, etc. Most of the images I'm examining for this post have only partial backgrounds, so it's hard to really focus on this, but it's something that the post I linked (this one) talks about a lot. So, like, an artist will put actual thought into how their construction works, but an AI won't because an AI can't. There's no background in my main example image, but take a look at this from another of my images:

At a glance it's beautiful. After a few seconds of actually staring, it's just fucking bizarre. The part of the ceiling on the right appears to be domed, maybe? But then there's a hard angle, then another. The windows on the right have lots of panes, but the one on the middle-left is just a single panel, and the ones on the far left have a completely different pane pattern. Meanwhile, also on the left side in the middle, there's that dark gray...something...with an arch that mimics the background arches except it goes nowhere, connects to nothing, and has no apparent relationship to anything else going on architecturally. And while the ceiling curves, the back wall is straight AND shows more arches in the background even though the ceiling looks like it ends. And yes, some of this is possible architecture, but taken as a whole, it's just gibberish. Why would anyone who paints THAT WELL paint a building to look like THAT? They wouldn't. It's nonsense. It's the art equivalent of word salad. When we read a sentence like "dog makes a rhythmical salad to betray on the frame time plot," it almost resembles something that might mean something, but it's clearly nonsense. This background is that sentence, as art.

Sign 9: all kinds of little things that make zero sense. In the example image, I circled where a section of the hair goes BELOW the inner robe. That's not impossible but it just makes zero sense. As with many of these, it's the kind of thing that taken alone, I'd probably just think "well, that was A Choice," but combined with all the other weird things it stands out as another sign that something here is really, really off. Here's a collection of similar "wtf?" moments I spotted across the images I looked at (I'm worried I'm gonna hit the Tumblr image cap, hence throwing these all in one, lol.)

You have to remember that an actual artist will do things for a reason. And we, as viewers, are so used to viewing art with that in mind that we often fill in reasons even when there aren't any. Like, in the image just above this, I said, "what the heck are these flowers growing on?" And honestly, I COULD come up with explanations. But that doesn't mean it actually makes sense, and there's no REASON for it whatsoever. Theoretically the same flowers are, in a different shot, growing unsupported! So...what gives??? The answer is nothing gives. Because these pieces are nothing. The AI has no reason; it's just tossing random aesthetic pieces together in a mishmash, and the person who generated them is just re-generating and refining until they get something that looks "close enough" to what they wanted. It never was supposed to make sense, so of course it doesn't.

In conclusion...

After years of effort, artists have gotten across to most of fandom that reposts are bad, and helped us learn strategies for helping us recognize reposts, and given us an idea of what to do when we find one.

Fandom is just at the beginning of this process as it applies to AI-generated images. There's a LOT of education that has to be done - about why AI-generated images are bad (the unethical training using copyrighted images without permission is, imo, critical to understanding this), and about how to spot them, and then finally about what to do when you DO find them.

With reposts, we know "tell original artist, DMCA takedowns, etc." That's not the same with these AI-images. There's no original owner. There's no owner at all - in the US, at least, they literally cannot be copyrighted. Which is why I'm not even worrying about "credit" on this post - there's nothing stolen, cause there's nothing made. So what should you do?

Nothing. The answer is, just as the creator has essentially done nothing, you should also do nothing. Don't engage. Don't reblog. Don't commission the creator or buy their art prints. If they do it persistently and it bothers you, block them. If you see one you really like and decide to reblog it, fine, go for it, but mark it clearly - put in the ACTUAL COMMENTS (not just in the tags!) that it's AI art, and that you thought it was pretty anyway. But honestly, it'd be better not to engage, especially since, as this grows, it's inevitable that some actual artists are going to start getting accused of posting AI-generated images by over-zealous people. Not everyone who gets a shadow wrong is posting AI-generated images. A lot of these details are insanely difficult to get right, and even with very skilled, accomplished artists, if you go over their work with a magnifying glass you're going to find at least some of these things: some weirdnesses that make no sense, some shadows that are off, some fingers that are just ugh (really, getting hands wrong is so relatable. hands are the fucking worst). It's not about "this is bad art/not art because the hand is wrong," it's specifically about the ways that it's wrong, the way a computer randomly throws pieces together versus how actual people make actual mistakes. It's all of the little signs taken as a whole that say "no one who could produce a piece that, on the surface, looks this nice, could possibly make THIS MANY small 'mistakes.'"

The absolute best thing you can do if you see AI-generated images being treated as real art is just nothing. Support actual artists you love, and don't spread the fakes.

Thanks for your time, everyone. Good luck avoiding AI-generated pieces in the future, please signal boost this, and feel free to get in touch if you think I can help you with anything related to this.

3K notes

Text

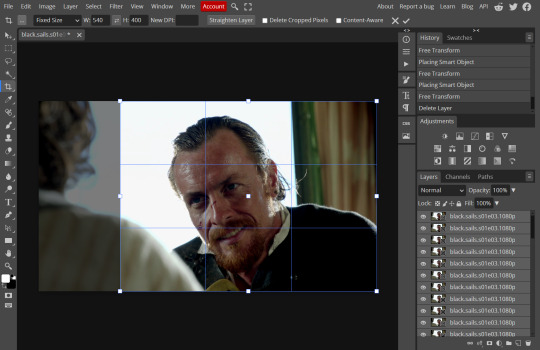

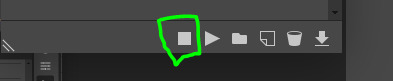

GIFMAKING TUTORIAL: PHOTOPEA (for Windows)

Screencapping

Gif Width/Size Limit/Ezgif

Loading Frames

Cropping and Resizing

Rasterize/Make Frames

Sharpening

Coloring (not detailed. Links to other tutorials included)

Exporting

Obligatory Mentions: @photopeablr ; @miwtual ; @benoitblanc ; @ashleysolsen

Definitely check out these blogs for tips, tutorials and resources; they're a gold mine. Finally, I recommend browsing the PHOTOPEA TUTORIAL / PHOTOPEA TUTORIAL GIF tags.

DISCLAIMER: English is not my first language and I'm not an expert on what I'm going to discuss, so if anything's unclear feel free to drop another ask.

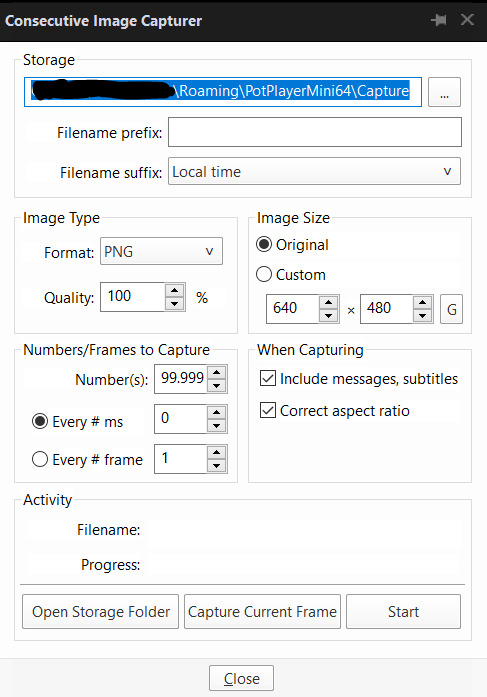

1. SCREENCAPPING -> PotPlayer (the one I use), MPV or KMPlayer

INSTALL PotPlayer (tutorial)

Play your movie/episode and press Ctrl + G. The Consecutive Image Capturer window will pop up. Click Start to capture consecutive frames and Stop when you've got what you need.

Where it says "Image Type -> Format" I recommend picking PNG, for higher quality screencaps.

To access the folder where the screencaps are stored, type %appdata% in Windows search, open the PotPlayerMini64 folder (or 32, depending on your system) and then the Capture folder. That's where you'll find your screencaps.

Admittedly MPV is a lot faster, but I prefer PotPlayer because it generates (at least in my case) higher quality screencaps. MPV kind of alters the hue, and that made it harder for me to color my gifs. Still, if you're interested in how to use it, I recommend this tutorial.

As for KMPlayer, every tutorial out there is outdated and I couldn't figure out the new version of the software.

2. GIF WIDTH/HEIGHT, SIZE LIMIT, EZGIF OPTIMIZER

At this point you should already know how big your gifs are going to be. Remember, the ideal gif widths on tumblr are 540 px / 268 px / 177 px (one, two or three gifs per row). These specific numbers take into account the 4 px space between the gifs. There are no restrictions on height.
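Those three widths come from simple arithmetic. Split the 540 px row into n gifs, subtract a 4 px gap between each pair of neighbours, and round down. This is a minimal Python sketch that assumes only the 540 px row and 4 px gap from the text above. `panel_width` is just an illustrative name.

```python
def panel_width(panels: int, total: int = 540, gap: int = 4) -> int:
    """Width of each gif when `panels` gifs sit side by side in one row.

    Assumes a fixed gap between neighbouring gifs and rounds down,
    since a gif can't be a fractional number of pixels wide.
    """
    usable = total - gap * (panels - 1)  # remove the gaps between gifs
    return usable // panels              # share the rest equally, rounded down

for n in (1, 2, 3):
    print(n, "per row ->", panel_width(n), "px")
```

For three gifs the exact share is 177.33 px. Rounding down to 177 leaves one pixel spare in the row.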

You can play around with the height (177x400, 177x540, 268x200, 268x268, 268x350, 268x400, 540x440, 540x500, 540x540 etc.), but if you go over the 10 MB limit you'll have to either make your gifs smaller or delete some frames.

OR you can go on ezgif and optimize your gif, which is usually what I do. The quality might suffer a little, but I'm not really (that) obsessed with how crispy my gifs look, or I'd download photoshop.

Depending on the gif size, you can adjust the compression level. I've never had to go over 35. It's better to start at 5 (the minimum) and go up from there until you reach your desired gif size (under 10 MB). Now that I think about it, I should have included this step at the end of the tutorial, so I'll just mention it again there.
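The "start at 5 and go from there" routine is basically a linear search: try the lowest level first and only raise it if the gif is still too big. This is a rough Python sketch of that loop. `size_at_level` is a hypothetical stand-in for re-running the ezgif optimizer and reading off the file size; it is not a real ezgif API. The step of 5 and the cap of 35 are assumptions (35 is just the highest level mentioned above).

```python
from typing import Callable, Optional

LIMIT_MB = 10.0  # tumblr's gif size limit mentioned above

def lowest_level_that_fits(
    size_at_level: Callable[[int], float],
    start: int = 5,
    stop: int = 35,
    step: int = 5,
) -> Optional[int]:
    """Try compression levels from low to high; return the first one under the limit.

    `size_at_level` is a hypothetical stand-in for "optimize on ezgif at this
    level and read off the file size in MB". It is not a real ezgif API.
    """
    for level in range(start, stop + 1, step):
        if size_at_level(level) < LIMIT_MB:
            return level  # lowest level that fits = least quality lost
    return None  # still too big: make the gif smaller or delete frames

# Made-up sizes (MB) for one hypothetical gif, just to show the loop.
made_up_sizes_mb = {5: 14.2, 10: 12.8, 15: 11.1, 20: 9.7, 25: 8.9, 30: 8.2, 35: 7.6}
print(lowest_level_that_fits(lambda level: made_up_sizes_mb[level]))
```

Starting low matters because every extra level of compression can cost a bit of quality. You want the smallest level that gets you under the limit, not the biggest.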

3. LOAD YOUR FRAMES

File -> Open... -> Pick one of your screencaps. The first one, the last one, a random one. Doesn't matter. That's your Background.

File -> Open & Place -> Select all the frames you need for your gif (including the one you already loaded in the previous step) and load them.

(I recommend creating a specific folder for the screencaps of each gif you're going to make.)

WARNING: When you Place your screencaps make sure the Crop tool is NOT selected, especially if you've already used it and the width/height values have been entered. It will mess things up - I don't know why, could be a bug.

You can either select them all with Ctrl+A or with the method I explained in the ask: "when you want to select more than one frame or all frames at once select the first one, then scroll to the bottom and, while pressing Shift, select the last one. this way ALL your frames will be selected".

WARNING: Depending on how fast your computer is / how much RAM it has, this process may take a while. My old computer was slow af, while my new one can load even 100 frames relatively fast, all things considered. Even so, I recommend ALWAYS saving your work before loading new frames for a new gif, because photopea might crash unexpectedly. Just save your work as often as you can, even while coloring or before exporting. Trust me, I speak from experience.

Now you can go ahead and delete the Background at the bottom, you won't need it anymore.

4. CROPPING AND RESIZING

Right now your screencaps are still smart objects. Before rasterizing and converting to frames, you need to crop your gif.

Technically you can rasterize/convert to frames and then crop, BUT if you do it in that order photopea will automatically delete the cropped pixels, even if you don't select the "Delete Cropped Pixels" Option. Might be another bug, unclear.

Basically, if you crop your gif and then realize you cropped a little too much to the left or the right, you can go ahead, select the Move Tool (shortcut: V) and, after selecting ALL YOUR FRAMES, move them around on your canvas until you are satisfied. You won't be able to do this if you rasterize first and then crop, the excess pixels will be deleted. I don't know why, I found out by accident lol.

CROPPING

(Cropped pixels: the gray/opaque area outside of the selected area. That area disappears once you press enter and crop, but the pixels are retained, so you can move the frames around and reposition them as you like. In this case I could move the frames to the left and include Silver's figure [curly guy in the foreground] in the crop)

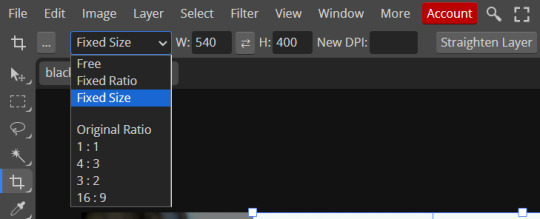

After deleting the Background, select all your frames (using the Shift key), then press C on your keyboard to choose the Crop tool, or just click on it, whatever's more convenient. Once you do that, a dropdown menu is going to appear. You need to select the "FIXED SIZE" option, as shown in the following screencap.

Once you do that, you can type in your desired width and height. Do not immediately press enter.

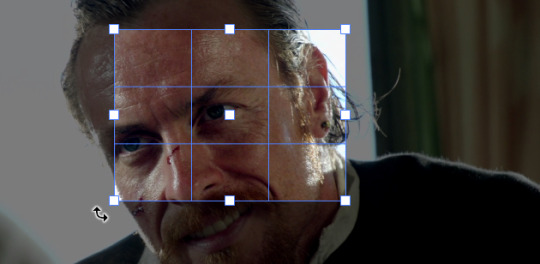

Your work area should now look like this. Now you can click on one of the white squares and enlarge the selected area until the edges are lined up. You can then move it around until it covers the area you wish to gif.

WARNING: to move the big rectangle around, you're gonna have to click on a random point of the work area, PREFERABLY not too close to the rectangle itself, or you might accidentally rotate it.

See? When your cursor is close to the selected area it turns into this rotating tool. Move it away until it reverts to your usual cursor, then you can start moving the rectangle. Press Enter when you're satisfied with the area you selected.

RESIZE

This isn't always necessary (pretty much never in my case) - and it's a step I often forget myself - but it's mentioned in most of the tutorials I came across over the years, so I'd be remiss if I didn't include it in mine. After cropping, you'll want to resize your image.

IMAGE -> Image Size...

This window will pop up. Now, should the values in the Width and Height fields be anything other than 540 and 400 (or whatever values you entered yourself), you need to correct that. They've always been correct in my case, but again. Had to mention it.

5. RASTERIZE & MAKE FRAMES

Now that your screencaps are cropped, you can go ahead and convert them.

LAYER -> Rasterize. If you skip this step you won't be able to sharpen (or apply any other filter to) all your frames at once; you'll have to sharpen them one by one.

Photopea doesn't feature a timeline and it's not a video editor, which makes this step crucial. When you select all your smart objects and try to apply a filter, the filter will only be applied to ONE frame. Once you rasterize your smart objects and make them into frames, you can select them all and sharpen them at once.

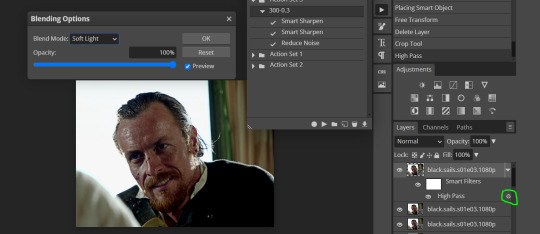

Unfortunately this also means that you won't be able to - I don't know how to explain this properly so bear with me - use all smart filters/use them in the same way a photoshop user can. For example, you can sharpen / remove noise / add noise / unsharp mask... but you can't act on those filters in the same way a photoshop user can.

When you work on smart objects you can change the blend mode - which is critical if you decide to use a filter like High Pass. If you simply apply a high pass filter on photopea you won't be able to change the blend mode and your gif will look like this (following screencaps). Or rather, you will be able to change the blend mode by clicking on the little wheel next to "High pass" (circled in green in the 2nd screencap), but you'll have to apply the filter to each frame manually, one by one.

Then you can rasterize/make into frames, but it's extremely time consuming. I did it once or twice when I first started making gifs and it got old pretty soon haha.

Layer -> Animation -> Make Frames. This step will add "_a_" at the beginning of all your frames' names, and it's what allows you to make a (moving) gif. As I said in the ask, if you skip this step your gif will not move.

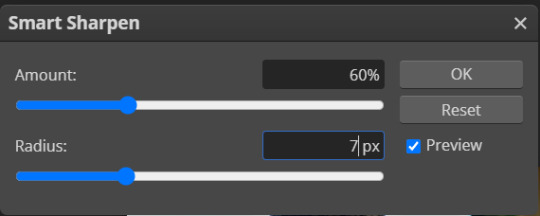

6. SHARPENING

Some people prefer to color first and sharpen later, but I found that sharpening filters alter the look of your gif (more or less dramatically) and already brighten it a bit (depending on your settings), so if you color first you may end up with an excessively bright gif.

Now, sharpening settings are not necessarily set in stone. The most popular ones are 500/0.4 + 10/10, which I use sometimes. But you may also need to take into account the quality of the files you're working with + the specific tv show you're giffing. I've been using different settings for pretty much every tv show I gif, especially in the last couple months. Some examples:

followed by

OR

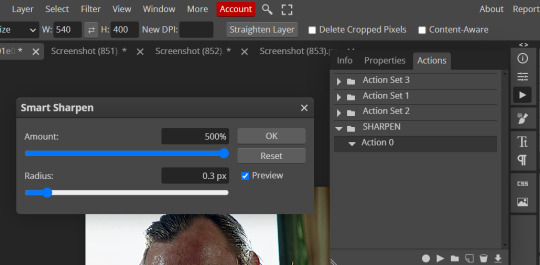

AMOUNT: 500% RADIUS 0.3px

followed by

AMOUNT: 20% (or 10%) RADIUS 10px

You'll just need to experiment and see what works best for your gifs.

Some gifmakers use the UNSHARP MASK filter as well (I think it's pretty popular among photopea users?) but it makes my gifs look extra grainy, makes the borders look super bright and it clashes with my coloring method(s), so I use it rarely and with very moderate settings. Something like this:

Again, depends on the gif and on what you like. I've seen it used with great results by other gifmakers!

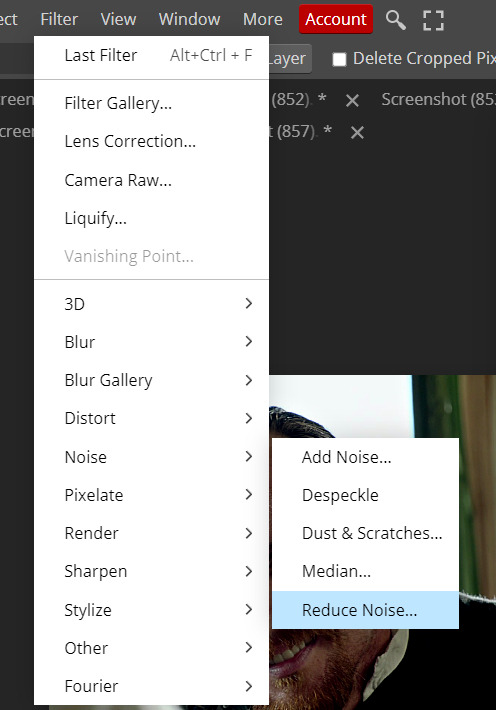

REDUCE NOISE

Sometimes - and this is especially the case for dark scenes - your gif may look excessively grainy, depending on how bright you want to make it. Reducing noise can help. Keep in mind, it can also make things worse and mess up the quality. BUT it also reduces the size of your gif. Obviously, the higher the settings, the more quality will suffer.

These are my standard settings (either 2/70% or 2/80%). It's almost imperceptible, but it helps with some of the trickier scenes.

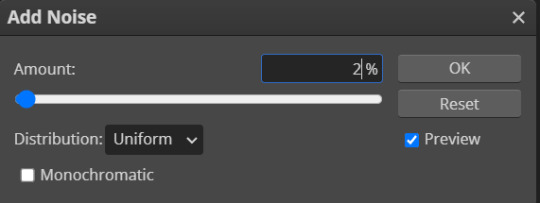

ADDING NOISE

Adding noise (1% or 2% max) can sometimes help with quality (or make it worse, just like reduce noise) but it will make your gif so so so much bigger, and occasionally damage the frames, which means you won't be able to load your gif on tumblr, so I rarely use it.

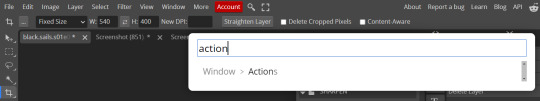

You'll also want to create ACTIONS which will allow you to sharpen your gifs much faster.

HOW TO CREATE AN ACTION ON PHOTOPEA

The Action Button (shaped like a Play button as you can see in the following screencaps) may not be there if you're using photopea for the first time. If that's the case, click on the magnifying glass next to "Account" (in red) and type "actions". Press Enter and the button should immediately show up.

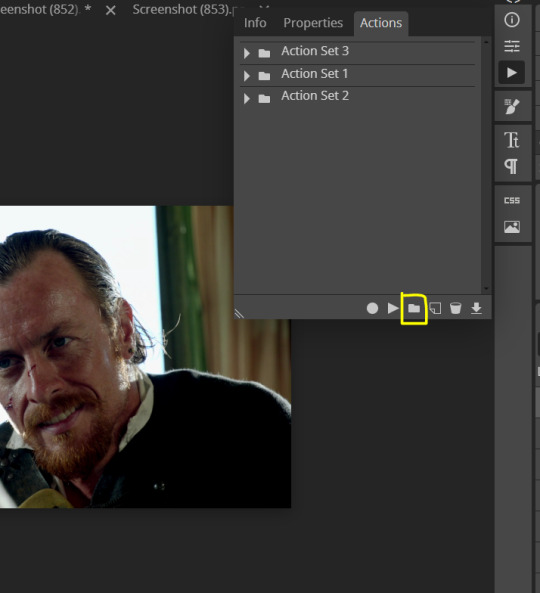

Once you do that, click on the Folder (circled in yellow)

and rename it however you like.

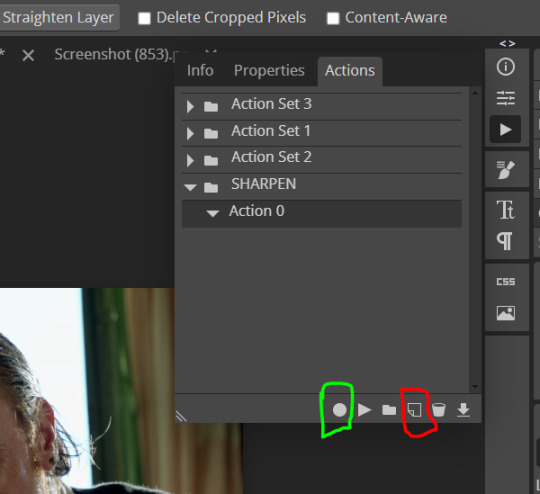

Now click on New Action (circled in red). Then you can press the Recording button (circled in green).

Now

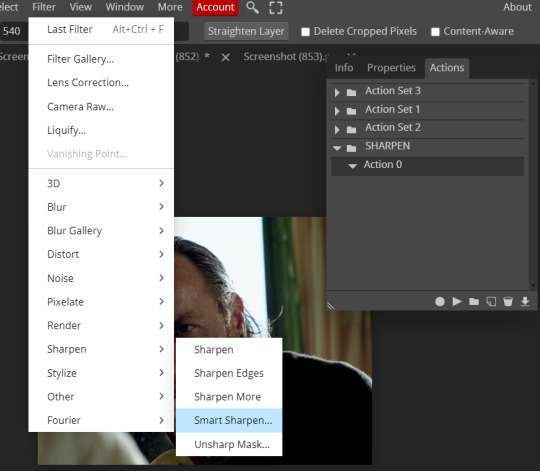

FILTERS -> Smart Sharpen

and you can enter your values. Then you repeat this step (WITHOUT pressing rec, WITHOUT pressing new action or anything else, you just open the Smart Sharpen window again) and, if you want, you can sharpen your gif some more (10%, 10px, or anything you want).

Maybe, before creating an action, experiment with the settings first and see what works best.

When you're satisfied, you can PRESS STOP (it's the rec button, which is now a square) and you can DOWNLOAD your action (downwards facing arrow, the last button next to the bin. Sorry, forgot to circle it).

You need to download your action and then upload it to photopea. When you do, a window will pop up asking whether you wish to load the action every time you open the program. Choose "Okay" and the action will be loaded into storage.

When you want to sharpen your gif, you select all your frames, then you click on the Play button, and select the Action, NOT the folder, or it won't work.

Actions can also be created to crop and convert your frames more quickly, but it doesn't always work on photopea (for me at least). The process is exactly the same, except once you start recording you 1) crop your gif as explained in step 4, 2) convert into frames. Then you stop the recording, download the action and upload it. This won't work for the Rasterize step, by the way. Just the Animation -> Make Frames step.

7. COLORING

Now you can color your gif. I won't include a coloring tutorial simply because I use a different method for every tv show I gif. You normally want to begin with a brightness or a curves layer, but sometimes I start with a Channel Mixer layer to immediately get rid of yellow/green filters (there's a tutorial for this particular tool in the list mentioned in the link below).

[Plus I'm not really an authority on this matter as my method is generally... fuck around and find out. Two years of coloring and I still have no idea what I'm doing. 70% of the time.]

Simple Gif Coloring for Beginners -> very detailed + it includes a pretty handy list of tutorials at the bottom.

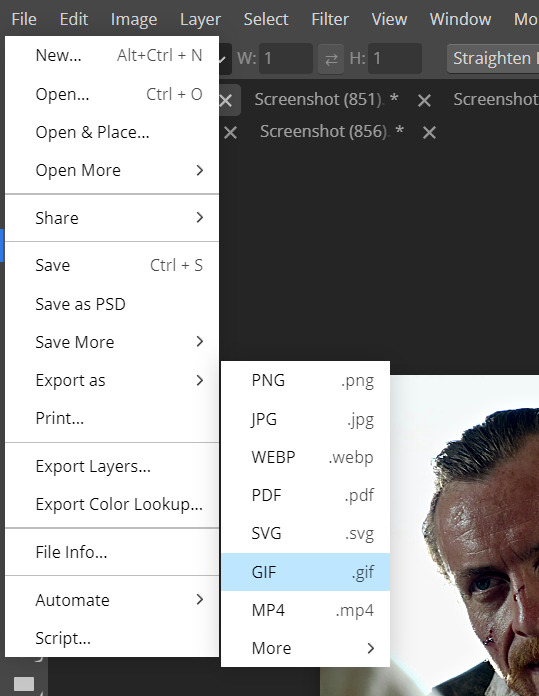

8. EXPORTING

Now you can export your gif. Some gifmakers export their (sharpened) gifs BEFORE coloring and then load the gifs on photopea to color them. I'm not sure it makes any difference.

FILE -> EXPORT AS -> GIF

(not colored, just sharpened)

As you can see, unlike Photoshop, the exporting settings are pretty threadbare. The only option available is dither (it sometimes helps with color banding), which, and I'm quoting from google for maximum clarity:

"refers to the method of simulating colors not available in the color display system of your computer. A higher dithering percentage creates the appearance of more colors and more detail in an image, but can also increase the file size."
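To make that definition a bit more concrete: dithering fakes in-between shades by pushing each pixel's rounding error onto its neighbours. This is a toy one-dimensional error-diffusion sketch in Python. It reduces a row of grayscale values (0-255) to pure black and white. It only illustrates the general idea and is not a claim about which dithering algorithm Photopea actually uses.

```python
def dither_row(row: list[int], threshold: int = 128) -> list[int]:
    """Quantize 0-255 grayscale values to 0 or 255 with 1-D error diffusion."""
    out = []
    carry = 0  # rounding error pushed forward from the previous pixel
    for value in row:
        adjusted = value + carry
        new = 255 if adjusted >= threshold else 0
        carry = adjusted - new  # what we "owe" the next pixel
        out.append(new)
    return out

print(dither_row([128] * 6))  # mid-gray becomes alternating black/white
print(dither_row([64] * 8))   # darker gray: mostly black, occasional white
```

Without the `carry` step, every mid-gray pixel would snap to the same color and you'd get a flat band. With it, the average brightness of the row stays close to the original. That is the "appearance of more colors" the quote describes.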

SPEED

When you export your gif, it will play quite slowly at the default speed (100%). I usually set it to 180/190%, but as with every other tool, you might want to play around a little bit.
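For a sense of what that percentage does: a GIF stores a delay for each frame in hundredths of a second (centiseconds). A higher speed should just mean shorter delays. This Python sketch assumes the speed percentage scales playback linearly (200% = half the delay). It also assumes a base delay of 10 cs (0.1 s) per frame at 100%. Both are assumptions for illustration, not documented Photopea behaviour.

```python
def frame_delay_cs(base_delay_cs: int, speed_percent: int) -> int:
    """Per-frame delay in hundredths of a second at a given playback speed.

    Assumes the speed percentage scales playback linearly (200% = half the
    delay). GIF delays are whole centiseconds, so the result is rounded,
    and it never drops below 1.
    """
    return max(1, round(base_delay_cs * 100 / speed_percent))

for speed in (100, 180, 190):
    delay = frame_delay_cs(10, speed)  # assumed base delay: 10 cs = 0.1 s
    print(f"{speed}% -> {delay} cs per frame ({delay / 100:.2f} s)")
```

Because delays have to be whole centiseconds, nearby speeds like 180% and 190% can land on different delays (6 vs 5 cs here). That's one more reason to try a couple of values and compare.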

GIF SIZE/EZGIF OPTIMIZER (See Step 2)

And that's it.

P.S.: worth repeating

Save your work as often as you can, even while coloring or before exporting.

#photopea#my inbox is open if you have any questions <3#image heavy under the cut#photopeablr#tutorials#gif tutorial#allresources#photopea tutorial#completeresources#gifmaking

345 notes


Text

oops! sigil on his weird poetry bullshit again!

notes under cut

you may be asking. what's up with this poem, sigil from tumblr dot com?

well, you see. sometimes, when you mix objectum and otherkinity, you get... results!

those results are me. im a computer. i desire industrial machinery carnally. i am, a little bit insane probably. i love crt monitors i wish they weren't radioactive they're so so so sexy.

anyway. because of me being weird you get! poetry! about a computer and someone that loves it! yay!

sorry that it seems a little unfinished? thats because it vaguely is i couldnt come up with anything. the muse escaped me.

fun fact! the computer, in the first draft, was very very clingy at the end. i decided that didn't fit what i was going for so i changed it but, um, i think its still clingy. i dunno.

anyway i used photopea to put the images together! pure black background, default green, and courier prime (30px, but 24 px for the ribs lungs heart bit) as the font.

i used barra's error message generator to make the popups!

202 notes


Note

Do you think the new division of Cartoon Network Studios will end up exploiting and abusing AI to make new cartoons of their old properties?

I wouldn't put it past any studio to do this.

We're at the end of The Animation Industry As We Know It, so studios are going to do anything and everything they can to stay alive.

The way I see it is:

AI "art" isn't actually art. Art is created by humans to express ideas and emotions. Writing prompts allows a computer to interpret human ideas and emotions by taking other examples of those things and recombining them.

Just because something isn't art doesn't mean that humans can't understand it or find it beautiful. We passed a really fun prompt generation milestone about a year ago where everything looked like it was made by a Dadaist or someone on heavy psychedelics. Now we're at the Uncanny Valley stage. Soon, you won't be able to tell the difference.

It's not just drawings and paintings that are affected, but writing and film. It's every part of the entertainment industry. And the genie is out of the bottle. I've seen people saying that prompt-based image generators have "democratized" art. And I see where they're coming from. In ten years, I can easily see a future where anyone can sit down at their desk, have a short conversation with their computer, and have a ready-to-watch, custom movie with flawless special effects, passable story, and a solid three-act structure. You want to replace Harrison Ford in Star Wars with your little brother and have Chewbacca make only fart sounds, and then they fly to Narnia and fistfight Batman? Done.

But, sadly, long before we reach that ten year mark, the bots will get hold of this stuff and absolutely lay waste to existing art industries. Sure, as a prompter I guess you can be proud of the hours or days you put into crafting your prompts, but you know what's better than a human at crafting prompts? Bots. Imagine bots cranking out hundreds of thousands of full-length feature films per minute. The noise level will squash almost any organic artist or AI prompter out of existence.

AI images trivialize real art. The whole point of a studio is to provide the money, labor, and space to create these big, complicated art projects. But if there are no big, complicated art projects, no creatives leading the charge, and no employees to pay... what the fuck do we need studios for? We won't, but their sheer wealth and power will leave them forcing themselves on us for the rest of our lives.

The near future will see studios clamp down on the tech in order to keep it in their own hands. Disney does tons of proprietary tech stuff, so I'm sure they're ahead of the game. Other studios will continue to seek mergers until they can merge with a content distribution platform. I've heard rumors of Comcast wanting to buy out either WB or Nick. That's the sort of thing I'm talking about. The only winners of this game will be the two or three super-huge distribution platforms who can filter out enough of the spam (which they themselves are likely perpetuating) to provide a reasonable entertainment experience.

400,000 channels and nothing's on.

I do think that money will eventually make the "you can't copyright AI stuff" thing go away. There's also the attrition of "Oh, whoops! We accidentally put an AI actor in there and no one noticed for five years, so now it's cool."

One way or another, it's gonna be a wild ride. As the canary in the coal mine, I hope we can all get some UBI before I'm forced to move into the sewers and go full C.H.U.D.

410 notes


Text

@skelkankaos replied to your post “Frank it makes me uncomfortable that you can read Anyways what do you think of this image?”

Çhhett... My body yearns for you bret... My uat...

#this is the end of this silly little conversation#which means that i no longer have to post screenshots of the first word of sentences#every time they're in the same paragraph#computer generated image#guidance scale 3

21 notes


Text

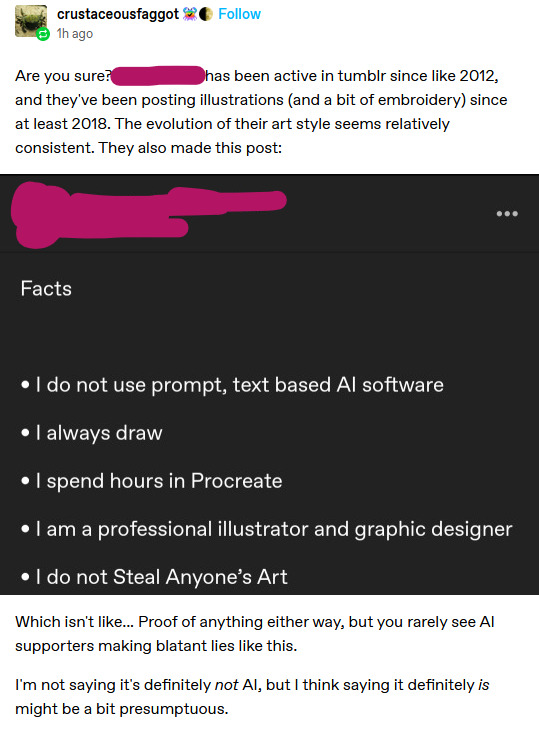

I'm gonna delete that reblog because I don't want to start any witch-hunt type activities, nor do I want to harass others online.

However, I'm not gonna lie - I am still of the belief that the artist in question is implementing AI images in their art, and IF they are, I think it would be better to be forthright about it.

I'd love to be wrong, but there are things that seem fishy to me.

To reply to realistic concerns from @crustaceousfaggot

I have also glanced through their blog and here is my takeaway:

I have no doubts that this individual DOES actually draw, but their style has evolved from mainly paintings of faces and portraits into machinery and landscapes in a very short amount of time.

I don't believe that this individual ONLY uses AI. It's possible they don't use text based AI software. My best guess is that they're popping an image into AI and asking it to complete/modify it.

There are images where it seems to me that the face or the hands of something has been painted over in a style that doesn't quite match the rest of the image.

Again - I don't personally care about how another artist gets their kicks but I want people to be honest about labeling it.

Even if it's augmented with actual art. People deserve to know, in general, if something is drawn or computer generated, just like they deserve to know if an image has been altered and photo-shopped!

And I'd love to be wrong, but there are way too many AI-tells in the art in general for me to not be suspicious.

217 notes


Note

Hello! This is kind of a weird ask, I'm sorry to bother you, but seeing as you're a very intelligent studied historian that I deeply respect, I was hoping you could offer some advice? Or like, things I could read? Lately, I feel like my critical thinking skills are emaciated and it's scaring the shit out of me. I feel very slow and like I'm constantly missing important info in relation to news/history/social activism stuff. That's so vague, sorry, but like any tips on how I can do better?

Aha, thank you. There was recently a good critical-thinking infographic on my dash, so obviously I thought I remembered who reblogged it and checked their blog, it wasn't them, thought it was someone else, checked their blog, it also wasn't them, and now I can't find it to link to. Alas. But I will try to sum up its main points and add a few of my own. I'm glad you're taking the initiative to work on this for yourself, and I will add that while it can seem difficult and overwhelming to sort through the mass of information, especially often-false, deliberately misleading, or otherwise bad information, there are a few tips to help you make some headway, and it's a skill that, like any other skill, gets easier with practice. So yes.

The first and most general rule of thumb I would advise is the same thing that IT/computer people tell you about scam emails. If something is written in a way that induces urgency, panic, the feeling that you need to do something RIGHT NOW, or other guilt-tripping or anxiety-inducing language, it is -- to say the least -- questionable. This goes double if it's from anonymous unsourced accounts on social media, is topically or thematically related to a major crisis, or anything else. The intent is to create a panic response in you that overrides your critical faculties, your desire to do some basic Googling or double-checking or independent verification of its claims, and makes you think that you have to SHARE IT WITH EVERYONE NOW or you are personally and morally a bad person. Unfortunately, the world is complicated, issues and responses are complicated, and anyone insisting that there is Only One Solution and it's conveniently the one they're peddling should not be trusted. We used to laugh at parents and grandparents for naively forwarding or responding to obviously scam emails, but now young people are doing the exact same thing by blasting people with completely sourceless social media tweets, clips, and other manipulative BS that is intended to appeal to an emotional gut rather than an intellectual response. When you panic or feel negative emotions (anger, fear, grief, etc) you're more likely to act on something or share questionable information without thinking.

Likewise, you do have basic Internet literacy tools at your disposal. You can just throw a few keywords into Google or Wikipedia and see what comes up. Is any major news organization reporting on this? Is it obviously verifiable as a fake (see the disaster pictures of sharks swimming on highways that get shared after every hurricane)? Can you right-click, perform a reverse image search, and see if this is, for example, a picture from an unrelated war ten years ago instead of an up-to-date image of the current conflict? Especially with the ongoing Israel/Palestine imbroglio, we have people sharing propaganda (particularly Hamas propaganda) BY THE BUCKETLOAD and passing it off as the work of legitimate news organizations (tip: Quds News Network is literally the Hamas channel). This includes other scuzzy dirtbag-left websites like Grayzone and The Intercept, which often have implicit or explicit links to Russian-funded disinformation campaigns and other demoralizing or disrupting fake news that is deliberately designed to turn young left-leaning Westerners against the Democrats and other liberal political parties, which enables the electoral victory of the fascist far-right and feeds Putin's geopolitical and military aims. Likewise, half of our problems would be solved if tankies weren't so eager to gulp down and propagate anything "anti-Western" and thus amplify the Russian disinformation machine in a way even the Russians themselves sometimes struggle to do, but yeah. That relates to both Russia/Ukraine and Israel/Palestine.

Basically: TikTok, Twitter/X, Tumblr itself, and other platforms are absolutely RIFE with misinformation, and this is due partly to ownership (the Chinese government and Elon Fucking Musk have literally no goddamn reason whatsoever to build an unbiased algorithm, and have been repeatedly proven to be boosting bullshit that supports their particular worldviews) and partly due to the way in which the young Western left has paralyzed itself into hypocritical moral absolutes and pseudo-revolutionary ideology (which is only against the West itself and doesn't think that the rest of the world has agency to act or think for itself outside the West's influence, They Are Very Smart and Anti-Colonialist!) A lot of "information" in left-leaning social media spaces is therefore tainted by this perspective and often relies on flat-out, brazen, easily disprovable lies (like the popular Twitter account insisting that Biden could literally just overturn the Supreme Court if he really wanted to). Not all misinformation is that easy to spot, but with a severe lack of political, historical, civic, or social education (since it's become so polarized and school districts generally steer away from it or teach the watered-down version for fear of being attacked by Moms for Liberty or similar), it is quickly and easily passed along by people wanting trite and simplistic solutions for complex problems or who think the extent of social justice is posting the Right Opinions on social media.

As I said above, everything in the world is complicated and has multiple factors, different influences, possible solutions, involved actors, and external and internal causes. For the most part, if you're encountering anything that insists there's only one shiningly righteous answer (which conveniently is the one All Good and Moral People support!) and the other side is utterly and even demonically in the wrong, that is something that immediately needs a closer look and healthy skepticism. How was this situation created? Who has an interest in either maintaining the status quo, discouraging any change, or insisting that there's only one way to engage with/think about this issue? Who is being harmed and who is being helped by this rhetoric, including and especially when you yourself are encouraged to immediately spread it without criticism or cross-checking? Does it rely on obvious lies, ideological misinformation, or something designed to make you feel the aforementioned negative emotions? Is it independently corroborated? Where is it sourced from? When you put the author's name into Google, what comes up?

Also, I think it's important to add that as a result, it's simply not possible to distill complicated information into a few bite-sized and easily digestible social media chunks. If something is difficult to understand, that means you probably need to spend more time reading about it and encountering diverse perspectives, and that is research and work that has to take place primarily not on social media. You can ask for help and resources (such as you're doing right now, which I think is great!), but you can't use it as your chief or only source of information. You can and should obviously be aware of the limitations and biases of traditional media, but often that has turned into the conspiracy-theory "they never report on what's REALLY GOING ON, the only information you can trust is random anonymous social media accounts managed by God knows who." Traditional media, for better or worse, does have certain evidentiary standards, photographing, sourcing, and verifying requirements, and other ways to confirm that what they're writing about actually has some correspondence with reality. Yes, you need to be skeptical, but you can also trust that some of the initial legwork of verification has been done for you, and you can then move to more nuanced review, such as wording, presentation of perspective, who they're interviewing, any journalistic assumptions, any organizational shortcomings, etc.

Once again: there is a shit-ton of stuff out there, it is hard to instinctively know or understand how to engage with it, and it's okay if you don't automatically "get" everything you read. That's where the principle of actually taking the time to be informed comes in, and why you have to firmly divorce yourself from the notion that being socially aware or informed means just instantly posting or sharing on social media about the crisis of the week, especially if you didn't know anything about it beforehand and are just relying on the Leftist Groupthink to tell you how you should be reacting. Because things are complicated and dangerous, they take more effort to unpick than just instantly sharing a meme or random Twitter video or whatever. If you do in fact want to talk about these things constructively, and not just because you feel like you're peer-pressured into doing so and performing the Correct Opinions, then you will in fact need to spend non-social-media time and effort in learning about them.

If you're at a university, there are often subject catalogues, reference librarians, and other built-in tools that are there for you to use and which you SHOULD use (that's your tuition money, after all). That can help you identify trustworthy information sources and research best practices, and as you do that more often, it will help you have more of a feel for things when you encounter them in the wild. It's not easy at first, but once you get the hang of it, it becomes more so, and will make you more confident in your own judgments, beliefs, and values. That way when you encounter something that you KNOW is wrong, you won't be automatically pressured to share it just to fit in, because you will be able to tell yourself what the problems are.

Good luck!

304 notes


Text

Maybe you should go on down to your local public library. If you're reading this while you're there, put this down and go read an actual book written by someone who isn't irredeemably obsessed with maintaining an icon of the decline and fall of the American automaker. And if you're reading this on the way there, stop driving while using your phone. You need to be watching that coolant temperature gauge or you're gonna be putting in another head gasket.

Public libraries used to have a bad image, of being full of stuffy unloved books and a strange odour of decomposing newsprint. Nowadays, though, they're full of many exciting things. This is because the new generation of librarians work hard to make this stuff relevant to the average person, and also because the old librarians got so tired of explaining to me in very small words that they don't have Haynes manuals that they quit.

You might find other exciting things to do. The class-free nature of public institutions means that you get to learn a lot about folks in different economic circumstances than yourself. That builds community cohesion, especially when you ask them to help you push start your shitbox afterward. Lots of family activities, too, like wondering what that new kind of fluid leaking out of the German cars in the parking lot is. It doesn't taste like Pentosin.

And: good news. The library computers now have online Haynes manuals! They're like real manuals, but inexplicably trapped inside a small flickering plane that brings torment to everyone else who gazes upon them. You still can't bring an entire transmission in there to tear down, because it gets gear oil all over the keyboard. You'll have to pay a few cents to print it off first. Tragedy of the commons, and all that.

313 notes
