#ultra-low power IoT sensors/nodes

Text

youtube

Nexperia Energy Harvesting MPPT Technology Explained

https://www.futureelectronics.com/m/nexperia. Nexperia's Energy Harvesting PMIC uses the advanced Maximum Power Point Tracking (MPPT) algorithm to harvest energy for ultra-low power IoT sensors/nodes. MPPT uses an embedded hill-climbing algorithm to deliver the maximum power to the load. https://youtu.be/yWnLrX9O7qg
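The hill-climbing idea mentioned above can be illustrated with a minimal perturb-and-observe sketch. This is not Nexperia's implementation: the source model `harvester_power`, its parameters, and the step size are all assumptions chosen only to give a power curve with a single peak.

```python
def harvester_power(v, v_oc=0.6, i_sc=1e-3):
    """Toy harvester model (assumed): current collapses near the open-circuit voltage."""
    current = i_sc * (1.0 - (v / v_oc) ** 4)
    return v * current


def hill_climb_mppt(power_at, v_start, step=0.005, iterations=200):
    """Perturb-and-observe: keep stepping the operating voltage in the same
    direction while power rises, and reverse direction when it falls."""
    v = v_start
    p_prev = power_at(v)
    direction = 1
    for _ in range(iterations):
        v += direction * step          # perturb the operating point
        p = power_at(v)                # observe the resulting power
        if p < p_prev:                 # power dropped: we stepped past the peak
            direction = -direction
        p_prev = p
    return v


v_mpp = hill_climb_mppt(harvester_power, v_start=0.1)
print(f"operating point settles near {v_mpp:.3f} V")
```

The loop never stops exactly on the peak; it oscillates within a few steps of it, which is the usual trade-off between tracking speed and steady-state ripple in this family of algorithms.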

#MPPT#Nexperia#Energy Harvesting#PMIC#Maximum Power Point Tracking#ultra-low power IoT sensors/nodes#IoT sensors/nodes#MPPT circuit#maximum power point#Nexperia Energy Harvesting#Nexperia PMIC#Youtube


Text

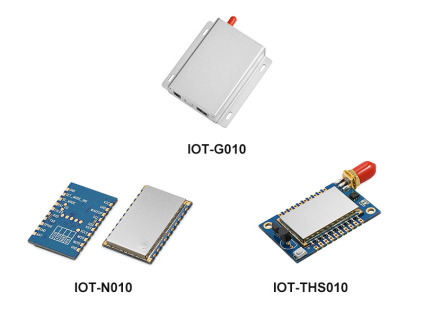

High Compatibility Multi-Node Synchronization and Expansion Support - IOT-G010 Achieves Precise Data Collection and Transmission

The ultra-low power sensor monitoring star network system is mainly used for sensor data acquisition and control in the Internet of Things. The system consists of the IOT-G010 gateway and IOT-N010/IOT-THS010 nodes, connected in a wireless star network combined with mesh networking. A coordination mechanism and a precise scheduling algorithm between the nodes and the gateway avoid over-the-air collisions between data packets. The system already implements the communication protocol between node and gateway, so customers can build a reliable acquisition and control network with only a simple configuration.

What is IoT Data Collection Gateway?

An IoT data collection gateway is an application system that uses wireless modules and sensors to collect data from external sources and feed it into the system for statistical analysis. It works by automatically collecting non-electrical or electrical signals from wireless modules, sensors, and other analog and digital measurement units, and sending them to a computer system for analysis and processing. Built on hardware such as wireless modules and sensors, combined with application software and computers, it measures physical quantities such as voltage, current, temperature, pressure, and humidity.

The Features of IoT Sensor Data Collection Gateway

Ultra-Low Power Consumption for Nodes: Designed with ultra-low power consumption to effectively extend battery life, making it suitable for long-term operation, especially for battery-powered devices.

Multi-Point Data Collection: A single gateway can support up to 255 nodes, which meets the needs of large-scale data collection and suits applications that monitor many sensors simultaneously.

Multi-Level Data Collision Avoidance Mechanism: Equipped with a multi-level data collision avoidance mechanism to ensure the reliability and integrity of data transmission when multiple nodes are transmitting data simultaneously.

Over-the-Air Wake-Up and Wake-Up of Subordinate Devices: Supports remote wake-up functionality, allowing devices to enter sleep mode when not in use, thereby effectively reducing power consumption and extending the device's lifespan.

Multiple Crash Prevention Mechanisms: Built-in multiple crash prevention mechanisms ensure long-term stable operation of the device, maintaining high reliability even in complex environments.

Automatic Re-transmission Mechanism: Equipped with an automatic re-transmission mechanism, ensuring that if data loss occurs during transmission, it can be automatically resent, thereby improving the reliability of data transmission.

Multi-Node Time Synchronization: IOT-G010 has powerful time synchronization capabilities, ensuring time consistency across all nodes in the network.

Gateway and Node Time Synchronization: IOT-G010 uses efficient synchronization algorithms to ensure consistent time synchronization between the gateway and all connected nodes.

Multi-Sensor Support: IOT-G010 supports various types of sensors, including but not limited to temperature, humidity, pressure, light, motion, and gas detection. Its robust compatibility and scalability allow it to be widely used in various IoT scenarios, such as smart cities, smart homes, environmental monitoring, and industrial automation. Users can flexibly configure and add different types of sensors based on specific needs.

Rapid Customization: The IOT-G010 supports rapid customization, allowing for functional adjustments and optimizations according to different application scenarios. Whether it is data collection frequency, transmission methods, power management, or security strategies, users can flexibly configure these settings through a user-friendly configuration interface or command-line interface.

Data Packet Length: Due to the physical limitations of the wireless chip, the maximum length of a single data packet is 120 bytes. If the customer's data length exceeds 120 bytes, the customer needs to divide the data into smaller packets for transmission.

Rate Selection: The system supports wireless rates from 1200 bps to 500 kbps. Selecting a rate means balancing power consumption against distance for the actual application. A lower rate can achieve longer distances, but it increases the transmission time, which in turn increases power consumption.
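The packet length limit and the rate trade-off described above can be sketched together. This is an illustrative sketch only, not NiceRF firmware or API: the function names are hypothetical, "500 kbps" is assumed to mean 500,000 bit/s, and the airtime estimate counts payload bits only, ignoring preamble, headers, and acknowledgements.

```python
MAX_PACKET_BYTES = 120  # per-packet limit stated in the feature list


def split_payload(payload: bytes, max_len: int = MAX_PACKET_BYTES) -> list:
    """Split a payload into consecutive chunks of at most max_len bytes."""
    return [payload[i:i + max_len] for i in range(0, len(payload), max_len)]


def airtime_seconds(n_bytes: int, bitrate_bps: int) -> float:
    """Rough time on air for the payload bits alone (protocol overhead ignored)."""
    return n_bytes * 8 / bitrate_bps


packets = split_payload(bytes(300))        # a 300-byte reading, all zeros
print([len(p) for p in packets])           # chunk sizes: 120, 120, 60
for rate in (1200, 500_000):
    total = sum(airtime_seconds(len(p), rate) for p in packets)
    print(f"{rate} bps: {total:.4f} s on air")
```

Under these assumptions a full 120-byte packet occupies the channel for about 0.8 s at 1200 bps but under 2 ms at the top rate, which is why the low rate costs more energy per packet even though it reaches further.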

The Functions of IoT Sensor Data Collection Gateway

Data Collection: Collects data from various sensors and devices within the network.

Data Transmission: The IOT-G010 gateway is equipped with multiple data transmission interfaces, such as serial ports, Ethernet, and wireless communication. It can transmit the collected data to a central system or cloud service through these interfaces.

Network Management: The IOT-G010 has robust network management capabilities, effectively managing and maintaining the entire network.

Topology: It supports star network architecture and multi-level automatic relay functions, ensuring stable communication and data transmission between nodes.

Firmware Management: The gateway supports local and remote firmware updates and maintenance, allowing users to upgrade firmware via serial port or OTA (Over-The-Air).

Parameter Configuration: The IOT-G010 allows users to flexibly configure and adjust operating parameters to meet the real-time needs of different application scenarios.

Security: Uses AES-128 encryption technology during data transmission to effectively prevent data theft or tampering.

Easy Expansion of Interfaces: The IOT-G010 supports multiple interfaces to ensure compatibility with various devices and sensors. It has reserved interfaces such as SPI, I2C, and GPIO, providing great expandability.

The Operating Modes of IoT Sensor Data Collection Gateway

The gateway enters configuration mode in response to specific commands. In this mode, it can set its own parameters and those of the corresponding nodes. After the command is sent, it automatically enters normal operation mode.

The Data Output Options for IoT Sensor Data Collection Gateway

The gateway has two data output options: USB output and Ethernet output. You can switch between the two options using the button.

A double flash of the red light indicates that the serial port is switched to the Ethernet module and outputs data through the RJ45 interface.

A double flash of the blue light indicates that the serial port is switched to the USB-to-serial chip and outputs data through the USB interface.

The IOT-G010 IoT sensor data collection gateway from NiceRF, with its powerful features, reliable performance, and flexible expandability, meets the needs of a wide range of IoT applications. It is a comprehensive, high-performance gateway that provides users with efficient and reliable IoT solutions.

For details, please click: https://www.nicerf.com/products/

Or click: https://nicerf.en.alibaba.com/productlist.html?spm=a2700.shop_index.88.4.1fec2b006JKUsd

For consultation, please contact NiceRF (Email: [email protected]).


Text

Computing at the Edge: How Edge Computing Enhances Data Efficiency in 2024

In the digital age, where data is generated at an unprecedented rate, traditional cloud computing architectures are facing challenges in meeting the demands for low-latency processing, real-time analytics, and edge-to-cloud connectivity. Edge computing has emerged as a transformative paradigm, bringing compute, storage, and analytics capabilities closer to the data source, enabling faster insights, enhanced security, and improved operational efficiency. As we journey into 2024, the Edge Computing Market continues to expand rapidly, driven by factors such as the proliferation of Internet of Things (IoT) devices, the rise of 5G networks, and the need for decentralized computing architectures. This article delves into the key trends, market dynamics, and factors shaping the Edge Computing Market in the coming years.

Market Overview:

The Edge Computing Market is experiencing exponential growth, with a projected Compound Annual Growth Rate (CAGR) exceeding 30% from 2022 to 2024. This growth is fueled by factors such as the increasing adoption of IoT devices, the deployment of edge computing nodes in industrial environments, and the demand for real-time analytics and insights.
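To make the growth figure concrete, the compounding arithmetic behind a CAGR can be worked through in a few lines. This sketch assumes that 2022 to 2024 counts as two annual compounding periods and uses exactly 30% as the rate, whereas the text says the rate exceeds it; the function name is illustrative.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Total size multiple after compounding an annual growth rate."""
    return (1.0 + cagr) ** years


multiple = growth_multiple(0.30, 2)
print(f"market size multiple over two years: {multiple:.2f}x")
```

In other words, a 30% CAGR sustained over two years implies a market roughly 1.69 times its starting size, not 1.6 times, because the second year's growth applies to the already enlarged base.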

Key Factors Driving Growth:

Proliferation of IoT Devices: The proliferation of IoT devices across industries such as manufacturing, transportation, healthcare, and retail is generating vast amounts of data at the network edge. Edge computing enables organizations to process and analyze this data locally, reducing latency, minimizing bandwidth usage, and enabling faster decision-making in real time.

Rise of 5G Networks: The rollout of 5G networks promises ultra-low latency and high bandwidth connectivity, making it ideal for supporting edge computing applications. Edge computing leverages the distributed nature of 5G networks to deliver compute and storage resources closer to end-users and IoT devices, enabling seamless connectivity and enhanced user experiences.

Decentralized Computing Architectures: Edge computing facilitates the transition from centralized cloud computing architectures to decentralized computing models, where data processing and analytics are distributed across edge nodes, cloud data centers, and hybrid environments. This distributed approach improves resilience, scalability, and security, particularly in mission-critical applications and remote locations.

Real-time Analytics and Insights: Edge computing enables organizations to derive real-time insights from streaming data sources, such as sensors, cameras, and connected devices, without the need to transmit data to centralized cloud servers. This capability is essential for applications requiring low-latency processing, such as autonomous vehicles, predictive maintenance, and smart infrastructure.
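The bandwidth argument in the factors above can be illustrated with a small sketch of local aggregation: an edge node condenses a window of raw readings into a summary and forwards only that. The window contents, field names, and the choice of summary statistics are assumptions made for illustration.

```python
from statistics import mean


def summarize_window(readings):
    """Reduce a window of raw sensor readings to a compact summary."""
    return {
        "count": len(readings),
        "min": min(readings),
        "max": max(readings),
        "mean": mean(readings),
    }


def upstream_reduction(readings, summary):
    """Fraction of values no longer sent upstream when only the summary is forwarded."""
    return 1.0 - len(summary) / len(readings)


# One minute of once-per-second temperature samples (synthetic data).
window = [20.0 + 0.1 * i for i in range(60)]
summary = summarize_window(window)
saved = upstream_reduction(window, summary)
print(summary)
print(f"values sent upstream reduced by {saved:.0%}")
```

A real deployment would also decide locally when a reading is unusual enough to forward in full, which is where the latency benefit comes from.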

Challenges and Opportunities:

While the Edge Computing Market presents significant growth opportunities, challenges such as security vulnerabilities, interoperability issues, and skill shortages remain. However, these challenges also create opportunities for industry players to innovate, develop edge-native applications, and collaborate on standardized frameworks to address the evolving needs of edge computing deployments across industries.

For more information: https://www.gmiresearch.com/report/global-edge-computing-market-iot/

Conclusion:

In 2024, the Edge Computing Market stands at the forefront of digital transformation, enabling organizations to harness the power of data at the network edge. As edge computing technologies continue to mature and evolve, the market's growth is not just about hardware and software; it is about unlocking new possibilities for innovation, efficiency, and competitiveness in the digital economy. The Edge Computing Market is not merely a segment of the IT industry; it is a catalyst for reshaping the way data is processed, analyzed, and utilized at the edge of the network. In embracing the opportunities presented by IoT, 5G, and decentralized computing architectures, the Edge Computing Market is not just riding the edge; it is driving the future of computing in the 21st century.


Text

What is the difference between the LoRaWAN wireless module and LoRa gateway wireless transmission technology?

Many individuals find it challenging to differentiate between the LoRaWAN wireless module and LoRa gateway wireless transmission technology, as well as their applications within the realm of IoT.

LoRaWAN specifically pertains to the networking protocol found within the MAC (Media Access Control) layer. In contrast, LoRa serves as a protocol within the physical layer. Although current LoRaWAN networking implementations utilize LoRa as the physical layer, it's worth noting that the LoRaWAN protocol also allows for the use of GFSK (Gaussian Frequency-Shift Keying) as the physical layer in specific frequency bands. From a network layering perspective, LoRaWAN can adopt various physical layer protocols, just as LoRa can serve as the physical layer for other networking technologies.

LoRa, as a technology, falls under the category of LPWAN (Low-Power Wide-Area Network) communication technologies. It is an ultra-long-distance wireless transmission method based on spread spectrum technology, commercialized and promoted by Semtech in the United States. It changes the previous trade-off between transmission distance and power consumption, offering users a straightforward system capable of extended range, prolonged battery life, and increased capacity, and so expands the capabilities of sensor networks. Currently, LoRa predominantly operates in license-free frequency bands worldwide, including 433/868/915 MHz, among others.

On the other hand, LoRaWAN wireless communication stands as an open standard defining the communication protocol for LPWAN technology based on LoRa chips. LoRaWAN defines the Media Access Control (MAC) layer at the data link level and is overseen by the LoRa Alliance. It's crucial to distinguish between LoRa and LoRaWAN because companies like Link Labs utilize a proprietary MAC layer in conjunction with LoRa chips to create more advanced hybrid designs, such as Link Labs' Symphony Link.

LoRaWAN typically employs a star-of-stars topology, which is generally considered preferable to mesh networks because it conserves battery power and extends communication range. In this topology, gateways relay messages to a central network server, and a single transmission from an end node can be received by multiple gateways. The gateways forward the data to the network server, where tasks like redundancy detection, security checks, and message scheduling are executed.
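The redundancy check described above, where one uplink may arrive through several gateways, can be sketched as follows. This is a simplified illustration rather than the behaviour of any particular network server: the dictionary keys (`dev_addr`, `fcnt`, `gateway`, `rssi`) are hypothetical field names, and keeping the strongest reception is just one plausible policy.

```python
def deduplicate_uplinks(uplinks):
    """Keep one copy per (device address, frame counter), preferring the
    reception with the strongest signal."""
    best = {}
    for up in uplinks:
        key = (up["dev_addr"], up["fcnt"])
        if key not in best or up["rssi"] > best[key]["rssi"]:
            best[key] = up
    return list(best.values())


received = [
    {"dev_addr": "26011F2A", "fcnt": 41, "gateway": "gw-a", "rssi": -112},
    {"dev_addr": "26011F2A", "fcnt": 41, "gateway": "gw-b", "rssi": -97},
    {"dev_addr": "26011F2A", "fcnt": 42, "gateway": "gw-a", "rssi": -110},
]
for frame in deduplicate_uplinks(received):
    print(frame["fcnt"], frame["gateway"])
```

Because the end node simply broadcasts and the server sorts out duplicates, the node needs no association with a specific gateway, which keeps its radio-on time short.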

In summary, LoRa covers only the physical layer, which makes it suitable for point-to-point (P2P) communication between nodes. In contrast, LoRaWAN adds the MAC layer and network protocol on top, allowing data to be sent to any base station connected to a cloud platform. By connecting the appropriate antenna to its socket, the LoRaWAN module can operate at different frequencies, offering versatility in its applications.


Text

Arduino PLC | MQTT End Device | Industrial IoT device manufacturer | norvi.lk

How Programmable IoT Devices Operate

Having access to dependable, effective hardware speeds up the completion of your project, as does the ability to program it flexibly.

ESP32 Ethernet Device

For ESP32 Ethernet projects, the NORVI ENET series is a strong option because it has industrial-grade I/O and voltages. The ESP32 Ethernet devices offer both wireless and wired connectivity to the network.

Industrial Arduino Mega

The NORVI Arita is an enhanced version of the NORVI Series. It is offered in five standard variants with a choice of two powerful microprocessors. Arita is built to deliver the full performance of the microcontroller while maintaining reliability, and it works with practically all industrial input and output formats.

Arduino based Industrial Controller

Arduino IDE-programmable

Integrated OLED and customizable buttons for HMI

The ability to programme flexibly

LED signals for simple diagnosis

Applications Using a Programmable MQTT Device and Ultra Low Energy Batteries

Agent One Industrial Controllers are also available for low-power applications. Devices based on STM32L series microcontrollers are used in ultra-low-power applications where the device must run on batteries for an extended period. The Agent One BT family is built with transistor outputs specifically to switch off external sensors when the device goes to sleep.

Wall mount IoT Node

The NORVI SSN range is designed for standalone installations in industrial settings, with a focus on tracking sensor data or parameters from external devices. Attachments for wall and pole mounting make installation simple.

NORVI Controllers

Our Address :

ICONIC DEVICES PVT LTD

Phone : +94 41 226 1776 Phone : +94 77 111 1776

E-mail : [email protected] / [email protected]

Web : www.icd.lk

Distributors

USA

Harnesses Motion LLC

1660 Bramble Rd. Tecumseh, MI

49286, United States

Phone : +1 (734) 347-9115

E-mail : [email protected]

EUROPE

CarTFT.com e.K.

Hauffstraße 7

72762 Reutlingen

Deutschland

Phone : +49 7121 3878264

E-mail : [email protected]

MQTT End Device | Arduino PLC | Analog Input | Wireless sensor | ModBus MQTT gateway | Industrial IoT device manufacturer | WiFi Data logger

#Programmable IoT Devices#Industrial IoT Devices#Industrial Arduino#Arduino PLC#ESP32 Ethernet Device#Programmable Ethernet IoT Device#MQTT End Device#Industrial Arduino Mega#Arduino Mega PLC#Arduino based Industrial Controller#Programmable MQTT Device#Modbus MQTT Device#ESP32 Modbus device#Wall mount IoT Node#Wall mount sensor node#Programmable sensor node#Wireless sensor#Battery Powered IoT Node#Battery Powered Programmable Sensor node#Solar powered sensor node#MODBUS RTU ESP32#Modbus to IoT gateway#Modbus MQTT gateway#Programmable MQTT devices#MQTT over WIFI devices#MQTT over Ethernet devices#Industrial IoT device manufacturer#0 - 10V Arduino device#4 - 20mA Arduino device#ESP32 data logger


Text

10-bit 3Msps Ultra low power SAR ADC IP core for immediate licensing

T2M-IP, the global independent semiconductor IP cores provider and technology expert, is pleased to announce that its 10-bit 3-Msps Ultra Low Power SAR ADC IP Core, silicon proven in 28HPC+ process technology, is available immediately for licensing. The ADC uses a proprietary architecture that reduces harmonic and intermodulation distortion at high output frequencies and amplitudes, making it a truly lossless analog IP core.

The 10-bit 3-Msps Ultra Low Power SAR ADC IP Core is a high-performance mixed-signal SAR ADC with 10-bit resolution and a 3-Msps sample rate, aimed at leading-edge systems on chip (SoCs) for microcontrollers, medical applications, and general-purpose ICs.

An analog-to-digital converter turns an analogue signal that is continuous in both time and amplitude into a digital signal that is discrete in both. The 10-bit 3-Msps Ultra Low Power SAR ADC IP Core employs a high-performance architecture and provides an optional differential current output or differential voltage output. The bandgap and current source are included to provide a complete ADC. The ADC can be configured to adjust the full-scale output range and has all the necessary calibration circuitry to provide excellent static and dynamic linearity performance.
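For readers unfamiliar with the term, a SAR (successive approximation register) converter resolves one bit per step by binary search against an internal reference. The following is a textbook-style behavioural sketch, not T2M's proprietary architecture; the ideal comparator, the 1.0 V reference, and the function name are assumptions.

```python
def sar_convert(v_in, v_ref=1.0, bits=10):
    """Behavioural model of successive approximation: test each bit from the
    most significant down, keeping it if the trial level does not exceed v_in."""
    code = 0
    for bit in reversed(range(bits)):
        trial = code | (1 << bit)
        trial_voltage = trial * v_ref / (1 << bits)   # ideal internal DAC level
        if v_in >= trial_voltage:                     # ideal comparator decision
            code = trial
    return code


for v in (0.0, 0.25, 0.5, 0.999999):
    print(f"{v:.6f} V -> code {sar_convert(v)}")
```

With 10 bits there are 1024 output codes and ten comparator decisions per sample, so a 3-Msps converter has roughly 333 ns in which to complete all ten.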

Its ultra-low area and low power design provides accurate charge transfer without the need for calibration, which increases ADC channel speed and relaxes op-amp gain, bandwidth, and offset requirements. These features simplify high-performance analog designs and reduce noise and power consumption, yielding excellent linearity in a compact area.

The 10-bit 3-Msps Ultra Low Power SAR ADC IP Core has previously been used in medical, Ethernet, automotive, communication system, microcontroller, and sensor applications.

In addition to the 10-bit 3-Msps Ultra Low Power SAR ADC IP Core, T2M's broad silicon analog IP core portfolio includes data converter (ADC and DAC) IP cores that offer sampling rates from a few MSPS to over 20 GSPS and resolutions from 6 bits to 14 bits, available at major fabs in process geometries as small as 7 nm. They can also be ported to other foundries and leading-edge process nodes on request.

About T2M: T2M-IP is a global independent semiconductor technology expert, supplying complex semiconductor IP cores, software, KGD, and disruptive technologies that accelerate development of your wearables, IoT, communications, storage, servers, networking, TV, STB, and satellite SoCs. For more information, please visit: www.t-2-m.com

Availability: These Semiconductor Interface IP Cores are available for immediate licensing either stand alone or with pre-integrated Controllers and PHYs. For more information on licensing options and pricing please drop a request / MailTo


Text

Top 10 Emerging Technology

Today we are going to talk about the top 10 futuristic technologies that are going to change the world completely.

Technology is ever-evolving; regardless of the current market scenario, it is never held back. It keeps producing ground-breaking innovations that address our current problems. It is no surprise that people are making predictions about where technology will go in the coming years. Technology is making our lives easier, and here we will talk about the top 10 emerging technologies that are going to have a major impact on us in the coming years.

Artificial intelligence

It can be termed the most transformative evolution in the field of technology. In the near future it will be very helpful in many fields, such as healthcare, entertainment, cybersecurity, vital tasks, translation and linguistics, sports training, payments, business management, political analysis, sports strategy, purchases through photographs, and many more.

5G

5G is the fifth-generation technology standard for broadband cellular networks, and many telecom operators worldwide have been deploying it since 2019. 5G wireless technology is meant to deliver multi-Gbps peak data speeds, ultra-low latency, greater reliability, massive network capacity, increased availability, and a more uniform experience to more users. Higher performance and improved efficiency enable new user experiences and connect new industries. Its future impact looks very promising, as 5G is now a driving force behind technologies like the Internet of Things (IoT), artificial intelligence, and machine learning. The list of industries that will benefit is long, as the capabilities it offers will surpass anything currently in place. 5G's advanced network infrastructure can help us realize the benefits of cutting-edge technologies more fully and create meaningful impressions for end users. The areas that will use it include healthcare, manufacturing, retail, finance, and energy and utilities. While these are just a few of the industries that will benefit from 5G in the near future, the innovation is clearly revolutionary for the world at large.

Autonomous Vehicles

We can say that transportation has changed the way we live, and autonomous transportation is set to do so again. This sector has seen tremendous evolution, from horses and carts to cars and now to the automation of the vehicles themselves. Autonomous vehicles have been driven by both technical innovation and socioeconomic factors. The technology has already achieved impressive milestones and has been in use since 2018 in several countries with similar infrastructure. On the other hand, it still faces many obstacles and unforeseen challenges. The promise is large: according to a report by McKinsey & Company, autonomous vehicles could reduce road accidents, and the deaths they cause, by as much as 90%. Moreover, in the years to come, vehicles will be able to report information to automakers and drivers about security, vehicle performance, and road conditions.

Blockchain

It is a technology that allows digital information to be distributed but not copied. It underpins digital currencies such as Bitcoin, Litecoin, and Ethereum. Here is how it works: once a block is filled with data, it is chained to the previous block, so the data is linked together in chronological order. Every block has its own unique hash and stores the hash of the previous block it was chained to. As soon as a new block is added to a blockchain, the entire blockchain record is updated on all the nodes that are part of the network, creating many replicas of the blockchain so that even if one computer is corrupted or compromised, the full data remains available on all other nodes. In future, blockchains and cryptocurrency could become a major part of the mainstream financial system and may have to satisfy widely divergent criteria. Some economic analysts predict big changes in crypto when institutional money enters the market. There is also a possibility that crypto will be floated on the NASDAQ, which would add further credibility to blockchain and its uses as an alternative to conventional currencies.
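The chaining described above, where each block stores its own hash and the hash of its predecessor, can be sketched in a few lines. This is a toy illustration of hash linking only; it leaves out consensus, networking, and replication, and the block layout and function names are assumptions.

```python
import hashlib
import json

GENESIS_PREV_HASH = "0" * 64  # placeholder predecessor for the first block


def block_hash(index, data, prev_hash):
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps(
        {"index": index, "data": data, "prev_hash": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain, data):
    """Add a block that points at the hash of the current last block."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS_PREV_HASH
    index = len(chain)
    chain.append({
        "index": index,
        "data": data,
        "prev_hash": prev_hash,
        "hash": block_hash(index, data, prev_hash),
    })


def is_valid(chain):
    """Check every link and recompute every hash."""
    for i, block in enumerate(chain):
        expected_prev = chain[i - 1]["hash"] if i else GENESIS_PREV_HASH
        if block["prev_hash"] != expected_prev:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev_hash"]):
            return False
    return True


ledger = []
for entry in ("alice pays bob 5", "bob pays carol 2", "carol pays dan 1"):
    append_block(ledger, entry)
print(is_valid(ledger))
ledger[1]["data"] = "bob pays carol 200"   # tamper with an earlier block
print(is_valid(ledger))
```

Altering any earlier block changes its hash, which no longer matches what the following block recorded, so tampering is detectable by anyone holding a copy of the chain.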

Human Augmentations

Human augmentation aims to enhance human ability using either medicine or technology, focusing on physical and cognitive improvements that become an integral part of the human body. One example is limb prosthetics built with active control systems that can outperform natural human limbs. Historically, human augmentation has leaned more towards technological study than medical research. The technology has helped people with disabilities as well as healing the sick, and it promises to end physical disability and protect humans from different kinds of injuries. According to studies, in the near future it could also be used to enhance non-disabled people through bionics and prosthetic augmentation. Other possibilities include bionic human joints, embedded scanning, chemical balancing systems to enhance physical activity, permanent and customisable contact lenses, augmented skulls and feet, artificial windpipes, and many more. Nor is it limited to these: augmentation can offer things beyond our current imagination, although we are still sceptical about making this technology mainstream.

Internet of things

The Internet of Things (IoT) refers to objects, or "things", that are fitted with software, sensors, and other technologies in order to exchange data and connect with other devices and systems over the internet. The power of IoT is that it can automate our homes and workplaces, and in future it may be used to design an entire city or state to deal with traffic congestion and parking issues, or to make the city greener. It will help build environments that are intelligent, efficient, and sustainable. IoT has the capability to build a smart economy and smart governance wherever it is applied. It will also enable us to enhance safety, cut energy usage and cost, and reduce environmental impact. The future looks quite promising with the increasing development of IoT.

Quantum Computing

Quantum computing is a remarkable trend in the current market. It is a form of computing that takes advantage of quantum phenomena such as entanglement and superposition. For certain classes of problems, these computers can be many times faster than regular computers. Many organisations are involved in innovation here, including Google, Amazon, Microsoft, Honeywell, and Splunk. The uses of this technology are vast and cannot be limited to any single field. Fields already finding it useful include banking and finance, for managing credit card risk, analysing online transactions, high-frequency trading, and fraud analysis. The future of this technology is highly promising, as it can impact many sectors, namely healthcare, energy, finance, security, and entertainment. It is expected to be a multibillion-dollar industry by the end of 2030.

AI Cloud Services

This technology is a mix of several future technologies: it merges the machine learning capabilities of artificial intelligence with cloud-based computing environments to make intuitive, connected experiences possible. First, let us understand what the cloud is: it is a popular storage option used by both consumers and enterprise-level users. With cloud computing, AI is more plausible and accessible, primarily because most of the hardware that people use is incapable of handling AI applications. Smartphones and laptops are not able to manage AI applications on their own. The cloud provides better accuracy and speed for many GPU applications such as machine vision. Hybrid cloud enables simple tasks to be parsed on device and run locally without the need for an internet connection.

Big Data Analytics

This technology is the use of advanced analytics techniques against very large, diverse data sets that include structured, semi-structured, and unstructured data from different sources and in different sizes, from terabytes to zettabytes. Big data is data whose size or type is beyond the ability of traditional relational databases to capture, manage, and process with low latency. In future, this technology will enable a sharper focus on data governance, and it will speed up decision-making through augmented analytics. It will play an important role in research as a supplement to researchers. Customer experiences will become far smoother. Cloud participation will increase, whether in public or private cloud spaces, and the technology will become more accessible through cloud technologies.

Cybersecurity

Cybersecurity is the practice of safeguarding our online existence, servers, mobile devices, electronic systems, networks, and data from malicious attacks in the digital space. It is also known as information technology security or electronic information security. It keeps devices free from threats and attacks. There are five basics of cybersecurity: Change, Compliance, Cost, Continuity, and Coverage. Sooner or later, humans will be replaced in this field and artificial intelligence will take charge of cybersecurity. The important services of tomorrow demand crucial decisions today. Business change is exponential in that the strategies and changes made today create the framework from which greater agility and innovation can be obtained. It is this agility that will produce the next, more significant round of transformation to build the business of the future.


Global Pet Wearable Market Analysis by Trends 2021 Size, Share, Future Plans and Forecast 2029

The Global Pet Wearable Market is expected to reach US$ 6.95 Bn by 2029, at a CAGR of 14.3% during the forecast period.

Global Pet Wearable Market Overview:

Global Pet Wearable Market research assesses the market's primary characteristics based on supply, demand, feasibility, and current trends. Forecasts for the years 2022-2027 are also included in the research. Based on statistical data and in-depth research, the report estimates and forecasts potential growth in the global pet wearable market at each point in time, taking into account both qualitative and quantitative values of major factors such as historical, current, and future trends. The research looks at both the existing pet wearable market and the worldwide market's prospects. The report also includes a thorough review of company execution, market share, and cost analysis data.

Collect Free Sample Copy Link @ https://www.maximizemarketresearch.com/request-sample/6478

Global Pet Wearable Market Dynamic:

Pet wearables increase connectivity by sending data to a centralized server over networks such as wireless local area network (WLAN), Bluetooth, and RFID. The IoT connects billions of pet devices and sensors to enable innovative applications in the market. Smartphones, laptops, and tablets are the major systems through which people and systems connect. At present, IoT systems use low-cost, energy-efficient wireless sensor nodes, whose continuous operation relies on power management and low-power radio. IoT technologies have become an integral part of many applications, including smart pet wearables.

Market Scope:

We cover all areas of the market in our study. Positive qualities, restrictions, potential, and challenges are all examined in depth in the study, with all data coming from press releases and annual reports. The study's purpose is to suggest a patent-based technique for identifying potential technology partners as a means of supporting open innovation. Users can take additional steps in their organization's growth or improvement by using the report's market share analysis and comparison of the major companies.

Our team is analysing the influence of COVID-19 on various industry verticals and providing authenticated data to the customer. This information helps us better understand the market. To comprehend the impact of COVID-19 on the worldwide pet wearable market, consult our expert monitoring, which describes all of the influencing elements and the COVID-19 impact on each key player.

Global Pet Wearable Market Key companies:

• Whistle Labs Inc.

• PetPace Ltd.

• Nedap N.V.

• Loc8tor Ltd.

• DeLaval Inc.

• FitBark

• i4C Innovations

• Tractive

• Binatone Global

• Cybortra

• Garmin

• KYON

• PawsCam

• PawTrax

• Pet Vu

• Petcube

• Petrek Australia

• Pettorway

• Pitpatpet

• Pod Trackers

• Trackimo

• Wondermento

• WTS - Wonder Technology Solutions

Ask your queries regarding report Link @ https://www.maximizemarketresearch.com/inquiry-before-buying/6478

Market Segmentation:

Based on technology type, the Pet Wearable market can be segmented into GPS and RFID sensors. RFID tags are estimated to lead this segment. The segment dominated the market in 2021 and is anticipated to see a slight decline in its market share by the end of 2022. RFID tags are designed for two frequency ranges: low frequency (LF) and ultra-high frequency (UHF). The main application for LF RFID is animal tracking, while UHF-based RFID tags record continuous readings of a pet's daily activities.

Based on application, the Pet Wearable market is segmented into medical diagnosis & treatment; behavior monitoring & control; facilitation, safety & security; and identification and tracking. The tracking segment is estimated to show strong growth by the end of 2022. Tracking collars with supporting smartphone applications are in high demand, which is attributed to the ability to locate pets on demand and track their activities at regular intervals.

This Global Pet Wearable Market study also includes product sales forecasts, which can help market players launch new goods and avoid costly mistakes. It indicates which aspects of the business need to be improved in order for the company to succeed. It's also simple to detect fresh possibilities to stay one step ahead of the competition; this market research study includes the most recent trends to assist in bringing the company to market and gaining a considerable edge. A review of the brand's summary, overview, market revenue, and financial analysis from the top vendors in the Global Pet Wearable industry is one of the report's most important sections.

About Maximize Market Research:

Maximize Market Research, a global market research firm with a dedicated team of professionals and data, has carried out extensive research on the Pet Wearable market. Maximize Market Research provides syndicated and custom B2B and B2C business and market research on 12,000 high-growth emerging technologies, opportunities, and threats to companies in the chemical, healthcare, pharmaceuticals, electronics and communications, internet of things, food and beverage, aerospace and defense, and other manufacturing sectors. Maximize Market Research is well-positioned to analyze and forecast market size while also taking into consideration the competitive landscape of the sectors.

Contact us:

MAXIMIZE MARKET RESEARCH PVT. LTD.

3rd Floor, Navale IT Park Phase 2,

Pune Banglore Highway,

Narhe, Pune, Maharashtra 411041, India.

Email: [email protected]

Phone No.: +91 20 6630 3320

Website: www.maximizemarketresearch.com


Silicon Labs Introduces World's First Secure Sub-GHz SoCs with Long Wireless Range and 10+ Years Battery Life

Silicon Labs introduced new sub-1-GHz (sub-GHz) SoCs, delivering the world's first sub-GHz wireless solutions that combine long-range RF and energy efficiency with certified Arm PSA Level 3 security to meet the global demand for high-performance, battery-powered IoT products. Expanding the company's award-winning Series 2 platform, EFR32FG23 (FG23) and EFR32ZG23 (ZG23) system-on-chip (SoC) solutions provide developers with flexible, multiprotocol sub-GHz connectivity options supporting a wide range of modulation schemes and advanced wireless technologies, including Amazon Sidewalk, mioty, Wireless M-Bus, Z-Wave and proprietary IoT networks.

"This new evolution of our Series 2 platform is answering the ever-increasing demands for highly-integrated, long range wireless connectivity to enable cities, industries and homes to operate more efficiently and sustainably," said Matt Johnson, President of Silicon Labs. "Silicon Labs' new secure, ultra-low-power sub-GHz solutions extend wireless communication beyond one-mile, thus expanding the boundaries for developers who need scalable high-performance wireless to drive the transformative potential of the IoT."

Unlocking Efficiencies with IoT

According to the US Energy Information Administration, 60 % of the world's energy usage comes from industrial and commercial applications, and residential energy consumption accounts for 21 %.

Smart grid technology, building and home automation IoT systems can positively impact global sustainability and drastically reduce energy consumption. Silicon Labs designed the FG23 and ZG23 SoCs to enable the next generation of secure IoT products to accelerate sustainability and energy efficiency initiatives.

Low Power, Long Range and Secure

The new FG23 and ZG23 wireless SoC solutions offer an optimized combination of ultra-low transmit and receive radio power (13.2 mA TX at 10 dBm, 4.2 mA RX at 920 MHz) and best-in-class RF (+20 dBm output power and -125.3 dBm RX at 868 MHz, 2.4 kbps GFSK), making it possible for IoT end nodes to achieve 1+ mile wireless range while operating on a coin cell battery for 10+ years.
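The RF figures quoted above can be turned into a rough link budget. The following is a minimal sketch, assuming ideal free-space propagation and no antenna gains or losses (neither assumption comes from the announcement); the function names are illustrative. Real deployments see far more path loss than free space, which is where the remaining margin goes.

```python
import math

def link_budget_db(tx_power_dbm: float, rx_sensitivity_dbm: float) -> float:
    """Maximum tolerable path loss between transmitter and receiver."""
    return tx_power_dbm - rx_sensitivity_dbm

def free_space_path_loss_db(distance_km: float, freq_mhz: float) -> float:
    """Standard free-space path loss formula (distance in km, frequency in MHz)."""
    return 20 * math.log10(distance_km) + 20 * math.log10(freq_mhz) + 32.44

# Figures quoted in the text: +20 dBm output, -125.3 dBm sensitivity at 868 MHz.
budget = link_budget_db(20.0, -125.3)

# Assumption: one mile (about 1.609 km) of unobstructed free space.
loss_one_mile = free_space_path_loss_db(1.609, 868.0)
margin = budget - loss_one_mile

print(f"link budget: {budget:.1f} dB")
print(f"free-space loss at 1 mile: {loss_one_mile:.1f} dB")
print(f"margin left for obstacles and fading: {margin:.1f} dB")
```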

These SoCs also leverage Secure Vault, certified PSA Level 3, enabling developers to safeguard IoT products against software and hardware attacks which can compromise intellectual property, ecosystems and brand trust. FG23 and ZG23 SoCs enable developers to create IoT products that enhance the efficiency and performance of a wide range of applications including smart infrastructure, metering, environmental monitoring, connected lighting, industrial controls, electronic shelf labels (ESL), building and home automation.

Additional SoC Highlights:

Simplified single ended RF match delivers 40 % lower BoM than existing solutions

Broad frequency (110-727 MHz & 742-970 MHz) and modulation support (FSK, GFSK, OQPSK DSSS, MSK, GMSK and OOK)

Advanced peripheral features for LCDs, push buttons and low power sensors

FG23

FG23 targets Amazon Sidewalk, industrial IoT (IIoT), smart city, building and home automation markets that often require battery-powered end nodes with extended wireless communication range capabilities. The FG23 wireless SoC solution provides a flexible antenna diversity feature to enable best-in-class wireless link budget (-111.2 dBm RX @ 920 MHz, 50 kbps GFSK). Advanced wireless, coupled with FG23's low active current (26 µA/MHz) and sleep mode (1.2 µA) make it an ideal solution for battery-powered field area network nodes, wireless sensors and connected devices in difficult to reach locations.
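To see how the quoted sleep and active currents relate to a multi-year battery life, here is a back-of-the-envelope sketch. The coin-cell capacity (225 mAh), the 20 MHz clock, and the 0.1 % duty cycle are hypothetical values chosen for illustration, not figures from the announcement, and the estimate ignores radio current and battery self-discharge.

```python
HOURS_PER_YEAR = 24 * 365

def average_current_budget_ua(capacity_mah: float, years: float) -> float:
    """Average current (in µA) that drains the given capacity in the given time."""
    return capacity_mah * 1000.0 / (years * HOURS_PER_YEAR)

def battery_life_years(capacity_mah: float, sleep_ua: float,
                       active_ua: float, duty_cycle: float) -> float:
    """Battery life for a node that is active for a fraction of the time."""
    avg_ua = duty_cycle * active_ua + (1.0 - duty_cycle) * sleep_ua
    return capacity_mah * 1000.0 / avg_ua / HOURS_PER_YEAR

CAPACITY_MAH = 225.0      # assumption: nominal coin-cell capacity
CLOCK_MHZ = 20.0          # assumption: CPU clock while active
ACTIVE_UA = 26.0 * CLOCK_MHZ   # 26 µA/MHz, as quoted in the text
SLEEP_UA = 1.2                 # sleep current, as quoted in the text

budget_ua = average_current_budget_ua(CAPACITY_MAH, years=10)
life = battery_life_years(CAPACITY_MAH, SLEEP_UA, ACTIVE_UA, duty_cycle=0.001)

print(f"average current allowed for 10 years: {budget_ua:.2f} µA")
print(f"estimated life at 0.1 % duty cycle: {life:.1f} years")
```

The point of the sketch is that the sleep current dominates at low duty cycles, so a sleep figure near 1 µA is what makes a ten-year target plausible.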

ZG23

ZG23 enhances Z-Wave Wireless by adding Secure Vault and offers the same industry-leading RF and power performance as FG23. Supporting Z-Wave Long Range and Mesh, these are the first SoCs to be optimized for both end devices and gateways, and they can also support all FG23 protocols. The ZG23 wireless solution targets smart home, hotel and multi-dwelling unit (MDU) markets. Ultra-compact ZG23-based SiP modules (ZGM230S), supporting Z-Wave only, will also be made available to simplify development and speed time to market.


https://www.futureelectronics.com/resources/featured-products/renesas-ra2e2-48mhz-ultra-low-power-tiny-mcus. Renesas RA2E2 Group is RA Family’s entry line single-chip microcontroller which enables extremely cost-effective designs for IoT sensor nodes, and any battery-operated application that requires developers to cut power consumption, cost and space. https://youtu.be/b7wy0TSfWtM


How Do RF Modules Achieve Temperature Control in Thermostats?

RF modules provide a low-power, long-distance communication technology ideally suited for smart homes and Internet of Things (IoT) applications. In indoor temperature control solutions, they can be employed in the radiator systems used for indoor heating during the winter. In a radiator system, a thermostat controls the heating and temperature adjustment of the radiators by regulating the flow of hot water within them for efficient heat distribution.

Indoor thermostats can be divided into wired thermostats and wireless thermostats. Wireless thermostats offer higher efficiency and intelligence in achieving indoor temperature regulation. The use of wireless communication eliminates the need for complex wiring in challenging environments, simplifying installation and reducing construction time. Here, we will focus on LoRa wireless thermostats.

Working Principle of RF module in Thermostats

A LoRa wireless thermostat uses wireless transceiver modules to control the flow of hot water in radiators, thereby regulating the indoor temperature. First, the thermostat is configured with a heating mode and a set temperature. During heating, the thermostat collects the indoor temperature from the LoRa temperature sensor and compares it with the temperature set in the heating mode. If the indoor temperature exceeds the set temperature, the thermostat uses wireless communication to adjust the valve, reducing the flow of hot water to prevent a further temperature increase. Conversely, if the indoor temperature falls below the set temperature, the thermostat increases the flow of hot water to raise the indoor temperature.
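The compare-and-adjust loop described above can be sketched in a few lines. This is an illustrative sketch only: the function name, the valve opening expressed as a fraction from 0 to 1, the step size, and the small deadband around the setpoint are all assumptions, and the LoRa radio link that carries the sensor reading and the valve command is not modeled.

```python
def valve_command(indoor_c: float, setpoint_c: float, current_opening: float,
                  step: float = 0.1, deadband_c: float = 0.5) -> float:
    """Return the new valve opening (0.0 = closed, 1.0 = fully open)."""
    if indoor_c > setpoint_c + deadband_c:
        # Room is too warm: reduce the hot-water flow.
        return max(0.0, current_opening - step)
    if indoor_c < setpoint_c - deadband_c:
        # Room is too cold: increase the hot-water flow.
        return min(1.0, current_opening + step)
    # Within the deadband: leave the valve alone to avoid constant adjustment.
    return current_opening

# Example: setpoint 21 °C, valve currently half open.
print(valve_command(23.0, 21.0, 0.5))   # too warm, valve closes a step
print(valve_command(19.0, 21.0, 0.5))   # too cold, valve opens a step
print(valve_command(21.2, 21.0, 0.5))   # close enough, unchanged
```

The deadband is a common design choice for battery-powered valves, since every avoided adjustment saves both a motor movement and a radio transmission.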

In the design of LoRa wireless thermostats, data reception and power consumption are particularly critical factors to consider.

The LoRaCC68 series wireless transceiver modules combine high-precision crystals, ultra-low receive current, and sleep current, along with a sensitivity of -129 dBm. They are equipped with a built-in 64 kHz crystal oscillator, allowing timed wake-up of the microcontroller in low-power scenarios. The module's antenna switch is internally integrated within the chip, saving external MCU resources. With its compact size and an output power of 22 dBm (160 mW), this module offers significant advantages in Internet of Things (IoT) and battery-powered applications.
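The pairing of 22 dBm with 160 mW follows from the definition of dBm as decibels relative to 1 mW. A short sketch of the conversion (the function names are illustrative) shows that the quoted 160 mW is a rounded figure:

```python
import math

def dbm_to_mw(power_dbm: float) -> float:
    """Convert a power level in dBm to milliwatts."""
    return 10 ** (power_dbm / 10)

def mw_to_dbm(power_mw: float) -> float:
    """Convert a power level in milliwatts to dBm."""
    return 10 * math.log10(power_mw)

print(f"22 dBm = {dbm_to_mw(22):.1f} mW")
print(f"160 mW = {mw_to_dbm(160):.2f} dBm")
```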

Features of the LoRaCC68 Series Wireless Transceiver Modules

LoRa Wireless Spread Spectrum Technology: With a receiver sensitivity of up to -129dBm and a maximum output of 22 dBm (160mW), this technology reduces the number of gateways required, resulting in cost savings compared to other wireless control methods.

Strong Signal Penetration: These modules exhibit robust signal penetration capabilities, ensuring stable data transmission in complex environments.

High Interference Resistance: LoRaCC68 modules incorporate digital spread spectrum, digital signal processing, and forward error correction coding, providing exceptional interference resistance, thereby guaranteeing the stability and reliability of wireless communication.

Low Power Design: The RF module is designed for low power consumption, with a receive current of less than 5 mA and a sleep current of 1.9 µA to 2.35 mA, reducing operational costs.

Industrial-Grade TCXO Temperature-Compensated Crystal Oscillator: Customizable operating frequencies from 150MHz to 960MHz.

Data Transmission Rates: 0.6-300 Kbps @FSK, 1.76-62.5 Kbps @LoRa.

Supports Multi-Node Connectivity and Multi-Gateway Coordination: Enables more flexible network topologies and greater scalability.

LoRa wireless thermostats are well-suited for indoor temperature regulation due to LoRa spread spectrum technology and low power consumption. Wireless thermostats use LoRa wireless transceiver modules to sense indoor temperatures, then adjust the flow of hot water in radiators to control the indoor temperature based on the desired temperature. When choosing a LoRa wireless control solution, it is essential to consider factors such as communication range, interference resistance, and data transmission rate, and to select the appropriate RF module and configuration accordingly.

For details, please click: https://www.nicerf.com/

For consultation, please contact NiceRF (Email: [email protected])


New Post has been published on Qube Magazine

New Post has been published on https://www.qubeonline.co.uk/artificial-intelligence-in-the-field-of-building-automation/

Artificial Intelligence in the field of Building Automation

Professor Michael Krödel, CEO, Institute of Building Technology, Ottobrunn, Germany and Professor for Building Automation and Technology, University of Applied Sciences at Rosenheim, and Graham Martin, Chairman & CEO, EnOcean Alliance

The term “AI – Artificial Intelligence“ is increasingly associated with buildings and building automation. The question is: what is it, where do its tangible benefits lie in this field, and how does the building infrastructure need to be adapted to realise those benefits?

Today’s building automation systems in the main operate ‘statically’ in response to fixed time programs or simple control parameters. Room temperature control is based on a preset temperature that is the same throughout the day. Lighting is operated manually, with switches, or on the basis of simple presence switches. None of this is truly ‘intelligent.’ The new dimension that AI can add into the building automation environment is to use autonomous analysis of the data as a basis for optimised operation. Thus the heating and cooling dynamic of rooms, weather forecasts, predicted room occupancy during the course of the day can all be factored into the operation of the heating. Similarly, cleaning schedules can be based not only on the current actual values in terms of the intensity of use of kitchens, canteens and toilets and other areas, but can be based on predictions drawn from an analysis of usage patterns in the previous days and weeks. This kind of forward looking building management can be applied in almost every area of building services, leading to increased energy efficiency, reduced operating costs, improved space utilisation and other advantages.

All this – and much more – is possible when data on building system status and conditions is intelligently evaluated. This requires intensive processing of large amounts of data, with many variables to be considered. Artificial Intelligence (AI) offers many new, tailor-made solutions which are eminently suited to efficient building management.

“Building Automation”, “Smart Building” and “Cognitive Building”

Initially, “Building Automation” was comparatively “un-intelligent”. Systems were programmed to follow a set of simple rules, allowing for quick system start-up and subsequent ease of maintenance.

The “Smart Building” typically builds on this classic building automation with flexible IT-based management systems. These offer unrestricted programming using modern IT languages and tools, easy integration with other IT systems such as workspace/room reservation systems or databases, and data visualisation for facility managers and for “ordinary” users.

The growing assimilation of sensor-generated data into the IT-based management level opens the way for more advanced data processing solutions to come into play – such as AI tools. This is the pre-condition for the implementation of any prognosis-based form of building management. The sophisticated processing of sensor-generated data makes the Smart Building into a “Cognitive Building”.

AI-learning process

The first step in any Artificial Intelligence process is system learning. This can take three forms.

– Unsupervised Learning

– Supervised Learning

– Reinforcement Learning

“Unsupervised Learning” is used when large quantities of data must be processed and categorised. This grouping enables the recognition of deviations from norms and interdependencies. For example, sensor data from identical circulation pumps can be grouped. If data from one pump or group of pumps deviates from the norm, there may be a defect and a human engineer can be sent to investigate.
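The pump example can be illustrated with a very small sketch. It does not implement a full clustering method; instead it uses a robust median-based outlier score as a stand-in for "deviation from the norm of the group". The pump names, the readings (imagined as vibration levels in mm/s), and the threshold of 3.5 are hypothetical.

```python
from statistics import median

def flag_deviating_pumps(readings: dict, threshold: float = 3.5) -> list:
    """Return the pumps whose reading deviates strongly from the group median."""
    values = list(readings.values())
    med = median(values)
    # Median absolute deviation: a spread estimate that one faulty pump cannot skew.
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        return [name for name, v in readings.items() if v != med]
    # 0.6745 rescales the MAD so the score is comparable to a z-score.
    return [name for name, v in readings.items()
            if 0.6745 * abs(v - med) / mad > threshold]

# Hypothetical readings from five identical circulation pumps.
readings = {"pump_a": 2.1, "pump_b": 2.0, "pump_c": 2.2,
            "pump_d": 2.1, "pump_e": 4.8}
suspects = flag_deviating_pumps(readings)
print(suspects)  # pumps an engineer should inspect
```

No labels are needed here: the group of identical pumps defines its own norm, which is the defining property of the unsupervised setting.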

“Supervised Learning” often makes use of neural networks. They consist of input and output nodes as well as further nodes in the intermediate layers. Mathematically weighted relationships exist between the diverse nodes (neurons). In order to optimise these relationships, the neural network is subjected to a training phase with known input and output patterns. In the field of building automation, for example, a neural network can ‘learn’ the current consumption profiles of different appliances and which appliances are active when. This information can be used to avoid ‘spikes’ in building energy consumption, by shutting down some appliances and extending the operation time of others.

Another form of Artificial Intelligence is represented by processes that autonomously determine which actions are appropriate in a given situation. They emulate human behaviour whereby different solutions are tried in order to determine the best way forwards in a hitherto unknown situation, and conclusions drawn retrospectively. The learning task becomes more challenging when feedback is given much later and hinges upon events in the relatively distant past. This is true in a human context, and equally true in computer environments. The best-known example in this category is “Reinforcement Learning”. Consider the issue of determining the optimal start and stop times of heating to achieve a comfortable temperature when the building opens. At the simplest level, the learning algorithm receives the value from the room temperature sensor and can act on the actuator on the radiator. By a process of trial and error, the algorithm can determine the necessary lead time. However, this simple example ignores the fact that, for instance, the speed of heating also depends on the outside temperature, so the reading from an exterior temperature sensor needs to be considered. Instead of providing a pre-set target temperature, the algorithm may be given evaluations (good / OK / cold) during the day and must learn in response to this feedback. In addition, the algorithm can be provided with an additional rating every month based on the overall energy cost: encouraging efficient behaviour and discouraging inefficient responses. A ‘stable’ response that balances comfort and efficiency can be established, but exploration should continue to accommodate changes in behaviour and the environment.
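The trial-and-error search for a heating lead time can be sketched with an epsilon-greedy bandit, one of the simplest reinforcement-learning schemes. Everything in this sketch is an assumption made for illustration: the candidate lead times, the simulated reward (a heavy penalty for a cold room at opening time, a light penalty for wasted pre-heating), and the 90-minute "true" requirement. It ignores the outside temperature and the delayed monthly energy rating discussed above.

```python
import random

LEAD_TIMES_MIN = [30, 60, 90, 120, 150]   # hypothetical candidate actions

def simulated_reward(lead_min: int, required_min: int = 90) -> float:
    """Stand-in for occupant feedback and energy cost (hypothetical model)."""
    if lead_min < required_min:
        return -2.0 * (required_min - lead_min) / 30   # room still cold
    return -0.5 * (lead_min - required_min) / 30       # energy wasted

def learn_lead_time(days: int = 500, epsilon: float = 0.1, seed: int = 0) -> int:
    rng = random.Random(seed)
    value = {lead: 0.0 for lead in LEAD_TIMES_MIN}   # running average reward
    count = {lead: 0 for lead in LEAD_TIMES_MIN}
    for _ in range(days):
        if rng.random() < epsilon:
            lead = rng.choice(LEAD_TIMES_MIN)        # explore
        else:
            lead = max(LEAD_TIMES_MIN, key=lambda l: value[l])  # exploit
        reward = simulated_reward(lead)
        count[lead] += 1
        value[lead] += (reward - value[lead]) / count[lead]
    return max(LEAD_TIMES_MIN, key=lambda l: value[l])

best = learn_lead_time()
print(f"learned lead time: {best} minutes")
```

Keeping epsilon above zero after the estimate has settled corresponds to the continued exploration the text recommends, so the controller can notice when the building or its occupants change.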

It can be seen that these three approaches are complementary. The learning method should be chosen depending on the task in hand – each has its merits.

Concrete applications

Many diverse AI-based applications are available in the field of building automation. They can be broadly categorised as follows:

Optimised facility management: needs-based control of heating plants, circulating pumps, lighting etc. (as opposed to control on the basis of simple parameters or by timer).

Optimised utilisation of spaces and infrastructure: capacity analysis and forecasting, e.g. for meeting rooms, canteens, pantries, transit areas, toilets and parking spaces as well as the provision of information in the short term (for building occupants) and in the long term (for facility managers, e.g. in form of advice on building restructuring).

Load management: forward-looking operation of electrical systems in order to avoid (costly) peak loads.

Precautionary maintenance and optimised servicing: analysis of failure probability, timely maintenance and consequential avoidance of technical failures.

Employee-oriented value added services: mobile devices can – for instance – be used to generate space utilisation forecasts, view canteen usage intensity, request parking space availability and preferred workspace location or select individual meals.

Compensation of skilled-staff shortages: making effective use of facility maintenance staff in managing the building’s technical systems.

Focus on meaningful sensor data: generate as much data as possible from as few sensors as possible – reducing redundancy, cutting investment and operating costs.

Demands upon system architecture

An AI platform is indispensable for the introduction of intelligent learning processes such as those described above. This can be either cloud-based or server-based. Cloud-based server farms offer more processing power, and cloud-based AI frameworks offer a broader range of features, so this currently represents common practice.

The AI platform is built on a Smart Building infrastructure, and all technical systems should ideally be connected to a BMS (Building Management System). The BMS must be able to govern the building facility and room automation systems.

Demands on building infrastructure

The AI platform requires a rich set of data from a variety of sensors around the building to operate effectively. Conventional smart building systems use a sensor network to determine the status of a building ‘now.’ “Cognitive” buildings store and analyse historical sensor data to make predictions for the future. For this reason, such buildings are even more critically dependent on the data inputs they receive for their success. Cognitive buildings need to be instrumented throughout with IoT sensor devices that make the algorithms fully aware of every aspect of their operation: environment, occupants, energy requirements, service needs, security, and safety. The richer the data, the more complete and intelligent the response of the AI. Wiring sufficient sensors into an established building is hugely expensive – and even if it were done would create an inflexible architecture that couldn’t be adapted as new applications emerge and learning progresses. The only effective solution is battery-free and maintenance-free energy harvesting sensors that can be fitted in a moment and moved at will. Energy harvesting wireless devices utilize the tiniest amounts of energy from their environment. Kinetic motion, pressure, light, and differences in temperature are converted into energy which, in combination with ultra-low power wireless technology, creates maintenance-free sensor solutions for use in smart buildings and the IoT.

The EnOcean Alliance eco-system offers more than 5,000 multi-vendor interoperable energy-harvesting sensors enabling data collection for multiple applications, such as room or desk/chair occupancy, temperature and air quality, energy usage and restroom usage. In addition to the traditional option of collecting and analysing the data via the BMS (Building Management System), this can now be done by using the existing Wi-Fi network within the building. By securely interfacing those IoT devices with new and existing Aruba Wi-Fi 5 and Wi-Fi 6 Access Points via a plug-in 800/900 MHz radio, building control and business applications can become hyperaware of their operating environments. This information can be used to better model building behavior and to optimize human activity monitoring, organizational redesign, augmented reality, human productivity, and occupant health and safety.

Conclusions

AI-based processes enable a broad range of applications in the field of building automation. The concrete benefits anticipated from AI-based solutions should be clearly defined before implementation, since this plays a determining role in the choice of learning process and its modelling, as well as in the choice of AI platform and the type, number and location of the energy harvesting sensors needed to supply the data inputs.

www.igt-institut.de

www.enocean-alliance.org


Kubernetes Edge Computing - Offers Highly Scalable and Flexible Edge Compute Capabilities

Mobodexter | Mobodexter

Kubernetes Edge Computing: Chick-fil-A, known for its addictive chicken sandwiches and waffle fries, is reported to be on track to become the third-largest U.S. fast-food chain behind McDonald’s and Starbucks. Behind the scenes, the business stays at the forefront of adopting powerful modern technologies such as edge computing and Kubernetes.

Chick-fil-A announced in a Medium post that it will run Kubernetes at the edge on 6,000 IoT devices across all 2,000 of its restaurants. This is part of the chain’s Internet of Things (IoT) strategy to gather and analyze more data to improve customer service and operational efficiency. For example, the chain can forecast how many waffle fries need to be cooked every minute of the day.

This case study shows why Kubernetes has quickly become a key ingredient in edge computing: it is a tried, tested, and effective runtime platform that helps address specific challenges across telecommunications, media, transportation, logistics, agriculture, retail, and other market segments.

The telco industry in particular has much to gain from edge computing. As competition among operators intensifies, telcos must differentiate themselves with new use cases such as industrial automation, virtual reality, connected cars and trucks, sensor networks, and smart cities. Telcos are increasingly using edge computing to ensure these applications run flawlessly while also driving down the cost of deploying and managing network infrastructure.

Just what is edge computing?

Edge computing is a variant of cloud computing in which infrastructure such as compute, storage, and networking sits physically closer to the devices that generate data. Edge computing lets you place applications and services closer to the source of the data. This placement gives you the twin advantages of reduced latency and lower network traffic. Reduced latency improves the performance of field devices by enabling them not only to react faster but also to respond to more events. Lower network traffic helps reduce costs and increases overall throughput. Whether an application or service belongs in the edge cloud or the core data center depends on the specific use case.

Intel Edge Computing Framework

How can you build an Edge cloud?

Edge clouds need to be built with at least two layers. Both layers aim to maximize operational efficiency and developer productivity, and each layer is built differently.

The first layer is the Infrastructure-as-a-Service (IaaS) layer. In addition to providing compute and storage resources, the IaaS layer needs to meet the network performance requirements of ultra-low latency and high bandwidth. This is the layer where blade systems from HP, Dell, IBM, and Lenovo, and specialized systems like the Lenovo ThinkSystem SE350, come into the picture.

The second layer is the Kubernetes layer, which provides an ideal platform to run your applications and services. Although using Kubernetes for this layer is optional, it has proven to be a reliable platform for organizations leveraging edge computing today. You can deploy Kubernetes to field devices, edge clouds, core data centers, and the public cloud. This multi-cloud deployment capability gives you complete flexibility to deploy your applications anywhere in the field, at the edge, or in the cloud. Kubernetes gives your developers the ability to simplify their DevOps practices and minimize time spent integrating with heterogeneous operating environments.

Benefits of Kubernetes on Edge

In addition to very low downtime and strong performance, Kubernetes offers numerous built-in advantages for addressing edge compute challenges, including flexibility, resource efficiency, performance, reliability, scalability, and observability.

1) Flexibility

Kubernetes reduces the complexity of running compute across geographically distributed points of presence and varied architectures by providing flexible tooling that lets developers interact with the edge seamlessly.

With Kubernetes behind our Edge platform, users can run application containers at scale across an agnostic network of distributed computing infrastructure, which in turn gives them complete flexibility to run the application anywhere along the edge.

2) Resource Efficiency

Containers are lightweight by nature and let you use the underlying infrastructure very efficiently. However, managing thousands of containers or more across a distributed architecture becomes complex very quickly. Kubernetes supplies the underlying tools to manage container workflows effectively through automated networking, storage, and event logs.

The Kubernetes Horizontal Pod Autoscaler is one key feature that naturally lends itself to edge computing. It automatically scales the number of pods up or down in a replication controller, deployment, or replica set based on latency or volume thresholds. Consider a point of presence at an edge location that needs to handle sudden traffic spikes, for example during a local sporting event. Kubernetes can detect the traffic increase from event logs and provision resources to scale with the fluctuating demand. This kind of auto-scaling takes the guesswork out of forecasting and planning for infrastructure needs. It also ensures that you provision only what your application requires in any given period.
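The scaling decision itself is simple arithmetic. The Kubernetes documentation describes the Horizontal Pod Autoscaler's core rule as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), skipped when the ratio is within a tolerance (10 % by default). The sketch below reproduces only that arithmetic in plain Python; it is not the controller's code, the function name and the min/max defaults are illustrative, and the request-rate numbers are made up.

```python
import math

def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10, tolerance: float = 0.1) -> int:
    """Replica count suggested by the documented HPA scaling rule."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: do not scale.
        return current_replicas
    wanted = math.ceil(current_replicas * ratio)
    # Clamp to the configured bounds.
    return max(min_replicas, min(max_replicas, wanted))

# Hypothetical edge location: 4 pods, target of 100 requests/s per pod.
print(desired_replicas(4, 200, 100))   # traffic doubled during the event
print(desired_replicas(4, 105, 100))   # within tolerance, no change
print(desired_replicas(10, 50, 100))   # traffic halved afterwards
```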

3) Performance

Modern applications require lower latency than traditional cloud computing models can provide. By running workloads closer to end users, applications can recover vital milliseconds and deliver a better user experience. As mentioned above, Kubernetes’ ability to respond to latency and volume thresholds (using the Horizontal Pod Autoscaler) means that traffic can be served from the most suitable edge locations to reduce latency.

4) Dependability & Scalability

One of the significant advantages of Kubernetes is that it is self-healing. Kubernetes restarts containers that fail, replaces and reschedules containers when nodes die, and kills containers that do not respond to your user-defined health check.

Services abstract this process, allowing you to start and stop containers behind a service while Kubernetes keeps routing traffic to the right containers to avoid service disruptions.

Besides, since Kubernetes’ control plane can handle tens of thousands of containers running across many nodes, it allows applications to scale as needed, which makes it particularly well suited to managing distributed edge workloads.
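The self-healing behaviour described in this section can be illustrated with a toy model of a health check. This is only a sketch of the idea, not how the kubelet is implemented: the `Container` class and `record_probe` function are hypothetical, and the threshold of three consecutive failures mirrors the default `failureThreshold` of a Kubernetes probe.

```python
from dataclasses import dataclass


@dataclass
class Container:
    name: str
    consecutive_failures: int = 0
    restarts: int = 0


def record_probe(container: Container, healthy: bool,
                 failure_threshold: int = 3) -> bool:
    """Record one health-check result; return True if a restart was triggered."""
    if healthy:
        container.consecutive_failures = 0
        return False
    container.consecutive_failures += 1
    if container.consecutive_failures >= failure_threshold:
        # Too many failed checks in a row: restart and reset the counter.
        container.restarts += 1
        container.consecutive_failures = 0
        return True
    return False


# Hypothetical probe history for one container at an edge site.
edge_cache = Container("edge-cache")
history = [record_probe(edge_cache, ok) for ok in [True, False, False, False, True]]
print(history, edge_cache.restarts)
```

A single failed check is tolerated; only a run of failures triggers a restart, which avoids restarting containers on a momentary hiccup.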

5) Observability

Knowing where and how to run edge workloads to maximize efficiency, security, and performance requires observability. However, observability in a microservices architecture is complex.

Kubernetes offers a full view of production workloads, enabling optimization of performance and efficiency. The built-in monitoring system allows real-time insights (including transaction traces, logging, and aggregated metrics), with instant provisioning determined by configuration settings.

Another aspect of observability that is of particular interest for edge computing is distributed tracing. Distributed tracing lets you collect and build a comprehensive view of requests across the chain of API calls made, all the way from user requests to interactions between multiple services. With this information, you can identify traffic bottlenecks and opportunities for optimization.

Interest in edge computing is being driven by rapid data growth from smart devices in the IoT, the coming influence of 5G networks, and the growing importance of running artificial intelligence workloads at the Edge, all of which require the ability to handle elastic demand and shifting workloads. Kubernetes Edge Computing is a rapidly growing technology in which companies like Mobodexter are continually innovating to help customers become productive and efficient with their IoT strategy.
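To make the idea of finding a bottleneck in a trace concrete, here is a minimal sketch. It assumes each span records its parent, a service name, and start and end times, and that child spans run one after another rather than in parallel. The span data and the `self_times` helper are invented for illustration and do not correspond to any particular tracing product.

```python
from collections import defaultdict

# Hypothetical spans for one request: (span_id, parent_id, service, start_ms, end_ms)
SPANS = [
    ("a", None, "gateway", 0, 120),
    ("b", "a", "auth", 5, 25),
    ("c", "a", "catalog", 30, 110),
    ("d", "c", "database", 35, 100),
]


def self_times(spans):
    """Return {service: time spent in the span itself, excluding direct children}."""
    child_durations = defaultdict(list)
    for span_id, parent_id, _service, start, end in spans:
        if parent_id is not None:
            child_durations[parent_id].append(end - start)
    return {
        service: (end - start) - sum(child_durations[span_id])
        for span_id, _parent, service, start, end in spans
    }


times = self_times(SPANS)
bottleneck = max(times, key=times.get)
print(times, bottleneck)
```

Subtracting child time from each span shows where the request actually waits, which is more informative than total duration alone: the gateway span is the longest, but most of that time is spent further down the call chain.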

Footnotes:

Mobodexter, Inc., based in Redmond, WA, builds Internet of Things solutions for enterprise applications with highly scalable Kubernetes Edge Clusters that work seamlessly with AWS IoT, Azure IoT & Google Cloud IoT.

https://blogs.mobodexter.com/kubernetes-edge-computing-offers-highly-scalable-and-flexible-edge-compute-capabilities/

Intel cleans up its (Custom) foundry act - xpost /r/Intel

Intel, ARM deepen foundry ties pages 2 - 5

ARM announced at the event it is extending its partnership with Intel’s foundry business to build IP tailored for the x86 giant’s 22nm FinFET node. It’s a fascinating example of co-opetition from the processor rivals.

ARM will deliver IP that enables a Cortex-A55 aimed at midrange smartphones to run at up to 2.35 GHz, or at voltages down to 0.45V, in Intel’s so-called 22FFL process. ARM is already helping to build a test chip in Intel’s flagship 10nm process, due out before the end of the year, that uses a next-gen Cortex-A SoC running at 3.5 GHz or 0.5V and delivering 0.25mW/MHz.

An Intel executive showed road maps first released at a Beijing event in September, giving more details than ever on its foundry and IP plans. Observers said the level of candor was new for Intel but key to establishing a foundry business that it has struggled for years, largely with its own culture, to stand up.

Intel's Custom Foundry 10nm Roadmap:

http://ift.tt/2iN56CG

http://ift.tt/2zkVZAu

10nm GP/HPM Risk production Q2 2018

-Available Today

-4 digital Vts, 1 analog transistor, 4 SRAM bitcells

-ARM/Intel/Synopsys high density and high performance libraries

-Silicon validated Mobile and Networking portfolio

-Leadership 56G and 112G PAM4 SerDes

10nm GP/HPM+ Risk production Q2 2019

-In Definition

-Enables IP reuse from 10GP/HPM

-Additional CPP device and Vts for PPA improvements

-Additional logic libraries for area and power efficiency

-Target +10% performance, -10% power, -10% area

10nm GP/HPM++ Q2 2020

-In exploration

-Re-architected metal stack for performance improvements

-Additional logic libraries for area and power efficiency

Intel has three follow-ons planned for its 22FFL node which it claims will sport 100x lower leakage, 30% more performance and a 20% area shrink compared to 28nm nodes.

Intel's Custom Foundry 22nm FFL Roadmap:

http://ift.tt/2iN579I

http://ift.tt/2zig9Le

22nm FFL

-Available now

-4 HP Vts and 2 Ultra low leakage Vts

-7T library, Mobile/ IoT focused portfolio

-Full RF enablement

22nm FFL+

-PDK 0.5 just released

-3 VTs plus 2 additional metal layer options for improved PPA

-6T library and denser bitcell / memory compilers

-IP compatible with 22nm FFL

-+20% performance, -25% power / area

22nm FFL+ (I think it's meant to say 22nm FFL++)

-In exploration

-Finer CPP

-Denser Interconnect

-Denser library and bitcell / memory compilers

Intel is gearing up to compete with the wealth of foundation IP at foundries such as TSMC with basic cells of its own as well as some from partners.

http://ift.tt/2iN57GK

http://ift.tt/2zkVYfU

http://ift.tt/2iL3rOb

| IP | Provider | Technology | Availability |
| --- | --- | --- | --- |
| GPIO (GP, HSIO, LVDS) | Intel | 10nm, 14nm, 22nm | Now |
| PLLs (HP-, LP-, GP- PLLs) | Intel | 10nm, 14nm, 22nm | Now |
| Thermal Sensors | Intel | 10nm, 14nm, 22nm | Now |
| Crystal Oscillators (<40MHz) | Intel | 10nm, 14nm, 22nm | Now |
| LPDDR 5/4X/4 (5333Mbps) | Intel | 10nm HPM | 2018 |
| LPDDR 4/3 (3200Mbps) | Cadence, Synopsys | 14nm LP, 22nm FFL | Now |
| DDR 4/3 (3200Mbps) | Synopsys | 22nm FFL | Now |
| USB3.1 (Type C compatible) | Intel, Synopsys | 10nm HPM, 14nm, 22nm FFL | Now |
| USB3.1/DP TX (Type C compatible) | Intel, Synopsys | 10nm HPM, 22nm FFL | Now |
| USB2.0 (Type C compatible) | Intel, Synopsys | 10nm HPM, 14nm, 22nm FFL | Now |
| MIPI M-PHY V4.1 (12Gbps) | Intel | 10nm HPM | 2018 |
| MIPI M-PHY V3.0 (8Gbps) | Intel | 14nm LP | Now |
| MIPI D-PHY V2.1 (4.5Gbps) | Intel | 10nm HPM | 2018 |
| MIPI D-PHY v1.2 | Intel, Synopsys | 14nm LP, 22nm FFL | Now/2018 |
| eMMC5.x/SD3.0 | Intel, Cadence | 10nm HPM, 14nm LP | Now |

Personal thoughts:

ICF is on the right track, but there is one crucial thing they have to get right to gain trust and partners: ensuring their nodes are competitive and delivered on time. Otherwise they will continue as they are now, in partner limbo.

IOTW’s Proof-Of-Assignment And Low-Powered Chip Plugs Power Into IoT

Smart, ultra-connected cities are coming: the fridge that sends alerts about out-of-date items on its shelves, the bus stop shelter whose outdoor advertising receives and acts on data from a potential customer viewing it, and even the lamppost whose sensors count the footfall in a certain part of town. The battle is on for a platform to unleash the promise and power of Internet of Things (IoT) devices and of the increasingly smart cities they will come to inhabit to improve our lives.

When you start to think about the sheer number of devices that emit and receive data, you get some insight into the problems of running such a network, in which previously dumb devices talk to each other and the data they collect is made useful as an input somewhere else.

From our phones continuously pinging for the best cellular connection to card readers at stores grabbing our card details, millions of devices produce “big data” by the second with neural complexity. Distributed ledger technology is well suited to the problem of how to record, validate and store their transactional activity.

In 2017 there were thought to be 8.4 billion IoT devices globally, a figure set to rise to 30 billion in 2020, with the worldwide market by then valued at $7 trillion, according to Gartner.

IOTW power play with proof-of-assignment and a new chip

However, operating at the scale required also means solving some other problems. Chief among those is the ability of a network’s nodes (for example, IoT devices) to function with low power and limited processing resources.

A blockchain startup called IOTW has taken a unique approach to solving the problem, using both software and hardware.

The software part involves moving beyond both the proof-of-work (PoW) consensus systems we know from Bitcoin and Ethereum and the proof-of-stake (PoS) variants used by so-called third-generation blockchains such as EOS and Tron.

However, getting the optimum balance between security and transaction speed is difficult. The IOTA project, for example, addresses those issues by, some would argue, in effect abandoning blockchain for its own Tangle system based on a directed acyclic graph (DAG), where a node issuing a transaction must first validate earlier transactions.

In a PoS blockchain, block rewards are distributed to token holders in proportion to their stake of tokens. If you get those rewards wrong, or the number of nodes and how they are selected or elected, the functioning of the blockchain could soon prove problematic.
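As a rough illustration of rewards paid in proportion to stake, the sketch below splits a block reward among token holders. The holder names, stake sizes, and reward amount are made up, and real PoS chains add rules (validator selection, lock-ups, penalties) that are not modelled here.

```python
from typing import Dict


def distribute_reward(stakes: Dict[str, int], block_reward: float) -> Dict[str, float]:
    """Split a block reward among holders in proportion to their stake."""
    total = sum(stakes.values())
    if total <= 0:
        raise ValueError("total stake must be positive")
    return {holder: block_reward * stake / total for holder, stake in stakes.items()}


# Hypothetical holders and stakes.
rewards = distribute_reward({"alice": 600, "bob": 300, "carol": 100}, 50.0)
print(rewards)
```

The holder with 60% of the tokens receives 60% of every reward, which is the concentration effect that critics of PoS point to.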

IOTW takes a radically different approach with “micro-mining” and its proof-of-assignment (PoA) consensus mechanism. It has succeeded in making it possible to do transaction verification at the level of the connected device and in a highly scalable fashion.

For example, instead of a convoluted voting process, “trusted nodes” are appointed from among the strategic partners of the project.

So how does the verification work get allocated? In architectural terms, a ledger server layer sits between the API server and the machines handling the mining processes; it requests and manages mining assignments, which can be either randomly assigned or selected through a weighted system.
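The article does not say how IOTW implements either selection mode, so the following is only a sketch of the two options it names: a uniform random pick and a weighted pick. The device names and weights are hypothetical, and what the weights would represent in the real system is not stated in the source.

```python
import random
from typing import Dict, Optional


def pick_miner(devices: Dict[str, float], weighted: bool,
               rng: Optional[random.Random] = None) -> str:
    """Choose the device that receives the next mining assignment."""
    rng = rng or random.Random()
    names = list(devices)
    if weighted:
        # Devices with a larger weight are chosen proportionally more often.
        return rng.choices(names, weights=[devices[n] for n in names], k=1)[0]
    # Uniform random assignment ignores the weights entirely.
    return rng.choice(names)


# Hypothetical devices with illustrative weights.
devices = {"fridge": 1.0, "smart-tv": 3.0, "lamppost": 0.5}
print(pick_miner(devices, weighted=False))
print(pick_miner(devices, weighted=True))
```

Passing a seeded `random.Random` makes the assignment reproducible, which is convenient for testing a scheduler of this kind.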

IOTW invents Digital Power System mining chip

The network software is designed in such a way that no special hardware is required for smart devices to plug into the network.

Nevertheless, to boost mining efficiency IOTW has invented (and patented) a chip design, the Digital Power System (DPS), optimized for running the hash computations at low power. Mining devices must find the hash of the encrypted blockchain template passed to them by the IOTW network’s trusted nodes.

The network is capable of tens of thousands of transactions per second (tps) and is aiming at achieving as many as one million tps. Any device fitted with the cheaper and more energy-efficient DPS chip will be able to automatically hop onto the IOTW network. The device can then be controlled and monitored, and data exchanged, all powered by the IOTW native token.
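The exchange between a trusted node and a mining device can be sketched as follows. The article does not name the hash function or the message format, so SHA-256 and the byte-string template here are assumptions chosen purely for illustration, as are the function names.

```python
import hashlib
import hmac


def mine_template(template: bytes) -> str:
    """Device side: compute the hash of the template received from a trusted node."""
    # SHA-256 is an assumption; the source does not specify the hash function.
    return hashlib.sha256(template).hexdigest()


def verify_result(template: bytes, claimed_hash: str) -> bool:
    """Trusted-node side: recompute the hash and compare it with the device's answer."""
    expected = hashlib.sha256(template).hexdigest()
    return hmac.compare_digest(expected, claimed_hash)


# Hypothetical template bytes standing in for the encrypted blockchain template.
template = b"encrypted-block-template-0001"
answer = mine_template(template)
print(verify_result(template, answer))
```

Because the device hashes a template it is handed rather than searching for a winning nonce, the work per assignment is a single hash, which fits the low-power goal described above.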

Marketplace for devices and providers to exchange value

Providers large and small will be able to develop and bid on projects inside the IOTW ecosystem, with electrical appliances, for example, being able to secure the rights to use a given amount of energy from another provider on the network at an agreed price.

Other obvious uses for the IOTW token would be as a unit of exchange to purchase spare parts for IoT devices and to order replacement consumables such as light bulbs.

The mining can be undertaken by any smart IoT device on the network simply by virtue of having downloaded the firmware. However, the installation of IOTW’s lower-power chip enhances mining efficiency and therefore the rewards that can be earned.

Incentivizing IoT adoption, PoA advantages

The ability to mine brings both security and liquidity to the ecosystem marketplace and would likely be an added attraction for those who already own TVs or laptops, who could boost their devices’ capability by mining the IOTW token with the help of the DPS chip.

IOTW’s smart cities solution has the enviable quality of incentivising devices to join the network with the inducement of mining earnings, making it among the most serious attempts to date to address the real-world barriers to adoption.

Also, there are further advantages in leaving PoW and PoS behind.

Proof-of-work is a power-hungry, inefficient free-for-all where all nodes compete in a massive waste of resources, while PoS rewards those with the biggest stake of tokens to the possible detriment of smaller holders. Proof-of-assignment and the incentivising effects of mining are here combined to deliver liquidity, encourage adoption, abolish the waste and stop the potential skewing of the network in favour of the biggest stakeholders.