#vseeface tutorial


Text

You can use your phone camera on VSeeFace!

For anyone interested in VTubing who can't afford a webcam at the moment, or who simply wants to use their iPhone for better face tracking on their 3D model, I made a simple tutorial for you! This method requires the app version of VTube Studio. The tutorial is short and simple to follow, and everything in the video is FREE to use, as long as you own a phone. Click here to check out the video!

#content alert#content update#oc#vtuber#vseeface#vtuber tutorial#vseeface tutorial#indie vtuber#vroid model#vtuberen#twitch streamer#contentalert

14 notes


Note

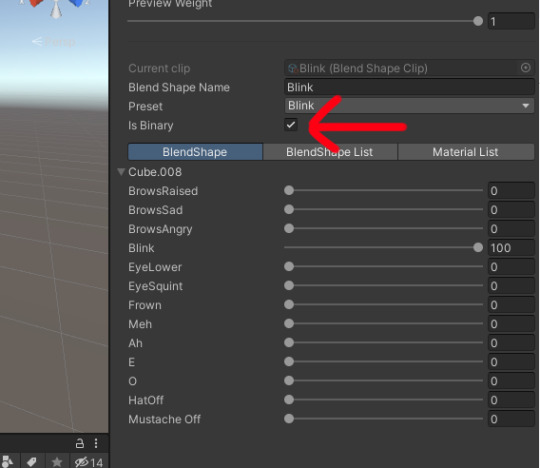

do you have any pointers about how you used 2d textures for blinking/lip sync/expressions in vseeface? i've been trying to find a tutorial for exactly that but i'm having a hard time wording it to get any good searches going. did you make the blendshapes animate over a sprite sheet or something?

Here's a quick rundown of what I did:

Add 2 planes in front of the head, one for the eyes and one for the mouth (for extra control you can add a separate plane for each eye too if you'd like). Use a shrinkwrap modifier to make sure they conform to the head as much as possible.

For each mouth and eye texture, duplicate the plane, apply the matching texture to the copy, then move the copies back inside the face to hide them.

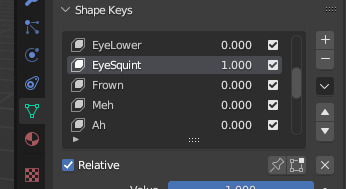

Make a shape key for each individual texture you have (frown, blink, half-lidded eyes, all the lip sync shapes). In each shape key, move the matching plane forward so it sits in front of the face.

For keys like Blink, also move every other eye texture further back into the head within that key. That way Blink still wins even when another eye shape is active. Do the same for the lip sync shapes: in each one, move the closed-mouth shapes further back into the head.
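To see why pushing the other textures back matters, here is a minimal Python sketch of the trick. It is not Blender code. It models each plane's depth as a base offset plus the weighted offset from every active shape key, which is how Blender combines relative shape keys. The frontmost plane that is in front of the face is the one you see. The plane names, key names, and offsets (in arbitrary integer units) are hypothetical values chosen for illustration.

```python
# Hypothetical depth model of the 2D texture-plane trick (not bpy code).
# Depth > 0 means the plane is in front of the face surface; <= 0 means hidden.

BASE_DEPTH = {
    "eyes_open": 1,     # default eye plane, sitting on the face
    "eyes_half": -10,   # duplicates start hidden inside the head
    "eyes_closed": -10,
}

# Offset each shape key applies to each plane at weight 1.0.
SHAPE_KEYS = {
    "HalfLidded": {"eyes_half": +20},
    # Blink brings the closed eyes forward AND pushes half-lidded eyes further back.
    "Blink": {"eyes_closed": +20, "eyes_half": -20},
}


def plane_depths(weights):
    """Additively combine shape key offsets, like relative shape keys."""
    depths = dict(BASE_DEPTH)
    for key, weight in weights.items():
        for plane, offset in SHAPE_KEYS[key].items():
            depths[plane] += weight * offset
    return depths


def visible_texture(weights):
    """The frontmost plane that is in front of the face is the one you see."""
    depths = plane_depths(weights)
    in_front = {plane: d for plane, d in depths.items() if d > 0}
    return max(in_front, key=in_front.get)


print(visible_texture({"HalfLidded": 1.0, "Blink": 1.0}))  # eyes_closed
```

Without the `-20` on `eyes_half` inside Blink, both eye planes would end up at the same depth when the two keys are active, and they would flicker against each other.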

In Unity, when setting up the BlendShape Clips, make sure the clip's Is Binary checkbox is enabled if you want the shape key to SNAP immediately to the new texture with no in-between.

You can also turn down the smoothing for expressions in the VSeeFace settings for the avatar.
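Snapping and smoothing work together: smoothing delays how fast the tracked value rises, and snapping decides when the texture switches. The Python sketch below shows that interaction under two assumptions. Smoothing is modeled as a simple exponential moving average, not VSeeFace's actual filter. The binary clip is modeled as rounding at 0.5, which is one plausible reading of the checkbox.

```python
def snap(weight):
    """Binary clip: force the weight to 0.0 or 1.0 (0.5 threshold is an assumption)."""
    return 1.0 if weight >= 0.5 else 0.0


def smooth(samples, smoothing):
    """Exponential moving average; smoothing in [0, 1), higher means laggier."""
    value = 0.0
    out = []
    for sample in samples:
        value = smoothing * value + (1.0 - smoothing) * sample
        out.append(value)
    return out


def frames_until_switch(smoothing, frames=30):
    """Frames before the snapped texture switches after the eye shuts instantly."""
    samples = [1.0] * frames  # tracker reports a full blink from frame 0 onward
    for i, value in enumerate(smooth(samples, smoothing)):
        if snap(value) == 1.0:
            return i
    return None


print(frames_until_switch(0.0), frames_until_switch(0.8))  # 0 3
```

With heavy smoothing the snapped texture still switches cleanly, but a few frames late. Turning smoothing down removes that lag.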

25 notes


Note

If you don't mind my asking, how do you find working in the vtuber program you use? I have an ancient copy of FaceRig, but when I saw I had to make like over 50 animations for it to blend between, I was like "well, I don't have time for that" and never really looked any further. I don't stream often At All, and it was a passing "it'd be fun to make something like that" curiosity, but man. I'm thinking about it again.

howdy! :D

I use VSeeFace on my PC and iFacialMocap on my iPhone for vtuber tracking and streaming! I really like it, but it's lots and lots of trial and error haha. That's partly because all my avatars are non-humanoid (they're literally animals with few to no human features). Vtuber tracking programs are designed to measure the movements of a human face and translate them onto a human vtuber avatar, so I have to make lots of little workarounds. If you're modeling a human/humanoid avatar, I would imagine things are more straightforward.

I think as long as you're comfortable with 3D modeling and are ready to be patient, it's definitely worth exploring! I really like using my model for streaming and for narrating YouTube videos, TikToks, etc. For me it's just a lot of fun! :D It's a bit like being a puppeteer haha

If you're looking to get started making vtubers, I really recommend this series of tutorials by MakoRay on YouTube, it's super informative and goes step-by-step:

youtube

Best of luck! :D

17 notes


Text

Well, the basic pipeline is camera -> vtubing software -> streaming software -> platform.

You will need a program that uses video and/or audio data to control a vtuber model, i.e. vtubing software. If you're using a VRM model like I am, I recommend VSeeFace: it's completely free and relatively easy to use. If you have a Live2D model, my best suggestion is VTube Studio.

For face tracking you will need a webcam, or a phone that can run the VTube Studio app (available on iOS and Android). Both of these methods work with VSeeFace (VSF) and VTube Studio (VTS).

The webcam is the easiest to connect: you just plug it into the computer and select it in the vtubing software. Phone tracking through the VTube Studio app is more complicated to configure, but there are lots of tutorials out there.

Next, capture the vtuber model in your streaming software. You simply need to capture the vtubing software's window as a Game Capture source (this works in both OBS and Prism). The background should be transparent by default, and you can hide the UI once you're done tweaking the settings.
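The transparent background matters because the streaming software layers the captured model over your scene using each pixel's alpha (opacity). Below is a minimal Python sketch of the textbook "over" operator for a single pixel with straight (non-premultiplied) alpha. It illustrates the idea only and does not reproduce OBS's or Prism's actual rendering code.

```python
def over(src_rgb, src_alpha, dst_rgb):
    """Composite one straight-alpha model pixel over an opaque scene pixel.

    src_alpha is in [0.0, 1.0]; colour channels are 0-255 integers.
    """
    return tuple(
        round(s * src_alpha + d * (1.0 - src_alpha))
        for s, d in zip(src_rgb, dst_rgb)
    )


scene = (0, 100, 200)
print(over((200, 100, 0), 0.0, scene))  # fully transparent: (0, 100, 200)
print(over((200, 100, 0), 0.5, scene))  # half transparent: (100, 100, 100)
```

Pixels around the model have alpha 0, so the scene behind them shows through unchanged. If the capture drops the alpha channel, every pixel behaves as if alpha were 1, and you see the vtubing software's background instead.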

I can't say how taxing this might be for a lower-end computer, but VSeeFace has very flexible performance settings.

i also want to make an oc vtuber like horsegamesins

14 notes


Text

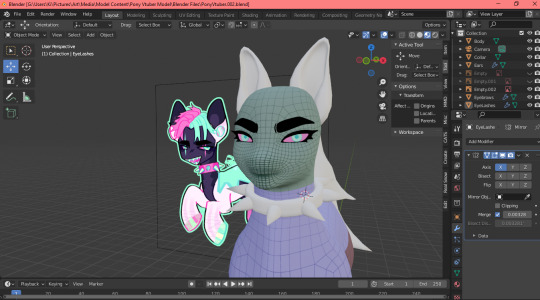

lichrally just tryna see if I can like...make a pony vtuber model work

#wip#wip tag#actually been able to record the process so like...dope#plus i looked up some tutorials online and there's a way i can have this little shit posed right outta unity to vseeface#without an extra program so it wont just be a standing pony

12 notes


Text

Hello my friends,

Some people have been interested in how I made the 3D avatar. This isn't a full tutorial, more of a detailed explanation.

Ay, let's go! °^°~

I always wanted to do creative stuff with the models from the Detective Conan Wii game. However, I didn't know how to rip assets from games, so I searched the internet hoping somebody had already done it for this game.

Ripping assets from a game means taking the stuff used inside the game and turning it into raw files so you can use them outside of the actual game. You can do that with all the 3D models, pictures, music, different datasets and much more.

While doing my research, I found this site called The Video Game Resource. They have a lot of assets ripped directly from popular games.

After downloading the 3D Conan file, I imported it into a program called Blender. Blender is open source (free), and you can use it to create awesome stuff in 3D and 2.5D.

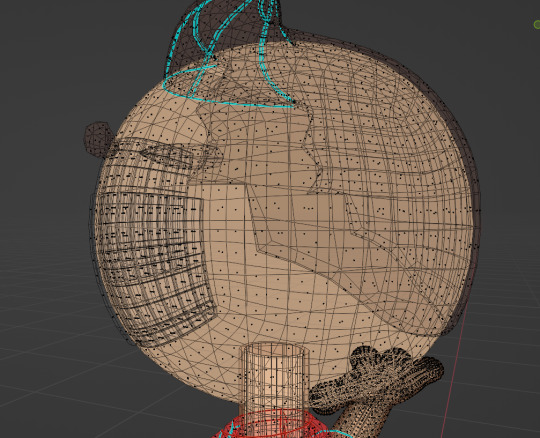

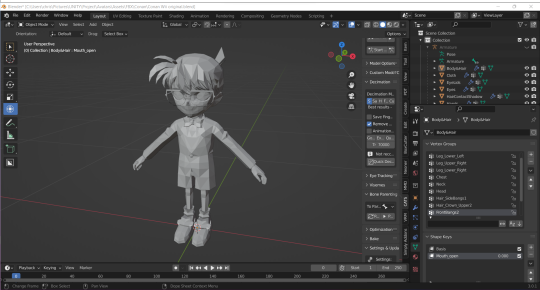

This is how that looks.

I created the materials, put the original textures on them, and boom, Conan looks very colorful now!

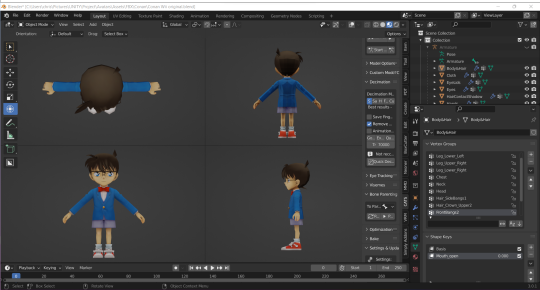

I think he looks great from all sides!

To make the smol brat move later on, he needs an armature with bones, so I need to rig him.

The model already came with an armature and was fully rigged. However, the avatar format used here has a specific standard for 3D model armatures. The existing armature didn't follow it, so I needed to rig the character from scratch.

It took a while but now it looks like this.

I also upgraded Conan's emotionless face with shape keys / blendshapes, using only as many as necessary so he keeps his original charm.

Smol baby Conan can now be turned into a 3D avatar. But wait! This can't be done in Blender, so we need another program: the Unity game engine!

I created a new project and imported the edited model.

I put the materials with the textures on him, and Conan looks all colorful in Unity too!

Next is the UniVRM Add-on.

It's a quick install.

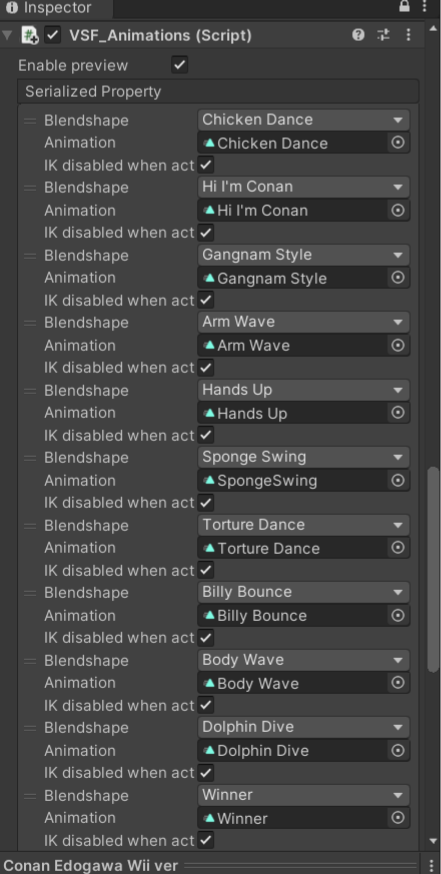

Now the avatar can be exported. After exporting it, I needed to reimport it so I could tweak it to my liking. For that, a VRM has BlendShape Clips, which are mostly used for facial expressions and props.
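A BlendShape Clip groups one or more of the mesh's blendshapes under a single expression name, so tracking software can drive "Blink" or "Joy" without knowing how your particular mesh is built. The Python sketch below models that idea with hypothetical dataclasses; UniVRM's real classes look different. As in VRM 0.x and Unity's SkinnedMeshRenderer, each binding weight here is on a 0 to 100 scale. Summing and clamping overlapping bindings is an assumption made for the sketch.

```python
from dataclasses import dataclass, field


@dataclass
class Binding:
    mesh: str      # hypothetical mesh name
    index: int     # blendshape index on that mesh
    weight: float  # 0-100, applied when the clip is at full strength


@dataclass
class BlendShapeClip:
    name: str
    bindings: list = field(default_factory=list)


def apply_clips(clips, clip_values):
    """Map clip strengths (0.0-1.0) to per-blendshape weights (0-100).

    Bindings that hit the same blendshape are summed and clamped to 100.
    """
    result = {}
    for clip in clips:
        value = clip_values.get(clip.name, 0.0)
        for b in clip.bindings:
            key = (b.mesh, b.index)
            result[key] = min(100.0, result.get(key, 0.0) + value * b.weight)
    return result


clips = [
    BlendShapeClip("Joy", [Binding("Face", 0, 100.0), Binding("Face", 3, 50.0)]),
    BlendShapeClip("Blink", [Binding("Face", 1, 100.0)]),
]
print(apply_clips(clips, {"Joy": 0.5}))
```

The mesh name `Face` and the clip contents are made up for the example. The point is that one clip value fans out to several blendshape weights.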

Said it, done it! Everything is now tweaked to my desire, so I exported the Avatar again.

It's always good to test the model while working on it. For face tracking with a webcam in desktop mode, I use a program called VSeeFace.

To track my hand movements I use a device called Leap Motion Controller.

Now for the best part. VSeeFace has its own avatar format that extends the VRM avatar, so I went back to Unity and installed the VSF-SDK.

I put some animations on Conan and now he is ready to be exported as a VSF-Avatar.

Conan is now ready to be used inside the tracking program for all kinds of different stuff. Maybe recording some clips with Open Broadcaster Software (OBS); he can also be used for live streams.

Maybe join a Discord call with your friends.

There is so much you can do with avatars like this. It's honestly too much to explain everything here. °A•

Thank you for reading this post, I hope it was interesting. If you've got questions, I'm happy to answer them! >3<

#名探偵コナン#detective conan#case closed#dcmk#dcmk fanart#fanart#detective conan video games#vtuber#vtube model#vr avatar#dcmk3d

25 notes


Text

I made it and rigged it in Blender, and then imported it into Unity! I use VSeeFace, which has a whole exporting process. I used MakoRay's tutorial on YouTube to figure it out, but this is the 3rd time I've done this whole ass process lol. I'm probably going to make a fun Matoran model for people to use, based vaguely on the Mask of Light movie :)

Y'all wanna see something awesome??

I'm nearing the final stretch with my 3.0 Vtuber model (although I ran into a bit of a hiccup) and I am super duper excited to show everyone! Here's a sneak peek at some progress made today!

Are you excited to see what I've been working so hard on??

111 notes


Note

(to whatever extent if any you're willing to answer) What's your software/camera setup like for streaming? Thinking about what I want in a model, and one thing I want is the ability to make hand gestures, but I don't know how complicated that is to have rigged. Thanks!

I don't mind sharing some info to help others :>

I use a Logitech C930e webcam that looks at my face and a Leap Motion Controller that looks at my hands. If the .vrm file of your model has a full standard humanoid skeleton rig, then the hands will basically just work automatically with most 3D vtuber programs that support Leap Motion (VSeeFace is my personal choice)! For 2D models it's going to be a lot more complicated, and I have no personal experience with that. I'm sure you can find tutorials for it, though!
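If you want a rough check that your rig has the arm and finger bones hand tracking needs, here is a Python sketch that compares a humanoid bone mapping against Unity's humanoid naming (the `HumanBodyBones` enum uses names like `LeftIndexProximal`). Two parts are assumptions. Treating all 15 finger bones per hand as "full" is my own choice, not a documented VSeeFace requirement. The mapping dict is hypothetical, and how you would pull it out of your model isn't covered here.

```python
FINGERS = ("Thumb", "Index", "Middle", "Ring", "Little")
SEGMENTS = ("Proximal", "Intermediate", "Distal")
ARM_BONES = ("UpperArm", "LowerArm", "Hand")


def required_hand_bones():
    """Unity-style humanoid names for both arms and all finger segments."""
    bones = []
    for side in ("Left", "Right"):
        bones += [side + bone for bone in ARM_BONES]
        bones += [side + finger + seg for finger in FINGERS for seg in SEGMENTS]
    return bones


def missing_hand_bones(humanoid_map):
    """humanoid_map: {humanoid bone name: your armature's bone name}."""
    return [bone for bone in required_hand_bones() if not humanoid_map.get(bone)]


# Hypothetical rig with arms mapped but no finger bones at all.
arms_only = {side + b: "bone_" + b for side in ("Left", "Right") for b in ARM_BONES}
print(len(missing_hand_bones(arms_only)))  # 30
```

A rig like `arms_only` can still move its hands, but there are no finger bones for the tracker to drive, so hand gestures won't show up.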

Good luck!

4 notes


Note

how did you make that vtuber model, it's so cool!!!!!!!

THANK YOU!! i modeled, textured, and rigged the whole thing in blender, put the vrm together in unity, and used vseeface for tracking!

honestly im not ENTIRELY sure what/how much to tell you because it's a very very long process, BUT i will say im hoping to make a few videos on it! because at the time i was making that model there wasn't a full tutorial and a lot of it i had to figure out as i go along (which cost me a lot of time) , plus i love this stuff and i would LOVE to infodump abt it :)c

i could never find resources for making vrms in non-anime/unconventional art styles and furries??? and also how you should rig faces?? which is both amazing and slightly infuriating. so whatever video i make will probably talk about that stuff specifically

#im typing this while listening to whats new pussycat on loop in a vc so i hope this makes sense#sorry if this is like. a nonanswer nbjdfgk i have no idea how to make it short enough for a tumblr post but in depth enough to explain the#process#IF i think of a better answer ill come back to this when im not listening to tom jone's whats new pussycat for the 325848782th time at 3am#wormwerds#magic 8 ball#anon

2 notes


Note

Do you recommend any tutorials/programs for making and using a low poly vtuber rig like yours?

Thanks for asking!! I dunno how much help I can provide since I just kinda winged my model, but I’ll try hehe

I hadn't made a full model in well over two years, since halfway through college, so I just stared at some low-poly models downloaded from models-resource.com and then learned how to use Blender properly so I could make the mesh. I used PyxelEdit for the texture and this Unity extension to set it up for use with VSeeFace, which is free and pretty good!

I didn't use tutorials so I sadly can't recommend any of those, but I do think that just grasping the basics of 3D modelling and texturing and then simply downloading and analysing existing low-poly models, especially in a style you like, will probably help a lot!

I hope there was some useful info in there, and wish you luck on your endeavor!! And again thanks for asking me that's neat :)

13 notes
