#what is rest api integration


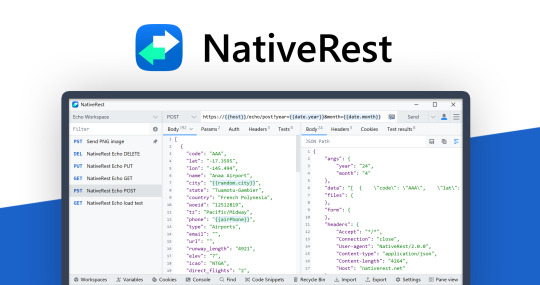

The Next Generation Native REST API Client

Welcome to the official launch of NativeRest—the native REST API client designed to make your API development journey smoother, faster, and more intuitive than ever.

If you’ve used tools like Postman, Insomnia, or HTTPie, you know how essential a powerful API client is for modern development. But what if you could have a tool that combines high performance, a beautiful native interface, and seamless workflow integration, all in one package? That’s where NativeRest comes in.

Why NativeRest?

NativeRest is built from the ground up for speed, efficiency, and a truly native experience. Here’s what sets it apart:

Lightning-Fast Performance: NativeRest leverages native technologies for a snappy, responsive UI that never gets in your way.

Intuitive Design: Enjoy a clutter-free, modern interface that puts your requests and responses front and center.

Advanced Collaboration: Built-in features make it easy to share collections, environments, and test results with your team.

Robust Security: Your data stays private, with secure local storage and granular permission controls.

Cross-Platform Native Experience: Whether you’re on macOS, Windows, or Linux, NativeRest feels right at home.

Get Started

Ready to try it out? Download NativeRest, the native REST API client, and see how it compares to your current workflow. Want a sneak peek? Check out our YouTube channel for quick tutorials and feature highlights.

Welcome to the future of API development—welcome to NativeRest!


What Are Direct Carrier Appointments? 5 Vital Insights for Agencies

Gaining a strong grasp of direct carrier appointments can significantly elevate how your insurance agency operates.

In simple terms, it’s a formal relationship where an insurer authorizes an agency to sell its policies directly — cutting out the middle layers.

But why is this such a big deal, and how does it shape your agency’s future?

Here are five key insights into direct appointments and why they’re so beneficial for your business.


Insight #1: Direct Carrier Appointments Offer Wider Product Access

One of the most valuable benefits of a direct appointment is the immediate access to a broader range of the carrier’s insurance products.

This enables your agency to provide clients with more tailored options, accommodating varied coverage needs and preferences.

Such diversification strengthens your service portfolio, makes your agency more appealing to a broader audience, and positions you more competitively in the marketplace.

Over time, this enhanced market access contributes to stronger revenue generation and business stability.

Insight #2: Direct Appointments Can Improve Earnings

When working directly with carriers, agencies often avoid the layers of commissions that come with using intermediaries or aggregators.

This means you can receive a higher portion of the premium revenue, leading to better profit margins per policy.

With increased commission percentages and potential for negotiating favorable rates, your agency’s income per client improves — supporting financial growth over the long term.

This revenue advantage is key to building a scalable and profitable business model.

Insight #3: Carriers Require Agencies to Meet Eligibility Standards

Insurers typically evaluate agencies before granting direct appointments, ensuring the partnership is secure and mutually beneficial.

Common criteria may include years of operational history, proof of production capabilities, and compliance with regulatory standards.

Meeting these benchmarks shows that your agency is trustworthy, productive, and capable of representing the carrier’s interests responsibly.

These requirements help maintain quality and safeguard the insurer's brand and policyholders.

Insight #4: Access to Unique Products Can Set You Apart

With a direct appointment, you may gain access to exclusive insurance plans or services that aren’t distributed through indirect channels.

These exclusive offerings allow you to provide value that competitors may lack — fulfilling niche market needs and attracting high-intent clients.

Your agency becomes a go-to source for specialized or higher-tier solutions, strengthening your position as a trusted advisor in the industry.

This exclusivity enhances your credibility and helps retain loyal clients looking for premium options.

Insight #5: Appointments Must Be Actively Maintained

Receiving a direct appointment is just the start; agencies must work consistently to keep it active.

That includes hitting required production targets, delivering top-tier service, following carrier policies, and ensuring that records stay updated — including changes to staff.

Failure to maintain performance or compliance can jeopardize the relationship and result in losing the appointment and its benefits.

Ongoing communication and alignment with the carrier are key to keeping the partnership strong and sustainable.

Manage Appointments Easily with Agenzee

Meet Agenzee, your all-in-one insurance compliance platform that redefines how agencies handle licenses and appointments.

No more juggling spreadsheets or missing renewal dates. Agenzee helps your agency stay efficient and compliant with features like:

All-in-One License & Appointment Dashboard

Automated Alerts Before License Expiry

Simplified License Renewal Tools

New Appointment Submission & Tracking

Termination Management Features

CE (Continuing Education) Hour Tracking

Robust REST API Integration

Mobile Access for Producers on the Go

With Agenzee, your agency can minimize administrative burdens and maximize focus on growth.

Request a free demo and see how easy compliance management can be!


Crafting Web Applications for Businesses That Are Responsive, Secure, and Scalable

Hello, Readers!

I’m Nehal Patil, a passionate freelance web developer dedicated to building powerful web applications that solve real-world problems. With a strong command over Spring Boot, React.js, Bootstrap, and MySQL, I specialize in crafting web apps that are not only responsive but also secure, scalable, and production-ready.

Why I Started Freelancing

After gaining experience in full-stack development and completing several personal and academic projects, I realized that I enjoy building things that people actually use. Freelancing allows me to work closely with clients, understand their unique challenges, and deliver custom web solutions that drive impact.

What I Do

I build full-fledged web applications from the ground up. Whether it's a startup MVP, a business dashboard, or an e-commerce platform, I ensure every project meets the following standards:

Responsive: Works seamlessly on mobile, tablet, and desktop.

Secure: Built with best practices to prevent common vulnerabilities.

Scalable: Designed to handle growth—be it users, data, or features.

Maintainable: Clean, modular code that’s easy to understand and extend.

My Tech Stack

I work with a powerful tech stack that ensures modern performance and flexibility:

Frontend: React.js + Bootstrap for sleek, dynamic, and responsive UI

Backend: Spring Boot for robust, production-level REST APIs

Database: MySQL for reliable and structured data management

Bonus: Integration, deployment support, and future-proof architecture

What’s Next?

This blog marks the start of my journey to share insights, tutorials, and case studies from my freelance experiences. Whether you're a business owner looking for a web solution or a fellow developer curious about my workflow—I invite you to follow along!

If you're looking for a developer who can turn your idea into a scalable, secure, and responsive web app, feel free to connect with me.

Thanks for reading, and stay tuned!


okay, so- the past three days have been pretty insane, hence no to-do lists. did not know hour-to-hour what in the hell i'd have to do next.

monday morning, there was a company meeting, and it was announced that we were being sold. this was not... the most surprising thing in the world, because about a month ago there was this sudden hasty push by the top to reorganize the business into distinct independent units that didn't depend on shared services. like, what else would the point of doing that be, if not to sell off pieces of the business? sure, they said that wasn't happening, but who the hell was fooled by that?

so i used to do most of my work on these projects for this one specific business unit, building and running a bunch of middleware API integrations for our learning management system. but my boss, who used to be in charge of the dev team generally, got assigned to this totally different unit- and she liked me enough that she pushed really hard to get me reassigned to her unit.

so i was already conflicted about that:

i really like my boss- she's really understanding of my need for flexibility to work on my side projects, she only cares that i get the work done (and even with many side projects, i still consistently exceed expectations and get a full-time workload done ahead of schedule), and she was pushing hard to get me a raise against upper management who'd taken to using covid austerity as an excuse to never give anyone any raises ever. and the team assigned to this unit didn't have any senior devs who could handle a big infrastructure transition, and i'd just become AWS certified, and without someone like me, my coworkers assigned to that unit would be in some hot water. plus, after the transition, maintaining a reduced suite of products would probably be easier day-to-day.

but on the other hand, all my projects in the other business unit, with the LMS- those are pretty vital, and the nature of the contracts with those clients necessitates frequent maintenance and changes. my code for those integrations is bad, for various reasons but mainly that there is no dev environment for testing changes. it's fundamentally about managing production data in databases we don't directly control, so every change has to be done very quickly and carefully, with no room for big refactors to clean things up (and risk breaking stuff). it's a mess, and no one in the other business unit is prepared to take it over. plus- i liked working directly with clients, doing work where if i did the work someone was appreciative of the work. it was motivating!

ultimately, i decided to trust my boss and follow her to the other business unit. we weren't completely splitting from the rest of the business- i'd still be able to train up someone else to take over my projects, we'd still have the shared customer accounts management software, and- crucially- i'd still have the boss who understood my needs and had no interest in squeezing value out of me.

so i went on vacation for a couple weeks right after committing to that decision- and then i came back on monday, and that day they announce we're being sold.

also that my boss is fired and being replaced by someone from the new company.

also that we have two months to completely disconnect all our products from shared service infrastructure and rebuild our own.

also no takesies-backsies, the acquisition agreement included terms that the former company not hire back any of the sold-off employees or even discuss the acquisition with them at all. no chance to react to the new information except to sign the new offer letter by close of business on Wednesday.

i was unhappy about this! can you tell???

so my first thought was- okay, this is bullshit. i still want to work for the LMS people, the LMS people still want me to work for them, there has to be a solve here. so i go to the guy in charge of that division, who also wants me to keep working there, and he says okay i'll have our lawyers look into it.

and then... he gets back to me sounding like a robot, "i am unable to discuss this further with you at this time", which is so obviously out of character for the guy that i can tell legal's thrown the book at him. i talk to legal myself- it's a dead end. they can't- they're unable to even talk about why they can't talk about it, because obviously this deal was engineered to prevent me from doing exactly what i'm trying to do here.

so i go at it from the other angle. president of the sold company, now a wholly-owned for-profit subsidiary of a nonprofit organization (is that even allowed???), i explain to him, hey, this is a mistake, i'm only here because my old boss really wanted me to be on her team, surely you can let me go continue doing my actual job?

nope.

so then i start playing hardball.

the salary they're offering me is, adjusted for inflation, less than the salary i was offered two years ago, which had come with the (entirely failed) non-promise that i'd be bumped up to a certain level very quickly after some formalities re: the employment structure. i explain, in detail, how upset i am with the entire state of affairs- and i threaten to walk, which i am allowed to do. i'm not required to sign their new contract- i'd need to go job-hunting, sure, but i have money in the bank, i can afford to do it, and i could definitely get a better deal somewhere else.

this is a tense situation! my old boss knew this team needed me- but they unceremoniously fired her while she was on vacation, so her opinion doesn't mean dirt to them apparently. it's unclear how vital i really am to this- they could maybe train up one of the other devs to handle the AWS stuff.

and on my side- if i walk, that's it. all that horrible messy code for the LMS stuff- i don't get two months to train someone else up and write documentation and do some housecleaning. i'm gone! my horrific dirty laundry (and hours and hours of regular maintenance work) gets handed off to some other dev who's totally unprepared for it, and that person inevitably puts a curse on my entire family line as retribution for me leaving them holding that intolerable bag. i don't actually want to walk, because then i end up the bad guy in the eyes of people i respect and care about.

(also i'd have to do a job hunt and that shit is so god damn annoying you have no idea you probably have some idea.)

so i tell the guy, look- i can do better. i'm basically starting over doing harder work at an unfamiliar company, and if i'm doing that anyway, why not do it for someone who'll pay me? if you don't give me X amount of money, i'm walking out, and now you don't have an infrastructure guy during the two-month window you have to migrate a shit-ton of infrastructure. i am a serious dude and you can't just fuck with me!

(and inside i'm like:

because oh god i am not a serious dude i am so easily fucked with what if i'm pushing my luck too hard)

and he lets me fuckin' stew. 5:00 on wednesday i need to have either signed a contract or not signed a contract, and he hedges and goes to talk with the higher-ups and makes no promises, and i have no idea whether it's because i scared him or if he's trying to work out how to replace me or what. all this negotiation has been eating my brain for the past couple days and it's coming down to the wire-

and then a couple hours before the deadline he gets back to me with a counteroffer. it's less than i was asking, because that's how negotiations work, but it is more than i was making when i was brought on, by a good 10k.

so now it's on to round two. i'm gonna stick around for this two-month period, make this transition work, clean up my mess and take care of things with my now ex-coworkers- and then if they haven't either proven their management is tolerable or given me a crystal-clear path to advancement, we're back to the standoff- except this time, they'll have a good idea of exactly what it is they stand to lose.

haaaaaaaaaaaaaaah. okay. okay. yeah. so that's dealt with for the time being. i can breathe now. we'll see how it goes. fuck.


Crypto Exchange API Integration: Simplifying and Enhancing Trading Efficiency

The cryptocurrency trading landscape is fast-paced, requiring seamless processes and real-time data access to ensure traders stay ahead of market movements. To meet these demands, Crypto Exchange APIs (Application Programming Interfaces) have emerged as indispensable tools for developers and businesses, streamlining trading processes and improving user experience.

APIs bridge the gap between users, trading platforms, and blockchain networks, enabling efficient operations like order execution, wallet integration, and market data retrieval. This blog dives into the importance of crypto exchange API integration, its benefits, and how businesses can leverage it to create feature-rich trading platforms.

What is a Crypto Exchange API?

A Crypto Exchange API is a software interface that enables seamless communication between cryptocurrency trading platforms and external applications. It provides developers with access to various functionalities, such as real-time price tracking, trade execution, and account management, allowing them to integrate these features into their platforms.

Types of Crypto Exchange APIs:

REST APIs: Used for simple, one-time data requests (e.g., fetching market data or placing a trade).

WebSocket APIs: Provide real-time data streaming for high-frequency trading and live updates.

FIX APIs (Financial Information Exchange): Designed for institutional-grade trading with high-speed data transfers.

Key Benefits of Crypto Exchange API Integration

1. Real-Time Market Data Access

APIs provide up-to-the-second updates on cryptocurrency prices, trading volumes, and order book depth, empowering traders to make informed decisions.

Use Case:

Developers can build dashboards that display live market trends and price movements.

2. Automated Trading

APIs enable algorithmic trading by allowing users to execute buy and sell orders based on predefined conditions.

Use Case:

A trading bot can automatically place orders when specific market criteria are met, eliminating the need for manual intervention.
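
As a rough illustration of "predefined conditions", the sketch below turns a price tick into an order decision. The `Order` type, the `decide_order` function, the symbol, and the thresholds are all hypothetical names chosen for this example; a real bot would read prices from an exchange feed and submit the order through that exchange's own API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Order:
    side: str        # "buy" or "sell"
    symbol: str
    quantity: float


def decide_order(symbol: str, price: float, buy_below: float,
                 sell_above: float, quantity: float) -> Optional[Order]:
    """Return an order when a predefined price condition is met, else None."""
    if price <= buy_below:
        return Order("buy", symbol, quantity)
    if price >= sell_above:
        return Order("sell", symbol, quantity)
    return None  # no condition met, so the bot does nothing


# Hypothetical price ticks standing in for a live market feed.
for tick in (64_000.0, 59_500.0, 71_200.0):
    decision = decide_order("BTC-USD", tick, buy_below=60_000.0,
                            sell_above=70_000.0, quantity=0.01)
    print(tick, decision)
```

Keeping the decision logic in a pure function like this makes the rule easy to test separately from any network code.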

3. Multi-Exchange Connectivity

Crypto APIs allow platforms to connect with multiple exchanges, aggregating liquidity and providing users with the best trading options.

Use Case:

Traders can access a broader range of cryptocurrencies and trading pairs without switching between platforms.
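
One way to picture the aggregation step is a function that compares quotes from several venues and picks the best price on each side. This is only a sketch: the exchange names and prices are made up, and `best_prices` is a hypothetical helper, not part of any exchange SDK.

```python
from typing import Dict, Tuple

Quote = Tuple[float, float]  # (best bid, best ask) reported by one exchange


def best_prices(quotes: Dict[str, Quote]) -> Dict[str, Tuple[str, float]]:
    """Pick the venue with the lowest ask (to buy) and the highest bid (to sell)."""
    if not quotes:
        raise ValueError("no quotes supplied")
    buy_venue = min(quotes, key=lambda name: quotes[name][1])
    sell_venue = max(quotes, key=lambda name: quotes[name][0])
    return {
        "buy": (buy_venue, quotes[buy_venue][1]),
        "sell": (sell_venue, quotes[sell_venue][0]),
    }


# Hypothetical quotes; in practice each would come from a separate exchange API.
quotes = {
    "exchange_a": (64_990.0, 65_010.0),
    "exchange_b": (65_000.0, 65_020.0),
    "exchange_c": (64_980.0, 65_005.0),
}
print(best_prices(quotes))
```

A production aggregator would also have to account for fees, order size, and stale quotes, which this sketch ignores.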

4. Enhanced User Experience

By integrating APIs, businesses can offer features like secure wallet connections, fast transaction processing, and detailed analytics, improving the overall user experience.

Use Case:

Users can track their portfolio performance in real-time and manage assets directly through the platform.

5. Increased Scalability

API integration allows trading platforms to handle a higher volume of users and transactions efficiently, ensuring smooth operations during peak trading hours.

Use Case:

Exchanges can scale seamlessly to accommodate growth in user demand.

Essential Features of Crypto Exchange API Integration

1. Trading Functionality

APIs must support core trading actions, such as placing market and limit orders, canceling trades, and retrieving order statuses.

2. Wallet Integration

Securely connect wallets for seamless deposits, withdrawals, and balance tracking.

3. Market Data Access

Provide real-time updates on cryptocurrency prices, trading volumes, and historical data for analysis.

4. Account Management

Allow users to manage their accounts, view transaction history, and set preferences through the API.

5. Security Features

Integrate encryption, two-factor authentication (2FA), and API keys to safeguard user data and funds.
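
To make the API-key point concrete: a common pattern is for the client to sign each private request with HMAC-SHA256 using its API secret, so the server can check that the request was not altered. The sketch below assumes that pattern; the parameter names and the `signature` field are illustrative, and each exchange documents its own signing rules.

```python
import hashlib
import hmac
from urllib.parse import urlencode


def sign_params(params: dict, api_secret: str) -> str:
    """Return the query string with an HMAC-SHA256 signature appended."""
    query = urlencode(params)
    digest = hmac.new(api_secret.encode(), query.encode(), hashlib.sha256).hexdigest()
    return f"{query}&signature={digest}"


def verify(signed_query: str, api_secret: str) -> bool:
    """Server-side check: recompute the signature and compare in constant time."""
    query, _, signature = signed_query.rpartition("&signature=")
    expected = hmac.new(api_secret.encode(), query.encode(), hashlib.sha256).hexdigest()
    return hmac.compare_digest(signature, expected)


# Hypothetical order parameters and secret, for illustration only.
signed = sign_params({"symbol": "BTCUSDT", "side": "BUY", "quantity": "0.01"},
                     api_secret="demo-secret")
print(verify(signed, "demo-secret"))   # signature matches
print(verify(signed, "wrong-secret"))  # signature does not match
```

The secret itself never travels with the request; only the derived signature does.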

Steps to Integrate Crypto Exchange APIs

1. Define Your Requirements

Determine the functionalities you need, such as trading, wallet integration, or market data retrieval.

2. Choose the Right API Provider

Select a provider that aligns with your platform’s requirements. Popular providers include:

Binance API: Known for real-time data and extensive trading options.

Coinbase API: Ideal for wallet integration and payment processing.

Kraken API: Offers advanced trading tools for institutional users.

3. Implement API Integration

Use REST APIs for basic functionalities like fetching market data.

Implement WebSocket APIs for real-time updates and faster trading processes.
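
For the REST side, a one-off market data call boils down to building an HTTP GET request and parsing the JSON that comes back. The sketch below uses only the Python standard library; the host, the `/v1/ticker` path, and the `last_price` field are hypothetical and do not describe any particular exchange.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request

BASE_URL = "https://api.example-exchange.test"  # hypothetical host


def build_ticker_request(symbol: str) -> Request:
    """Build (but do not send) a GET request for one symbol's ticker."""
    query = urlencode({"symbol": symbol})
    return Request(f"{BASE_URL}/v1/ticker?{query}",
                   headers={"Accept": "application/json"},
                   method="GET")


def parse_last_price(body: bytes) -> float:
    """Extract the last traded price from a JSON response body."""
    return float(json.loads(body)["last_price"])


request = build_ticker_request("BTC-USD")
print(request.get_method(), request.full_url)

# A made-up response body standing in for what the server might return.
sample_body = b'{"symbol": "BTC-USD", "last_price": "65000.50"}'
print(parse_last_price(sample_body))
```

Passing the request to `urllib.request.urlopen` would actually send it; streaming updates over WebSocket need a separate client library and are not shown here.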

4. Test and Optimize

Conduct thorough testing to ensure the API integration performs seamlessly under different scenarios, including high traffic.

5. Launch and Monitor

Deploy the integrated platform and monitor its performance to address any issues promptly.

Challenges in Crypto Exchange API Integration

1. Security Risks

APIs are vulnerable to breaches if not properly secured. Implement robust encryption, authentication, and monitoring tools to mitigate risks.

2. Latency Issues

High latency can disrupt real-time trading. Opt for APIs with low latency to ensure a smooth user experience.

3. Regulatory Compliance

Ensure the integration adheres to KYC (Know Your Customer) and AML (Anti-Money Laundering) regulations.

The Role of Crypto Exchange Platform Development Services

Partnering with a professional crypto exchange platform development service ensures your platform leverages the full potential of API integration.

What Development Services Offer:

Custom API Solutions: Tailored to your platform’s specific needs.

Enhanced Security: Implementing advanced security measures like API key management and encryption.

Real-Time Capabilities: Optimizing APIs for high-speed data transfers and trading.

Regulatory Compliance: Ensuring the platform meets global legal standards.

Scalability: Building infrastructure that grows with your user base and transaction volume.

Real-World Examples of Successful API Integration

1. Binance

Features: Offers REST and WebSocket APIs for real-time market data and trading.

Impact: Enables developers to build high-performance trading bots and analytics tools.

2. Coinbase

Features: Provides secure wallet management APIs and payment processing tools.

Impact: Streamlines crypto payments and wallet integration for businesses.

3. Kraken

Features: Advanced trading APIs for institutional and professional traders.

Impact: Supports multi-currency trading with low-latency data feeds.

Conclusion

Crypto exchange API integration is a game-changer for businesses looking to streamline trading processes and enhance user experience. From enabling real-time data access to automating trades and managing wallets, APIs unlock endless possibilities for innovation in cryptocurrency trading platforms.

By partnering with expert crypto exchange platform development services, you can ensure secure, scalable, and efficient API integration tailored to your platform’s needs. In the ever-evolving world of cryptocurrency, seamless API integration is not just an advantage—it’s a necessity for staying ahead of the competition.

Are you ready to take your crypto exchange platform to the next level?

#cryptocurrencyexchange#crypto exchange platform development company#crypto exchange development company#white label crypto exchange development#cryptocurrency exchange development service#cryptoexchange


What is Argo CD? And When Was Argo CD Established?

What Is Argo CD?

Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes.

In DevOps, Argo CD is a continuous delivery (CD) tool that has become popular for deploying applications to Kubernetes. It follows the GitOps deployment methodology.

When was Argo CD Established?

Argo CD was created at Intuit and made publicly available following Applatix’s 2018 acquisition by Intuit. The founding developers of Applatix, Hong Wang, Jesse Suen, and Alexander Matyushentsev, made the Argo project open-source in 2017.

Why Argo CD?

Application definitions, configurations, and environments should be declarative and version-controlled. Application deployment and lifecycle management should be automated, auditable, and easy to understand.

Getting Started

Quick Start

kubectl create namespace argocd

kubectl apply -n argocd -f https://raw.githubusercontent.com/argoproj/argo-cd/stable/manifests/install.yaml

Further user-oriented documentation is available for additional features. To upgrade an existing Argo CD installation, refer to the upgrade guide. Developer-oriented documentation is available for those interested in building third-party integrations.

How it works

Argo CD follows the GitOps pattern of using Git repositories as the source of truth for defining the desired application state. Kubernetes manifests can be specified in several ways:

Kustomize applications

Helm charts

Jsonnet files

Simple YAML/JSON manifest directory

Any custom configuration management tool that is set up as a plugin

Argo CD automates the deployment of the desired application states in the specified target environments. Application deployments can track updates to branches or tags, or be pinned to a specific version of manifests at a Git commit.
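
To show what "Git as the source of truth" looks like in practice, the sketch below builds an Argo CD `Application` resource as a Python dictionary and prints it as JSON, which `kubectl apply -f -` accepts just like YAML. The field names follow the `argoproj.io/v1alpha1` Application resource; the application name, repository URL, path, and namespace are placeholders, and `build_application` is a hypothetical helper written for this example.

```python
import json


def build_application(name: str, repo_url: str, path: str,
                      revision: str, namespace: str) -> dict:
    """Assemble an Argo CD Application manifest that tracks one Git path."""
    return {
        "apiVersion": "argoproj.io/v1alpha1",
        "kind": "Application",
        "metadata": {"name": name, "namespace": "argocd"},
        "spec": {
            "project": "default",
            "source": {
                "repoURL": repo_url,
                "targetRevision": revision,  # branch, tag, or commit SHA
                "path": path,
            },
            "destination": {
                "server": "https://kubernetes.default.svc",  # the local cluster
                "namespace": namespace,
            },
            # Automated sync: apply changes from Git without a manual step.
            "syncPolicy": {"automated": {"prune": True, "selfHeal": True}},
        },
    }


manifest = build_application(
    name="demo-app",                                  # placeholder
    repo_url="https://example.com/org/demo-app.git",  # placeholder
    path="deploy",
    revision="main",
    namespace="demo",
)
print(json.dumps(manifest, indent=2))
```

Setting `targetRevision` to a branch makes the application track that branch, while a commit SHA pins it to one exact version of the manifests.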

Architecture

Argo CD is implemented as a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state (as specified in the Git repository). A deployed application whose live state deviates from the target state is considered OutOfSync. Argo CD reports and visualizes the differences, and it can sync the live state back to the desired target state either automatically or manually. Any modifications made to the desired target state in the Git repository can be automatically applied and reflected in the specified target environments.
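
The comparison described above can be pictured with a toy example. This is not Argo CD's actual diff logic, which works on full Kubernetes resources; it is a minimal sketch over flat dictionaries, with hypothetical function names, that reports which fields differ and derives a status from that. `Synced` and `OutOfSync` are the status names Argo CD uses.

```python
def diff_state(desired: dict, live: dict) -> dict:
    """Return {field: (desired value, live value)} for every field that differs."""
    drift = {}
    for key in sorted(desired.keys() | live.keys()):
        if desired.get(key) != live.get(key):
            drift[key] = (desired.get(key), live.get(key))
    return drift


def sync_status(desired: dict, live: dict) -> str:
    """Report 'Synced' when live state matches the target state, else 'OutOfSync'."""
    return "OutOfSync" if diff_state(desired, live) else "Synced"


# Hypothetical target state (from Git) and live state (from the cluster).
desired = {"image": "demo:1.4.0", "replicas": 3}
live = {"image": "demo:1.3.2", "replicas": 3}

print(sync_status(desired, live))
print(diff_state(desired, live))
```

A sync operation would then apply the desired values so that the next comparison reports `Synced`.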

Components

API Server

The API server is a gRPC/REST server that exposes the API consumed by the Web UI, CLI, and CI/CD systems. Its responsibilities include the following:

Application management and status reporting

Invoking application operations (such as sync, rollback, and user-defined actions)

Repository and cluster credential management (stored as Kubernetes secrets)

RBAC enforcement

Authentication and auth delegation to external identity providers

Git webhook event listener/forwarder

Repository Server

The repository server is an internal service that maintains a local cache of the Git repository holding the application manifests. It is responsible for generating and returning the Kubernetes manifests when given the following inputs:

URL of the repository

Revision (tag, branch, commit)

Path of the application

Template-specific configurations: helm values.yaml, parameters

Application Controller

The application controller is a Kubernetes controller that continuously monitors running applications and compares their current, live state against the desired target state as specified in the repository. When it detects an OutOfSync application state, it can optionally take corrective action. It is also responsible for invoking any user-defined hooks for lifecycle events (PreSync, Sync, and PostSync).

Features

Applications are automatically deployed to designated target environments.

Multiple configuration management/templating tools (Kustomize, Helm, Jsonnet, and plain-YAML) are supported.

Ability to manage and deploy to multiple clusters

SSO integration (OIDC, OAuth2, LDAP, SAML 2.0, Microsoft, LinkedIn, GitHub, GitLab)

Multi-tenancy and RBAC policies for authorization

Rollback/roll-anywhere to any application configuration committed in the Git repository

Health status analysis of application resources

Automated configuration drift detection and visualization

Automated or manual syncing of applications to their desired state

Web UI that provides a real-time view of application activity

CLI for automation and CI integration

Webhook integration (GitHub, BitBucket, GitLab)

Access tokens for automation

PreSync, Sync, and PostSync hooks to support complex application rollouts (such as blue/green and canary upgrades)

Audit trails for application events and API calls

Prometheus metrics

Parameter overrides for overriding Helm parameters in Git

Read more on Govindhtech.com

#ArgoCD#CD#GitOps#API#Kubernetes#Git#Argoproject#News#Technews#Technology#Technologynews#Technologytrends#govindhtech


Full Stack Testing vs. Full Stack Development: What’s the Difference?

In today’s fast-evolving tech world, buzzwords like Full Stack Development and Full Stack Testing have gained immense popularity. Both roles are vital in the software lifecycle, but they serve very different purposes. Whether you’re a beginner exploring your career options or a professional looking to expand your skills, understanding the differences between Full Stack Testing and Full Stack Development is crucial. Let’s dive into what makes these two roles unique!

What Is Full Stack Development?

Full Stack Development refers to the ability to build an entire software application – from the user interface to the backend logic – using a wide range of tools and technologies. A Full Stack Developer is proficient in both front-end (user-facing) and back-end (server-side) development.

Key Responsibilities of a Full Stack Developer:

Front-End Development: Building the user interface using tools like HTML, CSS, JavaScript, React, or Angular.

Back-End Development: Creating server-side logic using languages like Node.js, Python, Java, or PHP.

Database Management: Handling databases such as MySQL, MongoDB, or PostgreSQL.

API Integration: Connecting applications through RESTful or GraphQL APIs.

Version Control: Using tools like Git for collaborative development.

Skills Required for Full Stack Development:

Proficiency in programming languages (JavaScript, Python, Java, etc.)

Knowledge of web frameworks (React, Django, etc.)

Experience with databases and cloud platforms

Understanding of DevOps tools

In short, a Full Stack Developer handles everything from designing the UI to writing server-side code, ensuring the software runs smoothly.

What Is Full Stack Testing?

Full Stack Testing is all about ensuring quality at every stage of the software development lifecycle. A Full Stack Tester is responsible for testing applications across multiple layers – from front-end UI testing to back-end database validation – ensuring a seamless user experience. They blend manual and automation testing skills to detect issues early and prevent software failures.

Key Responsibilities of a Full Stack Tester:

UI Testing: Ensuring the application looks and behaves correctly on the front end.

API Testing: Validating data flow and communication between services.

Database Testing: Verifying data integrity and backend operations.

Performance Testing: Ensuring the application performs well under load using tools like JMeter.

Automation Testing: Automating repetitive tests with tools like Selenium or Cypress.

Security Testing: Identifying vulnerabilities to prevent cyber-attacks.
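
As an illustration of the API testing item above, the sketch below checks a response's status code and the shape of its JSON body. Everything here is hypothetical: `get_user` is a stand-in for the service under test, and the `id` and `email` fields are an assumed contract. In a real suite the response would come from an HTTP client or a tool like Postman.

```python
import json
from dataclasses import dataclass
from typing import List


@dataclass
class FakeResponse:
    status_code: int
    body: str


def get_user(user_id: int) -> FakeResponse:
    """Stand-in for the service under test; a real test would call the API."""
    if user_id == 1:
        return FakeResponse(200, json.dumps({"id": 1, "email": "a@example.com"}))
    return FakeResponse(404, json.dumps({"error": "not found"}))


def check_user_contract(response: FakeResponse) -> List[str]:
    """Return a list of contract violations; an empty list means the check passed."""
    if response.status_code != 200:
        return [f"expected status 200, got {response.status_code}"]
    payload = json.loads(response.body)
    problems = []
    for field, expected_type in (("id", int), ("email", str)):
        if not isinstance(payload.get(field), expected_type):
            problems.append(f"field '{field}' missing or not {expected_type.__name__}")
    return problems


print(check_user_contract(get_user(1)))
print(check_user_contract(get_user(2)))
```

Returning a list of problems instead of failing on the first one gives a fuller picture when several fields are wrong at once.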

Skills Required for Full Stack Testing:

Knowledge of testing tools like Selenium, Postman, JMeter, or TOSCA

Proficiency in both manual and automation testing

Understanding of test frameworks like TestNG or Cucumber

Familiarity with Agile and DevOps practices

Basic knowledge of programming for writing test scripts

A Full Stack Tester plays a critical role in identifying bugs early in the development process and ensuring the software functions flawlessly.

Which Career Path Should You Choose?

The choice between Full Stack Development and Full Stack Testing depends on your interests and strengths:

Choose Full Stack Development if you love coding, creating interfaces, and building software solutions from scratch. This role is ideal for those who enjoy developing creative products and working with both front-end and back-end technologies.

Choose Full Stack Testing if you have a keen eye for detail and enjoy problem-solving by finding bugs and ensuring software quality. If you love automation, performance testing, and working with multiple testing tools, Full Stack Testing is the right path.

Why Both Roles Are Essential:

Both Full Stack Developers and Full Stack Testers are integral to software development. While developers focus on creating functional features, testers ensure that everything runs smoothly and meets user expectations. In an Agile or DevOps environment, these roles often overlap, with testers and developers working closely to deliver high-quality software in shorter cycles.

Final Thoughts:

Whether you opt for Full Stack Testing or Full Stack Development, both fields offer exciting opportunities with tremendous growth potential. With software becoming increasingly complex, the demand for skilled developers and testers is higher than ever.

At TestoMeter Pvt. Ltd., we provide comprehensive training in both Full Stack Development and Full Stack Testing to help you build a future-proof career. Whether you want to build software or ensure its quality, we’ve got the perfect course for you.

Ready to take the next step? Explore our Full Stack courses today and start your journey toward a successful IT career!


For more details:

Interested in kick-starting your career as a software developer or software tester? Contact us today or visit our website for course details, success stories, and more!

🌐 Visit: https://www.testometer.co.in/


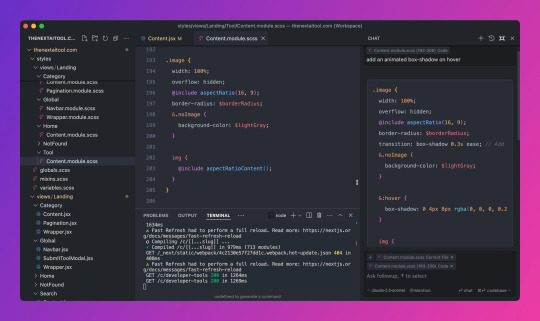

How Cursor is Transforming the Developer's Workflow

For years, developers have relied on multiple tools and websites to get the job done. The coding process was often a back-and-forth shuffle between their editor, Google, Stack Overflow, and, more recently, AI tools like ChatGPT or Claude. Need to figure out how to implement a new feature? Hop over to Google. Stuck on a bug? Search Stack Overflow for a solution. Want to refactor some messy code? Paste the code into ChatGPT, copy the response, and manually bring it back to your editor. It was an effective process, sure, but it felt disconnected and clunky. This was just part of the daily grind—until Cursor entered the scene.

Cursor changes the game by integrating AI right into your coding environment. If you’re familiar with VS Code, Cursor feels like a natural extension of your workflow. You can bring your favorite extensions, themes, and keybindings over with a single click, so there’s no learning curve to slow you down. But what truly sets Cursor apart is its seamless integration with AI, allowing you to generate code, refactor, and get smart suggestions without ever leaving the editor. The days of copying and pasting between ChatGPT and your codebase are over. Need a new function? Just describe what you want right in the text editor, and Cursor’s AI takes care of the rest, directly in your workspace.

Before Cursor, developers had to work in silos, jumping between platforms to get assistance. Now, with AI embedded in the code editor, it’s all there at your fingertips. Whether it’s reviewing documentation, getting code suggestions, or automatically updating an outdated method, Cursor brings everything together in one place. No more wasting time switching tabs or manually copying over solutions. It’s like having AI superpowers built into your editor, boosting productivity and cutting out unnecessary friction.

The real icing on the cake? Cursor’s commitment to privacy. Your code is safe, and you can even use your own API key to keep everything under control. It’s no surprise that developers are calling Cursor a game changer. It’s not just another tool in your stack—it’s a workflow revolution. Cursor takes what used to be a disjointed process and turns it into a smooth, efficient, and AI-driven experience that keeps you focused on what really matters: writing great code. Check out for more details


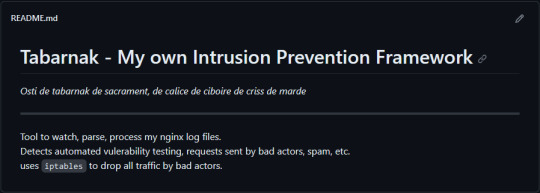

(this is a small story of how I came to write my own intrusion detection/prevention framework and why I'm really happy with that decision, don't mind me rambling)

Preface

About two weeks ago I was faced with a pretty annoying problem. While I was going home by train, I noticed that my server at home had been running hot and had slowed down a lot. This prompted me to check the logs of nginx, the only service that is indirectly available to the public (more on that later), which made me realize that - due to poor access control - someone had been sending hundreds of thousands of huge DNS requests to my server, most likely testing for vulnerabilities. I added an iptables rule to drop all traffic from that source and redirected the remaining traffic to a backup NextDNS instance that I had set up previously with the same overrides and custom records as my own DNS, so that the service would not have any downtime and my server could cool down. I stopped the DNS service on my server at home and then used the remaining train ride to think. How would I stop this from happening in the future? I pondered multiple possible solutions: using fail2ban, adding better access control, or just sticking with the NextDNS instance.

I ended up going with a completely different option: building a solution myself that fits my server perfectly.

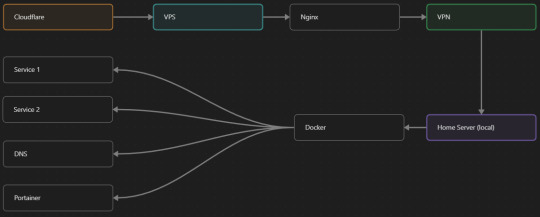

My Server Structure

So, I should probably explain how I host and why only nginx is public despite me hosting a bunch of services under the hood.

I have a public-facing VPS that only allows traffic to nginx. That traffic then gets forwarded through a VPN connection to my home server, so that I don't need any public-facing ports on the home server itself. The VPS really only acts as the public interface for the home server, with access control and logging sprinkled throughout my configs for more layers of security. Some services can only be reached through the VPN or a local connection, so not everything is actually forwarded - only what I need or want to be.

I actually do have fail2ban installed on both my VPS and home server, so why make another piece of software?

Tabarnak - Succeeding at Banning

I had a few requirements for what I wanted to do:

Only allow HTTP(S) traffic through Cloudflare

Only allow DNS traffic from given sources (location filtering, explicit white-/blacklisting)

Webhook support for logging

Should be interactive (e.g. POST /api/ban/{IP})

Detect automated vulnerability scanning

Integration with the AbuseIPDB (for checking and reporting)

As I started working on this, I realized that this would soon become more complex than I had thought at first.

Webhooks for logging

This was probably the easiest requirement to check off my list: I just wrote my own log() function that calls a webhook. Sadly, the rest wouldn't be as easy.

Allowing only Cloudflare traffic

This was still doable. I only needed to add a filter in my nginx config for my domain that allows Cloudflare IP ranges and denies the rest. I ended up doing something slightly different: I added a new default nginx config that just returns a 404 on every route and logs access to a different file, so that I can detect connection attempts made without Cloudflare and handle them in Tabarnak myself.

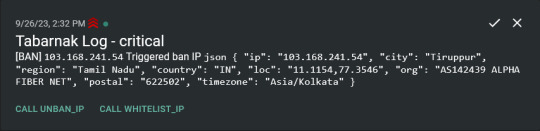

Integration with AbuseIPDB

This was also not yet the hard part. I just call AbuseIPDB with the parsed IP, and if the abuse confidence score is within a configured threshold, the IP gets flagged. When that happens, I receive a notification that asks me whether to whitelist or ban the IP. I can also do nothing and let everything proceed as it normally would. If the IP gets flagged a configured number of times, it is banned unless it has been whitelisted by then.
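The flag-then-ban logic described here can be sketched roughly as follows. This is not the actual Tabarnak code: the class name `FlagTracker`, the parameters `score_threshold` and `max_flags`, and their default values are all made up for illustration, and the sketch assumes that "within a configured threshold" means a score at or above the threshold. The AbuseIPDB lookup itself is left out; the score is simply passed in.

```python
from collections import Counter


class FlagTracker:
    """Counts flags per IP and decides when an IP should be banned."""

    def __init__(self, score_threshold=50, max_flags=3):
        # Both values are illustrative defaults, not the author's settings.
        self.score_threshold = score_threshold
        self.max_flags = max_flags
        self.flags = Counter()
        self.whitelist = set()

    def observe(self, ip, abuse_score):
        """Return "ignore", "flag" or "ban" for one lookup result."""
        if ip in self.whitelist or abuse_score < self.score_threshold:
            return "ignore"
        self.flags[ip] += 1
        if self.flags[ip] >= self.max_flags:
            return "ban"
        return "flag"
```

A "flag" result would be the point where the notification is sent, and adding the IP to `whitelist` in response makes every later lookup for it return "ignore".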

Location filtering + Whitelist + Blacklist

This is where it starts to get interesting. I had to know where a request comes from, because all the real people who would actually connect to the DNS are in similar locations. I didn't want to outright ban everyone else, as there could be valid requests from other sources. So for every new IP that triggers a callback (which only happens after a certain number of either flags or requests), I need to get the location. I do this by calling the ipinfo API and checking the supplied location. To avoid sending too many requests, I cache results (even though ipinfo should never be called twice for the same IP anyway) and save them to a database. I made my own class that subclasses collections.UserDict. When accessed, it tries to find the entry in memory; if it can't, it searches the DB and returns the result. This works for setting, deleting, adding and checking for records. Flags, AbuseIPDB results, whitelist entries and blacklist entries also get stored in the DB, so the state persists even when I restart.
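A minimal sketch of such a dictionary, assuming SQLite as the database and JSON-serializable values (the post names neither). The class name `PersistentCache` and the table layout are hypothetical.

```python
import json
import sqlite3
from collections import UserDict


class PersistentCache(UserDict):
    """Dict that keeps entries in memory and mirrors them to SQLite."""

    def __init__(self, path=":memory:"):
        super().__init__()
        self.db = sqlite3.connect(path)
        self.db.execute(
            "CREATE TABLE IF NOT EXISTS cache (key TEXT PRIMARY KEY, value TEXT)"
        )

    def __missing__(self, key):
        # Called by UserDict.__getitem__ when the key is not in memory.
        row = self.db.execute(
            "SELECT value FROM cache WHERE key = ?", (key,)
        ).fetchone()
        if row is None:
            raise KeyError(key)
        value = json.loads(row[0])
        self.data[key] = value  # warm the in-memory copy
        return value

    def __contains__(self, key):
        if key in self.data:
            return True
        try:
            self[key]  # falls back to the database via __missing__
        except KeyError:
            return False
        return True

    def __setitem__(self, key, value):
        self.data[key] = value
        self.db.execute(
            "INSERT OR REPLACE INTO cache (key, value) VALUES (?, ?)",
            (key, json.dumps(value)),
        )
        self.db.commit()

    def __delitem__(self, key):
        # Unlike a plain dict, deleting an unknown key is silently ignored.
        self.data.pop(key, None)
        self.db.execute("DELETE FROM cache WHERE key = ?", (key,))
        self.db.commit()
```

With a file path instead of `":memory:"`, a new `PersistentCache` opened after a restart starts with an empty in-memory dict and pulls each entry back from the database the first time it is read.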

Detection of automated vulnerability scanning

For this, I went through my old nginx logs, looking for the smallest number of paths I need to block to catch the largest number of automated vulnerability scan requests. So I did some data science magic and wrote a route blacklist. It doesn't end there. Since I know the routes of valid requests that I would be receiving (which are all mentioned in my nginx configs), I can just parse those configs and match the requested route against them. To achieve this I wrote some really simple regular expressions to extract all location blocks from an nginx config, along with whether each location is absolute (preceded by an =) or relative. Once I have the locations, I can test the requested route against the valid routes and find out whether the request was made to a valid URL (I can't just look for 404 return codes here, because some valid pages return a 404 on purpose). I also parse the request method from the logs and match it against the standard HTTP request methods (which are the only methods that services on my server use). That way I can easily catch requests like:

XX.YYY.ZZZ.AA - - [25/Sep/2023:14:52:43 +0200] "145.ll|'|'|SGFjS2VkX0Q0OTkwNjI3|'|'|WIN-JNAPIER0859|'|'|JNapier|'|'|19-02-01|'|'||'|'|Win 7 Professional SP1 x64|'|'|No|'|'|0.7d|'|'|..|'|'|AA==|'|'|112.inf|'|'|SGFjS2VkDQoxOTIuMTY4LjkyLjIyMjo1NTUyDQpEZXNrdG9wDQpjbGllbnRhLmV4ZQ0KRmFsc2UNCkZhbHNlDQpUcnVlDQpGYWxzZQ==12.act|'|'|AA==" 400 150 "-" "-"
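The route and method check could look roughly like the sketch below. The regular expression, the function names `parse_locations` and `is_valid_request`, and the decision to skip regex locations are assumptions made for illustration; the actual expressions are not shown in this post. A junk request like the one above fails the method check straight away.

```python
import re

# Matches "location [modifier] path {" lines; the modifier is optional.
LOCATION_RE = re.compile(
    r"^\s*location\s+(?:(=|~\*?|\^~)\s+)?(\S+)\s*\{", re.MULTILINE
)

STANDARD_METHODS = {
    "GET", "HEAD", "POST", "PUT", "DELETE",
    "CONNECT", "OPTIONS", "TRACE", "PATCH",
}


def parse_locations(config_text):
    """Return (path, is_exact) pairs for exact and prefix locations."""
    locations = []
    for modifier, path in LOCATION_RE.findall(config_text):
        if modifier in ("~", "~*"):
            continue  # regex locations are skipped in this sketch
        locations.append((path, modifier == "="))
    return locations


def is_valid_request(method, route, locations):
    """True if the method is standard and the route matches a known location."""
    if method not in STANDARD_METHODS:
        return False
    path = route.split("?", 1)[0]  # ignore the query string
    for location, is_exact in locations:
        if is_exact and path == location:
            return True
        if not is_exact and path.startswith(location):
            return True
    return False
```

Note that a catch-all `location / { ... }` block would make every route count as valid in this sketch, so a real setup would need to treat it separately.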

I probably overcomplicated this - by a lot - but I can't go back in time to change what I did.

Interactivity

As I showed and mentioned earlier, I can manually white-/blacklist an IP. This forced me to add threads to my previously single-threaded program. Since I was too stubborn to use websockets (I have a distaste for websockets), I opted for probably the worst option I could've taken. It works like this: I have a main thread, which does all the log parsing, processing and handling, and a side thread, which watches a FIFO file that is created on startup. I can append commands to the FIFO file, and each command is mapped to the function it is supposed to call. When the FIFO reader detects a new line, it looks through the map, gets the function and executes it on the supplied IP. Doing all of this manually would be way too tedious, so I made an API endpoint on my home server that appends the commands to the file on the VPS. That also means that I had to secure that API endpoint so that I couldn't just be spammed with random requests. Now that I could interact with Tabarnak through an API, I needed to make this user friendly - even I don't like to curl and sign my requests manually. So I integrated logging with my self-hosted instance of https://ntfy.sh and added action buttons that send the request for me. All of this just because I refused to use sockets.
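The FIFO reader and the command map might be sketched like this. The command names, the `"<command> <ip>"` line format and the in-memory sets are placeholders chosen for illustration; the real command set and storage are not shown in this post. `os.mkfifo` only exists on Unix-like systems.

```python
import os
import threading

banned, whitelisted = set(), set()

# Maps command words to the functions they trigger (names are illustrative).
COMMANDS = {
    "ban": banned.add,
    "unban": banned.discard,
    "whitelist": whitelisted.add,
}


def handle_line(line):
    """Parse "<command> <ip>" and run the mapped function; return success."""
    parts = line.split()
    if len(parts) != 2 or parts[0] not in COMMANDS:
        return False
    COMMANDS[parts[0]](parts[1])
    return True


def watch_fifo(path):
    """Block on the FIFO and dispatch every line written to it (Unix only)."""
    if not os.path.exists(path):
        os.mkfifo(path)
    while True:
        # open() blocks until a writer connects; EOF means the writer closed.
        with open(path) as fifo:
            for line in fifo:
                handle_line(line)


def start_watcher(path):
    """Run the FIFO reader in a side thread next to the main log parser."""
    thread = threading.Thread(target=watch_fifo, args=(path,), daemon=True)
    thread.start()
    return thread
```

With the watcher running, something like `echo "ban 203.0.113.7" > /path/to/fifo` from a shell would reach `handle_line`, which is also what an API endpoint appending to the file would end up triggering.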

First successes and why I'm happy about this

After not too long, the bans started to happen. The traffic to my server decreased and I could finally breathe again. I may have overcomplicated this, but I don't mind. Writing something new and learning more about log parsing and processing was a really fun experience. Tabarnak probably won't last forever, and I could replace it with solutions that are way easier to deploy and way more general. But what matters is that I liked doing it. It was a really fun project - which is why I'm writing this - and I'm glad that I ended up doing it. Of course I could have just used fail2ban, but then I never would've been able to write all of the extras that I ended up making (I don't want to take the explanation ad absurdum, so just imagine that I added cool stuff), and I never would've learned what I did.

So whenever you are faced with a dumb problem and could write something yourself, I think you should at least try. This was a really fun experience and it might be for you as well.

Post Scriptum

First of all, apologies for the English - I'm not a native speaker so I'm sorry if some parts were incorrect or anything like that. Secondly, I'm sure that there are simpler ways to accomplish what I did here, however this was more about the experience of creating something myself rather than using some pre-made tool that does everything I want to (maybe even better?). Third, if you actually read until here, thanks for reading - hope it wasn't too boring - have a nice day :)


My Favorite Full Stack Tools and Technologies: Insights from a Developer

It was a seemingly ordinary morning when I first realized the true magic of full stack development. As I sipped my coffee, I stumbled upon a statistic that left me astounded: 97% of websites are built by full stack developers. That moment marked the beginning of my journey into the dynamic world of web development, where every line of code felt like a brushstroke on the canvas of the internet.

In this blog, I invite you to join me on a fascinating journey through the realm of full stack development. As a seasoned developer, I’ll share my favorite tools and technologies that have not only streamlined my workflow but also brought my creative ideas to life.

The Full Stack Developer’s Toolkit

Before we dive into the toolbox, let’s clarify what a full stack developer truly is. A full stack developer is someone who possesses the skills to work on both the front-end and back-end of web applications, bridging the gap between design and server functionality.

Tools and technologies are the lifeblood of a developer’s daily grind. They are the digital assistants that help us craft interactive websites, streamline processes, and solve complex problems.

Front-End Favorites

As any developer will tell you, HTML and CSS are the foundation of front-end development. HTML structures content, while CSS styles it. These languages, like the alphabet of the web, provide the basis for creating visually appealing and user-friendly interfaces.

JavaScript and Frameworks: JavaScript, often hailed as the “language of the web,” is my go-to for interactivity. The versatility of JavaScript and its ecosystem of libraries and frameworks, such as React and Vue.js, has been a game-changer in creating responsive and dynamic web applications.

Back-End Essentials

The back-end is where the magic happens behind the scenes. I’ve found server-side languages like Python and Node.js to be my trusted companions. They empower me to build robust server applications, handle data, and manage server resources effectively.

Databases are the vaults where we store the treasure trove of data. My preference leans toward relational databases like MySQL and PostgreSQL, as well as NoSQL databases like MongoDB. The choice depends on the project’s requirements.

Development Environments

The right code editor can significantly boost productivity. Personally, I’ve grown fond of Visual Studio Code for its flexibility, extensive extensions, and seamless integration with various languages and frameworks.

Git is the hero of collaborative development. With Git and platforms like GitHub, tracking changes, collaborating with teams, and rolling back to previous versions have become smooth sailing.

Productivity and Automation

Automation is the secret sauce in a developer’s recipe for efficiency. Build tools like Webpack and task runners like Gulp automate repetitive tasks, optimize code, and enhance project organization.

Testing is the compass that keeps us on the right path. I rely on tools like Jest and Chrome DevTools for testing and debugging. These tools help uncover issues early in development and ensure a smooth user experience.

Frameworks and Libraries

Front-end frameworks like React and Angular have revolutionized web development. Their component-based architecture and powerful state management make building complex user interfaces a breeze.

Back-end frameworks, such as Express.js for Node.js and Django for Python, are my go-to choices. They provide a structured foundation for creating RESTful APIs and handling server-side logic efficiently.

Security and Performance

The internet can be a treacherous place, which is why security is paramount. Tools like OWASP ZAP and security best practices help fortify web applications against vulnerabilities and cyber threats.

Page load speed is critical for user satisfaction. Tools and techniques like Lighthouse and performance audits ensure that websites are optimized for quick loading and smooth navigation.

Project Management and Collaboration

Collaboration and organization are keys to successful projects. Tools like Trello, JIRA, and Asana help manage tasks, track progress, and foster team collaboration.

Clear communication is the glue that holds development teams together. Platforms like Slack and Microsoft Teams facilitate real-time discussions, file sharing, and quick problem-solving.

Personal Experiences and Insights

It’s one thing to appreciate these tools in theory, but it’s their application in real projects that truly showcases their worth. I’ve witnessed how this toolkit has brought complex web applications to life, from e-commerce platforms to data-driven dashboards.

The journey hasn’t been without its challenges. Whether it’s tackling tricky bugs or optimizing for mobile performance, my favorite tools have always been my partners in overcoming obstacles.

Continuous Learning and Adaptation

Web development is a constantly evolving field. New tools, languages, and frameworks emerge regularly. As developers, we must embrace the ever-changing landscape and be open to learning new technologies.

Fortunately, the web development community is incredibly supportive. Platforms like Stack Overflow, GitHub, and developer forums offer a wealth of resources for learning, troubleshooting, and staying updated. The ACTE Institute offers numerous Full stack developer courses, bootcamps, and communities that can provide you with the necessary resources and support to succeed in this field. Best of luck on your exciting journey!

In this blog, we’ve embarked on a journey through the world of full stack development, exploring the tools and technologies that have become my trusted companions. From HTML and CSS to JavaScript frameworks, server-side languages, and an array of productivity tools, these elements have shaped my career.

As a full stack developer, I’ve discovered that the right tools and technologies can turn challenges into opportunities and transform creative ideas into functional websites and applications. The world of web development continues to evolve, and I eagerly anticipate the exciting innovations and discoveries that lie ahead. My hope is that this exploration of my favorite tools and technologies inspires fellow developers on their own journeys and fuels their passion for the ever-evolving world of web development.


Laravel Integration with ChatGPT: A Disastrous Misstep in Development?

From the high-tech heavens to the innovation arena, devs embark on daring odysseys to shatter limits and redefine possibilities!

Just like Tony Stark, the genius behind Iron Man, they strive to forge mighty tools that’ll reshape our tech interactions forever.

Enter the audacious fusion of Laravel, the PHP web framework sensation, and ChatGPT, the brainchild of OpenAI, a language model so sophisticated it’ll blow your mind!

But hold on, what seemed like a match made in coding heaven soon revealed a twist — disaster, you say? Think again!

The web app and website overlords ain’t got no choice but to wield the mighty ChatGPT API to claim victory in the fierce battleground of competition and serve top-notch experiences to their users.

So, brace yourselves to uncover the secrets of Laravel and ChatGPT API integration. But before we dive in, let’s shed some light on what this magical integration is and why it’s a godsend for both the users and the stakeholders. Let’s roll!

How can integrating ChatGPT benefit a Laravel project?

Listen up, developers! Embrace the mighty fusion of ChatGPT and Laravel, and watch as your project ascends to new heights of greatness!

Picture this: Conversational interfaces and genius chatbots that serve up top-notch customer support, effortlessly tackling those pesky queries and leaving users grinning with satisfaction. Oh yeah, we’re talking next-level interactions!

But hold on, there’s more! Prepare to be blown away by the AI chatbots that churn out data-driven dynamism and tailor-made responses, catering to user preferences like nobody’s business. It’s like magic, but better!

When you plug Laravel into the almighty ChatGPT API, the result? Pure genius! Your applications will become supercharged powerhouses of intelligence, interactivity, and premium content. Brace yourself for the seamless and exhilarating user experience that’ll leave your competition shaking in their boots.

So what are you waiting for? Integrate ChatGPT with your Laravel project and unleash the killer combination that’ll set you apart from the rest. Revolutionize your UX, skyrocket your functionalities, and conquer the coding realm like never before!

How to exactly integrate Laravel with ChatGPT? Keep reading here: https://bit.ly/478wten 🚀


What is Solr – Comparing Apache Solr vs. Elasticsearch

In the world of search engines and data retrieval systems, Apache Solr and Elasticsearch are two prominent contenders, each with its strengths and unique capabilities. These open-source, distributed search platforms play a crucial role in empowering organizations to harness the power of big data and deliver relevant search results efficiently. In this blog, we will delve into the fundamentals of Solr and Elasticsearch, highlighting their key features and comparing their functionalities. Whether you're a developer, data analyst, or IT professional, understanding the differences between Solr and Elasticsearch will help you make informed decisions to meet your specific search and data management needs.

Overview of Apache Solr

Apache Solr is a search platform built on top of the Apache Lucene library, known for its robust indexing and full-text search capabilities. It is written in Java and designed to handle large-scale search and data retrieval tasks. Solr follows a RESTful API approach, making it easy to integrate with different programming languages and frameworks. It offers a rich set of features, including faceted search, hit highlighting, spell checking, and geospatial search, making it a versatile solution for various use cases.

Overview of Elasticsearch

Elasticsearch, also based on Apache Lucene, is a distributed search engine that stands out for its real-time data indexing and analytics capabilities. It is known for its scalability and speed, making it an ideal choice for applications that require near-instantaneous search results. Elasticsearch provides a simple RESTful API, enabling developers to perform complex searches effortlessly. Moreover, it offers support for data visualization through its integration with Kibana, making it a popular choice for log analysis, application monitoring, and other data-driven use cases.

Comparing Solr and Elasticsearch

Data Handling and Indexing

Both Solr and Elasticsearch are proficient at handling large volumes of data and offer excellent indexing capabilities. Solr accepts documents in several formats, including XML, JSON, and CSV, while Elasticsearch relies on JSON, which is generally considered more human-readable and easier to work with. Elasticsearch's dynamic mapping feature allows it to automatically infer data types during indexing, streamlining the process further.

Querying and Searching

Both platforms support complex search queries, but Elasticsearch is often regarded as more developer-friendly due to its clean and straightforward API. Elasticsearch's support for nested queries and aggregations simplifies the process of retrieving and analyzing data. On the other hand, Solr provides a range of query parsers, allowing developers to choose between traditional and advanced syntax options based on their preference and familiarity.

Scalability and Performance

Elasticsearch is designed with scalability in mind from the ground up, making it relatively easier to scale horizontally by adding more nodes to the cluster. It excels in real-time search and analytics scenarios, making it a top choice for applications with dynamic data streams. Solr, while also scalable, may require more effort for horizontal scaling compared to Elasticsearch.

Community and Ecosystem

Both Solr and Elasticsearch boast active and vibrant open-source communities. Solr has been around longer and, therefore, has a more extensive user base and established ecosystem. Elasticsearch, however, has gained significant momentum over the years, supported by the Elastic Stack, which includes Kibana for data visualization and Beats for data shipping.

Document-Based vs. Schema-Free

Solr follows a document-based approach in which data is organized into fields, traditionally defined up front in a schema (a schemaless mode is also available). While this provides better control over data, it may become restrictive when dealing with dynamic or constantly evolving data structures. Elasticsearch is often described as schema-free: it still keeps a mapping for every index, but dynamic mapping lets it add fields as new documents arrive, which makes it more suitable for projects with varying data structures.

Conclusion

In summary, Apache Solr and Elasticsearch are both powerful search platforms, each excelling in specific scenarios. Solr's robustness and established ecosystem make it a reliable choice for traditional search applications, while Elasticsearch's real-time capabilities and seamless integration with the Elastic Stack are perfect for modern data-driven projects. Choosing between the two depends on your specific requirements, data complexity, and preferred development style. Regardless of your decision, both Solr and Elasticsearch can supercharge your search and analytics endeavors, bringing efficiency and relevance to your data retrieval processes.

Whether you opt for Solr, Elasticsearch, or a combination of both, the future of search and data exploration remains bright, with technology continually evolving to meet the needs of next-generation applications.


What is the future of an SAP Fiori/UI5 developer?

The future of an SAP Fiori/UI5 developer is promising and continues to evolve with the growing demand for intuitive, user-friendly enterprise applications. As businesses increasingly move toward SAP S/4HANA and cloud-based solutions, the need for modern UI/UX experiences through SAP Fiori and SAPUI5 is rising rapidly. Organizations are looking to improve productivity, reduce training costs, and increase user satisfaction by replacing traditional SAP GUI interfaces with Fiori-based applications.

Moreover, SAP’s strategic focus on Fiori as the default user experience across all its platforms ensures long-term relevance. Developers skilled in SAPUI5, OData, REST APIs, and SAP BTP (Business Technology Platform) are in high demand. Integration with other technologies like SAP Build, CAP, and Fiori Elements further enhances opportunities.

Additionally, with the shift towards full-stack development, Fiori/UI5 developers who understand both frontend and backend (ABAP, Node.js, CAP) will be even more valuable. Freelance and remote work opportunities are also growing in this space, offering flexibility and global exposure.

If you're looking to build a solid career as an SAP Fiori/UI5 developer, Anubhav Trainings offers the most practical, real-time focused courses, guided by Anubhav Oberoy—one of the industry's top SAP trainers.

His hands-on approach and project-based learning make complex concepts simple and job-ready.


What Web Development Companies Do Differently for Fintech Clients

In the world of financial technology (fintech), innovation moves fast—but so do regulations, user expectations, and cyber threats. Building a fintech platform isn’t like building a regular business website. It requires a deeper understanding of compliance, performance, security, and user trust.

A professional Web Development Company that works with fintech clients follows a very different approach—tailoring everything from architecture to front-end design to meet the demands of the financial sector. So, what exactly do these companies do differently when working with fintech businesses?

Let’s break it down.

1. They Prioritize Security at Every Layer

Fintech platforms handle sensitive financial data—bank account details, personal identification, transaction histories, and more. A single breach can lead to massive financial and reputational damage.

That’s why development companies implement robust, multi-layered security from the ground up:

End-to-end encryption (both in transit and at rest)

Secure authentication (MFA, biometrics, or SSO)

Role-based access control (RBAC)

Real-time intrusion detection systems

Regular security audits and penetration testing

Security isn’t an afterthought—it’s embedded into every decision from architecture to deployment.

2. They Build for Compliance and Regulation

Fintech companies must comply with strict regulatory frameworks like:

PCI-DSS for handling payment data

GDPR and CCPA for user data privacy

KYC/AML requirements for financial onboarding

SOX, SOC 2, and more for enterprise-level platforms

Development teams work closely with compliance officers to ensure:

Data retention and consent mechanisms are implemented

Audit logs are stored securely and access-controlled

Reporting tools are available to meet regulatory checks

APIs and third-party tools also meet compliance standards

This legal alignment ensures the platform is launch-ready—not legally exposed.

3. They Design with User Trust in Mind

For fintech apps, user trust is everything. If your interface feels unsafe or confusing, users won’t even enter their phone number—let alone their banking details.

Fintech-focused development teams create clean, intuitive interfaces that:

Highlight transparency (e.g., fees, transaction histories)

Minimize cognitive load during onboarding

Offer instant confirmations and reassuring microinteractions

Use verified badges, secure design patterns, and trust signals

Every interaction is designed to build confidence and reduce friction.

4. They Optimize for Real-Time Performance

Fintech platforms often deal with real-time transactions—stock trading, payments, lending, crypto exchanges, etc. Slow performance or downtime isn’t just frustrating; it can cost users real money.

Agencies build highly responsive systems by:

Using event-driven architectures with real-time data flows

Integrating WebSockets for live updates (e.g., price changes)

Scaling via cloud-native infrastructure like AWS Lambda or Kubernetes

Leveraging CDNs and edge computing for global delivery

Performance is monitored continuously to ensure sub-second response times—even under load.

5. They Integrate Secure, Scalable APIs

APIs are the backbone of fintech platforms—from payment gateways to credit scoring services, loan underwriting, KYC checks, and more.

Web development companies build secure, scalable API layers that:

Authenticate callers via OAuth 2.0 or signed JWTs

Throttle requests to prevent abuse

Log every call for auditing and debugging

Easily plug into services like Plaid, Razorpay, Stripe, or banking APIs

They also document everything clearly for internal use or third-party developers who may build on top of your platform.
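
Request throttling is often implemented with a token bucket, so here is a small sketch of one. The class name, the capacity and refill numbers, and the injectable clock are all assumptions chosen for illustration; real API gateways usually provide this as a built-in feature and track one bucket per client or API key.

```python
import time


class TokenBucket:
    """Allow short bursts up to `capacity`, refilled at a steady rate."""

    def __init__(self, capacity, refill_per_second, clock=time.monotonic):
        self.capacity = capacity
        self.refill_per_second = refill_per_second
        self._clock = clock            # injectable so the bucket can be tested
        self._tokens = float(capacity)  # start full
        self._last = clock()

    def allow(self):
        """Return True and spend one token if the request may proceed."""
        now = self._clock()
        elapsed = now - self._last
        self._last = now
        # Add tokens earned since the last call, never exceeding capacity.
        self._tokens = min(self.capacity, self._tokens + elapsed * self.refill_per_second)
        if self._tokens >= 1:
            self._tokens -= 1
            return True
        return False


# Example: bursts of up to 5 requests, refilled at 2 requests per second.
bucket = TokenBucket(capacity=5, refill_per_second=2)
results = [bucket.allow() for _ in range(7)]
print(results)
```

When allow() returns False, an HTTP API would normally answer with status 429 (Too Many Requests) rather than processing the call.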

6. They Embrace Modular, Scalable Architecture

Fintech platforms evolve fast. New features—loan calculators, financial dashboards, user wallets—need to be rolled out frequently without breaking the system.

That’s why agencies use modular architecture principles:

Microservices for independent functionality

Scalable front-end frameworks (React, Angular)

Database sharding for performance at scale

Containerization (e.g., Docker) for easy deployment

This allows features to be developed, tested, and launched independently, enabling faster iteration and innovation.
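
Database sharding, mentioned in the list above, comes down to a rule that maps each record to one of several databases. The sketch below shows the simplest such rule, hashing a user ID and taking the remainder; the function name and the sample IDs are hypothetical, and this is one possible scheme rather than the one any given platform uses.

```python
import hashlib


def shard_for(user_id, shard_count):
    """Map a user ID to a shard index in the range 0..shard_count-1."""
    if shard_count <= 0:
        raise ValueError("shard_count must be positive")
    # A stable hash is used so that every process and every restart
    # picks the same shard for the same user.
    digest = hashlib.sha256(user_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % shard_count


for user_id in ["user-1001", "user-1002", "user-1003"]:
    print(user_id, "-> shard", shard_for(user_id, 4))
```

The trade-off with plain modulo routing is that changing the number of shards remaps most users. Teams that expect to add shards often look at consistent hashing or a lookup table instead.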

7. They Build for Cross-Platform Access

Fintech users interact through mobile apps, web portals, embedded widgets, and sometimes even smartwatches. Development companies ensure consistent experiences across all platforms.

They use:

Responsive design with mobile-first approaches

Progressive Web Apps (PWAs) for fast, installable web portals

API-first design for reuse across multiple front-ends

Accessibility features (WCAG compliance) to serve all user groups

Cross-platform readiness expands your market and supports omnichannel experiences.

Conclusion

Fintech development is not just about great design or clean code—it’s about precision, trust, compliance, and performance. From data encryption and real-time APIs to regulatory compliance and user-centric UI, the stakes are much higher than in a standard website build.

That’s why working with a web development company that understands the unique challenges of the financial sector is essential. With the right partner, you get more than a website: you get a secure, scalable, and regulation-ready platform built for real growth in a high-stakes industry.


The Ultimate Guide to Developing a Multi-Service App Like Gojek

In today's digital-first world, convenience drives consumer behavior. The rise of multi-service platforms like Gojek has revolutionized the way people access everyday services—from booking a ride and ordering food to getting a massage or scheduling home cleaning. These apps simplify life by merging multiple services into a single mobile solution.

If you're an entrepreneur or business owner looking to develop a super app like Gojek, this guide will walk you through everything you need to know—from ideation and planning to features, technology, cost, and launching.

1. Understanding the Gojek Model

What is Gojek?

Gojek is an Indonesia-based multi-service app that started as a ride-hailing service and evolved into a digital giant offering over 20 on-demand services. It now serves millions of users across Southeast Asia, making it one of the most successful super apps in the world.

Why Is the Gojek Model Successful?

Diverse Services: Gojek bundles transport, delivery, logistics, and home services in one app.

User Convenience: One login for multiple services.

Loyalty Programs: Rewards and incentives for repeat users.

Scalability: Built to adapt and scale rapidly.

2. Market Research and Business Planning

Before writing a single line of code, you must understand the market and define your niche.

Key Steps:

Competitor Analysis: Study apps like Gojek, Grab, Careem, and Uber.

User Persona Development: Identify your target audience and their pain points.

Service Selection: Decide which services to offer at launch—e.g., taxi rides, food delivery, parcel delivery, or healthcare.

Monetization Model: Plan your revenue streams (commission-based, subscription, ads, etc.).

3. Essential Features of a Multi-Service App

A. User App Features

User Registration & Login

Multi-Service Dashboard

Real-Time Tracking

Secure Payments

Reviews & Ratings

Push Notifications

Loyalty & Referral Programs

B. Service Provider App Features

Service Registration

Availability Toggle

Request Management

Earnings Dashboard

Ratings & Feedback

C. Admin Panel Features

User & Provider Management

Commission Tracking

Service Management

Reports & Analytics

Promotions & Discounts Management

4. Choosing the Right Tech Stack

The technology behind your app will determine its performance, scalability, and user experience.

Backend

Programming Languages: Node.js, Python, or Java

Databases: MongoDB, MySQL, Firebase

Hosting: AWS, Google Cloud, Microsoft Azure

APIs: REST or GraphQL

Frontend

Mobile Platforms: Android (Kotlin/Java), iOS (Swift)

Cross-Platform: Flutter or React Native

Web Dashboard: Angular, React.js, or Vue.js

Other Technologies

Payment Gateways: Stripe, Razorpay, PayPal

Geolocation: Google Maps API

Push Notifications: Firebase Cloud Messaging (FCM)

Chat Functionality: Socket.IO or Firebase

5. Design and User Experience (UX)

Design is crucial in a super app where users interact with multiple services.

UX/UI Design Tips:

Intuitive Interface: Simplify navigation between services.

Consistent Aesthetics: Maintain color schemes and branding across all screens.

Microinteractions: Small animations or responses that enhance user satisfaction.

Accessibility: Consider voice commands and larger fonts for inclusivity.

6. Development Phases

A well-planned development cycle ensures timely delivery and quality output.

A. Discovery Phase

Finalize scope

Create wireframes and user flows

Define technology stack

B. MVP Development

Start with a Minimum Viable Product including essential features to test market response.

C. Full-Scale Development

Once the MVP is validated, build advanced features and integrations.

D. Testing

Conduct extensive testing:

Unit Testing

Integration Testing

User Acceptance Testing (UAT)

Performance Testing

7. Launching the App

Pre-Launch Checklist

App Store Optimization (ASO)

Marketing campaigns

Beta testing and feedback

Final round of bug fixes

Post-Launch

Monitor performance

User support

Continuous updates

Roll out new features based on feedback

8. Marketing Your Multi-Service App

Marketing is key to onboarding users and service providers.

Strategies:

Pre-Launch Hype: Use teasers, landing pages, and early access invites.

Influencer Collaborations: Partner with local influencers.

Referral Programs: Encourage user growth via rewards.

Local SEO: Optimize for city-based searches.

In-App Promotions: Offer discounts and bundle deals.

9. Legal and Compliance Considerations

Don't overlook legal matters when launching a multi-service platform.

Key Aspects:

Licensing: Requirements depend on your country and the services offered.

Data Protection: Adhere to GDPR, HIPAA (if you handle health data), or local data laws.

Contracts: Create terms of service for providers and users.

Taxation: Prepare for tax compliance across services.

10. Monetization Strategies

There are several ways to make money from your app.

Common Revenue Models:

Commission Per Transaction: Standard in ride-sharing and food delivery.

Subscription Plans: For users or service providers.

Ads: In-app promotions and sponsored listings.

Surge Pricing: Dynamic pricing based on demand.

Premium Features: Offer enhanced services at a cost.
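
To show how commission and surge pricing can fit together, here is a small worked sketch. Every number in it is an assumption chosen for illustration (a 20% commission, a 2.5x surge cap, a demand-to-supply ratio as the surge signal); none of these figures come from Gojek or any other platform. Amounts are kept in integer cents to avoid floating-point drift in money values.

```python
def surge_multiplier(open_requests, available_providers, cap=2.5):
    """Scale price with the demand/supply ratio, bounded to the range 1.0..cap."""
    if available_providers <= 0:
        return cap  # nobody available: charge the maximum allowed multiplier
    ratio = open_requests / available_providers
    return round(min(cap, max(1.0, ratio)), 2)


def split_fare(base_fare_cents, multiplier, commission_rate=0.20):
    """Apply the surge multiplier, then split the total between platform and provider."""
    total = round(base_fare_cents * multiplier)
    platform = round(total * commission_rate)
    return {"total": total, "platform": platform, "provider": total - platform}


# 30 open requests and 20 available drivers give a 1.5x multiplier.
multiplier = surge_multiplier(open_requests=30, available_providers=20)
print(multiplier, split_fare(1000, multiplier))
```

With a base fare of 1000 cents, the rider pays 1500, the platform keeps 300, and the provider receives 1200. A real pricing engine would likely smooth the multiplier over time and by area so prices do not jump from one request to the next.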

11. Challenges and How to Overcome Them

A. Managing Multiple Services

Solution: Use microservices architecture to manage each feature/module independently.

B. Balancing Supply and Demand

Solution: Use demand forecasting (for example, models trained on historical booking data) to predict peaks and onboard providers in advance.

C. User Retention

Solution: Gamify the app with loyalty points, badges, and regular updates.

D. Operational Costs

Solution: Optimize cloud resources, automate processes, and start in a limited geographic area.

12. Scaling the App

Once you establish your base, consider expansion.

Tips:

Add New Services: Include healthcare, legal help, or finance.

Geographical Expansion: Move into new cities or countries.

Language Support: Add multi-lingual capabilities.

API Integrations: Partner with external platforms for payment, maps, or logistics.

13. Cost of Developing a Multi-Service App Like Gojek

Costs can vary based on complexity, features, region, and team size.

Estimated Breakdown:

MVP Development: $20,000 – $40,000

Full-Feature App: $50,000 – $150,000+

Monthly Maintenance: $2,000 – $10,000

Marketing Budget: $5,000 – $50,000 (initial phase)

Hiring an experienced team or opting for a white-label solution can help manage costs and time.
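
The ranges above can be combined into a rough first-year budget. The sketch below simply adds one build cost, twelve months of maintenance, and the initial marketing budget. It assumes the MVP and full-feature figures are alternative build options rather than costs to be added together, which the breakdown does not state, and it treats $150,000 as the top of the full-feature range even though that figure is open-ended.

```python
# (low, high) figures in US dollars, copied from the estimated breakdown.
ESTIMATES = {
    "mvp": (20_000, 40_000),
    "full_app": (50_000, 150_000),  # listed as "$150,000+", so not a hard ceiling
    "monthly_maintenance": (2_000, 10_000),
    "marketing": (5_000, 50_000),
}


def first_year_range(build_key, months=12):
    """Return (low, high) for build + maintenance + initial marketing."""
    build_low, build_high = ESTIMATES[build_key]
    maint_low, maint_high = ESTIMATES["monthly_maintenance"]
    mkt_low, mkt_high = ESTIMATES["marketing"]
    low = build_low + maint_low * months + mkt_low
    high = build_high + maint_high * months + mkt_high
    return low, high


print("MVP first year:", first_year_range("mvp"))
print("Full app first year:", first_year_range("full_app"))
```

Under these assumptions the first year comes to roughly $49,000 to $210,000 for an MVP and $79,000 to $320,000 or more for a full-feature app, which shows how much of the total can sit in maintenance and marketing rather than in the build itself.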

Conclusion

Building a multi-service app like Gojek is an ambitious but achievable project. With the right strategy, a well-defined feature set, and an expert development team, you can tap into the ever-growing on-demand economy. Begin by understanding your users, develop a scalable platform, market effectively, and continuously improve based on feedback. The super app revolution is just beginning—get ready to be a part of it.

Frequently Asked Questions (FAQs)

1. How long does it take to develop a Gojek-like app?

Depending on complexity and team size, it typically takes 4 to 8 months to build a fully functional version of a multi-service app.

2. Can I start with only a few services and expand later?

Absolutely. It's recommended to begin with 2–3 core services, test the market, and expand based on user demand and operational capability.

3. Is it better to build from scratch or use a white-label solution?

If you want custom features and long-term scalability, building from scratch is ideal. White-label solutions are faster and more affordable, which makes them a better fit when quick market entry matters most.

4. How do I onboard service providers to my platform?

Create a simple registration process, offer initial incentives, and run targeted local campaigns to onboard and retain quality service providers.

5. What is the best monetization model for a super app?

The most successful models include commission-based earnings, subscription plans, and in-app advertising, depending on your services and user base.
