#yield nodes results

Text

Zzzt! - Transformers X Reader 18+

I saw a post on twitter about using shock collars on bots but instead of hurting them it does the exact opposite and makes them feel so good!!! and I had to run and write something down because oh my god!!!

Starscream snippet at the end :3c

General Headcanons

Though maybe instead of collars they’re located near their interface panels? I’d imagine a low dosage shock to them would be similar to a vibration.

If I were to really describe it, shocks are a numbing buzz that you can feel circulating throughout parts of your body. If in direct contact, you can feel the energy the most but it slowly dissipates through their body. So really these shock devices would be a cool edging tool to keep their focus on their interface panel.

But that’s with low dosage shocks. Higher dosages yield for crazier results!! If some bots are into masochism then maxing out the shocks is basically sending them to the gates of heaven (well of allsparks?). On a full dosage they’re able to feel the shock throughout their whole body and then some. It blanks their processors out and they’re left a horny mess. Would also be great for those who love dumbification as their processor would be left scrambled for a bit

There’s a risk factor if you’re human. Their tolerance is CRAZZYY so if you’re giving them a high amount and end up touching them then you’re dead for sure. This is such a risk I cannot stress this enough, please throw on some thick rubber elbow high gloves, boots, ANYTHING.

———

With no mass displacement, imagine,,,,

Leaving Starscream a whimpering mess.

His hands tied together on the berth as he jerks his hips up to find stimulation. The device is placed right above his spike on one of its highest settings, curtesy from you of course. You’re standing to the side a fair distance away from him. Close enough to see the details, yet far from danger.

The pace is too slow for his liking. He’s so used to a nice hard frag. So used to being the one to tease you. So used to having you underneath him as he ruts into you like an animal. He doesn’t have to wait and think about a growing ache in him as he frags your brains out. But with you in charge, he thinks.

Starscream is running with thoughts, working overtime to delete warnings and stupid pop ups that tell him to ‘overload or overheat’. He doesn’t want to admit it but he likes this torturous buzz. And so, he chooses to overheat. His fans do little to help him as the volts short circuit it over time. He’s left to manually cool himself through large intakes of air. Focused on trying to cool now, his processor is so full that it blanks. He’s left a mumbly whiny loud mess. His valve cycles around nothing and he wants nothing more than something to pound into him. To touch him, to do anything.

His thighs are squeezed together by the time you walk near them, no longer grinding against air as he lays somewhat still.

His thighs snap open, obscenely wide at your command to open them. Bright pink fluid drips from his valve, and lots of it.

Don’t move, you tell him. He scrapes his pedes against the berth as he tries to keep them planted away from you. He doesn’t want to hurt you but he can’t help it when his hips grind against your gloved hand on his node. He doesn’t want to hurt you but frag, does it feel good when your other hand enters his valve.

His babbling turns into whines as your hands move faster. Then rougher. And now he’s trying hard to not fall into stasis as he overloads. Your hand is dripping with fluid and you’re quick to leave him. His thighs close together and he can still feel himself overloading, the charge dripping onto the berth and making a pool near his aft.

He’s out like a light when you turn off the shock device. You’re left to clean him up while he recharges. His spark swelling when he wakes every so often to see you polishing him up!!

————

Tons of aftercare after all that I promise he’s getting the princess treatment

#writing shenanigans#transformers x reader#starscream x reader#maccadams#transformers starscream#starscream#transformers#valveplug

145 notes

Text

Our Magical Portal For 2025: 00° Aries

If you thought four chances to do Capricorn moon magic was good last year, I counter with this:

Counting the moon, a planet will pass over 00° Aries a whopping 25 times in 2025!

In the average year, a given degree in the horoscope may see three passes from the Sun, Mercury, and Venus, who traverse the entire horoscope in a year, and ~12 passes by the moon. That's 15 touch points. Which still seems like a lot.

This year, the Sun moves through 00° Aries in March, Mercury and Venus both retrograde back over 00° Aries in late March, and both Saturn and Neptune move into Aries before retrograding back into Pisces by the year's end. On top of that, there are 14 passes from the moon!

This is something that you can use (magically, of course).

00° Aries

00° Aries is arguably the most significant degree for one straightforward reason: it is the first degree of the horoscope wheel. Astrologically, the year begins at 00° Aries with our Spring Equinox and ends at 29° Pisces. 00° Aries carries all of the potential of springtime.

It is worth remembering that astrology is a study of seasons and the constant, repetitive changes of the year. Every spring is a new chance to grow and evolve like the plants that erupt from their slumber under the ground, but it is also an ending.

From a metaphorical standpoint, 29° Pisces represents the complete dissolution of the self that comes with death, and 00° Aries represents the moment of birth/the first breath/ego consciousness.

This will be especially important this year because the planets that move across 00° Aries will do so in forward and backward motion.

In forward motion, a planet entering 00° Aries indicates a new beginning, a new leaf, a new idea, and a new project.

In retrograde motion, a planet moving through 00° Aries indicates needing to backpedal or having to step a few steps backward. We may need to review, refine, and reassess.

We will experience twenty-one passes of 00° Aries in forward motion and four passes in backward motion, indicating that we are mainly in the birthing phases, but there will be some setbacks.

The Magic

Do you want to start something new? Are you willing to work for it - putting one foot in front of the other - every single day (or as often as you can) - until you accomplish your goals?

Then 00° Aries is the right degree for you.

I have written about the power of Number 1 for starting new things; this is degree #1 of sign #1.* Anything that you want to start can be augmented by 1 energy. Use sequences of ones in your magic: burn one candle with one herb and one oil (combined, three for manifestation). Alternatively, make a spell pouch with one petition paper, one crystal, and one herb (again, combine to create 3 for manifestation).

Aries is an active (cardinal) fire sign, so I would strongly suggest working with fire in some capacity if you can. If you genuinely cannot, bring in other Aries correspondences (listed below).

I will not tell you what spell to cast; that is up to you and your goals for your own life. I can tell you that casting a spell and then repetitively working this spell as the planets move over 00° this spring (and beyond) may yield incredible results and that I would never turn down an opportunity like this, especially since you get 25 chances to get it right.

Where does 00° Aries fall in your natal birth chart? What house is it in? Does it make any significant aspects to any of your personal planets or luminaries? Does it aspect your Ascendant, Descendant, or 10th house? Does it make an aspect to Jupiter, Saturn, or your lunar nodes? I wouldn't include the outer three planets (Uranus, Neptune, or Pluto) unless they sit exactly at 00° Aries or Libra, which might still be significant. This information may help you to understand how to craft your spell (or ritual, silly words, this is energy - I'm literally trying not to tell you how to use it) or WHEN to cast it.

*I understand that this might be confusing because it is degree "00" and not 1 because astrology is based around geometry, so there must be a 0 degree to indicate a conjunction

The Dates

All Dates are in EST/EDT

2/4 - Venus enters Aries

3/3 - Mercury enters Aries

3/20 - Sun enters Aries

3/27 - Venus exits Aries

3/29 - Solar Eclipse 09° Aries, Mercury exits Aries

3/30 - Neptune enters Aries

4/16 - Mercury enters Aries

4/30 - Venus enters Aries

5/24 - Saturn enters Aries

9/1 - Saturn exits Aries

9/21 - Solar Eclipse 29° Virgo

10/22 - Neptune exits Aries

Moon at 00° Aries (check the hour for your timezone): 1/5, 2/1, 3/1, 3/28, 4/25, 5/22, 6/16, 7/16, 8/12, 9/8, 10/6, 11/2, 11/29, 12/27

I've included our two solar eclipses on this list because March's conjuncts this point (and Mercury at 00° Aries on that day), and September's tightly opposes it (within one degree). Both days can amplify eclipse magic of all kinds (another layer to this puzzle), but many people prefer to avoid doing magic during an eclipse due to its unpredictability. I've written about harnessing eclipse energy for magic here.

In reference to that, our March solar eclipse is a new/new energy eclipse, while our September eclipse is a new-old energy eclipse.

Ways to Work These Dates:

Pick the planet (Sun, Mercury, Venus) that most aligns with your goals and cast your spell on the day that planet first enters Aries

Work with Mercury or Venus's retrograde cycles to gnaw through a particularly tough problem that is keeping you stuck

Plant a spell on the Spring Equinox that will be harvested on the Autumnal Equinox

Cast a spell on the eclipsed Aries new moon that will culminate with the Aries full moon 10/08

Cast a long-term spell on the eclipsed Aries new moon and re-up its energy on the eclipsed Virgo new moon

Consider casting your spell during the hour of the transit, or, if that is not possible, cast it during the hour of the planet moving into Aries

If you don't know what dates to choose - I am offering $20 Election Consults through my Kofi - contact me with a good explanation of what type of magic you want to do, and I will give you (of t

Correspondences

Aries
Fire, Cardinal, Masculine
Ruling Planet: Mars
House: 1
Tarot Cards: The Magician (by number), the Emperor (traditional)
Herbs: Cinnamon, Dragon's Blood, Frankincense, Garlic, Ginger, Holly, Horseradish, Marjoram, Nettle, Pepper, Thistle

00° Aries, 1st Decan
Ruler: Mars, Subruler: Mars
Tarot Card: 2 of Wands (traditional), the Fool (by number)

Mars
Domicile/Houses: Aries/1, Scorpio/8
Exile: Libra, Taurus
Exaltation: Capricorn
Fall: Cancer
Day of the Week: Tuesday
Energies: victory, aggression, achievement, action, assertiveness, strength
Colors: Scarlet, Red, Vermillion, Orange
Number: 5
Metal: Iron
Stones: Garnet, Ruby, Carnelian, Bloodstone
Herbs: Asafoetida, Basil, Broom, Briony, Cactus, Cayenne, Dragon's Blood, Galangal, Garlic, Gentian, Ginger, Hawthorn, Horseradish, Honeysuckle, Mustard, Nettle, Peppercorn, Red Sandalwood, Rue, Tobacco, Wormwood
Tarot Card: The Tower

References:
Tarot and Astrology by Corrine Kenner
The Herbal Alchemist's Handbook by Karen Harrison

**********************************************************************

As always, I hope I have given you some magical ideas to help you align with the astrological transits unique to this year. If you like my work, you can tip me on Kofi or purchase an astrological report written just for you.

More Reading:
The Power of the Number One: Starting Over
Demystifying Working With Eclipse Energy

108 notes

Text

Gemini Season 2025 Astrological Overview

Gemini Season this year begins on 5/20 at 2:55pm EDT and goes until 6/20/2025. There are big energies filling up Gemini Season, and there's lots to consider for our personal development... so let's dive right in...

It is time once again in the annual zodiac cycle to stretch our minds, under the masculine air sign of Gemini, The Twins. We should use our words wisely for positive results, learn new subjects, engage in various events, socialize more with friends and family, travel if we can, and consider all the wonderful new possibilities that could be brought into our lives. Meeting new people from a wide variety of backgrounds is also favorable. It is a time of freshness... like a true breath of fresh air!

Gemini Season is also a time to cultivate flexibility, adaptability, broader views in life, and greater senses of movement in all aspects. It is also a terrific time of year to embrace a heady sense of fun!

Kicking off this season are a Sun-Saturn sextile, a Sun-Neptune sextile, and a glorious Venus-Mars trine (this last one in fire signs). The Sun-Saturn sextile favors reviewing personal habits, routines, routes, and the manner in which you create order, all of which can yield insights into greater efficiency and well-being. Sun and Neptune in harmony ask us to add our dreams to the restructuring mix, to make life more fulfilling (Saturn and Neptune together within a 3 degree orb also support this). And Venus (in Aries) trine Mars (in Leo) stokes a fabulous fire that poses one powerful question for each of us to answer... "What do I truly desire?"

Of course, the big news for this year's Gemini Season is Saturn moving into Aries on 5/24, where the planet will stay until it retrogrades back into the later degrees of Pisces, in September of this year. Finally, in February 2026, Saturn will return to Aries (where it is considered in its fall) to stay for a couple of intense years. Life, and the way we all structure it, is going to get a cosmic makeover. Old orders will come tumbling down during the next couple of years, as exploration, individuality, and innovation all take precedence. It's a whole new vibe! Saturn will also be joining dreamy Neptune at this auspicious time, in a magickal blending of planning and dreaming for the future.

The Sun trines Pluto on 5/24, as well. This further instigates energy to make changes, but those changes will run deep. People may switch life directions entirely, discover huge innovations, and gain stronger momentum for the proverbial wisdom of "know thyself."

We will have a Gemini New Moon that brings our emotional awareness into the mix on 5/26, allowing for greater alignment with the predominant energies of this time.

Chiron will be in an inconjunct with the South Node in Virgo during the first full week of Gemini Season, encouraging us to let go of all the old orders, and all the outdated things, that no longer serve us. There may be pain and grief involved in the letting go process, but once we have healed from those difficulties, brighter days and healthier ways of being are right ahead of us.

Jupiter moves into the dreamy, watery, feminine sign of Cancer on 6/9, where it is exalted. Jupiter will remain here for one full year, bringing bounty and abundance, brilliance and focus to home and hearth, to family of blood and choice, and to a mystical sense of sanctuary. On the same day, however, Mercury squares Saturn, Neptune, and Pluto. This is a challenge to our greater communications around structuring, dreaming, and planning.

We have the Full Moon in Sagittarius on 6/11, super-charging our ability to take aim and fire at what we want with our proverbial arrows. We may gallivant off and chase our bigger dreams and aspirations at this time, too. New ideas stimulated by Gemini are pursued by Sagittarius, as we find the chase emotionally fulfilling. But, on this day we have a Jupiter-Saturn square. This is like a slight splash of cold water, but don't let it dampen your spirits overall. Jupiter is strengthened by both being exalted and occupying the Moon's home sign, buoying energy towards a positive. And, the Moon is in Jupiter's sign now, too, granting us all the loveliness of mutual reception vibes.

Mars square Uranus on 6/13 pits action against sudden upheaval. Can our life strategies really withstand the influences of the unexpected? We are about to find out.

May Gemini Season 2025 bring reality to your dreams and greater harmony to your life path! Blessings!

#astrology#gemini season#astrology observations#astrology readings#astro tumblr#astro notes#astrology community#astrology notes#astro community#astro observations#astrology signs#sun signs#calendar#horoscope#zodiac#occultism#occult#gemini

19 notes

Text

[...]

Ultimately, this story about Pakistan is more properly understood as one about the contest between China and the U.S. that puts the rest of the world in the middle. Chinese officials, we learned, regularly told their Pakistani counterparts that Beijing doesn’t see the contest as zero sum, that it’s okay to be friendly with both major powers. The U.S. does not quite see it that way, and Pakistan knows it. The result is the story below. If you’re at all interested in foreign affairs, we think you’ll find this one enlightening.

[...]

In October of 2022, a pivotal year for Pakistan, military chief Qamar Javed Bajwa finally won what he had long been striving for: an official state trip to the United States. His mission was explicit; a document prepared for Bajwa ahead of the visit is titled, “U.S. Re-Engagement with Pakistan: Ideas for Reviving an Important Relationship.”

[...]

From New York, Munir Akram, Pakistan’s representative to the United Nations, began reporting back cables highlighting “sarcastic” comments from his Chinese counterpart, who openly tweaked Akram about Pakistan’s sudden swing toward Washington. In private conversations with their Pakistani counterparts over the past year, as reported by Pakistani diplomats, Chinese officials have expressed displeasure with Islamabad for “switching camps”—rather than merely seeking open relations with both countries.

Now, with their U.S. gamble failing to pay off, Pakistani officials have become increasingly frantic in their efforts to repair relations with China, including, as the documents reveal, by granting China approval for a military base at the port of Gwadar, a major and longstanding strategic demand of Beijing, and authorizing joint military operations inside Pakistan.

[...]

Internal reports emphasize Pakistan’s wish that its relations with the U.S. and China not be “zero-sum.” “What the Pakistani military prefers is to be able to maintain a balance between their Chinese and U.S. military relationships,” said Adam Weinstein, deputy director of the Middle East program at the Quincy Institute and an analyst on Pakistan. “They believe that if things are balanced, both sides will have an incentive to keep relations strong.”

Despite this preference, a classified internal Pakistani intelligence assessment judges China to be a more “natural strategic ally” than the U.S., with whom Pakistan is deemed to share “limited” strategic interests.

Facing such loss of trust from a key ally, the documents also show that Pakistan’s military-backed government privately promised Beijing a long-coveted concession: a Chinese military base in the key port city of Gwadar. Gwadar is a key node in China’s Belt-and-Road Initiative—the last stop in a land corridor through Pakistan that would connect China’s economy westward, and make it less reliant on shipping transit in the South China Sea.

In return, Pakistan asked for a major upgrade in economic and military assistance from Beijing in order to insulate Islamabad from the fierce reaction from the U.S. such a deal is expected to provoke.

[...]

This August, Pakistani government sources vented frustration to the media over their failed reconciliation with the U.S., lamenting the meager benefits that mending ties had brought. Government sources told the Express Tribune that “Pakistan’s reliance on the United States to secure the IMF package was not yielding the results.” This week, the IMF announced a decision to consider Pakistan’s loan request at an upcoming meeting slated for September 25, raising hopes that a deal may still be secured.

Pakistan’s private concessions to China come as the U.S. State Department has continued to publicly defend the military regime from criticism over its role in rigging elections this February, gross human rights abuses inside the country targeting the press and civil society, and an ongoing crackdown on supporters of now-imprisoned former Prime Minister Khan. That crackdown now includes credible threats to Khan’s life, as he continues to be held in government custody despite repeated rejection by the courts of the charges against him.

“We believe good governance, long-term capacity building, and sustainable market-based approaches that let the private sector flourish are the best paths to sustained growth and development,” the State Department told Drop Site News in its post-publication statement. “Our partnership with Pakistan spans the full range of regional and bilateral issues, including increasing trade and investment, strengthening security cooperation, promoting regional security and stability, building climate resilience, supporting democracy and human rights, and expanding people-to-people ties.”

The rigging of elections this February was met with general indifference in Washington, as has the ongoing suppression of press and political activism in the country. On the economic front, Pakistan’s imploding economy has consumed Western aid with nothing to show for it but soaring inflation, blackouts, an internet slowed to a crawl, and joblessness.

18 Sept 2024

28 notes

Text

READ THIS BEFORE INTERACTING

Alright, I know I said I wasn't going to touch this topic again, but my inbox is filling up with asks from people who clearly didn't read everything I said, so I'm making a pinned post to explain my stance on AI in full, but especially in the context of disability. Read this post in its entirety before interacting with me on this topic, lest you make a fool of yourself.

AI Doesn't Steal

Before I address people's misinterpretations of what I've said, there is something I need to preface with. The overwhelming majority of AI discourse on social media is argued based on a faulty premise: that generative AI models "steal" from artists. There are several problems with this premise. The first and most important one is that this simply isn't how AI works. Contrary to popular misinformation, generative AI does not simply take pieces of existing works and paste them together to produce its output. Not a single byte of pre-existing material is stored anywhere in an AI's system. What's really going on is honestly a lot more sinister.

How It Actually Works

In reality, AI models are made by initializing and then training something called a neural network. Initializing the network simply consists of setting up a multitude of nodes arranged in "layers," with each node in each layer being connected to every node in the next layer. When prompted with input, a neural network will propagate the input data through itself, layer by layer, transforming it along the way until the final layer yields the network's output. This is directly based on the way organic nervous systems work, hence the name "neural network." The process of training a network consists of giving it an example prompt, comparing the resulting output with an expected correct answer, and tweaking the strengths of the network's connections so that its output is closer to what is expected. This is repeated until the network can adequately provide output for all prompts. This is exactly how your brain learns; upon detecting stimuli, neurons will propagate signals from one to the next in order to enact a response, and the connections between those neurons will be adjusted based on how close the outcome was to whatever was anticipated. In the case of both organic and artificial neural networks, you'll notice that no part of the process involves directly storing anything that was shown to it. It is possible, especially in the case of organic brains, for a neural network to be configured such that it can produce a decently close approximation of something it was trained on; however, it is crucial to note that this behavior is extremely undesirable in generative AI, since that would just be using a wasteful amount of computational resources for a very simple task. It's called "overfitting" in this context, and it's avoided like the plague.
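As a rough illustration of the process described above, here is a minimal sketch in plain Python. It assumes a tiny network (2 inputs, 3 hidden nodes, 1 output) with sigmoid activations learning the logical OR function. The layer sizes, learning rate, and every function name are illustrative choices for this sketch, not the internals of any real generative AI system, which is vastly larger.

```python
import math
import random


def make_network(sizes, rng):
    """Build fully connected layers; each layer is a (weights, biases) pair."""
    layers = []
    for n_in, n_out in zip(sizes, sizes[1:]):
        weights = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        biases = [0.0] * n_out
        layers.append((weights, biases))
    return layers


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(layers, inputs):
    """Propagate the input layer by layer; return the activations of every layer."""
    activations = [inputs]
    for weights, biases in layers:
        prev = activations[-1]
        activations.append([
            sigmoid(sum(w * a for w, a in zip(row, prev)) + b)
            for row, b in zip(weights, biases)
        ])
    return activations


def train_step(layers, inputs, target, lr):
    """Compare output with the expected answer and nudge every connection strength."""
    activations = forward(layers, inputs)
    # Error signal at the output layer for a squared-error loss.
    deltas = [(o - t) * o * (1.0 - o) for o, t in zip(activations[-1], target)]
    for i in reversed(range(len(layers))):
        weights, biases = layers[i]
        prev = activations[i]  # the values that fed into layer i
        prev_deltas = []
        if i > 0:
            # Pass the error signal back one layer before changing the weights.
            prev_deltas = [
                sum(weights[j][k] * deltas[j] for j in range(len(deltas)))
                * prev[k] * (1.0 - prev[k])
                for k in range(len(prev))
            ]
        for j, d in enumerate(deltas):
            for k, a in enumerate(prev):
                weights[j][k] -= lr * d * a
            biases[j] -= lr * d
        deltas = prev_deltas


def mean_squared_error(layers, data):
    return sum((forward(layers, x)[-1][0] - t[0]) ** 2 for x, t in data) / len(data)


rng = random.Random(0)
# Example prompts paired with expected answers (logical OR).
data = [([0.0, 0.0], [0.0]), ([0.0, 1.0], [1.0]), ([1.0, 0.0], [1.0]), ([1.0, 1.0], [1.0])]
net = make_network([2, 3, 1], rng)

before = mean_squared_error(net, data)
for _ in range(2000):
    for x, t in data:
        train_step(net, x, t, lr=0.5)
after = mean_squared_error(net, data)

print(f"error before training: {before:.4f}, after: {after:.4f}")
```

The only things that change during training are the numbers in `weights` and `biases`; the four training examples are read during each update but are never copied into the network itself.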

The sinister part lies in where the training data comes from. Companies which make generative AI models are held to a very low standard of accountability when it comes to sourcing and handling training data, and it shows. These companies usually just scrape data from the internet indiscriminately, which inevitably results in the collection of people's personal information. This sensitive data is not kept very secure once it's been scraped and placed in easy-to-parse centralized databases. Fortunately, these issues could be solved with the most basic of regulations. The only reason we haven't already solved them is that people are demonizing the products rather than the companies behind them. Getting up in arms over a type of computer program does nothing, and this diversion is being taken advantage of by bad actors, who could be rendered impotent with basic accountability. Other issues surrounding AI are exactly the same way. For example, attempts to replace artists in their jobs are the result of under-regulated businesses and weak workers' rights protections, and we're already seeing very promising efforts to combat this just by holding the bad actors accountable. Generative AI is a tool, not an agent, and the sooner people realize this, the sooner and more effectively they can combat its abuse.

Y'all Are Being Snobs

Now I've debunked the idea that generative AI just pastes together pieces of existing works. But what if that were how it worked? Putting together pieces of existing works... hmm, why does that sound familiar? Ah, yes, because it is, verbatim, the definition of collage. For over a century, collage has been recognized as a perfectly valid art form, and not plagiarism. Furthermore, in collage, crediting sources is not viewed as a requirement, only a courtesy. Therefore, if generative AI worked how most people think it works, it would simply be a form of collage. Not theft.

Some might not be satisfied with that reasoning. Some may claim that AI cannot be artistic because the AI has no intent, no creative vision, and nothing to express. There is a metaphysical argument to be made against this, but I won't bother making it. I don't need to, because the AI is not the artist. Maybe someday an artificial general intelligence could have the autonomy and ostensible sentience to make art on its own, but such things are mere science fiction in the present day. Currently, generative AI completely lacks autonomy—it is only capable of making whatever it is told to, as accurate to the prompt as it can manage. Generative AI is a tool. A sculpture made by 3D printing a digital model is no less a sculpture just because an automatic machine gave it physical form. An artist designed the sculpture, and used a tool to make it real. Likewise, a digital artist is completely valid in having an AI realize the image they designed.

Some may claim that AI isn't artistic because it doesn't require effort. By that logic, photography isn't art, since all you do is point a camera at something that already looks nice, fiddle with some dials, and press a button. This argument has never been anything more than snobbish gatekeeping, and I won't entertain it any further. All art is art. Besides, getting an AI to make something that looks how you want can be quite the ordeal, involving a great amount of trial and error. I don't speak from experience on that, but you've probably seen what AI image generators' first drafts tend to look like.

AI art is art.

Disability and Accessibility

Now that that's out of the way, I can finally move on to clarifying what people keep misinterpreting.

I Never Said That

First of all, despite what people keep claiming, I have never said that disabled people need AI in order to make art. In fact, I specifically said the opposite several times. What I have said is that AI can better enable some people to make the art they want to in the way they want to. Second of all, also despite what people keep claiming, I never said that AI is anyone's only option. Again, I specifically said the opposite multiple times. I am well aware that there are myriad tools available to aid the physically disabled in all manner of artistic pursuits. What I have argued is that AI is just as valid a tool as those other, longer-established ones.

In case anyone doubts me, here are all the posts I made in the discussion in question: Reblog chain 1 Reblog chain 2 Reblog chain 3 Reblog chain 4 Potentially relevant ask

I acknowledge that some of my earlier responses in that conversation were poorly worded and could potentially lead to a little confusion. However, I ended up clarifying everything so many times that the only good faith explanation I can think of for these wild misinterpretations is that people were seeing my arguments largely out of context. Now, though, I don't want to see any more straw men around here. You have no excuse, there's a convenient list of links to everything I said. As of posting this, I will ridicule anyone who ignores it and sends more hate mail. You have no one to blame but yourself for your poor reading comprehension.

What Prompted Me to Start Arguing in the First Place

There is one more thing that people kept misinterpreting, and it saddens me far more than anything else in this situation. It was sort of a culmination of both the things I already mentioned. Several people, notably including the one I was arguing with, have insisted that I'm trying to talk over physically disabled people.

Read the posts again. Notice how the original post was speaking for "everyone" in saying that AI isn't helpful. It doesn't take clairvoyance to realize that someone will find it helpful. That someone was being spoken over, before I ever said a word.

So I stepped in, and tried to oppose the OP on their universal claim. Lo and behold, they ended up saying that I'm the one talking over people.

Along the way, people started posting straight-up inspiration porn.

I hope you can understand where my uncharacteristic hostility came from in that argument.

161 notes

Text

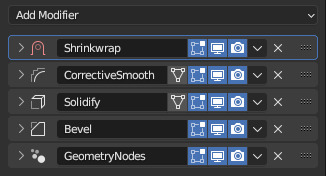

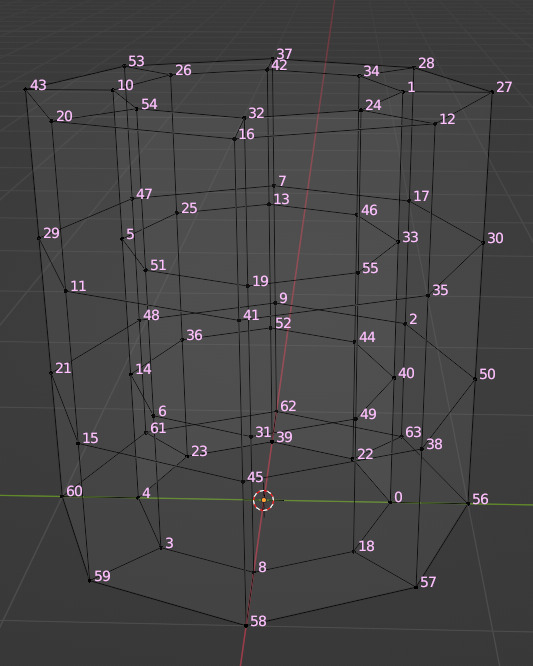

Morph Madness!

Fixing Exploding Morphs

Marik's Egyptian Choker is currently in production. It is the first accessory I've made that involves assignment to more than one bone and morphs for fat, fit and thin states. So there is a learning curve, and it is during that learning curve that interesting and unexpected things can happen.

As with my other content, I'm making the choker fit sims of all ages and genders--that's 8 different bodies.

Adding fat, fit and thin morphs multiplies this number to 27 different bodies.

I'm also making 3 levels of detail for each of these. The number comes to 81 different bodies, 81 different bodies for which I need to tightly fit a cylinder around the neck and avoid clipping.

That's a lot of work. I can see why most custom content creators stick with one age, gender and detail level. At least, they did in the past. Our tools are getting better day by day, and that may partly be because of creative, ambitious and somewhat obsessive people like me.

There are usually multiple ways to solve the same problem. Some ways are faster than others. This I've learned from working in Blender3D. You can navigate to a button with your mouse or hit the keyboard shortcut. You can use proportional editing to fiddle around with a mesh or you can use a combination of modifiers.

If I am going to be creating 81 chokers, I don't want to be fiddling around on each one of them for an hour. I need something automated, repeatable and non-destructive so I can make adjustments later without having to start over from the beginning. I need to work smart rather than just work hard.

This is where modifiers and geometry nodes come in. After you develop a stack to work with one body, the same process pretty much works for the others as well. That is how it became easier for me to model each of the 81 chokers from scratch rather than to use proportional editing to fit a copy from one body to the next.

But I was about to confront an explosive problem…

Anyone who has worked with morphs before probably knows where this story is headed. There is a good reason to copy the base mesh and then use proportional editing to refit it to the fat, fit and thin bodies. That reason has to do with vertex index numbers.

You see, every vertex in your mesh has a number assigned to it so that the computer can keep track of it. Normally, the order of these numbers doesn't really matter much. I had never even thought about them before I loaded my base mesh and morphs into TSRW, touched those sliders to drag between morph states, and watched my mesh disintegrate into a mess of jagged, black fangs.

A morph is made up of directions for each vertex in a mesh on where to go if the sim is fat or thin or fit. The vertex index number determines which vertex gets which set of directions. If the vertices of your base mesh are numbered differently than the vertices of your morph, the wrong directions are sent to the vertices, and they end up going everywhere but the right places.

It is morph madness!

When a base mesh is copied and then the vertices are just nudged around with proportional editing, the numbering remains the same. When you make each morph from scratch, the numbering varies widely.
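To make the mismatch concrete, here is a minimal sketch in plain Python (no Blender or TSRW involved). The three-vertex "mesh" and the names `morph_deltas` and `apply_morph` are made up for illustration; this is only a toy model of how per-index directions behave, not how TSRW actually stores morphs.

```python
# Toy mesh: a vertex's index is simply its position in the list.
base = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]

# "Fat" target made by nudging a copy of the base: same numbering.
fat_nudged = [(0.0, 0.0, 0.0), (1.5, 0.0, 0.0), (0.0, 1.5, 0.0)]

# The same fat shape modelled from scratch: same points, different numbering.
fat_rebuilt = [fat_nudged[2], fat_nudged[0], fat_nudged[1]]


def morph_deltas(base_verts, target_verts):
    """Per-index offsets: the 'directions' handed to the vertex with that index."""
    return [
        tuple(t - b for b, t in zip(bv, tv))
        for bv, tv in zip(base_verts, target_verts)
    ]


def apply_morph(base_verts, deltas, weight):
    """Move every base vertex along the delta stored under its own index."""
    return [
        tuple(b + weight * d for b, d in zip(bv, dv))
        for bv, dv in zip(base_verts, deltas)
    ]


# Drag the slider halfway between the base and the fat state.
good_half = apply_morph(base, morph_deltas(base, fat_nudged), 0.5)
bad_half = apply_morph(base, morph_deltas(base, fat_rebuilt), 0.5)

print(good_half[1])  # (1.25, 0.0, 0.0): vertex 1 moves outward, as intended
print(bad_half[1])   # (0.5, 0.0, 0.0): vertex 1 is dragged toward another vertex's spot
```

With the matching numbering, vertex 1 slides outward along its own axis. With the rebuilt numbering, it follows directions meant for a different vertex, which is the same effect that tears a real mesh apart.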

How, then, could I get each one of those 81 meshes to be numbered in exactly the same way?

Their structures and UV maps were the same, but their size and proportions varied a lot from body to body. Furthermore, I'd used the Edge Split modifier to sharpen edges, which results in disconnected geometry and double vertices.

Sorting the elements with native functions did not yield uniform results because of the varying proportions.

The Blender Add-On by bartoszstyperek called Copy Verts Ids presented a possible solution, but it was bewildered by the disconnected geometry and gave unpredictable results.

Fix your SHAPE KEYS! - Blender 2.8 tutorial by Danny Mac 3D

I had an idea of how I wanted the vertices to be numbered, ascending along one edge ring at a time, but short of selecting one vertex at a time and sending it to the end of the stack with the native Sort Elements > Selected function, there was no way to do this.

Of course, selecting 27,216 vertices one-at-a-time was even more unacceptable to me than the idea of fiddling with 81 meshes in proportional editing mode.
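The ordering idea can be sketched outside Blender in a few lines. This is not the add-on described in this post, just a rough illustration under strong assumptions: the band is made of flat rings stacked along the z axis, every ring has the same number of vertices, and there are no doubled vertices from Edge Split. The names `canonical_order` and `make_band` are hypothetical.

```python
import math
import random


def canonical_order(verts, segments):
    """Return vertex indices ring by ring (lowest ring first), then by angle.

    Assumes each ring has exactly `segments` vertices and that rings do not
    overlap in height.
    """
    by_height = sorted(range(len(verts)), key=lambda i: verts[i][2])
    order = []
    for start in range(0, len(by_height), segments):
        ring = by_height[start:start + segments]
        # Measure angles around the ring's own centre, so size does not matter.
        cx = sum(verts[i][0] for i in ring) / len(ring)
        cy = sum(verts[i][1] for i in ring) / len(ring)
        ring.sort(key=lambda i: math.atan2(verts[i][1] - cy, verts[i][0] - cx))
        order.extend(ring)
    return order


def make_band(radius, heights, segments):
    """Hypothetical test mesh: stacked rings of the given radius."""
    return [
        (radius * math.cos(2 * math.pi * s / segments + 0.1),
         radius * math.sin(2 * math.pi * s / segments + 0.1),
         z)
        for z in heights
        for s in range(segments)
    ]


segments = 8
thin = make_band(1.0, [0.0, 0.5], segments)
fat = make_band(1.6, [0.0, 0.7], segments)

# Scramble the fat band's numbering, as if it had been modelled from scratch.
perm = list(range(len(fat)))
random.Random(0).shuffle(perm)
fat_scrambled = [fat[i] for i in perm]

thin_sorted = [thin[i] for i in canonical_order(thin, segments)]
fat_sorted = [fat_scrambled[i] for i in canonical_order(fat_scrambled, segments)]
fat_reference = [fat[i] for i in canonical_order(fat, segments)]

# The scrambled band ends up numbered exactly like the unscrambled one.
print(fat_sorted == fat_reference)
```

Because the sort key is built from ring rank and angle rather than raw coordinates, bands of different sizes end up numbered the same way. Real meshes with tilted rings or split edges would need a more careful key.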

So… I decided to learn how to script an Add-On for Blender and create the tool I needed myself.

A week and 447 polished lines of code later, I had this satisfying button to press that would fix my problem.

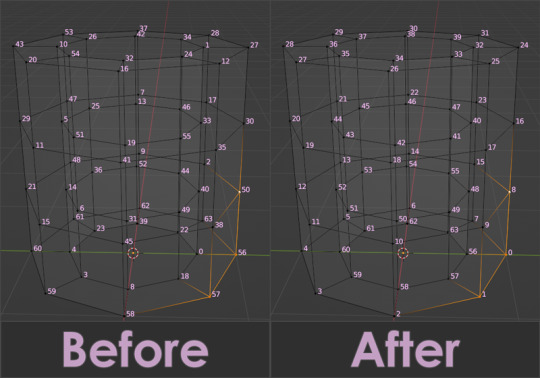

Here are the index numbers before and after pressing that wonderful button.

My morphs are not exploding anymore, and I am so happy I didn't give up on this project or give myself carpal tunnel syndrome with hours of fiddling.

Marik's Egyptian Choker is coming along nicely now. I haven't avoided fiddling entirely, but now it only involves resizing to fix clipping issues during animation.

Unfortunately, I'll have to push the release date to next month. On the bright side, I have developed my first Blender Add-On, and maybe, after a bit more testing, it could be as useful to other creators in the community as it's been to me.

Looking for more info about morphing problems? See this post.

See more of my work: Check out my archive.

Join me on my journey: Follow me on tumblr.

Support my creative life: Buy me a coffee on KoFi.

#exploding#morph#mesh#sims 3#cc#custom content#tutorial#C:O#SallyCompaq122#mod the sims#cc creator#art process#blender#3d#add on#shape keys#sort#vertex#index#blendercommunity

86 notes


Text

Power Surge for Business: Moon in Aries Meets Mercury, Eclipse & Destiny (April 8th)

Get ready for a firestorm of opportunity, because on April 8th, we experience a powerful convergence of astrological forces that can dramatically impact your business and finances. Buckle up, entrepreneurs and go-getters, because I’m here to guide you through this dynamic cosmic dance!

Triple Threat Tuesday: Aries Takes Charge

Three key transits collide on this potent Tuesday, each influencing your business and financial landscape:

Moon Conjunct Mercury in Aries: This dynamic duo ignites clear and concise communication. Negotiations flow effortlessly, and innovative ideas spark like wildfire. Action Item: Schedule important meetings, pitches, or presentations for this day.

Business Implications:

Effective Communication: Use this alignment to express your ideas clearly and persuasively. Collaborate with colleagues, clients, and partners to convey your vision.

Swift Decision-Making: Decisions made during this transit may be impulsive but can yield positive results. Trust your instincts.

Marketing and Sales: Promote your products or services boldly. Engage with customers through direct communication channels.

Financial Impact:

Increased Transactions: Expect brisk financial activity — sales, contracts, and deals.

Risk-Taking: Be cautious of impulsive financial decisions. Balance boldness with practicality.

Moon in Aries Conjunct Mercury in Aries:

Effective Communication:

During this alignment, communication becomes assertive and direct. It’s an excellent time for negotiations, pitching ideas, and closing deals. Business leaders should express their vision clearly to clients, partners, and colleagues.

Swift Decision-Making:

Decisions made now may be impulsive but can lead to positive outcomes. Trust your instincts and act swiftly. Avoid overthinking; seize opportunities promptly.

Marketing and Sales:

Promote your products or services boldly. Use direct channels — emails, phone calls, or face-to-face interactions.

Customers respond well to confident communication.

Aries Solar Eclipse: Eclipses signify new beginnings. The fiery energy of Aries makes this a particularly potent time to launch new ventures, rebrand your business, or unveil a revolutionary product.

New Ventures:

Solar eclipses mark powerful beginnings. Use this energy to launch new projects, products, or marketing campaigns. Set intentions for growth and innovation.

Self-Discovery:

Reflect on your business identity. What drives you? What needs healing or transformation? Realign your business goals with your authentic purpose.

Courageous Initiatives:

Aries encourages bold moves. Take calculated risks. Innovate, even if it means stepping out of your comfort zone.

Business Implications:

New Ventures: Launch new projects, products, or marketing campaigns. The eclipse provides a burst of energy.

Self-Discovery: Reflect on your business identity, purpose, and leadership style. What needs healing or transformation?

Courageous Initiatives: Aries encourages bold moves. Take calculated risks.

Financial Impact:

Investments: Consider long-term investments or diversify your portfolio.

Debt Management: Address financial wounds — pay off debts, seek financial advice.

Innovation: Invest in cutting-edge technologies or business models.

Moon Conjunct North Node & Chiron in Aries: The North Node represents your destined path, while Chiron, the “wounded healer,” highlights past challenges. This alignment sheds light on any past financial roadblocks and empowers you to step into your true financial potential. Action Item: Reflect on any past financial hurdles you’ve faced. How can you use those experiences to propel yourself forward?

Moon in Aries Conjunct North Node and Chiron in Aries:

Purpose-Driven Actions: This alignment signifies a karmic turning point. Align your business actions with your soul’s purpose. Seek meaningful work that resonates with your core values.

Healing Leadership:

Address past wounds in your leadership style. Lead with empathy and authenticity. Healing within your organization can positively impact financial outcomes.

Networking and Guidance:

Connect with influential individuals who can guide your path. Collaborate with like-minded businesses for mutual growth.

Business Implications:

Purpose-Driven Actions: Align your business goals with your soul’s purpose. Seek meaningful work.

Healing Leadership: Address past wounds in your leadership style. Lead with empathy.

Networking: Connect with influential individuals who can guide your path.

Financial Impact:

Karmic Financial Shifts: Expect changes in income sources or financial stability.

Self-Worth and Abundance: Heal any scarcity mindset. Value your unique contributions.

Collaborations: Partner with like-minded businesses for mutual growth.

Remember: While the impulsive energy of Aries can be a powerful asset, balance it with careful planning. Do your research before making any major decisions, but don’t let hesitation extinguish your spark. This is a day to seize opportunities, ignite your passion, and take your business to the next level!

#astrology business#business horoscopes#business astrology#astrology updates#astro#astrology facts#astro notes#astrology#astro girlies#astro posts#astrology community#astropost#astrology observations#astro observations#astro community

14 notes


Text

Blockchain Payments: The Game Changer in the Finance Industry

The finance industry has experienced a remarkable transformation over the past few years, with blockchain payments emerging as one of the most groundbreaking innovations. As businesses and individuals increasingly seek secure, transparent, and efficient transaction methods, blockchain technology has positioned itself as a powerful solution that challenges traditional payment systems.

Understanding Blockchain Payments

At its core, blockchain payments utilize decentralized ledger technology (DLT) to facilitate transactions without intermediaries such as banks. Unlike conventional payment systems, which rely on centralized institutions, blockchain operates through a distributed network of nodes that validate and record transactions in an immutable ledger. This decentralized approach ensures greater transparency, security, and efficiency in financial transactions.

Key Benefits of Blockchain Payments

1. Security and Transparency

Blockchain transactions are cryptographically signed and recorded on an append-only ledger, which makes them highly secure and tamper-evident. The decentralized nature of blockchain means that no single entity can quietly alter transaction records, increasing transparency and reducing the risk of fraud.
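The tamper-evidence described above can be illustrated with a small hash chain. This is a simplified sketch using only Python's standard `hashlib` and `json` modules; the function names and the sample payments are invented for illustration, and a real blockchain adds digital signatures, a peer-to-peer network, and a consensus mechanism on top of this idea.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first block


def block_hash(index, prev_hash, payload):
    """SHA-256 over the block's contents, including the previous block's hash."""
    record = json.dumps(
        {"index": index, "prev": prev_hash, "payload": payload}, sort_keys=True
    )
    return hashlib.sha256(record.encode("utf-8")).hexdigest()


def append_block(chain, payload):
    """Add a block that commits to everything recorded before it."""
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    index = len(chain)
    chain.append({
        "index": index,
        "prev": prev_hash,
        "payload": payload,
        "hash": block_hash(index, prev_hash, payload),
    })


def is_valid(chain):
    """Recompute every hash and check each block points at its predecessor."""
    prev_hash = GENESIS
    for block in chain:
        if block["prev"] != prev_hash:
            return False
        expected = block_hash(block["index"], block["prev"], block["payload"])
        if block["hash"] != expected:
            return False
        prev_hash = block["hash"]
    return True


ledger = []
append_block(ledger, {"from": "alice", "to": "bob", "amount": 5})
append_block(ledger, {"from": "bob", "to": "carol", "amount": 2})
print(is_valid(ledger))  # True

ledger[0]["payload"]["amount"] = 500  # tamper with an earlier record
print(is_valid(ledger))  # False
```

Changing any earlier record changes its hash, which no longer matches what the later blocks committed to, so every node that re-checks the chain can detect the edit.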

2. Lower Transaction Costs

Traditional payment methods often involve intermediaries such as banks and payment processors, which charge significant fees for transaction processing. Blockchain payments eliminate the need for intermediaries, resulting in lower transaction costs for businesses and consumers.

3. Faster Cross-Border Transactions

International transactions using traditional banking systems can take days to settle due to multiple intermediaries and regulatory approvals. Blockchain payments, on the other hand, enable near-instant cross-border transactions, enhancing financial inclusivity and reducing delays.

4. Enhanced Accessibility

Blockchain payments provide financial services to individuals and businesses without requiring a traditional bank account. This feature is particularly beneficial for underbanked populations, allowing them to participate in the global economy.

Real-World Applications of Blockchain Payments

E-Commerce and Retail: Merchants are integrating blockchain payment systems to accept cryptocurrencies, offering customers an alternative and secure payment method.

Remittances: Migrant workers can send money to their families without high remittance fees, ensuring more money reaches the recipients.

Supply Chain Management: Blockchain ensures secure and transparent payments between suppliers, manufacturers, and distributors.

Decentralized Finance (DeFi): DeFi platforms leverage blockchain payments for lending, borrowing, and yield farming, providing users with financial services without traditional banks.

How Resmic Is Revolutionizing Blockchain Payments

Resmic is at the forefront of enabling seamless cryptocurrency transactions, empowering businesses to embrace blockchain payments effortlessly. The platform provides a secure and user-friendly payment infrastructure, allowing businesses to accept multiple cryptocurrencies while ensuring compliance with regulatory requirements.

Key Features of Resmic:

Multi-Currency Support: Accepts various cryptocurrencies, enhancing customer flexibility.

Fast Settlements: Near-instant transactions for efficient cash flow management.

Secure Transactions: Robust encryption and decentralized validation for enhanced security.

Seamless Integration: Easy API integration with existing payment systems and e-commerce platforms.

Embracing the Future of Finance

Blockchain payments are reshaping the financial landscape, offering businesses and individuals a more efficient and secure way to transfer value globally. As adoption continues to grow, platforms like Resmic play a crucial role in facilitating this transition. By leveraging blockchain technology, businesses can stay ahead of the curve and unlock new opportunities in the digital economy.

3 notes


Text

youtube

Woody herbs are staples in most productive gardens. Being woody herbs, it’s not much of a surprise that they grow woody as the supple young plants you put into the ground become tough and mature. They can also lose their vigour as they become woody: after a few years, they no longer bounce back from a hard prune quite as well as they once did.

Cuttings are the most common way to propagate plants, both for home gardeners and for large-scale propagation nurseries. Get this technique down, and you can apply it to almost all plants in your garden!

This can be done any time of year, except for the dead of winter. Undertaking it in spring will yield the fastest results.

Step 1: Taking the cutting

- Use sharp, fine-tipped snips to take cuttings. This avoids damaging the plant by crushing or tearing the stem.

- Look for nice healthy tips to harvest from. You don’t want to take any stems or leaves that are sad or diseased. If your plant is diseased or struggling, taking healthy cuttings can be a good way to give it a fresh lease on life.

- If it is a hot day or you are taking lots of cuttings, it is a good idea to keep them fresh by storing the cuttings in a container with a wet towel to keep them hydrated while you work. If the stems dry out, they won’t strike.

- An ideal length for cuttings is about 10-15 cm long, or with around four nodes. Don’t worry about the length too much as you can always trim it back when you get to the planting phase if they are too long.

Step 2: Trim stems & excess foliage

- Bring your cuttings into your workstation or greenhouse. Now you can clean up the foliage and trim back the length.

- A minimum of four nodes is ideal for sage cuttings. The node is the area where leaves and stem meet. Josh can demonstrate how to find and count the nodes. Ensure the base of your cutting is cut underneath the node. This area has a higher concentration of the plant hormone, auxin, which encourages rooting.

- Trim or gently pull off the leaves from the bottom three nodes, leaving just the foliage at the top growing tip. Any extra foliage will make the cutting dry out faster, which is not ideal. If the remaining leaves are quite large, you can cut them in half to reduce the surface area. This will not harm the plant but will reduce water loss.

Step 3: Place in growing medium

- Fill pots with propagation mixture and wet well beforehand. This mix is a bit finer than conventional potting mix; it should be nice and fluffy and hold onto moisture well. Extra perlite mixed in is also a good idea as it allows the developing roots to push through and access air.

- Dip ends of stem in rooting hormone if you have it or would like to, but it is not required. If you do use it, remember that a little goes a long way.

- Stick the stems directly into the pre-prepared pots, up to the bottom of the remaining leaves. You may put several cuttings in the same pot at this early stage.

Step 4: Managing moisture

- Water in well and place the pots in your greenhouse, propagation station, or under a DIY humidity dome such as a plastic container to keep the soil moist. You can take the lid off the humidity dome every few days to allow fresh air in and prevent root rot, but keeping the soil moist during the initial growth phase is crucial. If the cuttings dry out, rooting will cease and the cuttings will die.

Step 5: Separate your plants!

- Rooting can take anywhere from a couple of weeks to 2 months, depending on the season. How do you know if you have been successful and the cuttings have set root? Look for new leaf growth at the top of the plant. You can also give the cuttings a gentle tug; they should hold nice and firm in the soil.

- Once your baby plants have grown a bit and developed a good root mass in their pots, you can separate them out from each other and pot up individually. After they have grown healthy roots in their individual pots, plant them out in the garden.

#gardening australia#solarpunk#Australia#propagation#how to#how to propagate#herbs#cuttings#plant cuttings#Youtube

6 notes


Text

DECam Confirms that Early-Universe Quasar Neighborhoods are Indeed Cluttered

New finding with the expansive Dark Energy Camera offers a clear explanation to quasar ‘urban density’-conundrum

Observations using the Dark Energy Camera (DECam) confirm astronomers’ expectation that early-Universe quasars formed in regions of space densely populated with companion galaxies. DECam’s exceptionally wide field of view and special filters played a crucial role in reaching this conclusion, and the observations reveal why previous studies seeking to characterize the density of early-Universe quasar neighborhoods have yielded conflicting results.

Quasars are the most luminous objects in the Universe and are powered by material accreting onto supermassive black holes at the centers of galaxies. Studies have shown that early-Universe quasars have black holes so massive that they must have been swallowing gas at very high rates, leading most astronomers to believe that these quasars formed in some of the densest environments in the Universe where gas was most available. However, observational measurements seeking to confirm this conclusion have thus far yielded conflicting results. Now, a new study using the Dark Energy Camera (DECam) points the way to both an explanation for these disparate observations and also a logical framework to connect observation with theory.

DECam was fabricated by the Department of Energy and is mounted on the U.S. National Science Foundation Víctor M. Blanco 4-meter Telescope at Cerro Tololo Inter-American Observatory in Chile, a Program of NSF NOIRLab.

The study was led by Trystan Lambert, who completed this work as a PhD student at Diego Portales University’s Institute of Astrophysical Studies in Chile [1] and is now a postdoc at the University of Western Australia node at the International Centre for Radio Astronomy Research (ICRAR). Utilizing DECam’s massive field of view, the team conducted the largest on-sky area search ever around an early-Universe quasar in an effort to measure the density of its environment by counting the number of surrounding companion galaxies.

For their investigation, the team needed a quasar with a well-defined distance. Luckily, quasar VIK J2348-3054 has a known distance, determined by previous observations with the Atacama Large Millimeter/submillimeter Array (ALMA), and DECam’s three-square-degree field of view provided an expansive look at its cosmic neighborhood. Serendipitously, DECam is also equipped with a narrowband filter perfectly matched for detecting its companion galaxies. “This quasar study really was the perfect storm,” says Lambert. “We had a quasar with a well-known distance, and DECam on the Blanco telescope offered the massive field of view and exact filter that we needed.”

DECam’s specialized filter allowed the team to count the number of companion galaxies around the quasar by detecting a very specific type of light they emit, known as Lyman-alpha radiation. Lyman-alpha radiation is a specific energy signature of hydrogen, produced when it is ionized and then recombined during the process of star formation. Lyman-alpha emitters are typically younger, smaller galaxies, and their Lyman-alpha emission can be used as a way to reliably measure their distances. Distance measurements for multiple Lyman-alpha emitters can then be used to construct a 3D map of a quasar’s neighborhood.

After systematically mapping the region of space around quasar VIK J2348-3054, Lambert and his team found 38 companion galaxies in the wider environment around the quasar — out to a distance of 60 million light-years — which is consistent with what is expected for quasars residing in dense regions. However, they were surprised to find that within 15 million light-years of the quasar, there were no companions at all.

This finding illuminates the reality of past studies aimed at classifying early-Universe quasar environments and proposes a possible explanation for why they have turned out conflicting results. No other survey of this kind has used a search area as large as the one provided by DECam, so to smaller-area searches a quasar’s environment can appear deceptively empty.

“DECam’s extremely wide view is necessary for studying quasar neighborhoods thoroughly. You really have to open up to a larger area,” says Lambert. “This suggests a reasonable explanation as to why previous observations are in conflict with one another.”

The team also suggests an explanation for the lack of companion galaxies in the immediate vicinity of the quasar. They postulate that the intensity of the radiation from the quasar may be large enough to affect, or potentially stop, the formation of stars in these galaxies, making them invisible to our observations.

“Some quasars are not quiet neighbors,” says Lambert. “Stars in galaxies form from gas that is cold enough to collapse under its own gravity. Luminous quasars can potentially be so bright as to illuminate this gas in nearby galaxies and heat it up, preventing this collapse.”

Lambert’s team is currently following up with additional observations to obtain spectra and confirm star formation suppression. They also plan to observe other quasars to build a more robust sample size.

“These findings show the value of the National Science Foundation’s productive partnership with the Department of Energy,” says Chris Davis, NSF program director for NSF NOIRLab. “We expect that productivity will be amplified enormously with the upcoming NSF–DOE Vera C. Rubin Observatory, a next-generation facility that will reveal even more about the early Universe and these remarkable objects.”

Notes

[1] This study was made possible through a collaboration between researchers at Diego Portales University and the Max Planck Institute of Astronomy. A portion of this work was funded through a grant by Chile’s National Research and Development Agency (ANID) for collaborations with the Max Planck Institutes.

IMAGE: Observations using the Department of Energy-fabricated Dark Energy Camera (DECam) on the U.S. National Science Foundation Víctor M. Blanco 4-meter Telescope confirm astronomers’ expectation that early-Universe quasars formed in regions of space densely populated with smaller companion galaxies. DECam’s exceptionally wide field of view and special filters played a crucial role in reaching this conclusion, and the observations reveal why previous studies seeking to characterize the density of early-Universe quasar neighborhoods have yielded conflicting results. Credit: NOIRLab/NSF/AURA/M. Garlick/J. da Silva (Spaceengine)/M. Zamani


2 notes


Text

Which Investment Is Best: Commercial or Residential?

Property remains one of the world's most sought-after and reliable investment options. Whether you choose residential or commercial property, picking the right type of asset is instrumental in earning strong returns while keeping risks at bay. This blog takes you through the differences, 2025 market trends, and, most importantly, the factors to look out for before investing.

Why Real Estate Investment Is a Smart Choice

Real estate provides stability and predictable returns, which is why it is such a popular investment choice. Unlike stocks or cryptocurrency, real estate is a tangible asset that tends to gain value over the long term. Property acts as a hedge against inflation, while rental income provides returns for investors in economically uncertain times.

Proven Stability: Real estate resists economic fluctuations more effectively than most asset classes.

Tangible Asset: Offers physical value and utility beyond financial benefits.

Rental Income: Steady monthly cash flow from tenants.

Inflation Hedge: Property values and rents are likely to rise with inflation.

Read also: Is 2025 the Best Year to Invest in Real Estate?

The 2025 Real Estate Market Outlook

The 2025 property market is expected to grow, as residential property will remain in demand owing to urbanization and changing lifestyles. Commercial property, in turn, will change as businesses respond to hybrid work and shifting consumer lifestyles. Remote work patterns, demographic changes, and interest rates will be major drivers this year.

Growth Trends: Residential markets keep growing; commercial properties evolve to accommodate new norms.

Economic Drivers: Interest rates and urbanization drive property prices.

Investor Sentiment: Positive sentiment in emerging hubs like Gurgaon.

Opportunity Zones: Suburban areas and Tier-2 cities are attracting growing interest from investors.

What Are Commercial Properties?

Commercial properties are income-producing real estate used for business purposes. They cover everything from office buildings and retail stores to warehouses and industrial parks. Institutional investors generally dominate this segment because of higher capital requirements, but individual investors are now venturing into commercial real estate for its higher yields and longer tenures.

Definition: Real estate dedicated to business use that produces rental income.

Investor Profile: Mostly institutions, with growing individual investment.

Leasing Model: Long-term contracts with formal rental agreements.

Valuation Basis: Income-based more than market comparables.
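As a rough illustration of what an income-based valuation means, the widely used income capitalization approach divides a property's net operating income by a capitalization rate. The sketch below assumes that approach; the function name and every figure in it are hypothetical and are not market data.

```python
def capitalised_value(annual_rent, annual_operating_costs, cap_rate):
    """Income approach: value = net operating income / capitalisation rate."""
    if cap_rate <= 0:
        raise ValueError("cap_rate must be positive")
    net_operating_income = annual_rent - annual_operating_costs
    return net_operating_income / cap_rate


# Hypothetical office unit: all figures invented for illustration only.
value = capitalised_value(
    annual_rent=1_200_000,
    annual_operating_costs=200_000,
    cap_rate=0.08,
)
print(round(value))  # 12500000
```

Under this approach, a change in rent feeds straight into the estimated value, which is why commercial prices track rental income more closely than comparable sales.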

What Are Residential Properties?

Residential properties include living accommodations such as apartments, single-family homes, and condominiums. These tend to be less expensive and within reach of first-time buyers or small landlords. They are easier to finance, and the steady demand for housing ensures consistent occupancy and returns.

Definition: Residential housing units intended for living purposes.

Common Investors: Small landlords, long-term holders, and beginners.

Financing Options: Lower down payments, easier loans, and government incentives.

Demand Driver: Ongoing housing demand keeps rental markets alive.

Residential Property Investment: Pros & Cons

Residential property is typically the entry point for new investors because it requires less capital and is more easily financed. Such property enjoys good occupancy rates, especially in urban locations. However, rental returns may be lower, there may be more tenant turnover, and rent control and other regulations can hit returns.

Pros

Less initial investment required than commercial property.

Financing more readily available, along with government schemes.

High demand resulting in stable tenant occupancy.

Easy property management, ideal for new investors.

Cons

Usually lower rental returns than commercial property.

More tenant turnover means more work.

Exposed to rent controls and other government restrictions.

Read also: Ready-to-Move Residential Projects in Gurgaon

Commercial Property Investment: Pros & Cons

Commercial properties offer higher rental income and stable revenue through longer tenancy terms with businesses. The tenants are more professional and less of a hassle, causing fewer maintenance issues. However, commercial investments involve high capital outlay, carry higher risks of vacancy for longer periods, and demand more sophisticated management.

Pros

Potential for much higher rental income.

Long-term tenancies reduce turnover and provide stable income.

Professional tenants with fewer disputes.

Property value directly linked to rental income.

Cons

Requires a much larger initial investment.

Potential for extended vacancy periods.

More sophisticated property management requirements.

Sensitive to economic recession and market change.

8. How to Choose the Best Option for Your Investment Goals

Deciding between residential and commercial investment depends on your own risk appetite, availability of capital, and time horizon. Take local market conditions into account, especially in emerging areas like Gurgaon, and determine whether you prefer to be an active or a passive investor. A diversified portfolio can also hold both property types together to balance things out.

Carefully evaluate your risk appetite before making a decision.

Align the size of the investment with your budget and financing options.

Align the property type with your investment time frame.

Look into location-specific factors such as infrastructure and demand.

Decide your level of involvement: active management or passive ownership.

Read also: How Economic Trends Affect the Real Estate Market

9. Conclusion

Both residential and commercial property investments have their own pros and cons. Understanding the differences is the key to formulating an investment plan that suits your financial objectives and resources. With experienced guidance and detailed market analysis, you can make informed decisions that not only fit your requirements but also create wealth over time. Search properties to find the right property for your objective.

About PropertyDekho247 and How It Can Help You

At PropertyDekho247, we know how difficult it can be to decide on the right property investment. Whether you're considering commercial or residential property, our website offers expert opinion, in-depth listings, and useful resources to support you at every step.

From investment advice to market trends, PropertyDekho247 is your trusted platform for making informed property choices that meet your financial goals. Find the top opportunities and stay ahead with the latest information to guide your investment.

Start your property investment journey with confidence: visit PropertyDekho247 today!

FAQs: Commercial vs Residential Property Investment

Q1: What is the main difference between commercial and residential property?

A: Commercial properties are used for businesses such as offices or shops, while residential properties are intended for people to live in, such as houses or apartments.

Q2: Which investment is more profitable?

A: Commercial properties often provide higher returns but come with more risks. Residential properties typically provide more stable, moderate returns and consistent demand.

Q3: What should I consider before investing?

A: Consider your budget, risk tolerance, investment goals, market demand, and maintenance costs before choosing commercial or residential property.

Q4: Can I invest in both types?

A: Yes, investing in both commercial and residential properties can diversify your portfolio and help balance risks and returns effectively.

Q5: How can PropertyDekho247 help?

A: PropertyDekho247 offers detailed listings, market insights, and useful resources to help you make informed property investment decisions.


#home#investing#buyproperty#commercial property#property listing in gurgaon#propertyforsale#realestate#sellproperty#sellpropertyingurgaon#commericial real estate#residential real estate#investment#property investment in dubai#property investment australia#real estate investment#property investment company#real estate investing#property management

0 notes

Text

Greedy Algorithm

A greedy algorithm is a problem-solving approach that makes the locally optimal choice at each step, with the hope that these choices will lead to a globally optimal solution. Unlike brute-force or dynamic programming methods, greedy algorithms do not explore all possible options but instead make decisions based on the best immediate choice. This approach is efficient but may not always yield the optimal result, depending on the problem's structure.

How Greedy Algorithms Work

Step-by-Step Decision-Making:

At each step, the algorithm selects the best possible option based on the current state of the problem.

This decision is made without considering future consequences, which can sometimes lead to suboptimal results.

No Backtracking:

Once a choice is made, the algorithm does not revisit earlier decisions. This makes greedy algorithms efficient but potentially limited in scope.

Local vs. Global Optimality:

A greedy algorithm relies on the greedy choice property: the locally optimal choice at each step leads to a globally optimal solution.

This is not always guaranteed, but when it holds, the algorithm is effective.

Key Characteristics of Problems Solved by Greedy Algorithms

A problem can be solved using a greedy algorithm if it satisfies the following two properties:

1. Greedy Choice Property

The optimal solution can be constructed incrementally by making the locally optimal choice at each step.

Example: In the activity selection problem, selecting the activity that ends the earliest ensures maximum flexibility for future choices.

2. Optimal Substructure

The optimal solution to the problem can be decomposed into subproblems that also have optimal solutions.

Example: In Huffman coding, the optimal encoding tree is built by repeatedly combining the two nodes with the smallest frequencies.
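
The merge rule in the Huffman example can be sketched in a few lines of Python. This is a minimal illustration, not a production encoder: the function name huffman_codes and the sample frequencies are made up for the example, and symbols are assumed to be strings so that tuples can stand for internal tree nodes.

```python
import heapq
from itertools import count


def huffman_codes(freqs):
    """Return a {symbol: bitstring} map built by the greedy merge rule."""
    tie = count()  # tie-breaker so the heap never has to compare tree nodes
    # Each heap entry is (frequency, tie_breaker, tree). A tree is either a
    # symbol (leaf) or a (left, right) tuple (internal node).
    heap = [(f, next(tie), sym) for sym, f in freqs.items()]
    heapq.heapify(heap)

    if not heap:
        return {}
    if len(heap) == 1:  # a lone symbol still needs a one-bit code
        return {heap[0][2]: "0"}

    # Greedy step: always merge the two least frequent nodes.
    while len(heap) > 1:
        f1, _, left = heapq.heappop(heap)
        f2, _, right = heapq.heappop(heap)
        heapq.heappush(heap, (f1 + f2, next(tie), (left, right)))

    codes = {}

    def walk(node, prefix):
        if isinstance(node, tuple):
            walk(node[0], prefix + "0")
            walk(node[1], prefix + "1")
        else:
            codes[node] = prefix

    walk(heap[0][2], "")
    return codes


# Hypothetical symbol frequencies, chosen only for illustration.
sample = {"a": 45, "b": 13, "c": 12, "d": 16, "e": 9, "f": 5}
codes = huffman_codes(sample)
total_bits = sum(sample[s] * len(codes[s]) for s in sample)
print(codes, total_bits)
```

Each merge solves a smaller instance of the same problem (one fewer node), which is where optimal substructure shows up.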

How to Identify a Problem for a Greedy Algorithm

To determine if a problem is suitable for a greedy algorithm, ask:

Can the problem be broken into steps where each step makes a locally optimal choice?

Does the choice at each step affect the remaining problem in a predictable way?

Is the problem structured such that the greedy choice leads to the global solution?

Examples of Problems Solved by Greedy Algorithms:

Activity Selection Problem:

Goal: Select the maximum number of non-overlapping activities.

Greedy Strategy: Always pick the activity that ends earliest.

Huffman Coding:

Goal: Compress data with minimum total encoded length.

Greedy Strategy: Combine the two nodes with the smallest frequencies.

Dijkstra’s Algorithm:

Goal: Find the shortest paths from a source node in a graph with non-negative edge weights.

Greedy Strategy: Always select the node with the smallest tentative distance.

Prim’s/Kruskal’s Algorithm:

Goal: Find the minimum spanning tree (MST) of a graph.

Greedy Strategy: Repeatedly add the cheapest edge that does not create a cycle (Kruskal) or that connects a new node to the tree (Prim).

Job Scheduling:

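Goal: Schedule jobs on one machine to minimize the maximum lateness.

Greedy Strategy: Run jobs in order of earliest deadline first. (Sorting by shortest processing time instead minimizes the total completion time, a different objective.)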
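
As one concrete case from the list above, here is a short Python sketch of the activity selection strategy. It assumes each activity is a (start, end) pair and that an activity may begin exactly when the previous one ends. The function name and the sample data are illustrative only.

```python
def select_activities(activities):
    """Pick a largest set of non-overlapping (start, end) activities."""
    chosen = []
    last_end = float("-inf")
    # Greedy choice: consider activities in order of earliest end time.
    for start, end in sorted(activities, key=lambda a: a[1]):
        if start >= last_end:  # compatible with everything chosen so far
            chosen.append((start, end))
            last_end = end
    return chosen


# Hypothetical schedule used only to exercise the function.
activities = [(1, 4), (3, 5), (0, 6), (5, 7), (3, 9), (5, 9),
              (6, 10), (8, 11), (8, 12), (2, 14), (12, 16)]
print(select_activities(activities))
```

The sort dominates the running time, so the whole procedure is O(n log n) for n activities.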

Steps to Apply a Greedy Algorithm

Define the Problem:

Identify the objective and constraints (e.g., maximize profit, minimize cost, etc.).

Identify the Greedy Choice:

Determine the rule for making the locally optimal choice (e.g., "always pick the smallest element").

Implement the Greedy Strategy:

Use a loop or recursive function to apply the greedy choice step-by-step.

Verify the Solution:

Check if the greedy choice leads to the global optimal solution (using examples or proofs).
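
The four steps can be walked through on a small scheduling problem. The sketch below assumes jobs are (duration, deadline) pairs run back to back on one machine, uses earliest-deadline-first as the greedy choice, and verifies the result against an exhaustive search. All names and the sample jobs are hypothetical.

```python
from itertools import permutations


def max_lateness(order):
    """Step 1 (objective): maximum lateness of jobs run in this order."""
    time, worst = 0, 0
    for duration, deadline in order:
        time += duration
        worst = max(worst, time - deadline)
    return worst


def schedule_edf(jobs):
    """Steps 2 and 3: greedy choice is the earliest deadline, applied by sorting."""
    return sorted(jobs, key=lambda job: job[1])


def brute_force_best(jobs):
    """Step 4 (verify): try every order; only feasible for tiny inputs."""
    return min(max_lateness(p) for p in permutations(jobs))


# Hypothetical jobs as (duration, deadline).
jobs = [(3, 6), (2, 8), (1, 9), (4, 9), (3, 14), (2, 15)]
greedy = max_lateness(schedule_edf(jobs))
print(greedy, brute_force_best(jobs))
```

Agreement on small inputs is evidence, not proof. A correctness argument (here, an exchange argument) is still needed to trust the greedy rule in general.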

When Greedy Algorithms Fail

Greedy algorithms may fail if:

The locally optimal choice does not lead to the global solution (e.g., the Traveling Salesman Problem).

The problem requires considering future choices (e.g., scheduling with dependencies).
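
The Traveling Salesman case can be made concrete with the nearest-neighbor heuristic, a common greedy rule for that problem. The sketch below compares it with an exhaustive search on a four-city distance matrix invented for this illustration.

```python
from itertools import permutations


def tour_length(dist, tour):
    """Length of a closed tour that returns to its starting city."""
    return sum(dist[a][b] for a, b in zip(tour, tour[1:] + tour[:1]))


def nearest_neighbor_tour(dist, start=0):
    """Greedy heuristic: always travel to the closest unvisited city."""
    tour = [start]
    unvisited = set(range(len(dist))) - {start}
    while unvisited:
        here = tour[-1]
        nxt = min(unvisited, key=lambda city: dist[here][city])
        tour.append(nxt)
        unvisited.remove(nxt)
    return tour


def optimal_tour(dist, start=0):
    """Exhaustive search over all tours; only practical for a few cities."""
    others = [c for c in range(len(dist)) if c != start]
    return min(
        ([start] + list(p) for p in permutations(others)),
        key=lambda tour: tour_length(dist, tour),
    )


# Hypothetical symmetric distances between four cities.
dist = [
    [0, 1, 2, 3],
    [1, 0, 2, 3],
    [2, 2, 0, 10],
    [3, 3, 10, 0],
]
greedy = nearest_neighbor_tour(dist)
best = optimal_tour(dist)
print(tour_length(dist, greedy), tour_length(dist, best))
```

On this matrix the cheap early hops force the greedy tour onto the expensive edge between cities 2 and 3, while the best tour avoids that edge entirely.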

Comparison with Dynamic Programming

Greedy algorithms and dynamic programming both rely on optimal substructure, so how do you tell them apart? A greedy algorithm commits to one choice at each step without looking ahead, while dynamic programming evaluates the alternatives for every subproblem and combines the results. The key is the greedy choice property: when it holds, a locally optimal choice leads to the optimal solution without checking the other possibilities.

Greedy Algorithm:

Approach: Makes one choice at a time and never revisits it.

Efficiency: Usually fast, often O(n) or O(n log n) when the input must be sorted first.

Optimality: May not always be optimal.

Use Case: Problems with a clear greedy choice property.

Dynamic Programming:

Approach: Solves every relevant subproblem and reuses the results.

Efficiency: Usually slower, for example O(n²), depending on the number of subproblems.

Optimality: Always optimal (for problems with optimal substructure).

Use Case: Problems that require exploring all subproblems.
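
A small side-by-side sketch makes the contrast concrete. Coin change is not discussed in this post; it is used here only as a familiar problem where the greedy rule can miss the optimum while dynamic programming finds it. The function names and coin sets are illustrative assumptions.

```python
def greedy_coins(coins, amount):
    """Greedy: repeatedly take the largest coin that still fits."""
    used = 0
    for coin in sorted(coins, reverse=True):
        used += amount // coin
        amount %= coin
    return used if amount == 0 else None


def dp_coins(coins, amount):
    """Dynamic programming: fewest coins for every sub-amount, bottom-up."""
    INF = float("inf")
    best = [0] + [INF] * amount
    for target in range(1, amount + 1):
        for coin in coins:
            if coin <= target:
                best[target] = min(best[target], best[target - coin] + 1)
    return best[amount] if best[amount] != INF else None


# With coins 1, 3, 4 and amount 6, greedy takes 4 + 1 + 1 (3 coins),
# while the table-based search finds 3 + 3 (2 coins).
print(greedy_coins([1, 3, 4], 6), dp_coins([1, 3, 4], 6))
```

The greedy version does one pass over the coin values. The dynamic programming version fills a table with one entry per sub-amount, which costs more time and memory but covers the cases where the greedy choice property does not hold.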

Summary

Greedy algorithms are efficient but not always optimal.

They work best when the greedy choice property and optimal substructure hold.

Use them for problems where local choices directly lead to global solutions (e.g., activity selection, Huffman coding).

Always validate the solution with examples or proofs to ensure correctness.

0 notes

Text

CMP Slurry Monitoring Market, Key Industry Insights, and Forecast to 2032

Global CMP Slurry Monitoring Market size was valued at US$ 183.4 million in 2024 and is projected to reach US$ 326.7 million by 2032, at a CAGR of 8.5% during the forecast period 2025-2032. The U.S. market is estimated at USD 92.4 million in 2024, while China is expected to grow at a faster pace reaching USD 134.6 million by 2032.
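
For readers who want to sanity-check the headline figures, the compound annual growth rate can be recomputed from the two endpoint values. This is a rough sketch: the report does not say whether growth is compounded over seven periods (2025 to 2032) or eight (2024 to 2032), so both are shown as assumptions.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate as a fraction (0.08 means 8%)."""
    return (end_value / start_value) ** (1 / years) - 1


# Endpoint values in US$ million, taken from the paragraph above.
rate_7 = cagr(183.4, 326.7, 7)  # assumption: seven compounding periods
rate_8 = cagr(183.4, 326.7, 8)  # assumption: eight compounding periods
print(f"{rate_7:.1%} over 7 periods, {rate_8:.1%} over 8 periods")
```

The seven-period result comes out close to the stated 8.5%, which suggests the report compounds over the 2025-2032 forecast window.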

CMP (Chemical Mechanical Planarization) slurry monitoring systems are critical quality control solutions used in semiconductor manufacturing to analyze and maintain slurry properties. These systems measure key parameters including Large Particle Counts (LPC), density, viscosity, pH levels, and particle size distribution to ensure optimal polishing performance. The technology plays a vital role in improving wafer yield and reducing defects in advanced node semiconductor production.

Market growth is driven by the semiconductor industry’s transition to smaller process nodes (below 7nm) which requires stricter slurry quality control. The Large Particle Counts (LPC) segment dominates with 38% market share in 2024 due to its direct impact on wafer surface defects. Key players like ENTEGRIS, INC and HORIBA are expanding their monitoring portfolios through acquisitions, with the top five companies holding 62% market share. Recent innovations include real-time monitoring systems that integrate AI for predictive maintenance in fab operations.

Get Full Report : https://semiconductorinsight.com/report/cmp-slurry-monitoring-market/

MARKET DYNAMICS

MARKET DRIVERS

Rising Demand for Advanced Semiconductor Manufacturing to Boost CMP Slurry Monitoring Adoption

The global semiconductor industry is experiencing unprecedented growth, with manufacturing complexity increasing as chip designs shrink below 10nm nodes. This drives the need for precise chemical mechanical planarization (CMP) processes where slurry quality directly impacts yield rates. Leading foundries report that improper slurry monitoring can reduce wafer yields by as much as 15-20% due to defects like scratching or incomplete polishing. As a result, semiconductor manufacturers are increasingly adopting automated CMP slurry monitoring systems to maintain optimal particle size distribution, viscosity, and chemical composition throughout the polishing process.

Technology Miniaturization Trends Accelerating Market Growth

The relentless push toward smaller semiconductor nodes below 7nm is creating new challenges in CMP processes that require real-time slurry monitoring. Modern slurry formulations contain engineered abrasives with particle sizes under 100nm, where even minor deviations in particle concentration can cause catastrophic wafer defects. This technological evolution has led to a threefold increase in demand for advanced monitoring solutions capable of detecting sub-100nm particles across multiple CMP process steps. Leading manufacturers now integrate monitoring systems directly into CMP tools to enable closed-loop control, driving market growth as foundries upgrade equipment for next-generation nodes.

Increasing Focus on Predictive Maintenance Creating New Opportunities

Semiconductor manufacturers are shifting from reactive to predictive maintenance strategies, with CMP slurry monitoring playing a pivotal role. Continuous monitoring of slurry parameters allows for early detection of quality degradation before it impacts production. Analysis shows that predictive maintenance enabled by slurry monitoring can reduce unplanned tool downtime by 30-40% while extending consumable lifecycles. Major players now offer AI-powered analytics platforms that correlate slurry data with tool performance, helping fabs optimize polish rates and reduce material waste. This trend is particularly strong in memory chip production, where CMP processes account for over 25% of total manufacturing costs.

MARKET RESTRAINTS

High Implementation Costs Creating Barriers for Smaller Fabs

While CMP slurry monitoring delivers substantial ROI for high-volume manufacturers, the capital expenditure required creates significant barriers for adoption. A complete monitoring system including sensors, analytics software, and integration with CMP tools can cost $500,000-$1 million per toolset. This represents a major investment for smaller foundries or research facilities that may process fewer wafers. Additionally, the need for specialized installation and calibration further increases total cost of ownership, limiting market penetration among cost-sensitive operations.

Technical Complexity of Multi-Parameter Monitoring Presents Challenges

Modern CMP slurries require monitoring of 10+ critical parameters simultaneously, including particle counts, zeta potential, pH, and chemical concentrations. Integrating sensors for all relevant measurements without disrupting slurry flow or introducing measurement artifacts remains an engineering challenge. Many existing monitoring solutions compromise by measuring only 2-3 key parameters, potentially missing critical quality variations. The industry also faces difficulties in developing non-invasive sensors that can withstand the corrosive chemical environment of CMP slurries over extended periods without drift or contamination.

Lack of Standardization Across Slurry Formulations

The CMP slurry market includes hundreds of proprietary formulations from different suppliers, each requiring customized monitoring approaches. This lack of standardization forces equipment vendors to develop numerous sensor configurations and calibration methods. Foundries using multiple slurry types face additional complexity in maintaining separate monitoring protocols for each chemistry. The situation is particularly challenging for advanced materials like ceria-based slurries where oxidation state monitoring becomes critical but lacks established industry standards.

MARKET OPPORTUNITIES

Emerging Advanced Packaging Technologies Creating New Application Areas

The rapid growth of advanced packaging technologies like 3D IC and chiplets is opening new applications for CMP slurry monitoring. These packaging approaches require planarization of multiple material layers including copper, dielectrics, and through-silicon vias (TSVs). Each material combination demands specialized slurry formulations with tight process control. Market analysis indicates the packaging segment will grow at a 12-15% CAGR as next-generation devices increasingly adopt heterogeneous integration. This creates opportunities for monitoring solutions that can handle the diverse material sets used in advanced packaging workflows.

AI-Powered Analytics Transforming Slurry Process Control