techalertx.com has a simple mission: to deliver important articles about the latest technology, tech news, and gadget reviews, and to cover all operating systems, including Windows and Mac.


machine learning thesis topics 2022

It's that time of year again! If you're looking for some inspiration for your next AI project, check out our list of the top 10 research and thesis topics for AI in 2022. From machine learning to natural language processing, there's plenty to choose from. So get inspired and start planning your next project today!


thesis topics 2022

Check out our list of the top 10 research and thesis topics for AI projects in 2022. With this list, you'll be able to stay ahead of the curve and get started on your project right away. Don't miss out on this opportunity to get a head start on your competition. Visit our website now and get started on your AI project today.


top 10 ai projects

Here are the top 10 research and thesis topics for AI projects in 2022:

1. The impact of AI on society and economy
2. The future of AI in business and governance
3. The ethical implications of AI
4. The potential of AI for solving global issues
5. The impact of AI on human cognition
6. The future of work in an age of AI
7. The role of AI in creating a more equitable world
8. The impact of AI on human relationships
9. The role of AI in education
10. How AI is changing the nature of knowledge


ai thesis title

The Top 10 Research and Thesis Topics for AI Projects in 2022 are now available! With this list, you can easily find the perfect topic for your AI project. Find the full list of topics here: https://techalertx.com/top-10-research-and-thesis-topics-for-ai-projects-in-2022/


top ai projects 2022

Looking for some inspiration for your AI project? Check out our list of the top 10 research and thesis topics for AI in 2022. From chatbots to predictive analytics, there's sure to be something on this list that sparks your interest.


thesis topics in artificial intelligence

With so many businesses and organizations turning to artificial intelligence for help, it's no surprise that there is an increasing demand for AI research and thesis topics. To help you stay ahead of the curve, we've compiled a list of the top 10 research and thesis topics in AI for 2022.

1. The impact of artificial intelligence on business and society
2. The ethical implications of artificial intelligence
3. The future of work in an age of artificial intelligence
4. The role of artificial intelligence in education
5. The use of artificial intelligence in healthcare
6. The potential of artificial intelligence for solving global problems
7. The risks associated with artificial intelligence development
8. The impact of artificial intelligence on human cognitive abilities
9. The role of artificial intelligence in military applications
10. The governance of artificial intelligence


artificial intelligence research topics 2022

We are excited to announce our new artificial intelligence research topics for 2022. With this new tool, you can easily find the latest and most relevant research topics in minutes. Try it out now and see for yourself how easy it is to find the latest and most relevant research topics with our new AI builder.


artificial intelligence thesis topics

Are you looking for some interesting and innovative artificial intelligence thesis topics? Then look no further! We have compiled a list of the top 10 research and thesis topics for AI projects in 2022. So if you are planning to pursue a career in AI, then these topics are definitely worth exploring. Check out our list now and get started on your research journey today!


artificial intelligence thesis ideas

We are excited to announce that our new artificial intelligence thesis ideas tool is now available! With this tool, you can easily generate ideas for your thesis in minutes. Say goodbye to the hassle of coming up with ideas on your own. With our new AI tool, all you need to do is input your desired topic and our tool will do the rest. 🚀 Try it out now and see for yourself how easy and fast it is to generate ideas with our new AI tool.


ai thesis topics

We're excited to announce our new AI thesis topics tool! With this tool, you can easily find the perfect topic for your thesis. Simply enter your desired keywords and our tool will do the rest. Try it out now and see for yourself how easy it is to find the perfect AI thesis topic.


machine learning thesis topics 2022

It's that time of year again! Thesis season is upon us and we know that finding the perfect topic can be daunting. But don't worry, we're here to help. We've put together a list of the most exciting machine learning thesis topics for 2022. Whether you're interested in natural language processing or computer vision, we've got you covered. So take a look and get inspired for your next big project. Good luck!


Machine learning research topics 2022

Are you looking for the latest machine learning research topics for 2022? Look no further than our list of the top 10. With exciting new developments in the field, there's plenty to choose from for your next project. From voice recognition to predictive analytics, these topics are sure to keep you on the cutting edge of machine learning research. So what are you waiting for? Start exploring and see what you can discover!


What Is the Internet of Things (IoT)?

The Internet of Things, often abbreviated as IoT, is a system of interconnected devices and sensors that collect and share data about their surroundings. The term was coined in 1999 by British technology pioneer Kevin Ashton, but the concept has only gained traction in recent years as technology has become more sophisticated. IoT devices can range from simple fitness trackers to complex industrial machines, and they are becoming increasingly prevalent in both our personal lives and our work lives. In this blog post, we will explore what the Internet of Things is, how it works, and some of the potential implications of this growing technology trend.

The Internet of Things, or IoT, refers to the billions of physical devices around the world that are connected to the internet, collecting and sharing data. IoT devices can include everything from smartphones and wearables to home appliances and vehicles.

What is IoT?

IoT devices are powered by sensors and actuators that collect data about their surroundings and user interactions. This data is then transmitted over the internet to be processed and analyzed. The insights gained from this data can be used to improve the performance of IoT devices and systems, as well as enable new services and applications.

IoT is already having a major impact on our lives, with many homes now equipped with smart thermostats, lights, and security systems that can be controlled remotely via a smartphone. In the future, it is expected that even more aspects of our lives will be connected to the internet, with potentially billions of devices interacting with each other to make our lives easier, safer, and more efficient.

Why is the Internet of Things (IoT) so important?

There are a few key reasons why the Internet of Things is so important. First, IoT has the potential to make our lives more efficient and convenient. For example, rather than having to remember to turn off the lights when we leave the house, we could program them to turn off automatically. Or, our fridges could order milk for us when we’re running low. Second, IoT can help us to be more sustainable by reducing energy consumption. For example, if our homes are better insulated and have smart thermostats, we can save money on our energy bills and reduce our carbon footprint. Finally, IoT can improve public safety by giving us real-time data about things like traffic conditions or air quality.

What technologies have made IoT possible?

The Internet of Things (IoT) is a network of physical objects that are connected to the internet. These objects can include devices, vehicles, buildings, and people. The IoT allows these objects to collect and exchange data.

The technologies that have made IoT possible include:

Wireless communication:

This allows devices to communicate with each other without the need for wires or cables.

Sensors:

Sensors can detect things like temperature, light, sound, and movement. They allow devices to gather data about their surroundings.

Cloud computing:

This allows data from IoT devices to be stored and processed on remote servers.
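The three pieces above (a sensor, a communication link, and cloud-side storage) can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the device name, the JSON payload layout, and the `CloudStore` class are all hypothetical, and the "network" is just a function call rather than a real wireless protocol.

```python
import json
from statistics import mean

def read_sensor(device_id, temperature_c):
    """Sensor side: package one reading (values are supplied by the caller here)."""
    return {"device_id": device_id, "temperature_c": temperature_c}

def encode_for_transmission(reading):
    """Communication side: serialize the reading into bytes, as it might travel over a link."""
    return json.dumps(reading).encode("utf-8")

class CloudStore:
    """Cloud side (hypothetical): stores readings per device and answers simple queries."""

    def __init__(self):
        self.readings = {}

    def ingest(self, payload):
        reading = json.loads(payload.decode("utf-8"))
        self.readings.setdefault(reading["device_id"], []).append(reading["temperature_c"])

    def average(self, device_id):
        return mean(self.readings[device_id])

store = CloudStore()
for temperature in (20.0, 22.0):
    store.ingest(encode_for_transmission(read_sensor("thermostat-1", temperature)))
print(store.average("thermostat-1"))
```

A real deployment would replace the direct `ingest` call with a network protocol and add authentication, but the division of work between device and server stays the same.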

What is industrial IoT?

The Internet of Things, or IoT, is a system of interconnected devices and sensors that collect and share data about their surroundings. The industrial IoT is a specialized subset of the IoT that refers to the use of connected devices and sensors in manufacturing and industrial settings.

Industrial IoT applications can be used to monitor and optimize production processes, track equipment and inventory levels, and improve safety and security. By collecting data from all aspects of the production process, the industrial IoT can provide insights that can help manufacturers reduce costs, increase efficiency, and improve quality control.

Despite its potential benefits, there are challenges associated with the industrial IoT. One of the biggest challenges is integrating legacy systems with new IoT technologies. Another challenge is ensuring that data collected by IoT devices is accurate and reliable. Security is also a major concern, as the industrial IoT creates new opportunities for cyberattacks.

Unlock business value with IoT

The internet of things, or IoT, is a system of interconnected devices and sensors that collect and share data about their surroundings. By connecting devices and sensors to the internet, businesses can collect data about their customers, products, and operations. This data can be used to improve business operations and create new products and services.

IoT can help businesses unlock value by providing data that can be used to improve decision making. For example, data from IoT devices can be used to optimize production lines, track inventory levels, or monitor customer behavior. By using data to improve decision making, businesses can increase efficiency and productivity while reducing costs.

IoT can also create new revenue streams for businesses. For example, businesses can use data from IoT devices to develop new products and services or offer premium services to customers. Additionally, businesses can sell the data they collect from IoT devices to third parties such as research firms or marketing companies.

Overall, IoT provides businesses with a way to collect data about their customers, products, and operations. This data can be used to improve business operations, create new products and services, or generate new revenue streams.

What are IoT applications?

IoT applications are vast and varied, but all share the common goal of making our lives easier, safer, and more efficient.

Some popular IoT applications include:

Smart home devices that can be controlled remotely, such as thermostats, security cameras, and lighting

Wearables that track our fitness and health data

Connected cars that can diagnose problems and report them to the driver or mechanic

Smart city infrastructure that can help reduce traffic congestion and pollution

What are some ways IoT applications are deployed?

IoT applications are deployed in a variety of ways, depending on the needs of the organization. For example, an IoT application may be deployed as a standalone system that is not connected to other systems. Alternatively, an IoT application may be deployed as part of a larger system that includes other devices and sensors.

What industries can benefit from IoT?

The Internet of Things (IoT) is a network of physical devices, vehicles, home appliances, and other items embedded with electronics, software, sensors, and connectivity which enables these objects to connect and exchange data.

IoT has the potential to transform a wide range of industries, including healthcare, transportation, manufacturing, agriculture, and energy.

In healthcare, IoT can be used to monitor patients remotely, track medical equipment, and manage hospital resources.

In transportation, IoT can be used to manage traffic congestion and optimize public transit.

In manufacturing, IoT can be used to improve quality control and supply chain management.

In agriculture, IoT can be used to manage irrigation systems and crop yields. And in energy, IoT can be used to optimize power usage and grid management.

How is IoT changing the world? Take a look at connected cars.

IoT is changing the world in many ways, but one of the most significant is its impact on connected cars. Cars are becoming increasingly connected, with many new vehicles now featuring built-in WiFi and cellular connectivity. This allows drivers to stay connected even when they’re on the go, and it opens up a whole new world of possibilities for car manufacturers and developers.

One of the most important ways that IoT is changing cars is by making them more efficient. Connected cars can communicate with each other and with infrastructure like traffic lights to avoid congestion and optimize routes. This not only saves drivers time, but also reduces fuel consumption and emissions.

Another way that IoT is changing cars is by making them safer. Connected cars can share data about their location, speed, and braking patterns with other cars on the road. This information can be used to warn drivers of potential hazards and help them avoid accidents. Additionally, some newer cars are equipped with features like automatic emergency braking that can help reduce the severity of accidents.

Finally, IoT is also changing the car ownership experience. Car-sharing services like Zipcar have become popular in recent years, and they’re only possible because of connected cars. These services allow drivers to rent cars by the hour or day, without having to worry about things like insurance or maintenance. As more people embrace this model of car ownership, it’s likely that we’ll see even more changes in the automotive industry in the years to come.

Make the best business decisions with Oracle IoT

The Internet of Things, or IoT, is a system of interconnected devices and sensors that collect and share data about their surroundings. By connecting devices to the internet, businesses can collect data about how those devices are being used and make better decisions about how to improve their products and services.

Oracle’s IoT platform helps businesses take advantage of the data generated by connected devices. The platform includes a cloud-based data management system that makes it easy to collect, process, and analyze data from IoT devices. Oracle’s IoT solutions also include a set of tools for developing applications that can use data from IoT devices.

With Oracle’s IoT platform, businesses can gain insights into how their products are being used and make better decisions about how to improve them. By collecting data from connected devices, businesses can identify trends and patterns that would be otherwise difficult to detect. Oracle’s IoT platform makes it easy to get started with collecting and analyzing data from connected devices, so businesses can quickly start taking advantage of the benefits of the IoT.


Computer Vision: What it is and why it matters

What is computer vision? In the broadest sense, it is the ability of computers to interpret and understand digital images. This includes everything from identifying objects in an image to understanding the meaning of an image. Computer vision is a rapidly growing field with many potential applications.

It is already being used in a number of industries, including healthcare, automotive, and security. And as the technology continues to develop, the potential uses for computer vision are only going to increase. So, why does computer vision matter? In short, because it has the potential to revolutionize how we interact with the world around us. With computer vision, we can create smarter machines that can help us automate tasks and make better decisions.

We can also use it to enhance our own human abilities, for example by helping us to better understand the emotions of those around us. Ultimately, computer vision matters because it has the potential to change the way we live and work for the better.

Who’s using computer vision?

Computer vision is being used more and more as we move into the digital age. Here are some examples of who’s using it and why it matters:

The medical field is using computer vision to create 3D images of patients for diagnosis and treatment planning.

Law enforcement is using it to automatically identify criminals in security footage.

Manufacturers are using it to inspect products for defects.

Retailers are using it to track inventory levels and customer traffic patterns.

As you can see, computer vision is becoming increasingly important in a wide variety of industries. Its accuracy and efficiency save time and money, while also making our world a safer place.

How does computer vision work?

Computer vision is a field of artificial intelligence that deals with teaching computers to interpret and understand digital images. Just like the human visual system, computer vision systems perceive the world through images. There are a number of different techniques that can be used for computer vision, but they all boil down to three main steps:

1) Pre-processing: This is where the raw data from an image (pixels) is converted into a format that can be processed by a computer. This usually involves cleaning up the image, removing noise, and correcting for any distortions.

2) Feature extraction: This step involves extracting meaningful information from the pre-processed image data. This can be done using a variety of methods, but commonly used techniques include edge detection and template matching.

3) Object recognition: In this final step, the extracted features are used to recognize objects in the image. This step usually requires some form of machine learning, as it’s often not possible to write explicit rules that will reliably identify objects in all cases.
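The three steps can be sketched end to end on a tiny grayscale image stored as a list of lists. This is a toy under stated assumptions, not how production systems are built: the mean filter, the gradient-sum "edge detector", the nearest-neighbour classifier, and the `bar` helper that draws test images are all illustrative choices that the text above does not prescribe.

```python
import math

def preprocess(image):
    """Step 1: scale pixels to [0, 1] and smooth with a 3x3 mean filter."""
    h, w = len(image), len(image[0])
    scaled = [[p / 255.0 for p in row] for row in image]
    smoothed = []
    for y in range(h):
        row = []
        for x in range(w):
            neighbours = [scaled[j][i]
                          for j in range(max(0, y - 1), min(h, y + 2))
                          for i in range(max(0, x - 1), min(w, x + 2))]
            row.append(sum(neighbours) / len(neighbours))
        smoothed.append(row)
    return smoothed

def edge_features(img):
    """Step 2: total gradient strength along x and along y (a crude edge detector)."""
    h, w = len(img), len(img[0])
    gx = gy = 0.0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx += abs(img[y][x + 1] - img[y][x - 1])
            gy += abs(img[y + 1][x] - img[y - 1][x])
    return (gx, gy)

def classify(features, examples):
    """Step 3: pick the label whose example features are closest (nearest neighbour)."""
    return min(examples, key=lambda label: math.dist(features, examples[label]))

def bar(size, index, vertical):
    """Hypothetical helper: a black image with one white bar at the given row or column."""
    return [[255 if (x if vertical else y) == index else 0 for x in range(size)]
            for y in range(size)]

# "Training": one labelled example per class.
examples = {
    "vertical": edge_features(preprocess(bar(5, 2, vertical=True))),
    "horizontal": edge_features(preprocess(bar(5, 2, vertical=False))),
}

# Recognize a bar the classifier has not seen before (shifted by one pixel).
unseen = bar(5, 3, vertical=True)
print(classify(edge_features(preprocess(unseen)), examples))
```

The nearest-neighbour lookup stands in for the machine learning stage: instead of hand-written rules, the label comes from comparing features against labelled examples.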

What is computer vision?

Computer vision is a field of computer science that deals with how computers can be made to gain high-level understanding from digital images or videos. From the perspective of engineering, it seeks to automate tasks that the human visual system can do. Computer vision is concerned with the automatic extraction, analysis, and understanding of useful information from a single image or a sequence of images. It involves the development of computational models of objects and scenes from one or more images for applications such as recognition, detection, and segmentation. The ultimate goal of computer vision is to give computers a high level of understanding about what they see so that they can perform tasks such as object recognition, scene understanding, and image retrieval automatically and efficiently.

Computer vision for animal conservation

Computer vision is a field of computer science that deals with the automatic extraction, analysis, and understanding of useful information from digital images. It’s an important tool for animal conservation because it can be used to monitor and track wildlife, identify poachers, and assess the health of ecosystems. Computer vision has been used in a variety of ways to help conserve animals and their habitats. For example, it can be used to automatically count animals in a given area, which is useful for estimating population size and density.

It can also be used to track individual animals, which is helpful for studying migration patterns and understanding how different species interact with one another. Additionally, computer vision can be used to identify poachers by their tracks or the presence of illegal hunting equipment in an area. And finally, computer vision can be used to assess the health of ecosystems by monitoring changes in vegetation over time. Overall, computer vision is a powerful tool that can be used in many different ways to help protect animals and their habitats.

Seeing results with computer vision

Computer vision is a field of computer science and engineering focused on the creation of intelligent algorithms that enable computers to interpret and understand digital images. The applications of computer vision are vast, ranging from autonomous vehicles and facial recognition to medical image analysis and industrial inspection.

Computer vision algorithms are typically designed to draw inferences from digital images in order to make decisions or take action. For example, a computer vision algorithm might be used to automatically detect pedestrians in an image in order to trigger a warning for the driver of an autonomous car. Or, a computer vision algorithm might be used to analyze a medical image for signs of cancer. The development of effective computer vision algorithms is challenging due to the vast amount of data that must be processed and the many different sources of variability present in digital images. Nevertheless, significant progress has been made in recent years thanks to advances in both hardware and software. As the field of computer vision continues to grow, we can expect even more amazing applications that make our lives easier and safer.


VIANAI Systems Introduces First Human-Centered AI Platform for the Enterprise

In a world where technology is constantly evolving, it can be hard to keep up with the latest trends. However, one trend that has been gaining a lot of traction in recent years is artificial intelligence (AI). AI is being used in a variety of industries, from healthcare to manufacturing. And now, VIANAI Systems has introduced the first human-centered AI platform for the enterprise. With this platform, enterprises will be able to gain insights into their customers, employees, and operations. Additionally, they will be able to automate tasks and improve efficiency.

VIANAI Systems Introduces First Human-Centered AI Platform

In a world first, VIANAI Systems has introduced a human-centered artificial intelligence (AI) platform that promises to revolutionize the way enterprises interact with their customers. The new platform has been designed from the ground up to put people at the center of the AI experience, making it more natural and intuitive to use while also delivering superior results. The key to the success of the platform is its ability to learn and adapt to users’ needs over time. This means that it can constantly evolve to become more effective at meeting customer expectations, providing a truly personalized service that is always one step ahead. The launch of the platform comes at a time when businesses are under increasing pressure to adopt AI technologies in order to remain competitive. However, many have been reluctant to do so due to concerns about the potential impact on jobs and privacy. With its human-centric approach, VIANAI’s platform addresses these concerns head-on, offering a safe and secure way for businesses to harness the power of AI without compromising on ethical values. The platform is already being used by some of the world’s leading organizations, including Microsoft, SAP, and Deloitte. And with its game-changing potential, it is set to transform how enterprises operate in the years ahead.

Dealtale Revenue Science

In today’s business landscape, data is everything. And with the advent of artificial intelligence (AI), organizations are able to glean even more insights from their data than ever before. But what if there was a way to not just get more insights from data, but to also make those insights actionable? That’s where VIANAI Systems comes in. VIANAI is the creator of the first human-centered AI platform for the enterprise. What does that mean? Essentially, the platform is designed to help organizations harness the power of AI in order to drive better business outcomes. The platform does this by taking into account the entire context of an organization’s data, including both structured and unstructured data. This allows for a more comprehensive understanding of an organization’s customers, products, and operations. Armed with this understanding, businesses can then use VIANAI’s platform to automate key processes and make better decisions across the board. In short, VIANAI’s human-centered AI platform is revolutionizing how businesses use data to drive success. If you’re looking for a way to give your organization a competitive edge, VIANAI is definitely worth checking out.


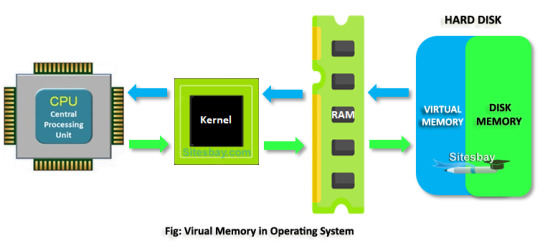

What Is Virtual Memory and How Does It Work?

Most people have heard of virtual memory, but few know what it actually is. Virtual memory is a memory management technique in which the operating system uses disk space (a page file or swap area) to extend the random access memory (RAM) of a machine. Virtual memory is important because it allows a computer to run programs that are too large to fit into RAM.

It also makes it possible for a single program to use more memory than is physically available on the machine. Despite its benefits, virtual memory can also be a source of problems if it is not used correctly. In this blog post, we will explore what virtual memory is, how it works, and some of the potential problems that can arise from its use.

What is Virtual Memory?

Image Source By: Sitesbay


Virtual memory is a computer technology that provides an abstraction of physical memory. Bytes in virtual memory can be mapped to bytes in physical memory, allowing the computer to view and address more memory than is physically present in the system. This extra capacity can be used for temporary storage, or for increasing the amount of data that can be kept in active use. Virtual memory provides several benefits:

• It allows more efficient use of limited physical memory resources.
• It permits separation of user processes from system processes.
• It supports demand paging, which can make better use of slower secondary storage devices such as hard disks.
• It allows multiple processes to share the same physical pages of memory, without the need for each process to have its own private copy of those pages.

How does Virtual Memory Work?

Virtual memory gives an application program the ability to address more memory than is physically available in the computer. It allows a program to run in a larger address space than the installed RAM could hold on its own. The operating system sets up and maintains a page table for each process. The page table is a data structure that maps virtual addresses to physical addresses.

Because consulting the page table on every access would be slow, the processor keeps recently used mappings in a small cache called the Translation Look-aside Buffer (TLB). When an instruction tries to access memory, the processor first checks the TLB for the physical address corresponding to the virtual address. If the mapping is not in the TLB (a TLB miss), the page table is consulted instead. If the page table shows that the page is not currently in physical memory, the access causes a page fault. The operating system handles the page fault by loading the appropriate page from disk into memory and updating the page table. Once the page is loaded, the TLB is updated with the new mapping and execution continues.

Because accessing main memory can be slow, many processors also have smaller, faster caches that sit between main memory and the CPU. These caches store frequently accessed data from main memory so that the CPU can reach it quickly without going to main memory every time.
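The lookup sequence described above (TLB first, then the page table, then a page fault) can be modelled with a small simulation. This is a sketch under stated assumptions: the `ToyMMU` class, the 4096-byte page size, the least-recently-used TLB policy, and the first-in-first-out page eviction are all illustrative choices, and real hardware performs these steps very differently.

```python
from collections import OrderedDict

PAGE_SIZE = 4096  # assumed page size in bytes

class ToyMMU:
    """Toy model of address translation for a single process."""

    def __init__(self, tlb_entries=2, frames=2):
        self.tlb = OrderedDict()         # virtual page -> frame, least recently used first
        self.page_table = OrderedDict()  # resident pages only, oldest loaded first
        self.free_frames = list(range(frames))
        self.tlb_entries = tlb_entries
        self.stats = {"tlb_hit": 0, "tlb_miss": 0, "page_fault": 0}

    def translate(self, virtual_address):
        page, offset = divmod(virtual_address, PAGE_SIZE)
        if page in self.tlb:                  # fast path: mapping is cached
            self.stats["tlb_hit"] += 1
            self.tlb.move_to_end(page)
            return self.tlb[page] * PAGE_SIZE + offset
        self.stats["tlb_miss"] += 1
        if page not in self.page_table:       # page is not in physical memory
            self.stats["page_fault"] += 1
            self._load_page(page)
        frame = self.page_table[page]
        if len(self.tlb) >= self.tlb_entries:
            self.tlb.popitem(last=False)      # drop the least recently used mapping
        self.tlb[page] = frame
        return frame * PAGE_SIZE + offset

    def _load_page(self, page):
        """Stand-in for reading a page from disk; evicts the oldest page if memory is full."""
        if not self.free_frames:
            victim, frame = self.page_table.popitem(last=False)
            self.tlb.pop(victim, None)        # a stale mapping must not stay cached
            self.free_frames.append(frame)
        self.page_table[page] = self.free_frames.pop(0)

mmu = ToyMMU()
for address in (0, 4100, 8, 8192):
    print(address, "->", mmu.translate(address))
print(mmu.stats)
```

With only two frames, the access to address 8192 forces the oldest page out of memory, which is the situation in which a real system pays the cost of going to disk.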

What are the benefits of Virtual Memory?

Virtual memory is a memory management technique implemented jointly by hardware and the operating system. It is not faster than main memory, because data that has been moved to disk takes much longer to reach, but it offers several benefits, including:

1. Increased capacity: Programs can address more memory than is physically installed, because pages that are not in active use can be kept on disk.

2. More efficient use of RAM: Only the pages a program is actively using need to stay in physical memory, which leaves room for more programs to run at once.

3. Reduced cost: Disk space is cheaper per byte than RAM, so a system can handle larger workloads without adding memory, at the price of slower access to data that has been paged out.

4. Improved reliability: Each process gets its own address space, so a faulty program cannot overwrite memory that belongs to another process or to the operating system.

What are the drawbacks of Virtual Memory?

Virtual memory is often slower than physical memory alone, because address translation adds overhead and any page that has been moved to disk must be read back before it can be used. This can cause delays when accessing data or running programs. Another drawback of virtual memory is that it does not make data any safer. If there are power outages or other problems with the computer system, unsaved data in virtual memory can be lost. Finally, virtual memory can be a security risk. If the computer system is not properly configured, unauthorized users may be able to read sensitive data that was written to the paging file.

How to optimize your use of Virtual Memory

Virtual memory is a feature of an operating system that allows a computer to compensate for physical memory limitations by temporarily transferring data from random access memory (RAM) to disk storage. This allows more programs to run simultaneously without crashing. If your computer is running slowly or crashing frequently, it may be due to insufficient virtual memory. You can optimize your use of virtual memory by increasing the size of your virtual memory paging file or by changing the location of your paging file. You can also try disabling certain features and programs that are resource-intensive. If you’re not sure how to change your virtual memory settings, contact your computer’s manufacturer or authorized service provider for assistance.

Conclusion

Virtual memory is a great way to increase the amount of available memory on your computer. By using virtual memory, you can run more programs and store more data. However, there are some drawbacks to using virtual memory, such as the potential for decreased performance and increased wear and tear on your hard drive. If you’re considering using virtual memory, be sure to weigh the pros and cons carefully to determine if it’s right for you.

#technology#tech#memory#hardware#software#technews#ram#computer#computerscience#operatingsystems#os#robotics#news#gadgets#tech news#5g launch#futurism#artificial intelligence#mobile#money

1 note

Text

Is Quantum Encryption the Future of Secure Communication?

Is quantum encryption the future of secure communication? After all, haven’t we been reasonably secure with standard encryption for the last couple of decades? For most of us, using email or shopping online means entering our personal information into a computer system and clicking “send”.

But with recent cyber-attacks and privacy breaches making headlines, how can we be sure our information is secure? It looks like the answer may be quantum cryptography. It’s not quite as simple as that though; read on to find out more about this groundbreaking technology.

Table of Contents

What is quantum encryption?

How does Quantum Encryption work?

The benefits of Quantum Encryption

Limitations of Quantum Encryption

Should we be using Quantum Encryption?

Final Words

What is quantum encryption?

Quantum encryption is a method of ensuring secure communication between two parties. In practice, the term usually refers to quantum key distribution (QKD): the laws of quantum physics are used to share a secret key, and that key is then used to encrypt the message.

Quantum encryption systems are set up between two parties and typically use optical fiber networks to transmit the key. Measuring a quantum state disturbs it, so if someone intercepts the transmission, the two parties can detect the intrusion and discard the key before any message is encrypted with it.

Also Read | What is Quantum Computing? and How Does It Work?

How does Quantum Encryption work?

When two parties decide to communicate with quantum encryption, they first need to agree on a key. In BB84, the best-known protocol, the sender transmits the key material as a series of photons, which are particles of light. Each photon is prepared in a randomly chosen basis, for example a polarization orientation.

When these photons reach their destination, the receiver measures each one in a basis that is also chosen at random. The two parties then compare, over an ordinary channel, which bases they used, and keep only the bits where the bases matched. These bits form a random shared key, which is then used to encrypt the message.

The security of this key rests on the laws of quantum physics rather than on the difficulty of a mathematical problem, because an eavesdropper cannot measure the photons without introducing detectable errors. This way, the receiver can read the message without anyone else being able to decrypt it.
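The exchange of photons can be sketched in a few lines of code. The example below is a simplified simulation, not real quantum hardware. It assumes the BB84 protocol, in which the sender encodes random bits in randomly chosen bases and the receiver measures in randomly chosen bases. The names `random_bits` and `bb84_sift` are invented for this illustration.

```python
import secrets

def random_bits(n):
    """Return a list of n random bits."""
    return [secrets.randbits(1) for _ in range(n)]

def bb84_sift(n_photons):
    """Simulate BB84 without an eavesdropper and return both parties' sifted keys."""
    alice_bits = random_bits(n_photons)
    alice_bases = random_bits(n_photons)  # 0 = rectilinear, 1 = diagonal
    bob_bases = random_bits(n_photons)

    bob_results = []
    for bit, a_basis, b_basis in zip(alice_bits, alice_bases, bob_bases):
        if a_basis == b_basis:
            bob_results.append(bit)  # matching basis: the bit is read correctly
        else:
            bob_results.append(secrets.randbits(1))  # wrong basis: random outcome

    # Both sides publicly compare bases (not bits) and keep matching positions.
    keep = [i for i in range(n_photons) if alice_bases[i] == bob_bases[i]]
    alice_key = [alice_bits[i] for i in keep]
    bob_key = [bob_results[i] for i in keep]
    return alice_key, bob_key

alice_key, bob_key = bb84_sift(64)
print(len(alice_key), "key bits kept; keys match:", alice_key == bob_key)
```

On average about half of the photons survive the basis comparison, which is why many more photons are sent than key bits are needed.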

The benefits of Quantum Encryption

Quantum cryptography is a form of encryption that is, in theory, virtually unbreakable. The system uses quantum physics to generate a random key that can be used to encrypt a message. The sender and the recipient share this key, and the recipient uses it to decrypt the message. It is virtually unbreakable because its security is based on the laws of quantum physics rather than on computational difficulty.

This means that the key it generates is truly random, without any discernible pattern that could be used to decrypt the message. Any attempt to eavesdrop on the key exchange can also be detected, and the security does not weaken as computers, including quantum computers, become more powerful. Practical systems still depend on well-built hardware, so real-world implementations can have weaknesses that the theory does not.
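To make the role of the random key concrete, here is a minimal sketch of how such a key could encrypt and decrypt a message. It assumes the key is used as a one-time pad (a simple XOR of message and key), which the article does not specify. The key here comes from the operating system’s random generator as a stand-in for a quantum-distributed key, and `xor_bytes` is a name chosen for the example.

```python
import secrets

def xor_bytes(data, key):
    """XOR data with a key of at least the same length (one-time pad)."""
    if len(key) < len(data):
        raise ValueError("key must be at least as long as the data")
    return bytes(d ^ k for d, k in zip(data, key))

message = b"meet at noon"
key = secrets.token_bytes(len(message))  # stand-in for a quantum-distributed key

ciphertext = xor_bytes(message, key)    # sender encrypts
recovered = xor_bytes(ciphertext, key)  # recipient decrypts with the same key
print(recovered)  # b'meet at noon'
```

The same operation encrypts and decrypts, so both sides need nothing more than the shared key.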

Limitations of Quantum Encryption

Even though quantum encryption is a strong way of ensuring that information sent via computer or over the internet is secure, it has a few limitations. First off, it requires specialized hardware and, in most deployments, a dedicated fiber optic connection, meaning that it doesn’t work over ordinary cellular networks. The distance a single link can cover is also limited, because photons are lost along the fiber.

It also requires the two parties to have a link and an authenticated channel set up in advance. This means that you can’t just use quantum encryption to protect your emails to your mother. However, you could use it for correspondence with business partners if both sides have installed such a link. Finally, the key has a very short shelf life. For the strongest security, once a key has been used to encrypt a message, it can’t be used again. This means that you have to create a new key every time you want to send a message.
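The rule against reusing a key can be shown with a short example. The sketch assumes the key is applied as a one-time pad (XOR), which is an assumption for illustration. The two messages are invented, and `xor_bytes` is a helper name chosen for the example.

```python
import secrets

def xor_bytes(a, b):
    """XOR two byte strings of equal length."""
    return bytes(x ^ y for x, y in zip(a, b))

m1 = b"pay alice 100"
m2 = b"pay bob 90000"
key = secrets.token_bytes(len(m1))

c1 = xor_bytes(m1, key)  # first message, encrypted
c2 = xor_bytes(m2, key)  # second message, encrypted with the SAME key

# An eavesdropper who XORs the two ciphertexts cancels the key entirely.
leak = xor_bytes(c1, c2)
print(leak == xor_bytes(m1, m2))  # True
```

The eavesdropper never learns the key, yet still obtains the XOR of the two plaintexts, which is often enough to reconstruct both. A fresh key for every message avoids this.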

Should we be using Quantum Encryption?

The question of whether we should use quantum encryption or not boils down to one thing: trust. Establishing trust is one of the main reasons for using encryption. Quantum encryption uses physics to provide a level of security that does not depend on how much computing power an attacker has.

If two parties trust each other, they can use quantum encryption to exchange messages that can’t be decrypted by a third party. Quantum encryption also lets them check the channel itself: by comparing a sample of their key bits, they can verify whether anyone tried to intercept the transmission. It cannot, however, tell either party whether the person at the other end is trustworthy, so the two sides still need a way to authenticate each other.
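How an intercepted transmission gives itself away can be illustrated with a small simulation. The sketch below assumes the BB84 protocol and a simple intercept-and-resend attack, in which the eavesdropper measures every photon in a random basis and sends on what she measured. It uses a seeded pseudo-random generator so runs are repeatable, and the function name is invented for the example.

```python
import random

def error_rate_with_eavesdropper(n_photons, seed=0):
    """Estimate the error rate in the sifted key under intercept-and-resend."""
    rng = random.Random(seed)  # seeded for repeatable runs, not for security
    errors = 0
    sifted = 0
    for _ in range(n_photons):
        bit = rng.getrandbits(1)
        alice_basis = rng.getrandbits(1)
        eve_basis = rng.getrandbits(1)
        bob_basis = rng.getrandbits(1)

        # Eve measures: correct if she guessed the basis, random otherwise.
        eve_bit = bit if eve_basis == alice_basis else rng.getrandbits(1)
        # Eve resends in her own basis; Bob measures what she sent.
        bob_bit = eve_bit if bob_basis == eve_basis else rng.getrandbits(1)

        # Only positions where sender and receiver used the same basis are kept.
        if bob_basis == alice_basis:
            sifted += 1
            errors += bob_bit != bit
    return errors / sifted if sifted else 0.0

print(round(error_rate_with_eavesdropper(20000), 3))
```

Without an eavesdropper the sifted keys would agree, while this attack leaves errors in roughly a quarter of the kept bits. Comparing a sample of the key is therefore enough to reveal the intrusion.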

Final Words

In the world of business and communication, trust is everything. If you can’t trust the person you’re communicating with, there’s little point in exchanging information with them at all. Quantum encryption offers, in theory, one of the highest levels of security available today.

It is virtually unbreakable in principle, and it can be used to verify that a key exchange has not been intercepted. Quantum encryption differs from other types of encryption in how the key is shared: instead of relying on a mathematical problem that is hard to solve, it uses photons to carry the key, so that eavesdropping can be detected. This makes quantum encryption a promising way to protect sensitive communications, although the limitations above mean it is not yet a practical option for everyday online use.

Read More:

Machine Learning: What It Is and How It Works

What Is Artificial Intelligence? A Guide to Understanding AI

#email#communication#future#onlineshopping#quantumphysics#quantummachinelearning#quantumcomputing#quantumtechnology#quantum#quantummechanics#quantumtechnologies#tech#technology#techcareer#technews#techies#internet#network#computer#computerscience

0 notes