#devops monitoring tools

Explore the most effective DevOps Monitoring Tools to boost operational efficiency and ensure continuous delivery. From performance tracking to real-time alerts, these tools streamline your DevOps lifecycle. Discover how Impressico Business Solutions empowers businesses with robust monitoring solutions tailored to enhance scalability, reliability, and faster deployments.

Dive into the heart of DevOps automation tools with Impressico Business Solutions. In this episode, we unpack the top tools—from Jenkins and CircleCI to Packer and Terraform—revealing how they streamline development, enforce consistency, and boost collaboration. Tune in for expert insights, practical tips, and real-world success stories to power your pipeline.

#DevOps Automation Tools#Major DevOps Tools#DevOps Monitoring Tools#Configuration Management Tools#DevOps Automation

Dive into the world of DevOps monitoring with our ultimate guide to the best open-source tools! Discover the power of seamless automation, real-time insights, and enhanced collaboration in your software development journey. Uncover the top-notch monitoring solutions that streamline operations, boost efficiency, and ensure your systems run at their peak performance. From tracking metrics to proactive issue resolution, empower your team with the right tools for unparalleled success. Get ready to revolutionize your DevOps game!

Struggling with Microservices Monitoring?

Microservices architectures bring agility but also complexity. From monitoring individual microservices applications to tackling real-time monitoring challenges, the right APM tool makes all the difference.

🔍 Discover how Application Performance Monitoring (APM) boosts microservices observability, performance, and reliability.

✅ Ensure seamless APM for microservices
✅ Resolve bottlenecks faster
✅ Achieve end-to-end visibility

📖 Read now: https://www.atatus.com/blog/importance-of-apm-in-microservices

#APM#MicroservicesMonitoring#Observability#DevOps#APMTool#Application Performance monitoring#apm tool#apm for microservices#microservices observability#Microservices architectures#Microservices application Monitoring#Challenges in Microservices Monitoring

0 notes

Text

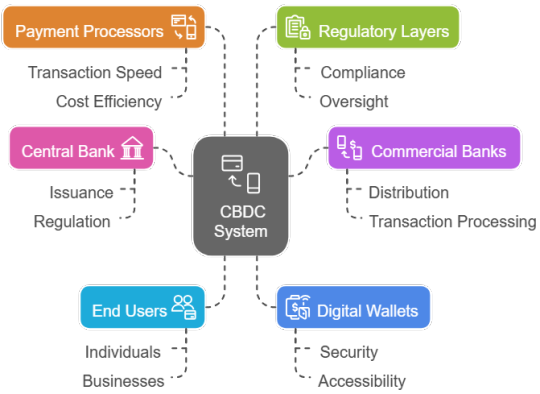

CBDC technology partner India

As CBDCs become a global reality, Prodevans equips banks with everything needed to enter the digital currency ecosystem. We provide full-spectrum CBDC implementation, including compliant architecture, token management, real-time reconciliation, secure wallet enablement, and 24/7 L1/L2 support. Trusted for our role in India’s national rollout, we help institutions go beyond pilots to scalable, production-ready platforms that ensure seamless end-user readiness. Our services ensure central bank compliance while delivering performance, observability, and rapid response to evolving regulatory needs. Whether you’re in the pilot phase or preparing for production rollout, Prodevans supports your CBDC journey at every step.

OUR ADDRESS

403, 4TH FLOOR, SAKET CALLIPOLIS, Rainbow Drive, Sarjapur Road, Varthurhobli East Taluk, Doddakannelli, Bengaluru Karnataka 560035

OUR CONTACTS

+91 97044 56015

#CBDC (Central Bank Digital Currency)#Cloud Computing & Cloud Services#Application Modernization#360° Monitoring (Server#Application#Database & Virtualization Monitoring)#Identity & Access Management (IAM)#Automation (incl. ML‑driven#Ansible#network/cloud automation)#DevOps Tools & Support#Infrastructure Management (IaaS/PaaS/SaaS#orchestration#orchestration tools)

Introducing Spike Sleuth, a real-time Linux disk I/O monitoring tool designed to help you identify and analyze unexpected disk activity spikes. Born from the need to pinpoint elusive IOPS alerts, Spike Sleuth is a lightweight tool that logs the processes, users, and files responsible for high disk usage while adding minimal overhead. Whether you're a developer, system administrator, or solutions architect, it provides the insights needed to maintain optimal system performance. Discover how Spike Sleuth can enhance your system monitoring and help you stay ahead of performance issues. #Linux #Monitoring #OpenSource #SystemAdmin #DevOps
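Spike Sleuth's own code isn't shown here, but the basic idea of attributing disk I/O to processes can be sketched. This minimal Python example is an illustration, not the tool's implementation. It samples the `read_bytes` and `write_bytes` counters that Linux exposes in `/proc/<pid>/io` at two points in time, then ranks processes by the difference. The one-second interval is an arbitrary assumption. Run it as root to see other users' processes.

```python
import os
import time


def parse_io(text: str) -> dict:
    """Parse the 'key: value' lines of a /proc/<pid>/io file into a dict of ints."""
    counters = {}
    for line in text.splitlines():
        key, _, value = line.partition(":")
        if value.strip().isdigit():
            counters[key.strip()] = int(value)
    return counters


def snapshot() -> dict:
    """Return {pid: read_bytes + write_bytes} for every process we may read."""
    totals = {}
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue  # skip non-process entries such as /proc/meminfo
        try:
            with open(f"/proc/{entry}/io") as f:
                counters = parse_io(f.read())
        except OSError:
            continue  # process exited, or we lack permission (run as root to see all)
        totals[int(entry)] = counters.get("read_bytes", 0) + counters.get("write_bytes", 0)
    return totals


def top_deltas(before: dict, after: dict, n: int = 5) -> list:
    """Rank PIDs by storage I/O bytes between two snapshots.

    Processes that appeared after the first snapshot are counted from zero.
    """
    deltas = {pid: total - before.get(pid, 0) for pid, total in after.items()}
    return sorted(deltas.items(), key=lambda kv: kv[1], reverse=True)[:n]


if __name__ == "__main__" and os.path.isdir("/proc"):
    interval = 1.0  # seconds between samples (arbitrary choice)
    first = snapshot()
    time.sleep(interval)
    for pid, nbytes in top_deltas(first, snapshot()):
        print(f"pid={pid} disk_io={nbytes / interval:.0f} B/s")
```

A real tool would also resolve each PID to its command name and user, for example from `/proc/<pid>/comm` and `/proc/<pid>/status`, and log repeatedly instead of sampling once.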

#devops monitoring#disk I/O monitoring tool#iops monitoring#linux bash script#linux disk monitoring#linux performance#linux sysadmin tools#real-time monitoring linux#systemd service#troubleshoot disk spikes

Don't Just Sit There! Start DevOps and Learn Why It's Important

In the rapidly evolving landscape of software development, the term "DevOps" has become a buzzword. But what exactly is DevOps, and why is it gaining such widespread recognition and adoption? In this blog post, we'll unravel the concept of DevOps, exploring its core principles and delving into the reasons why it has become indispensable for modern businesses.

Unlocking the code of success through DevOps training in Bangalore – where collaboration meets innovation, shaping a digital masterpiece. 🚀💻 #DevOpsExcellence #InnovateWithCollaboration #TechRevolution

Understanding DevOps:

DevOps is a culture, a set of practices, and an approach that aims to bridge the gap between software development (Dev) and IT operations (Ops). It emphasizes collaboration, communication, and integration between these traditionally siloed teams throughout the entire software development lifecycle. The primary goal of DevOps is to enable organizations to deliver high-quality software at a faster pace, ensuring continuous integration, delivery, and deployment.

Why DevOps Matters:

1. Increased Efficiency: DevOps streamlines the development process, reducing manual intervention and accelerating the release cycle. This results in quicker delivery of features and updates, ultimately enhancing time-to-market.

2. Higher Quality Software: Continuous integration and automated testing in a DevOps environment contribute to the production of higher-quality software. Early detection of issues allows for timely resolutions, minimizing the presence of bugs and vulnerabilities.

3. Enhanced Collaboration: DevOps breaks down traditional barriers between teams, fostering a culture of shared responsibility. This collaborative environment leads to improved communication, understanding, and a more cohesive workflow.

4. Adaptability to Change: In today's dynamic business landscape, the ability to adapt quickly is paramount. DevOps enables organizations to respond rapidly to changing market demands, ensuring they stay competitive and innovative.

5. Startups and Scale-Ups

Agile Growth: DevOps is particularly beneficial for startups and scale-ups experiencing rapid growth. It ensures that the pace of development aligns with the increasing demands of a growing user base.

Cost-Efficiency: Startups often operate on tight budgets. DevOps helps optimize resources, reduce downtime, and achieve cost efficiency.

6. Enterprise Transformation

Cultural Shift: For large enterprises, adopting DevOps often involves a cultural shift. It encourages collaboration and transparency, breaking down traditional hierarchical barriers.

Risk Mitigation: DevOps practices contribute to risk mitigation by identifying and addressing issues early in the development process, reducing the likelihood of costly errors in production.

Dive into the world of possibilities with DevOps online courses – sculpting your tech future, one virtual lesson at a time. 💻🌐 #TechSculptor #DevOpsSkills #VirtualClassroom

7. Scalability:

As organizations grow, scalability becomes a crucial factor. DevOps practices enable seamless scalability by providing the tools and processes needed to handle increased workloads without sacrificing efficiency or compromising system stability.

DevOps is not just a set of tools; it's a cultural shift that empowers organizations to thrive in the fast-paced world of technology. By promoting collaboration, automation, and continuous improvement, DevOps has emerged as a fundamental approach for businesses seeking to remain agile, efficient, and customer-focused in the digital age. Embracing DevOps isn't just about adopting new practices; it's about cultivating a mindset that values collaboration, innovation, and excellence in every line of code.

#DevOps engineer#DevOps principles#Automation in DevOps#Cloud technologies#Monitoring tools#Infrastructure as Code#DevOps roadmap#IT career#DevOps success.

Top 10 DevOps Containers in 2023

Top 10 DevOps Containers in your Stack #homelab #selfhosted #DevOpsContainerTools #JenkinsContinuousIntegration #GitLabCodeRepository #SecureHarborContainerRegistry #HashicorpVaultSecretsManagement #ArgoCD #SonarQubeCodeQuality #Prometheus #nginxproxy

If you want to learn more about DevOps and building an effective DevOps stack, it helps to know the containerized solutions commonly found in production DevOps stacks. I have been deploying DevOps containers in my home lab, which lets me use infrastructure as code for some really cool projects. Let’s consider the top 10 DevOps containers that serve as individual container building blocks…

#ArgoCD Kubernetes deployment#DevOps container tools#GitLab code repository#Grafana data visualization#Hashicorp Vault secrets management#Jenkins for continuous integration#Prometheus container monitoring#Secure Harbor container registry#SonarQube code quality#Traefik load balancing

Discover the key to seamless operations with the top DevOps monitoring tools for 2024. These tools empower teams to proactively manage performance, troubleshoot issues, and ensure system reliability. Impressico Business Solutions specializes in integrating these tools into your workflow, enhancing efficiency and driving continuous improvement. Explore how its tailored solutions can elevate your DevOps strategy and deliver exceptional results.

#DevOps Monitoring Tools#DevOps Service Providers#DevOps Company#DevOps Consulting Company#DevOps Outsourcing Company

How Python Powers Scalable and Cost-Effective Cloud Solutions

Explore the role of Python in developing scalable and cost-effective cloud solutions. This guide covers Python's advantages in cloud computing, addresses potential challenges, and highlights real-world applications, providing insights into leveraging Python for efficient cloud development.

Introduction

In today's rapidly evolving digital landscape, businesses are increasingly leveraging cloud computing to enhance scalability, optimize costs, and drive innovation. Among the myriad of programming languages available, Python has emerged as a preferred choice for developing robust cloud solutions. Its simplicity, versatility, and extensive library support make it an ideal candidate for cloud-based applications.

In this comprehensive guide, we will delve into how Python empowers scalable and cost-effective cloud solutions, explore its advantages, address potential challenges, and highlight real-world applications.

Why Is Python the Preferred Choice for Cloud Computing?

Python's popularity in cloud computing is driven by several factors, making it the preferred language for developing and managing cloud solutions. Here are some key reasons why Python stands out:

Simplicity and Readability: Python's clean and straightforward syntax allows developers to write and maintain code efficiently, reducing development time and costs.

Extensive Library Support: Python offers a rich set of libraries and frameworks like Django, Flask, and FastAPI for building cloud applications.

Seamless Integration with Cloud Services: Python is well-supported across major cloud platforms like AWS, Azure, and Google Cloud.

Automation and DevOps Friendly: Python powers infrastructure automation through tools such as Ansible (itself written in Python) and Boto3, and it is widely used to script workflows around tools like Terraform.

Strong Community and Enterprise Adoption: Python has a massive global community that continuously improves and innovates cloud-related solutions.

How Does Python Enable Scalable Cloud Solutions?

Scalability is a critical factor in cloud computing, and Python provides multiple ways to achieve it:

1. Automation of Cloud Infrastructure

Python's compatibility with cloud service provider SDKs, such as AWS Boto3, Azure SDK for Python, and Google Cloud Client Library, enables developers to automate the provisioning and management of cloud resources efficiently.
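Here is a minimal sketch that makes the Boto3 point concrete. It finds running EC2 instances carrying a tag and stops them, a common cost-saving task. It assumes AWS credentials are already configured. The `Environment=dev` tag and the region are hypothetical placeholders. The filtering logic is kept separate from the AWS calls so it can be checked without an account.

```python
def running_instance_ids(pages, tag_key, tag_value):
    """Collect IDs of running instances tagged tag_key=tag_value.

    `pages` is an iterable of EC2 describe_instances responses (for example,
    from a paginator), so this function can be tested with plain dicts.
    """
    ids = []
    for page in pages:
        for reservation in page.get("Reservations", []):
            for inst in reservation.get("Instances", []):
                tags = {t["Key"]: t["Value"] for t in inst.get("Tags", [])}
                if inst["State"]["Name"] == "running" and tags.get(tag_key) == tag_value:
                    ids.append(inst["InstanceId"])
    return ids


def stop_tagged_instances(tag_key="Environment", tag_value="dev", region="us-east-1"):
    """Stop matching instances; tag and region defaults are illustrative only."""
    import boto3  # imported lazily so the helper above works without the SDK

    ec2 = boto3.client("ec2", region_name=region)
    pages = ec2.get_paginator("describe_instances").paginate()
    ids = running_instance_ids(pages, tag_key, tag_value)
    if ids:
        ec2.stop_instances(InstanceIds=ids)
    return ids
```

In practice you could push the filtering to AWS by passing `Filters` to `paginate()`. Doing it client-side here simply keeps the example testable offline.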

2. Containerization and Orchestration

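Python applications containerize readily with Docker, and client libraries such as the Docker SDK for Python and the official Kubernetes Python client let teams build, deploy, and manage scalable containerized applications programmatically.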

3. Cloud-Native Development

Frameworks like Flask, Django, and FastAPI support microservices architecture, allowing businesses to develop lightweight, scalable cloud applications.

4. Serverless Computing

Python's support for serverless platforms, including AWS Lambda, Azure Functions, and Google Cloud Functions, allows developers to build applications that automatically scale in response to demand, optimizing resource utilization and cost.
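A Python serverless function can be very small. The following is a minimal sketch of an AWS Lambda handler. It assumes an API Gateway proxy integration, so the event carries `queryStringParameters` and the response needs `statusCode` and `body`. The `name` parameter is purely illustrative.

```python
import json


def lambda_handler(event, context):
    """Minimal AWS Lambda handler for an API Gateway proxy-style event."""
    # queryStringParameters is None when the request has no query string
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Because the handler keeps no state between invocations, the platform can run as many copies in parallel as incoming traffic requires.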

5. AI and Big Data Scalability

Python’s dominance in AI and data science makes it an ideal choice for cloud-based AI/ML services like Amazon SageMaker, Google Cloud Vertex AI, and Azure Machine Learning.

Looking for expert Python developers to build scalable cloud solutions? Hire Python Developers now!

Advantages of Using Python for Cloud Computing

Cost Efficiency: Python’s compatibility with serverless computing and auto-scaling strategies minimizes cloud costs.

Faster Development: Python’s simplicity accelerates cloud application development, reducing time-to-market.

Cross-Platform Compatibility: Python runs seamlessly across different cloud platforms.

Security and Reliability: Python-based security tools help with encryption, authentication, and cloud monitoring.

Strong Community Support: Python developers worldwide contribute to continuous improvements, making it future-proof.

Challenges and Considerations

While Python offers many benefits, there are some challenges to consider:

Performance Limitations: Python's standard implementation (CPython) is interpreted, so CPU-bound code generally runs slower than equivalent code in compiled languages like Java or C++.

Memory Consumption: Python applications might require optimization to handle large-scale cloud workloads efficiently.

Learning Curve for Beginners: Though Python is simple, mastering cloud-specific frameworks requires time and expertise.

Python Libraries and Tools for Cloud Computing

Python’s ecosystem includes powerful libraries and tools tailored for cloud computing, such as:

Boto3: AWS SDK for Python, used for cloud automation.

Google Cloud Client Library: Helps interact with Google Cloud services.

Azure SDK for Python: Enables seamless integration with Microsoft Azure.

Apache Libcloud: Provides a unified interface for multiple cloud providers.

PyCaret: Simplifies machine learning deployment in cloud environments.

Real-World Applications of Python in Cloud Computing

1. Netflix - Scalable Streaming with Python

Netflix extensively uses Python for automation, data analysis, and managing cloud infrastructure, enabling seamless content delivery to millions of users.

2. Spotify - Cloud-Based Music Streaming

Spotify leverages Python for big data processing, recommendation algorithms, and cloud automation, ensuring high availability and scalability.

3. Reddit - Handling Massive Traffic

Reddit uses Python and AWS cloud solutions to manage heavy traffic while optimizing server costs efficiently.

Future of Python in Cloud Computing

The future of Python in cloud computing looks promising with emerging trends such as:

AI-Driven Cloud Automation: Python-powered AI and machine learning will drive intelligent cloud automation.

Edge Computing: Python will play a crucial role in processing data at the edge for IoT and real-time applications.

Hybrid and Multi-Cloud Strategies: Python’s flexibility will enable seamless integration across multiple cloud platforms.

Increased Adoption of Serverless Computing: More enterprises will adopt Python for cost-effective serverless applications.

Conclusion

Python's simplicity, versatility, and robust ecosystem make it a powerful tool for developing scalable and cost-effective cloud solutions. By leveraging Python's capabilities, businesses can enhance their cloud applications' performance, flexibility, and efficiency.

Ready to harness the power of Python for your cloud solutions? Explore our Python Development Services to discover how we can assist you in building scalable and efficient cloud applications.

FAQs

1. Why is Python used in cloud computing?

Python is widely used in cloud computing due to its simplicity, extensive libraries, and seamless integration with cloud platforms like AWS, Google Cloud, and Azure.

2. Is Python good for serverless computing?

Yes! Python works efficiently in serverless environments like AWS Lambda, Azure Functions, and Google Cloud Functions, making it an ideal choice for cost-effective, auto-scaling applications.

3. Which companies use Python for cloud solutions?

Major companies like Netflix, Spotify, Dropbox, and Reddit use Python for cloud automation, AI, and scalable infrastructure management.

4. How does Python help with cloud security?

Python offers robust security libraries such as PyCryptodome and pyOpenSSL, along with the standard-library ssl module (which wraps OpenSSL), enabling encryption and authentication for secure cloud applications.

5. Can Python handle big data in the cloud?

Yes! Python supports big data processing with tools like Apache Spark (via PySpark), pandas, and NumPy, making it suitable for data-driven cloud applications.

#Python development company#Python in Cloud Computing#Hire Python Developers#Python for Multi-Cloud Environments

A Comprehensive Guide to Deploy Azure Kubernetes Service with Azure Pipelines

In the continuously evolving landscape of cloud-native technologies, Azure Kubernetes Service (AKS) offers a powerful orchestration platform for containerized applications. Pair it with Azure Pipelines for consistent CI/CD workflows that accelerate the DevOps process. This guide explains how to deploy to Azure Kubernetes Service with Azure Pipelines and offers tips that help engineers build reliable container deployments. We also discuss how DevOps consulting services can help you automate this process.

Understanding the Foundations

Kubernetes has become the preferred tool for deploying and running containerized apps in fast-paced software development environments. Together with AKS, it scales, monitors, and orchestrates containerized workloads. Before going further, let’s look at the fundamentals.

Azure Kubernetes Service: A managed Kubernetes platform that simplifies container orchestration. Because Azure manages the Kubernetes control plane, AKS removes much of the hassle of cluster management so that developers can focus on building applications instead of infrastructure. By leveraging AKS, organizations can:

Deploy and scale containerized applications on demand.

Implement robust infrastructure management

Reduce operational overhead

Ensure high availability and fault tolerance.

Azure Pipelines: The CI/CD Backbone

Azure Pipelines automates building, testing, and deploying code. Combined with Azure Kubernetes Service, it helps teams build robust deployment pipelines in line with the modern DevOps mindset. Azure Pipelines integrates easily with repositories such as GitHub and Azure Repos and automates application builds and deployments.

Spiral Mantra DevOps Consulting Services

So, if you’re new to DevOps or want to scale your organization’s capabilities, DevOps consulting services from Spiral Mantra can be a game changer. Its skilled professionals help businesses implement CI/CD pipelines and provide guidance on containerization and cloud-native development.

Now let’s move on to creating a deployment pipeline for Azure Kubernetes Service.

Prerequisites you would require

Before initiating the process, ensure you fulfill the prerequisite criteria:

Azure Subscription: To run an AKS cluster, you need an Azure subscription. Create one if you don’t already have one.

Azure CLI: The Azure CLI lets you manage resources such as AKS clusters from the command line.

A Professional Team: You will need to have a professional team with technical knowledge to set up the pipeline. Hire DevOps developers from us if you don’t have one yet.

Kubernetes Cluster: Deploy an AKS cluster with the Azure portal or an ARM template. This is the cluster your pipeline will deploy to.

Docker: Since you’re deploying containers, you need Docker installed locally to build container images and push them to a registry.

Step-by-Step Deployment Process

Step 1: Begin with Creating an AKS Cluster

Start by setting up an AKS cluster with the Azure CLI or the Azure portal. Once the cluster is ready, containerize your application: create a Dockerfile that specifies your application's runtime environment. This step ensures the same code runs consistently across different environments.
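Here is a rough sketch of the cluster-creation step. The Python script assembles the standard Azure CLI commands (`az group create`, `az aks create`, `az aks get-credentials`) and runs them via `subprocess`. The resource group, cluster name, location, and node count are placeholders. By default it does a dry run that only prints the commands. A real run assumes you have already signed in with `az login`.

```python
import shlex
import subprocess


def aks_commands(resource_group: str, cluster: str, location: str, nodes: int = 2) -> list:
    """Build Azure CLI calls that create a resource group and an AKS cluster,
    then merge the cluster's credentials into ~/.kube/config for kubectl."""
    return [
        ["az", "group", "create", "--name", resource_group, "--location", location],
        ["az", "aks", "create", "--resource-group", resource_group, "--name", cluster,
         "--node-count", str(nodes), "--generate-ssh-keys"],
        ["az", "aks", "get-credentials", "--resource-group", resource_group, "--name", cluster],
    ]


def run(commands, dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for cmd in commands:
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)  # requires an authenticated `az login` session


if __name__ == "__main__":
    # Placeholder names; prints the commands without running them.
    run(aks_commands("demo-rg", "demo-aks", "eastus"))
```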

Step 2: Setting Up Your Pipelines

You can set up the pipeline in a new project or in an existing one, as described below.

Create a New Project

Begin by signing in to your Azure DevOps account; from the available screen, select the drop-down icon.

Select Create New Project, or open an existing project instead.

In the final step, add all the required repositories (you can select them either from GitHub or from Azure Repos) containing your application code.

For an Existing Project

From your existing project, go to Pipelines and select Create Pipeline.

From the next available screen, select the repository containing the code of the application.

Choose either a YAML pipeline or the starter pipeline. (Note: YAML pipelines are flexible and are recommended for advanced workflows.)

Then define the pipeline configuration by editing your YAML file in Azure DevOps.

Step 3: Set Up Your Automatic Continuous Deployment (CD)

Next, automate the deployment process to speed up your CI/CD workflows. The easiest and most common approach is to create a Kubernetes manifest file named deployment.yaml. This file defines the major Kubernetes resources, including deployments, pods, and services.

Once the deployment manifest is in place, the pipeline can trigger the Kubernetes deployment automatically whenever code is pushed.
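The deployment.yaml manifest can be generated instead of written by hand. This minimal sketch assumes a hypothetical app name, a container image in Azure Container Registry, and a container port. It emits a Deployment and a LoadBalancer Service wrapped in a Kubernetes `List`. JSON is valid YAML, so the printed output can be saved as deployment.yaml and applied with `kubectl apply -f deployment.yaml`.

```python
import json


def deployment_manifest(app: str, image: str, replicas: int = 2, port: int = 8080) -> dict:
    """Return a Kubernetes List holding a Deployment and a LoadBalancer Service."""
    labels = {"app": app}
    deployment = {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": app, "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},  # must match the pod template labels
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{
                    "name": app,
                    "image": image,
                    "ports": [{"containerPort": port}],
                }]},
            },
        },
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": app},
        "spec": {
            "type": "LoadBalancer",  # on AKS this provisions an Azure load balancer
            "selector": labels,      # routes traffic to pods with these labels
            "ports": [{"port": 80, "targetPort": port}],
        },
    }
    return {"apiVersion": "v1", "kind": "List", "items": [deployment, service]}


if __name__ == "__main__":
    # Hypothetical image name; redirect stdout to deployment.yaml to use it.
    print(json.dumps(deployment_manifest("myapp", "myregistry.azurecr.io/myapp:1.0"), indent=2))
```

In a pipeline, the image tag would typically be set to the build ID so each push deploys a new, traceable image.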

Step 4: Automate the Workflow of CI CD

In this final step, make sure the pipeline runs smoothly every time new code is pushed. With proper CI/CD integration, the workflow continuously builds, tests, and deploys your code, keeping the applications up to date in every AKS environment.

Best Practices for AKS and Azure Pipelines Integration

1. Infrastructure as Code (IaC)

- Utilize Terraform or Azure Resource Manager templates

- Version control infrastructure configurations

- Ensure consistent and reproducible deployments

2. Security Considerations

- Implement container scanning

- Use private container registries

- Regular security patch management

- Network policy configuration

3. Performance Optimization

- Implement horizontal pod autoscaling

- Configure resource quotas

- Use node pool strategies

- Optimize container image sizes
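Horizontal pod autoscaling follows a simple proportional rule documented for the Kubernetes Horizontal Pod Autoscaler. The sketch below reproduces only that core calculation, with its default 10% tolerance. The real controller also accounts for unready pods, missing metrics, and stabilization windows, which are omitted here. The min/max defaults are illustrative.

```python
import math


def desired_replicas(current: int, current_metric: float, target_metric: float,
                     min_replicas: int = 1, max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Core HPA rule: desired = ceil(current * current_metric / target_metric).

    No scaling happens while the ratio stays within the tolerance band, and
    the result is clamped to [min_replicas, max_replicas].
    """
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        return current  # close enough to the target: leave the replica count alone
    desired = math.ceil(current * ratio)
    return max(min_replicas, min(max_replicas, desired))


# Example: 4 pods averaging 90% CPU against a 60% target scale to 6 pods.
print(desired_replicas(4, 90, 60))
```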

Common Challenges and Solutions

Network Complexity

Utilize Azure CNI for advanced networking

Implement network policies

Configure service mesh for complex microservices

Persistent Storage

Use Azure Disk or Files

Configure persistent volume claims

Implement storage classes for dynamic provisioning

Conclusion

Deploying to Azure Kubernetes Service through well-designed pipelines provides a clear, repeatable path to application delivery. By embracing these practices, DevOps consulting companies like Spiral Mantra offer transformative solutions that foster agile and scalable approaches. Our expert DevOps consulting services redefine technological infrastructure by offering comprehensive cloud strategies and Kubernetes containerization with advanced CI/CD integration.

Let’s connect and talk about your cloud migration needs

How-To IT

Topic: Core areas of IT

1. Hardware

• Computers (Desktops, Laptops, Workstations)

• Servers and Data Centers

• Networking Devices (Routers, Switches, Modems)

• Storage Devices (HDDs, SSDs, NAS)

• Peripheral Devices (Printers, Scanners, Monitors)

2. Software

• Operating Systems (Windows, Linux, macOS)

• Application Software (Office Suites, ERP, CRM)

• Development Software (IDEs, Code Libraries, APIs)

• Middleware (Integration Tools)

• Security Software (Antivirus, Firewalls, SIEM)

3. Networking and Telecommunications

• LAN/WAN Infrastructure

• Wireless Networking (Wi-Fi, 5G)

• VPNs (Virtual Private Networks)

• Communication Systems (VoIP, Email Servers)

• Internet Services

4. Data Management

• Databases (SQL, NoSQL)

• Data Warehousing

• Big Data Technologies (Hadoop, Spark)

• Backup and Recovery Systems

• Data Integration Tools

5. Cybersecurity

• Network Security

• Endpoint Protection

• Identity and Access Management (IAM)

• Threat Detection and Incident Response

• Encryption and Data Privacy

6. Software Development

• Front-End Development (UI/UX Design)

• Back-End Development

• DevOps and CI/CD Pipelines

• Mobile App Development

• Cloud-Native Development

7. Cloud Computing

• Infrastructure as a Service (IaaS)

• Platform as a Service (PaaS)

• Software as a Service (SaaS)

• Serverless Computing

• Cloud Storage and Management

8. IT Support and Services

• Help Desk Support

• IT Service Management (ITSM)

• System Administration

• Hardware and Software Troubleshooting

• End-User Training

9. Artificial Intelligence and Machine Learning

• AI Algorithms and Frameworks

• Natural Language Processing (NLP)

• Computer Vision

• Robotics

• Predictive Analytics

10. Business Intelligence and Analytics

• Reporting Tools (Tableau, Power BI)

• Data Visualization

• Business Analytics Platforms

• Predictive Modeling

11. Internet of Things (IoT)

• IoT Devices and Sensors

• IoT Platforms

• Edge Computing

• Smart Systems (Homes, Cities, Vehicles)

12. Enterprise Systems

• Enterprise Resource Planning (ERP)

• Customer Relationship Management (CRM)

• Human Resource Management Systems (HRMS)

• Supply Chain Management Systems

13. IT Governance and Compliance

• ITIL (Information Technology Infrastructure Library)

• COBIT (Control Objectives for Information and Related Technologies)

• ISO/IEC Standards

• Regulatory Compliance (GDPR, HIPAA, SOX)

14. Emerging Technologies

• Blockchain

• Quantum Computing

• Augmented Reality (AR) and Virtual Reality (VR)

• 3D Printing

• Digital Twins

15. IT Project Management

• Agile, Scrum, and Kanban

• Waterfall Methodology

• Resource Allocation

• Risk Management

16. IT Infrastructure

• Data Centers

• Virtualization (VMware, Hyper-V)

• Disaster Recovery Planning

• Load Balancing

17. IT Education and Certifications

• Vendor Certifications (Microsoft, Cisco, AWS)

• Training and Development Programs

• Online Learning Platforms

18. IT Operations and Monitoring

• Performance Monitoring (APM, Network Monitoring)

• IT Asset Management

• Event and Incident Management

19. Software Testing

• Manual Testing: Human testers evaluate software by executing test cases without using automation tools.

• Automated Testing: Use of testing tools (e.g., Selenium, JUnit) to run automated scripts and check software behavior.

• Functional Testing: Validating that the software performs its intended functions.

• Non-Functional Testing: Assessing non-functional aspects such as performance, usability, and security.

• Unit Testing: Testing individual components or units of code for correctness.

• Integration Testing: Ensuring that different modules or systems work together as expected.

• System Testing: Verifying the complete software system’s behavior against requirements.

• Acceptance Testing: Conducting tests to confirm that the software meets business requirements (including UAT - User Acceptance Testing).

• Regression Testing: Ensuring that new changes or features do not negatively affect existing functionalities.

• Performance Testing: Testing software performance under various conditions (load, stress, scalability).

• Security Testing: Identifying vulnerabilities and assessing the software’s ability to protect data.

• Compatibility Testing: Ensuring the software works on different operating systems, browsers, or devices.

• Continuous Testing: Integrating testing into the development lifecycle to provide quick feedback and minimize bugs.

• Test Automation Frameworks: Tools and structures used to automate testing processes (e.g., TestNG, Appium).

20. VoIP (Voice over IP)

VoIP Protocols & Standards

• SIP (Session Initiation Protocol)

• H.323

• RTP (Real-Time Transport Protocol)

• MGCP (Media Gateway Control Protocol)

VoIP Hardware

• IP Phones (Desk Phones, Mobile Clients)

• VoIP Gateways

• Analog Telephone Adapters (ATAs)

• VoIP Servers

• Network Switches/Routers for VoIP

VoIP Software

• Softphones (e.g., Zoiper, X-Lite)

• PBX (Private Branch Exchange) Systems

• VoIP Management Software

• Call Center Solutions (e.g., Asterisk, 3CX)

VoIP Network Infrastructure

• Quality of Service (QoS) Configuration

• VPNs (Virtual Private Networks) for VoIP

• VoIP Traffic Shaping & Bandwidth Management

• Firewall and Security Configurations for VoIP

• Network Monitoring & Optimization Tools

VoIP Security

• Encryption (SRTP, TLS)

• Authentication and Authorization

• Firewall & Intrusion Detection Systems

• VoIP Fraud Detection

VoIP Providers

• Hosted VoIP Services (e.g., RingCentral, Vonage)

• SIP Trunking Providers

• PBX Hosting & Managed Services

VoIP Quality and Testing

• Call Quality Monitoring

• Latency, Jitter, and Packet Loss Testing

• VoIP Performance Metrics and Reporting Tools

• User Acceptance Testing (UAT) for VoIP Systems

Integration with Other Systems

• CRM Integration (e.g., Salesforce with VoIP)

• Unified Communications (UC) Solutions

• Contact Center Integration

• Email, Chat, and Video Communication Integration

How to Choose the Right Tech Stack for Your Web App in 2025

In this article, you’ll learn how to confidently choose the right tech stack for your web app, avoid common mistakes, and stay future-proof. Whether you're building an MVP or scaling a SaaS platform, we’ll walk through every critical decision.

What Is a Tech Stack? (And Why It Matters More Than Ever)

Let’s not overcomplicate it. A tech stack is the combination of technologies you use to build and run a web app. It includes:

Front-end: What users see (e.g., React, Vue, Angular)

Back-end: What makes things work behind the scenes (e.g., Node.js, Django, Laravel)

Databases: Where your data lives (e.g., PostgreSQL, MongoDB, MySQL)

DevOps & Hosting: How your app is deployed and scaled (e.g., Docker, AWS, Vercel)

Why it matters: The wrong stack leads to poor performance, higher development costs, and scaling issues. The right stack supports speed, security, scalability, and a better developer experience.

Step 1: Define Your Web App’s Core Purpose

Before choosing tools, define the problem your app solves.

Is it data-heavy like an analytics dashboard?

Real-time focused, like a messaging or collaboration app?

Mobile-first, for customers on the go?

AI-driven, using machine learning in workflows?

Example: If you're building a streaming app, you need a tech stack optimized for media delivery, latency, and concurrent user handling.

Need help defining your app’s vision? Bluell AB’s Web Development service can guide you from idea to architecture.

Step 2: Consider Scalability from Day One

Many startups think only about MVP speed, but scaling problems can cost you down the line.

Here’s what to keep in mind:

Stateless architecture supports horizontal scaling

Choose microservices or modular monoliths based on team size and scope

Use asynchronous processing for slow or background work (e.g., Node.js's event loop, Celery task queues in Python)

Use CDNs and caching for frontend optimization

A poorly optimized stack can increase infrastructure costs by 30–50% during scale. So, choose a stack that lets you scale without rewriting everything.
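Statelessness is what makes horizontal scaling work: if no instance keeps user state in its own memory, a load balancer can send any request to any instance. The Python sketch below illustrates the idea with a shared session store; `InMemoryStore` is a hypothetical stand-in for an external store such as Redis, and all names are illustrative.

```python
from __future__ import annotations

from typing import Optional, Protocol


class SessionStore(Protocol):
    """Anything that can load and save session data by ID."""

    def get(self, session_id: str) -> Optional[dict]: ...

    def set(self, session_id: str, data: dict) -> None: ...


class InMemoryStore:
    """Stand-in for a shared external store such as Redis (assumption for this sketch)."""

    def __init__(self) -> None:
        self._data: dict[str, dict] = {}

    def get(self, session_id: str) -> Optional[dict]:
        data = self._data.get(session_id)
        return dict(data) if data is not None else None  # hand out a copy

    def set(self, session_id: str, data: dict) -> None:
        self._data[session_id] = dict(data)


class AppInstance:
    """One app server; it keeps no per-user state in its own memory."""

    def __init__(self, name: str, store: SessionStore) -> None:
        self.name = name
        self.store = store

    def add_to_cart(self, session_id: str, item: str) -> list[str]:
        session = self.store.get(session_id) or {"cart": []}
        session["cart"] = session["cart"] + [item]
        self.store.set(session_id, session)
        return session["cart"]


# Two instances behind a load balancer share one store, so either can serve the user.
shared = InMemoryStore()
a, b = AppInstance("a", shared), AppInstance("b", shared)
a.add_to_cart("user-1", "book")
print(b.add_to_cart("user-1", "pen"))  # ['book', 'pen']
```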

Step 3: Think Developer Availability & Community

Great tech means nothing if you can’t find people who can use it well.

Ask yourself:

Are there enough developers skilled in this tech?

Is the community strong and active?

Are there plenty of open-source tools and integrations?

Example: Choosing Go or Elixir might give you performance gains, but hiring can be harder than in the larger React or Node.js ecosystems.

Step 4: Match the Stack with the Right Architecture Pattern

Do you need:

A Monolithic app? Best for MVPs and small teams.

A Microservices architecture? Ideal for large-scale SaaS platforms.

A Serverless model? Great for event-driven apps or unpredictable traffic.

Pro Tip: Don’t over-engineer. Start with a modular monolith, then migrate as you grow.
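A modular monolith keeps modules in one deployable unit but has them talk only through explicit interfaces, which is what makes a later split into services feasible. This Python sketch, with hypothetical module names, shows an order module that depends on a payments interface rather than on a concrete implementation.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Protocol


class PaymentGateway(Protocol):
    """The only surface the order module is allowed to depend on."""

    def charge(self, customer_id: str, amount_cents: int) -> bool: ...


class InProcessPayments:
    """Billing module living inside the monolith (hypothetical)."""

    def __init__(self) -> None:
        self.ledger: list[tuple[str, int]] = []

    def charge(self, customer_id: str, amount_cents: int) -> bool:
        if amount_cents <= 0:
            return False  # reject invalid amounts
        self.ledger.append((customer_id, amount_cents))
        return True


@dataclass
class OrderModule:
    payments: PaymentGateway  # injected, so the implementation can be swapped later

    def place_order(self, customer_id: str, amount_cents: int) -> str:
        ok = self.payments.charge(customer_id, amount_cents)
        return "confirmed" if ok else "rejected"
```

If billing later becomes its own service, an HTTP client implementing the same `charge` method can replace `InProcessPayments` without changes to `OrderModule`.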

Step 5: Prioritize Speed and Performance

In 2025, user patience is non-existent. Google has reported that 53% of mobile site visits are abandoned when a page takes longer than 3 seconds to load.

To ensure speed:

Use Next.js or Nuxt.js for server-side rendering

Optimize images and use lazy loading

Use Redis or Memcached for caching

Integrate CDNs like Cloudflare

Benchmark early and often. Use tools like Lighthouse, WebPageTest, and New Relic to monitor.
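Caching with Redis or Memcached usually follows the cache-aside pattern: read from the cache, and on a miss load from the database and store the result with an expiry. The sketch below shows that pattern in-process in Python; `TTLCache` is a hypothetical class, not a Redis client. With Redis, the equivalent is typically a `GET`, followed on a miss by `SET` with an `EX` expiry.

```python
import time
from typing import Any, Callable, Dict, Hashable, Tuple


class TTLCache:
    """Cache-aside with per-entry expiry; an in-process stand-in for Redis or Memcached."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic) -> None:
        self.ttl = ttl_seconds
        self.clock = clock  # injectable so expiry can be tested without sleeping
        self._entries: Dict[Hashable, Tuple[Any, float]] = {}

    def get_or_load(self, key: Hashable, loader: Callable[[Hashable], Any]) -> Any:
        now = self.clock()
        entry = self._entries.get(key)
        if entry is not None and entry[1] > now:
            return entry[0]  # cache hit, still fresh
        value = loader(key)  # miss or expired: go to the slow source (e.g. the database)
        self._entries[key] = (value, now + self.ttl)
        return value
```

Usage: create `cache = TTLCache(ttl_seconds=60)` and call `cache.get_or_load(product_id, load_product)`, where `load_product` is your (hypothetical) database query; repeated calls within 60 seconds skip the database.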

Step 6: Plan for Integration and APIs

Your app doesn’t live in a vacuum. Think about:

Payment gateways (Stripe, PayPal)

CRM/ERP tools (Salesforce, HubSpot)

3rd-party APIs (OpenAI, Google Maps)

Make sure your stack supports REST or GraphQL seamlessly and has robust middleware for secure integration.
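Secure integration often means verifying that an incoming webhook really came from the provider. Many providers sign the raw request body with HMAC-SHA256 and a shared secret; the Python sketch below shows that general check. The exact header name, encoding, and any timestamp scheme differ per provider (Stripe, for instance, defines its own format), so treat this as an outline under stated assumptions and follow your provider's documentation.

```python
import hashlib
import hmac


def sign_payload(secret: bytes, payload: bytes) -> str:
    """Hex-encoded HMAC-SHA256 of the raw request body."""
    return hmac.new(secret, payload, hashlib.sha256).hexdigest()


def verify_webhook(secret: bytes, payload: bytes, signature: str) -> bool:
    """Check a received signature against one computed from the raw body."""
    expected = sign_payload(secret, payload)
    # compare_digest is designed to resist timing attacks, unlike a plain ==
    return hmac.compare_digest(expected.encode(), signature.encode())
```

Verify against the raw bytes exactly as received; re-serializing parsed JSON can change whitespace or key order and break the signature.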

Step 7: Security and Compliance First

Security can’t be an afterthought.

Use stacks that support JWT, OAuth2, and secure sessions

Make sure your database supports encryption at rest

Use HTTPS and rate limiting, and sanitize inputs

Data breaches are expensive: IBM's 2020 Cost of a Data Breach Report put the global average cost at $3.86 million. Prevention is cheaper than reaction.
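Rate limiting is commonly implemented as a token bucket: each client gets a bucket that refills at a fixed rate, and a request is allowed only if a token is available. This is a minimal single-process Python sketch with hypothetical names; in a multi-instance deployment the counters would need to live in a shared store.

```python
import time
from typing import Callable


class TokenBucket:
    """Allow bursts up to `capacity`, refilling at `rate_per_sec` tokens per second."""

    def __init__(self, rate_per_sec: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.rate = rate_per_sec
        self.capacity = capacity
        self.clock = clock  # injectable for testing
        self.tokens = float(capacity)  # start full
        self.updated = clock()

    def allow(self) -> bool:
        now = self.clock()
        # refill for the time elapsed since the last call, never beyond capacity
        self.tokens = min(self.capacity, self.tokens + (now - self.updated) * self.rate)
        self.updated = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False
```

Keep one bucket per client key, e.g. `buckets.setdefault(api_key, TokenBucket(rate_per_sec=5, capacity=10))`, and respond with HTTP 429 (Too Many Requests) when `allow()` returns `False`.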

Step 8: Don’t Ignore Cost and Licensing

Open source doesn’t always mean free. Some tools have enterprise licenses, usage limits, or require premium add-ons.

Cost checklist:

Licensing and usage-based pricing (e.g., Firebase can become costly at scale)

DevOps costs (e.g., AWS vs. DigitalOcean)

Developer productivity (fewer bugs = lower costs)

Budgeting for technology should include time to hire, cost to scale, and infrastructure support.

Step 9: Understand the Role of DevOps and CI/CD

Continuous integration and continuous deployment (CI/CD) aren’t optional anymore.

Choose a tech stack that:

Works well with GitHub Actions, GitLab CI, or Jenkins

Supports containerization with Docker and Kubernetes

Enables fast rollback and testing

This reduces downtime and lets your team iterate faster.

Step 10: Evaluate Real-World Use Cases

Look at what comparable companies are using, then adapt rather than copy blindly.

How Bluell Can Help You Make the Right Tech Choice

Choosing a tech stack isn’t just technical; it’s strategic. Bluell specializes in full-stack development and helps startups and growing companies build modern, scalable web apps. Whether you’re validating an MVP or building a SaaS product from scratch, we can help you pick the right tools from day one.

Conclusion

Think of your tech stack like choosing a foundation for a building. You don’t want to rebuild it when you’re five stories up.

Here’s a quick recap to guide your decision:

Know your app’s purpose

Plan for future growth

Prioritize developer availability and ecosystem

Don’t ignore performance, security, or cost

Lean into CI/CD and DevOps early

Make data-backed decisions, not just trendy ones

Make your tech stack work for your users, your team, and your business, not the other way around.

🚀 Red Hat Services Management and Automation: Simplifying Enterprise IT

As enterprise IT ecosystems grow in complexity, managing services efficiently and automating routine tasks has become more than a necessity—it's a competitive advantage. Red Hat, a leader in open-source solutions, offers robust tools to streamline service management and enable automation across hybrid cloud environments.

In this blog, we’ll explore what Red Hat Services Management and Automation is, why it matters, and how professionals can harness it to improve operational efficiency, security, and scalability.

🔧 What Is Red Hat Services Management?

Red Hat Services Management refers to the tools and practices provided by Red Hat to manage system services—such as processes, daemons, and scheduled tasks—across Linux-based infrastructures.

Key components include:

systemd: The default init system on RHEL, used to start, stop, and manage services.

Red Hat Satellite: For managing system lifecycles, patching, and configuration.

Red Hat Ansible Automation Platform: A powerful tool for infrastructure and service automation.

Cockpit: A web-based interface to manage Linux systems easily.

🤖 What Is Red Hat Automation?

Automation in the Red Hat ecosystem primarily revolves around Ansible, Red Hat’s open-source IT automation tool. With automation, you can:

Eliminate repetitive manual tasks

Achieve consistent configurations

Enable Infrastructure as Code (IaC)

Accelerate deployments and updates

From provisioning servers to configuring complex applications, Red Hat automation tools reduce human error and increase scalability.
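Consistent configuration rests on idempotency: applying the same change twice should leave the system as it was after the first run and report that nothing changed. Ansible modules such as `lineinfile` behave this way; the Python sketch below only illustrates the idea (it is not Ansible's implementation), and `ensure_line` is a hypothetical helper.

```python
from pathlib import Path


def ensure_line(path: Path, line: str) -> bool:
    """Make sure `line` is present in the file; return True only if a change was made."""
    content = path.read_text() if path.exists() else ""
    if line in content.splitlines():
        return False  # already in the desired state: nothing to do
    if content and not content.endswith("\n"):
        content += "\n"  # avoid gluing the new line onto the last one
    path.write_text(content + line + "\n")
    return True
```

Running it a second time returns `False`, which corresponds to Ansible reporting a task as "ok" rather than "changed".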

🔍 Key Use Cases

1. Service Lifecycle Management

Start, stop, enable, and monitor services with systemctl on a single host, or across thousands of servers with Ansible playbooks.

2. Automated Patch Management

Use Red Hat Satellite and Ansible to automate updates, ensuring compliance and reducing security risks.

3. Infrastructure Provisioning

Provision cloud and on-prem infrastructure with repeatable Ansible roles, reducing time-to-deploy for dev/test/staging environments.

4. Multi-node Orchestration

Manage workflows across multiple servers and services in a unified, centralized fashion.
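For service lifecycle management on one host, the work comes down to a few systemctl calls. The Python sketch below wraps two of them (`systemctl is-active` and `systemctl enable --now`) so the check-then-act logic is explicit; the function names and the injectable `run` parameter are assumptions made for illustration and testing. It needs appropriate privileges on a systemd host; across a fleet you would express the same intent with an Ansible module such as `ansible.builtin.service` rather than looping over SSH.

```python
import subprocess
from typing import Callable, List

Runner = Callable[[List[str]], subprocess.CompletedProcess]


def default_runner(cmd: List[str]) -> subprocess.CompletedProcess:
    return subprocess.run(cmd, capture_output=True, text=True, check=False)


def service_is_active(name: str, run: Runner = default_runner) -> bool:
    # `systemctl is-active` exits with status 0 only when the unit is active
    return run(["systemctl", "is-active", name]).returncode == 0


def ensure_started(name: str, run: Runner = default_runner) -> bool:
    """Start and enable a unit if it is not active; return True if anything changed."""
    if service_is_active(name, run):
        return False  # already running; this sketch does not check is-enabled
    result = run(["systemctl", "enable", "--now", name])  # enable at boot and start now
    if result.returncode != 0:
        raise RuntimeError(f"could not start {name}: {result.stderr}")
    return True
```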

🌐 Why It Matters

⏱️ Efficiency: Save countless admin hours by automating routine tasks.

🛡️ Security: Enforce security policies and configurations consistently across systems.

📈 Scalability: Manage hundreds or thousands of systems with the same playbooks you would use for one.

🤝 Collaboration: Teams can collaborate better with playbooks that document infrastructure steps clearly.

🎓 How to Get Started

Learn Linux Service Management: Understand systemctl, unit files, and logging with journalctl.

Explore Ansible Basics: Learn to write playbooks and roles, and to use Ansible Tower (now called automation controller in Ansible Automation Platform 2).

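Take a Red Hat Course: Prepare for the Red Hat Certified Engineer (RHCE) certification, which centers on Ansible automation, through a course such as RH294 (Red Hat Enterprise Linux Automation with Ansible) for hands-on training.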

Use RHLS: Get access to labs, practice exams, and expert content through the Red Hat Learning Subscription (RHLS).

✅ Final Thoughts

Red Hat Services Management and Automation isn’t just about managing Linux servers—it’s about building a modern IT foundation that’s scalable, secure, and future-ready. Whether you're a sysadmin, DevOps engineer, or IT manager, mastering these tools can help you lead your team toward more agile, efficient operations.

📌 Ready to master Red Hat automation? Explore our Red Hat training programs and take your career to the next level!

📘 Learn. Automate. Succeed. Begin your journey today! Kindly follow: www.hawkstack.com

Complete Hands-On Guide: Upload, Download, and Delete Files in Amazon S3 Using EC2 IAM Roles

Are you looking for a secure and efficient way to manage files in Amazon S3 using an EC2 instance? This step-by-step tutorial will teach you how to upload, download, and delete files in Amazon S3 using IAM roles for secure access. Say goodbye to hardcoding AWS credentials and embrace best practices for security and scalability.

What You'll Learn in This Video:

1. Understanding IAM Roles for EC2:
- What are IAM roles?
- Why should you use IAM roles instead of hardcoding access keys?
- How to create and attach an IAM role with S3 permissions to your EC2 instance.

2. Configuring the EC2 Instance for S3 Access:
- Launching an EC2 instance and attaching the IAM role.
- Setting up the AWS CLI on your EC2 instance.

3. Uploading Files to S3:
- Step-by-step commands to upload files to an S3 bucket.
- Use cases for uploading files, such as backups or log storage.

4. Downloading Files from S3:
- Retrieving objects stored in your S3 bucket using the AWS CLI.
- How to test and verify successful downloads.

5. Deleting Files in S3:
- Securely deleting files from an S3 bucket.
- Use cases like removing outdated logs or freeing up storage.

6. Best Practices for S3 Operations:
- Using least-privilege policies in IAM roles.
- Encrypting files in transit and at rest.
- Monitoring and logging using AWS CloudTrail and S3 access logs.
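Least privilege in practice means an EC2 role that can touch only the bucket it needs. The sketch below builds the two JSON documents such a role involves: a trust policy allowing EC2 to assume the role, and a permissions policy limited to one bucket. It assumes the instance only lists, uploads, downloads, and deletes objects in a single bucket; the helper names and the bucket name `my-app-logs` are hypothetical, so adjust actions and resources to your own use case.

```python
import json


def ec2_trust_policy() -> dict:
    """Trust policy that lets EC2 instances assume the role."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "ec2.amazonaws.com"},
                "Action": "sts:AssumeRole",
            }
        ],
    }


def s3_object_access_policy(bucket: str) -> dict:
    """Permissions limited to one bucket: list it, and put/get/delete its objects."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": ["s3:ListBucket"],  # applies to the bucket itself
                "Resource": f"arn:aws:s3:::{bucket}",
            },
            {
                "Effect": "Allow",
                "Action": ["s3:PutObject", "s3:GetObject", "s3:DeleteObject"],
                "Resource": f"arn:aws:s3:::{bucket}/*",  # applies to objects in the bucket
            },
        ],
    }


if __name__ == "__main__":
    # "my-app-logs" is a hypothetical bucket name.
    print(json.dumps(s3_object_access_policy("my-app-logs"), indent=2))
```

With this policy, `aws s3 ls s3://my-app-logs` works, but a bare `aws s3 ls` (list all buckets) is denied because `s3:ListAllMyBuckets` is not granted.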

Why IAM Roles Are Essential for S3 Operations:
- Secure Access: IAM roles provide temporary credentials, eliminating the risk of hardcoding secrets in your scripts.
- Automation-Friendly: Simplify file operations for DevOps workflows and automation scripts.
- Centralized Management: Control and modify permissions from a single IAM role without touching your instance.

Real-World Applications of This Tutorial:
- Automating log uploads from EC2 to S3 for centralized storage.
- Downloading data files or software packages hosted in S3 for application use.
- Removing outdated or unnecessary files to optimize your S3 bucket storage.

AWS Services and Tools Covered in This Tutorial:
- Amazon S3: Scalable object storage for uploading, downloading, and deleting files.
- Amazon EC2: Virtual servers in the cloud for running scripts and applications.
- AWS IAM Roles: Secure and temporary permissions for accessing S3.
- AWS CLI: Command-line tool for managing AWS services.

Hands-On Process:

Step 1: Create an S3 Bucket
- Navigate to the S3 console and create a new bucket with a globally unique name.
- Configure bucket permissions for private or public access as needed.

Step 2: Configure IAM Role
- Create an IAM role with an S3 access policy.
- Attach the role to your EC2 instance to avoid hardcoding credentials.

Step 3: Launch and Connect to an EC2 Instance
- Launch an EC2 instance with the IAM role attached.
- Connect to the instance using SSH.

Step 4: Install and Verify the AWS CLI
- Install the AWS CLI on the EC2 instance if it is not pre-installed. No access keys are needed; the CLI picks up the role's temporary credentials automatically.
- Verify access by running `aws s3 ls` to list available buckets. (Listing all buckets requires the `s3:ListAllMyBuckets` permission; if the role is scoped to a single bucket, list that bucket's contents instead.)

Step 5: Perform File Operations
- Upload files: Use `aws s3 cp` to upload a file from EC2 to S3.
- Download files: Use `aws s3 cp` to download files from S3 to EC2.
- Delete files: Use `aws s3 rm` to delete a file from the S3 bucket.

Step 6: Cleanup
- Delete test files and terminate resources to avoid unnecessary charges.
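The upload, download, and delete steps can be scripted for automation, for example a log-shipping job on the instance. This Python sketch only assembles the same `aws s3 cp` and `aws s3 rm` commands described above and runs them with `subprocess`; the helper names are hypothetical, and it assumes the AWS CLI is installed and the instance has an IAM role with the needed S3 permissions.

```python
import subprocess
from typing import List


def s3_uri(bucket: str, key: str) -> str:
    return f"s3://{bucket}/{key}"


def upload_cmd(local_path: str, bucket: str, key: str) -> List[str]:
    return ["aws", "s3", "cp", local_path, s3_uri(bucket, key)]


def download_cmd(bucket: str, key: str, local_path: str) -> List[str]:
    return ["aws", "s3", "cp", s3_uri(bucket, key), local_path]


def delete_cmd(bucket: str, key: str) -> List[str]:
    return ["aws", "s3", "rm", s3_uri(bucket, key)]


def run(cmd: List[str]) -> None:
    # No access keys anywhere: on an instance with an IAM role attached, the CLI
    # obtains temporary credentials from the instance metadata service.
    subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
```

For example, `run(upload_cmd("/var/log/app.log", "my-app-logs", "logs/app.log"))` uploads a log file to a hypothetical bucket, and `run(delete_cmd("my-app-logs", "logs/app.log"))` removes it again. Passing an argument list (rather than a shell string) avoids quoting problems with unusual file names.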

Why Watch This Video? This tutorial is designed for AWS beginners and cloud engineers who want to master secure file management in the AWS cloud. Whether you're automating tasks, integrating EC2 and S3, or simply learning the basics, this guide has everything you need to get started.

Don’t forget to like, share, and subscribe to the channel for more AWS hands-on guides, cloud engineering tips, and DevOps tutorials.
