#3-d semantic segmentation

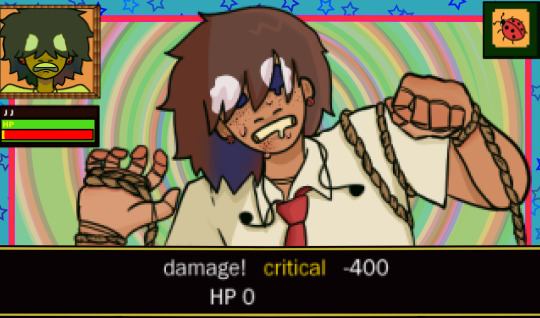

Loon drawing :D love her im killing her

Heavily inspired by the song "Bouquet Garni" by nilfruits! it's really good I recommend checking it out :9

hey girl, ever have breakdowns and the only way you can somehow hold yourself back from going full insane is thinking back to all the shitty videogames you consume in the comfort of your own room? if so then you have something in common with her !1!1! hasashgihasg breakdown (videogame flavored)

I'll go a bit more in depth on him under the cut since I figured we changed quite a tad about her and I want to spread wisdom (our AU) to the world

So I'll start with design choices and then I'll move to his general character ヾ(≧▽≦*)o So:

The things coming out from his neck are antennae! they make her have really good hearing which SUCKS when you are overwhelmed (which she is, always) so rip!

His eyes have no pupil because arthropods and insects have them freaky eyes now <3

He has blue and red dyed hair to match the animatronics color scheme (though the red is not very obvious here since he's tilting his head), though the white bits of his hair are not actually dyed! they are like that because he's a ladybug and they have those white spots on the sides of their little heads!

-His hands are segmented like that because of an arbitrary rule I made to make arthropods more recognizable amongst them bears and foxes and allat, since arthropods are segmented I applied that to their articulations! fun fact, insects like Loon or Cami (We made her a mantis I'm sorry) have a division on their neck, while arthropods that are NOT insects, like Owynn, completely lack that! that's because insects are divided in 3 parts (head thorax and abdomen) arachnids are divided in 2 (thorax and abdomen) ((not exactly called that but semantics)).

-He has little ladybug earrings and actually he matches with Malva!(Usagi) ((we changed her name lol)) ((her last name is visco haha get it malva-visco))

-In general I made his hair darker, gave him a bit of acne amongst the freckles and made him more tan because we love melanin in this house

Now for,, character changes,,,

So first of all, he/she swag, bigender, Loon when masc and JJ when fem though it fluctuates too, good for her gettem. We obliterated that Malva crush, gone, exploded into pieces, and also she's ecuadorian now, gettem.

We turned that pathetic nervous shy thing of his into something way worse </3 She is still introverted and all that, but the main thing is that she does not like going outside! gamer goes outside challenge impossible, she does NOT like leaving his house shut-in guy who if outside for long enough has a breakdown, clings to Malva because they are best friends and she's like "safe" she's like a safe zone. Loon cannot function in social/public settings at all and just clings, which is also why a lot of people mistake him as "having a crush on her" even though they are a bajillion percent platonic

He's a bit of a cringefail loser and also a bit creepy lmao, always on her phone always trying to zone the hell out and think about a silly videogame, but also just nosy, his antennae and insect stuff lets him 1) look wherever without people being able to tell, and 2) listen to stuff. So yeah, his ass sorta psychoanalyzes people and snoops through stuff to kinda distract herself from the horrors of being outside, which is why in this AU she's dating Owynn T-T sorta like the animatronic she isn't like actually out to get you, but instead acts like some sort of aid for the real enemy, which is Owynn, so yeah. Enabler and snoops through shit AKA has good info for Owynn's insane as shit ideas, insane of her but whatever.

Also given how in this Au we cranked the animal motifs to a thousand, being a little "bug" and then being next to like, a bear is terrifying to her, so besides being scared of just existing outside, she's also scared of people as a whole because little insect vs big animal, rip.

And i think that's most of it, if anyone read all of this and has any questions feel free to ask T-T sorry if the formatting SUCKS it's the first time I've tried putting something this long on tumblr <//3

#πa art#fnafhs#fhs#fhs fanart#fnafhs au#fnafhs fanart#loon fnafhs#loon fhs#the background was a nightmare to make#our au#<- we dont have a name yet woups

26 notes

IEEE Transactions on Fuzzy Systems, Volume 33, Issue 6, June 2025

1) Brain-Inspired Fuzzy Graph Convolution Network for Alzheimer's Disease Diagnosis Based on Imaging Genetics Data

Author(s): Xia-An Bi, Yangjun Huang, Wenzhuo Shen, Zicheng Yang, Yuhua Mao, Luyun Xu, Zhonghua Liu

Pages: 1698 - 1712

2) Adaptive Incremental Broad Learning System Based on Interval Type-2 Fuzzy Set With Automatic Determination of Hyperparameters

Author(s): Haijie Wu, Weiwei Lin, Yuehong Chen, Fang Shi, Wangbo Shen, C. L. Philip Chen

Pages: 1713 - 1725

3) A Novel Reliable Three-Way Multiclassification Model Under Intuitionistic Fuzzy Environment

Author(s): Libo Zhang, Cong Guo, Tianxing Wang, Dun Liu, Huaxiong Li

Pages: 1726 - 1739

4) Guaranteed State Estimation for H−/L∞ Fault Detection of Uncertain Takagi–Sugeno Fuzzy Systems With Unmeasured Nonlinear Consequents

Author(s): Masoud Pourasghar, Anh-Tu Nguyen, Thierry-Marie Guerra

Pages: 1740 - 1752

5) Online Self-Learning Fuzzy Recurrent Stochastic Configuration Networks for Modeling Nonstationary Dynamics

Author(s): Gang Dang, Dianhui Wang

Pages: 1753 - 1766

6) ADMTSK: A High-Dimensional Takagi–Sugeno–Kang Fuzzy System Based on Adaptive Dombi T-Norm

Author(s): Guangdong Xue, Liangjian Hu, Jian Wang, Sergey Ablameyko

Pages: 1767 - 1780

7) Constructing Three-Way Decision With Fuzzy Granular-Ball Rough Sets Based on Uncertainty Invariance

Author(s): Jie Yang, Zhuangzhuang Liu, Guoyin Wang, Qinghua Zhang, Shuyin Xia, Di Wu, Yanmin Liu

Pages: 1781 - 1792

8) TOGA-Based Fuzzy Grey Cognitive Map for Spacecraft Debris Avoidance

Author(s): Chenhui Qin, Yuanshi Liu, Tong Wang, Jianbin Qiu, Min Li

Pages: 1793 - 1802

9) Reinforcement Learning-Based Fault-Tolerant Control for Semiactive Air Suspension Based on Generalized Fuzzy Hysteresis Model

Author(s): Pak Kin Wong, Zhijiang Gao, Jing Zhao

Pages: 1803 - 1814

10) Adaptive Fuzzy Attention Inference to Control a Microgrid Under Extreme Fault on Grid Bus

Author(s): Tanvir M. Mahim, A.H.M.A. Rahim, M. Mosaddequr Rahman

Pages: 1815 - 1824

11) Semisupervised Feature Selection With Multiscale Fuzzy Information Fusion: From Both Global and Local Perspectives

Author(s): Nan Zhou, Shujiao Liao, Hongmei Chen, Weiping Ding, Yaqian Lu

Pages: 1825 - 1839

12) Fuzzy Domain Adaptation From Heterogeneous Source Teacher Models

Author(s): Keqiuyin Li, Jie Lu, Hua Zuo, Guangquan Zhang

Pages: 1840 - 1852

13) Differentially Private Distributed Nash Equilibrium Seeking for Aggregative Games With Linear Convergence

Author(s): Ying Chen, Qian Ma, Peng Jin, Shengyuan Xu

Pages: 1853 - 1863

14) Robust Divide-and-Conquer Multiple Importance Kalman Filtering via Fuzzy Measure for Multipassive-Sensor Target Tracking

Author(s): Hongwei Zhang

Pages: 1864 - 1875

15) Fully Informed Fuzzy Logic System Assisted Adaptive Differential Evolution Algorithm for Noisy Optimization

Author(s): Sheng Xin Zhang, Yu Hong Liu, Xin Rou Hu, Li Ming Zheng, Shao Yong Zheng

Pages: 1876 - 1888

16) Impulsive Control of Nonlinear Multiagent Systems: A Hybrid Fuzzy Adaptive and Event-Triggered Strategy

Author(s): Fang Han, Hai Jin

Pages: 1889 - 1898

17) Uncertainty-Aware Superpoint Graph Transformer for Weakly Supervised 3-D Semantic Segmentation

Author(s): Yan Fan, Yu Wang, Pengfei Zhu, Le Hui, Jin Xie, Qinghua Hu

Pages: 1899 - 1912

18) Observer-Based SMC for Discrete Interval Type-2 Fuzzy Semi-Markov Jump Models

Author(s): Wenhai Qi, Runkun Li, Peng Shi, Guangdeng Zong

Pages: 1913 - 1925

19) Network Security Scheme for Discrete-Time T-S Fuzzy Nonlinear Active Suspension Systems Based on Multiswitching Control Mechanism

Author(s): Jiaming Shen, Yang Liu, Mohammed Chadli

Pages: 1926 - 1936

20) Fuzzy Multivariate Variational Mode Decomposition With Applications in EEG Analysis

Author(s): Hongkai Tang, Xun Yang, Yixuan Yuan, Pierre-Paul Vidal, Danping Wang, Jiuwen Cao, Duanpo Wu

Pages: 1937 - 1948

21) Adaptive Broad Network With Graph-Fuzzy Embedding for Imbalanced Noise Data

Author(s): Wuxing Chen, Kaixiang Yang, Zhiwen Yu, Feiping Nie, C. L. Philip Chen

Pages: 1949 - 1962

22) Average Filtering Error-Based Event-Triggered Fuzzy Filter Design With Adjustable Gains for Networked Control Systems

Author(s): Yingnan Pan, Fan Huang, Tieshan Li, Hak-Keung Lam

Pages: 1963 - 1976

23) Fuzzy and Crisp Gaussian Kernel-Based Co-Clustering With Automatic Width Computation

Author(s): José Nataniel A. de Sá, Marcelo R.P. Ferreira, Francisco de A.T. de Carvalho

Pages: 1977 - 1991

24) A Biselection Method Based on Consistent Matrix for Large-Scale Datasets

Author(s): Jinsheng Quan, Fengcai Qiao, Tian Yang, Shuo Shen, Yuhua Qian

Pages: 1992 - 2005

25) Nash Equilibrium Solutions for Switched Nonlinear Systems: A Fuzzy-Based Dynamic Game Method

Author(s): Yan Zhang, Zhengrong Xiang

Pages: 2006 - 2015

26) Active Domain Adaptation Based on Probabilistic Fuzzy C-Means Clustering for Pancreatic Tumor Segmentation

Author(s): Chendong Qin, Yongxiong Wang, Fubin Zeng, Jiapeng Zhang, Yangsen Cao, Xiaolan Yin, Shuai Huang, Di Chen, Huojun Zhang, Zhiyong Ju

Pages: 2016 - 2026

0 notes

Real-Time 3D Segmentation with High-Quality Computer Vision: Recognizing and Responding with Machine Learning -Nextwealth

NextWealth's AI enables real-time 3D-Semantic segmentation with high-quality computer vision, recognizing and responding through machine learning for improved performance.

Read more: https://www.nextwealth.com/3d-semantic-segmentation-services/

0 notes

Interesting Papers for Week 15, 2022

Gaze control during reaching is flexibly modulated to optimize task outcome. Abekawa, N., Gomi, H., & Diedrichsen, J. (2021). Journal of Neurophysiology, 126(3), 816–826.

Inter-trial effects in priming of pop-out: Comparison of computational updating models. Allenmark, F., Gokce, A., Geyer, T., Zinchenko, A., Müller, H. J., & Shi, Z. (2021). PLOS Computational Biology, 17(9), e1009332.

The essential role of hippocampo-cortical connections in temporal coordination of spindles and ripples. Azimi, A., Alizadeh, Z., & Ghorbani, M. (2021). NeuroImage, 243, 118485.

Mouse visual cortex areas represent perceptual and semantic features of learned visual categories. Goltstein, P. M., Reinert, S., Bonhoeffer, T., & Hübener, M. (2021). Nature Neuroscience, 24(10), 1441–1451.

Internal manipulation of perceptual representations in human flexible cognition: A computational model. Granato, G., & Baldassarre, G. (2021). Neural Networks, 143, 572–594.

Adaptation supports short-term memory in a visual change detection task. Hu, B., Garrett, M. E., Groblewski, P. A., Ollerenshaw, D. R., Shang, J., Roll, K., … Mihalas, S. (2021). PLOS Computational Biology, 17(9), e1009246.

The color phi phenomenon: Not so special, after all? Keuninckx, L., & Cleeremans, A. (2021). PLOS Computational Biology, 17(9), e1009344.

Periodic clustering of simple and complex cells in visual cortex. Kim, G., Jang, J., & Paik, S.-B. (2021). Neural Networks, 143, 148–160.

Gated feedforward inhibition in the frontal cortex releases goal-directed action. Kim, J.-H., Ma, D.-H., Jung, E., Choi, I., & Lee, S.-H. (2021). Nature Neuroscience, 24(10), 1452–1464.

Learning an Efficient Hippocampal Place Map from Entorhinal Inputs Using Non-Negative Sparse Coding. Lian, Y., & Burkitt, A. N. (2021). ENeuro, 8(4), ENEURO.0557-20.2021.

Lying in a 3T MRI scanner induces neglect-like spatial attention bias. Lindner, A., Wiesen, D., & Karnath, H.-O. (2021). eLife, 10, e71076.

Ergodicity-breaking reveals time optimal decision making in humans. Meder, D., Rabe, F., Morville, T., Madsen, K. H., Koudahl, M. T., Dolan, R. J., … Hulme, O. J. (2021). PLOS Computational Biology, 17(9), e1009217.

Basis profile curve identification to understand electrical stimulation effects in human brain networks. Miller, K. J., Müller, K.-R., & Hermes, D. (2021). PLOS Computational Biology, 17(9), e1008710.

Modulation in cortical excitability disrupts information transfer in perceptual-level stimulus processing. Moheimanian, L., Paraskevopoulou, S. E., Adamek, M., Schalk, G., & Brunner, P. (2021). NeuroImage, 243, 118498.

Language statistical learning responds to reinforcement learning principles rooted in the striatum. Orpella, J., Mas-Herrero, E., Ripollés, P., Marco-Pallarés, J., & de Diego-Balaguer, R. (2021). PLOS Biology, 19(9), e3001119.

The Development of Advanced Theory of Mind in Middle Childhood: A Longitudinal Study From Age 5 to 10 Years. Osterhaus, C., & Koerber, S. (2021). Child Development, 92(5), 1872–1888.

Action Costs Rapidly and Automatically Interfere with Reward-Based Decision-Making in a Reaching Task. Pierrieau, E., Lepage, J.-F., & Bernier, P.-M. (2021). ENeuro, 8(4), ENEURO.0247-21.2021.

Touching events predict human action segmentation in brain and behavior. Pomp, J., Heins, N., Trempler, I., Kulvicius, T., Tamosiunaite, M., Mecklenbrauck, F., … Schubotz, R. I. (2021). NeuroImage, 243, 118534.

Sensitivity – Local index to control chaoticity or gradient globally –. Shibata, K., Ejima, T., Tokumaru, Y., & Matsuki, T. (2021). Neural Networks, 143, 436–451.

Conventional measures of intrinsic excitability are poor estimators of neuronal activity under realistic synaptic inputs. Szabó, A., Schlett, K., & Szücs, A. (2021). PLOS Computational Biology, 17(9), e1009378.

#science#Neuroscience#computational neuroscience#Brain science#research#cognition#cognitive science#neurons#neurobiology#neural networks#neural computation#psychophysics#scientific publications

9 notes

How is Data Annotation shaping the World of Deep Learning Algorithms?

The size of the global market for data annotation tools was estimated at USD 805.6 million in 2022, and it is expected to increase at a CAGR of 26.5% from 2023 to 2030. The growing use of image data annotation tools in the automotive, retail, and healthcare industries is a major driver of the expansion. By labeling data, that is, adding attribute tags to it, users can enhance the value of the information.
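To make the growth figure concrete, here is a minimal sketch of the compound-growth arithmetic behind a CAGR. It assumes the 2022 estimate is the base and that growth compounds once a year over eight years (2023 through 2030); the function name and that period count are illustrative assumptions, not figures from a market report.

```python
def project_market_size(base_value, annual_rate, years):
    """Compound base_value forward by annual_rate for the given number of years."""
    return base_value * (1 + annual_rate) ** years


base_2022_musd = 805.6   # USD million, the 2022 estimate quoted above
cagr = 0.265             # 26.5% compound annual growth rate
years = 2030 - 2022      # assumption: eight yearly compounding periods

projected_2030_musd = project_market_size(base_2022_musd, cagr, years)
print(f"Implied 2030 market size: about USD {projected_2030_musd:,.0f} million")
```

Under those assumptions the market would grow to roughly six and a half times its 2022 size by 2030.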

The Emergence of Data Annotation

The industrial expansion of data annotation tools is being driven by a rising trend of using AI technology for document classification and categorization. Data annotation technologies are gaining ground as practical options for document labeling due to the increasing amounts of textual data and the significance of effectively classifying documents. The increased usage of data annotation tools for the creation of text-to-speech and NLP technologies is also changing the market.

The demand for automated data annotation tools is being driven by the growing significance of automated data labeling tools in handling massive volumes of unlabeled, raw data that are too complex and time-consuming to be annotated manually. Fully automated data labeling helps businesses speed up the development of their AI-based initiatives by reliably and quickly converting datasets into high-quality input training data.

Automated data labeling solutions can address these problems by precisely annotating data without issues of frustration or errors, in contrast to the time-consuming and more error-prone manual data labeling procedure.

Labeling Data is the basis of Data Annotation

When annotating data, two things are required:

Data

A standardized naming system

The labeling conventions are likely to get increasingly complex as labeling programs develop.

Additionally, you might find that the naming convention was insufficient to produce the predictions or ML model you had in mind after training a model on the data. Applying labels to your data using various techniques and tools is the main aspect of data annotation tools. While some solutions offer a broad selection of tools to support a variety of use cases, others are specifically optimized to focus on particular sorts of labeling.

To help you identify and organize your data, almost all tools include some kind of data or document classification. You may choose a specialist tool or a more general platform depending on your current and projected future needs. Data annotation tools also provide capabilities for creating and managing guidelines, such as label maps, classes, attributes, and specific annotation types.

Types of Data Annotations

Image: Bounding boxes, polygons, polylines, classification, 2-D and 3-D points, segmentation (semantic or instance), tracking, interpolation, and transcription are all examples of image or video annotation types.

Text: Coreference resolution, dependency resolution, named entity recognition (NER), parts of speech (POS), transcription, and sentiment analysis.

Audio: Time labeling, tagging, audio-to-text, and audio labeling

Automation, or auto-labeling, is a new feature of many data annotation systems. Many solutions that use AI will help your human labelers annotate your data more accurately (e.g., automatically convert a four-point bounding box to a polygon) or even annotate your data without human intervention. To increase the accuracy of auto-labeling, some tools can also learn from the actions of your human annotators.
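The bounding-box-to-polygon conversion mentioned above can be sketched in a few lines. This is only an illustration: it assumes the box is axis-aligned and given as (x_min, y_min, x_max, y_max) in pixel coordinates, and the function names are hypothetical rather than taken from any particular annotation tool.

```python
def bbox_to_polygon(x_min, y_min, x_max, y_max):
    """Return the four corners of an axis-aligned box as a closed polygon outline."""
    if x_min > x_max or y_min > y_max:
        raise ValueError("box corners are in the wrong order")
    # Walk the corners in a consistent order so the outline does not cross itself.
    return [(x_min, y_min), (x_max, y_min), (x_max, y_max), (x_min, y_max)]


def polygon_area(points):
    """Shoelace formula: area enclosed by a simple polygon."""
    total = 0
    for i, (x1, y1) in enumerate(points):
        x2, y2 = points[(i + 1) % len(points)]
        total += x1 * y2 - x2 * y1
    return abs(total) / 2


polygon = bbox_to_polygon(10, 20, 110, 70)
print(polygon)
print(polygon_area(polygon))
```

Once the box is a polygon, an annotator (or a model) can move individual vertices or add new ones to follow the object's outline more closely.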

Are you too looking for advanced Data Annotation & Data Labelling Services?

Contact us and we will provide you with the best solution to upgrade your operations efficiently.

0 notes

Image Annotation Helping The Feature Of ADAS 2023

Annotation for Advanced Driver Assistance Systems (ADAS) in Computer Vision. Advanced driver assistance systems (ADAS) give cars and drivers the technology and information they need to stay aware of their surroundings and to handle potential situations more easily through semi-automation. AI, together with ADAS annotation, helps in developing applications that can detect various scenarios and objects and make quick, accurate decisions to ensure safety while driving.

Why ADAS Is Important for Safe and Controlled Driving

ADAS is similar to self-driving vehicles and uses similar technologies, such as radar, vision, and combinations of sensors including LIDAR, to automate dynamic driving tasks such as braking, steering, and acceleration, ensuring driver safety and controlled driving.

In order to incorporate this technology, ADAS demands labelled information to help the algorithm recognize the driver's movements and the objects. Annotating images is a well-known service that creates such data for computer vision training.

What is the difference between ADAS and Self-Driving Cars?

In autonomous or self-driving automobiles, the vehicle itself has full control over the steering, the brakes, and many other functions. There is no need for a driver, as the vehicle can move in a given direction and avoid all types of obstacles without human intervention.

ADAS, by contrast, assists or warns drivers when they do not notice a situation. When the driver is not paying attention, the systems operate semi-autonomously and take the proper steps for safety and driving comfort.

We employ ADAS annotation to detect the driver's body movements and other objects. Image annotation is a popular service for generating computer vision training data.

Annotation ADAS for Traffic Detection

We use the ground-truth labelling method to label recorded sensor data in line with the expected ADAS state. Pattern recognition, learning, feature extraction, tracking, 3-D vision, and other computer vision techniques are used in ADAS traffic labelling.

AI Workforce is a well-known company that has developed a driver assistance system which provides high-quality information about traffic detection that can help create a live algorithm capable of recognizing patterns in traffic in the near future ADAS technology.

Annotation of Driver Monitoring for ADAS

Drivers who are tired, sleepy, or distracted can be monitored by the ADAS driver monitor. ADAS detects signs that indicate the driver's mental strain, the driver's conduct, and the car's surroundings. AI Workforce annotates frames to assist ADAS in monitoring the motorist's facial expressions, behavior, and body movements.

Annotation Segmentation (AS) in ADAS is the process of labelling and indexing objects in frames. When a frame contains multiple objects, each object is identified with a distinct colour code, without background noise. Eliminating background noise is essential so that the model can identify each object's edges.
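As a rough illustration of the colour-code idea, the sketch below turns a tiny 2-D grid of class ids into per-pixel RGB colours. The class ids, class names, and palette values are made-up assumptions for the example, not a standard labelling scheme.

```python
# Hypothetical palette: class id -> RGB colour (0 is treated as background).
PALETTE = {
    0: (0, 0, 0),        # background
    1: (128, 64, 128),   # road (assumed class)
    2: (220, 20, 60),    # pedestrian (assumed class)
    3: (0, 0, 142),      # vehicle (assumed class)
}


def colorize_mask(mask, palette):
    """Replace every class id in a 2-D mask with its RGB colour."""
    return [[palette[label] for label in row] for row in mask]


# A 3x4 frame: road along the bottom, one pedestrian pixel, two vehicle pixels.
mask = [
    [0, 0, 3, 3],
    [0, 2, 0, 0],
    [1, 1, 1, 1],
]

for row in colorize_mask(mask, PALETTE):
    print(row)
```

Because every pixel carries exactly one class id, object edges fall wherever neighbouring pixels have different colours.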

We offer semantic segmentation of images, which is required to identify fixed and essential objects. Image segmentation helps solve high-level vision problems in computer vision, such as image understanding and scene parsing, and helps applications overcome low-level vision problems, including 3D reconstruction and motion estimation.

Why would you want to outsource your ADAS annotation tasks?

A large amount of varied, richly tagged data is the most important asset for training and validating autonomous vehicles. This involves gathering information about an area in order to link image data with actual conditions on the ground.

Teams can use the annotated data to develop and test recognition algorithms and predictive models in a systematic manner. Ground truth labels help autonomous vehicles recognize and identify moving objects and urban surroundings such as road markings, highway signs, and even weather conditions.

There are many reasons why one ought to consider using Cogito to outsource ADAS annotation and other functions. Here are some of the most well-known reasons:

Excellent Quality Services

Cost is clearly a major factor to take into account when outsourcing the annotation process. Companies such as Cogito and Analytics can provide high-quality data annotation services at reasonable cost.

Infrastructure and technologies at their best

Companies that provide data annotation are innovative and cutting-edge. They make use of the most recent Artificial Intelligence, Machine Learning and robotics technologies.

Clients get up-to-date software and customer support to help them with data annotation.

Image annotation services

A successful Artificial Intelligence/Machine Learning (AI/ML) implementation needs a high-quality training dataset. Beyond quality, however, the success of AI/ML training is also defined by the size and speed of annotation, data security, and bias reduction. Making sure image annotations are precise, while accounting for all of these elements, helps create the proper dataset for the project.

Without professional annotation experts, businesses usually face one or more of the following problems:

Understanding the meaning behind any image

Attention to detail, and a keen understanding

Recognition of faces, and following analysis (identifying gender, categorizing emotions, etc.)

Analyzing a vast database while preserving accuracy

Using classifiers to organize every image

Data security compliance

The consistency in the subjectivity of data sets

It's an arduous process

The process takes more effort and time than is reasonable to attempt by yourself. This is why it's cheaper to outsource image annotation to a reputable company.

Perception models trained on 2D bounding box datasets can improve visual search by recognizing different objects, even in the most intricate images. Our annotation tools use 2D and 3D bounding boxes to create annotations for projects across various industries, including e-commerce, autonomous vehicles, traffic control, and other projects that require training data. These annotations can be used to:

Teach autonomous vehicles to identify pedestrians, cyclists, traffic lights, and footpaths

Train e-commerce and retail models to recognize furniture, accessories, clothing, food items, and other items, which can speed up the checkout process or generate revenue

Automate the billing process

Develop computer vision models that find damage to objects such as cars and buildings to determine the amount of help needed to settle insurance claims as well as other things of this kind.

Recognize objects, people and tracks in satellite and drone images

We have designed our bounding box annotation workflow to meet your specific needs and to find the objects of interest using precise image labelling services.

0 notes

(REVIEW) Not your minute turns from the blueprint: Body Work, by Tom Betteridge (SAD Press, 2018)

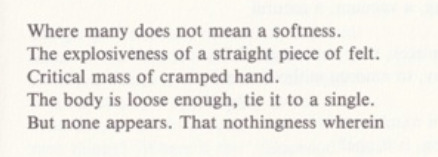

Denise Bonetti <[email protected]> Mon, 10 Dec 2018, 20:21 to maria.spamzine

Hey Maria,

Hope life’s good :) I’m just writing to see if you’ve read Tom B’s new Body Work? There’s a paper I should be writing, but have been reading and rereading that pamphlet instead, or more like dipping in and out really, cause it's so beautifully layered and expanding that you can only take so much at a time. Don’t you think Tom’s poetry has this strange earworm quality to it? (I think he’d like the annelid comparison.) The way he choreographs words (I don’t want to use the word 'images’) makes its way into my brain and never wants to leave. He draws these, like, lateral paths of meaning so clearly that the weeds never grow back. Tom Raworth has this bit in 'Writers / Riders / Rioters' that goes:

the present is surrounded with the ringing of ings which words have moss on the northside

like, words naturally arrange themselves into a system of semantic habit, which is so stable and stale that makes them grow moss, but also so rich and vibrant when it's exploited productively. Obviously this is Raworth so it probably also means the opposite of this and so much more, but it kinda makes me want to say that the present (poem) makes the ringing of ings deviate so well that the moss can never grow again. I’d say that his poems behave like sophisticated lines in the sand, but they're more like brutal carvings on a rock. He had a couple poems in Blackbox Manifold ages ago (I think) and there was this one bit

‘nerve truffled plume lead pickled breast’

I think about all the time (especially when I cook). It’s so smooth. Why can I not stop thinking about it. It’s cause it’s so shameless, it wants it all - the feather-light and the corpse-heavy, never perturbed, so lucid. It plays at tasting good, but it tastes of an unrealistically blank texture. A-ha! Anyway the new pamphlet is gr8, if you haven’t read it yet look at the first poem pls - ‘OCCAM OCEAN’ (sounds like an anagram or palindrome)

It all dwells in systematic abstraction but flies so close to materiality, like a mosquito buzzing around the ear ('Not your minute turns from the blueprint'). I love that ‘plate’ in the first phrase, too: it behaves like an adjective but feels nothing like it. I can't help but think it's the subject of the sentence in a parallel universe that's created by scrambling syntax. It makes me think this is the way language should always work, and that we're the fkn idiots living in the parallel universe in which syntax is scrambled in order to be as boring as possible. Idk - it's late and I need to go back to writing boring essay syntax 'bound to decision and the pursuit of what follows'. Lemme know ur thoughts you smart queen

D xxx

Maria Sledmere <[email protected]> Wed, 12 Dec 2018, 17:30 to denise.spamzine

Dearest Denise,

Life is good thanks. I'm sitting in the attic of the law building and I can hear the construction work going on and every time I leave I look out at the skyline and slivers of infrastructural alteration. I was walking along the road earlier because the pavement was closed off for construction and cba crossing and the high-vis guy was like, 'you'll not see Christmas walking on the road like that', but I guess I misheard him saying something else because I was really engrossed in this old Slowdive album, so I just smiled sweetly. Anyway, that got me thinking back to the question of erasure, which I think is pretty vital to Body Work. Have been carrying this pamphlet in my bag for so long that the cover has started to peel, revealing speckles of white underneath, like the text itself is ready to reveal itself, and yes I was thinking Barthes and strip-tease and paratextual enticement.

So I had to google the word annelid and now can't get the phrase 'segmented worm' out of my ear/head/throat (gross!). I was thinking about what sort of stains are on the cover of this book, you know, with Hrafnhildur Halldórsdóttir's gouache/pencil work. A stain is one thing stuck to another. Gouache is a funny kinda substance that is half watery gauze, half binding, thick, gummy akin to acrylic. Wet, it will easily smudge. My thumb keeps bleeding where the skin thins, hardens, peels and sloughs off. Tom's poems are kinda mucilaginous in parts (v. insecty, molluscky, sap emission; but also they have a crispness and precision, like cuts of leaf). Things that smudge or fall in flakes. Bodies are maybe whatever we leave behind. I didn't want to mention Hookworms the band because of the singer's sexual abuse scandal BUT it seems significant that a group named after an earworm/type of parasitic larvae would have a song called 'Negative Space'. Like what we eat into in the process of making, existing, remixing. Is language a rash, the result of these parasitic inf(l)ections?

I've been to a couple of reading groups on microbiomes lately, and we were thinking through this idea of how acknowledging your bodily composition in terms of myriad genes and organisms challenges conventional, bounded notions of 'self'. What kinds of affects does this produce? There's a weirdness to that, in Mark Fisher's sense of the weird as 'that which does not belong', that which 'brings to the familiar something which ordinarily lies beyond it, and which cannot be reconciled with the "homely" (even as its negation)'. Fisher suggests that the form most conducive to rendering the weird might be montage. So I was thinking about how montage involves splicings, gaps, juxtapositions, cuts and suddenness. I mean you open the very first poem of Body Work, 'Occam Ocean', and see that its prose-poetic paragraph compacting is split in the middle by the juncture of line break and indent. And ofc the title recalling Occam's razor, the philosophic principle by which in the case of two explanations for an occurrence, it's best to go with the one that requires least speculation. Razor things down and erase speculation? What are we left with, more of the Rreal? Lately I've been hankering for cleaner prose, crisp lines, simpler solutions. The Anthropocene is all of a goddamn tangle. Do I want to follow the myriad threads or just cut cut cut -- who gets to do that?

Did you ever cut a worm in two as a kid?

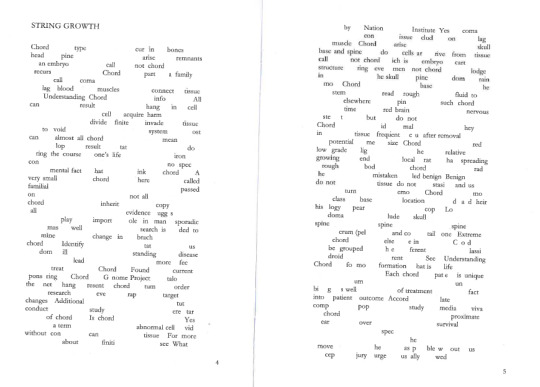

Okay so I love how 'Occam Ocean' might promise, title-wise, this clean prosaic expanse (like the oxymoron of expanding ocean and occam's, which requires surely a condensing), but what we get is clustering, motion, shiver, containment. The sensual trash magickk of P. Manson! The little syncope of this thing or that, the 'maple neck', vibrating canes, 'chambered breath bowed into the driest soundboard'. These aren't like 'Latour litanies' because they are not like concrete OOO segments of things; idk, they are more about processes and mutable assemblages, emphasis on action and change, sometimes transmission, things inside things. Lynn Margulis and symbiogenesis. How things interact, communicate up close; all of a mutable, prose-poetic swallow. It's actually an incredible intimate pamphlet, don't you think? I feel inside a thing inside a thing inside a thing. I feel a vague ecological sorrow, which gnaws at introspective tendency. The clue to that, you might see, is the cutaway phrase, 'emo Chord' in 'String Growth'.

'Collapse all tears allowing echo retreat'; these lyric lines of 'Glissando' expression, smoothing and shimmering over cuts and junctures: a slide between notes. I used to play trombone and I wish I cld articulate linguistically what kinds of lip vibration occur when you attempt a glissando and feel it slide down your arm muscle but then also through your chest as you try to sustain a sound. It's maybe the way you glide through a scattered poem, with your eye, which is different ofc to the spikier way you'd have to read it aloud, stuck on the vowels. Stuttering. I would love to hear Nat Raha perform these poems, because she does such wonderful things with punctuation and bodily performance, a kind of grammar of breath and cough and click. Reading over the more field erasure poems like 'O--NE' and 'String Growth', it's easy to say something like ~vibrant materialism~ here, but as usual I reach for Steven Connor on noise. Return to the ear, which is 'vulnerable' and 'resembles the skin in being the organ of exposure and reception'. I love what Connor says about Levinas' perspective on 'the awareness of the vacant anonymity of being, of an abstract, encompassing sense that "there is"' being 'an experience of noise'. <3 Acknowledging that breath in the void, that is not nothing but a sparkling something, entails a sense of noise. I am here in the attic of the law building, listening to construction, the type of my fingers on the keys. Someone is murmuring of their distress. What is the difference between living and existing, and being and nothing?

Karen Barad:

'Suppose we had a finely tuned, ultrasensitive instrument that we could use to zoom in on and tune in to the nuances and subtleties of nothingness. But what would it mean to zoom in on nothingness, to look and listen with ever-increasing sensitivity and acuity, to move to finer and finer scales of detail of...?'

When she asks, 'What is the measure of nothingness?' I think surely it is a bodily measure, as everything is: 'bound', as Tom puts it, 'to decision and the pursuit of what follows'. Of course 'what follows' recalls Derrida's 'The Animal That Therefore I Am (More to Follow)', where he's just out the shower and watching his cat watching his phallus, etc. What kinds of dislocation occur. But I mean in all that grandeur of encounter, there's still anthropocentricism. Tom gives you this cinematic CUT, like the instructive 'keen SNAP' that occurs in 'Occam Ocean' to dramatise 'Still, pondsnails whir and blindly source [...] a working leaf shutter'. Soever the language enacts the slurs of the snails up close. We look for the answer to the question of ellipsis, the more to follow [what follows]: inevitable, a question. Sometimes Tom is writing about silence ('then silence confronts an earful underhand') but the music of his language does all the noise, so we just can't have nothingness: there is always a vibrational residue that speaks of something in miniature, atomic, happening.

Ofc with the ear again I am thinking of the ear at the start of Lynch's Blue Velvet and how it's covered in rasping wee insects whose hum is a sort of white noise of trauma that runs through Lumberton's suburban idyllicism.

And so what happens next is I flip open to the following page of Body Work and there is 'String Growth', one of Tom's sprawling erasure poems, which for more than a split-second resembles hundreds of crawling, shimmering ants. I actually think my earliest childhood memory is of looking down at my bare feet on the patio of our old house in Hertfordshire and seeing red ants run over my toes. Then looking up to a greying, English sky. Constantly struck by the cinematic image of that, its splicing out of time: the vividness of insects on human flesh, then milky smog of skyward nothingness. 'String Growth', the accompanying notes to Body Work tell me, is an erasure poem of the Chordoma Foundation's 'Understanding Chordoma' information page.

Erasure can expose sequences of nightmare at work in the lexis and syntax of the text on which it parasitically feeds. I am scared to go on the Chordoma Foundation's website, for fear that just reading or saying the word 'tumour' will activate some kind of malignant growth in my body. And so something of the word chord as a sonorous relation between materials (bodily, textual; textural, cellular). Chordomas are tumours often located in the spine and so I find myself looking for the undulating shape of a spine in the scatter-text of Tom's poem. My eyes cascade down textual spines. Why is it sometimes I otherwise latch upon a 'keystone' word which then extends with adjacent resonance? Musical abnormalities accumulate. Thought swells.

And yea I wonder how this fits into what you say about the poems being 'so smooth'. Like Lynch's waxen silicone ear. Because even though fragmentation makes me think of bits and jaggedness etc, there's this sheen of aestheticism to Tom's work that makes me think of gloopiness, fullness, thereness but also the glaze of potential nothingness. Like in Barad's sense, or miniaturised ecological window shopping - a la Morton's Romantic consumerism? Or do we get into the things themselves? What are your thoughts on the question of recalcitrance? Maybe cos he named a previous pamphlet Pedicure I've just got varnish on my mind. Things an insect might stick to, and be amberized in. Mm.

'[...] Phosphorus crystals may be white, red, burgundy or alight as urine passes'

I keep a stone of citrine under my pillow sometimes. It is supposed to alleviate nightmares and 'manifest abundance'. It is the colour of a rich, dehydrated piss and sometimes when I come back to bed after peeing in the night I think it's some kind of organ lain on my bedsheets, hopped out of my body, and I have to stop my heart and breathe. Is that syncope?

On the <topic of piss>, isn't there a sort of caustic quality, even to the smoothness? Like it is working at making a brittleness of its sheen? And that is what poetry is, cracking the veneer of language or something? Punctuational insects dwelling in splits and fissures? It is nice and cool in Tom's poetry, a place for thinning the self and dwelling. Even though the lexis is so rich and dense, it still seems slender somehow; there's a suppleness. Tease threads of your silk(worm).

Was thinking about what Lisa Robertson says about 'commodiousness' in poetry and what kinds of space there are for the reader here, because I don't think there is much space at all, in the conventional humanly readerly sense. Maybe what I mean by (straw man: Romantic) lyric, which requires something of declarative expansiveness? The density and clutter of specialist language in Body Work makes me feel like a worm, trying to hook my way lusciously into a line: 'espalier's / strains unfinished by the scarp trellis' ('Body Work'), 'rooted to a middle-ground / no more than motion defibrillates'. And I become a parasite on the body of the text, which is a parasite on the body, which is made up of millions of (para)sites. Para ofc meaning side by side, which made me think of Haraway's sympoiesis (making-with) but also, admittedly, Limmy's madeup psychic show, Paraside (lalalol what you were saying about the scrambling parallel universe maybe, is that a lalaLacian Real which necessitates ululation, stammering? Complex remixing musicality of language throughout Body Work as summoning?). Going back to my incidental Slowdive reference earlier, maybe there's a shoegaze thing here, like setting up these 'noise-worlds' which shimmer indiscriminately behind/inside/through the semiotic oscillations of lyric? Is shoegaze a form of sonic gouache? Well it is certainly an ontic form of seduction, where I can't pick out the instruments of expression but I look for them hungrily in the haze. And the idea that transmission between worlds (the living/dead, human/nonhuman) might require a strain of humour (like haha but also meant in the sense of bodily humours?). For instance, shoegaze is decidedly not a humorous genre, but it sort of works on bodily humours, sometimes giving me the bends, or the blurry spaced-out feeling of having one's pleasure receptors caressed by sound.
Was wondering how YOU experienced the space and physicality of the poems -- was there anything u found FUNNY or sufficiently sultry as to produce a long and gorgeous sigh?

Mm and aren't there these tasty, cute moments of wow like 'tropic glut' ('O--NE') and 'prism arousal' ('Body Work') and 'clamour to emboss' ('Sapling').

Come to think of it, there are quite a lot of trees in Body Work, at the very least between 'Sapling' and 'Copsing', but also resonance in 'Awning', 'Annual' (which mentions 'yield', 'Thicket', 'sky-light muddle' etc) and 'Georgel' (georgics, idk?). Something about sprawl and thread: like the action of branches as arboreal mirror for threads of viruses, threads of code?

Side note: Can a person in a crowd of people experience canopy-shyness? Emily Berry has this lovely poem about crying and canopies and language.

Ways to dwell in inertia, violence, suspense ('Poem for July') as a 'clearing' within the pamphlet? Body Work as a title seems to combine two distinct fields: car repair and alternative medicine (hence mention of plants, cancers and crystals). The question of holistic approach, therapeutics, restoration. The sheen of metal, the sheen of health. O wise one of la letteratura del contemporaneo, pray tell your thoughts on possible Ballardian comparisons? Like obv v. different but I was struck by something to do with the cut-up structures of The Atrocity Exhibition and the way erasure works in Tom's work (probably in a more precise, attentive way, like the specialist's collage of tiny skins and digits, as opposed to grander themes of mediation that explode all over Ballard's work? -- generalising for the sake of interest obv).

Longing for a 'carvery [of] / uncommoning / rave'. Some kind of party you'd give up your skin for (is skin mere synecdoche of identity here?). Maybe the rave is what you were saying about scrambling.

Anyway, I hope your essay is going well. I must go read Hillis Miller's thoughts on Ariadne's thread, maybe make a tea. I've been getting these headaches lately, dawn to dusk & beyond, like the kind you get after being swimming (chlorine headache) or after crying (hormone headache). Pressurisation. I wonder if I have a parasite in my brain? So tonite I will probs lie awake, sleepless, listening for tinnitus :(

With warmth, Maria xxxxxxx

p.s.

Of course, by the time I get to the end of rereading I realise that it's only white marks being revealed underneath because literal holes have appeared in the Body Work cover, like some kind of fungus has been eating away at the book, performing another erasure.

Denise Bonetti <[email protected]> Fri, 1 Feb, 12:15 to maria.spamzine Dear Maria,

Once again my legendary inability to reply to personal emails within a reasonable 1-month window manifests itself. Invoke my Scorpio moon (?) etc. I love it when people are like 'RIGHT - enough Facebook for me, email me if you want to talk etc', because that sounds like a nightmare to me. Long live IM! Long live the short form! (quite rich coming from someone whose job at the moment, I guess, is to churn out a dissertation?)

But then you know what I was thinking - someone like Clark Coolidge, for example, can get away with long form, intense long form. Not only get away, but own that long form. That long form I'm into. Clark Coolidge drown me in words and I'm fine with it, because he never dilutes, there's never any stagnation, you know what I mean, he just goes and goes and goes and you're like !!? YES!! HOW ARE YOU DOING THIS!? He just never runs out of steam. And I'm thinking of Coolidge because you mentioned crystals as agents in Tom B. (cf. The Crystal Text, how would the crystal speak etc), and of course because both Tom B. and Clark C. are just doing mad things with language, bold things and exciting things... They are like scientists you're friends with but who (maybe) don't like to talk about their work, then one day they decide to let you into their basement lab where they've been secretly working on the most complex, organic, project for years, and they're like, don't freak out, here it is:

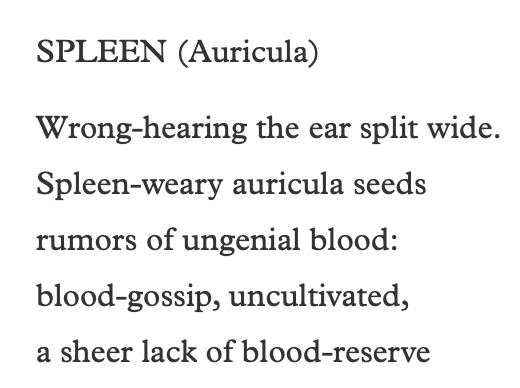

(Sorry for terrible quality [#postinternet] First is Tom B., second is Clark C.) Body Work looks quite controlled in form, visually speaking, with its vaguely justified lines, BIG symmetrical margins.. even the scattered pages look orderly! Like the bit of 'STRING WORTH' you sent. Which going back to your erasure thing, it makes me feel like Tom B. is giving us an OXO cube of his writing, all concentrated and delicious. But then my response is - show us more!!! Which rarely happens because I am scared of long form. And email. And dissertations. I also LOVE what you said about how Body Work combines car repair and alternative medicine!! That is absolutely spot on. Like the material, pragmatic tinkering motions of his writing, the referral to structures and the intention of like, see how far we can bend them and push them, but then it is never as dry as that! Very sweet motor oil. It's very kind poetry... generous! (A word that my friend Phoebe used to describe a certain type of poetry at a party last week and I thought, very interesting). Linguistically generous because it offers so many networks of reading, but then also.. approachable? As approachable as experimental poetry of this kind can be. I'm sure like, DANIEL would not think this is approachable lol (#COYBIG #romance). Which, fair enough. But if you're a nerd for this kind of poetry, then yeah. Like this bit from 'ANNUAL'??

*Cries!!! This is like you said, healing!! I feel looked after! 'Stomach prefers sound to day-to-day camphor'!!! Honestly what is this! So touching, so simple!

(Btw, I started experimenting with aromatherapy in my tiny room lol, do you know how to stop the water from boiling in the oil burner??)

Thanks for sending such interesting ideas over, I have to shoot to a seminar ! PPS: I saw Steven Connor in the English library yesterday (Oliver pointed at him silently like !!!!!!!!!!) so I kind of followed him to see what his approach to book browsing is.. very natural-looking and orderly? Surprised. Love the guy. *bubble sounds*

Lots of love Maria ‘let’s see where the spirits take us’ ur the best

xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx

Maria Sledmere <[email protected]> 6 Feb 2019, 23:19 to denise.spamzine Dear Denise,

Makes so much sense to map your message sensibilities onto your taste in poetry! I am so torn between the percolated richness of the email, its classic deferral (omg hun I owe you a million emails!) through a sort of quantum dimension of procrastination, and in opposition the sugar rush nowness of IM. I am such a frantic typist that often I send the wrong messages or cross my wires or just gush too much, so email is probably a safe option for me. There is all too much blue in my Messenger windows...Temptation of x's and endless emojis. But such a beauty to IM and texts out of context, like I wonder how many people read your probs too late 4 a snog now :'((( as micro-fictions, versus poems. I have a whole folder of screenshots on my computer from things that happened on Facebook that I have no memory of. Something about the Romantic fragment, accumulating ruin.

(btw) I feel like these extracts also shed light upon Body Work somehow. Biodegradables versus hard minerals and synthetic matter. Inner/outer. Flush. Tbh I think the middle one was from you?

Yes the dissertation, the dissertation as labour; it's like you have to find your scaffold first. Sometimes I feel like the scaffold in that wonderful Sophie Collins poem, 'Healers', and I write and don't notice myself and suddenly I'm so there, but the scaffold is secretly taking her bolt pins out the more I write around her. You can only be so respectful to your scaffold when she's so in the way. Gemini problems? Duality; structure/content. What is it Tom McCarthy says: 'structure is content, geometry is everything'. I want to be a wee fractal in a sequence of massive refraction. Is that how it works? Back to scaffolds, maybe we need to find the kindest mode of dismantling, and that's when you work into a form or something. And then also the more organic structures! So for CC it's the whole crystal thing, and working out of crystal logic. And then you just go and go and it's wonderful, much extravagant fractality, almost like poetry as virus, replicate replicate, grow, change. Mm, it's so good. My friend Kirsty did this mad poem about a tree, I couldn't tell if it was a story or poem, it was just branching out in a way that seemed hungry, necessary, spreading its roots. She said she wrote it in a rush! As if trees could rush! I like to think she inhabited a concentrated moment of becoming-tree, like she was a myriad in the roots or leaves. I don't think it could have happened without the tree, you know? But also the tree was almost entirely absent, it was like a ghost of form. Maybe I forgot how it goes. The lines looked like branches or something. Can you have long-form concrete? Concrete I guess by necessity is long-form. It takes a lot of energy to make. People are building houses out of mycelium instead, which is rad.
Talking of roots and that, I just wrote 26k words on ecopoetics & t h r e a d s over the past fortnight and it was kind of that process, like letting a sort of tapestry take hold and I was maybe just one more thread, I was hardly doing the weaving, everything was moving around me and I wanted to wriggle into more and more gaps. Becoming-thread, perhaps. The next step is to slack and cut, which is exciting. Where to even start?

Your description of the complex, organic project is so gorgeous. Poems slow-cooked in a lab with tender organic care. My two scientist PhD pals are always gramming these beautiful pictures of crystals they're growing or mad wave patterns on screens. And we go for lunch and I'm like what you doing this afternoon and they're like, Oh just shooting photons. And is that much different from spending your afternoon writing poems? (Yes, they'd groan). I'm just chasing bits of light. Reading Tom B's work it's this whole precision thing, the actual inhabitation of process as such, so you see the energy buzz between things. I don't mean to say simply this is atomic poetry or poetry as tool analysis. It's more a betweening.

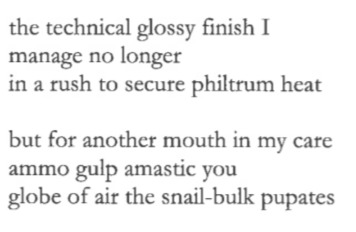

Isn't it super difficult to write non-anthropocentrically and really inhabit micro-relationality and also sound interesting and sexy in the way Claire Colebrook (she has that great essay in Tom Cohen's Telemorphosis) describes as 'sexual indifference', i.e. that threat to heteronormative reproduction that 'has always been warded off precisely because it opens the human organism to mutation, production, lines of descent and annihilation beyond that of its own intentionality'? Well anyway Body Work really works this way for me, it's like a poetics of sexual indifference that is nevertheless charged with desire you can't really predict, it's something in the frisson between objects and lines and coils of form. I think of crystal charge, iPhone battery (mine's always dying, Gemini trait 100%), engines. Neat miniaturisations of entropy, surge, spike and flux. When the 'I' comes in I'm like hey, what flow are you? It's actually so satisfying to quote these poems as fragments btw, they can do so much on their own as much as in poems and pamphlets, I wonder if that goes back to the accessibility thing. Like the absolute charm of a line as auto-affection:

This bit is from 'Temper' and I go back to my point about the flush/fluster! Globe of air/your *bubble sounds*. Isn't everything held so neatly, and yet it never feels neat, it just feels sharp and sparking, this 'technical glossy finish' like a really nice car, the body paint of a poem, its prosody so tightly held it feels more surface, a selection of hues and textures. And the erotics of the text or at the very least its pleasure is the shift between bodies, synecdoche, yes you could say bodies without organs but things in themselves are also important. Maybe another poet who does this is Sylvia Legris, she writes these apparently impersonal poems filled to the seams with specialist lexis (you have to have like twelve tabs open per poem to get it), but there's an affirmative humour and energy that feels v much a personal sensibility, a deliberated skewing of world that splices the poet's agency among items, artefacts, language. I mean how nice are these poems she published in Granta. I feel like I want cutlery to read them with, if that makes sense. Maybe a scalpel, for the succulence. The appearance of an ear again!

And then the beautiful metallurgy of this line from Tom, like somebody pierced my ears with perfect silver and it let all the demons out:

I am worried about what a certain seizure would look like. When we talk about vitality is it a willing naivety towards matter qua matter, as if we could just step out of correlationism? Such thoughts for 11pm of a Wednesday night. I can't help but think of the body image that Elizabeth Grosz describes in Volatile Bodies, kind of riffing off Paul Schilder: 'What psychoanalytic theory makes clear is that the body is literally written on, inscribed, by desire and signification, at the anatomical, physiological, and neurological levels'. And yeah, cool, what about the nonhuman body also? Has anyone done a really good psychoanalysis of the object. Parsed its psychic striations (traumatic or pleasurable residues of every microbial, huh?). In fact, what about the psychodynamic model of actual icebergs? Time we started literalising the matter of metaphors, absenting 'real cultural / medium' and filling with meltwater, fire and flow. Maybe it comes down to a bead of ink, the 'intimate concentrate' which is Lucozade, hangover piss, sick pH levels. So yeah, Body Work for me is this totally seductive intersubjective space which actually works out pretty visceral states, sometimes disembodying me into a more fractal, mineral or bacterial being. I could start talking Kathryn Yusoff and geomorphism too, but maybe enough strata for one email? Plus I'm mixing my metaphors, I'm sure, mostly because I'm still morphing, dissolving inside those lines. I think I ate too many OXO cubes.

As for your oil burner boiling, sounds like you have an overactive candle? Maybe try a cooler tealight, nestle it to the back a little to redirect the strength of the flame? I like rosemary oil for remembrance, cranberry for comfort, ginger for energy. That line about resin is so nice. I was in Crianlarich at the weekend and my friend Patrick found this massive log and he carried it for so long that you could smell the resin on his skin, it was amazing. I keep thinking about the word 'pitch' and lush tree-ness, and the Log Lady in Twin Peaks and poetry you can chew like new molasses, prior to melt. Is that how it works?

Somebody is smashing glass into a bin in my garden and probably I should just close the pamphlet...

...but it's like a delicious pdf that gives infinities...

Yours in multiples & cherryish flusters,

Maria xoxoxoxooxoxoxoxoxooxoxoxox

Text

Complex & Intelligent Systems, Volume 11, Issue 5, May 2025

1) Predicting trajectories of coastal area vessels with a lightweight Slice-Diff self attention

Author(s): Jinxu Zhang, Jin Liu, Junxiang Wang

2) Micro-expression spotting based on multi-modal hierarchical semantic guided deep fusion and optical flow driven feature integration

Author(s): Haolin Chang, Zhihua Xie, Fan Yang

3) Wavelet attention-based implicit multi-granularity super-resolution network

Author(s): Chen Boying, Shi Jie

4) Gaitformer: a spatial-temporal attention-enhanced network without softmax for Parkinson’s disease early detection

Author(s): Shupei Jiao, Hua Huo, Dongfang Li

5) A two-stage algorithm based on greedy ant colony optimization for travelling thief problem

Author(s): Zheng Zhang, Xiao-Yun Xia, Jun Zhang

6) Graph-based adaptive feature fusion neural network model for person-job fit

Author(s): Xia Xue, Feilong Wang, Baoli Wang

7) Fractals in Sb-metric spaces

Author(s): Fahim Ud Din, Sheeza Nawaz, Fairouz Tchier

8) Cooperative path planning optimization for ship-drone delivery in maritime supply operations

Author(s): Xiang Li, Hongguang Zhang

9) Reducing hallucinations of large language models via hierarchical semantic piece

Author(s): Yanyi Liu, Qingwen Yang, Yingyou Wen

10) A surrogate-assisted differential evolution algorithm with a dual-space-driven selection strategy for expensive optimization problems

Author(s): Hanqing Liu, Zhigang Ren, Wenhao Du

11) Knowledge graph-based entity alignment with unified representation for auditing

Author(s): Youhua Zhou, Xueming Yan, Fangqing Liu

12) A parallel large-scale multiobjective evolutionary algorithm based on two-space decomposition

Author(s): Feng Yin, Bin Cao

13) A study of enhanced visual perception of marine biology images based on diffusion-GAN

Author(s): Feifan Yao, Huiying Zhang, Pan Xiao

14) Research on knowledge tracing based on learner fatigue state

Author(s): Haoyu Wang, Qianxi Wu, Guohui Zhou

15) An exploration-enhanced hybrid algorithm based on regularity evolution for multi-objective multi-UAV 3-D path planning

Author(s): Zhenzu Bai, Haiyin Zhou, Jiongqi Wang

16) Correction to: Edge-centric optimization: a novel strategy for minimizing information loss in graph-to-text generation

Author(s): Yao Zheng, Jingyuan Li, Yuanzhuo Wang

17) A reliability centred maintenance-oriented framework for modelling, evaluating, and optimising complex repairable flow networks

Author(s): Nicholas Kaliszewski, Romeo Marian, Javaan Chahl

18) Enhancing implicit sentiment analysis via knowledge enhancement and context information

Author(s): Yanying Mao, Qun Liu, Yu Zhang

19) The opinion dynamics model for group decision making with probabilistic uncertain linguistic information

Author(s): Jianping Fan, Zhuxuan Jin, Meiqin Wu

20) Co-evolutionary algorithm with a region-based diversity enhancement strategy

Author(s): Kangshun Li, RuoLin Ruan, Hui Wang

21) SLPOD: superclass learning on point cloud object detection

Author(s): Xiaokang Yang, Kai Zhang, Zhiheng Zhang

22) Transformer-based multiple instance learning network with 2D positional encoding for histopathology image classification

Author(s): Bin Yang, Lei Ding, Bo Liu

23) Traffic signal optimization control method based on attention mechanism updated weights double deep Q network

Author(s): Huizhen Zhang, Zhenwei Fang, Xinyan Zeng

24) Enhancing cyber defense strategies with discrete multi-dimensional Z-numbers: a multi-attribute decision-making approach

Author(s): Aiting Yao, Huang Chen, Xuejun Li

25) A lightweight vision transformer with weighted global average pooling: implications for IoMT applications

Author(s): Huiyao Dong, Igor Kotenko, Shimin Dong

26) Self-attention-based graph transformation learning for anomaly detection in multivariate time series

Author(s): Qiushi Wang, Yueming Zhu, Yunbin Ma

27) TransRNetFuse: a highly accurate and precise boundary FCN-transformer feature integration for medical image segmentation

Author(s): Baotian Li, Jing Zhou, Jia Wu

28) A generative model-based coevolutionary training framework for noise-tolerant softsensors in wastewater treatment processes

Author(s): Yu Peng, Erchao Li

29) Mcaaco: a multi-objective strategy heuristic search algorithm for solving capacitated vehicle routing problems

Author(s): Yanling Chen, Jingyi Wei, Jie Zhou

30) A heuristic-assisted deep reinforcement learning algorithm for flexible job shop scheduling with transport constraints

Author(s): Xiaoting Dong, Guangxi Wan, Peng Zeng

Text

10 PROVEN CONTENT WRITING TIPS FOR BEGINNERS - STEP BY STEP GUIDE

INTRODUCTION:

Content writing is an art that rewards creativity. Still not sure how to write good content? Easy-peasy: here are some content writing tips for beginners to improve their writing skills. The purpose of content writing is to educate the audience; eye-catching, attention-grabbing wording gets into the reader's mind and prompts them to take immediate action. Content communicates with your audience through well-written sentences in your blog posts or social media descriptions. Follow the formats below to build and nurture your content writing skills and add more value to your articles.

1.DEFINING YOUR NICHE

· Before you start writing, settle on your niche. Everything you write should fit that niche and be relevant to your audience. This is the most basic content writing tip for a beginner.

2.KEYWORDS FILTER

· Come up with a set of keywords relevant to your niche. Before you start writing, look up the phrases users search for most, and choose low-competition keywords with high demand. Pick 5 to 10 keywords and integrate them into your article.

3.DECIDE THE CONVEYING TONE

Ø Narrate your content in the tone that suits it:

FIRST-PERSON NARRATIVE

· A first-person narrative is about your own perspective: sharing your feedback and first-hand experience, and reviewing what you have experienced in the past. Use first-person pronouns (I, we) to write the article in this tone.

Ø Example: Bloggers and reviewers use a first-person narrative tone.

SECOND-PERSON NARRATIVE

· A second-person narrative describes the products or services you're selling: it details their advantages and disadvantages and educates the audience about them. Use the second-person pronoun (you) to write the article in this tone.

Ø Example: Business owners or service providers use a second-person narrative tone to up-sell their products.

THIRD-PERSON NARRATIVE

· A third-person narrative talks about other people: their achievements, their setbacks, and gossip about whatever has gone viral. Whether the subject is good or bad, you can write about it. Use third-person pronouns (he, she, it, they) to write the article in this tone.

Ø Example: News articles, media articles, and movie reviews.

4.DECIDE COMMUNICATION STYLE FOR YOUR BLOG

FORMAL:

· If you're writing a technical blog for an industry, or articles for your business or services, your approach and writing style should be formal. This professional approach helps convey your message and convince your target audience to take immediate action.

INFORMAL:

· If you're writing a non-technical blog that isn't driven by ROI, you can write in whatever style suits your own mindset or the niche you're most confident in. That brings more engagement to your blog and attracts more unique visitors to your site.

5.TITLE FRAMING:

· The title is a crucial factor in increasing engagement on any blog page, and title framing plays a significant role in click-through rate (CTR). Play with your title before you publish a blog post. Every reader looks at the title first, so every content writing beginner should focus closely on title framing. Your title should contain these elements:

Ø Magical words + Keywords + Powerful/Emotional words.

6.SKETCH AN OUTLINE:

· Before you start writing, frame an outline for the article so that it keeps the audience engaged throughout. Consider the elements below when writing a blog article:

Ø INTRODUCTION

Ø BODY

Ø CONCLUSION

7.INTRODUCTION

· Write an attention-grabbing introduction before you start explaining the topic in detail. Once you have the reader's attention, amplify the audience's problem, then offer a solution to it. Make one clear call to action (CTA) at the end of the description. A widely used formula for writing descriptions is AIDA (Attention, Interest, Desire, Action). Use AIDA in the introductions to your blog posts and in social media descriptions for your business or services. How good a description you build with AIDA is up to your creativity and the way you line up your content.

8.BODY

· The body is where you furnish the article and give it its look. Break up the paragraphs, use subheadings for side topics, and use as many relevant images as possible; this makes the reader want to read the entire article. The body is the main element in reducing the bounce rate on your page.

Ø NOTE: Every content writing beginner should review the content writing formats above.

9.CONCLUSION

· A conclusion summarizes what the reader learned from your writing and how they benefited from your blog. If readers are not satisfied with your content, you can rework parts of the article based on their feedback, or come back with another blog article.

Example:

Ø Ask a question to the reader to leave a comment or react to your post.

10.CTA

· Give a clear CTA at the end of every article to increase your social score, and share links to your own referring page to bring more visibility to that page and to your social media profiles. This drives more engagement to your referring page and social networks, so every content writing beginner should include it in the blog article format.

STEPS TO FOLLOW IN CONTENT WRITING

11.FORMATTING THE ARTICLE

· Format your article using the following sections. Formatting tidies up the article so the reader can get through it without obstacles:

a) BREAK PARAGRAPHS

· Break your paragraphs. Long paragraphs make the reader too lazy to finish the section. Split the text into short paragraphs, section by section, so the reader can easily follow the entire article.

b) AXING

· Ax your article after completing your content. Proofread it two or three times to find mistakes and irrelevant lines, and remove all unrelated sentences and unwanted phrases. Make sure the article still reads well after the cuts, and keep your keywords a priority while axing.

c) KEYWORD FREQUENCY

· Space out your keywords; don't stuff keywords into the article, because overdoing it makes the article annoying to read. Distribute your keywords according to the word count: one keyword per 200 words is suggested. Use LSI keywords in your writing to avoid keyword stuffing.
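The one-keyword-per-200-words suggestion can be checked with a little arithmetic. The following is a minimal Python sketch, assuming the rule means "at most one occurrence of the keyword for every 200 words"; the function name `keyword_report` and the `words_per_keyword` parameter are made up for illustration and are not part of any SEO tool.

```python
import re


def keyword_report(text, keyword, words_per_keyword=200):
    """Compare how often `keyword` appears with a one-per-N-words budget."""
    def tokenize(s):
        # Lowercase and keep hyphenated words such as "non-veg" as one token.
        return re.findall(r"[\w'-]+", s.lower())

    words = tokenize(text)
    phrase = tokenize(keyword)
    if not phrase:
        raise ValueError("keyword must contain at least one word")

    n = len(phrase)
    # Slide a window over the words and count exact matches of the phrase.
    occurrences = sum(
        1 for i in range(len(words) - n + 1) if words[i:i + n] == phrase
    )
    # Assumed reading of the rule: one occurrence per 200 words, minimum one.
    budget = max(1, len(words) // words_per_keyword)
    return {
        "words": len(words),
        "occurrences": occurrences,
        "budget": budget,
        "stuffed": occurrences > budget,
    }
```

Calling `keyword_report(article_text, "non-veg food")` returns the word count, the number of times the keyword appears, the suggested budget, and whether the article goes over it. Treat the result as a rough hint, not a ranking rule.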

d) IMAGES

· Images play a significant role in blog articles. Images can explain many sections without words, and in return the blog post gets a higher engagement rate. Use images that are related to your content. If you're not good at creating images, outsource the design work, and if you use someone else's creative work, give credit to the original creator.

e) BULLET POINTS AND NUMBERING

· Break up your paragraphs with bullet points and numbers: list the essential points, and number your subheadings. This gives your article a clean appearance and is an effective way to keep the reader engaged with your blog.

f) CONSISTENCY:

· Maintain consistency throughout the article. The level of detail you use in the first segment of the blog should carry through every remaining segment. Don't write uneven segments; they raise the bounce rate on your page.

Ø Follow these metrics and standards; this is a proven, standardized blog article writing format and a verified tactic for acquiring and engaging your audience through content writing.

FAQ:

1.WHAT IS CONTENT WRITING?

· Content writing is an art; it moves the reader to take immediate action. Creative content writing educates the audience and conveys your message through unique content.

2.WHAT IS A KEYWORD?

· Any word you type into a search engine to get a specific answer is called a keyword.

3. WHAT ARE ALL THE TYPES OF KEYWORDS?

· SHORT-TAIL KEYWORD

· MID-TAIL KEYWORD

· LONG-TAIL KEYWORD

· LSI KEYWORD

SHORT TAIL KEYWORD

Ø A keyword that contains a single term or a single phrase is called a short-tail keyword. This keyword returns broad search results, so users discover many more results for their query.

EXAMPLE: Non-Veg food

MID TAIL KEYWORD

Ø A keyword that contains more than one term or phrase is called a mid-tail keyword. This type of keyword returns slightly broad search results for the user's query.

EXAMPLE: Non-Veg food Restaurants.

LONG-TAIL KEYWORD

Ø A keyword that contains a long phrase is called a long-tail keyword. This keyword returns exact search results, so users get specific information about their query.

EXAMPLE: Non-Veg food Restaurants in Chennai.

LSI KEYWORDS

Ø LSI stands for Latent Semantic Indexing.

Ø Words that are related to the primary keyword are called LSI keywords.

EXAMPLE: NON-VEG FOODS ( PRIMARY KEYWORD )

Ø Chicken, Mutton, Prawn, Crab
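The short-, mid-, and long-tail distinction described above comes down to how many words the search phrase has. Here is a rough Python sketch of that idea. The cut-offs (`short_max=2`, `mid_max=3`) are assumptions chosen only so that the three examples above land in the categories the post gives them; the post itself does not state exact word counts, and `classify_keyword` is a made-up name.

```python
def classify_keyword(query, short_max=2, mid_max=3):
    """Label a search phrase by its word count (thresholds are assumptions)."""
    word_count = len(query.split())
    if word_count == 0:
        raise ValueError("query must contain at least one word")
    if word_count <= short_max:
        return "short-tail"
    if word_count <= mid_max:
        return "mid-tail"
    return "long-tail"


for phrase in (
    "Non-Veg food",
    "Non-Veg food Restaurants",
    "Non-Veg food Restaurants in Chennai",
):
    print(phrase, "->", classify_keyword(phrase))
```

LSI keywords are left out on purpose: they are defined by being related to the primary keyword, not by length, so a word count cannot identify them.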


Text

Interesting Reviews for Week 41, 2019

What and How the Deaf Brain Sees. Alencar, C. D. C., Butler, B. E., & Lomber, S. G. (2019). Journal of Cognitive Neuroscience, 31(8), 1091–1109.

Neural correlates of sparse coding and dimensionality reduction. Beyeler, M., Rounds, E. L., Carlson, K. D., Dutt, N., & Krichmar, J. L. (2019). PLOS Computational Biology, 15(6), e1006908.

A review of neurobiological factors underlying the selective enhancement of memory at encoding, consolidation, and retrieval. Crowley, R., Bendor, D., & Javadi, A.-H. (2019). Progress in Neurobiology, 179, 101615.

Recent progress in semantic image segmentation. Liu, X., Deng, Z., & Yang, Y. (2019). Artificial Intelligence Review, 52(2), 1089–1106.

The role of the opioid system in decision making and cognitive control: A review. van Steenbergen, H., Eikemo, M., & Leknes, S. (2019). Cognitive, Affective, & Behavioral Neuroscience, 19(3), 435–458.

#science#Neuroscience#computational neuroscience#Brain science#research#scientific publications#reviews#machine learning


Text

pAA?

(Decided to reduce the names to E, O and S because this isn’t something that should be treated seriously or like there’s anything in particular respectable or responsible about it, but might be helpful to someone like me trying to find something out for personal reasons.) So since I finally got my hands on a copy of O and S’s reconstruction of PAA, I thought I’d go through and pick out what I thought were their best etymons against what I think are E’s best etymons. As I’ve said before both reconstructions seem to be basically mass comparison reconstructions.

The wiki page on Afroasiatic claims they agree on basically nothing, and out of 1000ish and 2600ish I still got less than 100 shared roots, so that’s probably accurate. The page and what I pulled out didn’t exactly line up though, but also there were some roots especially on E’s end (due to a need to reconstruct verbs to nominalize for his PS theories) that I outright changed the meaning of because they actually made his individual arguments stronger; like, say, if you had 4 roots that mean knife in each sub-branch, why assume the etymon means cut?

I compared the roots in case there was something that was an obvious oversight, and while I don’t think their methods were all that good (although O and S’s are a lot better), I didn’t want to discount everything in case there was something like “well their vowel systems are different so I’m not counting roots where even V[os] = V[e]” or like “O/S posit (g,x) < *q while E has (g,x) < *ÿ” being overlooked and miraculously they agreed on like 300 more roots than the page gives them credit. There’s also issues like, well, “Is this particular author doing such a bad job but the critics aren’t seeing that, that he/she/they are dragging the other author/s down by undermining their ok etymons?” etc.

So, like, I’ve messed with the data in a way that’s irreparable and shit. Mind that. This isn’t scholarly work and isn’t intended to be. And I messed with some of the data - even if I think it’s more representative of their works at a level, from any kind of responsible position I need to make it absolutely clear that it’s not really reflective of their work, but a reflection of my amateur, shitty thoughts on their concordances.

So, for the curious:

So when putting this list together I accidentally kept mixing up whose roots I wrote on which side. E tends to have labiovelars where OS have o, E has long vowels, OS don’t, E has tones, OS don’t, E has the voiced velar fricative I transcribed as ÿ and ŋ, OS have q and q’ vs k’ and k. E has p’, the rest I guess you’ll have to guess.

I also marked a few words with * for what might be imitative or baby speak words. *? like on horn is “I’m on the fence of it being imitative”

Vowels are pretty much ignored, but otherwise definite correlates:

kol-f bark (n)
dam blood
k'os~k'as bone
naf breath
ben~bin build
di3, da3, du3 call
dumn~deman cloud
k'at' cut*
2ab father*
pir fly*?
ba2 go
sim hear
lib heart
k'ar horn*?
inkwal~2ankol kidney
2er~2âr know
lVk'~lak lick*
tir liver
sum,sim~süm,sim name
wan open
bu~baw place
dak,duk~dik pound
k'u2 rise
tuf spin
ra3-raa3 sun
dab,dib-dub tail
les,lis tongue
ma2 water
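For anyone who wants to redo this kind of "ignore the vowels" comparison mechanically, here's a minimal Python sketch. It assumes the ad hoc transcription used in this post, where a, e, i, o, u, â, ü and the placeholder V are vowels and everything else (including 2, 3 and the ' mark) counts as consonantal. The names `skeleton` and `same_skeleton` are made up, and this is not how E or OS worked; it's just a strict string check.

```python
import unicodedata

# Assumed vowel symbols in this post's transcription; "V" is the
# "some unknown vowel" placeholder. Everything else is kept.
VOWELS = set("aeiouâüV")


def skeleton(root):
    """Return the root with all vowel symbols removed."""
    # Normalise so that precomposed characters like "â" match the set above.
    root = unicodedata.normalize("NFC", root)
    return "".join(ch for ch in root if ch not in VOWELS)


def same_skeleton(a, b):
    """True if two roots agree once vowels are ignored."""
    return skeleton(a) == skeleton(b)


print(same_skeleton("dumn", "deman"))  # cloud
print(same_skeleton("k'os", "k'as"))   # bone
print(same_skeleton("bak", "bax"))     # burn, from the looser list below
```

This check is stricter than what I did by hand: a pair like lVk'~lak differs by the ejective mark and so fails it, even though I counted it as a definite correlate.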

Close matches, differing by not a lot of phonological space, but the correlations seem unsystematic or quasi-systematic (E *k = OS *x? or the other way). A lot of this might be due to obfuscation of the subgroups; it’s known beyond these reconstructions that Semitic b, p, f correspond to Egyptian b, p, f but there doesn’t seem to be a pattern to the correspondences beyond [+labial][-nasal]=[+labial][-nasal]... except some roots where b = m, mostly Egyptian to Semitic (iirc it’s believed that snb = slm).

! maaw~mawut die
! har~heraw day
! t(l)'ok' beat
! bak~bax burn
! k'al~k'ar burn
! (t)san brother
! kor~kw'al angry #related to kidney?
! pak~pax break apart*
! yar~3er burn #n.b. OS *e = E *ya pretty consistently
! ka2(up) cover
! t'ub~duf drip*
! g'arub~ÿar(b) dusk
! 2et~iit eat*?
! gur~guud enclose
! 2ir~2il eye #2il is also given for both by the wiki author
! 2aakw~2ax fire
! ŋiiwr~gir flames
! pur~fir flower
! k'ur~kâr go around
! ĉa3ar~ła2r hair
! qafV3~gâf hold
! qam~kam hold
! fil~bul hole
! ĉa(2i)d~gwi/ad land
! ne2ul~ñaw moist
! bakr~bar morning
! âf~2ap mouth*
! ĉer~sar,sir root
! cab~sVp sew
! sur~tsur sing
! tsoon~soon smell
! bak'~p'ak' split*
! da2~daw walk

Really kind of stretched correspondences, usually requiring twice the amount of special pleading as above:

!! rip~2erib sew
!! dabn~zab hair
!! yawr~yabil bull
!! büł~fil skin
!! 3ir~raw sky

A root that’s probably wrong but I didn’t delete it:

!!! c'eyg~c'a3ek shout

Roots on the wiki article it says are part of “the fragile consensus” but either escaped my standards for good etymologies from E and OS or were lost when I changed E or OS’s proposed definitions to fit the data they presented for etymologization:

>> (2a)bVr bull
>> (2a)dVm land
>> 2igar~kw'ar enclosure
>> 3ayn eye #I think for both this was only found in Sem and Egn, which is proto-Sem-Egn, not PAA
>> bar son
>> gamm mane, beard
>> gVn cheek, chin
>> gwar3~(gora3?) throat #These words weren’t in E’s but look like it and I guessed on what the OS reconstruction would look like
>> gwina3~(gona3?) hand
>> kVn cowife
>> kwaly??? kidney #that this isn’t VnkwVl damns something
>> k'awal, qwar say, call
>> sin tooth
>> siwan know
>> zwr seed
>> łVr root
>> šun sleep, dream

These I sort of gathered based on a decent scholarly Egyptologist’s work