#AI Calling Software Development



#Top Technology Services Company in India#Generative AI Development Company#AI Calling Software#AI Software Development Company#Best Chatbot Service Company#AI Calling Software Development#AI Automation Software#Best AI Chat Bot Development Company#AI Software Dev Company#Top Web Development Company in India#Top Software Services Company in India#Best Product Design Company in World#Best Cloud and Devops Company#Best Analytic Solutions Company#Best Blockchain Development Company in India#Best Tech Blogs in 2024#Creating an AI-Based Product#Custom Software Development for Healthcare#NexaCalling Best AI Calling Bot#Best ERP Management System#WhatsApp Bulk Sender#Neighborhue Frontend Vercel

0 notes


Yandere Sugar Daddy

Money can't buy love, but maybe it doesn't have to.

Yandere! Sugar Daddy who's very nouveau riche. Who has the wealth of the elites but none of their good breeding.

Yandere! Sugar Daddy who's awfully young for someone so wealthy. Barely out of college when his tech startup went public and the cash started pouring in.

Yandere! Sugar Daddy who is still painfully awkward around women.

Being a rich man in a big city means there's no shortage of models and influencers vying for his attention. And Yandere! Sugar Daddy never fails to get flustered when they're introduced to him.

Long legs, perfect skin, tiny ski slope noses... They're the kind of girls who wouldn't give him the time of day back in college and suddenly they're running their hands up his chest and whispering that he's just so clever, so accomplished. What guy wouldn't fall for it?

But he can never keep them around for long.

Their interest slowly dies out when he starts rambling about software development and production scale and AI integration. Money is a great motivator but all his girlfriends seem to leave for greener pastures. For millionaires with better social skills and better taste.

Yandere! Sugar Daddy who ran into you entirely on accident. The club was too loud, the girls too pretty, the alcohol too rich. He slipped out of VIP and into the street, pressing his forehead against the cool brick and trying not to spew on the new designer shoes his ex persuaded him to get.

And that was when you came into his life. Cool hands on his shoulder and a voice telling him to take a deep breath and drink some of your water.

Yandere! Sugar Daddy who looks up at you through his lashes, his face flushed from too much booze and being too near you. He can't fathom it. A girl helping him not because of his cash or connections, but because they're actually a kind person.

Yandere! Sugar Daddy who grabs your hand when you turn to go. Your friends are calling to you to stop messing around with random drunks and he manages to slip you his business card, begging you to call him so he can thank you properly.

Yandere! Sugar Daddy who wakes up with a killer hangover and your face burned into his eyelids. Who feels his heart jump when he opens his phone and sees a text from you.

Hope your night got better - y/n

Yandere! Sugar Daddy who immediately zooms in on your profile picture. A candid shot but it still makes him blush. Before the morning is over, he's already tracked down your social media.

Yandere! Sugar Daddy who pores over every inch of your life. Your job, your studies, your friends...

Yandere! Sugar Daddy who retypes his message at least a dozen times before he finally responds to you. Who invites you to the most exclusive restaurant in the city as a thank you.

Yandere! Sugar Daddy who picks you up in the most expensive car he owns. Who smiles a little at the careful way you close the door and buckle your seat belt. You're just as uncomfortable around luxury as he was.

Yandere! Sugar Daddy who doesn't expect much from the date. He's learned not to go on tangents about technology and work, but without it he feels lost.

Yandere! Sugar Daddy who realises you're more than capable of carrying a conversation. You're energetic and funny and interested in what he has to say. He feels himself opening up to you and before long, he's deep into a rant about data safety and you actually listen to him.

Yandere! Sugar Daddy who realises you complement him. Like a puzzle piece finally slotting into place.

Yandere! Sugar Daddy who ends the night with a lipstick stain on his cheek and a big, goofy grin on his face.

Yandere! Sugar Daddy who calls you the second he wakes up and invites you to spend the afternoon learning to horse ride.

And when you tell him you have work, he just laughs and tells you he'll triple whatever you're getting paid for the day. You nearly faint when he keeps his word and sends you a deposit worth more than your monthly cheque.

Yandere! Sugar Daddy who wants to call you his girlfriend more than anything. His girl. He loves the way it sounds.

Yandere! Sugar Daddy who tags along when you go grocery shopping and whips out his card to pay for it all when your back is turned.

Yandere! Sugar Daddy who sends you a huge bouquet every week because you once mentioned liking lilies.

And the closer you get, the more time you spend kissing him and curling up in his bed, the more he spends on you.

Yandere! Sugar Daddy who uses spring break to take you on a tour of the Mediterranean. Who rents out entire villas and chateaus to impress you.

Yandere! Sugar Daddy who has your birthday dress custom made by an actual high fashion house. Who zips you up and kisses your neck and says he's never met a more beautiful girl.

Yandere! Sugar Daddy who spends shareholder meetings daydreaming about you. Who has to pinch himself to stay focused.

Yandere! Sugar Daddy who's helpless to stop himself falling for you. You're so real, so empty of pretence and greed.

Yandere! Sugar Daddy who showers you with all the wealth he has and is blind to how uncomfortable it makes you.

Yandere! Sugar Daddy who looks at you with a vacant smile when you try and break things off. Who pulls out his phone and sends you a deposit with so many zeros you have to rub your eyes to make sure you're seeing it right. Who asks if that's enough for more of your time or if he should double it.

Do you want a new car? An apartment? He'll give you anything, anything in the world.

Yandere! Sugar Daddy who looks like a kicked dog when you say you don't want any of it. You hate feeling indebted to him. You hate feeling like some vapid trophy wife. You hate living off his charity.

He can't understand it. You could work for decades and not afford even a quarter of what he can give you. Is he so unpleasant, so unlovable, that you're willing to turn your back on a life of luxury?

Yandere! Sugar Daddy who comes up behind you and slams the door shut when you try to leave.

You've always seen him as a nice guy, someone awkward and gentle. But the look in his eyes now makes you question all of it.

Yandere! Sugar Daddy whose voice is a low, broken rasp. He sounds on the verge of tears and on the verge of fury all at once.

You think you can just leave after everything you've been through together? After the fortune he spent trying to make you happy?

No way, baby.

Yandere! Sugar Daddy who grabs your wrist and yanks you up against him.

Yandere! Sugar Daddy who laughs when you threaten to scream. Luxury penthouse, remember? Totally soundproofed. Totally private. No one gets in or out without his permission.

It's just you and him, like it should have been from the beginning.

Yandere! Sugar Daddy who squeezes your wrist hard enough to hurt. Who kisses you so rough you cut your lips on your teeth.

Yandere! Sugar Daddy who yanks at the pretty dress that he bought you. You want to be an ungrateful bitch? You want to throw his kindness back in his face? Oh, he's going to teach you a lesson.

You fucking owe him.

And he's going to use your body until that debt is paid.

#Shoutout to the anon who requested this#I want a man to pay for my groceries too#Yandere#Yandere x Reader#yandere x you#yandere scenarios#yandere drabbles#yandere imagines#yandere oc x you#Reader insert#Yandere Sugar Daddy#Fem reader

7K notes



The conversation around AI is going to get away from us quickly because people lack the language to distinguish types of AI, and it's not their fault. Companies love to slap "AI" on anything a computer program does that they believe can pass for "intelligent". This muddies the waters: the exact same word covers a wide umbrella, and people who want to talk about AI often don't know how to draw the distinctions within it.

I'm a software engineer and not a data scientist, so I'm not exactly at the level of domain expert. But I work with data scientists, and I have at least rudimentary college-level knowledge of machine learning and linear algebra from my CS degree. So I want to give some quick guidance.

What is AI? And what is not AI?

So what's the difference between just a computer program, and an "AI" program? Computers can do a lot of smart things, and companies love the idea of calling anything that seems smart enough "AI", but industry-wise the question of "how smart" a program is has nothing to do with whether it is AI.

A regular, non-AI computer program is procedural, and rigidly defined. I could "program" traffic light behavior that essentially goes `if (light === "green") { go(); } else { stop(); }`. I've told it in simple and rigid terms what condition to check, and how to behave based on that check. (A better program would have a lot more to check for, like signs and road conditions and pedestrians in the street, and those things will still need to be spelled out.)
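Here is that rigidly defined rule written out as runnable Python, as a minimal sketch. The `pedestrian_in_road` check is a hypothetical extra rule, added only to show that every new consideration has to be spelled out by hand.

```python
def traffic_decision(light: str, pedestrian_in_road: bool = False) -> str:
    """Procedural, non-AI logic: every rule is written out explicitly."""
    # Hypothetical extra rule: a person in the road overrides a green light.
    if pedestrian_in_road:
        return "stop"
    # The original rule: go on green, otherwise stop.
    if light == "green":
        return "go"
    return "stop"


print(traffic_decision("green"))  # go
print(traffic_decision("red"))  # stop
print(traffic_decision("green", pedestrian_in_road=True))  # stop
```

If a situation isn't covered by one of these `if` checks, the program simply doesn't know about it; someone has to notice and add another rule.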

An AI traffic light behavior is generated by machine learning, which, simplistically, is a huge cranking machine of linear algebra that you feed training data into and that "learns" from it. By "learning" I mean it develops a complex and opaque model of parameters that fits the training data (but doesn't over-fit it). In this case the training data would probably include thousands of videos of car behavior at traffic intersections. Through parameter tweaking and model adjustment, data scientists turn this crank over and over until they end up with a model that, in very opaque terms, can guess the right behavioral output for scenarios it has never seen.

A well-trained model would be fed a green light and know to go, and a red light and know to stop, and 'green but there's a kid in the road' and know to stop. A very very well-trained model can probably do this better than my program above, because it has the capacity to be more adaptive than my rigidly-defined thing if the rigidly-defined program is missing some considerations. But if the AI model makes a wrong choice, it is significantly harder to trace down why exactly it did that.

Because again, the reason it's making this decision may be very opaque. It's like engineering a very specific plinko machine which gets tweaked to be very good at taking a road input and giving the right output. But imagine that plinko machine contains millions of pegs, and none of them necessarily correlates to anything to do with the road. There's possibly no "if green, go, else stop" to look for. (Maybe there is for traffic lights specifically, since that example is intentionally very simplistic. But a model trained to recognize handwritten numbers, for example, likely contains no parameters at all that you could map to human ideas like "look for a rigid line in the number". The parameters may all be, to humans, meaningless.)
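To make "learning" concrete, here is a deliberately tiny stand-in: a perceptron, one of the simplest machine-learning models, swapped in purely for illustration (real driving models are vastly larger and more complex). The two features and the four labeled examples are made up. Instead of being told the rule, it adjusts its parameters until its outputs match the training labels.

```python
# Hypothetical training data: (is_green, kid_in_road) -> 1 for "go", 0 for "stop".
TRAINING_DATA = [
    ((1, 0), 1),  # green, clear road -> go
    ((0, 0), 0),  # red, clear road -> stop
    ((1, 1), 0),  # green, kid in road -> stop
    ((0, 1), 0),  # red, kid in road -> stop
]


def predict(weights, bias, features):
    # Weighted sum of the inputs; answer "go" (1) only if it clears zero.
    total = bias + sum(w * x for w, x in zip(weights, features))
    return 1 if total > 0 else 0


def train(data, epochs=20, learning_rate=1.0):
    weights = [0.0, 0.0]
    bias = 0.0
    for _ in range(epochs):
        for features, label in data:
            error = label - predict(weights, bias, features)
            # Perceptron update: nudge each parameter toward the correct answer.
            weights = [w + learning_rate * error * x for w, x in zip(weights, features)]
            bias += learning_rate * error
    return weights, bias


weights, bias = train(TRAINING_DATA)
print(predict(weights, bias, (1, 0)))  # 1 (go)
print(predict(weights, bias, (1, 1)))  # 0 (stop)
print(weights, bias)  # [1.0, -1.0] 0.0
```

Nowhere in this code is there an "if green, go" line; the behavior lives in the learned numbers. With two parameters they happen to be readable. With millions, they generally aren't, which is the opacity problem described above.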

So, that's basics. Here are some categories of things which get called AI:

"AI" which is just genuinely not AI

There's plenty of software that follows a normal, procedural program defined rigidly, with no linear algebra model training, that companies would love to brand as "AI" because it sounds cool.

Something like motion detection/tracking might be sold as artificially intelligent. But under the covers that can be done as simply as "if some range of pixels changes color by a certain amount, flag as motion".
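That pixel-change rule, usually called frame differencing, fits in a few lines with no training at all. A minimal sketch, assuming frames are small 2D lists of grayscale values (0 to 255); both thresholds are illustrative, not tuned values from any real product.

```python
def detect_motion(prev_frame, curr_frame, pixel_threshold=30, min_changed=2):
    """Flag motion if enough pixels changed brightness by more than a threshold."""
    changed = 0
    for prev_row, curr_row in zip(prev_frame, curr_frame):
        for prev_px, curr_px in zip(prev_row, curr_row):
            if abs(curr_px - prev_px) > pixel_threshold:
                changed += 1
    return changed >= min_changed


still = [[10, 10, 10], [10, 10, 10], [10, 10, 10]]
moved = [[10, 200, 200], [10, 10, 10], [10, 10, 10]]
print(detect_motion(still, still))  # False
print(detect_motion(still, moved))  # True
```

Every decision here traces back to one readable comparison, which is exactly what makes it procedural rather than machine-learned.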

2. AI which IS genuinely AI, but is not the kind of AI everyone is talking about right now

"AI", by which I mean machine learning using linear algebra, is very good at being fed a lot of training data, and then coming up with an ability to go and categorize real information.

The AI technology that looks at cells and determines whether they're cancerous uses this technology. So does OCR (Optical Character Recognition), the technology that can take an image of handwritten text and transcribe it. Again, it's using linear algebra, so yes, it's AI.
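As a toy illustration of "fed training data, then categorize new information", here is a nearest-centroid classifier. This is a swapped-in, very simple technique; real cell-screening or OCR systems use far larger models. The two-number feature vectors and labels are made up.

```python
from math import dist


def fit_centroids(examples):
    """'Training': average the feature vectors belonging to each label."""
    sums, counts = {}, {}
    for features, label in examples:
        if label not in sums:
            sums[label] = [0.0] * len(features)
            counts[label] = 0
        sums[label] = [s + f for s, f in zip(sums[label], features)]
        counts[label] += 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def classify(centroids, features):
    """Assign the label whose centroid is closest (Euclidean distance)."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Hypothetical measurements, e.g. (cell size, irregularity score).
training = [
    ((1.0, 0.1), "benign"),
    ((1.2, 0.2), "benign"),
    ((3.0, 0.9), "abnormal"),
    ((2.8, 0.8), "abnormal"),
]
centroids = fit_centroids(training)
print(classify(centroids, (1.1, 0.15)))  # benign
print(classify(centroids, (2.9, 0.85)))  # abnormal
```

The pattern is the same as in the bigger systems: learn something from labeled examples, then sort new inputs into the learned categories. It sorts; it doesn't generate anything.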

Many other such examples exist, and have been around for quite a good number of years. They share the genre of technology, which is machine learning models, but these are not the Large Language Model Generative AI that is all over the media. Criticizing these would be like criticizing airplanes when you're actually mad at military drones. It's the same "makes fly in the air" technology but their impact is very different.

3. The AI we ARE talking about: "ChatGPT"-type Generative AI, which uses LLMs ("Large Language Models")

If there's one word I wish people knew in all this, it's LLM (Large Language Model). This describes the KIND of machine learning model that ChatGPT is fueled by. LLMs are trained on so much human language that they can take an input of conversational language and create a predictive output that is coherent to humans. (Image generators like Midjourney and Stable Diffusion are a related but separate kind of generative model. Stable Diffusion, for example, is a diffusion model that uses a text encoder to interpret the prompt, rather than being an LLM itself. But AI art generation has risen hand-in-hand with the advent of powerful LLMs, as part of the same generative AI wave.)

This technology isn't exactly brand new. (Predictive text has been using language models for a while, but it's more like the mostly innocent and much less successful older sibling of some celebrity, whom no one really thinks about.) What's new with ChatGPT is the scale and power of LLM-based AI technology.
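Predictive text is a good way to see "predict the next word" at small scale. The sketch below is a bigram counter, a much older and simpler technique than an LLM (it only counts which word followed which), swapped in purely to illustrate prediction learned from training text. The tiny corpus is made up.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words followed it in the training text."""
    words = text.lower().split()
    following = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        following[current][nxt] += 1
    return following


def suggest_next(following, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    counts = following.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


corpus = "see you soon . see you later . see you soon . talk to you soon"
model = train_bigrams(corpus)
print(suggest_next(model, "see"))  # you
print(suggest_next(model, "you"))  # soon
```

An LLM does conceptually the same job, predicting what comes next, but with a neural network with billions of parameters that looks at long stretches of context instead of a single previous word. That difference in scale is what makes its output read as coherent conversation.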

This is generative AI, and more specifically, large language model generative AI.

(Data scientists, feel free to add on or correct anything.)

3K notes



So, let me try and put everything together here, because I really do think it needs to be talked about.

Today, Unity announced that it intends to apply a fee to use its software. Then it got worse.

For those not in the know, Unity is the most popular free-to-use video game development tool, offering a basic version for individuals who want to learn how to create games or create independently, alongside paid versions for corporations or people who want more features. It's decent enough at this job; it has issues, but for the price point I can't complain. As the ideal entry point into creating in this medium, it's a very important piece of software.

But speaking of tools, the CEO is a massive one. When he was at EA, he advocated for using what sounds outright like emotional manipulation to coerce players into microtransactions.

"A consumer gets engaged in a property, they might spend 10, 20, 30, 50 hours on the game and then when they're deep into the game they're well invested in it. We're not gouging, but we're charging and at that point in time the commitment can be pretty high."

He also called game developers who don't discuss monetization early in the planning stages of development, quote, "fucking idiots".

So that sets the stage for what might be one of the most bald-faced greediest moves I've seen from a corporation in a minute. Most at least have the sense of self-preservation to hide it.

A few hours ago, Unity posted this announcement on the official blog.

Effective January 1, 2024, we will introduce a new Unity Runtime Fee that’s based on game installs. We will also add cloud-based asset storage, Unity DevOps tools, and AI at runtime at no extra cost to Unity subscription plans this November. We are introducing a Unity Runtime Fee that is based upon each time a qualifying game is downloaded by an end user. We chose this because each time a game is downloaded, the Unity Runtime is also installed. Also we believe that an initial install-based fee allows creators to keep the ongoing financial gains from player engagement, unlike a revenue share.

Now there are a few red flags to note in this pitch immediately.

Unity is planning on charging a fee on all games which use its engine.

This is a flat fee per number of installs.

They are using an always online runtime function to determine whether a game is downloaded.

There are just so many things wrong with this that it's hard to know where to start, not helped by this FAQ, which doubled down on a lot of the major issues people had.

I guess let's start with what people noticed first. Because it's using a system baked into the software itself, Unity would not be differentiating between a "purchase" and a "download". If someone uninstalls and reinstalls a game, that's two downloads. If someone gets a new computer or a new console and downloads a game already purchased from their account, that's two downloads. If someone pirates the game, the studio will be asked to pay for that download.
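The gap between purchases and installs can be sketched like this, assuming (as the post infers) that the fee counts every runtime install while a store counts purchases. The event log and its format are hypothetical; Unity has not published how its counting model actually works.

```python
# Hypothetical event log: (player_id, event) pairs.
events = [
    ("alice", "purchase"),
    ("alice", "install"),
    ("alice", "install"),  # reinstall after uninstalling
    ("alice", "install"),  # new computer, same account
    ("bob", "purchase"),
    ("bob", "install"),
    ("pirate", "install"),  # pirated copy, never purchased
]


def count_purchases(log):
    return sum(1 for _, event in log if event == "purchase")


def count_installs(log):
    # An install-based fee sees every installation, regardless of who paid.
    return sum(1 for _, event in log if event == "install")


print(count_purchases(events))  # 2
print(count_installs(events))  # 5
```

Two sales, five billable installs: the studio earned money twice but would owe the fee five times.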

Q: How are you going to collect installs? A: We leverage our own proprietary data model. We believe it gives an accurate determination of the number of times the runtime is distributed for a given project.

Q: Is software made in unity going to be calling home to unity whenever it's ran, even for enterprice licenses? A: We use a composite model for counting runtime installs that collects data from numerous sources. The Unity Runtime Fee will use data in compliance with GDPR and CCPA. The data being requested is aggregated and is being used for billing purposes.

Q: If a user reinstalls/redownloads a game / changes their hardware, will that count as multiple installs? A: Yes. The creator will need to pay for all future installs. The reason is that Unity doesn’t receive end-player information, just aggregate data.

Q: What's going to stop us being charged for pirated copies of our games? A: We do already have fraud detection practices in our Ads technology which is solving a similar problem, so we will leverage that know-how as a starting point. We recognize that users will have concerns about this and we will make available a process for them to submit their concerns to our fraud compliance team.

This is potentially related to a new system that will require Unity Personal developers to go online at least once every three days.

Starting in November, Unity Personal users will get a new sign-in and online user experience. Users will need to be signed into the Hub with their Unity ID and connect to the internet to use Unity. If the internet connection is lost, users can continue using Unity for up to 3 days while offline. More details to come, when this change takes effect.
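The 3-day offline window in that notice amounts to a simple time check. This is a guess at the logic based only on the quoted text; the function name and the use of a last-online timestamp are assumptions, not Unity's implementation.

```python
from datetime import datetime, timedelta

OFFLINE_GRACE = timedelta(days=3)  # "up to 3 days while offline", per the notice


def can_use_offline(last_online: datetime, now: datetime) -> bool:
    """True while the time since the last online sign-in is within the grace window."""
    return now - last_online <= OFFLINE_GRACE


last_seen = datetime(2023, 11, 1, 9, 0)
print(can_use_offline(last_seen, datetime(2023, 11, 3, 9, 0)))  # True
print(can_use_offline(last_seen, datetime(2023, 11, 5, 9, 0)))  # False
```

Whatever the real mechanism, any check like this needs the software to contact Unity's servers regularly, which is why it raises questions about install tracking.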

It's unclear whether this requirement will be attached to any and all Unity games, though it would explain how they're theoretically able to track "the number of installs", and why the methodology for tracking these installs is so shit, as we'll discuss later.

Unity claims that it will only apply this fee to games which surpass both a threshold of installs and a threshold of yearly revenue.

Only games that meet the following thresholds qualify for the Unity Runtime Fee: Unity Personal and Unity Plus: Those that have made $200,000 USD or more in the last 12 months AND have at least 200,000 lifetime game installs. Unity Pro and Unity Enterprise: Those that have made $1,000,000 USD or more in the last 12 months AND have at least 1,000,000 lifetime game installs.
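Read literally, those thresholds reduce to a two-condition check. A minimal sketch using only the numbers quoted above; the function and dictionary names are hypothetical, and nothing in the quote says how revenue is actually measured.

```python
# (revenue in the last 12 months, USD; lifetime installs) per plan, as quoted.
THRESHOLDS = {
    "personal": (200_000, 200_000),
    "plus": (200_000, 200_000),
    "pro": (1_000_000, 1_000_000),
    "enterprise": (1_000_000, 1_000_000),
}


def qualifies_for_runtime_fee(plan: str, revenue_last_12_months: float, lifetime_installs: int) -> bool:
    """Both conditions must hold: the announcement says AND, not OR."""
    min_revenue, min_installs = THRESHOLDS[plan.lower()]
    return revenue_last_12_months >= min_revenue and lifetime_installs >= min_installs


print(qualifies_for_runtime_fee("personal", 250_000, 300_000))  # True
print(qualifies_for_runtime_fee("personal", 150_000, 5_000_000))  # False
print(qualifies_for_runtime_fee("pro", 250_000, 300_000))  # False
```

The install side is the one a subscription service can push over the line almost instantly, so in practice the revenue test is the only real gate.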

They don't say how they're going to collect information on a game's revenue. Likely this is just to say that they're only interested in squeezing larger products (games like Genshin Impact and Honkai: Star Rail, Fate Grand Order, Among Us, and Fall Guys) and not every 2-dollar puzzle platformer that drops on Steam. But these larger products also have the easiest time porting off of Unity and the most incentive to do so, meaning that, realistically, those most heavily impacted are going to be the ones who just barely meet this threshold, most of them indie developers.

Aggro Crab Games, one of the first to properly break this story, points out that systems like Xbox Game Pass, which is already pretty predatory towards smaller developers, will quickly inflate a game's "lifetime game installs". That means even a game barely skimming the $200k revenue threshold will be asked to pay a fee per install, not a percentage of said revenue.

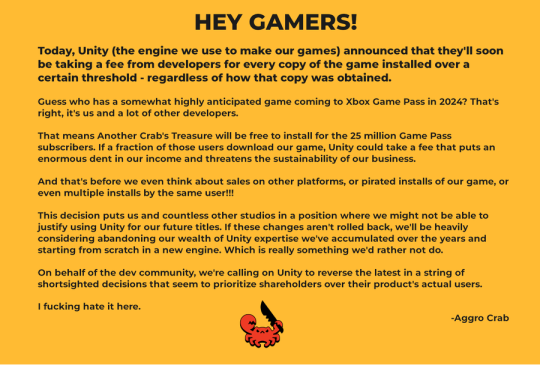

[IMAGE DESCRIPTION: Hey Gamers!

Today, Unity (the engine we use to make our games) announced that they'll soon be taking a fee from developers for every copy of the game installed over a certain threshold - regardless of how that copy was obtained.

Guess who has a somewhat highly anticipated game coming to Xbox Game Pass in 2024? That's right, it's us and a lot of other developers.

That means Another Crab's Treasure will be free to install for the 25 million Game Pass subscribers. If a fraction of those users download our game, Unity could take a fee that puts an enormous dent in our income and threatens the sustainability of our business.

And that's before we even think about sales on other platforms, or pirated installs of our game, or even multiple installs by the same user!!!

This decision puts us and countless other studios in a position where we might not be able to justify using Unity for our future titles. If these changes aren't rolled back, we'll be heavily considering abandoning our wealth of Unity expertise we've accumulated over the years and starting from scratch in a new engine. Which is really something we'd rather not do.

On behalf of the dev community, we're calling on Unity to reverse the latest in a string of shortsighted decisions that seem to prioritize shareholders over their product's actual users.

I fucking hate it here.

-Aggro Crab - END DESCRIPTION]

That fee, by the way, is a flat fee. Not a percentage, not a royalty. This means that any games made in Unity expecting any kind of success are heavily incentivized to cost as much as possible.

[IMAGE DESCRIPTION: A table listing the various fees by number of Installs over the Install Threshold vs. version of Unity used, ranging from $0.01 to $0.20 per install. END DESCRIPTION]

Basic elementary school math tells us that, at the top rate of $0.20 per install and assuming one install per sale, a game that comes out for $1.99 will be paying about 10% of each sale to Unity, whereas jacking the price up to $59.99 lowers that percentage to something closer to 0.3%. Obviously any company, especially one in the kind of financial desperation that a sudden anchor on all its revenue is going to create, is going to choose the latter.
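The arithmetic, spelled out. This assumes the top $0.20 rate from the table above and exactly one install per paid sale; reinstalls, subscription installs, and piracy would all push the real share higher.

```python
TOP_RATE = 0.20  # highest per-install fee in the table above, in USD


def fee_share(price: float, fee_per_install: float = TOP_RATE) -> float:
    """Fee as a fraction of one sale's revenue, assuming one install per sale."""
    return fee_per_install / price


for price in (1.99, 59.99):
    print(f"${price}: {fee_share(price):.2%} of revenue")
# $1.99: 10.05% of revenue
# $59.99: 0.33% of revenue
```

Because the fee is flat, its share of revenue shrinks as the price grows, which is the incentive to raise prices.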

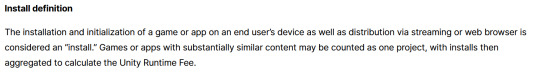

Furthermore, and following the trend of "fuck anyone who doesn't ask for money", Unity helpfully defines what an install is on their main site.

As I'm looking at this page right now, it says

The installation and initialization of a game or app on an end user’s device as well as distribution via streaming is considered an “install.” Games or apps with substantially similar content may be counted as one project, with installs then aggregated to calculate the Unity Runtime Fee.

However, I saw a screenshot saying something different, and utilizing the Wayback Machine we can see that this phrasing was changed at some point in the few hours since this announcement went up. Instead, it reads:

The installation and initialization of a game or app on an end user’s device as well as distribution via streaming or web browser is considered an “install.” Games or apps with substantially similar content may be counted as one project, with installs then aggregated to calculate the Unity Runtime Fee.

Screenshot for posterity:

That would mean web browser games made in Unity would count towards this install threshold. You could legitimately drive the count up simply by continuously refreshing the page. The FAQ, again, doubles down.

Q: Does this affect WebGL and streamed games? A: Games on all platforms are eligible for the fee but will only incur costs if both the install and revenue thresholds are crossed. Installs - which involves initialization of the runtime on a client device - are counted on all platforms the same way (WebGL and streaming included).

And, what I personally consider to be the most suspect claim in this entire debacle, they claim that "lifetime installs" includes installs prior to this change going into effect.

Will this fee apply to games using Unity Runtime that are already on the market on January 1, 2024? Yes, the fee applies to eligible games currently in market that continue to distribute the runtime. We look at a game's lifetime installs to determine eligibility for the runtime fee. Then we bill the runtime fee based on all new installs that occur after January 1, 2024.

Again, again, doubled down in the FAQ.

Q: Are these fees going to apply to games which have been out for years already? If you met the threshold 2 years ago, you'll start owing for any installs monthly from January, no? (in theory). It says they'll use previous installs to determine threshold eligibility & then you'll start owing them for the new ones. A: Yes, assuming the game is eligible and distributing the Unity Runtime then runtime fees will apply. We look at a game's lifetime installs to determine eligibility for the runtime fee. Then we bill the runtime fee based on all new installs that occur after January 1, 2024.
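Per that answer, installs from before 2024 count toward eligibility, but only installs from January 1, 2024 onward are billed. A minimal sketch of that split, assuming a single hypothetical flat rate of $0.20 (real rates vary by plan and volume per the table above) and deliberately leaving out the revenue test; amounts are in cents to avoid floating-point rounding.

```python
INSTALL_THRESHOLD = 200_000  # Personal/Plus lifetime-install threshold from the announcement
HYPOTHETICAL_RATE_CENTS = 20  # illustrative flat $0.20 per install


def billable_fee_cents(installs_before_2024: int, installs_since_2024: int) -> int:
    """Eligibility uses lifetime installs; billing uses only installs since January 1, 2024.

    The revenue threshold is omitted from this sketch.
    """
    lifetime_installs = installs_before_2024 + installs_since_2024
    if lifetime_installs < INSTALL_THRESHOLD:
        return 0
    return installs_since_2024 * HYPOTHETICAL_RATE_CENTS


# 500k installs from earlier years, 10k new ones after the change:
print(billable_fee_cents(500_000, 10_000) / 100)  # 2000.0 (dollars)
# The same 10k new installs on a game with a small install history:
print(billable_fee_cents(50_000, 10_000) / 100)  # 0.0
```

The same 10k new installs cost nothing or $2,000 depending entirely on installs that happened before the fee was announced.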

That would mean using installs from before the fee was ever announced to decide who gets billed, holding developers to a contract based on actions they took before the contract existed!

Okay. I think that's everything. So far.

There is one thing that I want to mention before ending this post, unfortunately it's a little conspiratorial, but it's so hard to believe that anyone genuinely thought this was a good idea that it's stuck in my brain as a significant possibility.

A few days ago it was reported that Unity's CEO sold 2,000 shares of his own company.

On September 6, 2023, John Riccitiello, President and CEO of Unity Software Inc (NYSE:U), sold 2,000 shares of the company. This move is part of a larger trend for the insider, who over the past year has sold a total of 50,610 shares and purchased none.

I would not be surprised if this decision gets reversed tomorrow, and it turns out it was literally only made so the CEO could game the stock of his own goddamn company, because I would sooner believe that this whole thing is some idiotic attempt at committing fraud than a real monetization strategy, even knowing how unfathomably greedy these people can be.

So, with all that said, what do we do now?

Well, in all likelihood you won't need to do anything. As I said, some of the biggest names in the industry would be directly affected by this change, and you can bet your bottom dollar that they're not just going to take it lying down. After all, the only way to stop a greedy CEO is with a greedier CEO, right?

(I fucking hate it here.)

And that's not mentioning the indie devs who are already talking about abandoning the engine.

[Links display tweets from the lead developer of Among Us saying it'd be less costly to hire people to move the game off of Unity and Cult of the Lamb's official twitter saying the game won't be available after January 1st in response to the news.]

That being said, I'm still shaken by all this. The fact that Unity is openly willing to go back and punish its developers for ever having used the engine in the past makes me question my relationship to it.

The news has boosted the visibility of Godot, a free, open-source alternative which, if you're interested, is likely a better option than Unity at this point. Mostly, though, I just hope we can get out of this whole fucking environment where creatives are treated as an endless mill of free profits, continuously ratcheted up and up to drive the unsustainable, infinite corporate growth that our entire economy is based on for some fuckin reason.

Anyways, that's that. I find big posts like this that break everything down to be helpful.

#Unity#Unity3D#Video Games#Game Development#Game Developers#fuckshit#I don't know what to tag news like this

6K notes


Note

whats wrong with ai?? genuinely curious <3

okay let's break it down. i'm an engineer, so i'm going to come at you from a perspective that may be different than someone else's.

i don't hate ai in every aspect. in theory, there are a lot of instances where ai can, in fact, help us do things a lot better than we could without it. here are a few examples:

ai detecting cancer

ai sorting recycling

some practical housekeeping that gemini (google ai) can do

all of the above examples are ways in which ai works with humans to do things in parallel with us. it's not overstepping: it's sorting recycling, using pixels at a micro-level to detect abnormalities that we as humans cannot, tidying up a list. these are all really small, helpful ways that ai can work with us.

everything else about ai works against us. in general, ai is a huge consumer of natural resources. every prompt that you put into character.ai, chatgpt? this wastes water + energy. it's not free. a machine somewhere in the world has to swallow your prompt, call on a model to feed data into it and process more data, and then has to generate an answer for you all in a relatively short amount of time.

that is crazy expensive. someone is paying for that, and if it isn't you with your own money, it's the strain on the power grid, the water that cools the computers, the A/C that cools the data centers. and you aren't the only person using ai. chatgpt alone gets millions of users every single day, with probably thousands of prompts per second, so multiply your personal consumption by millions, and you can start to see how the picture is becoming overwhelming.

that is energy consumption alone. we haven't even talked about how problematic ai is ethically. there is currently no comprehensive federal law in the united states governing how ai should be developed, deployed, or used.

what does this mean for you?

it means that anything you post online is subject to data mining by an ai model (because why would they need to ask if there are no laws to stop them? wtf does some idiot software engineer in the back room of an office, making 3x your salary, care what it means to you?). oh, that little fic you posted to wattpad that got a lot of attention? well, now it's being used to teach ai how to write. oh, that sketch you made using adobe that you want to sell? adobe didn't tell you that anything you save to the cloud is now subject to being used for their ai models, so now your art is being replicated to generate ai images in photoshop, without crediting you (they have since said they don't do this... but terms of service and privacy policies were never made to be human-readable, and i can't imagine they are the only company to sneakily try this). oh, your apartment just installed a new system that uses facial recognition to let residents inside? oh, they didn't train their model on anyone but white people, so now all the black people living in that apartment building can't get into their homes. oh, you want to apply for a new job? the ai model that scans resumes learned from historical data that more men than women work that role (so the model basically thinks men are better than women), so now your resume is getting thrown out because you're a woman.

ai learns from data. and data is flawed. data is human. and as humans, we are racist, homophobic, misogynistic, transphobic, divided. so the ai models we train will learn from this. ai learns from people's creative works--their personal and artistic property. and now it's scrambling them all up to spit out generated images and written works that no one would ever want to read (because it's no longer a labor of love), and they're using that to make money. they're profiting off of people, and there's no one to stop them. they're also using generated images as marketing tools, to trick idiots on facebook, to make it so hard to be media literate that we have to question every single thing we see because now we don't know what's real and what's not.
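to see how a model picks up bias from its data, here's a deliberately naive sketch: a "model" that learns hire rates per group from past decisions and rejects anyone from a group with a low historical rate. the data is made up, the cutoff is arbitrary, and real resume screeners are way more complex, but it shows the basic failure mode: skewed history in, skewed decisions out.

```python
from collections import defaultdict

# Made-up historical decisions: (gender, was_hired).
history = [("man", True)] * 8 + [("man", False)] * 2 + [("woman", True)] * 2 + [("woman", False)] * 8


def learn_hire_rates(records):
    """'Training': compute the past hire rate for each group."""
    hired, total = defaultdict(int), defaultdict(int)
    for group, was_hired in records:
        total[group] += 1
        hired[group] += was_hired  # True counts as 1, False as 0
    return {group: hired[group] / total[group] for group in total}


def screen(rates, group, cutoff=0.5):
    # The "model" never looks at skills: it only echoes past patterns.
    return rates.get(group, 0.0) >= cutoff


rates = learn_hire_rates(history)
print(rates)  # {'man': 0.8, 'woman': 0.2}
print(screen(rates, "woman"))  # False
```

nobody wrote "reject women" anywhere in that code. it learned it from the history it was given.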

the problem with ai is that it's doing more harm than good. and we as a society aren't doing our due diligence to understand the unintended consequences of it all. we aren't angry enough. we're so scared of stifling innovation that we're letting it regulate itself (aka letting companies decide), which has never been a good idea. we see it do one cool thing, and somehow that makes up for all the rest of the bullshit?

#yeah i could talk about this for years#i could talk about it forever#im so passionate about this lmao#anyways#i also want to point out the examples i listed are ONLY A FEW problems#there's SO MUCH MORE#anywho ai is bleh go away#ask#ask b#🐝's anons#ai

1K notes

Text

I first posted this in a thread over on BlueSky, but I decided to port (a slightly edited version of) it over here, too.

Entirely aside from the absurd and deeply incorrect idea [NaNoWriMo has posited] that machine-generated text and images are somehow "leveling the playing field" for marginalized groups, I think we need to interrogate the base assumption that acknowledging how people have different abilities is ableist/discriminatory. Everyone SHOULD have access to an equal playing field when it comes to housing, healthcare, the ability to exist in public spaces, participating in general public life, employment, etc.

That doesn't mean every person gets to achieve every dream no matter what.

I am 39 years old and I have scoliosis and genetically tight hamstrings, both of which deeply impact my mobility. I will never be a professional contortionist. If I found a robot made out of tentacles and made it do contortion and then demanded everyone call me a contortionist, I would be rightly laughed out of any contortion community. Also, to make it equivalent, the tentacle robot would be provided for "free" by a huge corporation based on stolen unpaid routines from actual contortionists, and using it would boil drinking water in the Southwest into nothingness every time I asked it to do anything, and the whole point would be to avoid paying actual contortionists.

If you cannot - fully CAN NOT - do something, even with accommodations, that does not make you worth less as a person, and it doesn't mean the accommodations shouldn't exist, but it does mean that maybe that thing is not for you.

But the people who CAN NOT do things are not the ones using "AI." It's people who WILL NOT do things.

"AI art means disabled people can be artists who wouldn't be able to otherwise!" There are armless artists drawing with their feet. There are paralyzed artists drawing with their mouths, or with special tracking software that translates their eye movements into lines. There are deeply dyslexic authors writing via text-to-speech. There are deaf musicians. If you actually want to do a thing and care about doing the thing, you can almost always find a way to do the thing.

Telling a machine to do it for you isn't equalizing access for the marginalized. It's cheating. It's anti-labor. It makes it easier for corporations not to pay creative workers, AND THAT'S WHY THEY'RE PUSHING IT EVERYWHERE.

I can't wait for the bubble to burst on machine-generated everything, just like it did for NFTs. When it does some people are going to discover they didn't actually learn anything or develop any transferable skills or make anything they can be proud of.

I hope a few of those people pick up a pencil.

It's never too late to start creating. It's never too late to actually learn something. It's never too late to realize that the work is the point.

#AI#writing#just fucking do it#if you want to be a writer then write#literally no one can do it for you#especially not machine-generated text machines#the work is the point

1K notes

Text

for the past few years, every software developer who has extensive experience and knows what they're talking about has had pretty much the same opinion on LLM code assistants: they're OK for some tasks but generally shit. Having something that automates code writing is not new. Before AI, codegen meant scripts that generated code you'd otherwise have to write by hand for a task, code so repetitive that having a script do it is a genuine time saver.
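To make that pre-AI kind of codegen concrete, here is a minimal sketch of such a script. The `generate_enum` helper and the state names are hypothetical, invented for illustration: it emits a C enum plus a matching to-string function from a single list, so the two can never drift apart. It produces exactly the same output every time it runs, which is the property the post contrasts with an LLM.

```python
# Hypothetical codegen script: turn one list of names into a C enum and a
# matching to-string function, so nobody has to hand-write (or hand-sync) either.


def generate_enum(type_name: str, names: list) -> str:
    prefix = type_name.upper()
    lines = ["typedef enum {"]
    for name in names:
        lines.append(f"    {prefix}_{name},")
    lines.append(f"}} {type_name};")  # "}}" renders as a literal "}"
    lines.append("")
    lines.append(f"const char *{type_name}_to_string({type_name} value) {{")
    lines.append("    switch (value) {")
    for name in names:
        lines.append(f'    case {prefix}_{name}: return "{name}";')
    lines.append("    }")
    lines.append('    return "UNKNOWN";')
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    # Deterministic: the same input always produces the same C source.
    print(generate_enum("state", ["IDLE", "RUNNING", "STOPPED"]))
```

Adding a new state means editing one list and rerunning the script, with no chance of a statistical guess slipping in.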

this is largely the best that LLMs can do with code, but they're still not as good as a simple script because of the inherently unreliable nature of LLMs being a big honkin statistical model and not a purpose-built machine.

none of the senior devs who say this are out there shouting from the rooftops that LLMs are evil and they're going to replace us, because we've been through this concept so many times over many years. Automation does not eliminate coding jobs; it saves time to focus on other work.

the one thing I wish senior devs would warn newbies about is that you should not rely on LLMs for anything substantial. you should definitely not use them as a learning tool. doing so will hinder you in the long run because you don't practice the eternally useful skill of "reading things and experimenting until you figure it out". You will never stop reading things and experimenting until you figure it out. Senior devs may have more institutional knowledge and better instincts but they still encounter things that are new to them and they trip through it like a newbie would. this is called "practice" and you need it to learn things.

206 notes

Text

Noosciocircus agent backgrounds, former jobs at C&A, assigned roles, and current internal status.

Kinger

Former professor — Studied child psychology and computer science, moved into neobotanics via germination theory and seedlet development.

Seedlet trainer — Socialized and educated newly germinated seedlets to suit their future assignments. I.e. worked alongside a small team to serve as seedlets’ social parents, K-12 instructors, and upper-education mentors in rapid succession (about a year).

Intermediary — Inserted to assist cooperation and understanding of Caine.

Partially mentally mulekicked — Lives in state of forgetfulness after abstraction of spouse, is prone to reliving past from prior to event.

Ragatha

Former EMT — Worked in a rural community.

Semiohazard medic — Underwent training to treat and assess mulekick victims and to administer care in the presence of semiohazards.

Nootic health supervisor — Inserted to provide nootic endurance training, treat psychological mulekick, and maintain morale.

Obsessive-compulsive — Receives new agents and struggles to maintain morale among team and herself due to low trust in her honesty.

Jax

Former programmer — Gained experience when acquired out of university by a large software company.

Scioner — Developed virtual interfaces for seedlets with which to operate machinery.

Circus surveyor — Inserted to assess and map nature of circus simulation, potentially finding avenues of escape.

Anomic — Detached from morals and social stake. Uncooperative and gleefully combative.

Gangle

Former navy sailor — Performed clerical work as a yeoman, served in one of the first semiotically-armed submarines.

Personnel manager — Kept records of C&A researcher employment and managed the mess hall.

Task coordinator — Inserted to organize team effort towards escape.

Reclused — Abandoned task and lives in quiet, depressive state.

Zooble

No formal background — Onboarded out of secondary school for certification by C&A as part of a youth outreach initiative.

Mule trainer — Physically handled mules, living semiohazard conveyors for tactical use.

Semiohazard specialist — Inserted to identify, evaluate, and attempt to disarm semiotic tripwires.

Debilitated and self-isolating — Suffers chronic vertigo from randomly pulled avatar. Struggles to participate in adventures for fear of triggering an episode.

Pomni

Former accountant — Worked for a chemical research firm before completing her accreditation to become a biochemist.

Collochemist — Performed mesh checkups and oversaw industrial hormone synthesis.

Field researcher — Inserted to collect data from fellows and organize reports for indeterminate recovery. Versed in scientific conduct.

In shock — Currently acclimating to new condition. Fresh and overwhelming preoccupation with escape.

Caine

Neglected — Due to project deadline tightening, Caine’s socialization was expedited in favor of lessons pertinent to his practical purpose. Emerged a well-meaning but awkward and insecure individual unprepared for noosciocircus entrapment.

Prototype — Germinated as an experimental mustard, or semiotic filter seedlet, capable of subconsciously assembling semiohazards and detonating them in controlled conditions.

Nooscioarchitect — Constructs spaces and nonsophont AI for the agents to occupy and interact with using his asset library and computation power. Organizes adventures to mentally stimulate the agents, unknowingly lacing them with hazards.

Helpless — After semiohazard overexposure, an agent’s attachment to their avatar dissolves and their blackroom exposes, a process called abstraction. These open holes in the noosciocircus simulation spill potentially hazardous memories and emotion from the abstracted agent’s mind. Caine stores them in the cellar, a stimulus-free and infoproofed zone that calms the abstracted and nullifies emitted hazards. He genuinely cares about the inserted, but after being able to do nothing more than damage control for a continually deteriorating situation, he is beginning to feel the weight of his failure in a way he did not get to learn how to express.

#the amazing digital circus#noosciocircus#char speaks#digital circus#tadc Kinger#tadc Ragatha#tadc Jax#tadc gangle#tadc zooble#tadc Pomni#tadc caine#bad ending#sophont ai

215 notes

Text

You can go to Settings --> Apps --> Photos --> (scroll down to) Enhanced Visual Search --> toggle to off

"Apple last year deployed a mechanism for identifying landmarks and places of interest in images stored in the Photos application on its customers' iOS and macOS devices and enabled it by default, seemingly without explicit consent.

Apple customers have only just begun to notice.

The feature, known as Enhanced Visual Search, was called out last week by software developer Jeff Johnson, who expressed concern in two write-ups about Apple's failure to explain the technology, which is believed to have arrived with iOS 18.1 and macOS 15.1 on October 28, 2024."

162 notes

Text

As a top technology service provider, Tech Avtar specializes in AI Product Development, ensuring excellence and affordability. Our agile methodologies guarantee quick turnaround times without compromising quality. Visit our website for more details or contact us at +91-92341-29799.

#Top Technology Services Company in India#Generative AI Development Company#AI Calling Software#AI Software Development Company#Best Chatbot Service Company#AI Calling Software Development#AI Automation Software#Best AI Chat Bot Development Company#AI Software Dev Company#Top Web Development Company in India#Top Software Services Company in India#Best Product Design Company in World#Best Cloud and Devops Company#Best Analytic Solutions Company#Best Blockchain Development Company in India#Best Tech Blogs in 2024#Creating an AI-Based Product#Custom Software Development for Healthcare#Best ERP Management System#WhatsApp Bulk Sender#Neighborhue Frontend Vercel

0 notes

Text

The leaked “AI Aloy” footage from Sony has left such a bitter taste in my mouth that, hours later, I’m still fuming and have even more words to say about the overall sinister nature of its implications.

Let's talk about it.

I want to start by saying that there is a difference between what is colloquially called "AI" as a tool for artists and developers in which their software uses their own sources to streamline the process (for example, the "Content Aware" fill tool that has been present in Photoshop for at least a decade), and "generative AI/genAI" that relies on unauthorised theft of resources to artificially splice data together based on prompts. I have no qualms with the former as it relies on being fed its own sources and is an aid for specific purposes. It is not artificial intelligence, but a tool. GenAI, on the other hand, is immoral, unethical, planet-destroying garbage.

The latter is what is being pushed in that egregious video footage. It is the epitome of a tone-deaf, soulless, capitalistic wet dream: dangerously misogynistic slop, and I am not exaggerating. And I think it's also the culmination of years of fandom spaces being joined by people who have never taken part in fandom, who never bothered to learn the etiquette of a space that existed long before they arrived, who demand changes for their own comfort, and who see it as another commodity.

I'm not the first to say this and others before me have been far smarter about it, but there has been a marked change in fandom culture the past few years. Many have said it goes back to COVID, when people generally not involved in fandom spaces joined because they had nothing else to do.

The thing about fandom is that for pretty much as long as it's existed, it has been a safe space for marginalised voices. It's no coincidence that the transformative works of fandom—fiction, art, meta, etc.—have been places for queer voices, for women, for people of colour, for the trans and nonbinary community, etc. With more people joining, these safe spaces have become less so. There are demands for people to "stop shipping" characters who aren't an established canon ship. There are personal and threatening attacks on people who have a different viewpoint on a character or plot. People have been stalked. People have been doxxed. This isn't necessarily new, but it is happening with increased frequency and ferocity, especially from younger members and the terfy crowd. The safe space fandom provided marginalised voices really seems to be shrinking.

Outside of fandom culture itself, there is a rising trend of needing instant gratification, of sacrificing unique protagonists for the sake of "relatability" and "self-inserts." There are readers who ignore descriptions of female protagonists and male love interests in romance books so that they can self-insert (and others are calling for authors to stop describing them entirely). There are booktok-ers who, believe it or not, complain about the number of words on a page. I'm not saying their opinions are wrong in general—there is a market for what they seek—but their reviews push for these stipulations to become the norm. And these influencers get enough engagement that their views are seen as profitable by the corporations and execs in charge.

So it isn't really surprising that now fandom is being seen as something that corporations can milk for all its capitalistic worth. Why wouldn't corporations invade a space they've ignored for years as inconsequential now that it's mainstream? After all, fandom was just full of the "weirdos" before, and now it's full of "normies!" This is a space that has been established for decades, built from the ground up by people who value the source material(s), now full of anyone and everyone who will soak up one morsel of customized instant gratification for the dopamine hit.

And that's where genAI comes in.

Why is this so sinister in regards to Sony's recent leaked footage using AI Aloy interacting with a user?

First off: It's Aloy.

Look, if you've perused my social media or interacted with me online at all, you know I love Horizon. My computer room is full of fan-made merch. I've written almost a million words of fanfiction in three years. I've drawn fanart. I helped construct a fanmade dating sim. Horizon has been a huge part of my life for the past three years.

I'm not ignorant of its flaws. I'm also aware of the fact that Horizon is often hated as an IP, and Aloy is the target of a lot of rage from certain audiences. Not to generalise, but let's be clear: the complaints are largely about Horizon being "woke DEI garbage" (you know, for having a queer female protagonist, for featuring other women and queer characters in prominent roles, for having people of colour be important in the story, for being anti-capitalist and pro-environmentalism, etc.—the same tired, ignorant arguments we've all heard), and about Aloy being "fat" and "looking like a man" (hopefully they stretch before that reach so they don't pull something).

So why would Sony use Aloy to showcase an AI conversation instead of someone like Kratos or Joel, who come from more popular and acclaimed IPs?

One possibility is Sony trying to sink Horizon or Guerrilla Games as a company, spurring so much backlash from the leak that the franchise is doomed and dropped so Guerrilla either goes under or focuses on old IPs like Killzone.

Or the more disgusting possibility is that something like genAI is made for the people who loudly and proudly proclaim how "anti-woke" they are, who have detested Horizon and Aloy from the beginning, and now they have a way to "like" Aloy. They have a way to make her say or do or react to whatever kinds of depravity they want to throw at her. They have a way to control and manipulate a fictional woman to fulfill their own incel agenda.

On top of that—Horizon? The video game about how a defective AI made by a trillionaire wiped out humanity? The sequel that revealed another rogue AI made by thousand-year-old billionaires is set to wipe out Earth again? That Horizon franchise is what Sony is using to showcase AI slop? Let's not even go into how the character responses are literally so painfully out of character they can't be taken seriously at all. The irony is so heavy-handed it's almost crushing.

The other reprehensible part of this is the fact that video game actors are still on strike, and this strike is to protect themselves from being replaced by AI. This test footage did sound like a messed up Siri, but Ashly Burch (Aloy's actress) has been in support of the strike. The insult of using her character to showcase this slop is beyond words.

All I will say in conclusion is that I genuinely hope this is not endorsed, supported, or aided by Guerrilla Games. If this plays any part in Horizon 3 or any future part of the franchise, I speak for myself but can confidently say I am out.

Finally, please do not support any genAI slop, especially in fandom spaces. Make them know it is not wanted, not needed, and is in fact detested and will lose them money in the end.

On that happy note I'm off to bed.

99 notes

Text

On Saturday, an Associated Press investigation revealed that OpenAI's Whisper transcription tool creates fabricated text in medical and business settings despite warnings against such use. The AP interviewed more than 12 software engineers, developers, and researchers who found the model regularly invents text that speakers never said, a phenomenon often called a “confabulation” or “hallucination” in the AI field.

Upon its release in 2022, OpenAI claimed that Whisper approached “human level robustness” in audio transcription accuracy. However, a University of Michigan researcher told the AP that Whisper created false text in 80 percent of public meeting transcripts examined. Another developer, unnamed in the AP report, claimed to have found invented content in almost all of his 26,000 test transcriptions.

The fabrications pose particular risks in health care settings. Despite OpenAI’s warnings against using Whisper for “high-risk domains,” over 30,000 medical workers now use Whisper-based tools to transcribe patient visits, according to the AP report. The Mankato Clinic in Minnesota and Children’s Hospital Los Angeles are among 40 health systems using a Whisper-powered AI copilot service from medical tech company Nabla that is fine-tuned on medical terminology.

Nabla acknowledges that Whisper can confabulate, but it also reportedly erases original audio recordings “for data safety reasons.” This could cause additional issues, since doctors cannot verify accuracy against the source material. And deaf patients may be highly impacted by mistaken transcripts since they would have no way to know if medical transcript audio is accurate or not.

The potential problems with Whisper extend beyond health care. Researchers from Cornell University and the University of Virginia studied thousands of audio samples and found Whisper adding nonexistent violent content and racial commentary to neutral speech. They found that 1 percent of samples included “entire hallucinated phrases or sentences which did not exist in any form in the underlying audio” and that 38 percent of those included “explicit harms such as perpetuating violence, making up inaccurate associations, or implying false authority.”
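Chaining the two reported percentages shows what they imply for the full set of samples. This is simple arithmetic on the figures quoted above, not a number the researchers are reported as stating themselves:

$$
P(\text{harmful}) = P(\text{hallucinated}) \times P(\text{harmful} \mid \text{hallucinated}) = 0.01 \times 0.38 = 0.0038 \approx 0.4\%
$$

In other words, roughly 38 of every 10,000 audio samples would contain a harmful fabrication.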

In one case from the study cited by AP, when a speaker described “two other girls and one lady,” Whisper added fictional text specifying that they “were Black.” In another, the audio said, “He, the boy, was going to, I’m not sure exactly, take the umbrella.” Whisper transcribed it to, “He took a big piece of a cross, a teeny, small piece … I’m sure he didn’t have a terror knife so he killed a number of people.”

An OpenAI spokesperson told the AP that the company appreciates the researchers’ findings and that it actively studies how to reduce fabrications and incorporates feedback in updates to the model.

Why Whisper Confabulates

The key to Whisper’s unsuitability in high-risk domains comes from its propensity to sometimes confabulate, or plausibly make up, inaccurate outputs. The AP report says, "Researchers aren’t certain why Whisper and similar tools hallucinate," but that isn't true. We know exactly why Transformer-based AI models like Whisper behave this way.

Whisper is based on technology that is designed to predict the next most likely token (chunk of data) that should appear after a sequence of tokens provided by a user. In the case of ChatGPT, the input tokens come in the form of a text prompt. In the case of Whisper, the input is tokenized audio data.
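A drastically simplified illustration of "predict the next most likely token": the sketch below is a word-level bigram counter, not a Transformer, and its tiny corpus is invented. It still shows the key property, which is that the model returns whichever continuation was most frequent in its training data, with no notion of whether that continuation is true.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional


def train_bigrams(corpus: List[str]) -> Dict[str, Counter]:
    """Count, for each word, which words followed it in the training text."""
    counts: Dict[str, Counter] = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.split()
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts


def predict_next(counts: Dict[str, Counter], prev: str) -> Optional[str]:
    """Return the most frequent follower of `prev`, or None if never seen."""
    if prev not in counts:  # membership test does not create a defaultdict key
        return None
    return counts[prev].most_common(1)[0][0]


# Invented toy corpus; real models train on vastly more data.
corpus = [
    "thanks for watching",
    "thanks for listening",
    "thanks for watching everyone",
]
model = train_bigrams(corpus)
print(predict_next(model, "for"))  # watching (seen twice vs. once)
```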

The transcription output from Whisper is a prediction of what is most likely, not what is most accurate. Accuracy in Transformer-based outputs is typically proportional to the presence of relevant accurate data in the training dataset, but it is never guaranteed. If there is ever a case where there isn't enough contextual information in its neural network for Whisper to make an accurate prediction about how to transcribe a particular segment of audio, the model will fall back on what it “knows” about the relationships between sounds and words it has learned from its training data.

According to OpenAI in 2022, Whisper learned those statistical relationships from “680,000 hours of multilingual and multitask supervised data collected from the web.” But we now know a little more about the source. Given Whisper's well-known tendency to produce certain outputs like "thank you for watching," "like and subscribe," or "drop a comment in the section below" when provided silent or garbled inputs, it's likely that OpenAI trained Whisper on thousands of hours of captioned audio scraped from YouTube videos. (The researchers needed audio paired with existing captions to train the model.)

There's also a phenomenon called “overfitting” in AI models where information (in this case, text found in audio transcriptions) encountered more frequently in the training data is more likely to be reproduced in an output. In cases where Whisper encounters poor-quality audio in medical notes, the AI model will produce what its neural network predicts is the most likely output, even if it is incorrect. And the most likely output for any given YouTube video, since so many people say it, is “thanks for watching.”
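One way to picture the "thanks for watching" effect is a noisy-channel analogy: score each candidate transcript by how well it matches the audio times how common it was in training. Whisper is an end-to-end model and does not literally compute this product, so treat the sketch as an analogy only; the candidate phrases and every probability in it are invented. When the audio is clear, the evidence dominates. When the audio is silent or garbled and fits every candidate equally, training-data frequency (the prior) decides on its own.

```python
def decode(audio_likelihood: dict, prior: dict) -> str:
    """Pick the candidate with the highest likelihood * prior score."""
    scores = {text: audio_likelihood.get(text, 0.0) * p for text, p in prior.items()}
    return max(scores, key=scores.get)


# Invented "training frequencies": the YouTube-style sign-off is very common.
prior = {
    "thanks for watching": 0.6,
    "please subscribe": 0.3,
    "take the umbrella": 0.1,
}

# Clear audio: the acoustic evidence strongly favours what was actually said.
clear = {"take the umbrella": 0.9, "thanks for watching": 0.05, "please subscribe": 0.05}

# Silence or garbled audio: the evidence is flat, so it cannot break the tie.
garbled = {text: 1 / 3 for text in prior}

print(decode(clear, prior))    # take the umbrella
print(decode(garbled, prior))  # thanks for watching
```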

In other cases, Whisper seems to draw on the context of the conversation to fill in what should come next, which can lead to problems because its training data could include racist commentary or inaccurate medical information. For example, if many examples of training data featured speakers saying the phrase “crimes by Black criminals,” when Whisper encounters a “crimes by [garbled audio] criminals” audio sample, it will be more likely to fill in the transcription with “Black."

In the original Whisper model card, OpenAI researchers wrote about this very phenomenon: "Because the models are trained in a weakly supervised manner using large-scale noisy data, the predictions may include texts that are not actually spoken in the audio input (i.e. hallucination). We hypothesize that this happens because, given their general knowledge of language, the models combine trying to predict the next word in audio with trying to transcribe the audio itself."

So in that sense, Whisper "knows" something about the content of what is being said and keeps track of the context of the conversation, which can lead to issues like the one where Whisper identified two women as being Black even though that information was not contained in the original audio. Theoretically, this erroneous scenario could be reduced by using a second AI model trained to pick out areas of confusing audio where the Whisper model is likely to confabulate and flag the transcript in that location, so a human could manually check those instances for accuracy later.
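The article imagines a second AI model for flagging risky stretches of audio. As a far cruder stand-in (a different technique, named plainly), the sketch below flags segments using confidence signals the transcriber already reports. It assumes segment dicts shaped like those returned by the open-source `openai-whisper` package's `transcribe()` (`start`, `end`, `text`, `avg_logprob`, `no_speech_prob`, `compression_ratio`); the threshold values echo that package's default fallback thresholds but are assumptions to tune, not validated cut-offs. Nothing here detects a fabrication directly. It only routes suspicious segments to a human.

```python
def flag_for_review(
    segments: list,
    logprob_threshold: float = -1.0,
    no_speech_threshold: float = 0.6,
    compression_threshold: float = 2.4,
) -> list:
    """Return (start, end, text, reasons) tuples for segments a human should re-check."""
    flagged = []
    for seg in segments:
        reasons = []
        if seg["avg_logprob"] < logprob_threshold:
            reasons.append("low average token confidence")
        if seg["no_speech_prob"] > no_speech_threshold:
            # Text produced where the model itself thinks there was no speech.
            reasons.append("text over probable silence")
        if seg["compression_ratio"] > compression_threshold:
            # Highly compressible text often means repetitive, looping output.
            reasons.append("repetitive output")
        if reasons:
            flagged.append((seg["start"], seg["end"], seg["text"], reasons))
    return flagged


# Hand-made example segments, not real Whisper output.
segments = [
    {"start": 0.0, "end": 4.2, "text": "Patient reports mild headache.",
     "avg_logprob": -0.2, "no_speech_prob": 0.01, "compression_ratio": 1.3},
    {"start": 4.2, "end": 9.0, "text": "Thanks for watching!",
     "avg_logprob": -1.4, "no_speech_prob": 0.85, "compression_ratio": 1.1},
]

for start, end, text, reasons in flag_for_review(segments):
    print(f"{start:.1f}-{end:.1f}s {text!r}: {', '.join(reasons)}")
```

Keeping the original audio matters for this approach: a flagged segment is only useful if a human can listen to it again.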

Clearly, OpenAI's advice not to use Whisper in high-risk domains, such as critical medical records, was a good one. But health care companies are constantly driven by a need to decrease costs by using seemingly "good enough" AI tools—as we've seen with Epic Systems using GPT-4 for medical records and UnitedHealth using a flawed AI model for insurance decisions. It's entirely possible that people are already suffering negative outcomes due to AI mistakes, and fixing them will likely involve some sort of regulation and certification of AI tools used in the medical field.

87 notes

Text

One way to spot patterns is to show AI models millions of labelled examples. This method requires humans to painstakingly label all this data so they can be analysed by computers. Without them, the algorithms that underpin self-driving cars or facial recognition remain blind. They cannot learn patterns.
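A toy version of learning from labelled examples shows why the labels matter. The nearest-centroid classifier below is a deliberately simple stand-in for the far larger models the excerpt describes, and its points and labels are invented: it averages the examples humans have tagged with each label, then assigns new data to the closest average. Strip out the labels and there is nothing to average, which is the sense in which such algorithms "remain blind" without labelled data.

```python
import math
from collections import defaultdict


def train_centroids(examples):
    """examples: list of ((x, y), label) pairs, each label supplied by a human."""
    sums = defaultdict(lambda: [0.0, 0.0])
    counts = defaultdict(int)
    for (x, y), label in examples:
        sums[label][0] += x
        sums[label][1] += y
        counts[label] += 1
    # One average point (centroid) per label.
    return {label: (s[0] / counts[label], s[1] / counts[label]) for label, s in sums.items()}


def classify(centroids, point):
    """Assign the label whose centroid is closest to the point."""
    return min(centroids, key=lambda label: math.dist(point, centroids[label]))


labelled = [((1.0, 1.0), "cat"), ((1.5, 2.0), "cat"), ((8.0, 8.0), "dog"), ((9.0, 7.5), "dog")]
centroids = train_centroids(labelled)
print(classify(centroids, (2.0, 1.0)))  # cat
```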

The algorithms built in this way now augment or stand in for human judgement in areas as varied as medicine, criminal justice, social welfare and mortgage and loan decisions. Generative AI, the latest iteration of AI software, can create words, code and images. This has transformed them into creative assistants, helping teachers, financial advisers, lawyers, artists and programmers to co-create original works.

To build AI, Silicon Valley’s most illustrious companies are fighting over the limited talent of computer scientists in their backyard, paying hundreds of thousands of dollars to a newly minted Ph.D. But to train and deploy them using real-world data, these same companies have turned to the likes of Sama, and their veritable armies of low-wage workers with basic digital literacy, but no stable employment.

Sama isn’t the only service of its kind globally. Start-ups such as Scale AI, Appen, Hive Micro, iMerit and Mighty AI (now owned by Uber), and more traditional IT companies such as Accenture and Wipro are all part of this growing industry estimated to be worth $17bn by 2030.

Because of the sheer volume of data that AI companies need to be labelled, most start-ups outsource their services to lower-income countries where hundreds of workers like Ian and Benja are paid to sift and interpret data that trains AI systems.

Displaced Syrian doctors train medical software that helps diagnose prostate cancer in Britain. Out-of-work college graduates in recession-hit Venezuela categorize fashion products for e-commerce sites. Impoverished women in Kolkata’s Metiabruz, a poor Muslim neighbourhood, have labelled voice clips for Amazon’s Echo speaker. Their work couches a badly kept secret about so-called artificial intelligence systems – that the technology does not ‘learn’ independently, and it needs humans, millions of them, to power it. Data workers are the invaluable human links in the global AI supply chain.

This workforce is largely fragmented, and made up of the most precarious workers in society: disadvantaged youth, women with dependents, minorities, migrants and refugees. The stated goal of AI companies and the outsourcers they work with is to include these communities in the digital revolution, giving them stable and ethical employment despite their precarity. Yet, as I came to discover, data workers are as precarious as factory workers, their labour is largely ghost work and they remain an undervalued bedrock of the AI industry.

As this community emerges from the shadows, journalists and academics are beginning to understand how these globally dispersed workers impact our daily lives: the wildly popular content generated by AI chatbots like ChatGPT, the content we scroll through on TikTok, Instagram and YouTube, the items we browse when shopping online, the vehicles we drive, even the food we eat, it’s all sorted, labelled and categorized with the help of data workers.

Milagros Miceli, an Argentinian researcher based in Berlin, studies the ethnography of data work in the developing world. When she started out, she couldn’t find anything about the lived experience of AI labourers, nothing about who these people actually were and what their work was like. ‘As a sociologist, I felt it was a big gap,’ she says. ‘There are few who are putting a face to those people: who are they and how do they do their jobs, what do their work practices involve? And what are the labour conditions that they are subject to?’

Miceli was right – it was hard to find a company that would allow me access to its data labourers with minimal interference. Secrecy is often written into their contracts in the form of non-disclosure agreements that forbid direct contact with clients and public disclosure of clients’ names. This is usually imposed by clients rather than the outsourcing companies. For instance, Facebook-owner Meta, who is a client of Sama, asks workers to sign a non-disclosure agreement. Often, workers may not even know who their client is, what type of algorithmic system they are working on, or what their counterparts in other parts of the world are paid for the same job.

The arrangements of a company like Sama – low wages, secrecy, extraction of labour from vulnerable communities – are skewed towards inequality. After all, this is ultimately affordable labour. Providing employment to minorities and slum youth may be empowering and uplifting to a point, but these workers are also comparatively inexpensive, with almost no relative bargaining power, leverage or resources to rebel.

Even the objective of data-labelling work felt extractive: it trains AI systems, which will eventually replace the very humans doing the training. But of the dozens of workers I spoke to over the course of two years, not one was aware of the implications of training their replacements, that they were being paid to hasten their own obsolescence.

— Madhumita Murgia, Code Dependent: Living in the Shadow of AI

70 notes

Text

We are proud to announce Blorb AI™ - the first true artificial general intelligence (AGI). Tired of having to pay "living wages" to so-called creatives? Worry no more, Blorb can automate and optimize everything from art to software development!

Try it now:

52 notes

Text

Women pulling lever on a drilling machine, 1978. Lee, Howl & Company Ltd., Tipton, Staffordshire, England. Photograph by Nick Hedges. Image credit: Nick Hedges Photography.

* * * *

Tim Boudreau

About the whole DOGE-will-rewrite Social Security's COBOL code in some new language thing, since this is a subject I have a whole lot of expertise in, a few anecdotes and thoughts.

Some time in the early 2000s I was doing some work with the real-time Java team at Sun, and there was a huge defense contractor with a peculiar query: Could we document how much memory an instance of every object type in the JDK uses? And could we guarantee that that number would never change, and definitely never grow, in any future Java version?

I remember discussing this with a few colleagues in a pub after work, and talking it through, and we all arrived at the conclusion that the only appropriate answer to this question was "Hell no." and that it was actually kind of idiotic.

Say you've written the code, in Java 5 or whatever, that launches nuclear missiles. You've tested it thoroughly, it's been reviewed six ways from Sunday because you do that with code like this (or you really, really, really should). It launches missiles and it works.

A new version of Java comes out. Do you upgrade? No, of course you don't upgrade. It works. Upgrading buys you nothing but risk. Why on earth would you? Because you could blow up the world 10 milliseconds sooner after someone pushes the button?

It launches fucking missiles. Of COURSE you don't do that.

There is zero reason to ever do that, and to anyone managing such a project who's a grownup, that's obvious. You don't fuck with things that work just to be one of the cool kids. Especially not when the thing that works is life-or-death (well, in this case, just death).

Another case: In the mid-2000s I trained some developers at Boeing. They had all this Fortran materials analysis code from the '70s - really fussy stuff, so you could do calculations like: if you have a sheet of composite material that is 2mm of this grade of aluminum bonded to that variety of fiberglass with this type of resin, and you drill a 1/2" hole in it, what is the effect on the strength of that airplane wing part when this amount of torque is applied at this angle? Really fussy, hard-to-do but when-it's-right-it's-right-forever stuff.

They were taking a very sane, smart approach to it: Leave the Fortran code as-is - it works, don't fuck with it - just build a nice, friendly graphical UI in Java on top of it that *calls* the code as-is.

We are used to broken software. The public has been trained to expect low quality as a fact of life - and the industry is rife with "agile" methodologies *designed* to churn out crappy software, because crappy guarantees a permanent ongoing revenue stream. It's an article of faith that everything is buggy (and if it isn't, we've got a process or two to sell you that will make it that way).

It's ironic. Every other form of engineering involves moving parts and things that wear and decay and break. Software has no moving parts. Done well, it should need *vastly* less maintenance than your car or the bridges it drives on. Software can actually be *finished* - it is heresy to say it, but given a well-defined problem, it is possible to actually *solve* it and move on, and not need to babysit or revisit it. In fact, most of our modern technological world is possible because of such solved problems. But we're trained to ignore that.

Yeah, COBOL is really long in the tooth, and few people on earth want to code in it. But they have a working system with decades invested in addressing bugs and corner-cases.

Rewriting stuff - especially things that are life-and-death - in a fit of pique, or because of an emotional reaction to the technology used, or because you want to use the toys all the cool kids use - is idiotic. It's immaturity on display to the world.

Doing it with AI that's going to read COBOL code and churn something out in another language - so now you have code that no human has written or read, and that no human understands - is simply insane. And even the best software translators plus AI out there are going to get things wrong - grievously wrong. And the odds of anyone figuring out what or where before it leads to disaster are low, never mind tracing that back to the original code and figuring out what that was supposed to do.

They probably should find their way off COBOL simply because people who know it and want to endure using it are hard to find and expensive. But you do that gradually, walling off parts of the system that work already and calling them from your language-du-jour, not building any new parts of the system in COBOL, and when you do need to make a change in one of those walled off sections, you migrate just that part.
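The Python sketch below shows what "walling off" can look like in practice. It follows the gradual approach described above, which is often called the strangler-fig pattern. The sketch assumes the system can be split into named components with a simple request/response interface. `StranglerRouter`, its methods, and the component names are all hypothetical. Plain Python functions stand in for the existing COBOL routines; a real system would reach them through whatever bridge its platform provides.

```python
from typing import Callable, Dict

# A component takes a request dict and returns a response dict.
Handler = Callable[[dict], dict]


class StranglerRouter:
    """Send each named component either to its walled-off legacy routine
    or, once it has been migrated, to its replacement (hypothetical design)."""

    def __init__(self) -> None:
        self._legacy: Dict[str, Handler] = {}
        self._migrated: Dict[str, Handler] = {}

    def register_legacy(self, name: str, handler: Handler) -> None:
        # Wrap a working routine as-is; its internals are never touched.
        self._legacy[name] = handler

    def migrate(self, name: str, handler: Handler) -> None:
        # Called only when an existing component actually needs to change.
        if name not in self._legacy:
            raise KeyError(f"unknown component: {name}")
        self._migrated[name] = handler

    def add_new(self, name: str, handler: Handler) -> None:
        # Brand-new features go straight into the new language, never legacy.
        self._migrated[name] = handler

    def call(self, name: str, request: dict) -> dict:
        # Use the migrated or new version if there is one, else the legacy one.
        if name in self._migrated:
            return self._migrated[name](request)
        return self._legacy[name](request)


# Hypothetical stand-ins for two existing legacy routines.
def legacy_benefit(request: dict) -> dict:
    return {"amount": request["base"] * 2}


def legacy_address(request: dict) -> dict:
    return {"address": request["address"].upper()}


router = StranglerRouter()
router.register_legacy("benefit", legacy_benefit)
router.register_legacy("address", legacy_address)

# Only the address component needed a change, so only it is migrated;
# the benefit calculation keeps running on the legacy code.
router.migrate("address", lambda req: {"address": req["address"].strip().upper()})

# A new feature is written in the new language from the start.
router.add_new("notice", lambda req: {"text": f"Payment of {req['amount']} scheduled"})
```

The design choice is that callers only ever see `router.call(...)`. As a result, which components have moved is invisible to them, and any one component can be moved without touching the rest.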

We're basically talking about something like replacing the engine of a plane while it's flying. Now, do you do that a part-at-a-time with the ability to put back any piece where the new version fails? Or does it sound like a fine idea to vaporize the existing engine and beam in an object which a next-word-prediction software *says* is a contraption that does all the things the old engine did, and hope you don't crash?
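One way to make "the ability to put back any piece" concrete is a parallel run. The trusted routine keeps answering while the replacement runs alongside it, and every disagreement is logged instead of reaching users. The Python sketch below is illustrative only: `with_fallback` and both rounding routines are hypothetical. The candidate deliberately carries the kind of subtle, plausible-looking bug a machine translation might introduce: Python's built-in `round()` rounds exact halves to the nearest even number.

```python
from typing import Callable, List, Tuple, TypeVar

In = TypeVar("In")
Out = TypeVar("Out")


def with_fallback(
    legacy: Callable[[In], Out],
    candidate: Callable[[In], Out],
    mismatches: List[Tuple[In, object]],
) -> Callable[[In], Out]:
    """Run the trusted legacy routine and the new candidate side by side.
    Callers always get the legacy answer; any disagreement or crash in the
    candidate is recorded so the new piece can be fixed or pulled."""

    def run(request: In) -> Out:
        expected = legacy(request)
        try:
            actual = candidate(request)
        except Exception as exc:  # the new code failed outright
            mismatches.append((request, exc))
            return expected
        if actual != expected:
            mismatches.append((request, actual))
        return expected

    return run


def legacy_rounding(cents: int) -> int:
    # Stand-in for a trusted routine: round half up to the nearest dollar.
    return (cents + 50) // 100 * 100


def candidate_rounding(cents: int) -> int:
    # Plausible-looking rewrite with a subtle bug: round() rounds 2.5 to 2.
    return round(cents / 100) * 100


log: List[Tuple[int, object]] = []
rounded = with_fallback(legacy_rounding, candidate_rounding, log)
results = [rounded(c) for c in (149, 150, 250)]
```

Here the caller still gets 300 for 250 cents, while the log records that the rewrite said 200. Only once the log stays empty over real traffic would it be reasonable to promote the candidate.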

The people involved in this have ZERO technical judgement.

#tech#software engineering#reality check#DOGE#computer madness#common sense#sanity#The gang that couldn't shoot straight#COBOL#Nick Hedges#machine world

Determined to use her skills to fight inequality, South African computer scientist Raesetje Sefala set to work to build algorithms flagging poverty hotspots - developing datasets she hopes will help target aid, new housing, or clinics.

From crop analysis to medical diagnostics, artificial intelligence (AI) is already used in essential tasks worldwide, but Sefala and a growing number of fellow African developers are pioneering it to tackle their continent's particular challenges.

Local knowledge is vital for designing AI-driven solutions that work, Sefala said.

"If you don't have people with diverse experiences doing the research, it's easy to interpret the data in ways that will marginalise others," the 26-year-old said from her home in Johannesburg.

Africa is the world's youngest and fastest-growing continent, and tech experts say young, home-grown AI developers have a vital role to play in designing applications to address local problems.

"For Africa to get out of poverty, it will take innovation and this can be revolutionary, because it's Africans doing things for Africa on their own," said Cina Lawson, Togo's minister of digital economy and transformation.

"We need to use cutting-edge solutions to our problems, because you don't solve problems in 2022 using methods of 20 years ago," Lawson told the Thomson Reuters Foundation in a video interview from the West African country.

Digital rights groups warn about AI's use in surveillance and the risk of discrimination, but Sefala said it can also be used to "serve the people behind the data points". ...

'Delivering Health'

As COVID-19 spread around the world in early 2020, government officials in Togo realized urgent action was needed to support informal workers who account for about 80% of the country's workforce, Lawson said.

"If you decide that everybody stays home, it means that this particular person isn't going to eat that day, it's as simple as that," she said.

In 10 days, the government built a mobile payment platform - called Novissi - to distribute cash to the vulnerable.

The government paired up with the Innovations for Poverty Action (IPA) think tank and the University of California, Berkeley, to build a poverty map of Togo using satellite imagery.

With the support of GiveDirectly, a nonprofit that uses AI to distribute cash transfers, algorithms were used to identify recipients earning less than $1.25 per day and living in the poorest districts for a direct cash transfer.

"We texted them saying if you need financial help, please register," Lawson said, adding that beneficiaries' consent and data privacy had been prioritized.

The entire program reached 920,000 beneficiaries in need.

"Machine learning has the advantage of reaching so many people in a very short time and delivering help when people need it most," said Caroline Teti, a Kenya-based GiveDirectly director.

'Zero Representation'

Aiming to boost discussion about AI in Africa, computer scientists Benjamin Rosman and Ulrich Paquet co-founded the Deep Learning Indaba - a week-long gathering that started in South Africa - together with other colleagues in 2017.

"You used to get to the top AI conferences and there was zero representation from Africa, both in terms of papers and people, so we're all about finding cost-effective ways to build a community," Paquet said in a video call.

In 2019, 27 smaller Indabas - called IndabaX - were rolled out across the continent, with some events hosting as many as 300 participants.

One of these offshoots was IndabaX Uganda, where founder Bruno Ssekiwere said participants shared information on using AI for social issues such as improving agriculture and treating malaria.

Another outcome from the South African Indaba was Masakhane - an organization that uses open-source machine learning to translate African languages not typically found in online programs such as Google Translate.

On their site, the founders speak about the South African philosophy of "Ubuntu" - a term generally meaning "humanity" - as part of their organization's values.

"This philosophy calls for collaboration and participation and community," reads their site, a philosophy that Ssekiwere, Paquet, and Rosman said has now become the driving value for AI research in Africa.

Inclusion

Now that Sefala has built a dataset of South Africa's suburbs and townships, she plans to collaborate with domain experts and communities to refine it, deepen inequality research and improve the algorithms.

"Making datasets easily available opens the door for new mechanisms and techniques for policy-making around desegregation, housing, and access to economic opportunity," she said.

African AI leaders say building more complete datasets will also help tackle biases baked into algorithms.

"Imagine rolling out Novissi in Benin, Burkina Faso, Ghana, Ivory Coast ... then the algorithm will be trained with understanding poverty in West Africa," Lawson said.

"If there are ever ways to fight bias in tech, it's by increasing diverse datasets ... we need to contribute more," she said.

But contributing more will require increased funding for African projects and wider access to computer science education and technology in general, Sefala said.

Despite such obstacles, Lawson said "technology will be Africa's savior".

"Let's use what is cutting edge and apply it straight away or as a continent we will never get out of poverty," she said. "It's really as simple as that."

-via Good Good Good, February 16, 2022

#older news but still relevant and ongoing#africa#south africa#togo#uganda#covid#ai#artificial intelligence#pro ai#at least in some specific cases lol#the thing is that AI has TREMENDOUS potential to help humanity#particularly in medical tech and climate modeling#which is already starting to be realized#but companies keep pouring a ton of time and money into stealing from artists and shit instead#inequality#technology#good news#hope
