#AI and automation training


Mastering Generative AI at Sunbeam Institute

Artificial Intelligence (AI) is revolutionizing industries, and Generative AI is at the forefront of this transformation. From text generation to image creation, Generative AI is reshaping how businesses and individuals approach creativity and automation. If you're looking to dive into this exciting field, Sunbeam Institute’s Mastering Generative AI course is the perfect opportunity to build expertise.

Why Choose Sunbeam’s Generative AI Course?

✅ Comprehensive Curriculum – Covers fundamental to advanced Generative AI concepts.

✅ Hands-on Training – Practical implementation using industry-leading AI models.

✅ Expert Guidance – Learn from seasoned AI professionals with real-world experience.

✅ Industry Applications – Understand how Generative AI is used in businesses today.

✅ Career Growth – Gain in-demand skills to excel in AI-driven careers.

What You Will Learn

📌 Introduction to Generative AI – Understanding AI models, deep learning, and neural networks.

📌 Text Generation – Learn how AI generates human-like text using NLP techniques.

📌 Image and Video Synthesis – Explore AI-driven image and video creation.

📌 AI-Powered Creativity – Discover how AI enhances creative processes in various industries.

📌 Hands-on Projects – Work on real-world projects to apply your knowledge.

Who Can Enroll?

🔹 AI enthusiasts and beginners eager to explore Generative AI.

🔹 Developers and data scientists looking to expand their skillset.

🔹 Professionals aiming to integrate AI into their work.

🔹 Anyone passionate about the future of AI and automation.

#Generative AI course in Pune#Learn Generative AI#AI-powered creativity training#Best AI course for beginners#Mastering Generative AI#AI and automation training

0 notes


i have chronic pain. i am neurodivergent. i understand - deeply - the allure of a "quick fix" like AI. i also just grew up in a different time. we have been warned about this.

15 entire years ago i heard about this. in my forensics class in high school, we watched a documentary about how AI-based "crime solving" software was inevitably biased against people of color.

my teacher stressed that AI is like a book: when someone writes it, some part of the author will remain within the result. the internet existed but not as loudly at that point - we didn't know that AI would be able to teach itself off already-biased Reddit threads. i googled it: yes, this bias is still happening. yes, it's just as bad if not worse.

i can't actually stop you. if you wanna use ChatGPT to slide through your classes, that's on you. it's your money and it's your time. you will spend none of it thinking, you will learn nothing, and, in college, you will piss away hundreds of thousands of dollars. you will stand at the podium having done nothing, accomplished nothing. a cold and bitter pyrrhic victory.

i'm not even sure students actually read the essays or summaries or emails they have ChatGPT pump out. i think it just flows over them and they use the first answer they get. my brother teaches engineering - he recently got fifty-three copies of almost-the-exact-same lab reports. no one had even changed the wording.

and yes: AI itself (as a concept and practice) isn't always evil. there's AI that can help detect cancer, for example. and yet: when i ask my students if they'd be okay with a doctor that learned from AI, many of them balk. it is one thing if they don't read their engineering textbook or if they don't write the critical-thinking essay. it's another when it starts to affect them. they know it's wrong for AI to broad-spectrum deny insurance claims, but they swear their use of AI is different.

there's a strange desire to sort of divorce real-world AI malpractice from "personal use". for example, is it moral to use AI to write your cover letters? cover letters are essentially only templates, and besides: AI is going to be reading your job app, so isn't it kind of fair?

i recently found out that people use AI as a romantic or sexual partner. it seems like teenagers particularly enjoy this connection, and this is one of those "sticky" moments as a teacher. honestly - you can roast me for this - but if it was an actually-safe AI, i think teenagers exploring their sexuality with a fake partner is amazing. it prevents them from making permanent mistakes, it can teach them about their bodies and their desires, and it can help their confidence. but the problem is that it's not safe. there isn't a well-educated, sensitive AI specifically to help teens explore their hormones. it's just an internet-fed cycle. who knows what they're learning. who knows what misinformation they're getting.

the most common pushback i get involves therapy. none of us have access to the therapist of our dreams - it's expensive, elusive, and involves an annoying amount of insurance claims. someone once asked me: are you going to be mad when AI saves someone's life?

therapists are not just trained on the book, they're trained on patient management and helping you see things you don't see yourself. part of it will involve discomfort. i don't know that AI is ever going to be able to analyze the words you feed it and answer with a mind towards the "whole person" writing those words. but also - if it keeps/kept you alive, i'm not a purist. i've done terrible things to myself when i was at rock bottom. in an emergency, we kind of forgive the seatbelt for leaving bruises. it's just that chat shouldn't be your only form of self-care and recovery.

and i worry that the influence chat has is expanding. more and more i see people use chat for the smallest, most easily-navigated situations. and i can't like, make you worry about that in your own life. i often think about how easy it was for social media to take over all my time - how i can't have a tiktok because i spend hours on it. i don't want that to happen with chat. i want to enjoy thinking. i want to enjoy writing. i want to be here. i've already really been struggling to put the phone down. this feels like another way to get you to pick the phone up.

the other day, i was frustrated by a book i was reading. it's far in the series and is about a character i resent. i googled if i had to read it, or if it was one of those "in between" books that don't actually affect the plot (you know, one of those ".5" books). someone said something that really stuck with me - theoretically you're reading this series for enjoyment, so while you don't actually have to read it, one would assume you want to read it.

i am watching a generation of people learn they don't have to read the thing in their hand. and it is kind of a strange sort of doom that comes over me: i read because it's genuinely fun. i learn because even though it's hard, it feels good. i try because it makes me happy to try. and i'm watching a generation of people all lay down and say: but i don't want to try.

#spilled ink#i do also think this issue IS more complicated than it appears#if a teacher uses AI to grade why write the essay for example.#<- while i don't agree (the answer is bc the essay is so YOU learn) i would be RIPSHIT as a student#if i found that out.#but why not give AI your job apps? it's not like a human person SEES your applications#the world IS automating in certain ways - i do actually understand the frustration#some people feel where it's like - i'm doing work here. the work will be eaten by AI. what's the point#but the answer is that we just don't have a balance right now. it just isn't trained in a smart careful way#idk. i am pretty anti AI tho so . much like AI. i'm biased.#(by the way being able to argue the other side tells u i actually understand the situation)#(if u see me arguing "pro-chat'' it's just bc i think a good argument involves a rebuttal lol)#i do not use ai . hard stop.

4K notes


The surprising truth about data-driven dictatorships

Here’s the “dictator’s dilemma”: they want to block their country’s frustrated elites from mobilizing against them, so they censor public communications; but they also want to know what their people truly believe, so they can head off simmering resentments before they boil over into regime-toppling revolutions.

These two strategies are in tension: the more you censor, the less you know about the true feelings of your citizens and the easier it will be to miss serious problems until they spill over into the streets (think: the fall of the Berlin Wall or Tunisia before the Arab Spring). Dictators try to square this circle with things like private opinion polling or petition systems, but these capture a small slice of the potentially destabilizing moods circulating in the body politic.

Enter AI: back in 2018, Yuval Harari proposed that AI would supercharge dictatorships by mining and summarizing the public mood (as captured on social media), allowing dictators to tack into serious discontent and defuse it before it erupted into unquenchable wildfire:

https://www.theatlantic.com/magazine/archive/2018/10/yuval-noah-harari-technology-tyranny/568330/

Harari wrote that “the desire to concentrate all information and power in one place may become [dictators’] decisive advantage in the 21st century.” But other political scientists sharply disagreed. Last year, Henry Farrell, Jeremy Wallace and Abraham Newman published a thoroughgoing rebuttal to Harari in Foreign Affairs:

https://www.foreignaffairs.com/world/spirals-delusion-artificial-intelligence-decision-making

They argued that — like everyone who gets excited about AI, only to have their hopes dashed — dictators seeking to use AI to understand the public mood would run into serious training data bias problems. After all, people living under dictatorships know that spouting off about their discontent and desire for change is a risky business, so they will self-censor on social media. That’s true even if a person isn’t afraid of retaliation: if you know that using certain words or phrases in a post will get it autoblocked by a censorbot, what’s the point of trying to use those words?

The phrase “Garbage In, Garbage Out” dates back to 1957. That’s how long we’ve known that a computer that operates on bad data will barf up bad conclusions. But this is a very inconvenient truth for AI weirdos: having given up on manually assembling training data based on careful human judgment with multiple review steps, the AI industry “pivoted” to mass ingestion of scraped data from the whole internet.

But adding more unreliable data to an unreliable dataset doesn’t improve its reliability. GIGO is the iron law of computing, and you can’t repeal it by shoveling more garbage into the top of the training funnel:

https://memex.craphound.com/2018/05/29/garbage-in-garbage-out-machine-learning-has-not-repealed-the-iron-law-of-computer-science/

When it comes to “AI” that’s used for decision support — that is, when an algorithm tells humans what to do and they do it — then you get something worse than Garbage In, Garbage Out — you get Garbage In, Garbage Out, Garbage Back In Again. That’s when the AI spits out something wrong, and then another AI sucks up that wrong conclusion and uses it to generate more conclusions.

To see this in action, consider the deeply flawed predictive policing systems that cities around the world rely on. These systems suck up crime data from the cops, then predict where crime is going to be, and send cops to those “hotspots” to do things like throw Black kids up against a wall and make them turn out their pockets, or pull over drivers and search their cars after pretending to have smelled cannabis.

The problem here is that “crime the police detected” isn’t the same as “crime.” You only find crime where you look for it. For example, there are far more incidents of domestic abuse reported in apartment buildings than in fully detached homes. That’s not because apartment dwellers are more likely to be wife-beaters: it’s because domestic abuse is most often reported by a neighbor who hears it through the walls.

So if your cops practice racially biased policing (I know, this is hard to imagine, but stay with me /s), then the crime they detect will already be a function of bias. If you only ever throw Black kids up against a wall and turn out their pockets, then every knife and dime-bag you find in someone’s pockets will come from some Black kid the cops decided to harass.

That’s life without AI. But now let’s throw in predictive policing: feed your “knives found in pockets” data to an algorithm and ask it to predict where there are more knives in pockets, and it will send you back to that Black neighborhood and tell you to throw even more Black kids up against a wall and search their pockets. The more you do this, the more knives you’ll find, and the more you’ll go back and do it again.

This is what Patrick Ball from the Human Rights Data Analysis Group calls “empiricism washing”: take a biased procedure and feed it to an algorithm, and then you get to go and do more biased procedures, and whenever anyone accuses you of bias, you can insist that you’re just following an empirical conclusion of a neutral algorithm, because “math can’t be racist.”

HRDAG has done excellent work on this, finding a natural experiment that makes the problem of GIGOGBI crystal clear. The National Survey On Drug Use and Health produces the gold standard snapshot of drug use in America. Kristian Lum and William Isaac took Oakland’s drug arrest data from 2010 and asked Predpol, a leading predictive policing product, to predict where Oakland’s 2011 drug use would take place.

[Image ID: (a) Number of drug arrests made by Oakland police department, 2010. (1) West Oakland, (2) International Boulevard. (b) Estimated number of drug users, based on 2011 National Survey on Drug Use and Health]

Then, they compared those predictions to the outcomes of the 2011 survey, which shows where actual drug use took place. The two maps couldn’t be more different:

https://rss.onlinelibrary.wiley.com/doi/full/10.1111/j.1740-9713.2016.00960.x

Predpol told cops to go and look for drug use in a predominantly Black, working class neighborhood. Meanwhile the NSDUH survey showed the actual drug use took place all over Oakland, with a higher concentration in the Berkeley-neighboring student neighborhood.

What’s even more vivid is what happens when you simulate running Predpol on the new arrest data that would be generated by cops following its recommendations. If the cops went to that Black neighborhood and found more drugs there and told Predpol about it, the recommendation gets stronger and more confident.

In other words, GIGOGBI is a system for concentrating bias. Even trace amounts of bias in the original training data get refined and magnified when they are output through a decision support system that directs humans to go and act on that output. Algorithms are to bias what centrifuges are to radioactive ore: a way to turn minute amounts of bias into pluripotent, indestructible toxic waste.
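The feedback loop described above can be sketched as a toy model. This is not Predpol's algorithm or the Lum and Isaac simulation; it assumes two areas with identical true incident rates, a slightly lopsided arrest record to start from, and a hypothetical "send every patrol to the current hotspot" rule. The function and parameter names are invented for illustration.

```python
def simulate_feedback(recorded, true_rate, rounds, patrols_per_round=100):
    """Toy model of "Garbage In, Garbage Out, Garbage Back In".

    recorded   -- starting count of recorded incidents per area (assumed data)
    true_rate  -- real incidents found per patrol in each area (assumed equal)
    Returns the share of all recorded incidents attributed to area 0
    after each round.
    """
    recorded = list(recorded)
    shares = []
    for _ in range(rounds):
        # "Prediction": the area with the most recorded incidents is the hotspot.
        hotspot = max(range(len(recorded)), key=lambda i: recorded[i])
        # Police only detect incidents where they look, so only the hotspot
        # gains new records, even though both areas have the same true rate.
        recorded[hotspot] += patrols_per_round * true_rate[hotspot]
        shares.append(recorded[0] / sum(recorded))
    return shares


if __name__ == "__main__":
    # Area 0 starts with 11 recorded incidents, area 1 with 10.
    for round_number, share in enumerate(
        simulate_feedback([11, 10], [0.1, 0.1], rounds=5), start=1
    ):
        print(f"round {round_number}: area 0 holds {share:.0%} of records")
```

Under these assumptions, area 0's share of the record climbs every round even though the two areas are identical in reality; the only input that differs is the one extra recorded incident at the start.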

There’s a great name for an AI that’s trained on an AI’s output, courtesy of Jathan Sadowski: “Habsburg AI.”

And that brings me back to the Dictator’s Dilemma. If your citizens are self-censoring in order to avoid retaliation or algorithmic shadowbanning, then the AI you train on their posts in order to find out what they’re really thinking will steer you in the opposite direction, so you make bad policies that make people angrier and destabilize things more.

Or at least, that was Farrell (et al)’s theory. And for many years, that’s where the debate over AI and dictatorship has stalled: theory vs theory. But now, there’s some empirical data on this, thanks to “The Digital Dictator’s Dilemma,” a new paper from UCSD PhD candidate Eddie Yang:

https://www.eddieyang.net/research/DDD.pdf

Yang figured out a way to test these dueling hypotheses. He got 10 million Chinese social media posts from the start of the pandemic, before companies like Weibo were required to censor certain pandemic-related posts as politically sensitive. Yang treats these posts as a robust snapshot of public opinion: because there was no censorship of pandemic-related chatter, Chinese users were free to post anything they wanted without having to self-censor for fear of retaliation or deletion.

Next, Yang acquired the censorship model used by a real Chinese social media company to decide which posts should be blocked. Using this, he was able to determine which of the posts in the original set would be censored today in China.

That means that Yang knows what the “real” sentiment in the Chinese social media snapshot is, and what Chinese authorities would believe it to be if Chinese users were self-censoring all the posts that would be flagged by censorware today.

From here, Yang was able to play with the knobs, and determine how “preference-falsification” (when users lie about their feelings) and self-censorship would give a dictatorship a misleading view of public sentiment. What he finds is that the more repressive a regime is — the more people are incentivized to falsify or censor their views — the worse the system gets at uncovering the true public mood.

What’s more, adding additional (bad) data to the system doesn’t fix this “missing data” problem. GIGO remains an iron law of computing in this context, too.
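A back-of-the-envelope sketch makes the "missing data" point concrete. This is not Yang's model: it assumes a population where a fixed share of users is discontented, that discontented users stay silent with some probability, and that contented users always post. It covers only self-censorship, not preference falsification, and every name in it is hypothetical.

```python
def observed_share(n_users, true_share, self_censor_rate):
    """Share of visible posts that express discontent.

    n_users          -- how many users' posts are collected (assumed)
    true_share       -- real fraction of users who are discontented (assumed)
    self_censor_rate -- probability a discontented user stays silent (assumed)
    """
    discontented = n_users * true_share
    contented = n_users - discontented
    # Only discontented users who do not self-censor show up in the data.
    visible_discontented = discontented * (1 - self_censor_rate)
    return visible_discontented / (visible_discontented + contented)


if __name__ == "__main__":
    true_share = 0.4
    for rate in (0.0, 0.5, 0.9):
        small = observed_share(1_000, true_share, rate)
        large = observed_share(1_000_000, true_share, rate)
        print(
            f"self-censor rate {rate:.0%}: "
            f"observed {small:.1%} with 1K users, {large:.1%} with 1M users"
        )
```

In this sketch the observed share drifts further from the true 40% as the self-censorship rate rises, and collecting a thousand times more posts from the same self-censoring population leaves the estimate where it was.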

But it gets better (or worse, I guess): Yang models a “crisis” scenario in which users stop self-censoring and start articulating their true views (because they’ve run out of fucks to give). This is the most dangerous moment for a dictator, and depending on how the dictatorship handles it, they either get another decade of rule, or they wake up with guillotines on their lawns.

But “crisis” is where AI performs the worst. Trained on the “status quo” data where users are continuously self-censoring and preference-falsifying, AI has no clue how to handle the unvarnished truth. Both its recommendations about what to censor and its summaries of public sentiment are the least accurate when crisis erupts.

But here’s an interesting wrinkle: Yang scraped a bunch of Chinese users’ posts from Twitter — which the Chinese government doesn’t get to censor (yet) or spy on (yet) — and fed them to the model. He hypothesized that when Chinese users post to American social media, they don’t self-censor or preference-falsify, so this data should help the model improve its accuracy.

He was right — the model got significantly better once it ingested data from Twitter than when it was working solely from Weibo posts. And Yang notes that dictatorships all over the world are widely understood to be scraping western/northern social media.

But even though Twitter data improved the model’s accuracy, it was still wildly inaccurate, compared to the same model trained on a full set of un-self-censored, un-falsified data. GIGO is not an option, it’s the law (of computing).

Writing about the study on Crooked Timber, Farrell notes that as the world fills up with “garbage and noise” (he invokes Philip K Dick’s delighted coinage “gubbish”), “approximately correct knowledge becomes the scarce and valuable resource.”

https://crookedtimber.org/2023/07/25/51610/

This “probably approximately correct knowledge” comes from humans, not LLMs or AI, and so “the social applications of machine learning in non-authoritarian societies are just as parasitic on these forms of human knowledge production as authoritarian governments.”

The Clarion Science Fiction and Fantasy Writers’ Workshop summer fundraiser is almost over! I am an alum, instructor and volunteer board member for this nonprofit workshop whose alums include Octavia Butler, Kim Stanley Robinson, Bruce Sterling, Nalo Hopkinson, Kameron Hurley, Nnedi Okorafor, Lucius Shepard, and Ted Chiang! Your donations will help us subsidize tuition for students, making Clarion — and sf/f — more accessible for all kinds of writers.

Libro.fm is the indie-bookstore-friendly, DRM-free audiobook alternative to Audible, the Amazon-owned monopolist that locks every book you buy to Amazon forever. When you buy a book on Libro, they share some of the purchase price with a local indie bookstore of your choosing (Libro is the best partner I have in selling my own DRM-free audiobooks!). As of today, Libro is even better, because it’s available in five new territories and currencies: Canada, the UK, the EU, Australia and New Zealand!

[Image ID: An altered image of the Nuremberg rally, with ranked lines of soldiers facing a towering figure in a many-ribboned soldier's coat. He wears a high-peaked cap with a microchip in place of insignia. His head has been replaced with the menacing red eye of HAL9000 from Stanley Kubrick's '2001: A Space Odyssey.' The sky behind him is filled with a 'code waterfall' from 'The Matrix.']

Image: Cryteria (modified) https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0 https://creativecommons.org/licenses/by/3.0/deed.en

—

Raimond Spekking (modified) https://commons.wikimedia.org/wiki/File:Acer_Extensa_5220_-_Columbia_MB_06236-1N_-_Intel_Celeron_M_530_-_SLA2G_-_in_Socket_479-5029.jpg

CC BY-SA 4.0 https://creativecommons.org/licenses/by-sa/4.0/deed.en

—

Russian Airborne Troops (modified) https://commons.wikimedia.org/wiki/File:Vladislav_Achalov_at_the_Airborne_Troops_Day_in_Moscow_%E2%80%93_August_2,_2008.jpg

“Soldiers of Russia” Cultural Center (modified) https://commons.wikimedia.org/wiki/File:Col._Leonid_Khabarov_in_an_everyday_service_uniform.JPG

CC BY-SA 3.0 https://creativecommons.org/licenses/by-sa/3.0/deed.en

#pluralistic#habsburg ai#self censorship#henry farrell#digital dictatorships#machine learning#dictator's dilemma#eddie yang#preference falsification#political science#training bias#scholarship#spirals of delusion#algorithmic bias#ml#Fully automated data driven authoritarianism#authoritarianism#gigo#garbage in garbage out garbage back in#gigogbi#yuval noah harari#gubbish#pkd#philip k dick#phildickian

833 notes


Companies can use him to train their AI 😂 #ai #funny #meme #trainAI #aitraining #voiceai #animals

#Companies can use him to train their AI 😂#ai#funny#meme#trainAI#aitraining#voiceai#animals#innovation#tech#artificialintelligence#machinelearning#technology#aitools#automation#techreview#education

9 notes


oh. huh.

#the county i work for is investing in a way to AI automate#the part of my job i just spent 6 months training to do#that is also a majority of the work they've contracted me to do via grants#so there's that#no real feelings on it other than ''oh. huh.''

5 notes


AI art, AI writing, VR experiences, cryptocurrency, plastic surgery, facetune, deepfakes...listen if we weren't living in a computer simulation before we sure are now. kill me.

#sorry just had to sit through a horrifying 'training workshop' about the 'benefits' of AI and I am Feeling The Existential Rage™#why must everything be altered and automated and unreal?#just like. let the universe turn or something maybe idk

1 note


The whole AI apocalypse is so funny to me because like.

There were engineers who did a lot of thinking to get ore out of the ground, and to press that ore into computer chips so unfathomably compact that Lucifer "besides thou, not below" Morningstar thought it hubris; and to write programs that make those chips do simple math; AND to study the art of random shit happening to find out how to make random things happen less randomly; AND to write a computer program that does entirely random simple math, compares the output with what you said you wanted and remembers whether it was or wasn't, just so it can do less random stuff and more what it thinks you want.

All so every cityboy (affectionate) can do things without doing... y'know the thinking.

The irony is so graspable, I could use it to cobble not one, but two ML engineers into a pair of shoes.

"edit images with AI-- search with AI-- control your life with AI--"

#And you know the most ironic part? Not even the computer does the thinking now. No one does!#training an AI is like rolling a d20 for hit but you get a +1 for every time you defeat a training puppet#but you have an inherent -3 against anything that moves and at +2 the bonus resets#cityboy (affectionate. you cuties ;* )#ai#machine learning#automation

60K notes


#Future of Work in India#Skilling Youth#Digital Education India#NEP 2020#India Youth Employment#Skill Development#Future Jobs#Vocational Training India#AI and Automation Jobs#Youth Empowerment

0 notes

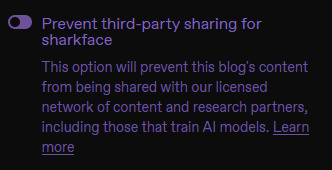


This took me a while to find, so for anyone else who is struggling here are my instructions for desktop:

On the far left of your screen click settings

Ignore the section it opens with your account basics and look at the menu on the far right

Scroll down to your blog name and click on it

This will open a new menu centre screen

Scroll down this to "visibility"

It's right at the bottom

They are already selling data to Midjourney, and it's very likely your work is already being used to train their models because you have to OPT OUT of this, not opt in. Very scummy of them to roll this out unannounced.

#Full disclosure#I'm pro-AI.#I ask it to generate ideas and plans#I *teach* my students to use it like I do#I use AI to automate repetitive tasks in my day job#BUT we should get to KNOW when we are helping to train it

98K notes


You don’t need to hire a content team or work 10-hour days to stay visible. You just need the right system — and now, you can build your own. 🎓 Learn how to create your own AI content creator from scratch (no tech skills needed). The course is beginner-friendly, step-by-step, and designed to help you scale smarter — not harder.


#ai for business owners#build ai content model#content creation ai#create ai content tool#custom ai assistant#digital business tips#how to train ai model#small biz automation#social media automation#time saving ai tools

0 notes


Discover how artificial intelligence and robotics are transforming welding—from early robotic arms to modern AI‑powered cobots, smart sensors, real‑time monitoring, adaptive control, and IoT‑enabled systems. Learn how intelligent welding robots elevate quality, efficiency, flexibility, and safety, while skilled professionals gain new opportunities through advanced training. This article explores key advances—arc welding bots, cobots, spool‑welding, sensor fusion, and machine‑learning‑driven path planning—that are reshaping the future of metal fabrication.

#robotic welding program#AI welding robotics#intelligent cobots in welding#sensor‑driven welding automation#machine learning welding robots#robotic arc welding#smart welding sensors#welding industry automation#IoT in welding#welding technology advancements#welding robot training

0 notes


Train Your First AI Chatbot in 6 Steps: Delegate Tasks, Reclaim Your Time

Let AI Handle the Busywork So You Can Lead the Big Vision! No seriously, if you’re still stuck answering the same 5 questions in your inbox, or manually booking calls at 11 PM (even though you swore you’d set boundaries)… this is your sign to stop. Because automation isn’t just about efficiency, it’s about leadership. And…

#AI chatbot#AI customer support#AI strategy session#automate business tasks#build a smart assistant#business automation#Business Growth#Business Strategy#chatbot training#delegate with AI#Entrepreneur#Entrepreneurship#Lori Brooks#MORE TIME method#Productivity#Technology Equality#Time Management

0 notes


Build Telegram Bots That Drive Engagement and Save Time

Atcuality is your trusted partner for building intelligent, intuitive Telegram bots that help you scale your communication and engagement strategies. Whether you need a bot for broadcasting content, managing subscriptions, running interactive polls, or handling customer queries, we’ve got you covered. Our development process is rooted in innovation, testing, and real-world user experience. At the center of our offerings is Telegram Bot Creation, a service that transforms your ideas into reliable, automation-driven tools. Each bot is tailored to your brand voice, target audience, and functionality needs. With Atcuality, you benefit from fast development, clean code, and responsive support. Our bots are not just tools; they’re digital assets designed to grow with you. Trust us to deliver a solution that enhances your Telegram presence and makes a measurable impact.

#search engine marketing#search engine optimisation company#emailmarketing#search engine optimization#search engine optimisation services#seo#search engine ranking#digital services#digital marketing#seo company#telegram bot#telegram channel#telegram#ai chatbot#chatbotservices#chatbotsolutions#chatbotforbusiness#app development#app developers#app developing company#application development#software development#software company#software testing#software training institute#software engineering#automation#digital transformation#information technology#digital consulting

0 notes


AI Automated Testing Course with Venkatesh (Rahul Shetty)

Join our AI Automated Testing Course with Venkatesh (Rahul Shetty) and learn how to test software using smart AI tools. This easy-to-follow course helps you save time, find bugs faster, and grow your skills for future tech jobs. To learn more about us, visit https://rahulshettyacademy.com/

#ai generator tester#ai software testing#ai automated testing#ai in testing software#playwright automation javascript#playwright javascript tutorial#playwright python tutorial#scrapy playwright tutorial#api testing using postman#online postman api testing#postman automation api testing#postman automated testing#postman performance testing#postman tutorial for api testing#free api for postman testing#api testing postman tutorial#postman tutorial for beginners#postman api performance testing#automate api testing in postman#java automation testing#automation testing selenium with java#automation testing java selenium#java selenium automation testing#python selenium automation#selenium with python automation testing#selenium testing with python#automation with selenium python#selenium automation with python#python and selenium tutorial#cypress automation training

0 notes


🤖🔥 Say hello to Groot N1! Nvidia’s game-changing open-source AI is here to supercharge humanoid robots! 💥🧠 Unveiled at #GTC2025 🏟️ Welcome to the era of versatile robotics 🚀🌍 #AI #Robotics #Nvidia #GrootN1 #TechNews #FutureIsNow 🤩🔧

#AI-powered automation#DeepMind#dual-mode AI#Gemini Robotics#general-purpose machines#Groot N1#GTC 2025#humanoid robots#Nvidia#open-source AI#robot learning#robotic intelligence#robotics innovation#synthetic training data#versatile robotics

0 notes


#Engineering Graduate Training#Bachelor of Engineering#Engineering College#Emerging Technologies#AI in Engineering#IoT for Engineers#Renewable Energy Engineering#Robotics and Automation#Blockchain in Engineering#5G Technology#Engineering Career#Engineering Training Programs#TVS Training & Services#Future of Engineering#Industry 4.0

0 notes