#AI learning program

Explore tagged Tumblr posts

Text

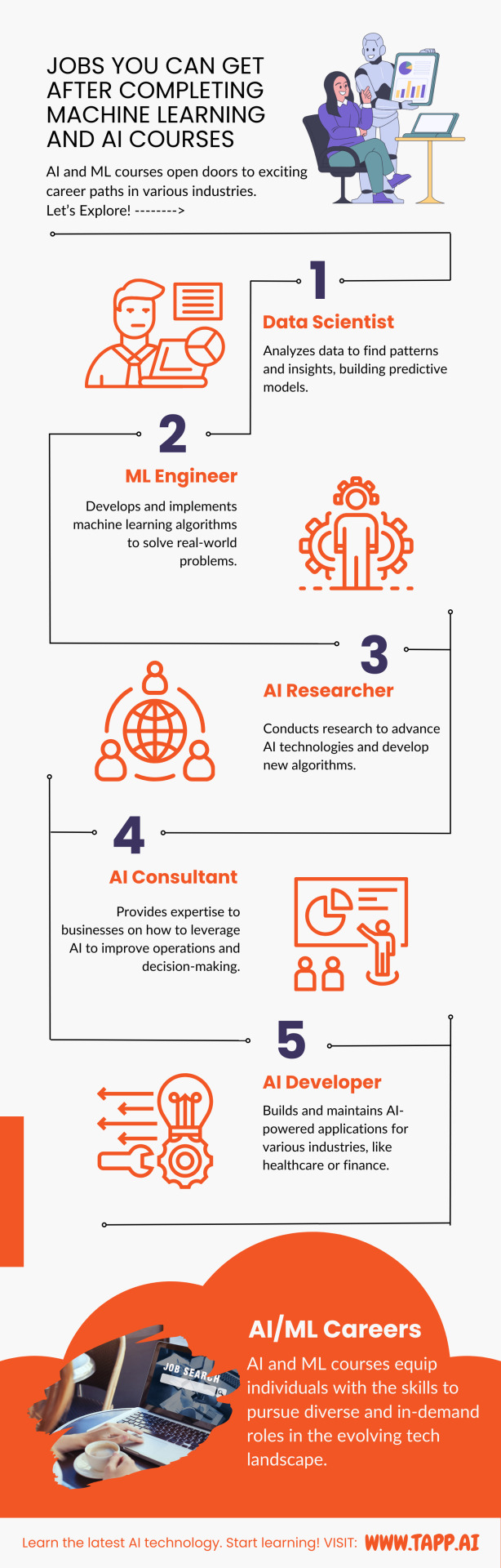

Jobs you can get after completing Machine Learning and AI courses

AI and ML courses open doors to exciting career paths in various industries. Discover the job opportunities after an AI/ML course.

Enroll today! Follow the link below: AI ML Online Learning Program

0 notes

Note

I just watched a video where someone is using ChatGPT to generate comments on their code. Even as a layman I feel like I should be screaming at him, but on a scale from 1 to apocalypse, how bad is this?

Machine-generated comments could not possibly be more useless, nonsensical or maliciously misleading than most of the human-generated comments I've seen.

1K notes


Text

I wonder if gen ai users think the process isn't fun? Like I can't draw that well and maybe an ai can generate "better" drawings than me but the act of drawing is the good part?? and getting better is awesome?? and you feel like you accomplished something? the i made this myself feeling?

Like for example I don't have to crochet something myself, i could just buy the things. but where's the fun in that?

#just debating with my colleagues again#maybe it's useful for emails or programming#but even with that#I wanna be able to do that myself#it can help maybe but I STILL want to learn these things#and don't get me started on cards#how impersonal is an ai written birthday card

37 notes


Text

i wasn’t super sold on royalteeth/kingleader/kingmaster until i discovered my partner kins kinger and i kin caine. therefore, i had to draw them being dumb gay old men

they r holding handz <3

#i drew them from memory pls be nice to me#so much potential#realistically#i’m not sure caine would actually love any of them beyond his programmed affection for his circus performers#but i’m such a sucker for an AI learning to be human#and i think he’d be somewhat secretly obsessed with trying to be human#even if only to better understand how to help them#bc he loves everyone. he does#he just doesn’t know what it means#anyways i’m rambling#look at the gay old men. yeah. yum#tadc#the amazing digital circus#tadc caine#tadc kinger#kingleader#kingmaster#royalteeth#tadc caine x kinger#tadc kinger x caine#tadc royalteeth#tadc kingleader#tadc kingmaster#traditional art#doodles#tadc doodles

264 notes


Text

#programmers humor#compsci#IT#devlife#AI#chatgpt#aritificial intelligence#machine learning#programming#software engineering#software development

496 notes


Text

What the fuck is vibe coding

11 notes


Text

anyone by chance know any videos on enemy ai in games, stuff like good ai vs bad ai & ideally scary ones in horror games? all im finding are Lists (i want Videos that can go into more depth abt how it works) & i fear if i type ai in youtube ill just get garbage. i wanna know how the code Thinks but i want it explained to me as an outsider & not someone actually trying to code smth

#i hear the alien from alien isolation has pretty good behavioral ai & that it does a lot of Learning to make it scarier#but also i think itd b fun to hear abt enemies that just suck bc they were poorly programmed#torch chatter

8 notes


Text

I desperately need someone to talk to about this

I've been working on a system that lets a genetic algorithm create DNA code which can build self-organising organisms. Someone I know has created a very effective genetic algorithm which, in my opinion, blows NEAT out of the water. This algorithm is very good at using food values to determine which organisms to breed and how to breed them, and it supports a multitude of biologically inspired mutation mechanisms that allow for things like meta genes, meta-meta genes, and a whole slew of other things. I am building a translation system on top of it, basically a compiler, and designing an instruction set and genetic repair mechanisms that let it convert ANY hexadecimal string into a valid, operable program.

I'm doing this by giving each organism, so far, 5 planned chromosomes. The first and second chromosomes are the INITIAL STATE of a neural network: the number and configuration of input nodes, the number and configuration of output nodes, whatever code it needs for a fitness function, and the configuration and weights of the layers. This neural network is not used at all in the fitness evaluation of the organism; it is purely something the organism itself can manage, train, and use however it sees fit.
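The post doesn't say how the friend's algorithm turns food values into breeding decisions. One common way a genetic algorithm does this is fitness-proportionate (roulette-wheel) selection, so here is a minimal Python sketch assuming food is used directly as fitness. `select_parents` and the organism names are hypothetical, not part of the original system.

```python
import random


def select_parents(food: dict[str, float], k: int, rng: random.Random) -> list[str]:
    """Pick k parents with probability proportional to food eaten.

    Hypothetical roulette-wheel selection: food acts as fitness, negative
    values are clamped to zero, and a population with no food at all
    falls back to uniform sampling.
    """
    names = list(food)
    weights = [max(food[name], 0.0) for name in names]
    if sum(weights) == 0:
        return rng.choices(names, k=k)
    return rng.choices(names, weights=weights, k=k)


rng = random.Random(42)
parents = select_parents({"org_a": 0.0, "org_b": 3.0, "org_c": 1.0}, k=5, rng=rng)
```

An organism that found no food is never picked, while one with three times the food of another is, on average, picked three times as often.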

The third is the complete code of the program which runs the organism. It's basically a list of ASM opcodes and arguments written in hexadecimal, composed of codons which represent the different hexadecimal characters, plus a start and a stop codon. This program will be compiled into executable machine code using LLVM IR and a custom instruction set I've designed to give the organisms a Turing-complete programming language, along with some helper functions that make certain processes simpler to evolve. These include messaging between organisms, reproduction methods, and everything the organisms need to develop sight and hearing and to receive various other inputs, as well as to produce audio, video, and other outputs such as mouse, keyboard, or gamepad output.

The fourth is a blank slate in which the organism can evolve whatever data it wants. The first half will be the complete contents of the organism's ROM after the important information, and the second half will be the initial state of the organism's memory. This will likely be stored as the Base64 of its hash and unfolded into binary on compilation.
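The post says the third chromosome is made of codons for each hex character plus start and stop codons, but not which codons. Here is a minimal Python sketch of reading one gene, assuming DNA-style triplets over A, C, G, T, a hypothetical start codon `ATG` and stop codon `TAA` borrowed from biology, and a hypothetical table that assigns the first 16 remaining triplets to hex digits 0 to f. Unknown codons are skipped rather than rejected, so any input decodes to something.

```python
from itertools import product

BASES = "ACGT"
START, STOP = "ATG", "TAA"  # hypothetical start/stop codons, borrowed from biology
HEX_DIGITS = "0123456789abcdef"

# Hypothetical codon table: the first 16 triplets (excluding start/stop) map to 0-f.
_triplets = ["".join(t) for t in product(BASES, repeat=3)]
_coding = [t for t in _triplets if t not in (START, STOP)][:16]
CODON_TO_HEX = dict(zip(_coding, HEX_DIGITS))


def decode_gene(dna: str) -> str:
    """Return the hex string read between the first START codon and the next STOP.

    Codons outside the table are skipped rather than rejected, so any input
    decodes to some (possibly empty) hex string.
    """
    dna = dna.upper()
    begin = dna.find(START)
    if begin == -1:
        return ""
    digits = []
    for i in range(begin + 3, len(dna) - 2, 3):
        codon = dna[i:i + 3]
        if codon == STOP:
            break
        digits.append(CODON_TO_HEX.get(codon, ""))
    return "".join(digits)


print(decode_gene("GGATGAAACAAAGTTAAAAC"))  # AAA->0, CAA->f, AGT->b, then stop: prints 0fb
```

Skipping unknown codons is one way to get the "any string is a valid program" property; a real repair mechanism might instead map every triplet to something.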

The 5th chromosome is one I just came up with and am very excited about: a translation dictionary. It will be exactly 512 individual codons, with each codon pair corresponding to one of the hex values 00 through FF. When evaluating the hex of the other chromosomes, this dictionary will determine the equivalent instruction for any given hex pair. During evolution, each hex pair in the 5th chromosome will be guaranteed to be a valid opcode in the instruction set by using modulus to constrain it to the 55 instructions currently available. This will let an organism evolve its own instruction distribution, so that random instructions which might be harmful or inefficient spring up less often and efficient or safer instructions are selected more often.
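A minimal Python sketch of the translation dictionary as described: 512 hex digits form 256 pairs, pair number i says which opcode byte value i means, and a modulus keeps every entry inside the 55-instruction set. It assumes the codons have already been decoded to hex digits and that opcodes are plain indices 0 to 54; the function names and the dangling-half-byte rule are hypothetical. The post applies the modulus during evolution; applying it when building the table, as here, has the same effect.

```python
NUM_OPCODES = 55  # size of the instruction set mentioned in the post


def build_dictionary(chromosome_hex: str) -> list[int]:
    """Turn the 512-digit translation chromosome into a 256-entry lookup table.

    Entry i is the opcode index used whenever byte value i (00-FF) appears in
    another chromosome; the modulus keeps every entry a valid opcode.
    """
    if len(chromosome_hex) != 512:
        raise ValueError("translation chromosome must be exactly 512 hex digits")
    return [int(chromosome_hex[i:i + 2], 16) % NUM_OPCODES for i in range(0, 512, 2)]


def translate(program_hex: str, table: list[int]) -> list[int]:
    """Map each hex pair of another chromosome through the evolved dictionary."""
    usable = len(program_hex) - len(program_hex) % 2  # ignore a dangling half-byte
    return [table[int(program_hex[i:i + 2], 16)] for i in range(0, usable, 2)]


# Example dictionary: entry i simply holds the byte value i.
table = build_dictionary("".join(f"{i:02x}" for i in range(256)))
print(translate("00ff37", table))  # 0x00 % 55, 0xff % 55, 0x37 % 55: prints [0, 35, 0]
```

Because the table is just another chromosome, mutating it changes how often each opcode appears in the decoded programs without touching the programs themselves.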

#ai#technology#genetic algorithm#machine learning#programming#python#ideas#discussion#open source#FOSS#linux#linuxposting#musings#word vomit#random thoughts#rant

8 notes


Text

they're shaking hands honest

(ai/machine learning generated animations)

14 notes


Text

This is part of a new project I am doing for a Facebook app that can alert someone when there is suspicious activity on their account, and block people who post rude comments and hate speech using a BERT model I am training on a dataset of hate speech. It automatically blocks people who are really rude / mean and keeps your feed clean of spam. I am developing it right now for work and for @emoryvalentine14 to test out and maybe in the future I will make it public.
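The post doesn't say how "really rude / mean" is decided. A minimal Python sketch of one possible blocking rule, assuming the trained BERT classifier returns a per-comment hate-speech probability: an author is blocked after a hypothetical three comments scoring at least a hypothetical 0.9. The stub scorer below stands in for the model and is for illustration only.

```python
from collections import defaultdict
from typing import Callable, Iterable


def authors_to_block(
    comments: Iterable[tuple[str, str]],
    hate_probability: Callable[[str], float],
    threshold: float = 0.9,
    strikes: int = 3,
) -> set[str]:
    """Return the authors who posted `strikes` or more comments scored as hate speech.

    `hate_probability` stands in for the trained classifier (e.g. a fine-tuned
    BERT model) and should return a probability between 0 and 1.
    """
    counts = defaultdict(int)
    blocked = set()
    for author, text in comments:
        if author in blocked:
            continue  # already blocked; no need to score further comments
        if hate_probability(text) >= threshold:
            counts[author] += 1
            if counts[author] >= strikes:
                blocked.add(author)
    return blocked


# Stub scorer for illustration only; a real deployment would call the model.
def stub_scorer(text: str) -> float:
    return 1.0 if "hateful" in text else 0.0


feed = [("a", "hateful 1"), ("b", "nice post"), ("a", "hateful 2"),
        ("a", "hateful 3"), ("b", "hateful once")]
print(authors_to_block(feed, stub_scorer))  # prints {'a'}
```

A strike count rather than a single-comment trigger is one way to keep an occasional false positive from the classifier from blocking someone outright.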

I love NLP :D Also I plan to host this server probably on Heroku or something after it is done.

#machine learning#artificial intelligence#python programming#programmer#programming#technology#coding#python#ai#python 3#social media#stopthehate#lgbtq community#lgbtqia#lgbtqplus#gender equality

74 notes


Text

why is everything called AI now. boy thats an algorithm

#'ubers evil AI will detect your phone battery is low and raise the prices accordingly' thats fucked up but it#doesnt need a machine learning program for that#and not algorithm like your social media feed or whatever like in the most basic sense of the definition

8 notes


Text

Truly not allowed to have fun at my university anymore bc every group project is just done by ai now so why am I even here

#we have like this super fun/interesting seminar rn where we invent a product#and work out the marketing strategy with all the stuff we learned#so make slogans. posters. ads etc#and we had a super cool idea for an ad. filmed with a 360 camera in first person perspective with video game elements and stuff#none of us really knew how to do video editing and everything but i volunteered to learn and do it#bc i was really enjoying the idea and was looking forward to doing that all#already started watching tutorials on video editing and how to film with a 360 camera how to get the effects that we want etc..#and now they call me ~1h before we planned the shoot and tell me they found an ai program that can make our video and it looks good#so yeah... now im really disappointed and sad and all the motivation ive had today is just gone#great#like what do you mean i could have spent 4 days working on a creative project i really enjoyed and now I can't anymore??? :(

2 notes


Text

I love scrolling through my old procreate art because wow, I used to suck. But also WOW, I suck less now. And most wowable of all, I’ll continue to improve in the future!

#the fucking DIFFERENCE that a year and a half made#never use ai folks 💪#always improve your skills#anyways I do blame part of how bad my old art was on the fact that I had zero clue how procreate worked#never touched an art program as advanced as procreate before and was VERY overwhelmed#still kind of am. but! I am learning the functions!#I can do better lighting now! I can do backgrounds!#I love drawing!!!

5 notes


Text

Learn how Mistral-NeMo-Minitron 8B, an open-source model that NVIDIA derived from Mistral NeMo 12B (itself a collaboration between NVIDIA and Mistral AI), is advancing compact Large Language Models (LLMs). It uses pruning and distillation to shrink the larger model, achieving top accuracy for its size on 9 benchmarks while remaining highly efficient.
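To make the distillation half of "pruning and distillation" concrete, here is a minimal pure-Python sketch of the standard logit-distillation loss: the KL divergence between the teacher's and the student's temperature-softened output distributions, scaled by T². This is the generic textbook formulation, not necessarily NVIDIA's exact training recipe, and the temperature of 2.0 is an arbitrary illustrative choice.

```python
import math


def softmax(logits, temperature=1.0):
    """Temperature-scaled softmax; higher temperatures give softer distributions."""
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL(teacher || student) on softened distributions, scaled by T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


print(distillation_loss([2.0, 1.0, 0.1], [2.0, 1.0, 0.1]))  # identical logits
print(distillation_loss([2.0, 1.0, 0.1], [0.1, 1.0, 2.0]))  # disagreeing student
```

The pruned student is trained to drive this loss toward zero, i.e. to reproduce the teacher's full output distribution rather than only its top prediction.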

#MistralNeMoMinitron#AI#ModelCompression#OpenSource#MachineLearning#DeepLearning#NVIDIA#MistralAI#artificial intelligence#open source#machine learning#software engineering#programming#nlp

5 notes


Text

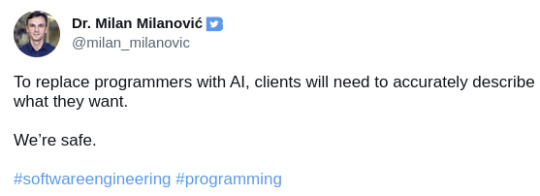

Truth speaking on the corporate obsession with AI

Hilarious. Something tells me this person's on the hellsite (affectionate)

#ai#corporate bs#late stage capitalism#funny#truth#artificial intelligence#ml#machine learning#data science#data scientist#programming#scientific programming

8 notes


Text

The Mathematical Foundations of Machine Learning

In the world of artificial intelligence, machine learning is a crucial component that enables computers to learn from data and improve their performance over time. However, the math behind machine learning is often shrouded in mystery, even for those who work with it every day. Anil Ananthaswamy, author of the book "Why Machines Learn," sheds light on the elegant mathematics that underlies modern AI, and his journey is a fascinating one.

Ananthaswamy's interest in machine learning began when he started writing about it as a science journalist. His background in software engineering sparked a desire to understand the technology from the ground up, leading him to get back into coding and build simple machine learning systems. This exploration eventually led him to appreciate the mathematical principles that underlie modern AI. As Ananthaswamy notes, "I was amazed by the beauty and elegance of the math behind machine learning."

Ananthaswamy highlights the elegance of machine learning mathematics, which goes beyond the commonly known subfields of calculus, linear algebra, probability, and statistics. He points to specific theorems and proofs, such as the 1959 proof related to artificial neural networks, as examples of this beauty and elegance. Gradient descent, a fundamental algorithm in machine learning, is another example: it uses calculus to optimize model parameters by repeatedly stepping them in the direction that most reduces the model's error.
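Gradient descent is easiest to see on the smallest possible model. A minimal Python sketch, assuming a straight-line model y ≈ w·x + b fitted by mean squared error; the learning rate, step count, and data are illustrative choices, not taken from the interview.

```python
def fit_line(xs, ys, lr=0.05, steps=2000):
    """Fit y ≈ w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        # Partial derivatives of MSE = (1/n) * sum(error^2) with respect to w and b
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2.0 / n * sum(errors)
        # Step against the gradient, scaled by the learning rate
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


w, b = fit_line([0.0, 1.0, 2.0, 3.0], [1.0, 3.0, 5.0, 7.0])  # data from y = 2x + 1
```

The same loop, with gradients computed by backpropagation instead of by hand, is what trains neural networks with millions of parameters.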

Ananthaswamy emphasizes the need for a broader understanding of machine learning among non-experts, including science communicators, journalists, policymakers, and users of the technology. He believes that only when we understand the math behind machine learning can we critically evaluate its capabilities and limitations. This matters more and more as AI is used in applications ranging from healthcare to finance.

A deeper understanding of machine learning mathematics has significant implications for society. It can help us evaluate AI systems more effectively, develop more transparent and explainable AI systems, and address AI bias to ensure fairness in decision-making. As Ananthaswamy notes, "The math behind machine learning is not just a tool, but a way of thinking that can help us create more intelligent and more human-like machines."

The Elegant Math Behind Machine Learning (Machine Learning Street Talk, November 2024)


Matrices are used to organize and process complex data, such as images, text, and user interactions, making them a cornerstone in applications like Deep Learning (e.g., neural networks), Computer Vision (e.g., image recognition), Natural Language Processing (e.g., language translation), and Recommendation Systems (e.g., personalized suggestions). To leverage matrices effectively, AI relies on key mathematical concepts like Matrix Factorization (for dimension reduction), Eigendecomposition (for stability analysis), Orthogonality (for efficient transformations), and Sparse Matrices (for optimized computation).
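As a small illustration of the "Sparse Matrices (for optimized computation)" point, here is a minimal pure-Python sketch of the compressed sparse row (CSR) format, the same values / column-index / row-pointer layout that SciPy's `scipy.sparse.csr_matrix` uses. The function names are hypothetical; the point is that a matrix-vector product only touches the stored nonzeros.

```python
def to_csr(dense):
    """Compress a dense matrix (list of rows) into CSR arrays."""
    values, col_idx, row_ptr = [], [], [0]
    for row in dense:
        for j, v in enumerate(row):
            if v != 0:  # store only nonzero entries
                values.append(v)
                col_idx.append(j)
        row_ptr.append(len(values))  # where the next row's entries start
    return values, col_idx, row_ptr


def csr_matvec(values, col_idx, row_ptr, x):
    """Compute A @ x while touching only the stored nonzeros."""
    y = []
    for i in range(len(row_ptr) - 1):
        acc = 0
        for k in range(row_ptr[i], row_ptr[i + 1]):
            acc += values[k] * x[col_idx[k]]
        y.append(acc)
    return y


A = [[0, 0, 3], [4, 0, 0], [0, 5, 6]]
csr = to_csr(A)
result = csr_matvec(*csr, [1, 2, 3])
```

For something like a user-item interaction matrix in a recommendation system, where almost every entry is zero, this cuts both memory and multiplication work to the number of nonzeros.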

The Applications of Matrices - What I wish my teachers told me way earlier (Zach Star, October 2019)


Transformers are a type of neural network architecture introduced in 2017 by Vaswani et al. in the paper “Attention Is All You Need”. They revolutionized NLP by outperforming recurrent (RNN) and convolutional (CNN) neural network architectures on sequence-to-sequence tasks. Their primary innovation is the self-attention mechanism, which lets the model weigh the importance of every word in the input relative to every other word, regardless of how far apart they are in the sentence. This makes it easier to capture long-range dependencies in text, which were hard for RNNs to learn because of vanishing gradients.

Transformers have become the standard architecture for machine translation, offering state-of-the-art results in translating between languages. They are used for both abstractive and extractive summarization of long documents, for question answering, where they help interpret the context of a question and locate the relevant answer in a given text, and for sentiment analysis, where modeling the context and nuances of language helps determine the sentiment behind a text.

Although initially designed for sequential data, transformer variants such as the Vision Transformer (ViT) have been applied successfully to image recognition by treating an image as a sequence of patches. Transformers are also used to improve the accuracy of speech-to-text systems by better modeling the sequential nature of audio, and self-attention can help capture patterns in time-series data, leading to more accurate forecasts.
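The self-attention mechanism described above can be written in a few lines. A minimal pure-Python sketch of single-head scaled dot-product attention, softmax(QKᵀ/√d_k)V, with no positional encoding, masking, or multiple heads; the identity projection matrices in the example are an illustrative assumption, whereas a real transformer learns them.

```python
import math


def matmul(a, b):
    """Multiply matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def softmax_rows(m):
    """Apply a numerically stable softmax to each row."""
    out = []
    for row in m:
        peak = max(row)
        exps = [math.exp(v - peak) for v in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    x holds one row per token; w_q, w_k, w_v are projection matrices.
    Returns the attended outputs and the attention weights.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    k_t = [list(col) for col in zip(*k)]  # transpose K
    scores = [[s / math.sqrt(d_k) for s in row] for row in matmul(q, k_t)]
    weights = softmax_rows(scores)
    return matmul(weights, v), weights


identity = [[1.0, 0.0], [0.0, 1.0]]
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, attn = self_attention(tokens, identity, identity, identity)
```

Each row of `attn` is a probability distribution over all tokens, so every output mixes information from the whole sequence in one step, which is why distance between words does not matter the way it does in an RNN.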

Attention is all you need (Umar Jamil, May 2023)


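Geometric deep learning is a subfield of deep learning that studies how to build neural networks for data with an underlying geometric structure, such as graphs, meshes, and manifolds, by building the symmetries of that structure (invariances and equivariances) into the architecture. The field has gained significant attention in recent years.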
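A graph is the simplest structure where this idea shows up. A minimal Python sketch of one round of sum-aggregation message passing, assuming scalar node features and fixed illustrative weights (a real graph neural network learns them). Because neighbour messages are summed, the result doesn't depend on how the nodes are labelled or ordered, which is exactly the kind of symmetry geometric deep learning builds in.

```python
def message_passing(features, edges, w_self=1.0, w_neigh=0.5):
    """One round of sum-aggregation message passing on an undirected graph.

    features maps node -> scalar feature; edges is a list of (u, v) pairs.
    Summing neighbour messages makes the update independent of node order.
    """
    neighbours = {node: [] for node in features}
    for u, v in edges:
        neighbours[u].append(v)
        neighbours[v].append(u)
    return {
        node: max(0.0, w_self * h + w_neigh * sum(features[m] for m in neighbours[node]))
        for node, h in features.items()
    }


graph = {"a": 1.0, "b": 2.0, "c": 3.0}
updated = message_passing(graph, [("a", "b"), ("b", "c")])
# Relabel the nodes: the outputs are relabelled the same way (permutation equivariance).
relabelled = message_passing({"z": 1.0, "y": 2.0, "x": 3.0}, [("z", "y"), ("y", "x")])
```

The `max(0.0, ...)` is a ReLU nonlinearity; stacking several such rounds lets information travel several hops across the graph.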

Michael Bronstein: Geometric Deep Learning (MLSS Kraków, December 2023)


Traditional Geometric Deep Learning, while powerful, often relies on the assumption of smooth geometric structures. However, real-world data frequently resides in non-manifold spaces where such assumptions are violated. Topology, with its focus on the preservation of proximity and connectivity, offers a more robust framework for analyzing these complex spaces. The inherent robustness of topological properties against noise further solidifies the rationale for integrating topology into deep learning paradigms.
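Topological message passing extends the graph picture to higher-order cells such as filled triangles. Here is a minimal, heavily simplified Python sketch of one update step for the edges of a simplicial complex, using only two kinds of neighbours: an edge's boundary (its two endpoint nodes) and its upper neighbours (other edges sharing a filled triangle with it). The plain-sum update, the function name, and the example complex are assumptions for illustration; models in this line of work use learned functions and further neighbourhood types.

```python
from itertools import combinations


def edge_update(node_feat, edge_feat, triangles):
    """One simplified message-passing step for the edges of a simplicial complex.

    Each edge (a sorted node pair) sums its own feature with messages from
    (1) its boundary, the two endpoint nodes, and
    (2) its upper neighbours, the other edges that share a filled triangle with it.
    """
    upper = {edge: [] for edge in edge_feat}
    for tri in triangles:
        tri_edges = [tuple(sorted(pair)) for pair in combinations(tri, 2)]
        for edge in tri_edges:
            upper[edge].extend(other for other in tri_edges if other != edge)
    updated = {}
    for edge, h in edge_feat.items():
        boundary_msg = sum(node_feat[v] for v in edge)
        upper_msg = sum(edge_feat[other] for other in upper[edge])
        updated[edge] = h + boundary_msg + upper_msg
    return updated


nodes = {1: 1.0, 2: 1.0, 3: 1.0, 4: 1.0}
edges = {(1, 2): 1.0, (1, 3): 2.0, (2, 3): 3.0, (3, 4): 4.0}
new_edges = edge_update(nodes, edges, triangles=[(1, 2, 3)])
```

Edge (3, 4) touches node 3 just like the triangle's edges do, but it receives no upper messages because it bounds no filled triangle. A plain graph cannot tell a filled triangle from an empty 3-cycle, and this extra connectivity information is what the topological view adds.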

Cristian Bodnar: Topological Message Passing (Michael Bronstein, August 2022)


Sunday, November 3, 2024

#machine learning#artificial intelligence#mathematics#computer science#deep learning#neural networks#algorithms#data science#statistics#programming#interview#ai assisted writing#machine art#Youtube#lecture

4 notes
