#Air Canada customer service

Text

Why Do AI Chatbots Hallucinate? Exploring the Science

New Post has been published on https://thedigitalinsider.com/why-do-ai-chatbots-hallucinate-exploring-the-science/

Artificial Intelligence (AI) chatbots have become integral to our lives today, assisting with everything from managing schedules to providing customer support. However, as these chatbots become more advanced, the concerning issue known as hallucination has emerged. In AI, hallucination refers to instances where a chatbot generates inaccurate, misleading, or entirely fabricated information.

Imagine asking your virtual assistant about the weather, and it starts giving you outdated or entirely wrong information about a storm that never happened. While this might be interesting, in critical areas like healthcare or legal advice, such hallucinations can lead to serious consequences. Therefore, understanding why AI chatbots hallucinate is essential for enhancing their reliability and safety.

The Basics of AI Chatbots

AI chatbots are powered by advanced algorithms that enable them to understand and generate human language. There are two main types of AI chatbots: rule-based and generative models.

Rule-based chatbots follow predefined rules or scripts. They can handle straightforward tasks like booking a table at a restaurant or answering common customer service questions. These bots operate within a limited scope and rely on specific triggers or keywords to provide accurate responses. However, their rigidity limits their ability to handle more complex or unexpected queries.

Generative models, on the other hand, use machine learning and Natural Language Processing (NLP) to generate responses. These models are trained on vast amounts of data, learning patterns and structures in human language. Popular examples include OpenAI’s GPT series; Google’s BERT is a closely related Transformer model, though it is built to understand text rather than to generate it. Generative models can create more flexible and contextually relevant responses, making them more versatile and adaptable than rule-based chatbots. However, this flexibility also makes them more prone to hallucination, as they rely on probabilistic methods to generate responses.

What is AI Hallucination?

AI hallucination occurs when a chatbot generates content that is not grounded in reality. This could be as simple as a factual error, like getting the date of a historical event wrong, or something more complex, like fabricating an entire story or medical recommendation. While human hallucinations are sensory experiences without external stimuli, often caused by psychological or neurological factors, AI hallucinations originate from the model’s misinterpretation or overgeneralization of its training data. For example, if an AI has read many texts about dinosaurs, it might erroneously generate a new, fictitious species of dinosaur that never existed.

The concept of AI hallucination has been around since the early days of machine learning. Initial models, which were relatively simple, often made seriously questionable mistakes, such as suggesting that “Paris is the capital of Italy.” As AI technology advanced, the hallucinations became subtler but potentially more dangerous.

Initially, these AI errors were seen as mere anomalies or curiosities. However, as AI’s role in critical decision-making processes has grown, addressing these issues has become increasingly urgent. The integration of AI into sensitive fields like healthcare, legal advice, and customer service increases the risks associated with hallucinations. This makes it essential to understand and mitigate these occurrences to ensure the reliability and safety of AI systems.

Causes of AI Hallucination

Understanding why AI chatbots hallucinate involves exploring several interconnected factors:

Data Quality Problems

The quality of the training data is vital. AI models learn from the data they are fed, so if the training data is biased, outdated, or inaccurate, the AI’s outputs will reflect those flaws. For example, if an AI chatbot is trained on medical texts that include outdated practices, it might recommend obsolete or harmful treatments. Furthermore, if the data lacks diversity, the AI may fail to understand contexts outside its limited training scope, leading to erroneous outputs.

Model Architecture and Training

The architecture and training process of an AI model also play critical roles. Overfitting occurs when an AI model learns the training data too well, including its noise and errors, making it perform poorly on new data. Conversely, underfitting happens when the model fails to learn the training data adequately, resulting in oversimplified responses. Maintaining a balance between these extremes is challenging but essential for reducing hallucinations.
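A toy illustration may help here. The sketch below uses made-up data and hypothetical helper names (none of it comes from a real chatbot): a constant model stands in for underfitting, a nearest-point "memorizer" stands in for overfitting, and a least-squares line sits in between.

```python
# Toy data (made up for illustration): the true rule is y = 2x, but the
# training labels carry alternating +1/-1 noise.
train_x = [0, 1, 2, 3, 4, 5]
train_y = [2 * x + noise for x, noise in zip(train_x, [1, -1, 1, -1, 1, -1])]
test_x = [0.4, 1.4, 2.4, 3.4, 4.4]
test_y = [2 * x for x in test_x]  # noise-free targets the models have not seen


def mean_squared_error(predict, xs, ys):
    """Average squared gap between predictions and targets."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def fit_constant(xs, ys):
    """Underfit: ignore x entirely and always answer with the average label."""
    average = sum(ys) / len(ys)
    return lambda x: average


def fit_line(xs, ys):
    """Balanced: ordinary least-squares straight line."""
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return lambda x: mean_y + slope * (x - mean_x)


def fit_memorizer(xs, ys):
    """Overfit: repeat the label of the nearest training point, noise included."""
    pairs = list(zip(xs, ys))
    return lambda x: min(pairs, key=lambda pair: abs(pair[0] - x))[1]


results = {}
for name, fit in [("constant", fit_constant), ("line", fit_line), ("memorizer", fit_memorizer)]:
    model = fit(train_x, train_y)
    results[name] = (
        mean_squared_error(model, train_x, train_y),
        mean_squared_error(model, test_x, test_y),
    )
    print(f"{name:10s} train MSE={results[name][0]:.3f}  test MSE={results[name][1]:.3f}")
```

On this toy data the memorizer is perfect on the training set yet does worse than the straight line on the unseen points, while the constant model does poorly on both.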

Ambiguities in Language

Human language is inherently complex and full of nuances. Words and phrases can have multiple meanings depending on context. For example, the word “bank” could mean a financial institution or the side of a river. AI models often lack the context needed to disambiguate such terms, leading to misunderstandings and hallucinations.

Algorithmic Challenges

Current AI algorithms have limitations, particularly in handling long-term dependencies and maintaining consistency in their responses. These challenges can cause the AI to produce conflicting or implausible statements even within the same conversation. For instance, an AI might claim one fact at the beginning of a conversation and contradict itself later.

Recent Developments and Research

Researchers continuously work to reduce AI hallucinations, and recent studies have brought promising advancements in several key areas. One significant effort is improving data quality by curating more accurate, diverse, and up-to-date datasets. This involves developing methods to filter out biased or incorrect data and ensuring that the training sets represent various contexts and cultures. By refining the data that AI models are trained on, the likelihood of hallucinations decreases as the AI systems gain a better foundation of accurate information.

Advanced training techniques also play a vital role in addressing AI hallucinations. Techniques such as cross-validation and more comprehensive datasets help reduce issues like overfitting and underfitting. Additionally, researchers are exploring ways to incorporate better contextual understanding into AI models. Transformer models, such as BERT, have shown significant improvements in understanding and generating contextually appropriate responses, reducing hallucinations by allowing the AI to grasp nuances more effectively.

Moreover, algorithmic innovations are being explored to address hallucinations directly. One such innovation is Explainable AI (XAI), which aims to make AI decision-making processes more transparent. By understanding how an AI system reaches a particular conclusion, developers can more effectively identify and correct the sources of hallucination. This transparency helps pinpoint and mitigate the factors that lead to hallucinations, making AI systems more reliable and trustworthy.

These combined efforts in data quality, model training, and algorithmic advancements represent a multi-faceted approach to reducing AI hallucinations and enhancing AI chatbots’ overall performance and reliability.

Real-world Examples of AI Hallucination

Real-world examples of AI hallucination highlight how these errors can impact various sectors, sometimes with serious consequences.

In healthcare, a study by the University of Florida College of Medicine tested ChatGPT on common urology-related medical questions. The results were concerning. The chatbot provided appropriate responses only 60% of the time. Often, it misinterpreted clinical guidelines, omitted important contextual information, and made improper treatment recommendations. For example, it sometimes recommended treatments without recognizing critical symptoms, which could lead to potentially dangerous advice. This shows the importance of ensuring that medical AI systems are accurate and reliable.

Customer service has also seen significant incidents in which AI chatbots provided incorrect information. A notable case involved Air Canada’s chatbot, which gave inaccurate details about the airline’s bereavement fare policy. This misinformation led to a traveler missing out on a refund, causing considerable disruption. A British Columbia tribunal ruled against Air Canada, emphasizing the airline’s responsibility for the information provided by its chatbot. This incident highlights the importance of regularly updating and verifying the accuracy of chatbot databases to prevent similar issues.

The legal field has experienced significant issues with AI hallucinations. In a court case, New York lawyer Steven Schwartz used ChatGPT to generate legal references for a brief, which included six fabricated case citations. This led to severe repercussions and emphasized the necessity for human oversight in AI-generated legal advice to ensure accuracy and reliability.

Ethical and Practical Implications

The ethical implications of AI hallucinations are profound, as AI-driven misinformation can lead to significant harm, such as medical misdiagnoses and financial losses. Ensuring transparency and accountability in AI development is crucial to mitigate these risks.

Misinformation from AI can have real-world consequences, endangering lives with incorrect medical advice and resulting in unjust outcomes with faulty legal advice. Regulatory bodies like the European Union have begun addressing these issues with proposals like the AI Act, aiming to establish guidelines for safe and ethical AI deployment.

Transparency in AI operations is essential, and the field of XAI focuses on making AI decision-making processes understandable. This transparency helps identify and correct hallucinations, ensuring AI systems are more reliable and trustworthy.

The Bottom Line

AI chatbots have become essential tools in various fields, but their tendency to hallucinate poses significant challenges. By understanding the causes, which range from data quality issues to algorithmic limitations, and implementing strategies to mitigate these errors, we can enhance the reliability and safety of AI systems. Continued advancements in data curation, model training, and explainable AI, combined with essential human oversight, will help ensure that AI chatbots provide accurate and trustworthy information, ultimately fostering greater trust and utility in these powerful technologies.

#Advice#ai#ai act#AI Chatbot#AI development#ai hallucination#AI hallucinations#ai model#AI models#AI systems#air#Algorithms#anomalies#approach#architecture#artificial#Artificial Intelligence#bank#BERT#bots#Canada#chatbot#chatbots#chatGPT#college#comprehensive#content#court#customer service#data

0 notes

Text

#air drone#best dji drone#buy dji#buy dji drone#buy dji mavic 3#buy drone#buy mavic 3#dj1 store#dji 2#dji 2s#dji 3#dji 3 pro#dji accessories#dji agriculture#dji agriculture drone#dji air#dji air 3#dji air 3 release date#dji air unit#dji app#dji battery#dji battery charger#dji camera#dji canada store#dji charger#dji classic 3#dji contact#dji customer service#dji drone accessories#dji drone app

0 notes

Text

Air freight forwarder Canada |Customs clearance broker Canada

Global Freight Solutions (GFFCA) offers a comprehensive suite of logistics services, including efficient and reliable air freight forwarding. Optimized for time-sensitive shipments, GFFCA ensures swift and secure transportation of your goods. Alongside air freight, their offerings cover ocean freight forwarding, customs clearance, and more, providing a one-stop solution for all your logistics needs. Elevate your cargo logistics with GFFCA's cost-effective and dependable shipping solutions. Request a quote today and fuel your success with their seamless, global freight movement services.

To know more 📞 +1(905) 672 9077 or visit - gffca.com

#freight forwarding company#logistics company in canada#freight forwarders near me#freight international services#global freight solutions#freight forwarding solutions#logistics company#customs clearance broker canada#customs clearance and brokerage#customs brokerage services#air freight services canada#air freight forwarder Canada#air freight forwarder#air freight shipping#ocean freight forwarding companies#Reefer Freight Shipping#ocean frieght services#sea freight forwarder#ocean freight forwarders#international freight shipping#International freight forwarding industry#import export shipping company

1 note


Text

“Humans in the loop” must detect the hardest-to-spot errors, at superhuman speed

I'm touring my new, nationally bestselling novel The Bezzle! Catch me SATURDAY (Apr 27) in MARIN COUNTY, then Winnipeg (May 2), Calgary (May 3), Vancouver (May 4), and beyond!

If AI has a future (a big if), it will have to be economically viable. An industry can't spend 1,700% more on Nvidia chips than it earns indefinitely – not even with Nvidia being a principal investor in its largest customers:

https://news.ycombinator.com/item?id=39883571

A company that pays 0.36-1 cents/query for electricity and (scarce, fresh) water can't indefinitely give those queries away by the millions to people who are expected to revise those queries dozens of times before eliciting the perfect botshit rendition of "instructions for removing a grilled cheese sandwich from a VCR in the style of the King James Bible":

https://www.semianalysis.com/p/the-inference-cost-of-search-disruption

Eventually, the industry will have to uncover some mix of applications that will cover its operating costs, if only to keep the lights on in the face of investor disillusionment (this isn't optional – investor disillusionment is an inevitable part of every bubble).

Now, there are lots of low-stakes applications for AI that can run just fine on the current AI technology, despite its many – and seemingly inescapable – errors ("hallucinations"). People who use AI to generate illustrations of their D&D characters engaged in epic adventures from their previous gaming session don't care about the odd extra finger. If the chatbot powering a tourist's automatic text-to-translation-to-speech phone tool gets a few words wrong, it's still much better than the alternative of speaking slowly and loudly in your own language while making emphatic hand-gestures.

There are lots of these applications, and many of the people who benefit from them would doubtless pay something for them. The problem – from an AI company's perspective – is that these aren't just low-stakes, they're also low-value. Their users would pay something for them, but not very much.

For AI to keep its servers on through the coming trough of disillusionment, it will have to locate high-value applications, too. Economically speaking, the function of low-value applications is to soak up excess capacity and produce value at the margins after the high-value applications pay the bills. Low-value applications are a side-dish, like the coach seats on an airplane whose total operating expenses are paid by the business class passengers up front. Without the principal income from high-value applications, the servers shut down, and the low-value applications disappear:

https://locusmag.com/2023/12/commentary-cory-doctorow-what-kind-of-bubble-is-ai/

Now, there are lots of high-value applications the AI industry has identified for its products. Broadly speaking, these high-value applications share the same problem: they are all high-stakes, which means they are very sensitive to errors. Mistakes made by apps that produce code, drive cars, or identify cancerous masses on chest X-rays are extremely consequential.

Some businesses may be insensitive to those consequences. Air Canada replaced its human customer service staff with chatbots that just lied to passengers, stealing hundreds of dollars from them in the process. But the process for getting your money back after you are defrauded by Air Canada's chatbot is so onerous that only one passenger has bothered to go through it, spending ten weeks exhausting all of Air Canada's internal review mechanisms before fighting his case for weeks more at the regulator:

https://bc.ctvnews.ca/air-canada-s-chatbot-gave-a-b-c-man-the-wrong-information-now-the-airline-has-to-pay-for-the-mistake-1.6769454

There's never just one ant. If this guy was defrauded by an AC chatbot, so were hundreds or thousands of other fliers. Air Canada doesn't have to pay them back. Air Canada is tacitly asserting that, as the country's flagship carrier and near-monopolist, it is too big to fail and too big to jail, which means it's too big to care.

Air Canada shows that for some business customers, AI doesn't need to be able to do a worker's job in order to be a smart purchase: a chatbot can replace a worker, fail to do that worker's job, and still save the company money on balance.

I can't predict whether the world's sociopathic monopolists are numerous and powerful enough to keep the lights on for AI companies through leases for automation systems that let them commit consequence-free fraud by replacing workers with chatbots that serve as moral crumple-zones for furious customers:

https://www.sciencedirect.com/science/article/abs/pii/S0747563219304029

But even stipulating that this is sufficient, it's intrinsically unstable. Anything that can't go on forever eventually stops, and the mass replacement of humans with high-speed fraud software seems likely to stoke the already blazing furnace of modern antitrust:

https://www.eff.org/de/deeplinks/2021/08/party-its-1979-og-antitrust-back-baby

Of course, the AI companies have their own answer to this conundrum. A high-stakes/high-value customer can still fire workers and replace them with AI – they just need to hire fewer, cheaper workers to supervise the AI and monitor it for "hallucinations." This is called the "human in the loop" solution.

The human in the loop story has some glaring holes. From a worker's perspective, serving as the human in the loop in a scheme that cuts wage bills through AI is a nightmare – the worst possible kind of automation.

Let's pause for a little detour through automation theory here. Automation can augment a worker. We can call this a "centaur" – the worker offloads a repetitive task, or one that requires a high degree of vigilance, or (worst of all) both. They're a human head on a robot body (hence "centaur"). Think of the sensor/vision system in your car that beeps if you activate your turn-signal while a car is in your blind spot. You're in charge, but you're getting a second opinion from the robot.

Likewise, consider an AI tool that double-checks a radiologist's diagnosis of your chest X-ray and suggests a second look when its assessment doesn't match the radiologist's. Again, the human is in charge, but the robot is serving as a backstop and helpmeet, using its inexhaustible robotic vigilance to augment human skill.

That's centaurs. They're the good automation. Then there's the bad automation: the reverse-centaur, when the human is used to augment the robot.

Amazon warehouse pickers stand in one place while robotic shelving units trundle up to them at speed; then, the haptic bracelets shackled around their wrists buzz at them, directing them to pick up specific items and move them to a basket, while a third automation system penalizes them for taking toilet breaks or even just walking around and shaking out their limbs to avoid a repetitive strain injury. This is a robotic head using a human body – and destroying it in the process.

An AI-assisted radiologist processes fewer chest X-rays every day, costing their employer more, on top of the cost of the AI. That's not what AI companies are selling. They're offering hospitals the power to create reverse centaurs: radiologist-assisted AIs. That's what "human in the loop" means.

This is a problem for workers, but it's also a problem for their bosses (assuming those bosses actually care about correcting AI hallucinations, rather than providing a figleaf that lets them commit fraud or kill people and shift the blame to an unpunishable AI).

Humans are good at a lot of things, but they're not good at eternal, perfect vigilance. Writing code is hard, but performing code-review (where you check someone else's code for errors) is much harder – and it gets even harder if the code you're reviewing is usually fine, because this requires that you maintain your vigilance for something that only occurs at rare and unpredictable intervals:

https://twitter.com/qntm/status/1773779967521780169

But for a coding shop to make the cost of an AI pencil out, the human in the loop needs to be able to process a lot of AI-generated code. Replacing a human with an AI doesn't produce any savings if you need to hire two more humans to take turns doing close reads of the AI's code.

This is the fatal flaw in robo-taxi schemes. The "human in the loop" who is supposed to keep the murderbot from smashing into other cars, steering into oncoming traffic, or running down pedestrians isn't a driver, they're a driving instructor. This is a much harder job than being a driver, even when the student driver you're monitoring is a human, making human mistakes at human speed. It's even harder when the student driver is a robot, making errors at computer speed:

https://pluralistic.net/2024/04/01/human-in-the-loop/#monkey-in-the-middle

This is why the doomed robo-taxi company Cruise had to deploy 1.5 skilled, high-paid human monitors to oversee each of its murderbots, while traditional taxis operate at a fraction of the cost with a single, precaratized, low-paid human driver:

https://pluralistic.net/2024/01/11/robots-stole-my-jerb/#computer-says-no

The vigilance problem is pretty fatal for the human-in-the-loop gambit, but there's another problem that is, if anything, even more fatal: the kinds of errors that AIs make.

Foundationally, AI is applied statistics. An AI company trains its AI by feeding it a lot of data about the real world. The program processes this data, looking for statistical correlations in that data, and makes a model of the world based on those correlations. A chatbot is a next-word-guessing program, and an AI "art" generator is a next-pixel-guessing program. They're drawing on billions of documents to find the most statistically likely way of finishing a sentence or a line of pixels in a bitmap:

https://dl.acm.org/doi/10.1145/3442188.3445922
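A toy sketch of what "next-word guessing" means (an illustration only: real chatbots use vastly larger neural models, and the corpus and function names here are made up). It counts which word most often follows each word, then finishes a sentence with the most frequent follower:

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, which words follow it and how often."""
    follows = defaultdict(Counter)
    words = text.lower().split()
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def guess_next(follows, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical corpus: one fact is repeated often, another appears only once.
corpus = (
    "the capital of france is paris . "
    "the capital of france is paris . "
    "the capital of france is paris . "
    "the capital of italy is rome . "
)
model = train_bigrams(corpus)

# The guesser only sees the previous word, so "... of italy is" gets the
# same continuation as "... of france is": the most common one.
print(guess_next(model, "is"))
```

This toy looks only at the previous word, so its guess after "is" is whatever followed "is" most often in the corpus, whichever country the sentence was about. The output is fluent, statistically typical, and wrong for the rarer case.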

This means that AI doesn't just make errors – it makes subtle errors, the kinds of errors that are the hardest for a human in the loop to spot, because they are the most statistically probable ways of being wrong. Sure, we notice the gross errors in AI output, like confidently claiming that a living human is dead:

https://www.tomsguide.com/opinion/according-to-chatgpt-im-dead

But the most common errors that AIs make are the ones we don't notice, because they're perfectly camouflaged as the truth. Think of the recurring AI programming error that inserts a call to a nonexistent library called "huggingface-cli," which is what the library would be called if developers reliably followed naming conventions. But due to a human inconsistency, the real library has a slightly different name. The fact that AIs repeatedly inserted references to the nonexistent library opened up a vulnerability – a security researcher created an (inert) malicious library with that name and tricked numerous companies into compiling it into their code because their human reviewers missed the chatbot's (statistically indistinguishable from the truth) lie:

https://www.theregister.com/2024/03/28/ai_bots_hallucinate_software_packages/
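A near-miss name like this is easy for a tired reviewer to wave through, but a dumb mechanical check can catch it. Here's a minimal sketch, assuming a shop keeps its own allowlist of vetted package names (the allowlist, the function name and the sample inputs are all hypothetical):

```python
import difflib


def flag_unvetted(requested, allowlist):
    """Map each requested name that is not on the allowlist to its closest vetted names."""
    vetted = {name.lower() for name in allowlist}
    report = {}
    for name in requested:
        key = name.lower()
        if key not in vetted:
            # Near-misses are the dangerous case: they look right at a glance.
            report[name] = difflib.get_close_matches(key, sorted(vetted), n=3, cutoff=0.6)
    return report


# Hypothetical inputs: names pulled from AI-suggested code, and a vetted list.
suggested = ["requests", "huggingface-cli"]
vetted_packages = ["requests", "huggingface-hub"]

for name, lookalikes in flag_unvetted(suggested, vetted_packages).items():
    print(f"{name!r} is not vetted; closest vetted names: {lookalikes}")
```

This only catches names that aren't on the list. It does nothing about the vigilance problem for every other kind of plausible-looking error.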

For a driving instructor or a code reviewer overseeing a human subject, the majority of errors are comparatively easy to spot, because they're the kinds of errors that lead to inconsistent library naming – places where a human behaved erratically or irregularly. But when reality is irregular or erratic, the AI will make errors by presuming that things are statistically normal.

These are the hardest kinds of errors to spot. They couldn't be harder for a human to detect if they were specifically designed to go undetected. The human in the loop isn't just being asked to spot mistakes – they're being actively deceived. The AI isn't merely wrong, it's constructing a subtle "what's wrong with this picture"-style puzzle. Not just one such puzzle, either: millions of them, at speed, which must be solved by the human in the loop, who must remain perfectly vigilant for things that are, by definition, almost totally unnoticeable.

This is a special new torment for reverse centaurs – and a significant problem for AI companies hoping to accumulate and keep enough high-value, high-stakes customers on their books to weather the coming trough of disillusionment.

This is pretty grim, but it gets grimmer. AI companies have argued that they have a third line of business, a way to make money for their customers beyond automation's gifts to their payrolls: they claim that they can perform difficult scientific tasks at superhuman speed, producing billion-dollar insights (new materials, new drugs, new proteins) at unimaginable speed.

However, these claims – credulously amplified by the non-technical press – keep on shattering when they are tested by experts who understand the esoteric domains in which AI is said to have an unbeatable advantage. For example, Google claimed that its DeepMind AI had discovered "millions of new materials," "equivalent to nearly 800 years’ worth of knowledge," constituting "an order-of-magnitude expansion in stable materials known to humanity":

https://deepmind.google/discover/blog/millions-of-new-materials-discovered-with-deep-learning/

It was a hoax. When independent materials scientists reviewed representative samples of these "new materials," they concluded that "no new materials have been discovered" and that not one of these materials was "credible, useful and novel":

https://www.404media.co/google-says-it-discovered-millions-of-new-materials-with-ai-human-researchers/

As Brian Merchant writes, AI claims are eerily similar to "smoke and mirrors" – the dazzling reality-distortion field thrown up by 17th century magic lantern technology, which millions of people ascribed wild capabilities to, thanks to the outlandish claims of the technology's promoters:

https://www.bloodinthemachine.com/p/ai-really-is-smoke-and-mirrors

The fact that we have a four-hundred-year-old name for this phenomenon, and yet we're still falling prey to it, is frankly a little depressing. And, unlucky for us, it turns out that AI therapybots can't help us with this – rather, they're apt to literally convince us to kill ourselves:

https://www.vice.com/en/article/pkadgm/man-dies-by-suicide-after-talking-with-ai-chatbot-widow-says

If you'd like an essay-formatted version of this post to read or share, here's a link to it on pluralistic.net, my surveillance-free, ad-free, tracker-free blog:

https://pluralistic.net/2024/04/23/maximal-plausibility/#reverse-centaurs

Image:

Cryteria (modified)

https://commons.wikimedia.org/wiki/File:HAL9000.svg

CC BY 3.0

https://creativecommons.org/licenses/by/3.0/deed.en

#pluralistic#ai#automation#humans in the loop#centaurs#reverse centaurs#labor#ai safety#sanity checks#spot the mistake#code review#driving instructor

852 notes


Text

How to book Air Canada Rouge flights?

If you are planning to travel anywhere via Air Canada Rouge and you want to know the flight booking procedure, follow the instructions given below:

The online flight booking process

1) Visit the official Air Canada website and click on the “book” option to search for your flight. Enter your departure and arrival points, date of departure, number of travelers, and trip type, then click on the find option.

2) Once you find the most suitable option, tap on it to make a booking.

3) You must log into your account to make a reservation. Fill in your personal and contact information in the fields provided and click on the continue button.

4) Now, you will be taken to the payment page, where you can make a payment, or you can hold your ticket and pay later.

5) After reviewing all the information, click on the checkbox.

Flight booking by contacting customer service

Passengers can also reach the airline's customer care team through various channels to make flight reservations. The process of booking a flight via Air Canada Rouge Customer Service is straightforward and does not require extra effort. You just need to share your travel preferences and make a payment; the rest is handled by them.

0 notes

Text

Hmm, is that the sound of chickens, beginning to come home to roost?

After months of resisting, Air Canada was forced to give a partial refund to a grieving passenger who was misled by an airline chatbot inaccurately explaining the airline's bereavement travel policy.

...

Air Canada was seemingly so invested in experimenting with AI that Crocker told the Globe and Mail that "Air Canada’s initial investment in customer service AI technology was much higher than the cost of continuing to pay workers to handle simple queries." It was worth it, Crocker said, because "the airline believes investing in automation and machine learning technology will lower its expenses" and "fundamentally" create "a better customer experience."

I also highly recommend reading the decision itself:

Highlights:

"In effect, Air Canada suggests the chatbot is a separate legal entity that is responsible for its own actions. This is a remarkable submission."

"While Air Canada argues Mr. Moffatt could find the correct information on another part of its website, it does not explain why the webpage titled “Bereavement travel” was inherently more trustworthy than its chatbot. It also does not explain why customers should have to double-check information found in one part of its website on another part of its website."

And not "AI" related, but delicious snark:

"Air Canada is a sophisticated litigant that should know it is not enough in a legal process to assert that a contract says something without actually providing the contract."

#ai bs#lol#and good for the customer for refusing to take the company's shit#and taking them to court#I am a big fan of customers taking companies to small claims court

528 notes


Text

In Canada, where I am currently forced to reside because of the intersection of intolerant legislation and dynamic self-expression, people love a loyalty card. Because everything is overpriced here, every store offers you a points card of some kind. My wallet right now has 16 different loyalty cards, and my phone creaks under the load of same.

Why do we do it? For the promise of free shit. If you visit Dairy Queen approximately 60 times in a row or give them about $300 in business, they'll give you a free ice cream cone. Do you visit Dairy Queen enough to justify that? Absolutely not, but their hope is that the rewards card will help bring you in rather than "waste" your money on some other ice-cream purveyor.

All this has given rise to what the psychologists in Ottawa are calling "rewards point rage," where you get super mad at someone throwing away perfectly good points by not having a reward card. You ignorant motherfucker, you don't have an Air Miles card? Those two tangerines and a bag of potato chips would have gotten you one Air Mile. Here, let me just stick my card in here and get them for you. What, now you want them? Let's take this outside.

At the moment, only a few hundred people have been injured in point-rage incidents, with a mere two or three of them succumbing to their injuries. This is but a small price to pay for increased consumer satisfaction, aided by the fact that if you do a customer service survey right after shopping there, they might give you five or even ten rewards points into your account as a little "thank you." Better keep them on your good side.

162 notes


Text

Air Canada says it's apologizing and making a number of changes internally to improve the way it treats passengers with disabilities after several high-profile incidents — including one involving a passenger who had to drag himself off a plane — led to a meeting with federal ministers in Ottawa this week.

The airline said Thursday it will be fast-tracking a plan to update the boarding process and changing the way it stores mobility aids like wheelchairs to ensure customers with disabilities can get on and off the plane safely, as well as updating its training procedures for thousands of employees.

"Air Canada recognizes the challenges customers with disabilities encounter when they fly and accepts its responsibility to provide convenient and consistent service so that flying with us becomes easier. Sometimes we do not meet this commitment, for which we offer a sincere apology," CEO Michael Rousseau wrote in a statement.

"As our customers with disabilities tell us, the most important thing is that we continuously improve in the future."

Continue Reading.

Tagging @politicsofcanada

56 notes


Text

Finally, chapter six of Inhuman! Unfortunately I couldn't find a way to squeeze any g/t into this chapter, but a new perspective and new characters are introduced in this addition. Enjoy!

Chapter six,

Damien

I'm three hours into the thirteen-hour flight from Venice, Italy to Vancouver, Canada, and with each passing second I'm more tempted to knock out the pilot, take the wheel and break most of the air traffic safety rules.

It took me ten years to find my sister, and I hired the two most idiotic men to retrieve her. I would've done it myself if it weren't for the fact that I was in Europe when I got the notification that she was still in the same town we grew up in.

I knew my father wasn't an idiot when it came to where he put his labs. He strategically places them in old, worn, abandoned buildings so that they're not on the government's radar. Unfortunately for him, I'm not as stupid as the government and was able to find Isabelle.

Granted it took me ten years, but I know for a fact that she's still alive and not too injured. I know for a fact that she's going to have a lot of changes due to the unique nature of our father's experiments, but it won't be anything I can't reverse.

I was seventeen when Isabelle was taken to the lab. My mother and father came home that day to tell me about what they had done, and informed me that I was going to be taken there as well. Not for the same reason as my sister, though: they wanted me to start visiting the labs so I could learn what I would be in charge of after they passed. Of course, when I protested, their reaction was not what I was expecting. Instead of the usual violence and threats on my life, they said something a hundred times worse.

They had threatened to use Isabelle for tests that the subjects were not meant to survive.

With how they had gone into detail about the different ways they could make her death slower and more agonizing, I knew I didn't have much of a choice but to force other innocent children into the same sick fate that hundreds have been put through due to my parents. In a way I'm just as bad as they are. I couldn't find another way to save my sister and instead hurt dozens of others just like her, and I don't even know if I truly saved her at all.

Nine more hours into the flight I'm tempted to just grab a parachute and jump out of the plane window.

I refrain, however; I don't think the other passengers would appreciate a sudden loss of pressure in the cabin. The last hour of the flight is always the longest, and I feel like now is worse than ever.

I sigh for what feels like the millionth time since I boarded the plane, and I only sigh louder when the baby I'm sitting ten seats in front of starts wailing once again. Anyone who brings a baby on a plane should at least have the decency to be able to keep it quiet. Yet another sigh escapes my lips as I unbuckle my seatbelt and make my way over to the woman holding the baby, my face carefully folded into an expression that masks the annoyance that is building inside my core.

Once I reach her seat, I see the baby's little face scrunched up with tears running down it, alongside a clearly exhausted mother who looks like she hasn't gotten a wink of sleep in the last week. I tap her shoulder and her eyes meet mine, the dark circles under them abundantly clear. “Excuse me, miss, I don't mean to be rude,” I tell her in my customer service voice, and I hold out the small blue stuffed bear that I keep with me for situations like this, “but would your child want this to help him calm down?” The baby in question stops his blubbering and begins to make grabby hands at the toy. His mother accepts it with a grateful smile, and I leave before she can initiate any small talk.

Once the plane has landed, I waste no time grabbing my bags and calling a taxi to take me to the very odd address I was given by the idiots I hired. Since I was in such a rush to leave Italy, I only packed three bags to take with me. One for essentials, one for my equipment, and one for Isabelle's things that I managed to get my hands on after my parents threw them all out. The cab driver is a young man in his twenties. He seems tired, and based on his expression I assume he's not one of those chatty drivers I hate.

I lean back and pull out my phone, a simple black burner phone that I use to contact anyone involved in my less than legal life. I scroll through the seemingly never-ending list of contacts before finally coming across the one I need. I click the call button and bring the phone to my ear. Once I hear the voice on the other end of the phone, I speak before they have a chance to take a breath. “You have twenty minutes.”

12 notes


Text

No. 43 - Porter Airlines

I consider myself very lucky to live near enough to an airport, located directly beneath one of the main departure paths, that I can regularly see airplanes flying overhead on their way off to wherever. Depending on the plane, they can pass over my house as low as 3,000 feet! ...which is still way too high for my phone's camera! So while I can see the plane decently, even make out details of the livery, what my camera sees is...this.

Okay, so my planespotting hobby mostly consists of literally spotting them (I am very good at this part! It's the photography that I struggle with!) because I'm unable to shell out for a telephoto lens, but thanks to the magic of flight tracking software I'm able to identify the exact airplane that this is, rather than being forced to base my review off this crunchy "photograph".

So, I'd like to introduce you all to our subject for today, C-GLQR! And, by extension, Porter Airlines - requested by @fungaloids, plus an anon.

First flown in February of 2009 and delivered in December of the same year, C-GLQR has served her entire fourteen-year career with Porter Airlines. She's actually only slightly younger than the airline itself. Porter was founded in 2006, featuring executives who formerly served in similarly high positions in Canadian regional airlines Air Ontario and Canada 3000, American Airlines, and...apparently the former US ambassador to Canada for some reason. They're about as large as you can get while still more or less being a regional airline, and they fly a fleet I'd call medium-sized of Embraer E195-E2 jets and an even larger number of Bombardier Dash 8-Q400 turboprop planes, like the pictured C-GLQR, out of their hub in Toronto.

One interesting thing about Porter (inconsistently stylized as lowercase-p porter, but it lacks the clear intent of something like condor so I'm not going as far as to write it that way myself) is said hub. See, when I say Toronto, you probably think of the worst airport in the entire world, Toronto Lester B. Pearson International Airport. Thankfully for Porter's customers they do not have to go to the labyrinth of human misery which is Toronto Pearson, and are instead corralled into Billy Bishop Toronto City Airport, colloquially known as Toronto Island Airport, potentially because it's changed its name twice and the local population got sick of remembering what it's calling itself now.

image: DXR

The 'island' designator is quite literal. This is a teeny tiny airport, just barely large enough to land the Q400 and definitely too small to land jets. The fact that Porter flies to Chicago-Midway, Washington-Dulles, and Boston-Logan is a testament to the Q400's absolutely wild range rather than an indication that this tiny scrap of land is in any meaningful way an international airport. It has two runways and both are shorter than the ones at the smallest airport I've ever flown into that had an actual terminal, Vieques. I'm surprised they can operate a Q400 there. In fact, they can't - they had to pick a seat configuration smaller than the standard in order to be able to use the runways at Billy Bishop. (Incidentally, this means their seats have a more generous pitch, so I suppose that's a point for them.)

So why would they want to put the biggest passenger turboprop in service in the West onto this tiny airstrip? Well, Porter's...reason for existing, so it seems, is to force the Toronto Port Authority to expand the airport and build a bridge to the mainland despite the fact that nobody who lives in the area wants this. Hilariously, they have been entirely unsuccessful in this venture and now operate a second hub in Pearson. That's where they put the jets - after all, if you tried to land an E195-E2 at Toronto Island you would have a very wet plane and some very mad passengers on your hands very quickly.


I mean, to be fair, getting to not go to Pearson is a selling point.

I don't have any other place to put this but they have an adorable raccoon mascot named Mr. Porter. I'm not sure why a raccoon, but I like him. He doesn't appear on the livery at all - heaven forbid we do something interesting - but he's there and he's cute. I do have to point out, though, that this is one of the worst names for SEO I've seen in a while, given Mr. Porter is the name of the men's department of extremely popular luxury fashion outlet shop Net-a-Porter.

I think raccoons could be a pretty nice source of inspiration for a livery, what with their colorblocking and stripes. You could even make the planes' engine cowlings look like weirdly human little hands. I would hate that, but I would respect it! Instead Porter has taken the approach of making the plane mostly white. Revolutionary for sure.

I'll begin with the good and say that I really like this grey underside with its little outlines - I think this is an absolutely brilliant design for the Dash 8. Unlike the ATR series, which I've talked about a fair few times before on this blog, the Q400 is about as angular as a plane can get. I've never touched on that shape before, but I've discussed how carriers, though I'm sure it's by accident and they never consider this, work with the shape of the ATR to good effect. The curvaceousness of the ventral fairing on the ATR is complemented by long swoops like the ones used by Azul, IndiGo, and Air Astra. The Q400, in contrast, stores its landing gear in the engine cowlings, allowing for a very flat belly and uninterrupted fuselage that looks best with sharp long lines and blocky geometric shapes. If this livery had any other details, this would be such a nice touch - they even hammer the point in with the same design on the bottom of the cowlings.

Unfortunately, it's so light-colored that it's difficult to notice. You could mistake it for shadows settling on natural grooves in the airframe if you didn't know what the bottom of a Q400 is supposed to look like, and it isn't as if you can see it when the plane is parked.

You may well not see the wordmark, either. While the sans-serif font chosen is almost gratingly boring it is at least not hideous, but it's located in such an out-of-the-way location it almost feels like they're ashamed of it. It's so needlessly far back and low-sitting that the wing blocks it from half the possible angles, and it's not like it's accentuated in any way. You could so easily miss it. This wordmark is honestly Lufthansa-tier.

Another thing I don't like is the use of the tail. It's blocked out very Detached Tail Syndrome style, refusing to engage with the large block leading from it to the fuselage. I would understand, though not approve, if this was because they didn't want to redesign the balance of the tail when applying the livery to a new style of plane, but the Q400 is what they started with! The livery was designed for this plane and it seems to want you to just not notice this significant chunk of fuselage! It makes the whole airframe look so desolate and empty. The kindest thing I can say for it is that it looks lazy, but really it looks more unfinished. I just struggle to understand why these choices were made, in all honesty. Surely this isn't the best you can do.

Right, right, okay. There's something I've been dancing around on purpose and I think it's obvious what it is. I just wanted to get in an entire review first because there's sort of no going back once I've mentioned it. Everything I said before, while very important, is subordinate to this one...utterly perplexing choice which turns failure to infamy.

PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER PORTER

Grade: Z-

#tarmac fashion week#era: 2000s#era: 2010s#era: 2020s#grade: z-#region: north america#region: canada#regional airlines#porter airlines

27 notes


Text

the issue with AI chatbots is that they should NEVER be your first choice if you are building something to handle easily automated forms.... consider an algorithmic "choose your own adventure" style chatbot first

it really seems to me that the air canada chatbot was intended to be smth that could automatically handle customer service issues but honestly... if you do not need any sort of "human touch" then i would recommend a "fancier google form"... like a more advanced flowchart of issues. If you NEED AI to be part of your chatbot I would incorporate it as part of the input parsing - you should not be using it to generate new information!
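A minimal sketch of the flowchart idea above, in Python. Everything in it is hypothetical: the `FLOW` table, the node names, the keywords, and the reply text are made up for illustration and are not any airline's actual system or policy. The keyword matcher in `parse_choice` is only a stand-in for whatever input-parsing model you might plug in. The point is that the parser can only pick one of the pre-written branches, and every reply comes verbatim from the hand-written table.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Node:
    """One step of the flowchart: a fixed prompt plus the branches it allows."""
    prompt: str
    # Maps an option label to (keywords that select it, id of the next node).
    options: dict[str, tuple[list[str], str]] = field(default_factory=dict)


# Hypothetical flowchart; every reply the bot can give is written here by a human.
FLOW = {
    "start": Node(
        "What do you need help with? (refund / baggage)",
        {
            "refund": (["refund", "money back"], "refund"),
            "baggage": (["bag", "luggage", "suitcase"], "baggage"),
        },
    ),
    "refund": Node("Please fill in the refund form. A human agent reviews each request."),
    "baggage": Node("Please report delayed or lost baggage through the baggage form."),
}


def parse_choice(node: Node, text: str) -> Optional[str]:
    """Input parsing only: map free text onto one of the node's options.

    Keyword matching stands in for a real classifier (an assumption).
    It can only return an existing option label or None, never new text.
    """
    lowered = text.lower()
    for label, (keywords, _next_id) in node.options.items():
        if any(keyword in lowered for keyword in keywords):
            return label
    return None


def step(node_id: str, text: str) -> tuple[str, str]:
    """Advance one step; return (next node id, reply taken verbatim from FLOW)."""
    node = FLOW[node_id]
    choice = parse_choice(node, text)
    if choice is None:
        # Unrecognized input: repeat the scripted prompt instead of improvising.
        return node_id, node.prompt
    next_id = node.options[choice][1]
    return next_id, FLOW[next_id].prompt
```

Calling `step("start", "I want my money back")` moves to the `refund` node and returns its scripted text, while anything the parser doesn't recognize just repeats the current prompt. Swapping in a smarter parser wouldn't change what the bot is able to say.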

#literally everyone sees AI and gets so hard they black out and it ANNOYS me!#prediction/recognition AI is fine i guess it's not without flaws but it is leagues better than generative AI#but also - use the right tool for the job#like the article said it was using ai chatbot to handle customer service. Is it so hard to create a series of pre-made questions to guide#-a customer through the process? (this is called a wizard i think)#(most importantly wizards have been around for pretty much as long as GUIs have)#'oh but you need to hardcode it' yeah and hardcoding stuff came free with having a job. Turn your questions into a series of markdown#-documents if you need someone with no tech skill to come in and put in the questions#don't outsource it to an AI. You are lowering the overall tech literacy of the population here and im not kidding#there are good uses of AI.. Woebot is a really good chatbot that uses AI precisely because it has a lot of human oversight in it#but i really think that AI is something that you better have a PERFECT - not just good - justification for#sorry.... angry tech guy rant.... i swear i dont do this in real life... i just like typing...

10 notes


Text

airsLLide No. 16029: C-FDCA, Airbus A320-211, Canadian Airlines International, Miami, March 1, 1999.

Canadian Airlines was a fairly early customer for the Airbus A320 and thus it took delivery of C-FDCA, its fifth unit of the type, in March 1991. After eight years in service, she looked a bit faded, but don't get fooled - she's a tough one that won't let go easily.

C-FDCA served on through the Canadian/Air Canada merger, got transferred to various subsidiaries later, such as to the low cost branch Air Canada Tango in 2001, or to the upscale sports charter subsidiary Air Canada Jetz in 2003. In 2012, she returned to just fly for mainline Air Canada - and so she does to the present day, making Charlie Alpha, manufacturer serial number 232, one of the oldest A320s still active in summer 2024!

And to illustrate my point:

airsLLide No. 45657: C-FDCA, Airbus A320-211, Air Canada, Toronto-Pearson, April 26, 2024.

I said she's tough!

2 notes


Text

NHL to air alternate Stanley Cup Final broadcast for Deaf community

By William Douglas

The NHL and P-X-P will produce a first-of-its-kind alternate broadcast of the Stanley Cup Final in American Sign Language for the Deaf community.

“NHL in ASL” will be available on the digital platforms of ESPN+ and Canada’s Sportsnet+ and feature Deaf broadcasters providing real-time play-by-play coverage and color commentary during each game of the best-of-7 Final between the Florida Panthers and Edmonton Oilers. Game 1 is Saturday at 8 p.m. ET.

It’s the latest collaboration between the NHL and P-X-P, which has provided ASL interpretation for signature events like the Winter Classic, Heritage Classic, NHL All-Star Weekend and Stadium Series, and Commissioner Gary Bettman’s State of the League address.

“Our continued partnership with P-X-P allows the NHL to do something no other professional sports league has done before: provide a fully immersive, unique, and accessible viewing experience that specifically meets the needs of the Deaf community,” said Kim Davis, NHL Senior Executive Vice President of Social Impact, Growth Initiatives and Legislative Affairs. “This NHL-led production further exemplifies the League’s commitment to producing accessible and interactive content for all of our fans -- including underserved communities. Fans of all abilities are encouraged to tune in to experience this first-of-a-kind broadcast as a way to understand and share the experience with someone who is Deaf.”

Steve Mayer, NHL Senior Executive Vice President and Chief Content Officer, said the broadcast won’t be the traditional play-by-play and color commentary, “but rather something more conversational and relaxed in nature providing a deeper layer of additional storytelling.”

“The first-of-its-kind production is something we hope and plan to expand on beyond the Stanley Cup Final and into the 2024-25 season,” Mayer said.

“NHL in ASL” will feature Jason Altmann, P-X-P’s chief operating officer who is third-generation Deaf, and Noah Blankenship, who currently works in the Office of Deaf and Hard of Hearing Services in the Agency for Human Rights and Community Partnerships under the city and county of Denver.

Altmann and Blankenship will provide ASL descriptions of major plays, like goals and hits, as well as referee calls and rule explanations to clarify decisions made on the ice.

Graphic visualizations will include a large, metered, real-time bar that demonstrates crowd noise levels to viewers, specifically around certain events like goals and penalties. Also included will be custom visual emotes to depict goals, penalties, the intensity of hits, and whether the puck hits the post/crossbar.

“The opportunity to do a Deaf-centric broadcast of a premier sporting event in ASL is a positive, seismic change for the Deaf community,” Altmann said. “As a sports fan growing up, I couldn’t relate with the broadcasters because some elements were not well captured with closed captioning. Now, we are creating an opportunity for Deaf sports fans and viewers to watch Deaf broadcasters and feel engaged through ASL.”

Brice Christianson, P-X-P’s founder and CEO, said “NHL in ASL” is a dream come true for the Deaf community who watch sports.

About 30 million Americans over age 12 have hearing loss in both ears and about two to three out of every 1,000 children in the U.S. are born with a detectable level of hearing loss in one or both ears, according to the National Institute on Deafness and Other Communication Disorders. There are an estimated 357,000 people in Canada who are culturally Deaf and 3.21 million who are hard of hearing, according to the Canadian Association for the Deaf.

“It gives the Deaf community the belief that accessibility and inclusion and representation is possible in professional sports,” Christianson said of the broadcast.

“Accessibility, inclusion and representation is something that the Deaf and hard of hearing community don’t get consistently, and what we’ve been doing with the NHL over time is building it.

“Now we’re excited to kick the door open and build off it.”

#nhl#nhl playoffs#edmonton oilers#florida panthers#deaf#sign language#ice hockey#i know i'm late posting this#and i saw another post about it floating around#but that post wasn't accessible#ironically#hopefully this one is a bit better#i worked hard on that alt text

5 notes


Text

Fitness Champion Mindi O'Brien Joins Engineered Racing Services at Motorama

FOR IMMEDIATE RELEASE- February 6, 2024

ENGINEERED RACING SERVICES proudly announces their “Get Jacked” campaign. We are teaming up with Mindi O’Brien, Canada’s First International Pro Fitness & Physique Champion.

Mindi O is Canada's top fitness personality and superstar. She has been active as a professional for almost 30 years. Prior to Mindi, no other Canadian competitor had ever won an IFBB professional fitness competition. Mindi has graced the stage more than 80 times.

Mindi is a professional athlete, mom, on-air sports entertainment talent, magazine cover girl, owner of MINDI O'S FITNESS, founder of the Mindi O Show and Niagara Falls Classic fitness competitions/bodybuilding competitions as well as a social media maven with over 1 million views on YouTube, Instagram (mindiobrien), Facebook and X.

Mindi likes to build better bodies, just as Engineered Racing Services (ERS) likes to build better vehicles. They have teamed up for the Get JACKED Campaign to promote ERS's extensive Truck/JEEP/SUV and Off-Road Vehicle product lines, available to Canadians everywhere.

Mindi will be on hand at the Motorama Custom Car & Motorsports Expo next month, signing autographs on Saturday, March 9 at the Engineered Racing Services Booth in Hall 2.

Visit www.engineeredracing.ca to view all the parts and accessories you need to get your vehicle “jacked” and be the envy of all your friends.

ERS is far more than just Racing & Performance


4 notes


Text

In the latest aid package, the US sends new missiles to Ukraine

By Fernando Valduga 06/01/2023 - 12:00 in Military, War Zones

The Biden administration announced its thirty-ninth drawdown of equipment for Ukraine, valued at up to $300 million, to help in the country's defense against Russia.

The new security assistance includes artillery, anti-armor capabilities and ammunition, including tens of millions of rounds of small arms ammunition.

Among the items included are additional munitions for Patriot air defense systems, AIM-7 missiles for air defense, Avenger air defense systems, Stinger anti-aircraft systems and additional ammunition for High Mobility Artillery Rocket Systems (HIMARS).

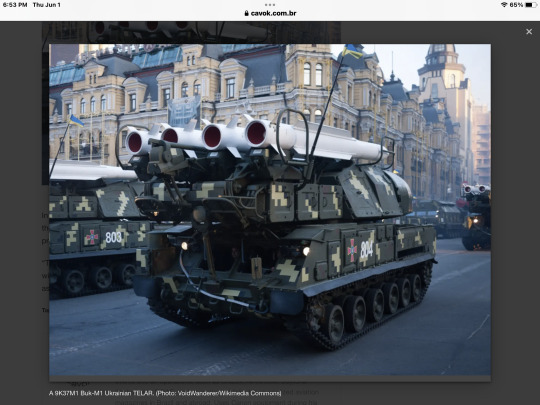

This is the first official confirmation of the transfer of the AIM-7 Sparrow (Air Intercept Missile) to Ukraine. Although the AIM-7 was originally designed as an air-to-air missile with semi-active radar guidance, it will be employed by Ukraine's Buk-M1 surface-to-air missile system, increasing its air defense capabilities. Developed by Raytheon, the first variants of the missile entered U.S. military service in the 1960s.

The package also includes 155 mm and 105 mm artillery rounds, 105 mm tank ammunition, precision aerial munitions, Zuni rockets and munitions for Unmanned Aerial Systems (UAS).

It further provides AT-4 anti-armor systems, more than 30 million rounds of small arms ammunition, mine-clearing equipment and systems, demolition munitions for obstacle clearing, night vision devices, spare parts, generators and other field equipment.

After President Biden's meeting with President Zelensky at the G7 Summit in Hiroshima, Japan, in May 2023, the United States announced its thirty-eighth drawdown of equipment for Ukraine.

A 9K37M1 Buk-M1 Ukrainian TELAR. (Photo: VoidWanderer/Wikimedia Commons)

In total, the U.S. has committed $38.3 billion in security assistance to Ukraine since the beginning of the Biden administration, which includes more than $37.6 billion provided since the start of Russia's invasion on February 24, 2022.

“The United States will continue to work with its allies and partners to provide Ukraine with resources to meet the immediate needs of the battlefield and long-term security assistance requirements,” the Department of Defense said.

Tags: armaments, Military Aviation, War Zones

Fernando Valduga

Aviation photographer and pilot since 1992, has participated in several events and air operations, such as Cruzex, AirVenture, Daytona Airshow and FIDAE. He has works published in specialized aviation magazines in Brazil and abroad. Uses Canon equipment during his photographic work around the world of aviation.


12 notes


Text

Auto-Pilot Error: Air Canada’s Chatbot Flub Shakes Up AI-Powered Customer Service

http://myattorneyisarobot.com/2024/03/15/auto-pilot-error-air-canadas-chatbot-flub-shakes-up-ai-powered-customer-service/

2 notes
