#learn apache spark

Explore tagged Tumblr posts

Text

What is PySpark? A Beginner’s Guide

Introduction

The digital era gives rise to continuous expansion in data production activities. Organizations and businesses need processing systems with enhanced capabilities to process large data amounts efficiently. Large datasets receive poor scalability together with slow processing speed and limited adaptability from conventional data processing tools. PySpark functions as the data processing solution that brings transformation to operations.

The Python Application Programming Interface called PySpark serves as the distributed computing framework of Apache Spark for fast processing of large data volumes. The platform offers a pleasant interface for users to operate analytics on big data together with real-time search and machine learning operations. Data engineering professionals along with analysts and scientists prefer PySpark because the platform combines Python's flexibility with Apache Spark's processing functions.

The guide introduces the essential aspects of PySpark while discussing its fundamental elements as well as explaining operational guidelines and hands-on usage. The article illustrates the operation of PySpark through concrete examples and predicted outputs to help viewers understand its functionality better.

What is PySpark?

PySpark is an interface that allows users to work with Apache Spark using Python. Apache Spark is a distributed computing framework that processes large datasets in parallel across multiple machines, making it extremely efficient for handling big data. PySpark enables users to leverage Spark’s capabilities while using Python’s simple and intuitive syntax.

There are several reasons why PySpark is widely used in the industry. First, it is highly scalable, meaning it can handle massive amounts of data efficiently by distributing the workload across multiple nodes in a cluster. Second, it is incredibly fast, as it performs in-memory computation, making it significantly faster than traditional Hadoop-based systems. Third, PySpark supports Python libraries such as Pandas, NumPy, and Scikit-learn, making it an excellent choice for machine learning and data analysis. Additionally, it is flexible, as it can run on Hadoop, Kubernetes, cloud platforms, or even as a standalone cluster.

Core Components of PySpark

PySpark consists of several core components that provide different functionalities for working with big data:

RDD (Resilient Distributed Dataset) – The fundamental unit of PySpark that enables distributed data processing. It is fault-tolerant and can be partitioned across multiple nodes for parallel execution.

DataFrame API – A more optimized and user-friendly way to work with structured data, similar to Pandas DataFrames.

Spark SQL – Allows users to query structured data using SQL syntax, making data analysis more intuitive.

Spark MLlib – A machine learning library that provides various ML algorithms for large-scale data processing.

Spark Streaming – Enables real-time data processing from sources like Kafka, Flume, and socket streams.

How PySpark Works

1. Creating a Spark Session

To interact with Spark, you need to start a Spark session.

Output:

2. Loading Data in PySpark

PySpark can read data from multiple formats, such as CSV, JSON, and Parquet.

Expected Output (Sample Data from CSV):

3. Performing Transformations

PySpark supports various transformations, such as filtering, grouping, and aggregating data. Here’s an example of filtering data based on a condition.

Output:

4. Running SQL Queries in PySpark

PySpark provides Spark SQL, which allows you to run SQL-like queries on DataFrames.

Output:

5. Creating a DataFrame Manually

You can also create a PySpark DataFrame manually using Python lists.

Output:

Use Cases of PySpark

PySpark is widely used in various domains due to its scalability and speed. Some of the most common applications include:

Big Data Analytics – Used in finance, healthcare, and e-commerce for analyzing massive datasets.

ETL Pipelines – Cleans and processes raw data before storing it in a data warehouse.

Machine Learning at Scale – Uses MLlib for training and deploying machine learning models on large datasets.

Real-Time Data Processing – Used in log monitoring, fraud detection, and predictive analytics.

Recommendation Systems – Helps platforms like Netflix and Amazon offer personalized recommendations to users.

Advantages of PySpark

There are several reasons why PySpark is a preferred tool for big data processing. First, it is easy to learn, as it uses Python’s simple and intuitive syntax. Second, it processes data faster due to its in-memory computation. Third, PySpark is fault-tolerant, meaning it can automatically recover from failures. Lastly, it is interoperable and can work with multiple big data platforms, cloud services, and databases.

Getting Started with PySpark

Installing PySpark

You can install PySpark using pip with the following command:

To use PySpark in a Jupyter Notebook, install Jupyter as well:

To start PySpark in a Jupyter Notebook, create a Spark session:

Conclusion

PySpark is an incredibly powerful tool for handling big data analytics, machine learning, and real-time processing. It offers scalability, speed, and flexibility, making it a top choice for data engineers and data scientists. Whether you're working with structured data, large-scale machine learning models, or real-time data streams, PySpark provides an efficient solution.

With its integration with Python libraries and support for distributed computing, PySpark is widely used in modern big data applications. If you’re looking to process massive datasets efficiently, learning PySpark is a great step forward.

youtube

#pyspark training#pyspark coutse#apache spark training#apahe spark certification#spark course#learn apache spark#apache spark course#pyspark certification#hadoop spark certification .#Youtube

0 notes

Text

SQL Course Training: Advancing Your Database Skills

In the realm of data analysis and management, SQL (Structured Query Language) stands as a foundational skill indispensable for professionals seeking to navigate and manipulate databases effectively. As the demand for data-driven insights continues to soar, honing your SQL proficiency through targeted training can significantly enhance your capabilities in data analysis and open doors to diverse career opportunities. Let's explore the significance of SQL course training and how it can advance your database skills.

Understanding the Importance of SQL in Data Analysis:

SQL serves as the universal language for communicating with relational databases, enabling users to retrieve, manipulate, and manage data efficiently. Whether you're a data analyst, data scientist, or database administrator, mastering SQL empowers you to extract valuable insights, perform complex queries, and optimize database performance. With its widespread adoption across industries, SQL proficiency has become a prerequisite for roles involving data analysis and database management.

Key Components of SQL Course Training:

SQL course training encompasses a range of topics tailored to equip learners with comprehensive database management skills. From basic SQL syntax to advanced query optimization techniques, these courses cover essential concepts and best practices for leveraging SQL effectively. Key components of SQL course training include:

- SQL Fundamentals: Understanding basic SQL commands, data types, and database objects.

- Querying Databases: Crafting SELECT statements to retrieve data from tables and apply filtering, sorting, and aggregation.

- Data Manipulation: Performing INSERT, UPDATE, DELETE operations to modify data within tables.

- Database Design: Understanding principles of database normalization, table relationships, and entity-relationship modeling.

- Advanced SQL Topics: Exploring advanced SQL features such as joins, subqueries, stored procedures, and triggers.

- Optimization and Performance Tuning: Techniques for optimizing SQL queries, indexing strategies, and enhancing database performance.

Choosing the Best SQL Course:

When selecting a SQL course online, it's essential to consider factors such as:

- Curriculum: Ensure the course covers a comprehensive range of SQL topics, from fundamentals to advanced concepts.

- Hands-On Practice: Look for courses that offer hands-on exercises and projects to reinforce learning and practical application.

- Instructor Expertise: Choose courses led by experienced SQL professionals with a track record of delivering high-quality instruction.

- Student Reviews: Assess feedback from past learners to gauge the course's effectiveness and relevance to your learning goals.

- Certification: Some SQL courses offer certification upon completion, which can validate your skills and enhance your credentials in the job market.

Integrating SQL with Data Analysis:

SQL proficiency synergizes seamlessly with data analysis tasks, enabling analysts to extract, transform, and analyze data stored in relational databases. Whether you're performing ad-hoc analysis, generating reports, or building data pipelines, SQL serves as a powerful tool for accessing and manipulating data effectively. By mastering SQL alongside data analysis skills and tools such as Python and Apache Spark, you can enhance your capabilities as a data professional and tackle complex analytical challenges with confidence.

Conclusion:

Investing in SQL course training is a strategic step towards mastering database management skills and advancing your career in data analysis. Whether you're a novice seeking to build a solid foundation in SQL or an experienced professional aiming to sharpen your expertise, there are ample opportunities to enhance your database skills through online SQL courses. By selecting the best SQL course that aligns with your learning objectives and investing time and effort into mastering SQL concepts, you can unlock new possibilities in data analysis and become a proficient database practitioner poised for success in today's data-driven world.

#apache spark course#data analysis#data analysis skill#python course#best python course#data analysis course#data analysis course online#master data analysis#python course online#sql course#sql course online#best sql course#data analysis online#python course training#sql course training#apache spark course online#best apache spark course#learn python#learn apache spark#learn data analysis#learn sql

1 note

·

View note

Text

Are you looking to build a career in Big Data Analytics? Gain in-depth knowledge of Hadoop and its ecosystem with expert-led training at Sunbeam Institute, Pune – a trusted name in IT education.

Why Choose Our Big Data Hadoop Classes?

🔹 Comprehensive Curriculum: Covering Hadoop, HDFS, MapReduce, Apache Spark, Hive, Pig, HBase, Sqoop, Flume, and more. 🔹 Hands-on Training: Work on real-world projects and industry use cases to gain practical experience. 🔹 Expert Faculty: Learn from experienced professionals with real-time industry exposure. 🔹 Placement Assistance: Get career guidance, resume building support, and interview preparation. 🔹 Flexible Learning Modes: Classroom and online training options available. 🔹 Industry-Recognized Certification: Boost your resume with a professional certification.

Who Should Join?

✔️ Freshers and IT professionals looking to enter the field of Big Data & Analytics ✔️ Software developers, system administrators, and data engineers ✔️ Business intelligence professionals and database administrators ✔️ Anyone passionate about Big Data and Machine Learning

#Big Data Hadoop training in Pune#Hadoop classes Pune#Big Data course Pune#Hadoop certification Pune#learn Hadoop in Pune#Apache Spark training Pune#best Big Data course Pune#Hadoop coaching in Pune#Big Data Analytics training Pune#Hadoop and Spark training Pune

0 notes

Text

Unveiling the Power of Delta Lake in Microsoft Fabric

Discover how Microsoft Fabric and Delta Lake can revolutionize your data management and analytics. Learn to optimize data ingestion with Spark and unlock the full potential of your data for smarter decision-making.

In today’s digital era, data is the new gold. Companies are constantly searching for ways to efficiently manage and analyze vast amounts of information to drive decision-making and innovation. However, with the growing volume and variety of data, traditional data processing methods often fall short. This is where Microsoft Fabric, Apache Spark and Delta Lake come into play. These powerful…

#ACID Transactions#Apache Spark#Big Data#Data Analytics#data engineering#Data Governance#Data Ingestion#Data Integration#Data Lakehouse#Data management#Data Pipelines#Data Processing#Data Science#Data Warehousing#Delta Lake#machine learning#Microsoft Fabric#Real-Time Analytics#Unified Data Platform

0 notes

Text

#learn#free#resource#python#r#postgresql#chatgpt#tableau#power bi#excel#scala#apache spark#shell#git#oracle#programming#software

0 notes

Text

Why Python Will Thrive: Future Trends and Applications

Python has already made a significant impact in the tech world, and its trajectory for the future is even more promising. From its simplicity and versatility to its widespread use in cutting-edge technologies, Python is expected to continue thriving in the coming years. Considering the kind support of Python Course in Chennai Whatever your level of experience or reason for switching from another programming language, learning Python gets much more fun.

Let's explore why Python will remain at the forefront of software development and what trends and applications will contribute to its ongoing dominance.

1. Artificial Intelligence and Machine Learning

Python is already the go-to language for AI and machine learning, and its role in these fields is set to expand further. With powerful libraries such as TensorFlow, PyTorch, and Scikit-learn, Python simplifies the development of machine learning models and artificial intelligence applications. As more industries integrate AI for automation, personalization, and predictive analytics, Python will remain a core language for developing intelligent systems.

2. Data Science and Big Data

Data science is one of the most significant areas where Python has excelled. Libraries like Pandas, NumPy, and Matplotlib make data manipulation and visualization simple and efficient. As companies and organizations continue to generate and analyze vast amounts of data, Python’s ability to process, clean, and visualize big data will only become more critical. Additionally, Python’s compatibility with big data platforms like Hadoop and Apache Spark ensures that it will remain a major player in data-driven decision-making.

3. Web Development

Python’s role in web development is growing thanks to frameworks like Django and Flask, which provide robust, scalable, and secure solutions for building web applications. With the increasing demand for interactive websites and APIs, Python is well-positioned to continue serving as a top language for backend development. Its integration with cloud computing platforms will also fuel its growth in building modern web applications that scale efficiently.

4. Automation and Scripting

Automation is another area where Python excels. Developers use Python to automate tasks ranging from system administration to testing and deployment. With the rise of DevOps practices and the growing demand for workflow automation, Python’s role in streamlining repetitive processes will continue to grow. Businesses across industries will rely on Python to boost productivity, reduce errors, and optimize performance. With the aid of Best Online Training & Placement Programs, which offer comprehensive training and job placement support to anyone looking to develop their talents, it’s easier to learn this tool and advance your career.

5. Cybersecurity and Ethical Hacking

With cyber threats becoming increasingly sophisticated, cybersecurity is a critical concern for businesses worldwide. Python is widely used for penetration testing, vulnerability scanning, and threat detection due to its simplicity and effectiveness. Libraries like Scapy and PyCrypto make Python an excellent choice for ethical hacking and security professionals. As the need for robust cybersecurity measures increases, Python’s role in safeguarding digital assets will continue to thrive.

6. Internet of Things (IoT)

Python’s compatibility with microcontrollers and embedded systems makes it a strong contender in the growing field of IoT. Frameworks like MicroPython and CircuitPython enable developers to build IoT applications efficiently, whether for home automation, smart cities, or industrial systems. As the number of connected devices continues to rise, Python will remain a dominant language for creating scalable and reliable IoT solutions.

7. Cloud Computing and Serverless Architectures

The rise of cloud computing and serverless architectures has created new opportunities for Python. Cloud platforms like AWS, Google Cloud, and Microsoft Azure all support Python, allowing developers to build scalable and cost-efficient applications. With its flexibility and integration capabilities, Python is perfectly suited for developing cloud-based applications, serverless functions, and microservices.

8. Gaming and Virtual Reality

Python has long been used in game development, with libraries such as Pygame offering simple tools to create 2D games. However, as gaming and virtual reality (VR) technologies evolve, Python’s role in developing immersive experiences will grow. The language’s ease of use and integration with game engines will make it a popular choice for building gaming platforms, VR applications, and simulations.

9. Expanding Job Market

As Python’s applications continue to grow, so does the demand for Python developers. From startups to tech giants like Google, Facebook, and Amazon, companies across industries are seeking professionals who are proficient in Python. The increasing adoption of Python in various fields, including data science, AI, cybersecurity, and cloud computing, ensures a thriving job market for Python developers in the future.

10. Constant Evolution and Community Support

Python’s open-source nature means that it’s constantly evolving with new libraries, frameworks, and features. Its vibrant community of developers contributes to its growth and ensures that Python stays relevant to emerging trends and technologies. Whether it’s a new tool for AI or a breakthrough in web development, Python’s community is always working to improve the language and make it more efficient for developers.

Conclusion

Python’s future is bright, with its presence continuing to grow in AI, data science, automation, web development, and beyond. As industries become increasingly data-driven, automated, and connected, Python’s simplicity, versatility, and strong community support make it an ideal choice for developers. Whether you are a beginner looking to start your coding journey or a seasoned professional exploring new career opportunities, learning Python offers long-term benefits in a rapidly evolving tech landscape.

#python course#python training#python#technology#tech#python programming#python online training#python online course#python online classes#python certification

2 notes

·

View notes

Text

Master Big Data with a Comprehensive Databricks Course

A Databricks Course is the perfect way to master big data analytics and Apache Spark. Whether you are a beginner or an experienced professional, this course helps you build expertise in data engineering, AI-driven analytics, and cloud-based collaboration. You will learn how to work with Spark SQL, Delta Lake, and MLflow to process large datasets and create smart data solutions.

This Databricks Course provides hands-on training with real-world projects, allowing you to apply your knowledge effectively. Learn from industry experts who will guide you through data transformation, real-time streaming, and optimizing data workflows. The course also covers managing both structured and unstructured data, helping you make better data-driven decisions.

By enrolling in this Databricks Course, you will gain valuable skills that are highly sought after in the tech industry. Engage with specialists and improve your ability to handle big data analytics at scale. Whether you want to advance your career or stay ahead in the fast-growing data industry, this course equips you with the right tools.

🚀 Enroll now and start your journey toward mastering big data analytics with Databricks!

2 notes

·

View notes

Text

NVIDIA AI Workflows Detect False Credit Card Transactions

A Novel AI Workflow from NVIDIA Identifies False Credit Card Transactions.

The process, which is powered by the NVIDIA AI platform on AWS, may reduce risk and save money for financial services companies.

By 2026, global credit card transaction fraud is predicted to cause $43 billion in damages.

Using rapid data processing and sophisticated algorithms, a new fraud detection NVIDIA AI workflows on Amazon Web Services (AWS) will assist fight this growing pandemic by enhancing AI’s capacity to identify and stop credit card transaction fraud.

In contrast to conventional techniques, the process, which was introduced this week at the Money20/20 fintech conference, helps financial institutions spot minute trends and irregularities in transaction data by analyzing user behavior. This increases accuracy and lowers false positives.

Users may use the NVIDIA AI Enterprise software platform and NVIDIA GPU instances to expedite the transition of their fraud detection operations from conventional computation to accelerated compute.

Companies that use complete machine learning tools and methods may see an estimated 40% increase in the accuracy of fraud detection, which will help them find and stop criminals more quickly and lessen damage.

As a result, top financial institutions like Capital One and American Express have started using AI to develop exclusive solutions that improve client safety and reduce fraud.

With the help of NVIDIA AI, the new NVIDIA workflow speeds up data processing, model training, and inference while showcasing how these elements can be combined into a single, user-friendly software package.

The procedure, which is now geared for credit card transaction fraud, might be modified for use cases including money laundering, account takeover, and new account fraud.

Enhanced Processing for Fraud Identification

It is more crucial than ever for businesses in all sectors, including financial services, to use computational capacity that is economical and energy-efficient as AI models grow in complexity, size, and variety.

Conventional data science pipelines don’t have the compute acceleration needed to process the enormous amounts of data needed to combat fraud in the face of the industry’s continually increasing losses. Payment organizations may be able to save money and time on data processing by using NVIDIA RAPIDS Accelerator for Apache Spark.

Financial institutions are using NVIDIA’s AI and accelerated computing solutions to effectively handle massive datasets and provide real-time AI performance with intricate AI models.

The industry standard for detecting fraud has long been the use of gradient-boosted decision trees, a kind of machine learning technique that uses libraries like XGBoost.

Utilizing the NVIDIA RAPIDS suite of AI libraries, the new NVIDIA AI workflows for fraud detection improves XGBoost by adding graph neural network (GNN) embeddings as extra features to assist lower false positives.

In order to generate and train a model that can be coordinated with the NVIDIA Triton Inference Server and the NVIDIA Morpheus Runtime Core library for real-time inferencing, the GNN embeddings are fed into XGBoost.

All incoming data is safely inspected and categorized by the NVIDIA Morpheus framework, which also flags potentially suspicious behavior and tags it with patterns. The NVIDIA Triton Inference Server optimizes throughput, latency, and utilization while making it easier to infer all kinds of AI model deployments in production.

NVIDIA AI Enterprise provides Morpheus, RAPIDS, and Triton Inference Server.

Leading Financial Services Companies Use AI

AI is assisting in the fight against the growing trend of online or mobile fraud losses, which are being reported by several major financial institutions in North America.

American Express started using artificial intelligence (AI) to combat fraud in 2010. The company uses fraud detection algorithms to track all client transactions worldwide in real time, producing fraud determinations in a matter of milliseconds. American Express improved model accuracy by using a variety of sophisticated algorithms, one of which used the NVIDIA AI platform, therefore strengthening the organization’s capacity to combat fraud.

Large language models and generative AI are used by the European digital bank Bunq to assist in the detection of fraud and money laundering. With NVIDIA accelerated processing, its AI-powered transaction-monitoring system was able to train models at over 100 times quicker rates.

In March, BNY said that it was the first big bank to implement an NVIDIA DGX SuperPOD with DGX H100 systems. This would aid in the development of solutions that enable use cases such as fraud detection.

In order to improve their financial services apps and help protect their clients’ funds, identities, and digital accounts, systems integrators, software suppliers, and cloud service providers may now include the new NVIDIA AI workflows for fraud detection. NVIDIA Technical Blog post on enhancing fraud detection with GNNs and investigate the NVIDIA AI workflows for fraud detection.

Read more on Govindhtech.com

#NVIDIAAI#AWS#FraudDetection#AI#GenerativeAI#LLM#AImodels#News#Technews#Technology#Technologytrends#govindhtech#Technologynews

2 notes

·

View notes

Text

From Curious Novice to Data Enthusiast: My Data Science Adventure

I've always been fascinated by data science, a field that seamlessly blends technology, mathematics, and curiosity. In this article, I want to take you on a journey—my journey—from being a curious novice to becoming a passionate data enthusiast. Together, let's explore the thrilling world of data science, and I'll share the steps I took to immerse myself in this captivating realm of knowledge.

The Spark: Discovering the Potential of Data Science

The moment I stumbled upon data science, I felt a spark of inspiration. Witnessing its impact across various industries, from healthcare and finance to marketing and entertainment, I couldn't help but be drawn to this innovative field. The ability to extract critical insights from vast amounts of data and uncover meaningful patterns fascinated me, prompting me to dive deeper into the world of data science.

Laying the Foundation: The Importance of Learning the Basics

To embark on this data science adventure, I quickly realized the importance of building a strong foundation. Learning the basics of statistics, programming, and mathematics became my priority. Understanding statistical concepts and techniques enabled me to make sense of data distributions, correlations, and significance levels. Programming languages like Python and R became essential tools for data manipulation, analysis, and visualization, while a solid grasp of mathematical principles empowered me to create and evaluate predictive models.

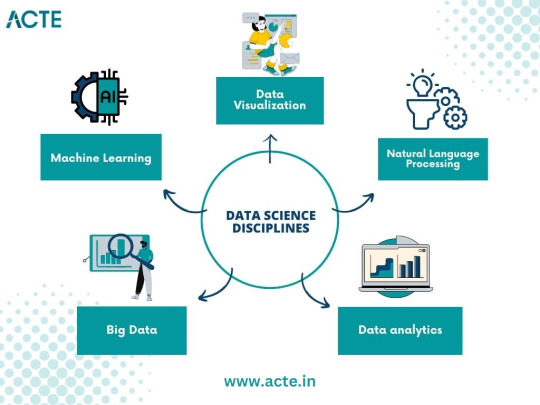

The Quest for Knowledge: Exploring Various Data Science Disciplines

A. Machine Learning: Unraveling the Power of Predictive Models

Machine learning, a prominent discipline within data science, captivated me with its ability to unlock the potential of predictive models. I delved into the fundamentals, understanding the underlying algorithms that power these models. Supervised learning, where data with labels is used to train prediction models, and unsupervised learning, which uncovers hidden patterns within unlabeled data, intrigued me. Exploring concepts like regression, classification, clustering, and dimensionality reduction deepened my understanding of this powerful field.

B. Data Visualization: Telling Stories with Data

In my data science journey, I discovered the importance of effectively visualizing data to convey meaningful stories. Navigating through various visualization tools and techniques, such as creating dynamic charts, interactive dashboards, and compelling infographics, allowed me to unlock the hidden narratives within datasets. Visualizations became a medium to communicate complex ideas succinctly, enabling stakeholders to understand insights effortlessly.

C. Big Data: Mastering the Analysis of Vast Amounts of Information

The advent of big data challenged traditional data analysis approaches. To conquer this challenge, I dived into the world of big data, understanding its nuances and exploring techniques for efficient analysis. Uncovering the intricacies of distributed systems, parallel processing, and data storage frameworks empowered me to handle massive volumes of information effectively. With tools like Apache Hadoop and Spark, I was able to mine valuable insights from colossal datasets.

D. Natural Language Processing: Extracting Insights from Textual Data

Textual data surrounds us in the digital age, and the realm of natural language processing fascinated me. I delved into techniques for processing and analyzing unstructured text data, uncovering insights from tweets, customer reviews, news articles, and more. Understanding concepts like sentiment analysis, topic modeling, and named entity recognition allowed me to extract valuable information from written text, revolutionizing industries like sentiment analysis, customer service, and content recommendation systems.

Building the Arsenal: Acquiring Data Science Skills and Tools

Acquiring essential skills and familiarizing myself with relevant tools played a crucial role in my data science journey. Programming languages like Python and R became my companions, enabling me to manipulate, analyze, and model data efficiently. Additionally, I explored popular data science libraries and frameworks such as TensorFlow, Scikit-learn, Pandas, and NumPy, which expedited the development and deployment of machine learning models. The arsenal of skills and tools I accumulated became my assets in the quest for data-driven insights.

The Real-World Challenge: Applying Data Science in Practice

Data science is not just an academic pursuit but rather a practical discipline aimed at solving real-world problems. Throughout my journey, I sought to identify such problems and apply data science methodologies to provide practical solutions. From predicting customer churn to optimizing supply chain logistics, the application of data science proved transformative in various domains. Sharing success stories of leveraging data science in practice inspires others to realize the power of this field.

Cultivating Curiosity: Continuous Learning and Skill Enhancement

Embracing a growth mindset is paramount in the world of data science. The field is rapidly evolving, with new algorithms, techniques, and tools emerging frequently. To stay ahead, it is essential to cultivate curiosity and foster a continuous learning mindset. Keeping abreast of the latest research papers, attending data science conferences, and engaging in data science courses nurtures personal and professional growth. The journey to becoming a data enthusiast is a lifelong pursuit.

Joining the Community: Networking and Collaboration

Being part of the data science community is a catalyst for growth and inspiration. Engaging with like-minded individuals, sharing knowledge, and collaborating on projects enhances the learning experience. Joining online forums, participating in Kaggle competitions, and attending meetups provides opportunities to exchange ideas, solve challenges collectively, and foster invaluable connections within the data science community.

Overcoming Obstacles: Dealing with Common Data Science Challenges

Data science, like any discipline, presents its own set of challenges. From data cleaning and preprocessing to model selection and evaluation, obstacles arise at each stage of the data science pipeline. Strategies and tips to overcome these challenges, such as building reliable pipelines, conducting robust experiments, and leveraging cross-validation techniques, are indispensable in maintaining motivation and achieving success in the data science journey.

Balancing Act: Building a Career in Data Science alongside Other Commitments

For many aspiring data scientists, the pursuit of knowledge and skills must coexist with other commitments, such as full-time jobs and personal responsibilities. Effectively managing time and developing a structured learning plan is crucial in striking a balance. Tips such as identifying pockets of dedicated learning time, breaking down complex concepts into manageable chunks, and seeking mentorships or online communities can empower individuals to navigate the data science journey while juggling other responsibilities.

Ethical Considerations: Navigating the World of Data Responsibly

As data scientists, we must navigate the world of data responsibly, being mindful of the ethical considerations inherent in this field. Safeguarding privacy, addressing bias in algorithms, and ensuring transparency in data-driven decision-making are critical principles. Exploring topics such as algorithmic fairness, data anonymization techniques, and the societal impact of data science encourages responsible and ethical practices in a rapidly evolving digital landscape.

Embarking on a data science adventure from a curious novice to a passionate data enthusiast is an exhilarating and rewarding journey. By laying a foundation of knowledge, exploring various data science disciplines, acquiring essential skills and tools, and engaging in continuous learning, one can conquer challenges, build a successful career, and have a good influence on the data science community. It's a journey that never truly ends, as data continues to evolve and offer exciting opportunities for discovery and innovation. So, join me in your data science adventure, and let the exploration begin!

#data science#data analytics#data visualization#big data#machine learning#artificial intelligence#education#information

17 notes

·

View notes

Text

Can I use Python for big data analysis?

Yes, Python is a powerful tool for big data analysis. Here’s how Python handles large-scale data analysis:

Libraries for Big Data:

Pandas:

While primarily designed for smaller datasets, Pandas can handle larger datasets efficiently when used with tools like Dask or by optimizing memory usage..

NumPy:

Provides support for large, multi-dimensional arrays and matrices, along with a collection of mathematical functions to operate on these arrays.

Dask:

A parallel computing library that extends Pandas and NumPy to larger datasets. It allows you to scale Python code from a single machine to a distributed cluster

Distributed Computing:

PySpark:

The Python API for Apache Spark, which is designed for large-scale data processing. PySpark can handle big data by distributing tasks across a cluster of machines, making it suitable for large datasets and complex computations.

Dask:

Also provides distributed computing capabilities, allowing you to perform parallel computations on large datasets across multiple cores or nodes.

Data Storage and Access:

HDF5:

A file format and set of tools for managing complex data. Python’s h5py library provides an interface to read and write HDF5 files, which are suitable for large datasets.

Databases:

Python can interface with various big data databases like Apache Cassandra, MongoDB, and SQL-based systems. Libraries such as SQLAlchemy facilitate connections to relational databases.

Data Visualization:

Matplotlib, Seaborn, and Plotly: These libraries allow you to create visualizations of large datasets, though for extremely large datasets, tools designed for distributed environments might be more appropriate.

Machine Learning:

Scikit-learn:

While not specifically designed for big data, Scikit-learn can be used with tools like Dask to handle larger datasets.

TensorFlow and PyTorch:

These frameworks support large-scale machine learning and can be integrated with big data processing tools for training and deploying models on large datasets.

Python’s ecosystem includes a variety of tools and libraries that make it well-suited for big data analysis, providing flexibility and scalability to handle large volumes of data.

Drop the message to learn more….!

2 notes

·

View notes

Text

Are you looking to build a career in Big Data Analytics? Gain in-depth knowledge of Hadoop and its ecosystem with expert-led training at Sunbeam Institute, Pune – a trusted name in IT education.

Why Choose Our Big Data Hadoop Classes?

🔹 Comprehensive Curriculum: Covering Hadoop, HDFS, MapReduce, Apache Spark, Hive, Pig, HBase, Sqoop, Flume, and more. 🔹 Hands-on Training: Work on real-world projects and industry use cases to gain practical experience. 🔹 Expert Faculty: Learn from experienced professionals with real-time industry exposure. 🔹 Placement Assistance: Get career guidance, resume building support, and interview preparation. 🔹 Flexible Learning Modes: Classroom and online training options available. 🔹 Industry-Recognized Certification: Boost your resume with a professional certification.

Who Should Join?

✔️ Freshers and IT professionals looking to enter the field of Big Data & Analytics ✔️ Software developers, system administrators, and data engineers ✔️ Business intelligence professionals and database administrators ✔️ Anyone passionate about Big Data and Machine Learning

Course Highlights:

✅ Introduction to Big Data & Hadoop Framework ✅ HDFS (Hadoop Distributed File System) – Storage & Processing ✅ MapReduce Programming – Core of Hadoop Processing ✅ Apache Spark – Fast and Unified Analytics Engine ✅ Hive, Pig, HBase – Data Querying & Management ✅ Data Ingestion Tools – Sqoop & Flume ✅ Real-time Project Implementation

#Big Data Hadoop training in Pune#Hadoop classes Pune#Big Data course Pune#Hadoop certification Pune#learn Hadoop in Pune#Apache Spark training Pune#best Big Data course Pune#Hadoop coaching in Pune#Big Data Analytics training Pune#Hadoop and Spark training Pune

0 notes

Text

Sadly, she doesn’t speak Scots

When it comes to minority language speaker representation in the X-Men stories, it seems like there’s almost so few, if any, characters who speak a minority language that they’re underrpresented in a way redheads aren’t, which is saying when it comes to characters like Jean Grey, Rachel Summers, Cessily Kincaid, Theresa and Sean Cassidy, Shatterstar, Mystique, Firestar, Sapphire Styx, Hope Summers and even Alison Blaire at some point, if you consider strawberry blond to be a shade of red. Within the X-Men stories, there’s practically only one minority language speaker and that’s Kate Pryde whenever she does speak a word in Yiddish at all.

It’s already pretty disproportionate that within the world of the X-Men stories there are more redheads than there are minority language speakers, you might say I’m generalising things and that some languages are harder to learn. But I personally believe the latter belief undermines any sincere efforts at revitalising a dying tongue, especially if it’s been endangered for so long and the odd possibility that somebody else would learn such a language with ease and effort. That’s from my experience bothering to learn some Scottish Gaelic, with my father finding that language difficult or something. But the thing here is that even if some languages are tricky to learn, there will always be somebody eager to learn it anyways.

But it seems almost none of the X-Men writers are minority language speakers, none of them are eager to learn a minority language which undermines efforts at using mutants as a metaphor for ethnic discrimination. Especially when almost none of the mutants themselves speak a minority language that they’re going to be really underrepresented in a way redheads aren’t, that it must be a pretty sad situation where we could’ve gotten minority language speaker representation with more mutants onboard. Rahne Sinclair could’ve been a Gaelic speaker, but I could settle for her speaking in Scots. Sadly neither language gets represented in any of the X-Men stories.

It would’ve been nice to see more mutants speaking in languages like Scots, Frisian, Scottish Gaelic, Comanche, Cheyenne, Lakota and Apache, but I suppose that’s going to take more effort and actual enthusiasm for any minority language to have it take off big time. Like I said before it’s kind of unfortunate that there’s not a lot of minority language speaker representation in the X-Men stories, which is ironic because X-Men writers love pushing the minority angle yet have no real interest in or experience with minority languages themselves. That only makes it worse really.

We could have characters sparking people’s interest in learning a minority language, like what Scottish and Irish folk music did for me before. Some characters like Rahne Sinclair again could be reimagined as speaking in a minority language like Scots, but it’s the road that’s barely ever taken by both X-Men writers and X-Men fans. X-Men writers want to advocate for minorities, but when it comes to other aspects of the ethnic minority experience language is one of the things they don’t do much so far. Even if it could help revitalise a language, so it wouldn’t hurt if she spoke Scottish Gaelic for instance. Or for another matter, have Pixie speak Welsh.

Or even Theresa and Sean speaking Irish, but it doesn’t just have to be Celtic language speakers that need representation.

#scottish gaelic#gaelic#irish#irish language#theresa cassidy#rahne sinclair#endangered languages#minority languages#marvel#marvel comics#x-men

6 notes

·

View notes

Text

From Math to Machine Learning: A Comprehensive Blueprint for Aspiring Data Scientists

The realm of data science is vast and dynamic, offering a plethora of opportunities for those willing to dive into the world of numbers, algorithms, and insights. If you're new to data science and unsure where to start, fear not! This step-by-step guide will navigate you through the foundational concepts and essential skills to kickstart your journey in this exciting field. Choosing the Best Data Science Institute can further accelerate your journey into this thriving industry.

1. Establish a Strong Foundation in Mathematics and Statistics

Before delving into the specifics of data science, ensure you have a robust foundation in mathematics and statistics. Brush up on concepts like algebra, calculus, probability, and statistical inference. Online platforms such as Khan Academy and Coursera offer excellent resources for reinforcing these fundamental skills.

2. Learn Programming Languages

Data science is synonymous with coding. Choose a programming language – Python and R are popular choices – and become proficient in it. Platforms like Codecademy, DataCamp, and W3Schools provide interactive courses to help you get started on your coding journey.

3. Grasp the Basics of Data Manipulation and Analysis

Understanding how to work with data is at the core of data science. Familiarize yourself with libraries like Pandas in Python or data frames in R. Learn about data structures, and explore techniques for cleaning and preprocessing data. Utilize real-world datasets from platforms like Kaggle for hands-on practice.

4. Dive into Data Visualization

Data visualization is a powerful tool for conveying insights. Learn how to create compelling visualizations using tools like Matplotlib and Seaborn in Python, or ggplot2 in R. Effectively communicating data findings is a crucial aspect of a data scientist's role.

5. Explore Machine Learning Fundamentals

Begin your journey into machine learning by understanding the basics. Grasp concepts like supervised and unsupervised learning, classification, regression, and key algorithms such as linear regression and decision trees. Platforms like scikit-learn in Python offer practical, hands-on experience.

6. Delve into Big Data Technologies

As data scales, so does the need for technologies that can handle large datasets. Familiarize yourself with big data technologies, particularly Apache Hadoop and Apache Spark. Platforms like Cloudera and Databricks provide tutorials suitable for beginners.

7. Enroll in Online Courses and Specializations

Structured learning paths are invaluable for beginners. Enroll in online courses and specializations tailored for data science novices. Platforms like Coursera ("Data Science and Machine Learning Bootcamp with R/Python") and edX ("Introduction to Data Science") offer comprehensive learning opportunities.

8. Build Practical Projects

Apply your newfound knowledge by working on practical projects. Analyze datasets, implement machine learning models, and solve real-world problems. Platforms like Kaggle provide a collaborative space for participating in data science competitions and showcasing your skills to the community.

9. Join Data Science Communities

Engaging with the data science community is a key aspect of your learning journey. Participate in discussions on platforms like Stack Overflow, explore communities on Reddit (r/datascience), and connect with professionals on LinkedIn. Networking can provide valuable insights and support.

10. Continuous Learning and Specialization

Data science is a field that evolves rapidly. Embrace continuous learning and explore specialized areas based on your interests. Dive into natural language processing, computer vision, or reinforcement learning as you progress and discover your passion within the broader data science landscape.

Remember, your journey in data science is a continuous process of learning, application, and growth. Seek guidance from online forums, contribute to discussions, and build a portfolio that showcases your projects. Choosing the best Data Science Courses in Chennai is a crucial step in acquiring the necessary expertise for a successful career in the evolving landscape of data science. With dedication and a systematic approach, you'll find yourself progressing steadily in the fascinating world of data science. Good luck on your journey!

3 notes

·

View notes

Text

Navigating the Data Landscape: A Deep Dive into ScholarNest's Corporate Training

In the ever-evolving realm of data, mastering the intricacies of data engineering and PySpark is paramount for professionals seeking a competitive edge. ScholarNest's Corporate Training offers an immersive experience, providing a deep dive into the dynamic world of data engineering and PySpark.

Unlocking Data Engineering Excellence

Embark on a journey to become a proficient data engineer with ScholarNest's specialized courses. Our Data Engineering Certification program is meticulously crafted to equip you with the skills needed to design, build, and maintain scalable data systems. From understanding data architecture to implementing robust solutions, our curriculum covers the entire spectrum of data engineering.

Pioneering PySpark Proficiency

Navigate the complexities of data processing with PySpark, a powerful Apache Spark library. ScholarNest's PySpark course, hailed as one of the best online, caters to both beginners and advanced learners. Explore the full potential of PySpark through hands-on projects, gaining practical insights that can be applied directly in real-world scenarios.

Azure Databricks Mastery

As part of our commitment to offering the best, our courses delve into Azure Databricks learning. Azure Databricks, seamlessly integrated with Azure services, is a pivotal tool in the modern data landscape. ScholarNest ensures that you not only understand its functionalities but also leverage it effectively to solve complex data challenges.

Tailored for Corporate Success

ScholarNest's Corporate Training goes beyond generic courses. We tailor our programs to meet the specific needs of corporate environments, ensuring that the skills acquired align with industry demands. Whether you are aiming for data engineering excellence or mastering PySpark, our courses provide a roadmap for success.

Why Choose ScholarNest?

Best PySpark Course Online: Our PySpark courses are recognized for their quality and depth.

Expert Instructors: Learn from industry professionals with hands-on experience.

Comprehensive Curriculum: Covering everything from fundamentals to advanced techniques.

Real-world Application: Practical projects and case studies for hands-on experience.

Flexibility: Choose courses that suit your level, from beginner to advanced.

Navigate the data landscape with confidence through ScholarNest's Corporate Training. Enrol now to embark on a learning journey that not only enhances your skills but also propels your career forward in the rapidly evolving field of data engineering and PySpark.

#data engineering#pyspark#databricks#azure data engineer training#apache spark#databricks cloud#big data#dataanalytics#data engineer#pyspark course#databricks course training#pyspark training

3 notes

·

View notes

Text

Unleashing the Power of Big Data Analytics: Mastering the Course of Success

In today's digital age, data has become the lifeblood of successful organizations. The ability to collect, analyze, and interpret vast amounts of data has revolutionized business operations and decision-making processes. Here is where big data analytics could truly excel. By harnessing the potential of data analytics, businesses can gain valuable insights that can guide them on a path to success. However, to truly unleash this power, it is essential to have a solid understanding of data analytics and its various types of courses. In this article, we will explore the different types of data analytics courses available and how they can help individuals and businesses navigate the complex world of big data.

Education: The Gateway to Becoming a Data Analytics Expert

Before delving into the different types of data analytics courses, it is crucial to highlight the significance of education in this field. Data analytics is an intricate discipline that requires a solid foundation of knowledge and skills. While practical experience is valuable, formal education in data analytics serves as the gateway to becoming an expert in the field. By enrolling in relevant courses, individuals can gain a comprehensive understanding of the theories, methodologies, and tools used in data analytics.

Data Analytics Courses Types: Navigating the Expansive Landscape

When it comes to data analytics courses, there is a wide range of options available, catering to individuals with varying levels of expertise and interests. Let's explore some of the most popular types of data analytics courses:

1. Introduction to Data Analytics

This course serves as a perfect starting point for beginners who want to dip their toes into the world of data analytics. The course covers the fundamental concepts, techniques, and tools used in data analytics. It provides a comprehensive overview of data collection, cleansing, and visualization techniques, along with an introduction to statistical analysis. By mastering the basics, individuals can lay a solid foundation for further exploration in the field of data analytics.

2. Advanced Data Analytics Techniques

For those looking to deepen their knowledge and skills in data analytics, advanced courses offer a treasure trove of insights. These courses delve into complex data analysis techniques, such as predictive modeling, machine learning algorithms, and data mining. Individuals will learn how to discover hidden patterns, make accurate predictions, and extract valuable insights from large datasets. Advanced data analytics courses equip individuals with the tools and techniques necessary to tackle real-world data analysis challenges.

3. Specialized Data Analytics Courses

As the field of data analytics continues to thrive, specialized courses have emerged to cater to specific industry needs and interests. Whether it's healthcare analytics, financial analytics, or social media analytics, individuals can choose courses tailored to their desired area of expertise. These specialized courses delve into industry-specific data analytics techniques and explore case studies to provide practical insights into real-world applications. By honing their skills in specialized areas, individuals can unlock new opportunities and make a significant impact in their chosen field.

4. Big Data Analytics Certification Programs

In the era of big data, the ability to navigate and derive meaningful insights from massive datasets is in high demand. Big data analytics certification programs offer individuals the chance to gain comprehensive knowledge and hands-on experience in handling big data. These programs cover topics such as Hadoop, Apache Spark, and other big data frameworks. By earning a certification, individuals can demonstrate their proficiency in handling big data and position themselves as experts in this rapidly growing field.

Education and the mastery of data analytics courses at ACTE Institute is essential in unleashing the power of big data analytics. With the right educational foundation like the ACTE institute, individuals can navigate the complex landscape of data analytics with confidence and efficiency. Whether starting with an introduction course or diving into advanced techniques, the world of data analytics offers endless opportunities for personal and professional growth. By staying ahead of the curve and continuously expanding their knowledge, individuals can become true masters of the course, leading businesses towards success in the era of big data.

2 notes

·

View notes

Text

Data Engineering Concepts, Tools, and Projects

All the associations in the world have large amounts of data. If not worked upon and anatomized, this data does not amount to anything. Data masterminds are the ones. who make this data pure for consideration. Data Engineering can nominate the process of developing, operating, and maintaining software systems that collect, dissect, and store the association’s data. In modern data analytics, data masterminds produce data channels, which are the structure armature.

How to become a data engineer:

While there is no specific degree requirement for data engineering, a bachelor's or master's degree in computer science, software engineering, information systems, or a related field can provide a solid foundation. Courses in databases, programming, data structures, algorithms, and statistics are particularly beneficial. Data engineers should have strong programming skills. Focus on languages commonly used in data engineering, such as Python, SQL, and Scala. Learn the basics of data manipulation, scripting, and querying databases.

Familiarize yourself with various database systems like MySQL, PostgreSQL, and NoSQL databases such as MongoDB or Apache Cassandra.Knowledge of data warehousing concepts, including schema design, indexing, and optimization techniques.

Data engineering tools recommendations:

Data Engineering makes sure to use a variety of languages and tools to negotiate its objects. These tools allow data masterminds to apply tasks like creating channels and algorithms in a much easier as well as effective manner.

1. Amazon Redshift: A widely used cloud data warehouse built by Amazon, Redshift is the go-to choice for many teams and businesses. It is a comprehensive tool that enables the setup and scaling of data warehouses, making it incredibly easy to use.

One of the most popular tools used for businesses purpose is Amazon Redshift, which provides a powerful platform for managing large amounts of data. It allows users to quickly analyze complex datasets, build models that can be used for predictive analytics, and create visualizations that make it easier to interpret results. With its scalability and flexibility, Amazon Redshift has become one of the go-to solutions when it comes to data engineering tasks.

2. Big Query: Just like Redshift, Big Query is a cloud data warehouse fully managed by Google. It's especially favored by companies that have experience with the Google Cloud Platform. BigQuery not only can scale but also has robust machine learning features that make data analysis much easier. 3. Tableau: A powerful BI tool, Tableau is the second most popular one from our survey. It helps extract and gather data stored in multiple locations and comes with an intuitive drag-and-drop interface. Tableau makes data across departments readily available for data engineers and managers to create useful dashboards. 4. Looker:�� An essential BI software, Looker helps visualize data more effectively. Unlike traditional BI tools, Looker has developed a LookML layer, which is a language for explaining data, aggregates, calculations, and relationships in a SQL database. A spectacle is a newly-released tool that assists in deploying the LookML layer, ensuring non-technical personnel have a much simpler time when utilizing company data.

5. Apache Spark: An open-source unified analytics engine, Apache Spark is excellent for processing large data sets. It also offers great distribution and runs easily alongside other distributed computing programs, making it essential for data mining and machine learning. 6. Airflow: With Airflow, programming, and scheduling can be done quickly and accurately, and users can keep an eye on it through the built-in UI. It is the most used workflow solution, as 25% of data teams reported using it. 7. Apache Hive: Another data warehouse project on Apache Hadoop, Hive simplifies data queries and analysis with its SQL-like interface. This language enables MapReduce tasks to be executed on Hadoop and is mainly used for data summarization, analysis, and query. 8. Segment: An efficient and comprehensive tool, Segment assists in collecting and using data from digital properties. It transforms, sends, and archives customer data, and also makes the entire process much more manageable. 9. Snowflake: This cloud data warehouse has become very popular lately due to its capabilities in storing and computing data. Snowflake’s unique shared data architecture allows for a wide range of applications, making it an ideal choice for large-scale data storage, data engineering, and data science. 10. DBT: A command-line tool that uses SQL to transform data, DBT is the perfect choice for data engineers and analysts. DBT streamlines the entire transformation process and is highly praised by many data engineers.

Data Engineering Projects:

Data engineering is an important process for businesses to understand and utilize to gain insights from their data. It involves designing, constructing, maintaining, and troubleshooting databases to ensure they are running optimally. There are many tools available for data engineers to use in their work such as My SQL, SQL server, oracle RDBMS, Open Refine, TRIFACTA, Data Ladder, Keras, Watson, TensorFlow, etc. Each tool has its strengths and weaknesses so it’s important to research each one thoroughly before making recommendations about which ones should be used for specific tasks or projects.

Smart IoT Infrastructure:

As the IoT continues to develop, the measure of data consumed with high haste is growing at an intimidating rate. It creates challenges for companies regarding storehouses, analysis, and visualization.

Data Ingestion:

Data ingestion is moving data from one or further sources to a target point for further preparation and analysis. This target point is generally a data storehouse, a unique database designed for effective reporting.

Data Quality and Testing:

Understand the importance of data quality and testing in data engineering projects. Learn about techniques and tools to ensure data accuracy and consistency.

Streaming Data:

Familiarize yourself with real-time data processing and streaming frameworks like Apache Kafka and Apache Flink. Develop your problem-solving skills through practical exercises and challenges.

Conclusion:

Data engineers are using these tools for building data systems. My SQL, SQL server and Oracle RDBMS involve collecting, storing, managing, transforming, and analyzing large amounts of data to gain insights. Data engineers are responsible for designing efficient solutions that can handle high volumes of data while ensuring accuracy and reliability. They use a variety of technologies including databases, programming languages, machine learning algorithms, and more to create powerful applications that help businesses make better decisions based on their collected data.

2 notes

·

View notes