#Azure AD Connect cloud provisioning

SDWAN: Revolutionizing Enterprise Connectivity with Tata Communications

In today’s hyper-connected world, enterprises demand more from their networks. With the explosion of cloud applications, remote workforces, and the need for agile digital transformation, traditional Wide Area Networks (WANs) often fall short. This is where SDWAN (Software-Defined Wide Area Network) emerges as a game-changer. As a leading global digital ecosystem enabler, Tata Communications offers cutting-edge SDWAN solutions that empower businesses with intelligent, secure, and high-performance networking.

What is SDWAN?

SDWAN is a software-driven approach to managing and optimizing wide area networks. It abstracts the network hardware and control mechanism, allowing centralized and automated management of network traffic. Unlike traditional WAN architectures that rely heavily on expensive MPLS links and static routing, SDWAN enables dynamic path selection, improved bandwidth utilization, and enhanced security using a mix of transport services including MPLS, broadband, and LTE.
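To make dynamic path selection concrete, here is a minimal Python sketch. It assumes each transport link is probed for latency and packet loss and that traffic goes to the healthy link with the lowest combined score. The `Link` fields, the `loss_weight` penalty, and the sample numbers are illustrative assumptions, not how any particular SDWAN product scores paths.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """One WAN transport with its latest probe measurements (hypothetical fields)."""
    name: str
    latency_ms: float
    loss_pct: float
    up: bool = True


def link_score(link: Link, loss_weight: float = 50.0) -> float:
    """Lower is better: latency plus a penalty for each percent of packet loss."""
    return link.latency_ms + loss_weight * link.loss_pct


def select_path(links: list[Link]) -> Link:
    """Pick the healthy link with the lowest score; fail loudly if all links are down."""
    healthy = [link for link in links if link.up]
    if not healthy:
        raise RuntimeError("no healthy WAN link available")
    return min(healthy, key=link_score)


links = [
    Link("mpls", latency_ms=40.0, loss_pct=0.0),
    Link("broadband", latency_ms=25.0, loss_pct=0.1),
    Link("lte", latency_ms=60.0, loss_pct=0.5),
]
print(select_path(links).name)
```

Re-running `select_path` whenever new probe results arrive is what makes the selection dynamic: if broadband starts dropping packets, its score rises and traffic shifts to MPLS without a manual routing change.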

Why Enterprises are Moving to SDWAN

The rise of cloud services, SaaS platforms, and remote collaboration tools has created a demand for networks that are agile, scalable, and cloud-ready. SDWAN answers this call with:

Improved Application Performance: By prioritizing critical business applications and steering traffic intelligently, SDWAN enhances user experience and productivity.

Cost Efficiency: Enterprises can reduce dependency on expensive MPLS by leveraging cost-effective broadband and 5G networks.

Centralized Management: IT teams can manage the entire WAN through a single dashboard, simplifying operations and policy enforcement.

Enhanced Security: Integrated security features like end-to-end encryption, firewalls, and secure gateways protect data across all endpoints.

Tata Communications SDWAN: A Smarter Way to Connect

Tata Communications brings a globally integrated and intelligent SDWAN solution that redefines network performance and business continuity. With decades of experience in network infrastructure and global reach, Tata Communications helps enterprises transition from legacy networks to a modern, agile SDWAN architecture with ease.

Key Features of Tata Communications SDWAN

Global Reach and Performance: Tata Communications operates one of the world’s largest wholly-owned subsea fiber networks. This ensures low-latency and high-availability connections for businesses operating across geographies.

Cloud-First Architecture: Their SDWAN is built for the cloud era, seamlessly integrating with leading cloud service providers like AWS, Microsoft Azure, and Google Cloud. This ensures faster and more reliable access to cloud-based applications.

Zero-Touch Provisioning: With zero-touch provisioning, branch offices and remote sites can be connected quickly without on-site IT support, reducing deployment time significantly.

Advanced Analytics and Visibility: The Tata Communications SDWAN platform offers real-time analytics, network health monitoring, and deep visibility into application performance, enabling proactive management.

Robust Security: Security is embedded in every layer. Tata Communications provides built-in encryption, next-gen firewalls, secure web gateways, and compliance-ready frameworks to safeguard business data.

24/7 Global Support: Enterprises benefit from Tata Communications’ global NOC and customer support centers, ensuring uninterrupted services and expert assistance whenever needed.

Benefits for Enterprises

With Tata Communications SDWAN, organizations can expect:

Seamless Cloud Connectivity: Optimized routing to cloud applications enhances performance and user satisfaction.

Operational Efficiency: Simplified management and automation reduce IT overhead.

Business Continuity: SDWAN ensures high availability with automatic failover and disaster recovery mechanisms.

Scalability: Whether it’s adding a new branch or scaling globally, Tata Communications SDWAN can grow with your business.

Secure Remote Access: As hybrid work becomes the norm, secure and consistent access for remote users becomes critical. SDWAN makes this possible without sacrificing performance.

Industry Use Cases

SDWAN by Tata Communications is already transforming industries:

Banking & Finance: Secure and reliable connectivity across branches and ATMs, with compliance-ready frameworks.

Retail: High-speed, secure connectivity for point-of-sale systems and inventory apps across outlets.

Healthcare: Real-time access to patient records, telemedicine, and secure data exchange between clinics.

Manufacturing: Intelligent network management for IoT devices and factory automation systems.

Future-Proofing with Tata Communications

The digital landscape is evolving rapidly, and businesses must stay ahead. Tata Communications SDWAN provides a future-ready solution that supports innovation, agility, and growth. With its end-to-end managed services, deep expertise, and global reach, Tata Communications ensures that enterprises can adopt SDWAN without the complexity, focusing instead on their core business objectives.

Conclusion

In an age where digital agility determines success, SDWAN is not just a technology upgrade — it’s a strategic enabler. With Tata Communications SDWAN, enterprises gain more than just connectivity; they gain a competitive edge. Whether it’s cost optimization, improved application performance, or secure remote access, Tata Communications delivers a robust SDWAN solution tailored to modern business needs.

Empower your network. Transform your business. Choose Tata Communications SDWAN.

The Rise of Bluetooth Access Control in Smart Buildings and Workplaces

In today’s rapidly evolving built environments, security and convenience are no longer seen as trade-offs - they are mutual requirements. As organizations upgrade to smart building infrastructures, the demand for seamless, secure access solutions has intensified. Traditional systems like keycards, PIN pads, and physical locks are increasingly seen as operational bottlenecks: costly to manage, difficult to scale, and vulnerable to physical tampering or credential loss.

In this context, Bluetooth Access Control has emerged as a strategic enabler of next-generation workplace security. By leveraging ubiquitous mobile devices and Bluetooth Low Energy (BLE) technology, organizations can offer contactless access, streamline credential provisioning, and reduce dependency on hardware-based systems. Market analysts project that mobile access control will grow at a CAGR of over 20% through 2028, driven in large part by BLE solutions.

This article explores why Bluetooth Access Control is gaining traction among enterprise stakeholders - particularly in smart offices, co-working hubs, and multi-tenant buildings - and what decision-makers in IT, security, procurement, and administration need to consider as they plan for the future.

What is Bluetooth Access Control?

Bluetooth Access Control refers to the use of Bluetooth-enabled mobile devices - typically smartphones - to manage and grant physical access to secure areas, such as office buildings, meeting rooms, or restricted zones. Instead of traditional keys, fobs, or access cards, users carry digital credentials stored in mobile apps. These credentials are transmitted via Bluetooth Low Energy (BLE) to compatible door readers or controllers.

The key enabling technology is BLE, which offers short-range communication with low power consumption. When a user approaches a door, their phone transmits encrypted credentials to a BLE reader, which authenticates the device and triggers the lock mechanism if access is permitted. In most cases, the interaction is passive - users don't need to unlock their phone or open an app.
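The article does not spell out how a reader authenticates a phone, so the following Python sketch shows one generic way it could work: an HMAC challenge-response, where the reader sends a fresh random nonce and the phone proves it holds the credential key without ever transmitting it. The function names and key sizes are assumptions for illustration; real BLE access products define their own protocols.

```python
import hashlib
import hmac
import secrets


def make_challenge() -> bytes:
    """Reader side: a fresh random nonce, so a captured response cannot be replayed."""
    return secrets.token_bytes(16)


def sign_challenge(device_key: bytes, challenge: bytes) -> bytes:
    """Phone side: prove possession of the credential key without sending the key."""
    return hmac.new(device_key, challenge, hashlib.sha256).digest()


def verify_response(device_key: bytes, challenge: bytes, response: bytes) -> bool:
    """Reader side: recompute the MAC and compare it in constant time."""
    expected = hmac.new(device_key, challenge, hashlib.sha256).digest()
    return hmac.compare_digest(expected, response)


key = secrets.token_bytes(32)   # hypothetical key provisioned when the credential is issued
challenge = make_challenge()
response = sign_challenge(key, challenge)

print(verify_response(key, challenge, response))         # matching key and nonce
print(verify_response(key, make_challenge(), response))  # old response against a new nonce
```

Because every unlock attempt uses a new nonce, recording the radio traffic from one successful entry does not help an attacker open the door later.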

Bluetooth Access Control is often implemented as part of a larger cloud-based or mobile-first identity and access management (IAM) system. These platforms allow administrators to issue, revoke, or update credentials remotely, integrate with enterprise directory services (like Azure AD), and log access activity in real time for compliance purposes.

Compared to legacy systems such as RFID cards or keypad locks, Bluetooth-based systems offer:

Enhanced security via encrypted mobile credentials

Lower operational friction (no lost or shared keycards)

Easier scaling across locations and users

Integration potential with other smart building systems

As smartphone penetration is near-universal among knowledge workers, Bluetooth Access Control systems align well with bring-your-own-device (BYOD) policies and modern workforce expectations.

Comparison: Bluetooth Access Control vs. Traditional Systems

| Feature / Criteria | Bluetooth Access Control | RFID Cards / Key Fobs | PIN Codes / Keypads |
| --- | --- | --- | --- |
| Credential Medium | Smartphone (mobile app with BLE) | Physical card or fob | Manually entered numeric code |
| Security | Encrypted mobile credentials; remote wipe | Vulnerable to cloning or theft | Susceptible to shoulder surfing |
| Access Management | Cloud-based, real-time provisioning | On-site badge programming | Local setup; difficult to manage |
| User Experience | Hands-free or tap-to-unlock | Tap or swipe required | Manual entry |
| Operational Overhead | No physical credential distribution | Requires issuing/replacing cards | Users must remember codes |
| Integration Potential | High; integrates with IAM, HR systems | Limited to access control functions | Minimal |
| Cost Over Time | Lower (no physical assets to manage) | Medium to high (replacements, waste) | Low upfront, but poor scalability |

Why Smart Buildings Are Driving Demand for Bluetooth Access Control

Smart buildings leverage connected technologies to optimize operational efficiency, energy use, and occupant experience. These environments rely on IoT sensors, building automation systems (BAS), and cloud-based infrastructure to manage everything from lighting and HVAC to space utilization and security.

Access control plays a critical role in this ecosystem. It is not just about locking and unlocking doors - it’s about enabling data-driven space management, enforcing security policy dynamically, and integrating with digital identity systems across the building. Bluetooth Access Control supports this vision by providing:

Seamless integration with smart building platforms for unified control of access, lighting, climate, and scheduling.

Mobile-first infrastructure that aligns with how users interact in tech-enabled workplaces.

Real-time data on occupancy and movement patterns, useful for optimizing space usage or responding to incidents.

As a result, Bluetooth Access Control is increasingly favored in new developments or retrofits where a smart building strategy is in place or planned. It fits naturally into cloud-native, IoT-integrated environments that prioritize flexibility, efficiency, and user experience.

Use Cases in Smart Buildings and Workplaces

Bluetooth Access Control is being deployed across a range of building types where flexibility, automation, and mobile-centric infrastructure are priorities. Below are key use cases that illustrate how organizations are leveraging this technology to meet both operational and strategic goals.

🏢 Corporate Headquarters

In large enterprise offices, Bluetooth Access Control allows centralized management of employee credentials across departments, locations, and access zones. Temporary access can be granted to contractors or visitors without issuing physical badges. Integration with calendar systems can also automate room access based on meeting schedules.

🧑‍💼 Co-working Spaces and Flexible Offices

For shared workspaces, mobile-based access simplifies user onboarding and supports tiered membership models. Operators can issue time-limited credentials, manage bookings, and restrict access by area or time of day — all through a centralized platform. This supports a “self-service” access model, reducing front desk overhead.
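A time-limited, zone-restricted credential of the kind described here can be sketched in a few lines of Python. The `Credential` fields and zone names below are hypothetical and do not reflect any vendor's data model; the point is only that each unlock request is checked against revocation, zone, and validity window.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Credential:
    """A mobile credential as an access platform might store it (hypothetical fields)."""
    user: str
    zones: frozenset[str]
    valid_from: datetime
    valid_until: datetime
    revoked: bool = False


def is_access_allowed(cred: Credential, zone: str, now: datetime) -> bool:
    """Allow entry only for a non-revoked credential, in a permitted zone, inside its window."""
    if cred.revoked or zone not in cred.zones:
        return False
    return cred.valid_from <= now < cred.valid_until


start = datetime(2024, 5, 6, 9, 0, tzinfo=timezone.utc)
day_pass = Credential(
    user="guest-17",
    zones=frozenset({"lobby", "hot-desks"}),
    valid_from=start,
    valid_until=start + timedelta(hours=9),
)

print(is_access_allowed(day_pass, "hot-desks", start + timedelta(hours=2)))     # inside the window
print(is_access_allowed(day_pass, "meeting-room", start + timedelta(hours=2)))  # zone not included
print(is_access_allowed(day_pass, "hot-desks", start + timedelta(hours=10)))    # pass has expired
```

Setting `revoked = True` on the stored credential is the software equivalent of collecting a badge, which is why remote revocation needs no physical handover.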

🧳 Multi-Tenant Commercial Buildings

In buildings with multiple companies, Bluetooth Access Control enables each tenant to manage access independently while the property manager oversees common areas. Mobile credentials can be issued for shared spaces like lobbies, elevators, or fitness centers, while tenants retain control over their office zones.

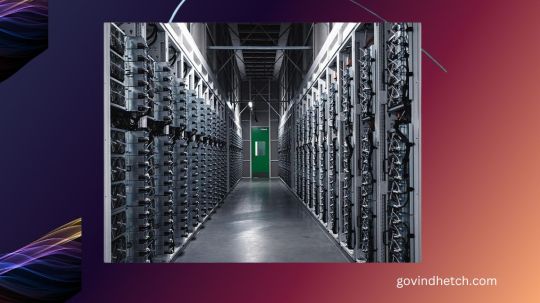

🖥️ Data Centers and Secure Facilities

Where access auditing and security are paramount, mobile credentials provide encrypted, traceable entry logs. Bluetooth readers can integrate with biometric systems or two-factor authentication protocols to ensure compliance with regulatory frameworks like ISO 27001 or SOC 2.

🌐 Smart Campus Environments

Universities and enterprise campuses are deploying Bluetooth Access Control to unify access across buildings, labs, dormitories, and parking areas. Credentials can be linked to student or employee IDs, enabling seamless movement across the campus and integration with other digital services (e.g., payments, printing).

🔍 According to HID Global, 54% of organizations surveyed in 2023 were actively evaluating or had already implemented mobile access control, citing multi-tenant flexibility and user satisfaction as top drivers.

Bringing It All Together: The Role of Platforms like Spintly

As demand for mobile-first, scalable access solutions grows, platforms such as Spintly are playing a key role in enabling the transition. Spintly’s cloud-based platform supports Bluetooth Low Energy (BLE) access through smartphones, offers seamless integrations with enterprise IT systems, and is designed to simplify deployment across diverse environments - from commercial offices to co-working hubs and educational campuses. By eliminating physical infrastructure like on-premises servers or dedicated access panels, Spintly provides organizations with a frictionless, future-ready access control solution that aligns with smart building strategies and modern security expectations.

#bluetooth access#bluetooth#access control solutions#access control system#mobile access#accesscontrol#spintly#biometrics#smartacess#visitor management system#biometric attendance

10 Must-Have PowerShell Scripts Every IT Admin Should Know

As an IT professional, your day is likely filled with repetitive tasks, tight deadlines, and constant demands for better performance. That’s why automation isn’t just helpful—it’s essential. I’m Mezba Uddin, a Microsoft MVP and MCT, and I built Mr Microsoft to help IT admins like you work smarter with automation, not harder. From Microsoft 365 automation to infrastructure monitoring and PowerShell scripting, I’ve shared practical solutions that are used in real-world environments. This article dives into ten of the most useful PowerShell scripts for IT admins, complete with automation examples and practical use cases that will boost productivity, reduce errors, and save countless hours.

Whether you're new to scripting or looking to optimize your stack, these scripts are game-changers.

Automate Active Directory User Creation

Provisioning new users manually can lead to errors and wasted time. One of the most widely used PowerShell scripts for IT admins is an automated Active Directory user creation script. This script allows you to import user details from a CSV file and automatically create AD accounts, set passwords, assign groups, and configure properties—all in a few seconds. It’s a perfect way to speed up onboarding in large organizations. On MrMicrosoft.com, you’ll find a complete walkthrough and customizable script templates to fit your unique IT environment. Whether you're managing 10 users or 1,000, this script will become one of your most trusted tools for Active Directory administration.
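The original script is PowerShell and is not reproduced in this post, so the following is only a Python sketch of the CSV-to-account mapping step. The column names (`FirstName`, `LastName`, `Groups`), the username rule, and the record layout are assumptions for illustration. Creating the accounts in a directory is deliberately left out.

```python
import csv
import io
import secrets
import string


def temporary_password(length: int = 16) -> str:
    """Generate a random one-time password from letters and digits."""
    alphabet = string.ascii_letters + string.digits
    return "".join(secrets.choice(alphabet) for _ in range(length))


def build_accounts(csv_text: str) -> list[dict]:
    """Turn CSV rows into account records ready to hand to a directory API."""
    accounts = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        first, last = row["FirstName"].strip(), row["LastName"].strip()
        accounts.append({
            "username": f"{first[0]}{last}".lower(),   # assumed naming rule: first initial + surname
            "display_name": f"{first} {last}",
            "groups": [g.strip() for g in row["Groups"].split(";") if g.strip()],
            "password": temporary_password(),
            "must_change_password": True,
        })
    return accounts


sample = """FirstName,LastName,Groups
Ada,Lovelace,Engineering;VPN
Alan,Turing,Research
"""
for account in build_accounts(sample):
    print(account["username"], account["groups"])
```

Keeping the parsing and validation separate from the step that actually creates accounts makes it easy to dry-run a large CSV and review the result before anything touches the directory.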

Bulk Assign Microsoft 365 Licenses

In hybrid or cloud environments, managing Microsoft 365 license assignments manually is a drain on time and accuracy. Through Microsoft 365 automation, you can use a PowerShell script to assign licenses in bulk, deactivate unused ones, and even schedule regular audits. This script is a great way to enforce licensing compliance while reducing costs. At Mr Microsoft, I provide an optimized version of this script that’s suitable for large enterprise environments. It’s customizable, secure, and a great example of how scripting can eliminate repetitive administrative tasks while ensuring your Microsoft 365 deployment runs smoothly and efficiently.

Send Password Expiry Notifications Automatically

One of the most common helpdesk tickets? Password expiry. Through simple IT infrastructure automation, a PowerShell script can send automatic email notifications to users whose passwords are about to expire. It reduces last-minute password reset requests and keeps users informed. At Mr Microsoft, I share a plug-and-play script for this task, including options to adjust frequency, messaging, and groups. It’s a lightweight, server-friendly way to keep your user base informed and proactive. With this script running on a schedule, your IT team will have fewer disruptions and more time to focus on high-priority tasks.
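The core of such a notifier is a date calculation, sketched here in Python rather than PowerShell. The 90-day maximum age, the 14-day warning window, and the user names are assumed values. Reading the real password-last-set dates and sending the email are left out.

```python
from datetime import date, timedelta


def days_until_expiry(last_set: date, max_age_days: int, today: date) -> int:
    """Days remaining before the password expires (negative if already expired)."""
    return (last_set + timedelta(days=max_age_days) - today).days


def users_to_notify(last_set_by_user: dict[str, date], max_age_days: int,
                    warn_days: int, today: date) -> dict[str, int]:
    """Return users whose password expires within the warning window, with days left."""
    notices = {}
    for user, last_set in last_set_by_user.items():
        remaining = days_until_expiry(last_set, max_age_days, today)
        if 0 <= remaining <= warn_days:
            notices[user] = remaining
    return notices


today = date(2024, 6, 1)
last_set = {
    "alice": date(2024, 3, 10),   # expires in a week
    "bob": date(2024, 5, 1),      # recently changed
    "carol": date(2024, 2, 1),    # already expired
}
print(users_to_notify(last_set, max_age_days=90, warn_days=14, today=today))
```

Already-expired accounts are excluded on purpose in this sketch, since a reminder email will not reach a user who can no longer sign in; a real script might route those to the helpdesk instead.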

Monitor Server Disk Space Remotely

Monitoring disk space across multiple servers—especially in hybrid cloud environments—can be difficult without the right tools. That’s why cloud automation for IT pros includes disk monitoring scripts that remotely scan storage, trigger alerts, and generate reports. I’ve posted a working solution on Mr Microsoft that connects securely to servers, logs thresholds, and sends alerts before critical levels are hit. It’s ideal for IT teams managing Azure resources, Hyper-V, or even on-premises file servers. With this script, you can detect space issues early and prevent downtime caused by full partitions.
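As a rough illustration of the threshold logic, the Python sketch below measures free space on a local path and flags any volume under an assumed 15% threshold. Collecting readings from remote servers and sending alerts are out of scope, and the volume names and percentages in `readings` are made up.

```python
import shutil


def percent_free(path: str) -> float:
    """Percentage of free space on the volume that holds `path`."""
    usage = shutil.disk_usage(path)
    return 100.0 * usage.free / usage.total


def low_space_alerts(free_pct_by_volume: dict[str, float],
                     threshold_pct: float = 15.0) -> list[str]:
    """Names of volumes whose free space has dropped below the threshold."""
    return sorted(name for name, pct in free_pct_by_volume.items() if pct < threshold_pct)


# Hypothetical readings gathered from several servers.
readings = {"srv1:C": 42.0, "srv2:D": 9.5, "srv3:E": 14.9}
print(low_space_alerts(readings))

# The same measurement taken on the local machine.
print(round(percent_free("."), 1))
```

Alerting on a percentage rather than an absolute size keeps one threshold meaningful across volumes of very different capacity, though very large volumes may warrant an additional absolute floor.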

Export Microsoft 365 Mailbox Size Reports

For admins managing Exchange Online, mailbox size tracking is essential. With the right Microsoft 365 management tools, like a PowerShell mailbox report script, you can quickly extract user sizes, quotas, and growth over time. This is invaluable for storage planning and policy enforcement. On Mr Microsoft, I’ve shared an easy-to-adapt script that pulls all mailbox data and exports it to CSV or Excel formats. You can automate it weekly, track long-term trends, or email the results to managers. It’s a simple but powerful reporting tool that turns Microsoft 365 data into actionable insights.

Parse and Report on Windows Event Logs

If you’re getting started with scripting, working with event logs is a fantastic entry point. Even as a PowerShell beginner, you can write scripts that parse Windows logs to identify system crashes, login failures, or security events. I’ve built a script on Mr Microsoft that scans logs daily and sends summary reports. It’s lightweight, customizable, and useful for security monitoring. This is a perfect project for IT pros new to scripting who want meaningful results without complexity. With scheduled execution, this tool ensures proactive monitoring, which is especially critical in regulated or high-security environments.

Reset Passwords for Multiple Users

Resetting passwords one at a time is inefficient—especially during mass onboarding, offboarding, or policy enforcement. Using IT admin productivity tools like a PowerShell batch password reset script can streamline the process. It’s secure, scriptable, and ideal for both on-premises AD and hybrid Azure AD environments. With added functionality like expiration dates and enforced resets at next login, this script empowers IT admins to enforce password policies with speed and consistency.

Automate Windows Update Scheduling

If you’re tired of unpredictable updates or user complaints about restarts, this is for you. One of the most effective PowerShell scripts for IT admins automates the installation of Windows updates across workstations or servers. With this tool, you can check for updates, install them silently, and even reboot during off-hours. This reduces patching delays, improves compliance, and eliminates the need for manual updates or GPO complexity—especially useful in remote or hybrid work environments.

Cleanup Inactive Users with Graph API

Inactive user accounts are a security risk and resource drain. With the Microsoft Graph API, you can automate account cleanup based on login activity or license usage. My detailed Microsoft Graph API tutorial on Mr Microsoft walks through how to connect securely, pull activity data, and disable or archive stale accounts. This not only tightens security but also saves licensing costs. It’s a must-have script for admins managing large Microsoft 365 environments. Plus, the tutorial includes reusable templates to make your deployment faster and safer.
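Once sign-in activity has been retrieved, the cleanup decision itself is a simple filter. The Python sketch below assumes the activity data has already been fetched and flattened into dictionaries; the `upn` and `last_sign_in` keys are illustrative names, not the Graph API's actual schema, and the 90-day cutoff is an assumed policy.

```python
from datetime import datetime, timedelta, timezone


def find_stale_accounts(users: list[dict], now: datetime, max_idle_days: int = 90) -> list[str]:
    """Return accounts with no sign-in inside the idle window (or no sign-in at all)."""
    cutoff = now - timedelta(days=max_idle_days)
    stale = []
    for user in users:
        last_sign_in = user.get("last_sign_in")
        if last_sign_in is None or last_sign_in < cutoff:
            stale.append(user["upn"])
    return stale


now = datetime(2024, 6, 1, tzinfo=timezone.utc)
users = [
    {"upn": "active@example.com", "last_sign_in": now - timedelta(days=3)},
    {"upn": "dormant@example.com", "last_sign_in": now - timedelta(days=200)},
    {"upn": "never@example.com", "last_sign_in": None},
]
print(find_stale_accounts(users, now))
```

Treating accounts that have never signed in as stale is a design choice that can catch freshly created users, so a production script would probably also check the account creation date and report candidates for review before disabling anything.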

Automate SharePoint Site Provisioning

Provisioning SharePoint sites manually is tedious and error-prone. With Microsoft 365 automation, you can instantly create SharePoint sites based on predefined templates, permissions, and naming conventions. I’ve built a reusable script on Mr Microsoft that automates this entire process. It’s ideal for departments, projects, or onboarding flows where consistency and speed are critical. This script integrates with Teams and Exchange setups too, giving your IT team a full-stack provisioning workflow with minimal effort.

Final Thoughts – Automate Smarter, Not Harder

Every script above is built from real-life IT challenges I’ve encountered over the years. At Mr Microsoft, my goal is to share solutions that are practical, secure, and ready to use. Whether you're managing hundreds of users or optimizing workflows, automation is your edge—and PowerShell scripts for IT admins are your toolkit. Want more step-by-step guides and tools built by a fellow IT pro?

Visit MrMicrosoft.com and start automating smarter today.

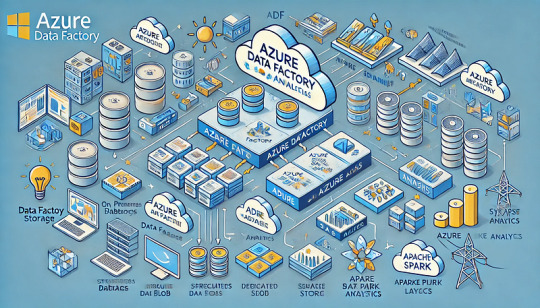

Explore how ADF integrates with Azure Synapse for big data processing.

How Azure Data Factory (ADF) Integrates with Azure Synapse for Big Data Processing

Azure Data Factory (ADF) and Azure Synapse Analytics form a powerful combination for handling big data workloads in the cloud.

ADF enables data ingestion, transformation, and orchestration, while Azure Synapse provides high-performance analytics and data warehousing. Their integration supports massive-scale data processing, making them ideal for big data applications like ETL pipelines, machine learning, and real-time analytics.

Key Aspects of ADF and Azure Synapse Integration for Big Data Processing

1. Data Ingestion at Scale

ADF acts as the ingestion layer, allowing seamless data movement into Azure Synapse from multiple structured and unstructured sources, including:

Cloud Storage: Azure Blob Storage, Amazon S3, Google Cloud Storage

On-Premises Databases: SQL Server, Oracle, MySQL, PostgreSQL

Streaming Data Sources: Azure Event Hubs, IoT Hub, Kafka

SaaS Applications: Salesforce, SAP, Google Analytics

🚀 ADF’s parallel processing capabilities and built-in connectors make ingestion highly scalable and efficient.

2. Transforming Big Data with ETL/ELT

ADF enables large-scale transformations using two primary approaches:

ETL (Extract, Transform, Load): Data is transformed in ADF’s Mapping Data Flows before loading into Synapse.

ELT (Extract, Load, Transform): Raw data is loaded into Synapse, where transformation occurs using SQL scripts or Apache Spark pools within Synapse.

🔹 Use Case: Cleaning and aggregating billions of rows from multiple sources before running machine learning models.

3. Scalable Data Processing with Azure Synapse

Azure Synapse provides powerful data processing features:

Dedicated SQL Pools: Optimized for high-performance queries on structured big data.

Serverless SQL Pools: Enables ad-hoc queries without provisioning resources.

Apache Spark Pools: Runs distributed big data workloads using Spark.

💡 ADF pipelines can orchestrate Spark-based processing in Synapse for large-scale transformations.

4. Automating and Orchestrating Data Pipelines

ADF provides pipeline orchestration for complex workflows by:

Automating data movement between storage and Synapse.

Scheduling incremental or full data loads for efficiency.

Integrating with Azure Functions, Databricks, and Logic Apps for extended capabilities.

⚙️ Example: ADF can trigger data processing in Synapse when new files arrive in Azure Data Lake.

5. Real-Time Big Data Processing

ADF enables near real-time processing by:

Capturing streaming data from sources like IoT devices and event hubs.

Running incremental loads to process only new data.

Using Change Data Capture (CDC) to track updates in large datasets.

📊 Use Case: Ingesting IoT sensor data into Synapse for real-time analytics dashboards.
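The idea behind an incremental load can be sketched with a generic high-watermark pattern: remember the latest modification time already processed and, on the next run, pick up only rows newer than that. The Python below is a minimal illustration with made-up rows and a hypothetical `modified_at` column; it is not ADF pipeline code.

```python
from datetime import datetime


def incremental_load(source_rows: list[dict], watermark: datetime) -> tuple[list[dict], datetime]:
    """Return rows modified after the watermark, plus the new watermark to store."""
    new_rows = [row for row in source_rows if row["modified_at"] > watermark]
    new_watermark = max((row["modified_at"] for row in new_rows), default=watermark)
    return new_rows, new_watermark


source = [
    {"id": 1, "modified_at": datetime(2024, 1, 1, 8, 0)},
    {"id": 2, "modified_at": datetime(2024, 1, 1, 9, 30)},
]
watermark = datetime(2024, 1, 1, 0, 0)

batch, watermark = incremental_load(source, watermark)
print([row["id"] for row in batch])   # first run picks up both rows

source.append({"id": 3, "modified_at": datetime(2024, 1, 1, 11, 15)})
batch, watermark = incremental_load(source, watermark)
print([row["id"] for row in batch])   # second run picks up only the new row
```

The watermark has to be persisted between runs, and it should only be advanced after the batch has been written successfully, otherwise a failed load would silently skip rows.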

6. Security & Compliance in Big Data Pipelines

Data Encryption: Protects data at rest and in transit.

Private Link & VNet Integration: Restricts data movement to private networks.

Role-Based Access Control (RBAC): Manages permissions for users and applications.

🔐 Example: ADF can use managed identity to securely connect to Synapse without storing credentials.

Conclusion

The integration of Azure Data Factory with Azure Synapse Analytics provides a scalable, secure, and automated approach to big data processing.

By leveraging ADF for data ingestion and orchestration and Synapse for high-performance analytics, businesses can unlock real-time insights, streamline ETL workflows, and handle massive data volumes with ease.

WEBSITE: https://www.ficusoft.in/azure-data-factory-training-in-chennai/

SAP Cloud Platform (SCP), now integrated into the SAP Business Technology Platform (SAP BTP), offers extensive scalability options designed to meet the dynamic needs of businesses. These scalability options span across application performance, infrastructure, and integration, ensuring organizations can handle increasing workloads, growing user bases, and expanding business processes efficiently.

1. Horizontal Scaling

Horizontal scaling (or scaling out) involves adding more instances of a service or application to distribute the load effectively. Key features include:

Auto-Scaling: Automatically provisions additional instances based on metrics such as CPU utilization or request rates.

Load Balancing: Distributes workloads across multiple instances to optimize resource utilization and ensure high availability.

Microservices Architecture: Applications can be broken down into smaller, independently scalable components.
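A metric-driven auto-scaling decision can be illustrated with a proportional rule: scale the instance count by the ratio of observed to target utilization, then clamp it to configured bounds. The Python sketch below uses assumed values (a 60% CPU target and a 1 to 10 instance range) and is not the platform's actual autoscaler logic.

```python
import math


def desired_instances(current: int, cpu_pct: float, target_cpu_pct: float = 60.0,
                      min_instances: int = 1, max_instances: int = 10) -> int:
    """Proportional scaling: size the fleet so average CPU lands near the target."""
    if current < 1:
        raise ValueError("current must be at least 1")
    wanted = math.ceil(current * cpu_pct / target_cpu_pct)
    return max(min_instances, min(max_instances, wanted))


print(desired_instances(current=4, cpu_pct=90.0))   # overloaded: scale out
print(desired_instances(current=4, cpu_pct=30.0))   # underused: scale in
print(desired_instances(current=8, cpu_pct=95.0))   # capped at max_instances
```

The upper bound is what keeps a traffic spike or a runaway process from turning into an unbounded bill, which ties the scaling policy back to cost control.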

2. Vertical Scaling

Vertical scaling (or scaling up) enhances the capacity of existing instances by increasing resources like CPU, memory, or storage. SCP enables this by:

Allowing instance resizing to meet increased demand.

Supporting resource upgrades without affecting application availability in many cases.

3. Global Multi-Cloud and Region Expansion

Multi-Cloud Deployment: SCP supports multiple cloud providers, including AWS, Microsoft Azure, Google Cloud Platform, and Alibaba Cloud. This flexibility allows organizations to scale globally while leveraging the strengths of specific cloud providers.

Regional Availability: SAP BTP offers data centers in various geographic locations, enabling businesses to scale their operations globally and ensure compliance with local data regulations.

4. Elasticity and Dynamic Resource Allocation

Elastic Cloud Services: Resources are dynamically allocated to applications based on real-time demand, ensuring optimal performance and cost-efficiency.

Pay-As-You-Go Pricing: Businesses only pay for the resources they use, making scaling cost-effective.

5. Database Scalability

SAP HANA, the backbone of many SCP services, supports:

In-Memory Data Compression: Efficient storage that allows massive data handling without performance degradation.

Partitioning and Sharding: Enables distribution of large datasets across multiple servers for improved query performance.

Scale-Out Architecture: SAP HANA can operate in distributed clusters to handle higher workloads.
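To show what distributing a dataset across servers means in practice, here is a generic hash-partitioning sketch in Python: each row key is hashed and mapped to one of N partitions, so rows spread roughly evenly and any given key always lands in the same place. The key format and partition count are made up, and this is an illustration of the general technique rather than SAP HANA's own implementation.

```python
import hashlib
from collections import defaultdict


def partition_for(key: str, partitions: int) -> int:
    """Map a key to a partition index using a stable hash."""
    if partitions < 1:
        raise ValueError("partitions must be at least 1")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % partitions


def distribute(keys: list[str], partitions: int) -> dict[int, list[str]]:
    """Group keys by the partition they hash to."""
    placement = defaultdict(list)
    for key in keys:
        placement[partition_for(key, partitions)].append(key)
    return dict(placement)


order_ids = [f"order-{n}" for n in range(1000)]
placement = distribute(order_ids, partitions=4)
for index in sorted(placement):
    print(index, len(placement[index]))
```

A stable hash such as SHA-256 is used instead of Python's built-in `hash()` because the latter is randomized per process for strings, which would send the same key to different partitions on different runs.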

6. Application Scalability

Cloud Foundry Environment: Offers seamless scaling of applications, with features like dynamic memory allocation and containerized deployments.

Kyma Runtime: Supports Kubernetes-based scaling for containerized applications and extensions.

Custom Application Scaling: Developers can define specific scaling policies and thresholds for their applications.

7. Integration Scalability

SAP Integration Suite: Allows businesses to connect and scale integrations across diverse landscapes, including SAP and non-SAP systems, as their ecosystem grows.

APIs and Event-Driven Architecture: Applications can scale by leveraging SAP BTP’s API management and event-driven services.

8. High Availability and Disaster Recovery

Redundancy: Ensures critical applications remain operational during scaling or infrastructure failures.

Disaster Recovery Solutions: SCP supports replication and backup strategies to scale data recovery processes effectively.

9. Developer Tools for Custom Scaling

SAP Business Application Studio and SAP Web IDE: Provide tools to build applications with built-in scalability features.

CI/CD Pipelines: Automate deployment processes to scale applications rapidly and consistently.

Benefits of Scalability in SAP Cloud Platform

Cost Efficiency: Scale resources on-demand, reducing unnecessary expenses.

Performance Optimization: Handle peak loads and ensure seamless user experiences.

Future-Ready: Accommodates organizational growth and evolving business needs.

SAP Cloud Platform’s extensive scalability options make it a robust choice for businesses looking to grow and adapt to changing market demands while ensuring performance and cost efficiency.

Anubhav Trainings is an SAP training provider that offers various SAP courses, including SAP UI5 training. Their SAP Ui5 training program covers various topics, including warehouse structure and organization, goods receipt and issue, internal warehouse movements, inventory management, physical inventory, and much more.

Call us on +91-84484 54549

Mail us on [email protected]

Website: Anubhav Online Trainings | UI5, Fiori, S/4HANA Trainings

0 notes

Text

SAP Cloud Platform (SCP), now integrated into the SAP Business Technology Platform (SAP BTP), offers extensive scalability options designed to meet the dynamic needs of businesses. These scalability options span across application performance, infrastructure, and integration, ensuring organizations can handle increasing workloads, growing user bases, and expanding business processes efficiently.

1. Horizontal Scaling

Horizontal scaling (or scaling out) involves adding more instances of a service or application to distribute the load effectively. Key features include:

Auto-Scaling: Automatically provisions additional instances based on metrics such as CPU utilization or request rates.

Load Balancing: Distributes workloads across multiple instances to optimize resource utilization and ensure high availability.

Microservices Architecture: Applications can be broken down into smaller, independently scalable components.

2. Vertical Scaling

Vertical scaling (or scaling up) enhances the capacity of existing instances by increasing resources like CPU, memory, or storage. SCP enables this by:

Allowing instance resizing to meet increased demand.

Supporting resource upgrades without affecting application availability in many cases.

3. Global Multi-Cloud and Region Expansion

Multi-Cloud Deployment: SCP supports multiple cloud providers, including AWS, Microsoft Azure, Google Cloud Platform, and Alibaba Cloud. This flexibility allows organizations to scale globally while leveraging the strengths of specific cloud providers.

Regional Availability: SAP BTP offers data centers in various geographic locations, enabling businesses to scale their operations globally and ensure compliance with local data regulations.

4. Elasticity and Dynamic Resource Allocation

Elastic Cloud Services: Resources are dynamically allocated to applications based on real-time demand, ensuring optimal performance and cost-efficiency.

Pay-As-You-Go Pricing: Businesses only pay for the resources they use, making scaling cost-effective.

5. Database Scalability

SAP HANA, the backbone of many SCP services, supports:

In-Memory Data Compression: Efficient storage that allows massive data handling without performance degradation.

Partitioning and Sharding: Enables distribution of large datasets across multiple servers for improved query performance.

Scale-Out Architecture: SAP HANA can operate in distributed clusters to handle higher workloads.
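The idea behind partitioning can be sketched in a few lines: a stable hash of a key decides which server (partition) stores each row. This is a generic hash-partitioning illustration in Python, not SAP HANA syntax or its internal algorithm; the function names and sample rows are hypothetical.

```python
import zlib
from collections import defaultdict


def partition_for(key, num_partitions):
    """Map a key to a partition index using a hash that is stable across runs."""
    # crc32 is deterministic, unlike Python's built-in hash() for strings.
    return zlib.crc32(str(key).encode("utf-8")) % num_partitions


def distribute(rows, key_field, num_partitions):
    """Group rows by the partition their key hashes to."""
    partitions = defaultdict(list)
    for row in rows:
        partitions[partition_for(row[key_field], num_partitions)].append(row)
    return dict(partitions)


orders = [{"order_id": i, "customer": f"C{i % 7}"} for i in range(20)]
for index, rows in sorted(distribute(orders, "customer", 4).items()):
    print(index, len(rows))
```

Because rows with the same key always land on the same partition, a query filtered on that key only needs to touch one server.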

6. Application Scalability

Cloud Foundry Environment: Offers seamless scaling of applications, with features like dynamic memory allocation and containerized deployments.

Kyma Runtime: Supports Kubernetes-based scaling for containerized applications and extensions.

Custom Application Scaling: Developers can define specific scaling policies and thresholds for their applications.

7. Integration Scalability

SAP Integration Suite: Allows businesses to connect and scale integrations across diverse landscapes, including SAP and non-SAP systems, as their ecosystem grows.

APIs and Event-Driven Architecture: Applications can scale by leveraging SAP BTP’s API management and event-driven services.

8. High Availability and Disaster Recovery

Redundancy: Ensures critical applications remain operational during scaling or infrastructure failures.

Disaster Recovery Solutions: SCP supports replication and backup strategies to scale data recovery processes effectively.

9. Developer Tools for Custom Scaling

SAP Business Application Studio and SAP Web IDE: Provide tools to build applications with built-in scalability features.

CI/CD Pipelines: Automate deployment processes to scale applications rapidly and consistently.

Benefits of Scalability in SAP Cloud Platform

Cost Efficiency: Scale resources on-demand, reducing unnecessary expenses.

Performance Optimization: Handle peak loads and ensure seamless user experiences.

Future-Ready: Accommodates organizational growth and evolving business needs.

SAP Cloud Platform’s extensive scalability options make it a robust choice for businesses looking to grow and adapt to changing market demands while ensuring performance and cost efficiency.

0 notes

Text

Real-Life Scenarios Every Azure Admin Should Know for AZ-104

The Microsoft Azure AZ-104 certification is a pivotal milestone for any aspiring Azure administrator. It validates your ability to manage Azure subscriptions, secure identities, and monitor cloud resources effectively. However, excelling in this certification—and in your role as an Azure administrator—requires more than theoretical knowledge. Real-life scenarios play a critical role in preparing for the AZ-104 and ensuring you’re equipped for the challenges of cloud administration.

In this blog, we will explore practical scenarios every Azure admin should master, providing insights that go beyond the exam and into day-to-day responsibilities.

1. Managing Azure Subscriptions and Resources

One of the foundational responsibilities of an Azure administrator is managing subscriptions and resources effectively. Real-life scenarios often involve the following challenges:

Scenario: Subscription Cost Optimization

Imagine you’re tasked with reducing costs for a company’s Azure subscription. Through Azure Cost Management + Billing, you analyze spending patterns and discover that unused virtual machines (VMs) and over-provisioned resources are driving up costs.

Solution:

Identify and deallocate unused VMs.

Implement Azure Reserved Instances for predictable workloads.

Use Azure Advisor to receive personalized recommendations for cost optimization.

Takeaway: Cost efficiency is critical for Azure administration. Always monitor usage and implement cost-saving measures proactively.
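A minimal sketch of the first step, assuming CPU samples per VM have already been exported to a plain dictionary. The VM names, sample values, and the 5% threshold are invented for illustration and do not come from Azure Cost Management or Azure Advisor.

```python
from statistics import mean


def find_idle_vms(cpu_samples, threshold=5.0):
    """Return names of VMs whose average CPU stays below the threshold."""
    return sorted(
        name
        for name, samples in cpu_samples.items()
        if samples and mean(samples) < threshold
    )


# Hypothetical exported metrics: VM name -> CPU percentage samples.
usage = {
    "vm-web-01": [35.0, 42.0, 51.0],
    "vm-test-07": [1.0, 2.0, 3.0],
    "vm-batch-02": [0.5, 0.8, 0.4],
}
print(find_idle_vms(usage))
```

VMs flagged this way are candidates for review, not automatic deallocation; a machine may be idle by design.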

2. Implementing and Managing Storage Accounts

Azure storage is essential for data retention and application functionality. As an admin, you’ll often deal with scenarios requiring robust storage management.

Scenario: Resolving Storage Access Issues

A development team reports they’re unable to access data stored in Azure Blob Storage. Upon investigation, you find that storage access policies are misconfigured.

Solution:

Validate that the correct Shared Access Signature (SAS) tokens or access keys are in use.

Ensure that firewall and virtual network rules allow access from the necessary IP ranges or subnets.

Check for permissions issues in Azure Role-Based Access Control (RBAC).

Takeaway: Properly configuring access policies and understanding Azure’s security model for storage are essential to avoid disruptions.
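When validating a SAS token, two fields in the query string are worth checking first: `se` (expiry time) and `sp` (permissions). The sketch below parses them with the Python standard library only. The URL, account name, and signature are placeholders, and the helper `inspect_sas` is a hypothetical name rather than part of any Azure SDK.

```python
from datetime import datetime, timezone
from urllib.parse import urlparse, parse_qs


def inspect_sas(url, now):
    """Summarize the expiry and permissions carried in a SAS URL's query string."""
    query = parse_qs(urlparse(url).query)
    permissions = query.get("sp", [""])[0]   # signed permissions, e.g. "r" or "rw"
    expiry_raw = query.get("se", [None])[0]  # signed expiry, ISO 8601 in UTC

    expired = None  # None means no expiry field was present
    if expiry_raw:
        expiry = datetime.fromisoformat(expiry_raw.replace("Z", "+00:00"))
        if expiry.tzinfo is None:
            expiry = expiry.replace(tzinfo=timezone.utc)
        expired = now >= expiry
    return {
        "permissions": permissions,
        "expires": expiry_raw,
        "expired": expired,
        "can_read": "r" in permissions,
    }


# Placeholder URL for illustration only.
sas_url = ("https://example.blob.core.windows.net/data/report.csv"
           "?sv=2022-11-02&sp=r&se=2024-01-01T00:00:00Z&sig=REDACTED")
print(inspect_sas(sas_url, datetime.now(timezone.utc)))
```

An expired or read-only token explains many "access denied" reports before firewall or RBAC settings need to be examined.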

3. Configuring Virtual Networks (VNets) and Connectivity

Networking is a core Azure component. You’ll often be required to configure virtual networks and establish secure connectivity.

Scenario: Connecting On-Premises to Azure via VPN

Your organization wants to connect its on-premises network to Azure for hybrid cloud scenarios. The challenge is to set up a secure site-to-site VPN.

Solution:

Configure a Virtual Network Gateway in Azure.

Use a compatible VPN device on-premises, and configure it to establish the connection.

Test connectivity and ensure data flows securely between both networks.

Takeaway: Azure administrators must be proficient in designing and troubleshooting network connectivity to facilitate hybrid cloud setups.
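One common cause of site-to-site VPN trouble is overlapping address space between the on-premises network and the Azure virtual network. The check below uses Python's standard `ipaddress` module; the CIDR ranges are example values, and `find_overlaps` is a hypothetical helper name.

```python
import ipaddress


def find_overlaps(on_prem_ranges, azure_ranges):
    """Return every (on-prem, Azure) pair of CIDR blocks that overlap."""
    clashes = []
    for local in on_prem_ranges:
        for remote in azure_ranges:
            if ipaddress.ip_network(local).overlaps(ipaddress.ip_network(remote)):
                clashes.append((local, remote))
    return clashes


print(find_overlaps(["10.0.0.0/16"], ["10.0.1.0/24", "10.1.0.0/16"]))
```

Running a check like this before creating the gateway is cheaper than re-addressing a network afterwards.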

4. Managing Identities and Governance

Azure Active Directory (Azure AD) is at the heart of identity and access management in Azure. Missteps here can lead to security breaches or operational inefficiencies.

Scenario: Managing Conditional Access Policies

Your organization wants to enforce multi-factor authentication (MFA) for all users accessing Azure resources remotely.

Solution:

Create Conditional Access policies in Azure AD.

Specify conditions, such as the location being outside the corporate network.

Require MFA for these scenarios, ensuring secure remote access.

Takeaway: A well-implemented identity strategy ensures secure and efficient access to resources.
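The decision logic behind such a policy can be sketched as a simple rule: require MFA when the sign-in comes from outside the corporate address ranges. This is only a conceptual model in Python; it is not how Conditional Access is configured or evaluated, and the network range below is a documentation-only example address block.

```python
import ipaddress

# Hypothetical corporate egress range (a reserved documentation block).
CORPORATE_RANGES = [ipaddress.ip_network("203.0.113.0/24")]


def requires_mfa(client_ip):
    """Return True when the sign-in originates outside the corporate ranges."""
    address = ipaddress.ip_address(client_ip)
    return not any(address in network for network in CORPORATE_RANGES)


print(requires_mfa("203.0.113.10"))   # inside the corporate range
print(requires_mfa("198.51.100.7"))   # outside the corporate range
```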

5. Deploying and Managing Azure Compute Resources

Azure VMs, containers, and App Services are core compute options that you’ll manage daily.

Scenario: Autoscaling an Application

A web application experiences performance issues due to sudden spikes in traffic. You’re required to implement a solution that ensures high availability without manual intervention.

Solution:

Use an App Service Plan with Autoscale enabled.

Define scaling rules based on metrics like CPU usage or HTTP request counts.

Monitor the application to ensure scaling works as expected.

Takeaway: Autoscaling is vital for maintaining application performance during traffic fluctuations.
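Scaling rules of this kind usually pair a scale-out threshold with a lower scale-in threshold. The sketch below models one evaluation of such a rule pair; the thresholds, step size, and instance limits are illustrative assumptions, not Azure defaults.

```python
def apply_scaling_rules(instances, avg_cpu, scale_out_above=70.0,
                        scale_in_below=30.0, minimum=2, maximum=10):
    """Apply one evaluation of a scale-out / scale-in rule pair."""
    if avg_cpu > scale_out_above:
        return min(maximum, instances + 1)   # add one instance, up to the cap
    if avg_cpu < scale_in_below:
        return max(minimum, instances - 1)   # remove one, never below the floor
    return instances                         # inside the comfortable band


count = 2
for cpu in [82.0, 91.0, 55.0, 20.0]:
    count = apply_scaling_rules(count, cpu)
    print(cpu, count)
```

Keeping a gap between the two thresholds prevents the instance count from flapping when load hovers near a single cutoff.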

6. Monitoring and Maintaining Azure Resources

Effective monitoring is crucial for identifying issues before they escalate. Azure Monitor and Log Analytics provide deep insights into resource performance.

Scenario: Diagnosing High Resource Utilization

Your team notices degraded application performance. Azure Monitor indicates high CPU usage on a VM hosting critical services.

Solution:

Use Azure Monitor to identify processes consuming excessive CPU.

Scale the VM to a higher SKU or optimize the application workload.

Set alerts to notify the team of high resource usage in the future.

Takeaway: Proactive monitoring ensures resource issues are addressed before they impact business operations.
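An alert that fires on a single high reading tends to be noisy, so alert rules typically require the condition to hold for several consecutive samples. The following sketch shows that logic in plain Python; the 85% threshold and the three-sample window are assumed values, not Azure Monitor settings.

```python
def should_alert(samples, threshold=85.0, consecutive=3):
    """Return True if the metric exceeds the threshold for N samples in a row."""
    run = 0
    for value in samples:
        run = run + 1 if value > threshold else 0
        if run >= consecutive:
            return True
    return False


print(should_alert([50.0, 90.0, 91.0, 92.0]))        # sustained high CPU
print(should_alert([90.0, 50.0, 90.0, 50.0, 90.0]))  # brief spikes only
```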

7. Implementing Security Solutions

Security is a top priority in any cloud environment, and Azure administrators play a key role in safeguarding resources.

Scenario: Responding to a Security Threat

A security alert from Azure Security Center (now Microsoft Defender for Cloud) indicates suspicious login attempts from an unknown IP address.

Solution:

Use Azure AD Sign-In Logs to investigate the activity.

Block the IP address using Azure Firewall or NSGs.

Implement Azure AD Identity Protection to automatically detect and respond to threats.

Takeaway: Familiarity with Azure’s security tools helps you respond swiftly to potential threats.
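Once sign-in records have been exported, the investigation step often starts with counting failed attempts per source IP. The sketch below assumes a simplified record shape with `ip` and `success` fields; this is a hypothetical structure for illustration, not the actual Azure AD sign-in log schema.

```python
from collections import Counter


def suspicious_ips(events, max_failures=5):
    """Return IPs whose failed sign-in count reaches the given limit."""
    failures = Counter(event["ip"] for event in events if not event["success"])
    return sorted(ip for ip, count in failures.items() if count >= max_failures)


# Hypothetical exported sign-in events.
events = (
    [{"ip": "198.51.100.23", "success": False}] * 6
    + [{"ip": "203.0.113.5", "success": True}] * 10
    + [{"ip": "203.0.113.9", "success": False}] * 2
)
print(suspicious_ips(events))
```

The resulting list can then feed a block rule, after confirming the addresses do not belong to legitimate users behind a shared gateway.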

Conclusion

Mastering real-life scenarios is critical for Azure administrators pursuing the AZ-104 certification. From managing costs and resources to ensuring secure access and responding to performance issues, these scenarios prepare you to handle the challenges of real-world Azure administration.

As you prepare for AZ-104, focus not just on the exam objectives but also on gaining hands-on experience with these scenarios. Doing so will not only help you ace the certification but also excel as a skilled Azure administrator in any professional environment.

#microsoft azure#online courses#online certification#online learning#azure#e learning#programming#microsoft#careers

0 notes

Text

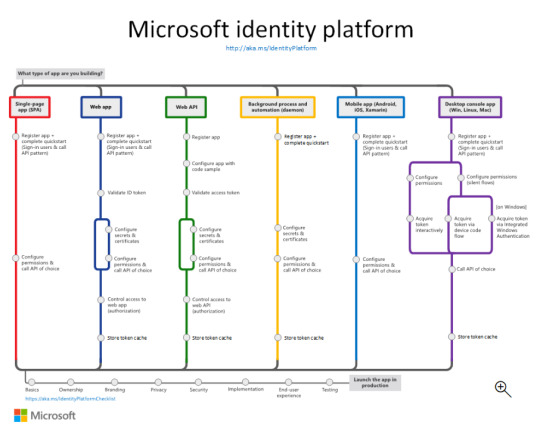

Exploring the Power of Microsoft Identity Platform

Join us on a journey to understand how Microsoft Identity Platform revolutionizes user access, enhancing both security and user experience.

What is the Microsoft identity platform?

The Microsoft identity platform is a cloud identity service that allows you to build applications your users and customers can sign in to using their Microsoft identities or social accounts. It authorizes access to your own APIs or Microsoft APIs like Microsoft Graph.

Several components make up the platform. The first is an OAuth 2.0 and OpenID Connect standard-compliant authentication service that enables developers to authenticate several identity types, including:

Work or school accounts, provisioned through Microsoft Entra ID

Personal Microsoft accounts (Skype, Xbox, Outlook.com)

Social or local accounts, by using Azure AD B2C

Social or local customer accounts, by using Microsoft Entra External ID

Open-source libraries:

Microsoft Authentication Library (MSAL) and support for other standards-compliant libraries. The open source MSAL libraries are recommended as they provide built-in support for conditional access scenarios, single sign-on (SSO) experiences for your users, built-in token caching support, and more. MSAL supports the different authorization grants and token flows used in different application types and scenarios.

Microsoft identity platform endpoint:

The Microsoft identity platform endpoint is OIDC certified. It works with the Microsoft Authentication Libraries (MSAL) or any other standards-compliant library. It implements human readable scopes, in accordance with industry standards.
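To make the human-readable scopes concrete, here is a small sketch that builds an OAuth 2.0 authorization-code request URL for the v2.0 authorize endpoint using only the Python standard library. The client ID, redirect URI, and state value are placeholders, and in a real application MSAL would normally build this request for you.

```python
from urllib.parse import urlencode, urlparse, parse_qs


def build_authorize_url(tenant, client_id, redirect_uri, scopes, state):
    """Build an authorization-code request URL for the v2.0 endpoint."""
    base = f"https://login.microsoftonline.com/{tenant}/oauth2/v2.0/authorize"
    params = {
        "client_id": client_id,
        "response_type": "code",
        "redirect_uri": redirect_uri,
        "response_mode": "query",
        "scope": " ".join(scopes),  # scopes are space-separated, readable names
        "state": state,             # opaque value echoed back to the app
    }
    return f"{base}?{urlencode(params)}"


url = build_authorize_url(
    tenant="common",
    client_id="00000000-0000-0000-0000-000000000000",  # placeholder
    redirect_uri="http://localhost:8000/callback",      # placeholder
    scopes=["openid", "profile", "User.Read"],
    state="abc123",
)
print(url)
print(parse_qs(urlparse(url).query)["scope"])
```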

Application management portal:

A registration and configuration experience in the Microsoft Entra admin center, along with the other application management capabilities.

Application configuration API and PowerShell:

Programmatic configuration of your applications through the Microsoft Graph API and PowerShell so you can automate your DevOps tasks.

Developer content:

Technical documentation including quickstarts, tutorials, how-to guides, API reference, and code samples.

For developers, the Microsoft identity platform offers integration of modern innovations in the identity and security space like passwordless authentication, step-up authentication, and Conditional Access. You don't need to implement such functionality yourself. Applications integrated with the Microsoft identity platform natively take advantage of such innovations.

With the Microsoft identity platform, you can write code once and reach any user. You can build an app once and have it work across many platforms, or build an app that functions as both a client and a resource application (API).

More identity and access management options

Azure AD B2C - Build customer-facing applications your users can sign in to using their social accounts like Facebook or Google, or by using an email address and password.

Microsoft Entra B2B - Invite external users into your Microsoft Entra tenant as "guest" users, and assign permissions for authorization while they use their existing credentials for authentication.

Microsoft Entra External ID - A customer identity and access management (CIAM) solution that lets you create secure, customized sign-in experiences for your customer-facing apps and services.

0 notes

Text

10 AI ML In Cloud Computing Trends To Look Out For In 2024

Brands like Google Cloud, AWS, Azure, or IBM Cloud need no introduction today. Yes, they all belong to the cloud computing domain of which we are highlighting the latest trends and insights.

What Is Cloud Computing?

Cloud computing refers to the practice of providing users with access to shared, on-demand computing resources such as servers, data storage, databases, software, and networking over a public network, most often the Internet.

With cloud computing, businesses can access and store data without worrying about their hardware or IT infrastructure. It becomes increasingly challenging for firms to run their operations on in-house computing servers due to the ever-increasing amounts of data being created and exchanged, as well as the increasing demand from customers for online services.

The concept of “the cloud” is based on the idea that any location with an internet connection may access and control a company’s resources and applications, much like checking an email inbox online. The ability to quickly scale computation and storage without incurring upfront infrastructure expenditures or adding additional systems and applications is a major benefit of cloud services, which are usually handled and maintained by a third-party provider.

Types of Cloud Computing

Platform as a Service (PaaS)

Infrastructure as a Service (IaaS)

Software as a Service (SaaS)

Everything as a Service (XaaS)

Function as a Service (FaaS)

Let’s Know Some Numbers

The global cloud computing market is expected to witness a compound annual growth rate of 14.1% from 2023 to 2030 to reach USD 1,554.94 billion by 2030.

58.7% of IT spending is still traditional but cloud-based spending will soon outpace it (Source: Gartner)

Cloud adoption among enterprise organizations is over 94% (Source: RightScale)

Over half of enterprises are struggling to see cloud ROI (Source: PwC)

Over 50% of SMEs technology budget will go to cloud spend in 2023 (Source: Zesty)

54% of small and medium-sized businesses spend more than $1.2 million on the cloud (Source: RightScale)

42% of CIOs and CTOs consider cloud waste the top challenge (Source: Zesty)

Leveraging AI and ML for advanced security measures: As cyber threats evolve and become more dangerous and complex, intelligent security measures are imperative to counter them. For example, AI-driven anomaly detection can identify unusual patterns in network behavior, thwarting potential breaches. At the same time, ML algorithms are adept at recognizing patterns, enhancing threat prediction models, and fortifying defenses against emerging risks. And with AI and ML models continuously being trained on new data, their responses and accuracy will only improve as we head into 2024.

Continued improvement of cloud automation: As AI and ML become more advanced, more processes can be automated and resources can be managed more intelligently. By providing increasingly precise insights, AI and ML can improve processes such as predictive scaling, resource provisioning, and intelligent load balancing.

Low Cost

Secure

Agility

High availability and reliability

High Scalability

Multi-Sharing

Device and Location Independence

Maintenance

Services in pay-per-use mode

High Speed

Global Scale

Productivity

Performance

Reliability

Easy Maintenance

On-Demand Service

Large Network Access

Automatic System

Advantages of Cloud Computing

Provides data backup and recovery

Cost-effective due to the pay-per-use model

Provides data security

Unlimited storage without any infrastructure

Easily accessible

High flexibility and scalability

10 AI ML In Cloud Computing Trends To Look Out For In 2024

Artificial Intelligence (AI) and Machine Learning (ML) are playing a significant role in shaping the future of cloud computing.

AI-Optimized Cloud Services: Cloud providers will offer specialized AI-optimized infrastructure, making it easier for businesses to deploy and scale AI and ML workloads. The intersection of cloud computing with AI and ML is one of the most exciting areas in technology right now. Because AI and ML need large amounts of storage and processing power for data collection and training, running them in the cloud is more economical than building that capacity in-house. High data security, privacy, tailored clouds, self-automation, and self-learning are some of the major themes that will continue to flourish in this industry in the coming years. Many cloud service providers are investing in AI and ML, including Amazon, Google, and IBM. Examples of their machine learning products include Amazon’s AWS DeepLens camera and Google Lens.

AI for Security: AI and ML will play a critical role in enhancing cloud security by detecting and responding to threats in real time, with features like anomaly detection and behavior analysis. No company or group wants to take chances with its data’s safety. It is important to reduce the likelihood of data breaches, accidental deletion, and unauthorized changes, and measures can be adopted to strengthen data security and keep losses to a minimum. Encryption and authentication are essential for reducing the likelihood of data breaches. Backing up data, checking privacy regulations, and using data recovery methods can all help lessen the likelihood of data loss. Comprehensive security testing helps identify vulnerabilities so that fixes can be implemented. Both the storage and the transport of data should be handled with the utmost care. Cloud service providers employ numerous security procedures and data encryption techniques to safeguard data.
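Anomaly detection can be illustrated with a very simple statistical baseline: flag any reading that sits more than a few standard deviations from the mean. Production systems use far richer models; this z-score sketch, with its made-up traffic numbers and threshold of 3, only shows the basic idea.

```python
from statistics import mean, pstdev


def find_anomalies(values, z_threshold=3.0):
    """Return indexes of values whose z-score exceeds the threshold."""
    mu = mean(values)
    sigma = pstdev(values)
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > z_threshold]


# Hypothetical requests-per-minute series with one burst at the end.
traffic = [100] * 20 + [1000]
print(find_anomalies(traffic))
```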

Serverless AI: The integration of AI with serverless computing will enable efficient, event-driven AI and ML applications in the cloud, reducing infrastructure management overhead. Serverless computing provides backend services on a per-use basis. Developers don’t need to manage servers while coding; the cloud provider executes the code. Instead of paying for a fixed server, cloud customers pay as they go, and the provider owns and operates the hardware. This lowers infrastructure expenses and improves scalability, because capacity scales automatically as needed. Serverless architecture has several benefits, including no system administration, reduced cost and responsibility, easier operations management, and an improved user experience.

Hybrid and Multi-Cloud AI: AI will help manage and orchestrate AI workloads across hybrid and multi-cloud environments, ensuring seamless integration and resource allocation. Companies are increasingly using the strengths of each cloud provider by spreading their workload over several providers, allowing them more control over their data and resources. With multi-cloud, you may save money while reducing risks and failure points. Instead of deploying your complete application to a single cloud, multi-cloud allows you to select a specific service from many providers until you find one that suits your needs. As a result, cloud service providers will be even more motivated to include new services.

Virtual desktops will become widespread: Virtual desktop infrastructure (VDI) streams desktop images remotely, so the desktop does not run on the client device itself. VDI helps remote workers be productive by delivering apps and services to distant clients without extensive installation or configuration. VDI will become more popular for non-tech use cases while working from home remains the standard in some regions. It lets companies scale workstations up or down at little cost, which is why Microsoft is developing a Cloud PC solution, an accessible VDI experience for corporate users.

AI for Data Management: AI will assist in data categorization, tagging, and data lifecycle management in the cloud, making data more accessible and usable. Processing vast amounts of data on GPUs, which can massively parallelize computation, will be a major advance. This trend is well under way and is expected to expand in the coming years. Data computation, storage, and consumption, as well as future business system development, are all affected by this transition. It will also require new computer architectures. As data grows, it will be dispersed among numerous data center servers running both old and new computing models. For highly parallel workloads that span many nodes, the traditional CPU alone will increasingly fall short.

Cost Optimization in the Cloud: With the exponential growth of cloud users, cost management has emerged as a top priority for companies. Consequently, cloud service providers are putting resources into creating new services and solutions to assist their clients in cost management. Instance sizing suggestions, reserved instance options, and cost monitoring and budgeting tools are all part of cost management tools that customers may utilize to optimize expenditure.

Automated Cloud Management: AI-driven automation will streamline cloud management tasks, such as provisioning, scaling, and monitoring, reducing manual intervention. Automation is the cloud’s secret ingredient. When implemented correctly, it can boost the productivity of your delivery team, enhance the reliability of your networks and systems, and lessen the likelihood of slowdowns or outages. Automating processes is not easy, but as more money goes into AI and citizen developer tools, more tooling will become available to make automation easier for cloud companies.

AI-powered DevOps: AI and ML will optimize DevOps processes in the cloud, automating code testing, deployment, and infrastructure provisioning. Cloud computing helps clients manage their data, but users can still face security challenges such as network intrusion, denial-of-service (DoS) attacks, virtualization issues, and unauthorized data use. DevSecOps practices can reduce these risks.

Citizen Developer Introduction: One of the earliest developments in cloud computing is the rise of the citizen developer. With the citizen developer idea, even non-coders can tap into the potential of interconnected systems. If This Then That (IFTTT) and similar tools made it possible for regular people (those of us who didn’t spend four years obtaining a degree in computer science) to link popular APIs and build individualized automation. By the end of 2024, many firms, including Microsoft, AWS, and Google, will have released tools that simplify the process of creating sophisticated programs using a drag-and-drop interface. Among these platforms, Microsoft’s Power Platform, which includes Power Automate, AI Builder, Power BI, and Power Apps, is perhaps the most prominent. Combining the four lets you create sophisticated mobile and web apps that communicate with other technologies your company uses. Additionally, with the release of Honeycode, AWS is showing no signs of stopping either.

Conclusion

These trends represent the ongoing evolution of AI and ML in the cloud, with a focus on improving efficiency, security, and the management of cloud resources. Staying informed about these developments will be crucial for businesses to leverage the power of AI and ML in their cloud computing strategies in 2024 and beyond.

0 notes

Text

The Best Azure Synapse Analytics Online Training | Hyderabad

Components of Azure Synapse Analytics | 2024

Introduction:

Azure Synapse Analytics is an integrated analytics service offered by Microsoft Azure, designed to bring together data integration, enterprise data warehousing, and big data analytics. It is a comprehensive platform that allows organizations to ingest, prepare, manage, and serve data for immediate business intelligence and machine learning needs. The main components of Azure Synapse Analytics include Synapse SQL, Synapse Spark, Synapse Data Integration, and Synapse Studio. These components work together to provide a seamless and unified experience for data professionals. Let's explore each of these components in detail.

1. Synapse SQL

Synapse SQL is the core data warehousing component of Azure Synapse Analytics. It provides both provisioned (dedicated) and on-demand (serverless) resources for SQL-based analytics. The provisioned resources, known as dedicated SQL pools, offer predictable performance by allocating fixed resources for data storage and processing. This model is ideal for large-scale, steady workloads where performance needs to be predictable.

On the other hand, the serverless SQL pool allows users to query data without the need to pre-provision resources. This on-demand capability is particularly useful for exploratory queries, ad-hoc data analysis, and situations where data workloads are sporadic. Serverless SQL pools can query data stored in Azure Data Lake Storage and other sources, providing flexibility and cost efficiency by charging only for the data processed.

2. Synapse Spark

Synapse Spark integrates Apache Spark, a popular open-source big data processing framework, into the Synapse environment. It enables large-scale data processing and analytics, making it ideal for data engineering, machine learning, and data exploration. Synapse Spark provides a managed Spark environment, meaning it handles the underlying infrastructure, allowing users to focus on writing code and analysing data.

This component supports multiple languages, including Python, Scala, and SQL, making it accessible to a wide range of data professionals. Synapse Spark can be used for batch processing, real-time analytics, and complex data transformations. It also seamlessly integrates with other Azure services, such as Azure Data Lake Storage and Azure Machine Learning, enhancing its capabilities for end-to-end data workflows.

3. Synapse Data Integration

Synapse Data Integration encompasses data movement and transformation capabilities within Azure Synapse Analytics. This is primarily achieved through Synapse Pipelines, which are similar to Azure Data Factory pipelines. Synapse Pipelines provide a visual interface for creating data workflows, allowing users to orchestrate data movement and transformation across various data sources and destinations.

Data integration within Synapse is crucial for building ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) processes. These pipelines can connect to a wide variety of data sources, including on-premises databases, cloud-based data services, and SaaS applications. They support both batch and real-time data integration scenarios, making them versatile for different use cases.

Additionally, Synapse Data Integration supports data flows, which are visual, code-free data transformation tools. Data flows allow users to design data transformations without writing code, making it easier for business analysts and data engineers to prepare data for analysis.
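The ETL pattern itself is easy to show in miniature. The sketch below runs extract, transform, and load as three plain Python functions over in-memory data. It illustrates the ordering of the stages only; Synapse Pipelines are built visually or as pipeline definitions rather than written like this, and the sample records are invented.

```python
def extract():
    """Pretend source system: returns raw rows with amounts as strings."""
    return [
        {"id": 1, "amount": "10.50"},
        {"id": 2, "amount": "n/a"},   # bad value that must be filtered out
        {"id": 3, "amount": "7"},
    ]


def transform(rows):
    """Convert amounts to numbers and drop rows that cannot be parsed."""
    cleaned = []
    for row in rows:
        try:
            cleaned.append({"id": row["id"], "amount": float(row["amount"])})
        except ValueError:
            continue
    return cleaned


def load(rows, target):
    """Append rows to the target store and report how many were written."""
    target.extend(rows)
    return len(rows)


def run_etl(target):
    # ETL order: transform happens before the data reaches the target.
    return load(transform(extract()), target)


warehouse = []
print(run_etl(warehouse), warehouse)
```

In an ELT variant the raw rows would be loaded first and the cleanup would run inside the target system afterwards.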

4. Synapse Studio

Synapse Studio is the unified development environment for Azure Synapse Analytics. It provides a web-based interface that brings together the functionalities of all the Synapse components. Synapse Studio offers an integrated workspace where data professionals can perform data exploration, data engineering, data integration, and data visualization tasks.

In Synapse Studio, users can create and manage SQL scripts, Spark notebooks, data pipelines, and more. It also provides built-in monitoring and management tools to track resource usage, job statuses, and data flow operations. The collaborative features of Synapse Studio allow multiple users to work together, making it easier to share insights and data assets within an organization.

Integration and Security

One of the strengths of Azure Synapse Analytics is its ability to integrate with other Azure services and external data sources. It natively supports Azure Data Lake Storage, Azure Blob Storage, Azure SQL Database, and various other Azure services. This integration extends to popular business intelligence tools like Power BI, allowing for seamless data visualization and reporting.

Azure Synapse Analytics also places a strong emphasis on security and compliance. It supports data encryption at rest and in transit, as well as advanced security features like role-based access control, virtual network support, and managed private endpoints. Compliance certifications ensure that data handling meets industry standards and regulations.

Conclusion

Azure Synapse Analytics provides a comprehensive, integrated platform for data analytics. Its main components—Synapse SQL, Synapse Spark, Synapse Data Integration, and Synapse Studio—offer a wide range of capabilities, from data warehousing and big data processing to data integration and visualization. This integration enables organizations to unlock insights from their data efficiently and securely. Whether for data engineers, data scientists, or business analysts, Azure Synapse Analytics offers the tools and features needed to drive data-driven decision-making.

Visualpath is the Best Software Online Training Institute in Hyderabad. Avail complete Azure Synapse Analytics worldwide. You will get the best course at an affordable cost.

Attend Free Demo

Call on - +91-9989971070.

WhatsApp: https://www.whatsapp.com/catalog/917032290546/

Visit https://visualpathblogs.com/

Visit: https://visualpath.in/azure-synapse-analytics-online-training.html

#AzureSynapseAnalyticsTraining #AzureSynapseAnalyticsCoursesOnline #AzureSynapseAnalyticsonlineTraininginHyderabad #AzureSynapseTraininginHyderabad #AzureSynapseAnalyticsOnlineTraining #AzureSynapseAnalyticsTraininginHyderabad #AzureSynapseAnalyticsTraininginAmeerpet #AzureSynapseOnlineTrainingCourseHyderabad #AzureSynapseTraining


SAP SUCCESSFACTORS ACTIVE DIRECTORY INTEGRATION

SAP SuccessFactors and Active Directory: Streamlining User Management

In today’s enterprise landscape, managing user identities often involves multiple systems. This can lead to inconsistencies, administrative overhead, and potential security risks. Integrating SAP SuccessFactors, a leading cloud-based Human Capital Management (HCM) suite, with Microsoft Active Directory (AD) addresses these challenges, offering a centralized approach to managing user accounts.

Why Integrate SAP SuccessFactors with Active Directory?

Enhanced Security: A single source of truth (AD) for user data promotes security, allowing administrators to enforce consistent password policies and access controls across systems.

Simplified User Lifecycle Management: Automating user creation, updates, and deactivation between AD and SuccessFactors reduces manual effort and potential errors.

Improved User Experience: Single sign-on (SSO) lets users access SuccessFactors and other AD-linked applications with a single set of credentials.

Reduced IT Workload: Streamlining user management processes frees IT resources to focus on strategic initiatives.

Methods for SAP SuccessFactors – Active Directory Integration

There are several ways to achieve this integration, each with its nuances:

Microsoft Azure AD Connect: This tool synchronizes user accounts from on-premises AD to Azure Active Directory. If your SuccessFactors instance utilizes Azure AD for authentication, this provides a seamless bridge for user management.

SAP Cloud Integration (CPI): CPI is a powerful middleware platform from SAP that facilitates communication between SuccessFactors and various systems. CPI allows custom integrations for scenarios requiring advanced data mapping or transformations to connect with AD.

Third-Party Provisioning Solutions: Several specialized tools offer pre-built connectors and workflows for SuccessFactors and AD integration. These solutions can simplify the process and provide additional features, such as granular access control.

Key Considerations Before You Begin

Authentication Strategy: Align your integration method with your SuccessFactors authentication strategy (e.g., Azure AD, on-premises identity provider).

Data Mapping: Plan the alignment of user attributes between SuccessFactors and Active Directory.

Synchronization Scope: Determine which user groups need to be synchronized – whether all users or specific subsets.

Security and Compliance: Implement safeguards for data in transit and user access permissions, especially when dealing with sensitive employee data.

Steps for SAP SuccessFactors – Active Directory Integration

The specific steps will depend on your chosen integration method. Here’s a high-level overview:

Prepare Active Directory: Ensure your AD environment is well-structured and relevant user attributes are populated.

Configure SAP SuccessFactors: Enable necessary API permissions and prepare SuccessFactors for integration.

Set up Integration Tool: Follow the platform-specific guidelines to configure the integration method (Azure AD Connect, CPI, or a third-party tool).

Implement Synchronization: Define synchronization rules, including data mapping, schedules, and error handling.

Test Thoroughly: Conduct rigorous testing in a staging environment to verify seamless user provisioning and system updates.

Monitor and Maintain: Regularly monitor the integration and address any issues promptly to ensure smooth operation.
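The synchronization step above can be sketched as a comparison between HR records and directory accounts. This is a minimal illustration only, not how Azure AD Connect, CPI, or any third-party tool works internally. The `Employee` type, the `plan_sync` function, and the action names are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Employee:
    """Hypothetical HR record; the field names are assumptions for this sketch."""
    employee_id: str
    email: str
    active: bool


def plan_sync(hr_records, directory_accounts):
    """Return a list of (action, employee_id) tuples.

    hr_records: list of Employee objects from the HR system.
    directory_accounts: dict mapping employee_id -> email for enabled
    directory accounts.
    """
    actions = []
    seen = set()
    for emp in hr_records:
        seen.add(emp.employee_id)
        existing = directory_accounts.get(emp.employee_id)
        if emp.active and existing is None:
            actions.append(("create", emp.employee_id))
        elif emp.active and existing != emp.email:
            actions.append(("update", emp.employee_id))
        elif not emp.active and existing is not None:
            actions.append(("disable", emp.employee_id))
    # Accounts with no HR record are flagged for review rather than
    # disabled automatically.
    for account_id in sorted(directory_accounts.keys() - seen):
        actions.append(("review", account_id))
    return actions


hr = [
    Employee("1001", "a@example.com", True),
    Employee("1002", "b@example.com", True),
    Employee("1003", "c@example.com", False),
    Employee("1004", "d@example.com", True),
]
directory = {
    "1002": "old@example.com",
    "1003": "c@example.com",
    "1004": "d@example.com",
    "9999": "z@example.com",
}
plan = plan_sync(hr, directory)
print(plan)
```

Producing a plan before applying any change makes it easy to review the planned actions in a staging environment, which fits the testing step above.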

Beyond the Basics

Successful integration is only the start. Consider advanced scenarios like:

Bi-directional Synchronization: Implement write-back so that changes made in AD (for example, a newly assigned email address) are reflected in SuccessFactors, keeping both systems consistent.

Role-Based Access Control (RBAC): Align AD groups with SuccessFactors roles to streamline permission assignment and management.
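As a rough illustration of aligning AD groups with SuccessFactors roles, the mapping can be kept in a simple lookup table. The group names, role names, and the `roles_for_user` helper below are made up for this sketch; real role names come from your own SuccessFactors configuration.

```python
# Hypothetical mapping from AD group names to SuccessFactors role names.
GROUP_TO_ROLE = {
    "HR-Admins": "HR Administrator",
    "People-Managers": "Manager",
    "All-Employees": "Employee Self-Service",
}


def roles_for_user(ad_groups, mapping=GROUP_TO_ROLE):
    """Return the sorted, de-duplicated roles implied by a user's AD groups.

    Groups that have no entry in the mapping are ignored.
    """
    roles = {mapping[group] for group in ad_groups if group in mapping}
    return sorted(roles)


roles = roles_for_user(["All-Employees", "People-Managers", "VPN-Users"])
print(roles)
```

Keeping the mapping in one place means a role change only requires moving the user between AD groups.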

Embrace a Streamlined Approach

SAP SuccessFactors and Active Directory integration delivers a unified user management experience. By carefully selecting an integration approach and meticulously planning the process, your organization will unlock enhanced efficiency, security, and a better user experience.

You can find more information about SAP Successfactors in this SAP Successfactors Link

Conclusion:

Unogeeks is the No.1 IT Training Institute for SAP Training. Anyone Disagree? Please drop in a comment

You can check out our other latest blogs on SAP Successfactors here - SAP Successfactors Blogs

You can check out our Best In Class SAP Successfactors Details here - SAP Successfactors Training

----------------------------------

For Training inquiries:

Call/Whatsapp: +91 73960 33555

Mail us at: [email protected]

Our Website ➜ https://unogeeks.com

Follow us:

Instagram: https://www.instagram.com/unogeeks

Facebook: https://www.facebook.com/UnogeeksSoftwareTrainingInstitute

Twitter: https://twitter.com/unogeeks


SUCCESSFACTORS AZURE AD INTEGRATION

Seamless User Management: Integrating SuccessFactors with Azure Active Directory

Introduction

In today’s cloud-centric world, organizations seek to streamline identity management across multiple platforms. Integrating your Human Capital Management (HCM) system, such as SAP SuccessFactors, with Azure Active Directory offers a centralized and efficient way to manage user identities and access. Let’s explore the benefits and steps involved in this integration.

Benefits of SuccessFactors-Azure AD Integration

Centralized Identity Management: Azure AD becomes your central source of user identities, eliminating separate user directories and simplifying account management.

Enhanced Security: Leverage Azure AD’s robust security features, such as multi-factor authentication (MFA), conditional access policies, and advanced threat protection.

Simplified User Experience: Users enjoy Single Sign-On (SSO) access across SuccessFactors and other Azure AD-connected applications, improving productivity.

Automated User Provisioning: Streamline user onboarding and offboarding by automating account creation, updating, and disabling between SuccessFactors and Azure AD.

Prerequisites

An active SAP SuccessFactors subscription.

An active Azure AD subscription.

Administrative access to both SuccessFactors and Azure AD.

Integration Steps

Create an API User in SuccessFactors:

In the SuccessFactors Admin Center, create a dedicated API user. Assign necessary permissions for user provisioning operations.

Create an API Permissions Role and Group

Create an API permissions role with the “Allow Admin to Access OData API through Basic Authentication” permission.

Create a permission group, add the API user to it, and grant the API permissions role to that group.

Add the Provisioning App in Azure AD

In the Azure Portal, navigate to Enterprise Applications and “Add an application.”

Select “SAP SuccessFactors” from the gallery of pre-configured apps.

Configure Provisioning in Azure AD

Provide your SuccessFactors tenant URL and the credentials of the API user created in the first step.

Establish attribute mappings to determine how SuccessFactors user attributes synchronize with Azure AD attributes.

Enable and customize automatic user provisioning.
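To make the attribute-mapping idea concrete, here is a small sketch of translating a SuccessFactors-style record into Azure AD-style attributes. In practice the mapping is configured in the provisioning app in the Azure Portal, not in code. The field names in `ATTRIBUTE_MAP`, the `map_attributes` function, and the rule for building `userPrincipalName` are assumptions chosen for illustration.

```python
# Illustrative source-to-target attribute names; adjust to your own schema.
ATTRIBUTE_MAP = {
    "personIdExternal": "employeeId",
    "firstName": "givenName",
    "lastName": "surname",
    "email": "mail",
    "department": "department",
}


def map_attributes(sf_record, upn_domain):
    """Translate one source record into target attributes.

    Empty or missing source values are skipped so they do not overwrite
    existing target values with blanks.
    """
    target = {}
    for src, dst in ATTRIBUTE_MAP.items():
        value = str(sf_record.get(src) or "").strip()
        if value:
            target[dst] = value
    # Assumed convention: derive the sign-in name from first and last name.
    if "givenName" in target and "surname" in target:
        upn = f"{target['givenName']}.{target['surname']}@{upn_domain}"
        target["userPrincipalName"] = upn.lower()
    return target


record = {
    "personIdExternal": "1001",
    "firstName": " Asha",
    "lastName": "Rao",
    "email": "asha.rao@example.com",
    "department": "",
}
mapped = map_attributes(record, "example.com")
print(mapped)
```

Skipping blank values is one possible policy. Some organizations prefer to clear the target attribute instead, so decide this explicitly when planning the mapping.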

Testing and Monitoring

Initiate a test provisioning cycle to verify successful user creation or updates in Azure AD.

Use Azure AD’s monitoring tools to track provisioning activities and identify errors.
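When reviewing a provisioning cycle, it helps to reduce the log to counts per status and a list of failures. The sketch below assumes the log entries have already been exported into a list of dictionaries with `status`, `user`, and `reason` keys. That shape is hypothetical and does not reflect the actual Azure AD provisioning log schema.

```python
from collections import Counter


def summarize_provisioning(entries):
    """Return (counts per status, list of (user, reason) for failures)."""
    status_counts = Counter(entry["status"] for entry in entries)
    failures = [
        (entry["user"], entry.get("reason", "unknown"))
        for entry in entries
        if entry["status"] == "failure"
    ]
    return dict(status_counts), failures


# Hypothetical exported log entries.
log = [
    {"status": "success", "user": "asha.rao@example.com"},
    {"status": "failure", "user": "li.wei@example.com", "reason": "missing manager"},
    {"status": "skipped", "user": "sam.lee@example.com"},
    {"status": "failure", "user": "ana.cruz@example.com"},
]
counts, failures = summarize_provisioning(log)
print(counts)
print(failures)
```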

Additional Considerations

Bidirectional Provisioning: Consider bidirectional provisioning if you want changes made in Azure AD to reflect in SuccessFactors.

Conditional Access Policies: Use Azure AD conditional access to enforce granular access controls to SuccessFactors based on user attributes, device characteristics, and location.

SuccessFactors Writeback: Explore the SuccessFactors Writeback integration to update selected attributes in SuccessFactors from Azure AD.

Conclusion

Integrating SuccessFactors with Azure AD creates a unified, secure, and user-friendly identity and access management system. This integration empowers your organization to optimize user experiences and strengthen security posture within your cloud environment.


Key Skills Required for a Successful Azure Administrator

Essential Azure admin skills

Being a successful Azure administrator requires a solid set of skills and knowledge. Whether you are a seasoned IT professional looking to expand your expertise or a beginner interested in a career in cloud computing, there are certain skills that are essential for becoming an accomplished Azure administrator.

Becoming a skilled Azure admin

Becoming a skilled Azure administrator doesn’t happen overnight. It requires dedication, continuous learning, and hands-on experience. Azure is a vast platform with multiple services and features, so it’s important to focus on honing specific skills that are crucial for the role. Here are some key skills that every Azure administrator should possess:

Azure administration skills

1. Knowledge of Azure architecture

To be an effective Azure administrator, you need to have a deep understanding of Azure’s architecture. This includes understanding the different components of Azure such as virtual machines, storage accounts, virtual networks, and more. Familiarize yourself with Azure’s management hierarchy and how resources are organized.

2. Proficiency in Azure portal and command-line tools

The Azure portal is the primary user interface for managing Azure resources. As an Azure administrator, you should be comfortable navigating and performing tasks in the portal. Additionally, familiarity with Azure command-line tools, such as Azure PowerShell and Azure CLI, is essential. These tools let you script and automate routine work and carry out advanced administrative tasks.

3. Experience with Azure networking

Networking is a fundamental aspect of Azure administration. You should have a strong understanding of virtual networks, subnets, network security groups, and Azure ExpressRoute. Knowledge of Azure Load Balancer, Traffic Manager, and virtual network gateways is also important. Familiarize yourself with concepts like IP addressing, routing, and DNS in the Azure context.
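As a small worked example of IP addressing in the Azure context, the sketch below uses Python's standard `ipaddress` module to split a virtual network address space into subnets. It subtracts the five addresses that Azure reserves in every subnet. The address range, subnet names, and the `plan_subnets` helper are illustrative assumptions.

```python
import ipaddress

# Azure reserves five addresses in each subnet (network address, default
# gateway, two for Azure DNS, and broadcast address).
AZURE_RESERVED_PER_SUBNET = 5


def plan_subnets(vnet_cidr, new_prefix, names):
    """Carve one subnet per name out of the virtual network address space.

    Returns a list of (name, subnet CIDR, usable address count).
    """
    vnet = ipaddress.ip_network(vnet_cidr)
    subnets = vnet.subnets(new_prefix=new_prefix)
    plan = []
    for name, subnet in zip(names, subnets):
        usable = subnet.num_addresses - AZURE_RESERVED_PER_SUBNET
        plan.append((name, str(subnet), usable))
    return plan


subnet_plan = plan_subnets("10.0.0.0/16", 24, ["web", "app", "data"])
for name, cidr, usable in subnet_plan:
    print(f"{name}: {cidr} ({usable} usable addresses)")
```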

4. Understanding of Azure identity and access management

Azure Active Directory (Azure AD) is at the core of identity and access management in Azure. As an Azure administrator, you need to understand how to create and manage user accounts, groups, and roles in Azure AD. Additionally, learn about Azure AD authentication methods and how to integrate Azure AD with other services for secure access.

5. Knowledge of Azure security

Security is a top concern in any cloud environment. Understanding Azure security best practices and features is crucial for an Azure administrator. This includes services and features such as Azure Security Center, Azure Key Vault, Azure Firewall, and Azure security policies. Stay updated on the latest security threats and vulnerabilities to protect your Azure resources.

6. Proficiency in Azure resource management

Being able to effectively manage Azure resources is a key skill for an Azure administrator. Learn how to provision, configure, and monitor resources in Azure. Understand resource groups, resource locks, tags, and resource monitoring and diagnostics. Gain knowledge of Azure automation and the ability to deploy and manage resources using ARM templates.
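To give a feel for ARM templates and tags, here is a minimal sketch that builds a template for a single storage account as a Python dictionary and prints it as JSON. The `storage_account_template` helper, the account name, and the tag values are hypothetical, and the `apiVersion` shown is an assumption that should be checked against the current template reference before use.

```python
import json
import re


def storage_account_template(name, location, tags):
    """Build a minimal ARM template dictionary for one storage account."""
    # Storage account names must be 3-24 lowercase letters and digits.
    if not re.fullmatch(r"[a-z0-9]{3,24}", name):
        raise ValueError(f"invalid storage account name: {name!r}")
    return {
        "$schema": "https://schema.management.azure.com/schemas/2019-04-01/deploymentTemplate.json#",
        "contentVersion": "1.0.0.0",
        "resources": [
            {
                "type": "Microsoft.Storage/storageAccounts",
                "apiVersion": "2022-09-01",  # assumed version; verify before use
                "name": name,
                "location": location,
                "tags": tags,
                "sku": {"name": "Standard_LRS"},
                "kind": "StorageV2",
            }
        ],
    }


template = storage_account_template(
    "examplelogs001", "eastus", {"environment": "dev", "owner": "platform-team"}
)
print(json.dumps(template, indent=2))
```

The printed JSON can be saved to a file and deployed to a resource group with Azure PowerShell or Azure CLI. Generating it from code is only one option, and many teams write the JSON by hand.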

7. Troubleshooting and problem-solving skills

As an Azure administrator, you will encounter issues and challenges. Develop strong troubleshooting and problem-solving skills to identify and resolve issues efficiently. Learn how to use Azure monitoring and logging tools to diagnose and troubleshoot problems. Stay up-to-date with Azure service health and status to proactively address any issues.

8. Continuous learning and staying updated