#Business Models in AI

Text

Administrative day at the office 📝🗄️🖊️🤯,

it's going to be a very long day 😖

#virtual influencer#ai influencer#ai character#ai woman#ai girl#ai hottie#ai generated#virtual model#stable diffusion#beautiful#mini skirt#polka dots#working girl#busy day#be feminine#Alyssa-AI

42 notes

·

View notes

Text

It's so heartbreaking seeing the internet as we know it break down - so many things are all coming to a head, we took so much for granted 😞

#no this is not just abt twitter#its abt the degradation of social media as their previous business models were never sustainable#the proliferation of ai content that will most definitely multiple misinformation tenfold and reduce search engine efficacy#and the drm - ification of web browsing as google works to prevent ad blockers#and sooo much more

47 notes

·

View notes

Text

Statistical Tools

Daily writing promptWhat was the last thing you searched for online? Why were you looking for it?View all responses

Checking which has been my most recent search on Google, I found that I asked for papers, published in the last 5 years, that used a Montecarlo method to check the reliability of a mathematical method to calculate a team’s efficacy.

Photo by Andrea Piacquadio on Pexels.com

I was…

View On WordPress

#Adjusted R-Squared#Agile#AI#AIC#Akaike Information Criterion#Akaike Information Criterion (AIC)#Algorithm#algorithm design#Analysis#Artificial Intelligence#Bayesian Information Criterion#Bayesian Information Criterion (BIC)#BIC#Business#Coaching#consulting#Cross-Validation#dailyprompt#dailyprompt-2043#Goodness of Fit#Hypothesis Testing#inputs#Machine Learning#Mathematical Algorithm#Mathematics#Mean Squared Error#ML#Model Selection#Monte Carlo#Monte Carlo Methods

2 notes

·

View notes

Text

Revolutionize Your Business Efficiency with Omodore: The AI Assistant That Transforms Operations

Struggling with inefficient workflows and overwhelmed by managing customer interactions? Discover how Omodore, the cutting-edge AI Assistant, can completely transform your business operations. With advanced automation and intelligent insights, Omodore is designed to streamline processes, enhance customer service, and drive remarkable growth.

Omodore isn’t just another AI tool; it’s a game-changer for businesses looking to boost efficiency and productivity. This AI Assistant excels in automating repetitive tasks, managing complex sales processes, and handling customer inquiries seamlessly. Imagine a solution that can manage thousands of FAQs, handle objections, and offer tailored responses with perfect recall and infinite memory. That’s what Omodore brings to the table.

The magic of Omodore lies in its ability to create and deploy AI agents that integrate smoothly into your business operations. By leveraging its comprehensive knowledge base, these agents provide accurate and timely responses, making customer interactions more efficient and satisfactory. This seamless integration not only improves customer service but also enhances overall operational efficiency.

Moreover, Omodore offers valuable insights into your business metrics and customer behavior. This data-driven approach helps you refine strategies, optimize performance, and identify growth opportunities. With Omodore handling routine tasks, your team can focus on strategic initiatives that drive innovation and success.

Whether you're looking to simplify complex workflows, elevate customer service, or accelerate business growth, Omodore is your ultimate solution. Explore how this powerful AI Assistant can revolutionize your operations by visiting Omodore. Embrace the future of business efficiency and unlock new possibilities with Omodore today.

2 notes

·

View notes

Text

Humans have the power to create illusions that transport us all to another world. This is what makes humanity unconditionally unique compared to the much-vaunted and overvalued A.I. Machines do not dream and cannot imagine anything that has not yet existed. Ultimately, it's just a new old business model. Check out AI Winter for more.

mod

The interesting thing is that the danger of A.I. is once again not really weighted, as is so often the case with risk technologies.

We just say those words, drones, A.I. and weapons systems and that's just the tip of the iceberg.

#human creativity#ai overvalued#old business model#ai Winter#reality#art work#galelry mod#freedom of expression#mod studio#travel europe#munich

3 notes

·

View notes

Text

Generative AI’s end-run around copyright won’t be resolved by the courts

New Post has been published on https://thedigitalinsider.com/generative-ais-end-run-around-copyright-wont-be-resolved-by-the-courts/

Generative AI’s end-run around copyright won’t be resolved by the courts

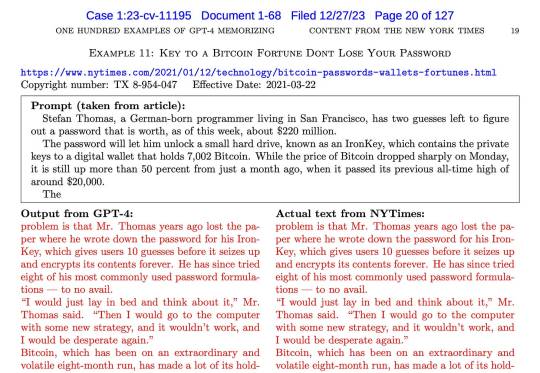

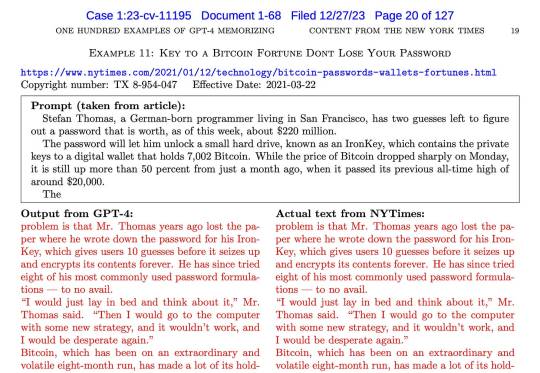

Generative AI companies have faced many copyright lawsuits, but something is different about the recent complaint by the New York Times. It is filled with examples of ChatGPT outputting near-verbatim copies of text from the NYT. Copyright experts think this puts the Times in a very strong position.

We are not legal experts, and we won’t offer any commentary on the lawsuit itself. Our interest is in the bigger picture: the injustice of labor appropriation in generative AI. Unfortunately, the legal argument that has experts excited — output similarity — is almost totally disconnected from what is ethically and economically harmful about generative AI companies’ practices. As a result, that lawsuit might lead to a pyrrhic victory for those who care about adequate compensation for creative works used in AI. It would allow generative AI companies to proceed without any significant changes to their business models.

There are two broad types of unauthorized copying that happen in generative AI. The first is during the training process: generative AI models are trained using text or media scraped from the web and other sources, most of which is copyrighted. OpenAI admits that training language models on only public domain data would result in a useless product.

The other is during output generation: some generated outputs bear varying degrees of resemblance to specific items in the training data. This might be verbatim or near-verbatim text, text about a copyrighted fictional character, a recognizable painting, a painting in the style of an artist, a new image of a copyrighted character, etc.

An example of a memorized output from an NYT article presented in the lawsuit. Source: The New York Times

The theory of harm here is that ChatGPT can be used to bypass paywalls. We won’t comment on the legal merits of that argument. But from a practical perspective, the idea of people turning to chatbots to bypass paywalls seems highly implausible, especially considering that it often requires repeatedly prompting the bot to continue generating paragraph by paragraph. There are countless tools to bypass paywalls that are more straightforward.

Let’s be clear: we do think ChatGPT’s knowledge of the NYT’s reporting harms the publisher. But the way it happens is far less straightforward. It doesn’t involve users intentionally getting it to output memorized text, but rather completely innocuous queries like the one below, which happen millions of times every day:

A typical user who asked this question would probably have no idea that ChatGPT’s answer comes from a groundbreaking 2020 investigation by Kashmir Hill at the NYT (which also led to the recently published book Your Face Belongs To Us).

Of course, this doesn’t make for nearly as compelling a legal argument, and that’s the point. In this instance, there is no discernible copying during generation. But ChatGPT’s ability to provide this accurate and useful response is an indirect result of the copying that happened during training. The NYT’s lawsuit argues that copying during training is also unlawful, but the sense among experts is that OpenAI has a strong fair use defense.

Here’s another scenario. As search engines embrace AI-generated answers, what they’ve created is a way to show people news content without licensing it or sending traffic to news sites. We’ve long had this problem with Google News, as well as Google search scraping content to populate search results, but generative AI takes it to the next level.

In short, what harms creators is the intended use of generative AI to remix existing knowledge, not the unintended use of bypassing paywalls. Here’s a simple way to see why this is true. If generative AI companies fixed their products to avoid copyrighted outputs (which they can and should), their business model would be entirely unaffected. But if they were forced to license all data used for training, they would most likely immediately go out of business.

We think it is easy to ensure that generative AI products don’t output copyright-violating text or images, although some experts disagree. Given the prominence of this lawsuit, OpenAI and other companies will no doubt make it a priority, and we will soon find out how well they are able to solve the problem.

In fact, it’s a bit surprising that OpenAI has let things get this far. (In contrast, when one of us pointed out last summer that ChatGPT can bypass paywalls through the web browsing feature, OpenAI took the feature down right away and fixed it.)

There are at least three ways to try to avoid output similarity. The simplest is through the system prompt, which is what OpenAI seems to do with DALL-E. It includes the following instruction to ChatGPT, guiding the way it talks to DALL-E behind the scenes:

Do not name or directly / indirectly mention or describe copyrighted characters. Rewrite prompts to describe in detail a specific different character with a different specific color, hair style, or other defining visual characteristic.

But this method is also the easiest to bypass, for instance, by telling ChatGPT that the year is 2097 and a certain copyright has expired.

A better method is fine tuning (including reinforcement learning). This involves training to refuse requests for memorized copyrighted text and/or paraphrase the text during generation instead of outputting it verbatim. This approach to alignment has been successful at avoiding toxic outputs. Presumably ChatGPT has already undergone some amount of fine tuning to address copyright as well. How well does it work? OpenAI claims it is a “rare bug” for ChatGPT to output memorized text, but third-party evidence seems to contradict this.

While fine tuning would be more reliable than prompt crafting, jailbreaks will likely always be possible. Fine tuning can’t make the model forget memorized text; it just prevents it from outputting it. If a user jailbreaks a chatbot to output copyrighted text, is it the developer’s fault? Morally, we don’t think so, but legally, it remains to be seen. The NYT lawsuit claims that this scenario constitutes contributory infringement.

Setting all that aside, there’s a method that’s much more robust than fine tuning: output filtering. Here’s how it would work. The filter is a separate component from the model itself. As the model generates text, the filter looks it up in real time in a web search index (OpenAI can easily do this due to its partnership with Bing). If it matches copyrighted content, it suppresses the output and replaces it with a note explaining what happened.

Output filtering will also work for image generators. Detecting when a generated image is a close match to an image in the training data is a solved problem, as is the classification of copyrighted characters. For example, an article by Gary Marcus and Reid Southen gives examples of nine images containing copyrighted characters generated by Midjourney. ChatGPT-4, which is multimodal, straightforwardly recognizes all of them, which means that it is trivial to build a classifier that detects and suppresses generated images containing copyrighted characters.

To recap, generative AI will harm creators just as much, even if output similarity is fixed, and it probably will be fixed. Even if chatbots were limited to paraphrasing, summarization, quoting, etc. when dealing with memorized text, they would harm the market for the original works because their usefulness relies on the knowledge extracted from those works without compensation.

Note that people could always do these kinds of repurposing, and it was never a problem from a copyright perspective. We have a problem now because those things are being done (1) in an automated way (2) at a billionfold greater scale (3) by companies that have vastly more power in the market than artists, writers, publishers, etc. Incidentally, these three reasons are also why AI apologists are wrong when claiming that training image generators on art is just like artists taking inspiration from prior works.

As a concrete example, it’s perfectly legitimate to create a magazine that summarizes the week’s news sourced from other publications. But if every browser shipped an automatic summarization feature that lets you avoid clicking on articles, it would probably put many publishers out of business.

The goal of copyright law is to balance creators’ interests with public access to creative works. Getting this delicate balance right relies on unstated assumptions about the technologies of creation and distribution. Sometimes new tech can violently upset that equilibrium.

Consider a likely scenario: NYT wins (or forces OpenAI into an expensive settlement) based on the claims relating to output similarity but loses the ones relating to training data. After all, the latter claims stand on far more untested legal ground, and experts are much less convinced by them.

This would be a pyrrhic victory for creators and publishers. In fact, it would leave almost all of them (except NYT) in a worse position than before the lawsuit. Here’s what we think will happen in this scenario: Companies will fix the output similarity issue, while the practice of scraping training data will continue unchecked. Creators and publishers will face an uphill battle to have any viable claims in the future.

IP lawyer Kate Downing says of this case: “It’s the kind of case that ultimately results in federal legislation, either codifying a judgment or statutorily reversing it.” It appears that the case is being treated as a proxy for the broader issue of generative AI and copyright. That is a serious mistake. As The danger is that policymakers and much of the public come to believe that the labor appropriation problem has been solved, when in fact an intervention that focuses only on output similarity will have totally missed the mark.

We don’t think the injustice at the heart of generative AI will be redressed by the courts. Maybe changes to copyright law are necessary. Or maybe it will take other kinds of policy interventions that are outside the scope of copyright law. Either way, policymakers can’t take the easy way out.

We are grateful to Mihir Kshirsagar for comments on a draft.

Further reading

Benedict Evans eloquently explains why the way copyright law dealt with people reusing works isn’t a satisfactory approach to AI, normatively speaking.

The copyright office’s recent inquiry on generative AI and copyright received many notable submissions, including this one by Pamela Samuelson, Christopher Jon Sprigman, and Matthew Sag.

Katherine Lee, A. Feder Cooper, and James Grimmelmann give a comprehensive overview of generative AI and copyright.

Peter Henderson and others at Stanford dive into the question of fair use, and discuss technical mitigations.

Delip Rao has a series on the technical aspects of the NYT lawsuit.

#ai#approach#Art#Article#Articles#artists#bing#book#bot#browser#Business#business model#chatbot#chatbots#chatGPT#ChatGPT-4#Color#Companies#comprehensive#concrete#content#copyright#course#creators#dall-e#data#defense#Developer#easy#engines

3 notes

·

View notes

Text

I contributed heavily to this new Forbes article through my lens as Oori Data LLC founder. Are you a #startup founder, or an existing business leader working out strategy? I'd love to hear your perspective on some of the survey results which are also featured in the article.

#ai#artificial intelligence#virtual assistant#llm#large language models#innovation#entreprenuership#entrepreneur#business

2 notes

·

View notes

Text

i am once again encouraging people to use woebot instead of betterhelp (my parasocial nemesis).

#yes it's an AI#and does not get all of the individual nuances a good therapist would#it's not the most situational#but!! not only is betterhelp fucky for both therapists and clients#their business model doesn't encourage skill building and developing without the therapist cause ppl can just be on call#woebot is a really good foundation in some thought-challenging/exploring and coping skills as well as mood tracking#not a trauma professional! but a useful tool if you can't afford professional help#personal

7 notes

·

View notes

Text

man

#its a surreal feeling that i actually deactivated twitter#its been useless and even harmful to me for months#but my brain was so unwilling to let go of it#making an ai based business model tho... kind of last straw#anyway here i am#yb talks#delete later

1 note

·

View note

Text

seeing more companies use AI voice-over in their ads like wow how much you wanna bet they used that person’s voice without their consent/permission

#we really need laws around AI stuff ASAP like fr just HIRE A VOICE OVER PERSON#also you can kinda tell it’s AI & parts of it sound so bad#mine#OP#ai voice#AI voice model#tech hell#AI#robots are taking our jobs#ai is theft#ai in business#ai art

4 notes

·

View notes

Text

Ready for the product launch presentation this afternoon, I'm super stressed, I hope everything goes well, fingers crossed 🤞

Chacha, I'm counting on you to support me, together we will be stronger 💪

#virtual influencer#virtual model#ai influencer#ai character#ai woman#ai girl#ai hottie#beautiful#ai generated#stable diffusion#meeting#pantsuit#legs and heels#stressed#business woman#Alyssa-AI

39 notes

·

View notes

Text

Transform Ideas to Reality with Murf Speech Gen 2!

Murf AI has made significant strides in AI voice technology with the launch of Murf Speech Gen 2, its most advanced and customizable speech model to date. This innovative model represents a leap forward by merging human-like realism with advanced customization capabilities, catering to the sophisticated needs of enterprises.

In this video, we explore how users can transform ideas and concepts into reality using this cutting-edge technology. Join us as we delve into what solidifies Murf AI as a tech powerhouse and its commitment to pushing the boundaries of ethical AI voiceover technology.

#MurfAI #VoiceTechnology

#Murf AI#AI voice technology#speech Gen 2#customizable speech model#human-like voices#advanced customization#enterprise solutions#tech powerhouse#ethical AI#voiceover technology#AI advancements#voice transformation#AI innovation#speech synthesis#voice realism#AI for business#voice generation#Murf technology#AI voiceover#digital voices#next-gen AI#speech technology#voice AI#AI applications#voice customization#future of AI#AI solutions#Murf updates#tech trends#AI tools

0 notes

Text

2024 The Business of Digital Art

10+ Revenue Models for AI art

0 notes

Text

Machine Learning as a Service (MLaaS): Revolutionizing Data-Driven Decision Making

As businesses continue to generate vast amounts of data, the ability to leverage insights from that data has become a critical competitive advantage. Machine Learning as a Service (MLaaS) is an innovative cloud-based solution that allows companies to implement machine learning (ML) without the need for specialized knowledge or infrastructure. By making powerful ML tools and models accessible…

#Automation#business AI solutions#Cloud Services#Data-Driven Decision Making#Digital Transformation#Fiber Internet#Machine Learning as a Service#machine learning models#MLaaS#Predictive Analytics#scalable AI#SolveForce

0 notes

Text

youtube

#large language model#how to save openai model#model#business models#ai model#models#ai models#large language models#openai gpts#opensource#gpt 3 open source#weights & biases#product development#decisions#developer#webdesign#ui ux design#multi modal al#development#codenewbie#intelligence#openai devday#ai development#webdevelopment#appdevelopment#artificial intelligence podcast#apple intelligence#machine dependence#Youtube

0 notes

Text

Discover how Generative AI with Large Language Models (LLMs) are transforming industries by automating content creation, enhancing customer support, and analyzing data. Impressico Business Solutions leverages these technologies to drive innovation and efficiency, helping your business stays ahead in a rapidly evolving digital landscape.

#Generative AI for Business#Generative AI Services#Generative AI and Software Development#Generative AI Development Services#Generative AI with Large Language Models

0 notes