#Click to Call API

Text

Click to Call Service - PRP Services

A premier Click to Call service provider in India, offering seamless communication solutions. Connecting businesses and customers effortlessly through a user-friendly interface, enhancing customer engagement and satisfaction. Enjoy real-time conversations, boosting conversions and accessibility. Elevate your communication strategy with our reliable and innovative services.

#Click to Call API#Click to Call Software#Click to Call#Click to Call Service#Click to Call Solution#Click to Call Solution Provider in Delhi

0 notes

Text

Use AI-Powered Smart Cloud Telephony Solutions for Your Business

Get smart cloud telephony solutions with the power of AI integrated into your business communication systems. Automate business operations and deliver high-quality customer experience. To know more, connect with go2market.

#go2market#cloud telephony service#AI-Powered#voice broadcasting#bulk sms service provider in delhi#whatsapp api service provider#bulk sms at low rate delhi#bulk sms provider in delhi ncr#click to call solutions in delhi#bulk sms companies in delhi

0 notes

Text

Improving Customer Experience with Click to Call in Digital Banking

In the rapidly evolving landscape of digital banking, customer experience has emerged as a critical differentiator. Traditional banking methods have given way to innovative digital solutions designed to enhance convenience and satisfaction. One such innovation is the "Click to Call" feature, which enables customers to connect with their bank representatives with a single click.

This article explores the significance of Click to Call in digital banking, its benefits, and how it can be effectively implemented to improve customer experience.

Understanding Click to Call

Click to Call is a feature integrated into digital banking platforms, allowing customers to initiate a voice call with a bank representative by simply clicking a button. This functionality can be embedded in mobile apps, websites, and other digital interfaces, making it easier for customers to seek assistance without navigating through complex phone menus or waiting in long queues.

Benefits of Click to Call

1. Enhanced Convenience

Click to Call offers unparalleled convenience by reducing the steps required for customers to contact their bank. Instead of dialing a number and navigating through an IVR (Interactive Voice Response) system, customers can connect directly with a representative, streamlining the process and saving time.

2. Improved Customer Satisfaction

By providing a direct line to customer support, Click to Call significantly improves customer satisfaction. Customers appreciate the immediacy and personal touch, which enhances their overall experience with the bank. This feature is particularly beneficial during critical moments, such as resolving issues with transactions or seeking urgent assistance.

3. Increased Engagement

Implementing Click to Call can lead to higher engagement rates. Customers are more likely to reach out for support when the process is straightforward and hassle-free. This increased interaction allows banks to gather valuable feedback and insights, which can be used to further refine their services and address pain points effectively.

4. Cost Efficiency

For banks, Click to Call can be a cost-effective solution. By reducing the number of steps customers must take to contact support, banks can streamline their call center operations and allocate resources more efficiently. Additionally, the integration of automated call routing can further optimize the support process, ensuring that customers are directed to the right department or representative promptly.
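The automated call routing mentioned above can be pictured as a lookup from the page or feature where the customer clicked to a support queue. This is only a minimal sketch: the context keys and queue names are hypothetical and do not come from any real banking platform.

```python
# Hypothetical mapping from where the customer clicked to a support queue.
ROUTING_TABLE = {
    "card_dispute": "fraud_team",
    "loan_application": "lending_team",
    "failed_transfer": "payments_team",
}
DEFAULT_QUEUE = "general_support"


def route_call(click_context: str) -> str:
    """Return the support queue for a Click to Call request."""
    # Unknown contexts fall back to a general queue instead of failing.
    return ROUTING_TABLE.get(click_context, DEFAULT_QUEUE)


print(route_call("failed_transfer"))  # payments_team
print(route_call("unknown_page"))     # general_support
```

A real deployment would likely route on richer signals (language, account type, agent availability), but the fallback queue is the part worth keeping: a click should never dead-end.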

Implementing Click to Call in Digital Banking

1. Seamless Integration

To maximize the benefits of Click to Call, seamless integration with existing digital platforms is crucial. Banks should ensure that the feature is accessible across various touchpoints, including mobile apps, websites, and online banking portals. This integration should be intuitive, allowing customers to initiate a call with minimal effort.

2. Personalized Customer Support

Leveraging customer data to provide personalized support is a key aspect of enhancing the Click to Call experience. By analyzing transaction history and previous interactions, banks can tailor their responses to individual needs, ensuring a more relevant and satisfying customer experience.

3. Advanced Analytics

Implementing advanced analytics can help banks monitor and improve the performance of their Click to Call feature. By tracking metrics such as call duration, resolution rates, and customer feedback, banks can identify areas for improvement and optimize their support processes.
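As a rough illustration of the metrics named above, the following sketch aggregates call duration, resolution rate, and customer feedback from a list of call records. The `CallRecord` fields and the 1-5 feedback scale are assumptions made for the example, not a description of any particular analytics product.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class CallRecord:
    duration_seconds: int
    resolved: bool
    feedback_score: int  # assumed 1-5 scale


def summarize(calls: list[CallRecord]) -> dict[str, float]:
    """Aggregate the three metrics named in the text."""
    if not calls:
        return {"avg_duration_seconds": 0.0, "resolution_rate": 0.0, "avg_feedback": 0.0}
    return {
        "avg_duration_seconds": mean(c.duration_seconds for c in calls),
        # True counts as 1, so the sum is the number of resolved calls.
        "resolution_rate": sum(c.resolved for c in calls) / len(calls),
        "avg_feedback": mean(c.feedback_score for c in calls),
    }


calls = [
    CallRecord(180, True, 5),
    CallRecord(420, False, 2),
    CallRecord(300, True, 4),
]
print(summarize(calls))
```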

4. Training and Empowering Staff

Empowering customer support representatives with the right training and tools is essential for the success of Click to Call. Staff should be equipped to handle a wide range of inquiries efficiently and with empathy, ensuring that customers receive the best possible service.

Frequently Asked Questions (FAQ)

Q: How does Click to Call work in digital banking?

A: Click to Call allows customers to initiate a call with their bank by clicking a button within the bank's digital platform. This feature connects them directly to a customer support representative.

Q: What are the benefits of Click to Call for customers?

A: Click to Call gives customers greater convenience and faster access to support, which improves satisfaction and encourages engagement. For banks, streamlining the contact process also brings cost efficiency.

Q: Can Click to Call be integrated with mobile banking apps?

A: Yes, Click to Call can be seamlessly integrated into mobile banking apps, websites, and other digital platforms to provide easy access to customer support.

Q: How does Click to Call improve customer satisfaction?

A: By providing direct and immediate access to customer support, Click to Call reduces wait times and enhances the overall customer experience, leading to higher satisfaction levels.

Q: What should banks consider when implementing Click to Call?

A: Banks should focus on seamless integration, personalized support, advanced analytics, and empowering staff to ensure the success of the Click to Call feature.

Conclusion

Click to Call is a powerful tool that can significantly enhance the customer experience in digital banking. By providing a direct and convenient way for customers to connect with support representatives, banks can improve satisfaction, increase engagement, and optimize their support operations. As the digital banking landscape continues to evolve, implementing features like Click to Call will be essential for banks aiming to stay competitive and meet the growing expectations of their customers.

0 notes

Text

#cloud calling#cloudcomputing#cloud telephony#ivr services#ivr solution#voiceover#voip technology#business voip#bulk sms service#whatsapp business api#virtual phone number#click to call#phone calls#voice broadcasting#startup#entrepreneur#b2b#b2bsales#voice message#sms marketing

1 note

Text

Are you looking for a click-to-call dialer? We offer click-to-call white-label VoIP solutions that fit your business needs.

#click to call service solution#click to call API solutions#click to call solution for business#click-to-dial software#click to call API services#best voice call APIs platform

0 notes

Text

Interesting article by The Guardian

The most interesting aspect of the controversy, though, is the effrontery of Huffman’s attempt to seize the moral high ground. What’s bothering him is that the data on Redditors’ interests and behaviour over the decades that is stored on its servers constitutes gold dust for the web crawlers of the tech giants as they hoover up everything in training their large language models (LLMs). Providing a free API makes that a cost-free exercise for them. “The Reddit corpus of data is really valuable,” Huffman told the New York Times. “But we don’t need to give all of that value to some of the largest companies in the world for free.”

Note the sleight of mind here. That “corpus of data” is the content posted by millions of Reddit users over the decades. It is a fascinating and valuable record of what they were thinking and obsessing about. Not the tiniest fraction of it was created by Huffman, his fellow executives or shareholders. It can only be seen as belonging to them because of whatever skewed “consent” agreement its credulous users felt obliged to click on before they could use the service. So it’s a bit rich to hear him complaining about LLMs which were – and are – being trained via the largest and most comprehensive exercise in intellectual piracy in the history of mankind. Or, to coin a phrase, it’s just another case of the kettle calling the slag heap black.

297 notes

Note

please teach us how to meow

writing quickly as I have to be somewhere. the way to do it is janky, since I'm getting 'not authorized' in response to API requests. open the network tab of the Firefox developer console. scroll to the bottom. click the cat on the boop-o-meter. you will see the request that sent the boop to yourself:

actually you can use any boop. right-click it and select copy value->copy as cURL. you receive a call to cURL with all of the necessary authentication information. this expires eventually, so you'll need to repeat this step occasionally. I had to set the 'Accept-Encoding' parameter to only 'gzip, deflate' because my cURL didn't like the others. at the end of the request, you see the field

--data-raw '{"receiver":"metastablephysicist","context":"BoopOMeter","type":"cat"}'

you can change receiver to anyone you like and the type can be e.g. "normal", "super", "evil", "cat". by the way, there exists the boop type "spooky" this time also. idk how to get it normally but you can guess the name that's not true

paste the long command in a terminal and it sends boops. paste it in a shell script with a while loop and a delay between boops and great powers can be yours.
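since the `--data-raw` field is plain JSON, the body can be generated instead of hand-edited. a small sketch that only builds the payload string and sends nothing; the type list is just the names mentioned above, and anything else about the endpoint is unknown here:

```python
import json

# Boop types named in the post; "spooky" is mentioned there as an extra type.
BOOP_TYPES = {"normal", "super", "evil", "cat", "spooky"}


def boop_payload(receiver: str, boop_type: str = "cat") -> str:
    """Build the JSON body that goes after --data-raw."""
    if boop_type not in BOOP_TYPES:
        raise ValueError(f"unknown boop type: {boop_type}")
    body = {"receiver": receiver, "context": "BoopOMeter", "type": boop_type}
    # Compact separators reproduce the formatting of the captured request.
    return json.dumps(body, separators=(",", ":"))


print(boop_payload("metastablephysicist"))
```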

23 notes

Text

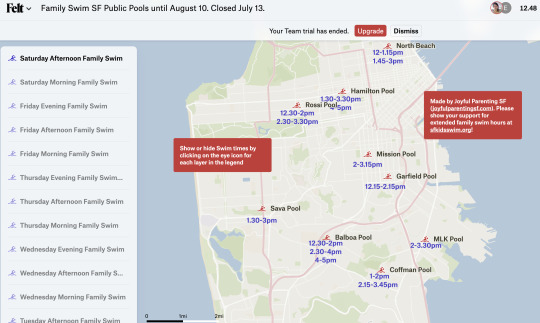

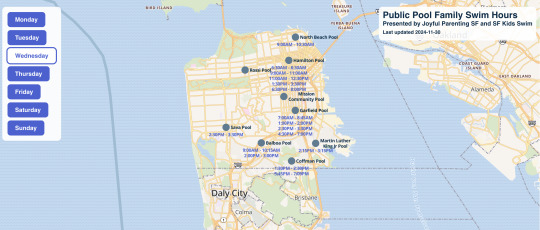

The making of the SF family swim map!

This is a technical blog post showcasing a project (swim.joyfulparentingsf.com) made by Double Union members! Written by Ruth Grace Wong.

Emeline (a good friend and fellow DU member) and I love swimming with our kids. The kids love it too, and they always eat really well after swimming! But for a long time we were frustrated about SF Rec & Park's swim schedules. Say today is Wednesday and you want to swim, you have to click on each pool's website and download their PDF schedule to check where and when family swim is available, and the schedules change every few months.

Emeline painstakingly downloaded all the PDFs and manually collated the schedules onto our Joyful Parenting SF blog. The way Rec & Park structures its schedules assumes that swimmers go to their closest pool, and only need the hours for that particular pool. But we found that this was different from how many families, especially families with young children, research swim times. Often, they have a time when they can go swimming, and they are willing to go to different swimming pools. Often, they’re searching for a place to swim at the last minute. Schedules hence need to allow families to search which pools are open at what time for family swimming. Initially, we extracted family swim times manually from each pool’s PDF schedule and listed them in a blog post. It wasn't particularly user-friendly, so she made an interactive map using Felt, where you could select the time period (e.g. Saturday Afternoon) and see which pool offered family swim around that time.

But the schedules change every couple of months, and it got to be too much to be manually updating the map or the blog post. Still, we wanted some way to be able to easily see when and where we could swim with the kids.

Just as we were burning out on manually updating the list, SF Rec & Park released a new Activity Search API, where you can query scheduled activities once their staff have manually entered them into the system. I wrote a Python script to pull Family Swim, and quickly realized that I had to also account for Parent and Child swim (family swim where the parents must be in the water with the kids), and other versions of this such as "Parent / Child Swim". Additionally, the data was not consistent – sometimes the scheduled activities were stored as sub activities, and I had to query the sub activity IDs to find the scheduled times. Finally, some pools (Balboa and Hamilton) have what we call "secret swim", where if the pool is split into a big and small pool, and there is Lap Swim scheduled with nothing else at the same time, the small pool can be used for family swim. So I also pulled all of the lap swim entries for these pools and all other scheduled activities at the pool so I could cross reference and see when secret family swim was available.
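The name matching and the "secret swim" cross-reference described above could look roughly like the following. This is an illustrative sketch, not the actual script: the `Slot` record, the helper names, and the sample data are assumptions, and the real Activity Search API response format is not shown in this post.

```python
import re
from dataclasses import dataclass

SPLIT_POOLS = {"Balboa", "Hamilton"}  # pools the post says have "secret swim"


@dataclass(frozen=True)
class Slot:
    pool: str
    activity: str
    day: str
    start: str  # "HH:MM", 24-hour, zero-padded so strings compare correctly
    end: str


def is_family_swim(activity: str) -> bool:
    """Match 'Family Swim', 'Parent and Child Swim', 'Parent / Child Swim', etc."""
    name = re.sub(r"[^a-z]+", " ", activity.lower()).strip()
    return name == "family swim" or bool(re.fullmatch(r"parent (and )?child swim", name))


def overlaps(a: Slot, b: Slot) -> bool:
    """True if two slots at the same pool and day share any time."""
    return a.pool == b.pool and a.day == b.day and a.start < b.end and b.start < a.end


def secret_swims(slots: list[Slot]) -> list[Slot]:
    """Lap swims at split pools with nothing else scheduled at the same time."""
    result = []
    for lap in slots:
        if lap.pool not in SPLIT_POOLS or lap.activity != "Lap Swim":
            continue
        if not any(overlaps(lap, other) for other in slots if other is not lap):
            result.append(lap)
    return result


# Made-up sample data for illustration only.
slots = [
    Slot("Balboa", "Lap Swim", "Wed", "09:00", "10:30"),
    Slot("Balboa", "Lap Swim", "Wed", "12:00", "13:00"),
    Slot("Balboa", "Swim Lessons", "Wed", "12:30", "13:30"),
    Slot("Garfield", "Lap Swim", "Wed", "09:00", "10:00"),
]
print(secret_swims(slots))
```

In this sample only the 09:00 Balboa lap swim qualifies: the noon lap swim overlaps with lessons, and the Garfield entry is not at a split pool.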

We've also seen occasional issues where there is a swim scheduled in the Activity Search, but it's a data entry error and the scheduled swim is not actually available, or there's a Parent Child Swim scheduled during a lap swim (but not all of the lap swims so I can't automatically detect it!) that hasn't been entered into the Activity Search at all. Our friends at SF Kids Swim have been working with SF Rec & Park to advocate for the release of the API, help correct data errors, and ask if there is any opportunity for process improvement.

At the end of the summer, Felt raised their nonprofit rate from $100 a year to $250 a year. We needed to pay in order to use their API to automatically update the map, but we weren't able to raise enough money to cover the higher rate. Luckily, my husband Robin is a full stack engineer specializing in complex frontends such as maps, and he looked for an open source WebGL map library. Mapbox is one very popular option, but he ended up going with MapLibre GL because it had a better open source license. He wrote it in TypeScript transpiled with Vite, allowing all the map processing work to happen client-side. All I needed to do was output GeoJSON with my Python script.
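For readers who haven't produced GeoJSON from Python before, here is a minimal sketch of what that output step can look like. The property names (`name`, `family_swims`), the placeholder pool, and the coordinates are assumptions for illustration; the actual structure the map consumes isn't described in this post.

```python
import json


def pool_feature(name: str, lon: float, lat: float, swims: list[dict]) -> dict:
    """One GeoJSON Feature per pool; GeoJSON order is [longitude, latitude]."""
    return {
        "type": "Feature",
        "geometry": {"type": "Point", "coordinates": [lon, lat]},
        "properties": {"name": name, "family_swims": swims},
    }


features = [
    pool_feature(
        "Example Pool",  # placeholder, not a real pool record
        -122.44, 37.76,  # approximate San Francisco coordinates, for illustration
        [{"day": "Saturday", "start": "13:00", "end": "14:30"}],
    )
]
collection = {"type": "FeatureCollection", "features": features}
print(json.dumps(collection, indent=2))
```

Because the output is a plain FeatureCollection, a client-side library such as MapLibre GL can load it as a GeoJSON source without any server-side processing.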

Originally I had been running my script in Replit, but I ended up deciding to switch to DigitalOcean because I wasn't sure how reliably Replit would be able to automatically update the map on a schedule, and I didn't know how stable their pricing would be. My regular server is still running Ubuntu 16, and instead of upgrading it (or trying to get a newer version of Python working on an old server or – god forbid – not using the amazing new Python f-strings feature), I decided to spin up a new server on AlmaLinux 9, which doesn't require as frequent upgrades. I modified my code to automatically push updates into version control and recompile the map when schedule changes were detected, ran it in a daily cron job, and we announced our new map on our blog.
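One common way to detect schedule changes in a daily job is to compare a hash of the freshly pulled data against the hash from the previous run. The sketch below shows that idea only; it is an assumption about how such a check might be written, not the code used for the swim map, and the sample schedules are made up.

```python
import hashlib
import json
from typing import Optional


def schedule_digest(schedule: dict) -> str:
    """Stable hash of the schedule; sort_keys makes key order irrelevant."""
    canonical = json.dumps(schedule, sort_keys=True).encode("utf-8")
    return hashlib.sha256(canonical).hexdigest()


def has_changed(previous_digest: Optional[str], schedule: dict) -> bool:
    """True on the first run (no digest yet) or when the data differs."""
    return previous_digest != schedule_digest(schedule)


old = {"Balboa": ["Sat 13:00-14:30"]}
new = {"Balboa": ["Sat 13:00-14:30", "Sun 10:00-11:00"]}
digest = schedule_digest(old)
print(has_changed(digest, old))  # False
print(has_changed(digest, new))  # True
```

Only when `has_changed` returns true would the job go on to commit the new data and rebuild the map, which keeps the version history free of no-op commits.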

Soon we got a request for it to automatically select the current day of the week, and Robin was able to do it in a jiffy. If you're using it and find an opportunity for improvement, please find me on Twitter at ruthgracewong.

As a working mom, progress on this project was stretched out over nearly half a year. I'm grateful to be able to collaborate with the ever ineffable Emeline, as well as for support from family, friends, and SF Kids Swim. It's super exciting that the swim map is finally out in the world! You can find it at swim.joyfulparentingsf.com.

6 notes

Text

The Israeli spyware maker NSO Group has been on the US Department of Commerce “blacklist” since 2021 over its business of selling targeted hacking tools. But a WIRED investigation has found that the company now appears to be working to stage a comeback in Trump's America, hiring a lobbying firm with ties to the administration to make its case.

As the White House continues its massive gutting of the United States federal government, remote and hybrid workers have been forced back to the office in a poorly coordinated effort that has left critical employees without necessary resources—even reliable Wi-Fi. And Elon Musk’s so-called Department of Government Efficiency (DOGE) held a “hackathon” in Washington, DC, this week to work on developing a “mega API” that could act as a bridge between software systems for accessing and sharing IRS data more easily.

Meanwhile, new research this week indicates that misconfigured sexual fantasy-focused AI chatbots are leaking users' chats on the open internet—revealing explicit prompts and conversations that in some cases include descriptions of child sexual abuse.

And there's more. Each week, we round up the security and privacy news we didn’t cover in depth ourselves. Click the headlines to read the full stories, and stay safe out there.

In a secret December meeting between the US and China, Beijing officials claimed credit for a broad hacking campaign that has compromised US infrastructure and alarmed American officials, according to Wall Street Journal sources. Tensions between the two countries have escalated sharply in recent weeks, because of President Donald Trump's trade war.

In public and private meetings, Chinese officials are typically firm in their denials about any and all accusations of offensive hacking. This makes it all the more unusual that the Chinese delegation specifically confirmed that years of attacks on US water utilities, ports, and other targets are the result of the US's policy support of Taiwan. Security researchers refer to the collective activity as having been perpetrated by the actor “Volt Typhoon.”

Meanwhile, the National Counterintelligence and Security Center, along with the FBI and Pentagon’s counterintelligence service, issued an alert this week that China’s intelligence services have been working to recruit current and former US federal employees by posing as private organizations like consulting firms and think tanks to establish connections.

DHS Is Now Monitoring Immigrants’ Social Media for Antisemitism

US Citizenship and Immigration Services said on Wednesday that it is starting to monitor immigrants' social media activity for signs of antisemitic activity and “physical harassment of Jewish individuals.” The agency, which operates under the Department of Homeland Security, said that such behavior would be grounds for “denying immigration benefit requests.” The new policy applies to people applying for permanent residence in the US as well as students and other affiliates of “educational institutions linked to antisemitic activity.” The move comes as Immigration and Customs Enforcement has made controversial arrests of pro-Palestinian student activists, including Mahmoud Khalil of Columbia University and Rumeysa Ozturk of Tufts University, over alleged antisemitic activity. Their lawyers deny the allegations.

Trump Revokes Security Clearance, Orders Investigation of Ex-CISA Director

President Trump this week ordered a federal investigation into former US Cybersecurity and Infrastructure Security Agency director Chris Krebs. An executive order on Wednesday revoked Krebs’ security clearance and also directed the Department of Homeland Security and the US attorney general to conduct the review. Krebs was fired by Trump in November 2020 during his first term after Krebs publicly refuted Trump’s claims of election fraud during that year's presidential election. The executive order alleges that by debunking false claims about the election while in office, Krebs violated the First Amendment's prohibition on government interference in freedom of expression.

In addition to removing Krebs' clearance, the order also revokes the clearances of anyone who works at Krebs' current employer, the security firm SentinelOne. The company said this week in a statement that it “will actively cooperate in any review of security clearances held by any of our personnel” and emphasized that the order will not result in significant operational disruption, because the company only has a handful of employees with clearances.

NSA, Cyber Command Officials Cancel RSA Security Conference Appearances

NSA Cybersecurity Division Director Dave Luber and Cyber Command Executive Director Morgan Adamski will no longer speak at the prominent RSA security conference, scheduled to begin on April 28 in San Francisco. Both appeared at the conference last year. A source told Nextgov/FCW that the cancellations were the result of agency restrictions on nonessential travel. RSA typically features top US national security and cybersecurity officials alongside industry players and researchers. President Trump recently fired General Timothy Haugh, who led both the NSA and US Cyber Command.

3 notes

Text

Blur Token Airdrop: How to Claim $Blur Airdrop

Blur Airdrop Eligibility : How to Get $Blur Token Airdrop?

Introduction Blur Airdrop:

Blur Airdrop ($BLUR) is a decentralized NFT marketplace known for its fast access to NFT reveals and improved user experience. They have completed the first season of airdrops called “Care Packages” and are now preparing for Season 3. In this guide, we will explore the steps to participate in the Blur Airdrop and maximize your rewards.

Step-by-step Guide for Blur Airdrop:

1. Connect your wallet to the Blur Airdrop Page.

2. Navigate to the “Airdrop” tab to see the number of Care Packages you have earned.

3. Click on “Claim Airdrop” to claim your earned $BLUR tokens.

4. To claim your $BLUR tokens, click on “Continue to BLUR,” “connect wallet and check eligibility,” and then “Next.”

5. Complete all steps to approve and claim your tokens (if eligible).

6. Use MetaMask or a compatible wallet to claim $BLUR.

7. Confirm the transaction on your wallet.

Understanding $BLUR Tokenomics:

$BLUR has a maximum supply of 3 billion tokens, with 51% allocated to the community treasury, 29% to core contributors, 19% to investors, and 1% to advisors. Currently, only 360 million $BLUR tokens are unlocked and in circulation, with the remaining tokens still locked. Tokens are unlocked gradually, with the next unlock scheduled for June 15, 2023.

Strategies for Blur Season 3 Airdrop:

1. Maximizing Blur Points: Bidding, listing, and lending on the Blur platform will earn you Blur Points. Actively participate in these activities to maximize your Blur Points.

2. Maximizing Bid Points: Place bids closest to the floor price across multiple active collections and keep your bids active for a longer duration to earn more Bid Points.

3. Maximizing Listing Points: List all your NFTs, especially blue chip and active collections, to earn more Listing Points. Utilize all of Blur’s listing tools and avoid gaming the system.

4. Maximizing Lending Points on Blend: Make Loan Offers using ETH in your Blur Pool with higher Max Borrow and lower APY to earn more Lending Points. Make multiple Loan Offers on different collections.

5. Maximizing Loyalty Points: List your NFTs exclusively through Blur to maintain 100% loyalty. Actively list blue chip and active collections while maintaining loyalty throughout Season 3.

6. Maximizing Holder Points: Deposit $BLUR tokens to earn Holder Points, which count for 50% of the Season 3 airdrop rewards. Maintain your deposit and avoid withdrawing to maximize your Holder Points.

30 notes

Note

first of all, I'm so sorry you had to deal with all those troubles. I'm just entering the fandom, so I have no clue how bad it was or possibly still is, but that shit ain't acceptable. I hope you're feeling well in the future, and better now.

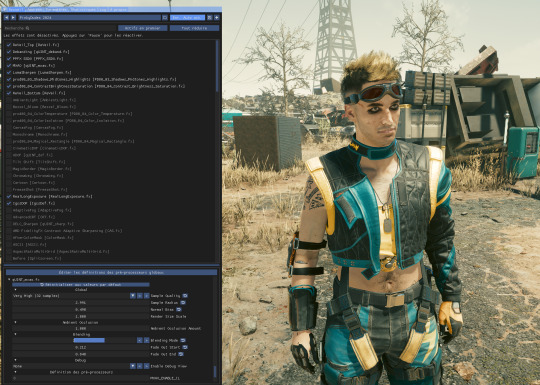

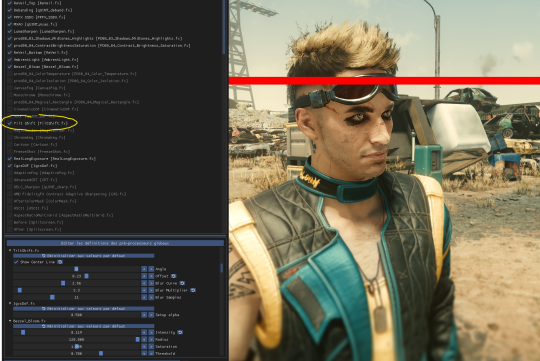

Second of all, I have a question for you about a technical problem that I can't find much of any resources on how to combat, and seeing that you're very good with virtual photography, I thought I'd ask you.

Depth of Field in the vanilla game's photomode is handled oddly, with artifacting(?) around V and certain other objects and NPCs at lower aperture values

Example:

The only advice I've seen that directly addresses this issue is simply "Raise the aperture" but that doesn't help when I'm trying to take photos with a very intense DoF (Which I really would love to do)

is there a mod/setting I could use to fix this issue?

Welcome to the fandom and thanks a lot for your words! 🧡 If you know how to curate your space, use the filter and block features, it's not that bad, especially if you find people you vibe and hang out with! Hope you'll find your comfy corner there :>

As for the question: the vanilla PM's DOF isn't really good and there isn't any way to "tweak" it as far as I know (we can forcibly disable it via some settings but that's about it)

If you're playing on PC, I can recommend getting ReShade! It's totally free, and it allows you to layer all kinds of shaders and post-processing effects on top of games. Since CP77 is a single player game, I recommend downloading the version with Addons support!

▶ ReShade

⚠ ReShade has its own Screenshot key; using the Vanilla photomode's key to capture your screenshots won't capture the layered shaders! So set up your pic, hide the HUD using photomode, and then use ReShade to set up more effects and polish, before taking your pic with ReShade!

More about ReShade and tips on different DOFs under read more :>

Launch the installation and select Cyberpunk 2077 in the list, pick DirectX 10/11/12 as its API and download the recommended shaders pack

After launching the game, the ReShade window should open. I recommend going through the tutorial to understand the interface better!

Then, you can start either enabling some shaders and tweaking them yourself, or looking for ReShade Presets on the internet! There's a lot of them on Nexus Mods too :>

▶ My old ReShade preset

When it comes to DOF shaders, the best one imo is IGCSDof. The problem is that, even tho the DOF itself is free, you need the IGCS camera for it to work properly, and this plugin is not free

For free DOF, I can recommend ADOF and CinematicDOF! They can work together, tho I recommend only using one or the other

Here's an example of ADOF. Both shaders can be tweaked to achieve similar results

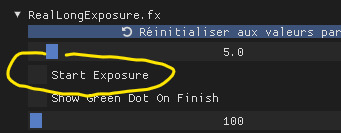

You'll notice some artifacts and pixels, around the hair especially. That's where RealLongExposure saves the day!

It allows you to "freeze" the game by stacking frames on top of each other, "smoothing" the edges and getting rid of the artifacts

It looks less "crispy" on the right, but it's easier to spot in game!

It's recommended to bind this shader to a shortcut; to bind it to a key, simply right click on the "Start Exposure" box

Another shader that I like is called Tilt Shift. It allows you to place a gradient blur that follows a line's angle and position!

These are only just a couple of Shaders, I invite you to test and play around with all of them to see what you vibe with!

#Hope this answer your question! And thank you for the kind words 🧡#Cyberpunk 2077#Tutorial#ReShade#Ask#demon-of-side-quest-hell

21 notes

Text

How To Use Llama 3.1 405B FP16 LLM On Google Kubernetes

How to set up and use large open models for multi-host generative AI over GKE

Access to open models is more important than ever for developers as generative AI grows rapidly due to developments in LLMs (Large Language Models). Open models are pre-trained foundational LLMs that are accessible to the general population. Data scientists, machine learning engineers, and application developers already have easy access to open models through platforms like Hugging Face, Kaggle, and Google Cloud’s Vertex AI.

How to use Llama 3.1 405B

Google is announcing today the ability to install and run open models like Llama 3.1 405B FP16 LLM over GKE (Google Kubernetes Engine), as some of these models demand robust infrastructure and deployment capabilities. With 405 billion parameters, Llama 3.1, published by Meta, shows notable gains in general knowledge, reasoning skills, and coding ability. To store and compute 405 billion parameters at FP (floating point) 16 precision, the model needs more than 750GB of GPU RAM for inference. The difficulty of deploying and serving such big models is lessened by the GKE method discussed in this article.

Customer Experience

You may locate the Llama 3.1 LLM as a Google Cloud customer by selecting the Llama 3.1 model tile in Vertex AI Model Garden.

Once the deploy button has been clicked, you can choose the Llama 3.1 405B FP16 model and select GKE.

The automatically generated Kubernetes yaml and comprehensive deployment and serving instructions for Llama 3.1 405B FP16 are available on this page.

Multi-host deployment and serving

Llama 3.1 405B FP16 LLM poses significant deployment and serving challenges and demands over 750 GB of GPU memory. The total memory requirement depends on several factors, including the memory used by model weights, support for longer sequence lengths, and KV (Key-Value) cache storage. A3 virtual machines, currently the most powerful GPU option available on Google Cloud, each have eight Nvidia H100 GPUs with 80 GB of HBM (High-Bandwidth Memory) apiece. The only practical way to serve LLMs such as the FP16 Llama 3.1 405B model is to deploy and serve them across several hosts. To deploy over GKE, Google employs LeaderWorkerSet with Ray and vLLM.

LeaderWorkerSet

LeaderWorkerSet (LWS) is a deployment API created specifically to meet the workload demands of multi-host inference. It makes it easier to shard and run the model across multiple devices on multiple nodes. Built as a Kubernetes deployment API, LWS is both cloud-agnostic and accelerator-agnostic, and works with GPUs and TPUs alike. LWS uses the upstream StatefulSet API as its core building block.

A collection of pods is controlled as a single unit under the LWS architecture. Every pod in this group is given a distinct index between 0 and n-1, with the pod with number 0 being identified as the group leader. Every pod that is part of the group is created simultaneously and has the same lifecycle. At the group level, LWS makes rollout and rolling upgrades easier. For rolling updates, scaling, and mapping to a certain topology for placement, each group is treated as a single unit.

Each group is upgraded as a single unit, so every pod in the group is updated at the same time. Topology-aware placement is optional; when it is enabled, all pods in the same group are co-located in the same topology. With optional all-or-nothing restart support, the group is also handled as a single entity when addressing failures. When enabled, if one pod in the group fails or if one container within any of the pods is restarted, all of the pods in the group will be recreated.

In the LWS framework, a group including a single leader and a group of workers is referred to as a replica. Two templates are supported by LWS: one for the workers and one for the leader. By offering a scale endpoint for HPA, LWS makes it possible to dynamically scale the number of replicas.
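To make the group semantics concrete, here is a toy model in plain Python of the behaviour described above: pods indexed from 0, pod 0 acting as leader, and an optional all-or-nothing restart. It does not use the LWS API or any Kubernetes client; the `Pod` and `Group` classes are hypothetical and exist only to illustrate the rules.

```python
from dataclasses import dataclass, field


@dataclass
class Pod:
    index: int
    restarts: int = 0

    @property
    def role(self) -> str:
        # Index 0 is the group leader; every other index is a worker.
        return "leader" if self.index == 0 else "worker"


@dataclass
class Group:
    size: int
    all_or_nothing: bool = True
    pods: list[Pod] = field(init=False)

    def __post_init__(self) -> None:
        # All pods in the group are created together, indexed 0..size-1.
        self.pods = [Pod(i) for i in range(self.size)]

    def on_pod_failure(self, index: int) -> None:
        """Recreate the whole group, or only the failed pod."""
        targets = self.pods if self.all_or_nothing else [self.pods[index]]
        for pod in targets:
            pod.restarts += 1


group = Group(size=2)
group.on_pod_failure(1)
print([(p.index, p.role, p.restarts) for p in group.pods])
```

With all-or-nothing enabled, a failure of the worker also recreates the leader, which matters for multi-host inference because a model shard cannot usefully keep running without its peers.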

Deploying multiple hosts using vLLM and LWS

vLLM is a well-known open source model server that uses pipeline and tensor parallelism to provide multi-node multi-GPU inference. vLLM supports distributed tensor parallelism using Megatron-LM’s tensor parallel technique, and it manages the distributed runtime for pipeline parallelism with Ray for multi-node inference.

Tensor parallelism divides the model horizontally across several GPUs, so the tensor parallel size is typically set to the number of GPUs on each node. It is crucial to remember that this method requires fast network connectivity between the GPUs.

Pipeline parallelism, by contrast, divides the model vertically by layer and does not require constant communication between GPUs. The pipeline parallel size usually equals the number of nodes used for multi-host serving.

To support the full Llama 3.1 405B FP16 model, several parallelism techniques must be combined. Two A3 nodes with eight H100 GPUs each provide a combined 1280 GB of GPU memory, enough to meet the model’s 750 GB memory requirement. This setup also leaves buffer memory for the key-value (KV) cache and supports long context lengths. For this LWS deployment, the tensor parallel size is set to eight and the pipeline parallel size is set to two.
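The sizing arithmetic above can be checked with a short sketch. It assumes 80 GB per H100 GPU, which is implied by 1280 GB across 16 GPUs but not stated outright. `planParallelism` and `ParallelPlan` are hypothetical names for illustration, and the rule of thumb (tensor parallel size equals GPUs per node, pipeline parallel size equals node count) follows the description above rather than any vLLM API.

```typescript
// Sizing sketch for the two-node deployment described above.
interface ParallelPlan {
  tensorParallelSize: number;
  pipelineParallelSize: number;
  totalGpus: number;
  totalMemoryGb: number;
  headroomGb: number; // memory left for KV cache and long contexts
}

function planParallelism(
  nodes: number,
  gpusPerNode: number,
  gpuMemoryGb: number,
  modelMemoryGb: number
): ParallelPlan {
  const totalGpus = nodes * gpusPerNode;
  const totalMemoryGb = totalGpus * gpuMemoryGb;
  return {
    tensorParallelSize: gpusPerNode, // split each layer across one node's GPUs
    pipelineParallelSize: nodes,     // split layers across nodes
    totalGpus,
    totalMemoryGb,
    headroomGb: totalMemoryGb - modelMemoryGb,
  };
}

// Two A3 nodes, eight H100s each; 80 GB per GPU is an assumption.
const plan = planParallelism(2, 8, 80, 750);
// plan.totalMemoryGb is 1280 and plan.headroomGb is 530
```

Under these assumptions, roughly 530 GB remains beyond the model's 750 GB requirement, which is the buffer available for the KV cache.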

In brief

In this blog, we discussed how LWS provides the features you need for multi-host serving. The approach also applies to smaller models, such as Llama 3.1 405B FP8, on more cost-effective accelerators, which helps optimize price-to-performance ratios. LWS is open source and has a vibrant community; check out its GitHub to learn more and contribute directly.

As Google Cloud helps customers adopt gen AI workloads, you can visit Vertex AI Model Garden to deploy and serve open models via managed Vertex AI backends or DIY (Do It Yourself) GKE clusters. Multi-host deployment and serving is one example of how it aims to provide a seamless customer experience.

Read more on Govindhtech.com

#Llama3.1#Llama#LLM#GoogleKubernetes#GKE#405BFP16LLM#AI#GPU#vLLM#LWS#News#Technews#Technology#Technologynews#Technologytrends#govindhtech

2 notes


Text

Boosting Engagement with Click-to-Call: A Game-Changer for Businesses

In today’s fast-paced digital world, businesses are constantly seeking innovative ways to engage with customers and drive conversions. One such innovation that has revolutionized customer interaction is the click to call feature. This simple yet powerful tool has proven to be a game-changer for businesses, enhancing customer engagement, improving satisfaction, and ultimately driving growth. In this blog, we will explore the click to call service, its benefits, how to implement it effectively, and conclude with the success of SparkTG in leveraging this service.

To learn more about the WhatsApp Business API, schedule a free demo.

To read more of our latest blogs, check these out:

Capitalizing on Financial Institutions Integrated IVR Solution: An Overview

Beyond Button Mashing: How Conversational IVR Revolutionizes IVRs

Top 10 WhatsApp Business API Use Cases Reshaping Real Estate Communication

Beyond the Hype: The Real-World Power of Chatbots for Lead Generation

#business #sparktg #technology #ivrservice #cloudtelephony #sparktechnology #cloud #whatsappbusinessapi #ivrsolution #click2call @click2callsolution #click2callservice #click2callserviceprovider

2 notes


Note

OK the continuation of the sibling competition fic.

.

.

.

"Ehhhh?!?! You want to join the competition????? "

That came from Api, who had just received the news Cahaya announced, along with the other siblings who were in the same room with them.

"Huh? But we've already teamed up. Who's going to team up with you? And didn't you say it was a waste of your time?"

Daun asked with a skeptical look, as if Cahaya had said something unbelievable.

"Yes! I'm joining the competition, and I have someone to join with!"

Cahaya said that in an annoyed tone, low-key regretting telling them the news, knowing they would react like this.

"Who's the person you're teaming up with?"

Tanah asked in a concerned tone.

"......It's Fang."

"Fang?"

"Yes, Fang."

"......."

Silence. Just pure silence.

"......So if you don't have anything to say, I'm going upstairs. Bye!"

The siblings watched Cahaya go upstairs, the door closing with a click. They came back to their senses with shocked expressions on their faces.

"Ehhhhhh?! He's going to team up with Fang?!?!??!"

That's what all of them thought.

.

.

.

"Ladies and gentlemen it's time - For the competition !!!!"

Many pairs of competitors are gathering at the field, including Cahaya (finally).

Cahaya might not admit it out loud, but he has been dying to attend this. And he's coming with his...... friend! How cool is that!

While Cahaya is low-key hyped, Fang, who is beside him, is actually nervous.

"Hey? Haya?"

"Yes?"

"I- I'm fine if we don't win."

"What?"

"I've never attended a competition with anyone before, and I don't want you to push yourself because of me."

"I just want to have fun with you. So-"

Fang looks at Cahaya with honesty.

"It's totally fine if you want to stop."

"I don't want you to force yourself on this."

Cahaya stares at Fang with a dumbfounded look as he processes what Fang just said.

Then it clicks. Cahaya doesn't know if he should be angry or touched by Fang's words.

He grips Fang's shoulders and looks into his eyes with a serious expression.

"Fang, look - I'm not forcing myself, and it's not about wanting to win the competition."

"And I'm definitely not gonna stop because I don't want to continue."

"And this is also my first time attending something like this with someone I can call a friend!"

"So...... I also want to have fun with you, Fang!"

"I really mean it!"

"Haya......"

"Attention, all competitors! The race is going to start in a minute! Once it starts, we'll inform you what kind of race you'll have!"

"So, Fang..... No matter what happens, whether we lose or win,"

"let's enjoy the race and have fun! Alright?"

"You- you said that yourself, so don't whine if we really lose!"

"Oh totally!"

"And!!!! The competition starts now!"

.

.

.

I'll make the next part about what happened in the competition.

They're so skeptical of Fang-

And Fang is so sweet, considering Cahaya and all of this. Fang, it's fine, Haya loves being with you.

13 notes


Text

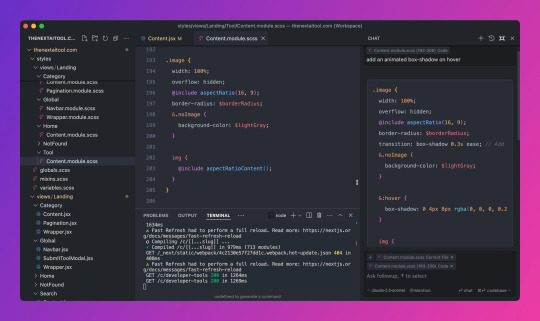

How Cursor is Transforming the Developer's Workflow

For years, developers have relied on multiple tools and websites to get the job done. The coding process was often a back-and-forth shuffle between their editor, Google, Stack Overflow, and, more recently, AI tools like ChatGPT or Claude. Need to figure out how to implement a new feature? Hop over to Google. Stuck on a bug? Search Stack Overflow for a solution. Want to refactor some messy code? Paste the code into ChatGPT, copy the response, and manually bring it back to your editor. It was an effective process, sure, but it felt disconnected and clunky. This was just part of the daily grind—until Cursor entered the scene.

Cursor changes the game by integrating AI right into your coding environment. If you’re familiar with VS Code, Cursor feels like a natural extension of your workflow. You can bring your favorite extensions, themes, and keybindings over with a single click, so there’s no learning curve to slow you down. But what truly sets Cursor apart is its seamless integration with AI, allowing you to generate code, refactor, and get smart suggestions without ever leaving the editor. The days of copying and pasting between ChatGPT and your codebase are over. Need a new function? Just describe what you want right in the text editor, and Cursor’s AI takes care of the rest, directly in your workspace.

Before Cursor, developers had to work in silos, jumping between platforms to get assistance. Now, with AI embedded in the code editor, it’s all there at your fingertips. Whether it’s reviewing documentation, getting code suggestions, or automatically updating an outdated method, Cursor brings everything together in one place. No more wasting time switching tabs or manually copying over solutions. It’s like having AI superpowers built into your terminal—boosting productivity and cutting out unnecessary friction.

The real icing on the cake? Cursor’s commitment to privacy. Your code is safe, and you can even use your own API key to keep everything under control. It’s no surprise that developers are calling Cursor a game changer. It’s not just another tool in your stack—it’s a workflow revolution. Cursor takes what used to be a disjointed process and turns it into a smooth, efficient, and AI-driven experience that keeps you focused on what really matters: writing great code. Check out for more details

3 notes


Text

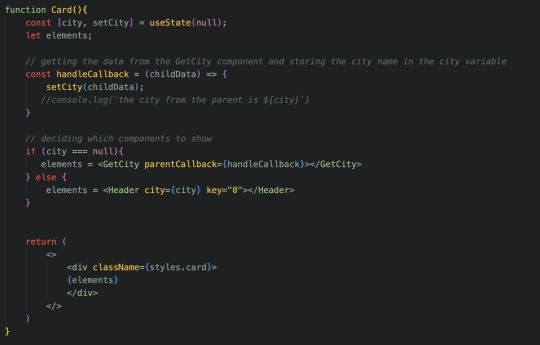

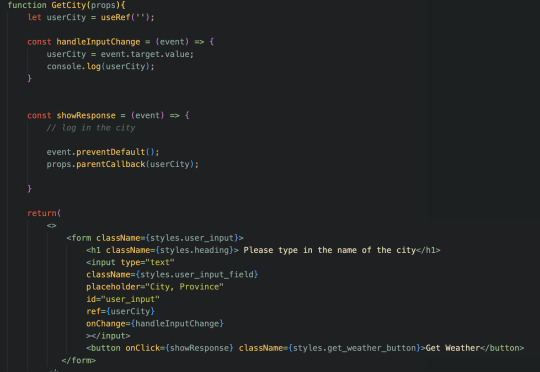

14/07/2023 || Day 51

React & Weather App Log # 2

React:

Decided to skip on the video tutorials today and instead focused on Hooks and Refs, and oh god that hurt my brain. From my understanding, Hooks are used to keep the state of a functional component, letting them have states to begin with. Along with Refs, we can update states/variables when appropriate (i.e. a user types in their name, we can now store that name in a variable). One thing that I struggled with was understanding what the code for useState in the documentation meant, but after watching a video I learned that useState returns an array in which the first element is the variable you want to keep track of, and the second element is the method used to set the value of the variable ( i.e. const [userAnswer, setUserAnswer] = useState(null) ). So, when you want to update userAnswer, you call setUserAnswer() and do the logic inside this method. To get used to using both Hooks and Refs, I continued a bit with my Weather App.

Weather App:

Like I said, I mainly wanted to practice using Hooks and Refs. One thing that I need in my weather app is for the user to type in their city (or any city), so this was the perfect time to use Hooks and Refs. I have the app set up this way: if the variable city is undefined (i.e. the user hasn't entered the city they want to get the weather for), the React component for entering the city will show up (which I called GetCity). Otherwise, the component holding the weather info will display (which I called Header).

To get the user input from the input element in my GetCity component, I ended up using the useRef method (though I think I can also use useState), and actually ended up passing this variable to the parent component, which will then pass it down to the Header component that will display the weather and such.

Now that the user typed in the city, I need to make an API call to get the weather. I'm doing this with the OpenWeather API, but first I actually need to get some location information from another API they have; their Geocoding API. With this, I can send in my city name and get back information such as the longitude and the latitude of the city, which I'll need to make the API call to get the weather. So far, I have it where I can get the longitude and latitude, but I have yet to store the values and make the 2nd API call. It'll be a few days before I get this working, but here's a little video of it "working" so far (you can see it works from the console on the right). The first screen is the GetCity component, as the user hasn't entered a city yet, and once they do and click the button, the parent component now has the city name and passes it down to the Header component:
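The two-step lookup described above (city name to coordinates, then coordinates to weather) might be sketched as follows. This is a rough illustration under stated assumptions, not the app's actual code: the function names, the `GeocodeResult` shape (trimmed to the fields used here), and the injected `fetchJson` helper are all hypothetical, and the URLs follow OpenWeather's public Geocoding and Current Weather endpoints as I understand them.

```typescript
// One entry in the Geocoding API's JSON array (only the fields used here).
interface GeocodeResult {
  name: string;
  lat: number;
  lon: number;
}

// Step 1: URL that turns a city name into coordinates.
function buildGeocodeUrl(city: string, apiKey: string): string {
  return `https://api.openweathermap.org/geo/1.0/direct?q=${encodeURIComponent(city)}&limit=1&appid=${apiKey}`;
}

// Store the latitude and longitude of the first match, if any.
function pickCoordinates(results: GeocodeResult[]): { lat: number; lon: number } | null {
  if (results.length === 0) return null;
  return { lat: results[0].lat, lon: results[0].lon };
}

// Step 2: URL for the weather at those coordinates.
function buildWeatherUrl(lat: number, lon: number, apiKey: string): string {
  return `https://api.openweathermap.org/data/2.5/weather?lat=${lat}&lon=${lon}&appid=${apiKey}`;
}

// fetchJson is injected so the chaining logic does not depend on a runtime.
type JsonFetcher = (url: string) => Promise<unknown>;

async function fetchWeatherForCity(
  city: string,
  apiKey: string,
  fetchJson: JsonFetcher
): Promise<unknown> {
  const results = (await fetchJson(buildGeocodeUrl(city, apiKey))) as GeocodeResult[];
  const coords = pickCoordinates(results);
  if (coords === null) throw new Error(`No location found for "${city}"`);
  return fetchJson(buildWeatherUrl(coords.lat, coords.lon, apiKey));
}
```

In a browser, `fetchJson` could be something like `(url) => fetch(url).then((res) => res.json())`, and the resolved weather data could then be stored with a state setter in the parent component.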

20 notes
